Information-Theoretic Lower Bounds for Distributed Function Computation

Abstract

We derive information-theoretic converses (i.e., lower bounds) for the minimum time required by any algorithm for distributed function computation over a network of point-to-point channels with finite capacity, where each node of the network initially has a random observation and aims to compute a common function of all observations to a given accuracy with a given confidence by exchanging messages with its neighbors. We obtain the lower bounds on computation time by examining the conditional mutual information between the actual function value and its estimate at an arbitrary node, given the observations in an arbitrary subset of nodes containing that node. The main contributions include: 1) A lower bound on the conditional mutual information via so-called small ball probabilities, which captures the dependence of the computation time on the joint distribution of the observations at the nodes, the structure of the function, and the accuracy requirement. For linear functions, the small ball probability can be expressed by Lévy concentration functions of sums of independent random variables, for which tight estimates are available that lead to strict improvements over existing lower bounds on computation time. 2) An upper bound on the conditional mutual information via strong data processing inequalities, which complements and strengthens existing cutset-capacity upper bounds. 3) A multi-cutset analysis that quantifies the loss (dissipation) of the information needed for computation as it flows across a succession of cutsets in the network. This analysis is based on reducing a general network to a line network with bidirectional links and self-links, and the results highlight the dependence of the computation time on the diameter of the network, a fundamental parameter that is missing from most of the existing lower bounds on computation time.

Index Terms:

Distributed function computation, computation time, small ball probability, Lévy concentration function, strong data processing inequality, cutset bound, multi-cutset analysis

I Introduction and preview of results

I-A Model and problem formulation

The problem of distributed function computation arises in such applications as inference and learning in networks and consensus or coordination of multiple agents. Each node of the network has an initial random observation and aims to compute a common function of the observations of all the nodes by exchanging messages with its neighbors over discrete memoryless point-to-point channels and by performing local computations. A problem of theoretical and practical interest is to determine the fundamental limits on the computation time, i.e., the minimum number of steps needed by any distributed computation algorithm to guarantee that, when the algorithm terminates, each node has an accurate estimate of the function value with high probability.

Formally, a network consisting of nodes connected by point-to-point channels is represented by a directed graph , where is a finite set of nodes and is a set of edges. Node can send messages to node only if . Accordingly, to each edge we associate a discrete memoryless channel with finite input alphabet , finite output alphabet , and stochastic transition law that specifies the transition probabilities for all . The channels corresponding to different edges are assumed to be independent. Initially, each node has access to an observation given by a random variable (r.v.) taking values in some space . We assume that the joint probability law of is known to all the nodes. Given a function , each node aims to estimate the value via local communication and computation. For example, when is given by the identity mapping , the goal of each node is to estimate the observations of all other nodes in the network.

The operation of the network is synchronized, and takes place in discrete time. A -step algorithm is a collection of deterministic encoders and estimators , for all and , given by mappings

where and . Here, and are, respectively, the in-neighborhood and the out-neighborhood of node . The algorithm operates as follows: at each step , each node computes , and then transmits each message along the edge . For each , the received message at each is related to the transmitted message via the stochastic transition law . At step , each node computes as an estimate of , where for .

Given a nonnegative distortion function , we use the excess distortion probability to quantify the computation fidelity of the algorithm at node . A key fundamental limit of distributed function computation is the -computation time:

| (1) |

If an algorithm has the property that

then we say that it achieves accuracy with confidence . Thus, is the minimum number of time steps needed by any algorithm to achieve accuracy with confidence . The objective of this paper is to derive general lower bounds on for arbitrary network topologies, discrete memoryless channel models, continuous or discrete observations, and functions .

Previously, this problem (for real-valued functions and quadratic distortion) has been studied by Ayaso et al. [1] and by Como and Dahleh [2] using information-theoretic techniques. This problem is also related to the study of communication complexity of distributed computing over noisy channels. In that context, Goyal et al. [3] studied the problem of computing Boolean functions in complete graphs, where each pair of nodes communicates over a pair of independent binary symmetric channels (BSCs), and obtained tight lower bounds on the number of serial broadcasts using an approach tailored to that special problem. The technique used in [3] has been extended to random planar networks by Dutta et al. [4]. Other related, but differently formulated, problems include communication complexity and information complexity in distributed computing over noiseless channels, surveyed in [5]; minimum communication rates for distributed computing [6, 7, 8], compression, or estimation based on infinite sequences of observations, surveyed in [9, Chap. 21]; and distributed computing in wireless networks, surveyed in [10]. Some achievability results for specific distributed function computation problems can be found in [11, 12, 13, 1, 14, 15, 16, 17, 18].

I-B Method of analysis and summary of main results

Our analysis builds upon the information-theoretic framework proposed by Ayaso et al. [1] and Como and Dahleh [2]. The underlying idea is rather natural and exploits a fundamental trade-off between the minimal amount of information any good algorithm must necessarily extract about the function value when it terminates and the maximal amount of information any algorithm is able to obtain due to time and communication constraints. To be more precise, given any set of nodes , let denote the vector of observations at all the nodes in . The quantity that plays a key role in the analysis is the conditional mutual information between the actual function value and the estimate at an arbitrary node , given the observations in an arbitrary subset of nodes containing .

Consider an arbitrary -step algorithm that achieves accuracy with confidence . Then, as we show in Lemma 1 of Sec. II-A, this mutual information can be lower-bounded by

| (2) |

where is the binary entropy function, and

is the conditional small ball probability of given . The conditional small ball probability quantifies the difficulty of localizing the value of in a “distortion ball” of size given partial knowledge about the value of , namely . For example, as discussed in Sec. IV, when is a linear function of the observations , the conditional small ball probability can be expressed in terms of so-called Lévy concentration functions [19], for which tight estimates are available under various regularity conditions.

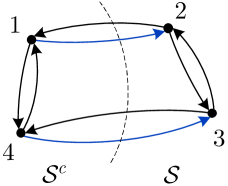

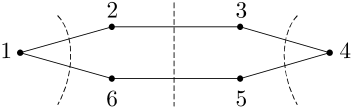

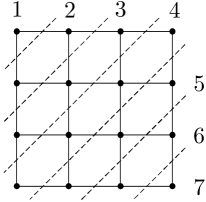

On the other hand, if is a -step algorithm, then the amount of information any node has about once terminates can be upper-bounded by a quantity that increases with and also depends on the network topology and on the information transmission capabilities of the channels connecting the nodes. To quantify this amount of information, we consider a cut of the network, i.e., a partition of the set of nodes into two disjoint subsets and , such that . The underlying intuition is that any information that nodes in receive about must flow across the edges from nodes in to nodes in . The set of these edges, denoted by , is referred to as the cutset induced by . Figure 1 illustrates these concepts on a simple four-node network. We then have the following upper bound [1, 2] (see also Lemma 2 in Sec. II-B):

| (3) |

The quantity , referred to as the cutset capacity, is the sum of the Shannon capacities of all the channels located on the edges in the cutset . Thus, if there exists a cut with a small value of , then the amount of information gained by the nodes in about will also be small. Note that the cutset upper bound grows linearly with . However, when the initial observations are discrete, we also know that

where is the conditional entropy of given , which does not depend on . In fact, we sharpen this bound by showing in Lemma 5 in Sec. II-D that

| (4) |

Here, is defined as

where the supremum is over all triples of r.v.’s, such that takes values in an arbitrary alphabet, is a Markov chain, takes values in , takes values in , and the conditional probability law is equal to the product of all the channels entering . As we discuss in detail in Sec. II-C, this constant is related to so-called strong data processing inequalities (SDPIs) [20], and quantifies the information transmission capabilities of the channels entering . When , the upper bound (4) is strictly smaller than . With the upper bound (4), we can strengthen the cutset bound to the following:

| (5) |

Combining the bounds in (2) and (5), we conclude that, if there exists a -step algorithm that achieves accuracy with confidence , then

| (6) |

moreover, this inequality holds for all choices of and . The precise statements of the resulting lower bounds on are given in Theorem 1 and Theorem 2.

The lower bound in (6) accounts for the difficulty of estimating the value of given only a subset of observations through the small ball probability , and for the communication bottlenecks in the network through the cutset capacity and the constants . The presence of in the bound ensures the correct scaling of in the high-accuracy limit . In particular, when the function is real-valued and the probability distribution of has a density, it is not hard to see that , and therefore grows without bound at the rate of as . By contrast, the bounds of Ayaso et al. [1] saturate at a finite constant even when no computation error is allowed, i.e., when . A detailed comparison with existing bounds is given in Sec. IV, where we particularize our lower bounds to the computation of linear functions. Moreover, in certain cases our lower bound on tends to infinity in the high-confidence regime . By contrast, existing lower bounds that rely on cutset capacity estimates remain bounded regardless of how small we make .

Throughout the paper, we provide several concrete examples that illustrate the tightness of the general lower bound in (6). In particular, Example 1 in Sec. II-E concerns the problem of computing the mod- sum of two independent random variables in a network of two nodes communicating over binary symmetric channels (BSCs). For that problem, we obtain a lower bound on that matches an achievable upper bound within a factor of . In Example 2 in Sec. II-E, we consider the case where the nodes aim to distribute their discrete observations to all other nodes, and obtain a lower bound on that captures the conductance of the network, which plays a prominent role in the previously published bounds of Ayaso et al. [1]. In Sec. V, we study two more examples: computing a sum of independent Rademacher random variables in a dumbbell network of BSCs, and distributed averaging of real-valued observations in an arbitrary network of binary erasure channels (BECs). Our lower bound for the former example can precisely capture the dependence of the computation time on the number of nodes in the network, while for the latter example it captures the correct dependence of the computation time on the accuracy parameter .

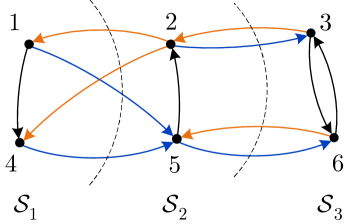

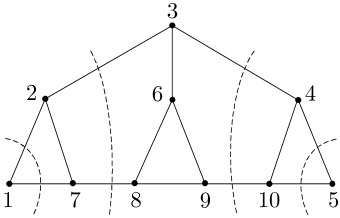

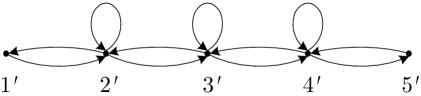

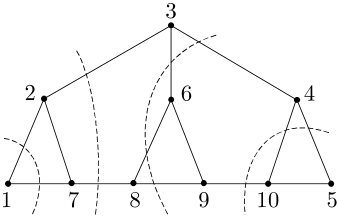

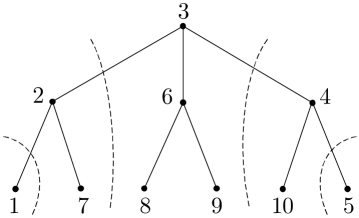

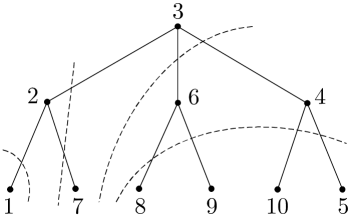

A significant limitation of the analysis based on a single cut of the network is that it only captures the flow of information across the cutset , but does not account for the time it takes the algorithm to disseminate this information to all the nodes in . We address this limitation in Sec. III through a multi-cutset analysis. The main idea is to partition the set of nodes into several subsets , such that, for all , the cutsets , are disjoint, and to analyze the flow of information across this sequence of cutsets. Once such a partition is selected, the analysis is based on a network reduction argument (Lemma 7), which lumps all the nodes in each into a single virtual “supernode.” The construction of the partition ensures that each supernode only communicates with supernodes and , and can also send noisy messages to itself (this is needed to simulate noisy communication among the nodes within in the original network). Thus, the reduced network takes the form of a chain with nodes communicating with their nearest neighbors over bidirectional noisy links and, in addition, sending noisy messages to themselves. We refer to this network as a bidirected chain of length . Figure 2a shows the partition of a six-node network, and the bidirected chain reduced from this network is shown in Fig. 2b.

Once this reduction is carried out, we can convert any -step algorithm running on the original network into a randomized -step algorithm running on the reduced network with the same accuracy and confidence guarantees as . Consequently, it suffices to analyze distributed function computation in bidirected chains. The key quantitative statement that emerges from this analysis can be informally stated as follows: For any bidirected chain with nodes, there exists a constant that plays the same role as in (4) and quantifies the information transmission capabilities of the channels in the chain, such that, for any algorithm that runs on this chain and takes time , the conditional mutual information between the function value and its estimate at the rightmost node given the observations of nodes through is upper-bounded by

| (7) |

where is the Shannon capacity of the channel from node to node . The precise statement is given in Lemma 8 in Sec. III-A. Intuitively, this shows that, unless the algorithm uses steps, the information about will dissipate at an exponential rate by the time it propagates through the chain from node to node . Combining (7) with the lower bound on based on small ball probabilities, we can obtain lower bounds on the computation time . The precise statement is given in Theorem 3. Moreover, as we show, it is always possible to reduce an arbitrary network with bidirectional point-to-point channels between the nodes to a bidirected chain whose length is equal to the diameter of the original network, which implies that, for networks with sufficiently large diameter, and for sufficiently small values of ,

| (8) |

where denotes the diameter. This dependence on , which cannot be captured by the single-cutset analysis, is missing in almost all of the existing lower bounds on computation time. An exception is the paper by Rajagopalan and Schulman [13] that gives an asymptotic lower bound on the time required to broadcast a single bit over a chain of unidirectional BSCs. Our multi-cutset analysis applies to both discrete and continuous observations, and to general network topologies. It can be straightforwardly particularized to specific networks, such as bidirected chains, rings, trees, and grids, as discussed in Sec. III-B. We note that techniques involving multiple (though not necessarily disjoint) cutsets have also been proposed in the study of multi-party communication complexity by Tiwari [21] and more recently by Chattopadhyay et al. [22], while our concern is the influence of network topology and channel noise on the computation time.
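To make the role of the diameter concrete, the following minimal sketch computes the diameter of a small network with bidirectional links by breadth-first search, and groups the nodes into layers according to their distance from one endpoint of a longest shortest path. In such a network, every edge joins nodes in the same layer or in consecutive layers, which is the chain-like structure that the reduction to a bidirected chain exploits. The six-node graph and the function names (`bfs_distances`, `diameter`, `bfs_layers`) are illustrative assumptions; they are not the partition of Fig. 2, and this layering is only one natural way to obtain such a structure.

```python
from collections import deque

def bfs_distances(adj, source):
    """Graph distance from `source` to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist

def diameter(adj):
    """Largest graph distance over all pairs (graph assumed connected)."""
    return max(max(bfs_distances(adj, u).values()) for u in adj)

def bfs_layers(adj, source):
    """Group nodes by their distance from `source`."""
    dist = bfs_distances(adj, source)
    layers = [[] for _ in range(max(dist.values()) + 1)]
    for node, d in sorted(dist.items()):
        layers[d].append(node)
    return layers

# Hypothetical six-node network; each listed neighbor pair is a bidirectional link.
adj = {
    1: [2, 3],
    2: [1, 3, 4],
    3: [1, 2, 4],
    4: [2, 3, 5, 6],
    5: [4, 6],
    6: [4, 5],
}

diam = diameter(adj)
layers = bfs_layers(adj, 1)

# Index of the layer that each node belongs to.
layer_of = {node: i for i, layer in enumerate(layers) for node in layer}
# Largest layer gap spanned by any edge; it is at most 1 for BFS layers.
max_layer_gap = max(abs(layer_of[u] - layer_of[v]) for u in adj for v in adj[u])
```

Because node 1 is an endpoint of a longest shortest path in this graph, the number of layers equals the diameter plus one.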

I-C Organization of the paper

The remainder of the paper is structured as follows. We start with the single-cutset analysis in Sec. II. The lower bound on the conditional mutual information via the conditional small ball probability is presented in Sec. II-A. The cutset upper bound and the SDPI upper bound on the conditional mutual information are presented in Sec. II-B and Sec. II-D. An introduction to SDPIs is given in Sec. II-C. The lower bound on computation time is given in Sec. II-E, along with two concrete examples. Sec. III is devoted to the multi-cutset analysis, where we first present the network reduction argument in Sec. III-A, then derive general lower bounds on computation time and particularize the results to special networks in Sec. III-B. In Sec. IV, we discuss lower bounds for computing linear functions, where we relate the conditional small ball probability to Lévy concentration functions, and evaluate them in a number of special cases. We also make detailed comparisons of our results with existing lower bounds in Sec. IV-D. In Sec. V, we compare the lower bounds on computation time with the achievable upper bounds for two more examples: computing a sum of independent Rademacher random variables in a dumbbell network of BSCs, and distributed averaging of real-valued observations in an arbitrary network of binary erasure channels (BECs). We conclude this paper and point out future research directions in Sec. VI. A couple of lengthy technical proofs are relegated to a series of appendices.

II Single-cutset analysis

We start by deriving information-theoretic lower bounds on the computation time based on a single cutset in the network. Recall that a cutset associated to a partition of into two disjoint sets and consists of all edges that connect a node in to a node in :

When is a singleton, i.e., , we will write instead of the more clunky . As the discussion in Sec. I-B indicates, our analysis revolves around the conditional mutual information for an arbitrary set of nodes and for an arbitrary node . The lower bound on expresses quantitatively the intuition that any algorithm that achieves

must necessarily extract a sufficient amount of information about the value of . On the other hand, the upper bounds on formalize the idea that this amount cannot be too large, since any information that nodes in receive about must flow across the edges in the cutset (cf. [23, Sec. 15.10] for a typical illustration of this type of cutset arguments). We capture this information limitation in two ways: via channel capacity and via SDPI constants.

The remainder of this section is organized as follows. We first present conditional mutual information lower bounds in Sec. II-A. Then we state the upper bound based on cutset capacity in Sec. II-B. After a brief detour to introduce the SDPIs in Sec. II-C, we state the SDPI-based upper bounds in Sec. II-D. Finally, we combine the lower and upper bounds to derive lower bounds on in Sec. II-E.

II-A Lower bound on

For any , , and , define the conditional small ball probability of given as

| (9) |

This quantity measures how well the conditional distribution of given concentrates in a small region of size as measured by . The following lower bound on in terms of the conditional small ball probability is essential for proving lower bounds on .

Lemma 1.

If an algorithm achieves

| (10) |

then for any set and any node ,

| (11) |

where is the binary entropy function.

Proof:

Fix an arbitrary and an arbitrary . Consider the probability distributions and . Define the indicator random variable . Then from (10) it follows that . On the other hand, since form a Markov chain under , by Fubini’s theorem,

| (12) |

Consequently,

where

- (a) follows from the data processing inequality for divergence, where is the binary divergence function;

- (b) follows from the fact that ;

- (c)

∎

For a fixed , Lemma 1 captures the intuition that the more spread out the conditional distribution is, the more information we need about to achieve the required accuracy; similarly, for a fixed , the smaller the accuracy parameter , the more information is necessary. In Section IV, we provide explicit expressions and upper bounds for the conditional small ball probability in the context of computing linear functions of real-valued r.v.’s with absolutely continuous probability distributions. We show that, in such cases, , which implies that the lower bound of Lemma 1 grows at least as fast as in the high-accuracy limit .
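As a small numerical illustration of the small ball probability, the sketch below evaluates it exactly for a sum of independent Rademacher (uniform on plus or minus one) random variables, in the unconditional case and with absolute-error distortion. It then plugs the result into a bound of the form (1 − δ) log(1/L) − h₂(δ), where L is the small ball probability. That functional form, the choice of distortion, and all function names are assumptions made for this example; the precise statement is the one in Lemma 1.

```python
import math

def binary_entropy(p):
    """Binary entropy h2(p) in bits, with h2(0) = h2(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def rademacher_sum_pmf(n):
    """Exact pmf of a sum of n independent uniform +/-1 variables."""
    return {2 * k - n: math.comb(n, k) / 2 ** n for k in range(n + 1)}

def small_ball_probability(pmf, eps):
    """sup over centers z of P(|Z - z| <= eps) for a discrete real-valued Z.

    The supremum is attained by a window [s, s + 2*eps] whose left end
    sits on a support point s, so only those windows are checked.
    """
    tol = 1e-12
    best = 0.0
    for s in pmf:
        mass = sum(p for x, p in pmf.items() if s - tol <= x <= s + 2 * eps + tol)
        best = max(best, mass)
    return best

def lemma1_style_bound(small_ball, delta):
    """Assumed form: (1 - delta) * log2(1 / small_ball) - h2(delta), in bits."""
    return (1 - delta) * math.log2(1 / small_ball) - binary_entropy(delta)

pmf = rademacher_sum_pmf(4)
exact_match = small_ball_probability(pmf, eps=0.0)  # mass of the most likely value
radius_one = small_ball_probability(pmf, eps=1.0)   # best closed window of width 2
bound_bits = lemma1_style_bound(exact_match, delta=0.1)
```

Shrinking `eps` lowers the small ball probability and therefore raises the bound, which is the qualitative behavior described above.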

II-B Upper bound on via cutset capacity

Our first upper bound involves the cutset capacity , defined as

Here, denotes the Shannon capacity of the channel .
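The cutset capacity can be computed mechanically once the edge capacities are known. The sketch below does this for a hypothetical four-node network, using the standard capacity formulas for the BSC (one minus the binary entropy of the crossover probability) and the BEC (one minus the erasure probability), in bits per channel use. The network, the choice of the receiving set, and the helper names are assumptions made for illustration; the cutset is taken to be the set of edges that start outside the receiving set and end inside it.

```python
import math

def binary_entropy(p):
    """Binary entropy h2(p) in bits, with h2(0) = h2(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def bsc_capacity(crossover):
    """Shannon capacity of a binary symmetric channel, in bits per use."""
    return 1.0 - binary_entropy(crossover)

def bec_capacity(erasure_prob):
    """Shannon capacity of a binary erasure channel, in bits per use."""
    return 1.0 - erasure_prob

def cutset(edges, receiving_set):
    """Edges (u, v) that start outside `receiving_set` and end inside it."""
    return [(u, v) for (u, v) in edges
            if u not in receiving_set and v in receiving_set]

def cutset_capacity(edge_capacity, receiving_set):
    """Sum of the capacities of all channels on the cutset."""
    return sum(edge_capacity[e] for e in cutset(edge_capacity, receiving_set))

# Hypothetical four-node network: directed edge -> channel capacity.
edge_capacity = {
    (1, 2): bsc_capacity(0.1),
    (2, 1): bsc_capacity(0.1),
    (2, 3): bec_capacity(0.5),
    (3, 2): bec_capacity(0.5),
    (1, 4): bec_capacity(0.25),
    (4, 3): bsc_capacity(0.2),
}

receiving_set = {3, 4}
edges_into_set = cutset(edge_capacity, receiving_set)
total_capacity = cutset_capacity(edge_capacity, receiving_set)
```

Here the edge from node 4 to node 3 does not count toward the cutset, because both of its endpoints lie inside the receiving set.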

Lemma 2.

For any set , let . Then, for any -step algorithm and for any ,

Proof:

The first inequality follows from the data processing lemma for mutual information. The second inequality has been obtained in [1] and [2] as well, but the proof in [1] relies heavily on differential entropy. Our proof is more general, as it only uses the properties of mutual information.

For a set of nodes , let and . For two subsets and of , define as the messages sent from nodes in to nodes in at step , and as the messages received by nodes in from nodes in at step . We will be using this notation in the proofs that follow, as well.

If , then for any , , hence . For , we start with the following chain of inequalities:

| (13) |

where

- (a) follows from the data processing inequality, and the fact that and ;

- (b) follows from the fact that ;

- (c) follows from the memorylessness of the channels, hence the Markov chain , and the weak union property of conditional independence [24, p. 25];

- (d) follows from the Markov chain

together with the fact that, if form a Markov chain, then

To prove this, we expand in two ways to get

The claim follows because .

From now on we drop the step index and denote as to simplify the notation. Note that and . We have

| (14) |

where

- (a) follows from the Markov chain and the weak union property of conditional independence;

- (b) follows from the Markov chains and , and the weak union property of conditional independence;

- (c) follows from the fact that the channels associated with are independent, and the fact that the capacity of a product channel is at most the sum of the capacities of the constituent channels [25].

II-C Preliminaries on strong data processing inequalities

In Sec. II-D, we will upper-bound using so-called strong data processing inequalities (SDPIs) for discrete channels (cf. [20] and references therein). Here we provide the necessary background. A discrete memoryless channel is specified by a triple , where is the input alphabet, is the output alphabet, and is the stochastic transition law. We say that the channel satisfies an SDPI at input distribution with constant if for any other input distribution . Here and denote the marginal distribution of the channel output when the input has distribution and , respectively. Define the SDPI constant of as

The SDPI constants of some common discrete channels have closed-form expressions. For example, for a binary symmetric channel (BSC) with crossover probability , [26], and for a binary erasure channel (BEC) with erasure probability , . It can be shown that is also the maximum mutual information contraction ratio in a Markov chain with [27]:

(see [28, App. B] for a proof of this formula in the setting of abstract alphabets). Consequently, for any such Markov chain,

This is a stronger result than the ordinary data processing inequality for mutual information, as it quantitatively captures the amount by which the information contracts after passing through a channel. We will also need a conditional version of the SDPI:

Lemma 3.

For any Markov chain with ,

For binary channels, this result was first proved by Evans and Schulman [29, Corollary 1]. A proof for the general case is included in [30, Lemma 2.7]. Finally, we will need a bound on the SDPI constant of a product channel. The tensor product of two channels and is a channel with

for all . The extension to more than two channels is obvious. The following lemma is a special case of Corollary 2 of Polyanskiy and Wu [31], obtained using the method of Evans and Schulman [29]. We give the proof, since we adapt the underlying technique at several points in this paper.

Lemma 4.

For a product channel , if the constituent channels satisfy for , then

Proof:

Let and be the input and output of the product channel . Let be an arbitrary random variable, such that form a Markov chain. It suffices to show that

| (15) |

From the chain rule,

Since form a Markov chain, and , Lemma 3 gives

It follows that

where the last step follows from the ordinary data processing inequality and the Markov chain . Unrolling the above recursive upper bound on and noting that , we get

II-D Upper bound on via SDPI

Having the necessary background at hand, we can now state our upper bounds based on SDPI constants. Let be the overall transition law of the channels across the cutset . Define

as the SDPI constant of , and

as the largest SDPI constant among all the channels across . Our second upper bound on involves these SDPI constants, and the conditional entropy of given .

Lemma 5.

For any set , any node , and any -step algorithm ,

Proof:

We adapt the proof of Lemma 4. For any and , define the shorthand . If , then for any , ; hence . If , then for any ,

where (a) follows from the conditional SDPI (Lemma 3) and the fact that form a Markov chain for . Unrolling the above recursive upper bound on , and noting that , we get

The weakened upper bound follows from the fact that , due to Lemma 4. This completes the proof of Lemma 5.∎
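The contraction property behind the SDPI constants used above can be checked numerically. The sketch below samples pairs of binary input distributions, passes them through a BSC, and compares the ratio of output to input Kullback-Leibler divergences against the known SDPI constant of the BSC, namely the square of one minus twice the crossover probability. The crossover value 0.1, the sampling scheme, and the function names are illustrative assumptions; the experiment only illustrates the inequality and does not prove it.

```python
import math
import random

def kl_bits(p, q):
    """KL divergence D(Bern(p) || Bern(q)) in bits."""
    total = 0.0
    for a, b in ((p, q), (1 - p, 1 - q)):
        if a > 0:
            total += a * math.log2(a / b)
    return total

def bsc_output(a, crossover):
    """P(output = 1) when the input is Bern(a) and the channel is BSC(crossover)."""
    return a * (1 - crossover) + (1 - a) * crossover

def contraction_ratio(a, b, crossover):
    """D(P K || Q K) / D(P || Q) for P = Bern(a), Q = Bern(b), K = BSC(crossover)."""
    num = kl_bits(bsc_output(a, crossover), bsc_output(b, crossover))
    return num / kl_bits(a, b)

crossover = 0.1
eta_bsc = (1 - 2 * crossover) ** 2  # known SDPI constant of BSC(crossover)

rng = random.Random(0)  # fixed seed so the experiment is reproducible
worst = 0.0
for _ in range(10_000):
    a, b = rng.uniform(0.01, 0.99), rng.uniform(0.01, 0.99)
    if abs(a - b) < 1e-3:
        continue  # skip nearly equal pairs to avoid 0/0 round-off
    worst = max(worst, contraction_ratio(a, b, crossover))
```

The largest sampled ratio stays below `eta_bsc` and comes close to it when both input distributions are near uniform and near each other.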

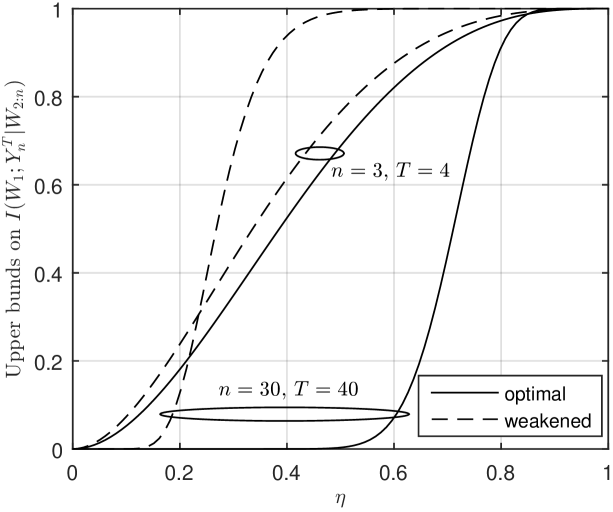

Comparing Lemma 2 and Lemma 5, we note that the upper bound in Lemma 2 captures the communication constraints through the cutset capacity alone, in accordance with the fact that the communication constraints do not depend on or . The bound applies when is either discrete or continuous; however, it grows linearly with . By contrast, the upper bound in Lemma 5 builds on the fact that is upper bounded by , and goes a step further by capturing the communication constraint through a multiplicative contraction of . It never exceeds as increases. However, it is useful only when the conditional entropy is well-defined and finite (e.g., when is discrete). We give an explicit comparison of Lemma 2 and Lemma 5 in the following example:

Example 1.

Consider a two-node network, where the nodes are connected by BSCs. The problem is for the two nodes to compute the mod- sum of their one-bit observations. Formally, we have with , , , and are independent r.v.’s, , and .

Choosing , Lemma 2 gives

| (16) |

whereas Lemma 5, together with the fact that , gives

| (17) |

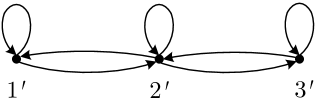

where, for , . For this example, the cutset-capacity upper bound is always tighter for small , as

Fig. 3 shows the two upper bounds with : the cutset-capacity upper bound is tighter when .
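The comparison in Example 1 can be reproduced with a few lines of code. The sketch below evaluates the cutset-capacity bound, taken here as the number of steps times the capacity of a single BSC, and the SDPI bound, taken here as one minus the T-th power of 4p(1 − p), both in bits. These closed forms assume that the observations are independent uniform bits, so that the relevant conditional entropy is one bit, and that a single BSC enters each node. They are stated as assumptions of this example, as are the function names.

```python
import math

def binary_entropy(p):
    """Binary entropy h2(p) in bits, with h2(0) = h2(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def cutset_bound(T, p):
    """T times the capacity of one BSC(p), in bits (assumed form of (16))."""
    return T * (1.0 - binary_entropy(p))

def sdpi_bound(T, p):
    """1 - (4 p (1 - p))^T bits, assuming one bit of conditional entropy
    and the BSC SDPI constant (1 - 2p)^2 (assumed form of (17))."""
    return 1.0 - (4.0 * p * (1.0 - p)) ** T

p = 0.3
table = [(T, cutset_bound(T, p), sdpi_bound(T, p)) for T in range(1, 11)]

# First number of steps at which the SDPI bound becomes the smaller of the two.
first_T_sdpi_tighter = next(T for T, c, s in table if s < c)
```

The cutset bound grows linearly in the number of steps while the SDPI bound saturates at one bit, so the SDPI bound is eventually the smaller of the two for any crossover probability strictly between 0 and 1/2.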

II-E Lower bounds on computation time

We now proceed to derive lower bounds on the computation time based on the previously derived lower and upper bounds on the conditional mutual information . Define the shorthand notation

| (18) |

which is the lower bound on in Lemma 1.

II-E1 Cutset-capacity bounds

Combined with the conditional small ball probability lower bound in Lemma 1, the cutset-capacity upper bound in Lemma 2 leads to a lower bound on :

Theorem 1.

For an arbitrary network, for any and ,

From an operational point of view, the lower bound of Theorem 1 reflects the fact that the problem of distributed function computation is, in a certain sense, a joint source-channel coding (JSCC) problem with possibly noisy feedback. In particular, the lower bound on from Lemma 1, which is used to prove Theorem 1, can be interpreted in terms of a reduction of JSCC to generalized list decoding [32, Sec. III.B]. Given any algorithm and any node , we may construct a “list decoder” as follows: given the estimate , we generate a “list” . If we fix a set and allow all the nodes in to share their observations , then is an upper bound on the -measure of the list of any node . Therefore, is a lower bound on the total amount of information that is necessary for the JSCC problem. The complementary cutset upper bound on bounds the amount of information that can be accumulated with each channel use. The lower bound on can thus be interpreted as a lower bound on the blocklength of the JSCC problem.

II-E2 SDPI bounds

Theorem 2.

For an arbitrary network, for any and ,

| (19) |

where .

The lower bounds in Theorem 1 and Theorem 2 can behave quite differently. To illustrate this, we compare them in two cases:

When , Theorem 2 gives

which has essentially the same dependence on as the lower bound given by Theorem 1. In this case, Theorem 1 gives more useful lower bounds as long as , especially when is continuous.

When and is small, serves as a sharp proxy of . Theorem 1 in this case gives

while Theorem 2 gives

where in the last step we have used the fact that as . Theorem 1 in this case is sharper in capturing the dependence of on the amount of information contained in , in that the lower bound is proportional to , whereas the lower bound given by Theorem 2 depends on only through . On the other hand, Theorem 2 in this case is much sharper in capturing the dependence of on the confidence parameter , since grows without bound as , while the lower bound given by Theorem 1 remains bounded. We consider two examples for this case.

The first is Example 1 in Section II-D, for the two-node mod- sum problem. We have , and . Theorems 1 and 2 imply the following:

Corollary 1.

To obtain an achievable upper bound on in Example 1, we consider the algorithm where each node uses a length- repetition code to send its one-bit observation to the other node. Using the Chernoff bound, as in [33], it can be shown that the probability of decoding error at each node is upper-bounded by , and therefore this algorithm achieves accuracy with confidence parameter . This gives the upper bound

| (21) |

Comparing (21) with the second lower bound in (20), we see that they asymptotically differ only by a factor of as , as . Thus, for the problem in Example 1, the converse lower bound on obtained from the SDPI closely matches the achievable upper bound on .
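The gap between the repetition-code upper bound and the SDPI-based converse can be examined numerically. The sketch below computes the exact error probability of majority decoding over a BSC, the Chernoff bound on it, the smallest repetition length for which the Chernoff bound meets a target confidence, and a converse of the form log(1/(δ + h₂(δ))) / log(1/(4p(1 − p))). The converse expression is reconstructed under the assumptions that the observations are uniform bits and that the bounds take the forms used in Example 1, so it should be read as an assumption of this example rather than as a restatement of Corollary 1. All function names are hypothetical.

```python
import math

def binary_entropy(p):
    """Binary entropy h2(p) in bits, with h2(0) = h2(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def majority_error(T, p):
    """Probability that at least half of T uses of BSC(p) are flipped.
    Ties count as errors, which upper-bounds any tie-breaking rule."""
    k_min = math.ceil(T / 2)
    return sum(math.comb(T, k) * p ** k * (1 - p) ** (T - k)
               for k in range(k_min, T + 1))

def chernoff_bound(T, p):
    """Chernoff bound (4 p (1 - p))^(T / 2) on the majority-decoding error."""
    return (4.0 * p * (1.0 - p)) ** (T / 2)

def repetition_length(delta, p):
    """Smallest T for which the Chernoff bound is at most delta."""
    return math.ceil(2.0 * math.log(1.0 / delta)
                     / math.log(1.0 / (4.0 * p * (1.0 - p))))

def sdpi_converse(delta, p):
    """Assumed converse: log(1 / (delta + h2(delta))) / log(1 / (4 p (1 - p)))."""
    return (math.log(1.0 / (delta + binary_entropy(delta)))
            / math.log(1.0 / (4.0 * p * (1.0 - p))))

p = 0.1
rows = []
for delta in (1e-2, 1e-4, 1e-8):
    T = repetition_length(delta, p)
    rows.append((delta, T, sdpi_converse(delta, p), majority_error(T, p)))
```

Each row lists the confidence parameter, the repetition length, the assumed converse, and the exact error of the repetition scheme. The ratio of the second to the third entry approaches 2 only slowly, since h₂(δ) decays like δ log(1/δ).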

The second example concerns the problem of disseminating all of the observations through an arbitrary network:

Example 2.

Consider the problem where ’s are i.i.d. samples from the uniform distribution over , , and . In other words, the goal of the nodes is to distribute their observations to all other nodes.

In this example, , and . Following Ayaso et al. [1, Def. III.4], we define the conductance of the network as

Then we have the following corollary:

Corollary 2.

Again, we see that the lower bound obtained from SDPI is much sharper for capturing the dependence of on , since as . On the other hand, the lower bound obtained from the cutset capacity upper bound is tighter in its dependence on , and can also capture the dependence on the conductance of the network.

Finally, we point out that Theorem 1 gives the correct lower bound when the network graph is disconnected (assuming depends on the observations of all nodes): If consists of two disconnected components and , then , which results in . Despite the sharp dependence of the lower bounds of Theorems 1 and 2 on and , they have the same limitation as all previously known bounds obtained via single-cutset arguments: they examine only the flow of information across a cutset , but not within ; hence they cannot capture the dependence of computation time on the diameter of the network. We address this limitation in the following section.

III Multi-cutset analysis

We now extend the techniques of Section II to a multi-cutset analysis, to address the limitation of the results obtained from the single-cutset analysis. In particular, the new results are able to quantify the dissipation of information as it flows across a succession of cutsets in the network. As briefly sketched in Sec. I-B, we accomplish this by partitioning a general network using multiple disjoint cutsets, such that the operation of any algorithm on the network can be simulated by another algorithm running on a chain of bidirectional noisy links. We then derive tight mutual information upper bounds for such chains, which in turn can be used to lower-bound the computation time for the original network.

III-A Network reduction

Consider an arbitrary network . If there exists a collection of nested subsets of , such that the associated cutsets are disjoint, and the cutsets are also disjoint, then we say that is successively partitioned according to into subsets , where , with and . For , a node in is called a left-bound node of if there is an edge from it to a node in . The set of left-bound nodes of is denoted by . For , define for an arbitrary . In addition, for , let

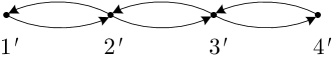

| (25) |

be the number of edges entering from its neighbors and , plus the number of edges entering from itself. For example, Fig. 2a in Sec. I-B illustrates a successive partition of a six-node network into three subsets , and , with , and . In addition, and . As another example, the network in Fig. 4a, where each undirected edge represents a pair of channels with opposite directions, can be successively partitioned into , , , , and , with , , , , and . In addition, , , , and .

Formally, a network has bidirectional links if, for any pair of nodes , if and only if . A path between and is a sequence of edges , such that and (if is connected, there is at least one path between any pair of nodes). The graph distance between and , denoted by , is the length of a shortest path between and (shortest paths are not necessarily unique). The diameter of is then defined by

The following lemma states that any such network can be successively partitioned into subsets:

Lemma 6.

Any network with bidirectional links (i.e., if and only if ) admits a successive partition into subsets with .

Proof:

For any and any , we define the sets

and

i.e., the ball and the sphere of radius centered at . In particular, .

We now construct the desired successive partition. Let , and pick any pair of nodes that achieve the maximum in the definition of . With this, we take

Clearly, , and moreover

From this construction, we see that

and

The pairwise disjointness of the cutsets , as well as of the cutsets , is immediate. ∎

Remarks:

• Using the construction underlying the proof, we can also show that, for any two nodes in , we can successively partition into subsets.

• For the successive partition constructed in the proof, all nodes in are left-bound nodes, and is the sum of the in-degrees of the nodes in .

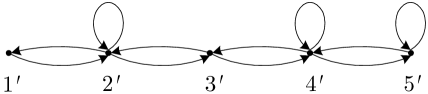

As an example, Fig. 5a shows the successive partition of the network in Fig. 4a using the construction in the proof, where , , , , , with , , and , , , and .

The successive partition of ensures that nodes in only communicate with nodes in and , as well as among themselves. Indeed, suppose that the network graph includes an edge with and , where . By construction of the successive partition, and . Therefore, belongs to both and . However, the cutsets and are disjoint, so we arrive at a contradiction. Likewise, we can use the disjointness of the cutsets and to show that the network graph contains no edges with , , and .
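The construction in the proof of Lemma 6 can be sketched in code. The following is a minimal illustration, assuming the network is given as an undirected adjacency dictionary (each undirected edge standing for a pair of opposite channels) and that the subsets are the spheres around one endpoint of a diameter-achieving pair. The ordering of the subsets and all function names are assumptions made for this example.

```python
from collections import deque

def bfs_distances(adj, source):
    """Graph distances from source in a connected undirected graph."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for w in adj[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    return dist

def successive_partition(adj):
    """Return (diameter, layers), where layers[r] is the sphere of radius r
    around a node that attains the diameter."""
    diameter, start, start_dist = -1, None, None
    for u in adj:
        dist = bfs_distances(adj, u)
        ecc = max(dist.values())
        if ecc > diameter:
            diameter, start, start_dist = ecc, u, dist
    layers = [set() for _ in range(diameter + 1)]
    for node, d in start_dist.items():
        layers[d].add(node)
    return diameter, layers

def edges_respect_chain(adj, layers):
    """Check that every edge joins nodes in the same or in adjacent layers."""
    index = {v: i for i, layer in enumerate(layers) for v in layer}
    return all(abs(index[u] - index[w]) <= 1 for u in adj for w in adj[u])

def in_degree_sums(adj, layers):
    """Sum of in-degrees per layer; with bidirectional links the in-degree
    of a node equals its number of neighbours."""
    return [sum(len(adj[v]) for v in layer) for layer in layers]
```

For a six-node ring this yields four layers of sizes 1, 2, 2, 1, one more than the diameter, and every edge stays within a layer or between consecutive layers, as the argument above requires.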

In view of this, we can associate to the partition a bidirected chain , i.e., a network with vertex set , edge set

and channel transition laws

| (26) | ||||

| (27) | ||||

| (28) |

where node in observes

In other words, the subset in is reduced to node in ; the channels across the subsets in are reduced to the channels between the nodes in ; and the channels from to in are reduced to a self-loop at node in . The channels from to in are not included in , and will be simulated by node using private randomness. For the network in Fig. 2a in Sec. I-B, according to the illustrated partition, it can be reduced to a -node bidirected chain in Fig. 2b, with , , and . For the network in Fig. 4a, according to the illustrated partition, it can be reduced to a -node bidirected chain in Fig. 4b, with , , and . According to the partition in Fig. 5a, the same network can be reduced to a -node bidirected chain in Fig. 5b, with , , and .

For the bidirected chain reduced from , we consider a class of randomized -step algorithms that run on and are of a more general form compared to the deterministic algorithms considered so far. Such a randomized algorithm operates as follows: at step , node computes the outgoing messages , , and , and computes the private message , where is the private randomness held by node , uniformly distributed on and independent across and . At step , node computes the final estimate of . These randomized algorithms have the feature that the message sent to the node on the left and the final estimate of a node are computed solely based on the node’s initial observation and received messages, whereas the messages sent to the node on the right and to itself are computed based on the node’s initial observation, received messages, as well as private messages, and the computation of the private messages involves the node’s private randomness. Define

| (29) |

as the -computation time for on using the randomized algorithms described above. The following lemma indicates that we can obtain lower bounds on by lower-bounding .

Lemma 7.

Consider an arbitrary network that can be successively partitioned into , such that ’s are all nonempty. Let be the bidirected chain constructed from according to the partition. Then, given any -step algorithm on that achieves , we can construct a randomized -step algorithm on , such that . Consequently, for computing on is lower bounded by defined in (29).

Proof:

Appendix A. ∎

Remark: In the network reduction, we can alternatively map all the channels from to (instead of only mapping the channels from to ) in the original network to the self-loop at node of the reduced chain . By doing so, to simulate the operation of an algorithm that runs on , the algorithm that runs on no longer needs to generate private messages using the nodes’ private randomness, since all the channels in are preserved in . In other words, under this alternative reduction, any -step algorithm that runs on can be simulated by a -step algorithm of the same deterministic type as that runs on . However, this alternative reduction increases the information transmission capability of the self-loops in , and will result in a looser lower bound on , as will be discussed in the remark following Theorem 3.

In light of Lemma 7, in order to lower-bound for computing on , we just need to lower-bound defined in (29). To this end, we derive upper bounds on the conditional mutual information for bidirected chains by extending the techniques behind Lemma 2 and Lemma 5:

Lemma 8.

Consider an -node bidirected chain with vertex set and edge set

and an arbitrary randomized -step algorithm that runs on this chain. Let denote the SDPI constant of the channel , and let . If , then

If , then

| | (30) | ||||

| | (31) |

with . For , the above upper bounds can be weakened to

| (32) | |||||

| (33) |

Moreover, if and

for some , then

| (34) |

Proof:

Appendix B. ∎

Equation (30) is reminiscent of a result of Rajagopalan and Schulman [13] on the evolution of mutual information in broadcasting a bit over a unidirectional chain of BSCs. The result in [13] is obtained by solving a system of recursive inequalities on the mutual information involving suboptimal SDPI constants. Our results apply to chains of general bidirectional links and to the computation of general functions. We arrive at a system of inequalities similar to the one in [13], which can be solved in a similar manner and gives (30) and (31). We also obtain weakened upper bounds in (32) and (33), which show that, for a fixed , the conditional mutual information decays at least exponentially fast in . The upper bound in (8) provides another weakening of (30) and (31), and shows explicitly the dependence of the upper bound on .
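To illustrate the kind of exponential decay discussed here, we can look at the simplest special case: a uniform bit relayed without coding through a unidirectional cascade of identical BSCs. This is only a toy instance, not the bound of Lemma 8. It relies on two standard facts: a cascade of `n` BSCs with crossover probability `p` is again a BSC, and the SDPI constant of a BSC for mutual information is `(1 - 2p)**2`. The function names are chosen for this sketch.

```python
import math

def binary_entropy(q: float) -> float:
    """Binary entropy in bits."""
    if q <= 0.0 or q >= 1.0:
        return 0.0
    return -q * math.log2(q) - (1 - q) * math.log2(1 - q)

def cascade_crossover(p: float, n: int) -> float:
    """Crossover probability of n BSC(p) channels connected in series."""
    return 0.5 * (1 - (1 - 2 * p) ** n)

def mutual_information_after(p: float, n: int) -> float:
    """I(X; Y_n) in bits for a uniform input bit X after n hops."""
    return 1.0 - binary_entropy(cascade_crossover(p, n))

def sdpi_bound(p: float, n: int) -> float:
    """Upper bound eta**n * H(X), with eta = (1 - 2p)**2 and H(X) = 1 bit."""
    return ((1 - 2 * p) ** 2) ** n
```

In this toy case the exact mutual information never exceeds `sdpi_bound(p, n)`, and both quantities shrink geometrically in the number of hops.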

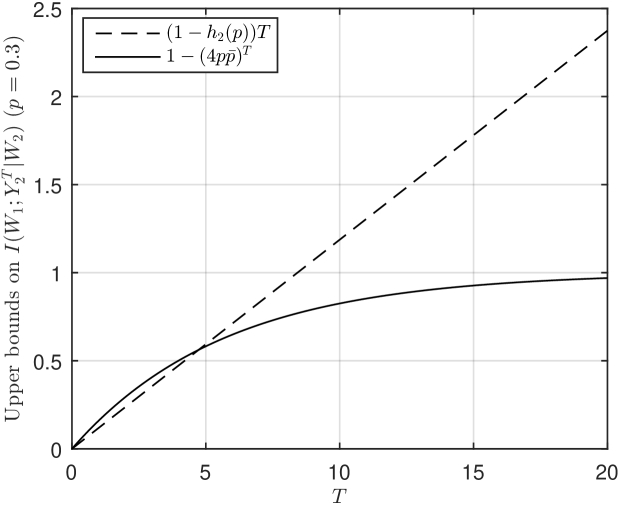

Assuming for simplicity that , Fig. 6 compares (30) with the weakened upper bound in (32). We can see that the gap can be large when is large and is much larger than . Nevertheless, the weakened upper bounds in (32) and (33) allow us to derive lower bounds on computation time that are non-asymptotic in , and explicit in , , and channel properties.

III-B Lower bounds on computation time

We now build on the results presented above to obtain lower bounds on the computation time by reducing the original problem to function computation over bidirected chains. We first provide the result for an arbitrary network, and then particularize it to several specific topologies (namely, chains, rings, grids, and trees).

III-B1 Lower bound for an arbitrary network

Theorem 3 below contains general lower bounds on computation time for an arbitrary network. The statement of the theorem is somewhat lengthy, but can be parsed as follows: Given an arbitrary connected network with bidirectional links, any reduction of that network to a bidirected chain gives rise to a system of inequalities that must be satisfied by the computation time . These inequalities, presented in (35), are nonasymptotic in nature and involve explicitly computable parameters of the network, but cannot be solved in closed form. The first inequality follows from an SDPI-based analysis analogous to Theorem 2, while the second inequality is a cutset bound in the spirit of Theorem 1. Explicit but weaker expressions that lower-bound in terms of network parameters appear below as (36) and (37), together with asymptotic expressions for large (the size of the reduced bidirected chain). Both of these bounds state that is lower-bounded by the size of the bidirected chain plus a correction term that accounts for the effect of channel noise (via channel capacities and SDPI constants). Finally, (38) and (39) provide the precise version of the bound in (8): asymptotically, the computation time scales as , where is the worst-case SDPI constant of the reduced network. By Lemma 6, it is always possible to reduce the network to a bidirected chain of length , so the main message of Theorem 3 is that the computation time scales at least linearly in the network diameter. Thus, the main advantage of the multi-cutset analysis over the usual single-cutset analysis is that it can capture this dependence on the network diameter.

Theorem 3.

Assume the following:

• The network graph is connected, the capacities of all edge links are upper-bounded by , and the SDPI constants of edge links are upper-bounded by .

• admits a successive partition into , such that ’s are all nonempty.

Let

where

as defined in (25), and let

Then for and , the -computation time must satisfy the inequalities

| (35) |

The above results can be weakened to

| (36) | ||||

as , and

| (37) |

Moreover, if the partition size is large enough, so that and

| (38) |

then

| (39) |

Proof:

In light of Lemma 7, it suffices to show that the lower bounds in Theorem 3 need to be satisfied by for the bidirected chain , to which reduces according to the partition .

Consider any randomized -step algorithm that achieves on . From Lemma 1,

Then from Lemma 8 and the fact that

| (40) |

we have

and for ,

| (41) |

Using (40), (III-B1) can be weakened to

| (42) |

The first line of (III-B1) leads to

where the last step follows from the fact that as for . The second line of (III-B1) leads to

Remarks:

• We call a node in a boundary node if there is an edge (either inward or outward) between it and a node in or . Denote the set of boundary nodes of by . The results in Theorem 3 can be weakened by replacing with

namely the summation of the in-degrees of boundary nodes of , since for .

• As discussed in the remark following Lemma 7, an alternative network reduction is to map all the channels from to (instead of only mapping the channels from to ) in the original network to the self-loop at node of the reduced chain . Using the same proof strategy with this alternative reduction, we can obtain lower bounds on of the same form as the results in Theorem 3, but with ’s replaced by

Since for , the lower bounds on obtained by this alternative network reduction are weaker than the results in Theorem 3, and are even weaker than the results obtained by replacing ’s with s.

• Choosing a successive partition of with is equivalent to choosing a single cutset. In that case, we see that (37) recovers Theorem 1, while (36) recovers a weakened version of Theorem 2 (in (36), is at least the sum of the in-degrees of the left-bound nodes of , while Theorem 2 involves the in-degree of only one node in ).

We now apply Theorem 3 to networks with specific topologies. We assume that nodes communicate via bidirectional links. Thus, any such network will be represented by an undirected graph, where each undirected edge represents a pair of channels with opposite directions.

III-B2 Chains

For chains, the proof of Theorem 3 already contains lower bounds on . These lower bounds apply to as well, since the class of -step algorithms on a chain is a subcollection of randomized -step algorithms on the same chain. We thus have the following corollary.

Corollary 3.

Consider an -node bidirected chain without self-loops, where the SDPI constants of all channels are upper bounded by . Then for and , must satisfy the inequalities in Theorem 3 with and for all . In particular, if all channels are , then

for all sufficiently large .

III-B3 Rings

Consider a ring with nodes, where the nodes are labeled clockwise from to . The diameter is equal to . According to the successive partition in the proof of Lemma 6, this ring can be partitioned into , , , and . As an example, Fig. 7a shows a -node ring and Fig. 7b shows the chain reduced from it.

With this partition, we can apply Theorem 3 and get the following corollary.

Corollary 4.

Consider a -node ring, where the SDPI constants of all channels are upper bounded by . Then for and , must satisfy the inequalities in Theorem 3 with and for all . In particular, if all channels are , then

for all sufficiently large .

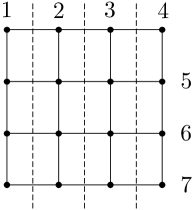

III-B4 Grids

Consider an grid (where we assume is odd), which has diameter . Figure 8a shows a successive partition of a grid into subsets, with . Figure 8b shows the successive partition in the proof of Lemma 6, which partitions the network into subsets, with , thus resulting in strictly tighter lower bounds on computation time compared to the ones obtained from the partition in Fig. 8a. With the latter partition, we get the following corollary.

Corollary 5.

Consider an grid, where is one of the longest paths. Assume that the SDPI constants of all channels are upper bounded by . Then for and , must satisfy the inequalities in Theorem 3 with , , , and . In particular, if all channels are , then

for all sufficiently large .

III-B5 Trees

Consider a tree, whose nodes are numbered in such a way that is one of the longest paths. Then the diameter of the tree is , and nodes and are necessarily leaf nodes. The tree can be viewed as being rooted at node . Let be the union of node and its descendants in the rooted tree, and let , . The tree can then be successively partitioned into . In the -node bidirected chain reduced according to this partition, the edges between nodes and are the pair of channels between nodes and in the tree, and the self-loop of node , , is the channel from to node in the tree.

As an example, Fig. 9a shows this partition of a tree network, and the chain reduced from it has the same form as the one in Fig. 4b. With this partition, we get the following corollary.

Corollary 6.

Consider a -regular tree network where is one of the longest paths. Assume that the SDPI constants of all channels are upper bounded by . Then for and , must satisfy the inequalities in Theorem 3 with and for all . In particular, if all channels are , then

for all sufficiently large .

If we use the successive partition in the proof of Lemma 6 on a -regular tree with diameter , then the tree will be reduced to an -node bidirected chain without self-loops. Figure 9b shows such an example. However, with this partition, increases with , which renders the resulting lower bound on computation time looser than the one in Corollary 6. It means that, although the partition in the proof of Lemma 6 always captures the diameter of a network, it may not always give the best lower bound on computation time among all possible successive partitions.

IV Small ball probability estimates for computation of linear functions

The bounds stated in the preceding sections involve the conditional small ball probability, defined in (9). In this section, we provide estimates for this quantity in the context of a distributed computation problem of wide interest — the computation of linear functions. Specifically, we assume that the observations , are independent real-valued random variables, and the objective is to compute a linear function

| (43) |

for a fixed vector of coefficients , subject to the absolute error criterion . We will use the following shorthand notation: for any set , let and .

The independence of the ’s and the additive structure of allow us to express the conditional small ball probability defined in (9) in terms of so-called Lévy concentration functions of random sums [19]. The Lévy concentration function of a real-valued r.v. (also known as the “small ball probability”) is defined as

| (44) |

If we fix a subset , and consider a specific realization of the observations of the nodes in , then

| (45) |

where in the second line we have used the fact that the ’s are independent r.v.’s, while in the third line we have used the fact that for any function and any , . In other words, for a fixed , the quantity is independent of the boundary condition , and is controlled by the probability law of the random sum , i.e., the part of the function that depends on the observations of the nodes in .

The problem of estimating Lévy concentration functions of sums of independent random variables has a long history in the theory of probability — for random variables with densities, some of the first results go back at least to Kolmogorov [34], while for discrete random variables it is closely related to the so-called Littlewood–Offord problem [35]. We provide a few examples to illustrate how one can exploit available estimates for Lévy concentration functions under various regularity conditions to obtain tight lower bounds on the computation time for linear functions. The examples are illustrated through Theorem 1, as it tightly captures the dependence of computation time on . (However, since the results of Theorems 2 and 3 also involve the quantity , the estimates for Lévy concentration functions can be applied there as well.)

IV-A Computing linear functions of continuous observations

IV-A1 Gaussian sums

Suppose that the local observations , , are i.i.d. standard Gaussian random variables. Then, for any , is a zero-mean Gaussian r.v. with variance (here, is the usual Euclidean norm). A simple calculation shows that

Using this in Theorem 1, we get the following result.

Corollary 7.

For the problem of computing a linear function in (43), where , suppose that the coefficients are all nonzero. Then for and ,

Thus, the lower bound on the computation time for (43) depends on the vector of coefficients only through its norm.
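The "simple calculation" for Gaussian sums can be reproduced numerically. In the sketch below, `sigma` plays the role of the Euclidean norm of the relevant coefficient subvector and `rho` is the radius of the small ball; both names, and the helper functions, are assumptions of this illustration. For a zero-mean Gaussian the supremum in the Lévy concentration function is attained by the interval centered at zero, so the function has a closed form in terms of the error function.

```python
import math

def gaussian_cdf(x: float, sigma: float) -> float:
    """CDF of a zero-mean Gaussian with standard deviation sigma."""
    return 0.5 * (1 + math.erf(x / (sigma * math.sqrt(2))))

def interval_probability(u: float, rho: float, sigma: float) -> float:
    """P[|U - u| <= rho] for U ~ N(0, sigma^2)."""
    return gaussian_cdf(u + rho, sigma) - gaussian_cdf(u - rho, sigma)

def levy_concentration_gaussian(rho: float, sigma: float) -> float:
    """Closed form: the supremum over u is attained at u = 0."""
    return math.erf(rho / (sigma * math.sqrt(2)))

def levy_concentration_numeric(rho, sigma, grid=2001, span=5.0):
    """Brute-force check: maximise over a grid of centres that includes 0."""
    centres = (span * sigma * (2 * i / (grid - 1) - 1) for i in range(grid))
    return max(interval_probability(u, rho, sigma) for u in centres)
```

Since the Gaussian density is at most `1 / (sigma * sqrt(2 * pi))`, the closed form is in turn bounded by `2 * rho / (sigma * sqrt(2 * pi))`, which makes explicit that only the norm of the coefficients matters.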

IV-A2 Sums of independent r.v.’s with log-concave distributions

Another instance in which sharp bounds on the Lévy concentration function are available is when the observations of the nodes are independent random variables with log-concave distributions (we recall that a real-valued r.v. is said to have a log-concave distribution if it has a density of the form , where is a convex function; this includes Gaussian, Laplace, uniform, etc.). The following result was obtained recently by Bobkov and Chistyakov [36, Theorem 1.1]: Let be independent random variables with log-concave distributions, and let . Then, for any ,

| (46) |

Corollary 8.

For the problem of computing a linear function in (43), where the ’s are independent random variables with log-concave distributions and with variances at least , suppose that the coefficients are all nonzero. Then for and ,

IV-A3 Sums of independent r.v.’s with bounded third moments

It is known that random variables with log-concave distributions have bounded moments of any order. Under a much weaker assumption that the local observations , have bounded third moments, we can prove the following result.

Corollary 9.

Consider the problem of computing the linear function in (43), where the ’s are independent zero-mean r.v.’s with variances at least and with third moments bounded by , and the coefficients satisfy the constraint for some . Then for and ,

where with some absolute constant .

Proof:

Under the conditions of the corollary, a small ball estimate due to Rudelson and Vershynin [37, Corollary 2.10] can be used to show that, for any ,

The desired conclusion follows immediately. ∎

IV-B Linear vector-valued functions

Similar to the Lévy concentration function of a real-valued random variable, the Lévy concentration function of a random vector taking values in can be defined as

Consider the case where each node observes an independent real-valued random variable , and the observations form a vector . Suppose the nodes wish to compute a linear transform of ,

| (47) |

with some fixed matrix , subject to the Euclidean-norm distortion criterion . In this case

where is the submatrix formed by the columns of with indices in . We will need the following result, due to Rudelson and Vershynin [38]. Let , , denote the singular values of arranged in non-increasing order, and define the stable rank of by

where is the Hilbert-Schmidt norm of , and is the spectral norm of . (Note that for any nonzero matrix , .) Then, provided

for all , we will have

where is an absolute constant [38, Theorem 1.4]. This result relates the Lévy concentration function of the linear transform of a vector to the Lévy concentration function of each coordinate of the vector. Applying this result in Theorem 1, we get a lower bound on for computing linear vector-valued functions.
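For reference, the stable rank used above can be written out explicitly. The display below is a sketch in notation assumed for this illustration (a matrix $A$ with singular values $s_1(A) \ge s_2(A) \ge \dots$), since the symbols are not reproduced in the surrounding text; it restates the standard definition as the ratio of the squared Hilbert-Schmidt norm to the squared spectral norm, together with its elementary bounds.

```latex
\[
  r(A) \;=\; \frac{\|A\|_{\mathrm{HS}}^{2}}{\|A\|^{2}}
       \;=\; \frac{\sum_{i} s_i(A)^{2}}{s_1(A)^{2}},
  \qquad
  1 \;\le\; r(A) \;\le\; \operatorname{rank}(A)
  \quad \text{for any nonzero } A .
\]
```

The lower bound holds because the largest squared singular value is one of the terms in the numerator, and the upper bound holds because each of the $\operatorname{rank}(A)$ nonzero terms is at most $s_1(A)^2$.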

Corollary 10.

For the problem of computing a linear transform of the observations defined in (47), where ’s are independent real-valued r.v.s, suppose the rows of are nonzero vectors. Then for and ,

for some absolute constant .

IV-C Linear function of discrete observations

Finally, we consider a case when the local observations have discrete distributions. Specifically, let the ’s be i.i.d. Rademacher random variables, i.e., each takes values with equal probability. We still use the absolute distortion function to quantify the estimation error. In this case, the Lévy concentration function will be highly sensitive to the direction of the vector , rather than just its norm. For example, consider the extreme case when for a single node , and all other coefficients are zero. Then . On the other hand, if for all and is even, then

where the last step is due to Stirling’s approximation. Moreover, a celebrated result due to Littlewood and Offord, improved later by Erdős [39], says that, if for all , then

which translates into a lower bound on the -computation time which is of the same order as the lower bound on the zero-error computation time.

Corollary 11.

For the problem of computing the linear function in (43), where the ’s are independent Rademacher random variables, suppose that for all , and . Then

as .
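The two facts used above for Rademacher sums can be verified by brute force for small networks. The sketch below enumerates all sign patterns, counts how many weighted sums fall in a half-open window of width 2, and compares the count with the central binomial coefficient, which is the Littlewood-Offord-Erdős bound when every coefficient has magnitude at least 1. Integer coefficients are used to avoid floating-point ties; the function names are illustrative.

```python
import math
from itertools import product

def max_window_count(coeffs, width=2):
    """Largest number of signed sums sum_i (+/-1) * coeffs[i] that fall in
    any half-open interval [x, x + width)."""
    sums = sorted(sum(s * a for s, a in zip(signs, coeffs))
                  for signs in product((-1, 1), repeat=len(coeffs)))
    best, left = 0, 0
    for right, value in enumerate(sums):
        # Shrink the window until it spans strictly less than `width`.
        while value - sums[left] >= width:
            left += 1
        best = max(best, right - left + 1)
    return best

def erdos_bound(n: int) -> int:
    """Central binomial coefficient C(n, floor(n/2))."""
    return math.comb(n, n // 2)

def central_probability(n: int) -> float:
    """P[sum of n i.i.d. Rademacher variables = 0] for even n."""
    return math.comb(n, n // 2) / 2**n
```

With all coefficients equal to 1 the bound is attained, which is the extremal case; dividing the count by `2**n` gives the corresponding small ball probability, and `central_probability(n)` approaches `sqrt(2 / (pi * n))` as Stirling's approximation predicts.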

IV-D Comparison with existing results

We illustrate the utility of the above bounds through comparison with some existing results. For example, Ayaso et al. [1] derive lower bounds on a related quantity

One of their results is as follows: if is a linear function of the form (43) and for some , then

| (48) |

for all sufficiently small , where is a fixed constant [1, Theorem III.5]. Let us compare (48) with what we can obtain using our techniques. It is not hard to show that

| (49) |

where is the norm of . Moreover, since any r.v. uniformly distributed on a bounded interval of the real line has a log-concave distribution, we can use Corollary 8 to lower-bound the right-hand side of (49). This gives

| (50) |

for all sufficiently small . We immediately see that this bound is tighter than the one in (48). In particular, the right-hand side of (48) remains bounded for vanishingly small and , and in the limit of tends to

By contrast, as , the right-hand side of (50) grows without bound as .

Another lower bound on the -computation time was obtained by Como and Dahleh [2]. Their starting point is the following continuum generalization of Fano’s inequality [2, Lemma 2] in terms of conditional differential entropy: if are two jointly distributed real-valued r.v.’s, such that , then, for any ,

| (51) |

If we use (51) instead of Lemma 1 to lower-bound , then we get

| (52) |

Again, let us consider the case when is a linear function of the form (43) with all nonzero and with . Then (52) becomes

| (53) |

The lower bound of our Corollary 7 will be tighter than (53) for all as long as

Note that the quantity on the right-hand side is nonpositive. More generally, for observations with log-concave distributions, the result of Lemma 1 can be weakened to get a lower bound involving the conditional differential entropy , which is tighter than similar results obtained in [2].

Corollary 12.

If the observations , , have log-concave distributions, then for computing the sum subject to the absolute error criterion , for and ,

Proof:

Let denote the probability density of . Then from (45),

| (54) |

for all , where is the sup norm of . By a result of Bobkov and Madiman [40, Proposition I.2], if is a real-valued r.v. with a log-concave density , then the differential entropy is upper-bounded by . Using this fact together with (54), the log-concavity of , and the fact that the ’s are mutually independent, we can write

Using this estimate in Theorem 1, we get the desired lower bound on . ∎
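The entropy estimate for log-concave densities used in this proof can be sanity-checked on a few textbook distributions. The sketch below assumes the one-dimensional form of the bound, namely that the differential entropy (in nats) of a log-concave density with sup norm `m` lies between `log(1/m)` and `1 + log(1/m)`; this form is stated from memory and should be checked against [40]. The closed-form entropies are standard.

```python
import math

def gaussian(sigma: float):
    """(differential entropy in nats, sup norm of the density) for N(0, sigma^2)."""
    return 0.5 * math.log(2 * math.pi * math.e * sigma**2), 1 / (sigma * math.sqrt(2 * math.pi))

def laplace(b: float):
    """Same pair for the Laplace density with scale b."""
    return 1 + math.log(2 * b), 1 / (2 * b)

def uniform(a: float):
    """Same pair for the uniform density on an interval of length a."""
    return math.log(a), 1 / a

def entropy_lower_bound(sup_density: float) -> float:
    return math.log(1 / sup_density)

def entropy_upper_bound(sup_density: float) -> float:
    return 1 + math.log(1 / sup_density)
```

The Laplace density meets the upper bound with equality and the uniform density meets the lower bound with equality, so neither side of the sandwich can be improved in general.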

V Comparison with upper bounds on computation time

For the two-node mod-2 sum problem in Example 1, we have shown in Corollary 1 that the lower bound on computation time given by Theorem 2 can tightly match the upper bound. In this section, we provide two more examples in which our lower bounds on computation time are tight. In the first example, our lower bound precisely captures the dependence of computation time on the number of nodes in the network. In the second example, our lower bound tightly captures the dependence of computation time on the accuracy parameter .

V-A Rademacher sum over a dumbbell network

Example 3.

Consider a dumbbell network of bidirectional BSCs with the same crossover probability. Formally, suppose is even, and let the nodes be indexed from to . Nodes to form a clique (i.e., each pair of nodes are connected by a pair of BSCs), while nodes to form another clique. The two cliques are connected by a pair of BSCs between nodes and . Each node initially observes a (or Rademacher) r.v. The goal is for the nodes to compute the sum of the observations of all nodes. The distortion function is .

By choosing the cutset as the pair of BSCs that joins the two cliques, our lower bound for random Rademacher sums in Corollary 11 gives the following lower bound on computation time.

Corollary 13.

Now we show that the above lower bound matches the upper bound on the computation time, which turns out to be

As shown by Gallager [11], for a fixed success probability, nodes and can learn the partial sum of the observations in their respective cliques in steps. These two nodes then exchange their partial sum estimates using binary block codes. Each partial sum can take values, and can be encoded losslessly with bits. The blocklength needed for transmission of the encoded partial sums is thus , where the hidden factor depends on the required success probability and the channel crossover probability, but not on . Having learned the partial sum of the other clique, nodes and continue to broadcast this partial sum to other nodes in their own clique. This takes another step. In total, the computation can be done in steps, to have all nodes learn the sum of all observations, for any prescribed success probability. This shows that .
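The counting step in this achievability argument is easy to verify. The sketch below assumes a clique of `m` nodes (half of the dumbbell), each holding a value in {-1, +1}; it enumerates the possible partial sums for small `m` and computes the number of bits needed to index them losslessly. The names are illustrative.

```python
from itertools import product

def partial_sum_values(m: int):
    """All distinct values of a sum of m variables taking values in {-1, +1}."""
    return sorted({sum(signs) for signs in product((-1, 1), repeat=m)})

def bits_needed(m: int) -> int:
    """Bits required to index the m + 1 possible partial sums,
    i.e. ceil(log2(m + 1)), computed exactly with integer arithmetic."""
    return m.bit_length()
```

A clique of `m` nodes therefore produces `m + 1` distinct partial sums, so the number of bits exchanged across the bridge between the cliques grows only logarithmically with the clique size.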

V-B Distributed averaging over discrete noisy channels

Example 4.

Consider a network where the nodes are connected by binary erasure channels with the same erasure probability. Each node initially observes a log-concave r.v. The goal is for the nodes to compute the average of the observations of all nodes.

For this example, Carli et al. [14] define the computation time as

and show that

| (55) |

where is the second largest singular value of the consensus matrix adapted to the network, and and are positive constants depending only on channel erasure probability. It can be shown that the above upper bound still holds (with different constants) when channels are BSCs.

We use Corollary 12 to derive the following lower bound on .

Corollary 14.

For the problem in Example 4,

| (56) |

Proof:

The lower bound given by (56) states that is necessarily logarithmic in , which tightly matches the poly-logarithmic dependence on in the upper bound given by (55). As pointed out in Carli et al. [41], it is possible to prove that a computation time logarithmic in is achievable by embedding a quantized consensus algorithm for noiseless networks into the simulation framework developed by Rajagopalan and Schulman for noisy networks in [13].

VI Conclusion and future research directions

We have studied the fundamental time limits of distributed function computation from an information-theoretic perspective. The computation time depends on the amount of information about the function value needed by each node and the rate for the nodes to accumulate such an amount of information. The small ball probability lower bound on conditional mutual information reveals how much information is necessary, while the cutset-capacity upper bound and the SDPI upper bound capture the bottleneck on the rate for the information to be accumulated. The multi-cutset analysis provides a more refined characterization of the information dissipation in a network.

Here are some questions that are worthwhile to consider in the future:

• In the multi-cutset analysis, the purpose of introducing self-loops when reducing the network to a chain is to establish necessary Markov relations for proving upper bounds on in bidirected chains, and the reason for considering left-bound nodes is to improve the lower bounds on computation time. We could have included all channels from to into the self-loop at node in , but this would result in looser lower bounds on computation time (cf. the remark after Theorem 3). However, there might be other network reduction methods, e.g., different ways to construct the bidirected chain, that will yield even tighter lower bounds on computation time than our proposed method.

• In the first step of the derivation of Lemma 2 and Lemma 5, we have upper-bounded using the ordinary data processing inequality as

One may wonder whether we can tighten this step by a judicious use of SDPIs. The answer is negative. It can be shown that

where the contraction coefficient depends on the joint distribution of the observations and the function . However,

for both discrete and continuous observations. For discrete observations, this is a consequence of the fact that if and only if the graph is connected [26], and the fact that, for any induced by a deterministic function , this graph is always disconnected. This condition can be extended to continuous alphabets [42]. It would be interesting to see whether nonlinear SDPIs, e.g., of the sort recently introduced by Polyanskiy and Wu [28], can somehow be applied here to tighten the upper bounds.

- If the function to be computed is the identity mapping, i.e., , then the goal of the nodes is to distribute their observations to all other nodes in the network. In this case, our results on the computation time can provide non-asymptotic lower bounds on the blocklength of the codes for the source-channel coding problems in multi-terminal networks. In Example 2, we have considered one such case with discrete observations, and obtained lower bounds in Corollary 2 based on the single cutset analysis. It would be interesting to apply the multi-cutset analysis to the source-channel coding problems in multi-terminal, multi-hop networks.
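The obstruction raised in the second question can be illustrated on a toy case. The sketch below assumes that the graph in question is the bipartite support graph of a joint distribution, with an edge between x and y whenever P(x, y) > 0; this reading, the helper names `support_graph_is_connected` and `joint_from_function`, and the two toy distributions are illustrative assumptions rather than constructions from the paper. It checks that the joint law induced by a deterministic, non-constant function (here the parity of two bits) has a disconnected support graph, while a noisy version of the same map has a connected one.

```python
from collections import defaultdict


def support_graph_is_connected(joint):
    """Check connectivity of the bipartite support graph of a joint pmf.

    `joint` maps pairs (x, y) to probabilities. Vertices are the x- and
    y-symbols that carry positive mass; (x, y) is an edge iff joint[(x, y)] > 0.
    """
    adj = defaultdict(set)
    for (x, y), p in joint.items():
        if p > 0:
            adj[("x", x)].add(("y", y))
            adj[("y", y)].add(("x", x))
    if not adj:
        return True  # empty graph: nothing to disconnect
    start = next(iter(adj))
    seen = {start}
    stack = [start]
    while stack:  # depth-first search from an arbitrary vertex
        node = stack.pop()
        for neighbor in adj[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                stack.append(neighbor)
    return len(seen) == len(adj)


def joint_from_function(f, px):
    """Joint pmf of (W, f(W)) when W has pmf `px` (hypothetical helper)."""
    return {(w, f(w)): p for w, p in px.items()}


# Toy observations: two independent uniform bits; toy function: their parity.
PX = {(a, b): 0.25 for a in (0, 1) for b in (0, 1)}
DETERMINISTIC = joint_from_function(lambda w: w[0] ^ w[1], PX)

# Same map followed by a binary symmetric channel with crossover 0.1.
NOISY = {
    (w, z): pw * (0.9 if z == (w[0] ^ w[1]) else 0.1)
    for w, pw in PX.items()
    for z in (0, 1)
}
```

For the deterministic map, each observation is joined to exactly one function value, so the connected components are the preimages of the function and the graph is disconnected as soon as the function takes two values.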

Acknowledgment

The authors would like to thank the Associate Editor Prof. Chandra Nair and two anonymous referees for numerous constructive suggestions on how to improve the flow and the structure of the paper.

Appendix A Proof of Lemma 7

The goal of this proof is to show that, given any -step algorithm running on , we can construct a randomized -step algorithm running on that simulates . Fix any -step algorithm that runs on . For each , we can factor the conditional distribution of the messages given as follows:

| (A.1) |

Likewise, the conditional distribution of the received messages given can be factored as

| (A.2) |

Since the successive partition of ensures that nodes in can communicate with nodes in only if , the messages originating from at step can be decomposed as

and the messages received by nodes in at step can be decomposed as

| (A.3) |

According to the operation of algorithm , for each there exists a mapping , such that . By the definition of , we can write

Thus, there exists a mapping , such that

| (A.4) |

where

| (A.5) |

By the same token, there exist mappings , and , such that

| (A.6) |

| (A.7) |

| (A.8) |

Define the random variables

From the decomposition of in (A.3), we know that contains ; while from the decomposition of in (A.5), we know that contains . Therefore, from Eqs. (A.4) and (A.6)-(A.8), we deduce the existence of mappings , , , and , such that the messages transmitted by nodes in at time can be generated as

| (A.9) |

| (A.10) |

| (A.11) |

| (A.12) |

Note that the computation of does not involve . Next, the messages received by nodes in at step are related to the transmitted messages as

where the stochastic transition laws have the same form as those in Eqs. (26) to (28). In addition, since and are related through the channels from to , there exists a mapping such that can be realized as

| (A.13) |

where can be taken as a random variable uniformly distributed over and independent of everything else. From (A.12) and (A.13), we know that can be realized by a mapping as

| (A.14) |
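The step above realizes a noisy channel as a deterministic mapping of its input and an independent uniform random variable. A minimal sketch of that idea for a finite-output channel is given below, using inverse-CDF sampling; the function names `realize_channel` and `send_over_bsc`, and the binary symmetric channel with crossover probability 0.1, are hypothetical choices for illustration and are not the mappings constructed in the proof.

```python
import bisect
from itertools import accumulate


def realize_channel(row, u):
    """Deterministic map g(x, u) for one channel input x.

    `row` is the conditional pmf P(. | x) as a list over output indices,
    and `u` is a number in [0, 1). If U is uniform on [0, 1), then
    g(x, U) = y with probability row[y].
    """
    cdf = list(accumulate(row))
    # First output index whose cumulative probability strictly exceeds u.
    y = bisect.bisect_right(cdf, u)
    # Guard against floating-point rounding in the last CDF entry.
    return min(y, len(row) - 1)


# Assumed toy channel: binary symmetric channel with crossover 0.1.
BSC = {0: [0.9, 0.1], 1: [0.1, 0.9]}


def send_over_bsc(x, u):
    """Channel output as a function of the input bit x and the seed u."""
    return realize_channel(BSC[x], u)


def output_law(row, n=1000):
    """Fraction of a uniform grid of seeds mapped to each output index."""
    counts = [0] * len(row)
    for k in range(n):
        counts[realize_channel(row, (k + 0.5) / n)] += 1
    return [c / n for c in counts]
```

Drawing `u` once from a uniform generator and calling `send_over_bsc(x, u)` then reproduces the channel law, which is why a node that holds the channel input and an independent uniform seed can generate a received message with the correct conditional distribution.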

Taking all of this into account, we can rewrite the factorization (A.1) as follows:

| (A.15) |

and we can rewrite the factorization (A.2) as

| (A.16) |

where the channel can be realized by the mapping with the r.v. .

To summarize: the mappings defined in (A.9) to (A.11) and (A.14) specify a randomized -step algorithm that runs on and simulates the -step algorithm that runs on . Specifically, using these mappings, each node in can generate all the transmitted and received messages of in as . Moreover, from (A.15) and (A.16) we see that the random objects

and

have the same joint distribution.

Finally, as we have assumed that ’s are all nonempty, we can define

with an arbitrary . From the definition of and the fact that contains , it follows that there exists a mapping such that

Using this mapping, node in can generate the final estimate of the chosen in as , such that and have the same joint distribution. This guarantees that

The claim that for computing on is lower bounded by for computing on then follows from the definition of in (III-A). This proves Lemma 7.

Appendix B Proof of Lemma 8

Recall that, for any randomized -step algorithm , at step , node computes the outgoing messages , , and , and the private message , where is the private randomness of node . At step , node computes . We will use the Bayesian network formed by all the relevant variables and the d-separation criterion [24, Theorem 3.3] to find conditional independences among these variables. To simplify the Bayesian network, we merge some of the variables by defining

and

for . The joint distribution of the variables can then be factored as

| (B.1) |

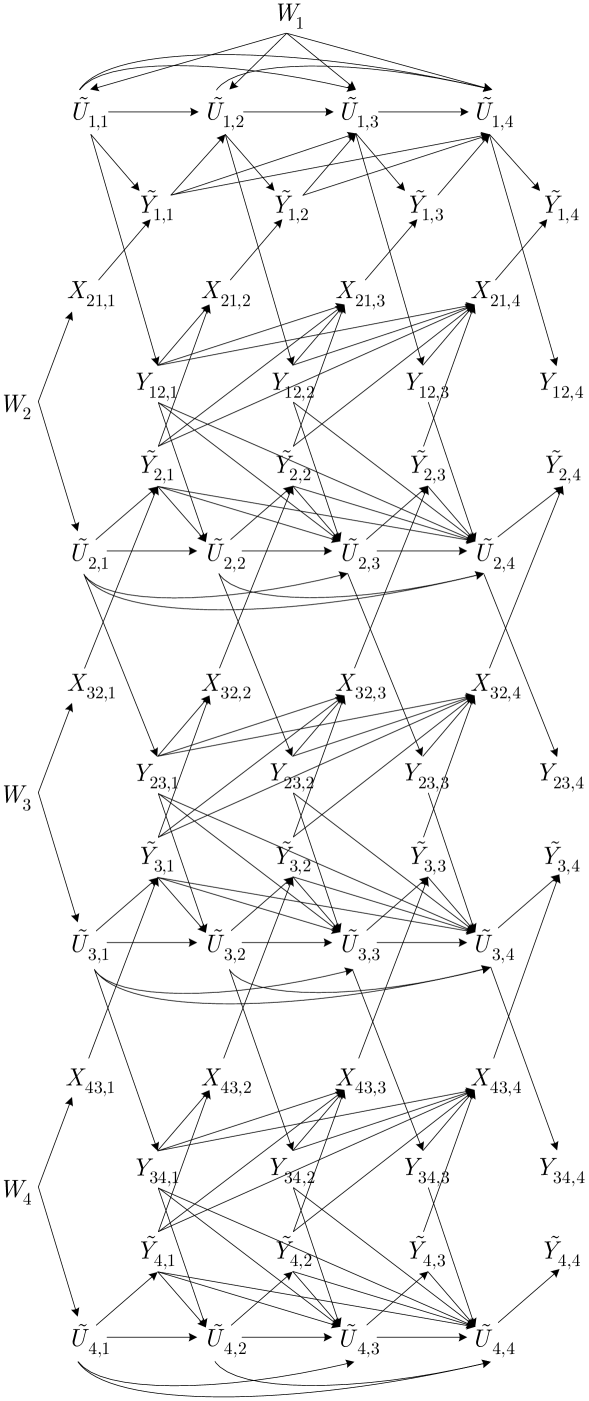

The Bayesian network corresponding to this factorization for and is shown in Fig. 10.
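Reading Markov chains off a Bayesian network via d-separation can be mechanized. The sketch below uses the standard equivalent test on the moralized ancestral graph: two sets are d-separated by a third if and only if they are disconnected in the moral graph of the ancestral closure once the conditioning nodes are removed. The toy network `TOY_PARENTS` (observations W1 and W2, a transmitted message X1, a received message Y2, and an estimate Z2) is an assumption made for illustration and is not the network of Fig. 10.

```python
def d_separated(parents, xs, ys, zs):
    """Return True if xs and ys are d-separated given zs in a DAG.

    `parents` maps each node to an iterable of its parents.
    """
    xs, ys, zs = set(xs), set(ys), set(zs)

    # Step 1: ancestral closure of all nodes involved in the query.
    relevant = set()
    stack = list(xs | ys | zs)
    while stack:
        v = stack.pop()
        if v not in relevant:
            relevant.add(v)
            stack.extend(parents.get(v, ()))

    # Step 2: moralize (link each node to its parents, marry co-parents).
    adj = {v: set() for v in relevant}
    for v in relevant:
        ps = list(parents.get(v, ()))
        for p in ps:
            adj[v].add(p)
            adj[p].add(v)
        for i, p in enumerate(ps):
            for q in ps[i + 1:]:
                adj[p].add(q)
                adj[q].add(p)

    # Step 3: search from xs while never entering a conditioning node.
    seen = set(xs - zs)
    stack = list(seen)
    while stack:
        v = stack.pop()
        if v in ys:
            return False
        for w in adj[v]:
            if w not in zs and w not in seen:
                seen.add(w)
                stack.append(w)
    return True


# Assumed toy network: W1 -> X1 -> Y2 -> Z2 <- W2.
TOY_PARENTS = {
    "W1": [],
    "W2": [],
    "X1": ["W1"],
    "Y2": ["X1"],
    "Z2": ["W2", "Y2"],
}
```

In this toy network, `d_separated(TOY_PARENTS, {"W1"}, {"Y2"}, {"X1"})` corresponds to the Markov chain W1, X1, Y2, whereas conditioning on the estimate Z2 couples the two observations because Z2 is a common descendant of both.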

If , then , hence . For , we prove the upper bounds in the following steps, where we assume . The case can be proved by skipping Step 2, and the case can be proved by skipping Step 1 and Step 2.

Step 1:

For any and , define the shorthand , where is the in-neighborhood of node . From the Markov chain and Lemma 3, we follow the same argument as the one used for proving Lemma 5 to show that

Applying the d-separation criterion to the Bayesian network corresponding to (B.1) (see Fig. 10 for an illustration), we can read off the Markov chain

for , since all trails from to are blocked by , and all trails from to are blocked by . This implies the Markov chain , since is included in and is included in . Consequently, by the ordinary DPI and the fact that, if is a Markov chain, then is a Markov chain conditioned on ,

| (B.2) |

Also note that .

Step 2:

For , from the Markov chain

and Lemma 3,

From the Bayesian network corresponding to (B.1), we can read off the Markov chain

for , since all trails from to

are blocked by , and all trails from

to are blocked by . This implies the Markov chain

since is included in and is included in . Therefore,

| (B.3) |

for . Also note that

Step 3:

Finally, we upper-bound for .

From the Markov chain and Lemma 3,

| (B.4) |

This upper bound is useful only when is finite. If the observations are continuous r.v.’s, we can upper bound in terms of the channel capacity :

| (B.5) |

where we have used the Markov chain for , which follows by applying the d-separation criterion to the Bayesian network corresponding to the factorization in (B.1), so that the second term in (a) is zero; the Markov chain , which also implies the Markov chain by the weak union property of conditional independence, hence (b) and (c); and the fact that .

Step 4:

Define for and .

From (B.2), (B.3), (B.4), and (B.5), we can write, for , , and ,

| (B.6) |

where , and . In addition, for ,

| (B.7) |

and .

An upper bound on can be obtained by solving this set of recursive inequalities with the specified boundary conditions. It can be checked by induction that if . For , if for all , then the above inequalities continue to hold with ’s replaced with . The resulting set of inequalities is similar to the one obtained by Rajagopalan and Schulman [13] for the evolution of mutual information in broadcasting a bit over a unidirectional chain of BSCs. With

the exact solution is given by

for , and

For general ’s, we obtain a suboptimal upper bound by unrolling the first term in (B.6) for each and using the fact that for , getting

Iterating over , and noting that

we get for and ,

| (B.8) |
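The comparison made above with broadcasting a bit over a unidirectional chain of BSCs can be checked numerically in the simplest setting. The sketch below is a toy illustration and not the recursion (B.6)-(B.7): it assumes a uniform input bit sent through n identical BSCs with crossover probability p and no feedback, and it relies on two standard facts that are not taken from the text here, namely that the cascade is again a BSC with crossover (1 - (1 - 2p)^n)/2, and that the SDPI contraction coefficient of a BSC is (1 - 2p)^2. All function names are hypothetical.

```python
import math


def binary_entropy(p):
    """Binary entropy in bits."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def cascade_crossover(p, n):
    """Crossover probability of n BSC(p) channels in series."""
    return 0.5 * (1.0 - (1.0 - 2.0 * p) ** n)


def mutual_information_after_hops(p, n):
    """Exact I(X; Y_n) in bits for a uniform input bit X."""
    return 1.0 - binary_entropy(cascade_crossover(p, n))


def sdpi_bound_after_hops(p, n):
    """SDPI bound: contract H(X) = 1 bit by (1 - 2p)^2 at every hop."""
    return (1.0 - 2.0 * p) ** (2 * n)


def dissipation_table(p, max_hops):
    """Rows of (hops, exact mutual information, SDPI bound)."""
    return [
        (n, mutual_information_after_hops(p, n), sdpi_bound_after_hops(p, n))
        for n in range(1, max_hops + 1)
    ]
```

Calling `dissipation_table(0.1, 10)` lists, hop by hop, the exact mutual information next to the geometric SDPI bound, which shows the exponential decay with chain length that the multi-cutset analysis is designed to capture.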

The weakened upper bounds in (32) and (33) are obtained by replacing in (B) with

Finally, we show (8) using an argument similar to the one in [13]. If and for some , then

where the last inequality follows from the assumption that , since otherwise . The upper bounds in (30) and (31) can be weakened to

where

- (a) and (b) follow from monotonicity properties of the binomial distribution;

- (c) follows from the Chernoff–Hoeffding bound;

- (d) follows from the fact that the channels associated with are independent, and from the assumption that and .
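As a sanity check on the Chernoff–Hoeffding step, the sketch below compares an exact binomial tail with the bound exp(-n D(a || p)), where D is the binary relative entropy in nats. Which tail the proof uses cannot be recovered from the text here, so the lower tail is an assumption, and the function names and parameter values are hypothetical.

```python
import math


def kl_bernoulli(a, p):
    """Relative entropy D(a || p) in nats between Bernoulli laws, 0 < p < 1."""
    total = 0.0
    if a > 0.0:
        total += a * math.log(a / p)
    if a < 1.0:
        total += (1.0 - a) * math.log((1.0 - a) / (1.0 - p))
    return total


def binomial_lower_tail(n, p, k):
    """Exact P[Bin(n, p) <= k]."""
    return sum(
        math.comb(n, i) * p ** i * (1.0 - p) ** (n - i) for i in range(k + 1)
    )


def chernoff_hoeffding_lower_tail(n, p, k):
    """Upper bound on P[Bin(n, p) <= k]; valid only when k <= n * p."""
    a = k / n
    if a > p:
        raise ValueError("the lower-tail bound requires k <= n * p")
    return math.exp(-n * kl_bernoulli(a, p))
```

For example, with n = 50 and p = 0.5, `binomial_lower_tail(50, 0.5, 15)` stays below `chernoff_hoeffding_lower_tail(50, 0.5, 15)`, and the two coincide at k = 0, where the bound reduces to (1 - p)^n.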

References

- [1] O. Ayaso, D. Shah, and M. Dahleh, “Information-theoretic bounds for distributed computation over networks of point-to-point channels,” IEEE Trans. Inform. Theory, vol. 56, no. 12, pp. 6020–6039, 2010.

- [2] G. Como and M. Dahleh, “Lower bounds on the estimation error in problems of distributed computation,” in Proc. Inform. Theory and Applications Workshop, 2009, pp. 70–76.

- [3] N. Goyal, G. Kindler, and M. Saks, “Lower bounds for the noisy broadcast problem,” SIAM Journal on Computing, vol. 37, no. 6, pp. 1806–1841, 2008.

- [4] C. Dutta, Y. Kanoria, D. Manjunath, and J. Radhakrishnan, “A tight lower bound for parity in noisy communication networks,” in Proc. ACM Symposium on Discrete Algorithms (SODA), 2014, pp. 1056–1065.

- [5] M. Braverman, “Interactive information and coding theory,” in Proc. Int. Congress Math., 2014.

- [6] A. Orlitsky and J. Roche, “Coding for computing,” IEEE Trans. Inform. Theory, vol. 47, no. 3, pp. 903–917, 2001.

- [7] J. Körner and K. Marton, “How to encode the modulo-two sum of binary sources,” IEEE Trans. Inform. Theory, vol. 25, no. 2, pp. 219–221, 1979.

- [8] A. B. Wagner, S. Tavildar, and P. Viswanath, “Rate region of the quadratic Gaussian two-encoder source-coding problem,” IEEE Trans. Inform. Theory, vol. 54, no. 5, pp. 1938–1961, 2008.

- [9] A. El Gamal and Y.-H. Kim, Network Information Theory. Cambridge Univ. Press, 2011.

- [10] A. Giridhar and P. Kumar, “Toward a theory of in-network computation in wireless sensor networks,” IEEE Communications Magazine, vol. 44, no. 4, pp. 98–107, April 2006.

- [11] R. Gallager, “Finding parity in a simple broadcast network,” IEEE Trans. Inform. Theory, vol. 34, no. 2, pp. 176–180, 1988.

- [12] L. Schulman, “Coding for interactive communication,” IEEE Trans. Inform. Theory, vol. 42, no. 6, pp. 1745–1756, 1996.

- [13] S. Rajagopalan and L. Schulman, “A coding theorem for distributed computation,” in ACM Symposium on Theory of Computing, 1994.

- [14] R. Carli, G. Como, P. Frasca, and F. Garin, “Distributed averaging on digital erasure networks,” Automatica, vol. 47, no. 1, pp. 115–121, 2011.

- [15] S. Kar and J. Moura, “Distributed consensus algorithms in sensor networks with imperfect communication: Link failures and channel noise,” IEEE Trans. Signal Process., vol. 57, no. 1, pp. 355–369, 2009.

- [16] N. Noorshams and M. Wainwright, “Non-asymptotic analysis of an optimal algorithm for network-constrained averaging with noisy links,” IEEE J. Sel. Top. Sign. Proces., vol. 5, no. 4, pp. 833–844, 2011.

- [17] L. Ying, R. Srikant, and G. Dullerud, “Distributed symmetric function computation in noisy wireless sensor networks with binary data,” in International Symposium on Modeling and Optimization in Mobile, Ad-Hoc and Wireless networks (WiOpt), 2006.

- [18] S. Deb, M. Medard, and C. Choute, “Algebraic gossip: a network coding approach to optimal multiple rumor mongering,” IEEE Trans. Inform. Theory, vol. 52, no. 6, pp. 2486–2507, 2006.

- [19] V. V. Petrov, Sums of Independent Random Variables. Berlin: Springer-Verlag, 1975.

- [20] M. Raginsky, “Strong data processing inequalities and Φ-Sobolev inequalities for discrete channels,” IEEE Trans. Inform. Theory, vol. 62, no. 6, pp. 3355–3389, 2016.

- [21] P. Tiwari, “Lower bounds on communication complexity in distributed computer networks,” J. ACM, vol. 34, no. 4, pp. 921–938, Oct. 1987.

- [22] A. Chattopadhyay, J. Radhakrishnan, and A. Rudra, “Topology matters in communication,” in Proc. IEEE Annu. Symp. on Foundations of Comp. Sci. (FOCS), Oct. 2014, pp. 631–640.

- [23] T. Cover and J. Thomas, Elements of Information Theory, 2nd ed. New York: Wiley, 2006.

- [24] D. Koller and N. Friedman, Probabilistic Graphical Models: Principles and Techniques. MIT Press, 2009.

- [25] Y. Polyanskiy and Y. Wu, “Lecture Notes on Information Theory,” Lecture Notes for ECE563 (UIUC) and 6.441 (MIT), 2012-2016. [Online]. Available: http://people.lids.mit.edu/yp/homepage/data/itlectures_v4.pdf

- [26] R. Ahlswede and P. Gács, “Spreading of sets in product spaces and hypercontraction of the Markov operator,” Ann. Probab., vol. 4, no. 6, pp. 925–939, 1976.

- [27] V. Anantharam, A. Gohari, S. Kamath, and C. Nair, “On maximal correlation, hypercontractivity, and the data processing inequality studied by Erkip and Cover,” arXiv preprint, 2013. [Online]. Available: http://arxiv.org/abs/1304.6133

- [28] Y. Polyanskiy and Y. Wu, “Dissipation of information in channels with input constraints,” IEEE Trans. Inform. Theory, vol. 62, no. 1, pp. 35–55, 2016.

- [29] W. Evans and L. Schulman, “Signal propagation and noisy circuits,” IEEE Trans. Inform. Theory, vol. 45, no. 7, pp. 2367–2373, 1999.

- [30] A. Xu, “Information-theoretic limitations of distributed information processing,” Ph.D. dissertation, University of Illinois at Urbana-Champaign, 2016.