Infrared Small Target Detection via Tensor $L_{2,1}$ Norm Minimization and ASSTV Regularization: A Novel Tensor Recovery Approach

Abstract

Infrared small target detection has received considerable attention in recent years because of its importance in processing signals from infrared remote sensing. Prior methods rely heavily on the nuclear norm for noise separation and therefore incur a considerable computational cost, which motivates the search for efficient alternatives. The aim of this research is to identify a fast and robust tensor recovery method for extracting infrared small targets from image sequences. Theoretical validation indicates that the smaller singular values predominantly contribute to constructing noise information. To exclude them, tensor QR decomposition is employed to reduce the size of the target tensor. We then propose a method based on tensor $L_{2,1}$ norm minimization via T-QR (TLNMTQR) to isolate the noise, markedly improving computational speed without compromising accuracy. In addition, by integrating asymmetric spatial-temporal total variation (ASSTV) regularization, we aim to improve the flexibility and efficacy of the algorithm on time-series data. Finally, we test our method on real-world data, and the results demonstrate its advantages in speed, precision, and robustness.

Index Terms:

Tensor recovery, $L_{2,1}$ norm, infrared small target detection, tensor QR decomposition, asymmetric spatial-temporal total variation, image recovery.

I INTRODUCTION

In the contemporary era of the internet, our lives are intricately woven into the fabric of signals [1]. As we harness these signals, our constant aspiration is for precise and expeditious data collection. Nonetheless, in practical application, the dichotomy between speed and accuracy arises due to technological constraints. Sampling at lower rates presents a formidable challenge in acquiring truly comprehensive data. This challenge is particularly pronounced in the domain of infrared small target detection [2, 3, 4], where there is a pressing demand for a rapid and efficient methodology.

Compressive sensing [5, 6] offers a technical solution: it samples signals sparsely at lower rates and then solves an optimization problem to reconstruct the complete signal. However, this method is mostly applied to one-dimensional data. In practice, data such as infrared images, audio, and video are typically stored as matrices. These data likewise suffer partial element loss during collection, transmission, and storage. If compressive sensing methods are applied to them directly, the relationships between elements along the rows and columns may be overlooked.

This is where matrix recovery methods come into play. Any discussion of early effective algorithms in matrix recovery must mention Robust Principal Component Analysis (RPCA). The RPCA model is defined as $X = L + S$. This model describes the process of decomposing a noisy original matrix $X$ into a low-rank matrix $L$ and a sparse matrix $S$. In the solving process, an optimization problem composed of the $\ell_1$-norm and the nuclear norm is used. These methods fall under the category of Low-Rank and Sparse Matrix Decomposition Models (LRSD) [7, 8, 9]. For instance, [10] introduced a swift and effective solver for robust principal component analysis. Building upon this, various methods have since emerged that leverage the concept of low-rank matrix recovery; see [11, 12, 13].

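TABLE I: Notations

| Notation | Description | Notation | Description |
|---|---|---|---|
| $\mathbf{a}$ | vectors | $I$ | the identity matrix |
| $A$ | matrices | $\mathcal{I}$ | the identity tensor |
| $\mathcal{A}$ | tensor | $\langle \mathcal{A}, \mathcal{B} \rangle$ | the inner product of $\mathcal{A}$ and $\mathcal{B}$ |
| $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$ | a third-order tensor | $\mathrm{conj}(\mathcal{A})$ | the complex conjugate of $\mathcal{A}$ |
| $\mathcal{A}_{ijk}$ | the $(i,j,k)$th entry of $\mathcal{A}$ | $\|\mathcal{A}\|_F$ | the tensor Frobenius norm of $\mathcal{A}$ |
| $\mathcal{A}(i,j,:)$ | the tube fiber of $\mathcal{A}$ | $\|\mathcal{A}\|_1$ | the tensor $\ell_1$ norm of $\mathcal{A}$ |
| $A^{(i)}$ | the $i$th frontal slice | $\|\mathcal{A}\|_{2,1}$ | the tensor $L_{2,1}$ norm of $\mathcal{A}$ |
| $\mathcal{A}^{H}$ | the conjugate transpose of $\mathcal{A}$ | $\|\mathcal{A}\|_{*}$ | the tensor nuclear norm of $\mathcal{A}$ |
| $\bar{\mathcal{A}}$ | the result of DFT on $\mathcal{A}$ | $\|\mathcal{A}\|_{ASSTV}$ | the tensor ASSTV norm of $\mathcal{A}$ |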

As research progresses, tensors are seen as a way to further optimize and upgrade LRSD, known as Tensor Low-Rank and Sparse Matrix Decomposition Models (TLRSD) [14, 15]. Tensors, due to their increased dimensions, encompass more information and exhibit significantly enhanced computational efficiency.

Analogous to matrix recovery methods, Tensor Robust Principal Component Analysis (TRPCA) [16] was proposed and showed promising results in tensor recovery. Similarly, methods related to tensor decomposition have gradually emerged. In recent years, Total Variation (TV) regularization [17, 18, 19] has been widely applied to solve tensor recovery problems. However, traditional TV regularization, while considering spatial information, is computationally complex. Hence, spatial spectral total variation (SSTV) [20] was introduced, significantly reducing the complexity of solving TV regularization. Yet, besides the spatial information within the tensor itself, the temporal information in tensors remained underutilized, leading to the introduction of ASSTV [21] to incorporate both temporal and spatial information.

Thus, tensor recovery has evolved to primarily solve the ASSTV regularization and nuclear norm optimization problems. However, this process relies on the tensor singular value decomposition (TSVD) [22] to solve the tensor nuclear norm minimization problem, which is highly time-consuming. To address this, we propose a new method, ASSTV-TLNMTQR, which combines ASSTV regularization and TLNMTQR. Our contributions are as follows:

1. We introduce the ASSTV-TLNMTQR method, which significantly accelerates tensor decomposition. Experiments in infrared small target detection demonstrate its rapid and efficient tensor recovery capabilities.

2. We present a strategy, rooted in tensor decomposition, for minimizing the $L_{2,1}$ norm. This method is highly effective in solving tensor decomposition problems and is faster than previous approaches that rely on the nuclear norm.

3. Our approach integrates ASSTV regularization and TLNMTQR. This integration enables the model to produce good solutions across varied contexts through flexible parameter adjustment.

II NOTATIONS AND PRELIMINARIES

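II-A Fast Fourier transform

The symbols and definitions used throughout this paper are listed in TABLE I. To state our model, we first introduce some fundamental definitions and theorems. We begin with an operation closely tied to the tensor product and the matrix product: the Discrete Fourier Transform (DFT). For $v \in \mathbb{R}^{n}$, we denote its DFT by $\bar{v} = F_n v \in \mathbb{C}^{n}$,

where $F_n$ is the DFT matrix denoted as

$$F_n = \begin{bmatrix} 1 & 1 & 1 & \cdots & 1 \\ 1 & \omega & \omega^{2} & \cdots & \omega^{n-1} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 1 & \omega^{n-1} & \omega^{2(n-1)} & \cdots & \omega^{(n-1)(n-1)} \end{bmatrix} \in \mathbb{C}^{n \times n},$$

where $\omega = e^{-2\pi i / n}$ and $i$ is the imaginary unit. It follows that $F_n / \sqrt{n}$ is orthogonal, i.e.,

$$F_n^{H} F_n = F_n F_n^{H} = n I_n. \tag{1}$$

A direct computation gives the following conclusion:

$$(F_{n_3} \otimes I_{n_1}) \cdot \mathrm{bcirc}(\mathcal{A}) \cdot (F_{n_3}^{-1} \otimes I_{n_2}) = \bar{A}, \tag{2}$$

where $\otimes$ denotes the Kronecker product and $(F_{n_3} \otimes I_{n_1}) / \sqrt{n_3}$ is orthogonal. Moreover, $\bar{A}$ and $\mathrm{bcirc}(\mathcal{A})$ are defined as follows.

$$\bar{A} = \mathrm{bdiag}(\bar{\mathcal{A}}) = \begin{bmatrix} \bar{A}^{(1)} & & & \\ & \bar{A}^{(2)} & & \\ & & \ddots & \\ & & & \bar{A}^{(n_3)} \end{bmatrix},$$

$$\mathrm{bcirc}(\mathcal{A}) = \begin{bmatrix} A^{(1)} & A^{(n_3)} & \cdots & A^{(2)} \\ A^{(2)} & A^{(1)} & \cdots & A^{(3)} \\ \vdots & \vdots & \ddots & \vdots \\ A^{(n_3)} & A^{(n_3 - 1)} & \cdots & A^{(1)} \end{bmatrix}.$$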
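The two DFT facts used in this subsection can be checked numerically. The following is a minimal NumPy sketch, not part of the original method: it verifies that the DFT matrix satisfies $F_n^{H} F_n = n I_n$ and that the DFT along the third mode block-diagonalizes the block circulant matrix of a tensor. The helper names `bcirc` and `bdiag` and the test sizes are chosen here for illustration.

```python
import numpy as np

def bcirc(A):
    """Block circulant matrix of a tensor A (n1 x n2 x n3) built from its frontal slices."""
    n1, n2, n3 = A.shape
    out = np.zeros((n1 * n3, n2 * n3), dtype=A.dtype)
    for i in range(n3):
        for j in range(n3):
            # Block (i, j) holds frontal slice (i - j) mod n3.
            out[i * n1:(i + 1) * n1, j * n2:(j + 1) * n2] = A[:, :, (i - j) % n3]
    return out

def bdiag(A_bar):
    """Block diagonal matrix whose diagonal blocks are the frontal slices of A_bar."""
    n1, n2, n3 = A_bar.shape
    out = np.zeros((n1 * n3, n2 * n3), dtype=A_bar.dtype)
    for k in range(n3):
        out[k * n1:(k + 1) * n1, k * n2:(k + 1) * n2] = A_bar[:, :, k]
    return out

rng = np.random.default_rng(0)
n1, n2, n3 = 3, 4, 5
A = rng.standard_normal((n1, n2, n3))

# DFT matrix with entries omega^(jk), omega = exp(-2*pi*i/n3).
F = np.fft.fft(np.eye(n3))

# Left-hand side of the block-diagonalization identity.
lhs = np.kron(F, np.eye(n1)) @ bcirc(A) @ np.kron(np.linalg.inv(F), np.eye(n2))
# Right-hand side: frontal slices of the FFT of A along the third mode.
rhs = bdiag(np.fft.fft(A, axis=2))

print(np.allclose(F.conj().T @ F, n3 * np.eye(n3)), np.allclose(lhs, rhs))
```

In practice the block circulant matrix is never formed explicitly; the identity is what justifies working slice by slice in the Fourier domain.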

II-B Tensor product

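Here, we provide the definition of the t-product. Let $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$ and $\mathcal{B} \in \mathbb{R}^{n_2 \times l \times n_3}$. Then the t-product $\mathcal{A} * \mathcal{B}$ is the following tensor of size $n_1 \times l \times n_3$:

$$\mathcal{A} * \mathcal{B} = \mathrm{fold}(\mathrm{bcirc}(\mathcal{A}) \cdot \mathrm{unfold}(\mathcal{B})), \tag{3}$$

where $\mathrm{unfold}(\cdot)$ and $\mathrm{fold}(\cdot)$ are the unfold operator map and fold operator map of $\mathcal{A}$ respectively, i.e.,

$$\mathrm{unfold}(\mathcal{A}) = \begin{bmatrix} A^{(1)} \\ A^{(2)} \\ \vdots \\ A^{(n_3)} \end{bmatrix} \in \mathbb{R}^{n_1 n_3 \times n_2},$$

$$\mathrm{fold}(\mathrm{unfold}(\mathcal{A})) = \mathcal{A}.$$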
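As an illustration, the t-product can be computed either from the fold/bcirc/unfold definition or, much more cheaply, by multiplying matching frontal slices in the Fourier domain. The sketch below compares the two in NumPy; the function names are hypothetical and chosen here for readability.

```python
import numpy as np

def bcirc(A):
    """Block circulant matrix built from the frontal slices of A (n1 x n2 x n3)."""
    n1, n2, n3 = A.shape
    out = np.zeros((n1 * n3, n2 * n3), dtype=A.dtype)
    for i in range(n3):
        for j in range(n3):
            out[i * n1:(i + 1) * n1, j * n2:(j + 1) * n2] = A[:, :, (i - j) % n3]
    return out

def unfold(B):
    """Stack the frontal slices of B vertically."""
    return np.concatenate([B[:, :, k] for k in range(B.shape[2])], axis=0)

def fold(M, n3):
    """Inverse of unfold: split M into n3 row blocks and stack them as frontal slices."""
    rows = M.shape[0] // n3
    return np.stack([M[k * rows:(k + 1) * rows, :] for k in range(n3)], axis=2)

def t_product_def(A, B):
    """t-product straight from the definition fold(bcirc(A) @ unfold(B))."""
    return fold(bcirc(A) @ unfold(B), A.shape[2])

def t_product_fft(A, B):
    """t-product via slice-wise matrix products in the Fourier domain."""
    A_bar = np.fft.fft(A, axis=2)
    B_bar = np.fft.fft(B, axis=2)
    C_bar = np.einsum('ijk,jlk->ilk', A_bar, B_bar)  # one matrix product per slice
    return np.fft.ifft(C_bar, axis=2).real           # real inputs give a real result

rng = np.random.default_rng(1)
A = rng.standard_normal((3, 4, 5))
B = rng.standard_normal((4, 2, 5))
C_def = t_product_def(A, B)
C_fft = t_product_fft(A, B)
print(C_def.shape, np.allclose(C_def, C_fft))
```

The FFT route avoids forming the $n_1 n_3 \times n_2 n_3$ block circulant matrix, which is why tensor algorithms of this kind are usually implemented slice by slice.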

II-C Tensor QR decomposition

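The most important component in $L_{2,1}$-norm minimization [23] is the tensor QR decomposition, which is an approximation of SVD. Let $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$. Then it can be factored as

$$\mathcal{A} = \mathcal{Q} * \mathcal{R}, \tag{4}$$

where $\mathcal{Q}$ is orthogonal, and $\mathcal{R}$ is analogous to the upper triangular matrix.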
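One common way to compute a tensor QR factorization is to take the FFT along the third mode, QR-factor each frontal slice, and transform back. The following NumPy sketch follows that recipe under the assumption of a real input tensor; it uses the reduced QR of each slice, and the names `t_qr` and `t_product` are illustrative rather than taken from the paper.

```python
import numpy as np

def t_product(A, B):
    """t-product computed slice by slice in the Fourier domain."""
    C_bar = np.einsum('ijk,jlk->ilk', np.fft.fft(A, axis=2), np.fft.fft(B, axis=2))
    return np.fft.ifft(C_bar, axis=2).real

def t_qr(A):
    """Tensor QR of a real tensor A (n1 x n2 x n3): A = Q * R under the t-product."""
    n1, n2, n3 = A.shape
    k = min(n1, n2)
    A_bar = np.fft.fft(A, axis=2)
    Q_bar = np.zeros((n1, k, n3), dtype=complex)
    R_bar = np.zeros((k, n2, n3), dtype=complex)
    half = n3 // 2 + 1
    for i in range(half):
        # Reduced QR of each frontal slice in the Fourier domain.
        Q_bar[:, :, i], R_bar[:, :, i] = np.linalg.qr(A_bar[:, :, i])
    for i in range(half, n3):
        # Remaining slices follow from conjugate symmetry, so Q and R come out real.
        Q_bar[:, :, i] = np.conj(Q_bar[:, :, n3 - i])
        R_bar[:, :, i] = np.conj(R_bar[:, :, n3 - i])
    Q = np.fft.ifft(Q_bar, axis=2).real
    R = np.fft.ifft(R_bar, axis=2).real
    return Q, R

rng = np.random.default_rng(2)
A = rng.standard_normal((4, 3, 5))
Q, R = t_qr(A)
print(np.allclose(t_product(Q, R), A))
```

Each slice-wise QR costs far less than a slice-wise SVD, which is the source of the speed advantage that QR-based methods claim over t-SVD-based ones.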

II-D Asymmetric spatial-temporal total variation

TV regularization [17, 18, 19] is widely applied in infrared small target detection, as it allows us to better utilize the information in the images. By incorporating TV regularization, we can maintain the smoothness of the image during image recovery and denoising, thereby eliminating potential artifacts. Typically, TV regularization is applied to a matrix $X$ as shown in Equation (5).

$$\|X\|_{TV} = \|D_h X\|_1 + \|D_v X\|_1 \tag{5}$$

However, this approach neglects the temporal information. Hence, we use the asymmetric spatial-temporal total variation (ASSTV) regularization method to model both spatial and temporal continuity. There are two main reasons for choosing ASSTV regularization:

- It facilitates the preservation of image details and better noise elimination.

- By introducing a new parameter $\delta$, it allows us to assign different weights to the temporal and spatial components, enabling more flexible tensor recovery.

Now, we provide the definition of ASSTV, as shown in Equation (6):

$$\|\mathcal{X}\|_{ASSTV} = \|D_h \mathcal{X}\|_1 + \|D_v \mathcal{X}\|_1 + \delta \|D_z \mathcal{X}\|_1, \tag{6}$$

where $D_h$, $D_v$, and $D_z$ are the horizontal, vertical, and temporal difference operators, respectively. $\delta$ denotes a positive constant, which is used to control the contribution in the temporal dimension. The three operators are defined as follows:

$$D_h \mathcal{X}(i,j,k) = \mathcal{X}(i+1,j,k) - \mathcal{X}(i,j,k), \tag{7}$$

$$D_v \mathcal{X}(i,j,k) = \mathcal{X}(i,j+1,k) - \mathcal{X}(i,j,k), \tag{8}$$

$$D_z \mathcal{X}(i,j,k) = \mathcal{X}(i,j,k+1) - \mathcal{X}(i,j,k). \tag{9}$$
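To make the role of the temporal weight concrete, the sketch below evaluates an ASSTV-style norm with NumPy, assuming the usual form: the $\ell_1$ norms of forward differences along the first, second, and third indices, with the temporal term scaled by a positive weight `delta`. Boundary handling is an assumption here: `np.diff` simply drops the last difference along each axis, whereas an FFT-based solver would typically assume periodic boundaries.

```python
import numpy as np

def asstv_norm(X, delta):
    """ASSTV-style norm: spatial differences plus a delta-weighted temporal difference."""
    dh = np.diff(X, axis=0)  # forward difference along the first index
    dv = np.diff(X, axis=1)  # forward difference along the second index
    dz = np.diff(X, axis=2)  # forward difference along the third (temporal) index
    return np.abs(dh).sum() + np.abs(dv).sum() + delta * np.abs(dz).sum()

# Toy tensor X[i, j, k] = i + 10*j + 100*k, so the three differences are 1, 10, and 100.
X = np.fromfunction(lambda i, j, k: i + 10 * j + 100 * k, (2, 2, 2))
value = asstv_norm(X, delta=0.5)
print(value)
```

Setting `delta` below 1 down-weights frame-to-frame changes relative to spatial changes, and setting it above 1 does the opposite; this is the flexibility the second bullet above refers to.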

II-E Tensor $L_{2,1}$ norm minimization via tensor QR decomposition

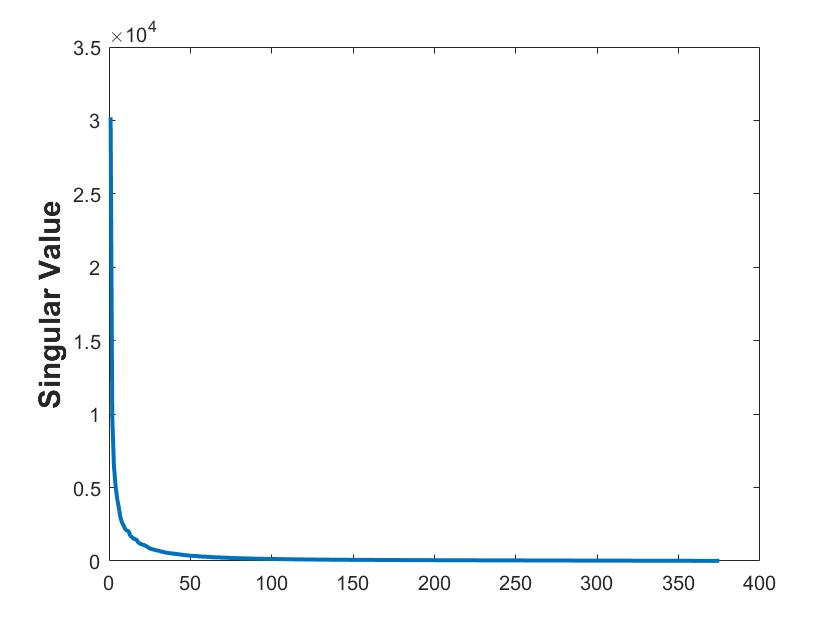

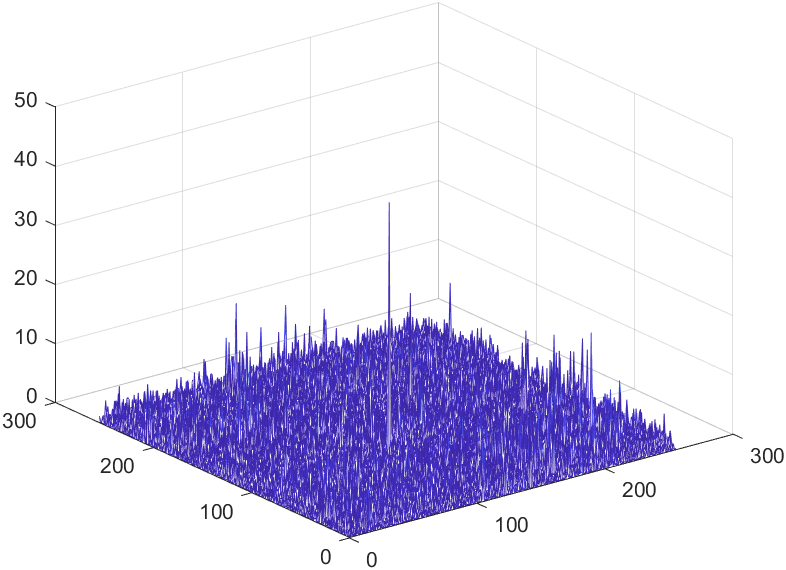

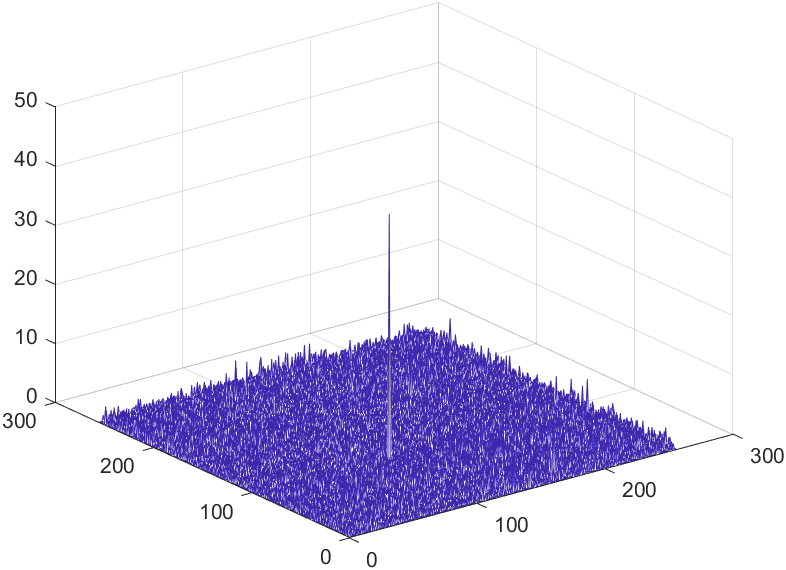

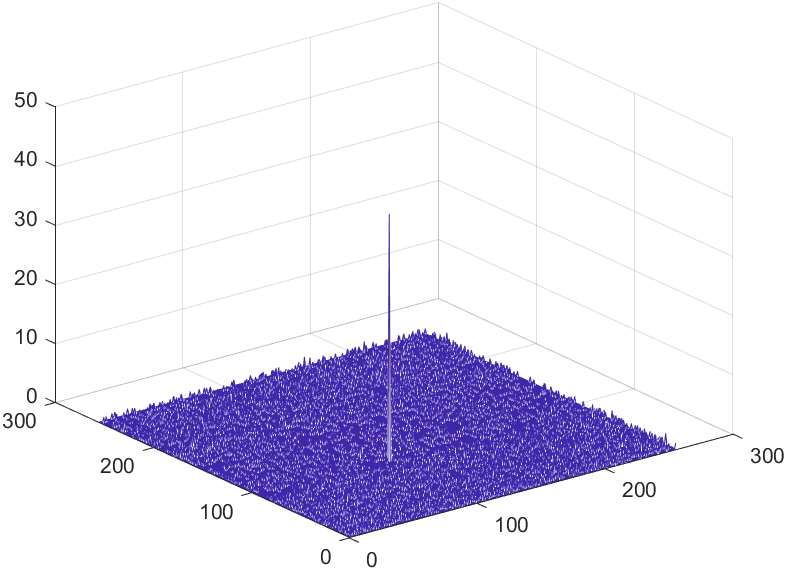

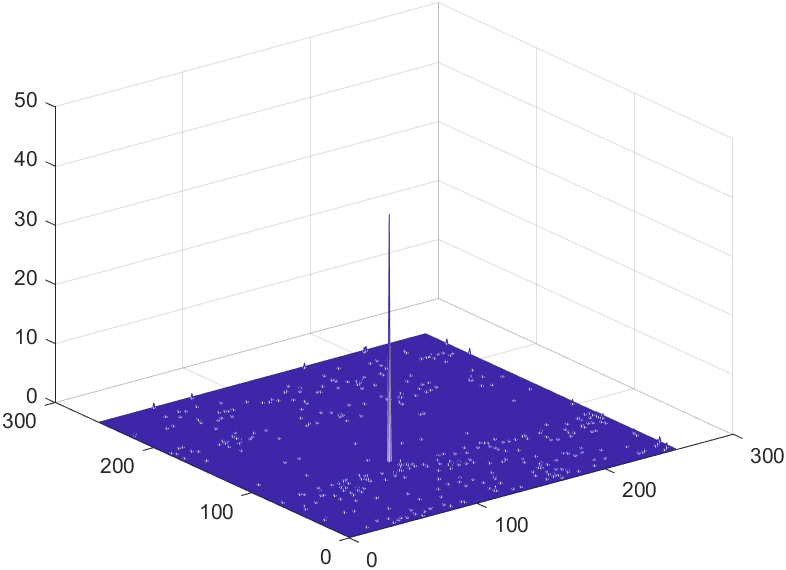

In matrix recovery, a common approach is to solve the nuclear norm minimization problem through Singular Value Decomposition (SVD) to separate noise and restore images. When the SVD is applied to a single channel of an RGB image, the singular values can be observed, as depicted in Fig. 1. However, the SVD is computationally demanding and often requires substantial execution time to converge. Consequently, we adopt a tensor recovery method based on tensor tri-factorization to address the $L_{2,1}$ norm minimization problem. This approach aims to achieve image recovery with reduced computational complexity.

| (10) |

First, we will introduce the matrix version. For the problem (10), there exists an optimal solution (11).

| (11) |

However, computing the entire matrix requires significant computational resources and time. Moreover, in most cases, the smaller singular values of the matrix primarily reconstruct noise information. Therefore, it is unnecessary to compute them during matrix recovery. Hence, we seek a method that computes only the first few larger singular values and their corresponding singular vectors, accelerating the overall computation.

This problem amounts to finding a rank-$r$ approximation of the matrix $X$, that is, solving problem (12).

| (12) |

where $L \in \mathbb{R}^{m \times r}$, $D \in \mathbb{R}^{r \times r}$, $R \in \mathbb{R}^{r \times n}$, and $r$ represents the rank of the approximation ($r \le \min(m, n)$). $L$ and $R$ are column orthogonal and row orthogonal matrices respectively, while $D$ is a regular square matrix. $\varepsilon$ is a positive error threshold. Problem (12) can be solved with an iterative method.

We initialize three matrices as follows: , , and . Then, we iterate to update , , and using the QR decomposition. First, fixing , we have:

Next, fixing , we obtain:

Finally:

After multiple iterations, the tri-factorization of is completed.
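The iteration can be sketched as follows. This is a minimal NumPy illustration under an assumption: it uses the common QR-based alternating updates (an orthonormal basis of $X R^{T}$ for the left factor, an orthonormal basis of $X^{T} L$ for the right factor, then $D = L^{T} X R^{T}$). The names `tri_factorize`, `n_iter`, and `tol` are hypothetical, and the exact update rules of the method may differ.

```python
import numpy as np

def tri_factorize(X, r, n_iter=20, tol=1e-10):
    """Approximate X (m x n) by L @ D @ R with L^T L = I and R R^T = I (assumes r <= min(m, n))."""
    m, n = X.shape
    L, D, R = np.eye(m, r), np.eye(r), np.eye(r, n)   # simple identity-like initialization
    for _ in range(n_iter):
        # Fix R: L is an orthonormal basis for the range of X R^T.
        Q, _ = np.linalg.qr(X @ R.T)
        L = Q[:, :r]
        # Fix L: R^T is an orthonormal basis for the range of X^T L.
        Q, _ = np.linalg.qr(X.T @ L)
        R = Q[:, :r].T
        # Core matrix given both orthogonal factors.
        D = L.T @ X @ R.T
        if np.linalg.norm(X - L @ D @ R) ** 2 <= tol:
            break
    return L, D, R

rng = np.random.default_rng(3)
X = rng.standard_normal((30, 3)) @ rng.standard_normal((3, 20))  # exactly rank 3
L, D, R = tri_factorize(X, r=3)
print(np.linalg.norm(X - L @ D @ R))
```

Only QR factorizations of thin $m \times r$ and $n \times r$ matrices are needed, so the cost per iteration is much lower than a full SVD when $r$ is small.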

Next, we utilize the obtained tri-factorization matrices to optimize our problem. First, we have , and then we establish the following minimization model:

| (13) |

Let , then we have Equation (15).

| (15) |

We observe that this problem, resembling (10), can be solved using the contraction operator from (11), as shown in Equation (16).

| (16) |
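For reference, the contraction (shrinkage) operator associated with an $L_{2,1}$ norm has a simple closed form. The sketch below assumes the standard proximal problem $\min_X \tau \|X\|_{2,1} + \tfrac{1}{2}\|X - C\|_F^2$, with $\|X\|_{2,1}$ taken as the sum of the column 2-norms; the symbols `C` and `tau` are illustrative, and the scaling used in the method itself may differ.

```python
import numpy as np

def prox_l21(C, tau):
    """Column-wise shrinkage: proximal operator of tau * ||X||_{2,1}."""
    norms = np.linalg.norm(C, axis=0)                       # 2-norm of every column
    scale = np.maximum(norms - tau, 0.0) / np.maximum(norms, 1e-12)
    return C * scale                                        # shrink each column toward zero

C = np.array([[3.0, 0.1],
              [4.0, 0.1]])
X_star = prox_l21(C, tau=1.0)
print(X_star)
```

Columns whose norm is below `tau` are set to zero as a whole, while the others keep their direction and lose `tau` in length. No SVD is involved, which is what makes an $L_{2,1}$-based update cheap compared with singular value thresholding.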

Similarly, for the tensor , we need to solve the following optimization problem:

| (17) |

We need to perform tri-factorization on the tensor and find three tensors such that , where and are orthogonal, and is a tensor.

We obtain , , and by solving the following optimization problem:

| (18) |

Then, we can utilize iterative method to obtain , , and . The final solution to the problem (17) is transformed into solving the following problem:

| (19) |

Let . The optimal solution for this modified problem is:

| (21) |

The complete algorithmic process is illustrated in Algorithm 1.

III OUR METHOD FOR TENSOR RECOVERY

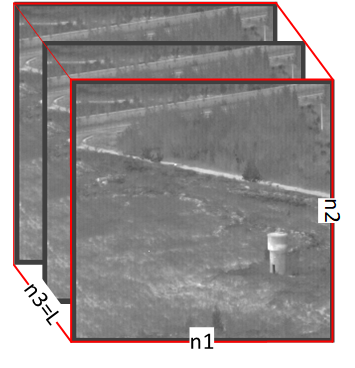

There are different methods for constructing tensors in different settings. In infrared small target detection, we divide a continuous sequence of images into blocks using a sliding window [21, 24] that moves from the top left to the bottom right. Eventually, we obtain a tensor $\mathcal{D} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$; see Fig. 2. The height and width of the sliding window are $n_1$ and $n_2$, respectively, with a thickness of $n_3$.
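The construction can be illustrated with a short NumPy sketch. It is a simplified reading of the sliding-window idea, with assumptions labeled: the window scans each frame from top left to bottom right with a fixed step, and every patch becomes one frontal slice of the output tensor. The function name, the `step` parameter, and the stacking order are hypothetical.

```python
import numpy as np

def build_patch_tensor(frames, win_h, win_w, step):
    """Stack sliding-window patches of an image sequence (H x W x L) along the third mode."""
    H, W, num_frames = frames.shape
    patches = []
    for t in range(num_frames):                       # frame by frame
        for top in range(0, H - win_h + 1, step):     # top to bottom
            for left in range(0, W - win_w + 1, step):  # left to right
                patches.append(frames[top:top + win_h, left:left + win_w, t])
    return np.stack(patches, axis=2)

frames = np.arange(8 * 8 * 2, dtype=float).reshape(8, 8, 2)
D = build_patch_tensor(frames, win_h=4, win_w=4, step=4)
print(D.shape)
```

With an 8 x 8 frame, a 4 x 4 window, and a step of 4, each frame yields four patches, so two frames give a tensor with eight frontal slices.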

After constructing the tensor $\mathcal{D}$, if we perform the SVD on $\mathcal{D}$, we can clearly observe that it is a low-rank tensor.

Then, we define the following linear model:

$$\mathcal{D} = \mathcal{B} + \mathcal{T} + \mathcal{N}, \tag{22}$$

where $\mathcal{B}$, $\mathcal{T}$, and $\mathcal{N}$ represent the background image, target image, and noise image, respectively.

III-A The proposed model

By combining the $L_{2,1}$ norm and ASSTV regularization, we define our model as follows:

| (23) |

Our model employs the $L_{2,1}$ norm, which significantly enhances algorithm efficiency. Additionally, we use tensor low-rank approximation to better estimate the background, and ASSTV allows for more flexible tensor recovery.

III-B Optimization procedure

In this section, we use the Alternating Direction Method of Multipliers (ADMM) to solve (23). First, we rewrite (23) in the following form:

| (25) |

Next, we present the augmented Lagrangian formulation of (25):

| (26) |

where $\mu$ represents a positive penalty scalar and $y_1$, $y_2$, $y_3$, $y_4$, $y_5$ represent the Lagrangian multipliers. We use the ADMM algorithm to solve (26), alternately updating each variable while the others are held fixed:

1. We update the value of $\mathcal{Z}$ using the following equation:

   | (27) |

   For more details about the update of $\mathcal{Z}$, please refer to Algorithm 1. In the experiment, one iteration produced promising results, so we only performed one.

2. We update the value of $\mathcal{B}$ using the following equation:

   | (28) |

   To solve (28), we can utilize the following system of linear equations:

   | (29) |

   where $T$ denotes the matrix transpose. By considering convolutions along two spatial directions and one temporal direction, we obtain a new Equation (30) as follows:

   | (30) |

3. We update the value of $\mathcal{T}$ using the following equation:

   | (31) |

   The closed-form solution of (31) can be obtained with the element-wise shrinkage operator, that is:

   | (32) |

4. We update the values of $\mathcal{V}_1$, $\mathcal{V}_2$, and $\mathcal{V}_3$ using the following equations:

   | (33) |

   Here, we can also use the element-wise shrinkage operator to solve the above problem:

   | (34) |

5. We update the value of $\mathcal{N}$ using the following equation:

   | (35) |

   The solution of (35) is as follows:

   | (36) |

6. We update the multipliers $y_1$, $y_2$, $y_3$, $y_4$, $y_5$ with the other variables fixed:

   | (37) |

7. We update the penalty scalar $\mu$.

Algorithm 2: ASSTV-TLNMTQR algorithm

Input: infrared image sequence, number of frames, parameters
1: Initialize: transform the image sequence into the original tensor $\mathcal{D}$; set the remaining variables and multipliers to 0 and initialize the parameters
2: while not converged do
3-8: Update the variables and multipliers as in steps 1 to 6 above, i.e., (27) to (37)
9: Update the penalty scalar $\mu$
10: Check the convergence conditions
11: Update the iteration counter
12: end while
Output: $\mathcal{B}$, $\mathcal{T}$, $\mathcal{N}$

TABLE II: Pictures introduction

| Sequence | Frames | Image Size | Average SCR | Target Descriptions | Background Descriptions |
|---|---|---|---|---|---|
| 1 | 120 | | 5.45 | Far range, single target | ground |
| 2 | 120 | | 5.11 | Near to far, single target | ground |
| 3 | 120 | | 6.33 | Near to far, single target | ground |
| 4 | 120 | | 6.07 | Far to near, single target | ground |
| 5 | 120 | | 5.20 | Far distance, single target | ground-sky boundary |
| 6 | 120 | | 1.98 | Target near to far, single target, faint target | ground |

TABLE III: Parameter setting

| Methods | Acronyms | Parameter settings |
|---|---|---|
| Total Variation Regularization and Principal Component Pursuit [25] | TV-PCP | |
| Partial Sum of the Tensor Nuclear Norm [26] | PSTNN | Sliding step: 40 |
| Multiple Subspace Learning and Spatial-temporal Patch-Tensor Model [27] | MSLSTIPT | |
| Nonconvex tensor fibered rank approximation [28] | NTFRA | |
| Non-Convex Tensor Low-Rank Approximation [21] | ASSTV-NTLA | |
| Tensor Norm Minimization via Tensor QR Decomposition | ASSTV-TLNMTQR | |
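The element-wise shrinkage operator used in update steps 3 and 4 is the standard soft-thresholding function. A minimal NumPy sketch is given below; the threshold name `tau` is illustrative, since in an ADMM scheme the actual threshold is typically a regularization weight divided by the penalty scalar.

```python
import numpy as np

def soft_threshold(x, tau):
    """Element-wise shrinkage: argmin over z of tau*|z| + 0.5*(z - x)^2."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

x = np.array([-3.0, -0.5, 0.0, 0.5, 3.0])
shrunk = soft_threshold(x, tau=1.0)
print(shrunk)
```

Entries with magnitude below `tau` are set to zero and the rest move toward zero by `tau`, which is why this update yields a sparse target tensor.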

IV NUMERICAL TESTS AND APPLICATIONS

The main purpose of our algorithm is to decompose the tensor $\mathcal{D}$ into three tensors: the background tensor $\mathcal{B}$, the target tensor $\mathcal{T}$, and the noise tensor $\mathcal{N}$. We explore the effects of different parameter settings and identify a suitable configuration for extracting infrared small targets. Finally, we compare our algorithm with recent algorithms.

IV-A Infrared small target detection

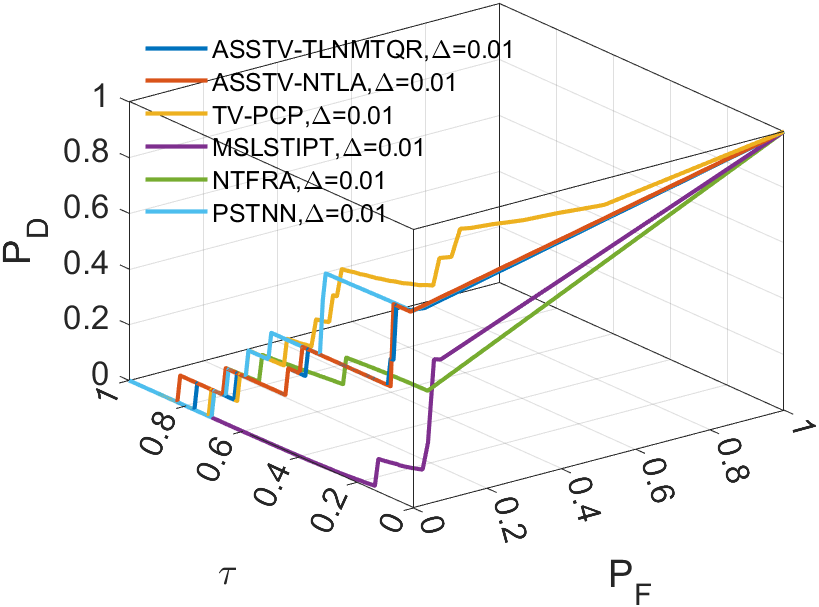

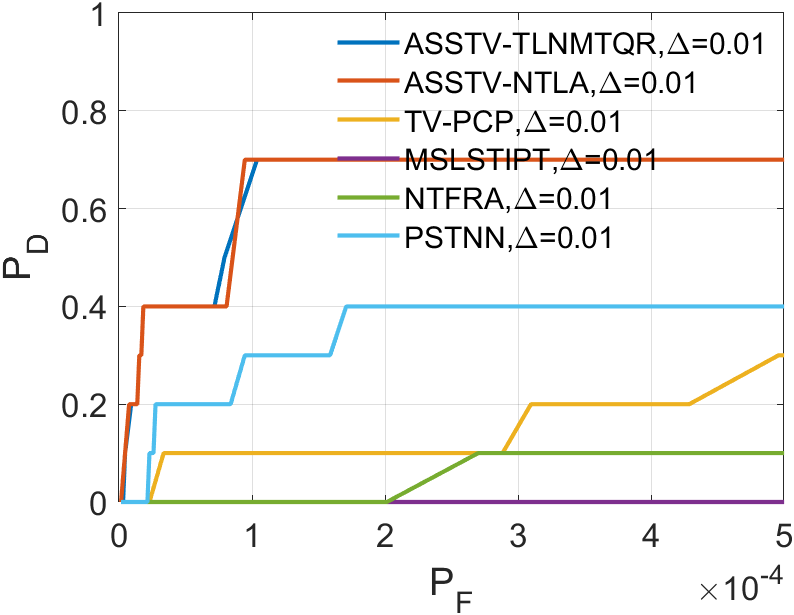

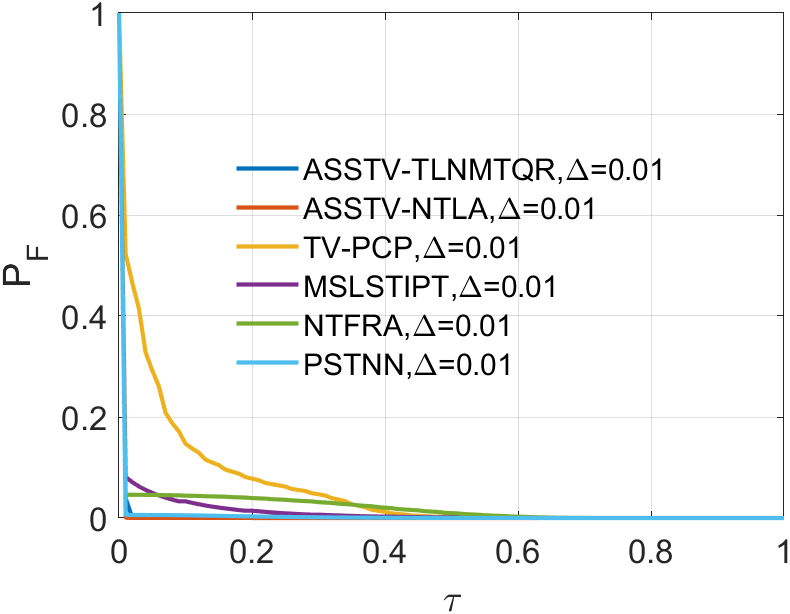

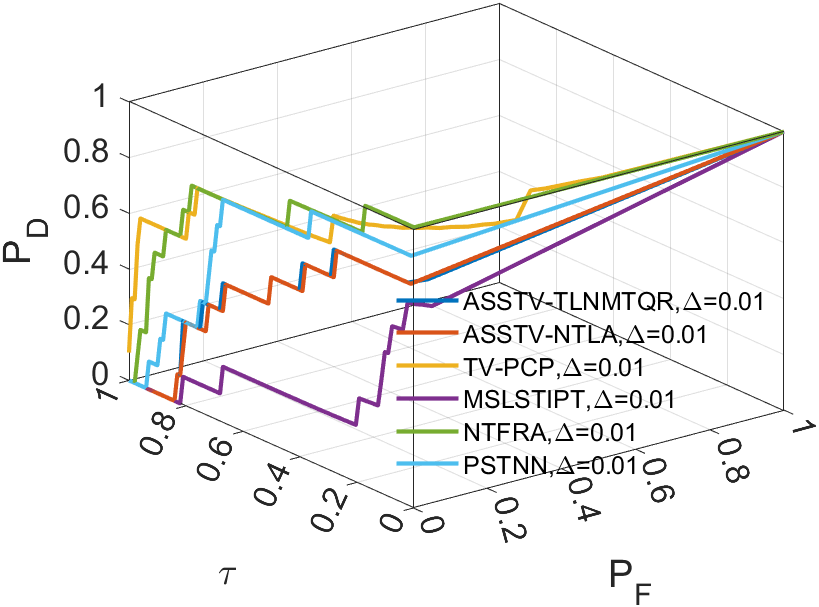

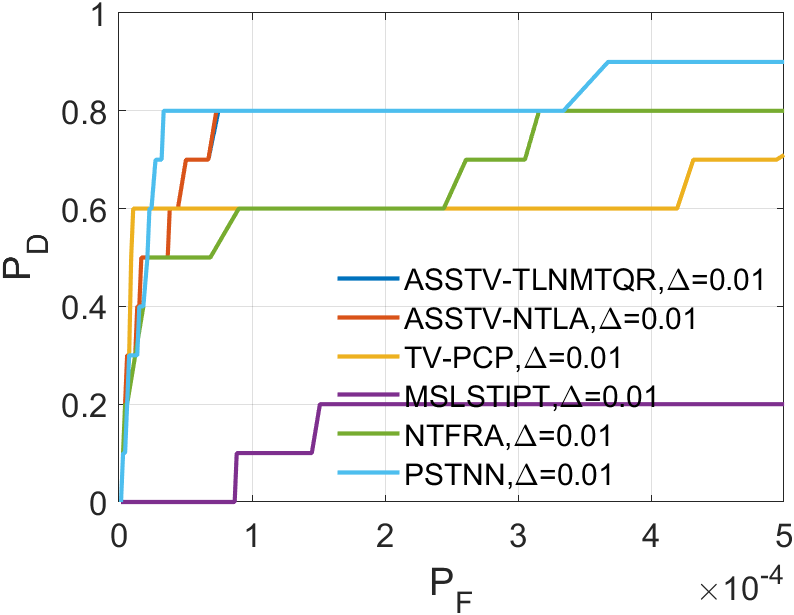

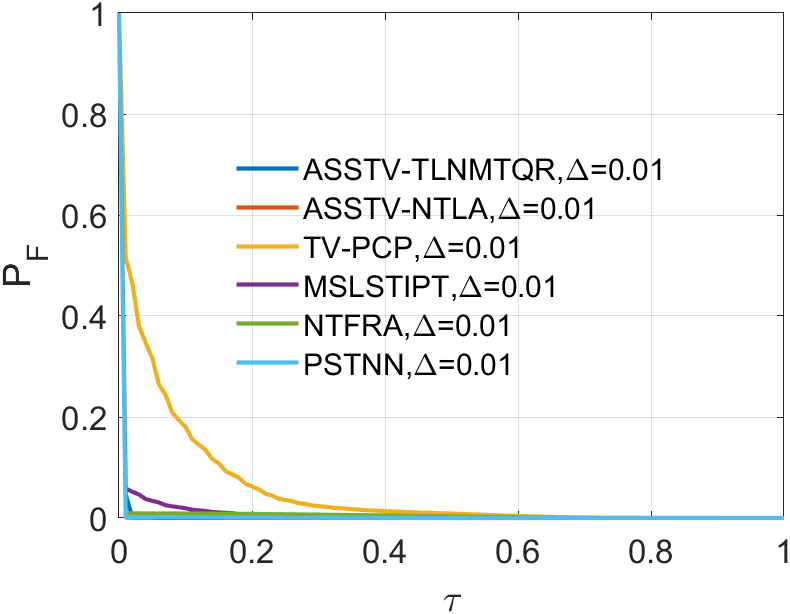

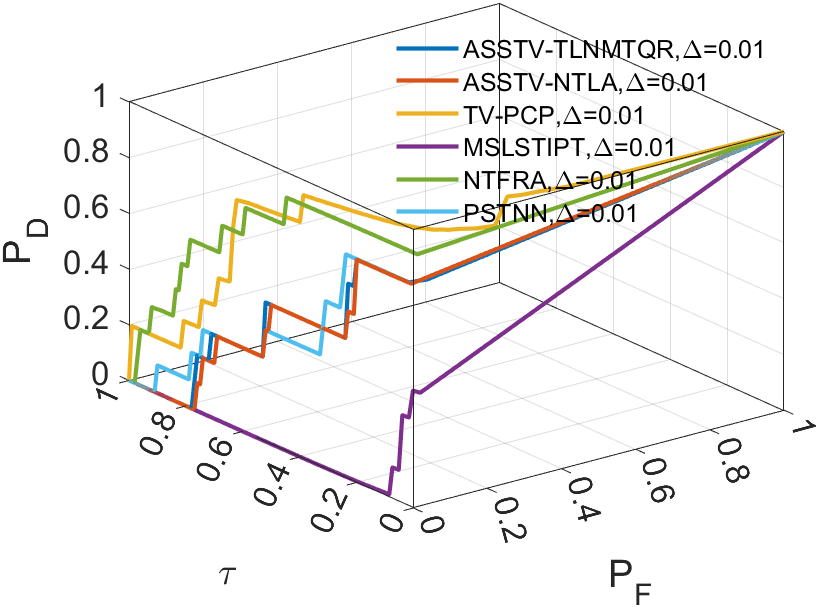

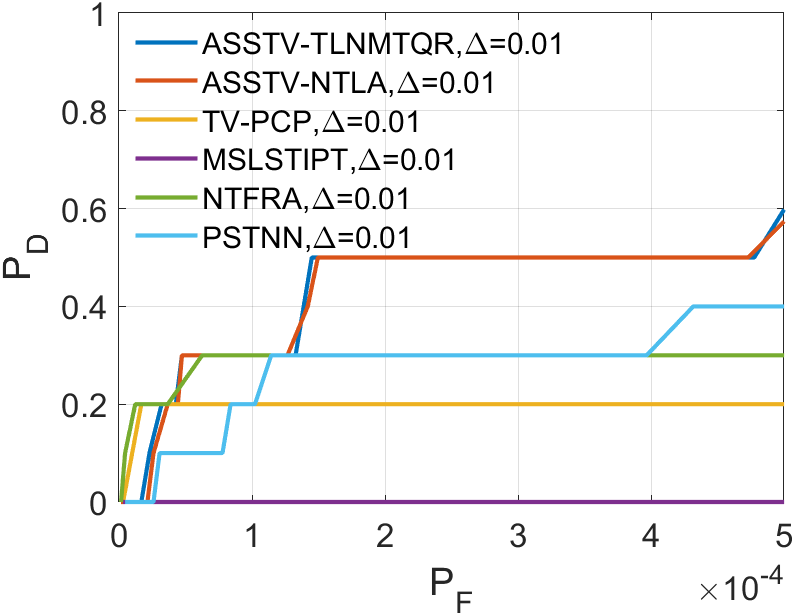

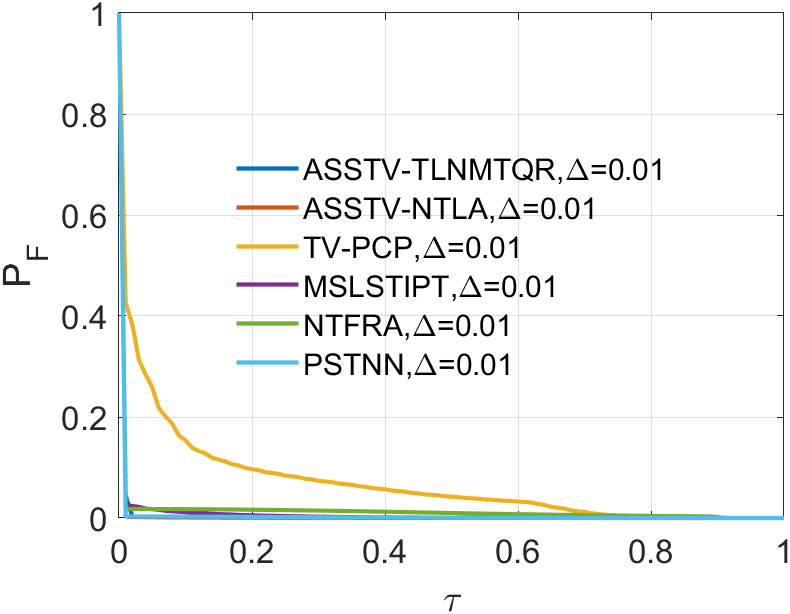

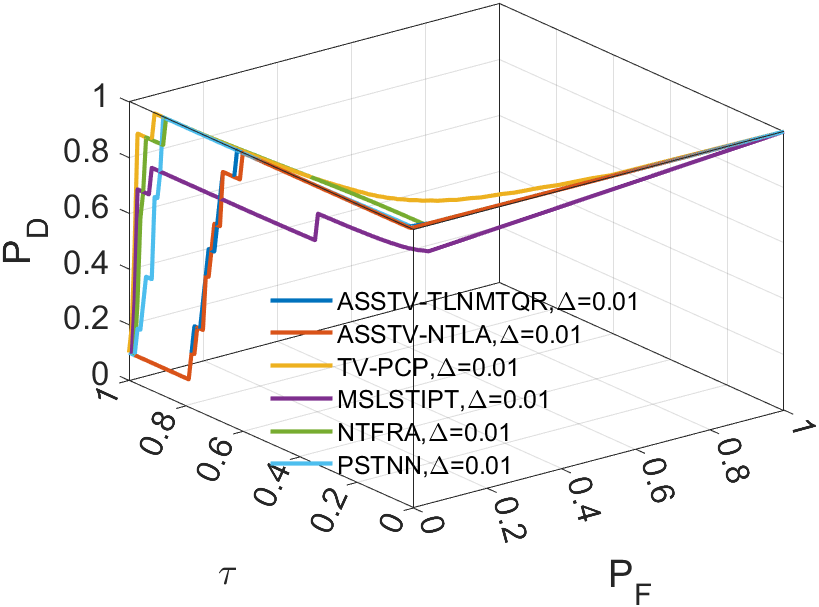

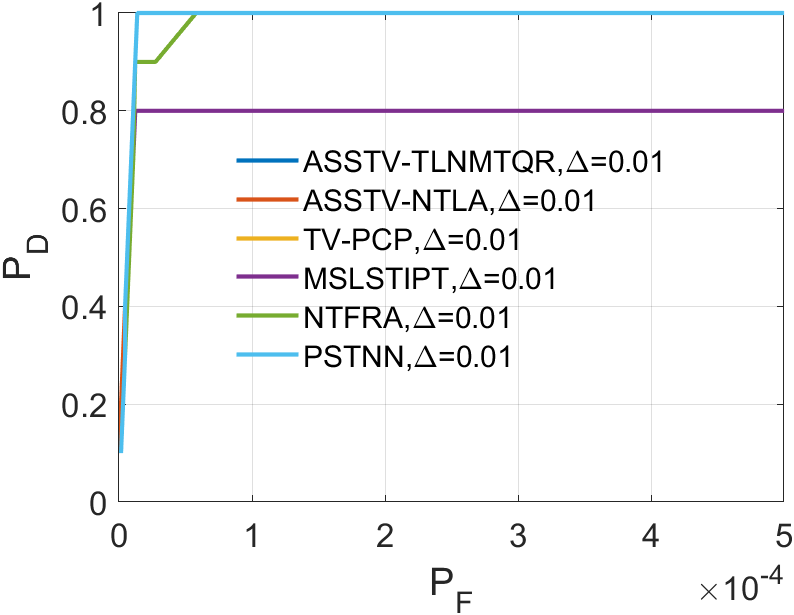

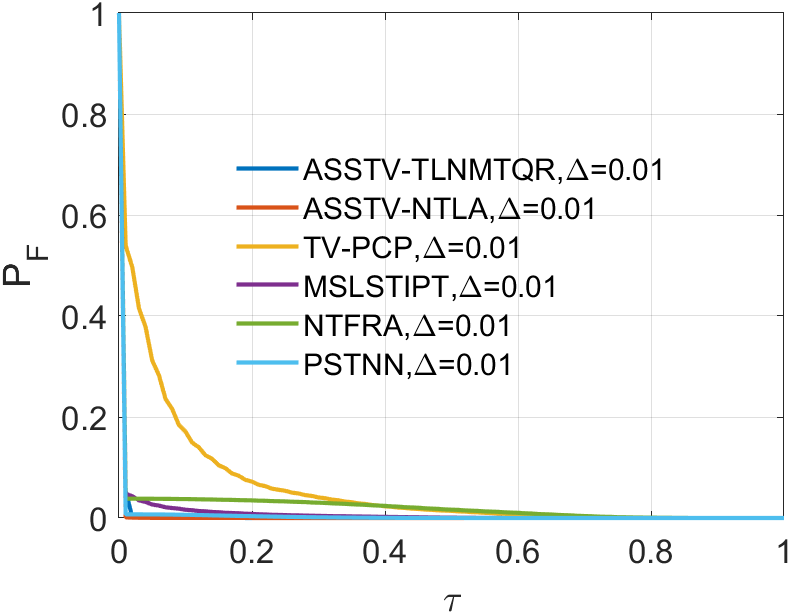

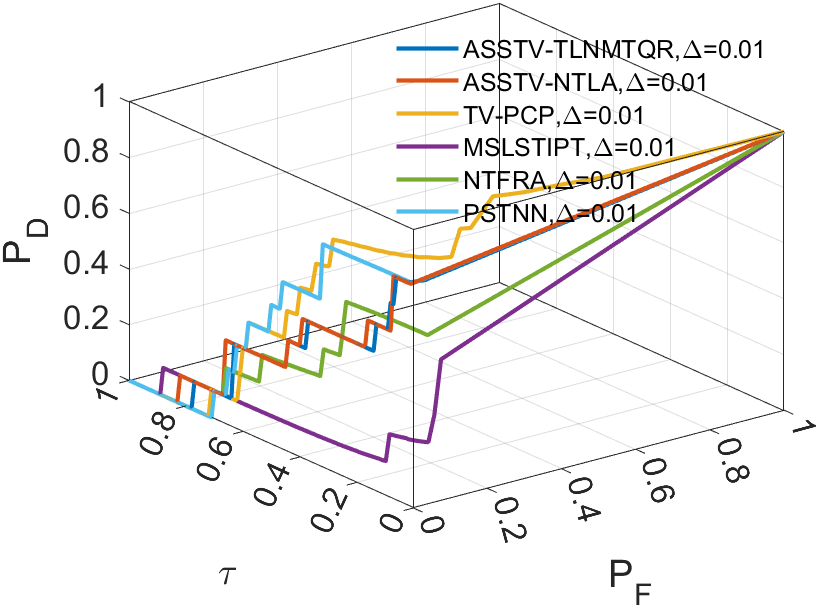

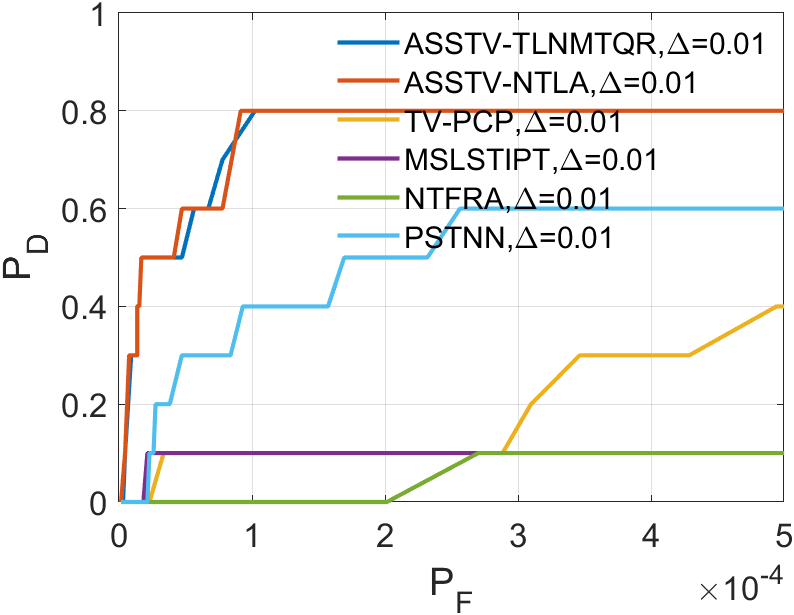

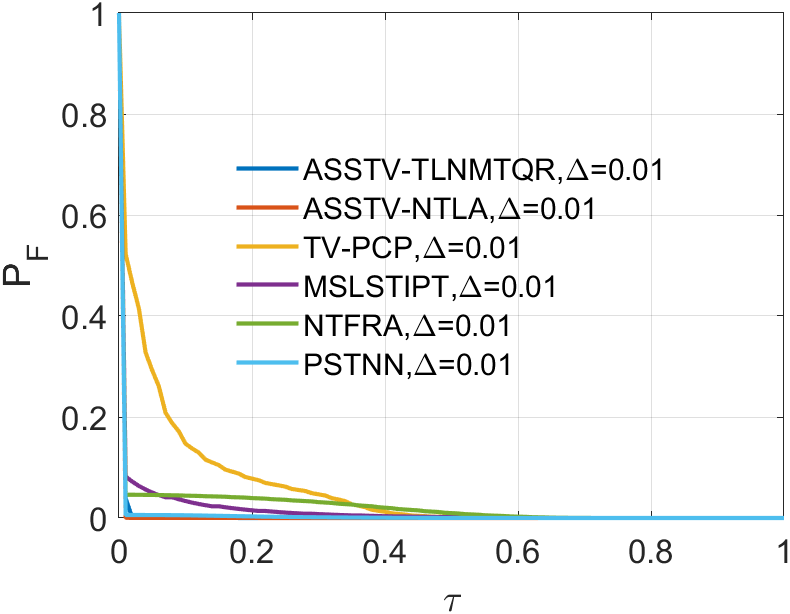

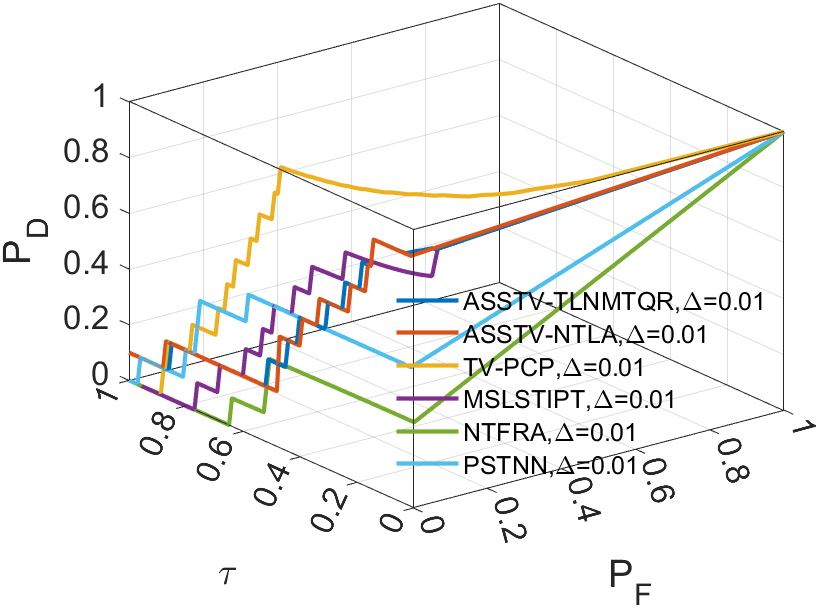

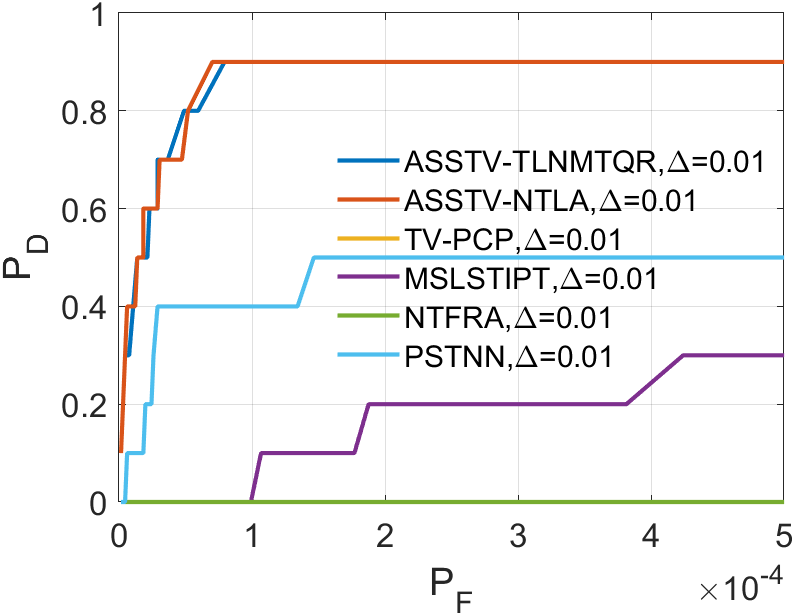

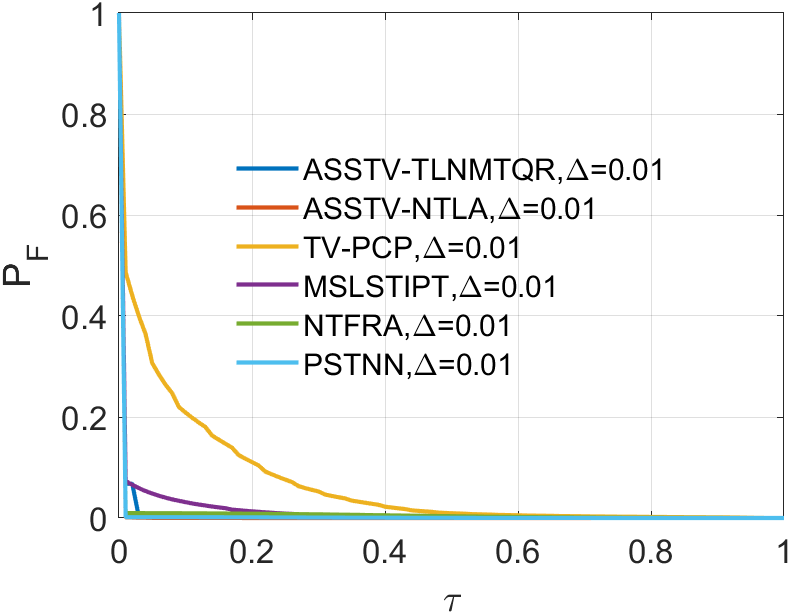

This section aims to demonstrate the robustness of our algorithm through a series of experiments on infrared small target detection. We employ the 3D Receiver Operating Characteristic (ROC) curve [29] to assess the performance of our algorithm in separating the target tensor $\mathcal{T}$. Additionally, we have selected five comparative methods to highlight the advantages of our approach.

IV-A1 Evaluation metrics and baseline methods

In the experiments on infrared small targets, we use the 3D ROC to assess the algorithm's capability. The 3D ROC is based on the 2D ROC [30, 31]. Initially, we plot the $(P_D, P_F)$ curve, followed by separate curves for $(P_D, \tau)$ and $(P_F, \tau)$, and finally combine them to form the 3D ROC.

The horizontal axis of the 2D ROC represents the false alarm rate $P_F$, and the vertical axis represents the detection probability $P_D$. They are defined as follows:

| (38) |

| (39) |

The above two indicators range between 0 and 1.

TABLE IV

| Sequence | Metric | ASSTV-TLNMTQR (ours) | ASSTV-NTLA | NTFRA | MSLSTIPT | PSTNN | TV-PCP |
|---|---|---|---|---|---|---|---|
| Sequence 1 | Detection | 0.844 | 0.849 | 0.881 | 0.701 | 0.682 | 0.848 |
| | False alarm | 0.005 | 0.005 | 0.054 | 0.013 | 0.021 | 0.006 |
| | Time | 4.135 | 5.304 | 25.688 | 0.161 | 3.031 | 0.490 |
| Sequence 2 | Detection | 0.895 | 0.899 | 0.965 | 0.833 | 0.998 | 0.950 |
| | False alarm | 0.005 | 0.005 | 0.054 | 0.011 | 0.009 | 0.006 |
| | Time | 4.337 | 5.558 | 27.126 | 0.219 | 3.112 | 0.442 |
| Sequence 3 | Detection | 0.895 | 0.899 | 0.960 | 0.686 | 0.946 | 0.899 |
| | False alarm | 0.005 | 0.005 | 0.068 | 0.008 | 0.015 | 0.006 |
| | Time | 4.476 | 5.806 | 26.409 | 0.251 | 2.948 | 0.369 |
| Sequence 4 | Detection | 0.998 | 0.998 | 0.951 | 0.947 | 0.972 | 0.981 |
| | False alarm | 0.006 | 0.005 | 0.59 | 0.010 | 0.023 | 0.007 |
| | Time | 4.061 | 5.188 | 21.649 | 0.213 | 2.732 | 0.443 |
| Sequence 5 | Detection | 0.896 | 0.8999 | 0.951 | 0.705 | 0.781 | 0.899 |
| | False alarm | 0.005 | 0.005 | 0.054 | 0.014 | 0.022 | 0.008 |
| | Time | 4.136 | 5.133 | 24.497 | 0.159 | 2.817 | 0.517 |
| Sequence 6 | Detection | 0.947 | 0.949 | 0.995 | 0.937 | 0.645 | 0.750 |
| | False alarm | 0.006 | 0.005 | 0.066 | 0.013 | 0.009 | 0.006 |
| | Time | 4.367 | 5.203 | 25.966 | 0.195 | 2.869 | 0.492 |

For every ROC curve, we assess the algorithm's performance by comparing the Area Under Curve (AUC). In the 2D ROC, a larger AUC generally signifies better performance. However, when the false alarm rate is high, meaning that many irrelevant points are detected, the area under the curve can look misleadingly large. Hence, the 2D ROC may not fully reflect the algorithm's performance, which prompts our choice of the 3D ROC. The 3D ROC incorporates the relationships between $P_D$, $P_F$, and the threshold $\tau$. The $(P_D, \tau)$ curve has the same implication as the $(P_D, P_F)$ curve: a larger area signifies better performance. Therefore, in subsequent experiments we show only the $(P_D, P_F)$ curve. Meanwhile, the $(P_F, \tau)$ curve reflects the algorithm's background suppression capability, specifically the quantity of irrelevant points. Generally, a smaller area under the $(P_F, \tau)$ curve implies stronger background suppression and better performance.
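The three curves and their areas can be computed as in the following NumPy sketch. It assumes pixel-level definitions that may differ from the ones used in the experiments: $P_D$ is the fraction of target pixels whose score reaches the threshold, and $P_F$ is the fraction of background pixels that do. The function names and the toy data are illustrative.

```python
import numpy as np

def roc_points(score, truth, thresholds):
    """Detection probability and false alarm rate at each threshold."""
    n_target = truth.sum()
    n_background = (~truth).sum()
    p_d, p_f = [], []
    for tau in thresholds:
        detected = score >= tau
        p_d.append((detected & truth).sum() / n_target)
        p_f.append((detected & ~truth).sum() / n_background)
    return np.array(p_d), np.array(p_f)

def auc(x, y):
    """Trapezoidal area under the curve y(x), sorting by x and then by y."""
    order = np.lexsort((y, x))
    x, y = x[order], y[order]
    return float(np.sum(np.diff(x) * (y[1:] + y[:-1]) / 2.0))

score = np.array([0.9, 0.8, 0.3, 0.1])          # detector response per pixel
truth = np.array([True, True, False, False])    # ground-truth target mask
thresholds = np.array([0.0, 0.2, 0.5, 0.85, 1.0])

p_d, p_f = roc_points(score, truth, thresholds)
auc_pd_pf = auc(p_f, p_d)          # 2D ROC: larger is better
auc_pd_tau = auc(thresholds, p_d)  # larger is better
auc_pf_tau = auc(thresholds, p_f)  # smaller means stronger background suppression
print(auc_pd_pf, auc_pd_tau, auc_pf_tau)
```

Reporting the area under the false-alarm curve alongside the usual AUC exposes detectors that reach a high detection rate only by flagging many background pixels.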

IV-A2 Parameter setting and datasets

IV-B Parameter analysis

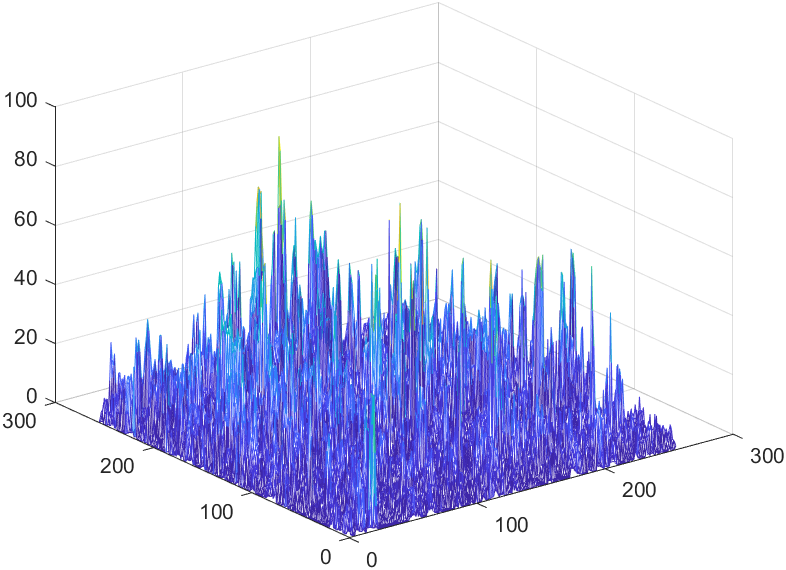

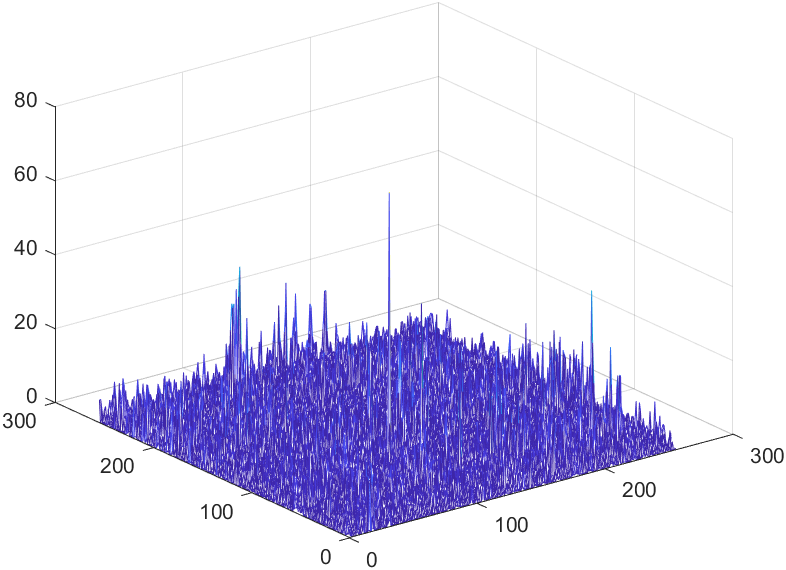

IV-B1 of tensor

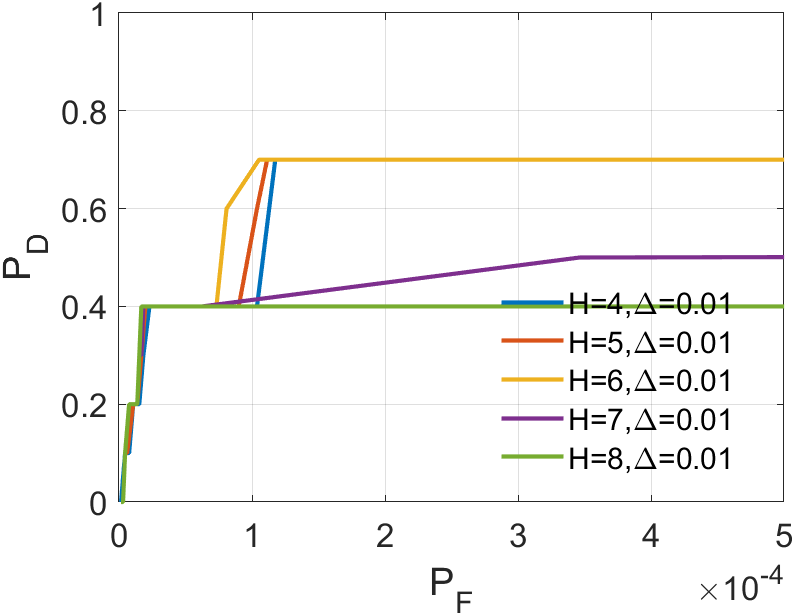

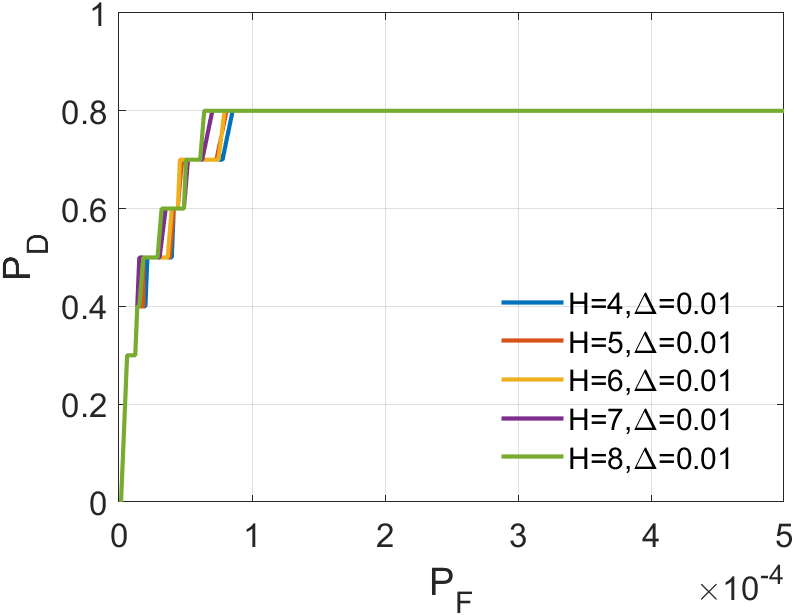

In our algorithm, the tensor serves as an approximation of tensor in Equation (27), making the size of tensor the most critical parameter. The variation in ’s size significantly impacts our algorithm’s performance in detecting weak infrared targets. Refer to Fig. 8 to Fig. 8. We utilized Sequence 6 to run our algorithm. By plotting mesh grids of different values on the same frame, a clear trend emerges: as gradually increases, the detection performance for infrared small targets improves. Additionally, in the mesh grids for and , the differences in detection results become negligible. To pursue faster processing, we have chosen as the parameter for our subsequent experiments.

IV-B2 Number of frames

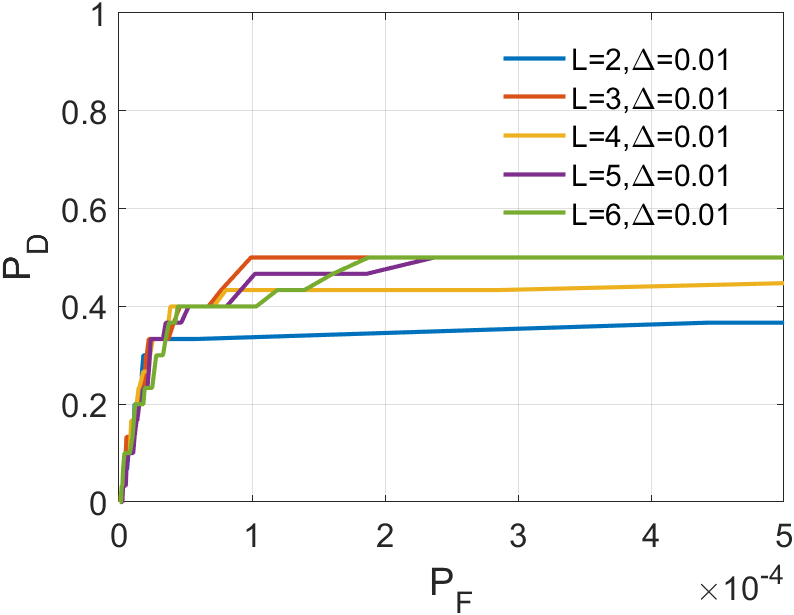

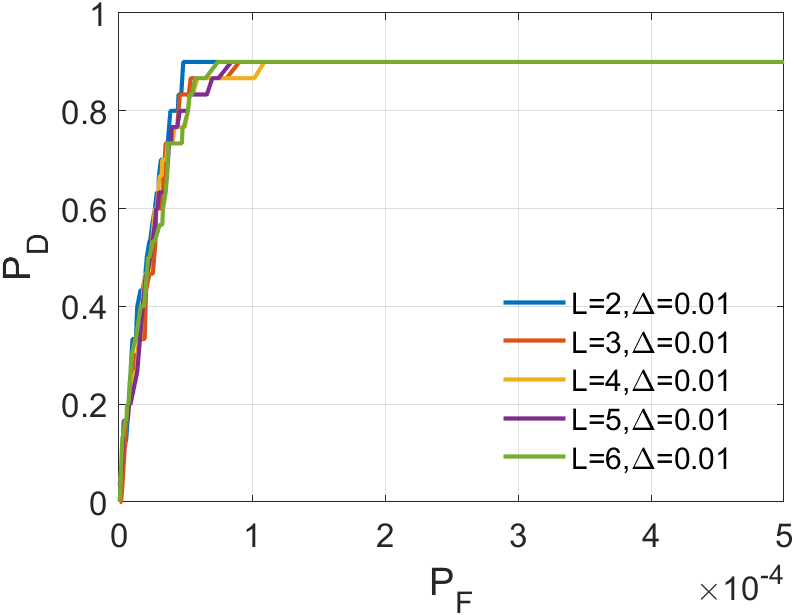

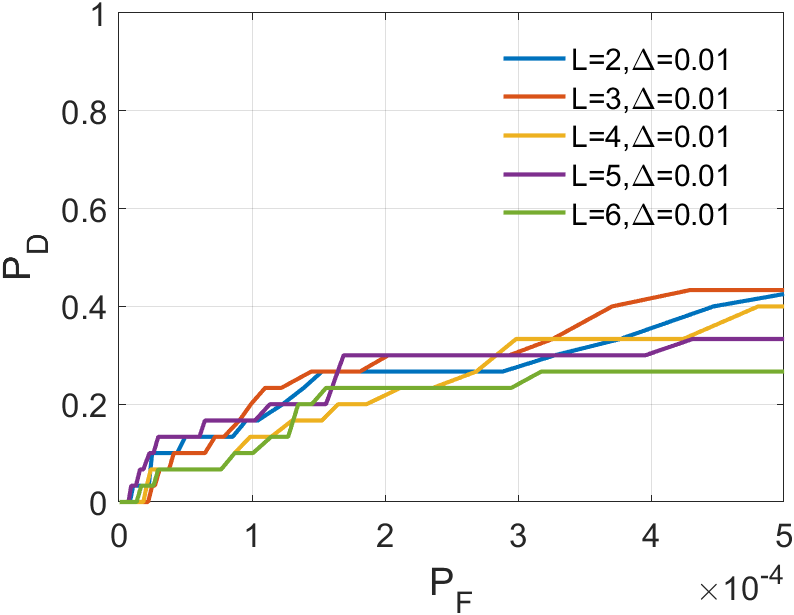

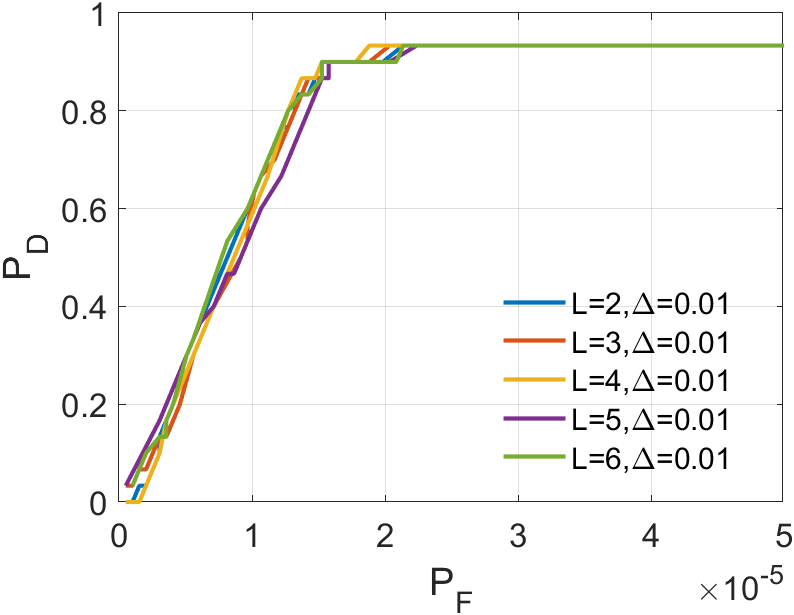

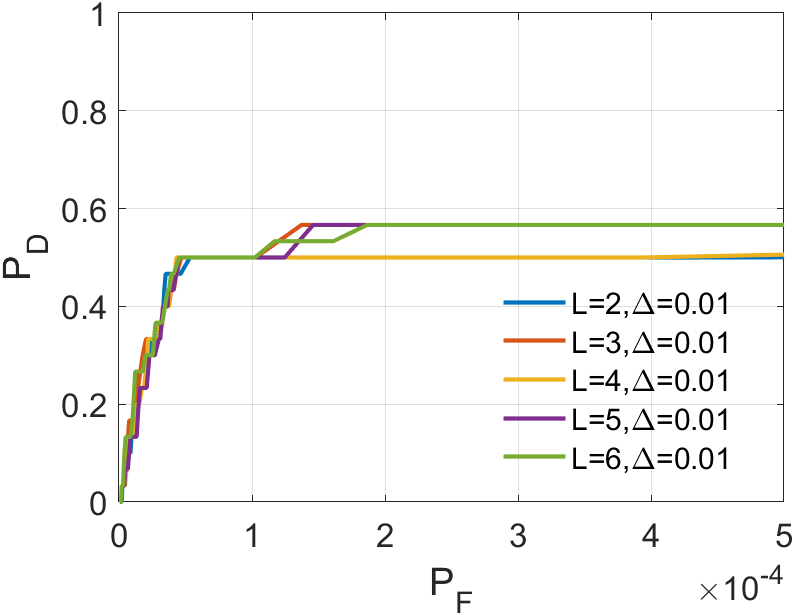

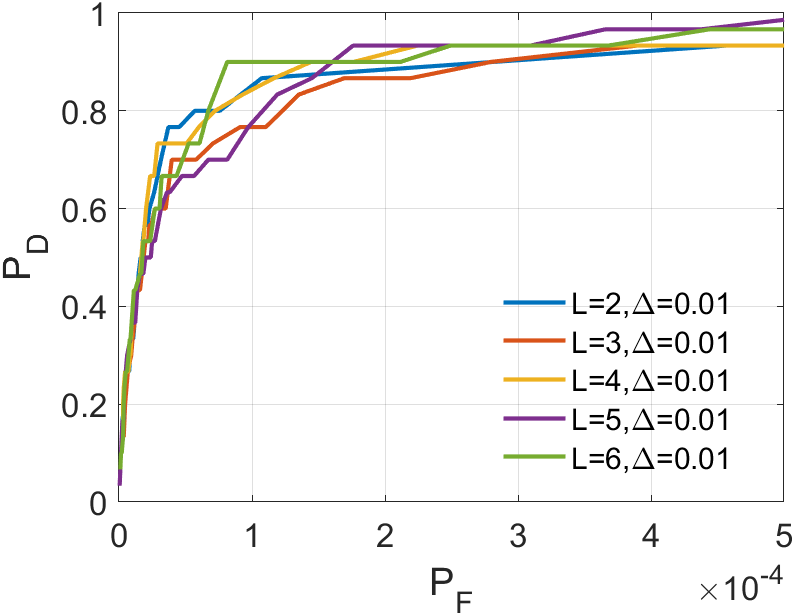

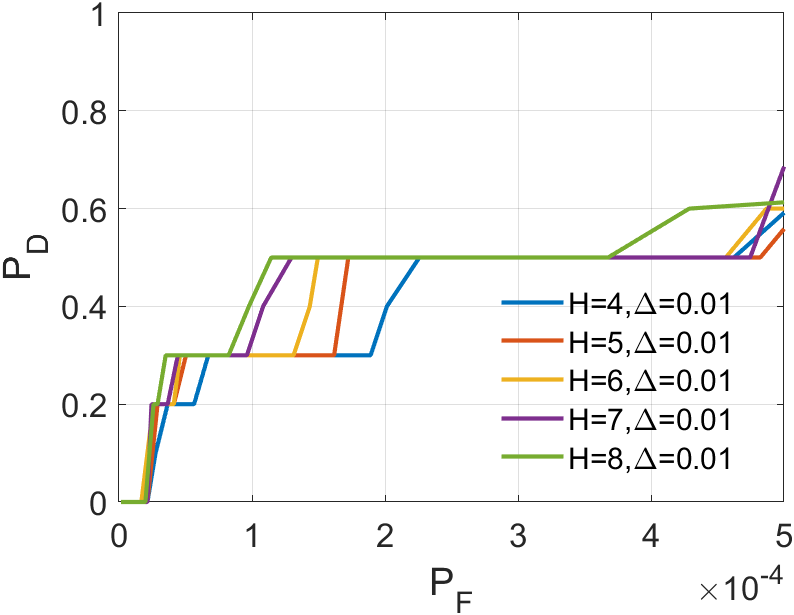

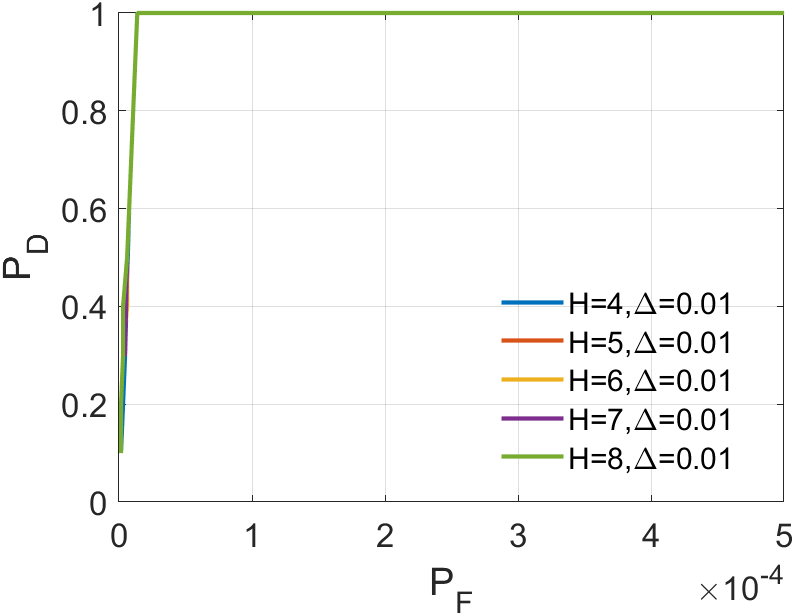

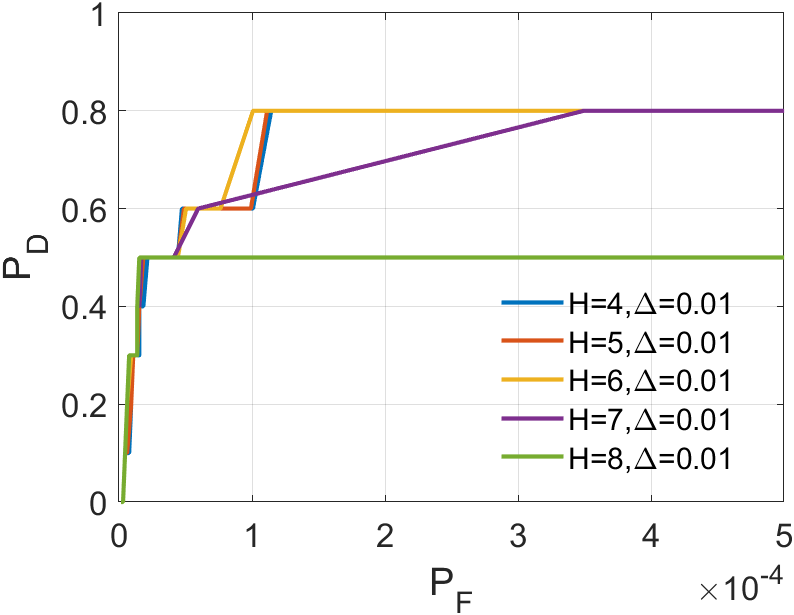

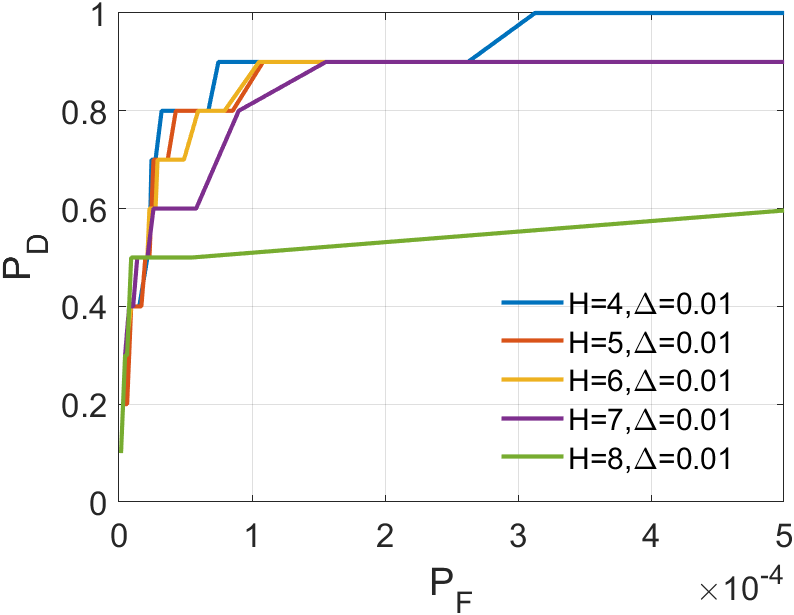

In a sequence, the number of frames inputted each time significantly affects the dimension of the tensor we construct. The dimension primarily encapsulates temporal information, greatly influencing the accuracy of ASSTV calculations. Hence, we need to test the frame quantity to identify the optimal number of frames. In our algorithm, the frame quantity is denoted by the variable . We vary from 2 to 6 with an increment of 1. From the experimental results in 14 to 14, we observe that in Sequence 1, Sequence 3, and Sequence 5, the best results are achieved when , with minimal differences in the remaining sequences. After seeing the false alarm rates of the remaining sequences, we found that is the lowest. Therefore, for subsequent experiments, we will adopt .

IV-B3 Tuning parameter

 plays a crucial role in optimizing the ASSTV-TLNMTQR model. We vary from 2 to 10 in increments of 2. From the results in 20 to 20, it is evident that has different effects across various backgrounds. In Sequence 1 and Sequence 5, emerges as the best choice, and it remains among the top three in the other sequences as well. Although performs best in Sequence 2 and Sequence 3, its false alarm rate is high, so its results on those two sequences are not meaningful. Hence, we consider the most stable parameter, and we continue to use this value in the subsequent experiments.

IV-C Comparison to state-of-the-art methods

In our comparative experiments, we systematically evaluated the performance of our ASSTV-TLNMTQR algorithm against five other algorithms, all of which were tested on the six sequences outlined in TABLE II. The specific parameter settings for each algorithm can be found in TABLE III.

Upon analyzing the experimental results depicted in Fig. 21 to Fig. 22, we observed that certain algorithms demonstrated detection rates either higher or nearly comparable to ASSTV-NTLA and ASSTV-TLNMTQR (ours) in specific sequences. However, a closer examination of TABLE IV reveals that these seemingly impressive detection rates are accompanied by high false alarm rates. This critical insight underscores the superior performance of ASSTV-NTLA and ASSTV-TLNMTQR (ours), as they consistently achieve the highest detection rates while maintaining lower false alarm rates. Furthermore, the comprehensive analysis presented in TABLE IV highlights an additional advantage of our ASSTV-TLNMTQR algorithm, which consistently performs at a speed approximately faster than ASSTV-NTLA across all six sequences. Notably, this accelerated speed is achieved without compromising accuracy, as evidenced by the mere difference in average accuracy compared to the ASSTV-NTLA method.

In summary, our ASSTV-TLNMTQR algorithm is the optimal choice. It has the fastest speed while ensuring higher detection rate and lower false alarm rate.

V CONCLUSION

This article introduces an innovative tensor recovery algorithm that combines ASSTV regularization with the TLNMTQR method, resulting in a significant improvement in computational speed. In experiments on infrared small target detection, we tested the algorithm's ability to extract the target tensor $\mathcal{T}$ and suppress the background tensor $\mathcal{B}$. Our algorithm greatly enhances computational speed without sacrificing accuracy.

In future research, we see several areas for optimization. First, when addressing the $L_{2,1}$ norm minimization problem, we can consider assigning different weights to different eigenvalues to enhance background extraction capabilities. Second, apart from parameter adjustments, we can explore more effective methods to determine the optimal size of the approximating tensor.

References

- [1] R. R. Nadakuditi, “Optshrink: An algorithm for improved low-rank signal matrix denoising by optimal, data-driven singular value shrinkage,” IEEE Transactions on Information Theory, vol. 60, no. 5, pp. 3002–3018, 2014.

- [2] Z. Zhang, C. Ding, Z. Gao, and C. Xie, “Anlpt: Self-adaptive and non-local patch-tensor model for infrared small target detection,” Remote Sensing, vol. 15, no. 4, p. 1021, 2023.

- [3] H. Yi, C. Yang, R. Qie, J. Liao, F. Wu, T. Pu, and Z. Peng, “Spatial-temporal tensor ring norm regularization for infrared small target detection,” IEEE Geoscience and Remote Sensing Letters, vol. 20, pp. 1–5, 2023.

- [4] L. Chuntong and W. Hao, “Research on infrared dim and small target detection algorithm based on low-rank tensor recovery,” Journal of Systems Engineering and Electronics, 2023.

- [5] M. Lustig, D. L. Donoho, J. M. Santos, and J. M. Pauly, “Compressed sensing mri,” IEEE Signal Processing Magazine, vol. 25, no. 2, pp. 72–82, 2008.

- [6] M. Rani, S. B. Dhok, and R. B. Deshmukh, “A systematic review of compressive sensing: Concepts, implementations and applications,” IEEE Access, vol. 6, pp. 4875–4894, 2018.

- [7] V. Chandrasekaran, S. Sanghavi, P. A. Parrilo, and A. S. Willsky, “Sparse and low-rank matrix decompositions,” IFAC Proceedings Volumes, vol. 42, no. 10, pp. 1493–1498, 2009, 15th IFAC Symposium on System Identification. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S1474667016388632

- [8] D. Bertsimas, R. Cory-Wright, and N. A. Johnson, “Sparse plus low rank matrix decomposition: A discrete optimization approach,” Journal of Machine Learning Research, vol. 24, no. 267, pp. 1–51, 2023.

- [9] G. Daniel, G. Meirav, O. Noam, B.-K. Tamar, R. Dvir, O. Ricardo, and B.-E. Noam, “Fast and accurate t2 mapping using bloch simulations and low-rank plus sparse matrix decomposition,” Magnetic Resonance Imaging, vol. 98, pp. 66–75, 2023.

- [10] Z. Lin, M. Chen, and Y. Ma, “The augmented lagrange multiplier method for exact recovery of corrupted low-rank matrices,” arXiv preprint arXiv:1009.5055, 2010.

- [11] Y. Chen, Y. Guo, Y. Wang, D. Wang, C. Peng, and G. He, “Denoising of hyperspectral images using nonconvex low rank matrix approximation,” IEEE Transactions on Geoscience and Remote Sensing, vol. 55, no. 9, pp. 5366–5380, 2017.

- [12] Y. Xie, Y. Qu, D. Tao, W. Wu, Q. Yuan, and W. Zhang, “Hyperspectral image restoration via iteratively regularized weighted Schatten $p$-norm minimization,” IEEE Transactions on Geoscience and Remote Sensing, vol. 54, no. 8, pp. 4642–4659, 2016.

- [13] H. Zhang, W. He, L. Zhang, H. Shen, and Q. Yuan, “Hyperspectral image restoration using low-rank matrix recovery,” IEEE transactions on geoscience and remote sensing, vol. 52, no. 8, pp. 4729–4743, 2013.

- [14] J. Xue, Y. Zhao, S. Huang, W. Liao, J. C.-W. Chan, and S. G. Kong, “Multilayer sparsity-based tensor decomposition for low-rank tensor completion,” IEEE Transactions on Neural Networks and Learning Systems, vol. 33, no. 11, pp. 6916–6930, 2022.

- [15] M. Wang, D. Hong, Z. Han, J. Li, J. Yao, L. Gao, B. Zhang, and J. Chanussot, “Tensor decompositions for hyperspectral data processing in remote sensing: A comprehensive review,” IEEE Geoscience and Remote Sensing Magazine, 2023.

- [16] C. Lu, J. Feng, Y. Chen, W. Liu, Z. Lin, and S. Yan, “Tensor robust principal component analysis with a new tensor nuclear norm,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 4, pp. 925–938, 2020.

- [17] W. He, H. Zhang, and L. Zhang, “Total variation regularized reweighted sparse nonnegative matrix factorization for hyperspectral unmixing,” IEEE Transactions on Geoscience and Remote Sensing, vol. 55, no. 7, pp. 3909–3921, 2017.

- [18] M.-D. Iordache, J. M. Bioucas-Dias, and A. Plaza, “Total variation spatial regularization for sparse hyperspectral unmixing,” IEEE Transactions on Geoscience and Remote Sensing, vol. 50, no. 11, pp. 4484–4502, 2012.

- [19] V. Vishnevskiy, T. Gass, G. Szekely, C. Tanner, and O. Goksel, “Isotropic total variation regularization of displacements in parametric image registration,” IEEE Transactions on Medical Imaging, vol. 36, no. 2, pp. 385–395, 2017.

- [20] W. He, H. Zhang, H. Shen, and L. Zhang, “Hyperspectral image denoising using local low-rank matrix recovery and global spatial–spectral total variation,” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 11, no. 3, pp. 713–729, 2018.

- [21] T. Liu, J. Yang, B. Li, C. Xiao, Y. Sun, Y. Wang, and W. An, “Nonconvex tensor low-rank approximation for infrared small target detection,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–18, 2022.

- [22] S. Weiland and F. Van Belzen, “Singular value decompositions and low rank approximations of tensors,” IEEE transactions on signal processing, vol. 58, no. 3, pp. 1171–1182, 2009.

- [23] Y. Zheng and A.-B. Xu, “Tensor completion via tensor qr decomposition and l2,1-norm minimization,” Signal Processing, vol. 189, p. 108240, 2021. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0165168421002772

- [24] C. Gao, D. Meng, Y. Yang, Y. Wang, X. Zhou, and A. G. Hauptmann, “Infrared patch-image model for small target detection in a single image,” IEEE Transactions on Image Processing, vol. 22, no. 12, pp. 4996–5009, 2013.

- [25] X. Wang, Z. Peng, D. Kong, P. Zhang, and Y. He, “Infrared dim target detection based on total variation regularization and principal component pursuit,” Image and Vision Computing, vol. 63, pp. 1–9, 2017. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0262885617300756

- [26] L. Zhang and Z. Peng, “Infrared small target detection based on partial sum of the tensor nuclear norm,” Remote Sensing, vol. 11, no. 4, p. 382, 2019.

- [27] Y. Sun, J. Yang, and W. An, “Infrared dim and small target detection via multiple subspace learning and spatial-temporal patch-tensor model,” IEEE Transactions on Geoscience and Remote Sensing, vol. 59, no. 5, pp. 3737–3752, 2020.

- [28] X. Kong, C. Yang, S. Cao, C. Li, and Z. Peng, “Infrared small target detection via nonconvex tensor fibered rank approximation,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–21, 2021.

- [29] C.-I. Chang, “An effective evaluation tool for hyperspectral target detection: 3d receiver operating characteristic curve analysis,” IEEE Transactions on Geoscience and Remote Sensing, vol. 59, no. 6, pp. 5131–5153, 2021.

- [30] S. Atapattu, C. Tellambura, and H. Jiang, “Analysis of area under the roc curve of energy detection,” IEEE Transactions on Wireless Communications, vol. 9, no. 3, pp. 1216–1225, 2010.

- [31] S. Manti, M. K. Svendsen, N. R. Knøsgaard, P. M. Lyngby, and K. S. Thygesen, “Exploring and machine learning structural instabilities in 2d materials,” npj Computational Materials, vol. 9, no. 1, p. 33, 2023.

- [32] B. Hui, Z. Song, H. Fan, P. Zhong, W. Hu, X. Zhang, J. Lin, H. Su, W. Jin, Y. Zhang, and Y. Bai, “A dataset for infrared image dim-small aircraft target detection and tracking under ground / air background,” Oct. 2019.