Integrating Reward Maximization and Population Estimation: Sequential Decision-Making for Internal Revenue Service Audit Selection

Abstract

We introduce a new setting, optimize-and-estimate structured bandits. Here, a policy must select a batch of arms, each characterized by its own context, that would allow it to both maximize reward and maintain an accurate (ideally unbiased) population estimate of the reward. This setting is inherent to many public and private sector applications and often requires handling delayed feedback, small data, and distribution shifts. We demonstrate its importance on real data from the United States Internal Revenue Service (IRS). The IRS performs yearly audits of the tax base. Two of its most important objectives are to identify suspected misreporting and to estimate the “tax gap” — the global difference between the amount paid and true amount owed. Based on a unique collaboration with the IRS, we cast these two processes as a unified optimize-and-estimate structured bandit. We analyze optimize-and-estimate approaches to the IRS problem and propose a novel mechanism for unbiased population estimation that achieves rewards comparable to baseline approaches. This approach has the potential to improve audit efficacy, while maintaining policy-relevant estimates of the tax gap. This has important social consequences given that the current tax gap is estimated at nearly half a trillion dollars. We suggest that this problem setting is fertile ground for further research and we highlight its interesting challenges. The results of this and related research are currently being incorporated into the continual improvement of the IRS audit selection methods.

1 Introduction

Sequential decision-making algorithms, like bandit algorithms and active learning, have been used across a number of domains: from ad targeting to clinical trial optimization (Bouneffouf and Rish 2019). In the public sector, these methods are not yet widely adopted, but could improve the efficiency and quality of government services if deployed with care. Henderson et al. (2021) provides a review of this potential. Many administrative enforcement agencies in the United States (U.S.) face the challenge of allocating scarce resources for auditing regulatory non-compliance. But these agencies must also balance additional constraints and objectives simultaneously. In particular, they must maintain an accurate estimate of population non-compliance to inform policy-making. In this paper, we focus on the potential of unifying audit processes with these multiple objectives under a sequential decision-making framework. We call our setting optimize-and-estimate structured bandits. This framework is useful in practical settings, challenging, and has the potential to bring together methods from survey sampling, bandits, and active learning. It poses an interesting and novel challenge for the machine learning community and can benefit many public and private sector applications (see more discussion in Appendix C). It is critical to many U.S. federal agencies that are bound by law to balance enforcement priorities with population estimates of improper payments (Henderson et al. 2021; Office of Management and Budget 2018, 2021).

We highlight this framework with a case study of the Internal Revenue Service (IRS). The IRS selects taxpayers to audit every year to detect under-reported tax liability. Improving audit selection could yield 10:1 returns in revenue and help fund socially beneficial programs (Sarin and Summers 2019). But the agency must also provide an accurate assessment of the tax gap (the projected amount of tax under-reporting if all taxpayers were audited). Currently, the IRS accomplishes this via two separate mechanisms: (1) a stratified random sample to estimate the tax gap; (2) a focused risk-selected sample of taxpayers to collect under-reported taxes. Based on a unique multiyear collaboration with the IRS, we were provided with full micro data access to masked audit data to research how machine learning could improve audit selection. We investigate whether these separate mechanisms and objectives can be combined into one batched structured bandit algorithm, which must both maximize reward and maintain accurate population estimates. Ideally, if information is reused, the system can make strategic selections to balance the two objectives. We benchmark several sampling approaches and examine the trade-offs between them with the goal of understanding the effects of using bandit algorithms in this high-impact setting. We identify several interesting results and challenges using historical taxpayer audit data in collaboration with the IRS.

First, we introduce a novel sampling mechanism called Adaptive Bin Sampling (ABS) which guarantees an unbiased population estimate by employing a Horvitz-Thompson (HT) approach (Horvitz and Thompson 1952), but is comparable to other methods for cumulative reward. Its unbiasedness and comparable reward comes at the cost of additional variance, though the method provides fine-grained control of this variance-reward trade-off.

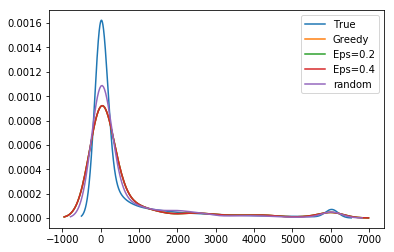

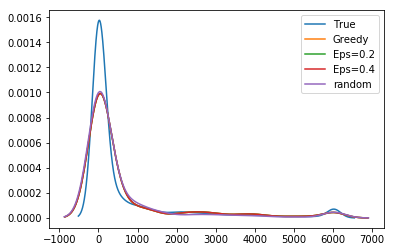

Second, we compare this approach to -greedy and optimism-based approaches, where a model-based population estimate is used. We find that model-based approaches are biased absent substantial reliance on , but low in variance. Surprisingly, we find that greedy approaches perform well in terms of reward, reinforcing findings by Bietti, Agarwal, and Langford (2018) and Bastani, Bayati, and Khosravi (2021). But we find the bias from population estimates in the greedy regime to be substantial. These biases are greatly reduced even with small amounts of random exploration, but the lack of unbiasedness guarantees make them unacceptable for many public policy settings.

Third, we show that more reward-optimal approaches tend to sample high-income earners versus low-income earners. And more reward-optimal approaches tend to audit fewer tax returns that yield no change (a reward close to 0). This reinforces the importance of reducing the amount of unnecessary exploration, which would place audit burdens on compliant taxpayers. Appendix D details other ethical and societal considerations taken into account with this work.

Fourth, we show that model errors are heteroskedastic, resulting in more audits of high-income earners by optimism-based methods, but not yielding greater rewards.111We note that it is possible that these stem from measurement limitations in the high income space (Guyton et al. 2021).

We demonstrate that combining random and focused audits into a single framework can more efficiently maximize revenue while retaining accuracy for estimating the tax gap. While additional research is needed in this new and challenging domain, this work demonstrates the promise of applying a bandit-like approach to the IRS setting, and optimize-and-estimate structured bandits more broadly. The results of this and related research are currently being incorporated into the continual improvement of the IRS audit selection methods.

2 Background

Related Work. The bandit literature is large. To fully engage with it, we provide an extended literature review in Appendix E , but we mention several strands of related research here. The fact that adaptively collected data leads to biased estimation (whether model-based or not) is well-known. See, e.g., Nie et al. (2018); Xu, Qin, and Liu (2013); Shin, Ramdas, and Rinaldo (2021). A number of works have sought to develop sampling strategies that combat bias. See, e.g. Dimakopoulou et al. (2017). This work has been in the multi-armed bandit (MAB) or (mostly linear) contextual bandit settings. In the MAB setting, there has also been some work which explicitly considers the trade-off between reward and model-error. See, e.g, Liu et al. (2014); Erraqabi et al. (2017). In Appendix E we provide a comparison against our setting, but crucially we have volatile arms which make our setting different and closer to the linear stochastic bandit work (a form of structured bandit) (Abbasi-Yadkori, Pál, and Szepesvári 2011; Joseph et al. 2018). However, we require non-linearity and batched selection, as well as adding the novel estimation objective to this structured bandit setting. To our knowledge, ours is the first formulation which actively incorporates bias and variance of population estimates into a batched structured bandit problem formulation. Moreover, our focus is to study this problem in a real-world public sector domain, taking on the challenges proposed by Wagstaff (2012). No work we are aware of has analyzed the IRS setting in this way.

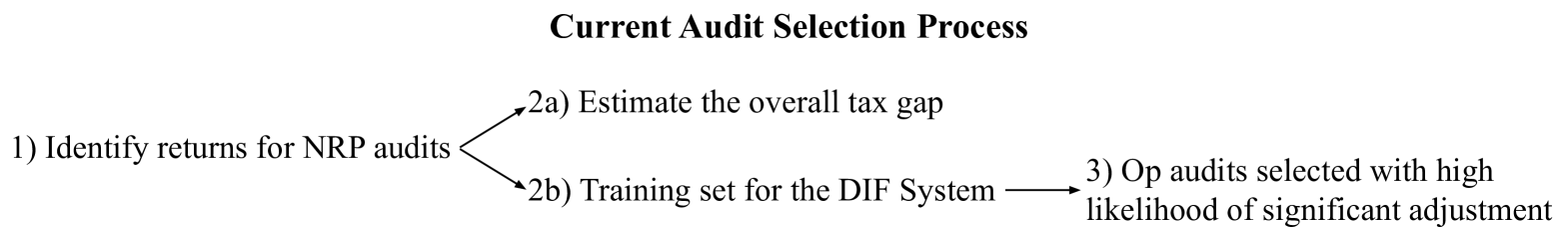

Institutional Background. The IRS maintains two distinct categories of audit processes. National Research Program (NRP) audits enable population estimation of non-compliance while Operational (Op) audits are aimed at collecting taxes from non-compliant returns. The NRP is a core measurement program for the IRS to regularly evaluate tax non-compliance (Government Accountability Office 2002, 2003). The NRP randomly selects, via a stratified random sample, 15k tax returns each year for research audits (Internal Revenue Service 2019), although this has been decreasing in recent years and there is pressure to reduce it further (Marr and Murray 2016; Congressional Budget Office 2020). These audits are used to identify new areas of noncompliance, estimate the overall tax gap, and estimate improper payments of certain tax credits. Given a recent gross tax gap estimate of $441 billion (Internal Revenue Service 2019), even minor increases in efficiency can yield large returns. In addition to its use for tax gap estimation, NRP serves as a training set for certain Op audit programs like the Discriminant Function (DIF) System (Internal Revenue Service 2022), which is based on a modified Linear Discriminant Analysis (LDA) model (Lowe 1976). DIF also incorporates other measures and policy objectives that we do not consider here. We instead focus on the stylized setting of only population estimation and reward maximization. Tax returns that have a high likelihood of a significant adjustment, as calculated by DIF, have a higher probability of being selected for Op audits.

It is important to highlight that Op data is not used for estimating the DIF risk model and is not used for estimating the tax gap (specifically, the individual income misreporting component of the tax gap). Though NRP audits are jointly used for population estimates of non-compliance and risk model training, the original sampling design was not optimized for both revenue maximization and estimator accuracy for tax non-compliance. Random audits have been criticized for burdening compliant taxpayers and for failing to target areas of known non-compliance (Lawsky 2008). The current process already somewhat represents informal sequential decision-making system. NRP strata are informed by the Op distribution, and are adjusted year-to-year. We posit that by formalizing the current IRS system in the form of a sequential decision-making problem, we can incorporate more methods to improve its efficiency, accuracy, and fairness.

Data. The data used throughout this work is from the NRP’s random sample (Andreoni, Erard, and Feinstein 1998; Johns and Slemrod 2010; Internal Revenue Service 2016, 2019), which we will treat as the full population of audits, since they are collected via a stratified random sample and represent the full population of taxpayers. The NRP sample is formed by dividing the taxpayer base into activity classes based on income and claimed tax credits, and various strata within each class. Each stratum is weighted to be representative of the national population of tax filers. Then a stratified random sample is taken across the classes. NRP audits seek to estimate the correctness of the whole return via a close to line-by-line examination (Belnap et al. 2020). This differs from Op audits, which are narrower in scope and focus on specific issues. Given the expensive nature of NRP audits, NRP sample sizes are relatively small (15k/year) (Guyton et al. 2018). The IRS uses these audits to estimate the tax gap and average non-compliance.222The IRS uses statistical adjustments to compensate naturally occurring variation in the depth of audit, and taxpayer misreporting that is difficult to find via auditing, and other NRP sampling limitations (Guyton et al. 2020; Internal Revenue Service 2019; Erard and Feinstein 2011). For the goals of this work we ignore these. Legal requirements for these estimates exist (Taxpayer Advocate Service 2018). The 2018 Office of Management and Budget (OMB) guidelines, for instance, state that these values should be “statistically valid” (unbiased estimates of the mean) and have “ or better margin of error at the 95% confidence level for the improper payment percentage estimate” (Office of Management and Budget 2018). Later OMB guidelines have provided more discretion to programs for developing feasible point estimates and confidence intervals (CIs) (Office of Management and Budget 2021). Unbiasedness remains an IRS policy priority.

Our NRP stratified random audit sample covers from 2006 to 2014. We use 500 covariates as inputs to the model which are a superset of those currently used for fitting the DIF model. The covariates we use include every value reported by a taxpayer on a tax return. For example, the amount reported in Box 9 of Form 1040 is Total income and would be included in these covariates. Table 5 , in the Appendix, provides summary statistics of the NRP research audits conducted on a yearly basis. Since NRP audits are stratified, the unweighted means represent the average adjustment made by the IRS to that year’s return for all audited taxpayers in the sample. The weighted mean takes into account stratification weights for each sample. One can think of the weighted mean as the average taxpayer misreporting across all taxpayers in the United States, while the unweighted mean is the average taxpayer misreporting in the NRP sample.

Problem Formulation. We formulate the optimize-and-estimate structured bandit problem setting in the scenario where there is an extremely large, but finite, number () of arms () to select from at every round. This set of arms is the population at timestep . The population can vary such that the set of available arms may be totally different at every step, similar to a sleeping or volatile bandit (Nika, Elahi, and Tekin 2021). In fact, it may not be possible to monitor any given arm over several timesteps.333Note the reason we make this assumption is because the NRP data does not track a cohort of taxpayers, but rather randomly samples. We are not guaranteed to ever see a taxpayer twice. To make the problem tractable, it is assumed that the reward for a given arm can be modeled by a shared function where are some set of features associated with arm at timestep , and are the parameters of the true reward function. Assume is any realizable or -realizable function. Thus, as is typical of the structured bandit setting “choosing one action allows you to gain information about the rewards of other actions” (Lattimore and Szepesvári 2020, p. 301). The agent chooses a batch of arms to: (1) maximize reward; (2) yield an accurate and unbiased estimate of the average reward across all arms – even those that have not been chosen (the population reward). Thus we seek to optimize a selection algorithm that chooses non-overlapping actions according to a selection policy () and outputs a population estimate () according to an estimation algorithm ():

| (1) | ||||

| s.t. | (2) |

where is the underlying distribution from which all taxpayers are pulled. In our IRS setting each arm () represents a taxpayer which the policy could select for a given year (). The associated features () are the 500 covariates in our data for the tax return. The reward () is the adjustment recorded after the audit. The population average reward that the agent seeks to accurately model is the average adjustment (summing together would instead provide the tax gap).

3 Methods

We focus on three methods: (1) -greedy; (2) optimism-based approaches; (3) ABS sampling (see Appendix F for reasoning and method selection criteria).

-greedy. Here we choose to sample randomly with probability . Otherwise, we select the observation with the highest predicted reward according to a fitted model , where indicates fitted model parameters. To batch sample, we repeat this process times. The underlying model is then trained on the received observation-reward pairs, and we repeat. For population estimation, we use a model-based approach (see, e.g., Esteban et al. 2019). After the model receives the true rewards from the sampled arms, the population estimate is predicted as: , where is the NRP sample weight444The returns in each NRP strata can be weighted by the NRP sample weights to make the sample representative of the overall population, acting as inverse propensity weights. We use NRP weights for population estimation. See Appendix K. from the population distribution.

Optimism. We refer readers to Lattimore and Szepesvári (2020) for a general introduction to Upper Confidence Bound (UCB) and optimism-based methods. We import an optimism-based approach into this setting as follows. Consider a random forest with trees . We form an optimistic estimate of the reward for each arm according to: , where is an exploration parameter based on the variance of the tree-based predictions, similar to Hutter, Hoos, and Leyton-Brown (2011). We select the returns with the largest optimistic reward estimates. We shorthand this approach as UCB and use the same model-based population estimation method as -greedy.

ABS Sampling. Adaptive Bin Sampling brings together sampling and bandit literatures to guarantee statistically unbiased population estimates, while enabling an explicit trade-off between reward and the variance of the estimate. In essence, ABS performs adjustable risk-proportional random sampling over optimized population strata. By maintaining probabilistic sampling, ABS can employ HT estimation to achieve an unbiased measurement of the population.

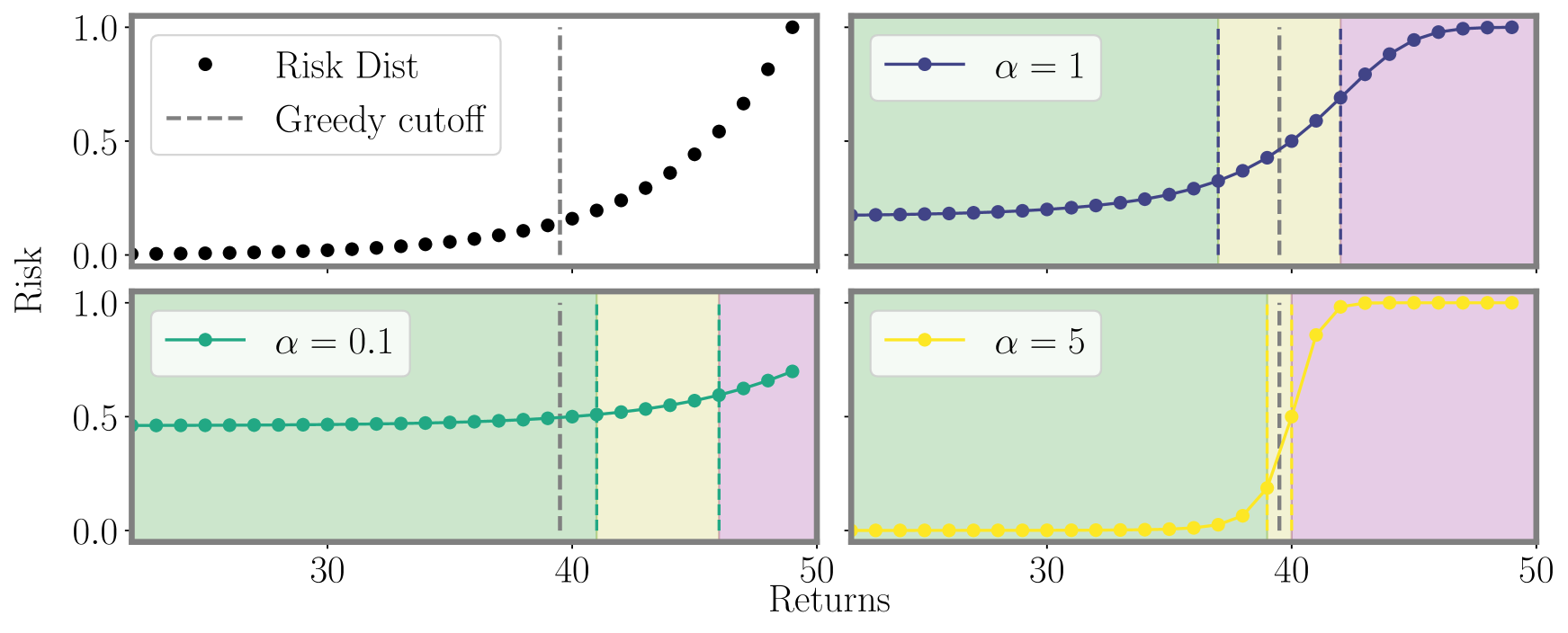

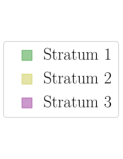

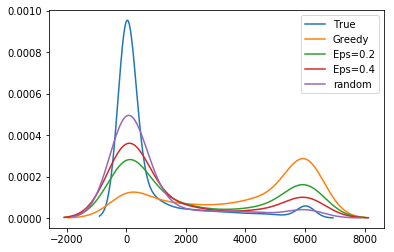

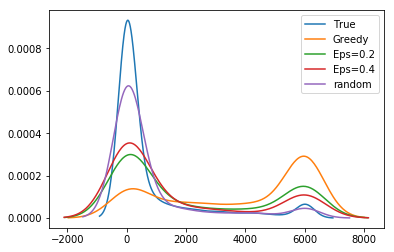

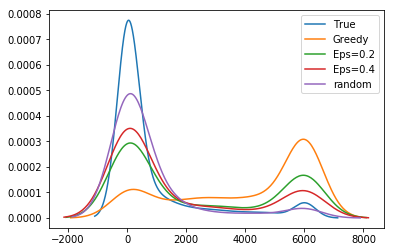

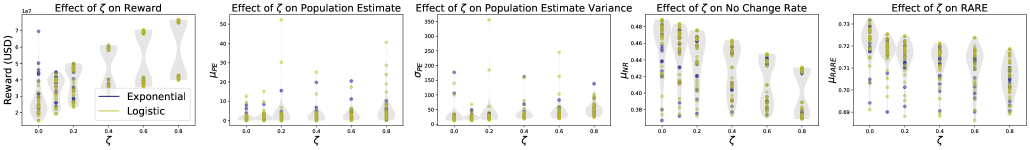

Pseudocode is given in Algorithm 1. Fix timestep and let be our budget. Let be the predicted risk for return . First we sample the top returns. To make the remaining selections, we parameterize the predictions with a mixing function intended to smoothly transition focus between the reward and variance objectives, but whose only requirement is that it be monotone (rank-preserving). For our empirical exploration we examine two such mixing functions, a logistic function, and an exponential function . is the value of the -th largest value amongst reward predictions . As decreases, approaches a uniform distribution which results in lower variance for but lower reward. As increases, the variance of increases but so too does the reward. Figure 1 provides a visualization of this.

The distribution of transformed predictions is then stratified into non-intersecting strata . We choose strata in order to minimize intra-cluster variance, such that there are at least points per bin:

| (3) |

where is the average value of the points in bin . We place a distribution over the bins by averaging the risk in each bin:

| (4) |

To make our selection, we sample times from to obtain a bin, and then we sample uniformly within that bin to choose the return. We do not recalculate after each selection, so while we are sampling without replacement at the level of returns (we cannot audit the same taxpayer twice), we are sampling with replacement at the level of bins. The major benefit of ABS is that by sampling according to the distribution , we can employ HT estimation to eliminate bias. Indeed, if is the set of arms sampled during an epoch, is an unbiased estimate of the true population, where is the probability that arm was selected (i.e., ) and is the NRP weight. Like with other HT-based methods (Potter 1990; Alexander, Dahl, and Weidman 1997), to reduce variance we also add an option for a minimum probability of sampling a bin, which we call the trim %. See Appendix N for more details, proof of unbiased estimation, and estimator variance. See Appendix U for regret bounds.

Reward Structure Models. As the data is highly non-linear and high-dimensional, we use Random Forest Regression (RFR) for our reward model. We exclude linear models from our suite of algorithms after verifying that they consistently underperform RFR (Appendix M). We do not include neural networks in this analysis as the data regime is too small. Future approaches might build on this work using pretraining methods suited for a few-shot context (Bommasani et al. 2021). We do compare to an LDA baseline (Appendix T.3). This is included both as context to our broad modeling decisions, and as an imperfect stylized proxy for one component of the current risk-based selection approach used by the IRS.

4 Evaluation Protocol

We evaluate according to three metrics: cumulative reward, percent difference of the population estimate, and the no-change rate. More details in Appendix L.2 and L.3.

Cumulative reward () is simply the total reward of all arms selected by the agent across the entire time series . It represents the total amount of under-reported tax revenue returned to the government after auditing. This is averaged across seeds and denoted as .

Percent difference (, ) is the difference between the estimated population average and the true population average: . is absolute mean percent difference across seeds (bias). is the standard deviation of the percent difference across random seeds.

No-change rate () is the percent of arms that yield no reward where we round down such that any reward $200 is considered no change . NR is of some importance. An audit that results in no adjustment can be perceived as unfair, because the taxpayer did not commit any wrongdoing (Lawsky 2008). It can have adverse effects on future compliance (Beer et al. 2015; Lederman 2018). is the average NR across seeds.

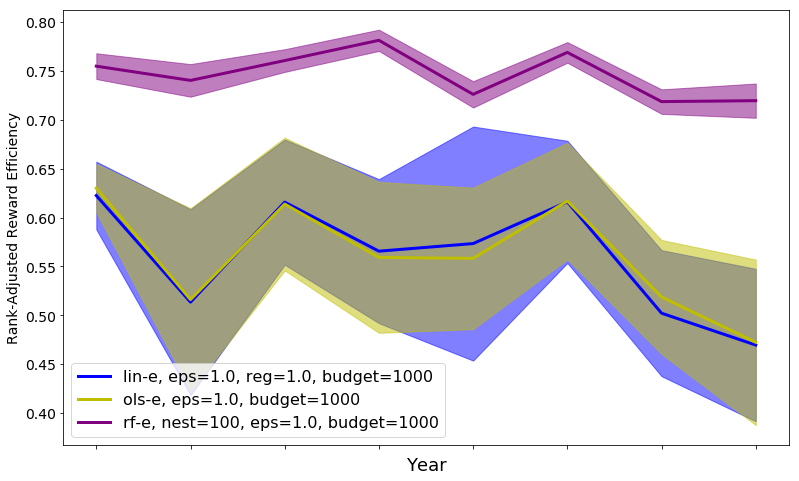

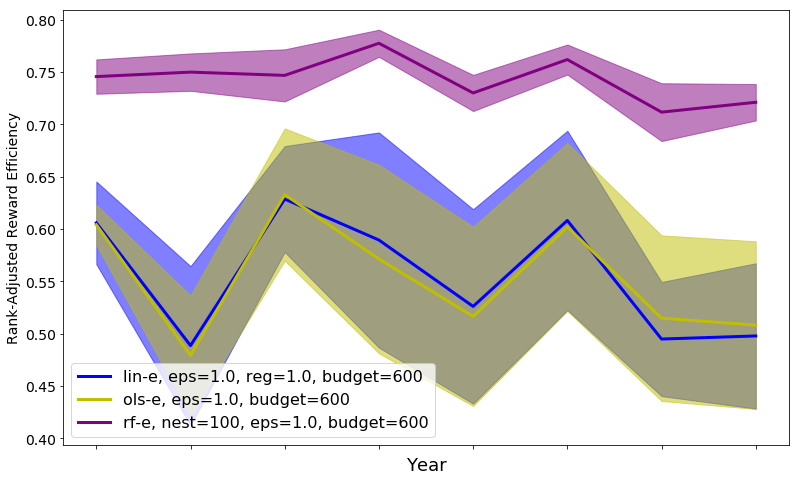

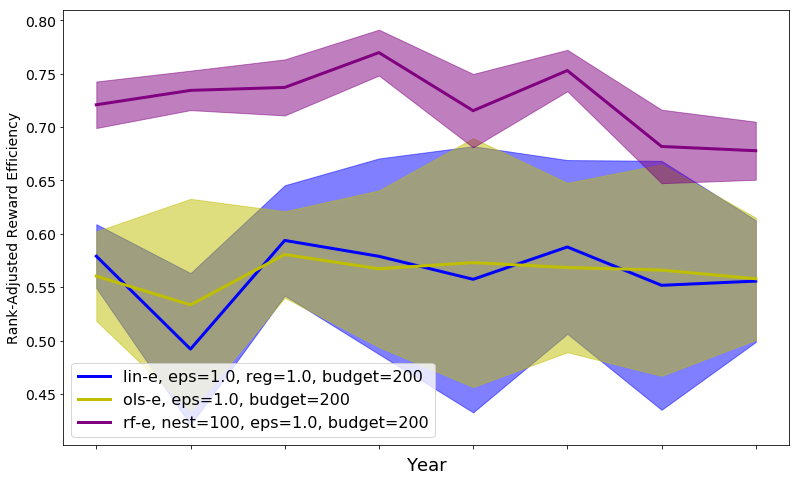

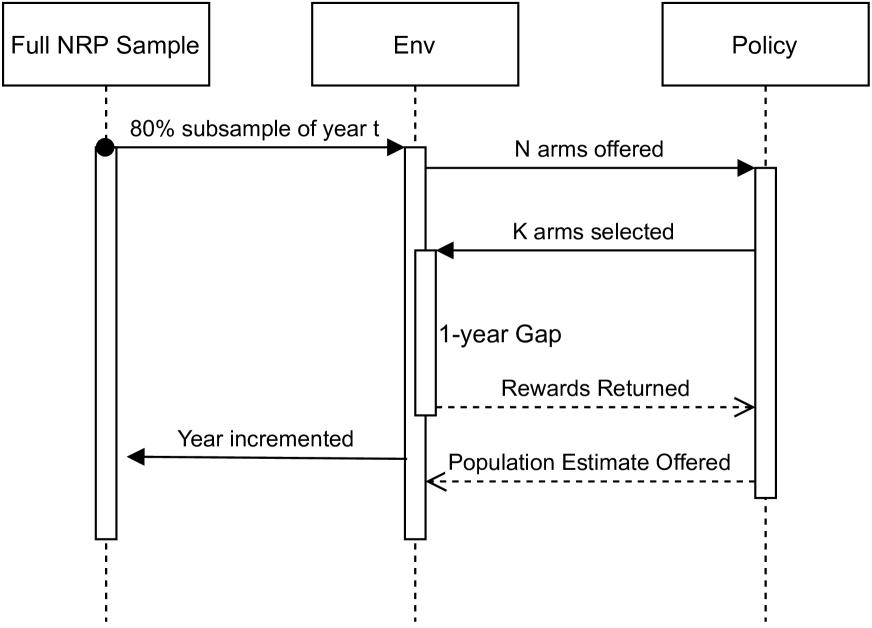

Experimental Protocol. Our evaluation protocol for all experiments follows the same pattern. For a given year we offer 80% of the NRP sample as arms for the agent to select from. We repeat this process across 20 distinct random seeds such that there are 20 unique subsampled datasets that are shared across all methods, creating a sub-sampled bootstrap for Confidence Intervals (more in Appendix S). Comparing methods seed-to-seed will be the same as comparing two methods on the same dataset. Each year, the agent has a budget of 600 arms to select from the population of 10k+ arms (description of budget selection in Appendix R). We delay the delivery of rewards for one year. This is because the majority of audits are completed and returned only after such a delay (DeBacker et al. 2018). Thus, the algorithm in year 2008 will only make decisions with the information from 2006. Because of this delay the first two years are randomly sampled for the entire budget (i.e., there is a warm start). After receiving rewards for a given year, the agent must then provide a population estimate of the overall population average for the reward (i.e., the average tax adjustment after audit). This process repeats until 2014, the final year available in our NRP dataset (diagram in Appendix O).

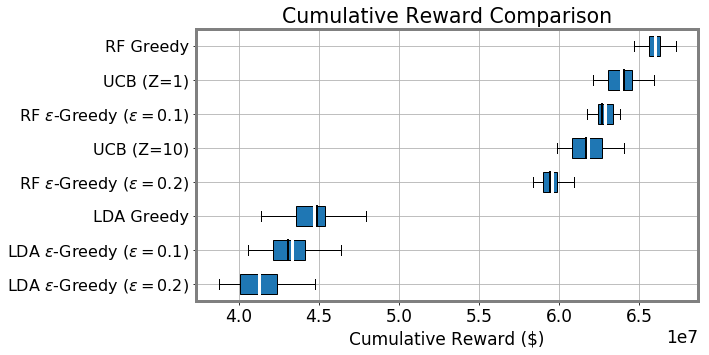

Best Reward Settings Policy Unbiased ABS-1 $41.5M∗ 0.4 ✓ 31.0 37.6% -only $41.3M∗ 4.3✓ 37.4 38.3% ABS-2 $40.5M∗ 0.6✓ 24.5 38.3% Random $12.7M 1.5✓ 14.7 53.1% Biased Greedy $43.6M∗ 16.4 ✗ 8.8 36.5% UCB-1 $42.4M∗ 15.3 ✗ 9.4 38.6% -Greedy $41.3M∗ 6.1 ✗ 7.5 38.3% UCB-2 $40.7M∗ 15.6 ✗ 10.21 40.7%

5 Results

We highlight several key findings with additional results and sensitivity analyses in Appendix T.

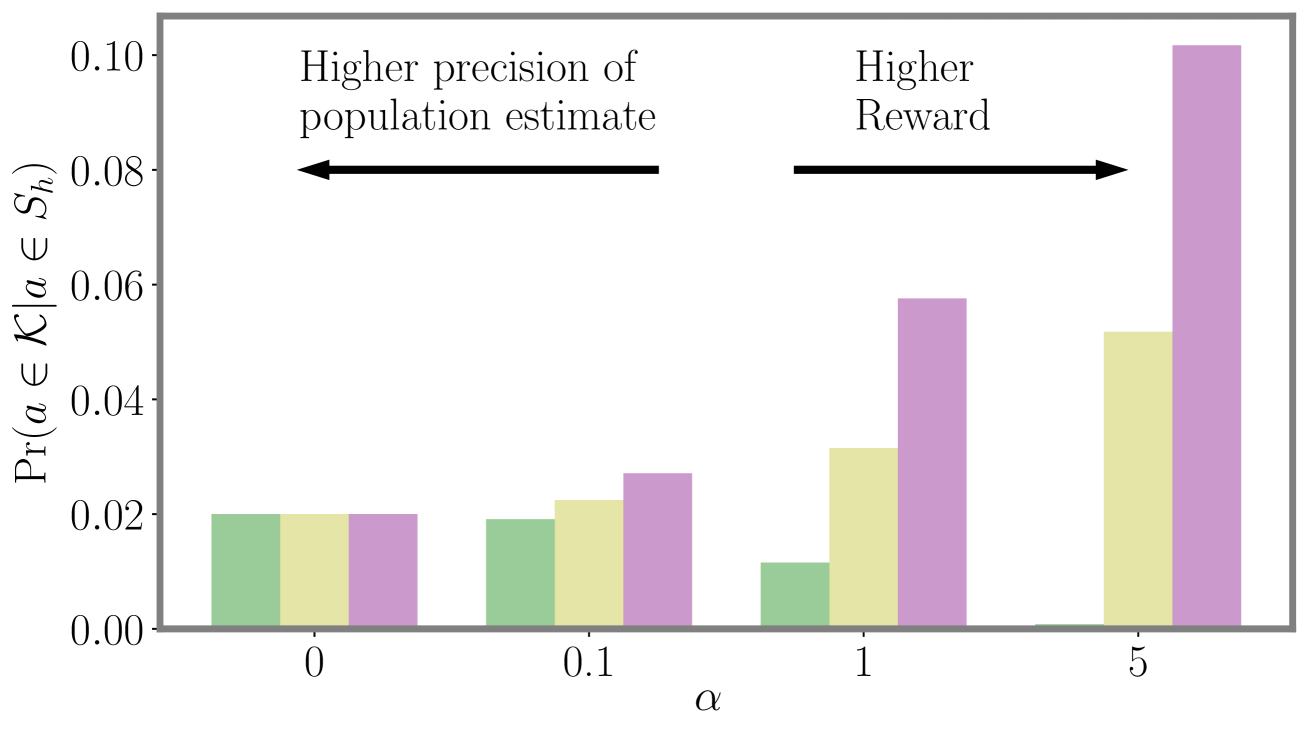

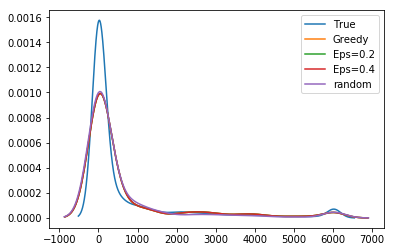

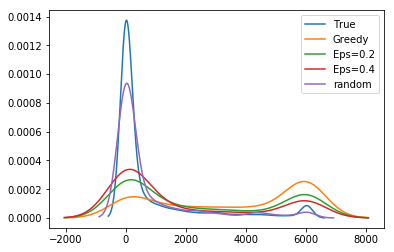

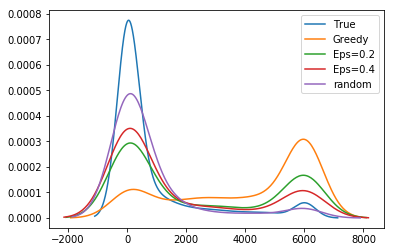

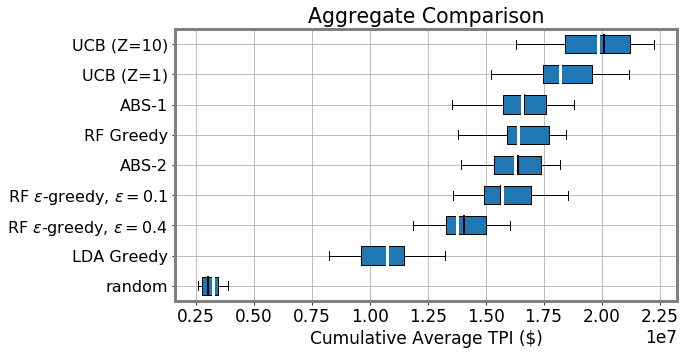

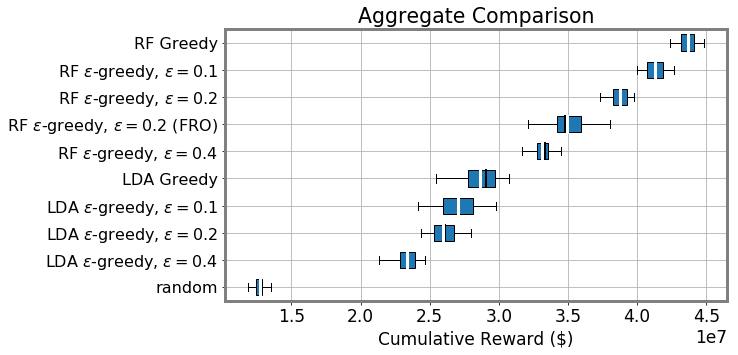

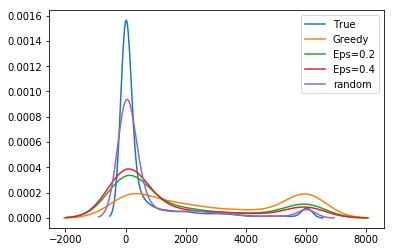

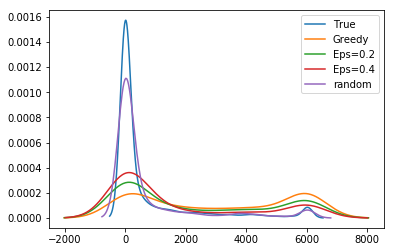

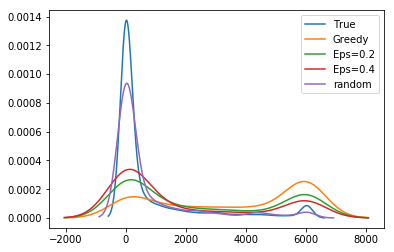

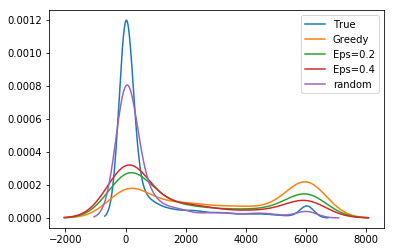

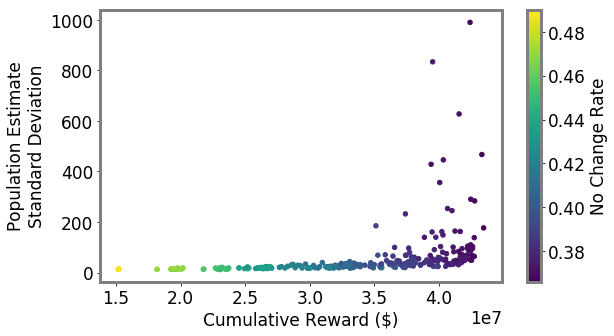

Unbiased population estimates are possible with little impact to reward. ABS sampling can achieve similar returns to the best performing methods in terms of audit selection, while yielding an unbiased population estimate (see Table 1). Conversely, greedy, -greedy, and UCB approaches – which use a model-based population estimation method – do not achieve unbiased population estimates. Others have noted that adaptively collected data can lead to biased models (Nie et al. 2018; Neel and Roth 2018). In many public sector settings provably unbiased methods like ABS are required. For -greedy, using the -sample only would also achieve an unbiased estimate, yet due to its small sample size the variance is prohibitively high. ABS reduces variance by 16% over the best -only method, yielding even better reward. Trading off $1M over 9 years improves variance over -Greedy (-only) by 35%. It is possible to reduce this variance even further at the cost of some more reward (see Figure 2). Note, due to an extremely small sample size, though the sample is unbiased in theory, we see some minor bias in practice. Model-based estimates are significantly lower variance, but biased. This may be because models re-use information across years, whereas ABS does not. Future research could re-use information in ABS to reduce variance, perhaps with a model’s assistance. Nonetheless, we emphasize that model-based estimates without unbiasedness guarantees are unacceptable for many public sector uses from a policy perspective.

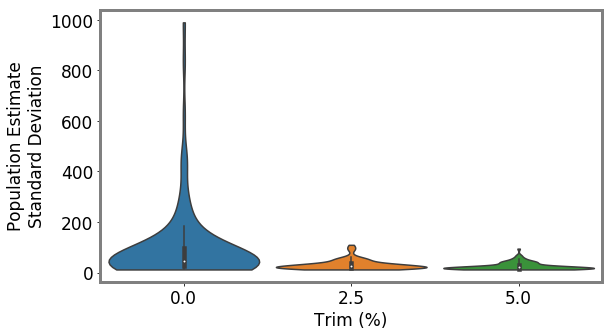

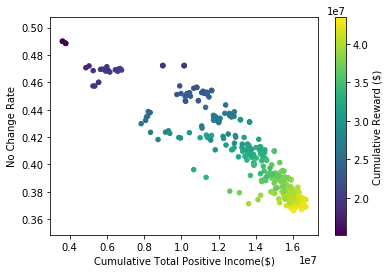

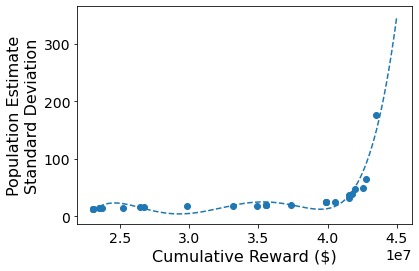

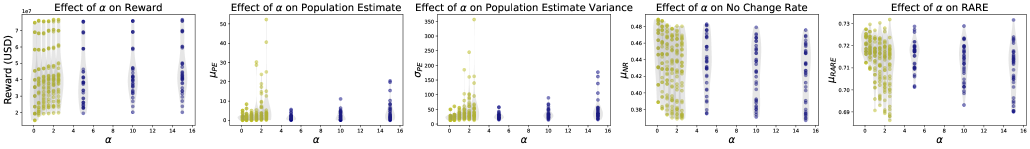

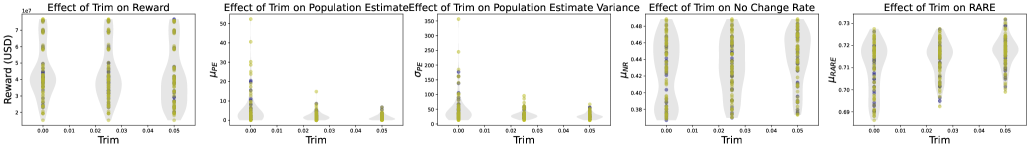

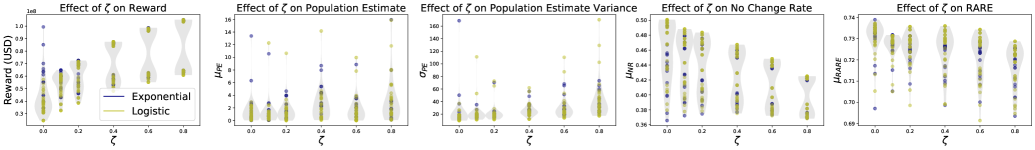

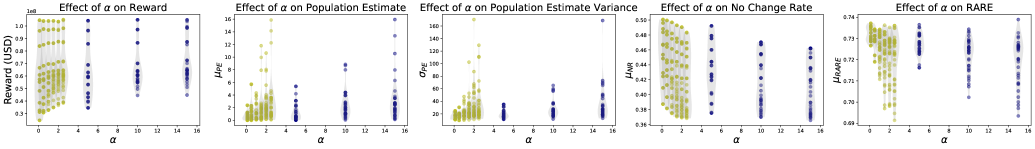

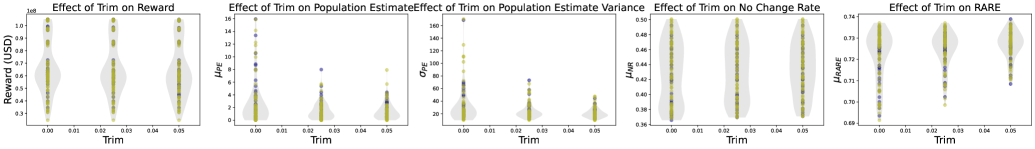

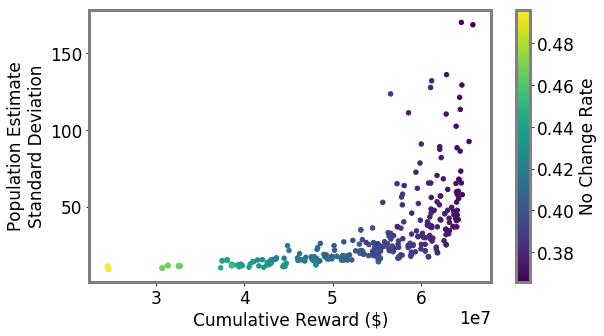

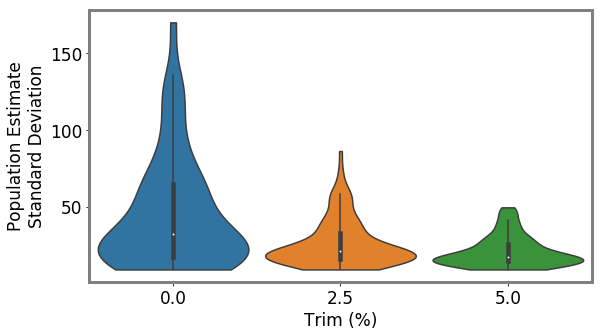

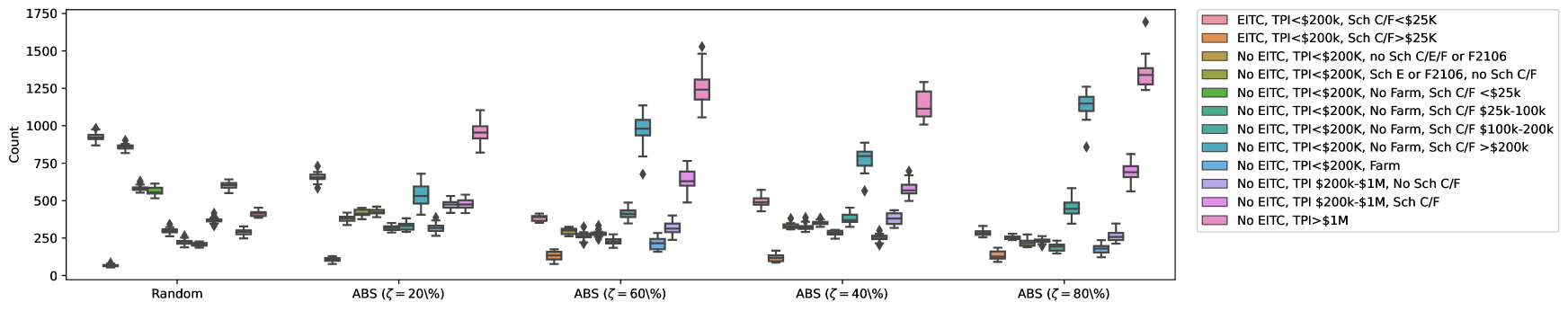

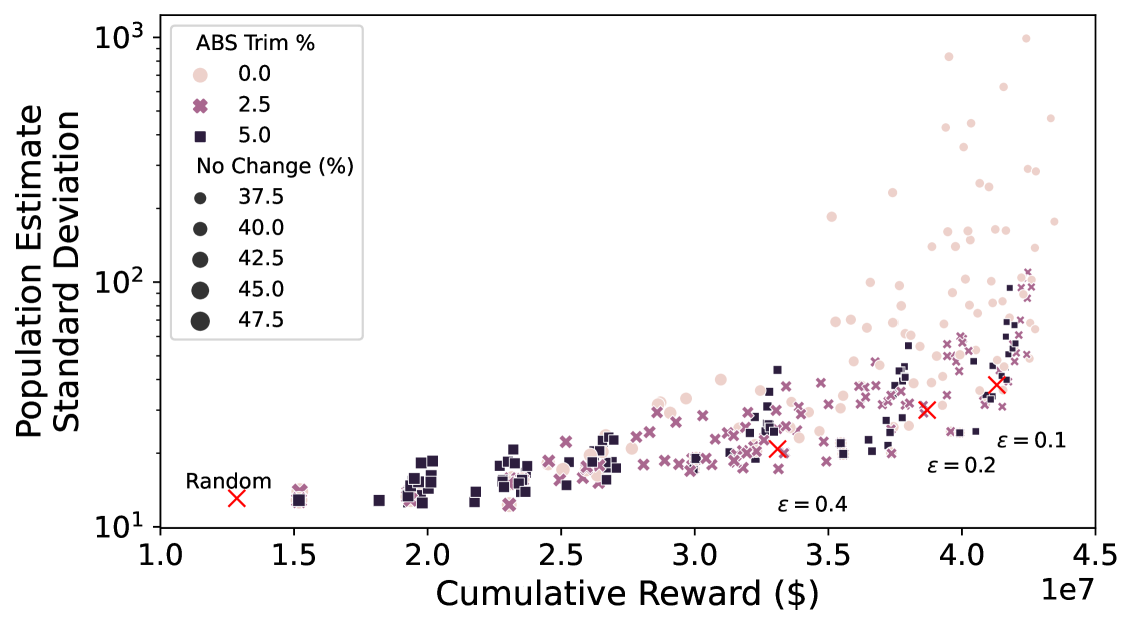

ABS allows fine-grained control over variance-reward trade-off. We sample a grid of hyperparameters for ABS (see Appendix P). Figure 2 shows that more hyperparameter settings close to optimal rewards have higher variance in population estimates. We can control this variance with the trimming mechanism. This ensures that each bin of the risk distribution will be sampled some minimum amount. Figure 2 also shows that when we add trimming, we can retain large rewards and unbiased population estimates. Top configurations (Table 1) can keep variance down to only 1.7x that of a random sample, while yielding 3.2x reward. While -greedy with the random sample only does surprisingly well, optimal ABS configurations have a better Pareto front. We can fit a function to this Pareto front and estimate the marginal value of the reward-variance trade-off (see Appendix T.2).

Greedy is not all you need. Greedy surprisingly achieves more optimal reward compared to all other methods (see Table 1). This aligns with prior work suggesting that a purely greedy approach in contextual bandits might be enough to induce sufficient exploration under highly varied contexts (Bietti, Agarwal, and Langford 2018; Kannan et al. 2018; Bastani, Bayati, and Khosravi 2021). Here, there are several intrinsic sources of exploration that may cause this result: intrinsic model error, covariate drift (see Appendix Table 5), differences in tax filing compositions, and the fact that our population of arms already come from a stratified random sample (changing in composition year-to-year).

Figure 2 (bottom) demonstrates greedy sampling’s implicit exploration for one random seed. As the years progress, greedy is (correctly) more biased toward sampling arms with high rewards. Nonetheless, it yields a large number of arms that are the same as a random sample would yield. This inherent exploration backs the hypothesis that the test sample is highly stochastic, leading to implicit exploration. It is worth emphasizing that in a larger population and with a larger budget, greedy’s exploration may not be sufficient and more explicit exploration may be needed. The key difference from our result and prior work showing greedy’s surprising performance (Bietti, Agarwal, and Langford 2018; Kannan et al. 2018; Bastani, Bayati, and Khosravi 2021) is our additional population estimation objective. The greedy policy has a significant bias when it comes to model-based population estimation. This bias is similar – but not identical – to the bias reported in other adaptive data settings (Thrun and Schwartz 1993; Nie et al. 2018; Shin, Ramdas, and Rinaldo 2021; Farquhar, Gal, and Rainforth 2021). Even a 10% random sample – significantly underpowered for typical sampling-based estimation – can reduce this bias by more than 2.5 (see Table 1). Even if greedy can be optimal for a high-variance contextual bandit, it is not optimal for the optimize-and-estimate setting. -greedy achieves a compromise between variance that may be more acceptable in settings when some bias is permitted, but bias is not desirable in most public sector settings. We also show that RFR regressors significantly outperform LDA and that incorporating non-random data helps (Appendix T.3). This is a stylized proxy of the status quo system that uses a small -only sample (NRP) for population estimates and an LDA-like algorithm (DIF) for selection.

A more focused approach audits higher cumulative total positive income. A key motivator for our work is that inefficiently-allocated randomness in audit selection will not only be suboptimal for the government, but could impose unnecessary burdens on taxpayers (Lawsky 2008; Davis-Nozemack 2012). An issue that has received increasing attention by policymakers and commentators in recent years concerns the fair allocation of audits by income (Kiel 2019; Internal Revenue Service 2021; Treasury 2021). Although we do not take a normative position on the precise contours of a fair distribution of audits, we examine how alternative models shape the income distribution of audited taxpayers.

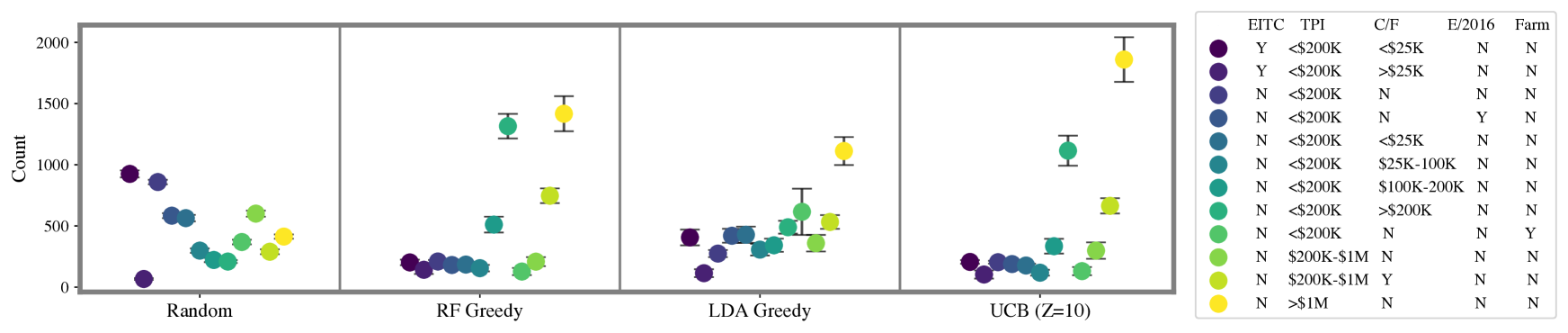

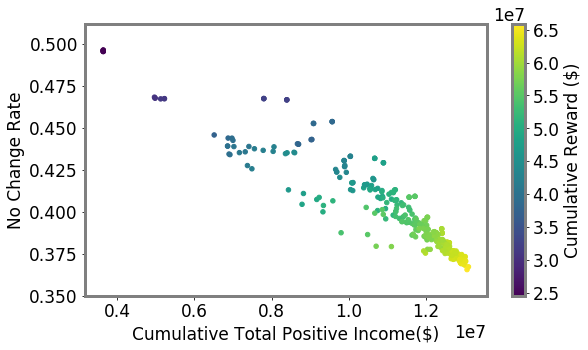

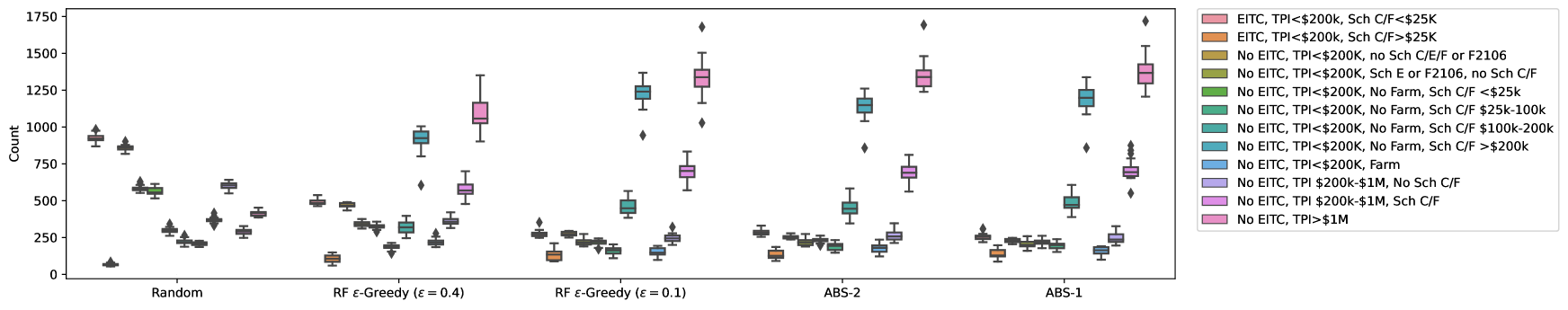

As shown in Figure 3, we find that as methods become more optimal we see an increase in the total positive income (TPI) of the individuals selected for audit (RF Greedy selects between $1.8M and $9.4M more cumulative TPI than LDA Greedy, effect size 95% CI matched by seed). We also show the distribution of ABS hyperparameter settings we sampled. As the settings are more likely to increase reward and decrease no change rates, the cumulative TPI increases. This indicates that taxpayers with lower TPI are less likely to be audited as models are more likely to sample in the higher range of the risk distribution. We confirm this in Figure 3 (top) which shows the distribution of activity classes sampled by different approaches. These classes are used as strata in the NRP sample. The UCB and RF Greedy approaches are more likely to audit taxpayers with more than $1M in TPI (with UCB sampling this class significantly more, likely due to heteroskedasticity). More optimal approaches also significantly sample those with $200K in TPI, but more than $200K reported on their Schedule C or F tax return forms (used to report business and farm income, respectively).

Errors are heteroskedastic, causing difficulties in using model-based optimism methods. Surprisingly, our optimism-based approach audits tax returns with higher TPI more often ($1.2M to $5.8M million cumulative TPI more than RF Greedy) despite yielding similar returns as the greedy approach. We believe this is because adjustments and model errors are heteroskedastic. Though TPI is correlated with the adjustment amount (Pearson ), all errors across model fits were heteroskedastic according to a Breusch–Pagan test (). A potential source of large uncertainty estimates in the high income range could be because: (1) there are fewer datapoints in that part of the feature space; (2) NRP audits may not give an accurate picture of misreporting at the higher part of the income space, resulting in larger variance and uncertainty (Guyton et al. 2021); or (3) additional features are needed to improve precision in part of the state space. This makes it difficult to use some optimism-based approaches since there is a confound between aleatoric and epistemic uncertainty. As a result, optimism-based approaches audit higher income individuals more often, but do not necessarily achieve higher returns. This poses another interesting challenge for future research.

6 Discussion

We have introduced the optimize-and-estimate structured bandit setting. The setting is motivated by common features of public sector applications (e.g., multiple objectives, batched selection), where there is wide applicability of sequential decision making, but, to date, limited understanding of the unique methodological challenges. We empirically investigate the use of structured bandits in the IRS setting and show that ABS conforms to IRS specifications (unbiased estimation) and enables parties to explicitly trade off population estimation variance and reward maximization. This framework could help address longstanding concerns in the real-world setting of IRS detection of tax evasion. It could shift audits toward tax returns with larger understatements (correlating with more total positive income) and recover more revenue than the status quo, while maintaining an unbiased population estimate. Though there are other real-world objectives to consider, such as the effect of audit policies on tax evasion, our results suggest that unifying audit selection with estimation may help ensure that processes are as fair, optimal, and robust as possible. We hope that the methods we describe here are a starting point for both additional research into sequential decision-making in public policy and new research into optimize-and-estimate structured bandits.

Acknowledgements

We would like to thank Emily Black, Jason DeBacker, Hadi Elzayn, Tom Hertz, Andrew Johns, Dan Jurafsky, Mansheej Paul, Ahmad Qadri, Evelyn Smith, and Ben Swartz for helpful discussions. This work was supported by the Hoffman Yee program at Stanford’s Institute for Human-Centered Artificial Intelligence and Arnold Ventures. PH is supported by the Open Philanthropy AI Fellowship. This work was conducted while BA was at Stanford University. The findings, interpretations, and conclusions expressed in this paper are entirely those of the authors and do not necessarily reflect the views or the official positions of the U.S. Department of the Treasury or the Internal Revenue Service. Any taxpayer data used in this research was kept in a secured Treasury or IRS data repository, and all results have been reviewed to ensure no confidential information is disclosed.

References

- Abbasi-Yadkori, Pál, and Szepesvári (2011) Abbasi-Yadkori, Y.; Pál, D.; and Szepesvári, C. 2011. Improved algorithms for linear stochastic bandits. Advances in Neural Information Processing Systems, 24.

- Abe and Long (1999) Abe, N.; and Long, P. M. 1999. Associative reinforcement learning using linear probabilistic concepts. In ICML, 3–11. Citeseer.

- Agarwal et al. (2021) Agarwal, R.; Schwarzer, M.; Castro, P. S.; Courville, A. C.; and Bellemare, M. 2021. Deep reinforcement learning at the edge of the statistical precipice. Advances in Neural Information Processing Systems, 34.

- Agrawal and Devanur (2015) Agrawal, S.; and Devanur, N. R. 2015. Linear contextual bandits with knapsacks. arXiv preprint arXiv:1507.06738.

- Alexander, Dahl, and Weidman (1997) Alexander, C. H.; Dahl, S.; and Weidman, L. 1997. Making estimates from the american community survey. In Annual Meeting of the American Statistical Association (ASA), Anaheim, CA.

- Andreoni, Erard, and Feinstein (1998) Andreoni, J.; Erard, B.; and Feinstein, J. 1998. Tax compliance. Journal of Economic Literature, 36(2): 818–860.

- Ash, Galletta, and Giommoni (2021) Ash, E.; Galletta, S.; and Giommoni, T. 2021. A Machine Learning Approach to Analyze and Support Anti-Corruption Policy.

- Bastani, Bayati, and Khosravi (2021) Bastani, H.; Bayati, M.; and Khosravi, K. 2021. Mostly exploration-free algorithms for contextual bandits. Management Science, 67(3): 1329–1349.

- Beer et al. (2015) Beer, S.; Kasper, M.; Kirchler, E.; and Erard, B. 2015. Audit Impact Study. Technical report, National Taxpayer Advocate.

- Belnap et al. (2020) Belnap, A.; Hoopes, J. L.; Maydew, E. L.; and Turk, A. 2020. Real effects of tax audits: Evidence from firms randomly selected for IRS examination. SSRN.

- Bertomeu et al. (2021) Bertomeu, J.; Cheynel, E.; Floyd, E.; and Pan, W. 2021. Using machine learning to detect misstatements. Review of Accounting Studies, 26(2): 468–519.

- Biecek (2018) Biecek, P. 2018. DALEX: explainers for complex predictive models in R. The Journal of Machine Learning Research, 19(1): 3245–3249.

- Bietti, Agarwal, and Langford (2018) Bietti, A.; Agarwal, A.; and Langford, J. 2018. A contextual bandit bake-off. arXiv preprint arXiv:1802.04064.

- Bommasani et al. (2021) Bommasani, R.; Hudson, D. A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M. S.; Bohg, J.; Bosselut, A.; Brunskill, E.; et al. 2021. On the Opportunities and Risks of Foundation Models. arXiv preprint arXiv:2108.07258.

- Bouneffouf and Rish (2019) Bouneffouf, D.; and Rish, I. 2019. A survey on practical applications of multi-armed and contextual bandits. arXiv preprint arXiv:1904.10040.

- Bouthillier et al. (2021) Bouthillier, X.; Delaunay, P.; Bronzi, M.; Trofimov, A.; Nichyporuk, B.; Szeto, J.; Mohammadi Sepahvand, N.; Raff, E.; Madan, K.; Voleti, V.; et al. 2021. Accounting for variance in machine learning benchmarks. Proceedings of Machine Learning and Systems, 3.

- Caria et al. (2020) Caria, S.; Kasy, M.; Quinn, S.; Shami, S.; Teytelboym, A.; et al. 2020. An adaptive targeted field experiment: Job search assistance for refugees in Jordan.

- Chugg et al. (2022) Chugg, B.; Henderson, P.; Goldin, J.; and Ho, D. E. 2022. Entropy Regularization for Population Estimation. arXiv preprint arXiv:2208.11747.

- Chugg and Ho (2021) Chugg, B.; and Ho, D. E. 2021. Reconciling Risk Allocation and Prevalence Estimation in Public Health Using Batched Bandits. NeurIPS workshop on Machine Learning in Public Health.

- Congressional Budget Office (2020) Congressional Budget Office. 2020. Trends in the Internal Revenue Service’s Funding and Enforcement.

- Davis-Nozemack (2012) Davis-Nozemack, K. 2012. Unequal burdens in EITC compliance. Law & Ineq., 31: 37.

- de Roux et al. (2018) de Roux, D.; Perez, B.; Moreno, A.; Villamil, M. d. P.; and Figueroa, C. 2018. Tax fraud detection for under-reporting declarations using an unsupervised machine learning approach. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 215–222.

- DeBacker, Heim, and Tran (2015) DeBacker, J.; Heim, B. T.; and Tran, A. 2015. Importing corruption culture from overseas: Evidence from corporate tax evasion in the United States. Journal of Financial Economics, 117(1): 122–138.

- DeBacker et al. (2018) DeBacker, J.; Heim, B. T.; Tran, A.; and Yuskavage, A. 2018. The effects of IRS audits on EITC claimants. National Tax Journal, 71(3): 451–484.

- Deliu, Williams, and Villar (2021) Deliu, N.; Williams, J. J.; and Villar, S. S. 2021. Efficient Inference Without Trading-off Regret in Bandits: An Allocation Probability Test for Thompson Sampling. arXiv preprint arXiv:2111.00137.

- Dickey, Blanke, and Seaton (2019) Dickey, G.; Blanke, S.; and Seaton, L. 2019. Machine Learning in Auditing. The CPA Journal, 16–21.

- Dimakopoulou et al. (2017) Dimakopoulou, M.; Zhou, Z.; Athey, S.; and Imbens, G. 2017. Estimation considerations in contextual bandits. arXiv preprint arXiv:1711.07077.

- Drugan and Nowé (2014) Drugan, M. M.; and Nowé, A. 2014. Scalarization based Pareto optimal set of arms identification algorithms. In 2014 International Joint Conference on Neural Networks (IJCNN), 2690–2697. IEEE.

- Dularif et al. (2019) Dularif, M.; Sutrisno, T.; Saraswati, E.; et al. 2019. Is deterrence approach effective in combating tax evasion? A meta-analysis. Problems and Perspectives in Management, 17(2): 93–113.

- Erard and Feinstein (2011) Erard, B.; and Feinstein, J. S. 2011. The individual income reporting gap: what we see and what we don’t. In IRS-TPC Research Conference on New Perspectives in Tax Administration.

- Erraqabi et al. (2017) Erraqabi, A.; Lazaric, A.; Valko, M.; Brunskill, E.; and Liu, Y.-E. 2017. Trading off rewards and errors in multi-armed bandits. In Artificial Intelligence and Statistics, 709–717. PMLR.

- Esteban et al. (2019) Esteban, J.; McRoberts, R. E.; Fernández-Landa, A.; Tomé, J. L.; and Næsset, E. 2019. Estimating forest volume and biomass and their changes using random forests and remotely sensed data. Remote Sensing, 11(16): 1944.

- Farquhar, Gal, and Rainforth (2021) Farquhar, S.; Gal, Y.; and Rainforth, T. 2021. On statistical bias in active learning: How and when to fix it. arXiv preprint arXiv:2101.11665.

- Foster and Rakhlin (2020) Foster, D. J.; and Rakhlin, A. 2020. Beyond UCB: Optimal and efficient contextual bandits with regression oracles. arXiv preprint arXiv:2002.04926.

- Foster et al. (2020) Foster, D. J.; Rakhlin, A.; Simchi-Levi, D.; and Xu, Y. 2020. Instance-dependent complexity of contextual bandits and reinforcement learning: A disagreement-based perspective. arXiv preprint arXiv:2010.03104.

- Government Accountability Office (2002) Government Accountability Office. 2002. New Compliance Research Effort Is on Track, but Important Work Remains. https://www.gao.gov/assets/gao-02-769.pdf. United States General Accounting Office: Report to the Committee on Finance, U.S. Senate. Online; Accessed Jan 10, 2022.

- Government Accountability Office (2003) Government Accountability Office. 2003. IRS Is Implementing the National Research Program as Planned. https://www.gao.gov/assets/gao-03-614.pdf. United States General Accounting Office: Report to the Committee on Finance, U.S. Senate. Online; Accessed Jan 10, 2022.

- Guo et al. (2021) Guo, W.; Agrawal, K. K.; Grover, A.; Muthukumar, V.; and Pananjady, A. 2021. Learning from an Exploring Demonstrator: Optimal Reward Estimation for Bandits. arXiv preprint arXiv:2106.14866.

- Guyton et al. (2020) Guyton, J.; Langetieg, P.; Reck, D.; Risch, M.; and Zucman, G. 2020. Tax Evasion by the Wealthy: Measurement and Implications. In Measuring and Understanding the Distribution and Intra/Inter-Generational Mobility of Income and Wealth. University of Chicago Press.

- Guyton et al. (2021) Guyton, J.; Langetieg, P.; Reck, D.; Risch, M.; and Zucman, G. 2021. Tax Evasion at the Top of the Income Distribution: Theory and Evidence. Technical report, National Bureau of Economic Research.

- Guyton et al. (2018) Guyton, J.; Leibel, K.; Manoli, D. S.; Patel, A.; Payne, M.; and Schafer, B. 2018. The effects of EITC correspondence audits on low-income earners. Technical report, National Bureau of Economic Research.

- Henderson et al. (2021) Henderson, P.; Chugg, B.; Anderson, B.; and Ho, D. E. 2021. Beyond Ads: Sequential Decision-Making Algorithms in Law and Public Policy. arXiv preprint arXiv:2112.06833.

- Henderson et al. (2020) Henderson, P.; Hu, J.; Romoff, J.; Brunskill, E.; Jurafsky, D.; and Pineau, J. 2020. Towards the systematic reporting of the energy and carbon footprints of machine learning. Journal of Machine Learning Research, 21(248): 1–43.

- Henderson et al. (2018) Henderson, P.; Islam, R.; Bachman, P.; Pineau, J.; Precup, D.; and Meger, D. 2018. Deep reinforcement learning that matters. In Proceedings of the AAAI conference on artificial intelligence, volume 32.

- Ho and Xiang (2020) Ho, D. E.; and Xiang, A. 2020. Affirmative algorithms: The legal grounds for fairness as awareness. U. Chi. L. Rev. Online, 134.

- Horvitz and Thompson (1952) Horvitz, D. G.; and Thompson, D. J. 1952. A generalization of sampling without replacement from a finite universe. Journal of the American statistical Association, 47(260): 663–685.

- Howard et al. (2020) Howard, B.; Lykke, L.; Pinski, D.; and Plumley, A. 2020. Can Machine Learning Improve Correspondence Audit Case Selection?

- Huang and Lin (2016) Huang, K.-H.; and Lin, H.-T. 2016. Linear upper confidence bound algorithm for contextual bandit problem with piled rewards. In Pacific-Asia Conference on Knowledge Discovery and Data Mining, 143–155. Springer.

- Hutter, Hoos, and Leyton-Brown (2011) Hutter, F.; Hoos, H. H.; and Leyton-Brown, K. 2011. Sequential model-based optimization for general algorithm configuration. In International conference on learning and intelligent optimization, 507–523. Springer.

- Internal Revenue Service (2016) Internal Revenue Service. 2016. Federal tax compliance research: Tax gap estimates for tax years 2008–2010.

- Internal Revenue Service (2019) Internal Revenue Service. 2019. Federal tax compliance research: Tax gap estimates for tax years 2011–2013.

- Internal Revenue Service (2021) Internal Revenue Service. 2021. IRS Update on Audits. https://www.irs.gov/newsroom/irs-update-on-audits. Online; Accessed Jan 10, 2022.

- Internal Revenue Service (2022) Internal Revenue Service. 2022.

- Järvelin and Kekäläinen (2002) Järvelin, K.; and Kekäläinen, J. 2002. Cumulated gain-based evaluation of IR techniques. ACM Transactions on Information Systems (TOIS), 20(4): 422–446.

- Johns and Slemrod (2010) Johns, A.; and Slemrod, J. 2010. The distribution of income tax noncompliance. National Tax Journal, 63(3): 397.

- Joseph et al. (2018) Joseph, M.; Kearns, M.; Morgenstern, J.; Neel, S.; and Roth, A. 2018. Meritocratic fairness for infinite and contextual bandits. In Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society, 158–163.

- Kannan et al. (2018) Kannan, S.; Morgenstern, J. H.; Roth, A.; Waggoner, B.; and Wu, Z. S. 2018. A smoothed analysis of the greedy algorithm for the linear contextual bandit problem. Advances in Neural Information Processing Systems, 31.

- Kasy and Sautmann (2021) Kasy, M.; and Sautmann, A. 2021. Adaptive treatment assignment in experiments for policy choice. Econometrica, 89(1): 113–132.

- Katerenchuk and Rosenberg (2018) Katerenchuk, D.; and Rosenberg, A. 2018. RankDCG: Rank-ordering evaluation measure. arXiv preprint arXiv:1803.00719.

- Kato et al. (2020) Kato, M.; Ishihara, T.; Honda, J.; and Narita, Y. 2020. Efficient Adaptive Experimental Design for Average Treatment Effect Estimation.

- Kiel (2019) Kiel, P. 2019. It’s Getting Worse: The IRS Now Audits Poor Americans at About the Same Rate as the Top 1%. https://www.propublica.org/article/irs-now-audits-poor-americans-at-about-the-same-rate-as-the-top-1-percent. Online; Accessed Jan 10, 2022.

- Kotsiantis et al. (2006) Kotsiantis, S.; Koumanakos, E.; Tzelepis, D.; and Tampakas, V. 2006. Predicting fraudulent financial statements with machine learning techniques. In Hellenic Conference on Artificial Intelligence, 538–542. Springer.

- Lacoste et al. (2019) Lacoste, A.; Luccioni, A.; Schmidt, V.; and Dandres, T. 2019. Quantifying the Carbon Emissions of Machine Learning. arXiv preprint arXiv:1910.09700.

- Lansdell, Triantafillou, and Kording (2019) Lansdell, B.; Triantafillou, S.; and Kording, K. 2019. Rarely-switching linear bandits: optimization of causal effects for the real world. arXiv preprint arXiv:1905.13121.

- Lattimore and Szepesvári (2020) Lattimore, T.; and Szepesvári, C. 2020. Bandit algorithms. Cambridge University Press.

- Lawsky (2008) Lawsky, S. B. 2008. Fairly random: On compensating audited taxpayers. Conn. L. Rev., 41: 161.

- Lederman (2018) Lederman, L. 2018. Does enforcement reduce voluntary tax compliance. BYU L. Rev., 623.

- Li et al. (2010) Li, L.; Chu, W.; Langford, J.; and Schapire, R. E. 2010. A contextual-bandit approach to personalized news article recommendation. In Proceedings of the 19th international conference on World wide web, 661–670.

- Liu et al. (2014) Liu, Y.-E.; Mandel, T.; Brunskill, E.; and Popovic, Z. 2014. Trading Off Scientific Knowledge and User Learning with Multi-Armed Bandits. In EDM, 161–168.

- Lowe (1976) Lowe, V. L. 1976. Statement Before the Subcommittee on Oversight House Committee on Ways and Means on How the Internal Revenue Service Selects and Audits Individual Income Tax Returns.

- Marr and Murray (2016) Marr, C.; and Murray, C. 2016. IRS funding cuts compromise taxpayer service and weaken enforcement. http://www. cbpp. org/sites/default/files/atoms/files/6-25-14tax. pdf. Last accessed August, 29: 2016.

- Matta et al. (2016) Matta, J. C. D. L.; Guyton, J.; Hodge II, R.; Langetieg, P.; Orlett, S.; Payne, M.; Qadri, A.; Rupert, L.; Schafer, B.; Turk, A.; and Vigil, M. 2016. Understanding the Nonfi ler/Late Filer: Preliminary Findings. 6th Annual Joint Research Conference on Tax Administration Co-Sponsored by the IRS and the Urban-Brookings Tax Policy Center.

- Mersereau, Rusmevichientong, and Tsitsiklis (2009) Mersereau, A. J.; Rusmevichientong, P.; and Tsitsiklis, J. N. 2009. A structured multiarmed bandit problem and the greedy policy. IEEE Transactions on Automatic Control, 54(12): 2787–2802.

- Mittal, Reich, and Mahajan (2018) Mittal, S.; Reich, O.; and Mahajan, A. 2018. Who is bogus? using one-sided labels to identify fraudulent firms from tax returns. In Proceedings of the 1st ACM SIGCAS Conference on Computing and Sustainable Societies, 1–11.

- Mukherjee, Tripathy, and Nowak (2020) Mukherjee, S.; Tripathy, A.; and Nowak, R. 2020. Generalized Chernoff Sampling for Active Learning and Structured Bandit Algorithms. arXiv preprint arXiv:2012.08073.

- Neel and Roth (2018) Neel, S.; and Roth, A. 2018. Mitigating bias in adaptive data gathering via differential privacy. In International Conference on Machine Learning, 3720–3729. PMLR.

- Nie et al. (2018) Nie, X.; Tian, X.; Taylor, J.; and Zou, J. 2018. Why adaptively collected data have negative bias and how to correct for it. In International Conference on Artificial Intelligence and Statistics, 1261–1269. PMLR.

- Nika, Elahi, and Tekin (2021) Nika, A.; Elahi, S.; and Tekin, C. 2021. Contextual Combinatorial Volatile Bandits via Gaussian Processes. arXiv preprint arXiv:2110.02248.

- Office of Management and Budget (2018) Office of Management and Budget. 2018. Requirements for Payment Integrity Improvement. https://www.whitehouse.gov/wp-content/uploads/2018/06/M-18-20.pdf. Executive Office of the President. Online; Accessed Jan 10, 2022.

- Office of Management and Budget (2021) Office of Management and Budget. 2021. Requirements for Payment Integrity Improvement. https://www.whitehouse.gov/wp-content/uploads/2021/03/M-21-19.pdf. Executive Office of the President. Online; Accessed Jan 10, 2022.

- Pandey and Olston (2006) Pandey, S.; and Olston, C. 2006. Handling advertisements of unknown quality in search advertising. Advances in Neural Information Processing Systems, 19.

- Politis, Romano, and Wolf (1999) Politis, D.; Romano, J. P.; and Wolf, M. 1999. Subsampling.

- Politis (2003) Politis, D. N. 2003. The impact of bootstrap methods on time series analysis. Statistical science, 219–230.

- Politis and Romano (1994) Politis, D. N.; and Romano, J. P. 1994. Large sample confidence regions based on subsamples under minimal assumptions. The Annals of Statistics, 2031–2050.

- Potter (1990) Potter, F. J. 1990. A study of procedures to identify and trim extreme sampling weights. In Proceedings of the American Statistical Association, Section on Survey Research Methods, volume 225230. American Statistical Association Washington, DC.

- Qin and Russo (2022) Qin, C.; and Russo, D. 2022. Adaptivity and confounding in multi-armed bandit experiments. arXiv preprint arXiv:2202.09036.

- Rafferty, Ying, and Williams (2018) Rafferty, A. N.; Ying, H.; and Williams, J. J. 2018. Bandit assignment for educational experiments: Benefits to students versus statistical power. In International Conference on Artificial Intelligence in Education, 286–290. Springer.

- Sarin and Summers (2019) Sarin, N.; and Summers, L. H. 2019. Shrinking the tax gap: approaches and revenue potential. Technical report, National Bureau of Economic Research.

- Sen et al. (2021) Sen, R.; Rakhlin, A.; Ying, L.; Kidambi, R.; Foster, D.; Hill, D. N.; and Dhillon, I. S. 2021. Top-k extreme contextual bandits with arm hierarchy. In International Conference on Machine Learning, 9422–9433. PMLR.

- Sener and Savarese (2018) Sener, O.; and Savarese, S. 2018. Active Learning for Convolutional Neural Networks: A Core-Set Approach. In International Conference on Learning Representations.

- Settles (2009) Settles, B. 2009. Active learning literature survey.

- Shao and Wu (1989) Shao, J.; and Wu, C. J. 1989. A general theory for jackknife variance estimation. The annals of Statistics, 1176–1197.

- Shin, Ramdas, and Rinaldo (2021) Shin, J.; Ramdas, A.; and Rinaldo, A. 2021. On the Bias, Risk, and Consistency of Sample Means in Multi-armed Bandits. SIAM Journal on Mathematics of Data Science, 3(4): 1278–1300.

- Sifa et al. (2019) Sifa, R.; Ladi, A.; Pielka, M.; Ramamurthy, R.; Hillebrand, L.; Kirsch, B.; Biesner, D.; Stenzel, R.; Bell, T.; L”ubbering, M.; et al. 2019. Towards automated auditing with machine learning. In Proceedings of the ACM Symposium on Document Engineering 2019, 1–4.

- Simchi-Levi and Xu (2020) Simchi-Levi, D.; and Xu, Y. 2020. Bypassing the Monster: A Faster and Simpler Optimal Algorithm for Contextual Bandits under Realizability. Available at SSRN.

- Slemrod (2019) Slemrod, J. 2019. Tax compliance and enforcement. Journal of Economic Literature, 57(4): 904–54.

- Snow and Warren Jr (2005) Snow, A.; and Warren Jr, R. S. 2005. Tax evasion under random audits with uncertain detection. Economics Letters, 88(1): 97–100.

- Soemers et al. (2018) Soemers, D.; Brys, T.; Driessens, K.; Winands, M.; and Nowé, A. 2018. Adapting to concept drift in credit card transaction data streams using contextual bandits and decision trees. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 32.

- Taxpayer Advocate Service (2018) Taxpayer Advocate Service. 2018. Improper Earned Income Tax Credit Payments: Measures the IRS Takes to Reduce Improper Earned Income Tax Credit Payments Are Not Sufficiently Proactive and May Unnecessarily Burden Taxpayers. https://www.taxpayeradvocate.irs.gov/wp-content/uploads/2020/07/ARC18˙Volume1˙MSP˙06˙ImproperEarnedIncome.pdf. 2018 Annual Report to Congress — Volume One. Online; Accessed Jan 10, 2022.

- Tekin and Turğay (2018) Tekin, C.; and Turğay, E. 2018. Multi-objective contextual multi-armed bandit with a dominant objective. IEEE Transactions on Signal Processing, 66(14): 3799–3813.

- Thrun and Schwartz (1993) Thrun, S.; and Schwartz, A. 1993. Issues in using function approximation for reinforcement learning. In Proceedings of the Fourth Connectionist Models Summer School, 255–263. Hillsdale, NJ.

- Treasury (2021) Treasury, U. 2021. The American Families Plan Tax Compliance Agenda. Department of Treasury, Washington, DC.

- Turgay, Oner, and Tekin (2018) Turgay, E.; Oner, D.; and Tekin, C. 2018. Multi-objective contextual bandit problem with similarity information. In International Conference on Artificial Intelligence and Statistics, 1673–1681. PMLR.

- United States Census Bureau (2018) United States Census Bureau. 2018. Public Use Microdata Sample. https://www2.census.gov/programs-surveys/acs/data/pums/2018/5-Year/csv˙hca.zip.

- United States Census Bureau (2019) United States Census Bureau. 2019. 2019 Annual Social and Economic Supplements. https://www.census.gov/data/datasets/2019/demo/cps/cps-asec-2019.html.

- Wagstaff (2012) Wagstaff, K. 2012. Machine learning that matters. arXiv preprint arXiv:1206.4656.

- Webb et al. (2016) Webb, G. I.; Hyde, R.; Cao, H.; Nguyen, H. L.; and Petitjean, F. 2016. Characterizing concept drift. Data Mining and Knowledge Discovery, 30(4): 964–994.

- Wortman et al. (2007) Wortman, J.; Vorobeychik, Y.; Li, L.; and Langford, J. 2007. Maintaining equilibria during exploration in sponsored search auctions. In International Workshop on Web and Internet Economics, 119–130. Springer.

- Xiang (2020) Xiang, A. 2020. Reconciling legal and technical approaches to algorithmic bias. Tenn. L. Rev., 88: 649.

- Xu, Qin, and Liu (2013) Xu, M.; Qin, T.; and Liu, T.-Y. 2013. Estimation bias in multi-armed bandit algorithms for search advertising. Advances in Neural Information Processing Systems, 26.

- Zhang, Janson, and Murphy (2020) Zhang, K.; Janson, L.; and Murphy, S. 2020. Inference for batched bandits. Advances in Neural Information Processing Systems, 33: 9818–9829.

- Zheng et al. (2020) Zheng, S.; Trott, A.; Srinivasa, S.; Naik, N.; Gruesbeck, M.; Parkes, D. C.; and Socher, R. 2020. The AI economist: Improving equality and productivity with AI-driven tax policies. arXiv preprint arXiv:2004.13332.

- Zhu et al. (2022) Zhu, Y.; Foster, D. J.; Langford, J.; and Mineiro, P. 2022. Contextual Bandits with Large Action Spaces: Made Practical. In International Conference on Machine Learning, 27428–27453. PMLR.

Appendix A Software and Data

We are unable to publish even anonymized data due to statutory constraints. 26 U.S. Code § 6103. All code, however, is available at https://github.com/reglab/irs-optimize-and-estimate. We also provide datasets that can act as rough proxies to the IRS data for running the code, including the Public Use Microdata Sample (PUMS) (United States Census Bureau 2018) and Annual Social and Economic Supplement (ASEC) of the Current Population Survey (CPS) (United States Census Bureau 2019). These two datasets are provided by the U.S. Census Bureau. In this case, we use the proxy goal of identifying high-income earners with non-income-based features while maintaining an estimate of total population average income.

Appendix B Carbon Impact Statement

As suggested by Lacoste et al. (2019), Henderson et al. (2020), and others, we report the energy and carbon impacts of our experiments. While we are unable to calculate precise carbon emissions from hardware counters, we give an estimate of our carbon emissions. We estimate roughly 12 weeks of CPU usage total, including hyperparameter optimization and iteration on experiments, on two Intel Xeon Platinum CPUs with a TDP of 165 W each. This is equal to roughly 665 kWh of energy used and 471 kg CO2eq at the U.S. National Average carbon intensity.

Appendix C Importance and Relevance of the Optimize-and-Estimate Setting

We note that the optimize-and-estimate setting is essential to many real-world tasks. First, we highlight that many Federal Agencies are bound by law to estimate improper payments under the Improper Payments Information Act of 2002 (IPIA), as amended by the Improper Payments Elimination and Recovery Act of 2010 (IPERA) and the Improper Payments Elimination and Recovery Improvement Act of 2012 (IPERIA). “An improper payment is any payment that should not have been made or that was made in an incorrect amount under statutory, contractual, administrative, or other legally applicable requirements.”555https://www.ignet.gov/sites/default/files/files/19%20FSA%20IPERA%20Compliance%20Slides.pdf OMB guidance varies year-to-year on how improper payments should be estimated, but generally they must be “statistically valid” (unbiased estimate of the mean) (Office of Management and Budget 2018). In past years OMB has also required tight confidence intervals on estimates (Office of Management and Budget 2018).

Generally this means that agencies will need to conduct audits, as the IRS does described in this paper, to determine if there was misreporting or improper payments were made. Effectively, the optimize-and-estimate problem that we highlight here can apply more broadly to any federal agency that falls under the laws listed above.

We also highlight that the optimize-and-estimate structured bandit setting is not uniquely important to the public sector. Private sector settings also have all the qualities of an optimize-and-estimate problem. We consider one such application below: content moderation.

During some time period, a platform will have a large set of content that might violate their policies. They will have a set of moderators who will audit content that should be taken down. There will likely be more content in need of moderation than there are moderators. As a result, it is important to take down the most egregious cases of policy-violating content, while assessing an overall estimate of prevalence on the platform. To optimize this process the platform could construct an optimize-and-estimate problem as we do here. In this case, each arm would be a piece of content that needs review. Reward is a rating on how offensive or egregious the content policy violation was. The population estimate would then give an estimate of prevalence and valence of content policy violations on the platform. Note, here unbiasedness is likely equally important to the platform since a heavy bias will incorrectly affect policy decisions about content moderation.

Appendix D Society/Ethics Statement

As part of the initial planning of this collaboration, the project proposal was presented to an Ethics Review Board and was reviewed by the IRS. While the risks of this retrospective study – which uses historical data – are minimal, we are cognizant of distributive effects that targeted auditing may have. In this work we examine the distribution of audits across income, noting that more optimal models audit higher income taxpayers – in line with current policy proposals for fair tax auditing (Treasury 2021). Our collaboration will also be investigating other notions of fairness in separate follow-on and concurrent work as they require a more in-depth examination than can be done in this work alone.

There are multiple important (and potentially conflicting) goals in selecting who to audit, including maximizing the detection of under-reported tax liability, maintaining a population estimate, avoiding the administrative and compliance costs associated with false positive audits, and ensuring a fair distribution of audits across taxpayers of different incomes and other groups. It is important to note that the IRS and Treasury Department will ultimately be responsible for the policy decision about how to balance these various objectives. We see an important contribution of our project as understanding these trade-offs and making them explicit to the relevant policy-makers. We demonstrate how to quantify and incorporate these considerations into a multi-objective model. We also formalize an existing de facto sequential decision-making (SDM) problem to help identify relevant fairness frameworks and trade-offs for policymakers (Henderson et al. 2021). We note, however, that we do not consider a number of other objectives important to the IRS, including deterring tax evasion.

Finally, as is also well-known, there is no single solution for remedying fairness – different fairness definitions are contested and mutually incompatible. For this reason, our plan is not to adopt a single, fixed performance measure. Rather, we seek to show how the optimal algorithm varies based on the relative importance one attaches to the alternative goals. For example, in this work we examine how reward-optimal models shift auditing resources toward higher incomes or particular NRP audit classes. We also note that there are some challenges in examining sub-group fairness, however, including that the IRS may not collect or possess information about protected group status (e.g., race / ethnicity). If such data were available, now-standard bias assessments and mitigation could be implemented. We note that the legality of bias mitigation remains uncertain (Xiang 2020; Ho and Xiang 2020). Overcoming these challenges requires its own examination.

With thorough additional evaluation and safeguards, as part of our ongoing collaboration with the IRS, the results of this and related research are currently being incorporated into the continual improvement of the IRS audit selection method. However, we note that all models used by the IRS go through extensive internal review processes with robustness and generalizability checks beyond the scope of our work here. No model in this work will be directly used in any auditing mechanism. There are strict statutory rules that limit the use and disclosure of taxpayer data. All work in this manuscript was completed under IRS credentials and federal government security and privacy standards. All authors that accessed data have undergone a background check and been onboarded into the IRS personnel system under the Intergovernmental Personnel Act or the student analogue. That means all data-accessing authors took all trainings on security and privacy related to IRS data and were bound by law to relevant privacy standards (e.g., The Privacy Act of 1974, The Taxpayer Browsing Protection Act, and IRS Policy on Accessing Tax Information). Consent for use of taxpayer data by IRS for tax administration purposes is statutorily provided for under 26 U.S.C. §6103(h)(1), which grants authority to IRS employees to access data for tax administration purposes. This manuscript and associated data was cleared under privacy review. All work using taxpayer data was done on a secure system with separate hardware. No taxpayer names were associated with the features used in this work.

Appendix E Related Work

Please see Table 2 for a brief summary on what sets apart our setting from others and a description of related work below.

| Setting | Papers | Batched | Estimation | Volatile Arms | Per-arm Context | Non-linear |

|---|---|---|---|---|---|---|

| Multi-armed Bandit | Deliu, Williams, and Villar (2021) | N | Y | N | N | N |

| Caria et al. (2020) | N | Y | N | N | N | |

| Kasy and Sautmann (2021) | N | Y | N | N | N | |

| Pandey and Olston (2006) | Y | Y | N | N | N | |

| Xu, Qin, and Liu (2013) | N | Y | N | N | N | |

| Guo et al. (2021) | N | Y | N | N | N | |

| Contextual Bandit | Dimakopoulou et al. (2017) | N | Y | N | N | N |

| Qin and Russo (2022) | N | Y | N | N | N | |

| Sen et al. (2021) | Y | N | N | N | Y | |

| Simchi-Levi and Xu (2020) | N | N | N | N | Y | |

| Huang and Lin (2016) | Y | N | N | N | N | |

| Structured Bandit | Abbasi-Yadkori, Pál, and Szepesvári (2011) | N | N | Y | Y | N |

| Joseph et al. (2018) | Y | N | Y | Y | N | |

| Ours | - | Y | Y | Y | Y | Y |

E.1 Similar Applications

There is growing interest in the application of ML to detecting fraudulent financial statements (Dickey, Blanke, and Seaton 2019; Bertomeu et al. 2021). Previous methods have included unsupervised outlier detection (de Roux et al. 2018), decision trees (Kotsiantis et al. 2006), and analyzing statements with NLP (Sifa et al. 2019). Closer to our methodology is a bandit approach is used by Soemers et al. (2018) to detect fraudulent credit card transactions. Meanwhile, Zheng et al. (2020) propose reinforcement learning to learn optimal tax policies, but do not focus on enforcement. Finally, some work has investigated the use of machine learning for improved audit selection in various settings (Howard et al. 2020; Ash, Galletta, and Giommoni 2021; Mittal, Reich, and Mahajan 2018). None of these approaches takes into account population estimation and some do not use sequential decision-making.

E.2 Multi-objective Decision-making

Some prior work has investigated general multi-objective optimization in the context of bandits (Drugan and Nowé 2014; Tekin and Turğay 2018; Turgay, Oner, and Tekin 2018). Most work in this vein generalizes reward scalars to vectors, and seeks pareto optimal solutions. These techniques do not extend readily to our setting, which has a secondary objective of a particular form (unbiased estimation).

E.3 Batch Selection

Other works have examined similar batched selection mechanisms such as the linear structured bandit (Mersereau, Rusmevichientong, and Tsitsiklis 2009; Abbasi-Yadkori, Pál, and Szepesvári 2011; Joseph et al. 2018), top-k extreme contextual bandit (Sen et al. 2021), or contextual bandit with piled rewards (Huang and Lin 2016).

An alternative view of this problem is as a contextual bandit problem with no shared context, but rather a per arm context. This is similar to the setup to the contextual bandit formulation of Li et al. (2010) used for news recommendation systems. However, unlike in Li et al. (2010), rewards here would have to be delivered after rounds of selection (where is the budget of audits that can be selected in a given year). Since the IRS does not conduct audits on a rolling basis, the rewards are delayed and updated all at once. This is similar to the “piled-reward” variant of the contextual bandit framework discussed by Huang and Lin (2016) or possibly a variant of contextual bandits with knapsacks (Agrawal and Devanur 2015).

Notably a large difference in our setting is the scale of the problem (there are 200M+ arms per timestep in the fully scaled problem), the non-linearity of the structure. In other batched settings, it is typically to select K actions given one context, not a per-arm context as is the case for our setting.

E.4 Inference

Due to the well-known bias exhibited by data collected by bandit algorithms (Shin, Ramdas, and Rinaldo 2021; Nie et al. 2018; Xu, Qin, and Liu 2013), a large body of work seeks to improve hypothesis testing efficiency and accuracy via an active learning or structured bandit process (Kato et al. 2020; Mukherjee, Tripathy, and Nowak 2020; Deliu, Williams, and Villar 2021). There is also a body of work that seeks to improve estimation and inference properties in bandit settings (Kasy and Sautmann 2021; Wortman et al. 2007; Zhang, Janson, and Murphy 2020; Lansdell, Triantafillou, and Kording 2019). For instance, Dimakopoulou et al. (2017) consider balancing techniques to improve inference in non-parametric contextual bandits. Chugg and Ho (2021) seek to give unbiased population estimates after data has been sampled with a MAB algorithm. Guo et al. (2021) study how to develop estimation strategies when given the learning process of another low-regret algorithm. Some of this work can be classified as click-through-rate estimation (Pandey and Olston 2006; Wortman et al. 2007; Xu, Qin, and Liu 2013). Like other adaptive experimentation literature, these works are in the multi-armed bandit (MAB) or contextual bandit settings which do not neatly map onto our own setting. Our work deals with the unique challenges of the IRS setting, requiring the use of optimize-and-estimate structured bandits, discussed below.

Some work, similarly to our approach, explicitly considers trading off maximizing reward with another objective (Liu et al. 2014; Rafferty, Ying, and Williams 2018). Caria et al. (2020) develop a Thompson sampling algorithm which trades off estimation of treatment effects with estimation accuracy. Erraqabi et al. (2017) develop an objective function to trade off rewards and model error. Deliu, Williams, and Villar (2021) develop a similar approach for navigating such trade-offs. But all of these works occur in the MAB setting, however, and are difficult to apply to our structured bandit setting. That is because we are not selecting between several treatments, but rather we are selecting a batch of arms to pull which correspond to their own context. Additionally arms are volatile, we do not necessarily know which arm corresponds to an arm in a previous timestep. Finally, arms must be selected in batches with potentially delayed reward. For example, take the Thompson sampling approach of Caria et al. (2020). In that setting the authors selected among three treatments and examined the treatment effects among them given some context. Yet, in our setting we can never observe any effect for unselected arms so our setting must instead be formulated as a structured bandit.

E.5 Active Learning

We also note that there are some similarities between our formulation and the active learning paradigm (Settles 2009). For instance, the tax gap estimation requirement could be formulated as a pool-based active learning problem, wherein the model chooses each subsequent point in order to improve its estimation of the tax gap. This also coincides somewhat with the bandit exploration component since a better model will allow the agent to select the optimal arm more frequently. However, the revenue maximization objective we introduce corresponds with the exploit component of the bandit problem and is not found in the active learning framework.

E.6 Discussion

Overall, to our knowledge the optimize-and-estimate structured bandit setting has not been proposed as we describe it here. And, more importantly, no one work has examined the unique challenges of the audit selection application in the IRS in as an optimize-and-estimate structured bandit. The assumptions we make (essential to most policy contexts including the IRS) differ from each of these other contexts: (1) arms are volatile and cannot necessarily be linked across timesteps;666Note the reason we make this assumption is because the NRP data does not track a cohort of taxpayers, but rather randomly samples. We are not guaranteed to ever see a taxpayer twice. (2) decisions are batched; (3) contexts are per arm; (4) the underlying reward structure is highly non-linear; (5) an unbiased population estimate must be retained. These key features also distinguish the optimize-and-estimate structured bandit from past work handling dual optimization and estimation objectives. To simplify things, one might think of our work as a top- contextual bandit, but the key difference is that arms are volatile in our case (you may never see the same arm twice). Thus, we must formulate our setting in a different way: a structured bandit. Thus, perhaps closest to our own is the work of Joseph et al. (2018) and Abbasi-Yadkori, Pál, and Szepesvári (2011). However, we require non-linearity and batched selection, as well as adding the novel estimation objective to this selection setting. These features differentiate it from prior MAB or even contextual bandit work. To our knowledge, this is unlike any of the prior work that handles estimation trade-offs and is the reason why we call the novel domain an optimize-and-estimate structured bandit. Perhaps closest to our own is the work of Joseph et al. (2018) and Abbasi-Yadkori, Pál, and Szepesvári (2011). But we extend this work to the batched non-linear setting with a unique estimation objective.

Appendix F Baseline Methods Selection

In determining which baselines to use we surveyed existing literature on what methods might be directly applicable. First, we noticed that existing literature that handles estimation problems, such as the adaptive RCT literature (Caria et al. 2020), do not neatly map onto our setting. We do not have multiple treatments and we never see reward for unselected arms. This ruled out simple methods related to multi-armed bandits. Instead, the closest literature is the structured bandit or linear bandit literature where each arm is assumed to have a context and the policies selects arms as such. Few sampling methods guarantee unbiased estimation in the structured bandit setting, so we turn to a simple -greedy baseline for unbiasedness. In many ways this is similar to the current IRS setting. The sample can be thought of as the NRP sample and then the greedy sample can be thought of as Op audits. This is a natural baseline to compare against. Then, we selected one optimism based approach (UCB) which has proven regret bounds in the linear bandit setting (Lattimore and Szepesvári 2020). We show in another setting that a formulation of Thompson sampling does not appear to work well in this structured bandit setting, as seen in Appendix G. We do not select more such approaches because model-based approaches are not guaranteed to be unbiased. Thus, while we include one such approach for comparison and analysis, we instead focused our efforts elsewhere instead of adding additional methods that do not meet our optimization criteria. Note, in many ways ABS sampling bears a resemblance to Thompson sampling and we encourage future work to explore more direct mappings of existing literature to the optimize-and-estimate structured bandit setting. For convenience, we make a comparison of this in Table 2.

Appendix G Evaluation on Other Datasets

We provide a small ablation study on the additional Current Population Survey (CPS) dataset. We perform the same preprocessing as Chugg et al. (2022) and have a goal of predicting the income of a person based on 122 other features. We reuse the optimal ABS hyperparameters from the IRS setting and find similar results on this new dataset. Since CPS is more stationary than the IRS data, the reward-optimal method changes from greedy to UCB. This flows naturally from Bastani, Bayati, and Khosravi (2021), since IRS data is more stochastic, greedy is more optimal. CPS is less stochastic, so UCB is more optimal. ABS remains unbiased and yields high reward. We also implement a version of Thompson sampling where we estimate the standard deviation and mean of the random forest as with the UCB setting, but then sample randomly from a Gaussian distribution with these parameters. We run all permutations for 20 random seeds. We also find that if we try to back out propensity weights for the Thompson sampling approach via Monte Carlo simulations, they are not well-formed. We rolled out 1000 times per timestep and found that even under this regime roughly 75.3% of the propensity scores were 0. As such, Thompson sampling cannot maintain unbiasedness on its own with an HT-like estimator.

| Method | Reward | Bias | Variance |

|---|---|---|---|

| UCB-1 | 473 | 13.4 | 14.4 |

| ABS-2 | 444 | -1.3 | 26.3 |

| ABS-1 | 431 | 0.2 | 26.1 |

| Thompson | 427.2 | 9.7 | 12.8 |

| E-greedy, e=0.4 (Model-based) | 313.5 | 7.25 | 10.6 |

| E-greedy, e=0.2 (Model-based) | 243.9 | 7.46 | 10.0 |

Appendix H Limitations

While we have already expressed several limitations throughout this work, we gather them here as they are fertile ground for research in the optimize-and-estimate setting. First, the ABS approach does not re-use information year-to-year for population estimation. There may be better ways to re-use information in a mixed model-based and model-free mechanism, that retains unbiasedness. Second, we focus heavily on empirical analysis as we believed it was essential for a setting as important as the IRS. However, future work can delve into theoretical aspects of the problem, potentially examining whether there are lower-variance model-based approaches that retain unbiasedness guarantees. Third, we note that our evaluations may scale differently to larger datasets. For example, with more data, utilizing the entirety of all audit outcomes, more complicated models might be more feasible.

As an initial work introducing a highly relevant setting and application, we focused more on analysis of different approaches. We have one initial approach, ABS, that meets policy requirements and outperforms baselines. Future work may seek to improve on the performance of this method, focusing more on one novel method rather than analysis, which is our goal.

H.1 Deterrence