11email: phuc, koh, kashima@ml.ist.i.kyoto-u.ac.jp

22institutetext: Fujitsu Research, Fujitsu Ltd., Japan

22email: okajima.seiji, t.arseny, takebayashi.tom, maruhashi.koji@fujitsu.com

Inter-domain Multi-relational Link Prediction

Abstract

Multi-relational graph is a ubiquitous and important data structure, allowing flexible representation of multiple types of interactions and relations between entities. Similar to other graph-structured data, link prediction is one of the most important tasks on multi-relational graphs and is often used for knowledge completion. When related graphs coexist, it is of great benefit to build a larger graph via integrating the smaller ones. The integration requires predicting hidden relational connections between entities belonged to different graphs (inter-domain link prediction). However, this poses a real challenge to existing methods that are exclusively designed for link prediction between entities of the same graph only (intra-domain link prediction). In this study, we propose a new approach to tackle the inter-domain link prediction problem by softly aligning the entity distributions between different domains with optimal transport and maximum mean discrepancy regularizers. Experiments on real-world datasets show that optimal transport regularizer is beneficial and considerably improves the performance of baseline methods.

Keywords:

Inter-domain Link Prediction Multi-relational data Optimal Transport.1 Introduction

Multi-relational data represents knowledge about the world and provides a graph-like structure of this knowledge. It is defined by a set of entities and a set of predicates between these entities. The entities can be objects, events, or abstract concepts while the predicates represent relationships involving two entities. A multi-relational data contains a set of facts represented as triplets denoting the existence of a predicate from subject entity to object entity . In a sense, multi-relational data can also be seen as a directed graph with multiple types of links (multi-relational graph).

A multi-relational graph is often very sparse with only a small subset of true facts being observed. Link prediction aims to complete a multi-relational graph by predicting new hidden true facts based on the existing ones. Many existing methods follow an embedding-based approach which has been proved to be effective for multi-relational graph completion. These methods all aim to find reasonable embedding presentations for each entity (node) and each predicate (type of link). In order to predict if a fact holds true, they use a scoring function whose inputs are embeddings of the entities and the predicate to compute a prediction score. Some of the most prominent methods in that direction are TransE [3], RESCAL [22], DisMult [35], and NTN [27], to name a few.

TransE [3] model is inspired by the intuition from Word2Vec [18, 19] that many predicates represent linear translations between entities in the latent embedding space, e.g. . Therefore, TransE tries to learn low-dimensional and dense embedding vectors so that for a true fact . Its scoring function is defined accordingly via . RESCAL [22] is a tensor factorization-based method. It converts a multi-relational graph data into a -D tensor whose first two modes indicate the entities and the third mode indicates the predicates. A low-rank decomposition technique is employed by RESCAL to compute embedding vectors of the entities and embedding matrices of the predicates. Its scoring function is the bilinear product . DistMult [35] is also a bilinear model and is based on RESCAL where each predicate is only represented by a diagonal matrix rather than a full matrix. The neural tensor network (NTN) model [27] generalizes RESCAL’s approach by combining traditional MLPs and bilinear operators to represent each relational fact.

Despite achieving state of the art for link prediction tasks, existing methods are exclusively designed and limited to intra-domain link prediction. They only consider the case in which both entities belong to the same relational graph (intra-domain). When the needs for predicting hidden facts between entities of different but related graphs (inter-domain) arise, unfortunately, the existing methods are inapplicable. One of such examples is when it is necessary to build a large relational graph by integrating several existing smaller graphs whose entity sets are related. This study proposes to tackle the inter-domain link prediction problem by learning suitable latent embeddings that minimize dissimilarity between the domains’ entity distributions.

Two popular divergences, namely optimal transport’s Wasserstein distance (WD) and the maximum mean discrepancy (MMD), are investigated. Given two probability distributions, optimal transport computes an optimal transport plan that gives the minimum total transport cost to relocate masses between the distributions. The minimum total transport cost is often known under the name of Wasserstein distance. In a sense, the computed optimal transport plan and the corresponding Wasserstein distance provide a reasonable alignment and quantity for measuring the dissimilarity between the supports/domains of the two distributions. Minimizing Wasserstein distance has been proved to be effective in enforcing the alignment of corresponding entities across different domains and is successfully applied in graph matching [34], cross-domain alignment [7], and multiple-graph link prediction problems [25]. As another popular statistical divergence between distributions, MMD computes the dissimilarity by comparing the kernel mean embeddings of two distributions in a reproducing kernel Hilbert space (RKHS). It has been widely applied in two-sample tests for differentiating distributions [12, 13] and distribution matching in domain adaptation tasks [6], to name a few.

The proposed method considers a setting of two multi-relational graphs whose entities are assumed to follow the same underlying distribution. For example, the multi-relational graphs can be about relationships among users/items in different e-commerce flatforms of the same country. They could also be knowledge graphs of semantic relationships between general concepts that are built from different common-knowledge sources, e.g. Freebase and DBpedia. In both examples, it is safe to assume that the entity sets are distributionally identical. This assumption is fundamental for the regularizers to be effective in connecting the entity distributions of the two graphs.

2 Preliminary

This section briefly introduces the components that are employed in the proposed method.

2.1 RESCAL

RESCAL [22] formulates a multi-relational data as a three-way tensor , where is the number of entities and is the number of predicates. if the fact exists and otherwise. In order to find proper latent embeddings for the entities and the predicates, RESCAL performs a rank- factorization where each slice along the third mode is factorized as

Here, contains the latent embedding vectors of the entities and is an asymmetric matrix that represents the interactions between entities in the -th predicate.

Originally, it is proposed to learn and with the regularized squared loss function

where

and reg is the following regularization term

is a hyperparameter.

It is later proposed by the authors of RESCAL to learn the embeddings with pairwise loss training [21], i.e. using the following margin-based ranking loss function

| (1) |

where and are the sets of all positive triplets (true facts) and all negative triplets (false facts), respectively. denotes the score of , and is the ranking function

The negative triplet set is often generated by corrupting positive triplets, i.e. replacing one of the two entities in a positive triplet with a randomly sampled entity.

The pairwise loss training aims to learn and so that the score of a positive triplet is higher than the score of a negative triplet. Moreover, the margin-based ranking function is more flexible and easier to optimize with stochastic gradient descent (SGD) than the original squared loss function. In the proposed method, the pairwise loss training is adopted.

2.2 Optimal Transport

Given two probability vectors and that satisfy , a matrix is called a transport plan between and if and . Here, indicates a -dimensional vector of ones. Let’s denote the supports of and as and , respectively. A transport cost can be defined as

Given a transport matrix , the transport cost of a transport plan P is computed by

A transport plan that gives the minimum transport cost, , is called an optimal transport plan and the corresponding minimum cost is called the Wasserstein distance. The optimal transport plan gives a reasonable “soft” matching between the two distributions and while the Wasserstein distance provides a measurement of how far the two distributions are from each other.

In the scope of multi-relational graphs, and are predefined over the sets of entities, normally being set to be uniform and the supports and can be seen as embeddings of the entities.

The computational complexity of computing the optimal transport plan and Wasserstein distance is often prohibitive. An efficient approach to compute an approximation has been proposed by Cuturi et al. [9]. Instead of the exact optimal transport , they compute an entropic-regularized transport plan via minimizing a cost as follows,

| (2) |

where is a hyperparameter controlling the effect of the negative entropy of matrix . With large enough , emperically when , and the Wasserstein distance can be accurately approximated by and .

has a unique solution of the following form

where diag(u) indicates a diagonal matrix whose diagonal elements are elements of u. The matrix is the element-wise exponential of . Vectors and can be initialized randomly and updated via Sinkhorn iteration

2.3 Maximum Mean Discrepancy

Maximum Mean Discrepancy (MMD) is originally introduced as a non-parametric statistic to test if two distributions are different [12, 13]. It is defined as the difference between mean function values on samples generated from the distributions. If MMD is large, the two distributions are likely to be distinct. On the other hand, if MMD is small, the two distributions can be seen to be similar. Formally, let and be two distributions whose the supports are subsets of , and be a class of functions . Usually, is selected to be the unit ball in a universal RKHS . Then MMD is defined as

3 Problem Setting and Proposed Method

3.1 Problem Setting

The formal problem setting considered in this study is stated as follows. Given two multi-relational graphs and , each graph is defined with a set of entities (nodes) , a set of predicates (types of links) , and a set of true facts (observed links) for . For simplicity, this study only considers the case where the two graphs share the same set of predicates, i.e. . The goal is to predict if an inter-domain fact or holds true or not.

The entity embeddings of the two graphs are assumed to follow the same distribution, i.e. there exists a distribution such that for embedding of entity . In the experiments, the entity sets and are controlled so that they are completely disjoint or partially overlapped with only a small amount of common entities. The common entities are known in overlapping settings.

3.2 Proposed objective function

The proposed method’s objective function consists of two components. The first component is for learning embedding representations of the entities and the predicates of each multi-relational graph, which is based on an existing tensor-factorization method. RESCAL [22] is specifically chosen in the proposed method. The second component is a regularization term for enforcing the entity embedding distributions of the two graphs to become similar.

For each graph , lets denote the entity embeddings as , where is the embedding dimension. If the entity sets and overlap, the embeddings of common entities are set to be identical in both domains, i.e. where is the embeddings of common entities. The embedding of predicate is denoted as for . The objective function of the proposed method is given as

| (4) |

In (4), the first two terms are the loss functions of RESCAL and are defined as in (1). The third term is the entropic-regularized Wasserstein distance (WD) or the MMD discrepancy between the entity distributions of the two graphs. In the case of WD regularizer, is defined as in (2) with . In the case of MMD regularizer, is defined as in (3).

Via , the underlying embedding distribution over each entity set is learned and characterized into , while helps to drive these two distributions to become similar. Through the objective function , similar entities of and are expected to lie close to each other on the latent embedding space, which encourages similar entities to involve in similar relations/links. Specifically, if and have similar embeddings and , the inter-domain fact is likely to exist if the intra-domain fact exists thanks to their similar scores .

The objective function (MMD regularizer) is directly optimized with SGD. On the other hand, (WD regularizer) is minimized iteratively. In each epoch, the transport plan is fixed and the embedding vectors and are updated with SGD. At the end of each epoch, and are fixed and the plan is sequentially updated via Sinkhorn algorithm [9].

4 Experiments

4.1 Datasets

The datasets used in the experiments are created from four popular knowledge graph datasets, namely FB15k-237 [30], WN18RR [10], DBbook2014, and ML1M [5]. The FB15k-237 dataset contains facts about general knowledge. It has entities and predicates. The WN18RR dataset consists of facts about lexical relations between word senses. The other two datasets represent interactions among users and items in e-commerce. The ML1M (MovieLens-1M) dataset composes of facts with users/items and relations, while the DBbook2014 has facts with users/items and relations. To create and for each dataset, two smaller sub-graphs of around to entities are randomly sampled from the original graph. The two graphs are controlled to share some amounts of common entities. Different levels of entity overlapping are investigated, from (non-overlapping setting) to around , and (overlapping setting). Moreover, different predicates are removed so that and share the same predicate set, i.e. .

Intra-domain triplets whose both entities belong to the same graph are used for training. Inter-domain triplets whose entities belong to different graphs are used for validating and testing inter-domain performance. The validation and test ratio is . Even though the goal is to evaluate a model’s ability to perform inter-domain link prediction, both inter-domain and intra-domain link prediction performances are evaluated. This is because the proposed method should improve inter-domain link prediction while does not harm intra-domain link prediction. Therefore, of intra-domain triplets are further spared from the training data for monitoring intra-domain performance.

The details for the case of overlapping are shown in Table 1. In other cases, the datasets share similar statistics.

| Datasets | #Ent G1 | #Ent G2 | #Rel | #Train | #Inter Valid | #Intra Test | #Inter Test |

|---|---|---|---|---|---|---|---|

| FB15k-237 | 2675 | 2677 | 179 | 24.3k | 4.3k | 1.3k | 17.7k |

| WN18RR | 2804 | 2720 | 10 | 5.1k | 105 | 148 | 1.1k |

| DBbook2014 | 2932 | 2893 | 11 | 34.6k | 6.5k | 1.8k | 26.8k |

| ML1M | 2764 | 2726 | 18 | 39.3k | 6.5k | 2k | 27k |

4.2 Evaluation methods and Baselines

In the experiments, Hit@10 score and ROC-AUC score are used for quantifying both inter-domain and intra-domain performances.

4.2.1 Evaluation with Hit@10.

The Hit@10 score is computed by ranking true entities based on their scores. For each true triplet in the test sets, one entity (or ) is hidden to create an unfinished triplet (or ). All entities are used as candidates for completing the unfinished triplet and the scores of (or ) are computed. Note that the candidates are taken from the same entity set as (or ), i.e. if (or ) then entities are taken from . The ranking of (or ) is computed according to the scores. The higher “true” entities are ranked the better a model is at predicting hidden true triplets. Hit@10 score is used for quantifying the link prediction performance and is calculated as the percentage of “true” entities being ranked inside the top .

4.2.2 Evaluation with ROC-AUC.

In order to compute the ROC-AUC score, triplets in the test set are treated as positive samples. An equal number of triplets are uniformly sampled from the entity sets and the predicate set to create negative samples. Due to the sparsity of each graph, it is safe to consider the sampled triplets as negative. During the sampling process, both sampled entities are controlled to belong to the same graph in the intra-domain case and belong to different graphs in the inter-domain case.

4.2.3 Evaluated Models.

In the experiments, RESCAL is used as the baseline method. The proposed method with Wasserstein regularization is denoted as WD while the one with MMD regularization is denoted as MMD.

4.3 Implementation details

4.3.1 Negative sampling.

Only intra-domain negative triplets are used in order to train the pairwise ranking loss (1) with SGD, i.e. negative triplet set only contains negative triplets whose both entities belong to the same graph.

4.3.2 Warmstarting.

Completely learning from scratch might be difficult since the regularizer can add noise at the early state. Instead, it is beneficial to warmstart the proposed method’s embeddings with embeddings roughly learned by RESCAL. Specifically, we run RESCAL for epochs to learn initial embeddings. After that, to maintain the fairness of equal training time, both the proposed method and RESCAL are warmstarted with the roughly learned embeddings.

4.3.3 Hyperparameters.

In the implementation, the latent embedding dimension is set to equal . All experiments are run for epochs. Early stopping is employed with a patience budget of epochs. Other hyperparameters, namely , learning rate, and batch size, are tuned on the inter-domain validation set using Optuna [1]. During the tuning process, is sampled to be between and , while the learning rate and batch size are chosen from and , respectively. The hyperparameters of RESCAL is tuned similarly with fixed . The kernel used in MMD is set to be a mixture of Gaussian kernels with the bandwidth list of where is the mean Euclidean distance between the entities. All results are averaged over random runs111The code is available at https://github.com/phucdoitoan/inter-domain_lp.

4.4 Experimental results

The experimental results are shown in Tables 2, 3, 4, and 5. Note that a random predictor has a Hit@ score of less than and a ROC-AUC score of around .

4.4.1 Inter-domain link prediction.

As being demonstrated in tables 2 and 3, the proposed method with WD regularizer works well with the FB15k-237 dataset, which outperforms RESCAL in all settings. Especially in the overlapping cases where few entities are shared between the graphs, both Hit@10 and ROC-AUC scores are improved significantly. The WD regularizer also demonstrates its usefulness with the DBbook2014 and ML1M datasets. The Hit@10 scores are boosted up in most cases of overlapping settings, while the ROC-AUC scores are consistently enhanced over that of RESCAL. Most of the time, the improvements are considerable. However, for the case of the ML1M dataset with overlapping entities, the WD regularizer causes the Hit@10 score to deteriorate, from to . On the other hand, the MMD regularizer seems not to be beneficial for the task. Unexpectedly, the regularizer introduces noise and reduces the accuracy of inter-domain link prediction. In the case of the WN18RR dataset, both RESCAL and the proposed method fail to perform, in which all Hit@10 and ROC-AUC scores are close to random. This might be due to the extreme sparsity of the dataset, whose amount of observed triplets is only about one-fifth of that of the other datasets.

In all the four datasets, sharing some common entities, even with a small number, is helpful and important for predicting inter-domain links. These common entities act as anchors between the graphs, which guide the regularizer to learn similar embedding distributions. Without common entities, the learning process becomes more challenging and often results in uncertain predictors as being shown in the overlapping cases. The overlapping setting is reasonable because, in practice, the two graphs often share some amounts of common entities, e.g. the same users and the same popular items reappear in different e-commerce platforms.

| Overlapping | Model | FB15k-237 | WN18RR | DBbook2014 | ML1M |

|---|---|---|---|---|---|

| 0% | RESCAL | 0.110 0.038 | 0.027 0.003 | 0.087 0.058 | 0.062 0.074 |

| MMD | 0.111 0.038 | 0.031 0.004 | 0.085 0.057 | 0.063 0.072 | |

| WD | 0.145 0.063 | 0.024 0.004 | 0.084 0.070 | 0.061 0.067 | |

| 1.5% | RESCAL | 0.251 0.031 | 0.025 0.002 | 0.107 0.035 | 0.210 0.034 |

| MMD | 0.237 0.043 | 0.026 0.003 | 0.109 0.037 | 0.180 0.067 | |

| WD | 0.291 0.031∗ | 0.024 0.002 | 0.128 0.059 | 0.240 0.031∗ | |

| 3% | RESCAL | 0.302 0.020 | 0.028 0.004 | 0.266 0.056 | 0.230 0.003∗ |

| MMD | 0.292 0.020 | 0.026 0.004 | 0.227 0.081 | 0.228 0.002 | |

| WD | 0.328 0.011∗ | 0.025 0.004 | 0.318 0.066∗ | 0.213 0.006 | |

| 5% | RESCAL | 0.339 0.007 | 0.027 0.005 | 0.389 0.032 | 0.237 0.011 |

| MMD | 0.334 0.006 | 0.026 0.004 | 0.388 0.027 | 0.236 0.010 | |

| WD | 0.361 0.010∗ | 0.031 0.004 | 0.389 0.051 | 0.256 0.006∗ |

| Overlapping | Model | FB15k-237 | WN18RR | DBbook2014 | ML1M |

|---|---|---|---|---|---|

| 0% | RESCAL | 0.504 0.092 | 0.504 0.009 | 0.483 0.097 | 0.464 0.173 |

| MMD | 0.507 0.093 | 0.500 0.010 | 0.485 0.095 | 0.480 0.172 | |

| WD | 0.548 0.118 | 0.505 0.009 | 0.488 0.099 | 0.495 0.179 | |

| 1.5% | RESCAL | 0.793 0.044 | 0.512 0.009 | 0.640 0.066 | 0.805 0.027 |

| MMD | 0.770 0.063 | 0.507 0.009 | 0.632 0.063 | 0.754 0.087 | |

| WD | 0.837 0.033∗ | 0.510 0.007 | 0.671 0.087 | 0.842 0.017∗ | |

| 3% | RESCAL | 0.825 0.022 | 0.503 0.009 | 0.762 0.032 | 0.832 0.006 |

| MMD | 0.813 0.030 | 0.498 0.011 | 0.714 0.060 | 0.831 0.007 | |

| WD | 0.850 0.013∗ | 0.502 0.013 | 0.809 0.030∗ | 0.840 0.008 | |

| 5% | RESCAL | 0.870 0.008 | 0.498 0.021 | 0.824 0.012 | 0.845 0.007 |

| MMD | 0.875 0.007 | 0.498 0.012 | 0.823 0.015 | 0.845 0.006 | |

| WD | 0.902 0.010∗ | 0.498 0.013 | 0.835 0.020 | 0.867 0.003∗ |

4.4.2 Intra-domain link prediction.

Even though the main goal is to predict inter-domain links, it is preferable that the regularizers do not harm performance on intra-domain link prediction when fusing the two domains’ entity distributions. As being demonstrated in table 5, the proposed method is able to maintain similar or better intra-domain ROC-AUC scores compared to RESCAL. However, it sometimes requires trade-offs in terms of the Hit@10 score, which is shown in table 4. Specifically, the WD regularizer worsens the intra-domain Hit@10 scores compared to RESCAL in FB15k-237 with overlapping and ML1M with overlapping settings despite helping improve the inter-domain counterparts. It also hurts the intra-domain Hit@10 score in ML1M with overlapping setting.

| Overlapping | Model | FB15k-237 | WN18RR | DBbook2014 | ML1M |

|---|---|---|---|---|---|

| 0% | RESCAL | 0.451 0.031 | 0.418 0.031 | 0.468 0.011 | 0.302 0.076 |

| MMD | 0.461 0.029 | 0.342 0.086 | 0.449 0.012 | 0.307 0.070 | |

| WD | 0.469 0.019 | 0.421 0.032 | 0.472 0.014 | 0.332 0.027 | |

| 1.5% | RESCAL | 0.433 0.008 | 0.390 0.040 | 0.296 0.039 | 0.425 0.006∗ |

| MMD | 0.438 0.008 | 0.330 0.067 | 0.328 0.027 | 0.423 0.036 | |

| WD | 0.427 0.009 | 0.408 0.035 | 0.291 0.038 | 0.412 0.008 | |

| 3% | RESCAL | 0.433 0.009 | 0.476 0.074 | 0.413 0.008 | 0.447 0.006∗ |

| MMD | 0.447 0.011 | 0.485 0.074 | 0.411 0.017 | 0.444 0.008 | |

| WD | 0.439 0.009 | 0.620 0.026∗ | 0.412 0.009 | 0.413 0.021 | |

| 5% | RESCAL | 0.433 0.009∗ | 0.455 0.038 | 0.418 0.010 | 0.408 0.005 |

| MMD | 0.421 0.009 | 0.416 0.058 | 0.420 0.014 | 0407 0.004 | |

| WD | 0.413 0.007 | 0.479 0.076 | 0.412 0.022 | 0.401 0.005 |

| Overlapping | Model | FB15k-237 | WN18RR | DBbook2014 | ML1M |

|---|---|---|---|---|---|

| 0% | RESCAL | 0.925 0.018 | 0.819 0.018 | 0.915 0.004 | 0.897 0.022 |

| MMD | 0.924 0.018 | 0.818 0.019 | 0.915 0.005 | 0.897 0.035 | |

| WD | 0.928 0.006 | 0.811 0.017 | 0.918 0.005 | 0.932 0.004∗ | |

| 1.5% | RESCAL | 0.929 0.003 | 0.814 0.018 | 0.871 0.032 | 0.950 0.003 |

| MMD | 0.931 0.003 | 0.807 0.029 | 0.892 0.009 | 0.954 0.003 | |

| WD | 0.932 0.006 | 0.818 0.020 | 0.868 0.040 | 0.954 0.002 | |

| 3% | RESCAL | 0.922 0.006 | 0.870 0.018 | 0.885 0.008 | 0.946 0.005 |

| MMD | 0.926 0.005 | 0.861 0.011 | 0.877 0.026 | 0.948 0.003 | |

| WD | 0.921 0.007 | 0.860 0.018 | 0.890 0.005 | 0.949 0.003 | |

| 5% | RESCAL | 0.927 0.007 | 0.869 0.007 | 0.878 0.008 | 0.949 0.003 |

| MMD | 0.935 0.005 | 0.835 0.050 | 0.879 0.008 | 0.952 0.003 | |

| WD | 0.937 0.004∗ | 0.860 0.020 | 0.885 0.009∗ | 0.953 0.003 |

4.4.3 Summary.

The proposed method with WD regularizer significantly improves the performance of inter-domain link prediction over the baseline method while being able to preserve the intra-domain performance in the FB15k-237 and DBbook2014 datasets. In the ML1M dataset, it benefits the inter-domain performance at the risk of decreasing intra-domain Hit@10 scores. Unexpectedly, the MMD regularizer does not work well and empirically causes deterioration of the inter-domain performance. These negative results might be due to local optimal arising when minimizing MMD with a finite number of samples, as recently studied in [26]. Further detailed analysis would be necessary before one can firmly judge the performance of the MMD regularizer. We leave this matter for future works. It is also worth mentioning that, in the experiment setting, the sampling of and is repeated independently for each overlapping level. Therefore, it is not necessary for the link prediction scores to monotonically increase when the overlapping level increases.

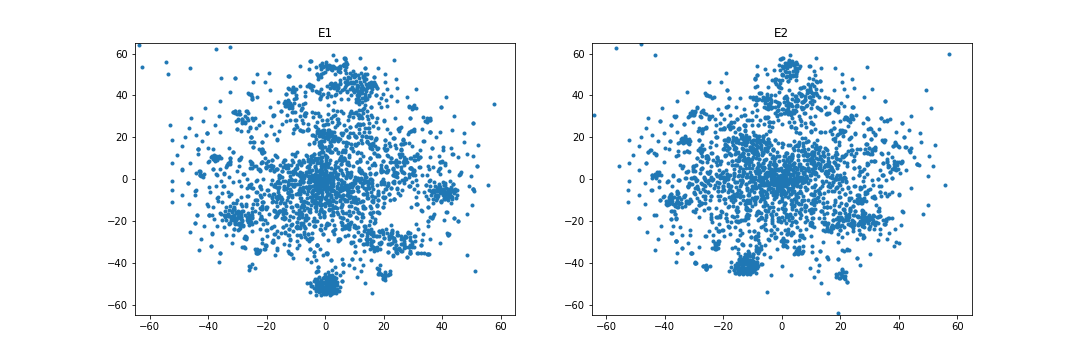

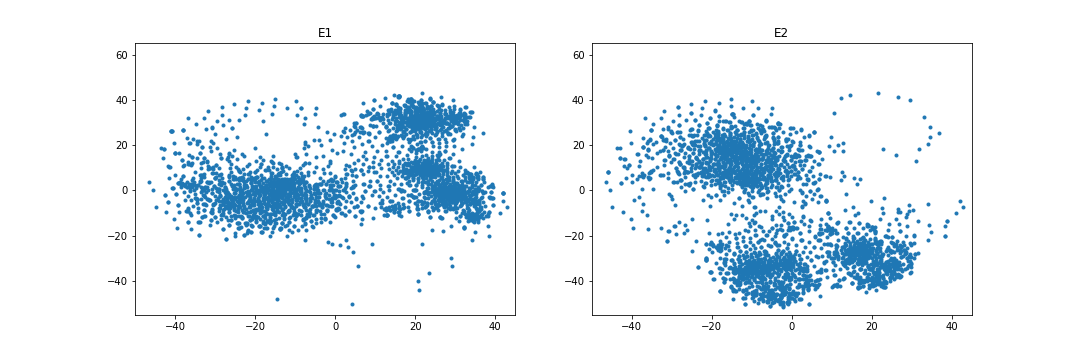

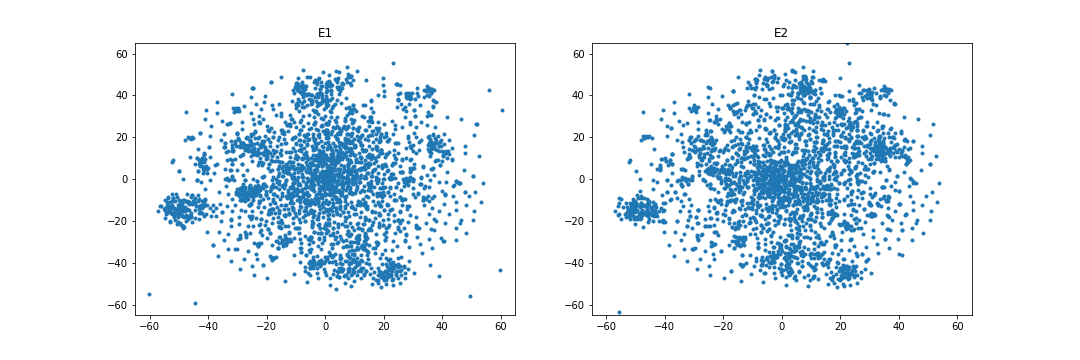

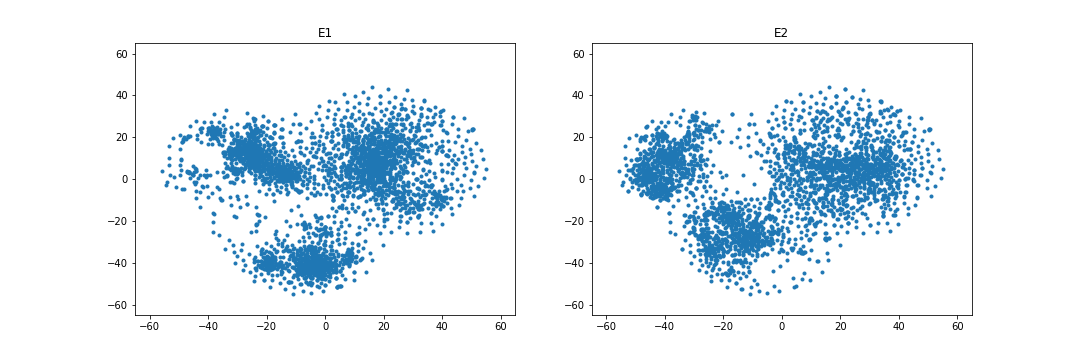

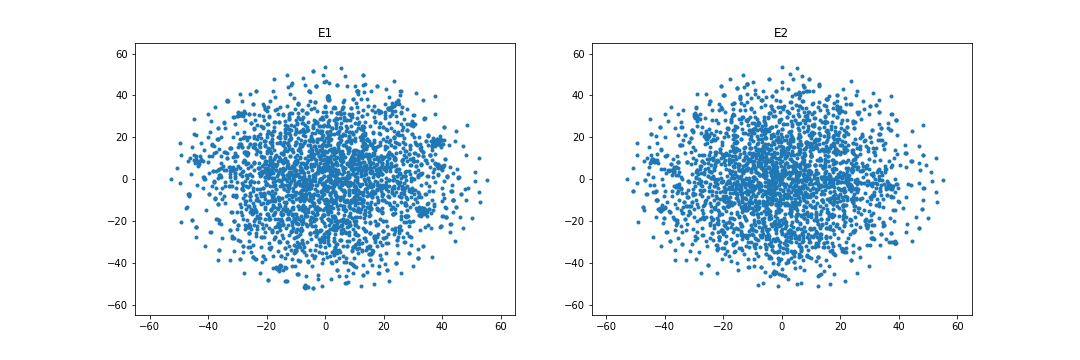

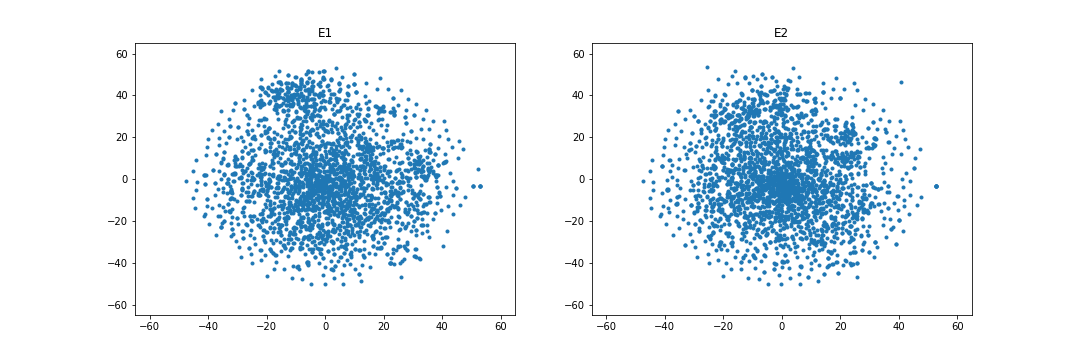

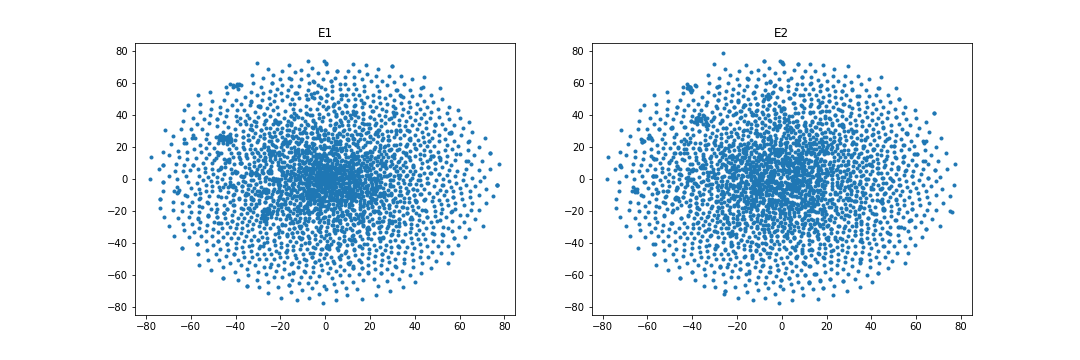

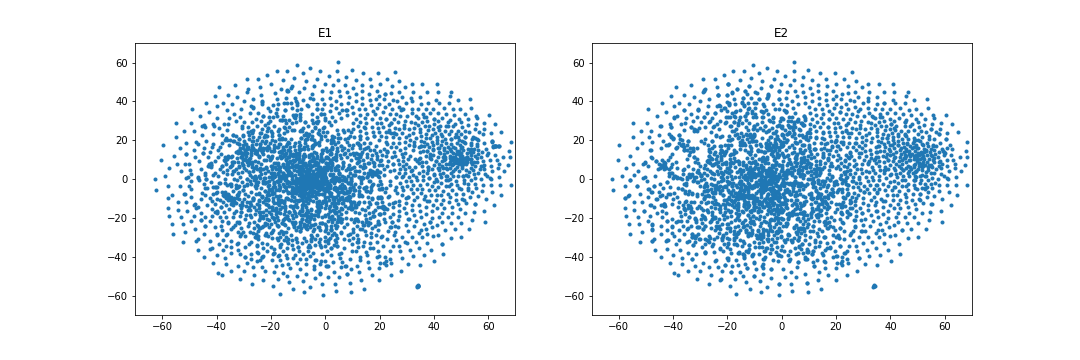

4.4.4 Embedding visualization.

Figures 1 and 2 visualize the entity embeddings learned by RESCAL and the WD regularizer in the case of overlapping. As being seen in Figure 1, WD can learn more identical embedding distributions than RESCAL in the case of the FB15k-237 and DBbook2014 datasets. Especially, in the DBbook2014 dataset, RESCAL can only learn similar shape distributions, but the regularizer can learn distributions with both similar shape and close absolute position. However, as being shown in Figure 2, in the WN18RR and ML1M datasets, the WD regularizer seems to only add noise when learning the embeddings, which results in no improvement or even degradation of both intra-domain and inter-domain Hit@10 scores.

5 Related Work

In recent years, the embedding-based approach has become popular in dealing with the link prediction task on a multi-relational knowledge graph (intra-domain). One of the pioneering works in this direction is TransE [3]. It is a translation model whose each predicate type corresponds to a translation between the entities’ embedding vectors. The model is suitable for -to- relationships only. Following models such as TransH, TransR, and TransD [33, 16, 14] are designed to deal with -to-, -to-, and -to- relationships. Furthermore, tensor-based models such that RESCAL, DistMult, and SimplE [22, 35, 15] also gain huge interest. They interpret multi-relational knowledge graphs as -D tensors and employ tensor factorization to learn the entity and predicate embeddings. Besides, neural network and complex vector-based models [27, 31] are also introduced in the literature. Further details can be found in [20].

To the best of our knowledge, the proposed method is the first to consider the inter-domain link prediction problem between multi-relational graphs. Existing methods in the literature do not directly deal with the problem. The closest line of research focuses on entity alignment in multilingual knowledge graphs, which often aims to match words of the same meanings between different languages. The first work in this line of research is MTransE [8]. It employs TransE to independently embed different knowledge graphs and perform matching on the embedding spaces. Other methods like JAPE [28] and BootEA [29] further improve MTransE by exploiting additional attributes or description information and bootstrapping strategy. MRAEA [17] directly learns multilingual entity embeddings by attending over the entities’ neighbors and their meta semantic information. Other methods [4, 11] apply Graph Neural Networks for learning alignment-oriented embeddings and achieve state-of-the-art results in many datasets. All these entity-matching methods implicitly assume most entities in one graph to have corresponding counterparts in the other graph, e.g. words in one lingual graph to have the same meaning words in the other lingual graph. Meanwhile, the proposed method only assumes the similarity between entity distributions.

Minimizing a dissimilarity criterion between distributions is a popular strategy for distribution matching and entity alignment problems. Cao et al. propose Distribution Matching Machines [6] that optimizes maximum mean discrepancy (MMD) between source and target domains for unsupervised domain adaptation tasks. The criterion is successfully applied in distribution matching and domain confusion tasks as well [2, 32]. Besides Wasserstein distance (WD), Gromov-Wasserstein distance (GWD) [24] also is a popular optimal transport metric. It measures the topological dissimilarity between distributions lying on different domains. GWD often requires much heavier computation than WD due to nested loops of Sinkhorn algorithm in current implementations [24]. Applying optimal transport into the graph matching problem, Xu et al. propose Gromov-Wasserstein Learning framework [34] for learning node embedding and node alignment simultaneously, and achieve state of the art in various graph matching datasets. Chen et al. [7] propose Graph Optimal Transport framework that combines both WD and GWD for entity alignment. The framework is shown to be effective in many tasks such as image-text retrieval, visual question answering, text generation, and machine translation. Due to the computational complexity of GWD, each domain considered in [34, 7] only contains less than several hundred entities. Phuc et al. [25] propose to apply WD to solve the link prediction problem on two graphs simultaneously. In terms of technical idea, the method is the most similar to the proposed method; however, it only focuses on the intra-domain link prediction problem on undirected homogeneous graphs and requires most of the nodes in one graph to have corresponding counterparts in the other graph.

6 Conclusion and Future Work

Inter-domain link prediction is an important task for constructing large multi-relational graphs from smaller related ones. However, existing methods in the literature do not directly address this problem. In this paper, we propose a new approach for the problem via jointly minimizing a divergence between entity distributions during the embedding learning process. Two regularizers have been investigated, in which the WD-based regularizer shows promising results and improves inter-domain link prediction performance considerably. For future works, we would like to verify the proposed method’s effectiveness using more baseline embedding methods besides RESCAL. Further analysis on the performance of the MMD-based regularizer will also be conducted. Moreover, the proposed method currently assumes that both domains share the same underlying entity distribution. This assumption is violated when the domains’ entity distributions are not completely identical but partially different. One possible direction for further research is to adopt unbalanced optimal transport as the regularizer, which flexibly allows mass destruction and mass creation between distributions.

Acknowledgements

The authors would like to thank the anonymous reviewers for their insightful suggestions and constructive feedback.

References

- [1] Akiba, T., Sano, S., Yanase, T., Ohta, T., Koyama, M.: Optuna: A next-generation hyperparameter optimization framework. In: Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (KDD). pp. 2623–2631 (2019)

- [2] Baktashmotlagh, M., Harandi, M.T., Salzmann, M.: Distribution-matching embedding for visual domain adaptation. Journal of Machine Learning Research 17, 108:1–108:30 (2016)

- [3] Bordes, A., Usunier, N., García-Durán, A., Weston, J., Yakhnenko, O.: Translating embeddings for modeling multi-relational data. In: Advances in Neural Information Processing Systems 26. pp. 2787–2795 (2013)

- [4] Cao, Y., Liu, Z., Li, C., Liu, Z., Li, J., Chua, T.: Multi-channel graph neural network for entity alignment. In: Proceedings of the 57th Conference of the Association for Computational Linguistics (ACL). pp. 1452–1461 (2019)

- [5] Cao, Y., Wang, X., He, X., Hu, Z., Chua, T.: Unifying knowledge graph learning and recommendation: Towards a better understanding of user preferences. In: Proceedings of the World Wide Web Conference (WWW). pp. 151–161 (2019)

- [6] Cao, Y., Long, M., Wang, J.: Unsupervised domain adaptation with distribution matching machines. In: Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence (AAAI). pp. 2795–2802 (2018)

- [7] Chen, L., Gan, Z., Cheng, Y., Li, L., Carin, L., Liu, J.: Graph optimal transport for cross-domain alignment. In: Proceedings of the 37th International Conference on Machine Learning (ICML). pp. 1542–1553 (2020)

- [8] Chen, M., Tian, Y., Yang, M., Zaniolo, C.: Multilingual knowledge graph embeddings for cross-lingual knowledge alignment. In: Proceedings of the 26th International Joint Conference on Artificial Intelligence (IJCAI). pp. 1511–1517. ijcai.org (2017)

- [9] Cuturi, M.: Sinkhorn distances: Lightspeed computation of optimal transport. In: Advances in Neural Information Processing Systems 26. pp. 2292–2300 (2013)

- [10] Dettmers, T., Minervini, P., Stenetorp, P., Riedel, S.: Convolutional 2d knowledge graph embeddings. In: Proceedings of the 32nd AAAI Conference on Artificial Intelligence (AAAI). pp. 1811–1818 (2018)

- [11] Fey, M., Lenssen, J.E., Morris, C., Masci, J., Kriege, N.M.: Deep graph matching consensus. In: Proceedings of th 8th International Conference on Learning Representations (ICLR) (2020)

- [12] Gretton, A., Borgwardt, K.M., Rasch, M.J., Schölkopf, B., Smola, A.J.: A kernel method for the two-sample-problem. In: Advances in Neural Information Processing Systems 19. pp. 513–520 (2006)

- [13] Gretton, A., Borgwardt, K.M., Rasch, M.J., Schölkopf, B., Smola, A.J.: A kernel approach to comparing distributions. In: Proceedings of the 22nd AAAI Conference on Artificial Intelligence (AAAI). pp. 1637–1641 (2007)

- [14] Ji, G., He, S., Xu, L., Liu, K., Zhao, J.: Knowledge graph embedding via dynamic mapping matrix. In: Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics (ACL). pp. 687–696 (2015)

- [15] Kazemi, S.M., Poole, D.: Simple embedding for link prediction in knowledge graphs. In: Advances in Neural Information Processing Systems 31. pp. 4289–4300 (2018)

- [16] Lin, Y., Liu, Z., Sun, M., Liu, Y., Zhu, X.: Learning entity and relation embeddings for knowledge graph completion. In: Proceedings of the 29th AAAI Conference on Artificial Intelligence (AAAI). pp. 2181–2187 (2015)

- [17] Mao, X., Wang, W., Xu, H., Lan, M., Wu, Y.: MRAEA: an efficient and robust entity alignment approach for cross-lingual knowledge graph. In: Proceedings of the 13th ACM International Conference on Web Search and Data Mining (WSDM). pp. 420–428 (2020)

- [18] Mikolov, T., Chen, K., Corrado, G., Dean, J.: Efficient estimation of word representations in vector space. In: Proceedings of the First International Conference on Learning Representations (ICLR) (2013)

- [19] Mikolov, T., Sutskever, I., Chen, K., Corrado, G.S., Dean, J.: Distributed representations of words and phrases and their compositionality. In: Advances in Neural Information Processing Systems 26. pp. 3111–3119 (2013)

- [20] Nguyen, D.Q.: An overview of embedding models of entities and relationships for knowledge base completion. CoRR abs/1703.08098 (2017)

- [21] Nickel, M., Murphy, K., Tresp, V., Gabrilovich, E.: A review of relational machine learning for knowledge graphs. Proceedings of the IEEE p. 11–33 (2016)

- [22] Nickel, M., Tresp, V., Kriegel, H.: A three-way model for collective learning on multi-relational data. In: Proceedings of the 28th International Conference on Machine Learning (ICML). pp. 809–816 (2011)

- [23] Peyré, G., Cuturi, M.: Computational optimal transport. Foundations and Trends in Machine Learning 11(5-6), 355–607 (2019)

- [24] Peyré, G., Cuturi, M., Solomon, J.: Gromov-Wasserstein averaging of kernel and distance matrices. In: Proceedings of the 33nd International Conference on Machine Learning (ICML). pp. 2664–2672 (2016)

- [25] Phuc, L.H., Takeuchi, K., Yamada, M., Kashima, H.: Simultaneous link prediction on unaligned networks using graph embedding and optimal transport. In: Proceedings of the Seventh IEEE International Conference on Data Science and Advanced Analytics (DSAA). pp. 245–254 (2020)

- [26] Sansone, E., Ali, H.T., Sun, J.: Coulomb autoencoders. In: ECAI 2020 - 24th European Conference on Artificial Intelligence. vol. 325, pp. 1443–1450 (2020)

- [27] Socher, R., Chen, D., Manning, C.D., Ng, A.Y.: Reasoning with neural tensor networks for knowledge base completion. In: Advances in Neural Information Processing Systems 26. pp. 926–934 (2013)

- [28] Sun, Z., Hu, W., Li, C.: Cross-lingual entity alignment via joint attribute-preserving embedding. In: Proceedings of the 16th International Semantic Web Conference (ISWC). pp. 628–644 (2017)

- [29] Sun, Z., Hu, W., Zhang, Q., Qu, Y.: Bootstrapping entity alignment with knowledge graph embedding. In: Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence (IJCAI). pp. 4396–4402 (2018)

- [30] Toutanova, K., Chen, D., Pantel, P., Poon, H., Choudhury, P., Gamon, M.: Representing text for joint embedding of text and knowledge bases. In: Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing (EMNLP). pp. 1499–1509 (2015)

- [31] Trouillon, T., Welbl, J., Riedel, S., Gaussier, É., Bouchard, G.: Complex embeddings for simple link prediction. In: Proceedings of the 33rd International Conference on Machine Learning (ICML). pp. 2071–2080 (2016)

- [32] Tzeng, E., Hoffman, J., Zhang, N., Saenko, K., Darrell, T.: Deep domain confusion: Maximizing for domain invariance. CoRR abs/1412.3474 (2014)

- [33] Wang, Z., Zhang, J., Feng, J., Chen, Z.: Knowledge graph embedding by translating on hyperplanes. In: Proceedings of the 28th AAAI Conference on Artificial Intelligence (AAAI). pp. 1112–1119 (2014)

- [34] Xu, H., Luo, D., Zha, H., Carin, L.: Gromov-Wasserstein learning for graph matching and node embedding. In: Proceedings of the 36th International Conference on Machine Learning (ICML). pp. 6932–6941 (2019)

- [35] Yang, B., Yih, W., He, X., Gao, J., Deng, L.: Embedding entities and relations for learning and inference in knowledge bases. In: Proceedings of the Third International Conference on Learning Representations (ICLR) (2015)