Interference Cancellation Information Geometry Approach for Massive MIMO Channel Estimation

Abstract

In this paper, the interference cancellation information geometry approaches (IC-IGAs) for massive MIMO channel estimation are proposed. The proposed algorithms are low-complexity approximations of the minimum mean square error (MMSE) estimation. To illustrate the proposed algorithms, a unified framework of the information geometry approach for channel estimation and its geometric explanation are described first. Then, a modified form that has the same mean as the MMSE estimation is constructed. Based on this, the IC-IGA algorithm and the interference cancellation simplified information geometry approach (IC-SIGA) are derived by applying the information geometry framework. The a posteriori means on the equilibrium of the proposed algorithms are proved to be equal to the mean of MMSE estimation, and the complexity of the IC-SIGA algorithm in practical massive MIMO systems is further reduced by considering the beam-based statistical channel model (BSCM) and fast Fourier transform (FFT). Simulation results show that the proposed methods achieve similar performance as the existing information geometry approach (IGA) with lower complexity.

Index Terms:

Massive MIMO, interference cancellation (IC), information geometry approaches (IGA), beam based statistical channel model (BSCM), channel estimation.I Introduction

Massive multi-input multi-output (MIMO)[1, 2, 3] is the core enabling technology for the 5th generation (5G) mobile communications. It has further evolved into extra large-scale MIMO (XL-MIMO) [4, 5, 6], which has become a research hotspot of the 6th generation (6G) mobile communications. By increasing the number of antennas at the base station (BS), massive MIMO has significantly enhanced the spatial multiplexing and diversity gain and achieved a substantial increase in energy and spectral efficiency. To achieve these potential gains, the most important thing is acquiring accurate channel state information (CSI). In this paper, we focus on the channel estimation problem for massive MIMO.

The object of channel estimation is obtaining the a posteriori information of the channel from received signals. When the a priori probability density function (PDF) is Gaussian, the minimum mean square error (MMSE) estimator, which is also the a posteriori mean, is the optimal estimator. To fully exploit the sparsity of massive MIMO, analytical channel models with joint space-frequency representation such as the doubly beam-based stochastic model (BSCM)[7] are established, and the channel estimation problem can be transformed into the angle-delay domain. As the number of antennas increases, the pilot resources in massive MIMO are no longer enough [8, 9] and non-orthogonal pilots [10, 11] are often used. Thus, the channel estimator in massive MIMO usually needs to perform joint estimation of the channels of different users in the angle-delay domain, which makes the complexity of the matrix inversion in the MMSE estimation prohibitive.

Due to the complexity issue, low-complexity channel estimators that can achieve near MMSE performance are widely investigated in the literature. In [12], a polynomial expansion (PE) channel estimation is proposed for massive MIMO with arbitrary statistics. In [13], a low-complexity channel estimation with low dimensional channel gain estimation is proposed for massive MIMO with uniform planar array (UPA) by estimating the angle of arrival (AOA) first. Deep learning-based channel estimation approaches are proposed for beamspace mmWave massive MIMO and multi-cell massive MIMO systems in [14] and [15], respectively. In [16], a generalized approximate message passing (GAMP) method is proposed for channel estimation of a massive MIMO mmWave channel. Among these approaches, the GAMP might be the most promising one to be implemented in practical systems. However, the derivation of the GAMP is not easy to follow since it lacks of rigorous and concise mathematical explanation. In [11], an information geometry approach (IGA) that can achieve similar performance with similar complexity as the GAMP is proposed for massive MIMO channel estimation.

Information geometry theory arises from the study of invariant geometric structures in statistical inference [17] and provides the mathematical foundation of statistics [18]. It views the space of probability distributions as manifold and tackles problems in information science by using the concepts of differential geometry with tensor calculus [19]. Information geometry theory also plays an important role in machine learning, signal processing, optimization theory[20], and fields such as neuroscience [21] and quantum physics [22]. The information geometry explanation of the belief propagation (BP) algorithm [23] is given in [24], which also shows that the concave-convex procedure (CCCP) method [25] computing the marginal distribution can be interpreted by information geometry. In [26], the decoding algorithms for Turbo codes and low-density parity check (LDPC) codes are derived from the viewpoint of information geometry, and the equilibrium and error of the algorithms are analyzed.

The research on MIMO and massive MIMO based on information geometry is rarely seen in the literature. In [27], an information geometric approach is proposed to approximate ML estimation in semi-blind MIMO channel identification. For massive MIMO, information geometry is introduced in [11] and [28] to derive information geometry approaches (IGA) for channel estimation and detection, respectively. Moreover, a simplified IGA (S-IGA) algorithm is proposed in [29] by using the constant envelope property of the channel measurement matrix. Information geometry provides a unified framework for understanding belief propagation or message passing based algorithms. The detailed relation between the IGA and AMP algorithm is provided in [In preparation].

The information geometry approach define the auxiliary probability density functions (PDFs) based on the orignal PDF to obtain a low complexity algorithm. In [11], The auxiliary PDFs are defined based on the elements of the received signal, and each auxiliary PDF computes the message of all the channel elements. Thus, a natural question is whether we can derive a new channel estimation algorithm that is different from and has a lower complexity than that in [11]. To answer this question, we propose the interference cancellation information geometry approach (IC-IGA) for massive MIMO channel estimation in this paper. In the new algorithm, each auxiliary PDF focuses on the message for one element of the channel vector, and both time and space complexities are much lower than that of the IGA algorithm. To derive this new algorithm, we first provide a unified framework of the channel estimation information geometry approach, and explain the geometric meaning of the equilibrium of this approach. Then, we construct a modified channel estimation form that has the same mean as the MMSE estimation and apply the unified framework to obtain the new IG algorithm. To further reduce the complexity, the interference cancellation simplified information geometry approach (IC-SIGA) is proposed. Finally, the a posteriori means on the equilibriums of the proposed algorithms are proved to be equal to the mean of MMSE estimation, and the complexity analysis is provided.

The rest of this paper is organized as follows. The preliminaries about the manifold of complex Gaussian distributions are provided in Section II. The general information geometry framework for massive MIMO channel estimation is presented in Section III. The derivations of IC-IGA and IC-SIGA are presented in Sections IV and V, respectively. Simulation results are provided in Section VI. The conclusion is drawn in Section VII.

Notations: Throughout this paper, uppercase and lowercase boldface letters are used for matrices and vectors, respectively. The superscripts , , and denote the conjugate, transpose, and conjugate transpose operations, respectively. The mathematical expectation operator is denoted by . The operators represent the matrix determinant, and is the norm. The operators and denote the Hadamard and Kronecker product, respectively. The identity matrix is denoted by , and is used to denote when and when . A vector composed of the diagonal elements of is denoted by , and a diagonal matrix with along its diagonal is denoted by . We use or , or , and to denote the -th element of the vector , the -th element of the matrix , the -th column and the -th row of matrix , respectively. The symbol denotes the smallest integer among those larger than . Define . The operation denotes the integer modulo the integer .

II Preliminaries

In this section, we present an information geometric perspective on the space of multivariate complex Gaussian distributions by using the concepts from [17].

II-A Affine and Dual Affine Coordinate Systems

From information geometry theory, we have that the manifold of complex Gaussian distribution is a dually flat manifold, which has an affine coordinate system and a dual affine coordinate system. The two coordinate systems are also called natural parameters and expectation parameters in the literature. In the following, we describe the two affine coordinate systems in detail.

The Gaussian distributions belong to the exponential family of distributions [30]. Let be the natural parameter of a complex Gaussian distribution of a random vector , then the PDF is defined as

| (1) |

where is the normalization factor, which is called free energy function and given by

| (2) |

Let be the manifold of multivariate complex Gaussian distributions and is an coordinate system. The free energy function is a convex function of and introduces an affine flat structure, which means the is an affine coordinate system and each coordinate axis of is a straight line. Furthermore, the Bregman divergence [31] from to derived from is the same as the Kullback–Leibler (KL) divergence from to .

The dual coordinate system of is obtained by the Legendre transformation [32], i.e., the gradients of the free energy function . From (2), we can obtain the dual coordinate as

| (3a) | |||||

| (3b) |

where is the covariance matrix of . The dual coordinate is the combination of the first and second order moments of and is also called the expectation parameter. The dual function of is given by

| (4) |

which is the negative entropy of the PDF . By using the dual coordinate system, we have that

| (5) |

where is a constant. The dual function is a convex function of and induces the dual affine flat structure. The Bregman divergence from to derived from is the KL divergence from to .

The transformations from expectation parameters to natural parameters can also be obtained from the Legendre transformation as

| (6a) | |||||

| (6b) |

where is the covariance matrix and is used here for brevity. By using the expectation parameter, the PDF can also be written in the familiar form as

| (7) |

where .

II-B -flat Submanifold and -Projection

After introducing the affine and dual affine coordinate systems, we present the definitions of -flat and -projection, which are very important when describing the information geometry approach for channel estimation.

A submanifold is called -flat if it has a linear constraint in the affine coordinate . The term -flat can be similarly defined by using the dual affine coordinate . An example of -flat submanifold is the manifold of independent complex Gaussian distributions, defined as

| (8) |

where is a diagonal matrix. Since are diagonal matrices, the expectation parameter is very easy to obtain as

| = -Θ_0^-1. | (9) |

The projection to an -flat manifold is called -projection because the projection can be realized linearly in the dual affine coordinate system. Let and be two points in the manifold , refered as and , and and .

The -projection is unique and minimizes the KL divergence. Specifically, the -projection from to is defined as

| (10) | |||||

By using the affine and dual affine coordinate systems, the -projection is easy to obtain. First, rewrite the KL divergence as [17]

| (11) |

Then, the minimum can be obtained from the first-order optimal condition

| (12a) | |||||

| (12b) |

where is obtained because is a diagnal matrix. Thus, we have and for the projection point, and are their dual coordiantes. The projection is simple in the dual affine coordinate system. Let be the projection point, the dual straight line connecting and is the shortest one among those dual straight lines from to .

III Information Geometry Framework for Massive MIMO Channel Estimation

In this section, we provide a framework of information geometry methods for channel estimation in massive MIMO systems inspired by Section 11.3.3 and 11.3.4 in [17].

III-A Problem Formulation

In massive MIMO channel estimation, a general received signal model is given by

| (13) |

where is the deterministic measurement matrix, is a random Gaussian vector distributed as , and is the complex Gaussian noise vector distributed as .

For the received signal model in (13), the posterior distribution can be written as

| (14) |

According to (1), the natural parameters of are

| (15a) | |||||

| (15b) |

whereas the dual affine coordinate can be obtained from (3a) as

| (16a) | |||||

| (16b) |

The dual affine coordinate is the posterior mean of , and thus is also the MMSE estimation. For massive MIMO systems, the complexity of is often too high due to the inversion of the large dimensional matrix. Thus, one of the most important problems for massive MIMO is to derive low-complexity channel estimation methods.

III-B Information Geometry Framework

From Section II-B, we know that if we can -project onto the -flat submanifold , then is equal to the mean at the projection point, which is easy to obtain. However, the -projection still involves the matrix inversion of the large dimensional matrix, and thus can not provide a low-complexity solution.

Information geometry provides other low-complexity ways to find a point in whose dual coordinate is approximation or the same as that of the -projection point instead of using the -projection. The IGA algorithm proposed in [11] is a specific algorithm derived based on information geometry, but its complexity can be further reduced. To extend the information geometry approach to derive new low-complexity algorithms, we provide a framework of information geometry for massive MIMO channel estimation.

We call the submanifold the target manifold since we want to find a target point in it. Since the target point can not be obtained directly by using the -projection, the auxiliary manifolds and PDFs are needed to find a way to approximate the -projection. The natural parameters or affine coordinates of original posterior PDF are , . With the auxiliary manifolds, the process of the information geometry framework for channel estimation is summarized below:

-

(1)

The natural parameters and are split to construct auxiliary manifolds of PDFs and one target manifold of PDFs;

-

(2)

Initialize the auxiliary points and the target point in the auxiliary manifolds and target manifold, respectively;

-

(3)

Calculate the -projections of the auxiliary points to the target manifold and compute the beliefs in the affine coordinate system;

-

(4)

Update the natural parameters of the auxiliary and target points;

-

(5)

Repeat (3) and (4) until the algorithm converges or fixed iterations, output the mean and variance of the target point.

With the framework, the -projection of the original point to the target manifold is approximated by the -projections from the auxiliary points to the target manifold. To make the approximation well enough, two important conditions described in the following subsections are needed.

III-C Split of Natural Parameter and the -Condition

In the general information geometry framework, the most important thing is to define the auxiliary points and manifolds. These definitions depend on the way of splitting natural parameter and determine what specific algorithm can be derived.

The split of and into items is given as

| (17a) | |||

| (17b) |

where the setting of , , , and depends on specific algorithms, and is usually a diagonal matrix. Let be the -th column of . In the IGA algorithm proposed in [11], the split , , , and is used.

Based on the split, we define auxiliary points or PDFs. Let be a diagonal matrix. The natural parameter of the -th auxiliary point is defined by

| (18a) | |||||

| (18b) |

where and are variables in the natural parameter. The corresponding auxiliary PDF and manifold are then given as

| (19) |

and

| (20) |

These auxiliary manifolds s are parallel to each other when s are not diagonal since the points in different auxiliary manifolds never intersect.

The target manifold is still the manifold of independent complex Gaussian distributions . To be consistent with the auxiliary points, the natural parameter of the target point is defined as

| (21a) | |||||

| (21b) |

where is diagonal. The corresponding PDF is

| (22) |

The target manifold is also parallel to all the auxiliary manifolds.

Now, we introduce the first condition of the information geometry framework as

| (23) |

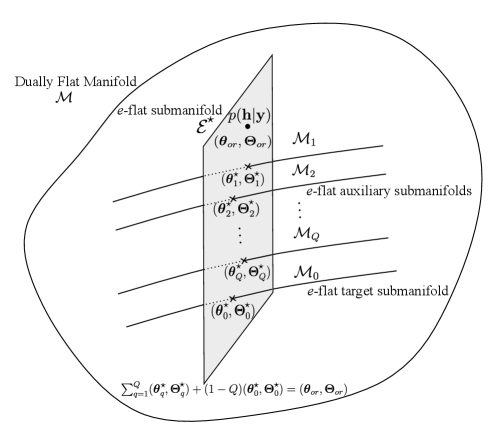

It means the original point, the target point and the auxiliary points are on a hyperplane in the affine coordinate system. This is called the -condition since it is a linear condition in the coordinate system as shown in Fig. 1. Because of the split, the -condition always holds if

| (24) |

Thus, the above formula is also the -condition. The -condition makes sure the target point and auxiliary points are related to the original point, which is important to finally get an approximation of the -projection point.

III-D The -Condition and General Algorithm

The -condition only states the relation between the natural parameters of the original point, the auxiliary points, and the target point, but what we need is part of the expectation parameters of the original point. To obtain an approximation of the -projection point, we need another condition, i.e, the -condition.

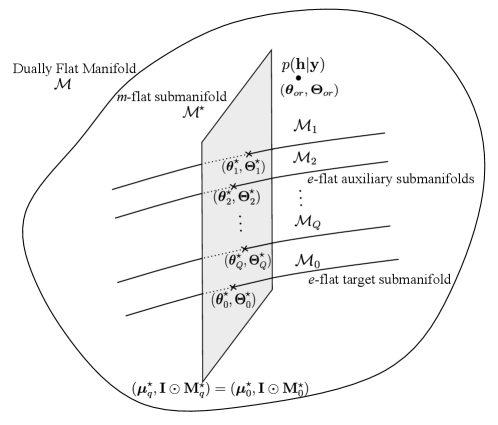

The -condition is named because it is a linear condition in the dual affine coordinate system , and is given by

| (25) |

From Section II-B, it means all the -projection points of the auxiliary points are the same and equal to the target point. By combining the -condition with the -condition, a good approximation of the -projection point on the target manifold of the original point can be obtained.

From (3a), the expectation parameters of auxiliary points can be obtained as

| (26a) | |||||

| (26b) |

Then, the expectation parameters of the -projection points on the target manifold satisfies

| (27a) | |||||

| (27b) |

as shown in Section II-B, and further we have the covariance of the -projection . The natural parameters of the -projection points can be obtained as

| (28a) | |||||

| (28b) |

To make both the -condition and the -condition hold, the auxiliary points need to exchange beliefs. Define and to make and hold. The beliefs are defined as

| (29a) | |||||

| (29b) |

Then, the natural parameters of the -projection points can be expressed as

| (30a) | |||||

| (30b) |

By comparing them with the natural parameters of auxiliary point , it can be observed that , are approximations of , in and , respectively, where is also diagonal.

After defining the beliefs, the iterative update of natural parameters of the target point and auxiliary points are constructed as

| (31a) | |||||

| (31b) |

and

| (32a) | |||||

| (32b) |

From the above two equations, it is observed that the -condition always holds. An information geometry based algorithm is obtained by iteratively calculating (28a), (29a), (31a) and (32a). When the algorithm converges, it is easy to obtain that

| (33a) | |||||

| (33b) |

which means all the -projection points are equal to the target point, and is equivalent to the -condition. In conclusion, both the -condition and the -condition are satisfied when the algorithm converges.

Finally, the output of this algorithm is the mean and covariance of the target point

| (34a) | |||||

| (34b) |

It is regarded as the approximated mean and covariance of the marginal PDF corresponding to the original PDF. When the algorithm does not converge, damping can be introduced in the updating of beliefs to ensure the convergence of the algorithm without changing its equilibrium. Although the process of the -projection in (28a) still involves the matrix inversion, i.e., , it can be implemented with low complexity by properly setting of since and are diagonal matrices. For example, in the IGA algorithm proposed in [11], the matrix is a rank- matrix.

III-E Geometrical Explanation

This iterative process and the stabilization point can be explained geometrically. Define the -flat manifold and the -flat manifold as

| (35a) | |||||

| (35b) |

where are the positive coefficients. The geometric interpretation of the -condition is given by Fig. 2. The -flat auxiliary and target manifolds are perpendicular to the -flat manifold under the metric induced by the KL divergence.

From the -condition and the -condition shown in Figs. 1 and 2, it can be seen that when the algorithm converges, all the auxiliary points, the target points and the original points are also on the same -flat manifold . Meanwhile, all the auxiliary points and the target point are on the same -flat manifold . The -condition makes sure the -projection points of the auxiliary points and the target point are the same. The -condition relates the auxiliary points and the target point to the original point. By combining these two conditions, the original point is close to the manifold . Theorem 11.8 of [17] proves that contains the original point when the factor graph is acyclic, i.e., which means the exact mean of the original distribution is obtained. However, this property is not guaranteed when the factor graph is cyclic, which is the common case for the channel estimation problem. Fortunately, for the channel estimation problem, it is usually able to prove the mean is equal to the mean when the algorithm converges as shown in [11].

IV IC-IGA for Massive MIMO Channel Estimation

In this section, an IC-IGA for massive MIMO channel estimation is proposed. First, a modified form equivalent to the MMSE estimation is given to define the auxiliary manifolds. Then, the framework of the information geometry approach is applied to derive this new IGA algorithm. Finally, the equilibrium and complexity analysis are provided.

IV-A Definition of Auxiliary Manifolds with Modified MMSE Form

In this subsection, we provide a new way of splitting the natural parameters based on a modified MMSE form. Then, the corresponding auxiliary manifolds are constructed.

To derive a new low-complexity IGA algorithm, we want to make each auxiliary manifold focus on the computation of one element of . According to the framework of information geometry methods, the -th auxiliary PDF can be defined as

| (36) |

where , and is set to be a zero matrix.

Let . To make the -th auxiliary PDF only compute the information of the -th element of , a natural idea is to set and as

| (41) |

where is the -th column of , is the vector obtained by deleting the -element from the -column of , , and is the ordering matrix obtained by extracting -th row of and put it in the first row. The matrix has the property that . By left-multiplying or right-multiplying , one can extract the -th row or column of a given matrix and put it in the first row or column.

However, there is a flaw in this setup. The items associated with elements other than in are missing in the auxiliary function, and thus might not form a Gaussian-distributed PDF since are not always positive semidefinite. To overcome this issue, we present the following theorem.

Theorem 1.

Let the matrices and be defined as and . The estimator

| (42) |

is equivalent to the MMSE estimator.

Proof.

The proof is provided in Appendix A. ∎

Based on Theorem 1, we can use the following PDF

| (43) | |||||

to compute the original mean. With the modified MMSE form, we propose a new way of splitting natural parameter as

| (44a) | |||

| (44b) |

where and are defined as

| (49) |

The natural parameter of the -th auxiliary PDF is defined as

| (50a) | |||||

| (50b) |

where are given by

| (51a) | |||

| (51b) |

The subscript instead of is used because we impose more constraints on , and the -th element has been set to zero. The auxiliary PDF can be constructed as

| (52) |

and the corresponding auxiliary manifolds are .

The natural parameter of the target point is defined as , , where

| (53a) | |||||

| (53b) |

The target PDF becomes

| (54) |

and the target manifold is still . It is easy to check the -condition always holds due to

| (55) |

IV-B Derivation of IC-IGA

After constructing the auxiliary manifolds, the estimation problem can be solved by applying the information geometry framework of section III. For convenience, we define as

| (56a) | |||

| (56b) |

The following theorem gives the beliefs , corresponding to the -th auxiliary point at the -th iteration.

Theorem 2.

Let the beliefs , be defined as and . Then, their elements are given by

| (57a) | |||

| (57b) |

where , , and .

Proof.

The proof is provided in Appendix B. ∎

Before proceeding to the derivation of IC-IGA, we present more insights about Theorem 2. Let and be defined as and

| (58) |

we have that

| (59) |

where is the normalization constant. It means this part of can be viewed as a conditional PDF of as . Furthermore, the remain part of can be viewed as

| (60) |

The corresponding PDF can be rewritten as

| (61) |

Thus, the marginal PDF of other elements will be the same as that provided by , and each auxiliary manifold only updates the marginal PDF of one element. This explains why the -th auxiliary manifold has a belief of for the other elements as shown in Theorem 2.

From Theorem 2, the -th auxiliary manifold only computes the mean and variance of the -th element of . The corresponding natural parameter is . In the computation, the mean and variance of the other elements are those of the other auxiliary manifolds in the previous iteration, which is consistent with the idea of interference cancellation (IC), and therefore this method is called IC-IGA.

After calculating the beliefs, the parameters , and , corresponding to the target and auxiliary points are updated based on (31a) and (32a). However, this update might cause the algorithm to diverge. By introducing damping, the convergence of the algorithm can be enhanced without changing its equilibrium. Let be the damping coefficient, the natural parameters and corresponding to the target and auxiliary points in , , and are updated as

| (62a) | |||||

| (62b) |

where the computation of and can be rewritten as

| (63a) | |||||

| (63b) |

where and . Besides, define the matrix and vector , then the computation of can be rewritten as

| (64) |

where is the vector where only the -th element is and the rest are all s. The channel estimation IC-IGA is summarized as Algorithm 1.

| (65a) | |||||

IV-C Equilibrium and Complexity Analysis

In this subsection, we present analysis for the equilibrium, i.e., fixed point or limit point, and the complexity of the IC-IGA.

From (25) and (24), Algorithm 1 satisfies the following conditions at the equilibrium

| (66) | |||

| (67) |

which leads to the following theorem.

Theorem 3.

At the equilibrium of IC-IGA, the mean of is equal to the mean of the posterior distribution .

Proof.

The proof is provided in Appendix C. ∎

We now analyze the complexity of the IC-IGA algorithm in terms of time complexity (or computational complexity) and space complexity. In each iteration, the time complexity is mainly in the multiplication of matrix and vector , where and , so the complexity is . Finally, the time complexity of this algorithm is , and is the number of iterations. The free variables of this algorithm are -dimensional vectors , , , , , and the number of free variables is , so the space complexity of this algorithm is . When is small, the complexity of this algorithm is lower compared with the time complexity of and the space complexity of in the MMSE algorithm. In addition, compared with the time complexity of and the space complexity of in the existing IGA algorithm [11], the IC-IGA algorithm has a comparable time complexity and a lower space complexity when is comparable to . In practice, the channel dimension is often smaller than the received signal dimension due to the sparsity of the channel, and the IC-IGA algorithm has lower time complexity and space complexity compared to the IGA algorithm.

V IC-SIGA for Massive MIMO Channel Estimation with BSCM and ZC Sequences

In this section, an IC-SIGA with lower complexity is proposed based on the IC-IGA by directly constructing the iterative update of the mean. To further reduce the complexity of IC-SIGA in practical systems, a massive MIMO with UPA is considered. By using BSCM and ZC sequences, a practical receive model is then established, and finally, the complexity of IC-SIGA is reduced by FFT and sparsity of the beam domain channel.

V-A Derivation of IC-SIGA

In this subsection, we provide the derivation of IC-SIGA, which can further reduce the complexity of channel estimation.

From the previous section, it is clear that the time complexity of the IC-IGA algorithm is mainly in the multiplication . This computation is related to the calculation of and , which involves the computation of the corresponding variance of the -projection of each auxiliary point. In the following, we reconsider the computation of the mean corresponding to the -projection of each auxiliary point. From (63a) and , we can obtain

| (68) |

where , , and denotes the element-by-element division of vectors. Equation (68) indicates that can be updated without , .

However, equation (68) might also not converge. Thus, we also need to introduce the damping coefficient . Let be defined as

| (69) |

where is the mean at the -th iteration. Then, the mean of the target point can be updated as

| (70) |

When the algorithm converges, output the mean of the target point . The IC-SIGA for channel estimation is summarized as Algorithm 2.

For the equilibrium, we can have the following theorem by using similar methods as that in IC-IGA.

Theorem 4.

At the equilibrium of IC-SIGA, the mean is equal to the mean of the posterior distribution .

Proof.

The proof is provided in Appendix D. ∎

V-B System Configuration and Channel Model of Massive MIMO

In this subsection, we consider a 3D massive MIMO system equipped with UPA. The system configuration and the BSCM are introduced to show the properties of the channel.

Consider a massive MIMO-OFDM system working in time division duplexing (TDD) mode. The antenna array at the BS is a UPA with antennas, where and are the numbers of vertical and horizontal antennas. All users are equipped with a single antenna. The carrier frequency is , and the wavelength is . The vertical and horizontal antenna spacings and are both set to half wavelength. The number of OFDM subcarriers is , of which training subcarriers are used to transmit the uplink pilot signal. Let the set of training subcarrier indexes be defined as . Let and be the length of the cyclic prefix (CP) and sampling interval, respectively. The subcarrier interval is and the transmission bandwidth is .

The directional cosines for the and axis are defined as and , where and are polar and azimuthal angles of arrival (AOA) at the BS. The space steering vector at the BS side is given by [7]

| (71) |

where

| (72) | |||

| (73) |

Let and be sampled directional cosines, defined as

| (74) | |||||

| (75) |

Based on the space steering vector, the matrix of sampled space steering vectors are defined by

| (76) |

where

| (77a) | |||||

| (77b) |

The symbols and are the numbers of sampled horizontal and vertical cosines, where and are fine factors. The frequency steering vector is defined similarly as

| (78) |

The matrix of sampled frequency steering vectors is then given by

| (79) |

where is the number of sampled delays, is the fine factor, , and are sampled delays, given by

| (80) |

Finally, the space-frequency domain channel matrix of user can be expressed as [11, 7]

| (81) |

where is the beam domain channel matrix. This model is called the BSCM. The beam-domain channel matrix has improved sparsity than the traditional beam-domain stochastic channel model based on the discrete Fourier transform (DFT) matrices [33]. The elements of are assumed to be independent and follow a complex Gaussian distribution with zero mean and different variances. The beam domain channel power matrix is defined as , where is the variance of . It is the statistical CSI and remains constant over a relatively longer period than the instantaneous CSI.

V-C Receive Model with BSCM and ZC Sequences

In this subsection, we describe a specific receive model in practical massive MIMO systems, which shows that multiplying vector can be realized by FFT.

The object of channel estimation is to obtain the a posteriori information of the space-frequency channel , which can be calculated from the beam domain channel with deterministic matrices and . Thus, we focus on the estimation of . We use the pilot signal sequence in [34] as

| (82) |

where is the Zadoff-Chu (ZC) sequence defined as

| (83) |

where is the largest prime number satisfying , and denote the root coefficient and cyclic shift, is the number of roots, is the number of UEs of each root, and is the sampled delay defined in (80).

Let be the pilot matrix. The received pilot signal matrix at the -th OFDM symbol is expressed as

| (84) |

where the noise matrix consists of i.i.d. elements with zero mean and variance .

For convenience, the subscript of the OFDM symbol is omitted hereafter. Substituting (81) and (82) into (84), we have

| (85) |

where . Let be an -dimensional DFT matrix and define the partial DFT matrix . We then have and .

In (85), can be calculated as

| (86) |

where

| (89) |

is the permutation matrix. Then, (85) can be reexpressed as

| (90) |

When , we can avoid mutual aliasing of UEs with the same root and define

| (91) |

Finally, the uplink received signal model is given as

| (92) |

where

| (93) | |||||

| (94) |

By vectorizing (92), the received signal model in vector form is

| (95) |

where , , , , , and . Since the beam domain channels are sparse, we can obtain a low dimensional from by removing the elements with zero variance, where is the extraction matrix. Then, the general received signal model in (13) can be obtained, where is the matrix obtained by removing corresponding columns of , and can be obtained from of all users.

V-D Complexity Analysis

The time complexity of the IC-SIGA algorithm is mainly in the multiplication and , where and are two arbitrary vectors. In the following, we analyze the fast implementation method and complexity of the operation . The computation of can be implemented similarly.

The computation of can be written as . From , the vector can be transformed into a matrix as , where , . Substituting the expression (94) for yields . Define , then , where can be realized by FFT. Since is a diagonal matrix, the complexity of is negligible. Thus, the time complexity of is .

From (77a) we have Then, can be realized by FFT with a time complexity of . In summary, the time complexity of IC-SIGA is b where is the iteration number.

Then, we analyze the space complexity. In IC-SIGA, the free variables are -dimensional vectors , , and the number of free variables is . Therefore, the space complexity of IC-SIGA is .

VI Simulation Results

| Parameter | Value |

|---|---|

| Number of BS antenna | 128 8 16 |

| UT number | 12, 24 |

| Center frequency | 4.8GHz |

| Number of subcarriers | 2048 |

| Subcarrier spacing | 30kHz |

| Number of training subcarriers | 120 |

| CP length | 144 |

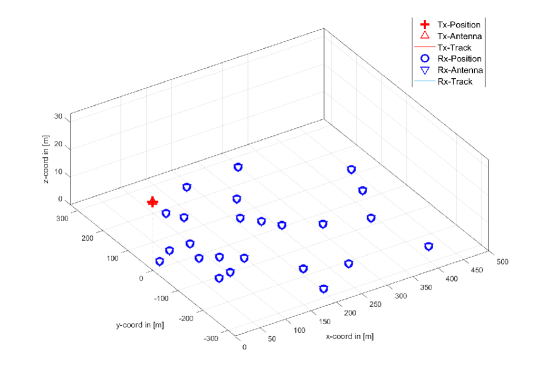

In this section, we provide simulation results to show the performance of the proposed IC-IGA and IC-SIGA for MIMO-OFDM channel estimation. We use the widely used QuaDRiGa channel model. The simulation scenario is set to “3GPP38.901UMaNLOS”. The main parameters for the simulations are summarized in Table I. The layout of this massive MIMO-OFDM system is plotted in Fig. 3, where the location of the BS is at , and the users are randomly generated in a sector with radius m around at m height. The channel is normalized as . Fine factors are set as . The SNR is set as SNR . We use the algorithm proposed in [7] to obtain the beam domain channel power matrices . The normalized mean-squared error (NMSE) is used as the performance metric for the channel estimation, and is defined as

| (96) |

where is the number of the received pilot signals, is the exact space-frequency domain channel matrix, and is the estimated space-frequency domain channel matrix. We set in our simulations.

| Algorithm | Time Complexity | Space Complexity |

|---|---|---|

| IC-IGA | ||

| IC-SIGA | ||

| IGA | ||

| MMSE |

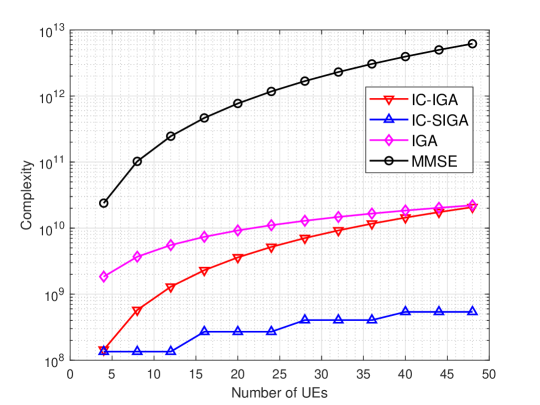

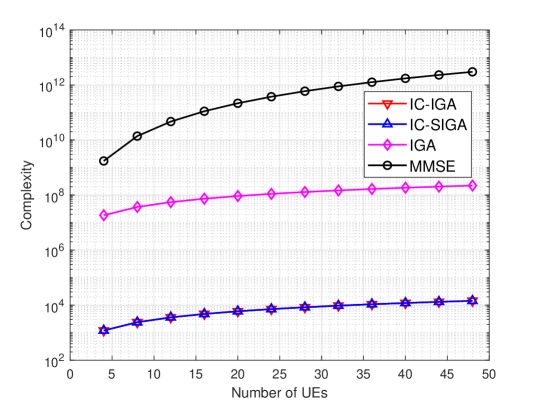

First, we present simulation results to show the complexity performance of different algorithms. The complexity of the IC-IGA, IC-SIGA, IGA, and MMSE algorithms is summarized in Table II. The time complexity and space complexity of the above four algorithms are presented in Figs. 4 and 5 for comparison, where the number of iterations is , the number of pilot roots is , and simulation parameters are configured as in Table I. From the figure, it can be found that the order of the time complexities of these algorithms is IC-SIGAIC-IGAIGAMMSE, and the order of the space complexities of these algorithms is IC-SIGAIC-IGAIGAMMSE. Meanwhile, the time complexity of IC-SIGA algorithm is the same for the same number of pilot roots. The time complexity of IC-IGA is much less than the MMSE algorithm and also less than the IGA, whereas the space complexity of IC-IGA is much less than the IGA and MMSE algorithm. The time complexity of IC-SIGA is much less than other algorithms, and the space complexity of IC-SIGA is much less than that of the IGA and the MMSE algorithm.

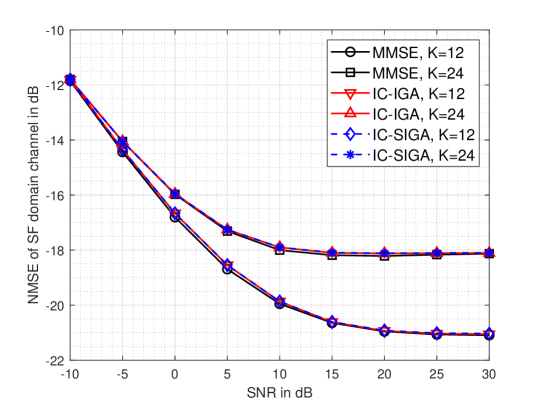

Fig. 6 shows the NMSE performance of IC-IGA and IC-SIGA channel estimation compared with IGA and MMSE. The user number is set to be and . The iteration numbers of IC-IGA and IC-SIGA are set as 100. The damping coefficients of IC-IGA and IC-SIGA are and , respectively. From the figure, it can be seen that both IC-IGA and IC-SIGA can obtain almost the same performance as MMSE for all SNR scenarios with two numbers of users. In addition, the performance using orthogonal pilots is more accurate, which is because the non-orthogonal pilots introduce interference from the pilots of users with other roots.

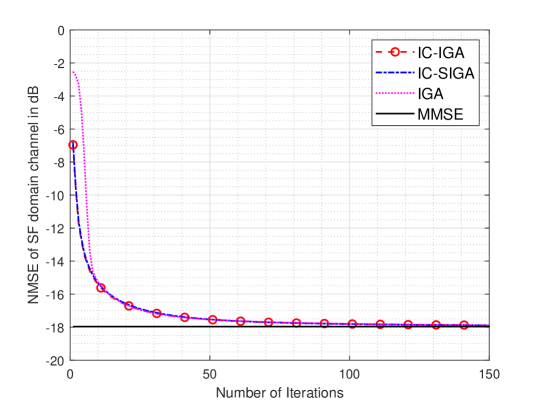

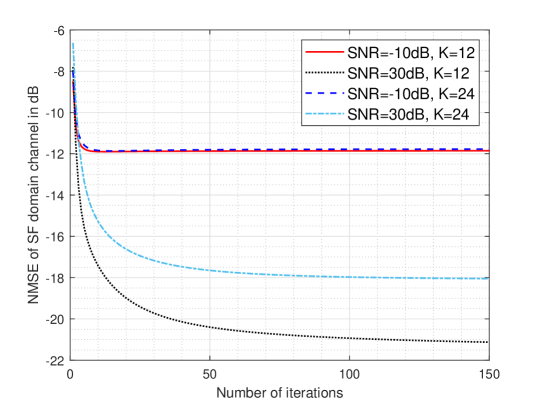

Fig. 7 plots the convergence performance of the IC-IGA and IC-SIGA for at SNR=dB. The results of the MMSE estimation and the IGA in [11] are given for comparison. The damping coefficients of IGA, IC-IGA and IC-SIGA are , and . As shown in the figure, the NMSE performance of IC-IGA and IC-SIGA can converge close to that of the MMSE estimation, which is consistent with Theorems 3 and 4. Furthermore, the NMSE of the IC-IGA decreases rapidly at the beginning, but the convergence speeds of the three algorithms, IC-IGA, IC-SIGA, and IGA, are nearly the same after about iterations.

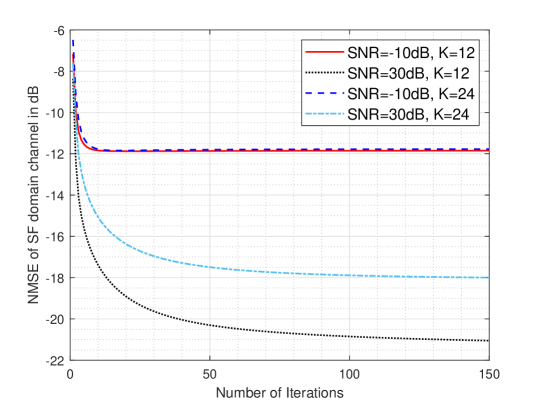

Fig. 8 and Fig. 9 show the convergence performance curves of IC-IGA and IC-SIGA for the orthogonal pilots case () and non-orthogonal pilots () case at different SNRs. The SNRs under consideration are low SNR scenarios and high SNR scenarios where . From the figure, it can be seen that IC-IGA and IC-SIGA can approach convergence within iterations in the low SNR scenario, and iterations in the high SNR scenario.

VII Conclusion

In this paper, manifolds of complex Gaussian distributions are illustrated under information geometry theory, and a unified information geometry framework for channel estimation is described. To obtain an interference cancellation style algorithm, a modified MMSE form that has the same mean as the original MMSE estimator is constructed. Based on the unified framework and the modified form, the IC-IGA is then proposed for massive MIMO. The form of IC-IGA is simpler than IGA. Then, IC-SIGA is proposed to further reduce the complexity. The equilibria and complexities of the algorithms are analyzed. Simulation results show that the proposed methods can obtain similar performance to the IGA algorithm with fewer iterations and lower complexity.

Appendix A Proof of Theorem 1

It is easy to verify that is invertible. Simultaneously multiplying inside and outside the matrix inversion in the MMSE estimator yields

| (97) |

By using , it follows that

| (98) |

where the second equality is due to the definition of . The above result means (42) is equivalent to the MMSE estimator.

Appendix B Proof of Theorem 2

The subscript is omitted here for convenience. After placing the -th row and -th column of in the first row and first column by left-multiplying of and right-multiplying of , its inverse matrix can be computed by applying block matrix inversion formula, i.e.,

| (101) | |||

| (104) |

where

| (105) |

By using the Sherman-Morrison formula for matrix inversion, we can obtain that

| (106) | |||||

where . It can be shown that .

Further, the natural parameters of the -projection of the auxiliary point to the target manifold are

| (109) | |||||

Since , then the belief is

| (114) | |||||

| (117) |

The mean of -projection is

| (122) | |||||

From

| (123) |

we have

| (126) |

where

| (127) |

The second and third terms can be further simplified as

| (128) |

and

| (129) |

where the equality is because , and is because . By using this simplified results, we further obtain

| (130) | |||||

where the equality is because , and are the mean and variance of the -th element of computed from the -th auxiliary manifold, respectively.

Further, the natural parameter of the -projection is

| (133) |

According to , the belief is

| (138) | |||||

| (141) |

Appendix C Proof of Theorem 3

Appendix D Proof of Theorem 4

From (68), at the Equilibrium, we have

| (147) |

It can be reexpressed as

| (148) |

From , it follows that , then it follows that

| (149) |

which means

| (150) |

References

- [1] L. Lu, G. Y. Li, A. L. Swindlehurst, A. Ashikhmin, and R. Zhang, “An overview of massive MIMO: Benefits and challenges,” IEEE J. Sel. Topics Signal Process., vol. 8, no. 5, pp. 742–758, 2014.

- [2] E. Björnson, J. Hoydis, M. Kountouris, and M. Debbah, “Massive MIMO systems with non-ideal hardware: Energy efficiency, estimation, and capacity limits,” IEEE Trans. Inf. Theory, vol. 60, no. 11, pp. 7112–7139, 2014.

- [3] T. L. Marzetta, E. G. Larsson, H. Yang, and H. Q. Ngo, Fundamentals of Massive MIMO. Cambridge University Press, 2016.

- [4] E. De Carvalho, A. Ali, A. Amiri, M. Angjelichinoski, and R. W. Heath, “Non-stationarities in extra-large-scale massive MIMO,” IEEE Wireless Communications, vol. 27, no. 4, pp. 74–80, 2020.

- [5] J. C. Marinello, T. Abrão, A. Amiri, E. De Carvalho, and P. Popovski, “Antenna selection for improving energy efficiency in XL-MIMO systems,” IEEE Trans. Veh. Technol., vol. 69, no. 11, pp. 13 305–13 318, 2020.

- [6] Z. Wang, J. Zhang, H. Du, D. Niyato, S. Cui, B. Ai, M. Debbah, K. B. Letaief, and H. V. Poor, “A tutorial on extremely large-scale MIMO for 6G: Fundamentals, signal processing, and applications,” IEEE Communications Surveys & Tutorials, 2024.

- [7] A.-A. Lu, Y. Chen, and X. Gao, “2D beam domain statistical CSI estimation for massive MIMO uplink,” IEEE Trans. Wireless Commun., vol. 23, no. 1, pp. 749 – 761, 2024.

- [8] O. Elijah, C. Y. Leow, T. A. Rahman, S. Nunoo, and S. Z. Iliya, “A comprehensive survey of pilot contamination in massive MIMO—5G system,” IEEE Communications Surveys & Tutorials, vol. 18, no. 2, pp. 905–923, 2015.

- [9] L. You, X. Gao, X.-G. Xia, N. Ma, and Y. Peng, “Pilot reuse for massive MIMO transmission over spatially correlated Rayleigh fading channels,” IEEE Trans. Wireless Commun., vol. 14, no. 6, pp. 3352–3366, 2015.

- [10] H. Wang, W. Zhang, Y. Liu, Q. Xu, and P. Pan, “On design of non-orthogonal pilot signals for a multi-cell massive mimo system,” IEEE Wireless Commun. Lett., vol. 4, no. 2, pp. 129–132, 2014.

- [11] J. Yang, A.-A. Lu, Y. Chen, X. Gao, X.-G. Xia, and D. T. Slock, “Channel estimation for massive MIMO: An information geometry approach,” IEEE Trans. Signal Process., vol. 70, pp. 4820–4834, 2022.

- [12] N. Shariati, E. Björnson, M. Bengtsson, and M. Debbah, “Low-complexity polynomial channel estimation in large-scale mimo with arbitrary statistics,” IEEE J. Sel. Topics Signal Process., vol. 8, no. 5, pp. 815–830, 2014.

- [13] A. Wang, R. Yin, and C. Zhong, “Channel estimation for uniform rectangular array based massive MIMO systems with low complexity,” IEEE Trans. Veh. Technol., vol. 68, no. 3, pp. 2545–2556, 2019.

- [14] H. He, C.-K. Wen, S. Jin, and G. Y. Li, “Deep learning-based channel estimation for beamspace mmWave massive MIMO systems,” IEEE Commun. Lett., vol. 7, no. 5, pp. 852–855, 2018.

- [15] E. Balevi, A. Doshi, and J. G. Andrews, “Massive MIMO channel estimation with an untrained deep neural network,” IEEE Trans. Wireless Commun., vol. 19, no. 3, pp. 2079–2090, 2020.

- [16] F. Bellili, F. Sohrabi, and W. Yu, “Generalized approximate message passing for massive MIMO mmWave channel estimation with Laplacian prior,” IEEE Trans. Commun., vol. 67, no. 5, pp. 3205–3219, 2019.

- [17] S.-i. Amari, Information Geometry and Its Applications. Springer, 2016.

- [18] N. Ay, J. Jost, H. Vân Lê, and L. Schwachhöfer, Information geometry. Springer, 2017, vol. 64.

- [19] F. Nielsen, “The many faces of information geometry,” Not. Am. Math. Soc, vol. 69, no. 1, pp. 36–45, 2022.

- [20] S.-i. Amari, “Information geometry in optimization, machine learning and statistical inference,” Frontiers of Electrical and Electronic Engineering in China, vol. 5, pp. 241–260, 2010.

- [21] M. Oizumi, N. Tsuchiya, and S.-i. Amari, “Unified framework for information integration based on information geometry,” Proceedings of the National Academy of Sciences, vol. 113, no. 51, pp. 14 817–14 822, 2016.

- [22] L. Banchi, P. Giorda, and P. Zanardi, “Quantum information-geometry of dissipative quantum phase transitions,” Physical Review E, vol. 89, no. 2, p. 022102, 2014.

- [23] J. Pearl, Probabilistic reasoning in intelligent systems: networks of plausible inference. Morgan kaufmann, 1988.

- [24] S. Ikeda, T. Tanaka, and S.-i. Amari, “Stochastic reasoning, free energy, and information geometry,” Neural Computation, vol. 16, no. 9, pp. 1779–1810, 2004.

- [25] A. L. Yuille, “CCCP algorithms to minimize the Bethe and Kikuchi free energies: Convergent alternatives to belief propagation,” Neural computation, vol. 14, no. 7, pp. 1691–1722, 2002.

- [26] S. Ikeda, T. Tanaka, and S.-i. Amari, “Information geometry of turbo and low-density parity-check codes,” IEEE Trans. Inf. Theory, vol. 50, no. 6, pp. 1097–1114, 2004.

- [27] A. Zia, J. P. Reilly, J. Manton, and S. Shirani, “An information geometric approach to ML estimation with incomplete data: application to semiblind MIMO channel identification,” IEEE Trans. Signal Process., vol. 55, no. 8, pp. 3975–3986, 2007.

- [28] J. Yang, Y. Chen, X. Gao, D. Slock, and X.-G. Xia, “Signal detection for ultra-massive MIMO: An information geometry approach,” IEEE Trans. Signal Process., 2024.

- [29] J. Yang, Y. Chen, A.-A. Lu, W. Zhong, X. Gao, X. You, X.-G. Xia, and D. Slock, “Channel estimation for massive MIMO-OFDM: simplified information geometry approach,” in 2023 IEEE 98th Vehicular Technology Conference (VTC2023-Fall). IEEE, 2023, pp. 1–6.

- [30] O. Simeone, Machine learning for engineers. Cambridge university press, 2022.

- [31] L. M. Bregman, “The relaxation method of finding the common point of convex sets and its application to the solution of problems in convex programming,” USSR computational mathematics and mathematical physics, vol. 7, no. 3, pp. 200–217, 1967.

- [32] S.-i. Amari and H. Nagaoka, Methods of information geometry. American Mathematical Soc., 2000, vol. 191.

- [33] C. Sun, X. Gao, S. Jin, M. Matthaiou, Z. Ding, and C. Xiao, “Beam division multiple access transmission for massive MIMO communications,” IEEE Trans. Commun., vol. 63, no. 6, pp. 2170–2184, 2015.

- [34] 3GPP TS 36.211, “Evolved universal terrestrial radio access (E-UTRA); physical channels and modulation (Release 15),” 2019.