Is there a one-to-one correspondence between interparticle interactions and physical properties of liquid?

Abstract

In this study, we present the original method for reconstructing the potential of interparticle interaction from statistically averaged structural data, namely, the radial distribution function of particles in many-particle system. This method belongs to a family of machine learning methods and is implemented through the differential evolution algorithm. As demonstrated for the case of the Lennard-Jones liquid taken as an example, there is no one-to-one correspondence between structure and potential of interparticle interaction of a many-particle disordered system at a certain thermodynamic state. Namely, a whole family of the Mie potentials determined by two parameters and related to each other according to a certain rule can reproduce properly a unique structure of the Lennard-Jones liquid at a given thermodynamic state. It is noteworthy that this family of the potentials quite correctly reproduces for the Lennard-Jones liquid the transport properties (in particular, the self-diffusion coefficient) over a temperature range as well as the dynamic structure factor, which is one of the key characteristics of the collective dynamics of particles.

[kpfu]organization=Department of Computational Physics, Institute of Physics, Kazan Federal University, city=Kazan, postcode=420008, country=Russia

1 Indroduction

To reproduce most of the physical properties of a many-particle system, it is necessary to know its Hamiltonian, namely, the part of the Hamiltonian that defines the interparticle interaction. If the potential of interparticle interaction is known, then it is possible to calculate microscopic collective dynamics, to determine the transport coefficients (self-diffusion, viscosity, thermal conductivity), to compute the phase diagram as well as the characteristics of various phase transitions of the system under consideration [1, 2]. For simple many-particle systems, the interaction potential may be initially known. Typical example of such systems is the system of interacting atoms/molecules of inert gases, where the interparticle interaction is reproduced by the Lennard-Jones potential [3, 4]. Another example of simple system is a model single-component plasma, where the effective interparticle interaction is given by the Coulomb-type potential or the Yukawa potential [5, 6, 7, 8]. However, for the overwhelming majority of real physical systems, it is necessary to solve the problem of finding the interaction energy of particles as a function of radius vector (or distance for homogeneous isotropic case).

There are two theoretical concepts to recover the potential of a natural many-particle system. In accordance with the first and apparently most popular concept, the potential is constructed in such a way that the energies of various configurations of the system and the resulting forces acting on each particle of the system coincide with the energies and forces obtained from the ab initio molecular dynamics simulations [9, 10, 11, 12]. In this case, the search for the potential comes down either to finding the values of the parameters of a certain model potential, given in an analytical form [13], or to constructing the potential using neural networks [14, 15].

A feature of the second concept is that the search for the potential involves solving the so-called inverse problem [16]. Here, it is required to restore the potential, assuming that values of some physical characteristics determined by this potential are known from experiment. These can be characteristics obtained from traditional experimental techniques for microscopy, neutron or X-ray diffraction, or from a numerical experiment such as molecular dynamics simulations or Monte-Carlo simulations. The simplest implementation of the this approach assumes that the potential is constructed on the basis of known experimental data on the structure of the system [17, 18, 19, 20, 21]. The inverse problem in this case implies recovering the potential from the structural data and it is solved by the iteration (brute force) method as applied to some coarse-grained model of the potential with variable parameters. The corresponding enumeration is carried out until the required correspondence is reached in the values of a structural characteristic obtained with trial modification of the potential and measured experimentally [22, 23, 24]. With the development of machine learning methods [25, 26, 27, 28, 29, 30, 31, 32, 33], this approach is being improved in such a way that solving the problem requires fewer processing power as well as less computing time. In this paper, we propose an original method for recovering the interparticle interaction potentials based on the differential evolution algorithm, which also belongs to the class of machine learning algorithms [34]. The efficiency of this method is demonstrated by the recovering the Lennard-Jones potential under the condition that the structural data — the radial distribution function of the particles in this system — is known initially.

It is important to note that the following questions can be formulated in relation to any recovered potential of interparticle interaction. Is this recovered potential unambiguously determined, or are its various modifications possible, which will reproduce the experimental structure with acceptable accuracy? How correctly does this recovered potential reproduce other physical characteristics of the system under consideration (for example, the transport coefficients — self-diffusion, viscosity, thermal conductivity; the characteristics of collective particle dynamics — speed of sound propagation, sound attenuation coefficient, etc.)? Is it sufficient to rely only on structural data to recover the potential ? The purpose of this study is to provide answers to these questions.

The given study presents an original method for reconstruction of the interaction potential in a many-particle system. Although the main results are demonstrated on the example of a Lennard-Jones fluid, the method is general and can be applied to arbitrary systems of interacting particles in thermodynamic equilibrium (for example, equilibrium fluids of arbitrary physical nature). In particular, the method can be applied to estimate the interatomic interaction in heavy metal melts, where solving this problem on the basis of quantum mechanics calculations is laborious [35, 36, 37, 38, 39]. In addition, it can be used to reconstruct effective coarse-grained potentials for the case of polymer melts and other disordered systems [40, 41, 42].

2 Differential evolution algorithm for recovering the interparticle interaction potentials

2.1 Statement of the problem

Let us assume that for an investigated many-particle homogeneous isotropic system, such as a liquid or an amorphous solid, structural data are initially known, and these structural data are specified by the radial distribution function of particles. The system under study can be a real physical system or a model system, where the interaction of particles is specified by a certain potential . Consequently, the structural data can be experimental or calculated based on the results of molecular dynamics simulations or Monte Carlo simulations with a given potential. The radial distribution function corresponding to these structural data is known. This function has the meaning of an input quantity in the given problem and will be denoted as , where the subscript obj means objective.

Further, our goal is to recover the potential of interparticle interaction . Let us assume that it belongs to the family of the potentials , i.e.

the analytical form of which are specified by the set of parameters

| (1) |

The values of the parameters are initially unknown. However, some general form of the potential can be chosen taking into account the nature of the many-particle system. So, for example, it could be a system of point charges (electrons, ions), a system of dipoles or colloid particles, etc. Then, the parameters can be the exponents in attractive or repulsive contributions, the weights of these contributions, the characteristics of selected directions in particle interactions in the case of nonspherical potentials, etc. Thus, the problem of recovering the potential is reduced to determining the values of these parameters at which the model potential will correctly reproduce the structure of the system, namely, the ‘experimental’ radial distribution function .

The exact relationship between the interaction potential and the distribution function is known only for the case of a rarefied gas [43, 44, 45]:

| (2) |

For the condensed phases such as a high-dense gas, liquid and amorphous solid, a correspondence between the quantities and is unknown, and it is not possible to calculate analytically the interaction potential on the basis of the known function . On the other hand, the radial distribution function itself can be accurately calculated using configuration data resulted from molecular dynamics or Monte-Carlo simulations, if the potential is specified for a given thermodynamic state of the system under study [46, 47]. Thus, to restore the potential, we can use the following computational procedure. It is necessary to try different modifications of the potential for molecular dynamics (or Monte-Carlo) simulations, determining the radial distribution function and each time comparing the obtained trial function with the objective function . The procedure should be carried out until the required correspondence between functions and is obtained. Note that a modification of the potential is actually determined by values of the parameters . Thus, in order to restore the potential in accordance with this procedure, we really need to sort out various combinations of the values of its parameters and find the optimal one.

Let us choose the quantity determined by the following relation

| (3) |

as a measure of similarity (or difference) between the trial function and the objective function . Here, is the number of segments in -axis, and is the label of a segment. In fact, the quantity is the so-called mean squared deviation or weighted residual sum of squares known in statistics [48], and it can only take non-negative values. If , then both the functions and coincide. The more these functions differ, the greater value the quantity takes.

Further, let us introduce a phase space formed by the set of parameters . Hypothetically, each model potential from the family can be associated with a value of the quantity . The set of resulting values of the quantity will yield a landscape (surface) in this phase space. Then, the location of the global minimum of this landscape, where the quantity tends to zero, should be directly associated with the values of the parameters of the sought model potential . Finding this global minimum in the landscape is the same as finding the potential , which is capable to reproduce the experimental structure characteristic with a required accuracy. Thus, we have the typical optimization problem that can be solved by means of the differential evolution algorithm [34].

2.2 Adapted differential evolution algorithm and its implementation

The first step of the proposed computational scheme is to determine the family of model potentials, within which the search for the required model will be carried out. First of all, it is necessary to define some general model , the free parameters that set the model , as well as the range of acceptable values of these parameters. An important point is the number of free parameters and the range of their acceptable values. A relatively small number of parameters with narrow ranges of acceptable values simplifies the optimization process and increases the chances of achieving convergence of the values of free parameters with limited computing resources. On the other hand, a larger number of parameters significantly increases a flexibility of parameterization and increases the chances of finding a solution.

The differential evolution algorithm as applied to the problem of potential recovering includes two computing procedures.

The first procedure is associated with finding the equilibrium configurations of many-particle system in a given thermodynamic state. These configurations should be generated using the test model potential of interparticle interaction. They will be required for the subsequent calculation of the radial distribution function and comparison with the objective function . Consequently, it is convenient here to use a computational program or package that supports molecular dynamics or Monte-Carlo simulations. For example, this could be the LAMMPS package [49], which implements molecular dynamics simulations and is distinguished by high computational speed. It supports for calculations on GPU, simulations in various statistical ensembles (, , ), as well as on-the-fly calculations of various structural and transport properties.

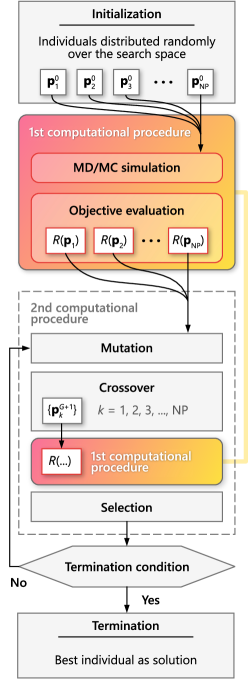

The second procedure involves computations in the framework of the adapted differential evolution algorithm, the main elements of which are given in Fig. 1. In accordance with the standard terminology of evolutionary algorithms, one can say that this computational procedure involves the following: formation of generations of individuals, as well as operations of mutation, crossover and selection of individuals [50]. So, let us define these basic terms and operations of the differential evolutionary algorithm methodology.

By individual, we mean a model potential from the initially given family of potentials . Here, is the label of an individual with a unique genome , which is a set of the parameters with specific numerical values, i.e.

Thus, has the meaning of a gene. From mathematical point of view, the genome can be viewed as a vector with components , , , . Therefore, all the standard mathematical operations with vectors are also applicable to it. Then, the quantity has the meaning of the dimensionality of the search space. A set of individuals form a population of a certain generation ; and the population size is denoted as . The size of the population is determined by the dimensionality of the search space [34]. As was found earlier (see, for example, work [34], p. 356), there is a rule of thumb which establishes a quantitative correspondence between the dimensionality of the search space and the minimum number of individuals in the population :

| (4) |

The minimal permissible value of is 4. We note that the term generation is, in a sense, a characteristic of a population: thus, there may be a population of a parent or a child generation. Therefore, in some cases it is convenient and appropriate to use the term generation instead of population. Individuals of each generation undergo operations of mutation, crossover and selection.

Mutation. – Mutation is defined as the mathematical operation applied to three random genomes , and of the individuals of the same population in accordance with the following vector addition rule:

| (5) |

Here, the weight coefficient known also as the mutation factor can take some a chosen non-integer value from the range as indicated in Ref. [51]. The higher value of , the more perturbations/changes are introduced into the genome. As found in Ref. [34], values of smaller than 0.4 or larger than 1 are effective only in specific cases. This operation is applied to the genomes of all the individuals of the population . The operation is considered acceptable if the obtained values of the genome parameters of the individual of the new mutated generation do not go beyond the range of permissible values. Such an implementation of mutation was proposed in the original work on differential evolution and it is essentially a reproduction of a similar operation in the Nelder-Mead method [51] (see, for example, Eq. (1.25) in [51]). It should be noted that to improve population diversity, the mutation operation given by equation (5) can be modified so as to be performed at a time on a larger number of individuals, if the population size is large enough [52].

Crossover. – Crossover is the operation carried out with the genomes of a pair of individuals, one each from the parental and the new mutated generations. Let us show this operation by the example of the formation of the -th individual for the new generation :

| (6) |

Here, is a random non-integer number from . All the individuals of both the generations and must undergo this operation. Eq. (6) sets that all the components of a vector of the parental generation are replaced with a certain probability by the components of the new mutated vector , while a one component, chosen at random and with the label , is necessarily replaced by the corresponding component of the mutated vector. The quantity is referred to as the crossover rate and can take a non-integer value from the range . Sequential application of mutation and crossover operations ensures that all the individuals of the new generation will be distinct from the individuals of the parent generation . The greater the value of , the more substantial is the change in the individuals. Solving the problem of restoring potential is computationally challenging, so rapid evolution of generations is necessary. Therefore, it is reasonable to set large values of , close to 1.

Selection. — The last stage, as a result of which the child generation is finally formed, is associated with the selection operation. The selection operation must ensure the evolution of generations in a required direction. Consequently, those individuals should be selected whose characteristics are closest to the required ones. In the problem under consideration, selection is carried out on the basis of a parameter determined for each th individual [see Eq. (3)]. The evolution should lead to the individuals with the lowest values of the parameter , which, in the methodology of evolutionary algorithms, has a meaning of a fitness function or cost function [51]. To perform the selection operation, it is necessary to consider once again the pairs of individuals, one for the parental -generation and for the -generation obtained as a result of mutations and crossovers. For the child generation, an individual is selected from these two, for which the cost function takes the smallest value.

Flowchart of the adapted differential evolution algorithm is given in Fig. 1. The initial population, i.e. the zero generation population, is created by selecting random individuals in the phase space (search space). Note that the probabilities of choosing one or another individual are the same. This is followed by the first computational procedure associated with finding equilibrium configurations for individuals in a given generation. Here, for all the individuals, the cost function is calculated. After that, the condition for termination of the computational algorithm is checked. If the condition is not met, then there is a transition to the second computing procedure with the simulation of the evolution process and a subsequent return to the first computing procedure. The algorithm is performed until an individual with the required properties is found.

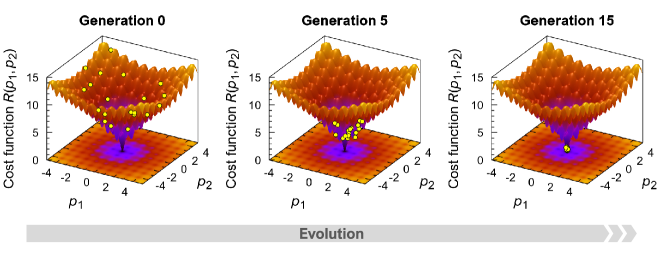

In fact, this algorithm should implement the search for the global minimum of the landscape . The more complex the geometry of the landscape , the more difficult it is to carry out such a search. Fig. 2 shows the results of testing the adapted differential evolution algorithm as applied to finding the global minimum of the model surface given by the Ackley function [53]. Notice that the Ackley function is a non-convex function containing many local minima, and it is usually used to test performance for optimization algorithms. In our test case, we define the cost function as the Ackley function of two variables and :

which has the global minimum

In Eq. (2.2), is the Euler’s number, and all the numerical coefficients are the same as in the original work [53]. As seen in Fig. 2, the fifteenth generation population converges at the point , indicating that the adapted differential evolution algorithm successfully finds the global minimum. Note that the algorithm was performed for a population of the size . Following the results of Ref. [54], the mutation factor and crossover rate were taken to be and , respectively.

3 Recovering the Lennard-Jones potential

3.1 Statement of the problem

Let us check the efficiency of the above method for the problem of restoring the Lennard-Jones potential

| (8) |

from the known radial distribution function for some thermodynamic ()-state. Here, and are the characteristic scales of energy and length given by the potential; and, throughout the section, all physical quantities will be defined in terms of these characteristics.

The Lennard-Jones potential is a special case of the Mie potential [55], which is a linear combination of repulsive and attractive contributions with arbitrary power dependencies:

| (9) |

and

The concrete analytic form of the Mie potential is given by the pair of parameters and . Thus, if the function for the Lennard-Jones system is known, then the problem of restoring the Lennard-Jones potential naturally reduces to finding the numerical values of the parameters and of the Mie potential or to finding the point corresponding to a global minimum on the landscape formed by the parameters and .

3.2 Relevant information about the system, calculations and simulation details

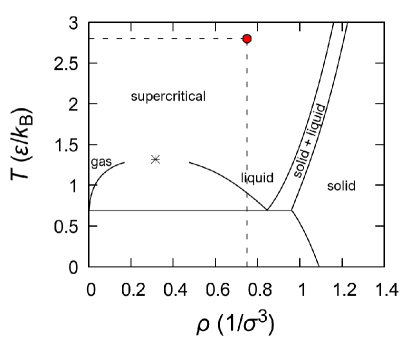

In the case of simple monatomic systems, such as the Lennard-Jones and/or Mie system, the thermodynamic states with the most complex homogeneous structure arise for the equilibrium liquid and supercritical fluid phases [56]. For this reason, in this study, we consider the state with the temperature and the density , that corresponds for the case of the Lennard-Jones system to supercritical fluid at the pressure . The () phase diagram of the Lennard-Jones system is given in Fig. 3. Note that for this isobar the melting temperature (liquidus) of the Lennard-Jones system is estimated to be , whereas the isotherm intersects the melting line in the () phase diagram at the pressure . Obviously, when the values of the parameters and of the Mie potential change from the values corresponding to the Lennard-Jones potential, the melting line in the phase diagram will also change.

For the chosen thermodynamic state of the Lennard-Jones fluid, the objective radial distribution function was preliminarily determined, which was subsequently used as an input quantity in the calculation procedures. The computational procedure related to the determination of the radial distribution function with the trial potential was performed in the framework of equilibrium molecular dynamics simulations in the ensemble. This ensemble is chosen for two reasons. First, such an ensemble makes it possible to realize conditions close to experimental ones, where temperature and pressure can be controlled. Second, molecular dynamics simulations in or ensembles with trial potentials can produce structurally heterogeneous systems (e.g., with pores and voids). In other words, no guarantee that any trial potential at (or ) conditions will produce the equation of state of the target system (namely, the equilibrium density of the target system). All simulations were performed for a cubic simulation box with particles; and periodic boundary conditions were applied in all directions. The pressure was controlled by the Berendsen barostat [46]. To optimize the molecular dynamics simulations, we apply to the trial potential and the corresponding force the so-called shifted force technique given by the conditions [59]

| (10a) | |||

| and | |||

| (10b) | |||

with the cutoff distance chosen as .

The search for the values of the Mie potential parameters that would be able to reproduce the radial distribution function of the Lennard-Jones liquid was carried out in the following ranges of parameter values:

| (11) |

where the parameters and are real numbers specified with an accuracy of five decimal place.

The first (main) maximum of the objective radial distribution function of the Lennard-Jones liquid is characterized by a shape close to Gaussian, which is typical for a system of particles interacting through the softcore potential. The exponent for the repulsion contribution taken as the largest in our considerations seems reasonable, since for larger values of this contribution will approach the hard-sphere potential. Further, the selected maximum value of the attractive contribution corresponds to the dipole-quadrupole interaction, which strongly depends on distance . Nevertheless, it is necessary to note that the ranges for values of both the parameters and can be taken even larger than in (11).

Thus, the search for the potential is carried out on the basis of the known radial distribution function . It is assumed that the temperature and density corresponding to this function are known. Trial potentials of interparticle interaction are generated on the basis of the Mie potential given by expression (9), where the parameters and can take values from the ranges defined by (11). Thus, the ranges given by (11) determine the specific region of the space within which the point will be searched.

Finally, it should be noted that the size of each population was sixty-four individuals (trial potentials), i.e. , that is optimal from point of view of the available computing resources. As in the case of the Ackley function considered above, the mutation rate and the crossover rate were taken equal to and , respectively. These values of both the parameters are taken from [54]; these values provide the fastest evolution of the population to the one containing the desired individual (solution). The choice of other acceptable values of these control parameters should not affect the final result.

3.3 Results

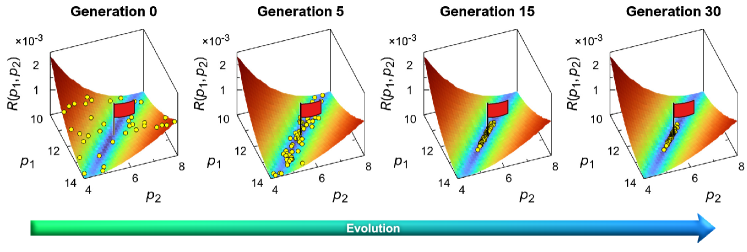

The applied algorithm finds succefully the desired individual corresponding to the Lennard-Jones potential. As seen from the scheme given in Fig. 4, the global minimum of the landscape corresponding to the Lennard-Jones potential is achieved in the process of evolution with fifteen generations. Further, in the process of evolution over ninety generations, the accuracy of the found parameters and is . All the individuals of the “final generation” are within a clear recognizable narrow range containing the desired individual . This is evidence of the efficiency of the proposed algorithm and indicates the successful solution of the problem formulated above in Sec. 3.1.

The results obtained lead to the question: Is there a one-to-one correspondence between the potential of interaction and the physical properties of the system? The answer to this question seems to be important even in the case of a simple monatomic system, where the interaction of an arbitrary pair of particles is determined only by their mutual distance, as in the case of the Lennard-Jones system. Let us try to get an answer to this question using the Lennard-Jones system as an example.

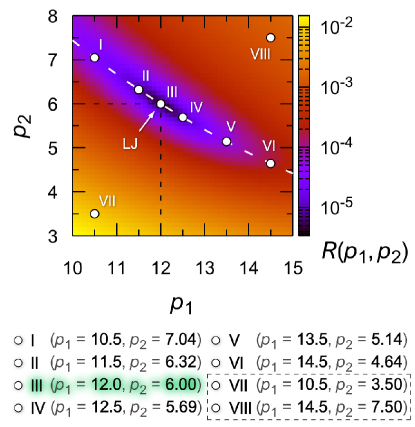

We start with the found residual , which quantitatively characterizes the degree of correspondence between the structure of the Mie system and the structure of the Lennard-Jones fluid. The best correspondence will be for such values of the parameters and for which the landscape has a minimum. As seen in Fig. 5, there is a depression (minimum) on the smooth landscape , which is not localized, but is represented by a narrow valley. It is quite natural that in this valley there is the point III corresponding to the Lennard-Jones system. The orientation of the valley in the phase space is such that, when moving along it, an increase in the parameter is compensated by a decrease in the parameter , and vice versa. As it turns out, such a correspondence between the parameters and results in a relatively conserved character of interparticle interaction, which is of the Lennard-Jones type. Let us choose six points (I – VI) along the valley and two points (VII and VIII) maximally remote from the valley as shown in Fig. 5 and consider further the structural, dynamic, and transport properties of the systems, which are determined by the values of the parameters of the Mie potential corresponding to these points.

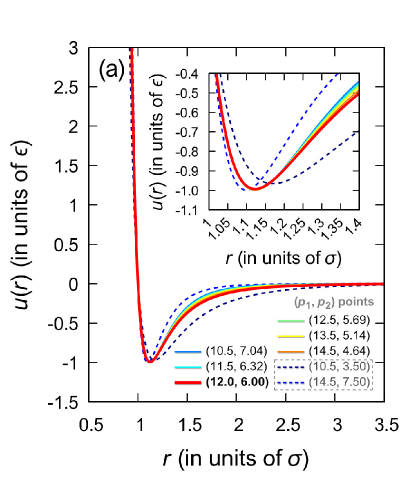

In Fig. 6(a), the Mie potential is shown for the eight combinations of the parameters and defined in Fig. 5. For points , marked with symbols I-VI, the general form of the Mie potential is practically the same, and only at the distances , correlated with the outer boundary of the first coordination sphere, very slight differences are found. Further, the minimum in the Mie potential will be located as in the Lennard-Jones potential at the distance , if the following equality is satisfied:

| (12) |

Solving this equation, we find the correspondence between the parameters of the Mie potential:

| (13) |

where is the Lambert function [60]. As can be seen from Fig. 5, Eq. (13) accurately reproduces the location of the valley bottom, where the function takes the minimum values and the best match between the Mie and Lennard-Jones potentials is found. Thus, at least for the range defined by and , the Mie potential with parameters and satisfying Eq. (13) reproduces the interparticle interactions almost identical to the Lennard-Jones ones.

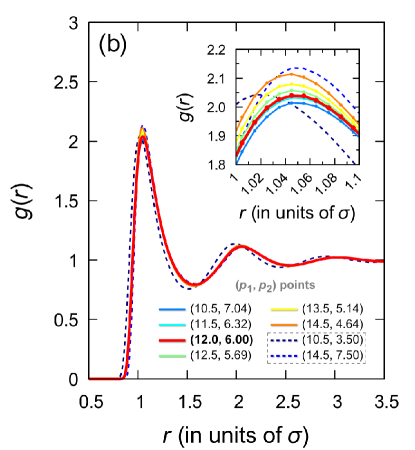

All structural features of the systems along the line containing points I-VI are practically identical. This is well confirmed by the radial distribution functions presented in Fig. 6(b). It is noteworthy that even when the repulsive and attractive contributions of the Mie potential mutually exceed the Lennard-Jones ones within certain limits, this can nevertheless lead to a radial distribution function typical of a Lennard-Jones fluid. This is seen, for example, for the case of point VIII with and shown in Fig. 6(b).

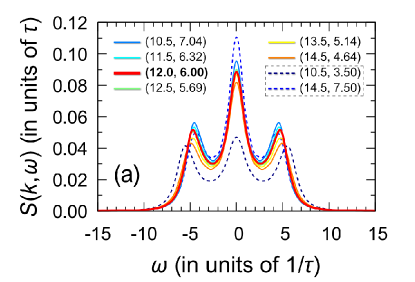

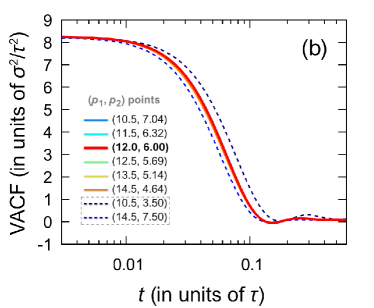

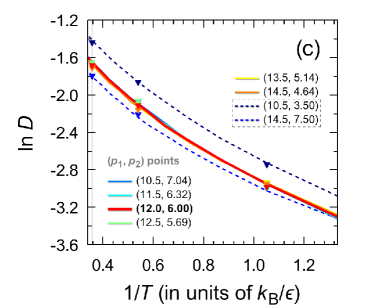

As known, the transport properties and collective dynamics of particles are extremely sensitive to all the nuances of interparticle interactions [61, 62, 63, 64, 65, 66, 67, 68]. The six Mie potentials corresponding to six points I-VI produce typical Lennard-Jones-like collective particle dynamics. This is confirmed by the spectra of the dynamic structure factor calculated by means of molecular dynamics simulations with the different Mie potentials. The widths of all peaks and the positions of high-frequency peaks in the spectra of the dynamic structure factor, shown in Fig. 7(a) for the case of the wavenumber as an example, are practically the same. Insignificant differences are observed only in the intensities of the central (Rayleigh) and high-frequency (Brillouin) components. The coincidence of the spectra of the dynamical structure factor indicates that the collective dynamics of these systems will be also identical to the Lennard-Jones one. The results of Fig. 7a directly indicate that all of these six Mie potentials should reproduce the sound propagation velocity, sound attenuation, and thermal conductivity typical of a Lennard-Jones fluid. The sound velocities for these Mie systems, estimated from molecular dynamics simulations, are as follows111The sound velocity was evaluated from the dispersion law , where is the location of the Brillouin peak of the dynamic structure factor at the generalized hydrodynamics low- regime.:

and

For the points I-VI, the same sound velocities, as well as the similar shapes of the high-frequency peak of the dynamic structure factor, indicate the same character of the propagation and attenuation of acoustic-like waves in the systems corresponding to the points I-VI. It is noteworthy that the velocity autocorrelation function, which characterizes the single-particle dynamics, also turns out to be the same for the potentials I-VI [see Fig. 7(b)]. Considering these results on the structure and particle dynamics, it can be expected that the Mie potentials (I-VI) are able even for a range of temperatures to reproduce the physical properties typical of the Lennard-Jones system. Indeed, as can be seen in Fig. 7(c), the self-diffusion coefficient calculated on the basis of the molecular dynamics simulations data along the isochore ( per particle) over a wide temperature range turns out to be the same for these systems. Thus, the Mie potentials I-VI lead to the single-particle dynamics and mass transport properties identical to those for the Lennard-Jones fluid.

4 Conclusion

The development of machine learning methods provides opportunities for solving physical problems that have been considered unsolvable for a long time. This class of problems includes the problem of restoring an interparticle interaction potential directly from empirical information about the physical properties of the system under study at the microscopic level. A necessary condition is that it should be possible to calculate analytically or numerically these physical properties on the basis of known interparticle interaction potential. For almost all (relevant) physical characteristics, this condition is satisfied. For simplest physical systems, the corresponding analytical equations are known from statistical mechanics, and these equations can only be solved in the direction “from potential to characteristic”, but not vice versa. In general, physical characteristics can be calculated using molecular dynamics simulations for a given interparticle interaction potential; and this is also possible only in one direction: “potential physical characteristic/propery”. Nevertheless, a very important point is what kind of information about the physical properties of the system under consideration will be optimal and sufficient to determine the potential. Would information about thermodynamic properties (e.g., equilibrium pressure, density) or about structure of the system, or about particle dynamics and transport properties of the system be suitable and sufficient?

The main result of this study is a differential evolution algorithm adapted in such a way as to restore the interparticle interaction potential from information about the static structure of a disordered many-particle system. The input quantity of the algorithm is the radial distribution function , which may initially be obtained from experiments on microscopy and diffraction. The relative simplicity of calculating the function by means of molecular dynamics or Monte-Carlo simulations with a taken potential makes this algorithm computationally efficient. However, if necessary, the algorithm can be modified for the case of potential recovery from the physical properties experimentally determined for some range of temperatures and/or pressures/densities (for example, from the self-diffusion coefficient measured by the nuclear magnetic resonance method). In this case, it would only be necessary to perform an appropriate generalization of the target function associated with a given measurable physical property.

In this study, the efficiency of the algorithm is tested on two problems. First, we considered a test optimization problem related to finding a global minimum of the Ackley function. It was found that the algorithm correctly finds this global minimum of the this function and thereby demonstrates its efficiency. Second, for the case of the Lennard-Jones system, the algorithm recovers the interparticle interaction potential solely from the known radial distribution function for some thermodynamic state. Thus, optimization by a structural characteristic turns out to be sufficient to recover the potential, and no corrections for other characteristics that take into account more subtle physical effects are required. There are no obvious restrictions on the applicability of the method to any specific systems. For monatomic or few-component systems, it can be applied directly.

The results of this study address the question of whether there is always a one-to-one correspondence between the potential, the structure and other physical properties of a system? For a system of soft particles interacting through attraction, we get the following answer. The overall effective interparticle interaction does indeed produce specific structural, dynamic, and transport properties. However, in turn, this resulting effective interparticle interaction may be a result of various combinations of attractive and repulsive contributions. This is well revealed in the example of the Lennard-Jones system considered in this study. Namely, the same structure, collective particle dynamics, and self-diffusion typical of a Lennard-Jones fluid can be generated by a whole family of interparticle interaction potentials realized under the same thermodynamic conditions. The exponents of the repulsive and attractive contributions in the potentials can take values from fairly wide intervals and these exponents turn out to be correlated.

CRediT authorship contribution statement

Anatolii V. Mokshin: Conceptualization, Methodology, Analysis & Interpretation, Writing. Roman A. Khabibullin: Software, Computational algorithms, Numerical simulations, Validation, Visualization.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

The authors are grateful to R.M. Khusnutdinoff and B.N. Galimzyanov for discussion of the results of molecular dynamics simulations and acknowledges the Foundation for the Advancement of Theoretical Physics and Mathematics “BASIS” for supporting the computational part of this work. This work was supported by the Russian Science Foundation (project No. --).

References

- [1] L. D. Landau, E. M. Lifshitz, Course of Theoretical Physics: Vol. 5, Statistical Physics, Part 1, Butterworth-Heinemann, Oxford, 1980.

- [2] D. J. Evans, G. P. Morris, Statistical Mechanics of Nonequilibrium Liquids, Academic Press, London, 1990.

- [3] K. Binder, W. Kob, Glassy Materials and Disordered Solids: An Introduction to Their Statistical Mechanics, World Scientific, Singapore, 2011.

- [4] J. G. Kirkwood, The Statistical Mechanical Theory of Transport Processes I. General Theory, J. Chem. Phys. 14 (1946) 180–201. https://doi.org/10.1063/1.1724117

- [5] J.-P. Hansen, I. R. McDonald, Theory of Simple Liquids, Academic Press, London, 2006.

- [6] I. I. Fairushin, S. A. Khrapak, A. V. Mokshin, Direct evaluation of the physical characteristics of Yukawa fluids based on a simple approximation for the radial distribution function, Results in Physics 19 (2020) 103359. https://doi.org/10.1016/j.rinp.2020.103359

- [7] A. V. Mokshin, I. I. Fairushin, I. M. Tkachenko, Self-consistent relaxation theory of collective ion dynamics in Yukawa one-component plasmas under intermediate screening regimes, Phys. Rev. E 105 (2022) 025204. https://doi.org/10.1103/PhysRevE.105.025204

- [8] C. N. Likos, Effective interactions in soft condensed matter physics, Phys. Rep. 348 (2001) 207. https://doi.org/10.1016/S0370-1573(00)00141-1

- [9] T. Mueller, A. Hernandez, C. Wang, Machine learning for interatomic potential models, J. Chem. Phys. 152 (2020) 050902. https://doi.org/10.1063/1.5126336

- [10] J. Behler, Perspective: Machine learning potentials for atomistic simulations, J. Chem. Phys. 145 (2016) 170901. https://doi.org/10.1063/1.4966192

- [11] P. Rowe, G. Csányi, D. Alfè, A. Michaelides, Development of a machine learning potential for graphene, Phys. Rev. B 97 (2018) 054303. https://doi.org/10.1103/PhysRevB.97.054303

- [12] V. L. Deringer, A. P. Bartòk, N. Bernstein, D. M. Wilkins, M. Ceriotti, G. Csányi, Gaussian Process Regression for Materials and Molecules, Chem. Rev. 121 (2021) 10073–10141. https://doi.org/10.1021/acs.chemrev.1c00022

- [13] F. Ercolessi, J. B. Adams, Interatomic Potentials from First-Principles Calculations: The Force-Matching Method, Europhys. Lett. 26 (1994) 583–588. https://doi.org/10.1209/0295-5075/26/8/005

- [14] T. B. Blank, S. D. Brown, A. W. Calhoun, D. J. Doren, Neural network models of potential energy surfaces, J. Chem. Phys. 103 (1995) 4129–4137. https://doi.org/10.1063/1.469597

- [15] R. E. Ryltsev, N. M. Chtchelkatchev, Deep machine learning potentials for multicomponent metallic melts: Development, predictability and compositional transferabilit, J. Mol. Liq. 349 (2022) 118181. https://doi.org/10.1016/j.molliq.2021.118181

- [16] C. Groetsch, Inverse Problems: Activities for Undergraduates, The Mathematical Association of America, 1999.

- [17] R. L. McGreevy, L. Pusztai, Reverse Monte Carlo Simulation: A New Technique for the Determination of Disordered Structures, Mol. Simulat. 1 (1988) 359–367. https://doi.org/10.1080/08927028808080958

- [18] A. P. Lyubartsev, A. Laaksonen, Calculation of effective interaction potentials from radial distribution functions: A reverse Monte Carlo approach, Phys. Rev. E 52 (1995) 3730–3737. https://doi.org/10.1103/PhysRevE.52.3730

- [19] T. Youngs, Dissolve: next generation software for the interrogation of total scattering data by empirical potential generation, Molecular Physics 117 (2019) 3464–3477. https://doi.org/10.1080/00268976.2019.1651918

- [20] D. Levesque, J. J. Weis, L. Reatto, Pair interaction from structural data for dense classical liquids, Phys. Rev. Lett. 54 (1985) 451–454. https://doi.org/10.1103/physrevlett.54.451

- [21] M.-C. Chang, C. H. Tung, S. Y. Chang, J. M. Carrillo, Y. Wang, B. G. Sumpter, G.-R. Huang, C. Do, W.-R. Chen, A machine learning inversion scheme for determining interaction from scattering, Commun. Phys. 5 (2022) 46. https://doi.org/10.1038/s42005-021-00778-y

- [22] A. K. Soper, Empirical potential Monte Carlo simulation of fluid structure, Chem. Phys. 202 (1996) 295–306. https://doi.org/10.1016/0301-0104(95)00357-6

- [23] H. Chan, M. J. Cherukara, B. Narayanan, T. D. Loeffler, C. Benmore, S. K. Gray, S. K. R. S. Sankaranarayanan, Machine learning coarse grained models for water, Nat. Commun. 10 (2019) 379. https://doi.org/10.1038/s41467-018-08222-6

- [24] S. Thaler, J. Zavadlav, Learning neural network potentials from experimental data via Differentiable Trajectory Reweighting, Nat. Commun. 12 (2021) 6884. https://doi.org/10.1038/s41467-021-27241-4

- [25] D. R. de Assis Elias, E. Granato, M. de Koning, Global exploration of phase behavior in frustrated Ising models using unsupervised learning techniques, Physica A 589 (2022) 126653. https://doi.org/10.1016/j.physa.2021.126653

- [26] Y. Jiang and M. T. Sulgani, R. Ranjbarzadeh, A. Karimipour, T. K. Nguyen, Hybrid GMDH-type neural network to predict fluid surface tension, shear stress, dynamic viscosity & sensitivity analysis based on empirical data of iron(II) oxide nanoparticles in light crude oil mixture, Physica A 526 (2019) 120948. https://doi.org/10.1016/j.physa.2019.04.184

- [27] C. O. Stoico, D. G. Renzi, F. Vericat, A genetic algorithm for the 1D electron gas, Physica A 387 (2008) 159–166. https://doi.org/10.1016/j.physa.2007.07.075

- [28] I. Grigorenko, M. E. Garcia, An evolutionary algorithm to calculate the ground state of a quantum system, Physica A 284 (2000) 131–139. https://doi.org/10.1016/S0378-4371(00)00218-1

- [29] W. Yu, P. Lyu, Unsupervised machine learning of phase transition in percolation, Physica A 559 (2020) 125065. https://doi.org/10.1016/j.physa.2020.125065

- [30] D. Yadav, P. Dansena, S. K. Ghosh, P. K. Singh, A unique multilayer perceptron model (ANN) for different oxide/EG nanofluid’s viscosity from the experimental study, Physica A 549 (2020) 124030. https://doi.org/10.1016/j.physa.2019.124030

- [31] F. Sofos, A. Charakopoulos, K. Papastamatiou, T. E. Karakasidis, A combined clustering/symbolic regression framework for fluid property prediction, Phys. Fluids 34 (2022) 062004. https://doi.org/10.1063/5.0096669

- [32] T. M. Alam, J. P. Allers, C. J. Leverant, J. A. Harvey, Symbolic regression development of empirical equations for diffusion in Lennard-Jones fluids, J. Chem. Phys. 157 (2022) 014503. https://doi.org/10.1063/5.0093658

- [33] K. Papastamatiou, F. Sofos, T. E. Karakasidis, Machine learning symbolic equations for diffusion with physics-based descriptions, AIP Adv. 12 (2022) 025004. https://doi.org/10.1063/5.0082147

- [34] R. Storn, K. Price, Differential Evolution — A Simple and Efficient Heuristic for global Optimization over Continuous Spaces, J. Global Optim. 11 (1997) 341–359. https://doi.org/10.1023/A:1008202821328

- [35] I. A. Kruglov, A. Yanilkin, A. R. Oganov, P. Korotaev, Phase diagram of uranium from ab initio calculations and machine learning, Phys. Rev. B 100 (2019) 174104. https://doi.org/10.1103/PhysRevB.100.174104

- [36] H. Wang, X.-L. Pan, Y.-F. Wang, X.-R. Chen, Y.-X. Wang, H.-Y. Geng, Lattice dynamics and elastic properties of -U at high-temperature and high-pressure by machine learning potential simulations, J. Nucl. Mater. 572 (2022) 154029. https://doi.org/10.1016/j.jnucmat.2022.154029

- [37] N. Van Nghia, H. K. Hieu, The melting curves of tin, uranium, cadmium, thallium and indium metals under pressure, Chem. Phys. 553 (2022) 111389. https://doi.org/10.1016/j.chemphys.2021.111389

- [38] B. Beeler, K. Mahbuba, Y. Wang, A. Jokisaari, Determination of Thermal Expansion, Defect Formation Energy, and Defect-Induced Strain of -U Via ab Initio Molecular Dynamics, Front. Mater. 8 (2021) 661387. https://doi.org/10.3389/fmats.2021.661387

- [39] K. Migdal, A. Yanilkin, Cold and hot uranium in DFT calculations: Investigation by the GTH pseudopotential, PAW, and APW + lo methods, Comput. Mater. Sci. 199 (2021) 110665. https://doi.org/10.1016/j.commatsci.2021.110665

- [40] A. J. Clark, J. McCarty, M. G. Guenza, Effective potentials for representing polymers in melts as chains of interacting soft particles, J. Chem. Phys. 139 (2013) 124906. https://doi.org/10.1063/1.4821818

- [41] J. Jin, A. J. Pak, A. E. P. Durumeric, T. D. Loose, G. A. Voth, Bottom-up Coarse-Graining: Principles and Perspectives, J. Chem. Theory Comput. XXX (2022) XXX. https://doi.org/10.1021/acs.jctc.2c00643

- [42] S. Y. Joshi, S. A. Deshmukh, A review of advancements in coarse-grained molecular dynamics simulations, 47 (2021) 786-803. https://doi.org/10.1080/08927022.2020.1828583

- [43] I. Z. Fisher, Statistical Theory of Liquids, University of Chicago Press, Chicago, IL, 1964.

- [44] J. P. Boon, S. Yip, Molecular Hydrodynamics, Dover Publications, New York, 1991.

- [45] J. A. Barker, D. Henderson, What is “liquid”? Understanding the states of matter, Rev. Mod. Phys. 48 (1976) 587–671. https://doi.org/10.1103/RevModPhys.48.587

- [46] M. P. Allen, D. J. Tildesley, Computer Simulation of Liquids, Oxford University Press, Oxford, 1991.

- [47] D. Frenkel and B. Smit, Understanding Molecular Simulation: From Algorithms to Applications, Academic Press, San Diego, 2002.

- [48] N. R. Draper and H. Smith, Applied Regression Analysis, John Wiley & Sons, 1998.

- [49] S. Plimpton, Fast Parallel Algorithms for Short-Range Molecular Dynamics, J. Comp. Phys. 117 (1995) 1–19. https://doi.org/10.1006/jcph.1995.1039

- [50] T. Bäck, Evolutionary Algorithms in Theory and Practice: Evolution Strategies, Evolutionary Programming, Genetic Algorithms, Oxford University Press, Oxfrod, 1996.

- [51] K. V. Price, R. M. Storn and J. A. Lampinen, Differential Evolution: A Practical Approach to Global Optimization, Springer, Berlin, 2005.

- [52] K. V. Price, Differential evolution: a fast and simple numerical optimizer, NAFIPS’96 (1996) 524–527. https://doi.org/10.1109/NAFIPS.1996.534790

- [53] D. H. Ackley, A connectionist machine for genetic hillclimbing, Kluwer Academic Publishers, Boston, 1987.

- [54] M. Centeno-Telleria, E. Zulueta, U. Fernandez-Gamiz, D. Teso-Fz-Betono, A. Teso-Fz-Betono, Differential Evolution Optimal Parameters Tuning with Artificial Neural Network, Mathematics 9 (2021) 427. https://doi.org/10.3390/math9040427

- [55] G. Mie, Zur kinetischen Theorie der einatomigen Körper, Ann. Phys. 11 (1903) 657–697. https://doi.org/10.1002/andp.19033160802

- [56] K. Trachenko, V. V. Brazhkin, Collective modes and thermodynamics of the liquid state, Rep. Prog. Phys. 79 (2016) 016502. https://doi.org/10.1088/0034-4885/79/1/016502

- [57] S. Stephan, M. Thol, J. Vrabec, H. Hasse, Thermophysical Properties of the Lennard-Jones Fluid: Database and Data Assessment, J. Chem. Inf. Model. 59 (2019) 4248–4265. https://doi.org/10.1021/acs.jcim.9b00620

- [58] A. J. Schultz, D. A. Kofke, Comprehensive high-precision high-accuracy equation of state and coexistence properties for classical Lennard-Jones crystals and low-temperature fluid phases, J. Chem. Phys. 149 (2018) 204508. https://doi.org/10.1063/1.5053714

- [59] S. Toxvaerd, J. C. Dyre, Communication: Shifted forces in molecular dynamics, J. Chem. Phys. 134 (2011) 081102. https://doi.org/10.1063/1.3558787

- [60] D. Veberič, Lambert W function for applications in physics, Comput. Phys. Commun. 183 (2012) 2622–2628. https://doi.org/10.1016/j.cpc.2012.07.008

- [61] P. Gallo, R. Pellarin, M. Rovere, Single particle dynamics of a confined Lennard–Jones mixture in the supercooled regime, Physica A 314 (2002) 530–538. https://doi.org/10.1016/S0378-4371(02)01046-4

- [62] Y. D. Fomin, V. V. Ryzhov, E. N. Tsiok, V. V. Brazhkin, Dynamical crossover line in supercritical water, Sci. Rep. 5 (2015) 14234. https://doi.org/10.1038/srep14234

- [63] A. V. Mokshin, Self-consistent approach to the description of relaxation processes in classical multiparticle systems, Theor. Math. Phys. 183 (2015) 449–477. https://doi.org/10.4213/tmf8778

- [64] A. V. Mokshin, B. N. Galimzyanov, A method for analyzing the non-stationary nucleation and overall transition kinetics: A case of water, J. Chem. Phys. 140 (2014) 024104. https://doi.org/10.1063/1.4851438

- [65] A. V. Mokshin, B. N. Galimzyanov, Self-consistent description of local density dynamics in simple liquids. The case of molten lithium, J. Phys.: Condens. Matter 30 (2018) 085102. https://doi.org/10.1088/1361-648X/aaa7bc

- [66] R. M. Khusnutdinoff, B. N. Galimzyanov, A. V. Mokshin, Dynamics of Liquid Lithium Atoms. Pseudopotential and EAM-Type Potentials, J. Exp. Theor. Phys. 126 (2018) 83–89. https://doi.org/10.1134/S1063776118010041

- [67] R. M. Khusnutdinoff, A. V. Mokshin, B. A. Klumov, R. E. Ryltsev, N. M. Chtchelkatchev, Structural features and the microscopic dynamics of the three-component Zr47Cu46Al7 system: Equilibrium melt, supercooled melt, and amorphous alloy, J. Exp. Theor. Phys. 123 (2016) 265–276. https://doi.org/10.1134/S1063776116060042

- [68] A. V. Mokshin, S. O. Zabegaev, R. M. Khusnutdinoff, Dynamic heterogeneity of a colloidal solution near the sol-gel transition, Phys. Solid State 53 (2011) 570–576. https://doi.org/10.1134/S106378341103019X