Joint Depth Estimation and Mixture of Rain Removal From a Single Image

Abstract

Rainy weather significantly deteriorates the visibility of scene objects, particularly when images are captured through outdoor camera lenses or windshields. Through careful observation of numerous rainy photos, we have found that the images are generally affected by various rainwater artifacts such as raindrops, rain streaks, and rainy haze, which impact the image quality from both near and far distances, resulting in a complex and intertwined process of image degradation. However, current deraining techniques are limited in their ability to address only one or two types of rainwater, which poses a challenge in removing the mixture of rain (MOR). In this study, we propose an effective image deraining paradigm for Mixture of rain REmoval, called DEMore-Net, which takes full account of the MOR effect. Going beyond the existing deraining wisdom, DEMore-Net is a joint learning paradigm that integrates depth estimation and MOR removal tasks to achieve superior rain removal. The depth information can offer additional meaningful guidance information based on distance, thus better helping DEMore-Net remove different types of rainwater. Moreover, this study explores normalization approaches in image deraining tasks and introduces a new Hybrid Normalization Block (HNB) to enhance the deraining performance of DEMore-Net. Extensive experiments conducted on synthetic datasets and real-world MOR photos fully validate the superiority of the proposed DEMore-Net. Code is available at https://github.com/yz-wang/DEMore-Net.

Index Terms:

DEMore-Net, Image deraining, Depth estimation, Mixture of rain, Joint learning, Hybrid normalization blockI Introduction

Rain, being one of the most ubiquitous weather phenomena, unavoidably induces conspicuous image quality degradation and hinders the performance of various outdoor vision systems [1, 2, 3]. The visibility of the captured scenes is significantly deteriorated by the presence of rain streaks in the atmosphere, as well as the detrimental effect of raindrops that fall onto the camera lens. Moreover, the accumulation of minuscule raindrops in the distant background also engenders a haze-like effect, further exacerbating the visual degradation. This intricate and tangled process of image deterioration, resulting from these three forms of rainwater, is a captivating phenomenon and we designate it as a Mixture of Rain (MOR).

Image deraining aims at restoring the sharp images from their rainy counterparts, which is a challenging ill-posed problem. Existing deraining techniques can roughly fall into two categories: rain streak removal and raindrop removal. Early rain streak removal wisdom commonly exploits hand-crafted priors with empirical observations, such as sparse coding [4], low-rank representation [5], and Gaussian Mixture Model (GMM) [6]. Notwithstanding the commendable outcomes achieved by the prior-based deraining algorithms, their capacity to model and remove rain is restricted. The advent of deep learning techniques in the field of image enhancement [7, 8] has led to a proliferation of learning-based approaches for rain streak and raindrop removal [9, 10, 11, 12, 13, 14]. Despite producing promising results on various datasets, most of these approaches only focus on addressing one type of rainwater, thereby being insufficient for effectively handling the MOR challenge.

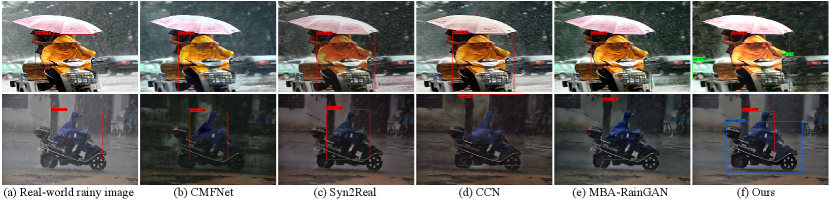

Recently, this issue has been picked up by several learning-based deraining studies [15, 16, 17, 18]. These algorithms argue that more than one type of rainwater is present in rainy images and attempt to remove them in one go. Hu et al. [15] first observed that rainy images are composed of rain streaks and rainy haze, which often co-occur during image capture. To address this issue, they develop DAF-Net to remove rain streaks and haze simultaneously. Afterward, several deraining paradigms have been proposed for removing both forms of rainwater artifacts with some success. However, since these efforts only consider two types of rainwater and ignore the MOR effect, they cannot well address the challenging MOR problems. To our knowledge, there is presently only one deraining model that considers the removal of all three forms of rainwater, namely MBA-RainGAN [18]. Despite achieving satisfactory results in some rainy scenarios, the deraining capacity of MBA-RainGAN is still limited since it ignores the fact that depth information is quite important, which can provide additional beneficial information to guide the network to remove different forms of rainwater. As illustrated in Fig. 1, compared with the state-of-the-art (SOTA) deraining algorithms, the proposed DEMore-Net with the guidance information of the scene depth produces a much clearer and perceptually more pleasing derained result.

This work goes beyond previous image deraining techniques by considering the importance of depth information and developing a unified deraining paradigm called DEMore-Net that integrates depth estimation and image deraining. DEMore-Net leverages a joint learning framework to simultaneously perform image deraining and depth estimation tasks and encourages them to collaborate and promote each other. The estimated depth map serves as a guide to removing different forms of rainwater in a targeted manner depending on the distance, while the image deraining module helps the depth estimation network learn better depth estimation. Additionally, a novel Hybrid Normalization Block (HNB) is proposed to advance the stability of the network training and enhance its learning and generalization capacities, thereby helping the model in tackling the intractable MOR problem. Moreover, to further advance the MOR removal capacity of the model, an efficient feature enhancement network (self-calibrated convolutions [22]) is introduced in the design of our DEMore-Net to generate more discriminative feature representations. Extensive experiments on synthetic MOR testbeds (RainCityscapes++ [18]) and real-world MOR photos demonstrate that our DEMore-Net outperforms the SOTA image deraining algorithms significantly.

Overall, the main contributions are summarized as follows:

-

•

A novel unified deraining paradigm is proposed for the removal of mixture of rain, called DEMore-Net, which combines depth estimation and image deraining tasks by a joint learning framework. DEMore-Net is trained in an end-to-end fashion to simultaneously learn about depth estimation and MOR removal, thus encouraging the two sub-tasks to benefit from each other.

-

•

We propose a novel Hybrid Normalization Block (HNB) to enhance the stability of the network during the training phase and facilitate its learning and generalization capacities for better recovery of MOR images.

-

•

We compare the proposed DEMore-Net with 14 state-of-the-art image deraining approaches through considerable experiments. The results are evaluated in terms of full- and no-referenced image quality assessments, visual quality, and human subjective surveys. As observed, the proposed DEMore-Net achieves SOTA performance on both synthetic MOR testbeds and real MOR photos.

The subsequent sections of this paper are structured as follows. In Section II, a brief review of the relevant literature is provided, which is divided into two categories: single-type rain removal and multi-type rain removal approaches. Section III presents a detailed overview of DEMore-Net, which is proposed for removing the mixture of rain. Section IV describes the implemented experiments and discusses the results, followed by conclusions in Section V.

II Related Work

In this section, we briefly categorize the discussion into two aspects: single-type rain removal and multi-type rain removal algorithms.

II-A Single-Type Rain Removal

Rain streak removal. Conventional rain streak removal efforts resort to exploiting hand-crafted priors based on image statistics to restore the rainy images [4, 5, 6, 23, 24]. Kang et al. [4] employ dictionary learning and sparse coding to decompose the rainy images into different components and then reconstruct the clean images. Chen et al. [5] develop a generalized low-rank appearance model to remove rain streaks from images. Similarly, Chang et al. [23] exploit a low-rank image decomposition model to restore the rain-free images from their rainy versions. Zhu et al. [24] propose a joint optimization framework with three image priors for rain streak removal from rainy images.

Recently, learning-based approaches have demonstrated their superiority for rain streak removal [9, 10, 25, 26, 27]. Yang et al. [9] develop a multi-task framework to detect and remove rain streaks jointly. Zhang et al. [10] create a density-aware image deraining network to simultaneously predict rain density and remove rain streaks. Li et al. [25] develop a recurrent squeeze-and-excitation contextual dilated network for single image deraining and achieve very promising results. Zhang et al. [26] exploit a conditional GAN-based model with additional regularization for rain streaks removal. Ahn et al. [27] develop a two-step rain removal method that first estimates the rain density and rain streak intensity and then remove them.

Raindrop removal. Since most rain streak removal algorithms cannot be directly applied to raindrop removal, many approaches have been proposed for raindrop detection and removal [28, 29, 12, 30, 13, 14]. Kurihata et al. [28] employ the well-known PCA to learn the shape of raindrops and attempt to match the rainy regions. Roser et al. [29] propose an algorithm for monocular raindrop detection in single images. Eigen et al. [31] develop a convolutional network to remove raindrops from a single image. Latter, You et al. [32] exploit Spatio-temporal information for video raindrop removal. More recently, Qian et al. [12] propose an attentive GAN-based network for single-image raindrop removal. Quan et al. [30] create a CNN-based network to remove raindrops by using shape-driven attention and channel re-calibration. Yan et al. [14] propose a two-stage video-based raindrop removal approach that first employs a single image module to produce the initial clean results and then refine them by using temporal constraints.

II-B Multi-Type Rain Removal

Very recently, several image deraining approaches [15, 16, 33, 17, 18] have noticed the presence of more than one type of rainwater artifact in rainy images and attempted to remove them in one go. Hu et al. [15] first establish a new dataset with rain streaks and rainy haze, and then develop an end-to-end model to remove them simultaneously. Guo et al. [16] propose an integrated multi-task framework to handle the joint raindrop and haze removal problem by combining the atmospheric scattering model and deep neural network. Zhang et al. [33] create a dual branch neural network for removing both rain streaks and raindrops. Quan et al. [17] propose an effective cascaded network for removing raindrops and rain streaks simultaneously via using the neural architecture search algorithm. Shen et al. [18] develop a multi-branch attention GAN-based framework to simultaneously remove rain streaks, raindrops, and rainy haze, which is the only study that considers the MOR effect among existing rain removal algorithms. However, these methods either ignore the MOR effect or do not exploit the depth information of the scene, which can be regarded as important prior knowledge to guide the network in removing different types of rainwater artifacts, thus limiting their rain removal capacity in these complex scenarios.

III DEMore-Net

As the scene depth can accurately portray the different forms of rainwater artifacts according to distance, which can be used as important prior knowledge to guide the network to remove these artifacts in a targeted manner. Unfortunately, prevailing image deraining methods tend to overlook this significant guidance information, thus limiting their deraining performance in various scenarios. To address this limitation, we propose a unified and efficient deraining paradigm that seamlessly integrates the depth estimation and image deraining tasks for MOR removal, termed DEMore-Net. In the following, we first present the imaging model of MOR images, which is degraded by three forms of rainwater. Next, the overview of DEMore-Net is elaborated to reveal how we address the entangled MOR removal problem. Subsequently, we describe the network architecture of the MOR removal and depth estimation networks, showcasing how they operate in our unified deraining paradigm. Finally, the proposed Hybrid Normalization Block (HNB) is introduced for better restoring the MOR images.

III-A MOR Imaging Model

Rain Streak Model. We adopt the widely used rain model [34] to define a rain streak degraded image as a superposition of the clean background and the accumulated rain streak layer , and the observed at pixel can be expressed as follows:

| (1) |

where

| (2) |

In the above equations, denotes the intensity of uniformly-distributed rain streaks in the image; refers to the pixel-wise multiplication; and denotes the intensity map of rain streak, which depends on the value of the scene depth and can be expressed as:

| (3) |

where denotes the attenuation coefficient, which controls the intensity of the rain streaks. Specifically, objects close to the camera lens are mainly affected by the raindrops and the rain streak intensity is smaller here. As the objects are far from the camera, rain streak intensity gradually increases to its maximum intensity and then falls to zero with the increase of . At this point, the image is mainly degraded by the rainy haze.

Raindrop Model. A raindrop degraded image can be modeled as a combination of the clean background as well as the impact of the raindrops , and the observed can be expressed as [12]:

| (4) |

where denotes whether the pixel is corrupted by raindrops or not, and refers to the raindrop layer.

Rainy Haze Model. Unlike rain streaks, the visual intensity of rainy haze increases exponentially with the scene depth , we adopt the standard atmosphere scattering model [35] to simulate the image degradation process caused by rainy haze, and the observed hazy image at pixel can be formulated as:

| (5) |

where

| (6) |

In the above equations, represents the global atmosphere light, denotes the atmospheric transmission, and denotes the atmosphere scattering parameter. Note that a larger suggests a thicker rainy haze and vice versa.

MOR Model. In rainy weather, especially under heavy rain conditions, raindrops, rain streaks, and rainy haze often appear simultaneously during outdoor image capture. Therefore, a MOR image can be formulated as follows:

| (7) |

where refers to the light conditions and color cast from active light sources. Unlike the fixed global atmosphere light , enables us to model the nighttime MOR images, which expands the application scenarios of the MOR imaging model. Note that rain streaks and rainy haze may also change the illumination conditions, which in turn affects the transparency of raindrops during image capture. That is, these three rainwater artifacts interact with each other to form the final MOR effect, which is a quite complex and entangled process.

III-B Overview of DEMore-Net

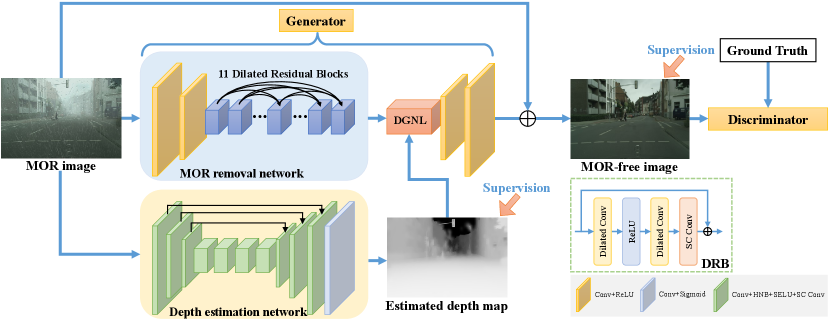

As observed in Eq. 7, scene depth plays a crucial role in the degradation process of MOR images. To fully exploit the informative guidance provided by the scene depth, we present a highly effective and unified deraining paradigm called DEMore-Net. As illustrated in Fig. 2, DEMore-Net consists of two principal modules: the MOR removal sub-network and the depth estimation sub-network. In this way, the joint learning framework enables the MOR removal and depth estimation tasks to collaborate and benefit from each other. DEMore-Net operates in an end-to-end fashion, taking a MOR photo as input and producing the corresponding MOR-free image and depth map as outputs. To produce clearer and more realistic MOR-free images, we introduce the adversarial training strategy in our MOR removal sub-network.

The pipeline of DEMore-Net can be described in detail as follows: First, the input MOR image undergoes preliminary image restoration and depth estimation tasks through both the MOR removal sub-network and the depth estimation sub-network. Then, the predicted depth map is utilized by the MOR removal sub-network to provide guidance for better restoring the MOR image. To achieve this, a depth-guided non-local (DGNL) [36] module is employed to fuse the depth information with the coarsely restored features, resulting in a refined MOR-free image. Finally, a discriminator is employed to evaluate the authenticity of the restored MOR-free image, determining whether it is a real clean image or a fake image generated by the MOR removal network. This allows for the generation of more realistic images.

III-C Network Architecture

Overall, DEMore-Net comprises three components: 1) a depth estimation sub-network that employs an encoder-decoder network with multiple feature enhancement modules to produce the depth map; 2) a MOR removal sub-network that produces the MOR-free image using the estimated depth map as additional guidance; and 3) a discriminator that utilizes adversarial training strategies to improve the quality of the restored MOR-free images.

Depth Estimation Network. Inspired by the success of encoder-decoder architecture and ResNet in image restoration field [12, 37, 38], we exploit an encoder-decoder network as our depth estimation network and introduce an up-to-date multi-scale feature extraction network (self-calibrated convolutions [22]) to further improve the prediction accuracy of depth maps. Self-calibrated convolution module is an improved convolutional network, which can construct long-range spatial and inter-channel dependencies for each spatial location in the feature space, thereby enriching the output features and helping CNNs generate more discriminative representations. Herein, we employ self-calibrated convolutions as our feature enhancement module to improve the depth estimation network’s performance.

As depicted in Fig. 2, the depth estimation network adopts 11 convolutional blocks to extract features from the input MOR image and then output the depth map in an end-to-end manner. For the first 10 convolution blocks, each block contains a convolutional operation, a residual block, a hybrid normalization, a Scaled Exponential Linear Unit (SELU), and a self-calibrated convolution module. Note that all the normalization methods used in this work are the proposed hybrid normalization block, which will be described in the next section. After extracting the image features, we adopt a convolutional operation, and a sigmoid activation function (i.e., convolutional block) to output the final depth map in a supervised manner. Here, we adopt the simple loss to train the depth estimation network, which can be expressed as:

| (8) |

where is the predicted depth map, is the ground truth depth map; and denote the width and height of the depth map, respectively.

MOR Removal Network. Considering that adversarial training strategies can encourage the generated images close to the clean images (i.e., ground truth) and make them more realistic, we built the MOR removal sub-network based on a standard generative adversarial network. As demonstrated in Fig. 2, the proposed generator consists of a convolution head to extract image features, a DenseNet-shape body to extract deeper features and restore the clean features simultaneously, and a 11 convolution tail to convert channel numbers and output the restored clean image features. The DenseNet-shape body is a stack of dilated residual blocks (DRB) with skip-connections, which contains two 33 dilated convolutions and a non-linear ReLU layer. Although applying dilated convolutions can enlarge the fields of view of the model without adding additional computation, it may easily cause the well-known gridding problem. To address this issue, we follow [36] to combine dilated convolutions with conventional convolutions and set the dilation rates of these 11 DRBs to . Finally, the depth-guided non-local (DGNL) module is employed to fuse the predicted clean image features with the depth map, thus helping the model to better remove different types of rainwater according to the distance.

The function of the discriminator is to determine whether an input image is a real MOR-free image or a fake image produced by the restoration network, thereby encouraging the generator to generate high-quality images. For adversarial training, we adopt 3 convolutional layers and a linear layer to construct our discriminator. Considering that the Least-Squares GAN (LSGAN) loss is more effective in improving the training stability than vanilla GAN loss, we employ the LSGAN to train our MOR removal network. The definition of adversarial loss can be formulated as:

| (9) |

| (10) |

where refers to the ground truth images and represents the restored MOR-free images.

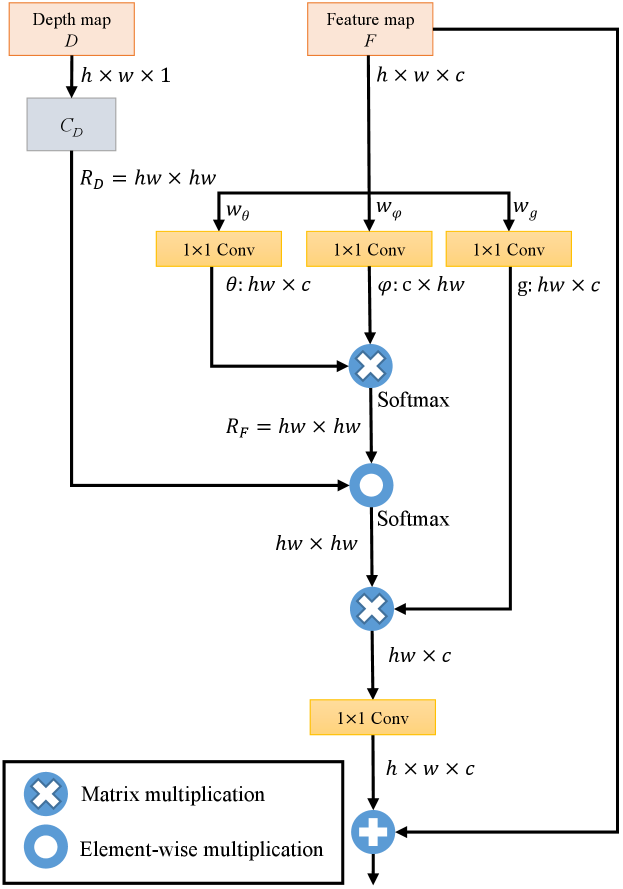

Depth-Guided Non-Local Module. The depth-guided non-local (DGNL) module [36] is an effective feature fusion network, which is employed to build the relationship between each pair of spatial locations based on the depth map and hence enriches the output features. In addition, as mentioned previously, both the type and visual intensity of rainwater artifacts in MOR images depend on scene depth, as does the process of MOR removal. Therefore, we adopt the DGNL module to fuse the depth information with the restored clean image features to help our DEMore-Net better recover the MOR-free images.

As depicted in Fig. 3, the DGNL module is extended based on the Non-local neural network [39] and aggregated the depth information as additional guidance to enrich the final output features. In light of this, we regard the depth information as a piece of prior knowledge and leverage the DGNL module to fuse the depth map with restored image features, thus helping DEMore-Net to remove different types of rainwater in a depth-guided manner. After fusing the depth and image features, we employ two convolutional operations to enlarge the feature map and output the final MOR-free images.

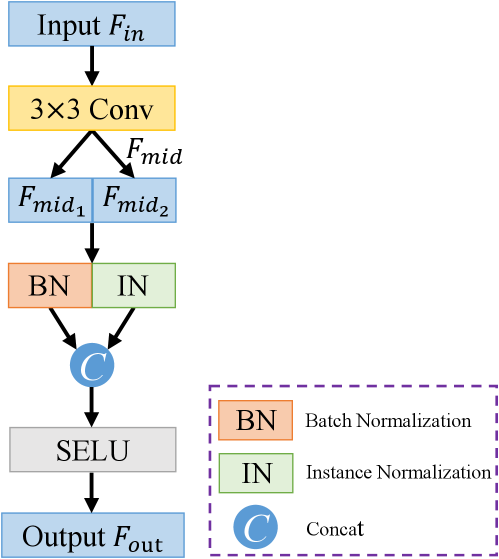

III-D Hybrid Normalization Block

Normalization has become a crucial component in various high-level vision tasks but is rarely used in low-level vision tasks, especially Batch Normalization (BN). In fact, the work [40] has demonstrated that Instance Normalization (IN) can learn features that are not affected by appearance changes, such as brightness, colors, and styles, whilst BN is important to preserve content-related features. Both BN and IN are essential as they can promote each other to benefit the vision tasks. Inspired by this, we carefully integrate BN and IN as building blocks and develop a Hybrid Normalization Block (HNB) to advance the network performance in MOR removal tasks. Specifically, HNB leverages both BN and IN in one normalization operation, thus improving its learning and generalization capacities. Also, the additional parameters and computational costs introduced by HNB can be ignored.

As depicted in Fig. 4, given a feature map , a 33 convolution is first adopted to produce the intermediate feature map . Then, HNB divides the input into two parts, namely, and (). The first part is normalized by BN with learnable parameters and the second part is normalized by IN, and then they are concatenated in the channel dimension. HNB employs BN on half of the channels and IN on the other half, so that content-related features can be preserved while learning features that are invariant to appearance changes. After concatenating the two intermediate features, the output feature map is obtained by passing features to a SELU layer.

III-E Loss Functions

To train the proposed DEMore-Net more effectively, we take into account all the positive factors that can improve the quality of the restored MOR-free images. Besides depth estimation loss and adversarial loss, we also adopt the reconstruction loss, Dark Channel (DC) loss [41] and Total Variation (TV) loss [42] to train our DEMore-Net. The total loss function can be expressed as:

| (11) |

where , are loss weights, and we set , , and in our experiments.

Reconstruction Loss. The reconstruction loss in this work is a combination of multi-scale structural similarity (MS-SSIM) loss [43] and loss, which is formulated as follows:

| (12) |

where

| (13) |

| (14) |

In the above equations, is the hyperparameter, and we empirically set in our experiments. is the total number of the scales and is set to 5 according to [43]; , and , represent the mean and standard deviations of and , refers to their covariance. and denote the relative importance of the two components, both are simply set to 1 in our experiments. Two small constants and are added to avoid the unstable case of division by zero.

Dark Channel Loss. In light of the effectiveness of the dark channel prior [44] in the image dehazing field, we apply the Dark Channel loss to help the DEMore-Net deal with the rainy haze in the MOR images, which is formulated as:

| (15) |

where represents the vector form of the dark channel of the restored MOR-free images . Since dark channel operation is a highly non-convex and non-linear term, it cannot be directly embedded into the learning networks. We adopt the look-up table scheme (following [41]) to implement the forward and backward steps of the dark channel operation.

Total Variation Loss. We adopt the total variation loss [42] to reduce the difference between adjacent pixel values to make the restored images more natural, which can be expressed as:

| (16) |

where and denote the horizontal and vertical differential operation matrices, respectively.

IV Experiments

In this section, comprehensive experiments are conducted on both synthetic RainCityscapes++ benchmark [18] and real-world rainy images to evaluate the MOR removal capacity of DEMore-Net and other algorithms. Moreover, we also perform an ablation study to evaluate the effectiveness of each component in DEMore-Net. The details are as follows.

IV-A Implementation Details

Dataset. To the best of our knowledge, the RainCityscapes++ dataset [18] stands as the sole synthetic MOR benchmark to encompass raindrops, rain streaks, and rainy haze in one consolidated dataset. It comprises a total of 8580 synthetic MOR images, out of which 7580 images serve for training and 1000 images for testing. Henceforth, we adopt the RainCityscapes++ dataset to train and evaluate the performance of our proposed DEMore-Net.

Training Details. DEMore-Net is implemented by PyTorch 1.7 on a system with an Intel Core i9-12900KF CPU, 32 GB RAM, and an NVIDIA GeForce RTX 3090 GPU. We adopt the Adam optimizer with a batch size of 2 to train our model, where the momentum parameters and are set to 0.9 and 0.999. The total number of iterations and the initial learning rate are empirically set to and , respectively. Considering that the original image size of the RainCityscapes++ dataset is too large ( pixels), we resize the images to pixels for both training and inference.

Evaluation Settings. To quantitatively assess the performance of DEMore-Net, we employ the average Peak Signal to Noise Ratio (PSNR) and Structural Similarity index (SSIM) as the evaluation metrics, which are the most widely used image objective evaluation indexes in the field of image restoration. The proposed DEMore-Net is compared with various SOTA image deraining approaches. These algorithms can be divided into two categories, namely, single-type rain removal methods and multi-type rain removal methods. For single-type rain removal methods, we compare with both raindrop, rain streak, and rainy haze removal approaches, including AttentiveGAN [12], CMFNet [19], MSPFN [45], Syn2Real [20], DGCN [46], MPRNet [37], LD-Net [47], and SGID [21]. For multi-type rain removal methods, we compare with DAF-Net [15], DGNL-Net [36], CCN [17], MBA-RainGAN [18], and the cascade of different types rain removal models (i.e., raindrop+rain streak+rainy haze removal).

| Method | Publication | Removal Type | PSNR | SSIM | |

| AttentiveGAN [12] | CVPR’18 | Raindrop | 24.03 | 0.842 | |

| CMFNet [19] | arXiv’22 | Raindrop | 28.59 | 0.891 | |

| MSPFN [45] | CVPR’20 | Rain Streak | 22.19 | 0.704 | |

| Syn2Real [20] | CVPR’20 | Rain Streak | 27.15 | 0.898 | |

| DGCN [46] | AAAI’21 | Rain Streak | 23.64 | 0.794 | |

| MPRNet [37] | CVPR’21 | Rain Streak | 22.87 | 0.762 | |

| LD-Net [47] | TIP’21 | Rainy Haze | 20.06 | 0.741 | |

| SGID [21] | TIP’22 | Rainy Haze | 21.87 | 0.761 | |

| DAF-Net [15] | CVPR’19 | RainS + RainH | 25.21 | 0.852 | |

| DGNL-Net [36] | TIP’21 | RainS + RainH | 26.89 | 0.864 | |

| CCN [17] | CVPR’21 | RainD + RainS | 27.51 | 0.901 | |

| MBA-RainGAN [18] | ICASSP’22 | RainD + RainS + RainH | 29.16 | 0.913 | |

| DGNL-Net + CMFNet | - | RainD + RainS + RainH | 24.89 | 0.866 | |

| CCN + SGID | - | RainD + RainS + RainH | 24.43 | 0.867 | |

| DEMore-Net | ours | RainD + RainS + RainH | 35.46 | 0.959 |

IV-B Comparison with State-of-the-arts

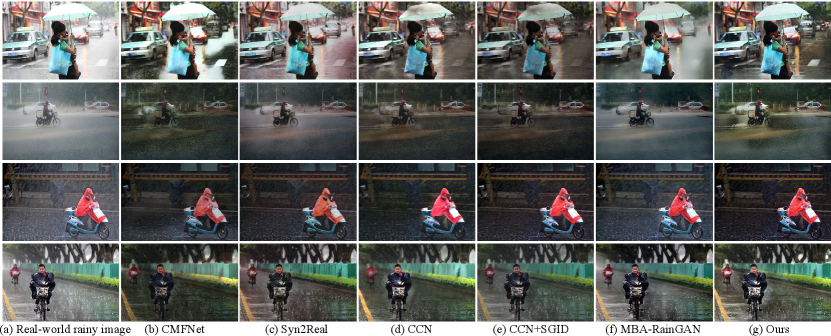

Comparison on Synthetic Dataset. For quantitative evaluation, we report the averaged PSNR and SSIM metrics of 14 state-of-the-art dehazing algorithms on the RainCityscapes++ test set in Table I. For a fair comparison, all compared methods are retrained on the RainCityscapes++ training set. As can be seen, since single-type rain removal algorithms can only remove one type of rainwater artifact, they cannot achieve satisfactory results. Surprisingly, cascading combinations of different rain removal methods (raindrop+rain streak+rainy haze) also usually yielded poor results. We argue this is because different restoration methods may introduce additional artifacts or lose details in the image processing process (see Fig. 5), and this process is constantly accumulating, degrading the quality of the final restored images. Compared with these SOTA approaches, the proposed DEMore-Net achieves the best performance with 35.46 PSNR and 0.959 SSIM.

Moreover, Fig. 5 exhibits a visual comparison of synthetic MOR images from the RainCityscapes++ dataset. As observed, Syn2Real cannot completely remove the rainy haze in the image, and some haze remains in the sky area. Although CMFNet and CCN succeed in removing the rain to some extent, they cannot preserve the details of the image well, making the image look a little blurry. CCN+SGID (cascading combination method) seems unable to cope with the intractable MOR problem by combining it with another dehazing algorithm. Compared with the original CCN approach, it introduces additional artifacts and tends to darken the restored images. The deraining results of MBA-RainGAN are pretty good but still cannot completely remove the rainy haze in sky regions. In contrast, our DEMore-Net can produce much clearer and more natural MOR-free images.

Comparison on Real-World MOR Images. To evaluate the effectiveness of DEMore-Net in real-world rainy scenarios, we compare our method with SOTA rain removal approaches on real-world MOR images. Fig. 6 displays 4 real-world rainy images and the deraining results by different algorithms. As observed, Syn2Real cannot produce satisfactory deraining results due to color distortion. For CMFNet and CCN, although they have largely overcome the issue of color distortion, they introduce additional artifacts into the image, making the deraining results look unreal and unnatural. The results of CCN+SGID look very dark, and there is still some remaining rain. MBA-RainGAN can remove most of the rain streaks and raindrops in the image, but still cannot effectively address the rainy haze in some regions. Compared with these SOTA methods, our DEMore-Net produces the most realistic MOR-free with perceptually pleasing and high quality.

To better understand the deraining capacity of DEMore-Net in real-world rainy scenarios, a user study is performed to quantitatively evaluate the deraining results. To be more specific, we first prepare 50 real-world rainy images from an existing real-world rainy dataset (DDN-SIRR [11]) or from the Internet. Then, we apply 5 representative SOTA deraining algorithms and our DEMore-Net to restore these 50 images. Next, 10 participants (5 males/5 females) are recruited and asked to score the deraining results on a scale from 1 (worst) to 5 (best). We exhibit to each participant these 300 derained images in a random order, without telling them the corresponding deraining algorithms. The experimental results are depicted in Table II, demonstrating that our DEMore-Net can cope well with real-world MOR removal tasks.

| Method | Rating (mean & standard dev.) |

|---|---|

| CMFNet [19] | 3.34 ± 0.45 |

| Syn2Real [20] | 3.16 ± 0.55 |

| CCN [17] | 3.13 ± 0.74 |

| CCN + SGID | 2.75 ± 0.82 |

| MBA-RainGAN [18] | 3.42 ± 0.57 |

| DEMore-Net (ours) | 3.74 ± 0.33 |

| Method | NIQE | SSEQ | BRISQUE | PI |

|---|---|---|---|---|

| Rainy image | 4.582 | 28.456 | 23.276 | 3.259 |

| Syn2Real [20] | 3.894 | 28.559 | 19.142 | 2.594 |

| CMFNet [19] | 4.495 | 27.322 | 21.934 | 3.144 |

| CCN [17] | 3.961 | 26.382 | 22.574 | 3.106 |

| CCN + SGID | 4.553 | 28.450 | 23.052 | 3.077 |

| MBA-RainGAN [18] | 4.409 | 19.626 | 17.938 | 2.939 |

| DEMore-Net | 3.714 | 19.480 | 18.875 | 2.555 |

For quantitative comparison, 4 well-known no-reference image quality assessment indexes are employed to evaluate the performance of real-world MOR removal, including NIQE, SSEQ, BRISQUE, and PI [48]. All these indexes are evaluated on the above-mentioned 50 real-world rainy images. As tabulated in Table III, our DEMore-Net achieves the best performance in terms of NIQE, SSEQ, and PI, showing that the restored MOR-free images produced by our approach are much clearer and more realistic. Furthermore, DEMore-Net also achieves an impressive performance in BRISQUE. In a nutshell, our DEMore-Net wins three of the four indexes, fully validating the superiority of DEMore-Net on real-world rain removal tasks.

IV-C Ablation Study

The proposed DEMore-Net exhibits superior MOR removal capacity compared to 14 SOTA deraining algorithms. To evaluate the effectiveness of DEMore-Net, an ablation study is performed to validate different components, including the DenseNet structure, self-calibrated convolutions, depth estimation branch, adversarial training strategy, and Hybrid Normalization Block (HNB).

We first adopt 11 dilated residual blocks (DRB) with 2 convolutional operations to construct the base model of the MOR removal network (without depth estimation branch) and then train this model via the aforementioned implementation details. The normalization approach used here is group normalization [49]. Next, different components are gradually added to our base model:

-

1.

base model + DenseNet structure ,

-

2.

+ self-calibrated convolutions ,

-

3.

+ depth estimation branch (including DGNL) ,

-

4.

+ adversarial training strategy ,

-

5.

+ Hybrid Normalization Block (full model).

All these models are retrained in the same training strategy as before and evaluated on the RainCityscapes++ dataset. The experimental results of these models are depicted in Table IV.

As exhibited in Table IV, each component in our DEMore-Net contributes to MOR removal, especially the well-designed depth estimation branch, which achieves a 4.96dB PSNR and 0.057 SSIM improvement over variant . Additionally, the proposed HNB has significantly advanced the performance of our network in terms of PSNR, and the use of adversarial training strategy and self-calibrated convolutions improve the model performance in SSIM. The adoption of the DenseNet structure has also improved the deraining performance of the model. As observed, the committee with these five components produced the best MOR removal performance, indicating that the five components can complement each other.

| Variants | Base | |||||

| DenseNet | w/o | ✓ | ✓ | ✓ | ✓ | ✓ |

| SC Conv | w/o | w/o | ✓ | ✓ | ✓ | ✓ |

| Depth Estimation Branch | w/o | w/o | w/o | ✓ | ✓ | ✓ |

| Adversarial Training | w/o | w/o | w/o | w/o | ✓ | ✓ |

| HNB | w/o | w/o | w/o | w/o | w/o | ✓ |

| PSNR | 24.38 | 25.60 | 26.86 | 31.82 | 33.43 | 35.46 |

| SSIM | 0.845 | 0.855 | 0.869 | 0.926 | 0.941 | 0.959 |

IV-D Application

Typically, the accuracy of object detectors drops significantly in rainy conditions due to obvious visibility degradation. To prove that our DEMore-Net can benefit vision-based applications, we adopt a pre-trained YOLOXs [50] detector to detect objects on real-world rainy images and the corresponding deraining results by different algorithms. As depicted in Fig. 7, after removing the rain, the confidence in recognizing objects is greatly improved. Clearly, DEMore-Net outperforms the other image deraining approaches, fully demonstrating the effectiveness of our approach in real-world deraining tasks.

IV-E Efficiency Analysis

Considering that efficiency is quite crucial for computer vision systems, we evaluate the computational performance of some typical deraining algorithms and report their average running times in Table V. All the methods are implemented on an NVIDIA GeForce RTX 3090 GPU. It can be observed that DEMore-Net takes on average about 0.07 to process a rainy image from the RainCityscapes++ dataset (720480 pixels). Our DEMore-Net has the second-fastest inference speed among the 13 representative deraining approaches.

| Method | Platform | Average time |

|---|---|---|

| AttentiveGAN [12] | PyTorch (GPU) | 0.25 |

| CMFNet [19] | PyTorch (GPU) | 0.22 |

| MSPFN [45] | TensorFlow (GPU) | 0.68 |

| Syn2Real [20] | PyTorch (GPU) | 0.37 |

| DGCN [46] | TensorFlow (GPU) | 0.28 |

| MPRNet [37] | PyTorch (GPU) | 0.23 |

| LD-Net [47] | PyTorch (GPU) | 0.35 |

| SGID [21] | PyTorch (GPU) | 0.52 |

| DAF-Net [15] | Caffe (GPU) | 0.05 |

| DGNL-Net [36] | PyTorch (GPU) | 0.09 |

| CCN [17] | PyTorch (GPU) | 0.36 |

| MBA-RainGAN [18] | PyTorch (GPU) | 0.43 |

| DEMore-Net (ours) | PyTorch (GPU) | 0.07 |

V Conclusion

This paper presents a highly efficient unified paradigm for MOR removal, named DEMore-Net. The proposed methodology leverages a joint learning framework to perform depth estimation and image deraining tasks simultaneously. By considering the depth map as an essential prior knowledge, the network is guided to remove various types of rainwater more effectively. Additionally, the deraining module’s output features are shared to improve the depth prediction in the other branch, resulting in a collaborative and mutually beneficial approach. To enhance the model’s stability and generalization abilities, a novel Hybrid Normalization Block (HNB) is developed in the network design. Moreover, to further improve the MOR removal capacity of DEMore-Net, the self-calibrated convolution network is introduced as a feature enhancement module to produce more discriminative feature representations. Both quantitative and qualitative evaluations demonstrate that DEMore-Net surpasses 14 contemporary SOTA image deraining algorithms.

References

- [1] S. Cobreces, E. J. Bueno, D. Pizarro, F. J. Rodriguez, and F. Huerta, “Grid impedance monitoring system for distributed power generation electronic interfaces,” IEEE Trans. Instrum. Meas., vol. 58, no. 9, pp. 3112–3121, Sep. 2009.

- [2] N. Buch, S. A. Velastin, and J. Orwell, “A review of computer vision techniques for the analysis of urban traffic,” IEEE Trans. Intell. Transp. Syst., vol. 12, no. 3, pp. 920–939, Sep. 2011.

- [3] S. S. M. Ali, B. George, L. Vanajakshi, and J. Venkatraman, “A multiple inductive loop vehicle detection system for heterogeneous and lane-less traffic,” IEEE Trans. Instrum. Meas., vol. 61, no. 5, pp. 1353–1360, May. 2012.

- [4] L.-W. Kang, C.-W. Lin, and Y.-H. Fu, “Automatic single-image-based rain streaks removal via image decomposition,” IEEE Trans. Image Process., vol. 21, no. 4, pp. 1742–1755, Apr. 2012.

- [5] Y.-L. Chen and C.-T. Hsu, “A generalized low-rank appearance model for spatio-temporally correlated rain streaks,” in Proc. IEEE Int. Conf. Comput. Vis. (ICCV), Dec. 2013, pp. 1968–1975.

- [6] Y. Li, R. T. Tan, X. Guo, J. Lu, and M. S. Brown, “Rain streak removal using layer priors,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2016, pp. 2736–2744.

- [7] N. Singh and A. K. Bhandari, “Principal component analysis-based low-light image enhancement using reflection model,” IEEE Trans. Instrum. Meas., vol. 70, pp. 1–10, Jul. 2021.

- [8] Z. Zhou, Z. Shi, and W. R. Ren, “Linear contrast enhancement network for low-illumination image enhancement,” IEEE Trans. Instrum. Meas., vol. 71, pp. 1–12, Dec. 2022.

- [9] W. Yang, R. T. Tan, J. Feng, J. Liu, Z. Guo, and S. Yan, “Deep joint rain detection and removal from a single image,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jul. 2017, pp. 1357–1366.

- [10] H. Zhang and V. M. Patel, “Density-aware single image de-raining using a multi-stream dense network,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2018, pp. 695–704.

- [11] W. Wei, D. Meng, Q. Zhao, Z. Xu, and Y. Wu, “Semi-supervised transfer learning for image rain removal,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2019, pp. 3877–3886.

- [12] R. Qian, R. T. Tan, W. Yang, J. Su, and J. Liu, “Attentive generative adversarial network for raindrop removal from a single image,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2018, pp. 2482–2491.

- [13] Q. Luo, K. Liu, J. Su, C. Yang, W. Gui, L. Liu, and O. Silvén, “Waterdrop removal from hot-rolled steel strip surfaces based on progressive recurrent generative adversarial networks,” IEEE Trans. Instrum. Meas., vol. 70, pp. 1–11, Jul. 2021.

- [14] W. Yan, L. Xu, W. Yang, and R. T. Tan, “Feature-aligned video raindrop removal with temporal constraints,” IEEE Trans. Image Process., vol. 31, pp. 3440–3448, May. 2022.

- [15] X. Hu, C.-W. Fu, L. Zhu, and P.-A. Heng, “Depth-attentional features for single-image rain removal,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2019, pp. 8022–8031.

- [16] Y. Guo, J. Chen, X. Ren, A. Wang, and W. Wang, “Joint raindrop and haze removal from a single image,” IEEE Trans. Image Process., vol. 29, pp. 9508–9519, Oct. 2020.

- [17] R. Quan, X. Yu, Y. Liang, and Y. Yang, “Removing raindrops and rain streaks in one go,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2021, pp. 9147–9156.

- [18] Y. Shen, Y. Feng, W. Wang, D. Liang, J. Qin, H. Xie, and M. Wei, “Mba-raingan: A multi-branch attention generative adversarial network for mixture of rain removal,” in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), May. 2022, pp. 3418–3422.

- [19] C.-M. Fan, T.-J. Liu, and K.-H. Liu, “Compound multi-branch feature fusion for real image restoration,” arXiv preprint arXiv:2206.02748, 2022.

- [20] R. Yasarla, V. A. Sindagi, and V. M. Patel, “Syn2real transfer learning for image deraining using gaussian processes,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2020, pp. 2726–2736.

- [21] H. Bai, J. Pan, X. Xiang, and J. Tang, “Self-guided image dehazing using progressive feature fusion,” IEEE Trans. Image Process., vol. 31, pp. 1217–1229, Jan. 2022.

- [22] J.-J. Liu, Q. Hou, M.-M. Cheng, C. Wang, and J. Feng, “Improving convolutional networks with self-calibrated convolutions,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2020, pp. 10 096–10 105.

- [23] Y. Chang, L. Yan, and S. Zhong, “Transformed low-rank model for line pattern noise removal,” in Proc. IEEE Int. Conf. Comput. Vis. (ICCV), Oct. 2017, pp. 1726–1734.

- [24] L. Zhu, C.-W. Fu, D. Lischinski, and P.-A. Heng, “Joint bi-layer optimization for single-image rain streak removal,” in Proc. IEEE Int. Conf. Comput. Vis. (ICCV), Oct. 2017, pp. 2526–2534.

- [25] X. Li, J. Wu, Z. Lin, H. Liu, and H. Zha, “Recurrent squeeze-and-excitation context aggregation net for single image deraining,” in Proc. Eur. Conf. Comput. Vis. (ECCV), Sep. 2018, pp. 254–269.

- [26] H. Zhang, V. Sindagi, and V. M. Patel, “Image de-raining using a conditional generative adversarial network,” IEEE Trans. Circuits Syst. Video Technol., vol. 30, no. 11, pp. 3943–3956, Nov. 2019.

- [27] N. Ahn, S. Y. Jo, and S. Kang, “Eagnet: Elementwise attentive gating network-based single image de-raining with rain simplification,” IEEE Trans. Circuits Syst. Video Technol., vol. 32, no. 2, pp. 608–620, Mar. 2022.

- [28] H. Kurihata, T. Takahashi, I. Ide, Y. Mekada, H. Murase, Y. Tamatsu, and T. Miyahara, “Rainy weather recognition from in-vehicle camera images for driver assistance,” in IEEE Proceedings of Intelligent Vehicles Symposium, Jan. 2005, pp. 205–210.

- [29] M. Roser and A. Geiger, “Video-based raindrop detection for improved image registration,” in Proc. IEEE Int. Conf. Comput. Vis. Workshops (ICCVW), Sep. 2009, pp. 570–577.

- [30] Y. Quan, S. Deng, Y. Chen, and H. Ji, “Deep learning for seeing through window with raindrops,” in Proc. IEEE Int. Conf. Comput. Vis. (ICCV), Oct. 2019, pp. 2463–2471.

- [31] D. Eigen, D. Krishnan, and R. Fergus, “Restoring an image taken through a window covered with dirt or rain,” in Proc. IEEE Int. Conf. Comput. Vis. (ICCV), Dec. 2013, pp. 633–640.

- [32] S. You, R. T. Tan, R. Kawakami, Y. Mukaigawa, and K. Ikeuchi, “Adherent raindrop modeling, detection and removal in video,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 38, no. 9, pp. 1721–1733, Sep. 2016.

- [33] K. Zhang, D. Li, W. Luo, and W. Ren, “Dual attention-in-attention model for joint rain streak and raindrop removal,” IEEE Trans. Image Process., vol. 30, pp. 7608–7619, Sep. 2021.

- [34] Y. Luo, Y. Xu, and H. Ji, “Removing rain from a single image via discriminative sparse coding,” in Proc. IEEE Int. Conf. Comput. Vis. (ICCV), Dec. 2015, pp. 3397–3405.

- [35] S. G. Narasimhan and S. K. Nayar, “Chromatic framework for vision in bad weather,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2000, pp. 598–605.

- [36] X. Hu, L. Zhu, T. Wang, C.-W. Fu, and P.-A. Heng, “Single-image real-time rain removal based on depth-guided non-local features,” IEEE Trans. Image Process., vol. 30, pp. 1759–1770, Jan. 2021.

- [37] S. W. Zamir, A. Arora, S. Khan, M. Hayat, F. S. Khan, M.-H. Yang, and L. Shao, “Multi-stage progressive image restoration,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2021, pp. 14 821–14 831.

- [38] Y. Wang, X. Yan, D. Guan, M. Wei, Y. Chen, X.-P. Zhang, and J. Li, “Cycle-snspgan: Towards real-world image dehazing via cycle spectral normalized soft likelihood estimation patch gan,” IEEE Trans. Intell. Transp. Syst., May. 2022.

- [39] X. Wang, R. Girshick, A. Gupta, and K. He, “Non-local neural networks,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2018, pp. 7794–7803.

- [40] X. Pan, P. Luo, J. Shi, and X. Tang, “Two at once: Enhancing learning and generalization capacities via ibn-net,” in Proc. Eur. Conf. Comput. Vis. (ECCV), Sep. 2018, pp. 464–479.

- [41] L. Li, Y. Dong, W. Ren, J. Pan, C. Gao, N. Sang, and M.-H. Yang, “Semi-supervised image dehazing,” IEEE Trans. Image Process., vol. 29, pp. 2766–2779, Nov. 2019.

- [42] H. A. Aly and E. Dubois, “Image up-sampling using total-variation regularization with a new observation model,” IEEE Trans. Image Process., vol. 14, no. 10, pp. 1647–1659, Oct. 2005.

- [43] Z. Wang, E. P. Simoncelli, and A. C. Bovik, “Multiscale structural similarity for image quality assessment,” in Proc. 37th Asilomar Conf. Signals, Syst. Comput., vol. 2, Nov. 2003, pp. 1398–1402.

- [44] K. He, J. Sun, and X. Tang, “Single image haze removal using dark channel prior,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 33, no. 12, pp. 2341–2353, Dec. 2011.

- [45] K. Jiang, Z. Wang, P. Yi, C. Chen, B. Huang, Y. Luo, J. Ma, and J. Jiang, “Multi-scale progressive fusion network for single image deraining,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Jun. 2020, pp. 8346–8355.

- [46] X. Fu, Q. Qi, Z.-J. Zha, Y. Zhu, and X. Ding, “Rain streak removal via dual graph convolutional network,” in Proc. Conf. Artif. Intell. (AAAI), vol. 35, no. 2, Feb. 2021, pp. 1352–1360.

- [47] H. Ullah, K. Muhammad, M. Irfan, S. Anwar, M. Sajjad, A. S. Imran, and V. H. C. de Albuquerque, “Light-dehazenet: a novel lightweight cnn architecture for single image dehazing,” IEEE Trans. Image Process., vol. 30, pp. 8968–8982, Oct. 2021.

- [48] Y. Blau, R. Mechrez, R. Timofte, T. Michaeli, and L. Zelnik-Manor, “The 2018 PIRM challenge on perceptual image super-resolution,” in Proc. Eur. Conf. Comput. Vis. Workshops (ECCVW), vol. 11133, Sep. 2018, pp. 334–355.

- [49] Y. Wu and K. He, “Group normalization,” in Proc. Eur. Conf. Comput. Vis. (ECCV), Sep. 2018, pp. 3–19.

- [50] Z. Ge, S. Liu, F. Wang, Z. Li, and J. Sun, “Yolox: Exceeding yolo series in 2021,” arXiv preprint arXiv:2107.08430, 2021.