Jut: A Framework for Just-in-Time Data Access

Abstract

With the proliferation of sensor and personal devices, our physical spaces are now awash in potential data sources. In principle this data could serve a wide range of applications and services. However, leveraging these data sources is challenging with today’s systems because they are not typically designed to consume data opportunistically, from a new device that happens to arrive in the vicinity.

In this paper, we present the design and implementation of Jut, a system designed for “Just-in-Time” data access — in which an application is able to discover and consume data from any available source, even ones not known at development or installation time. Jut combines two novel design choices: modularizing data processing systems to better reflect the physical world, and a new form of application-data integration that equips data processing pipelines with the information they need to process new and evolving data formats and schemas. We show that these choices greatly simplify the development and use of smart-space and IoT applications. For a representative set of devices and application scenarios, we show that Jut can implement use-cases not easily supported today, or can do so with 3.2-14.8 less development effort and 3-12 lower query complexity than current systems.

1 Introduction

From phones to health trackers, smart appliances, and self-driving cars, we are witnessing an explosive growth in connected devices. These devices stand to transform the world of data since every connected device is now a potential data source. And while early Big Data was driven by the growth in online content (e.g., web pages, video), today, data is increasingly generated by devices in the physical world.

A growing number of applications in domains ranging from retail and heath, to smart spaces and environmental monitoring would like to leverage these data sources. An important class of such applications — and our primary focus in this paper — relates to smart spaces. These apps must accurately capture our physical spaces and hence leveraging data from users and devices that inhabit the space is invaluable. E.g., consider a smart campus app that today relies on data from sensors deployed by the building owners to optimize their HVAC systems for current occupancy and air quality. In principle, this app’s dataset could be augmented by data collected from the devices carried by the space’s occupants. I.e., a user entering a building may contribute sensor readings from her phone to the building’s app. This data could improve the app’s accuracy (offering data from a different vantage point) and cost (requiring fewer sensors).

For the most part, today’s apps are limited to data from sources that are predefined and manually onboarded rather than (say) collected from a user that happened to walk in the door. Our goal is to enable apps to consume data in a manner that is more opportunistic than organized: apps should dynamically discover potential sources, ingest their data, and incorporate this data into the data-driven insights they expose. We call this “Just-in-Time” data access (JIT-DA), reflecting the fact that we want to leverage data sources discovered at runtime vs. development or installation time.

Can we achieve JIT-DA with today’s systems? The current landscape of smart-space and IoT app development is, unfortunately, rather messy. These apps are often closed and vertical silos, with a single vendor that develops an app to manage their own devices [4, 30, 25] - as such, they don’t address the problem of JIT data sources. An alternative is to use one of the open frameworks for IoT app development - e.g., AWS IoT [13] and Home Assistant [26] in the commercial arena or research prototypes such as BOSS [56] and dSpace [58]. These are application frameworks - they provide services [13, 44] or code [38, 11, 32] that aid in onboarding devices and expressing the application logic for automating these devices: e.g., defining IFTTT rules [27] for automation (e.g., if <condition> then dim lights), defining identifier and tag namespaces (e.g., to represent rooms, buildings), associating tags with devices (e.g., tagging a lamp with the id of the room it is in), grouping devices (e.g., lamps in room Foo), and so forth. The focus of these app frameworks is on device automation and actuation logic and, as such, their support for data storage and analytics is thin. The typical assumption is that data streams from devices will be loaded into a standalone database/datalake. Leveraging insights from this data is typically out of scope for these app frameworks and hence it is left to the app developer to bring in a separate data processing system111By a data processing system, we mean the various components to do with data ingestion (parsing, cleaning, normalizing input data), storage (e.g., row- or column-based), organization (e.g., schema registries, metadata tables, indexes, repositories), query engines, and so forth. and integrate it with their app, a process that developers typically address in a bespoke manner. Relevant to our focus: in both app and data processing frameworks today, the operator typically onboards new devices or data sources manually and, as such, they are not designed for JIT data.

The Jut framework we present in this paper aims to enable the use of JIT data sources in smartspace/IoT apps. As we’ll discuss, the manner in which Jut achieves this has the fortuitous benefit of simplifying the above app development process, even for regular (i.e., non-JIT) data sources. In a nutshell, Jut achieves this by: (i) providing a richer and more systematic approach to integrating app and data processing layers and, (ii) making it easier for app developers to efficiently identify and query data that is relevant to what we term an application-level context, such as a room or building. In the remainder of this section, we elaborate on both the gaps in existing systems when it comes to JIT data sources and how Jut addresses these gaps.

1.1 Challenges in realizing JIT-DA

In this section, we argue that enabling JIT-DA must start by examining the data processing architecture that typically underlies data-driven apps. Realizing JIT-DA with existing data processing systems is hard for three key reasons.

(1) Unplanned data sources.

JIT data sources are often unplanned in the sense that they are not known at app development or installation time. As a result, the app developer is unaware of both what form the input data will take in terms of data formats and schemas, and when, if at all, the data source will appear. Instead, as users in (for example) our smart campus enter the building, their data streams must be integrated with the building’s ingestion pipeline, appear in the query results, etc.

As a result, the data processing pipeline must be prepared to handle data that is heterogeneous (spanning different representations and semantics) and must be dynamic (evolving at runtime in an automated manner to handle new sources that appear/disappear). As we discuss in §2, recent efforts in the database community address the former but not the latter [65, 60, 66, 69]. Instead, in today’s systems, the data processing pipeline is typically static and evolution requires manual intervention. Our work addresses how we can construct pipelines that adapt dynamically and automatically to app-level events, while incorporating recent techniques for heterogeneous data models within these pipelines.

(2) Decentralized ownership and policies.

In the scenarios we consider, an app’s data sources might be owned and operated independently from the app provider. This is true not just of end users and their devices but also across smart-space operators - e.g., even within a single campus, the operator of a CS building might have very different operational requirements from that of the life sciences building. Thus in such app environments, supporting the policies and autonomy of the various stakeholders becomes key. A user might share data with one building app but not another; one building operator might have restrictions on whether their data can be stored in the cloud while another may not; etc. Today, an app’s input streams are typically dumped into a monolithic database or data lake, making it difficult to impose fine-grained policies - e.g., per building, department, or user.

(3) Data sources are application unaware.

The apps we consider typically have abstractions that reflect their application context – e.g., room, building – and the entire purpose of these apps is to support operations over these abstractions: querying "the room", configuring "the building", and so forth. However, because JIT-DA sources are independent of a space or app, these abstractions are typically not represented in their data schemas: e.g., the data schema that a phone vendor uses to represent the temperature readings on the phone are unlikely to include a field that represents the building the phone is currently in. This omission complicates querying "the room" because a necessary first step in querying a context is knowing which data sources are associated with that context: e.g., a query for the average room temperature must first know which phones were present in the room and when, so that it only uses data from the appropriate phones at the appropriate times.

Unfortunately, as mentioned above, the association between an app and a JIT data source is potentially complex: opportunistic, dynamically changing, and subject to distributed control. And yet, while this association lasts, we want to incorporate the source’s data into the application logic. This implies that someone must track the association between the app and its data sources. Today’s app frameworks offer no systematic support for tracking these associations nor for translating from operations on high-level app abstractions to a heterogeneous and evolving set of per-source data streams. Instead app developers must do so with bespoke and ad-hoc techniques: e.g., defining metadata stores that track when and which sources were in the room, rewriting queries, dynamically augmenting the input data stream. As we illustrate in §5, this adds significant complexity to the development of smart-space apps.

1.2 Jut: An architecture for JIT-DA

In this paper we present Jut, a new framework for building JIT-DA apps that addresses the above challenges. Our approach starts with the observation that the abstractions (e.g., devices, rooms, buildings) and information (e.g., when a device is present in a room) needed to address some of our challenges can be found in the application layer. The problem is that these abstractions/information are absent or not well represented in the underlying data processing systems. Moreover, there is no way to systematically and automatically reflect this app-level information in the underlying data processing layer; e.g., when the app detects a new device (described below), this should automatically trigger the app’s data processing pipeline to consider ingesting data from the device’s APIs.

Our framework thus adapts existing data architectures as follows. First, we modularize the data processing layer to reflect the natural modularity found in smart-space apps. Thus, instead of one monolithic data processing pipeline, in Jut, every entity – whether a device data source, a room, or a building – has an independent data processing pipeline that implements an ingest dataflow (i.e., processing input data relevant to that entity), storage, and an egress dataflow (i.e., for exporting data from its store). We refer to an entity modeled in this way as a context.

Next, we extend the data processing architecture to expose a new join(C1, C2) interface (and corresponding leave()) via which an application can inform its underlying data processing system that a context C1 is available to serve as a data source for a context C2. The implementation of join connects C1’s egress pipeline to C2’s ingestion pipeline. Importantly, join does so in a manner that enforces their respective policies (e.g., that is from a trusted vendor) and reconciles any mismatch in their heterogeneous data representations (e.g., converting from ’s data format to that required by ’s storage system). Thus a key contribution in Jut is a novel approach that, at runtime, compiles high-level policies into low-level functions for processing newly discovered data source. In effect, join allows applications to "plumb" contexts, to reflect the (dynamically evolving) relationship between JIT data sources and their physical space or context.

Taken together, the above changes address the challenges from §1.1. The modularity of contexts means we can develop and operate context code independently, supporting decentralized ownership and control. In addition, this modularity allows us to efficiently implement “by context” queries, since a context’s data is curated in its datastore (vs. requiring additional steps to first identify data relevant to a context). Finally, the join interface enables data processing pipelines that adapt to reflect the complex interactions between data sources and consumers in the physical world.

Jut thus provides general yet powerful abstractions that simplify building (smart-space) apps. Specifically, an app developer interacts with the Jut framework to create contexts, compose, and query them. Because contexts are an explicit yet modular and composable abstraction, developers can: (i) treat contexts themselves as data sources that can be queried, logged, configured, etc., (ii) can compose contexts into higher level ones (e.g., rooms as data sources in a building context); (iii) reuse contexts to accelerate development, and so forth.

Jut’s changes do not impact the internal techniques of current data processing systems: e.g., their storage formats, query planners, optimizers. Instead, the novelty that Jut brings is a shift in the system architecture of data processing systems: introducing a new modularity (based on contexts) and a new, more systematic and structured, integration between data and app layers (based on joining contexts).

In this paper, we present the design and implementation of the Jut framework that implements the above design approach. We evaluate Jut by using it to implement a range of smart-space scenarios using real physical devices and spaces. Our results show that Jut can implement use-cases that are not easily supported today, or can do so with 3.2-14.8 less development effort and 3-12 lower query complexity than current commercial [13] and research [58] systems.

2 Goals and Assumptions

Our goal is to make it easier for app developers to leverage the wealth of data sources available to them. We focus on smart-space apps as they are an important emerging class of applications,222Reports project the smart space market will cross $100B by 2030, fueled by the deployment of mobile and IoT devices and the adoption of AI techniques to consume/control these devices. but also because the number of connected devices in such spaces has grown tremendously and yet today’s apps leverage data from only a small fraction of them.

Operator and user incentives.

Our work is predicated on two assumptions regarding incentives. The first is that providers of smart-space apps are interested in consuming data from 3rd-party data sources; i.e., in JIT-DA. We believe this assumption is reasonable for a few different reasons. First, the additional data can improve the quality of the insights these apps provide. For example, by using data from tenants’ BYOD devices [1] such as smartphones and laptops, a smart building app such as Comfy [28] can estimate building occupancy, monitor ambient noise levels, and assess network quality in real-time across all occupied areas. Second, leveraging third-party data sources means that operators can deploy fewer data sensors (cameras, air quality monitors, motion sensors, and so on) reducing both their capital expenditure and the operational costs of maintaining the same. For example, tracking the number of people throughout a commercial building (typically with 100s of rooms) can be cost-prohibitive when using fixed, dedicated sensors like the Density radar [15]. Each radar, designed to cover around 1,325 sq. ft. for a room or space, has an upfront installation fee of $895, accompanied by an annual maintenance and data access charge of $795 [2]. The total annual operational costs can easily exceed $100,000, not to mention for campuses or complexes spanning 1,000s of acres with many buildings. In contrast, leveraging data from tenants’ devices can augment these dedicated sensors or even replace them in areas, achieving similar objectives at a fraction of the cost. Finally, such data can enable new differentiating features that are otherwise not possible. For example, by leveraging data about tenants’ exercise habits (e.g., Fitbit steps taken [22]), a building app can suggest appropriate walking routes and highlight available amenities within the building.

User incentives.

Likewise, we assume that users/sources may opt to share data with some smart-space app providers. We envision multiple possibilities why they might do so. One is because it leads to reciprocal benefits - i.e., sharing my data improves my app experience. For example, the smart building app could tag building areas based on tenant activity data, while recommending them quiet rooms for deep work. Another is that the user might have no option but to do so given the terms of their app/device. This is often the case, for example, with corporate apps and devices where employees may be required to use specific apps that track usage, location, or other data as part of the organization’s IT security and compliance measures. Yet another possibility is that the user benefits financially from sharing their data. For example, employees might be offered discounts on building amenities, in exchange for sharing certain data. Finally, in some situations, users might volunteer their data for purely altruistic motives. Consider a scenario where building occupants voluntarily share data to monitor environmental conditions, aiming for a communal goal of reducing the building’s carbon footprint or promoting sustainability.

Service discovery.

Our work also makes two assumptions on the technical front. The first is that service discovery - i.e., discovering apps and their APIs - is largely a solved problem. Consider our canonical scenario in which a user, running an app A1 on her device, walks into a building that is associated with a smart-space app A2. Our ultimate goal is to incorporate A1’s data stream into A2’s analytics pipeline and/or vice versa. But first A1 and A2 must discover each other based on location and exchange information about their APIs, data schemas, and so forth. This discovery can be bootstrapped through one of many well-known techniques: (i) the user scans a QR code in the building [24], (ii) a captive portal on attaching to the building’s WiFi AP [35], (iii) a well-known naming scheme (e.g., building.cs.univ.edu), and so forth. Once this initial discovery is complete, A1 and A2 can exchange higher-level information (e.g., API descriptions, credentials, schemas) in a straightforward manner via techniques such as: (i) a cloud-based directory service(s) that A1 and A2 register with, (ii) a peer-to-peer protocol in which A1 and A2 directly exchange relevant information, and so forth. API discovery of this form is common even today in systems such as GCP service directory [23], RapidAPI [39], BLE neighbor discovery [62], and AirDrop [5].

Heterogeneous data.

Our second technical assumption is that data analytics over heterogeneous data is, or will soon be, a solved problem. Processing heterogeneous data, that spans different schemas and formats, has been a long-standing challenge in databases [68, 57]. Heterogeneity raises two high-level problems. The first is that querying across heterogeneous data must reconcile the syntactic and semantic gaps across different entries in the dataset [69]. The former occurs when different formats are used to represent identical information (e.g., string vs. int types; or parquet vs. json) while the latter refers to fields that define related but not identical information. In some cases, we can reconcile a semantic gap (e.g., converting a field in celsius to fahrenheit) while others prove harder (e.g., a sensor that defines its temperature field as the average temperature vs. one that defines it as the max). Reconciling such gaps is a fundamentally difficult problem. Yet both research and deployed systems have adopted a range of techniques that mitigate (if not “solve”) the issue: formal or de-facto naming standards [61], manually coded or auto-generated format converters [57], AI-driven translation functions [71], ontologies and schema registries [41, 52], etc.. As we discuss in §3.2, our work assumes such techniques exist and shows how to use them in a JIT-DA environment.

3 Design

In this section, we first present the design of our Jut framework (§3.1 and §3.2) and then step through an end-to-end example of how a JIT-DA app operates, highlighting how our approach differs from current systems (§3.3).333In §5, we compare building an app using Jut vs. doing so with existing solutions: AWS IoT over AWS Timestream and dSpace over Postgres.

Jut is a data processing architecture that enables app developers to opportunistically leverage data sources present in a physical environment. Such developers may implement their application logic using an app framework (e.g., AWS-IoT, dSpace, SmartThings) but will leverage Jut as the data storage and processing system underlying these applications.

There are two important pieces to the Jut architecture: (1) the abstraction of a context and, (2) the join (correspondingly leave) API used to compose contexts in reaction to app-level events. We discuss each in turn below.

| Name | Notation | API | Description |

| Metadata | - | .kind | Kind of the context |

| .name | Name of the context | ||

| .intent | Contexts to ingest data from | ||

| .role | Role for access control | ||

| Data Store | load() | Load data to the store | |

| query() | Query the store | ||

| Egress | .id | Egress identifier | |

| .view |

|

||

| Ingress | .id | Ingress identifier | |

| .source | Data source of the ingress | ||

| .rule | Schema match-action rules | ||

| .flow |

|

3.1 The context abstraction

Contexts impose modularity in the data processing layer, reflecting the modularity commonly found in the app layer.

Context metadata.

A context has three important pieces of associated metadata : names, kinds, and sourcing intents.

The name field acts as a unique identifier for while its kind is used to identify the broader class of contexts to which belongs. For example, all Apple smartphones might share the same kind="iphone" while a user might use the name field to identify their specific instance of an iphone. In this sense, a context kind resembles a “class” in programming languages, allowing for the creation and customization of instances based on that kind. The rationale for including both names and kinds is that while there will be an enormous number of devices/contexts, there will be far fewer kinds of devices/contexts that will be of interest to a specific context. Developers can write data processing pipelines for these kinds, and this approach encourages a natural consolidation towards a few popular/standard kinds (e.g., apple.com/v1/iphone) while still giving developers the flexibility to support more niche kinds (vendor_x/v1/phone). Moreover, kinds allow developers to indicate their ability to ingest data from classes of sources rather than specific ones; this is desirable since specific contexts might not be known at the development time.

Finally sourcing intents list the other contexts that is prepared to ingest data from. Intents are specified in terms of the names and kinds (including wildcards) of other contexts thus allowing a developer to limit ingestion to a specific other context (e.g., name="building.cs.foo-univ.edu") or to a potentially large set of them (e.g., kind="apple.iphone"; kind=""). Context metadata are summarized in Table 1.

Components of a context.

A context is composed of three components: a data store (C.store), an ingress (C.ingress), and an egress pipeline (C.egress).

C.store is a regular data store (whether relational database, data lake, or lakehouse444For our implementation, we choose a particular existing datastore that we use with no modification (§4).) that is used to store data relevant to . It thus acts as a convenient and efficient repository over which to execute queries pertaining to .

C.egress A context can have one or more egresses, each of which defines a schema representing data that exports. Thus an egress exposes data records in to data consumers such as other contexts, apps, and users. can also be associated with a dataflow pipeline: at runtime, reads from , processes records as per the dataflow operators, and then caches the results persistently. We refer to this resultant data as the egress view, borrowing the notion of views from databases [59, 40, 36]. Like any database view, can be queried and our implementation supports both one-shot queries (that return a set of records) and continuous queries (that return a stream of records). Table 1 summarizes the relevant fields in .

C.ingress An ingress reads data from a source, processes this data, and stores it in . A context can have multiple ingresses, each with a unique identifier. The sources associated with a particular ingress are determined during the join process which we describe shortly in §3.2. Processing is implemented as a dataflow which contains operators (e.g., sort, join) and functions (e.g., sum, filter) that process a sequence of input data records and generate a sequence of output records. The resultant derived data is written to . Again, deciding which dataflow operators and functions should be applied to a given source is done during the join process.

An important aspect of an ingress is a "match:action" table that expresses high-level rules/policies for how the ingress will handle heterogeneous data schemas. An entry in the table specifies the conditions for matching a schema and the corresponding actions to be taken. For example:

|

These rules are used to update the ingress’s dataflow at runtime, in response to join events as described below. Our current implementation allows matching on: schema name, combinations of schema fields (all, any), and wildcards. Actions include developer-provided functions such as drop (the record will not be processed and stored), log (routes the record to a separate log store), trim (deletes fields), rename (for field names), custom field conversions (e.g., fahrenheit to celsius), and so forth. Our appendices include additional detail. More generally, we expect three categories of actions: (i) accepting the data as is, (ii) rejecting all data if unexpected fields are encountered, or (iii) transforming the data to adhere to a known schema, such as by filtering out unknown fields or converting them to a predefined schema.

Both match and actions are extensible allowing developers to tailor the system to their application domain.

3.2 The join/leave interface

As mentioned earlier, contexts can be composed which allows data to flow from the egress of one context to the ingress of another: e.g., the egress of a user device might be composed with the ingress of a room context and, simultaneously, the egress of the room context might be composed with the ingress of the building context.

The join (leave) API is how such composition (decomposition) is realized. As described in §2, we assume that two applications can discover one another through some service discovery mechanism.

For illustration, we’ll consider two applications and that have discovered each other: e.g., in our canonical scenario, might be an app on a user’s phone that exports sensor readings and other information from the phone, while is the building app for the building that the user just entered. Let’s say that and are the contexts associated with and respectively. As part of the discovery process, we’ll assume that and exchange information about their respective contexts and focus on the actions taken at ; processing at to incorporate as a data source in follows along the same lines. We assume that will first perform any application-level security and policy checks deemed necessary and, assuming these are satisfied, then invokes its underlying Jut layer’s join interface thus letting know that is available as a potential data source.

To implement join, the Jut runtime first checks whether is a potential data source for by comparing ’s name and kind fields against ’s sourcing intents. In case of a match, it then checks each of ’s egress schemas against each of ’s ingress match:action tables. If the schema for one of ’s egresses, denoted matches a rule in the match:action table for ’s ingress then the corresponding actions are compiled into dataflow operators and the generated dataflow is prepended to the existing dataflow for and is added as a data source for . For instance, given a schema <measurement:watt:string>, the first rule from the table above is matched, and a dataflow is added to ’s dataflow that extracts the field watt from data records matching the schema.

In summary, using the above join (and corresponding leave) interface we can “plumb" data sources to contexts, and contexts to other contexts. Importantly, the use of these interfaces is not limited to JIT sources; rather developers can use them to plumb any data source to the context - whether a JIT phone, a static building sensor, or another known context. Thus, using join/leave gives developers a uniform approach to setting up data processing pipelines that capture the potentially complex and evolving association between data sources and context apps.

Now that we’ve explained how contexts and join work, we briefly discuss the role of naming. Jut does not require that data sources adopt a single naming scheme but it certainly works better if data sources consolidate around a smaller number of approaches. Specifically, in deciding whether and how to ingest data from a source, Jut uses a context’s name and kind fields, together with the data schemas for its egresses. Any of these are optional and developers can always choose whether to accept or reject data with an unknown name, type, or schema. Likewise, the developer of a context can always choose whether to embrace an existing naming scheme or define their own. In practice, we expect that a small number of naming schemes will emerge as defacto standards with widely available libraries to convert between them. This is, in fact, how the world of data works even today with defacto formats such as JSON, Parquet, etc.; protocols such as Matter for unified device communication [32]; Project Haystack [37] and Brick for building ontologies [52], and so forth. Jut’s contribution is in taking these techniques (formats, schema converters, etc.) from the database literature and showing how to automatically and systematically insert them into the processing pipeline as new JIT sources appear/disappear.

More generally, bridging the syntactic/semantic gaps between data representations has historically been achieved by arriving at a middleground between rigid standardization on the one hand, and extreme customization on the other. Jut does not change the fundamentals of these tradeoffs and instead merely provides a flexible framework within which we can arrive at this desired middleground.

3.3 Putting the pieces together

We now step through the “life of data” in a Jut implementation of our canonical scenario of a user with a mobile phone interacting with a building app.

Discovery and ingestion.

Continuing with our notation from earlier, we assume that (for example) the user discovers the building app by scanning a QR code on entering the building; doing so launches a pop-up via which the user indicates her willingness to share data from with . This leads to an app-level discovery process in which and exchange any relevant information (e.g., credentials) including the names and API endpoints (§4) for and . Assuming all app-level conditions are met, invokes join(, ) on its underlying Jut runtime that implements . The Jut runtime implements the steps in §3.2, as a result of which data from is ingested and processed by . How ’s data is processed is determined by ’s ingress pipeline and the match:action rules relevant to ’s schemas. As a specific example, let’s say that a data record exported by includes two fields: a unique user ID and a temperature reading in celsius and that ’s ingress pipeline both augments and transforms this data into a record that consists of a building ID, user ID, time-stamp, and temperature in fahrenheit. These transformed records are stored in .store.

Querying contexts.

Now consider a campus analyst interested in understanding the usage and temperature conditions in the above building. The analyst issues queries such as "what is the average occupancy and temperature of this building ID between 8am-10am?", or "how much time does an average user spend in building ID?". Likewise, our user might want to ask what is the warmest floor in the building at times when I visit?". Since all data relevant to that building is stored in .store, these queries can be efficiently executed directly over that single data store, rather than sifting through all user records across all campus buildings in order to identify the relevant data over which to compute statistics. The latter is what would be required in existing systems [58, 13, 26] that simply load input data into per-user device tables. Moreover, by associating an ingestion pipeline with each building context, Jut makes it easy to augment/modify input data with the required fields needed to optimize query performance for that context; e.g., the building ID. By contrast, existing systems would need to maintain separate metadata tables mapping a user ID to the building ID they were in at different points in time. In short, Jut’s per-context modularity avoids the complexity and inefficiency of having to sift through a sprawl of per-table devices.

Decentralized control and configuration.

Later, the user enters their campus’ medical building and, as before, agrees to contribute data to . However, for HIPAA compliance, this building’s operator must store a copy of ingested data records in their insurance provider’s cloud. The building operator can easily achieve this by setting up a data replicator function in the ingress pipeline for the medical building’s context. Similarly, the user might configure their egress policy to drop or anonymize their user ID when exporting data to this particular building. Again, Jut’s context-level modularity makes it easy for both users and building operators to unilaterally define and enforce their policies. Achieving the same in a system that simply loads all user records into a monolithic campus-wide data lake would be more complicated since the operator would need to (manually) communicate their replication needs to the campus operator and the latter would (likely) require complex scripts to first identify which users were in the medical building and at what time and then copy those select records to the cloud.

Developing and configuring a context.

Finally, let’s consider a scenario in which the literature department decides it is time they also offer a building app. The developer tasked with the job might start by simply copying the context implementation from another building app. However, the building operator is unsure what ingestion policies to configure. They can thus start by configuring their match:action table to simply log all input records and analyze the data offline to determine what data types are available and useful. The operator can then use their new understanding to refine their match:action rules for better data curation [70] and can continue to do so over the lifetime of the app and as new devices and data types appear. In short, Jut allows flexible and JIT ingestion from any available data source, enabling richer insights. Moreover, the context-level modularity simplifies app development since it supports (context) code reuse and simplifies configuring and evolving individual contexts.

3.4 Other design pieces

In addition to any app-level security and access control, Jut supports access control at the context level. We achieve this using standard role-based access control techniques for which each context is associated with role metadata that gets checked at join time. Since our RBAC design is fairly standard, we omit further details.

Beyond JIT data access, we have found that the context abstraction facilitates a number of operational tasks. For example, having a per-context ingress/egress makes it easy to implement policies for tracking provenance, since we can easily log statistics about when and where data was consumed from different sources. Similarly, we can support diverse compliance needs by tailoring how data is stored or replicated for each context; e.g., ensuring different standards of encryption and backup for data collected in a public vs. private building.

Our Jut prototype described in the following section implements RBAC, data provenance, logging, and replication.

4 Implementation

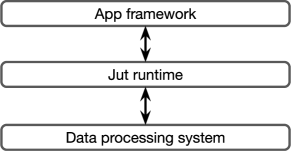

Jut is implemented as a runtime that sits between an app and its underlying data processing system as shown in Fig.1; together these three components constitute a complete smart-space application or service.

4.1 End-to-end System Architecture.

As shown in Figure 1, Jut integrates with existing systems that implement data processing and application-layer logic. We briefly describe our system choices for these components.

Zed as the data processing layer.

Jut uses the recent Zed [65] system as its underlying data processing engine. Zed introduces a new super-structured data model that aims to unify the traditional relational and document models in order to achieve the benefits of both – efficient analytics (the relational model’s strength) and flexibility in ingesting and querying heterogeneous data (the document model’s strength)?

Jut does not require using Zed; we also considered systems such as Postgres [36], DuckDB [67], and Sqlite [43]. Ultimately, we selected Zed because of its sophisticated support for heterogeneous data. In Zed, all data are strongly typed, as in relational models. However, there is no restriction on which types of data may coexist in the same stream of data (akin to JSON systems). Instead, Zed requires that data is “self-describing” by storing type definitions inline with the data stream: i.e., when a new data type appears in a stream of super-structured data, its type definition is stored inline with the data; this ensures that data consumers always have the necessary type information to parse data. This approach is convenient for Jut since we can define rich type-based match:action rules; e.g., using different actions when encountering a new type definition and processing records differently based on their type.

dSpace as the application framework.

There are a growing number of frameworks for building IoT and smart-space applications: AWS IoT [13], Samsung SmartThings [4], and Comfy [28]. However most are closed systems: we can use their publicly available service APIs but cannot modify their internals. Within open source options, we considered HomeAssistant [26], BOSS [56], and dSpace [58]. We ultimately chose dSpace since it most directly aligns with our focus on smart spaces. In dSpace, developers model a space or sensor using a “digivice” abstraction, which is largely equivalent to our notion of a context. A digivice is a control abstraction that implements (for example) the automation logic for a room (when and how to dim lights, etc.). For every digivice, dSpace does provide a corresponding “digidata” abstraction to model the data associated with a space. In the dSpace implementation, a digidata is simply a wrapper around Postgres with no support for the dynamic ingestion, policies, etc. that we address. We thus modified their digidata abstraction to instead integrate with Jut thus replacing Postgres by our Jut runtime Zed. An added benefit of this choice is that – by comparing dSpace-over-Postgres to dSpace-over-Jut– we can evaluate the benefits of Jut with the same app layer (§5).

Legacy data sources.

In §3.2, we described join as having the ingress of a context read data from the output of another. In practice, some contexts will have to read from data sources that do not conform to our architecture and, indeed, we use many such sources in our evaluation in §5. For such “legacy” sources, we rely on proxies that access data from the source based on whatever APIs or protocols they expose - e.g., using the Matter protocol [32] or via the device vendor cloud [30] - and convert the stream to a Zed format [48]. Fortunately, Zed already offers a number of such proxies which we were able to leverage.

4.2 The Jut Runtime

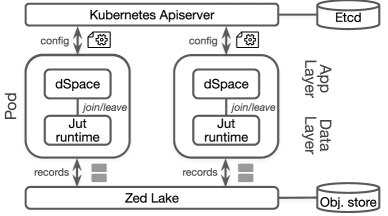

Fig. 2 illustrates the runtime architecture of Jut, comprising the following components:

Kubernetes. Kubernetes is a widely used container orchestrator. Jut uses Kubernetes to deploy all of its runtime components and uses its apiserver [10], built on etcd [19], to store the context metadata, ingress, and egress configurationspersistently as API objects. Jut also uses the apiserver as the service registry for apps and contexts.

Zed lake. We use the Zed lake [49] to support data storage and querying in Jut. The Zed lake stores super-structured data persistently in an object store and organizes data in “pools”. Jut leverages the Zed lake by placing each context’s data store in a separate data pool; the Jut runtime is responsible for moving data between contexts (pools).

App and runtime. The dSpace app and the Jut runtime run in the same Kubernetes pod with one container for each. The Jut runtime exposes the join/leave API via a REST endpoint for the dSpace app to invoke. Meanwhile, the Jut runtime can query and load data from and to the Zed lake whereas apps may query and load data from the Jut runtime via the interfaces exposed by it (§4.3).

We implement a pipelet data sync agent in the runtime that continuously pulls data from a data pool on the Zed lake, processes data with the Zed dataflow, and loads it to another data pool on the Zed lake. For each egress-to-ingress pair and data-store-to-egress pair in context, Jut creates an instance of the pipelet agent to plumb the data flow.

Our Jut prototype consists of 2,393 SLOC of Python and 815 SLOC of Go. The Jut programming library, code generators, join/leave extension, and app are written in Python, while the pipelet data sync agent is written in Go. Besides, we developed a Go-based CLI in 600 SLOC for Jut that includes APIs for context deployment, configuration, and interaction with Zed and dSpace. To implement device connectors for proxied ingestion, we rely on vendor libraries [12, 18, 17], which account for 400 SLOC in Python for IoT devices and laptops, and 550 SLOC in Swift for smartphones [12].

4.3 Programming Interface

Jut exposes a declarative interface built on Kubernetes [3]. This interface allows context configurations (metadata, ingress, and egress) to be represented as attribute-value pairs, which are stored as API objects on the Kubernetes apiserver [10]. We expose context configurations over this interface so that developers and operators can reuse existing Kubernetes tooling to handle configurations. Developers or context operators can specify the ingresses and egresses on the interface. We present the details of the programming interface in the Appendix.

Developers use the join/leave() API in the Jut programming library to inform the context about availability of new data sources. They use the Zed dataflow language [50, 65] to specify the dataflow operators in each context’s ingress and egress. Zed provides convenient operators such as filtering and cleaning data using type information (§5.2). Users can query the egresses of contexts using the same dataflow language. For example, a building administrator can query the BioHall occupancy by running jut query BioHall.egress.occupancy "avg()" (jut is the CLI). Developers can also use the query API to run a query against the context in the JIT-DA apps; or use the @on.context(egress) (implemented as a Python decorator) to watch and process the data streams continuously, which is useful when implementing data-driven automation. Besides, an app can load data to the by calling context.load(..) with the data records, which can be used for ingesting data from sources that Jut’s ingress doesn’t already support, such as devices that communicate via custom device driver/libraries.

5 Evaluation

We evaluate Jut to answer two high level questions: (i) does Jut simplify the use and development of smart-space apps, relative to existing app frameworks? and (ii) what performance overheads does Jut introduce and does our implementation’s end-to-end performance meet real-world requirements?

We explain our experimental setup (§5.1) and then evaluate each of the above questions in §5.2 and §5.3 respectively.

5.1 Experimental setup

Our overall evaluation approach is as follows. Using a set of real-world physical devices, we construct a series of smart-space scenarios, each designed to highlight a particular aspect of Jut’s design (e.g., querying, implementing policy). We evaluate the complexity of this process using the metrics defined below. In each case, we then attempt to implement the same scenario using two existing systems that we select as our baseline and again quantify the complexity of the experience using the same metrics. In what follows, we introduce our devices, metrics, and baselines.

| Device | Brand | Model | Quantity | ||

|---|---|---|---|---|---|

| Smartphone | Apple | iPhone 13 Pro Max | 2 | ||

| Laptop | Apple | Macbook Air M1 | 2 | ||

| Motion Sensor | Ring | Alarm Security Kit | 6 | ||

| Underdesk PIR | Pressac |

|

2 | ||

| Contact Sensor | Eve | Door & Window | 4 | ||

| Plug | Eve | Plug & Power Meter | 4 | ||

|

Apple | HomePod mini | 1 | ||

| Smart light bulb | Lifx | Mini | 8 | ||

| Heater + Fan | Dyson | HP01 | 1 |

Devices. Our experiments use the physical devices listed in Table 2. These devices span personal mobile devices as well as fixed sensors. For the former we use Apple phones and laptops - these devices both contribute data as well as run user-facing apps that consume data from our smart-space contexts. Two devices – Pressac [47] and Ring [6] – are used to detect occupancy by signaling whether there is motion or not. The Eve Contact sensor [20] is used to detect door open/close events. The Eve plugs [21] and Dyson [16] heater connect to electrical appliances (e.g., TV, fridge, air purifier) and report energy statistics. Lifx [30] is a smart light bulb. The table also lists what we term the "native" API via which the vendors export data from their devices. For the iPhone and laptop we wrote a custom Jut client that exports relevant data (described later) in JSON. In our experimental scenarios, these devices are connected via an Apple Hub Thread border router.

Metrics. We evaluate ease of development using source lines of code (SLOC). We count the source lines of code, configurations, and scripts required to build/configure an app. To evaluate ease of use, we measure query complexity (Qcx) as representing the effort required to write queries. Qcx is calculated by summing the number of dataflow operators and data subjects in a query. For example, a query with two data subjects and one dataflow operator, such as BioHall.egress.occupancy "avg()", has a Qcx of 2.555We recognize that there is no widely accepted method for measuring query complexity, and hence we adopt this simple approach, inspired by proposals from prior literature [73, 72].

Baselines. We compare Jut to two app frameworks: AWS IoT [13] and dSpace [58]. Both require an underlying data processing layer. For AWS-IoT, we use AWS Timestream [14] which is a common recommendation and, for dSpace [58] we use Postgres [36] which is the default choice in dSpace. We select these as AWS IoT is widely used in industry, while dSpace represents the state-of-the-art from research. In reporting our findings (Table 4), we select the better of AWS-IoT and dSpace and report that number, thus providing an optimistic view of baseline performance; our explanations in the text make clear which system is being discussed.

Unless otherwise mentioned, we run the various software components (Jut runtime, Zed lake, dSpace, and the baselines) using Kubernetes (v1.21.0) in a Thinkcentre M720 machine (Intel Core i5-8400T, 6 cores).

Contexts We developed a Jut context for each of the physical devices and data sources listed in Table 2. These contexts are listed in Table 3, including the data sources they pull data from and the egresses they expose. As expected, the ingress for these contexts is typically the vendor’s native API. In some cases, multiple devices can be represented by the same context. E.g., the Motion context reads data from the Pressac [47] or Ring and internally converts their schemas to the same representation on egress. A benefit of this consolidation is that downstream contexts that consume data from Motion need not address the heterogeneity across the native data sources. Finally, we created contexts for higher-level constructs such as room, building, campus, etc.. These contexts consume data from other Jut contexts (vs. native APIs) and, as we’ll show, can themselves serve as data sources for downstream contexts.

In counting SLOC, we include all (non runtime) code needed to implement the above contexts but leave out the code specific to converting from a native API format to Zed’s format. We omit this code because it is incurred by any solution including our baselines. Moreover, this overhead is somewhat artificial since it depends on the (native and egress) schemas we define, rather than fundamental differences in framework abstractions.

| Context | Ingress | Egress | ||||||

| Smartphone | Jut client |

|

||||||

| Laptop | Jut client |

|

||||||

| Motion |

|

detected | ||||||

| Contact | Matter native API | detected | ||||||

| Lamp | Lifx native API | energy, brightness | ||||||

| Appliance |

|

energy | ||||||

| Room |

|

|

||||||

| Building |

|

energy, occupancy | ||||||

| Campus |

|

|

5.2 Implementing smart spaces with Jut

We implemented 7 different smart-space scenarios using the above devices and contexts. Our results showing the SLOC and Qcx for each scenario are summarized in Table 4. Additional detail including code examples can be found in our Appendix.

| Scenario: | S1 | S2 | S3 | S4 | S5 | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Requirement |

|

|

|

|

|

||||||||||

|

25 (-) | 19 (-) | 12 (178) | 14 (45) | 8 (-) | ||||||||||

|

18 (159) | 6 (73) | 6 (43) | 11 (33) | 4 (-) |

S1: Query by context This scenario focuses on the ease of supporting query by context. Our goal is to query rooms for their energy usage and occupancy, identify the rooms that are least occupied or consume the most energy, and analyze the correlation between energy use and occupancy.

To set up this experiment, we installed devices in 4 rooms/offices, where each room has one contact sensor, two motion detectors, two lamps, and one appliance; Each room has its own WiFi access point (AP). We reuse this room setup in subsequent scenarios.

We then ran three types of queries over the room contexts: (i) querying an individual room (for its current occupancy or energy usage), (ii) querying across multiple rooms (to rank rooms by their occupancy or energy usage), and (iii) querying across a room’s different egresses (to identify the correlation between a room’s energy usage and occupancy). For example, for (ii), we rank and find the least used room with:

> jut query room1.egress.occupancy "head | sort occupancy"}

Implementing this scenario required 25 SLOC, most of which were to implement the necessary contexts. Composing data sources required few SLOC - calls to \join and, as necessary, configuring context match:action rules.

In terms of query complexity: implementing the above queries with \arch required a total of 18 Qcx.

By contrast, using dSpace with Postgres, we first need to manually create a table that stores the mapping between devices and their room. Given this, we can then run this query but it is still inefficient in Qcx. The same queries required 159 Qcx because dSpace stores input data in per-device Postgres tables. The user thus first has to filter table rows that belong to the room of interest and additionally aggregate those rows over time to derive the room occupancy values (detailed queries are in our Appendix). By contrast, in \arch, the relevant data is already selected and stored in the room context’s store which can be directly queried.

\noindent \underline

S2: Context as a data source

Now consider that we want to enable building-level energy and occupancy analysis. A convenient approach is to compose the room contexts to a building context that computes and exposes building-level data from the room-level data.

Implementing this in Jut took an additional 19 SLOC (over S1) to implement the building context:

we specify the room’s occupancy and energy egresses as the building’s ingresses and the building’s ingress dataflow aggregate input data as it arrives.

Querying for building occupancy or energy are then straightforward queries to room1.egress.occupancy or room1.egress.energy, respectively, with a Qcx of 6.

Implementing this query with Postgres incurs a higher Qcx for similar reasons as above.

S3: Ingestion from JIT sources

This scenario focuses on Jut’s ability to opportunistically ingest data from user devices. Here, our smart campus app allows people to identify rooms with both good network connectivity. To achieve this, it consumes network quality data from tenants’ phones and laptops.

We now extend the scenario from above to include phones and laptops as potential data sources for a room.

Our user phone app is configured to join and leave rooms upon detecting a change in the BSSID for the WiFi AP it is currently connected to. Each time the phone changes APs, it takes a network measurement in the background using the NDT tool [33, 34] and reports the raw data to the phone’s context

(exposed by phone.egress.netTest). We add an ingress to the room context to ingest data from phone.egress.netTest, and an egress room.egress.netSpeed that exports the average (across all phones/laptops) download/upload bandwidth data to the building context, and so on. We repeat the same process for the laptop and its context.

Implementing the above scenario in Jut was straightforward, because much of the low-level "plumbing" code is encapsulated within the join API.

Thus supporting this scenario required creating a context for the phone/laptop with a phone.egress.netTest egress; this took 3 SLOC each. Similarly, adding the room.egress.netSpeed egress to the room context required 5 SLOC and 1 match:action rule to room’s ingress.

To find rooms with good connectivity, users simply query the room for their average connectivity quality, which is achieved with a Qcx of 6.

By contrast, this scenario cannot be easily supported in AWS-IoT or dSpace.

In order to approximate JIT-DA in AWS-IoT we hack together a solution as follows.

AWS-IoT allows devices (together with their schemas and other metadata information) to be registered in a device registry. We create an entry for our phone and laptop devices and manually hardcode an associated room identifier (RoomId) for each device (deviceID). We then write a script that, when it receives an app-level signal that a device is in the room, takes the following steps: (i) provision a new table in AWS TimeStream for that device, (ii) updates the AWS message broker with a new topic corresponding to the deviceID in the device’s data stream such that all records from the device are routed to the new table, (iii) set up a rule associated with the topic that allows incoming records to be processed prior to storage in TimeStream; this rule adds a new field to each data record into which we write the RoomId corresponding to deviceID, retrieved from the device registry. (Note that supporting user mobility would require additional techniques to dynamically update the deviceID-to-roomID mapping in the device registry.)

Implementing the above took 178 SLOC.

A query to compute the average connectivity quality of the room then requires averaging the appropriate rows from each device’s table, for all devices that might have been in the room. Writing such a query involved a Qcx of 43.

S4: Improved app-data integration.

We focus now on the ease with which applications can

leverage insights from JIT data: i.e., for data-driven automation.

For this scenario, our goal is to support two tasks. First, we want a room’s brightness level to be automatically set when the door opens, where the level of brightness is computed from the historical data for that room.

Second, as a security measure, we want an app that automatically sends an SMS to a home owner when room occupancy exceeds a specified threshold.

In Jut, the above automation rules are specific in dSpace but rely on queries made to the underlying Jut data processing system. Writing these queries in Jut is straightforward because the abstraction over which dSpace expresses the above automation logic – i.e., a room – is clearly mirrored in the underlying data storage. Hence, we simply write dSpace’s automation logic to make continuous queries to the relevant room context - this took 14 SLOC (in dSpace) and 11 Qcx to query the room brightness and occupancy. By contrast, implementing the same over Postgres took 3x more Qcx and 3.2x more SLOC for reasons similar to those discussed above (provisioning and querying over multiple per-device tables).

S5: Handling heterogeneous data.

Our last scenario highlights the issue of heterogeneous data sources.

Our goal here is a smart-app that wants to allow users to track their personal carbon footprint: i.e., allowing users to track the energy consumption of (say) lamps, heaters, etc. that they encounter at different locations on campus during their stay. To achieve this, the app must be able to opportunistically consume data from a heterogeneous set of (device, space, building )contexts as they move.

To implement this scenario, we configure the match:action rules on the phone context to ingest and aggregate readings from any available data source with a *.egress.energy

These data may have heterogeneous and unexpected/unknown schemas, such as data types (e.g., string vs. float), names (energy” vs. power”), or units (e.g., watt” vs. kilowatts”). For example:

⬇

{watt:"80",from:"biolab",event_ts:..,ts:..}

{watt:100,from:"office",event_ts:..,ts:..}

{power:120.,unit:"watt",from:"lounge",event_ts:..,ts:..}

Contexts in Jut are able to ingest these heterogeneous data records. At the phone context’s ingress, we clean and convert the energy readings to the same data type and unit with:

⬇

flow: "rename watt:=power | shape(this, <{watt:float64}>)

| cut watt,event_ts,from"

Querying for a user’s carbon footprint is then a trivial query to the energy egress of the user’s phone context. We implemented this in Jut with 8 SLOC and a Qcx of 4.

In summary, by introducing the modularity of contexts and by systematizing how new sources are added/removed to data pipelines, Jut achieves a significant reduction in both development effort (3.2-14.8x SLOC) and query complexity (3-12x Qcx) relative to AWS-IoT and dSpace.

5.3 Performance benchmarks

We aim to answer three performance-related questions for Jut: (1) How does Jut’s context-oriented approach impact query performance compared to device-oriented approaches? (2) What is the overhead of using Jut for data-driven insights in the existing applications? and (3) Can Jut scale to a large number of contexts with our implementation choices?

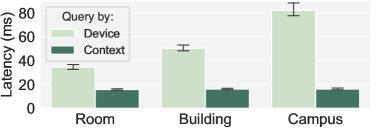

Impact on query performance. To answer (1), we set up a benchmark with the smart campus app, using a week-long occupancy dataset from the UCI repository [53]. We load the dataset into the motion detector contexts, which in turn populate the contexts of rooms, buildings, and the campus. We compare the latency (10 runs, reported average in ) of querying the room, building, and campus occupancy using Jut and Zed lake as the baseline, as Jut already uses it as the underlying analytics engine. For Jut, we wait for the occupancy detector contexts to propagate to the other contexts, whereas for the baseline, we pre-process the dataset to add context information and then load it into Zed lake. Fig.3 shows that Jut achieved an average latency of for the room context, which is 2.2x faster than the device-oriented baseline. As the context level goes higher, the gap widens with 3.1x for the building context ( vs. ) and 5.1x for the campus context ( vs. ). The performance improvements stem from two factors. First, a Jut context contains only data ingested for that context, so querying the context directly involves loading less data compared to querying all device data, resulting in a 23% difference in data loading time. Second, querying the context directly enables simpler queries to be evaluated compared to querying device-level data directly. Note that this improvement does not mean that Jut eliminates the need to process data, but instead shifts the processing to contexts, where the data is transformed on write/ingestion to enable faster query evaluation.

| Setup | Lamp | LR | OLR | OR-SMS |

| dSpace | 188.84 ms | 406.91 ms | 736.01 ms | 3.02 s |

| Jut | 199.01 ms | 419.39 ms | 742.32 ms | 2.88 s |

| Overhead | 5.40 % | 3.10% | 0.90% | -4.6% |

Integrating with existing control apps. We compare the performance of Jut with control applications written in dSpace, which do not have a data-driven component. We reused the setup from scenario S4 (smart home, §5.2) to implement three scenarios in Jut and in dSpace. Table 5 shows that Jut adds at most 5.6% latency overhead across the scenarios for the extra latency () performing a query against the context. This querying overhead is negligible for control applications whose routines typically take several seconds, such as invoking the Twilio API to send an SMS message upon an overcrowding event. Therefore, the performance impact of Jut on control applications is insignificant.

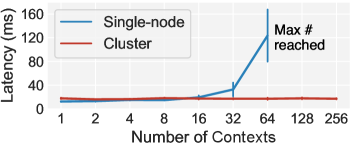

Scale to large deployment. Jut’s target apps can have various scales from smart homes with a few rooms to buildings and campuses with hundreds of rooms. We assess whether Jut is able to handle any of these scales. To that end, we compare the query latency with the single-node setup and a cluster setup. For the latter, we run Jut on a Kubernetes cluster running on AWS EC2 and configure a cluster autoscaler [29] to automatically provision new EC2 instances (m5.xlarge) under load. We run the smart campus application in S1 and S2 but now vary the number of rooms from 1 to 256; we simulate the data exchange between contexts by feeding the occupancy data to the motion sensor contexts as we did previously. As shown in Fig.4, the results suggest that while the single-node setup can’t scale beyond 60 rooms (due to the per-node resource limits on number of containers), Jut is able to scale to a much larger deployment size (256 rooms on 5 EC2 instances) with a cluster setup.

6 Conclusion and Related Work

This paper presents Jut, a new application framework that simplifies the use and development of apps that perform JIT-DA, with a first-class abstraction for context and explicit app-data coordination. Besides the related work discussed in §2 and §5, we highlight additional works on IoT and database systems that are pertinent to JIT-DA apps. Smart space and IoT frameworks. Today’s IoT frameworks provide extensive support for the IoT app layer functionalities, such as tracking component metadata and relationships and implementing actuation logic. For example, AWS IoT Things Graph provides an entity abstraction [44], Home Assistant provides a component abstraction [26], and dSpace [58] introduces a “digivice” abstraction. However, these frameworks offer only limited support for the data layer of an app. Most frameworks use standalone off-the-shelf data processing systems to store the data streams generated by individual devices, and thus context-related insights must be extracted from these per-device streams. For example, dSpace on Postgres [36] and TimescaleDB [45] (via a “digidata” thin wrapper [58]), Home Assistant relies on SQLite [43], AWS IoT on Timestream [14], DynamoDB [7], or IoT Analytics cloud services [8]. These frameworks also lack the necessary abstractions to adapt data processing pipelines to handle the JIT-DA data sources in smart space applications. This results in constrained data sources an app can incorporate or the need for a significant amount of manual configuration and custom code. Jut addresses these issues by providing flexible and adaptable data layer abstractions to support JIT-DA. Databases and dataflow systems. There is extensive work on dataflow systems, including continuous queries [55], incremental dataflow [63], dataflow for ML workloads [51], streaming engines [42, 54], databases [31, 40, 59], and general-purpose analytics engines [64, 9, 46, 54]. Jut, as a new data architecture, and context, as a new data processing abstraction, are complementary to these pioneering approaches. One can leverage these existing techniques in context to support additional dataflow features such as streaming [46], more comprehensive timeseries analytics [45], and optimizations that improve context performance and efficiency by supporting stateful operators and incremental computation [63, 59, 55]. In turn, Jut can help scale these systems to handle JIT-DA and support diverse apps. We plan to explore integration of these systems and techniques with Jut in future work.

References

- [1] Byod, iot and wearables thriving in the enterprise. https://www.techrepublic.com/article/byod-iot-and-wearables-thriving-in-the-enterprise/, 2016.

- [2] Density: Product overview. https://www.solutionzinc.com/hubfs/603ee8372ec54a47d690d734_density-product-overview.pdf, 2020.

- [3] Kubernetes: open-source system for automating deployment, scaling, and management of containerized applications. https://kubernetes.io/, 2022.

- [4] Smartthings. https://smartthings.developer.samsung.com/, 2022.

- [5] Airdrop. https://en.wikipedia.org/wiki/AirDrop, 2023.

- [6] Alarm motion detector. https://shop.ring.com/products/alarm-motion-detector-v2, 2023.

- [7] Amazon dynamodb. https://aws.amazon.com/dynamodb, 2023.

- [8] Amazon dynamodb. https://aws.amazon.com/iot-analytics/, 2023.

- [9] Apache spark. https://spark.apache.org/, 2023.

- [10] apiserver. https://github.com/kubernetes/apiserver, 2023.

- [11] Apple homekit. https://developer.apple.com/homekit/, 2023.

- [12] Apple ios api. https://developer.apple.com/documentation/docc/api-reference-syntax, 2023.

- [13] Aws iot. https://aws.amazon.com/iot/, 2023.

- [14] Aws timestream. https://aws.amazon.com/timestream/, 2023.

- [15] Density: Trusted space analytics for a flexible workplace. https://density.io/, 2023.

- [16] Dyson pure cool link library. https://github.com/CharlesBlonde/libpurecoollink/, 2023.

- [17] An easy-to-use api for devices that use tuya’s cloud services. https://github.com/codetheweb/tuyapi, 2023.

- [18] Emqx mqtt broker. https://www.emqx.io/, 2023.

- [19] Etcd: A distributed, reliable key-value store for the most critical data of a distributed system. https://etcd.io/, 2023.

- [20] Eve door & window wireless contact sensor. https://www.evehome.com/en-us/eve-door-window, 2023.

- [21] Eve energy smart plug. https://www.evehome.com/en-us/eve-energy, 2023.

- [22] Fitbit official site for activity trackers. https://www.fitbit.com/global/us/home, 2023.

- [23] Gcp service directory. https://cloud.google.com/service-directory, 2023.

- [24] Get a free qr code menu in seconds. https://get.menulabs.com/menu-management-system/qr-code-menu-generator/, 2023.

- [25] Google nest, build your connected home. https://store.google.com/us/category/connected_home, 2023.

- [26] Home assistant: Open source home automation that puts local control and privacy first. https://www.home-assistant.io/, 2023.

- [27] Ifttt: Everything works better together. https://ifttt.com/, 2023.

- [28] Intelligent workplaces for dynamic businesses. https://comfyapp.com/, 2023.

- [29] Kubernetes cluster autoscaler. https://docs.aws.amazon.com/eks/latest/userguide/autoscaling.html, 2023.

- [30] Lifx smart home light. https://cloud.lifx.com/, 2023.

- [31] Materialize streaming database. https://materialize.com/, 2023.

- [32] Matter: The foundation for connected things. https://csa-iot.org/all-solutions/matter/, 2023.

- [33] Ndt (network diagnostic tool). https://www.measurementlab.net/tests/ndt/, 2023.

- [34] Ndt7 ios. https://github.com/m-lab/ndt7-client-ios, 2023.

- [35] Openwisp configurable wifi login pages. https://github.com/openwisp/openwisp-wifi-login-pages, 2023.

- [36] Postgresql. https://www.postgresql.org/, 2023.

- [37] Project haystack. https://project-haystack.org/doc/docHaystack/Ontology, 2023.

- [38] Python library for accessing lifx devices locally. https://github.com/mclarkk/lifxlan, 2023.

- [39] Rapid api hub. https://rapidapi.com/hub, 2023.

- [40] Risingwave: the next-generation streaming database in the cloud. https://github.com/singularity-data/risingwave, 2023.

- [41] Schema registry. https://docs.confluent.io/platform/current/schema-registry/index.html, 2023.

- [42] Spark streaming. https://spark.apache.org/docs/latest/streaming-programming-guide.html, 2023.

- [43] Sqlite. https://www.sqlite.org/index.html, 2023.

- [44] Things graph. https://aws.amazon.com/iot-things-graph/, 2023.

- [45] Timescaledb. https://www.timescale.com/, 2023.

- [46] The unified apache beam model. https://beam.apache.org/, 2023.

- [47] Wireless desk occupancy sensors. https://www.pressac.com/desk-occupancy-sensors/, 2023.

- [48] Zed formats. https://github.com/brimdata/zed/tree/main/docs/formats, 2023.

- [49] Zed lake api. https://github.com/brimdata/zed/blob/main/docs/lake/api.md, 2023.

- [50] The zed language. https://github.com/brimdata/zed/tree/main/docs/language, 2023.

- [51] M. Abadi et al. Tensorflow: A system for large-scale machine learning. In Proc. USENIX OSDI, 2016.

- [52] B. Balaji et al. Brick: Towards a unified metadata schema for buildings. In Proc. ACM BuildSys, 2016.

- [53] L. M. Candanedo and V. Feldheim. Accurate occupancy detection of an office room from light, temperature, humidity and co2 measurements using statistical learning models. Energy and Buildings, 112:28–39, 2016.

- [54] P. Carbone, A. Katsifodimos, S. Ewen, V. Markl, S. Haridi, and K. Tzoumas. Apache flink: Stream and batch processing in a single engine. Bulletin of the IEEE Computer Society Technical Committee on Data Engineering, 2015.

- [55] S. Chandrasekaran et al. Telegraphcq: continuous dataflow processing. In Proc. ACM SIGMOD, 2003.

- [56] S. Dawson-Haggerty et al. Boss: Building operating system services. In Proc. USENIX NSDI, 2013.

- [57] A. Doan, A. Halevy, and Z. Ives. Principles of data integration. Elsevier, 2012.

- [58] S. Fu and S. Ratnasamy. dspace: Composable abstractions for smart spaces. In Proc. ACM SOSP, 2021.

- [59] J. Gjengset, M. Schwarzkopf, J. Behrens, L. T. Araújo, M. Ek, E. Kohler, M. F. Kaashoek, and R. Morris. Noria: dynamic, partially-stateful data-flow for high-performance web applications. In Proc. USENIX OSDI, 2018.

- [60] M. Karpathiotakis, I. Alagiannis, and A. Ailamaki. Fast queries over heterogeneous data through engine customization. Proceedings of the VLDB Endowment, 9(12):972–983, 2016.

- [61] Y. Li, C. Network, N. Networking, and R. Jian. Naming in the internet of things. 2013.

- [62] J. Liu, C. Chen, and Y. Ma. Modeling neighbor discovery in bluetooth low energy networks. IEEE communications letters, 16(9):1439–1441, 2012.

- [63] F. McSherry, D. G. Murray, R. Isaacs, and M. Isard. Differential dataflow. In CIDR, 2013.

- [64] D. G. Murray, F. McSherry, R. Isaacs, M. Isard, P. Barham, and M. Abadi. Naiad: a timely dataflow system. In Proc. ACM SOSP, 2013.

- [65] A. Ousterhout, S. McCanne, H. Dubois-Ferriere, S. Fu, S. Ratnasamy, and N. Treuhaft. Zed: Leveraging data types to process eclectic data. In CIDR, 2023.

- [66] S. Palkar, F. Abuzaid, P. Bailis, and M. Zaharia. Filter before you parse: Faster analytics on raw data with sparser. Proceedings of the VLDB Endowment, 11(11):1576–1589, 2018.

- [67] M. Raasveldt and H. Mühleisen. Duckdb: an embeddable analytical database. In Proceedings of the 2019 International Conference on Management of Data, pages 1981–1984, 2019.

- [68] M. Stonebraker. Background. Readings in Database Systems, 2015.

- [69] M. Stonebraker. Chapter 12: A biased take on a moving target: Data integration. Readings in Database Systems, 2015.

- [70] M. Stonebraker, D. Bruckner, I. F. Ilyas, G. Beskales, M. Cherniack, S. B. Zdonik, A. Pagan, and S. Xu. Data curation at scale: the data tamer system. In Cidr, volume 2013, 2013.

- [71] M. Stonebraker, I. F. Ilyas, et al. Data integration: The current status and the way forward. IEEE Data Eng. Bull., 41(2):3–9, 2018.

- [72] M. A. P. Subali and S. Rochimah. A new model for measuring the complexity of sql commands. In 2018 10th International Conference on Information Technology and Electrical Engineering (ICITEE), pages 1–5. IEEE, 2018.

- [73] E. J. Weyuker. Evaluating software complexity measures. IEEE Transactions on Software Engineering, 14(9):1357–1365, 1988.

Appendix

.1 Programming Interface for Jut

| API | At | Description |

|---|---|---|

| c.query(query, egress="main") | App | Query an egress. |

| c.load("spl":.., "unit":..) | App | Load data to store. |

| on.ctx(egress="main") | App | Watch an egress. |

| sources: [ kind | name.egress, .. ] | Context | Specify data sources. |

| flow: "head | cast(spl,<string>)" | Context | Declare dataflow. |

| jut query c.egress [ .. ] "sort watt" | CLI | Query egress(es). |

We provide an overview of the programming and analytics APIs in Jut, using (simplified) examples from a smart campus app in our evaluation §5. Table 6 summarizes the programming interfaces available in Jut.

Programming context. Jut’s context exposes a declarative interface built on Kubernetes [3]. This interface allows configurations to be represented as attribute-value pairs, which are stored as API objects on the Kubernetes apiserver [10]. We extend this interface to support context configurations, so that developers and operators can reuse existing Kubernetes tooling to handle configurations. Fig.5 shows the programming interface for the building BioHall. Developers or context operators can specify the ingresses and egresses on the interface, including the name, dataflow, and data sources (for ingress). Specifically, lines 4 to 16 contain the configurations for two ingresses, room_energy and room_occupancy, where the BioHall ingests room-level energy and occupancy as specified in the intent fields (lines 6 and 10) with indirect references and are resolved in the sources (lines 7 and 11). Jut also allows the use of an “any” quantifier for context, as shown in Fig.6 (right; line 6), which allows AlicePhone to collect energy readings from any contexts it may join.

Processing context data. Fig.7 shows example data records from the BioHall context with the Zed data format. These records have different schemas and are ordered by their processing time ts, with the values omitted for brevity. In Jut, each data record contains two timestamps - the event timestamp (event_ts) and the processing timestamp (ts). The event timestamp tracks when the record is generated at the data source. If the data source doesn’t attach an event timestamp to the record, then the first-hop context will set the event_ts to be the ts. The ts on the other hand tracks when the context processes the data, i.e., when the ingress loads it to the store. Apart from being used in analytics such as timeseries queries, the timestamps are also used to implement exactly-once guarantees. The pipelet agent supports exactly-once, in-order processing (EOIO) when pulling data records from one Zed lake pool/branch to another. This is achieved by writing data records along with the latest ts in the records as part of the commit message atomically, which is then stored at the target pool. Upon failure and restart, the agent will first read and cache the last-seen ts from the target pool’s commit messages. When querying data from the source pool, the agent filters the data by reading only the ones with ts greater than the latest ts.

.2 Queries in Performance Benchmarks

.3 Room context