Kernel-based measures of association between inputs and outputs using ANOVA

Abstract

ANOVA decomposition of functions with random input variables provides ANOVA functionals (AFs), which contain information about the contributions of the input variables to the output variable(s). By embedding AFs into an appropriate reproducing kernel Hilbert space according to their distributions, we propose an efficient statistical test of independence between the input variables and output variable(s). The resulting test statistic leads to new dependent measures of association between inputs and outputs that allow for i) dealing with any distribution of AFs, including the Cauchy distribution, and ii) accounting for the necessary or desirable moments of AFs and the interactions among the input variables. In uncertainty quantification for mathematical models, a number of existing measures are special cases of this framework. We then provide unified and general global sensitivity indices and their consistent estimators, including their asymptotic distributions. For Gaussian-distributed AFs, we obtain Sobol’ indices and dependent generalized sensitivity indices using quadratic kernels.

keywords:

Dimension reduction, Independence tests, Kernel methods, Reducing uncertainties, Non-independent input variables

1 Introduction

In statistical modeling and data analysis, symmetric measures of dependence for random vectors such as the Pearson correlation ([1]); the Spearman correlation; the Kendall correlation; the canonical correlation coefficient ([2, 3]); the RV coefficient for two random vectors ([4]); the maximum mean discrepancy ([5, 6, 7]), including the energy distance ([8]); the Hilbert-Schmidt independence criterion ([9, 10, 11]), including the distance correlation ([12, 13]) and a recent correlation coefficient ([14]) have been used by different communities for testing whether two random vectors are independent or not, and then for measuring the dependence between such random vectors. Drawbacks, advantages and links between such dependent measures can be found in [15, 16, 11, 17] for instance.

The above dependent measures do not require any function linking both random vectors. In the presence of a mathematical model or a black-box function of the form , where are the model inputs with known probability distributions and is the model output, such measures can still be used for testing the independence between the model output and some inputs such as . Such a problem occurs in computer experiments, where a natural or human-induced phenomenon is represented by a complex computer code with numerous input variables ([18]). For dimension reduction, it is relevant to identify non-influential variables by means of different criteria, such as statistical tests of independence or the variance-based sensitivity indices (SIs) ([19, 20, 21, 22, 23]), which were developed within the statistical framework of ANOVA ([24, 25, 26, 19]).

Formally, the above dependent measures between and rely on a test statistic of the form or with some given functions. When a statistical test reveals that the random variables and are dependent, the associated test statistic usually serves as a reasonable measure of the association between both variables. While this crude dependent measure is relevant for screening input variables in some cases, it may not be satisfactory for ranking input variables because it involves only the inputs of interest and the output, or the output and its conditional expectation given the inputs of interest ([27, 28, 29, 30]). Moreover, it i) may require working with transformations of the model output rather than using ([31]); ii) may include undesirable effects or complicate the problem ([31, 32]); and iii) ignores the asymmetric roles of the model inputs and output. For instance, the MMD-based indices amount to using as outputs with some given functions, also known as feature maps (see [33]). Likewise, the HSIC-based indices proposed in [33] amount to working with transformations of the original inputs and output, as the HSIC requires defining one kernel on the inputs and another one on the outputs ([34]).

It is known that ANOVA remains an important step in the exploratory and confirmatory analysis of models ([35]), and it relies on direct use of the model output(s) and inputs. The well-known ANOVA-like decomposition of with independent variables can be written as follows ([24, 25, 26, 19, 36, 37]):

| (1) |

where is a subset of the model inputs whose subscripts belong to , and denotes the total ANOVA functional (AF) of . The FANOVA decomposition of functions with non-independent variables is similar thanks to dependency models of dependent variables (see Section 2 and [38, 39, 40]). ANOVA functionals are random variables that contain the primary information about the contributions of the input variables to the output variable. In view of Equation (1), does not depend on whenever the total AF follows the Dirac probability measure , that is,

almost surely (a.s.) (see [41] for independent variables and Lemma 1 in general).

The aim of this paper is to propose a new dependent measure between the model inputs and output(s) that is more comprehensive and flexible enough to account for the necessary or desirable statistical properties of AFs (e.g., higher-order moments, Cauchy and other heavy-tailed distributions, asymmetric distributions) and for the interactions among the input variables. The proposed dependent measure relies on properties of AFs, such as the independence criterion, and on reproducing kernel Hilbert spaces (RKHSs) ([42, 43, 44]). RKHS theory offers a flexible framework for precise statistical inference on probability distributions ([6, 34, 16]), and it is crucial for conducting independence tests that account for the necessary, sufficient or desirable statistical properties of distributions ([9, 6, 34, 45, 46, 32]). The proposed dependent measure yields first-order and total kernel-based sensitivity indices, and its empirical counterpart provides a test statistic for testing the independence between the model inputs and output(s).

This paper is organized as follows. Section 2 deals with AFs and their statistical properties regarding their ability to assess the effects of the input variables on the model output(s). Such properties enable the formulation of the initial null hypothesis for the independence test between the input variables and the model output(s), and of equivalent null hypotheses such as the variance . Most variance-based SIs rely on that null hypothesis for performing dimension reduction. Indeed, represents the non-normalized total SI of .

Since the associated alternative hypothesis is only sufficient for Gaussian-distributed AFs, and the Cauchy distribution does not have a finite variance, Section 3 develops equivalent null hypotheses for the independence test by embedding AFs into an appropriate RKHS according to their distributions. Moreover, as a given kernel can help in working with specific moments ([32]), we also provide a set of distribution-free kernels that guarantee the equivalent null hypothesis for the independence test between the model inputs and output(s). Section 4 presents a statistical test that leads to a useful measure of association between inputs and output(s). In Section 5, we formally introduce our empirical dependent measure and the associated test statistic, and we study the resulting kernel-based SIs. Section 6 establishes links with other importance measures, and Section 7 shows how log-concave kernels can be tuned to obtain importance-measure kernels. We provide analytical and numerical results in Section 8 and conclude in Section 9.

General notations

For an integer , input variables and , we use , and for the number of elements in . Thus, we have the partition . We also use to say that and have the same cumulative distribution function (CDF).

2 Theoretical properties of ANOVA functionals of (in)dependent inputs

In this section, we provide the properties of ANOVA functionals for functions with non-independent variables. Such properties serve as the initial null hypothesis for testing the independence between the random input variables and output variables. For the sake of generality, consider a vector-valued function , which takes a -dimensional random vector as input and provides outputs given by . We are interested in measuring the association between the following two random vectors: and with and , keeping in mind the asymmetric roles of the two random vectors.

For any distribution of , we are able to model as follows ([47, 38, 40, 39]):

| (2) |

where is a function, is a random vector of independent variables, and is independent of . Note that when consists of independent variables, the dependency function in (2) reduces to . Composing by the function in (2) yields

| (3) |

In view of Equation (3), the function takes two independent random vectors (i.e., and ) as inputs, and it provides outputs sharing the distribution of . For independent variables , we can see that . For the sake of generality, we use in what follows, which reduces to when the inputs are independent. As a matter of fact, we can always claim that

(A1) our function of interest takes two independent random vectors as inputs: and .

Note that (A1) is not theoretically or practically restrictive because it is always satisfied thanks to dependency models (see (2)).

2.1 ANOVA functionals

Under (A1), the Hoeffding decomposition of is given by ([24, 25, 26, 19])

where is the expectation taken w.r.t. ;

The first-order and total AFs of are defined as follows ([23, 48, 38, 39, 49, 40]):

It is worth noting that both AFs are zero-mean and -dimensional random vectors, which are directly based on the model outputs. We also have the following relationship:

| (4) |
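Because the defining formulas of the AFs are not reproduced in this section, the sketch below assumes the standard definitions for independent inputs used in the cited literature: the first-order AF E[f(X) | X_u] - E[f(X)] and the total AF f(X) - E[f(X) | X_~u]. It estimates both by nested Monte Carlo. The function name `anova_functionals`, the `sampler` interface and the sample sizes are hypothetical illustration choices, not the estimator developed in this paper.

```python
import numpy as np


def anova_functionals(f, sampler, u, n_outer=2000, n_inner=200, rng=None):
    """Nested Monte Carlo sketch of the first-order and total AFs of X_u.

    Assumes independent inputs and the standard definitions
        first-order: E[f(X) | X_u] - E[f(X)]
        total:       f(X) - E[f(X) | X_{~u}]
    f maps an (n, d) array to an (n,) array; sampler(n, rng) returns (n, d) draws of X.
    """
    rng = np.random.default_rng(rng)
    x = sampler(n_outer, rng)                  # outer draws of X
    not_u = [j for j in range(x.shape[1]) if j not in u]
    y = f(x)
    cond_u = np.empty(n_outer)                 # estimates of E[f(X) | X_u = x_u]
    tot = np.empty(n_outer)
    for i in range(n_outer):
        inner = sampler(n_inner, rng)          # fresh draws for the inner averages
        a = inner.copy()
        a[:, u] = x[i, u]                      # freeze X_u, resample X_{~u}
        b = inner.copy()
        b[:, not_u] = x[i, not_u]              # freeze X_{~u}, resample X_u
        cond_u[i] = f(a).mean()
        tot[i] = y[i] - f(b).mean()
    fo = cond_u - cond_u.mean()                # E[E[f | X_u]] = E[f], so centre empirically
    return fo, tot
```

For example, take `f = lambda x: x[:, 0] + x[:, 0] * x[:, 1]`, `sampler = lambda n, rng: rng.uniform(size=(n, 2))` and `u = [0]`. The exact second moments are 3/16 for the first-order AF and 7/36 for the total AF. The estimates should be close to these values, and both AFs should have a mean close to zero.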

Recall that AFs contain the primary information about the contribution of the random vector to the model outputs given by . The key property of AFs is given in Lemma 1.

Lemma 1

Under (A1), is independent of iff

Proof. See Appendix A.

From Lemma 1, it is clear that the total AF is sufficient for fully characterizing the independence between and . We then formulate the initial test hypotheses of independence as follows:

While the null hypothesis is equivalent to , with a null matrix, its alternative hypothesis given by may not be satisfactory because it does not account for higher-order moments. Moreover, this null hypothesis implicitly requires the existence of the covariance matrix of AFs, which is not the case for the Cauchy distribution, for instance. Therefore, we need a dependent measure that accounts for sufficient or desirable higher-order moments for a given distribution of . Since the total AF is a random vector, we may use probability metrics based on the difference between distribution functions, the Wasserstein metric, or kernel methods for making such comparisons. Extensive discussions about such metrics and kernel methods can be found in [16]. To develop our dependent measure, we use kernel methods, which are flexible enough to include specific moments of AFs.

2.2 Embedding ANOVA functionals into a RKHS

To build a statistical test that i) leads to useful dependent measures, ii) is flexible enough to explicitly include specific moments, and iii) is able to distinguish two different distributions, we embed AFs into an RKHS or feature space according to their distributions ([44, 6, 34]).

Definition 1

(Aronszajn, 1950)

Let be an arbitrary space and be a Hilbert space endowed with the inner product . The functions

(i) is called a feature map;

(ii) given by is called a valid kernel.

A kernel is said to be centered at when . Given a kernel , we can construct a new kernel that is centered at as follows ([11]):

For a -dimensional random vector having as CDF (i.e., ) such as AFs, the transformation aims at embedding this random vector into a RKHS induced by with an i.i.d. copy of . Linear statistics in the new RKHS such as the mean element account for all the moments or desirable moments of depending on the kernel ([6, 34, 7]). For embedding AFs into a RKHS and working with the mean element (see Definition 2), we use for the set of Borel probability distributions and define the set of AFs distributions as follows:

The class of distributions is adequate for manipulating AFs. For a valid and measurable kernel, we assume that

(A2): for all .

Definition 2

Generally, the feature map is used to embed into higher-dimensional random vectors so as to capture all types of information about the data. Characteristic kernels are designed to accomplish such tasks ([50, 6, 45, 46, 51]).

Definition 3

Taking the distance between and leads to the maximum mean discrepancy (MMD), that is,

([5, 6, 7]). Note that the centered kernel associated with a valid kernel is a characteristic kernel if and only if is a characteristic one ([11]).

Thus, the mean element associated with a characteristic kernel uniquely determines a probability distribution. This property allows us to use the mean element to fully characterize the distribution of AFs. Note that characteristic kernels can also be defined for specific classes of distributions, such as the class of Gaussian distributions. Thus, characteristic kernels on are sufficient for distinguishing different AFs. For instance, while a test statistic that can distinguish the first two moments is sufficient for the class of Gaussian distributions, the mean element of the form with , which generalizes the notion of moment-generating function in probability, allows for distinguishing all the moments of a probability distribution.
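The centering construction cited from [11] earlier in this section can be made concrete with a short Python sketch. It assumes the standard form k0(z, z') = k(z, z') - k(z, z0) - k(z0, z') + k(z0, z0). This expression equals the inner product of the shifted feature maps phi(z) - phi(z0) and phi(z') - phi(z0), so it is still a valid kernel. The helper names `center_kernel` and `gaussian` are hypothetical, and so is the Gaussian bandwidth convention.

```python
import numpy as np


def gaussian(z, zp, s=1.0):
    """Gaussian kernel exp(-||z - z'||^2 / (2 s^2)); the bandwidth convention is an assumption."""
    z, zp = np.asarray(z, dtype=float), np.asarray(zp, dtype=float)
    return np.exp(-np.sum((z - zp) ** 2, axis=-1) / (2.0 * s ** 2))


def center_kernel(kernel, z0):
    """Return k0(z, z') = k(z, z') - k(z, z0) - k(z0, z') + k(z0, z0).

    k0 is the kernel of the shifted feature map phi(z) - phi(z0), so it is
    still positive semi-definite and satisfies k0(z, z0) = 0 for every z.
    """
    z0 = np.asarray(z0, dtype=float)

    def k0(z, zp):
        return kernel(z, zp) - kernel(z, z0) - kernel(z0, zp) + kernel(z0, z0)

    return k0
```

Centering at z0 = 0 makes k0(z, 0) vanish identically. The Gram matrix `k0(z[:, None, :], z[None, :, :])` of any sample remains symmetric and positive semi-definite.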

3 Kernels for equivalent null hypothesis

This section provides a set of kernels that ensure the equivalent criterion of independence between the input variables and the model outputs. Recall that the null hypothesis is an equivalent criterion of independence, and it is also obtained using the quadratic kernel, that is, . Indeed, if we use for i.i.d. copies of , we can check that

It is worth noting that kernels of the form for integer lead to an equivalent null hypothesis of independence, although such kernels are not characteristic in general. Since some kernels do not ensure the independence criterion, we introduce the following definitions, which rely on Lemma 1. Namely, we use for the set of valid and measurable kernels, and for the CDF of the Dirac probability measure .

Definition 4

A kernel is said to be an equivalent kernel for the independence test between and whenever

| (5) |

The equivalence used in Definition 4 is guaranteed by Lemma 1. For a kernel centered at (i.e., ), the left-hand side of Equation (5) becomes

.

To construct the set of equivalent kernels for the independence test, consider the following set of kernels:

| (6) |

We can check that contains quadratic kernels that are centered at , and we are going to see that it contains some well-known characteristic kernels. Lemma 2 gives interesting properties of regarding the null hypothesis.

Lemma 2

Let and assume that (A1) and (A2) hold. Then,

is an equivalent kernel for the independence test between and .

Proof. See Appendix B.

Lemma 2 shows that the set given by (6) contains equivalent kernels for the independence criterion, whatever the distributions of the model outputs and total AFs. Although is not exhaustive, Lemma 3 shows its richness. To that end, consider the well-known radial-based characteristic kernels on given by

where is a positive and bounded Borel measure with the support .

Lemma 3

Let and assume (A1)-(A2) hold.

(i) If is a characteristic kernel, then the centered kernel .

(ii) If is a radial-based characteristic kernel, then .

Proof. See Appendix C.

Kernels of Point (i) in Lemma 3 lead to a comparison between the distributions of the total AF and the Dirac measure using the maximum mean discrepancy (see Section 6.2). Point (ii), in turn, offers other possibilities that allow for working with non-centered characteristic kernels such as Gaussian kernels.

Examples of equivalent kernels for the independence test

1. A class of distance-induced characteristic kernels that are already centered at contains the following kernels ([11]):

2. Recall that kernels given by for any integer belong to . Moreover, for any integer and positive definite diagonal matrix , kernels of the form are in . Each such kernel suffices for incorporating the -order moments of the total AF and all the correlations among the components of AFs. In general, we can incorporate all the moments by taking , which leads to the exponential kernel, that is, with .

3. Finally, radial-based characteristic kernels such as the Gaussian, Laplacian and Cauchy kernels, together with their associated centered kernels, belong to . Note that the Gaussian kernel is the normalized version of the exponential kernel.
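The kernels listed above can each be written in a few lines. The sketch below uses hypothetical bandwidth conventions, because the constants used in this paper are not shown here. It also checks the last remark of the list numerically: dividing the exponential kernel exp(<z, z'>/s^2) by sqrt(k(z, z) k(z', z')) gives the Gaussian kernel exp(-||z - z'||^2/(2 s^2)).

```python
import numpy as np

# Hypothetical bandwidth conventions (s > 0); the paper's exact constants are not shown.


def gaussian(z, zp, s=1.0):
    return np.exp(-np.sum((z - zp) ** 2, axis=-1) / (2.0 * s ** 2))


def laplacian(z, zp, s=1.0):
    return np.exp(-np.linalg.norm(z - zp, axis=-1) / s)


def cauchy(z, zp, s=1.0):
    return 1.0 / (1.0 + np.sum((z - zp) ** 2, axis=-1) / s ** 2)


def exponential(z, zp, s=1.0):
    # Moment-generating kernel exp(<z, z'> / s^2): its power series contains every
    # power of <z, z'>, so it is sensitive to moments of every order.
    return np.exp(np.sum(z * zp, axis=-1) / s ** 2)


def normalized(kernel):
    """Return the normalized kernel k(z, z') / sqrt(k(z, z) k(z', z'))."""
    def k_norm(z, zp, s=1.0):
        return kernel(z, zp, s) / np.sqrt(kernel(z, z, s) * kernel(zp, zp, s))
    return k_norm
```

For instance, `normalized(exponential)(z, zp, 0.7)` agrees with `gaussian(z, zp, 0.7)` up to floating-point error. This follows from the identity 2<z, z'> - ||z||^2 - ||z'||^2 = -||z - z'||^2.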

4 New independence test and dependent measure between inputs and outputs

For testing the independence between and , and for obtaining a useful dependent measure when contributes to the outputs , we use kernels that guarantee the equivalent null hypothesis of independence between these random vectors, such as the set of kernels .

4.1 Test hypotheses and deviation measure from independence

For concise notation, we use , , and note that is an i.i.d. copy of . For a real , , the generic test hypotheses are formally given by

If we use for the CDF of , we can measure the deviation from independence as follows:

| (7) |

When , stands for . The discrepancy measure in (7) remains valid for any CDF, such as the CDF of the first-order AFs, that is, . A reasonable measure of the deviation from independence (or, equivalently, the kernel that defines it) must account for the fact that the first-order AFs bring partial information compared to the total ones (see Equation (4)). This leads to the following definition.

Definition 5

Consider AFs given by , and a kernel .

The kernel is said to be ANOVA compatible whenever

Using Jensen’s inequality, we can check that the quadratic kernel is ANOVA compatible while the Hellinger kernel given by is clearly not. Combining the notion of ANOVA-compatible kernels and equivalent kernels for the independence test leads to the definition of importance-measure kernels (IMKs).

Definition 6

A valid kernel is said to be an IMK whenever is ANOVA compatible and . We use for the set of IMKs.

While the quadratic kernel belongs to , it is not a characteristic kernel in general; it is, however, characteristic for the class of Gaussian-distributed AFs. Lemma 4 provides some conditions for kernels to be IMKs.

Lemma 4

Let be kernels, and assume (A1)-(A2) hold.

(i) If and is convex in , then .

(ii) If is convex in , then .

(iii) If is concave in and , then .

Proof. See Appendix D.

From Lemma 4, convex kernels and some concave kernels are IMKs. Of course, the convexity or concavity assumptions are only required on the support of the output distribution. For log-concave kernels of the form with a convex function and , we can control such kernels through in order to obtain concave kernels on the support of the outputs (see Section 7).

Examples of IMKs on .

1. The quadratic kernel of the form with a diagonal and positive definite matrix.

2. The absolute kernel, or -based kernel, of the form .

3. The moment-generating kernel, or exponential kernel, given by and its associated centered kernel at with .

4. The Laplacian kernel and the Gaussian kernel for some values of (see Section 7).

The IMKs provided in Lemma 4 ensure useful properties of the discrepancy measure defined in Equation (7). To state such results in Theorem 1, let us consider the input variables with . We use (resp. ) for the CDF of the first-order (resp. total) AF of , that is, and .

Proof. See Appendix E.

It follows from Theorem 1 that the discrepancy measure does not decrease when inputs are added to a subset of inputs. This monotonicity is commonly expected, as it holds in ANOVA and variance-based sensitivity analysis. Thus, Lemma 4 provides kernels that guarantee properties encountered in ANOVA.

4.2 Test statistic and dependent measure between inputs and outputs

Based on the results of Theorem 1, the IMKs given in Lemma 4 and Equation (7) can lead to a coherent measure of association between inputs and outputs according to Rényi’s axioms ([15]). To define such dependent measures, we use for the CDF of the centered outputs and for an i.i.d. copy of .

Definition 7

For a real , let be an IMK and be the CDF of any AF.

The dependent measure of a random vector is defined by

| (10) |

For IMKs provided in Lemma 4, the right term of Equation (10) can be written without the absolute symbol. Moreover, the dependent measures of the first-order and total AFs of having and as CDFs are given by, respectively

In what follows, we will call and the first-order and total kernel-based sensitivity indices, respectively. Indeed, we are going to see that and represent some well-known first-order and total sensitivity indices for some kernels (see Section 6). Formal properties of such dependent measures are given in Corollary 1.

Corollary 1

Let be an IMK given in Lemma 4. Assume that (A1)-(A2) hold. Then,

(i) ;

(ii) iff is independent of ;

(iii) iff ;

(iv) .

Proof. See Appendix F.

In view of Corollary 1, an equivalent null hypothesis for the independence test between the inputs and the outputs is given by

and the associated test statistic will rely on the estimator of . Indeed, performing a statistical test of independence requires an empirical statistic and its distribution under the null hypothesis.

5 Empirical test statistic and dependent measures

This section aims at providing empirical dependent measures, including empirical kernel-based sensitivity indices (Kb-SIs) and the test statistic. Note that the first-order AF and the total AF for all will lead to the first-order and total Kb-SIs. For concise notations and when there is no ambiguity, we are going to use , instead of , .

For computing the dependent measures defined in Section 4.2, we are given two i.i.d. samples, that is, and from the random vector , where the four components are mutually independent. Define

Also, recall that the law of large numbers (LLN) ensures the convergence in probability of the following estimators when :

Using the plug-in approach, we provide the estimators of , and their statistical properties in Theorem 2.

Theorem 2

Let be a kernel that is differentiable almost everywhere (A3), and assume (A1)-(A2) hold. If , then

(i) a consistent estimator of is given by

| (11) |

(ii) A consistent estimator of is given by

| (12) |

(iii) A consistent estimator of under the null hypothesis is given by

| (13) |

(iv) A consistent estimator of is given by

| (14) |

Proof. See Appendix G.

Based on Theorem 2, we derive i) the estimators of Kb-SIs in Corollary 2, and ii) the empirical test statistic under the null hypothesis and its asymptotic distribution in Corollary 3. Estimating the Kb-SIs for at least the input variables with or every subset of inputs will require different samples of the form . We then use for the size of the sample that can be used to estimate , that is,

Corollary 2

Let be a Gaussian variable, and assume (A1)-(A3) hold.

(i) The consistent estimators of and are given by

| (15) |

| (16) |

(ii) If , with , then we have the following asymptotic distributions:

Proof. See Appendix H.

Corollary 3

Let be a Gaussian variable, and assume (A1)-(A3) hold.

(i) If , then a test statistic under the null hypothesis is given by

| (17) |

(ii) If with , then a discrepancy-based test statistic under the null hypothesis is given by

| (18) |

Using Theorem 2 and Corollary 2, the results provided in Corollary 3 follow directly from Slutsky’s theorem. For instance, we can see that

under the null hypothesis. We rely on given by (18) for performing independence tests, as it is linked to the total Kb-SIs. Thus, the estimators , and are used for performing such independence tests. The testing procedure consists of computing and then comparing the obtained value with the critical value of at a given significance level such as . In general, this critical value is the empirical quantile of the distribution of associated with . For or , the quantile of the chi or chi-squared distribution with degrees of freedom can be used directly.
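The decision rule described above can be sketched as follows. The sketch assumes that the user already has draws of the test statistic simulated under the null hypothesis; how to generate them is not shown, and `independence_decision` is a hypothetical name. When the null distribution is a known chi-squared law, `scipy.stats.chi2.ppf(1 - alpha, df)` returns the critical value directly and can replace the empirical quantile.

```python
import numpy as np


def independence_decision(stat, null_draws, alpha=0.05):
    """Compare a test statistic with the (1 - alpha) empirical quantile of its null law.

    null_draws: values of the statistic simulated under independence (assumed given).
    Returns (reject_independence, critical_value).
    """
    critical = float(np.quantile(np.asarray(null_draws, dtype=float), 1.0 - alpha))
    return bool(stat > critical), critical
```

With 200,000 draws from a chi-squared law with one degree of freedom and alpha = 0.05, the empirical critical value is close to 3.84. A statistic equal to 5.0 therefore leads to rejecting independence, and a value of 1.0 does not.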

6 Links with other importance measures

For -dimensional random vectors and kernels of the form , the discrepancy measure becomes

6.1 Variance-based importance measure

For the kernel , the associated discrepancy measure leads to the generalized sensitivity indices (GSIs) of the first-type, including Sobol’ indices (see [19, 20, 21, 22] for independent variables and [38, 39] for dependent and correlated variables). Likewise, the kernel and the associated measure lead to the second-type GSIs (see [48, 38, 39]).
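For the quadratic kernel, the total measure reduces to the classical total Sobol’ index. The sketch below uses the well-known Jansen pick-freeze estimator. This is a standard technique swapped in for illustration, not the estimator of Section 5. It assumes independent inputs, and the name `total_sobol_jansen` is hypothetical. For f(x1, x2) = x1 + x1 x2 with i.i.d. U(0, 1) inputs, the exact total indices are 28/31 ≈ 0.903 for x1 and 4/31 ≈ 0.129 for x2.

```python
import numpy as np


def total_sobol_jansen(f, sampler, u, n=100_000, rng=None):
    """Jansen-type pick-freeze estimate of the total Sobol' index of X_u.

    Assumes independent inputs. Resampling X_u while keeping X_{~u} gives
    E[(f(X) - f(X'_u, X_{~u}))^2] / 2 = E[Var(f(X) | X_{~u})],
    i.e. the second moment of the total AF under its standard definition.
    """
    rng = np.random.default_rng(rng)
    x, xp = sampler(n, rng), sampler(n, rng)
    mixed = x.copy()
    mixed[:, u] = xp[:, u]                     # replace the u-columns only
    y, y_mixed = f(x), f(mixed)
    return 0.5 * np.mean((y - y_mixed) ** 2) / np.var(y)
```

Calling the estimator with `u = [0, 1]` gives a value close to 1. This is consistent with the monotonicity discussed after Theorem 1: enlarging the subset of inputs does not decrease the index.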

Remark 1

For any AF , it is worth noting that

(i) is the first-order Taylor approximation of with the Gaussian kernel;

(ii) is the second-order Taylor approximation of with the exponential kernel.

Thus, the well-known variance-based SIs are approximations of the kernel-based SIs associated with some characteristic IMKs.

6.2 Maximum mean discrepancy and Energy distance

For any AF and the centered kernel at zero given by , we can see that

is the squared MMD between the distribution and the Dirac measure .

Kernels induced by the semimetric with , that is,

are centered at zero and belong to the set of kernels that guarantee the independence criterion. For such kernels, the measure

is twice the squared energy distance between the distribution and the Dirac delta measure (see [8] for more details). Recall that such kernels must be convex or concave on the support of AFs to be IMKs.
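The two statements above can be checked numerically. The sketch below estimates MMD^2 between the law of a sample Z and the Dirac measure at zero. It uses the expansion E k(Z, Z') - 2 E k(Z, 0) + k(0, 0), with a U-statistic for the first term. It also compares the result obtained with the distance-induced kernel 0.5(||z||^q + ||z'||^q - ||z - z'||^q) against the energy distance 2 E||Z||^q - E||Z - Z'||^q to the Dirac measure at zero. The exact form of this kernel and the Gaussian bandwidth are assumptions, since the corresponding formulas are not shown here. All function names are hypothetical, and conventions for the energy distance differ by constant factors across references.

```python
import numpy as np


def gaussian_kernel(z, zp, s=1.0):
    # Assumed convention: exp(-||z - z'||^2 / (2 s^2)).
    return np.exp(-np.sum((z - zp) ** 2, axis=-1) / (2.0 * s ** 2))


def distance_kernel(z, zp, q=1.0):
    # Assumed distance-induced kernel centred at 0, with 0 < q <= 2.
    norm_q = lambda v: np.linalg.norm(v, axis=-1) ** q
    return 0.5 * (norm_q(z) + norm_q(zp) - norm_q(z - zp))


def mmd2_to_dirac(z, kernel):
    """U-statistic estimate of MMD^2 between the law of Z (rows of z) and delta_0."""
    n, m = z.shape
    gram = kernel(z[:, None, :], z[None, :, :])            # (n, n) Gram matrix
    off_diag = (gram.sum() - np.trace(gram)) / (n * (n - 1))
    zero = np.zeros(m)
    return off_diag - 2.0 * kernel(z, zero).mean() + kernel(zero, zero)


def energy_distance_to_dirac(z, q=1.0):
    """Estimate of 2 E||Z||^q - E||Z - Z'||^q, the energy distance to delta_0."""
    n = z.shape[0]
    pair = np.linalg.norm(z[:, None, :] - z[None, :, :], axis=-1) ** q
    return 2.0 * np.mean(np.linalg.norm(z, axis=-1) ** q) - pair.sum() / (n * (n - 1))
```

With the distance-induced kernel, twice the estimated MMD^2 coincides with the energy-distance estimate. For a sample concentrated at zero, the Gaussian-kernel MMD^2 is zero. Note that the U-statistic can be slightly negative when the true value is near zero.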

While the MMD and the energy distance are used for testing independence between random vectors, additional conditions on the associated kernels are needed to obtain ANOVA-compatible kernels and importance measures of dependence between the inputs and the outputs.

7 Importance measure kernels based on log-concave kernels

This section shows how one can control log-concave kernels of the form to obtain concave kernels, where and is a convex function. Note that some radial-based characteristic kernels, such as the Laplacian and Gaussian kernels, are log-concave. In what follows, we use for the subgradient of ([52]) and for the Hessian of . When is differentiable, the subgradient is equal to the gradient .

Lemma 5

Let be the support of the outputs and . Assume is convex and continuous.

(i) The function is concave on whenever

(ii) If is twice differentiable, then is concave on when

Proof. See Appendix I.

For a bounded support , that is, for all with a constant, the first condition becomes

For instance, the Laplacian kernel given by is concave on the bounded support for every satisfying

.

Likewise, the Gaussian kernel given by is concave on when

.
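For intuition about such conditions, consider the one-dimensional case with the assumed parameterization k(z, z') = exp(-(z - z')^2/(2 sigma^2)). This is an illustration, not the condition stated in Lemma 5. The second derivative of k in z is k(z, z')((z - z')^2/sigma^4 - 1/sigma^2), which is non-positive if and only if |z - z'| <= sigma. On a bounded support [-M, M], where |z - z'| <= 2M, concavity in the first argument therefore holds for every pair when sigma >= 2M. The sketch checks this on a grid and validates the analytic second derivative against a finite difference. All function names are hypothetical.

```python
import numpy as np


def gaussian_kernel(z, zp, sigma):
    """Assumed 1-D Gaussian kernel exp(-(z - z')^2 / (2 sigma^2))."""
    return np.exp(-((z - zp) ** 2) / (2.0 * sigma ** 2))


def second_derivative(z, zp, sigma):
    """Analytic d^2/dz^2 of the Gaussian kernel with respect to its first argument."""
    t = z - zp
    return gaussian_kernel(z, zp, sigma) * (t ** 2 / sigma ** 4 - 1.0 / sigma ** 2)


def is_concave_on_box(M, sigma, n_grid=201):
    """Check that k(., z') is concave on [-M, M] for every z' in [-M, M] (grid check)."""
    grid = np.linspace(-M, M, n_grid)
    z, zp = np.meshgrid(grid, grid, indexing="ij")
    return bool(np.all(second_derivative(z, zp, sigma) <= 1e-12))
```

For example, `is_concave_on_box(1.0, 2.0)` returns `True` because sigma = 2M. `is_concave_on_box(1.0, 1.5)` returns `False`, since pairs with |z - z'| > 1.5 exist in [-1, 1].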

For an unbounded support , which is the case for most log-concave probability measures, the parameter can be chosen so that the inequalities provided in Lemma 5 fail only with a small probability, namely about (see Corollary 4).

Corollary 4

Let be two i.i.d. copies of the outputs ; and . Assume that is convex and continuous.

(i) The kernel is concave on with high probability () when

(ii) If is twice differentiable, then is concave with high probability when

Proof. See Appendix J.

Thus, the Laplacian kernel is concave on the support with high probability () when . In the case of the Gaussian kernel, the condition becomes . For a given , we may choose for the first condition and for the second one.

8 Simulation study

To illustrate our approach, we consider two functions and the following kernels: the quadratic kernel , the -based kernel , the Gaussian kernel and the Laplacian kernel . In this section, and were chosen according to Corollary 4 using the variance of the model output(s).

8.1 Sobol’ function

Consider a model that includes ten independent variables following the uniform distribution, that is, with , and given by

The variance of is , and the Kb-SIs were computed using , , , and (see Corollary 4). The estimated Kb-SIs are reported in Table 1, including the Kb-SIs associated with the exponential kernel, that is, .

The independence tests based on (18) reveal that the output depends on all the input variables for all kernels except the exponential kernel. According to the test based on the exponential kernel, depends only on and . For the other kernels, the total Kb-SIs yield the same ranking of inputs. For selecting the most influential variables, it is common to fix a threshold . When , all the inputs appear important according to the Gaussian, Laplacian and, to some extent, the L1-based kernels. Sobol’ indices (quadratic kernel) and the exponential Kb-SIs identify and as the most important variables. Such differences arise because different kernels capture different information about AFs. For instance, it is known that small norms such as the L1-based kernel capture slow variations of AFs.

| Kernels | X1 | X2 | X3 | X4 | X5 | X6 | X7 | X8 | X9 | X10 |

|---|---|---|---|---|---|---|---|---|---|---|

| First-order Kb-SIs | ||||||||||

| L1-based | 0.678 | 0.679 | 0.090 | 0.090 | 0.090 | 0.090 | 0.090 | 0.090 | 0.090 | 0.090 |

| Quadratic | 0.393 | 0.393 | 0.007 | 0.007 | 0.007 | 0.007 | 0.007 | 0.007 | 0.007 | 0.007 |

| Gaussian | 0.695 | 0.694 | 0.097 | 0.098 | 0.097 | 0.097 | 0.097 | 0.097 | 0.097 | 0.097 |

| Laplacian | 0.854 | 0.853 | 0.328 | 0.329 | 0.327 | 0.327 | 0.327 | 0.328 | 0.327 | 0.327 |

| Exponential | 0.106 | 0.107 | 0.001 | 0.003 | 0.002 | 0.002 | 0.002 | 0.001 | 0.001 | 0.001 |

| Total Kb-SIs | ||||||||||

| L1-based | 0.675 | 0.678 | 0.089 | 0.089 | 0.089 | 0.089 | 0.089 | 0.089 | 0.088 | 0.091 |

| Quadratic | 0.531 | 0.548 | 0.013 | 0.012 | 0.012 | 0.012 | 0.012 | 0.012 | 0.012 | 0.013 |

| Gaussian | 0.787 | 0.787 | 0.131 | 0.131 | 0.131 | 0.130 | 0.130 | 0.131 | 0.131 | 0.131 |

| Laplacian | 0.894 | 0.890 | 0.356 | 0.357 | 0.356 | 0.356 | 0.355 | 0.357 | 0.357 | 0.356 |

| Exponential | 0.174 | 0.188 | 0.002 | 0.002 | 0.002 | 0.005 | 0.004 | 0.003 | 0.002 | 0.004 |

| Test statistics values (, (18)) | ||||||||||

| L1-based | 4.966 | 4.907 | 4.236 | 4.362 | 4.355 | 4.314 | 4.285 | 4.289 | 4.374 | 4.271 |

| Quadratic | 3.659 | 3.494 | 2.640 | 2.770 | 2.928 | 2.677 | 2.654 | 2.745 | 2.955 | 2.836 |

| Gaussian | 5.244 | 5.189 | 4.517 | 4.554 | 4.571 | 4.609 | 4.543 | 4.504 | 4.590 | 4.524 |

| Laplacian | 6.034 | 6.008 | 5.688 | 5.699 | 5.695 | 5.710 | 5.702 | 5.707 | 5.711 | 5.692 |

| Exponential | 2.621 | 2.259 | 0.314 | 0.364 | 0.275 | 0.959 | 0.687 | 0.473 | 0.305 | 0.624 |

| Critical values | ||||||||||

| L1-based | 1.393 | 1.412 | 1.379 | 1.386 | 1.394 | 1.387 | 1.408 | 1.393 | 1.398 | 1.370 |

| Quadratic | 1.412 | 1.426 | 1.410 | 1.380 | 1.409 | 1.363 | 1.403 | 1.422 | 1.386 | 1.396 |

| Gaussian | 1.399 | 1.399 | 1.406 | 1.399 | 1.373 | 1.391 | 1.399 | 1.374 | 1.392 | 1.405 |

| Laplacian | 1.387 | 1.409 | 1.404 | 1.394 | 1.401 | 1.409 | 1.395 | 1.411 | 1.393 | 1.409 |

| Exponential | 1.420 | 1.422 | 1.385 | 1.415 | 1.383 | 1.388 | 1.389 | 1.413 | 1.396 | 1.385 |

| Decision about dependence | ||||||||||

| L1-based | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| Quadratic | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| Gaussian | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| Laplacian | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| Exponential | Yes | Yes | No | No | No | No | No | No | No | No |
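The "Decision about dependence" rows are consistent with flagging dependence whenever a test statistic exceeds its critical value. The sketch below assumes that rule (it is inferred from the table, not stated in this section) and recomputes the decisions for the quadratic and exponential kernels from the tabulated values. The helper name `decide` is hypothetical.

```python
# Rows copied from the table above (one value per column).
test_stats = {
    "Quadratic":   [3.659, 3.494, 2.640, 2.770, 2.928, 2.677, 2.654, 2.745, 2.955, 2.836],
    "Exponential": [2.621, 2.259, 0.314, 0.364, 0.275, 0.959, 0.687, 0.473, 0.305, 0.624],
}
critical_values = {
    "Quadratic":   [1.412, 1.426, 1.410, 1.380, 1.409, 1.363, 1.403, 1.422, 1.386, 1.396],
    "Exponential": [1.420, 1.422, 1.385, 1.415, 1.383, 1.388, 1.389, 1.413, 1.396, 1.385],
}

def decide(stats, crits):
    """Assumed rule: flag dependence ("Yes") when a statistic exceeds its critical value."""
    return ["Yes" if s > c else "No" for s, c in zip(stats, crits)]

for kernel in test_stats:
    print(kernel, decide(test_stats[kernel], critical_values[kernel]))
```

Under this rule the exponential kernel flags only the first two columns, while the quadratic kernel flags all ten, which matches the table.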

8.2 Vector-valued function

Consider the following model

| (19) |

which includes two correlated variables, that is, with the correlation coefficient and . Using the dependency models ([38, 39]), that is, and with and , the equivalent representations of the model are given by

The first-order and total AFs are given by

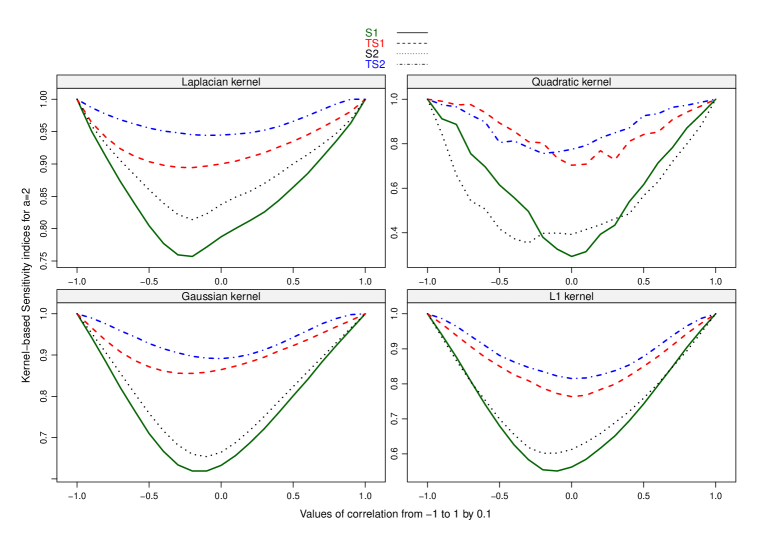

When , both inputs are independent, and we can see that the components of and are correlated, while those of and are not correlated, although they are dependent. We can also check that these AFs are not Gaussian-distributed. Figure 1 compares estimates of kernel-based SIs associated with the four kernels using and with the trace of the covariance of when (see [38]).

As expected, the total Kb-SIs are always greater than the first-order indices. While the Gaussian, Laplacian and Kb-SIs give the same ranking of the inputs, the quadratic Kb-SIs reverse it and identify as the most important input for negative values of the correlation coefficient.
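Dependency models represent correlated inputs through independent variables. As a minimal sketch, assuming standard Gaussian marginals and the common linear form `X2 = rho * X1 + sqrt(1 - rho**2) * Z` with `Z` independent of `X1` (an assumption; the dependency models actually used for model (19) are those of [38, 39]), the code below simulates such a pair for one illustrative value of `rho` and checks the empirical correlations. The function name is hypothetical.

```python
import numpy as np

def correlated_gaussian_pair(n, rho, rng):
    """Draw n samples of (X1, X2) with N(0, 1) marginals and correlation rho.

    Assumed dependency-model form: X2 = rho * X1 + sqrt(1 - rho**2) * Z,
    where Z ~ N(0, 1) is independent of X1, so (X1, Z) are independent inputs.
    """
    x1 = rng.standard_normal(n)
    z = rng.standard_normal(n)
    x2 = rho * x1 + np.sqrt(1.0 - rho**2) * z
    return x1, x2, z

rng = np.random.default_rng(0)
x1, x2, z = correlated_gaussian_pair(100_000, -0.6, rng)
print(np.corrcoef(x1, x2)[0, 1])  # close to -0.6
print(np.corrcoef(x1, z)[0, 1])   # close to 0
```

Rewriting the model in terms of the independent pair `(X1, Z)` is what makes an ANOVA-type decomposition available even though `X1` and `X2` are correlated.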

9 Conclusion

In this paper, we have proposed a new dependent measure of association between the model inputs and outputs, together with the associated statistical test of independence, by making use of the total ANOVA functionals (AFs) of the inputs and kernel methods. The proposed test statistic and dependent measures are i) well suited to any output domain or object and to models with non-independent variables, ii) highly flexible, since they can explicitly include specific moments of AFs, and iii) able to distinguish between two different distributions of AFs. For the test statistics, we have provided generic kernels that guarantee the independence criterion between the outputs and the inputs. Most characteristic kernels are equivalent kernels for the independence criterion. It turns out that convex kernels, and some concave kernels, that guarantee the independence criterion lead to interesting dependent measures according to Rényi’s axioms.
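For readers who want a concrete kernel test of independence to experiment with, the sketch below implements a generic HSIC permutation test in the spirit of [9, 10]. It is not the statistic (18) built on total AFs; the Gaussian kernel, the median-heuristic bandwidth, the number of permutations and all function names are illustrative assumptions.

```python
import numpy as np

def _as_2d(a):
    """View a sample as an (n, d) array (1-D inputs become a single column)."""
    a = np.asarray(a, dtype=float)
    return a.reshape(len(a), -1)

def sq_dists(a):
    """Matrix of squared Euclidean distances between the rows of a."""
    a = _as_2d(a)
    return np.sum((a[:, None, :] - a[None, :, :]) ** 2, axis=-1)

def gaussian_gram(a):
    """Gaussian-kernel Gram matrix; bandwidth from the median squared distance (assumption)."""
    d2 = sq_dists(a)
    sigma2 = np.median(d2[d2 > 0])
    return np.exp(-d2 / (2.0 * sigma2))

def hsic_v(K, L):
    """Biased (V-statistic) HSIC estimate trace(K H L H) / n^2, H the centring matrix."""
    n = K.shape[0]
    H = np.eye(n) - 1.0 / n
    return np.trace(K @ H @ L @ H) / n**2

def hsic_permutation_test(x, y, n_perm=200, seed=0):
    """Permutation test of independence; returns (statistic, p-value)."""
    rng = np.random.default_rng(seed)
    K, L = gaussian_gram(x), gaussian_gram(y)
    stat = hsic_v(K, L)
    null = np.empty(n_perm)
    for b in range(n_perm):
        p = rng.permutation(K.shape[0])
        null[b] = hsic_v(K, L[np.ix_(p, p)])  # permuting y breaks any dependence on x
    p_value = (1 + np.sum(null >= stat)) / (1 + n_perm)
    return stat, p_value

# Toy data (assumption): Y depends on X but is uncorrelated with it.
rng = np.random.default_rng(1)
x = rng.uniform(-1.0, 1.0, 200)
y = x**2 + 0.05 * rng.standard_normal(200)
stat, p_value = hsic_permutation_test(x, y)
print(stat, p_value)  # a small p-value indicates dependence
```

The toy example is chosen so that the Pearson correlation is zero in theory, which illustrates why a characteristic kernel is needed to detect this kind of dependence.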

In uncertainty quantification, variance-based SA (Sobol’ indices, generalized sensitivity indices ([21, 22, 53, 48]) and dependent generalized sensitivity indices (dGSIs) ([38, 39])) and the Owen measure are special cases of the proposed dependent measures for suitable choices of the kernels. Moreover, some kernels lead to the maximum mean discrepancy and the energy distance ([5, 6, 7, 8]). When choosing kernels, we should prefer importance-measure kernels that detect independence between inputs and outputs as effectively as possible, that is, kernels that account for the necessary and sufficient moments, such as the first- and second-order moments for Gaussian-distributed AFs. For other distributions of AFs, the Laplacian, Gaussian and exponential kernels, which account for all the moments of any distribution, can be used, since these kernels are importance-measure kernels for some values of their parameters. In general, Sobol’ indices and dGSIs are a first-order (resp. second-order) approximation of the proposed dependent measures associated with the Gaussian (resp. exponential) kernel.
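The link between kernels, the maximum mean discrepancy and the energy distance can be checked numerically. For the distance-induced kernel `k(u, v) = (|u - z0| + |v - z0| - |u - v|) / 2`, the energy distance equals twice the squared MMD [11]. The sketch below verifies this identity on empirical (V-statistic) estimates for two arbitrary Gaussian samples; the helper names, the samples and the reference point `z0 = 0` are illustrative assumptions.

```python
import numpy as np

def _as_2d(a):
    """View a sample as an (n, d) array (1-D inputs become a single column)."""
    a = np.asarray(a, dtype=float)
    return a.reshape(len(a), -1)

def pairwise_dist(a, b):
    """Euclidean distances between the rows of a and the rows of b."""
    a, b = _as_2d(a), _as_2d(b)
    return np.sqrt(np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1))

def energy_distance(x, y):
    """V-statistic energy distance 2E|X-Y| - E|X-X'| - E|Y-Y'|."""
    return (2.0 * pairwise_dist(x, y).mean()
            - pairwise_dist(x, x).mean()
            - pairwise_dist(y, y).mean())

def mmd2_distance_kernel(x, y):
    """V-statistic MMD^2 with k(u, v) = (|u - z0| + |v - z0| - |u - v|) / 2."""
    x, y = _as_2d(x), _as_2d(y)
    z0 = np.zeros((1, x.shape[1]))  # reference point; MMD^2 does not depend on it

    def gram(a, b):
        return 0.5 * (pairwise_dist(a, z0) + pairwise_dist(b, z0).T - pairwise_dist(a, b))

    return gram(x, x).mean() + gram(y, y).mean() - 2.0 * gram(x, y).mean()

rng = np.random.default_rng(0)
x = rng.normal(0.0, 1.0, size=(300, 2))
y = rng.normal(0.5, 1.0, size=(250, 2))
ed = energy_distance(x, y)
mmd2 = mmd2_distance_kernel(x, y)
print(ed, 2.0 * mmd2)  # the two numbers agree up to rounding
```

The identity holds exactly for the empirical measures because the terms involving `z0` cancel between the within-sample and cross-sample averages.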

The computation of AFs requires evaluating conditional expectations of the model outputs given some inputs, and such evaluations may be computationally demanding to converge. In future work, it will be interesting to investigate efficient methods for computing conditional expectations in high dimensions. Gaussian process emulators ([54, 55]) of the models, new emulators based on [49], or the Nadaraya-Watson kernel estimator for high-dimensional nonparametric regression ([56]) will be investigated.
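As a minimal sketch of the Nadaraya-Watson route, assuming independent inputs and taking a first-order AF to be `E[Y | X_j] - E[Y]` (an assumption made for illustration), the code below estimates that quantity on a grid for a toy model whose answer is known in closed form. The toy model, bandwidth and function names are hypothetical, not taken from the paper.

```python
import numpy as np

def nadaraya_watson(x_train, y_train, x_eval, bandwidth):
    """Gaussian-kernel Nadaraya-Watson estimate of E[Y | X = x] at each point of x_eval."""
    x_train = np.asarray(x_train, dtype=float)
    y_train = np.asarray(y_train, dtype=float)
    x_eval = np.asarray(x_eval, dtype=float)
    u = (x_eval[:, None] - x_train[None, :]) / bandwidth
    w = np.exp(-0.5 * u**2)  # kernel weights, shape (len(x_eval), len(x_train))
    return (w @ y_train) / w.sum(axis=1)

def first_order_af(x_j, y, x_eval, bandwidth):
    """Hypothetical plug-in estimate of E[Y | X_j = x] - E[Y] at the points x_eval."""
    return nadaraya_watson(x_j, y, x_eval, bandwidth) - np.mean(y)

# Toy model with independent inputs (assumption, not model (19)): Y = X1^2 + X2.
rng = np.random.default_rng(0)
n = 5000
x1 = rng.uniform(-1.0, 1.0, n)
x2 = rng.uniform(-1.0, 1.0, n)
y = x1**2 + x2
grid = np.linspace(-0.8, 0.8, 17)
af1_hat = first_order_af(x1, y, grid, bandwidth=0.1)
af1_true = grid**2 - 1.0 / 3.0  # E[Y | X1 = x] - E[Y] = x^2 - 1/3
print(np.max(np.abs(af1_hat - af1_true)))
```

Evaluating on a grid rather than at every sample point keeps the weight matrix small; in higher dimensions the bandwidth choice and the curse of dimensionality become the main difficulties, which is what motivates [56].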

Appendix A Proof of Lemma 1

We can see that the output is independent of implies

Therefore, we have .

Conversely, if , the properties of conditional expectation show that there exists a function such that

which means that is a function of only, and the result holds.

Appendix B Proof of Lemma 2

Bearing in mind Definition 4, we want to show that

First, let us start with the kernel . Using the transfer theorem, we can write

with the CDF of and the CDF of .

For an SPD kernel , if the above quantity is zero, then ; and .

Second, we are going to use the criterion . Since is centered at , we have

which implies that .

Appendix C Proof of Lemma 3

For Point (i), we are going to use the first criterion of (see Equation (6)). We can write for all

Note that with the CDF of , and is a characteristic kernel when is a characteristic one. Thus, Point (i) holds because implies .

For Point (ii), we are going to use the second criterion of . According to Bochner's theorem and Fubini's theorem, we can write, for independent vectors,

Thus, implies that for all . For a class of finite and signed Borel measures of the form with a measurable function such as a difference of two probability densities, the function

is the Fourier transform of . As for all , we then have bearing in mind the inverse Fourier transform. Point (ii) holds because .
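For reference, the standard identity behind this Fourier argument (see, e.g., [16]) reads as follows for a bounded, continuous, translation-invariant kernel; the notation `psi`, `Lambda`, `varphi_P` is introduced here for illustration and is not a reconstruction of the missing displays. Here X, X' ~ P and Y, Y' ~ Q are mutually independent, and Lambda is a finite nonnegative Borel measure.

```latex
\begin{aligned}
k(\mathbf{x},\mathbf{y}) &= \psi(\mathbf{x}-\mathbf{y})
  = \int_{\mathbb{R}^d} e^{\,i\,\boldsymbol{\omega}^{\top}(\mathbf{x}-\mathbf{y})}\,\Lambda(d\boldsymbol{\omega}),
  \qquad \varphi_P(\boldsymbol{\omega}) = \mathbb{E}\big[e^{\,i\,\boldsymbol{\omega}^{\top}\mathbf{X}}\big], \\
\mathbb{E}[k(\mathbf{X},\mathbf{X}')] + \mathbb{E}[k(\mathbf{Y},\mathbf{Y}')] - 2\,\mathbb{E}[k(\mathbf{X},\mathbf{Y})]
  &= \int_{\mathbb{R}^d} \big|\varphi_P(\boldsymbol{\omega}) - \varphi_Q(\boldsymbol{\omega})\big|^{2}\,\Lambda(d\boldsymbol{\omega}).
\end{aligned}
```

When the support of Lambda is the whole of R^d, the right-hand side vanishes only if the two characteristic functions coincide, that is, P = Q, which is the same uniqueness step (inverse Fourier transform) used above.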

Appendix D Proof of Lemma 4

Let denote a Hilbert space induced by . Without loss of generality, we are going to show the results for .

First, using the convexity of with , we know that there exists a gradient of (i.e., ) such that for all ([52])

Second, for and , we have (see Equation (4))

For Point (i), knowing that for the centered kernel ,

we can write (bearing in mind that )

Point (i) holds using the Jensen inequality and Equation (4).

For Point (ii), since and is convex, we can drop the absolute value thanks to Jensen’s inequality, that is,

Using the convexity of , we can write

Thus, Point (ii) holds using the Jensen inequality and Equation (4).

For Point (iii), as and is concave, we have

and we can write

Using (4), Point (iii) holds by applying the Jensen inequality to , which is convex.

Appendix E Proof of Theorem 1

Without loss of generality, we suppose that the output is centered, that is, . Recall that AFs are also centered. Using , we can write with and . Thus, .

First, as ,

and it is known that (see [39]; Lemma 3)

, we can see that

Second, for the convex kernel , the Jensen inequality allows us to write as

Thus, the first result holds by applying the Jensen inequality, that is,

For the second result, it follows from the above equivalence in distribution that

and we want to show that

To that end, let be an i.i.d. copy of ; and consider the function . Since the three components of (resp. ) are independent, we can write

Moreover, the properties of conditional expectation allow us to write

because the space of projection and the filtration associated with contain those of . The second result holds by applying the conditional Jensen inequality, as is convex.

Finally, the results for a concave kernel can be deduced from the above results. Indeed, we can see that is convex and becomes

Appendix F Proof of Corollary 1

It is sufficient to show the results for .

For Point (i), according to Theorem 1, we can write

Thus, we have because .

Point (ii) is obvious because , the set of kernels that guarantee the independence criterion.

The if part of Point (iii) is obvious. For the only if part, the equality implies that

which also implies that for the second kind of kernels of .

Point (iv) holds for IMKs by definition.

Appendix G Proof of Theorem 2

Firstly, we have when .

Knowing that

and

,

the Taylor expansion of about yields

| (22) |

where when . Therefore, we can write

| (25) |

where when . Since the second term of the above equation converges in probability to , the law of large numbers (LLN) ensures that is a consistent estimator of . Thus, the first result of Point (i) holds.

Secondly, we obtain the second result of Point (i) by applying the central limit theorem (CLT) to the first term of the above equation, as the second term converges in probability to .

The proof of Point (ii) is similar to the proof of Point (i). Indeed, using the Taylor expansion of , we obtain the consistency of the second-order moment of . The Slutsky theorem ensures the consistency of the cross components and .

Point (iii) is then obvious using Point (ii).

The proof of Point (iv) is similar to that of Point (i).
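The argument above follows the usual Taylor-expansion pattern: the LLN (with continuity) gives consistency, and the CLT applied to the leading linear term gives asymptotic normality, the remainder being negligible. For reference, the generic delta-method statement reads as follows, for an estimator of a parameter and a function g differentiable at that parameter; the symbols are generic and are not those of Theorem 2.

```latex
\begin{aligned}
&\widehat{\boldsymbol{\theta}}_n \xrightarrow{\;\mathbb{P}\;} \boldsymbol{\theta}
  \;\Longrightarrow\; g(\widehat{\boldsymbol{\theta}}_n) \xrightarrow{\;\mathbb{P}\;} g(\boldsymbol{\theta}), \\
&g(\widehat{\boldsymbol{\theta}}_n) = g(\boldsymbol{\theta})
  + \nabla g(\boldsymbol{\theta})^{\top}(\widehat{\boldsymbol{\theta}}_n - \boldsymbol{\theta})
  + o_{\mathbb{P}}\big(\|\widehat{\boldsymbol{\theta}}_n - \boldsymbol{\theta}\|\big), \\
&\sqrt{n}\,(\widehat{\boldsymbol{\theta}}_n - \boldsymbol{\theta}) \xrightarrow{\;d\;} \mathcal{N}(\mathbf{0}, \Sigma)
  \;\Longrightarrow\; \sqrt{n}\,\big(g(\widehat{\boldsymbol{\theta}}_n) - g(\boldsymbol{\theta})\big)
  \xrightarrow{\;d\;} \mathcal{N}\big(0,\ \nabla g(\boldsymbol{\theta})^{\top}\Sigma\,\nabla g(\boldsymbol{\theta})\big).
\end{aligned}
```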

Appendix H Proof of Corollary 2

First, the results about the consistency of the estimators are obtained by using Theorem 2 and the Slutsky theorem.

The numerators of Equations (15)-(16) are asymptotically Gaussian according to Theorem 2. To obtain the asymptotic distributions of the sensitivity indices, we first apply the Slutsky theorem, and then use the fact that and are asymptotically equivalent in probability under the technical condition (see [23] for more details).

Appendix I Proof of Lemma 5

For Point (i), the convexity of implies the existence of such that

which also implies (thanks to the Taylor expansion) that

| (26) |

under the condition (by the Cauchy-Schwarz inequality)

Equivalently, we can write . Equation (26) implies that is concave under the above condition. Indeed, we have

with the subgradient of . Thus, is convex because is continuous ([52]).

For Point (ii), the gradient and the Hessian of with respect to are

Therefore, if we use , then is concave when is negative definite. Thus, for all , we can write

Appendix J Proof of Corollary 4

Namely, we use and for the upper bounds of (see the proof of Lemma 5). For simplicity, in what follows we use with for either or .

As is a random variable, we have (by Markov’s inequality)

which implies that .

Moreover, using Markov’s inequality we can write

which implies that .
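Both steps above rely on Markov's inequality. For reference, the standard statement and a typical way it turns a moment bound into a stochastic-order statement are given below; the symbols Z_n, a_n > 0 and C > 0 are generic and are not those of Corollary 4.

```latex
\begin{aligned}
&\mathbb{P}(Z \ge t) \le \frac{\mathbb{E}[Z]}{t}, \qquad Z \ge 0,\ t > 0, \\
&\mathbb{E}\big[|Z_n|\big] \le C\,a_n \ \text{for all } n
  \;\Longrightarrow\; \mathbb{P}\big(|Z_n| \ge M a_n\big) \le \frac{C}{M} = \varepsilon
  \ \text{ for } M = C/\varepsilon,
  \ \text{ i.e. } Z_n = O_{\mathbb{P}}(a_n).
\end{aligned}
```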

References

- [1] K. Pearson, On lines and planes of closest fit to systems of points in space, Philosophical Magazine 2 (1901) 559–572.

- [2] H. Hotelling, Relations between two sets of variates, Biometrika 28 (3/4) (1936) 321–377.

- [3] I. Kojadinovic, M. Holmes, Tests of independence among continuous random vectors based on Cramér-von Mises functionals of the empirical copula process, Journal of Multivariate Analysis 100 (6) (2009) 1137–1154.

- [4] Y. Escoufier, Le traitement des variables vectorielles, Biometrics 29 (1973) 751–760.

- [5] K. M. Borgwardt, A. Gretton, M. J. Rasch, H.-P. Kriegel, B. Schölkopf, A. J. Smola, Integrating structured biological data by Kernel Maximum Mean Discrepancy, Bioinformatics 22 (14) (2006) 49–57.

- [6] A. Gretton, K. Borgwardt, M. Rasch, B. Schölkopf, A. Smola, A kernel method for the two-sample-problem, in: B. Schölkopf, J. Platt, T. Hoffman (Eds.), Advances in Neural Information Processing Systems, Vol. 19, MIT Press, 2007.

- [7] A. Gretton, K. M. Borgwardt, M. J. Rasch, B. Schölkopf, A. Smola, A kernel two-sample test, J. Mach. Learn. Res. 13 (2012) 723–773.

- [8] M. L. Rizzo, G. J. Székely, Energy distance, WIREs Computational Statistics 8 (1) (2016) 27–38.

- [9] A. Gretton, R. Herbrich, A. Smola, O. Bousquet, B. Schölkopf, Kernel methods for measuring independence, Journal of Machine Learning Research 6 (2005) 2075–2129.

- [10] A. Gretton, O. Bousquet, A. Smola, B. Schölkopf, Measuring statistical dependence with Hilbert-Schmidt norms, in: International Conference on Algorithmic Learning Theory, Springer, 2005, pp. 63–77.

- [11] D. Sejdinovic, B. Sriperumbudur, A. Gretton, K. Fukumizu, Equivalence of distance-based and RKHS-based statistics in hypothesis testing, The Annals of Statistics 41 (5) (2013) 2263–2291.

- [12] A. Feuerverger, A consistent test for bivariate dependence, International Statistical Review / Revue Internationale de Statistique 61 (3) (1993) 419–433.

- [13] G. J. Székely, M. L. Rizzo, N. K. Bakirov, Measuring and testing dependence by correlation of distances, The Annals of Statistics 35 (6) (2007) 2769–2794.

- [14] S. Chatterjee, A new coefficient of correlation, Journal of the American Statistical Association (2020) 1–21.

- [15] A. Rényi, On measures of dependence, Acta Mathematica Academiae Scientiarum Hungarica 10 (3-4) (1959) 441–451.

- [16] B. K. Sriperumbudur, A. Gretton, K. Fukumizu, B. Schölkopf, G. R. Lanckriet, Hilbert space embeddings and metrics on probability measures, The Journal of Machine Learning Research 11 (2010) 1517–1561.

- [17] J. Josse, S. Holmes, Measuring multivariate association and beyond, Statistics Surveys 10 (2016) 132–167.

- [18] E. de Rocquigny, N. Devictor, S. Tarantola (Eds.), Uncertainty in industrial practice, Wiley, 2008.

- [19] I. M. Sobol, Sensitivity analysis for non-linear mathematical models, Mathematical Modelling and Computational Experiments 1 (1993) 407–414.

- [20] A. Saltelli, K. Chan, E. Scott, Variance-Based Methods, Probability and Statistics, John Wiley and Sons, 2000.

- [21] M. Lamboni, H. Monod, D. Makowski, Multivariate sensitivity analysis to measure global contribution of input factors in dynamic models, Reliability Engineering and System Safety 96 (2011) 450–459.

- [22] F. Gamboa, A. Janon, T. Klein, A. Lagnoux, Sensitivity indices for multivariate outputs, Comptes Rendus Mathematique 351 (7) (2013) 307–310.

- [23] M. Lamboni, Uncertainty quantification: a minimum variance unbiased (joint) estimator of the non-normalized Sobol’ indices, Statistical Papers (2018), doi:10.1007/s00362-018-1010-4.

- [24] W. Hoeffding, A class of statistics with asymptotically normal distribution, Annals of Mathematical Statistics 19 (1948) 293–325.

- [25] B. Efron, C. Stein, The jackknife estimate of variance, The Annals of Statistics 9 (1981) 586–596.

- [26] A. Antoniadis, Analysis of variance on function spaces, Series Statistics 15 (1) (1984) 59–71.

- [27] S. Da Veiga, Global sensitivity analysis with dependence measures, Journal of Statistical Computation and Simulation 85 (7) (2015) 1283–1305.

- [28] S. Xiao, Z. Lu, P. Wang, Multivariate global sensitivity analysis for dynamic models based on energy distance, Structural and Multidisciplinary Optimization 57 (1) (2018) 279–291.

- [29] E. Plischke, E. Borgonovo, Fighting the curse of sparsity: Probabilistic sensitivity measures from cumulative distribution functions, Risk Analysis 40 (12) (2020) 2639–2660.

- [30] J. Barr, H. Rabitz, A generalized kernel method for global sensitivity analysis, SIAM/ASA Journal on Uncertainty Quantification 10 (1) (2022) 27–54.

- [31] A. B. Owen, J. Dick, S. Chen, Higher order Sobol’ indices, Information and Inference: A Journal of the IMA 3 (1) (2014) 59–81.

- [32] L. Song, A. Smola, A. Gretton, J. Bedo, K. Borgwardt, Feature selection via dependence maximization, Journal of Machine Learning Research 13 (5) (2012).

- [33] S. Da Veiga, Kernel-based ANOVA decomposition and Shapley effects – Application to global sensitivity analysis, arXiv preprint arXiv:2101.05487 (2021).

- [34] A. Smola, A. Gretton, L. Song, B. Schölkopf, A Hilbert space embedding for distributions, in: International Conference on Algorithmic Learning Theory, Springer, 2007, pp. 13–31.

- [35] A. Gelman, Analysis of variance: why it is more important than ever, The Annals of Statistics 33 (1) (2005) 1–53.

- [36] M. Lamboni, B. Iooss, A.-L. Popelin, F. Gamboa, Derivative-based global sensitivity measures: General links with Sobol’ indices and numerical tests, Mathematics and Computers in Simulation 87 (2013) 45–54.

- [37] M. Lamboni, Global sensitivity analysis: an efficient numerical method for approximating the total sensitivity index, International Journal for Uncertainty Quantification 6 (1) (2016) 1–17.

- [38] M. Lamboni, S. Kucherenko, Multivariate sensitivity analysis and derivative-based global sensitivity measures with dependent variables, Reliability Engineering & System Safety 212 (2021) 107519.

- [39] M. Lamboni, On dependent generalized sensitivity indices and asymptotic distributions, arXiv preprint arXiv:2104.12938 (2021).

- [40] M. Lamboni, Efficient dependency models: simulating dependent random variables, Mathematics and Computers in Simulation, submitted on 03/01/2021 (2021).

- [41] M. Lamboni, Derivative-based integral equalities and inequality: A proxy-measure for sensitivity analysis, Mathematics and Computers in Simulation 179 (2021) 137–161.

- [42] N. Aronszajn, Theory of reproducing kernels, Transactions of the American Mathematical Society 68 (1950) 337–404.

- [43] B. Schölkopf, A. J. Smola, Learning with Kernels, MIT Press, Cambridge, MA, 2002.

- [44] A. Berlinet, C. Thomas-Agnan, Reproducing Kernel Hilbert Spaces in Probability and Statistics, Kluwer Academic, 2004.

- [45] K. Fukumizu, A. Gretton, X. Sun, B. Schölkopf, Kernel measures of conditional dependence, in: Proceedings of the 20th International Conference on Neural Information Processing Systems, NIPS’07, Curran Associates Inc., Red Hook, NY, USA, 2008, pp. 489–496.

- [46] K. Fukumizu, A. Gretton, B. Schölkopf, B. K. Sriperumbudur, Characteristic kernels on groups and semigroups, in: D. Koller, D. Schuurmans, Y. Bengio, L. Bottou (Eds.), Advances in Neural Information Processing Systems, Vol. 21, Curran Associates, Inc., 2009.

- [47] A. V. Skorohod, On a representation of random variables, Theory Probab. Appl. 21 (3) (1976) 645–648.

- [48] M. Lamboni, Derivative-based generalized sensitivity indices and Sobol’ indices, Mathematics and Computers in Simulation 170 (2020) 236–256.

- [49] M. Lamboni, Weak derivative-based expansion of functions: ANOVA and some inequalities, Mathematics and Computers in Simulation 194 (2022) 691–718.

- [50] K. Fukumizu, F. Bach, M. Jordan, Kernel dimensionality reduction for supervised learning, in: S. Thrun, L. Saul, B. Schölkopf (Eds.), Advances in Neural Information Processing Systems, Vol. 16, MIT Press, 2004.

- [51] K. Fukumizu, A. Gretton, B. Schölkopf, B. K. Sriperumbudur, Characteristic kernels on groups and semigroups, in: D. Koller, D. Schuurmans, Y. Bengio, L. Bottou (Eds.), Advances in Neural Information Processing Systems, Vol. 21, Curran Associates, Inc., 2009.

- [52] S. Boyd, L. Vandenberghe, Convex optimization, Cambridge University Press, 2004.

- [53] F. Gamboa, A. Janon, T. Klein, A. Lagnoux, Sensitivity analysis for multidimensional and functional outputs, Electron. J. Statist. 8 (1) (2014) 575–603.

- [54] J. E. Oakley, A. O’Hagan, Probabilistic sensitivity analysis of complex models: a Bayesian approach, Journal of the Royal Statistical Society: Series B (Statistical Methodology) 66 (3) (2004) 751–769.

- [55] S. Conti, A. O’Hagan, Bayesian emulation of complex multi-output and dynamic computer models, Journal of Statistical Planning and Inference 140 (3) (2010) 640–651.

- [56] D. Conn, G. Li, An oracle property of the Nadaraya-Watson kernel estimator for high-dimensional nonparametric regression, Scandinavian Journal of Statistics 46 (3) (2019) 735–764.