Large-scale Heteroscedastic Regression via Gaussian Process

Abstract

Heteroscedastic regression considering the varying noises among observations has many applications in the fields like machine learning and statistics. Here we focus on the heteroscedastic Gaussian process (HGP) regression which integrates the latent function and the noise function together in a unified non-parametric Bayesian framework. Though showing remarkable performance, HGP suffers from the cubic time complexity, which strictly limits its application to big data. To improve the scalability, we first develop a variational sparse inference algorithm, named VSHGP, to handle large-scale datasets. Furthermore, two variants are developed to improve the scalability and capability of VSHGP. The first is stochastic VSHGP (SVSHGP) which derives a factorized evidence lower bound, thus enhancing efficient stochastic variational inference. The second is distributed VSHGP (DVSHGP) which (i) follows the Bayesian committee machine formalism to distribute computations over multiple local VSHGP experts with many inducing points; and (ii) adopts hybrid parameters for experts to guard against over-fitting and capture local variety. The superiority of DVSHGP and SVSHGP as compared to existing scalable heteroscedastic/homoscedastic GPs is then extensively verified on various datasets.

Index Terms:

Heteroscedastic GP, Large-scale, Sparse approximation, Stochastic variational inference, Distributed learning.Notation

| Latent function values and log variances | |||

| Inducing sizes for each expert in DVSHGP | |||

| Number of basis functions for GPVC [8] | |||

| Subset size for EHSoD [13] | |||

| Number of VSHGP experts | |||

| Training size and test size | |||

| Training size for each expert in DVSHGP | |||

| Training and test data points | |||

| Training and test observations | |||

| Step parameter for optimization | |||

I Introduction

In supervised learning, we learn a machine learning model from training points and the observations , where is the underlying function and is the independent noise. The non-parametric Gaussian process (GP) places a GP prior on with the iid noise , resulting in the standard homoscedastic GP. The homoscedasticity offers tractable GP inference to enable extensive applications in regression and classification [1], visualization [2], Bayesian optimization [3], multi-task learning [4], active learning [5], etc.

Many realistic problems, e.g., volatility forecasting [6], biophysical variables estimation [7], cosmological redshifts estimation [8], robotics and vehicle control [9, 10], however should consider the input-dependent noise rather than the simple constant noise in order to fit the local noise rates of complicated data distributions. In comparison to the conventional homogeneous GP, the heteroscedastic GP (HGP) provides better quantification of different sources of uncertainty, which further brings benefits for the downstream tasks, e.g., active learning, optimization and uncertainty quantification [11, 12].

To account for the heteroscedastic noise in GP, there exists two main strategies: (i) treat the GP as a black-box and interpret the heteroscedasticity using another separate model; and (ii) integrate the heteroscedasticity within the unifying GP framework. The first post-model strategy first trains a standard GP to capture the underlying function , and then either trains another GP to take the remaining empirical variance into account [13], or use the quantile regression [14] to model the lower and upper quantiles of the variance respectively.

In contrast, the second integration strategy provides an elegant framework for heteroscedastic regression. The simplest way to mimic variable noise is through adding independent yet different noise variances to the diagonal of kernel matrix [15]. Goldberg et al. [16] introduced a more principled HGP which infers a mean-field GP for and an additional GP for jointly. Similarly, other HGPs which describe the heteroscedastic noise using the pointwise division of two GPs or the general non-linear combination of GPs have been proposed for example in [17, 18, 19]. Note that unlike the homoscedastic GP, the inference in HGP is challenging since the model evidence (marginal likelihood) and the posterior are intractable. Hence, various approximate inference methods, e.g., markov chain monte carlo (MCMC) [16], maximum a posteriori (MAP) [20, 21, 22, 23], variational inference [24, 25], expectation propagation [26, 27] and Laplace approximation [28], have been used. The most accurate MCMC is time-consuming when handling large-scale datasets; the MAP is a point estimation which risks over-fitting and oscillation; the variational inference and its variants, which run fast via maximizing over a tractable and rigorous evidence lower bound (ELBO), provide a trade-off.

This paper focuses on the HGP model developed in [16]. When handling training points, the standard GP suffers from a cubic complexity due to the inversion of an kernel matrix, which makes it unaffordable for big data. Since HGP employs an additional log-GP for noise variance, its complexity is about two times that of standard GP. Hence, to handle large-scale datasets, which is of great demand in the era of big data, the scalability of HGP should be improved.

Recently, there has been an increasing trend on studying scalable GPs, which have two core categories: global and local approximations [29]. As the representative of global approximation, sparse approximation considers () global inducing pairs to summarize the training data by approximating the prior [30] or the posterior [31], resulting in the complexity of . Variants of sparse approximation have been recently proposed to handle millions of data points via distributed inference [32, 33], stochastic variational inference [34, 35], or structured inducing points [36]. The global sparse approximation, however, often faces challenges in capturing quick-varying features [37]. Differently, local approximation, which follows the idea of divide-and-conquer, first trains GP experts on local subsets and then aggregates their predictions, for example by the means of product-of-experts (PoE) [38] and Bayesian committee machine (BCM) [39, 40, 41]. Hence, local approximation not only distributes the computations but also captures quick-varying features [42]. Hybrid strategies thereafter have been presented for taking advantages of global and local approximations [43, 44, 45].

The developments in scalable homoscedastic GPs have thus motivated us to scale up HGPs. Alternatively, we could combine the simple Subset-of-Data (SoD) approximation [46] with the empirical HGP [13] which trains two separate GPs for predicting mean and variance, respectively. The empirical variance however is hard to fit since it follows an asymmetric Gaussian distribution. More reasonably, the GP using variable covariances (GPVC) [8] follows the idea of relevance vector machine (RVM) [47] that a stationary kernel has a positive and finite Fourier spectrum, suggesting using only () independent basis functions for approximation. Note that GPVC shares the basis functions for and which however might produce distinct features. Besides, the RVM-type model usually suffers from underestimated prediction variance when leaving [48].

Besides, there are some scalable “pseudo” HGPs [49, 43] which are not designed for such case, but can describe the heteroscedasticity to some extent due to the factorized conditionals. For instance, the Fully Independent Training Conditional (FITC) [49] and its block version named Partially Independent Conditional (PIC) [43] adopt a factorized training conditional to achieve a varying noise [50]. Though equipped with heteroscedastic variance, these models (i) severely underestimate the constant noise variance, (ii) sacrifice the prediction mean [50], and (iii) may produce discontinuous predictions on block boundaries [44]. Recently, the stochastic/distributed variants of FITC and PIC have been developed to further improve the scalability [35, 33, 51, 45].

The high capability of describing complicated data distribution with however poor scalability motivate us to develop variational sparse HGPs which employ an additional log-GP for heteroscedasticity. Particularly, the main contributions are:

1. A variational inference algorithm for sparse HGP, named VSHGP, is developed. Specifically, VSHGP derives an analytical ELBO using inducing points for and inducing points for , resulting in a greatly reduced complexity of . Besides, some tricks for example re-parameterization are used to ease the inference;

2. The stochastic variant SVSHGP is presented to further improve the scalability of VSHGP. Specifically, we derive a factorized ELBO which allows using efficient stochastic variational inference;

3. The distributed variant DVSHGP is presented for improving the scalability and capability of VSHGP. The local experts with many inducing points (i) distribute the computations for parallel computing, and (ii) employ hybrid parameters to guard against over-fitting and capture local variety;

4. Extensive experiments111The SVSHGP is implemented based on the GPflow toolbox [52], which benefits from parallel/GPU speedup and automatic differentiation of Tensorflow [53]. The DVSHGP is built upon the GPML toolbox [54]. These implementations are available at https://github.com/LiuHaiTao01. conducted on datasets with up to two million points reveal that the localized DVSHGP exhibits superior performance, while the global SVSHGP may sacrifice the prediction mean for capturing heteroscedastic noise.

The remainder of the article is organized as follows. Section II first develops the VSHGP model via variational inference. Then, we present the stochastic variant in Section III and the distributed variant in Section IV to further enhance the scalability and capability, followed by extensive experiments in Section VI. Finally, Section VII provides concluding remarks.

II Variational sparse HGP

II-A Sparse approximation

We follow [16] to define the HGP as , wherein the latent function and the noise follow

| (1) |

It is observed that the input-dependent noise variance enables describing possible heteroscedasticity. Notably, this HGP degenerates to homoscedastic GP when is a constant. To ensure the positivity, we particularly consider the exponential form , wherein the latent function akin to follows an independent GP prior

| (2) |

The only difference is that unlike the zero-mean GP prior placed on , we explicitly consider a prior mean to account for the variability of the noise variance.222For , we can pre-process the data to fulfill the zero-mean assumption. For , however, it is hard to satisfy the zero-mean assumption, since we have no access to the “noise” data. The kernels and could be, e.g., the squared exponential (SE) kernel equipped with automatic relevance determination (ARD)

| (3) |

where the signal variance is an output scale, and the length-scale is an input scale along the th dimension.

Given the training data , the joint priors follow

| (4) |

where and for . Accordingly, the data likelihood becomes

| (5) |

where the diagonal noise matrix has .

To scale up HGP, we follow the sparse approximation framework to introduce inducing variables at the inducing points for ; similarly, we introduce inducing variables at the independent for . Besides, we assume that is a sufficient statistic for , and a sufficient statistic for .333Sufficient statistic means the variables and are independent given , i.e., it holds . As a result, we obtain two training conditionals

where , , and .

In the augmented probability space, the model evidence

together with the posterior where , however, is intractable. Hence, we use variational inference to derive an analytical ELBO of .

II-B Evidence lower bound

We employ the mean-field theory [55] to approximate the intractable posterior as

| (6) |

where and are free variational distributions to approximate the posteriors and , respectively.

In order to push the approximation towards the exact , we minimize their Kullback-Leibler (KL) divergence , which, on the other hand, is equivalent to maximizing the ELBO , since , as

| (7) |

As a consequence, instead of directly maximizing the intractable for inference, we now seek the maximization of w.r.t. the variational distributions and .

By reformulating we observe that

where is the information entropy of ; is the optimal distribution since it maximizes the bound , and it satisfies, given the normalizer ,

| (8) |

Thereafter, by substituting back into , we arrive at a tighter ELBO, given , as

| (9) | ||||

where the diagonal matrix has , with the mean and variance

| (10a) | ||||

| (10b) | ||||

coming from which approximates . It is observed that the analytical bound depends only on since we have “marginalized” out.

Let us delve further into the terms of in (9):

-

•

The log term , where , is analogous to that in a standard GP. It achieves bias-variance trade-off for both and by penalizing model complexity and low data likelihood [1].

-

•

The two trace terms act as a regularization to choose good inducing sets for and , and guard against over-fitting. It is observed that and represent the total variance of the training conditionals and , respectively. To maximize , the traces should be very small, implying that and are very informative (i.e., sufficient statistics, also called variational compression [56]) for and . Particularly, the zero traces indicate that and , thus recovering the variational HGP (VHGP) [24]. Besides, the zero traces imply that the variances of equal to that of .

-

•

The KL term is a constraint for rationalising . It is observed that minimizing the traces only push the variances of towards that of . To let the co-variances of rationally approximate that of , the KL term penalizes so that it does not deviate significantly from the prior .

II-C Reparameterization and inference

In order to maximize the ELBO in (9), we need to infer free variational parameters in and . Assume that , then is larger than when the training size , leading to a high-dimensional and non-trivial optimization task.

We observe that the derivatives of w.r.t. and are

where the diagonal matrix with , , and the operator being the element-wise product. Hence, it is observed that the optimal and satisfy

Interestingly, we find that both the optimal and depend on , which is a positive semi-definite diagonal matrix, see the non-negativity proof in Appendix A. Hence, we re-parameterize and in terms of as

| (11a) | ||||

| (11b) | ||||

This re-parameterization eases the model inference by (i) reducing the number of variational parameters from to , and (ii) limiting the new variational parameters to be non-negative, thus narrowing the search space.

So far, the bound depends on the variational parameters , the kernel parameters and , the mean parameter for , and the inducing points and . We maximize to infer all these hyperparameters jointly for model selection. This non-linear optimization task can be solved via conjugate gradient descent (CGD), since the derivatives of w.r.t. these hyperparameters have closed forms, see Appendix B.

II-D Predictive Distribution

The predictive distribution at the test point is approximated as

| (12) |

As for , we first calculate from (8) as

| (13) |

where . Using , we have with

| (14a) | ||||

| (14b) | ||||

It is interesting to find in (14b) that the correction term contains the heteroscedasticity information from the noise term . Hence, produces heteroscedastic variances over the input domain, see an illustration example in Fig. 2(b). The heteroscedastic (i) eases the learning of , and (ii) plays as an auxiliary role, since the heteroscedasticity is mainly explained by . Also, our VSHGP is believed to produce a better prediction mean through the interaction between and in (14a).444This happens when is learned well, see the numerical experiments below.

Similarly, we have the predictive distribution where, given ,

| (15a) | ||||

| (15b) | ||||

Finally, using the posteriors and , and the likelihood , we have

| (16) |

which is intractable and non-Gaussian. However, the integral can be approximated up to several digits using the Gauss-Hermite quadrature, resulting in the mean and variance as [24]

| (17) |

In the final prediction variance, the variance represents the uncertainty about due to data density, and it approaches zero with increasing ; the exponential term implies the intrinsic heteroscedastic noise uncertainty.

It is notable that the unifying VSHGP includes VHGP [24] and variational sparse GP (VSGP) [31] as special cases: when and , VSHGP recovers VHGP; when , i.e., we are now facing a homoscedastic regression task, VSHGP regenerates to VSGP.

Overall, by introducing inducing sets for both and , VSHGP is equipped with the means to handle large-scale heteroscedastic regression. However, (i) the current time complexity , which is linear with training size, makes VSHGP still unaffordable for, e.g., millions of data points; and (ii) as a global approximation, the capability of VSHGP is limited by the small and global inducing sets.

To this end, we introduce below two strategies to further improve the scalability and capability of VSHGP.

III Stochastic VSHGP

To further improve the scalability of VSHGP, the variational distribution is re-introduced to use the original bound in (7). Given the factorized likelihood , the ELBO is

| (18) | ||||

where the first expectation term is expressed as

with and .

The new is a relaxed version of in (9). It is found that the derivatives satisfy

| (19) | ||||

Let the gradients be zeros, we recover the optimal solution in (13), indicating that with the equality at .

The scalability is improved by through the first term in the right-hand side of (18), which factorizes over data points. The sum form allows using efficient stochastic gradient descent (SGD), e.g., Adam [57], with mini-batch mode for big data. Specifically, we choose a random subset to have an unbiased estimation of as

| (20) | ||||

where is the mini-batch size. More efficiently, since the two variational distributions are defined in terms of KL divergence, we could optimize them along the natural gradients instead of the Euclidean gradients, see Appendix C.

Finally, the predictions of the stochastic VSHGP (SVSHGP) follow (17), with the predictions replaced as

and

Compared to the deterministic VSHGP, the stochastic variant greatly reduces the time complexity from to , at the cost of requiring many more optimization efforts in the enlarged probabilistic space.555Compared to VSHGP, the SVSHGP cannot re-parameterize and , and has to infer more variational parameters in and . Besides, the capability of SVSHGP akin to VSHGP is still limited to the finite number of global inducing points.

IV Distributed VSHGP

To further improve the scalability and capability of VSHGP via many inducing points, the distributed variant named DVSHGP proposes to combine VSHGP with local approximations, e.g., the Bayesian committee machine (BCM) [39, 40], to enable distributed learning and capture local variety.

IV-A Training experts with hybrid parameters

We first partition the training data into subsets , . Then, we train a VSHGP expert on by using the relevant inducing sets and . Particularly, to obtain computational gains, an independence assumption is posed for all the experts such that is decomposed into the sum of individuals

| (21) |

where is the hyperparameters in , and is the ELBO of . The factorization (21) calculates the inversions efficiently as and .

We train these VSHGP experts with hybrid parameters. Specifically, the BCM-type aggregation requires sharing the priors and over experts. That means, we should share the hyperparameters including , and across experts. These global parameters are beneficial for guarding against over-fitting [40], at the cost of however degrading the capability. Hence, we leave the variational parameters and the inducing points and for each expert to infer them individually. These local parameters improve capturing local variety by (i) pushing towards the posterior of , and (ii) using many inducing points.

Besides, because of the local parameters, we prefer partitioning the data into disjoint experts rather than random experts like [40]. The disjoint partition using clustering techniques produces local and separate experts which are desirable for learning the relevant local parameters. In contrast, the random partition, which assigns points randomly to the subsets, provides global and overlapped experts which are difficult to well estimate the local parameters. For instance, when DVSHGP uses random experts on the toy example below, it fails to capture the heteroscedastic noise.

Finally, suppose that each expert has the same training size , the training complexity for an expert is , where is the inducing size for and the inducing size for . Due to the local experts, DVSHGP naturally offers parallel/distributed training, hence reducing the time complexity of VSHGP with a factor ideally close to the number of machines when and .

IV-B Aggregating experts’ predictions

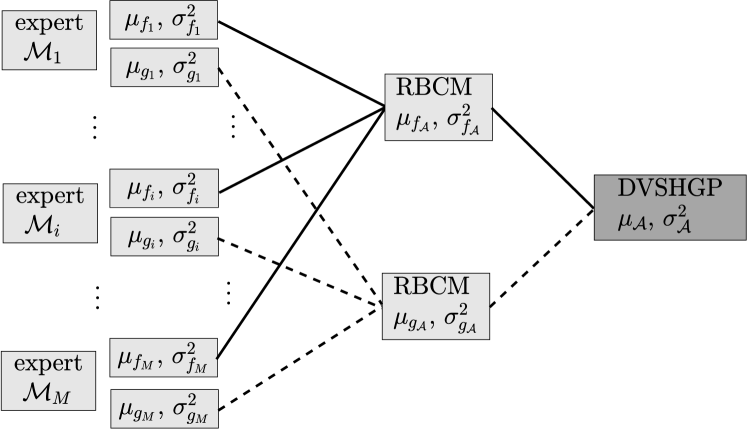

For each VSHGP expert , we obtain the predictive distribution with the means and variances . Thereafter, we combine the experts’ predictions together to perform the final prediction by, for example the robust BCM (RBCM) aggregation, which naturally supports distributed/parallel computing [40, 58].

The key to the success of aggregation is that we do not directly combine the experts’ predictions . This is because (i) the RBCM aggregation of produces an inconsistent prediction variance with increasing and [41]; and (ii) the predictive distribution in (16) is non-Gaussian.666Note that the generalized RBCM strategy [41], which provides consistent predictions, however is not favored by our DVSHGP with local parameters, since it requires (i) the experts’ predictions to be Gaussian and (ii) an additional global base expert. To have a meaningful prediction variance, which is crucial for heteroscedastic regression, we perform the aggregation for the latent functions and , respectively. This is because the prediction variances of and approach zeros with increasing . This agrees with the property of RBCM.

We first have the aggregated predictive distribution for , given the prior , as

| (22) |

with the mean and variance expressed respectively, as

where the weight represents the contribution of at for , and is defined as the difference in the differential entropy between the prior and the posterior as . Similarly, for which explicitly considers a prior mean , the aggregated predictive distribution is

| (23) |

with the mean and variance, expressed respectively as

where is the prior precision of , and is the weight of at for .

Thereafter, as shown in Fig. 1, the final predictions akin to (17) are combined as

| (24) |

The hierarchical and localized computation structure enables (i) large-scale regression via distributed computations, and (ii) flexible approximation of slow-/quick-varying features by local experts and many inducing points (up to the training size ).

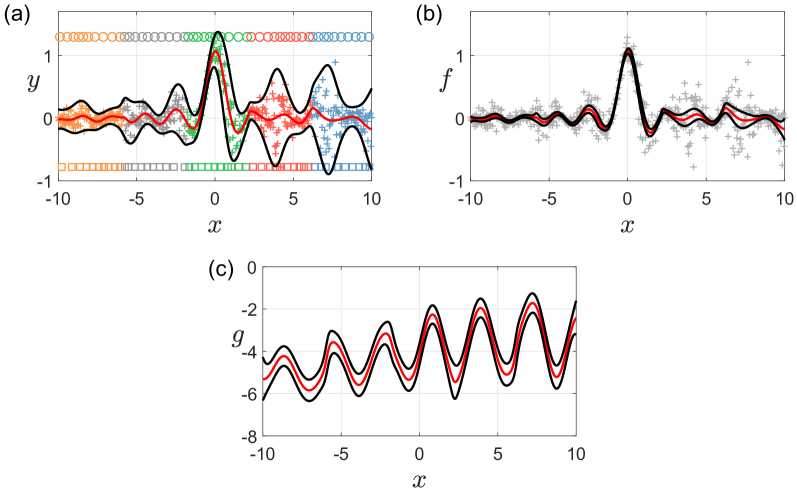

Finally, we illustrate the DVSHGP on a heteroscedastic toy example expressed as

| (25) |

where and . We draw 500 training points from (25), and use the -means technique to partition them into five disjoint subsets. We then employ ten inducing points for both and of the VSHGP expert , . Fig. 2 shows that (i) DVSHGP can efficiently employ up to 100 inducing points for modeling through five local experts, and (ii) DVSHGP successfully describes the underlying function and the heteroscedastic log noise variance .

V Discussions regarding implementation

V-A Implementation of DVSHGP

As for DVSHGP, we should infer (i) the global parameters including the kernel parameters and , and the mean ; and (ii) the local parameters including the variational parameters and the inducing parameters and , for local experts .

Notably, the variational parameters are crucial for the success of DVSHGP, since they represent the heteroscedasticity. To learn the variational parameters well, there are two issues: (i) how to initialize them and (ii) how to optimize them. As for initialization, let us focus on VSHGP, which is the foundation for the experts in DVSHGP. It is observed in (11) that directly determines the initialization of . Given the prior , we intuitively place a prior mean on , resulting in . In contrast, if we initialize with a value larger or smaller than , the cumulative term in (11a) becomes far away from zero with increasing , leading to improper prior mean for . As for optimization, compared to standard GP, our DVSHGP needs to additionally infer variational parameters and inducing parameters, which greatly enlarge the parameter space and increase the optimization difficulty. Hence, we use an alternating strategy where we first optimize the variational parameters individually to capture the heteroscedasticity roughly, followed by learning all the hyperparameters jointly.

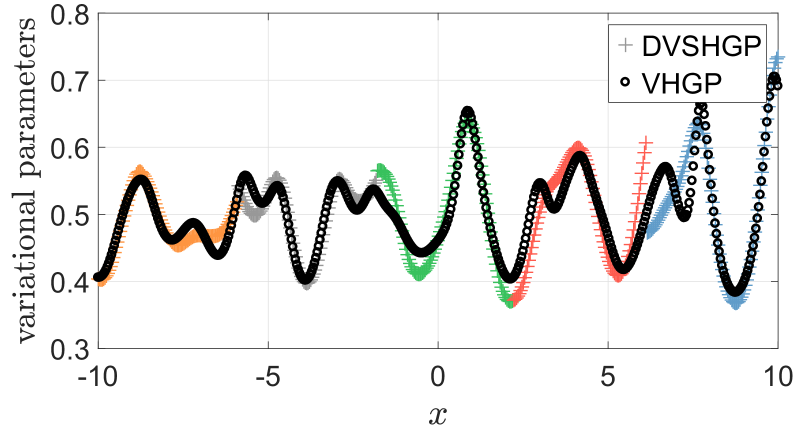

Fig. 3 depicts the inferred variational parameters varying over training points by DVSHGP and the original VHGP [24], respectively, on the toy problem. It turns out that the variational parameters estimated by DVSHGP (i) generally agree with that of VHGP, and (ii) showcase local characteristics that are beneficial for describing local variety.

V-B Implementation of SVSHGP

As for SVSHGP, to effectively infer the variational parameters in and , we adopt the natural gradient descent (NGD), which however should carefully tune the step parameter defined in Appendix C. For the Gaussian likelihood, the optimal solution is , since taking an unit step is equivalent to performing a VB update [34]. But for the stochastic case, empirical results suggest that should be gradually increased to some fixed value. Hence, we follow the schedule in [59]: take and log-linearly increase to over five iterations, and then keep for the remaining iterations.

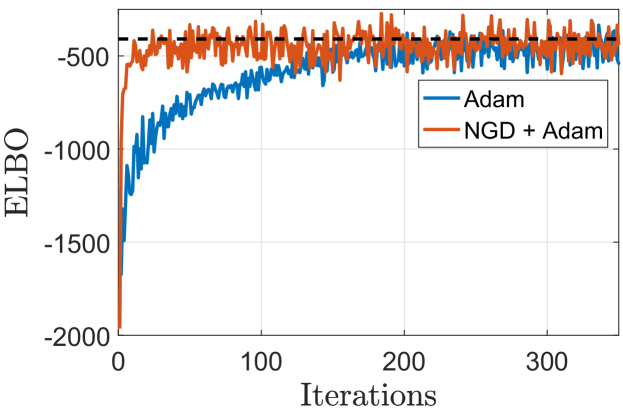

Thereafter, we employ a hybrid strategy, called NGD+Adam, for optimization. Specifically, we perform a step of NGD on the variational parameters with the aforementioned schedule, followed by a step of Adam on the remaining hyperparameters with a fixed step .

Fig. 4 depicts the convergence histories of SVSHGP using Adam and NGD+Adam respectively on the toy example. We use inducing points and a mini-batch size of . As the ground truth, the final ELBO obtained by VSHGP is provided. It is observed that (i) the NGD+Adam converges faster than the pure Adam, and (ii) the stochastic optimizers finally approach the solution of CGD used in VSHGP.

V-C DVSHGP vs. (S)VSHGP

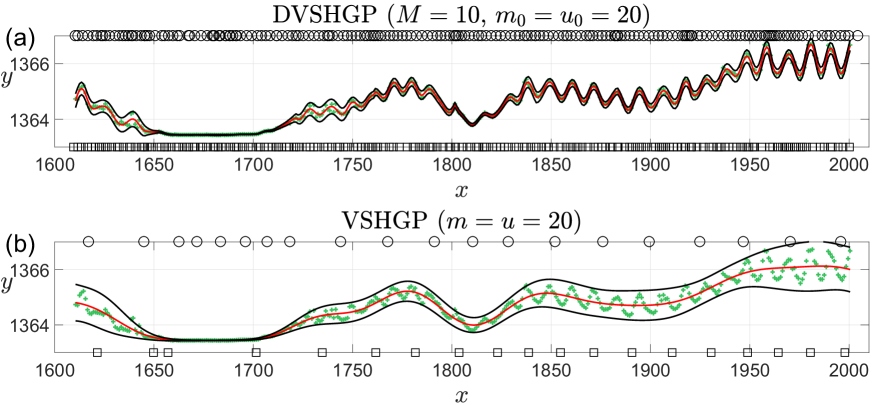

Compared to the global (S)VSHGP, the performance of DVSHGP is enhanced by many inducing points and the localized experts with individual variational and inducing parameters, resulting in the capability of capturing quick-varying features. To verify this, we apply DVSHGP and (S)VSHGP to the time-series solar irradiance dataset [60] which contains quick-varying and heteroscedastic features.

In the comparison, DVSHGP employs the -means technique to partition the 391 training points into subsets, and uses inducing points for each expert; (S)VSHGP employs inducing points. Particularly, we initialize the length-scales in the SE kernel (3) with a pretty small value of 5.0 for and for (S)VSHGP on this quick-varying dataset. Fig. 5 shows that (i) DVSHGP captures the quick-varying and heteroscedastic features successfully via local experts and many inducing points; (ii) (S)VSHGP however fails due to the small set of global inducing points.777Since VSHGP and SVSHGP show similar predictions on this dataset, we only illustrate the VSHGP predictions here.

VI Numerical experiments

This section verifies the proposed DVSHGP and SVSHGP against existing scalable HGPs on a synthetic dataset and four real-world datasets. The comparison includes (i) GPVC [8], (ii) the distributed variant of PIC (dPIC) [33], (iii) FITC [49], and (iv) the SoD based empirical HGP (EHSoD) [13]. Besides, the comparison also employs the homoscedastic VSGP [31] and RBCM [40] to showcase the benefits brought by the consideration of heteroscedasticity.

We implement DVSHGP, FITC, EHSoD, VSGP and RBCM by the GPML toolbox [54], and implement SVSHGP by the GPflow toolbox [52]; we use the published GPVC codes available at https://github.com/OxfordML/GPz and the dPIC codes available at https://github.com/qminh93/dSGP_ICML16. They are executed on a personal computer with four 3.70 GHz cores and 16 GB RAM for the synthetic and three medium-sized datasets, and on a Linux workstation with eight 3.20 GHz cores and 32GB memory for the large-scale dataset.

All the GPs employ the SE kernel in (3). Normalization is performed for both and to have zero mean and unit variance before training. Finally, we use test points to assess the model accuracy by the standardized mean square error (SMSE) and the mean standardized log loss (MSLL) [1]. The SMSE quantifies the discrepancy between the predictions and the exact observations. Particularly, it equals to one when the model always predicts the mean of . Moreover, the MSLL quantifies the predictive distribution, and is negative for better models. Particularly, it equals to zero when the model always predicts the mean and variance of .

VI-A Synthetic dataset

We employ a 2D version of the toy example (25) as

with highly nonlinear latent function and noise . We randomly generate 10,000 training points and evaluate the model accuracy on 4,900 grid test points. We generate ten instances of the training data such that each model is repeated ten times.

We have and for DVSHGP, resulting in data points assigned to each expert; we have basis functions for GPVC; we have for SVSHGP, FITC and VSGP; we have and for dPIC; we have for RBCM; finally, we train two separate GPs on a subset of size for EHSoD. As for optimization, DVSHGP adopts a two-stage process: it first only optimizes the variational parameters using CGD with up to 30 line searches, and then learns all the parameters jointly using up to 70 line searches; SVSHGP trains with NGD+Adam using over 1,000 iterations; VSGP, FITC, GPVC and RBCM use up to 100 line searches to learn the parameters; dPIC employs the default optimization settings in the published codes; and finally EHSoD uses up to 50 line searches to train the two standard GPs, respectively.

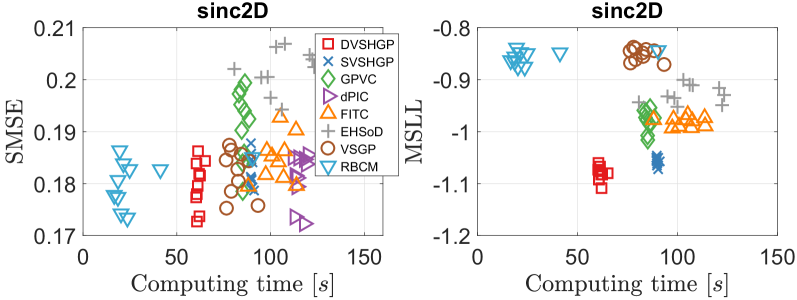

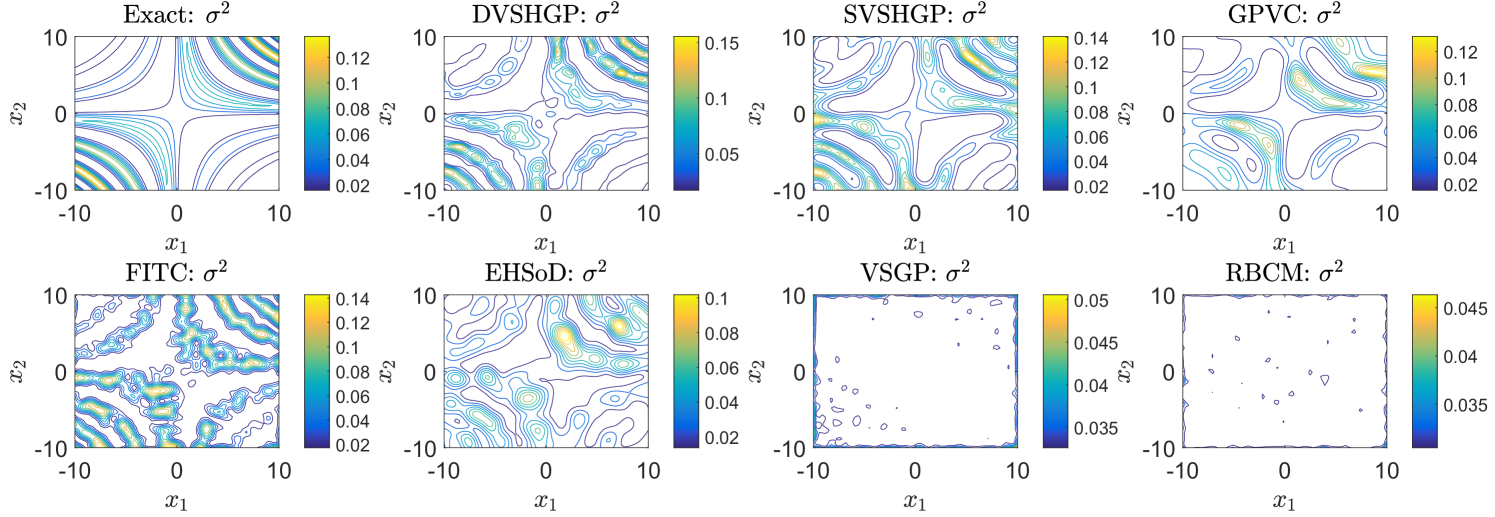

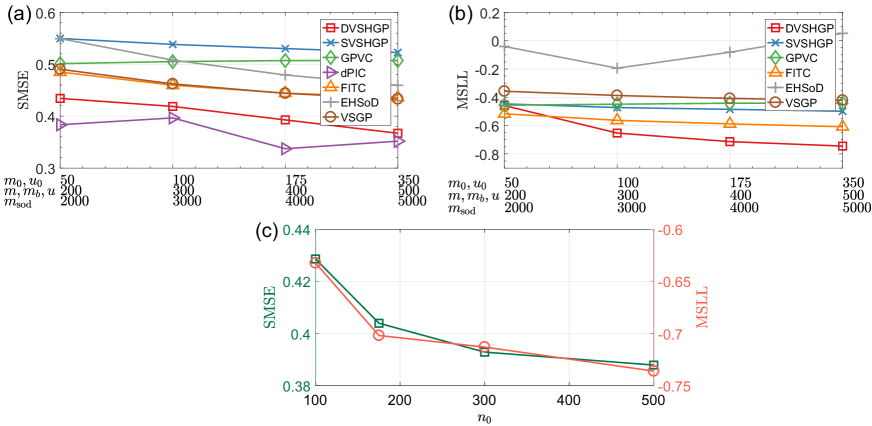

Fig. 6 depicts the modeling results of different GPs over ten runs on the synthetic sinc2D dataset.888Since the dPIC codes only provide the prediction mean, we did not offer its MSLL value as well as the estimated noise variance in the following plots. The horizontal axis represents the sum of training and predicting time for a model. It turns out that DVSHGP, SVSHGP, dPIC, VSGP and RBCM are competitive in terms of SMSE; but DVSHGP and SVSHGP perform better in terms of MSLL due to the well estimated heteroscedastic noise. Compared to the homoscedastic VSGP and RBCM, the FITC has heteroscedastic variances, which is indicated by the lower MSLL, at the cost of however (i) sacrificing the accuracy of prediction mean, and (ii) suffering from invalid noise variance .999FITC estimates as 0.0030, while VSGP estimates it as 0.0309. As a principled HGP, GPVC performs slightly better than FITC in terms of MSLL. Finally, the simple EHSoD has the worst SMSE; but it outperforms the homoscedastic VSGP and RBCM in terms of MSLL.

In terms of efficiency, the RBCM requires less computing time since it contains no variational/inducing parameters, thus resulting in (i) lower complexity, and (ii) early stop for optimization. This also happens for the three datasets below.

Finally, Fig. 7 depicts the prediction variances of all the GPs except dPIC in comparison to the exact on the synthetic dataset. It is first observed that the homoscedastic VSGP and RBCM are unable to describe the complex noise variance: they yield a nearly constant variance over the input domain. In contrast, DVSHGP, SVSHGP and GPVC capture the varying noise variance accurately by using an additional noise process ; FITC also captures the profile of the exact with however unstable peaks and valleys; EHSoD is found to capture a rough expression of the exact .

VI-B Medium real-world datasets

This section conducts comparison on three real-world datasets. The first is the 9D protein dataset [61] with 45,730 data points. This dataset, taken from CASP 5-9, describes the physicochemical properties of protein tertiary structure. The second is the 21D sarcos dataset [1], which relates to the inverse kinematics of a robot arm, has 48,933 data points. The third is the 3D 3droad dataset which comprises 434,874 data points [62] extracted from a 2D road network in North Jutland, Denmark, plussing elevation information.

VI-B1 The protein dataset

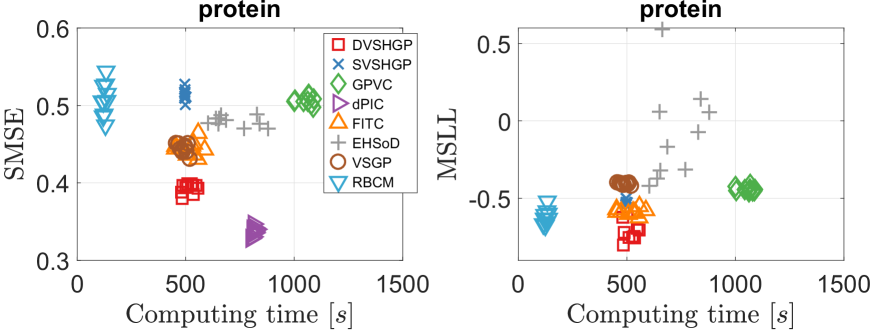

For the protein dataset, we randomly choose 35,000 training points and 10,730 test points. In the comparison, we have (i.e., ) and for DVSHGP; we have for SVSHGP, VSGP and FITC; we have and for dPIC; we have for GPVC; we have for RBCM; and finally we have for EHSoD. As for optimization, SVSHGP trains with NGD+Adam using over 2,000 iterations. The optimization settings of other GPs keep consistent to that for the synthetic dataset.

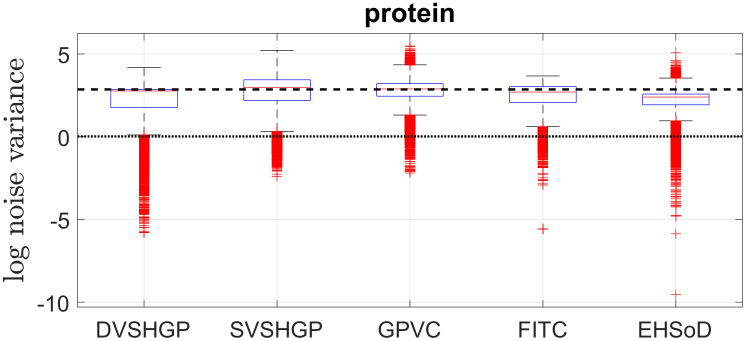

The results of different GPs over ten runs are summarized in Fig. 8. Among the HGPs, it is observed that dPIC outperforms the others in terms of SMSE, followed by DVSHGP. On the other hand, DVSHGP performs the best in terms of MSLL, followed by FITC and SVSHGP. The simple EHSoD is found to produce unstable MSLL results because of the small subset. Finally, the homoscedastic VSGP and RBCM provide mediocre SMSE and MSLL results.

Next, Fig. 9 offers insights into the distributions of log noise variances of all the GPs except dPIC on the protein dataset for a single run. Note that (i) as homoscedastic GPs, the log noise variances of VSGP and RBCM are marked as dash and dot lines, respectively; and (ii) we plot the variance of for FITC since (a) it accounts for the heteroscedasticity and (b) the scalar noise variance is severely underestimated. The results in Fig. 9 indicate that the protein dataset may contain heteroscedastic noise. Besides, compared to the VSGP which uses a global inducing set, the localized RBCM provides a more compact estimation of . This compact noise variance, which has also been observed on the two datasets below, brings lower MSLL for RBCM.

Furthermore, we clearly observe the interaction between and for DVSHGP, SVSHGP and GPVC. The small MSLL of RBCM suggests that the protein dataset may own small noise varaicnes at some test points. Hence, the localized DVSHGP, which is enabled to capture the local variety through individual variational and inducing parameters for each expert, produces a longer tail in Fig. 9. The well estimated heteroscedastic noise in turn improves the prediction mean of DVSHGP through the interaction between and . In contrast, due to the limited global inducing set, the prediction mean of SVSHGP and GPVC is traded for capturing heteroscedastic noise.

Notably, the performance of sparse GPs is affected by their modeling parameters, e.g., the inducing sizes , , and , the number of basis functions , and the subset size . Fig. 10(a) and (b) depict the average results of sparse GPs over ten runs using different parameters. Particularly, we investigate the impact of subset size on DVSHGP in Fig. 10(c) using . It is found that DVSHGP favours large (small ) and large and . Similarly, VSGP and FITC favour more inducing points. However, dPIC offers an unstable SMSE performance with increasing ; GPVC performs slightly worse with increasing in terms of both SMSE and MSLL, which has also been observed in the original paper [8], and may be caused by the sharing of basis functions for and . Finally, EHSoD showcases poorer MSLL values when , because of the difficulty of approximating the empirical variances.

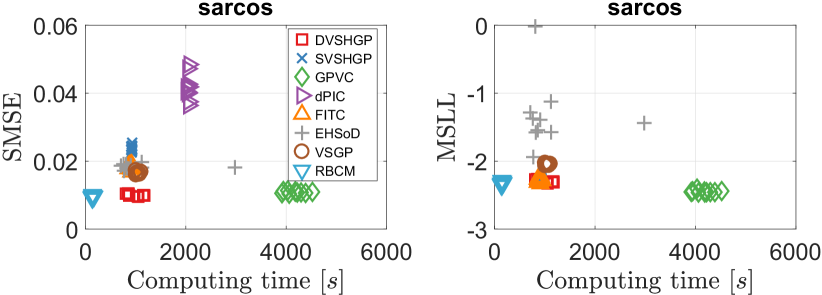

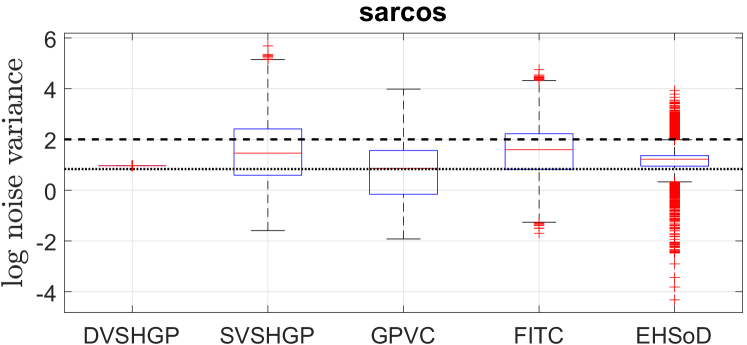

VI-B2 The sarcos dataset

For the sarcos dataset, we randomly choose 40,000 training points and 8,933 test points. In the comparison, we have (i.e., ) and for DVSHGP; we have for SVSHGP, VSGP and FITC; we have and for dPIC; we have for GPVC; we have for RBCM; and finally we have for EHSoD. The optimization settings are the same as before.

The results of different GPs over ten runs on the sarcos dataset are depicted in Fig. 11. Besides, Fig. 12 depicts the log noise variances of the GPs on this dataset. Different from the protein dataset, the sarcos dataset seems to have weak heteroscedastic noises across the input domain, which is verified by the facts that (i) the noise variance of DVSHGP is a constant, and (ii) DVSHGP agrees with RBCM in terms of both SMSE and MSLL. Hence, all the HGPs except EHSoD perform similarly in terms of MSLL.

In addition, the weak heteroscedasticity in the sarcos dataset reveals that we can use only a few inducing points for to speed up the inference. For instance, we retrain DVSHGP using . This extremely small inducing set for brings (i) much less computing time of about 350 seconds, and (ii) almost the same model accuracy with SMSE = 0.0099 and MSLL = -2.3034.

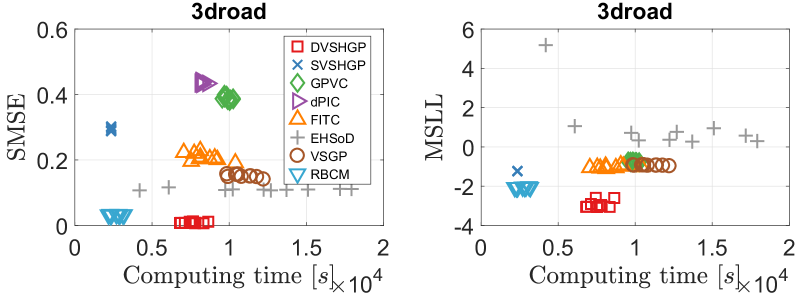

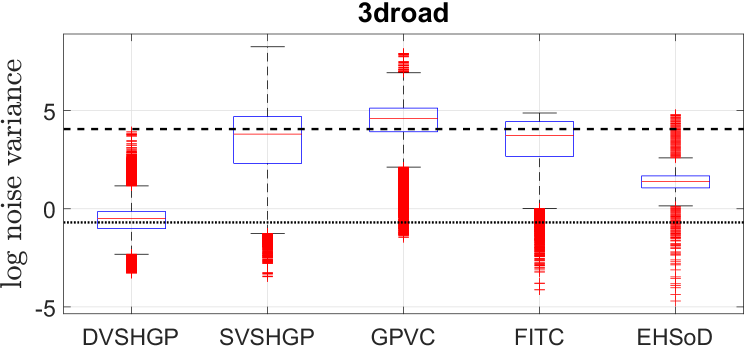

VI-B3 The 3droad dataset

Finally, for the 3droad dataset, we randomly choose 390,000 training points, and use the remaining 44,874 data points for testing. In the comparison, we have (i.e., ) and for DVSHGP; we have for SVSHGP, VSGP and FITC; we have and for dPIC; we have for GPVC; we have for RBCM; and finally we have for EHSoD. As for optimization, SVSHGP trains with NGD+Adam using over 4,000 iterations. The optimization settings of other GPs keep the same as before.

The results of different GPs over ten runs on the 3droad dataset are depicted in Fig. 13. It is observed that DVSHGP outperforms the others in terms of both SMSE and MSLL, followed by RBCM. For other HGPs, especially SVSHGP and GPVC, the relatively poor noise variance (large MSLL) in turn sacrifices the accuracy of prediction mean. Even though, the heteroscedastic noise helps SVSHGP, GPVC and FITC perform similarly to VSGP in terms of MSLL.

In addition, Fig. 14 depicts the log noise variances of these GPs on the 3droad dataset. The highly accurate prediction mean of DVSHGP helps well estimate the heteroscedastic noise. It is observed that (i) the noise variances estimated by DVSHGP are more compact than that of other HGPs; and (ii) the average noise variance agrees with that of RBCM.

Finally, the results from the 3droad dataset together with the other two datasets indicate that:

-

•

the well estimated noise variance of HGPs in turn improves the prediction mean via the interaction between and ; otherwise, it may sacrifice the prediction mean;

-

•

the heteroscedastic noise usually improves sparse HGPs over the homoscedastic VSGP in terms of MSLL.

VI-C Large real-world dataset

The final section evaluates the performance of different GPs on the 11D electric dataset,101010The dataset is available at https://archive.ics.uci.edu/ml/index.php. which is partitioned into two million training points and 49,280 test points. The HGPs in the comparison include DVSHGP, SVSHGP, dPIC and EHSoD.111111GPVC and FITC are unaffordable for this massive dataset. Besides, the stochastic variant of FITC [35] is not included, since it does not support end-to-end training. Besides, the RBCM and the stochastic variant of VSGP, named SVGP [34], are employed for this big dataset.

| DVSHGP | SVSHGP | dPIC | EHSoD | SVGP | RBCM | |

|---|---|---|---|---|---|---|

| SMSE | 0.0020 | 0.0029 | 0.0042 | 0.0103 | 0.0028 | 0.0023 |

| MSLL | -3.4456 | -3.1207 | - | -1.9453 | -2.8489 | -3.0647 |

| [] | 11.05 | 7.44 | 47.22 | 4.72 | 3.55 | 3.97 |

In the comparison, we have (i.e., ) and for DVSHGP; we have for SVSHGP and SVGP; we have and for dPIC; we have for RBCM; and finally we have for EHSoD. As for optimization, SVSHGP trains with NGD+Adam using over 10,000 iterations; The optimization settings of other GPs keep the same as before.

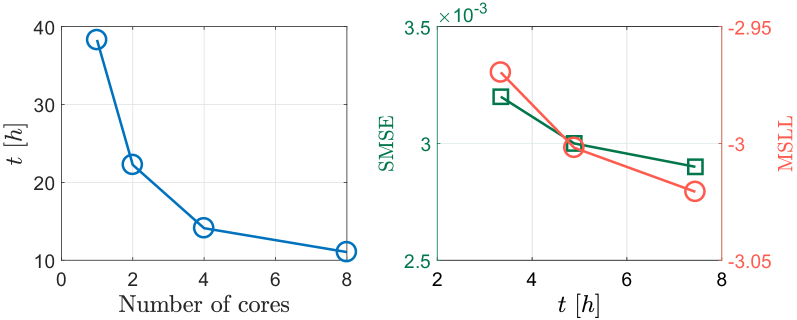

The average results over five runs in Table I indicate that DVSHGP outperforms the others in terms of both SMSE and MSLL, followed by SVSHGP. The simple EHSoD provides the worst performance, and cannot be improved by using larger due to the memory limit in current infrastructure. Additionally, in terms of efficiency, we find that (i) SVSHGP is better than DVSHGP due to the parallel/GPU acceleration deployed in Tensorflow;121212Further GPU speedup could be utilized for DVSHGP in Matlab. (ii) SVGP is better than SVSHGP because of lower complexity; and (iii) the huge computing time of dPIC might be incurred by the unoptimized codes.

Finally, due to the distributed framework, Fig. 15(a) depicts the total computing time of DVSHGP using different numbers of processing cores. It is observed that the DVSHGP using eight cores achieves a speedup around 3.5 in comparison to the centralized counterpart. Fig. 15(b) also exploits the performance of SVSHGP using a varying mini-batch size . It is observed that (i) a small significantly speeds up the model training, and (ii) different mini-batch sizes yield similar SMSE and MSLL here, because the model has been optimized over sufficient iterations.

VII Discussions and conclusions

In order to scale up the original HGP [16], we have presented distributed and stochastic variational sparse HGPs. The proposed SVSHGP improves the scalability through stochastic variational inference. The proposed DVSHGP (i) enables large-scale regression via distributed computations, and (ii) achieves high model capability via localized experts and many inducing points. We compared them to existing scalable homoscedastic/heteroscedastic GPs on a synthetic dataset and four real-world datasets. The comparative results obtained indicated that DVSHGP exhibits superior performance in terms of both SMSE and MSLL; while due to the limited global inducing set, SVSHGP may sacrifice the prediction mean for capturing heteroscedastic noise.

Apart from our scalable HGP framework, there are some new GP paradigms developed recently from different perspectives for improving the predictive distribution. For instance, instead of directly modeling the heteroscedastic noise, we could introduce additional latent inputs to modulate the kernel [63, 64]; or we directly target the interested posterior distribution to enhance the prediction of [65]; or we adopt highly flexible priors, e.g., implicit processes, over functions [66]; or we mix various GPs during the inference [67, 68]; or we develop the specific non-stationary kernel [69]. They bring new interpretations at the cost of however losing the direct description of heteroscedasticity or raising complicated inference with high complexity. But all these paradigms together with our scalable HGPs greatly enable future exploitation of fitting the data distribution with high quality and efficiency.

Appendix A Non-negativity of

We know that the variational diagonal matrix expresses

In order to prove the non-negativity of , we should figure out the non-negativity of the diagonal elements of and , respectively.

Firstly, the diagonal elements of write

where the diagonal elements of satisfy , ; and the diagonal elements of are the variances of training conditional . Therefore, has non-negative diagonal elements.

Secondly, the diagonal elements of write, given ,

For , the diagonal elements are non-negative. For , given the Cholesky decomposition , we have

indicating that the diagonal elements must be non-negative.

Hence, from the foregoing discussions, we know that is a non-negative diagonal matrix.

Appendix B Derivatives of w.r.t. hyperparameters

Let collect variational parameters in the log form for non-negativity, we have the derivatives of w.r.t. as

where , and the operator represents the element-wise power.

The derivatives of w.r.t. the kernel parameters are

Similarly, the derivatives of w.r.t. the kernel parameters are

where and .

The derivatives of w.r.t. the mean parameter of is

Finally, we calculate the derivatives of w.r.t. the inducing points and . Since the inducing points are involved in the kernel matrices, we get the derivatives , , , , , and , where and . We first obtain the derivatives of w.r.t. as

where , and . Similarly, the derivatives of w.r.t. write

For in , we have

where , and . For , we have

For , we have

where .

The calculation of and requires a loop over and parameters of the inducing points, which is quite slow for even moderate , and . Fortunately, we know that the derivative only has or () non-zero elements. Due to the sparsity, can be performed in vectorized operations such that the derivatives w.r.t. all the inducing points can be calculated along a specific dimension.

Appendix C Natural gradients of and

For exponential family distributions131313The probability density function (PDF) of exponential family is , where is natural parameters, is underlying measure, is sufficient statistic, and is log normalizer. Besides, the expectation parameters are defined as . parameterized by natural parameters , we update the parameters using natural gradients as

where is the objective function, and is the fisher information matrix with being the expectation parameters of exponential distributions.

For , its natural parameters are which are partitioned into two components

where comprises the first elements of , and the remaining elements reshaped to a square matrix. Accordingly, the expectation parameters are divided as

Thereafter, we update the natural parameters with step as

where and . The derivatives and are respectively expressed as

where , and is a diagonal matrix with the diagonal element being

For , the updates of and follow the foregoing steps, with the derivatives and taking (19).

Acknowledgment

This work is funded by the National Research Foundation, Singapore under its AI Singapore programme [Award No.: AISG-RP-2018-004], the Data Science and Artificial Intelligence Research Center (DSAIR) at Nanyang Technological University and supported under the Rolls-Royce@NTU Corporate Lab.

References

- [1] C. E. Rasmussen and C. K. I. Williams, Gaussian processes for machine learning. MIT Press, 2006.

- [2] N. Lawrence, “Probabilistic non-linear principal component analysis with Gaussian process latent variable models,” Journal of Machine Learning Research, vol. 6, no. Nov, pp. 1783–1816, 2005.

- [3] B. Shahriari, K. Swersky, Z. Wang, R. P. Adams, and N. De Freitas, “Taking the human out of the loop: A review of Bayesian optimization,” Proceedings of the IEEE, vol. 104, no. 1, pp. 148–175, 2016.

- [4] H. Liu, J. Cai, and Y. Ong, “Remarks on multi-output Gaussian process regression,” Knowledge-Based Systems, vol. 144, no. March, pp. 102–121, 2018.

- [5] B. Settles, “Active learning,” Synthesis Lectures on Artificial Intelligence and Machine Learning, vol. 6, no. 1, pp. 1–114, 2012.

- [6] P. Kou, D. Liang, L. Gao, and J. Lou, “Probabilistic electricity price forecasting with variational heteroscedastic Gaussian process and active learning,” Energy Conversion and Management, vol. 89, pp. 298–308, 2015.

- [7] M. Lázaro-Gredilla, M. K. Titsias, J. Verrelst, and G. Camps-Valls, “Retrieval of biophysical parameters with heteroscedastic Gaussian processes,” IEEE Geoscience and Remote Sensing Letters, vol. 11, no. 4, pp. 838–842, 2014.

- [8] I. A. Almosallam, M. J. Jarvis, and S. J. Roberts, “GPz: non-stationary sparse Gaussian processes for heteroscedastic uncertainty estimation in photometric redshifts,” Monthly Notices of the Royal Astronomical Society, vol. 462, no. 1, pp. 726–739, 2016.

- [9] M. Bauza and A. Rodriguez, “A probabilistic data-driven model for planar pushing,” in International Conference on Robotics and Automation, 2017, pp. 3008–3015.

- [10] A. J. Smith, M. AlAbsi, and T. Fields, “Heteroscedastic Gaussian process-based system identification and predictive control of a quadcopter,” in AIAA Atmospheric Flight Mechanics Conference, 2018, p. 0298.

- [11] J. Kirschner and A. Krause, “Information directed sampling and bandits with heteroscedastic noise,” in Proceedings of the 31st Conference On Learning Theory, 2018, pp. 358–384.

- [12] A. Kendall and Y. Gal, “What uncertainties do we need in Bayesian deep learning for computer vision?” in Advances in Neural Information Processing Systems, 2017, pp. 5574–5584.

- [13] S. Urban, M. Ludersdorfer, and P. Van Der Smagt, “Sensor calibration and hysteresis compensation with heteroscedastic Gaussian processes,” IEEE Sensors Journal, vol. 15, no. 11, pp. 6498–6506, 2015.

- [14] F. C. Pereira, C. Antoniou, J. A. Fargas, and M. Ben-Akiva, “A metamodel for estimating error bounds in real-time traffic prediction systems,” IEEE Transactions on Intelligent Transportation Systems, vol. 15, no. 3, pp. 1310–1322, 2014.

- [15] E. Snelson and Z. Ghahramani, “Variable noise and dimensionality reduction for sparse Gaussian processes,” in Uncertainty in Artificial Intelligence, 2006, pp. 461–468.

- [16] P. W. Goldberg, C. K. Williams, and C. M. Bishop, “Regression with input-dependent noise: A Gaussian process treatment,” in Advances in Neural Information Processing Systems, 1998, pp. 493–499.

- [17] L. Muñoz-González, M. Lázaro-Gredilla, and A. R. Figueiras-Vidal, “Divisive Gaussian processes for nonstationary regression,” IEEE Transactions on Neural Networks and Learning Systems, vol. 25, no. 11, pp. 1991–2003, 2014.

- [18] L. Munoz-Gonzalez, M. Lázaro-Gredilla, and A. R. Figueiras-Vidal, “Laplace approximation for divisive Gaussian processes for nonstationary regression,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 38, no. 3, pp. 618–624, 2016.

- [19] A. D. Saul, J. Hensman, A. Vehtari, and N. D. Lawrence, “Chained Gaussian processes,” in Artificial Intelligence and Statistics, 2016, pp. 1431–1440.

- [20] K. Kersting, C. Plagemann, P. Pfaff, and W. Burgard, “Most likely heteroscedastic Gaussian process regression,” in International Conference on Machine Learning, 2007, pp. 393–400.

- [21] N. Quadrianto, K. Kersting, M. D. Reid, T. S. Caetano, and W. L. Buntine, “Kernel conditional quantile estimation via reduction revisited,” in International Conference on Data Mining, 2009, pp. 938–943.

- [22] M. Heinonen, H. Mannerström, J. Rousu, S. Kaski, and H. Lähdesmäki, “Non-stationary Gaussian process regression with hamiltonian monte carlo,” in Artificial Intelligence and Statistics, 2016, pp. 732–740.

- [23] M. Binois, R. B. Gramacy, and M. Ludkovski, “Practical heteroscedastic Gaussian process modeling for large simulation experiments,” Journal of Computational and Graphical Statistics, vol. 27, no. 4, pp. 808–821, 2018.

- [24] M. K. Titsias and M. Lázaro-Gredilla, “Variational heteroscedastic Gaussian process regression,” in International Conference on Machine Learning, 2011, pp. 841–848.

- [25] M. Menictas and M. P. Wand, “Variational inference for heteroscedastic semiparametric regression,” Australian & New Zealand Journal of Statistics, vol. 57, no. 1, pp. 119–138, 2015.

- [26] L. Muñoz-González, M. Lázaro-Gredilla, and A. R. Figueiras-Vidal, “Heteroscedastic Gaussian process regression using expectation propagation,” in Machine Learning for Signal Processing, 2011, pp. 1–6.

- [27] V. Tolvanen, P. Jylänki, and A. Vehtari, “Expectation propagation for nonstationary heteroscedastic Gaussian process regression,” in Machine Learning for Signal Processing, 2014, pp. 1–6.

- [28] M. Hartmann and J. Vanhatalo, “Laplace approximation and natural gradient for Gaussian process regression with heteroscedastic student-t model,” Statistics and Computing, vol. 29, no. 4, pp. 753–773, 2019.

- [29] H. Liu, Y.-S. Ong, X. Shen, and J. Cai, “When Gaussian process meets big data: A review of scalable GPs,” IEEE Transactions on Neural Networks and Learning Systems, pp. 1–19, 2020.

- [30] J. Quiñonero-Candela and C. E. Rasmussen, “A unifying view of sparse approximate Gaussian process regression,” Journal of Machine Learning Research, vol. 6, no. Dec, pp. 1939–1959, 2005.

- [31] M. Titsias, “Variational learning of inducing variables in sparse Gaussian processes,” in Artificial Intelligence and Statistics, 2009, pp. 567–574.

- [32] Y. Gal, M. van der Wilk, and C. E. Rasmussen, “Distributed variational inference in sparse Gaussian process regression and latent variable models,” in Advances in Neural Information Processing Systems, 2014, pp. 3257–3265.

- [33] T. N. Hoang, Q. M. Hoang, and B. K. H. Low, “A distributed variational inference framework for unifying parallel sparse Gaussian process regression models.” in International Conference on Machine Learning, 2016, pp. 382–391.

- [34] J. Hensman, N. Fusi, and N. D. Lawrence, “Gaussian processes for big data,” in Uncertainty in Artificial Intelligence, 2013, pp. 282–290.

- [35] T. N. Hoang, Q. M. Hoang, and B. K. H. Low, “A unifying framework of anytime sparse Gaussian process regression models with stochastic variational inference for big data,” in International Conference on Machine Learning, 2015, pp. 569–578.

- [36] A. Wilson and H. Nickisch, “Kernel interpolation for scalable structured Gaussian processes (KISS-GP),” in International Conference on Machine Learning, 2015, pp. 1775–1784.

- [37] T. D. Bui and R. E. Turner, “Tree-structured Gaussian process approximations,” in Advances in Neural Information Processing Systems, 2014, pp. 2213–2221.

- [38] G. E. Hinton, “Training products of experts by minimizing contrastive divergence,” Neural Computation, vol. 14, no. 8, pp. 1771–1800, 2002.

- [39] V. Tresp, “A Bayesian committee machine,” Neural Computation, vol. 12, no. 11, pp. 2719–2741, 2000.

- [40] M. P. Deisenroth and J. W. Ng, “Distributed Gaussian processes,” in International Conference on Machine Learning, 2015, pp. 1481–1490.

- [41] H. Liu, J. Cai, Y. Wang, and Y.-S. Ong, “Generalized robust Bayesian committee machine for large-scale Gaussian process regression,” in International Conference on Machine Learning, 2018, pp. 3137–3146.

- [42] H. Liu, J. Cai, Y.-S. Ong, and Y. Wang, “Understanding and comparing scalable Gaussian process regression for big data,” Knowledge-Based Systems, vol. 164, pp. 324–335, 2019.

- [43] E. Snelson and Z. Ghahramani, “Local and global sparse Gaussian process approximations,” in Artificial Intelligence and Statistics, 2007, pp. 524–531.

- [44] J. Vanhatalo and A. Vehtari, “Modelling local and global phenomena with sparse Gaussian processes,” in Uncertainty in Artificial Intelligence, 2008, pp. 571–578.

- [45] R. Ouyang and K. H. Low, “Gaussian process decentralized data fusion meets transfer learning in large-scale distributed cooperative perception,” in AAAI Conference on Artificial Intelligence, 2018.

- [46] K. Chalupka, C. K. Williams, and I. Murray, “A framework for evaluating approximation methods for Gaussian process regression,” Journal of Machine Learning Research, vol. 14, no. Feb, pp. 333–350, 2013.

- [47] M. E. Tipping, “Sparse Bayesian learning and the relevance vector machine,” Journal of Machine Learning Research, vol. 1, no. Jun, pp. 211–244, 2001.

- [48] C. E. Rasmussen and J. Quinonero-Candela, “Healing the relevance vector machine through augmentation,” in International Conference on Machine Learning, 2005, pp. 689–696.

- [49] E. Snelson and Z. Ghahramani, “Sparse Gaussian processes using pseudo-inputs,” in Advances in Neural Information Processing Systems, 2006, pp. 1257–1264.

- [50] M. Bauer, M. van der Wilk, and C. E. Rasmussen, “Understanding probabilistic sparse Gaussian process approximations,” in Advances in Neural Information Processing Systems, 2016, pp. 1533–1541.

- [51] H. Yu, T. Nghia, B. K. H. Low, and P. Jaillet, “Stochastic variational inference for bayesian sparse Gaussian process regression,” in International Joint Conference on Neural Networks, 2019, pp. 1–8.

- [52] D. G. Matthews, G. Alexander, M. Van Der Wilk, T. Nickson, K. Fujii, A. Boukouvalas, P. León-Villagrá, Z. Ghahramani, and J. Hensman, “GPflow: A Gaussian process library using TensorFlow,” Journal of Machine Learning Research, vol. 18, no. 1, pp. 1299–1304, 2017.

- [53] M. Abadi, P. Barham, J. Chen, Z. Chen, A. Davis, J. Dean, M. Devin, S. Ghemawat, G. Irving, M. Isard et al., “Tensorflow: A system for large-scale machine learning,” in 12th USENIX Symposium on Operating Systems Design and Implementation, 2016, pp. 265–283.

- [54] C. E. Rasmussen and H. Nickisch, “Gaussian processes for machine learning (GPML) toolbox,” Journal of Machine Learning Research, vol. 11, no. Nov, pp. 3011–3015, 2010.

- [55] S. Sun, “A review of deterministic approximate inference techniques for Bayesian machine learning,” Neural Computing and Applications, vol. 23, no. 7-8, pp. 2039–2050, 2013.

- [56] J. Hensman and N. D. Lawrence, “Nested variational compression in deep Gaussian processes,” arXiv preprint arXiv:1412.1370, 2014.

- [57] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [58] B. Ingram and D. Cornford, “Parallel geostatistics for sparse and dense datasets,” in GeoENV VII–Geostatistics for Environmental Applications. Springer, 2010, pp. 371–381.

- [59] H. Salimbeni, S. Eleftheriadis, and J. Hensman, “Natural gradients in practice: Non-conjugate variational inference in Gaussian process models,” in International Conference on Artificial Intelligence and Statistics, 2018, pp. 689–697.

- [60] J. Hensman, N. Durrande, A. Solin et al., “Variational fourier features for Gaussian processes.” Journal of Machine Learning Research, vol. 18, pp. 1–52, 2018.

- [61] D. Dheeru and E. Karra Taniskidou, “UCI machine learning repository,” 2017. [Online]. Available: http://archive.ics.uci.edu/ml

- [62] M. Kaul, B. Yang, and C. S. Jensen, “Building accurate 3D spatial networks to enable next generation intelligent transportation systems,” in International Conference on Mobile Data Management, vol. 1, 2013, pp. 137–146.

- [63] C. Wang and R. M. Neal, “Gaussian process regression with heteroscedastic or non-gaussian residuals,” arXiv preprint arXiv:1212.6246, 2012.

- [64] V. Dutordoir, H. Salimbeni, J. Hensman, and M. Deisenroth, “Gaussian process conditional density estimation,” in Advances in Neural Information Processing Systems, 2018, pp. 2385–2395.

- [65] M. Jankowiak, G. Pleiss, and J. R. Gardner, “Sparse Gaussian process regression beyond variational inference,” arXiv preprint arXiv:1910.07123, 2019.

- [66] C. Ma, Y. Li, and J. M. Hernandez-Lobato, “Variational implicit processes,” in International Conference on Machine Learning, 2019, pp. 4222–4233.

- [67] T. Nguyen and E. Bonilla, “Fast allocation of Gaussian process experts,” in International Conference on Machine Learning, 2014, pp. 145–153.

- [68] D. Wu and J. Ma, “A two-layer mixture model of Gaussian process functional regressions and its MCMC EM algorithm,” IEEE Transactions on Neural Networks and Learning Systems, vol. 29, no. 10, pp. 4894–4904, 2018.

- [69] S. Remes, M. Heinonen, and S. Kaski, “Non-stationary spectral kernels,” in Advances in Neural Information Processing Systems, 2017, pp. 4642–4651.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/a3f231f6-5290-4b1f-aa04-bc2fb933e8b3/HTLiu.png) |

Haitao Liu received the Ph.D. degree from the School of Energy and Power Engineering, Dalian University of Technology, Dalian, China, in 2016. He is currently a Research Fellow with the Rolls-Royce@NTU Corp Laboratory, Nanyang Technological University, Singapore. His current research interests include multi-task learning, large-scale Gaussian process, active learning, and optimization. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/a3f231f6-5290-4b1f-aa04-bc2fb933e8b3/YSOng.png) |

Yew-Soon Ong (M’99-SM’12-F’18) received the Ph.D. degree in artificial intelligence in complex design from the University of Southampton, U.K., in 2003. He is a President’s Chair Professor in Computer Science at the Nanyang Technological University (NTU), and holds the position of Chief Artificial Intelligence Scientist at the Agency for Science, Technology and Research Singapore. At NTU, he serves as Director of the Data Science and Artificial Intelligence Research Center and Director of the Singtel-NTU Cognitive & Artificial Intelligence Joint Lab. His research interest is in artificial and computational intelligence. He is founding Editor-in-Chief of the IEEE Transactions on Emerging Topics in Computational Intelligence, Technical Editor-in-Chief of Memetic Computing and Associate Editor of IEEE Transactions on Neural Networks & Learning Systems, the IEEE Transactions on Cybernetics, IEEE Transactions on Evolutionary Computation and others. He has received several IEEE outstanding paper awards, listed as a Thomson Reuters highly cited researcher and among the World’s Most Influential Scientific Minds. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/a3f231f6-5290-4b1f-aa04-bc2fb933e8b3/JFCai.png) |

Jianfei Cai (S’98–M’02–SM’07) is a Professor at Faculty of IT, Monash University, where he currently serves as the Head for the Data Science & AI Department. Before that, he was a full professor, a cluster deputy director of Data Science & AI Research center (DSAIR), Head of Visual and Interactive Computing Division and Head of Computer Communications Division in Nanyang Technological University (NTU). His major research interests include computer vision, multimedia and deep learning. He has published more than 200 technical papers in international conferences and journals. He is a co-recipient of paper awards in ACCV, ICCM, IEEE ICIP and MMSP. He has served as an Associate Editor for IEEE T-IP, T-MM, T-CSVT and Visual Computer as well as serving as Area Chair for ICCV, ECCV, ACM Multimedia, ICME and ICIP. He was the Chair of IEEE CAS VSPC-TC during 2016-2018. He had also served as the leading TPC Chair for IEEE ICME 2012 and the best paper award committee co-chair for IEEE T-MM 2019. |