Latent Evolution Model for Change Point Detection in Time-varying Networks

Abstract

Graph-based change point detection (CPD) play an irreplaceable role in discovering anomalous graphs in the time-varying network. While several techniques have been proposed to detect change points by identifying whether there is a significant difference between the target network and successive previous ones, they neglect the natural evolution of the network. In practice, real-world graphs such as social networks, traffic networks, and rating networks are constantly evolving over time. Considering this problem, we treat the problem as a prediction task and propose a novel CPD method for dynamic graphs via a latent evolution model. Our method focuses on learning the low-dimensional representations of networks and capturing the evolving patterns of these learned latent representations simultaneously. After having the evolving patterns, a prediction of the target network can be achieved. Then, we can detect the change points by comparing the prediction and the actual network by leveraging a trade-off strategy, which balances the importance between the prediction network and the normal graph pattern extracted from previous networks. Intensive experiments conducted on both synthetic and real-world datasets show the effectiveness and superiority of our model.

keywords:

Change point detection , Time-varying networks , Network prediction1 Introduction

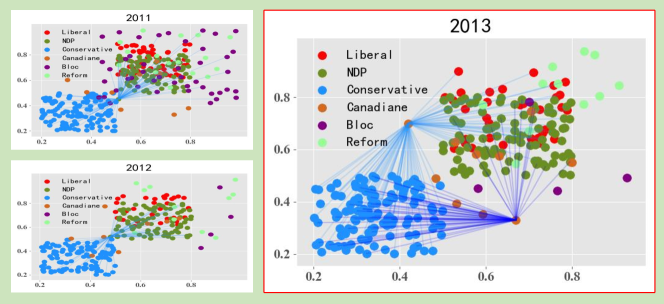

Time-varying network has shed a light on the spatio-temporal information of this network (graph), which represents multiple structure of nodes and dynamic changes [1]. A stable and gradual development of such network is essential to many real-world applications such as city-wide traffic management, weather detection, and detection of epidemic outbreaks [2, 3, 4, 5, 6]. As one of the anomaly detection tasks, change point detection (CPD) concentrates on identifying the anomalous timestamps in temporal networks, which deviates from the normal network evolution [7]. For example, in the Canadian parliament voting network, there is an anomalous voting pattern in 2013, as shown in the right part of Figure 1 (a). The graph in the red box reveals different voting interactions compared with previous two years (2011 and 2012). CPD can identify anomalies like this from the time-varying network sequences.

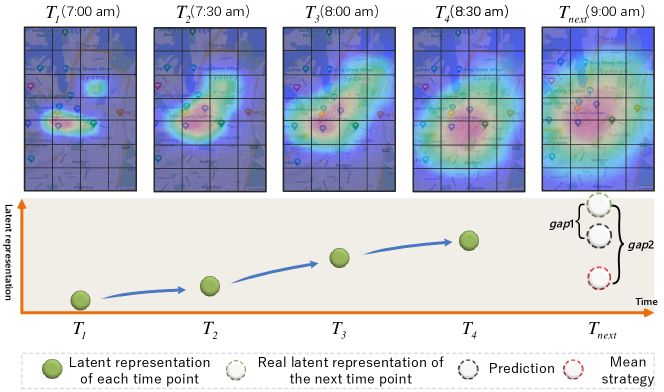

Nowadays, existing methods mainly compare the target network with a learned normal graph pattern [7, 8]. This normal pattern is extracted from previous networks in a given time-window via a mean or global representation learning method [7, 9, 10]. Even these methods have achieved encouraging results on the synthetic test, most of them neglect capturing the real-world dynamic evolution patterns. In other words, they are suitable for implementing in a relatively stable environment [11], but not always able to capture anomalies in temporal networks (e.g., traffic network) , as they do not take into account the evolution trend across the temporal networks [12, 13, 14]. For example, the upper part of Figure 2 illustrates the temporal changes of crowd flow in the Sydney traffic network. If these traffic maps are embedded into a latent space, their latent representations could be presented in the bottom part of this figure. There is a clear upward trend in the real-world traffic network. Considering the change point detection for the next timestamp, we aim to capture the time-evolving trend and make an accurate prediction to the traffic map at . When a prediction (represented by the black dotted circle) is obtained, we can compare it with the real map representation (the green dotted circle) at . However, if we use an average or global method to compute the normal graph pattern of previous networks, we can only capture the latent representation as illustrated by the red dotted circle. Apparently, the is much larger than , suggesting that only extracting the previous normal graph pattern based on the average method leads to a distorted result. Moreover, a time-varying network is not only reflected by the link connections, but also varied in the weight changes. Even though a few of methods can capture the changes for link connections, they do not consider the dynamic weights [15].

Motivated by the above issues, we draw inspiration from several latent space prediction model [16, 17] and propose a new evolution method for the change point detection. One of the purposes in this paper is to capture the evolution trend of a time-varying network. Once the trend is learned, we can make a prediction of the network with the evolving patterns. Accordingly, the difference between the prediction and the actual network can be recognized as a criteria to identify the change point. After casting the problem as a prediction task, there are two challenges along with that need to be carefully handled.

-

1.

One is how to capture temporal dependencies and evolution trends.

-

2.

The second is how to identify the anomalous network by considering the prediction result.

To address these challenges, we have developed a prediction model for the time-varying network, and proposed a trade-off strategy based on the prediction result and the previous normal graph pattern to detect change points. Specifically, we first embedded the original network into latent spaces via matrix factorization model. Then, our method goes a step further to capture the dynamic movements of these latent spaces from current timestamp to the next via the latent transition learning process. When the latent spaces and transition matrices are learned, we can make a prediction to the target network. At second, we choose the Laplacian spectrum strategy to compare the differences between the prediction and the actual network. At last, the normal graph pattern learned from previous networks is taken into consideration as a trade-off strategy to identify whether the target network is a change point. We summarize the main contributions and innovations of this paper as follows:

- 1.

-

2.

To improve the effectiveness and robustness of the model in various real-world scenarios, we further take long-term and periodic properties into consideration, and propose a novel long-term guidance method based on the weighted multi-view learning.

-

3.

We develop a trade-off strategy to identify change points based on the Laplacian spectrum method, which balances the importance between the prediction network and the normal graph pattern extracted from previous networks.

2 Related Work

2.1 Change Point Detection

In this section, we briefly introduce some related literatures of CPD, which can be used to discover outliers in network and sequences [18, 19, 20, 21]. [22] provided the first attempt on transforming the CPD problem to detect the times of fundamental changes. The proposed LetoChange method used Bayesian hypothesis testing to determine whether a model with parameter changes is acceptable. [3] proposed a Markov process based method, which treats the time-varying network as a first order Markov process. This work used joint edge probabilities as the ”feature vector”. [10] proposed a tensor-based CPD model. Tensor factorization is widely recognized as an effective method to capture a low-rank representation in the time dimension. In this method, after getting the latent attributes of each time point, the clustering and outlier detection methods were used to identify change points. Activity vector method was proposed by [9], it focused on finding anonymous networks which are significant deviation from previous normal ones. Activity vector leveraged the principal eigenvector of the adjacency matrix to present each network, it considered a short term window to capture the normal graph pattern. Currently, [7] proposed a Laplacian-based detection method for CPD, named LAD. It utilised the Laplacian matrix and SVD to obtain embedding vector of each network. In additional, two window sizes are involved to capture both short and long term properties. [15] proposed an efficient approach for network change point localization. However, this method only considered the changes in link connections that cannot capture the dynamics for weight graphs.

As aforementioned discussion, most of the existing relevant methods did not fully consider the dynamic trends in the time-varying networks when detecting change points.

2.2 Dynamic Latent Space Model

Recent studies have indicated that the latent space learning methods provide excellent solutions for the dynamic network problems, e.g., flow prediction [17], community detection systems [23], social network evolution learning [24], sequential analysis [25, 26, 27, 28], and traffic speed prediction [16]. The benefits of a latent space model is to obtain the low-rank approximations from the original features, so that obtain a more compact matrix by removing the redundant information of the large scale matrix [29, 30]. [31] proposed a dynamic model for a static co-occurrence model CODE, where the coordinates can change with time to get new observations. There are several advanced investigations focusing on traffic analysis under the latent space assumption. For example, to address the issues of finding abnormal activities in crowded scenes, [32] devised a spatio-temporal Laplacian eigenmap strategy to detect anomalies. [33] leveraged a tensor structure to resolve the high-order time-varying prediction problem. The key characteristics of the original time sequences can be represented by the learned latent core tensor. [16] developed a real-time traffic speed prediction model that learns temporal and topological features from the road network. It then used an online latent space learning method to solve the proposed constrained optimization problem. [34] took the advantage of dynamic latent space learning to address the temporal analysis problem very efficiently on the large-scale streaming data. In other applications, [4, 17] used the online matrix factorization model to solve the crowd flow distribution problem.

In summary, latent space models can effectively capture dynamic evolving trends from real-world networks, as well as learning the latent embedding for such networks.

3 Problem Formulation

In the CPD problem, we use a directed graph to define the network at timestamp , where is the group of edges and denotes the group of vertexes. An edge denotes the connection between node and node with weight at timestamp . Following the same setting in [7, 10], = 1 for all edges in unweighted graphs and for weighted graphs; and the number of nodes in the graph is assumed to be constant across all timestamps.

Given a certain time window , our work first aims to predict the next network by using a series of previous networks, . Second, the next snapshot will be identified as the change point or not via a trade-off strategy by leveraging the prediction and the previous normal graph pattern. Among which, the normal graph pattern indicates the average network embedding learned from previous in a given time window. The detailed learning method is presented in Section 4.5.

4 Methodology

4.1 Temporal Topology Embedding

Considering inherent complexities of the time-varying network, we leverage the latent space model to represent dynamic graphs. Latent space models are widely applied into solving network-wide problems, e.g., community detection [23], social and heterogeneous networks construction[1], and network-wide traffic prediction[17, 35], etc. The benefit of low-rank approximation of latent space model is to get a more compact matrix by removing the redundant information of the large-scale matrix [36]. For a slice (the timestamp is omitted for brevity), we use tri-factorization that decomposes into three latent representations, in the form , where and denote the latent attributes of start and end nodes respectively, denotes the attribute interaction patterns, is the number of nodes and is the number of dimension of latent spaces. Therefore, we approximate by a non-negative matrix tri-factorization form :

| (1) |

Note that, some tri-factorization methods prefer to use the factorization form because they usually focus on the undirected graph [23, 37]. However, in our problem, some real-world networks are formulated as the directed graph. In this way, provides a more flexible formulation to represent the graph [38, 39]. Thus, our basic problem formulation differs from that of methods in [16, 4, 17].

The reasons that we utilise the non-negativity constraint are: 1) the reasonable assumptions of latent attributes and the better interpretability of the results [40, 41]; 2) all weights in our networks are non-negative, thus latent spaces , and should be non-negative as well.

To take the time-series pattern into consideration, we formulate this sequential problem as a time-dependent model. Given a certain time window (i.e., a set contains previous networks, and presents the current time as well), for each timestamp [1, ], our method aims to learn the time-dependent latent representations , and from . Although the latent attribute matrix is time-dependent, we assume that the latent attribute interaction matrix is an inherent property, and thus is fixed for all timestamps. This assumption is based on the fact that temporal network exists invariant properties [16]. After considering the temporal pattern, our model can be formulated as:

| (2) |

4.2 Latent Space Evolution

Since our model is a time-varying forecasting system, the network is continually changing over time. We not only want to learn the time-dependent latent attributes, but also aim to learn the evolution patterns of latent attributes and from previous timestamps to the next, i.e., how to learn the evolution forms such as and .

To this end, we define two transition matrices and to represent the smooth trends of and in T previous conditions. The learned transition patterns and can be treated as a global evolution trend from previous timestamps. Thus, matrices and can be recognized as the evolving patterns of the dynamic network. For example, approximates the changes of between time t-1 to t, i.e., optimizes .

Then, let us involve all timestamps in a window-size , our latent transition learning can be expressed as:

| (3) |

When we consider the temporal topology problem jointly, we have:

| (4) |

where is the regularization parameter.

To this stage, transition matrices and can learn the short-term knowledge from previous networks in a window size. Considering that the long-term knowledge is helpful for the CPD and network evolution problems [3, 17], we also take the long-term pattern into consideration to improve the prediction performance in the next section.

4.3 Long-term Guidance

Dynamic networks, such as climate and travel networks, usually have a strong periodic property. Such property is considered as the long-term guidance which has more gradual trends. Because of this point, we can leverage a long-term window to achieve the large-scaled network evolution, and propose a novel long-term guidance to extract periodic and stable information. Given a long-term set of networks (the window size extends from to 4 for example, then the long-term guidance will consider the states of 4 previous networks), we learn two guide matrices, and , to represent the common characteristic of :

| (5) |

where is the adaptive weight calculated by function: , and is the similarity between and the current network . We use the matrix L2-norm to calculate the similarities among networks, and then normalize them satisfying . The assumption behind this is that can provide more useful information if it is more similar to . is the Hadamard product operator.

The main idea of the above process is analogous to the multi-view learning which projects multiple views into shared common spaces [42, 21]. The adaptive weight controls how much information can be extracted from . Such technique has been successfully used in common space learning [43, 44]. The learning approaches of Eq. (5) are:

| (6) |

| (7) |

After having and , the strategy of the long-term guidance is formulated as:

| (8) |

where and denote the latent representations at current timestamp .

Taking all techniques jointly into consideration, the final optimization function is expressed as:

| (9) |

where is the regularization factor.

Make a Prediction. The learned latent space matrices and , and the evolving patterns , and can be used to make the prediction after solving Equation (9). Then we have the predicted network as:

| (10) |

4.4 Learning Process

Due to the non-convexity of Eq. (9), we use an effective gradient descent approach with the multiplicative update method [45] to find its local optimization.

Theorem 1

is non-increasing by optimizing , , and alternatively.

| (11) |

| (12) |

is given by:

| (13) |

| (14) |

| (15) |

| (16) |

where is the transpose of . The proof of Theorem 1 and the detailed derivations are deferred to the Appendix.

4.5 Change Point Detection

By computing the prediction network for timestamp +1, we can compare it with the actual network to check whether is a change point or not. Specifically, we use the Laplacian-spectrum-based method which has shown a state-of-the-art performance in graph comparisons [46]. The eigenvalues of the Laplacian matrix capture the structural properties of the corresponding graph. Note that real-world applications contain both undirected and directed graphs. For the undirected graphs, we construct a normalized Laplacian matrix , defined as , where is the identity matrix; is the weight matrix of and is a diagonal matrix = . For the directed graphs, we leverage the method proposed in [47] to build the Laplacian matrix:

| (17) |

| (18) |

where is the matrix with the Perron vector [48] of in the diagonal; is a transition probability matrix [47].

Given the Laplacian matrices for all timestamps, i.e., , , and , where is built by actual network ; is built by the prediction . We follow a well-performed metric that utilises the singular values calculated of Laplacian matrices as the network attributes [7]. Then, the vector made up of singular values is denoted as , [1, +1]. Let () denote the vector of actual network, (singular values of ) denote the vector of prediction network. The first anomaly score based on the cosine distance is defined as:

| (19) |

It is evident that the more dissimilar between the prediction and the actual network, is closer to 1, and vice versa111The range of is [0,1] because of the non-negativity of .

Trade-off Strategy

The score of reflects the difference between the prediction and ground truth. This evaluation is more suitable for the temporal network that evolves gradually. However, the normal graph pattern extracted from the previous networks is also important since it can reflect the average evolution representation. Thus we introduce the second anomaly score which considers the normal graph patterns. Let be the average vector of previous snapshots, i.e., = (, is defined as:

| (20) |

Finally, the combined anomaly score is a trade-off between and :

| (21) |

where is the trade-off factor. Algorithm 1 summarizes our learning and detection process in LEM-CPD.

4.6 Complexity Analysis

Here we analyse the time complexity of LEM-CPD algorithm. After the prediction process, the time complexity of the change point detection is mainly affected by SVD. Even though randomized SVD has an complexity, it is not involved in the update loop of variables (such as and ). In the prediction process, the time complexity of Eq. (11) - Eq. (12) is dominated by the matrix multiplication operations in each iteration. Therefore, for each iteration, the computational complexity of LEM-CPD is . Compared with the latest CPD method LAD () [7], the time complexity of our method is , which is higher than LAD.

5 Experiments and Results

Baselines

We compare the proposed method LEM-CPD with the following baselines. All parameters of the proposed method and baselines are optimized by the grid search method in the prepared validation dataset. For example, in the real-world Flow dataset, five workdays in one week is chosen as the validation data, then we test our method on the next week.

-

1.

LT-A. We compared the next network with its long-term average network.

-

2.

LAD. [7] proposed a Laplacian-based detection method for CPD. It used the Laplacian matrix and SVD to obtain embedding vector of each network. In additional, two window sizes are involved to capture both short and long term properties.

-

3.

LADdos. [8] devised a variant method of LAD to identify time steps where the graph structure deviates significantly from the norm.

-

4.

EdgeMonitoring. This method used joint edge probabilities as the ”feature vector”, and formulated the time-varying network as a first order Markov process [3].

-

5.

TensorSplat. [10] proposed a tensor-based CPD model, which utilised the CP decomposition to capture a low-rank space in the time dimension. Then an outlier detection method is used to identify the change points.

-

6.

Activity vector. This algorithm used the principal eigenvector of the adjacency matrix to present each network and only considered short term properties [9].

-

7.

LEM-CPD-lt. The variant of the proposed method, which removes the long-term guidance.

Metrics

The Hit Ratio (HR@K) metric is a popular evaluation method that reflects the proportion of right detected anomalies in the top K most anomalous points. Unlike the synthetic experiments, we cannot get the ground truth in the real-world datasets, and thus the well-known anomalous timestamps are used as labels.

5.1 Synthetic Experiments

Setting: We chose a widely used data generator Stochastic Block Model (SBM) [49] to generate synthetic data. We followed two settings in the data generation process in [7]. 1) Pure datasets: four time-varying networks with 500 nodes and 151 time points are generated, which contain 3, 7, 10, 15 change points respectively. Therefore, we can use HR@K, where K is setting to 3, 7, 10, 15 to evaluate all competitive methods. Hybrid dataset: we generate four homogeneous networks of the Pure datasets, while contains both change points and event points according to the hybrid setting in [7]. Thus, HR@K (K = 3, 7, 10, 15) is also suitable in this dataset. In the experiments, our short and long term window sizes are set to 3 and 12 respectively; the change point detection threshold is set to 0.5; the trade-off factor is set to 0.2.

Discussion: Table 1 presents the HR@K accuracy of all test methods on eight datasets. It is apparent that there are several methods which can effectively handle the CPD problem in the synthetic datasets, e.g., LEM-CPD (ours), LAD, and EdgeMonitoring. It is mainly because the normal evaluation pattern in the synthetic datasets is very stable, so that these method can easily detect anomalies if some dramatically changes appeared. TensorSplat method cannot capture all change points in the sparse datasets. Unlike LADdoc, LAD and LEM-CPD, Activity vector chooses the principal eigenvectors of the adjacency matrix to represent the graph embedding directly, the latent space may not be learned well compared with the Laplacian spectrum method. Furthermore, as the results shown in Table 1, we can see that without the long-term guidance, LEM-CPD-lt performs worse than our final model, which demonstrates the effectiveness of the long-term guidance. For methods that use the prediction process, such as LEM-CPD-lt and LEM-CPD, can achieve the best detection accuracy as shown in Table 1.

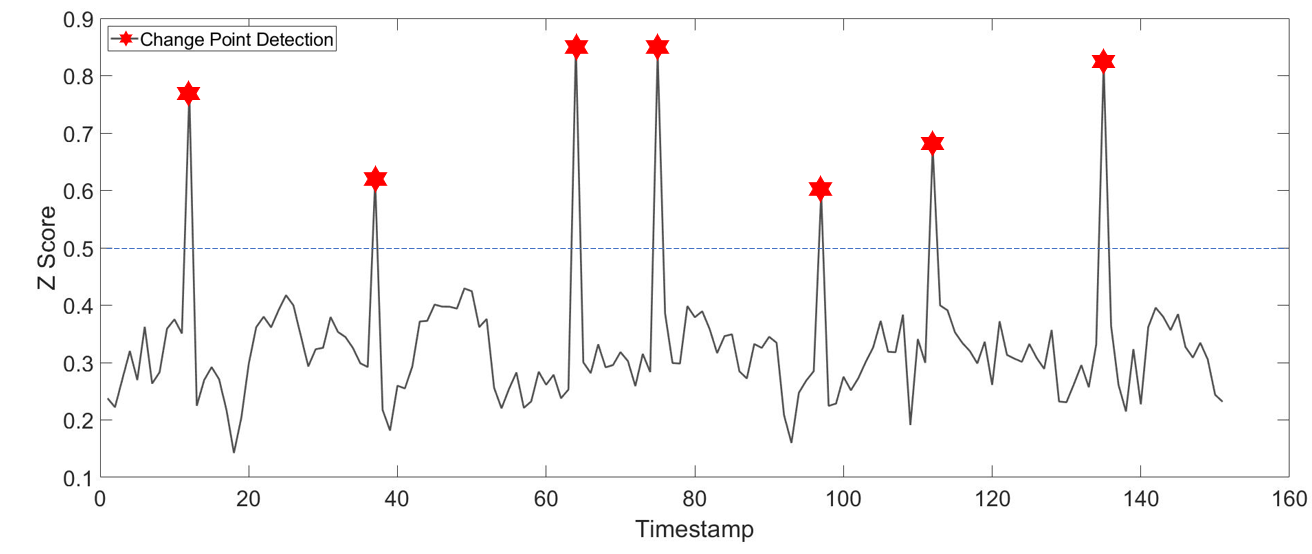

Figure 3 provides the detection results and their scores in the Pure dataset with seven change points. The seven most anomalous points are correctly detected. We can clearly see that the scores of change points are markedly different from the normal ones. The scenario proves our point that the normal evolutionary pattern of the synthetic network is stable. Therefore, in our trade-off strategy, the score can greatly handle this situation, so that we use a small in the experiments.

| Metric | HR@3 | HR@7 | HR@10 | HR@15 | Average | ||||

| Dataset | Pure | Hybrid | Pure | Hybrid | Pure | Hybrid | Pure | Hybrid | |

| LT-A | 66.7% | 33.3% | 42.9% | 28.6% | 40.0% | 20.0% | 46.7% | 33.3% | 38.9% |

| TensorSplat [10] | 66.7% | 66.7% | 71.4% | 42.9% | 70.0% | 60.0% | 73.3% | 66.7% | 64.7% |

| Activity vector [9] | 66.7% | 33.3% | 57.1% | 14.2% | 60.0% | 30.0% | 66.7% | 33.3% | 45.2% |

| EdgeMonitoring [3] | 100.0% | 66.7% | 71.4% | 57.1% | 50.0% | 60.0% | 73.3% | 80.0% | 69.8% |

| LAD [7] | 100.0% | 66.7% | 100.0% | 85.7% | 90.0% | 80.0% | 86.7% | 86.7% | 86.9% |

| LADdoc [8] | 100.0% | 66.7% | 100.0% | 85.7% | 80.0% | 90.0% | 80.0% | 86.7% | 86.1% |

| LEM-CPD-lt (Ours) | 100.0% | 100.0% | 100.0% | 85.7% | 90.0% | 80.0% | 80.0% | 86.7% | 90.3% |

| LEM-CPD (Ours) | 100.0% | 100.0% | 100.0% | 85.7% | 90.0% | 90.0% | 86.7% | 86.7% | 92.4% |

| Metric | Flow | Maintenance | Senate | Average | |||

| Dataset | HR@3 | HR@6 | HR@1 | HR@3 | HR@1 | HR@2 | |

| LT-A | 23.3% | 16.7% | 100.0% | 66.6% | 50.0% | 50.0% | 51.1% |

| TensorSplat [10] | 50.0% | 43.3% | 100.0% | 66.6% | 100.0% | 50.0% | 68.3% |

| Activity vector [9] | 33.3% | 26.7% | 0.0% | 33.3% | 50.0% | 50.0% | 32.2% |

| EdgeMonitoring [3] | 53.3% | 40.0% | 100.0% | 66.6% | 100.0% | 100.0% | 76.6% |

| LAD [7] | 40.0% | 36.7% | 100.0% | 33.3% | 100.0% | 100.0% | 68.3% |

| LADdoc [8] | 53.3% | 40.0% | 100.0% | 33.3% | 100.0% | 100.0% | 71.1% |

| LEM-CPD-lt (Ours) | 76.6% | 63.3% | 100.0% | 66.6% | 100.0% | 100.0% | 84.4% |

| LEM-CPD (Ours) | 90.0% | 76.6% | 100.0% | 66.6% | 100.0% | 100.0% | 88.9% |

5.2 Real-world Experiments

Setting: To evaluate the performance of our method in the real-world time-varying networks, we conduct experiments on three datasets. The selection of K of HR@K is based on the number of change points in the corresponding dataset. For example, there are two change points in the Senate dataset, then K will be chosen from 1 and 2.

1) Flow dataset: a large-scale, real-world dataset that is collected from the city transport. This data records the crowd flows among the entire city train network. In a weekday, there exist six change points during the rush and non-rush transition period. 2) Maintenance dataset: The Maintenance of metro lines will change the connections among stations. In this dataset, the one line of the city train network had been maintained on three separated days. Therefore, three change points are included in the dataset (21 days in total). 3) Senate dataset: a social connection network between legislators during the 97rd-108th Congress [50]. In this dataset, 100th and 104th congress networks are recognized as the change points in many references [3, 7]. The performances of different methods are summarized in Table 2.

Discussion: Our model, LEM-CPD significantly outperforms all other comparative methods as shown in Table 2, especially LEM-CPD has outperformed in the Flow dataset. In this test, we report the average accuracy of HR@3 and HR@6 in five weekdays. Compared with LAD and Activity vector, the prediction strategy can capture much more dynamic trends with time evolving. As the motivation illustrated in the Introduction, LADdoc, LAD and Activity vector may difficult to identify the natural gaps between the previous normal graph pattern and the actual network. TensorSplat and EdgeMonitoring achieved the second and third positions because they considered a part of evolution trend during their learning process, e.g., tensor-based methods are able to get the evolution information. In the real-world experiments, we set up a larger =0.6 to let the prediction score participate more in the final decision. There presents similar conclusions in the Maintenance dataset because the city train network has the strong dynamic characters. However, in the Senate dataset, LADdoc, LAD and EdgeMonitoring methods can also get 100% accuracy, it is because the 100th and 104th congress networks are significant deviation from others.

| Weekdays | Weekends | |

| GPR | 3.283 | 2.333 |

| LSM-RN-All | 3.713 | 2.105 |

| SARIMA | 1.939 | 2.270 |

| HA | 1.652 | 2.176 |

| OLS [17] | 1.531 | 1.944 |

| LEM-CPD (Ours) | 1.615 | 2.037 |

5.3 Prediction Evaluation and Visualization

5.3.1 Baselines and Metric used in the Prediction Evaluation

HA: We predict CFD by the historical average method on each timespan. For example, all historical time spans from 9:45 AM to 10:00 AM on Tuesdays are utilized to do the forecast for the same time interval.

OLS: the latest Online latent space based model utilizing side information [4].

LSM-RN-All: A state-of-the-art matrix factorization based method to predict network-wide traffic speed problem [16].

SARIMA: A linear regression model with seasonal property to effectively predict future values in a time series.

GPR: Gaussian process regression (GPR) would handle the spatiotemporal pattern prediction in a stochastic process. It usually suffers from a heavy computational cost [51].

Measures. The metric used in this paper is Mean Absolute Error (MAE), as it is generally employed in evaluating time series accuracy [52, 13].

where is a forecasting value and is the ground truth; is the number of predictions.

5.3.2 Results and Visualization

This part of experiments is designed to assess the prediction ability of our model. Table 3 reports the average prediction error (mean absolute error) on the Flow dataset. We compared our model with some state-of-the-art methods. In the prediction process, LEM-CPF can achieve the second best result because the best model OLS involves much more external information.

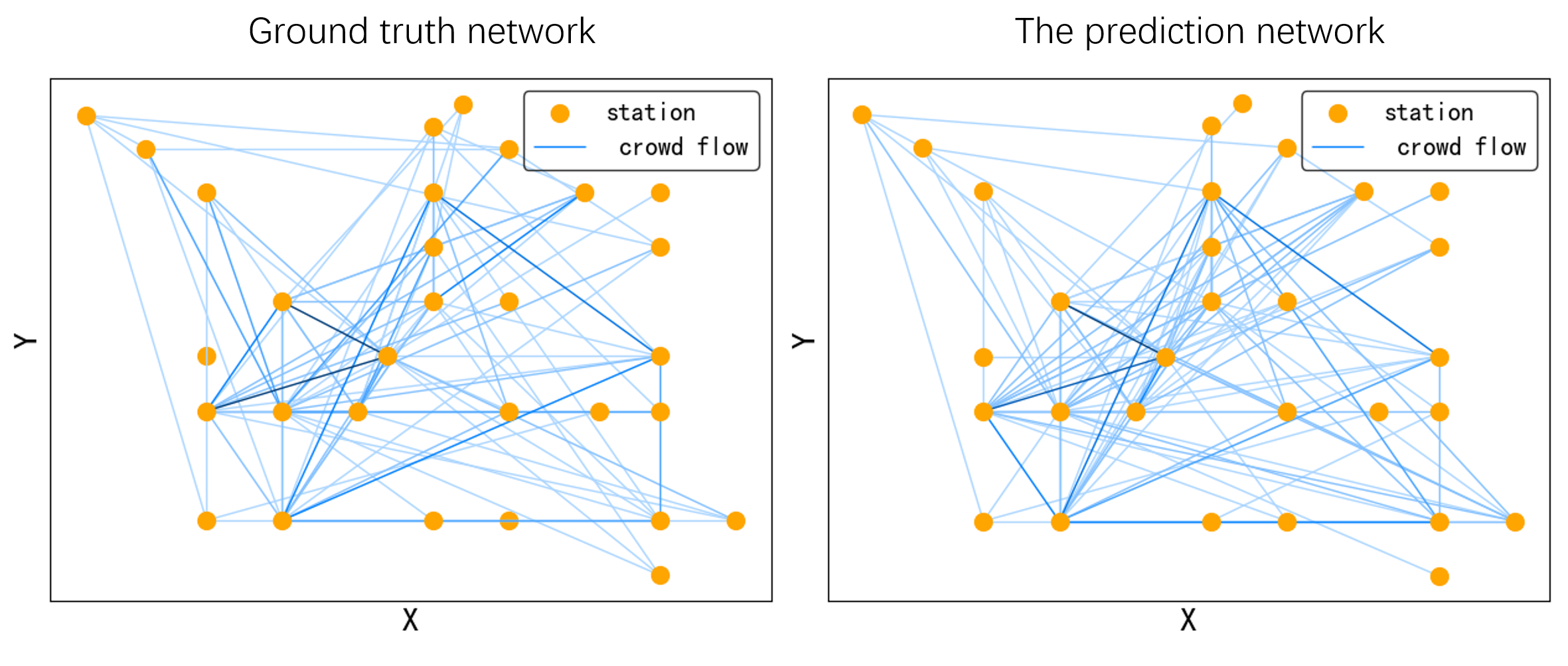

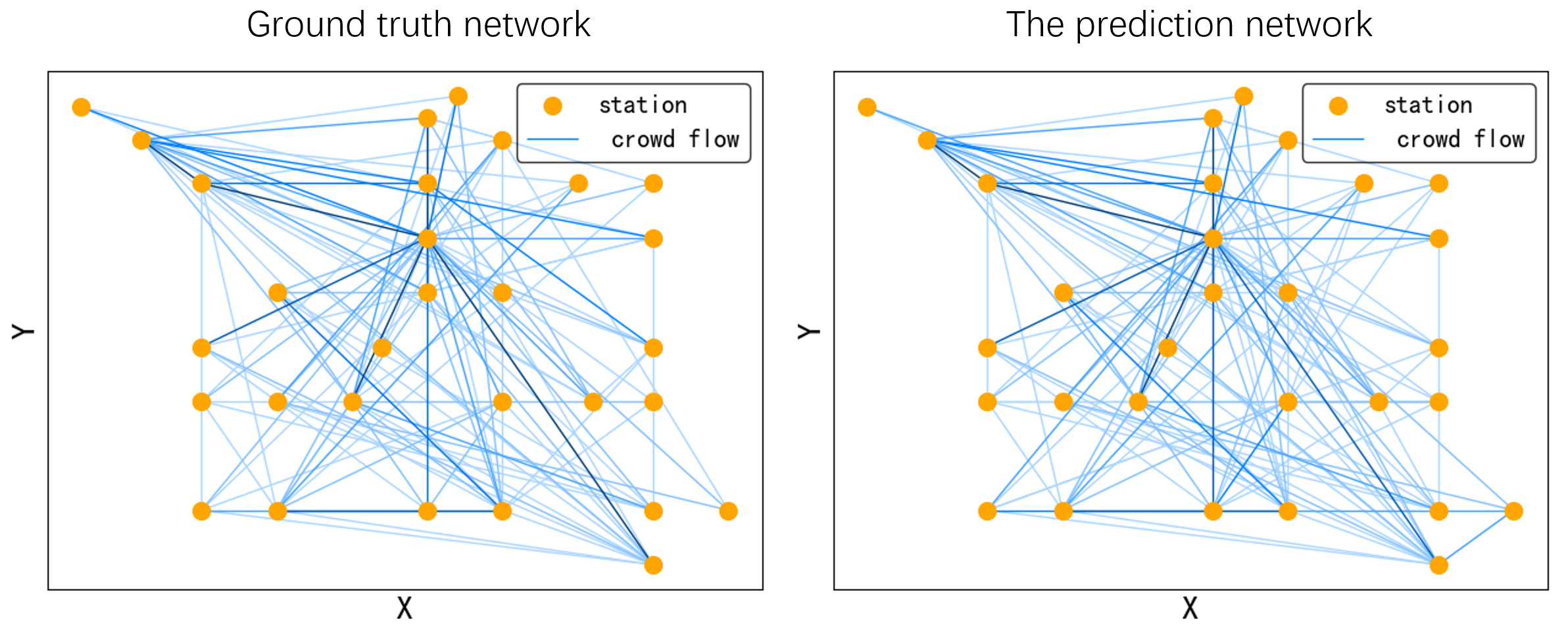

Figure 4 gives an intuitive presentation of the flow prediction in Flow dataset; we pick up 30 stations to keep the image clear. Figure 4 (a) and (b) illustrate the ground truth and prediction of the crowd flow network, each node presents a station, the weight 0 if there exists a passenger flow in the current timestamp. The depth of color indicates the number of crowd flows. It is apparent that our prediction can construct the flow network precisely.

5.4 Sensitivity of Parameters

This section evaluates the performances of LEM-CPD by varying the critical parameters (, and ). We here show the experimental results for the Flow dataset. We discuss them separately but pick them up by the grid search method because four parameters have high dimensional correlations that are hard to visualize. Our illustration approach that discusses parameters separately has been widely used in many other research papers [16, 4].

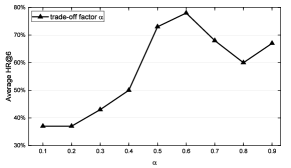

Fig. 5 (a) shows the different performances with a varying setting for . The indicates the balance factor to control the importance of prediction results. When we increase from 0.1 to 0.6, the results improve significantly. However, the performance tends to stay stable at 0.5 0.9. In particular, LEM-CPD achieves the best result when , while it can get good performance if the is set between 0.5 and 0.9. It indicates that the prediction result is important and useful to the change point detection.

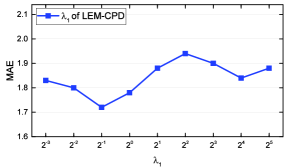

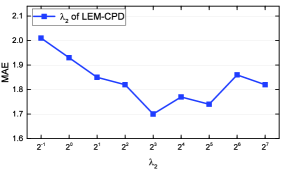

Fig. 5 (b) and (c) reveal the effect of varying and . These two parameters determine the strength of the transition matrices and the long-term guidance, respectively. = and = yield the best prediction results for LEM-CPD.

6 Conclusion

To date, despite achievements in graph-based CPD, most recent methods cannot perform well in the real-world dynamic networks. It is mainly because the real temporal network usually evolves over time. In this paper, we first cast the CPD problem as a prediction task, and develop a novel method to capture latent transitions from previous timestamps to the next. When we made a prediction for the target network, the change point can be identified by a trade-off strategy. Compared with other approaches, the main innovation of this paper is providing an effectiveness CPD method via a prediction process. All the experimental results demonstrate the superiority of LEM-CPD, which achieves more 12 percent than other competitive methods.

References

- [1] X. Wang, Y. Lu, C. Shi, R. Wang, P. Cui, S. Mou, Dynamic heterogeneous information network embedding with meta-path based proximity, IEEE Transactions on Knowledge and Data Engineering.

- [2] J. Chen, K. Li, H. Rong, K. Bilal, K. Li, S. Y. Philip, A periodicity-based parallel time series prediction algorithm in cloud computing environments, Information Sciences 496 (2019) 506–537.

- [3] Y. Wang, A. Chakrabarti, D. Sivakoff, S. Parthasarathy, Fast change point detection on dynamic social networks., in: Proceedings of 26th International Joint Conference on Artificial Intelligence, 2017, pp. 2992–2998.

- [4] Y. Gong, Z. Li, J. Zhang, W. Liu, Y. Zheng, C. Kirsch, Network-wide crowd flow prediction of sydney trains via customized online non-negative matrix factorization, in: Proceedings of the 27th ACM International Conference on Information and Knowledge Management, 2018, pp. 1243–1252.

- [5] B. Wang, J. Lu, Z. Yan, H. Luo, T. Li, Y. Zheng, G. Zhang, Deep uncertainty quantification: A machine learning approach for weather forecasting, in: Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 2019, pp. 2087–2095.

- [6] J. Chen, K. Li, K. Li, P. S. Yu, Z. Zeng, Dynamic planning of bicycle stations in dockless public bicycle-sharing system using gated graph neural network, ACM Transactions on Intelligent Systems and Technology (TIST) 12 (2) (2021) 1–22.

- [7] S. Huang, Y. Hitti, G. Rabusseau, R. Rabbany, Laplacian change point detection for dynamic graphs, in: Proceedings of the 26th ACM SIGKDD, 2020, pp. 349–358.

- [8] S. Huang, G. Rabusseau, R. Rabbany, Scalable change point detection for dynamic graphs (2021) 1–6.

- [9] T. Idé, H. Kashima, Eigenspace-based anomaly detection in computer systems, in: Proceedings of the tenth ACM SIGKDD international conference on Knowledge discovery and data mining, 2004, pp. 440–449.

- [10] D. Koutra, E. E. Papalexakis, C. Faloutsos, Tensorsplat: Spotting latent anomalies in time, in: 2012 16th Panhellenic Conference on Informatics, IEEE, 2012, pp. 144–149.

- [11] K. Li, W. Yang, K. Li, Performance analysis and optimization for spmv on gpu using probabilistic modeling, IEEE Transactions on Parallel and Distributed Systems 26 (1) (2014) 196–205.

- [12] C. Chen, K. Li, S. G. Teo, X. Zou, K. Li, Z. Zeng, Citywide traffic flow prediction based on multiple gated spatio-temporal convolutional neural networks, ACM Transactions on Knowledge Discovery from Data (TKDD) 14 (4) (2020) 1–23.

- [13] H. Qu, Y. Gong, M. Chen, J. Zhang, Y. Zheng, Y. Yin, Forecasting fine-grained urban flows via spatio-temporal contrastive self-supervision, IEEE Transactions on Knowledge and Data Engineering.

- [14] J. Liu, H. Qu, M. Chen, Y. Gong, Traffic flow prediction based on spatio-temporal attention block, in: 2022 International Conference on Machine Learning, Cloud Computing and Intelligent Mining (MLCCIM), IEEE, 2022, pp. 32–39.

- [15] D. Wang, Y. Yu, A. Rinaldo, Optimal change point detection and localization in sparse dynamic networks, The Annals of Statistics 49 (1) (2021) 203–232.

- [16] D. Deng, C. Shahabi, U. Demiryurek, L. Zhu, R. Yu, Y. Liu, Latent space model for road networks to predict time-varying traffic, in: Proceedings of the 22nd ACM SIGKDD international conference on Knowledge discovery and data mining, ACM, 2016, pp. 1525–1534.

- [17] Y. Gong, Z. Li, J. Zhang, W. Liu, Y. Zheng, Online spatio-temporal crowd flow distribution prediction for complex metro system, IEEE Transactions on Knowledge and Data Engineering.

- [18] X. Dong, Y. Gong, L. Zhao, Comparisons of typical algorithms in negative sequential pattern mining, in: 2014 IEEE Workshop on Electronics, Computer and Applications, IEEE, 2014, pp. 387–390.

- [19] Y. Gong, C. Liu, X. Dong, Research on typical algorithms in negative sequential pattern mining, The Open Automation and Control Systems Journal 7 (1).

- [20] Y. Gong, T. Xu, X. Dong, G. Lv, e-nspfi: Efficient mining negative sequential pattern from both frequent and infrequent positive sequential patterns, International Journal of Pattern Recognition and Artificial Intelligence 31 (02) (2017) 1750002.

- [21] J. Huang, L. Zhang, Y. Gong, J. Zhang, X. Nie, Y. Yin, Series photo selection via multi-view graph learning, arXiv preprint arXiv:2203.09736.

- [22] L. Peel, A. Clauset, Detecting change points in the large-scale structure of evolving networks, in: Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 29, 2015.

- [23] Y. Zhang, D.-Y. Yeung, Overlapping community detection via bounded nonnegative matrix tri-factorization, in: Proceedings of the 18th ACM SIGKDD international conference on Knowledge discovery and data mining, 2012, pp. 606–614.

- [24] K. S. Xu, A. O. Hero, Dynamic stochastic blockmodels for time-evolving social networks, IEEE Journal of Selected Topics in Signal Processing 8 (4) (2014) 552–562.

- [25] X. Dong, Y. Gong, L. Cao, F-nsp+: A fast negative sequential patterns mining method with self-adaptive data storage, Pattern Recognition 84 (2018) 13–27.

- [26] X. Dong, Y. Gong, L. Cao, e-rnsp: An efficient method for mining repetition negative sequential patterns, IEEE transactions on cybernetics 50 (5) (2018) 2084–2096.

- [27] P. Qiu, Y. Gong, Y. Zhao, L. Cao, C. Zhang, X. Dong, An efficient method for modeling nonoccurring behaviors by negative sequential patterns with loose constraints, IEEE Transactions on Neural Networks and Learning Systems.

- [28] Y. Gong, J. Yi, D.-D. Chen, J. Zhang, J. Zhou, Z. Zhou, Inferring the importance of product appearance with semi-supervised multi-modal enhancement: A step towards the screenless retailing, in: Proceedings of the 29th ACM International Conference on Multimedia, 2021, pp. 1120–1128.

- [29] Z. Li, J. Zhang, Q. Wu, Y. Gong, J. Yi, C. Kirsch, Sample adaptive multiple kernel learning for failure prediction of railway points, in: Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 2019, pp. 2848–2856.

- [30] Z. Li, J. Zhang, Y. Gong, Y. Yao, Q. Wu, Field-wise learning for multi-field categorical data, Advances in Neural Information Processing Systems 33 (2020) 9890–9899.

- [31] P. Sarkar, S. M. Siddiqi, G. J. Gordon, A latent space approach to dynamic embedding of co-occurrence data, in: Artificial Intelligence and Statistics, PMLR, 2007, pp. 420–427.

- [32] M. Thida, H.-L. Eng, P. Remagnino, Laplacian eigenmap with temporal constraints for local abnormality detection in crowded scenes, IEEE Transactions on Cybernetics 43 (6) (2013) 2147–2156.

- [33] P. Jing, Y. Su, X. Jin, C. Zhang, High-order temporal correlation model learning for time-series prediction, IEEE transactions on cybernetics 49 (6) (2018) 2385–2397.

- [34] F. Wang, C. Tan, P. Li, A. C. König, Efficient document clustering via online nonnegative matrix factorizations, in: Proceedings of the 2011 SIAM International Conference on Data Mining, SIAM, 2011, pp. 908–919.

- [35] Y. Gong, Z. Li, J. Zhang, W. Liu, J. Yi, Potential passenger flow prediction: A novel study for urban transportation development, in: Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 34, 2020, pp. 4020–4027.

- [36] Z. Zhang, X. Mei, B. Xiao, Abnormal event detection via compact low-rank sparse learning, IEEE Intelligent Systems 31 (2) (2015) 29–36.

- [37] Y. Pei, N. Chakraborty, K. Sycara, Nonnegative matrix tri-factorization with graph regularization for community detection in social networks, in: Twenty-fourth international joint conference on artificial intelligence, 2015, pp. 2083–2089.

- [38] T. Li, Y. Zhang, V. Sindhwani, A non-negative matrix tri-factorization approach to sentiment classification with lexical prior knowledge, in: Proceedings of the Joint Conference of the 47th Annual Meeting of the ACL and the 4th International Joint Conference on Natural Language Processing of the AFNLP, 2009, pp. 244–252.

- [39] H. Wang, F. Nie, H. Huang, F. Makedon, Fast nonnegative matrix tri-factorization for large-scale data co-clustering, in: Twenty-Second International Joint Conference on Artificial Intelligence, 2011, pp. 1553–1558.

- [40] X. Li, G. Cui, Y. Dong, Graph regularized non-negative low-rank matrix factorization for image clustering, IEEE transactions on cybernetics 47 (11) (2016) 3840–3853.

- [41] X. Luo, H. Wu, H. Yuan, M. Zhou, Temporal pattern-aware qos prediction via biased non-negative latent factorization of tensors, IEEE transactions on cybernetics 50 (5) (2019) 1798–1809.

- [42] J. Zhao, X. Xie, X. Xu, S. Sun, Multi-view learning overview: Recent progress and new challenges, Information Fusion 38 (2017) 43–54.

- [43] Y. Gong, Z. Li, J. Zhang, W. Liu, B. Chen, X. Dong, A spatial missing value imputation method for multi-view urban statistical data., in: Proceedings of 29th International Joint Conference on Artificial Intelligence, 2020, pp. 1310–1316.

- [44] Y. Gong, Z. Li, J. Zhang, W. Liu, Y. Yin, Y. Zheng, Missing value imputation for multi-view urban statistical data via spatial correlation learning, IEEE Transactions on Knowledge and Data Engineering.

- [45] D. D. Lee, H. S. Seung, Algorithms for non-negative matrix factorization, in: Advances in neural information processing systems, 2001, pp. 556–562.

- [46] R. C. Wilson, P. Zhu, A study of graph spectra for comparing graphs and trees, Pattern Recognition 41 (9) (2008) 2833–2841.

- [47] F. Chung, Laplacians and the cheeger inequality for directed graphs, Annals of Combinatorics 9 (1) (2005) 1–19.

- [48] S. U. Pillai, T. Suel, S. Cha, The perron-frobenius theorem: some of its applications, IEEE Signal Processing Magazine 22 (2) (2005) 62–75.

- [49] P. W. Holland, K. B. Laskey, S. Leinhardt, Stochastic blockmodels: First steps, Social networks 5 (2) (1983) 109–137.

- [50] J. H. Fowler, Legislative cosponsorship networks in the us house and senate, Social Networks 28 (4) (2006) 454–465.

- [51] C. E. Rasmussen, Gaussian processes in machine learning, in: Summer School on Machine Learning, Springer, 2003, pp. 63–71.

- [52] Y. Gong, Network-wide spatio-temporal predictive learning for the intelligent transportation system, Ph.D. thesis (2020).

- [53] K. B. Petersen, M. S. Pedersen, et al., The matrix cookbook, Technical University of Denmark 7 (15) (2008) 510.

- [54] M. D. Gupta, J. Xiao, Non-negative matrix factorization as a feature selection tool for maximum margin classifiers, in: CVPR 2011, IEEE, 2011, pp. 2841–2848.

Appendix A Derivation process of Update Rules

We here derive the update rule of as an example, other variables can be solved with a similar process. The objective of could be rewritten as follows:

, where:

| (22) |

We provide the derivative of respect to as an example, the other components can be derived in the same way. could also be rewritten as follows:

| (23) |

analogously, we can get:

| (24) |

| (25) |

Taking the derivation of with respect to , we can get according to [53]:

As introduced in [45], the traditional gradient descent method is expressed as: = - = - , where and denote all positive and negative items in , respectively (e.g., = ). We can set the step to:

| (26) |

then, we got the update rule of as shown in Equation (8) of the main paper.

Appendix B Proof of Convergence

To prove Theorem 1, we will find an auxiliary function similar to that used in the [45]. We here give the convergence proof of and other variables can similarly be proofed.

Definition 1. is an auxiliary function for our final function if the following conditions are satisfied:

| (27) |

Lemma 1 If is an auxiliary function, then is non-increasing under the update:

| (28) |

consequently, we have:

| (29) |

The proof of Lemma 1 is given by [45]. Lemma 1 illustrates that when exits ; is a column vector of .

Lemma 2. If is a diagonal matrix under the following definition,

| (30) |

then,

| (31) |

is an auxiliary function for .

Proof 1

Since = is obvious, we need only show that . To do this, we compare

| (32) |

with Equation (31) to find that is equivalent to

| (33) |

The next step is to prove is positive semi-definite. Let , then can be expressed as . As the Lemma 1 provided in [54], if Q is a symmetric non-negative matrix and be a positive vector, then the matrix .

| (34) |

Since Equation (31) is an auxiliary function, is nonincreasing under this update rule, according to Lemma 1. Writing the components of this equation explicitly, we obtain

| (35) |

By reversing the roles of and in Lemma 1 and Lemma 2, can similarly be shown to be nonincreasing under the update rules for .

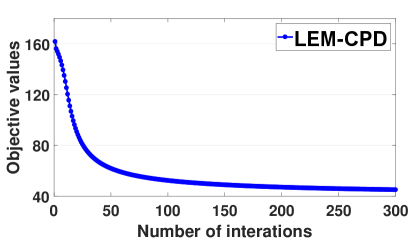

As shown in Figures 6, our model LEM-CPD can efficiently converge into a local optimization with a small number of iterations.