Learning-Based Massive Beamforming

Abstract

Developing resource allocation algorithms that are efficient and fast enough for real-time operation is an important topic in wireless networks. Conventional optimization-based iterative resource allocation algorithms often suffer from slow convergence, especially for massive multiple-input-multiple-output (MIMO) beamforming problems. This paper studies efficient learning-based massive beamforming methods for multi-user MIMO networks. The considered massive beamforming problem is challenging in two respects. First, the beamforming matrix to be learned is high-dimensional when the number of antennas is massive. Second, the objective is often time-varying and the solution space is not fixed, owing to communication requirements such as user priority and the number of streams per user. Together, these challenges make representation learning for massive beamforming a difficult task. By exploiting the structure of the popular WMMSE beamforming solution, we propose convolutional massive beamforming neural networks (CMBNN), trained with both supervised and unsupervised learning schemes and using a tailored design of the network structure and input/output. Numerical results demonstrate the efficacy of the proposed CMBNN in terms of running time and system throughput.

Index Terms:

Beamforming, WMMSE, convolutional neural network, massive MIMO

I Introduction

The rapid development of deep learning has changed many aspects of daily life [1] and has reshaped research fields such as computer vision and natural language processing. In wireless communication research, deep learning (and machine learning) based methods are attracting growing attention due to their efficacy. As a result, embedding deep learning into the 5th generation of mobile systems (5G) and wireless networks has become an increasingly active topic in recent years [2, 3].

At the same time, the advantages of massive MIMO in energy efficiency, spectral efficiency, robustness and reliability have made it indispensable in the 5G era [4, 5]. Downlink beamforming, or precoding, is one of the most important transmission technologies for improving the quality of communication in massive MIMO systems. For beamforming design, maximizing the system throughput (weighted sum-rate) under a total power constraint is an important measure of communication quality, and it is the focus of our paper.

Many beamforming algorithms are based on optimization theory, such as the weighted minimum mean square error (WMMSE) algorithm [6], which finds locally optimal solutions of a formulated optimization problem through iterations. However, such optimization-based algorithms often suffer from high computational costs (e.g., WMMSE involves complex matrix inversion operations). When large-scale antenna arrays are deployed at the transmitter [7], the computational cost of these algorithms can be prohibitive. Meanwhile, low-complexity algorithms such as the zero-forcing (ZF) method [8] cannot achieve good performance when the number of users or antennas becomes large.

As a result, deep learning-based methods have been proposed to solve such problems in recent years. Supervised deep neural networks (DNNs) have been applied to power control, achieving sum-rate performance similar to that of the classical power allocation algorithm WMMSE [2]. In contrast, unsupervised learning can match, and even exceed, the performance of the WMMSE algorithm [9, 10]. Meanwhile, a hybrid precoding scheme with a DNN-based autoencoder [11] was proposed for millimeter wave (mmWave) MIMO systems. Apart from the aforementioned DNN models, a distributed convolutional neural network (CNN)-based deep power control network was introduced [12] to maximize the system spectral efficiency or energy efficiency with local CSI. Furthermore, CNN-based beamforming neural networks (BNNs) were proposed [13] for three typical beamforming optimization problems in multi-user multiple-input-single-output (MISO) networks. For the sum-rate maximization problem, BNNs were trained using both supervised learning and unsupervised learning.

Although these deep learning-based methods have been proposed for multi-user MIMO downlink beamforming, they mainly focus on the basic case of the sum-rate maximization problem and do not consider more complicated situations such as user priority or a varying number of streams per user. Moreover, their neural network designs do not exploit the structure of the closed-form updates in the iterative algorithm.

In summary, two main challenges remain unaddressed in learning-based massive MIMO beamforming. First, as the number of antennas becomes large in a massive MIMO system, both the input and the output of neural network (NN)-based methods become high-dimensional, which makes the neural network more complex and harder to train. Second, in real-world systems, the number of user streams and the user priority both change over time, which means the solution space is not fixed. It is therefore challenging to handle these two cases without significantly increasing the neural network complexity.

In this paper, to tackle the above challenges, we propose a new deep learning framework called convolutional massive beamforming neural networks (CMBNN). The main contributions of this paper are summarized as follows:

1) We utilize the structure of the closed-form solution of the WMMSE algorithm in the design of the NN structure. In addition, we design a novel NN structure to cope with a varying number of user streams. By doing so, for the first time, we are able to handle beamforming with time-varying user priority and a varying number of user streams without significantly increasing the NN complexity or sacrificing model performance.

2) Due to the use of problem structure in the design of our networks, all NN structures proposed in our paper are much simpler than existing approaches. The low complexity of NN structures makes our method more appealing under the real-time requirements in 5G wireless communication systems.

II System Model and Problem Formulation

II-A System Model

Consider a single-cell multi-user massive MIMO system where the base station (BS) is equipped with transmit antennas and serves users each equipped with antennas [14]. Let denote the transmit beamformer that the BS employs to send the signal to user . The BS signal is given by

where it is assumed .

Assuming a flat-fading channel model, the received signal at user can be written as

| (1) | ||||

where matrix represents the channel matrix from the BS to user , while denotes the additive white Gaussian noise with distribution . We assume that the signals for different users are independent from each other and from receiver noises. In this paper, we treat the multi-user interference as noise and employ linear receive beamforming strategy, i.e., , so that the estimated signal is given by

II-B Problem Formulation

A basic problem of interest is to find the transmit beamformers such that the system weighted sum-rate is maximized subject to a total power constraint due to the BS power budget. Mathematically, it can be written as follows

| (2) |

where denotes the BS power budget, the weight represents the priority of user in the system, and is the rate of user given by

where .

Under the independence assumption of ’s and ’s, the MSE matrix can be written as,

| (3) |

Following [6], we can obtain the equivalent WMMSE form as

Furthermore, inspired by the structure of ZF beamforming [8], to reduce the complexity in the massive antenna scenario, we also restrict ’s to the range space of , i.e., let it satisfy with some , where . As a result, by defining and , we can derive the three main steps of the corresponding WMMSE algorithm as follows

| (4) | ||||

| (5) | ||||

| (6) |

The algorithm repeats the above three steps until convergence. For ease of exposition, we refer to it as reduced-WMMSE (R-WMMSE).
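For orientation, the classical WMMSE iteration of [6], on which R-WMMSE builds, can be sketched in NumPy as follows. This is a minimal sketch of the textbook algorithm (receiver update, weight update, transmitter update with a bisection search for the power-constraint multiplier), not the reduced variant described above. The names `H`, `V`, `U`, `W`, `alpha`, `P` and `sigma2`, the random initialization, and the assumption that every user receives as many streams as it has antennas are illustrative choices.

```python
import numpy as np


def herm(A):
    """Conjugate transpose."""
    return A.conj().T


def weighted_sum_rate(H, V, alpha, sigma2):
    """Weighted sum-rate (bits/s/Hz), treating multi-user interference as noise."""
    total = 0.0
    for k, Hk in enumerate(H):
        N = Hk.shape[0]
        J = sigma2 * np.eye(N, dtype=complex)  # interference-plus-noise covariance
        for j, Vj in enumerate(V):
            if j != k:
                G = Hk @ Vj
                J += G @ herm(G)
        S = Hk @ V[k]
        _, logdet = np.linalg.slogdet(np.eye(N) + S @ herm(S) @ np.linalg.inv(J))
        total += alpha[k] * logdet / np.log(2)
    return total


def wmmse(H, alpha, P, sigma2, iters=30, seed=0):
    """Classical WMMSE; H is a list of K channel matrices of shape (N, M)."""
    rng = np.random.default_rng(seed)
    K = len(H)
    N, M = H[0].shape
    # Random initial beamformers, scaled to use the full power budget.
    V = [rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N)) for _ in range(K)]
    scale = np.sqrt(P / sum(np.linalg.norm(v) ** 2 for v in V))
    V = [scale * v for v in V]
    history = [weighted_sum_rate(H, V, alpha, sigma2)]
    for _ in range(iters):
        U, W = [], []
        for k in range(K):
            # Step 1: MMSE receiver. Step 2: weight = inverse of the MSE matrix.
            C = sigma2 * np.eye(N, dtype=complex)
            for j in range(K):
                G = H[k] @ V[j]
                C += G @ herm(G)
            Uk = np.linalg.solve(C, H[k] @ V[k])
            Ek = np.eye(N) - herm(Uk) @ H[k] @ V[k]
            U.append(Uk)
            W.append(np.linalg.inv(Ek))
        # Step 3: transmit beamformers V_k = (A + mu I)^{-1} B_k.
        A = sum(alpha[k] * herm(H[k]) @ U[k] @ W[k] @ herm(U[k]) @ H[k] for k in range(K))
        B = [alpha[k] * herm(H[k]) @ U[k] @ W[k] for k in range(K)]
        lam, D = np.linalg.eigh((A + herm(A)) / 2)
        lam = np.maximum(lam, 0.0)
        Bt = [herm(D) @ b for b in B]
        phi = sum(np.sum(np.abs(bt) ** 2, axis=1) for bt in Bt)

        def power(mu):
            # Total transmit power as a function of the multiplier mu.
            return np.sum(phi / (lam + mu) ** 2)

        lo, hi = 0.0, 1.0
        while power(hi) > P:
            hi *= 2.0
        for _ in range(60):  # bisection; hi always satisfies the power budget
            mid = 0.5 * (lo + hi)
            if power(mid) > P:
                lo = mid
            else:
                hi = mid
        V = [D @ (bt / (lam + hi)[:, None]) for bt in Bt]
        history.append(weighted_sum_rate(H, V, alpha, sigma2))
    return V, history
```

In this sketch the transmitter update works with a matrix whose size equals the number of transmit antennas, which is the cost that restricting the beamformers to a lower-dimensional subspace is meant to avoid.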

III Proposed Method

Our key idea is to learn the R-WMMSE algorithm above using deep learning, so that the complexity can be further reduced by choosing an appropriate neural network structure and input/output.

III-A Reformulation

In previous work like [13], the noise power is often fixed for all scenarios, resulting in the trained network only adapting to this noise level. Here we remove the effect of noise by reformulating the problem. Let us define and

Then we have the following proposition.

Proposition 1

III-B Neural Network Architecture

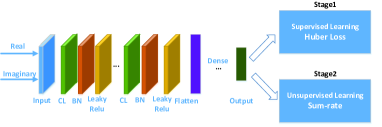

Figure 1 presents the CNN-based network architecture for beamforming design, which follows the idea of [13]. Here CL and BN denote the convolutional layer and the batch normalization layer, respectively, leaky ReLU is chosen as the activation function, and several dense layers are used after the flatten layer. The network architecture is further detailed as follows.

III-B1 Supervised Learning

Supervised learning is a straightforward way to train a beamforming neural network. All data samples can be generated by running the R-WMMSE algorithm. For the CNN model, as the input or are all complex matrices, we reshape each complex matrix into an image-like tensor with only two channels, one for the real part and the other for the imaginary part. However, unlike traditional image processing with convolutional and pooling layers, we do not use pooling layers because they may cause information loss that would degrade the learning result. Adam and the Huber loss are selected as the optimizer and loss function, respectively.
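The two-channel representation and the Huber loss described above can be illustrated with a short NumPy sketch. The function names and the threshold `delta=1.0` are assumptions made for illustration; the source does not state the Huber threshold it uses.

```python
import numpy as np


def complex_to_channels(X):
    """Stack real and imaginary parts as a (rows, cols, 2) real tensor."""
    return np.stack([X.real, X.imag], axis=-1)


def channels_to_complex(T):
    """Inverse of complex_to_channels."""
    return T[..., 0] + 1j * T[..., 1]


def huber_loss(pred, target, delta=1.0):
    """Mean Huber loss: quadratic for small errors, linear for large ones."""
    err = np.abs(pred - target)
    quad = np.minimum(err, delta)  # part of the error handled quadratically
    lin = err - quad               # remainder handled linearly
    return np.mean(0.5 * quad ** 2 + delta * lin)
```

The linear tail makes the loss less sensitive to a few badly predicted entries than a plain squared error would be.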

III-B2 Unsupervised Learning

Even if the Huber loss of supervised learning becomes small, the resulting weighted sum-rate is not necessarily large. Intuitively, this is because supervised learning does not directly maximize the weighted sum-rate, and its performance is largely limited by the training samples. On the other hand, we have a direct objective, i.e., weighted sum-rate maximization. Hence, we can use the negative weighted sum-rate as an alternative training loss, which improves the sum-rate directly.

| (8) |

III-B3 Supervised + Unsupervised Learning

Because the loss function of unsupervised learning involves many complex matrix operations, both the loss calculation and the corresponding gradient computation are more time-consuming than for a traditional loss (e.g., MSE). Considering the trade-off between convergence speed and accuracy, we combine supervised and unsupervised learning to train the beamforming neural network. Specifically, supervised learning is used for pre-training and unsupervised learning is used for further refinement. In practice, one or two epochs of unsupervised learning are enough.

III-C Design of Input and Output

In a massive MIMO system, the number of transmit antennas could be very large. Hence, if we still directly take and (or ) as input and output as in [13, 9], the input and output of the neural network (NN) would both be high-dimensional matrices, making the NN hard to train. As a consequence, the NN input and output should be redesigned to reduce their size (and thus the training complexity and difficulty). In terms of the R-WMMSE algorithm, we find that beamformer is uniquely determined by while depends on . Hence, can be regarded as the NN input, which has reduced size as compared to when . Moreover, since can be determined by and , we can take as the NN output in order to reduce the size of the NN output. Tables I and II list the dimensions of various input/output schemes. It can be seen that different choices of input/output lead to different input/output sizes. Note that we generally have , , in the massive MIMO case.

| Input | Dimension |

|---|---|

| Output | Dimension |

|---|---|

| and |

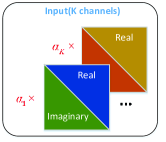

Furthermore, due to the conjugate symmetry of , both the real part and imaginary part of depend uniquely on their upper or lower triangular parts. Hence, we can further reduce the input size by combining the real part and the imaginary part in a way as shown in Fig. 2. As a result, the dimension of NN input is finally reduced to . Similar operation can be done for , leading to a further reduced size of NN output.
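One way to realize such a packing is sketched below in NumPy. The exact layout of Fig. 2 is not recoverable from the text, so the arrangement used here (real part on and above the diagonal, imaginary part strictly below it) is an assumption; any layout that stores each independent real number once serves the same purpose.

```python
import numpy as np


def pack_hermitian(G):
    """Pack an n x n Hermitian matrix into a single real n x n matrix."""
    packed = np.triu(G.real)          # real part: diagonal and upper triangle
    packed += np.tril(G.imag, k=-1)   # imaginary part: strictly lower triangle
    return packed


def unpack_hermitian(packed):
    """Recover the Hermitian matrix from its packed real representation."""
    real = np.triu(packed) + np.triu(packed, k=1).T  # symmetric real part
    low = np.tril(packed, k=-1)
    imag = low - low.T                               # antisymmetric imaginary part
    return real + 1j * imag
```

The packed matrix holds n^2 real numbers instead of the 2n^2 needed when the real and imaginary parts are stored as two full channels.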

III-D Architecture Design for User Priority

In practice, each user in the system may have a different priority with weight that often changes with time. While most current methods do not take this into consideration, the NN input or structure should be carefully redesigned when the weights are considered. According to the R-WMMSE algorithm mentioned before, both and depend on .

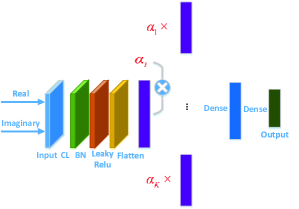

Two very intuitive ways to merge the weights into the NN are depicted in Figure 3 and Figure 4. One is to merge weights into input as K channels (see Figure 3), and the other is to concatenate weight after convolution and flatten of the input (see Figure 4). Our simulation results show that these two methods can achieve reasonably good performance.
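The two merging options can be sketched at the level of tensor shapes. The sketch below assumes an input tensor of shape (height, width, channels) and a priority vector `alpha` of length K; broadcasting each weight into a constant plane is our reading of "K channels" and is not confirmed by the source.

```python
import numpy as np


def weights_as_channels(x, alpha):
    """Option 1: append each weight as a constant extra input channel."""
    h, w, _ = x.shape
    planes = np.broadcast_to(alpha, (h, w, alpha.shape[0]))
    return np.concatenate([x, planes], axis=-1)


def weights_after_flatten(features, alpha):
    """Option 2: concatenate the weights with the flattened conv features."""
    return np.concatenate([features.ravel(), alpha])
```

Option 1 enlarges every convolution kernel by K input channels, while option 2 widens the first dense layer by K inputs.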

However, these two methods will bring higher computational complexity to the original network which can lead to extra time and cost. Surprisingly, inspired by the update rule of in (II-B), we find that, just by taking as input, where and , we can reach the same performance as the previous two intuitive methods without any need for increasing network complexity.

III-E Architecture Design for Varying Number of User Streams

In practical systems, sometimes only one stream is transmitted to a user during communication. This raises a new challenge: the number of streams can vary, but the dimension of the network output needs to be fixed. Table III shows the number of valid output elements when is different. Thus, to ensure that the network output has a fixed dimension, certain positions should be set to zero when a single-stream transmission occurs.

| Num of valid elements | |||

|---|---|---|---|

| 2 | 12 | ||

| 1 | 5 |

There are a few simple and intuitive solutions to this problem. The simplest is to directly merge the number of user streams into the original input . Another is to ignore the number of streams and manually set to zero the output positions corresponding to empty streams, which may result in a discontinuous loss function. Both methods gave unsatisfactory performance in our experiments.

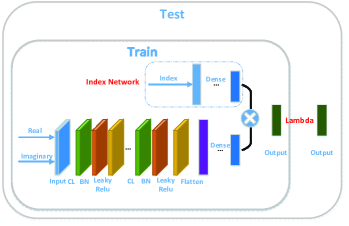

To achieve better performance than the solutions mentioned above, we introduce an auxiliary network called the Index Network, whose function is to softly zero out the corresponding positions given the number of streams used. Specifically, as shown in Figure 5, the Index Network is separate from the main network. It takes the number of user streams as input and outputs a soft mask with the same dimension as the output of the main network. The output of the main network is multiplied element-wise by the mask to produce the final output. We find that this method effectively stabilizes training and improves performance.

During the testing stage, to ensure that the network outputs a beamformer with the correct number of streams, the output elements at certain positions are set to zero manually at the last layer (Lambda Layer).

During the unsupervised learning phase, the variables of the Index Network should be frozen, and the same positions should also be set to zero before calculating the unsupervised loss.
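The masking mechanism can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions: the Index Network is reduced to a single sigmoid layer with hypothetical parameters `Wi` and `bi`, and the output is assumed to be laid out user by user and stream by stream, which the source does not specify.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def index_network(streams, Wi, bi):
    """Map the per-user stream counts to a soft mask with entries in (0, 1)."""
    return sigmoid(streams @ Wi + bi)


def hard_stream_mask(streams, max_streams, per_stream):
    """0/1 mask that removes the entries of unused streams (assumed layout)."""
    idx = np.arange(max_streams)[None, :, None]
    mask = (idx < np.asarray(streams)[:, None, None]).astype(float)
    full = np.broadcast_to(mask, (len(streams), max_streams, per_stream))
    return full.reshape(-1)


def masked_output(main_out, streams, Wi, bi, hard_mask=None):
    """Soft masking during training; optional hard zeroing, as done at test time."""
    out = main_out * index_network(streams, Wi, bi)
    if hard_mask is not None:
        out = out * hard_mask
    return out
```

Because the soft mask is a smooth function of its parameters, gradients flow through the multiplication during supervised training, whereas the hard mask is a fixed post-processing step.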

IV Experiments

IV-A Neural Network Configuration

The main network consists of one convolutional layer with 4 kernels of size , followed by a batch normalization (BN) layer and an activation layer (leaky ReLU), and then a single dense layer with 32 hidden units. The Index Network is of similar scale to this dense layer. Our network is much simpler than those of previous work [13, 9], which use many more layers and hidden units (mostly more than two convolutional and dense layers).
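To give a feel for the scale of such a network, the following sketch counts trainable parameters for one convolutional layer with 4 kernels, batch normalization, one 32-unit dense layer, and a linear output layer. The kernel size (3 x 3 here), "same" padding, the input shape, and the separate output layer are assumptions for illustration; the source does not provide them in recoverable form.

```python
def cmbnn_param_count(height, width, channels, out_dim,
                      kernel=(3, 3), filters=4, hidden=32):
    """Trainable parameter count of a small conv -> BN -> dense -> output net."""
    conv = kernel[0] * kernel[1] * channels * filters + filters  # weights + biases
    bn = 2 * filters                       # scale and shift per feature map
    flat = height * width * filters        # 'same' padding keeps the spatial size
    dense = flat * hidden + hidden
    output = hidden * out_dim + out_dim
    return {"conv": conv, "bn": bn, "dense": dense, "output": output,
            "total": conv + bn + dense + output}
```

For a hypothetical 4 x 4 single-channel input and 12 outputs, the dense layer accounts for 2080 of the 2524 parameters, which shows why shrinking the NN input matters more than the depth of the convolutional part.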

IV-B Data Generation

For weighted sum-rate maximization, the channel matrix is generated from the complex Gaussian distribution with pathloss between the users and the BS. The pathloss is set to [dB] [15] where is the distance between the user and the BS in km (). The noise power is set to be the same for all users and can be calculated by , where signal-to-noise ratio (SNR) is set as . The priority coefficients of the users are generated randomly and , and is also generated randomly for each user ( indicates dual stream and indicates single stream). In the simulation, 45000 samples are generated for training and 5000 are for testing. Table IV lists three main test cases in the following experiment with . The last test case is of great importance in industry.
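A data generator along these lines might look as follows. Several specifics are not recoverable from the text, so they appear as caller-supplied or assumed quantities: the pathloss model is passed in as a function `pathloss_db`, the distance range is a pair of arguments, the noise power is assumed to be `P / 10**(snr_db / 10)`, and the priorities are assumed to be uniform draws normalized to sum to one.

```python
import numpy as np


def generate_sample(rng, K, N, M, P, snr_db, pathloss_db, d_min_km, d_max_km):
    """Draw one training sample: channels, noise power, priorities, stream counts."""
    dist = rng.uniform(d_min_km, d_max_km, size=K)      # user-BS distances in km
    gain = 10.0 ** (-pathloss_db(dist) / 10.0)          # linear large-scale gain
    H = rng.standard_normal((K, N, M)) + 1j * rng.standard_normal((K, N, M))
    H = np.sqrt(gain)[:, None, None] * H / np.sqrt(2.0)  # unit-variance fading
    sigma2 = P / 10.0 ** (snr_db / 10.0)                # assumed SNR definition
    alpha = rng.uniform(size=K)
    alpha = alpha / alpha.sum()                         # assumed normalization
    streams = rng.integers(1, 3, size=K)                # 1 = single, 2 = dual stream
    return H, sigma2, alpha, streams


# Usage with a placeholder pathloss model (illustrative constants only).
rng = np.random.default_rng(0)
H, sigma2, alpha, streams = generate_sample(
    rng, K=2, N=2, M=8, P=1.0, snr_db=10.0,
    pathloss_db=lambda d: 120.0 + 30.0 * np.log10(d),
    d_min_km=0.1, d_max_km=0.5)
```

Labels for supervised training would then be obtained by running the iterative algorithm on each generated sample.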

| Case | | |
|---|---|---|
| 1 | 8 | 2 |
| 2 | 8 | 4 |
| 3 | 32 | 12 |

IV-C Simulation Results

To test whether the predicted precoder is good enough for the weighted sum-rate maximization problem, the predicted output is substituted back into the objective function, and the performance is defined as follows.

| (9) |

| (10) |

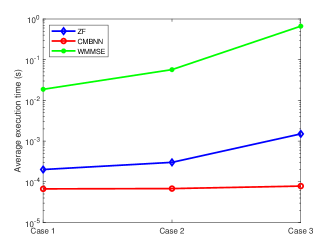

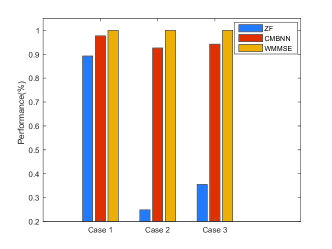

Figures 6 and 7 show the average execution time and the average performance, respectively, compared with the WMMSE and ZF algorithms. It can be observed that 1) the proposed method achieves performance similar to that of the WMMSE algorithm in most cases, while significantly outperforming the ZF algorithm (which is unable to handle user priority); and 2) the proposed method requires less execution time (in the testing stage) than both the WMMSE method, which involves many inversion operations, and the ZF method, which needs extra time to decide which beamforming vector should be used for sending a single stream.

In summary, our proposed CMBNN model is superior from the perspective of both performance and efficiency.

V Conclusion

In this paper, we have proposed convolutional massive beamforming neural networks (CMBNN) with low complexity. Specifically, we have designed the neural network according to the structure of the optimization problem to handle complex situations with changing user priority and a varying number of user streams. Compared with methods in the literature, our proposed framework achieves better performance and higher efficiency.

References

- [1] O. Simeone, “A very brief introduction to machine learning with applications to communication systems,” IEEE Transactions on Cognitive Communications and Networking, vol. 4, no. 4, pp. 648–664, 2018.

- [2] H. Sun, X. Chen, Q. Shi, M. Hong, X. Fu, and N. D. Sidiropoulos, “Learning to optimize: Training deep neural networks for interference management,” IEEE Transactions on Signal Processing, vol. 66, no. 20, pp. 5438–5453, 2018.

- [3] C. Zhang, P. Patras, and H. Haddadi, “Deep learning in mobile and wireless networking: A survey,” IEEE Communications Surveys & Tutorials, 2019.

- [4] L. Lu, G. Y. Li, A. L. Swindlehurst, A. Ashikhmin, and R. Zhang, “An overview of massive MIMO: Benefits and challenges,” IEEE Journal of Selected Topics in Signal Processing, vol. 8, no. 5, pp. 742–758, 2014.

- [5] E. G. Larsson, O. Edfors, F. Tufvesson, and T. L. Marzetta, “Massive MIMO for next generation wireless systems,” IEEE Communications Magazine, vol. 52, no. 2, pp. 186–195, 2014.

- [6] Q. Shi, M. Razaviyayn, Z.-Q. Luo, and C. He, “An iteratively weighted MMSE approach to distributed sum-utility maximization for a MIMO interfering broadcast channel,” IEEE Transactions on Signal Processing, vol. 59, no. 9, pp. 4331–4340, 2011.

- [7] F. W. Vook, A. Ghosh, and T. A. Thomas, “MIMO and beamforming solutions for 5G technology,” in 2014 IEEE MTT-S International Microwave Symposium (IMS2014). IEEE, 2014, pp. 1–4.

- [8] T. Yoo and A. Goldsmith, “On the optimality of multiantenna broadcast scheduling using zero-forcing beamforming,” IEEE Journal on Selected Areas in Communications, vol. 24, no. 3, pp. 528–541, 2006.

- [9] H. Huang, W. Xia, J. Xiong, J. Yang, G. Zheng, and X. Zhu, “Unsupervised learning-based fast beamforming design for downlink MIMO,” IEEE Access, vol. 7, pp. 7599–7605, 2018.

- [10] F. Liang, C. Shen, W. Yu, and F. Wu, “Towards optimal power control via ensembling deep neural networks,” IEEE Transactions on Communications, vol. 68, no. 3, pp. 1760–1776, 2020.

- [11] H. Huang, Y. Song, J. Yang, G. Gui, and F. Adachi, “Deep-learning-based millimeter-wave massive MIMO for hybrid precoding,” IEEE Transactions on Vehicular Technology, vol. 68, no. 3, pp. 3027–3032, 2019.

- [12] W. Lee, M. Kim, and D.-H. Cho, “Deep power control: Transmit power control scheme based on convolutional neural network,” IEEE Communications Letters, vol. 22, no. 6, pp. 1276–1279, 2018.

- [13] W. Xia, G. Zheng, Y. Zhu, J. Zhang, J. Wang, and A. P. Petropulu, “A deep learning framework for optimization of MISO downlink beamforming,” IEEE Transactions on Communications, vol. 68, no. 3, pp. 1866–1880, 2020.

- [14] G. Caire and S. Shamai, “On the achievable throughput of a multiantenna Gaussian broadcast channel,” IEEE Transactions on Information Theory, vol. 49, no. 7, pp. 1691–1706, 2003.

- [15] H. Dahrouj and W. Yu, “Coordinated beamforming for the multicell multi-antenna wireless system,” IEEE Transactions on Wireless Communications, vol. 9, no. 5, pp. 1748–1759, 2010.