ifaamas \acmConference[AAMAS ’22]Proc. of the 21st International Conference on Autonomous Agents and Multiagent Systems (AAMAS 2022)May 9–13, 2022 Auckland, New ZealandP. Faliszewski, V. Mascardi, C. Pelachaud, M.E. Taylor (eds.) \copyrightyear2022 \acmYear2022 \acmDOI \acmPrice \acmISBN \affiliation \institutionUniversity of Dhaka \affiliation \institutionUniversity of Dhaka \affiliation \institutionUniversity of Massachusetts Amherst \affiliation \institutionUniversity of Dhaka

Learning Cooperation and Online Planning Through Simulation and Graph Convolutional Network

Abstract.

Multi-agent Markov Decision Process (MMDP) has been an effective way of modelling sequential decision making algorithms for multi-agent cooperative environments. A number of algorithms based on centralized and decentralized planning have been developed in this domain. However, dynamically changing environment, coupled with exponential size of the state and joint action space, make it difficult for these algorithms to provide both efficiency and scalability. Recently, Centralized planning algorithm FV-MCTS-MP and decentralized planning algorithm Alternate maximization with Behavioural Cloning (ABC) have achieved notable performance in solving MMDPs. However, they are not capable of adapting to dynamically changing environments and accounting for the lack of communication among agents, respectively. Against this background, we introduce a simulation based online planning algorithm, that we call SiCLOP, for multi-agent cooperative environments. Specifically, SiCLOP tailors Monte Carlo Tree Search (MCTS) and uses Coordination Graph (CG) and Graph Neural Network (GCN) to learn cooperation and provides real time solution of a MMDP problem. It also improves scalability through an effective pruning of action space. Additionally, unlike FV-MCTS-MP and ABC, SiCLOP supports transfer learning, which enables learned agents to operate in different environments. We also provide theoretical discussion about the convergence property of our algorithm within the context of multi-agent settings. Finally, our extensive empirical results show that SiCLOP significantly outperforms the state-of-the-art online planning algorithms.

Key words and phrases:

Cooperation Learning, Multi-agent Markov Decision Process, Transfer Learning, Graph Convolutional Network1. Introduction

Sequential decision making models for multi-agent environments hold the key to many real life problems such as autonomous vehicles

Shalev-Shwartz et al. (2016), controlling robots Kober et al. (2013), resource allocation Yang et al. (2018), games with multiple types of agents such as Starcraft II Rashid et al. (2018) and so on. Cooperation among agents is an essential part of these problems. The agents need to learn collaboration in order to work together towards a common goal. This adds to the difficulty of making decisions because the agents must interact not only with the environment, but also with one another. As a result, they need to adapt to the policies of other agents to learn cooperation. Another fundamental challenge of this domain is the curse of dimensionality. With the increase in the number of agents, the joint action space and number of states grows exponentially, rendering single agent planning algorithms inefficient in these cases. In multi-agent systems, the sequential decision making problem can be modeled as a variant of Markov Decision Process (MDP) Sutton and Barto (2011), called Multi-agent Markov Decision Process (MMDP) Boutilier (1996).

Over the years, a number of algorithms have been proposed to solve MMDPs. They are broadly divided into two categories. Centralized planning and decentralized planning. To solve MMDPs through centralized planning, the naive approach is to extend the idea of single agent algorithms by treating all the agents as a single agent and combining their joint action space to represent the action space. However, due to the curse of dimensionality, this naive approach fails to scale up to large environments. Therefore, a different approach, decentralized planning, has been widely used to solve MMDPs. This is accomplished by decomposing the multi-agent characteristics through decentralizing their value function. Best et al. Best et al. (2019) proposed a decentralized online planning algorithm to solve the dimensionality problem by using parallel MCTS trees and periodic communication. On the other hand, Aleksander et. al. Czechowski and Oliehoek (2020) introduced an algorithm ABC, where agents do not use communication, rather they train their individual policy prediction functions one at a time and try to induce cooperation by using the learned agent models. However, ABC experiences poor performance since, because of the lack of communication, it is unable to account for penalties generated by agent interaction (see Section 4).

Guestrin et al. Guestrin et al. (2001) showed that communication among agents can be represented as a coordination graph (CG) and joint value functions can be estimated from the higher order value factorization. This encouraged other approaches that use coordination graph to learn value functions or for policy generation. Choudhury et al. Choudhury et al. (2021) proposed simulation based anytime online planning algorithm FV-MCTS-MP, where factored value function is used with CG to incorporate communication among agents. The proposed solution solves both the cooperation and dimensionality problem but is not applicable in dynamically changing environments. In such cases, the state space grows exponentially as the size of the environment rises, limiting FV-MCTS-MP’s ability to calculate state values and deliver good solutions given limited computational resources.

Against this background, we introduce a MCTS based multi-agent anytime planning algorithm, SiCLOP, that can scale for large environments and provide realtime action selection capabilities. SiCLOP uses Graph Convolutional Network (GCN) Kipf and Welling (2016) for individual agent policy prediction and propagates the updated policies to other agents through Coordination Graphs (CG). The agents use only their local information for policy prediction and training. This enables them to learn cooperation in a small environments and transfer that knowledge to a larger setting, making the training easier and faster. We also introduce an effective pruning method to reduce the size of the joint action space by sampling only a small subset of all possible joint actions using Gibbs sampling Geman and Geman (1984) and iteratively updating the actions of every individual agent. Finally, we evaluate our algorithm empirically, and the result depicts that our algorithm outperforms the state-of-the-art online planning algorithms in terms of solution quality, adaptability, robustness and scalability.

2. Problem Formulation and Background

In this section, we first formulate the MMDP problem. We then review the general ideas of solving MMDPs with . Afterwards, we discuss the impact of Coordination Graph (CG) and graph based neural networks in improving the solution quality and adaptability,

Multi-agent Markov Decision Process, MMDP

An MMDP can be formalized as a tuple . is the set of all agents, indexed by . We use to refer to all other agents except . is the set of all possible states and , is a set of action spaces where is the set of actions for agent . A joint action is the combination of individual actions of all agents, . is a transition function that takes a joint action and changes the state according to the deployed environment specifications. is the global utility function and for every agent denotes the utility function to determine the payoff of states in for that agent. A policy is the task of deciding which action to choose in any state. The policy for the -th agent is denoted by , a distribution over the action space depending on the state the agent is in. The collection of policies of all the agents is called the joint policy . If the joint policy is fixed, then all the agents take actions according to that, and the expected reward or the state value of an state for agent is:

| (1) |

And the expected reward of an action or action value of state for agent in joint action is:

| (2) |

Best Response Policy

This assumes that the policies of other agents are stationary, although in multi-agent policy learning contexts, this is rarely the case. Even if agent does not change its policy, the expected reward will change when other agents change their policies.

In a multi-agent setting, the agents have to search the same state space to create strategies that maximise their payoff. Here, the goal of the agents is to adapt to the non-stationary policies of other agents. A policy for agent is defined by the set . It is the set of policies that maximise the reward assuming that the joint policy of other agents is stationary.A policy belongs to if and only if the following inequality holds:

| (3) |

Online Planning

Simulation based methods provide a means of policy learning and planning in non-stationary environments where it is possible for the agents to find their best response policy according to their current model of other agents. Monte Carlo Tree Search (MCTS) Kocsis and Szepesvári (2006) is an effective tree searching algorithm that uses simulation data to balance between exploration of new solutions and exploitation of solutions with potential. There are four major steps in the traditional MCTS simulation process. At any given moment, with an already expanded simulation tree, the first step is to start from the root and select a new leaf by going down the tree. While traversing, at every node, scores for its child nodes are calculated and the child with the best score is selected to go further down until a leaf is reached. The score is calculated with,

| (4) |

where is the action value, is the visit count of action and is the total visit count of state . The constant determines the tradeoff between exploration and exploitation. The next step is to expand the selected leaf and add its child nodes to the tree, called the expansion step. After selection and expansion, the state value of the child nodes are estimated, traditionally with random rollouts. The last step is to carry the newfound information about the expanded node to its ancestors through backpropagation. In an simulated tree, every node in that tree holds information about its expected reward, the number of times that node was visited and the edges to its child nodes.

AlphaZero Silver et al. (2017) takes a different approach with MCTS. In recent studies, it has been shown that generating policies for large state and action spaces can be done with deep neural networks rather than random plays Mnih et al. (2015). Hence, instead of estimating state values with computationally exhausting rollouts, a neural network is used in AlphaZero. The neural network also provides an initial policy for the action space to better guide the tree searching process. This not only makes the process faster, but also helps focus on better solutions.

Coordination Graph and Graph Neural Networks

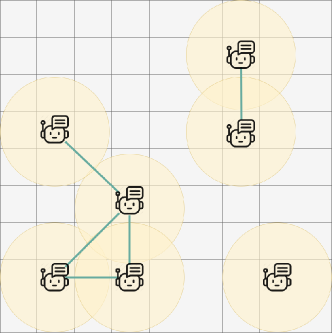

In multi-agent environments, every agent interacts with a subset of other agents. These interactions can be modeled using a graph structure called Coordination Graph (CG), where the nodes are the agents and interacting agents have edges between them. This structure captures the locality of the dependencies of the agents regarding payoffs. This locality of dependency also determines the cooperative policies of the agents. In Figure 1, the agents create a forest of connected graphs based on their range. The edge creation criteria can be predefined and the generated CG will denote which agents need to communicate.

New models and architectures have been brought to light that use graphs in training models to complete tasks regarding graphical structure, called Graph Neural Networks (GNN). The use of GNNs has been shown to be effective in agent modeling Böhmer et al. (2020)Liu et al. (2020). Graph Convolutional Networks (GCN) is a popular GNN for graph embedding. GCN uses a message passing mechanism that lets the nodes of a graph to aggregate and retain information about their neighbors of different distances. This greatly helps in representation learning of graphical structures and use it to generate practical solutions.

3. Multi-Agent Online Planning

Our proposed algorithm Simulation based Cooperation Learner and Online Planner (SiCLOP) is an MCTS based MMDP solver. SiCLOP has three major components, simulator SiCLOP-S, pruner SiCLOP-P, and predictor SiCLOP-NN. SiCLOP-S modifies the traditional MCTS to accommodate the pruning capabilities of SiCLOP-P and generate a deep simulation tree to find optimal policies. SiCLOP-P is a novel pruning technique that iterates over the joint policy space of the agents and uses best response policy predictor SiCLOP-NN to sample a small number of potential joint actions from the exponential joint action space. Lastly, SiCLOP-NN is a GCN based neural network that takes the local information and interaction of the agents (represented by CG) as input to predict best response policies for the agents and estimate the state value. Combining these three components, SiCLOP becomes a scalable and adaptive MMDP solver that is also capable of transfer learning.

The MMDP problems are represented as initial states. The algorithms takes a set of initial states , set of agents and step limit . SiCLOP is run for every initial state in in lines 1-10. For steps, the simulator SiCLOP-S finds an optimum joint action in line 3, and the state transitions to the next step in line 4, and repeats unless terminated in line 5. After steps, the simulation for that initial state is finished and the rewards are calculated in line 7. Then in lines 8-9, the state-action and state-reward pairs are stored in a database and then the database is used to train SiCLOP-NN in line 10. To summarize this section, the goal is to use simulation (depends on the predictor) to find solutions and then use data from that simulation to improve the predictor so that next time, SiCLOP can find even better solutions.

3.1. SiCLOP-S, Simulation and Action Selection

The simulator starts with parameters initial state and set of all the agents . The of the simulation tree is initialized by the initial state in line 12.

There are four steps in the simulation process and they are repeated multiple times within a fixed amount of time in the while loop in lines 13-22. In the first step, a leaf is reached from the in line 14 by frequently calculating the scores of the children of a node with Eq. 4 and choosing the child with the highest score. Visit counts and state values of visited nodes, while traversing down the tree, are updated. After choosing a leaf node, its child nodes are created and the leaf is labeled as expanded. The number of possible child nodes is exponential in magnitude with respect to the number of agents. So, in the second step, a small subset of joint actions are sampled from the joint action space with SiCLOP-P in line 17 and the child nodes are created from the set of sampled actions to complete step three and four in lines 18-22. After creating a child node in line 18, its state value is determined in lines 20-21 by calculating penalties and prediction made by SiCLOP-NN. Then the state value is backpropagated to its ancestors in line 22.

At the end of generating the simulation tree, the most visited joint action which denotes the joint action with the most potential, is selected in line 23 and then returned.

3.2. SiCLOP-P, Pruning the Joint Action Space

The SiCLOP-P component of the algorithm described in lines 25-35, focuses on pruning the joint action space for SiCLOP-S. We propose a novel joint action sampling method inspired by Gibbs sampling to effectively search over the joint policy space. First, we elaborate the sampling process. The predictor SiCLOP-NN generates the best response policy for the agents given a state. Using this predictor, the best response policy of every agent is sampled considering the agent policies as static periodically. The sampling runs for multiple cycle. At cycle , the policy of agent is updated with the conditional best response policy

| (5) |

In SiCLOP-P, the initial policy is represented by initial joint action selection in line 28. Then, in lines 29-34, a fixed number of joint actions are sampled. The agents are selected periodically in lines 30-32 where the current policy of agent is replaced by the best response policy. To update the policy, all other agents’ policies are considered to be static. The current adopted policies of the agents are represented by in line 31. Then the best response policy is updated in line 32 and new action is sampled from that policy in line 33. At the end of the cycles, in line 34, the updated joint action is added to the sampled joint actions and after a fixed number of cycles, the set of sampled joint actions are returned.

The policy generator SiCLOP-PNN predicts the best response policy for an agent. So, the generated policy for agent belongs to . In Lemma 3.2, we show that SiCLOP-P eventually converges to an equilibrium joint policy for a group of agents.

Lemma \thetheorem

In any iteration of sampling for agent in group , the new sampled policy will lead to non-decreasing reward and iterating through all the agents of will lead to an equilibrium for that group.

Proof.

If the current joint policy of all the agents is and the updated policy for agent is , then for all

| (6) |

If all the agents in the group acquire non-decreasing rewards, the combined reward will also be non-decreasing. As both the joint action space and reward are finite, iterating through the policy space will converge to a joint policy where no individual policy can be updated to get a better result, hence converging to an equilibrium for the group . ∎

This shows that with a well trained policy prediction function, the joint policy of every group will converge to a best response policy equilibrium. But in non-stationary environments, there might not a single joint policy equilibrium for the agents to converge to. In that case, the agents continuously create new best response policies to maximise their respective payoffs.

The characteristics of the sampled joint actions change gradually throughout the learning process. In the early stages of learning, the policies of the agents are initialized to be random or according to prior knowledge. The set sampled joint actions represent that initial joint policy. If it is random, then the agents explore different strategies through simulation as a group and develop their policies by finding strategies that lead to better payoffs. At the same time, agents adapt to other agents’ policies and search for best responses. And if it is initialized with handcrafted policy, then the agents evolve by finding counter strategies and updating their existing policies. In both cases, they initially search randomly for new strategies and select the optimal ones in simulation. After repeating the learning process, the agents start to generate more definitive policies, meaning some actions are more preferred than others in their respective policies. This causes the sampling method to sample joint actions that have higher probability of being chosen to maximize rewards for all the agents. Directly predicting the optimal policy can concentrate on single policies and might lose robustness. Rather, simulating a search tree tests different ways of solving a MMDP with a set of best response policies to choose from. This reduces the dependency on single strategies and provides stable robust solutions.

3.3. SiCLOP-NN, Prediction with Neural Network

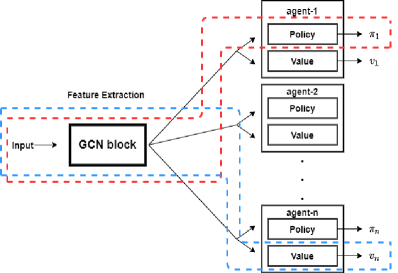

The neural network SiCLOP-NN is a GCN based policy predictor and state value estimator. It has two parts, feature extraction and prediction, as shown in Figure 2. The first part is used for graph embedding as our algorithm focuses on CGs. This feature extraction part consists of multiple GCN layers. The number of GCN layers determine the number of hops of feature information among agents. Two GCN layers would mean that the features of the agents will reach other agents up to two hops away. The second part takes the extracted feature vectors of the agents from the GCN block and generates predicted best response policies and state value estimations for every agent. The state values are aggregated to construct a combined state value. In Figure 2, The red outlined pipeline is policy predictor and the blue outlined pipeline is state value estimator. SiCLOP-NN returns a boltzman probability distribution over the actions to enable weighted sampling of actions in SiCLOP-NN.

SiCLOP-NN takes the local information of the agents and a CG as input. The setting of the local information can be defined, for example, the cells within a limited range of the agent. The CG is also constructed according to predefined rules. In SiCLOP, the input is created in the preprocess function where the local information of the agents are collected into a set in lines 39-40. Then the set is returned with the constructed CG.

The training of the neural network occurs after a fixed number of simulation episodes. In Algorithm 2, a fixed-sized batch of state-policy and state-value pairs are randomly selected from the data from recent episodes for every agent. The reason behind selecting data from recent episodes is to train the neural network on recently found policies of the agents. This allows the agents to adapt to new policies faster. Then predictions are made on that batch of states to calculate the cross-entropy error between the real policy and predicted policy for agent . This is calculated using

| (7) |

And the mean squared error between real value and predicted value is calculated with

| (8) |

The neural network is updated to minimize the combined loss.

The neural network trains and predicts on states consisting of any number of agents, with their internal interactions passed as CG into the GCN block. This adds the flexibility of performing predictions on variable number of agents. On the other hand, the input is a collection of observations of the agents dictated by a constraint of locality, i.e. communication range. SiCLOP-NN’s architecture allows making prediction in environments of any size and shape. If the agents are trained in a smaller environment with fewer agents, the learned agents can still apply the learned policies on much larger environments with more agents as their policy prediction only depends on the locality of information.

4. Empirical Results

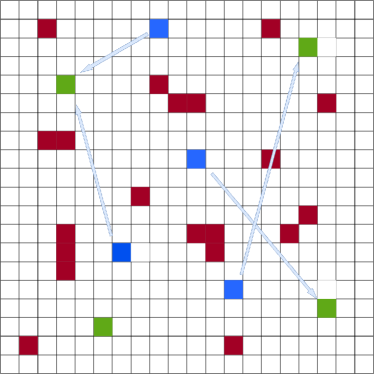

To test and compare our algorithm, we used a modified version of the drone delivery environment Choudhury et al. (2021). The modification is the inclusion of obstacles to make the environment more realistic, making the movement of the agents difficult. The environment is shown in Figure 3. Here, agents need to reach their designated objectives but agents can collide with the obstacles or other agents if they try to enter the same cell (can be objective cell) at the same time. They can also choose actions that take them out of the environment area. These actions are heavily penalized. The goal of the environment design is to test if the agents can learn to avoid penalizing actions by using cooperation and complete the tasks, as seen in most realistic problems. Every agent gets point for reaching an objective, for collision and for going out of bounds. There are also penalties if multiple agents stick too close to each other. Agents are rewarded if they get closer to their objectives. The agents have a total of possible actions, going to the surrounding cells and staying in place. The MMDP episode ends when all agents reach their goals or the pre-defined number of steps are used up. The results are shown as average score per agent as the number of agents vary in multiple environmental setting.

Next, we will present an analysis of how the algorithm performs, how it can scale up and how it performs compared to FV-MCTS-MP and ABC planning and learning algorithms.

4.1. Agent Training and Transfer Learning

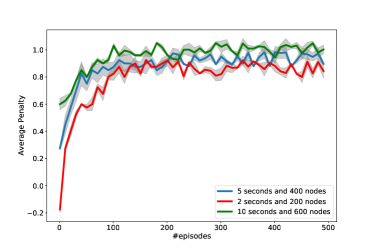

The first experiment is to observe how the agents improve their policies to get better results. In this experiment, there are agents in a grid with obstacles. The agents have maximum 40 steps to complete the tasks. A total of 500 randomly generated problem states were generated and SiCLOP was tested on these 500 solution episodes. Agent policy predictor was trained every 10 episodes. For every step in the episode, the simulator can either run for a maximum amount of time or can expand limited number of simulation nodes. The algorithm was tested on three such settings. To test the scaling up ability of the algorithm, the trained policy predictors were used to test if it can generate good quality solutions on new environments of different sizes and difficulties.

| Environment List | |||

|---|---|---|---|

| Grid Size | Agents | Obstacles | Average Score |

| 8x8 | 5 | 4 | 1.004 |

| 20x20 | 20 | 10 | 1.06 |

| 50x50 | 100 | 150 | 1.35 |

| 100x100 | 150 | 200 | 1.52 |

From the results shown in Figure 4, we can see that at the beginning of the experiment, the agents adopt random policies and as the episodes progress they start to adapt to the rules of the environment and converge to a high score around . An average score around denotes that the agents are avoiding penalties and succeeding at their given tasks. If the simulation limitations are tighter, the algorithm struggles to find good solutions at the beginning, even yielding to negative scores. But as SiCLOP is allowed to do more simulation before taking actions, it generates better solutions from the start, training the agents on quality solutions. This helps converging to optimal scores much faster, as we can see in Figure 4. It is also shown that, in general, more simulation leads to better score on average.

After training the agents in a grid, the policy predictor is transferred to randomly generated environments. These environments differ in size, number of agents and obstacles. In Table 1, we can see that the average score per agent in these environments are above , which implies that the algorithm is capable of adapting to a varying environment and avoid penalties by using knowledge from a different environment. But in case of other planning algorithms, FV-MCTS-MP stores state values of visited states so it cannot use any prior knowledge on new environments and ABC uses the entire state to predict policies, so it can not be applied to an environment of different size. Transfer learning lets the algorithm to train on different environments, enabling agents to initially train in a small environment and then train in larger environments. As seen from the experiment, the prediction model can transfer the knowledge allowing the training in large environments to converge faster. This can save time and computational resources in case of complex environments and large single agent action spaces, making the algorithm applicable to most realistic MMDP problems.

4.2. Learning Cooperation

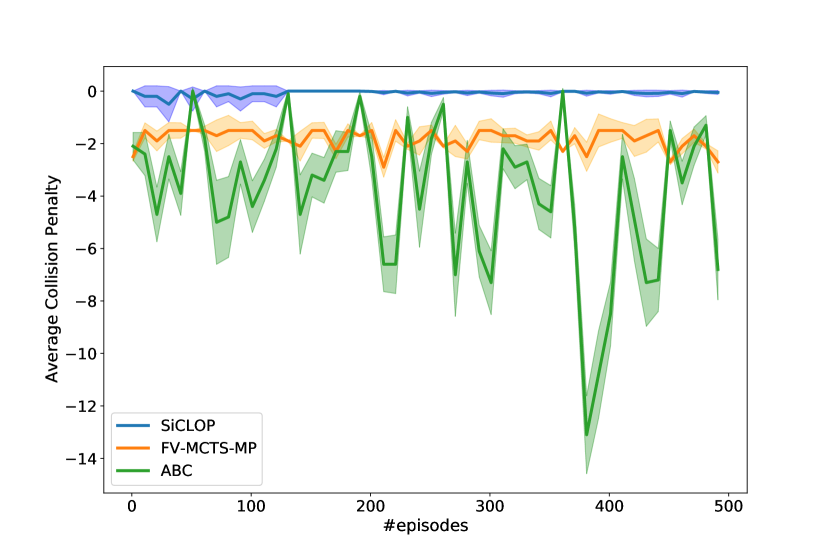

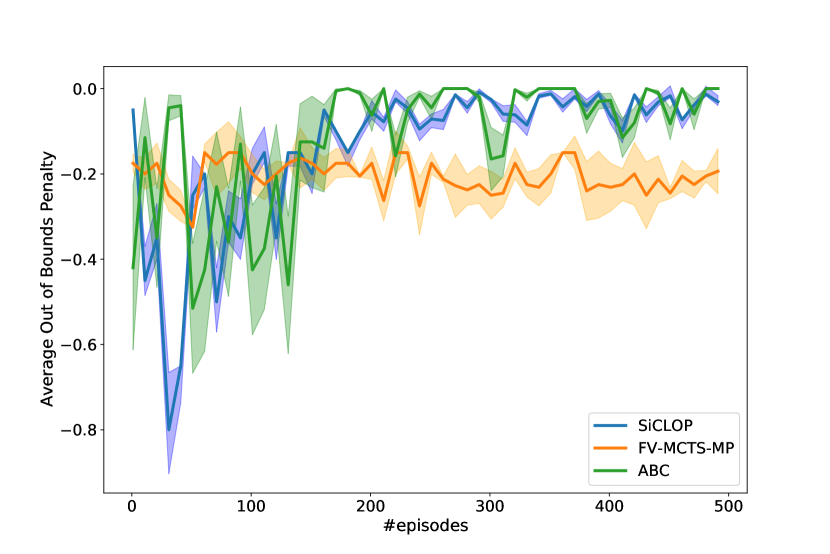

In the second experiment, the cooperation learning and adaptability of the algorithms were tested. SiCLOP and ABC are both model learning algorithms and FV-MCTS-MP is a centralized tree searching algorithm. While ABC adopts a decentralized approach, SiCLOP chooses and learns its policies in a centralized manner. To test the cooperation learning ability of the agents, both algorithms were run on the same 500 episodes with seconds time limit per action in a grid with obstacles and agents. In Figure 5, we can see how the algorithms learn cooperation and adapt to handle and penalties.

From Figure 5, it is clear that ABC fails to learn how to avoid collisions but can learn to avoid going out of bounds. The penalty of going out of bounds depends on individual agents and collision mostly depends on the interaction of the agents. ABC learns the agent models one at a time and tries every available action. This helps the agent models to learn individual actions effectively. But in case of collision, it is very hard to avoid without communication among agents, causing the ABC agent models to not converge to effective policies. As there is no communication, there is no differentiation between successful and unsuccessful joint actions before the actions are executed. FV-MCTS-MP consistently outperforms ABC in cooperation but due to time limit and small number of iterations, there were no significant increase in performance. SiCLOP outperforms both algorithms as it searches over the joint policy space and needs lesser number of iterations to find cooperative joint policies to avoid the penalties. Throughout the training process, SiCLOP shows a stable performance and converges to almost no average penalty.

Contrary to the collision penalty, all three algorithms manage to adapt to the out of bounds penalty. Before training, FV-MCTS-MP outperforms both SiCLOP and ABC but after training these two perform better. Similar to previous experiment, FV-MCTS-MP’s performance does not get better because of the limitations. The reason behind the similar performances of SiCLOP and ABC is that avoiding out of bounds penalty does not require any cooperation.

4.3. Performance Comparison

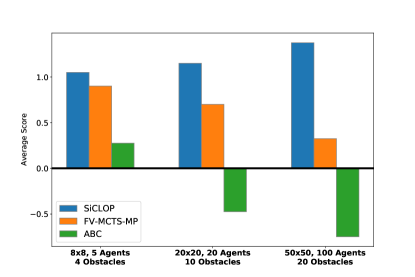

In this experiment, SiCLOP was compared to FV-MCTS-MP and ABC by average scores accumulated on gradually increasing size of environments and number of agents. All of the algorithms had seconds time limit per action. SiCLOP and ABC algorithms were trained on 500 episodes. In Figure 6, we can see the comparison in scores.

The algorithms were tested in three different environments of varying sizes. As the size of the environment gets larger, accumulating a high score becomes harder as there are more obstacles and the objectives are farther. From this experiment, it is clear that as the environment gets larger, ABC is more prone to penalties and accumulates lower average scores. FV-MCTS-MP, with limited time and number of iterations, is unable to find sequence of actions that lead to high score. As the simulation tree of FV-MCTS-MP is wide, it calculates the expected action value over a wide and shallow tree, causing it to have average negative values. So, FV-MCTS-MP prefers not to move the agents to minimize penalties. But, as SiCLOP searches over a deeper search tree, it can find better solutions to the MMDP problems. Also, the size of the environment hardly affects the performance of SiCLOP as the agents only use their local information and interactions. These attributes and better cooperation learning allow SiCLOP to outperform both ABC and FV-MCTS-MP by a significant margin.

5. Conclusions and Future Work

In this paper, we introduced a scalable and adaptive algorithm to solve MMDP. Our algorithm SiCLOP uses local information of the agents to construct state dependant dynamic CGs and uses it to find optimum policies through simulation and effective pruning. We have shown that our algorithm can adapt to larger, more realistic environments and outperform existing online MMDP solvers.

SiCLOP is an algorithm that uses policy predictions as a tool to find better policies, which implies that the quality of the initial prediction holds great significance on the final outcome. More work is required for finding better ways to train the prediction models, which may include newer ways of selecting self-play data on which the model is trained on and better neural network architectures that suit the process better.

References

- (1)

- Bernstein et al. (2009) Daniel S Bernstein, Christopher Amato, Eric A Hansen, and Shlomo Zilberstein. 2009. Policy iteration for decentralized control of Markov decision processes. Journal of Artificial Intelligence Research 34 (2009), 89–132.

- Best et al. (2019) Graeme Best, Oliver M Cliff, Timothy Patten, Ramgopal R Mettu, and Robert Fitch. 2019. Dec-MCTS: Decentralized planning for multi-robot active perception. The International Journal of Robotics Research 38, 2-3 (2019), 316–337.

- Böhmer et al. (2020) Wendelin Böhmer, Vitaly Kurin, and Shimon Whiteson. 2020. Deep coordination graphs. In International Conference on Machine Learning. PMLR, 980–991.

- Boutilier (1996) Craig Boutilier. 1996. Planning, learning and coordination in multiagent decision processes. In TARK, Vol. 96. Citeseer, 195–210.

- Buşoniu et al. (2010) Lucian Buşoniu, Robert Babuška, and Bart De Schutter. 2010. Multi-agent reinforcement learning: An overview. Innovations in multi-agent systems and applications-1 (2010), 183–221.

- Choudhury et al. (2021) Shushman Choudhury, Jayesh K Gupta, Peter Morales, and Mykel J Kochenderfer. 2021. Scalable Anytime Planning for Multi-Agent MDPs. arXiv preprint arXiv:2101.04788 (2021).

- Claes et al. (2017) Daniel Claes, Frans Oliehoek, Hendrik Baier, Karl Tuyls, et al. 2017. Decentralised online planning for multi-robot warehouse commissioning. In AAMAS’17: PROCEEDINGS OF THE 16TH INTERNATIONAL CONFERENCE ON AUTONOMOUS AGENTS AND MULTIAGENT SYSTEMS. 492–500.

- Claes et al. (2015) Daniel Claes, Philipp Robbel, Frans Oliehoek, Karl Tuyls, Daniel Hennes, and Wiebe Van der Hoek. 2015. Effective approximations for multi-robot coordination in spatially distributed tasks. In Proceedings of the 14th international conference on autonomous agents and multiagent systems (AAMAS 2015). International Foundation for Autonomous Agents and Multiagent Systems, 881–890.

- Czechowski and Oliehoek (2020) Aleksander Czechowski and Frans Oliehoek. 2020. Decentralized MCTS via Learned Teammate Models. arXiv preprint arXiv:2003.08727 (2020).

- Foerster et al. (2016) Jakob N Foerster, Yannis M Assael, Nando De Freitas, and Shimon Whiteson. 2016. Learning to communicate with deep multi-agent reinforcement learning. arXiv preprint arXiv:1605.06676 (2016).

- Geman and Geman (1984) Stuart Geman and Donald Geman. 1984. Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE Transactions on pattern analysis and machine intelligence 6 (1984), 721–741.

- Guestrin et al. (2001) Carlos Guestrin, Daphne Koller, and Ronald Parr. 2001. Multiagent Planning with Factored MDPs.. In NIPS, Vol. 1. 1523–1530.

- Guo et al. (2014) Xiaoxiao Guo, Satinder Singh, Honglak Lee, Richard L Lewis, and Xiaoshi Wang. 2014. Deep learning for real-time Atari game play using offline Monte-Carlo tree search planning. In Advances in neural information processing systems. 3338–3346.

- Gupta et al. (2017) Jayesh K Gupta, Maxim Egorov, and Mykel Kochenderfer. 2017. Cooperative multi-agent control using deep reinforcement learning. In International Conference on Autonomous Agents and Multiagent Systems. Springer, 66–83.

- Kipf and Welling (2016) Thomas N Kipf and Max Welling. 2016. Semi-supervised classification with graph convolutional networks. arXiv preprint arXiv:1609.02907 (2016).

- Kober et al. (2013) Jens Kober, J Andrew Bagnell, and Jan Peters. 2013. Reinforcement learning in robotics: A survey. The International Journal of Robotics Research 32, 11 (2013), 1238–1274.

- Kocsis and Szepesvári (2006) Levente Kocsis and Csaba Szepesvári. 2006. Bandit based monte-carlo planning. In European conference on machine learning. Springer, 282–293.

- Liu et al. (2020) Yong Liu, Weixun Wang, Yujing Hu, Jianye Hao, Xingguo Chen, and Yang Gao. 2020. Multi-agent game abstraction via graph attention neural network. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 34. 7211–7218.

- Matignon et al. (2007) Laëtitia Matignon, Guillaume J Laurent, and Nadine Le Fort-Piat. 2007. Hysteretic q-learning: an algorithm for decentralized reinforcement learning in cooperative multi-agent teams. In 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 64–69.

- Mnih et al. (2015) Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Andrei A Rusu, Joel Veness, Marc G Bellemare, Alex Graves, Martin Riedmiller, Andreas K Fidjeland, Georg Ostrovski, et al. 2015. Human-level control through deep reinforcement learning. nature 518, 7540 (2015), 529–533.

- OroojlooyJadid and Hajinezhad (2019) Afshin OroojlooyJadid and Davood Hajinezhad. 2019. A review of cooperative multi-agent deep reinforcement learning. arXiv preprint arXiv:1908.03963 (2019).

- Panait and Luke (2005) Liviu Panait and Sean Luke. 2005. Cooperative multi-agent learning: The state of the art. Autonomous agents and multi-agent systems 11, 3 (2005), 387–434.

- Rashid et al. (2018) Tabish Rashid, Mikayel Samvelyan, Christian Schroeder, Gregory Farquhar, Jakob Foerster, and Shimon Whiteson. 2018. Qmix: Monotonic value function factorisation for deep multi-agent reinforcement learning. In International Conference on Machine Learning. PMLR, 4295–4304.

- Shalev-Shwartz et al. (2016) Shai Shalev-Shwartz, Shaked Shammah, and Amnon Shashua. 2016. Safe, Multi-Agent, Reinforcement Learning for Autonomous Driving. arXiv preprint arXiv:1610.03295 (2016).

- Silver et al. (2017) David Silver, Thomas Hubert, Julian Schrittwieser, Ioannis Antonoglou, Matthew Lai, Arthur Guez, Marc Lanctot, Laurent Sifre, Dharshan Kumaran, Thore Graepel, et al. 2017. Mastering chess and shogi by self-play with a general reinforcement learning algorithm. arXiv preprint arXiv:1712.01815 (2017).

- Silver and Veness (2010) David Silver and Joel Veness. 2010. Monte-Carlo planning in large POMDPs. Neural Information Processing Systems.

- Sunehag et al. (2017) Peter Sunehag, Guy Lever, Audrunas Gruslys, Wojciech Marian Czarnecki, Vinicius Zambaldi, Max Jaderberg, Marc Lanctot, Nicolas Sonnerat, Joel Z Leibo, Karl Tuyls, et al. 2017. Value-decomposition networks for cooperative multi-agent learning. arXiv preprint arXiv:1706.05296 (2017).

- Sutton and Barto (2011) Richard S Sutton and Andrew G Barto. 2011. Reinforcement learning: An introduction.

- Tampuu et al. (2017) Ardi Tampuu, Tambet Matiisen, Dorian Kodelja, Ilya Kuzovkin, Kristjan Korjus, Juhan Aru, Jaan Aru, and Raul Vicente. 2017. Multiagent cooperation and competition with deep reinforcement learning. PloS one 12, 4 (2017), e0172395.

- Watkins and Dayan (1992) Christopher JCH Watkins and Peter Dayan. 1992. Q-learning. Machine learning 8, 3-4 (1992), 279–292.

- Yang et al. (2018) Yaodong Yang, Rui Luo, Minne Li, Ming Zhou, Weinan Zhang, and Jun Wang. 2018. Mean field multi-agent reinforcement learning. In International Conference on Machine Learning. PMLR, 5571–5580.

- Zhang and Lesser (2010) Chongjie Zhang and Victor Lesser. 2010. Multi-agent learning with policy prediction. In Twenty-fourth AAAI conference on artificial intelligence.