Learning on a Budget via Teacher Imitation

Abstract

Deep Reinforcement Learning (RL) techniques can benefit greatly from leveraging prior experience, which can be either self-generated or acquired from other entities. Action advising is a framework that provides a flexible way to transfer such knowledge in the form of actions between teacher-student peers. However, due to the realistic concerns, the number of these interactions is limited with a budget; therefore, it is crucial to perform these in the most appropriate moments. There have been several promising studies recently that address this problem setting especially from the student’s perspective. Despite their success, they have some shortcomings when it comes to the practical applicability and integrity as an overall solution to the learning from advice challenge. In this paper, we extend the idea of advice reusing via teacher imitation to construct a unified approach that addresses both advice collection and advice utilisation problems. We also propose a method to automatically tune the relevant hyperparameters of these components on-the-fly to make it able to adapt to any task with minimal human intervention. The experiments we performed in different Atari games verify that our algorithm either surpasses or performs on-par with its top competitors while being far simpler to be employed. Furthermore, its individual components are also found to be providing significant advantages alone.

Index Terms:

reinforcement learning, deep q-networks, action advising, teacher-student frameworkI Introduction

Deep Reinforcement Learning (RL) has been proven to be a successful approach to solve decision-making problems in a variety of difficult domains such as video games [1], board games [2] or robot manipulation [3]. However, achieving the reported performances is not entirely straightforward. One of the most critical setbacks in deep RL is the exhaustive training processes that usually require many interactions with the environment. This occurs mainly due to the RL inherent exploration challenges as well as the complexity of the incorporated function approximators, e.g. deep neural networks. To this date, there has been a remarkable amount of research effort to overcome the sample inefficiency by devising advanced exploration strategies [4]. In addition to these, other lines of work that focus on leveraging some legacy knowledge to tackle these issues have also been studied extensively with great success.

The ability to learn by utilising the prior experience instead of starting from scratch is an essential component of intelligence. In RL, this idea has been investigated in various forms that are tailored for different problem settings. Imitation Learning (IL) [5] studies the concept of mimicking an expert behaviour presented in a pre-recorded dataset without allowing any RL rewards from the environment itself. Similarly, Learning from Demonstrations (LfD) [6][7] extends this idea to incorporate the RL interactions and rewards to further surpass the experts. Some other approaches such as Policy Reuse [8] study the ways of speeding up the agent’s learning by leveraging the past policies directly, instead of datasets.

In this paper, we study a different problem setup where it is not possible to have any pre-generated datasets or to directly obtain the useful policies themselves; instead, the learning agent only has access to some peer(s) over a limited communication channel. Such a setting is especially relevant for the scenarios with unknown task specifications (prior to learning and deployment) and non-transferable yet beneficial policies, e.g. humans, non-stationary agents, in the loop. A flexible framework that is tailored for this setting is called Action Advising [9]. According to this, agents exchange knowledge between each other in the form of actions to speed-up their learning progressions. However, the number of these peer-to-peer interactions are limited with a budget to resemble the real-world limitations, which essentially converts the problem into determining the best possible way to utilise the available budget. Based on the peer that is in charge of driving these interactions, action advising algorithms can either be student-initiated, teacher-initiated or jointly-initiated.

Action advising algorithms in deep RL have obtained promising results with an emphasis on student-initiated strategies [10][11][12]. While the majority of these focus on addressing when to ask for advice question, some recent approaches [13] have also investigated the ways to further utilise the collected advice by imitating and reusing the teacher policy. Despite these developments, there are currently several significant shortcomings present. These techniques often employ some threshold hyperparameters to control the decisions to initiate advice exchange interactions which play a key role in their efficiencies. However, these parameters are sensitive to the learning state of the models as well as the domain properties. Therefore, they need to be tuned very carefully prior to execution, which involve unrealistically accessing the target tasks for trial runs. Furthermore, the studies to further leverage the teacher advice beyond collection are currently in their early stages and do not provide a complete solution to the problem besides addressing the advice reusing aspect.

In this paper, we present an all-in-one student-initiated approach that is capable of collecting and reusing advice in a budget-efficient manner, by extending [13] in multiple ways. First, we propose a method for automatically determining the threshold parameters responsible for the decisions to request and reuse advice. This greatly alleviates the burden of task-specific hyperparameter tuning procedures. Secondly, we follow a decaying advice reuse schedule that is not tied to the student’s exploration strategy. Finally, instead of using the imitated policy only for reusing advice as in [13], we incorporate this policy to determine and collect more diverse advice to construct a more universal imitation policy.

The rest of this paper is structured as follows: Section II outlines the most relevant previous work. In Section III, the background knowledge that is required to understand the paper is provided. Afterwards, we describe our approach in detail in Section IV. Then, Section V presents our evaluation domain and experimental procedure. In Section VI, we share and analyse the experiment results. Finally, Section VII wraps up this study with conclusions and future remarks.

II Related Work

Action advising techniques with budget constraints were originally invented and studied extensively in tabular domains. In [9], the teacher-student learning procedure was formalised for the first time and some solutions from the teacher’s perspective were proposed. This was later extended with some new heuristics [14] as well as with a meta-learning approach [15]. Later on, the action advising interactions are also studied in student-initiated and jointly-initiated forms [16] which were also adopted in multi-agent problems where the agents take student and teacher roles interchangeably [17]. In a recent work [18], the idea of reusing the previously collected advice is investigated to improve the learning performance and also to make a more efficient use of the available budget.

Deep RL is a considerably new domain for the action advising studies. [19] introduced a novel LfD setup in which the demonstrations dataset is built interactively as in action advising. To do so, they employed uncertainty estimation capable models with LfD loss terms integrated in the learning stage. [10] extended the jointly-initiated action advising [17] to be applicable in multi-agent deep RL for the first time. This study replaced the state counters that were used to assess uncertainty in the tabular version [17] with state novelty measurements with the aid of Random Network Distillation (RND) [20]. In [11], an uncertainty-based advice collection strategy was proposed. According to this, the student adopts a multi-headed neural network architecture to access epistemic uncertainty estimations as in [19]. Later, [12] further studied the state novelty-based idea of [10] to devise a better student-initiated advice collection method. Specifically, they made RND module to be updated only for the states that are involved in advice collection. That way, the student was ensured to benefit from the teacher regardless of its own knowledge about the states to tackle the special cases of belated inclusion of the teacher. More recently, [13] studied the advice reuse idea in deep RL. In this work, an imitation model of the teacher is constructed partially with the collected advice. Furthermore, Dropout regularisation [21] is also incorporated in this model to enable it to make uncertainty-aware decisions when it comes to reusing the self-generated advice.

Among these studies in deep RL, only [19] and [13] investigated the concept of advice reuse. Our paper differs from them in several ways. The idea in [19] require using uncertainty estimation capable models in the student’s RL algorithm. Moreover, the RL algorithm also needs to be modified to have the LfD loss terms. In contrast, the algorithm we propose does not require the student to have any specific RL models or loss functions. Thus, the student agent can be treated as a blackbox, which lets our method to be applied to a wider range of agent types. [13] performs advice reuse as a separate module, as we described here. However, they rely on the previously proposed advice collection strategies instead of taking advantage of its own imitation module to manage the advice collection process via uncertainty. Furthermore, some of the hyperparameters in [13] limit its practical applicability due to being difficult to tune, which we also address in this work.

III Background

III-A Reinforcement Learning and Deep Q-Networks

Reinforcement Learning (RL) [22] is a trial-and-error learning paradigm that studies decision-making problems where an agent learns to accomplish a task. This interaction within the environment is commonly formalised as a Markov Decision Process (MDP). In MDP, the environment is defined with the tuple where is the set of states, is the set of available actions, is the reward function, defines the transition probabilities, and is the discount factor. At every timestep , the agent applies an action in state to advance to the next state while receiving a reward . These actions are determined by the agent’s policy , and RL’s goal is to learn an agent policy (with parameters ) that maximises the cumulative discounted sum of the rewards . There are various different approaches in the RL literature to achieve this. For instance, the well-known Q-learning algorithm does so by learning the state-action values via Bellman equations [22] and making the agent follow the policy .

In the recent years, RL algorithms have been studied extensively in a branch referred to as Deep RL to deal with non-tabular state spaces with the aid of non-linear function approximation. Deep Q-Networks (DQN) [23] is a substantial one among these, that serves as a strong baseline in the domains with discrete actions. In this off-policy algorithm, values are approximated with a deep neural network with weights . By using the transitions stored in a replay buffer, is learned by minimising the loss terms with stochastic gradient descent, where stands for the periodically updated copy of . This is the target network trick that DQN incorporates to battle the RL induced non-stationarity in the approximator learning. Another critical component is the replay buffer, that lets the agent save samples to be learned from over a long course by also breaking the non-i.i.d. property of sequential collection. DQN’s success has led it to be studied and enhanced further over years. The most prominent of these are summarised in Rainbow DQN [24]. Among these, we employ Dueling Networks [25] and Double Q-learning [26] in this paper.

III-B Action Advising

Action advising [9] is a peer-to-peer knowledge exchange framework that requires only a common set of actions and a communication protocol between the peers. According to this, a learning peer (student) receives advice in the form of actions from a more knowledgeable peer (teacher) to accelerate its learning. These advice actions are generated directly from the teacher’s decision-making policy; therefore, it is important for the student and the teacher to have the same goal in the task they are performing in. A key property that distinguishes this approach from the similar frameworks such as Policy Reuse [8] is the notion of budget constraint. By considering the realistic scenarios where it is not possible to reliably exchange information, the number of interactions in this framework is also limited with a budget. Consequently, the algorithms that operate in this problem setup should be capable of determining the most appropriate moments to exchange advice either from the perspective of teacher (teacher-initiated), student (student-initiated) or both (jointly-initiated).

IV Proposed Algorithm

We adopt the MDP formalisation presented in Section III in our problem definition. The setup in this study includes an off-policy deep RL agent (student) with policy learning to perform some task in an environment with continuous state space and discrete actions. There is also another agent with policy (teacher) that is competent in . The teacher is isolated from the environment itself, but is reachable by the student via a communication channel for a limited number of times defined by the advising budget . By using this mechanism, the student can request action advice for its current state . The objective of the student in this problem is to maximise its learning performance in by timing these interactions to make the most efficient use of .

Our approach provides a unified solution for addressing when to ask for advice and how to leverage the advice questions. In addition to the RL algorithm, the student is equipped with a neural network with weights that are not shared with the RL model in any way. The student also has a transitions buffer with no capacity limit that holds the collected state-advice pairs. By using the samples in , is trained periodically to provide the student an up-to-date imitation model of to make it possible to reuse the previously provided advice. Moreover, is also used to determine what advice to collect by being regarded as a representation of ’s contents. Obviously, making these decisions require to have a form of awareness of what it is trained on (in terms of samples). Therefore, employs Dropout regularisation in the fully-connected layers to have an estimation of epistemic uncertainty denoted by for any state as it is done in [21][13]. None of these components share anything with or require access to the student’s RL algorithm. This is especially advantageous when it comes to pairing up our approach with different RL methods.

At the beginning of the student’s learning process, is initialised randomly and . Then, at every timestep in state , the student goes through stages of our algorithm: Collection, Imitation, Reuse. The remainder of this section describes these stages with the line number references to the complete flow of our algorithm summarised in Algorithm 1.

The collection stage (lines 13-19) remains active from the beginning until the student runs out of its advising budget . At this step, the student attempts to collect advice if its current state has not been advised before. This is determined by the value of . If it is higher than the uncertainty threshold (which is set automatically in the imitation stage), it is decided that is has not been advised before; thus, the student proceeds with requesting advice. However, if is undetermined, this request is carried out without performing any uncertainty check.

The imitation module is responsible for training and tuning accordingly. This stage (lines 20-22) is always active, but it is only triggered when these conditions that are checked at every timestep are met: the student has collected new samples in (since the last imitation) or the student has taken steps (since the last imitation) with at least new samples in . Here, and are hyperparameters. These are set in order to keep the number of imitation processes within a reasonable number while also ensuring remains up-to-date with the collected advice. On one hand, if was updated for every new state-advice pair, it would be a very accurate model of ’s contents, but the total training times would be a significant computational burden. On the other hand, if was updated infrequently, it would not cause any computational setbacks; however, it would not be a good representation of the collected advice either.

Once the imitation is triggered, is trained for iterations (if it is the first ever training; else, for iterations) with the minibatches of samples drawn randomly from . This process resembles the simplest form of behavioural cloning where the supervised negative log-likelihood loss is minimised. Afterwards, is updated automatically to be compatible with the new state of this imitation network. This is done by measuring and storing them in a set for each in that satisfies . Then, the uncertainty value that corresponds to the percentile (hyperparameter) in the ascending-order sorted is assigned to . We do this to pick a threshold such that can consider these samples it classifies correctly as “known” while leaving a small portion that are likely to be outliers out, when is compared with . This approach could be further developed by also considering the true-positive and false-positive rates, however, we opted for a simpler approach in this study.

Finally, the reuse stage (lines 23-26) handles the execution of the imitated advice whenever appropriate, to aid the student in efficient exploration. It becomes active as soon as the imitation model is trained for the first time. Then, whenever (i.e. is familiar with ), no advice collection is occurred at and reusing is enabled for this particular episode, the student executes the imitated advice . Unlike [13], we do not limit advice reusing to the exploration stage of learning, e.g. the period is annealed to its final value in -greedy. Instead, we define a reuse schedule that is independent than the underlying RL algorithm’s exploration strategy. At the beginning of each episode, the agent either enables reuse module with a probability of (set as initially). This value is decayed until it reaches its final value over steps, similarly to -greedy annealing. This approach further eliminates the dependency of our algorithm to the RL algorithm’s exploration strategy.

V Experimental Setup

We designed our experiments to answer the following questions about our proposal:

-

•

How does our automatic threshold tuning perform against the manually-set ones in terms reuse accuracy and learning performance?

-

•

How much does using advice imitation model to drive the advice collection process help with collecting more diverse state-advice dataset?

-

•

Does collecting a dataset with more diverse samples make any significant impact on the learning performance?

-

•

How much does every particular modification contribute in the final performance?

In the remainder of this section, we first describe our evaluation domain. Then, we provide the details our experimental process along with the substantial implementation details111The code for our experiments can be found at https://github.com/ercumentilhan/advice-imitation-reuse.

V-A Evaluation Domain

In order to have the adequate amount of difficulty that is relevant to the modern deep RL algorithms, we chose the widely experimented Arcade Learning Environment (ALE) [27] that contains more than Atari games as our testbed. We picked well-known games among these, namely Enduro, Freeway, Pong, Q*bert, Seaquest, which involve different mechanics and present various learning challenges. In each of these games, the agent receives observations as RGB images with a size of . These observations are converted into grayscale images to reduce the amount of representational complexity, and are also stacked as the most recent frames to eliminate the effect of partial observability. Furthermore, since these games are originally processed at high frame-per-second rates with very little differences between two consecutive frames, agent actions are repeated for frames by skipping frames. Consequently, the final sized observations the agent gets are built with the most recent game frames. The range of rewards in these games are also different and unbounded. Therefore, to facilitate the stability of learning, they are clipped to be in before they are provided to the agent. The game episodes are limited to last for k frames (k agent steps) at maximum.

V-B Settings and Procedure

We experiment with an extensive set of agents to be able to determine the most beneficial enhancements included in our algorithm. The student agent variants we compare in our experiments are as follows:

-

•

No Advising (NA): No form of action advising is employed, the agent relies on its RL algorithm only.

-

•

Early Advising (EA): The student asks for advice greedily until its budget runs out. There is no further utilisation of advice beyond their execution at the time of collection. This is a simple yet well-performing heuristic.

-

•

Random Advising (RA): The student asks for advice randomly with probability. This heuristic uses the intuition that spacing out requests may yield more diverse and information rich advice.

-

•

EA + Advice Reuse (AR): The agent employs the previously proposed advice reuse approach [13]. Advice is collected with early advising strategy, and the teacher is imitated with these advice. Then, advice are reused in place of the random exploration actions in approximately of the episodes.

-

•

AR + Automatic Threshold Tuning (AR+A): AR is combined with our automatic threshold tuning technique.

-

•

AR+A + Extended Reuse (AR+A+E): AR is combined with both our automatic threshold tuning technique and the extended reusing scheme.

-

•

Advice Imitation & Reuse (AIR): This agent mode incorporates all of our proposed enhancements (as detailed in Section IV). On top of AR+A+E, this mode also uses the imitation module’s uncertainty to drive the advice collection process instead of relying on early advising.

We test the agents in learning sessions with length of M steps (equals to M game frames due to frame skipping) with an advising budget of k that corresponds to only of the total number of steps in a session. At every kth step, the agents are evaluated in a separate set of episodes by having their action advising and exploration mechanisms disabled. The cumulative rewards obtained in these episodes are averaged and recorded as evaluation scores for that corresponding learning session step. This lets us measure the actual learning progress of the agents as the main performance metric.

The deep RL algorithm of the student agents is Double DQN with a neural network structure comprised of convolutional layers ( filters with a stride of followed by filters with a stride of followed by filters with a stride of ) and fully-connected layers with a single hidden layer ( units) and dueling stream output. For exploration, -greedy strategy with linearly decaying is adopted. The teacher agents are generated separately for each of the games prior to the experiments, by using the identical DQN algorithm and structure with the student. Even though the resulting agents are not necessarily at super-human levels achievable by DQN, they have competent policies that can achieve the evaluation scores of , , , , for Enduro, Freeway, Pong, Q*bert, Seaquest, respectively.

As we described in Section IV, our approach requires the student to be equipped with an additional behavioural cloning module that includes a neural network. We used the identical neural network structure to the student’s DQN model except for the dueling streams. Fully-connected layers of this network are enhanced with Dropout regularisation with a dropout rate of and the number of forward passes to measure the epistemic uncertainty via variance is set at .

The uncertainty threshold for AR is set as for every game. Determining a reasonable value for this parameter requires to access the tasks briefly, which we have performed prior to the experiments; even though this will not be reflected at the numerical results, it should be noted that this is a critical disadvantage of AR. The automatic threshold tuning percentile used in AR+A, AR+A+E, AIR is set as . This is a very straightforward hyperparameter to adjust compared to the (manual) uncertainty threshold itself and can potentially be valid in a wide variety of tasks. For the extensive reuse scheme in AR+A+E and AIR, we set and as and , respectively. We defined the annealing schedule to begin at kth step and last until Mth step. For the imitation triggering conditions in AIR, is set as k (samples) and is set as k (timesteps). Finally, the number of imitation network training iterations is set as k for the initial one (applies to all modes but NA, EA and RA) and k for the periodic ones (only applies to AIR).

All of the aforementioned hyperparameters reported in this section are set empirically prior to the experiments and are kept the same across every game. The most significant ones among the unmentioned hyperparameters of the student’s learning components are presented in Table I.

| Hyperparameter name | Value |

|---|---|

| Discount factor | |

| Learning rate | |

| Minibatch size | |

| Replay memory min. size and capacity | k, k |

| Target network update period | |

| initial, final, decay steps | , , k |

| Learning rate | |

| Minibatch size |

VI Results

| Evaluation Score | Advice Reuse | |||

| Game | Mode | Final | Ratio () | Accuracy (%) |

| Enduro | NA | — | — | |

| EA | — | — | ||

| RA | — | — | ||

| AR | ||||

| ARA | ||||

| ARAE | ||||

| AIR | ||||

| Freeway | NA | — | — | |

| EA | — | — | ||

| RA | — | — | ||

| AR | ||||

| ARA | ||||

| ARAE | ||||

| AIR | ||||

| Pong | NA | — | — | |

| EA | — | — | ||

| RA | — | — | ||

| AR | ||||

| ARA | ||||

| ARAE | ||||

| AIR | ||||

| Q*bert | NA | — | — | |

| EA | — | — | ||

| RA | — | — | ||

| AR | ||||

| ARA | ||||

| ARAE | ||||

| AIR | ||||

| Seaquest | NA | — | — | |

| EA | — | — | ||

| RA | — | — | ||

| AR | ||||

| ARA | ||||

| ARAE | ||||

| AIR | ||||

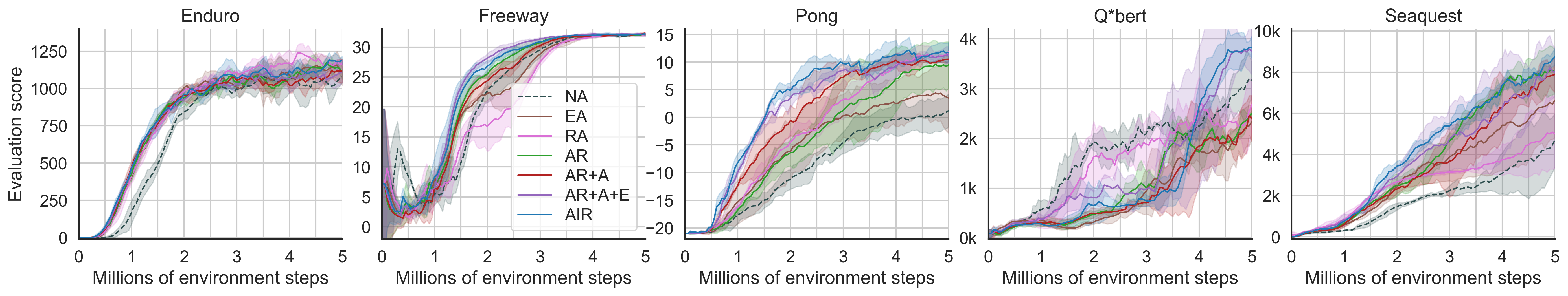

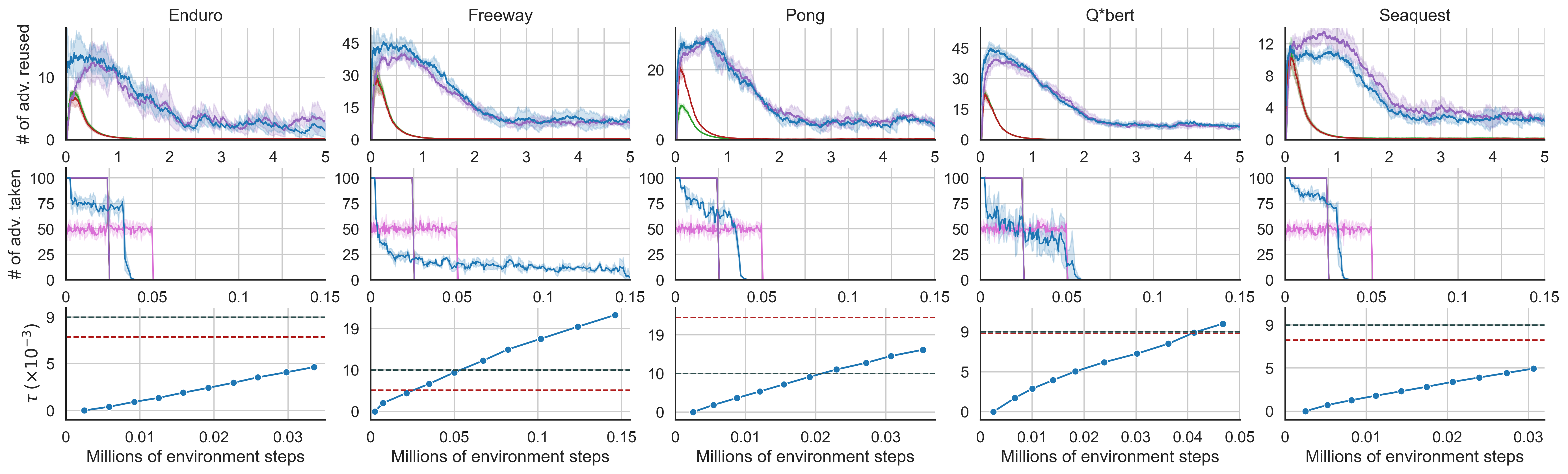

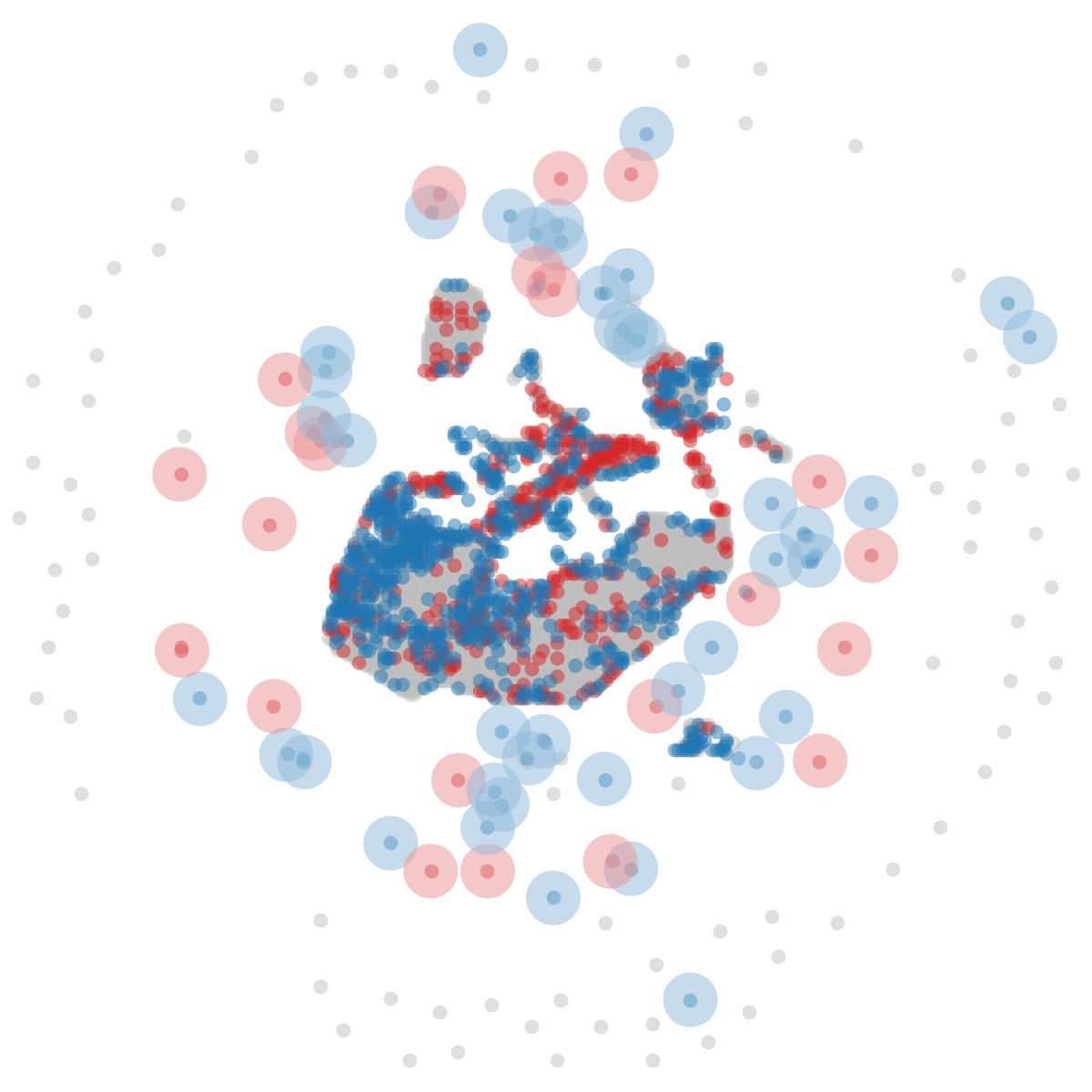

The results of our experiments in Enduro, Freeway, Pong, Q*bert and Seaquest are presented in several plots to let us analyse the performance of the student modes NA, EA, RA, AR, AR+A, AR+A+E, AIR in different aspects. Figure 2 contains the evaluation scores plots. In Figure 3, plots for the number of advice reuses per steps (top row) performed by AR, AR+A, AR+A+E, AIR; plots for the number of advice collections per steps performed by NA, RA, AR, AR+A, AR+A+E, AIR (middle row); plots for the values of the hyperparameter (uncertainty threshold) used by AR, AR+A, AR+A+E, AIR (bottom row) in a single run are shown. The shaded areas in these plots show the standard deviation across runs. The final evaluation scores, percentage of advice reuses in total number of environment steps as well as their accuracies are presented in Table II. Finally, in order to highlight the differences in the advice-collected state diversity of EA (identical collection strategy to AR, AR+A, AR+A+E), RA and AIR, these states from a single Pong run are visualised with the aid of UMAP [28] dimensionality reduction technique in Figure 4. Here, the scatter plot on the left compares AIR (blue) vs. EA (red) and the one on the right compares AIR (blue) vs. RA (pink); and the samples collected in common are shown in grey.

We first analyse the learning performances via evaluation scores. In Enduro, all methods but NA have a very similar learning speed and final scores, with AIR being slightly ahead of the rest. In Freeway, they all achieve nearly the same final scores, but they are distinguishable with small differences in learning speed where AR+A+E is on the top followed by other advanced student modes AIR, AR, AR+A. When we move to Pong, Q*bert and Seaquest, we finally see the student mode performances to be more distinctive. Even though the basic heuristics (RA and EA) show that a little number of advice from a competent policy can make substantial boosts on learning, these modes fall behind of those that employ advice reuse and fail to be a reliable choice, i.e. performing worse than NA in Freeway and Q*bert. Overall, the best mode AIR and the runner-up AR+A+E are ahead of all, with AR and AR+A following them.

Among the advice reuse approaches, we see that the most beneficial modification is the extended reuse schedule (+E) as it is highlighted by the difference between AR+A and AR+A+E. Defining such a schedule independently from the student’s RL exploration strategy involves using some extra hyperparameters, nevertheless, they are rather trivial to set arbitrarily. The trends in the advice reuse plots (Figure 3, top row) show how these schedules differ. The versions with +E (AIR and AR+A+E) yield around more reusing, which apparently plays an important role in the performance improvement. However, it is still not clear how to define the optimal reuse schedules.

Automatic threshold tuning (+A) also performs comparably, if not better, with the manual tuning approach (AR) as we can observe in the evaluation scores. Additionally, AR+A managed to achieve very similar reuse accuracies with AR; this also supports its success. When we examine the values, we see AR+A determined values that are close to the hand-tuned ones, except for the case in Pong where the difference is more significant. This reflected in reuse trend and evaluation performance, giving AR+A a very advantageous head start. These results support the idea that +A a far more preferable approach considering how problematic it can be to tune the sensitive threshold manually. For instance, if they were to deployed in some significantly different domains as they are, then we could potentially see AR+A coming far ahead of AR with a poorly tuned . Furthermore, the periodic imitation model updates incorporated in AIR makes +A an essential component. In the bottom row of Figure 3, we also show how AIR changes its values over time as it collects more advice samples and updates its imitation model accordingly. Clearly, it is very difficult to manage these changes manually.

Finally, we also see that collecting advice by utilising the imitation model’s uncertainty (as it is done by AIR) contributes to the agent’s learning. When we look at the advice collection plots, we see different types of behaviours: early collection (EA, AR, AR+A, AR+A+E), random collection (RA), and AIR. Even though they seem to be occurring mostly in the same time windows, AIR does this in an uncertainty-aware fashion; hence the decreasing collection rate over time. Freeway is the case in which AIR is very selective. This is possibly due to the fact that in Freeway, the agent can traverse only a limited space which consequently reduces the diversity of the acquired observations. Nonetheless, this is not reflected in the evaluation scores as dramatically due to this game being rather trivial to solve. Another interesting observation is made by analysing the advice collected states in Seaquest by AIR, EA (which is identical to AR, AR+A, AR+A+E in terms of collection strategy), RA in a reduced dimensionality as seen in Figure 4. We chose Seaquest since it is the game where AIR is significantly ahead of AR+A+E, which can be credited to AIR’s only difference from it (collection strategy). We also include RA here mainly because it can potentially do better in acquiring different samples than EA. Here, the large circles denote the outliers (diverse samples) that are only covered only by either AIR, EA or RA. These are the important bits to pay attention to and compare. As it can be seen, AIR yields larger coverage, i.e. more diverse dataset of advice, in both cases against EA and RA collection strategies.

VII Conclusions and Future Work

In this study, we proposed an automatic threshold tuning technique, an extended advice reusing schedule and an imitation model uncertainty-based advice collection procedure by extending the previously proposed advice reusing algorithm. We also developed a combined approach by incorporating these components, that is able to collect a diverse set of advice to build a more widely applicable advice imitation model for advice reuse.

The experiments in different Atari games from the ALE domain have shown that our enhancements provide significant improvements over the baseline advice reuse method as well as the basic action advising heuristics. First, being able to tune the uncertainty thresholds on-the-fly was observed to yield the learning performance of the carefully tuned threshold, which require unrealistic access to the tasks and extra effort to be adjusted. Secondly, we found that having the advice reusing process span across a larger portion of the learning session rather than just the steps that involve random exploration can yield superior performance. However, defining the best schedule for the maximum advice utilisation efficiency remains to be an open question. Thirdly, the uncertainty-driven advice collection method was found to be successful way to improve the imitation module’s dataset diversity. Nevertheless, periodic training process can be improved with better incremental learning techniques to make a better use of this simultaneous collection-imitation idea. Finally, our unified algorithm demonstrated state-of-the-art performance across Atari games by performing either on-par or better than its closest competitors.

The future extensions of this work can involve experimenting with more principled Policy Reuse approaches in the literature to further improve the advice reuse strategy. Furthermore, it will be a worthwhile study to make the teacher imitation better at learning online from the new samples it acquires. Finally, even though it is in the core motivation of our approach not to access and modify the agent’s RL components, it will be beneficial to investigate Learning from Demonstrations techniques and their possible contributions in our framework.

Acknowledgment

This research utilised Queen Mary’s Apocrita HPC facility, supported by QMUL Research-IT. http://doi.org/10.5281/zenodo.438045

References

- [1] Oriol Vinyals et al. “Grandmaster level in StarCraft II using multi-agent reinforcement learning” In Nature 575.7782, 2019, pp. 350–354

- [2] Julian Schrittwieser et al. “Mastering Atari, Go, Chess and Shogi by Planning with a Learned Model” In CoRR abs/1911.08265, 2019

- [3] OpenAI et al. “Solving Rubik’s Cube with a Robot Hand” In CoRR abs/1910.07113, 2019

- [4] Adrien Ali Taı̈ga, William Fedus, Marlos C. Machado, Aaron C. Courville and Marc G. Bellemare “Benchmarking Bonus-Based Exploration Methods on the Arcade Learning Environment” In CoRR abs/1908.02388, 2019

- [5] Dean Pomerleau “Efficient Training of Artificial Neural Networks for Autonomous Navigation” In Neural Com. 3.1, 1991, pp. 88–97

- [6] Stefan Schaal “Learning from Demonstration” In Advances in Neural Information Processing Systems 9, NIPS, Denver, CO, USA, December 2-5, 1996 MIT Press, 1996, pp. 1040–1046

- [7] Todd Hester et al. “Deep Q-learning From Demonstrations” In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, (AAAI-18) AAAI Press, 2018, pp. 3223–3230

- [8] Fernando Fernández and Manuela M. Veloso “Probabilistic policy reuse in a reinforcement learning agent” In 5th International Joint Conference on Autonomous Agents and Multiagent Systems (AAMAS 2006), Hakodate, Japan, May 8-12, 2006 ACM, 2006, pp. 720–727

- [9] Lisa Torrey and Matthew E. Taylor “Teaching on a budget: agents advising agents in reinforcement learning” In International conference on Autonomous Agents and Multi-Agent Systems, AAMAS ’13, Saint Paul, MN, USA, May 6-10, 2013, 2013, pp. 1053–1060

- [10] Ercüment Ilhan, Jeremy Gow and Diego Pérez-Liébana “Teaching on a Budget in Multi-Agent Deep Reinforcement Learning” In IEEE Conference on Games, CoG 2019, London, United Kingdom, August 20-23, 2019, 2019, pp. 1–8

- [11] Felipe Leno Silva, Pablo Hernandez-Leal, Bilal Kartal and Matthew E. Taylor “Uncertainty-Aware Action Advising for Deep Reinforcement Learning Agents” In The Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020 AAAI Press, 2020, pp. 5792–5799

- [12] Ercument Ilhan, Jeremy Gow and Diego Perez-Liebana “Student-Initiated Action Advising via Advice Novelty”, 2021 arXiv:2010.00381

- [13] Ercüment Ilhan, Jeremy Gow and Diego Perez-Liebana “Action Advising with Advice Imitation in Deep Reinforcement Learning” In Proceedings of the 20th Conference on Autonomous Agents and Multi-Agent Systems, AAMAS 2021, May 3-7, 2021 IFAAMAS, 2021

- [14] Matthew E. Taylor, Nicholas Carboni, Anestis Fachantidis, Ioannis P. Vlahavas and Lisa Torrey “Reinforcement learning agents providing advice in complex video games” In Connect. Sci. 26.1, 2014, pp. 45–63

- [15] Matthieu Zimmer, Paolo Viappiani and Paul Weng “Teacher-Student Framework: A Reinforcement Learning Approach” In AAMAS Workshop Autonomous Robots and Multirobot Systems, 2014

- [16] Ofra Amir, Ece Kamar, Andrey Kolobov and Barbara J. Grosz “Interactive Teaching Strategies for Agent Training” In Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence, IJCAI 2016 IJCAI/AAAI Press, 2016, pp. 804–811

- [17] Felipe Leno Silva, Ruben Glatt and Anna Helena Reali Costa “Simultaneously Learning and Advising in Multiagent Reinforcement Learning” In Proceedings of the 16th Conference on Autonomous Agents and Multi-Agent Systems, AAMAS 2017 ACM, 2017, pp. 1100–1108

- [18] Changxi Zhu, Yi Cai, Ho-fung Leung and Shuyue Hu “Learning by Reusing Previous Advice in Teacher-Student Paradigm” In Proceedings of the 19th International Conference on Autonomous Agents and Multiagent Systems, AAMAS ’20 International Foundation for Autonomous AgentsMultiagent Systems, 2020, pp. 1674–1682

- [19] Si-An Chen, Voot Tangkaratt, Hsuan-Tien Lin and Masashi Sugiyama “Active Deep Q-learning with Demonstration” In CoRR abs/1812.02632, 2018

- [20] Yuri Burda, Harrison Edwards, Amos J. Storkey and Oleg Klimov “Exploration by Random Network Distillation” In CoRR abs/1810.12894, 2018

- [21] Yarin Gal and Zoubin Ghahramani “Dropout as a Bayesian Approximation: Representing Model Uncertainty in Deep Learning” In Proceedings of the 33nd International Conference on Machine Learning, ICML 2016, New York City, NY, USA, June 19-24, 2016 48, 2016, pp. 1050–1059

- [22] Richard S Sutton and Andrew G Barto “Reinforcement learning: An introduction” MIT press, 2018

- [23] Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Alex Graves, Ioannis Antonoglou, Daan Wierstra and Martin A. Riedmiller “Playing Atari with Deep Reinforcement Learning” In CoRR abs/1312.5602, 2013

- [24] Matteo Hessel, Joseph Modayil, Hado Hasselt, Tom Schaul, Georg Ostrovski, Will Dabney, Dan Horgan, Bilal Piot, Mohammad Gheshlaghi Azar and David Silver “Rainbow: Combining Improvements in Deep Reinforcement Learning” In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, (AAAI-18) AAAI Press, 2018, pp. 3215–3222

- [25] Ziyu Wang, Tom Schaul, Matteo Hessel, Hado Hasselt, Marc Lanctot and Nando Freitas “Dueling Network Architectures for Deep Reinforcement Learning” In Proceedings of the 33nd International Conference on Machine Learning, ICML 2016 48, JMLR Workshop and Conference Proceedings, 2016, pp. 1995–2003

- [26] Hado Hasselt, Arthur Guez and David Silver “Deep Reinforcement Learning with Double Q-Learning” In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence AAAI Press, 2016, pp. 2094–2100

- [27] Marc G. Bellemare, Yavar Naddaf, Joel Veness and Michael Bowling “The Arcade Learning Environment: An Evaluation Platform for General Agents” In J. Artif. Intell. Res. 47, 2013, pp. 253–279

- [28] Leland McInnes and John Healy “UMAP: Uniform Manifold Approximation and Projection for Dimension Reduction” In CoRR abs/1802.03426, 2018