Learning the Structure and Parameters of Large-Population Graphical Games from Behavioral Data

Abstract

We consider learning, from strictly behavioral data, the structure and parameters of linear influence games (LIGs), a class of parametric graphical games introduced by Irfan and Ortiz [2014]. LIGs facilitate causal strategic inference (CSI): Making inferences from causal interventions on stable behavior in strategic settings. Applications include the identification of the most influential individuals in large (social) networks. Such tasks can also support policy-making analysis. Motivated by the computational work on LIGs, we cast the learning problem as maximum-likelihood estimation (MLE) of a generative model defined by pure-strategy Nash equilibria (PSNE). Our simple formulation uncovers the fundamental interplay between goodness-of-fit and model complexity: good models capture equilibrium behavior within the data while controlling the true number of equilibria, including those unobserved. We provide a generalization bound establishing the sample complexity for MLE in our framework. We propose several algorithms including convex loss minimization (CLM) and sigmoidal approximations. We prove that the number of exact PSNE in LIGs is small, with high probability; thus, CLM is sound. We illustrate our approach on synthetic data and real-world U.S. congressional voting records. We briefly discuss our learning framework’s generality and potential applicability to general graphical games.

1 Introduction

Game theory has become a central tool for modeling multi-agent systems in AI. Non-cooperative game theory has been considered as the appropriate mathematical framework in which to formally study strategic behavior in multi-agent scenarios.111See, e.g., the survey of Shoham [2008] and the books of Nisan et al. [2007] and Shoham and Leyton-Brown [2009] for more information. The core solution concept of Nash equilibrium (NE) [Nash, 1951] serves a descriptive role of the stable outcome of the overall behavior of systems involving self-interested individuals interacting strategically with each other in distributed settings for which no direct global control is possible. NE is also often used in predictive roles as the basis for what one might call causal strategic inference, i.e., inferring the results of causal interventions on stable actions/behavior/outcomes in strategic settings (See, e.g., Ballester et al. 2004, 2006, Heal and Kunreuther 2003, 2006, 2007, Kunreuther and Michel-Kerjan 2007, Ortiz and Kearns 2003, Kearns 2005, Irfan and Ortiz 2014, and the references therein). Needless to say, the computation and analysis of NE in games is of significant interest to the computational game-theory community within AI.

The introduction of compact representations to game theory over the last decade have extended computational/algorithmic game theory’s potential for large-scale, practical applications often encountered in the real-world. For the most part, such game model representations are analogous to probabilistic graphical models widely used in machine learning and AI.222The fundamental property such compact representation of games exploit is that of conditional independence: each player’s payoff function values are determined by the actions of the player and those of the player’s neighbors only, and thus are conditionally (payoff) independent of the actions of the non-neighboring players, given the action of the neighboring players. Introduced within the AI community about a decade ago, graphical games [Kearns et al., 2001] constitute an example of one of the first and arguably one of the most influential graphical models for game theory.333Other game-theoretic graphical models include game networks [La Mura, 2000], multi-agent influence diagrams (MAIDs) [Koller and Milch, 2003], and action-graph games [Jiang and Leyton-Brown, 2008].

There has been considerable progress on problems of computing classical equilibrium solution concepts such as NE and correlated equilibria (CE) [Aumann, 1974] in graphical games (see, e.g., Kearns et al. 2001, Vickrey and Koller 2002, Ortiz and Kearns 2003, Blum et al. 2006, Kakade et al. 2003, Papadimitriou and Roughgarden 2008, Jiang and Leyton-Brown 2011 and the references therein). Indeed, graphical games played a prominent role in establishing the computational complexity of computing NE in general normal-form games (see, e.g., Daskalakis et al. 2009 and the references therein).

An example of a recent computational application of non-cooperative game-theoretic graphical modeling and causal strategic inference (CSI) that motivates the current paper is the work of Irfan and Ortiz [2014]. They proposed a new approach to the study of influence and the identification of the “most influential” individuals (or nodes) in large (social) networks. Their approach is strictly game-theoretic in the sense that it relies on non-cooperative game theory and the central concept of pure-strategy Nash equilibria (PSNE)444In this paper, because we concern ourselves primarily with PSNE, whenever we use the term “equilibrium” or “equilibria” without qualification, we mean PSNE. as an approximate predictor of stable behavior in strategic settings, and, unlike other models of behavior in mathematical sociology,555Some of these models have recently gained interest and have been studied within computer science, specially those related to diffusion or contagion processes (see, e.g., Granovetter 1978, Morris 2000, Domingos and Richardson 2001, Domingos 2005, Even-Dar and Shapira 2007). it is not interested and thus avoids explicit modeling of the complex dynamics by which such stable outcomes could have arisen or could be achieved. Instead, it concerns itself with the “bottom-line” end-state stable outcomes (or steady state behavior). Hence, the proposed approach provides an alternative to models based on the diffusion of behavior through a social network (See Kleinberg 2007 for an introduction and discussion targeted to computer scientists, and further references).

The underlying assumption for most work in computational game theory that deals with algorithms for computing equilibrium concepts is that the games under consideration are already available, or have been “hand-designed” by the analyst. While this may be possible for systems involving a handful of players, it is in general impossible in systems with at least tens of agent entities, if not more, as we are interested in this paper.666Of course, modeling and hand-crafting games for systems with many agents may be possible if the system has particular structure one could exploit. To give an example, this would be analogous to how one can exploit the probabilistic structure of HMMs to deal with long stochastic processes in a representationally succinct and computationally tractable way. Yet, we believe it is fair to say that such systems are largely/likely the exception in real-world settings in practice. For instance, in their paper, Irfan and Ortiz [2014] propose a class of games, called influence games. In particular, they concentrate on linear influence games (LIGs), and, as briefly mentioned above, study a variety of computational problems resulting from their approach, assuming such games are given as input.

Research in computational game theory has paid relatively little attention to the problem of learning (both the structure and parameters of) graphical games from data. Addressing this problem is essential to the development, potential use and success of game-theoretic models in practical applications. Indeed, we are beginning to see an increase in the availability of data collected from processes that are the result of deliberate actions of agents in complex system. A lot of this data results from the interaction of a large number of individuals, being not only people (i.e., individual human decision-makers), but also companies, governments, groups or engineered autonomous systems (e.g., autonomous trading agents), for which any form of global control is usually weak. The Internet is currently a major source of such data, and the smart grid, with its trumpeted ability to allow individual customers to install autonomous control devices and systems for electricity demand, will likely be another one in the near future.

In this paper, we investigate in considerable technical depth the problem of learning LIGs from strictly behavioral data: We do not assume the availability of utility, payoff or cost information in the data; the problem is precisely to infer that information from just the joint behavior collected in the data, up to the degree needed to explain the joint behavior itself. We expect that, in most cases, the parameters quantifying a utility function or best-response condition are unavailable and hard to determine in real-world settings. The availability of data resulting from the observation of an individual public behavior is arguably a weaker assumption than the availability of individual utility observations, which are often private. In addition, we do not assume prior knowledge of the conditional payoff/utility independence structure as represented by the game graph.

Motivated by the work of Irfan and Ortiz [2014] on a strictly non-cooperative game-theoretic approach to influence and strategic behavior in networks, we present a formal framework and design algorithms for learning the structure and parameters of LIGs with a large number of players. We concentrate on data about what one might call “the bottom line:” i.e., data about“end-states”, “steady-states” or final behavior as represented by possibly noisy samples of joint actions/pure-strategies from stable outcomes, which we assume come from a hidden underlying game. Thus, we do not use, consider or assume available any temporal data about the detailed behavioral dynamics. In fact, the data we consider does not contain the dynamics that might have possibly led to the potentially stable joint-action outcome! Since scalability is one of our main goals, we aim to propose methods that are polynomial-time in the number of players.

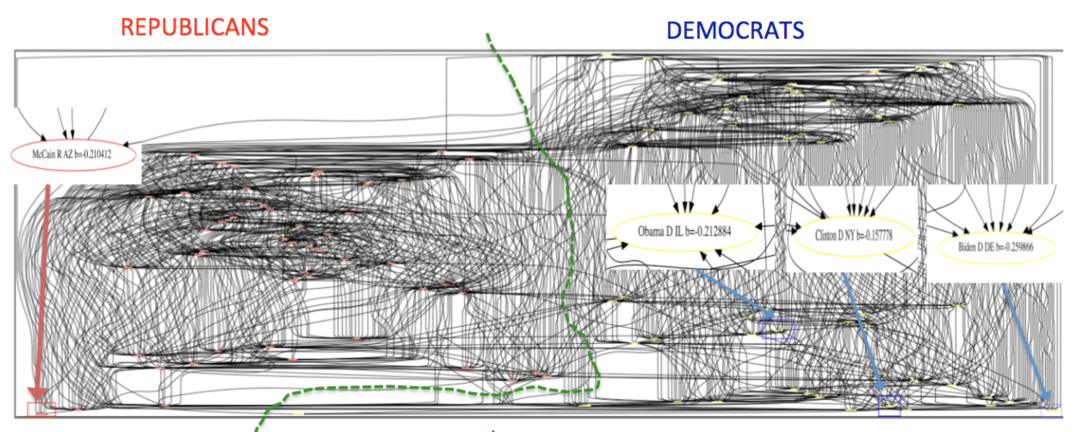

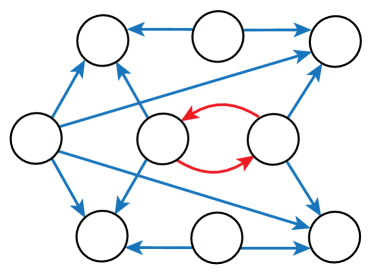

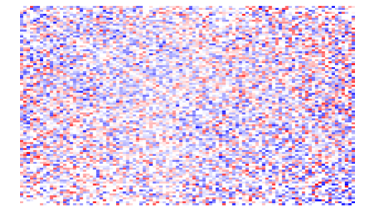

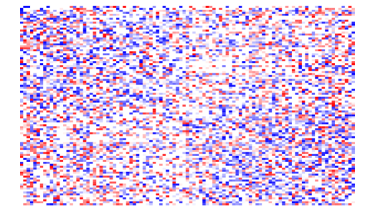

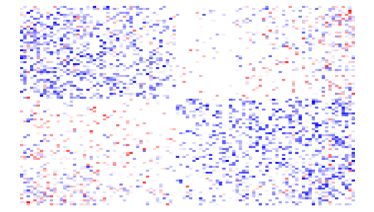

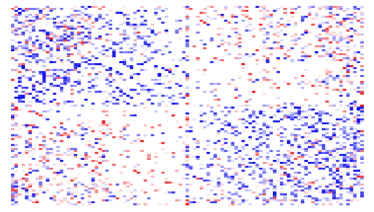

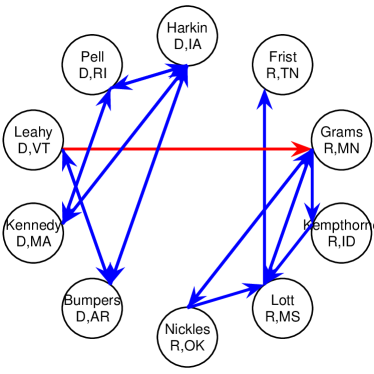

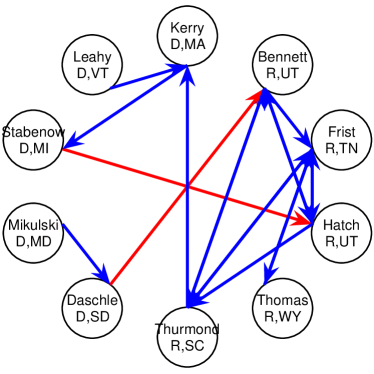

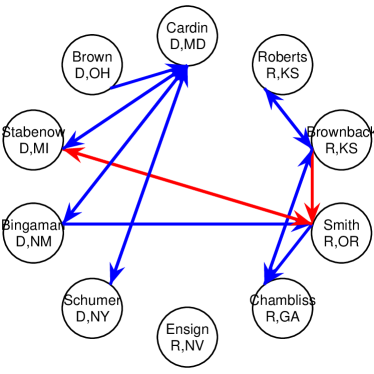

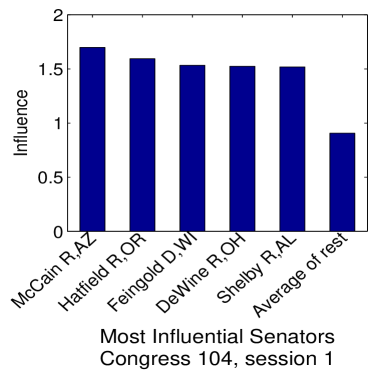

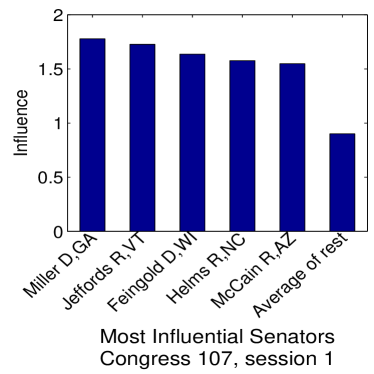

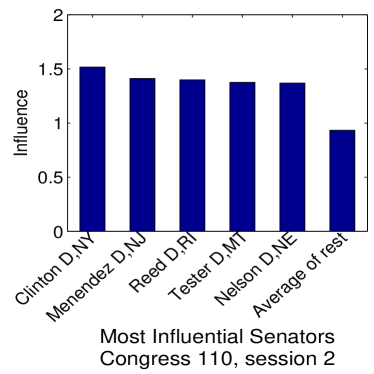

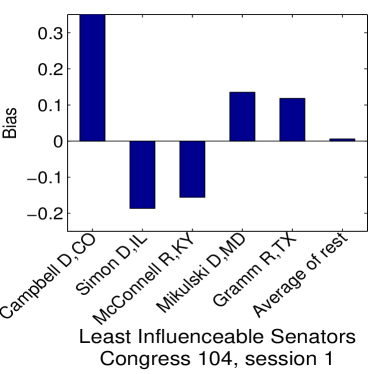

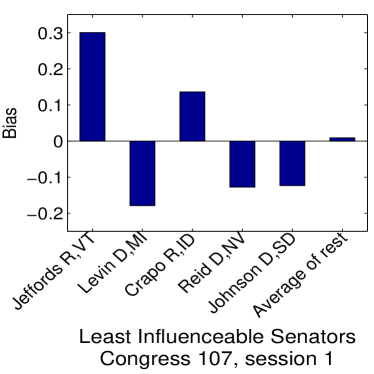

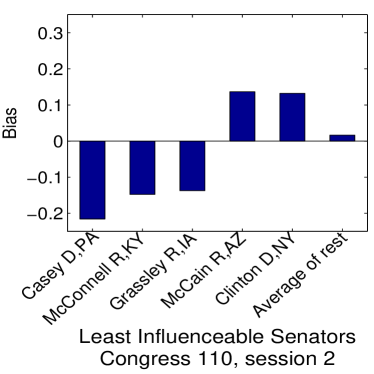

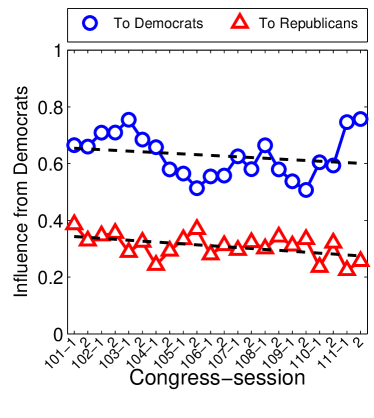

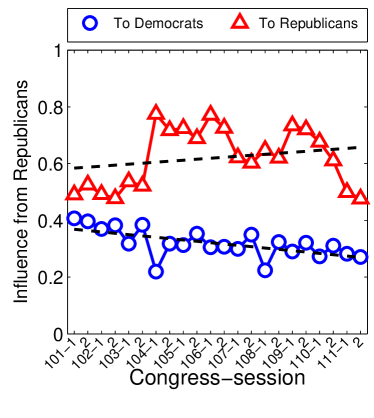

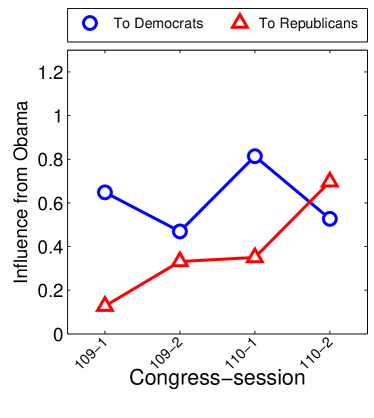

Given that LIGs belong to the class of 2-action graphical games [Kearns et al., 2001] with parametric payoff functions, we first needed to deal with the relative dearth of work on the broader problem of learning general graphical games from purely behavioral data. Hence, in addressing this problem, while inspired by the computational approach of Irfan and Ortiz [2014], the learning problem formulation we propose is in principle applicable to arbitrary games (although, again, the emphasis is on the PSNE of such games). In particular, we introduce a simple statistical generative mixture model, built “on top of” the game-theoretic model, with the only objective being to capture noise in the data. Despite the simplicity of the generative model, we are able to learn games from U.S. congressional voting records, which we use as a source of real-world behavioral data, that, as we will illustrate, seem to capture interesting, non-trivial aspects of the U.S. congress. While such models learned from real-world data are impossible to validate, we argue that there exists a considerable amount of anecdotal evidence for such aspects as captured by the models we learned. Figure 1 provides a brief illustration. (Should there be further need for clarification as to the why we present this figure, please see Footnote 14.)

As a final remark, given that LIGs constitute a non-trivial sub-class of parametric graphical games, we view our work as a step in the direction of addressing the broader problem of learning general graphical games with a large number of players from strictly behavioral data. We also hope our work helps to continue to bring and increase attention from the machine-learning community to the problem of inferring games from behavioral data (in which we attempt to learn a game that would “rationalize” players’ observed behavior).777This is a type of problem arising from game theory and economics that is different from the problem of learning in games (in which the focus is the study of how individual players learn to play a game by a sequence of repeated interactions), a more matured and perhaps better known problem within machine learning (see, e.g., Fudenberg and Levine 1999).

1.1 A Framework for Learning Games: Desiderata

The following list summarizes the discussion above and guides our choices in our pursuit of a machine-learning framework for learning game-theoretic graphical models from strictly behavioral data.

-

•

The learning algorithm

-

–

must output an LIG (which is a special type of graphical game); and

-

–

should be practical and tractably deal with a large number of players (typically in the hundreds, and certainly at least ).

-

–

-

•

The learned model objective is the “bottom line” in the sense that the basis for its evaluation is the prediction of end-state (or steady-state) joint decision-making behavior, and not the temporal behavioral dynamics that might have lead to end-state or the stable steady-state joint behavior.888Note that we are in no way precluding dynamic models as a way to end-state prediction. But there is no inherent need to make any explicit attempt or effort to model or predict the temporal behavioral dynamics that might have lead to end-state or the stable steady-state joint behavior, including pre-play “cheap talk,” which are often overly complex processes. (See Appendix A.1 for further discussion.)

-

•

The learning framework

-

–

would only have available strictly behavioral data on actual decisions/actions taken. It cannot require or use any kind of payoff-related information.

-

–

should be agnostic as to the type or nature of the decision-maker and does not assume each player is a single human. Players can be institutions or governments, or associated with the decision-making process of a group of individuals representing, e.g., a company (or sub-units, office sites within a company, etc.), a nation state (like in the UN, NATO, etc.), or a voting district. In other words, the recorded behavioral actions of each player may really be a representative of larger entities or groups of individuals, not necessarily a single human.

-

–

must provide computationally efficient learning algorithm with provable guarantees: worst-case polynomial running time in the number of players.

-

–

should be “data efficient” and provide provable guarantees on sample complexity (given in terms of “generalization” bounds).

-

–

1.2 Technical Contributions

While our probabilistic model is inspired by the concept of equilibrium from game theory, our technical contributions are not in the field of game theory nor computational game theory. Our technical contributions and the tools that we use are the ones in classical machine learning.

Our technical contributions include a novel generative model of behavioral data in Section 4 for general games. Motivated by the LIGs and the computational game-theoretic framework put forward by Irfan and Ortiz [2014], we formally define “identifiability” and “triviality” within the context of non-cooperative graphical games based on PSNE as the solution concept for stable outcomes in large strategic systems. We provide conditions that ensure identifiability among non-trivial games. We then present the maximum-likelihood estimation (MLE) problem for general (non-trivial identifiable) games. In Section 5, we show a generalization bound for the MLE problem as well as an upper bound of the functional/strategic complexity (i.e., analogous to the“VC-dimension” in supervised learning) of LIGs. In Section 6, we provide technical evidence justifying the approximation of the original problem by maximizing the number of observed equilibria in the data as suitable for a hypothesis-space of games with small true number of equilibria. We then present our convex loss minimization approach and a baseline sigmoidal approximation for LIGs. For completeness, we also present exhaustive search methods for both general games as well as LIGs. In Section 7, we formally define the concept of absolute-indifference of players and show that our convex loss minimization approach produces games in which all players are non-absolutely-indifferent. We provide a bound which shows that LIGs have small true number of equilibria with high probability.

2 Related Work

We provide a brief summary overview of previous work on learning games here, and delay discussion of the work presented below until after we formally present our model; this will provide better context and make “comparing and contrasting” easier for those interested, without affecting expert readers who may want to get to the technical aspects of the paper without much delay.

Table 1 constitutes our best attempt at a simple visualization to fairly present the differences and similarities of previous approaches to modeling behavioral data within the computational game-theory community in AI.

| Reference | Class | Needs | Learns | Learns | Guarant. | Equil. | Dyn. | Num. |

| Payoff | Param. | Struct. | Concept | Agents | ||||

| Wright and Leyton-Brown [2010] | NF | Y | Na | - | N | QRE | N | 2 |

| Wright and Leyton-Brown [2012] | NF | Y | Na | - | N | QRE | N | 2 |

| Gao and Pfeffer [2010] | NF | Y | Y | - | N | QRE | N | 2 |

| Vorobeychik et al. [2007] | NF | Y | Y | - | N | MSNE | N | 2-5 |

| Ficici et al. [2008] | NF | Y | Y | - | N | MSNE | N | 10-200 |

| Duong et al. [2008] | NGT | Y | Na | N | N | - | N | 4,10 |

| Duong et al. [2010] | NGTb | Y | Nc | N | N | - | Yd | 10 |

| Duong et al. [2012] | NGTb | Y | Nc | Ye | N | - | Yd | 36 |

| Duong et al. [2009] | GG | Y | Y | Yf | N | PSNE | N | 2-13 |

| Kearns and Wortman [2008] | NGT | N | - | - | Y | - | Y | 100 |

| Ziebart et al. [2010] | NF | N | Y | - | N | CE | N | 2-3 |

| Waugh et al. [2011] | NF | N | Y | - | Y | CE | Y | 7 |

| Our approach | GG | N | Y | Yg | Y | PSNE | N | 100g |

The research interest of previous work varies in what they intend to capture in terms of different aspects of behavior (e.g., dynamics, probabilistic vs. strategic) or simply different settings/domains (i.e., modeling “real human behavior,” knowledge of achieved payoff or utility, etc.).

With the exception of Ziebart et al. [2010], Waugh et al. [2011], Kearns and Wortman [2008], previous methods assume that the actions as well as corresponding payoffs (or noisy samples from the true payoff function) are observed in the data. Our setting largely differs from Ziebart et al. [2010], Kearns and Wortman [2008] because of their focus on system dynamics, in which future behavior is predicted from a sequence of past behavior. Kearns and Wortman [2008] proposed a learning-theory framework to model collective behavior based on stochastic models.

Our problem is clearly different from methods in quantal response models [McKelvey and Palfrey, 1995, Wright and Leyton-Brown, 2010, 2012] and graphical multiagent models (GMMs) [Duong et al., 2008, 2010] that assume known structure and observed payoffs. Duong et al. [2012] learns the structure of games that are not graphical, i.e., the payoff depends on all other players. Their approach also assumes observed payoff and consider a dynamic consensus scenario, where agents on a network attempt to reach a unanimous vote. In analogy to voting, we do not assume the availability of the dynamics (i.e., the previous actions) that led to the final vote. They also use fixed information on the conditioning sets of neighbors during their search for graph structure. We also note that the work of Vorobeychik et al. [2007], Gao and Pfeffer [2010], Ziebart et al. [2010] present experimental validation mostly for 2 players only, 7 players in Waugh et al. [2011] and up to 13 players in Duong et al. [2009].

In several cases in previous work, researchers define probabilistic models using knowledge of the payoff functions explicitly (i.e., a Gibbs distribution with potentials that are functions of the players payoffs, regrets, etc.) to model joint behavior (i.e., joint pure-strategies); see, e.g., Duong et al. [2008, 2010, 2012], and to some degree also Wright and Leyton-Brown [2010, 2012]. It should be clear to the reader that this is not the same as our generative model, which is defined directly on the PSNE (or stable outcomes) of the game, which the players’ payoffs determine only indirectly.

In contrast, in this paper, we assume that the joint actions are the only observable information and that both the game graph structure and payoff functions are unknown, unobserved and unavailable. We present the first techniques for learning the structure and parameters of a non-trivial class of large-population graphical games from joint actions only. Furthermore, we present experimental validation in games of up to players. Our convex loss minimization approach could potentially be applied to larger problems since it has polynomial time complexity in the number of players.

2.1 On Learning Probabilistic Graphical Models

There has been a significant amount of work on learning the structure of probabilistic graphical models from data. We mention only a few references that follow a maximum likelihood approach for Markov random fields [Lee et al., 2007], bounded tree-width distributions [Chow and Liu, 1968, Srebro, 2001], Ising models [Wainwright et al., 2007, Banerjee et al., 2008, Höfling and Tibshirani, 2009], Gaussian graphical models [Banerjee et al., 2006], Bayesian networks [Guo and Schuurmans, 2006, Schmidt et al., 2007b] and directed cyclic graphs [Schmidt and Murphy, 2009].

Our approach learns the structure and parameters of games by maximum likelihood estimation on a related probabilistic model. Our probabilistic model does not fit into any of the types described above. Although a (directed) graphical game has a directed cyclic graph, there is a semantic difference with respect to graphical models. Structure in a graphical model implies a factorization of the probabilistic model. In a graphical game, the graph structure implies strategic dependence between players, and has no immediate probabilistic implication. Furthermore, our general model differs from Schmidt and Murphy [2009] since our generative model does not decompose as a multiplication of potential functions.

Finally, it is very important to note that our specific aim is to model behavioral data that is strategic in nature. Hence, our modeling and learning approach deviates from those for probabilistic graphical models which are of course better suited for other types of data, mostly probabilistic in nature (i.e., resulting from a fixed underlying probability distribution). As a consequence, it is also very important to keep in mind that our work is not in competition with the work in probabilistic graphical models, and is not meant to replace it (except in the context of data sets collected from complex strategic behavior just mentioned). Each approach has its own aim, merits and pitfalls in terms of the nature of data sets that each seeks to model. We return to this point in Section 8 (Experimental Results).

2.2 On Linear Threshold Models and Econometrics

Irfan and Ortiz [2014] introduced LIGs in the AI community, showed that such games are useful, and addressed a variety of computational problems, including the identification of most influential senators. The class of LIGs is related to the well-known linear threshold model (LTM) in sociology [Granovetter, 1978], recently very popular within the social network and theoretical computer science community [Kleinberg, 2007].999López-Pintado and Watts [2008] also provide an excellent summary of the various models in this area of mathematical social science. Irfan and Ortiz [2014] discusses linear threshold models in depth; we briefly discuss them here for self-containment. LTMs are usually studied as the basis for some kind of diffusion process. A typical problem is the identification of most influential individuals in a social network. An LTM is not in itself a game-theoretic model and, in fact, Granovetter himself argues against this view in the context of the setting and the type of questions in which he was most interested [Granovetter, 1978]. Our reading of the relevant literature suggests that subsequent work on LTMs has not taken a strictly game-theoretic view either. The problem of learning mathematical models of influence from behavioral data has just started to receive attention. There has been a number of articles in the last couple of years addressing the problem of learning the parameters of a variety of diffusion models of influence [Saito et al., 2008, 2009, 2010, Goyal et al., 2010, Gomez Rodriguez et al., 2010, Cao et al., 2011].101010Often learning consists of estimating the threshold parameter from data given as temporal sequences from“traces” or “action logs.” Sometimes the “influence weights” are estimated assuming a given graph, and almost always the weights are assumed positive and estimated as “probabilities of influence.” For example, Saito et al. [2010] considers a dynamic (continuous time) LTM that has only positive influence weights and a randomly generated threshold value. Cao et al. [2011] uses active learning to estimate the threshold values of an LTM leading to a maximum spread of influence.

Our model is also related to a particular model of discrete choice with social interactions in econometrics (see, e.g. Brock and Durlauf 2001). The main difference is that we take a strictly non-cooperative game-theoretic approach within the classical “static”/one-shot game framework and do not use a random utility model. We follow the approach of Irfan and Ortiz [2014] who takes a strictly non-cooperative game-theoretic approach within the classical “static”/one-shot game framework, and thus we do not use a random utility model. In addition, we do not make the assumption of rational expectations, which in the context of models of discrete choice with social interactions essentially implies the assumption that all players use exactly the same mixed strategy.111111A formal definition of “rational expectations” is beyond the scope of this paper. We refer the reader to the early part of the article by Brock and Durlauf [2001] where they explain why assuming rational expectations leads to the conclusion that all players use exactly the same mixed strategy. That is the relevant part of that work to ours.

3 Background: Game Theory and Linear Influence Games

In classical game-theory (see, e.g. Fudenberg and Tirole 1991 for a textbook introduction), a normal-form game is defined by a set of players (e.g., we can let if there are players), and for each player , a set of actions, or pure-strategies , and a payoff function mapping the joint actions of all the players, given by the Cartesian product , to a real number. In non-cooperative game theory we assume players are greedy, rational and act independently, by which we mean that each player always want to maximize their own utility, subject to the actions selected by others, irrespective of how the optimal action chosen help or hurt others.

A core solution concept in non-cooperative game theory is that of an Nash equilibrium. A joint action is a pure-strategy Nash equilibrium (PSNE) of a non-cooperative game if, for each player , ; that is, constitutes a mutual best-response, no player has any incentive to unilaterally deviate from the prescribed action , given the joint action of the other players in the equilibrium. In what follows, we denote a game by , and the set of all pure-strategy Nash equilibria of by121212Because this paper concerns mostly PSNE, we denote the set of PSNE of game as to simplify notation.

A (directed) graphical game is a game-theoretic graphical model [Kearns et al., 2001]. It provides a succinct representation of normal-form games. In a graphical game, we have a (directed) graph in which each node in corresponds to a player in the game. The interpretation of the edges/arcs of is that the payoff function of player is only a function of the set of parents/neighbors in (i.e., the set of players corresponding to nodes that point to the node corresponding to player in the graph). In the context of a graphical game, we refer to the ’s as the local payoff functions/matrices.

Linear influence games (LIGs) [Irfan and Ortiz, 2014] are a sub-class of -action graphical games with parametric payoff functions. For LIGs, we assume that we are given a matrix of influence weights , with , and a threshold vector . For each player , we define the influence function and the payoff function . We further assume binary actions: for all . The best response of player to the joint action of the other players is defined as

Intuitively, for any other player , we can think of as a weight parameter quantifying the “influence factor” that has on , and we can think of as a threshold parameter quantifying the level of “tolerance” that player has for playing .131313As we formally/mathematically define here, LIGs are -action graphical games with linear-quadratic payoff functions. Given our main interest in this paper on the PSNE solution concept, for the most part, we simply view LIGs as compact representations of the PSNE of graphical games that the algorithms of Irfan and Ortiz [2014] use for CSI. (This is in contrast to a perhaps more natural, “intuitive” but still informal description/interpretation one may provide for instructive/pedagogical purposes based on “direct influences,” as we do here.) This view of LIGs is analogous to the modern, predominant view of Bayesian networks as compact representations of joint probability distributions that are also very useful for modeling uncertainty in complex systems and practical for probabilistic inference [Koller and Friedman, 2009]. (And also analogous is the “intuitive” descriptions/interpretations of BN structures, used for instructive/pedagogical purposes, based on “causal” interactions between the random variables Koller and Friedman, 2009.)

As discussed in Irfan and Ortiz [2014], LIGs are also a sub-class of polymatrix games [Janovskaja, 1968]. Furthermore, in the special case of and symmetric , a LIG becomes a party-affiliation game [Fabrikant et al., 2004].

In this paper, the use of the verb “influence” strictly refers to influences defined by the model.

Figure 1 provides a preview illustration of the application of our approach to congressional voting.141414We present this game graph because many people express interest in “seeing” the type of games we learn on this particular data set. The reader should please understand that by presenting this graph we are definitely not implying or arguing that we can identify the ground-truth graph of “direct influences.” (We say this even in the very unlikely event that the “ground-truth model” be an LIG that faithfully capture the “true direct influences” in this U.S. Congress, something arguably no model could ever do.) As we show later in Section 4.2, LIGs are not identifiable with respect to their local compact parametric representation encoding the game graph through their weights and biases, but only with respect to their PSNE, which are joint actions capturing a global property of a game that we really care about for CSI. Certainly, we could never validate the model parameters of an LIG at the local, microscopic level of “direct influences” using only the type of observational data we used to learn the model depicted by the graph in the figure. For that, we would need help from domain experts to design controlled experiments that would yield the right type of data for proper/rigorous scientific validation.

4 Our Proposed Framework for Learning LIGs

Our goal is to learn the structure and parameters of an LIG from observed joint actions only (i.e., without any payoff data/information).151515In principle, the learning framework itself is technically immediately/easily applicable to any class of simultaneous/one-shot games. Generalizing the algorithms and other theoretical results (e.g., on generalization error) while maintaining the tractability in sample complexity and computation may require significant effort. Yet, for simplicity, most of the presentation in this section is actually in terms of general 2-action games. While we make sporadic references to LIGs throughout the section, it is not until we reach the end of the section that we present and discuss the particular instantiation of our proposed framework with LIGs.

Our main performance measure will be average log-likelihood (although later we will be considering misclassification-type error measures in the context of simultaneous-classification, as a result of an approximation of the average log-likelihood). Our emphasis on a PSNE-based statistical model for the behavioral data results from the approach to causal strategic inference taken by Irfan and Ortiz [2014], which is strongly founded on PSNE.161616The possibility that PSNE may not exist in some LIGs does not present a significant problem in our case because we are learning the game, and can require that the LIG output has at least one PSNE. Indeed, in our approach, games with no PSNE achieve the lowest possible likelihood within our generative model of the data; said differently, games with PSNE have higher likelihoods than those that do not have any PSNE.

Note that our problem is unsupervised, i.e., we do not know a priori which joint actions are PSNE and which ones are not. If our only goal were to find a game in which all the given observed data is an equilibrium, then any “dummy” game, such as the “dummy” LIG , would be an optimal solution because .171717Ng and Russell [2000] made a similar observation in the context of single-agent inverse reinforcement learning (IRL). In this section, we present a probabilistic formulation that allows finding games that maximize the empirical proportion of equilibria in the data while keeping the true proportion of equilibria as low as possible. Furthermore, we show that trivial games such as LIGs with , obtain the lowest log-likelihood.

4.1 Our Proposed Generative Model of Behavioral Data

We propose the following simple generative (mixture) model for behavioral data based strictly in the context of “simultaneous”/one-shot play in non-cooperative game theory, again motivated by Irfan and Ortiz [2014]’s PSNE-based approach to causal strategic inference (CSI).181818Model “simplicity” and “abstractions” are not necessarily a bad thing in practice. More “realism” often leads to more “complexity” in terms of model representation and computation; and to potentially poorer generalization performance as well [Kearns and Vazirani, 1994]. We believe that even if the data could be the result of complex cognitive, behavioral or neuronal processes underlying human decision making and social interactions, the practical guiding principle of model selection in ML, which governs the fundamental tradeoff between model complexity and generalization performance, still applies. Let be a game. With some probability , a joint action is chosen uniformly at random from ; otherwise, is chosen uniformly at random from its complement set . Hence, the generative model is a mixture model with mixture parameter corresponding to the probability that a stable outcome (i.e., a PSNE) of the game is observed, uniform over PSNE. Formally, the probability mass function (PMF) over joint-behaviors parameterized by is

| (1) |

where we can think of as the “signal” level, and thus as the “noise” level in the data set.

Remark 1.

Note that in order for Eq. (1) to be a valid PMF for any , we need to enforce the following conditions and . Furthermore, note that in both cases () the PMF becomes a uniform distribution. We also enforce the following condition:191919We can easily remove this condition at the expense of complicating the theoretical analysis on the generalization bounds because of having to deal with those extreme cases. if then .

4.2 On PSNE-Equivalence and PSNE-Identifiability of Games

For any valid value of mixture parameter , the PSNE of a game completely determines our generative model . Thus, given any such mixture parameter, two games with the same set of PSNE will induce the same PMF over the space of joint actions.202020It is not hard to come up with examples of multiple games that have the same PSNE set. In fact, later in this section, we show three instances of LIGs with very different weight-matrix parameter that have this property. Note that this is not a roadblock to our objectives of learning LIGs because our main interest is the PSNE of the game, not the individual parameters that define it. We note that this situation is hardly exclusive to game-theoretic models: an analogous issue occurs in probabilistic graphical models (e.g., Bayesian networks).

Definition 2.

We say that two games and are PSNE-equivalent if and only if their PSNE sets are identical, i.e., .

We often drop the “PSNE-” qualifier when clear from context.

Definition 3.

We say a set of valid parameters for the generative model is PSNE-identifiable with respect to the PMF defined in Eq. (1), if and only if, for every pair , if for all then and . We say a game is PSNE-identifiable with respect to and the , if and only if, there exists a such that .

Definition 4.

We define the true proportion of equilibria in the game relative to all possible joint actions as

| (2) |

We also say that a game is trivial if and only if (or equivalently ), and non-trivial if and only if (or equivalently ).

The following propositions establish that the condition ensures that the probability of an equilibrium is strictly greater than a non-equilibrium. The condition also guarantees that non-trivial games are identifiable.

Proposition 5.

Given a non-trivial game , the mixture parameter if and only if for any and .

Proof.

Note that and given Eq. (2), we prove our claim. ∎

Proposition 6.

Let and be two valid generative-model parameter tuples.

-

(a)

If and then ,

-

(b)

Let and be also two non-trivial games such that and . If , then and .

Proof.

The last proposition, along with our definitions of “trivial” (as given in Definition 4) and “identifiable” (Definition 3), allows us to formally define our hypothesis space.

Definition 7.

Let be a class of games of interest. We call the set the hypothesis space of non-trivial identifiable games and mixture parameters. We also refer to a game that is also in some tuple for some , as a non-trivial identifiable game.212121Technically, we should call the set “the hypothesis space consisting of tuples of non-trivial games from and mixture parameters identifiable up to PSNE, with respect to the probabilistic model defined in Eq. (1).” Similarly, we should call such game “a non-trivial game from identifiable up to PSNE, with respect to the probabilistic model defined in Eq. (1).” We opted for brevity.

Remark 8.

Recall that a trivial game induces a uniform PMF by Remark 1. Therefore, a non-trivial game is not equivalent to a trivial game since by Proposition 5, non-trivial games do not induce uniform PMFs.222222In general, Section 4.2 characterizes our hypothesis space (non-trivial identifiable games and mixture parameters) via two specific conditions. The first condition, non-triviality (explained in Remark 1), is . The second condition, identifiability of the PSNE set from its related PMF (discussed in Propositions 5 and 6), is . For completeness, in this remark, we clarify that the class of trivial games (uniform PMFs) is different from the class of non-trivial games (non-uniform PMFs). Thus, in the rest of the paper we focus exclusively on non-trivial identifiable games; that is, games that produce non-uniform PMFs and for which the PSNE set is uniquely identified from their PMFs.

4.3 Additional Discussion on Modeling Choices

We now discuss other equilibrium concepts, such as mixed-strategy Nash equilibria (MSNE) and quantal response equilibria (QRE). We also discuss a more sophisticated noise process as well as a generalization of our model to non-uniform distributions; while likely more realistic, the alternative models are mathematically more complex and potentially less tractable computationally.

4.3.1 On Other Equilibrium Concepts

There is still quite a bit of debate as to the appropriateness of game-theoretic equilibrium concepts to model individual human behavior in a social context. Camerer’s book on behavioral game theory [Camerer, 2003] addresses some of the issues. Our interpretation of Camerer’s position is not that Nash equilibria is universally a bad predictor but that it is not consistently the best, for reasons that are still not well understood. This point is best illustrated in Chapter 3, Figure 3.1 of Camerer [2003].

(Logit) quantal response equilibria (QRE) [McKelvey and Palfrey, 1995] has been proposed as an alternative to Nash in the context of behavioral game theory. Models based on QRE have been shown superior during initial play in some experimental settings, but prior work assumes known structure and observed payoffs, and only the “precision/rationality parameter” is estimated, e.g. Wright and Leyton-Brown [2010, 2012]. In a logit QRE, the precision parameter, typically denoted by , can be mathematically interpreted as the inverse-temperature parameter of individual Gibbs distributions over the pure-strategy of each player .

It is customary to compute the MLE for from available data. To the best of our knowledge, all work in QRE assumes exact knowledge of the game payoffs, and thus, no method has been proposed to simultaneously estimate the payoff functions when they are unknown. Indeed, computing MLE for , given the payoff functions, is relatively efficient for normal-form games using standard techniques, but may be hard for graphical games; on the other hand, MLE estimation of the payoff functions themselves within a QRE model of behavior seems like a highly non-trivial optimization problem, and is unclear that it is even computationally tractable, even in normal-form games. At the very least, standard techniques do not apply and more sophisticated optimization algorithms or heuristics would have to be derived. Such extensions are clearly beyond the scope of this paper.232323Note that despite the apparent similarity in mathematical expression between logit QRE and the PSNE of the LIG we obtain by using individual logistic regression, they are fundamentally different because of the complex correlations that QRE conditions impose on the parameters of the payoff functions. It is unclear how to adapt techniques for logistic regression similar to the ones we used here to efficiently/tractably compute MLE within the logit QRE framework.

Wright and Leyton-Brown [2012] also considers even more mathematically complex variants of behavioral models that combine QRE with different models that account for constraints in “cognitive levels” of reasoning ability/effort, yet the estimation and usage of such models still assumes knowledge of the payoff functions.

It would be fair to say that most of the human-subject experiments in behavioral game theory involve only a handful of players [Camerer, 2003]. The scalability of those results to games with a large population of players is unclear.

Now, just as an example, we do not necessarily view the Senators final votes as those of a single human individual anyway: after all, such a decision is (or should be) obtained with consultation with their staff and (one would at least hope) the constituency of the state they represent. Also, the final voting decision is taken after consultation or meetings between the staff of the different senators. We view this underlying process as one of “cheap talk.” While cheap talk may be an important process to study, in this paper, we just concentrate on the end result: the final vote. The reason is more than just scientific; as the congressional voting setting illustrates, data for such process is sometimes not available, or would seem very hard to infer from the end-states alone. While our experiments concentrate on congressional voting data, because it is publicly available and easy to obtain, the same would hold for other settings such as Supreme court decisions, voluntary vaccination, UN voting records and governmental decisions, to name a few. We speculate that in almost all those cases, only the end-result is likely to be recorded and little information would be available about the “cheap talk” process or “pre-play” period leading to the final decision.

In our work we consider PSNE because of our motivation to provide LIGs for use within the casual strategic inference framework for modeling “influence” in large-population networks of Irfan and Ortiz [2014]. Note that the universality of MSNE does not diminish the importance of PSNE in game theory.242424Research work on the properties and computation of PSNE include Rosenthal [1973], Gilboa and Zemel [1989], Stanford [1995], Rinott and Scarsini [2000], Fabrikant et al. [2004], Gottlob et al. [2005], Sureka and Wurman [2005], Daskalakis and Papadimitriou [2006], Dunkel [2007], Dunkel and Schulz [2006], Dilkina et al. [2007], Ackermann and Skopalik [2007], Hasan et al. [2008], Hasan and Galiana [2008], Ryan et al. [2010], Chapman et al. [2010], Hasan and Galiana [2010]. Indeed, a debate still exist within the game theory community as to the justification for randomization, specially in human contexts. While concentrating exclusively on PSNE may not make sense in all settings, it does make sense in some.252525For example, in the context of congressional voting, we believe Senators almost always have full-information about how some, if not all other Senators they care about would vote. Said differently, we believe uncertainty in a Senator’s final vote, by the time the vote is actually taken, is rare, and certainly not the norm. Hence, it is unclear how much there is to gain, in this particular setting, by considering possible randomization in the Senators’ voting strategies. In addition, were we to introduce mixed-strategies into the inference and learning framework and model, we would be adding a considerable amount of complexity in almost all respects, thus requiring a substantive effort to study on its own.262626For example, note that because in our setting we learn exclusively from observed joint actions, we could not assume knowledge of the internal mixed-strategies of players. Perhaps we could generalize our model to allow for mixed-strategies by defining a process in which a joint mixed strategy from the set of MSNE (or its complement) is drawn according to some distribution, then a (pure-strategy) realization is drawn from that would correspond to the observed joint actions. One problem we might face with this approach is that little is known about the structure of MSNE in general multi-player games. For example, it is not even clear that the set of MSNE is always measurable in general!

4.3.2 On the Noise Process

Here we discuss a more sophisticated noise process as well as a generalization of our model to non-uniform distributions. The problem with these models is that they lead to a significantly more complex expression for the generative model and thus likelihood functions. This is in contrast to the simplicity afforded us by the generative model with a more global noise process defined above. (See Appendix A.2.1 for further discussion.)

In this paper we considered a “global” noise process, modeled using a parameter corresponding to the probability that a sample observation is an equilibrium of the underlying hidden game. One could easily envision potentially better and more natural/realistic “local” noise processes, at the expense of producing a significantly more complex generative model, and less computationally amenable, than the one considered in this paper. For instance, we could use a noise process that is formed of many independent, individual noise processes, one for each player. (See Appendix A.2.2 for further discussion.)

4.4 Learning Games via MLE

We now formulate the problem of learning games as one of maximum likelihood estimation with respect to our PSNE-based generative model defined in Eq. (1) and the hypothesis space of non-trivial identifiable games and mixture parameters (Definition 7). We remind the reader that our problem is unsupervised; that is, we do not know a priori which joint actions are equilibria and which ones are not. We base our framework on the fact that games are PSNE-identifiable with respect to their induced PMF, under the condition that , by Proposition 6.

First, we introduce a shorthand notation for the Kullback-Leibler (KL) divergence between two Bernoulli distributions parameterized by and :

| (3) |

Using this function, we can derive the following expression of the MLE problem.

Lemma 9.

Given a data set , define the empirical proportion of equilibria, i.e., the proportion of samples in that are equilibria of , as

| (4) |

The MLE problem for the probabilistic model given in Eq. (1) can be expressed as finding:

| (5) |

where and are as in Definition 7, and is defined as in Eq. (2). Also, the optimal mixture parameter .

Proof.

Let , and . First, for a non-trivial , for , and for . The average log-likelihood . By adding , this can be rewritten as , and by using Eq. (3) we prove our claim.

Note that by maximizing with respect to the mixture parameter and by properties of the KL divergence, we get . We define our hypothesis space given the conditions in Remark 1 and Propositions 5 and 6. For the case , we “shrink” the optimal mixture parameter to in order to enforce the second condition given in Remark 1. ∎

Remark 10.

Furthermore, Eq. (5) implies that for non-trivial identifiable games , we expect the true proportion of equilibria to be strictly less than the empirical proportion of equilibria in the given data. This is by setting the optimal mixture parameter and the condition in our hypothesis space.

4.4.1 Learning LIGs via MLE: Model Selection

Our main learning problem consists of inferring the structure and parameters of an LIG from data with the main purpose being modeling the game’s PSNE, as reflected in the generative model. Note that, as we have previously stated, different games (i.e., with different payoff functions) can be PSNE-equivalent. For instance, the three following LIGs, with different weight parameter matrices, induce the same PSNE sets, i.e., for :272727Using the formal mathematical definition of “identifiability” in statistics, we would say that the LIG examples prove that the model parameters of an LIG are not identifiable with respect to the generative model defined in Eq. (1). We note that this situation is hardly exclusive to game-theoretic models. As example of an analogous issue in probabilistic graphical models is the fact that two Bayesian networks with different graph structures can represent not only the same conditional independence properties but also exactly the same set of joint probability distributions [Chickering, 2002, Koller and Friedman, 2009]. As a side note, we can distinguish these games with respect to their larger set of mixed-strategy Nash equilibria (MSNE), but, as stated previously, we do not consider MSNE in this paper because our primary motivation is the work of Irfan and Ortiz [2014], which is based on the concept of PSNE.

Thus, not only the MLE may not be unique, but also all such PSNE-equivalent MLE games will achieve the same level of generalization performance. But, as reflected by our generative model, our main interest in the model parameters of the LIGs is only with respect to the PSNE they induce, not the model parameters per se. Hence, all we need is a way to select among PSNE-equivalent LIGs.

In our work, the indentifiability or interpretability of exact model parameters of LIGs is not our main interest. That is, in the research presented here, we did not seek or attempt to work on creating alternative generative models with the objective to provide a theoretical guarantee that, given an infinite amount of data, we can recover the model parameters of an unknown ground-truth model generating the data, assuming the ground-truth model is an LIG. We opted for a more practical ML approach in which we just want to learn a single LIG that has good generalization performance (i.e., predictive performance in terms of average log-likelihood) with respect to our generative model. Given the nature of our generative model, this essentially translate to learning an LIG that captures as best as possible the PSNE of the unknown ground-truth game. Unfortunately, as we just illustrated, several LIGs with different model parameter values can have the same set of PSNE. Thus, they all would have the same (generalization) performance ability.

As we all know, model selection is core to ML. One of the reason we chose an ML-approach to learning games is precisely the elegant way in which ML deals with the problem of how to select among multiple models that achieve the same level of performance: invoke the principle of Ockham’s razor and select the “simplest” model among those with the same (generalization) performance. This ML philosophy is not ad hoc. It is instead well established in practice and well supported by theory. Seminal results from the various theories of learning, such as computational and statistical learning theory and PAC learning, support the well-known ML adage that “learning requires bias.” In short, as is by now standard in an ML-approach, we measure the quality of our data-induced models via their generalization ability and invoke the principle of Ockham’s razor to bias our search toward simpler models using well-known and -studied regularization techniques.

Now, as we also all know, exactly what “simple” and “bias” means depends on the problem. In our case, we would prefer games with sparse graphs, if for no reason other than to simplify analysis, exploration, study, and (visual) “interpretability” of the game model by human experts, everything else being equal (i.e., models with the same explanatory power on the data as measured by the likelihoods).282828Just to be clear, here we mean “interpretability” not in any formal mathematical sense, or as often used in some areas of the social sciences such as economics. But, instead, as we typically use it in ML/AI textbooks, such as for example, when referring to shallow/sparse decision trees, generally considered to be easier to explain and understand. Similarly, the hope here is that the “sparsity” or “simplicity” of the game graph/model would make it also simpler for human experts to explain or understand what about the model is leading them to generate novel hypotheses, reach certain conclusions or make certain inferences about the global strategic behavior of the agents/players, such as those based on the game’s PSNE and facilitated by CSI. We should also point out that, in preliminary empirical work, we have observed that the representationally sparser the LIG graph, the computationally easier it is for algorithms and other heuristics that operate on the LIG, as those of Irfan and Ortiz [2014] for CSI, for example. For example, among the LIGs presented above, using structural properties alone, we would generally prefer the former two models to the latter, all else being equal (e.g., generalization performance).

5 Generalization Bound and VC-Dimension

In this section, we show a generalization bound for the maximum likelihood problem as well as an upper bound of the VC-dimension of LIGs. Our objective is to establish that with probability at least , for some confidence parameter , the maximum likelihood estimate is within of the optimal parameters, in terms of achievable expected log-likelihood.

Given the ground-truth distribution of the data, let be the expected proportion of equilibria, i.e.,

and let be the expected log-likelihood of a generative model from game and mixture parameter , i.e.,

Let be a maximum-likelihood estimate as in Eq. (5) (i.e., ), and be the maximum-expected-likelihood estimate: .292929If the ground-truth model belongs to the class of LIGs, then is also the ground-truth model, or PSNE-equivalent to it. We use, without formally re-stating, the last definitions in the technical results presented in the remaining of this section.

Note that our hypothesis space as stated in Definition 7 includes a continuous parameter that could potentially have infinite VC-dimension. The following lemma will allow us later to prove that uniform convergence for the extreme values of implies uniform convergence for all in the domain.

Lemma 11.

Consider any game and, for , let , and . If, for any we have and , then .

Proof.

Let , , , , and and be the expectation and probability with respect to the ground-truth distribution of the data.

First note that for any , we have .

Similarly, for any , we have . So that .

Furthermore, the function is strictly monotonically increasing for . If then . Else, if , we have . Finally, if then . ∎

In the remaining of this section, denote by the number of all possible PSNE sets induced by games in , the class of games of interest.

The following theorem shows that the expected log-likelihood of the maximum likelihood estimate converges in probability to that of the optimal , as the data size increases.

Theorem 12.

The following holds with -probability at least :

Proof.

First our objective is to find a lower bound for .

Let . Now, we have . The last equality follows from invoking Lemma 11.

Note that and that since , the log-likelihood is bounded as , where . Therefore, by Hoeffding’s inequality, we have .

Furthermore, note that there are possible parameters , since we need to consider only two values of and because the number of all possible PSNE sets induced by games in is . Therefore, by the union bound we get the following uniform convergence . Finally, by solving for we prove our claim. ∎

Remark 13.

A more elaborate analysis allows to tighten the bound in Theorem 12 from to . We chose to provide the former result for clarity of presentation.

The following theorem establishes the complexity of the class of LIGs, which implies that the term of the generalization bound in Theorem 12 is only polynomial in the number of players .

Theorem 14.

If is the class of LIGs, then .

Proof.

The logarithm of the number of possible pure-strategy Nash equilibria sets supported by (i.e., that can be produced by some game in ) is upper bounded by the VC-dimension of the class of neural networks with a single hidden layer of units and input units, linear threshold activation functions, and constant output weights.

For every LIG in , define the neural network with a single layer of hidden units, of the inputs corresponds to the linear terms and corresponds to the quadratic polynomial terms for all pairs of players , . For every hidden unit , the weights corresponding to the linear terms are , respectively, while the weights corresponding to the quadratic terms are , for all pairs of players , , respectively. The weights of the bias term of all the hidden units are set to . All output weights are set to while the weight of the output bias term is set to . The output of the neural network is . Note that we define the neural network to classify non-equilibrium as opposed to equilibrium to keep the convention in the neural network literature to define the threshold function to output for input . The alternative is to redefine the threshold function to output instead for input .

Finally, we use the VC-dimension of neural networks [Sontag, 1998]. ∎

Corollary 15.

The following holds with -probability at least :

where is the class of LIGs, in which case (Definition 7) becomes the hypothesis space of non-trivial identifiable LIGs and mixture parameters.

6 Algorithms

In this section, we approximate the maximum likelihood problem by maximizing the number of observed equilibria in the data, suitable for a hypothesis space of games with small true proportion of equilibria. We then present our convex loss minimization approach. We also discuss baseline methods such as sigmoidal approximation and exhaustive search.

But first, let us discuss some negative results that justifies the use of simple approaches. Irfan and Ortiz [2014] showed that counting the number of Nash equilibria in LIGs is #P-complete; thus, computing the log-likelihood function, and therefore MLE, is NP-hard.303030This is not a disadvantage relative to probabilistic graphical models, since computing the log-likelihood function is also NP-hard for Ising models and Markov random fields in general, while learning is also NP-hard for Bayesian networks. General approximation techniques such as pseudo-likelihood estimation do not lead to tractable methods for learning LIGs.313131We show that evaluating the pseudo-likelihood function for our generative model is NP-hard. Consider a non-trivial LIG . Furthermore, assume has a single non-absolutely-indifferent player and absolutely-indifferent players ; that is, assume that and (See Definition 19). Let , we have and therefore . The result follows because computing is #P-complete, even for this specific instance of a single non-absolutely-indifferent player [Irfan and Ortiz, 2014]. From an optimization perspective, the log-likelihood function is not continuous because of the number of equilibria. Therefore, we cannot rely on concepts such as Lipschitz continuity.323232To prove that counting the number of equilibria is not (Lipschitz) continuous, we show how small changes in the parameters can produce big changes in . For instance, consider two games , where and for . For , any -norm but remains constant. Furthermore, bounding the number of equilibria by known bounds for Ising models leads to trivial bounds.333333The log-partition function of an Ising model is a trivial bound for counting the number of equilibria. To see this, let , , where denotes the partition function of an Ising model. Given the convexity of [Koller and Friedman, 2009], and that the gradient vanishes at , we know that , which is the maximum .

6.1 An Exact Quasi-Linear Method for General Games: Sample-Picking

As a first approach, consider solving the maximum likelihood estimation problem in Eq. (5) by an exact exhaustive search algorithm. This algorithm iterates through all possible Nash equilibria sets, i.e., for , we generate all possible sets of size with elements from the joint-action space . Recall that there exist of such sets of size and since the search space is super-exponential in the number of players .

Based on few observations, we can obtain an algorithm for samples. First, note that the above method does not constrain the set of Nash equilibria in any fashion. Therefore, only joint actions that are observed in the data are candidates of being Nash equilibria in order to maximize the log-likelihood. This is because the introduction of an unobserved joint action will increase the true proportion of equilibria without increasing the empirical proportion of equilibria and thus leading to a lower log-likelihood in Eq. (5). Second, given a fixed number of Nash equilibria , the best strategy would be to pick the joint actions that appear more frequently in the observed data. This will maximize the empirical proportion of equilibria, which will maximize the log-likelihood. Based on these observations, we propose Algorithm 1.

As an aside note, the fact that general games do not constrain the set of Nash equilibria, makes the method more likely to over-fit. On the other hand, LIGs will potentially include unobserved equilibria given the linearity constraints in the search space, and thus they would be less likely to over-fit.

6.2 An Exact Super-Exponential Method for LIGs: Exhaustive Search

Note that in the previous subsection, we search in the space of all possible games, not only the LIGs. First note that sample-picking for linear games is NP-hard, i.e., at any iteration of sample-picking, checking whether the set of Nash equilibria corresponds to an LIG or not is equivalent to the following constraint satisfaction problem with linear constraints:

| (6) |

Note that Eq. (6) contains “or” operators in order to account for the non-equilibria. This makes the problem of finding the that satisfies such conditions NP-hard for a non-empty complement set . Furthermore, since sample-picking only consider observed equilibria, the search is not optimal with respect to the space of LIGs.

Regarding a more refined approach for enumerating LIGs only, note that in an LIG each player separates hypercube vertices with a linear function, i.e., for and we have . Assume we assign a binary label to each vertex , then note that not all possible labelings are linearly separable. Labelings which are linearly separable are called linear threshold functions (LTFs). A lower bound of the number of LTFs was first provided in Muroga [1965], which showed that the number of LTFs is at least . Tighter lower bounds were shown later in Yamija and Ibaraki [1965] for and in Muroga and Toda [1966] for . Regarding an upper bound, Winder [1960] showed that the number of LTFs is at most . By using such bounds for all players, we can conclude that there is at least and at most LIGs (which is indeed another upper bound of the VC-dimension of the class of LIGs; the bound in Theorem 14 is tighter and uses bounds of the VC-dimension of neural networks). The bounds discussed above would bound the time-complexity of a search algorithm if we could easily enumerate all LTFs for a single player. Unfortunately, this seems to be far from a trivial problem. By using results in Muroga [1971], a weight vector with integer entries such that is sufficient to realize all possible LTFs. Therefore we can conclude that enumerating LIGs takes at most steps, and we propose the use of this method only for .

For we found that the number of possible PSNE sets induced by LIGs is 23,706. Experimentally, we did not find differences between this method and sample-picking since most of the time, the model with maximum likelihood was an LIG.

6.3 From Maximum Likelihood to Maximum Empirical Proportion of Equilibria

We approximately perform maximum likelihood estimation for LIGs, by maximizing the empirical proportion of equilibria, i.e., the equilibria in the observed data. This strategy allows us to avoid computing as in Eq. (2) for maximum likelihood estimation (given its dependence on ). We propose this approach for games with small true proportion of equilibria with high probability, i.e., with probability at least , we have for . Particularly, we will show in Section 7 that for LIGs we have . Given this, our approximate problem relies on a bound of the log-likelihood that holds with high probability. We also show that under very mild conditions, the parameters belong to the hypothesis space of the original problem with high probability.

First, we derive bounds on the log-likelihood function.

Lemma 16.

Given a non-trivial game with , the KL divergence in the log-likelihood function in Eq. (5) is bounded as follows:

Proof.

Let and . Note that ,343434Here we are making the implicit assumption that . This is sensible. For example, in most models learned from the congressional voting data using a variety of learning algorithms we propose, the total number of PSNE would range roughly from 100K—1M; using base 2, this is roughly from —. This may look like a huge number until one recognizes that there could potential be PSNE. Hence, we have that would be in the range of —. In fact, we believe this holds more broadly because, as a general objective, we want models that can capture as many PSNE behavior as possible but no more than needed, which tend to reduce the PSNE of the learned models, and thus their values, while simultaneously trying to increase as much as possible. and . Since the function is convex we can upper-bound it by .

To find a lower bound, we find the point in which the derivative of the original function is equal to the slope of the upper bound, i.e., , which gives . Then, the maximum difference between the upper bound and the original function is given by . ∎

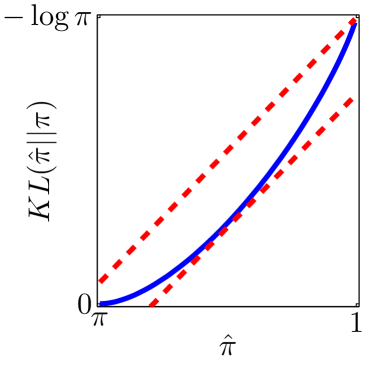

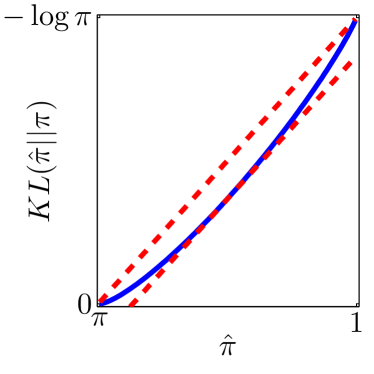

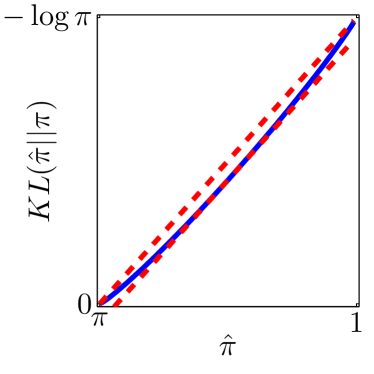

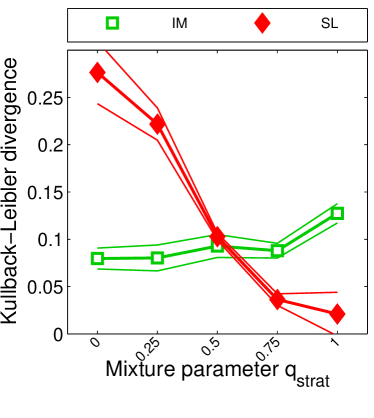

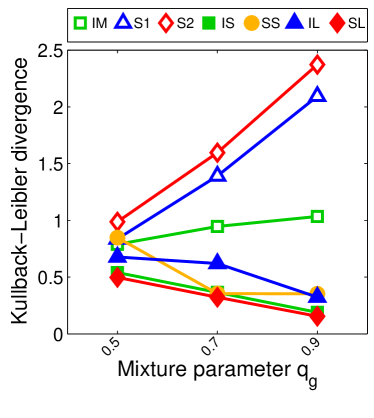

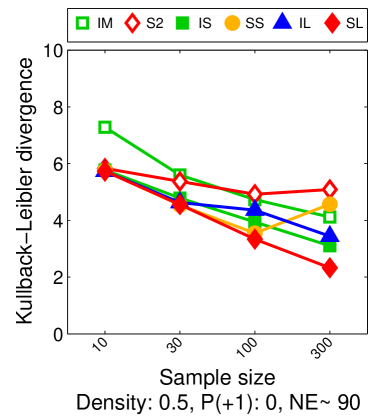

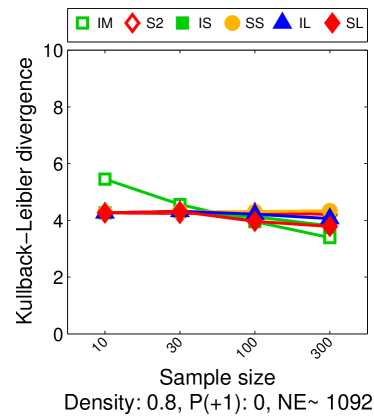

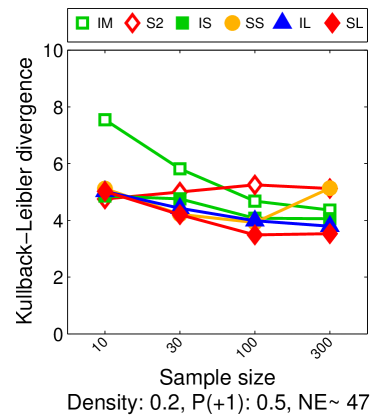

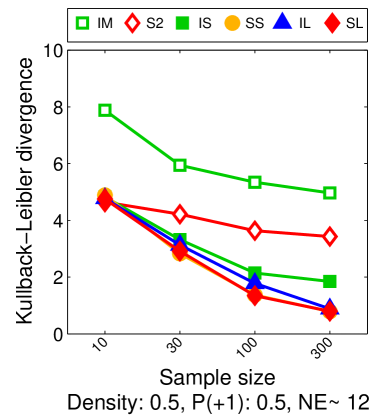

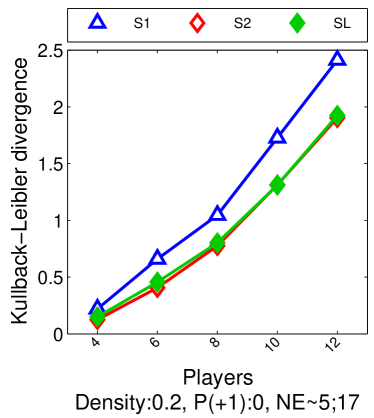

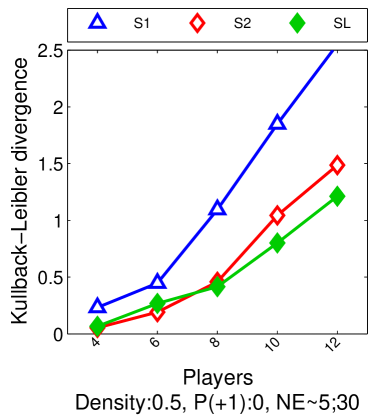

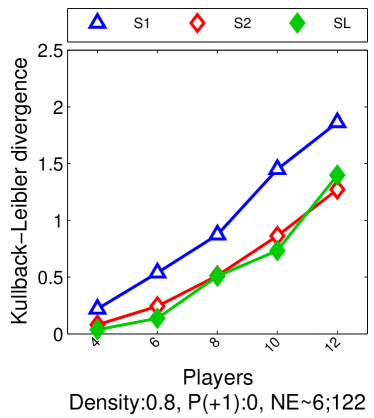

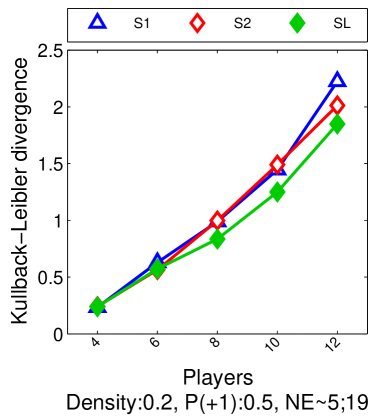

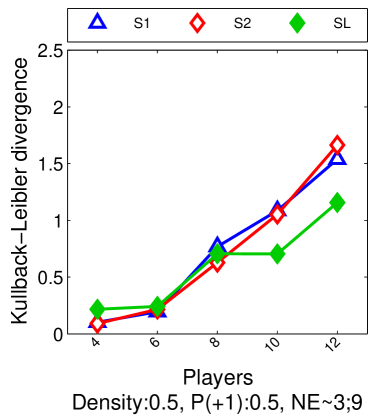

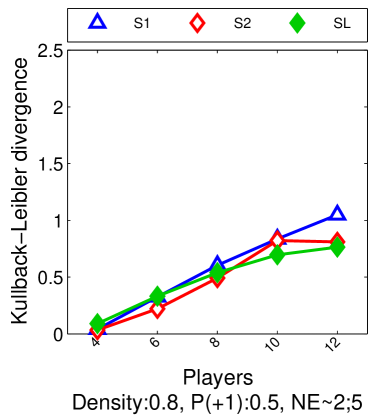

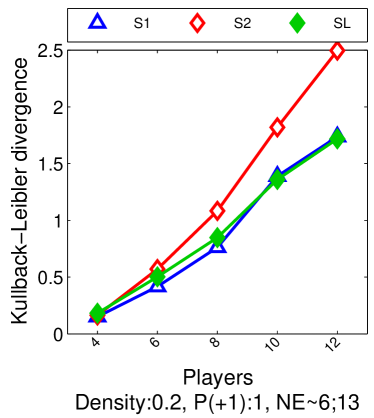

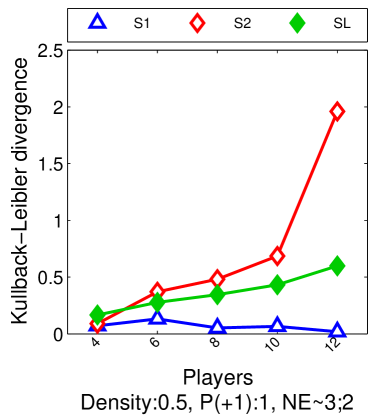

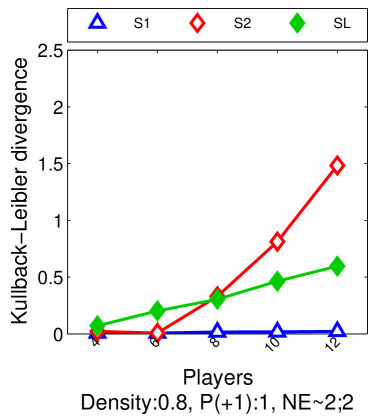

Note that the lower and upper bounds are very informative when (or in our setting when ), since becomes small when compared to , as shown in Figure 2.

Next, we derive the problem of maximizing the empirical proportion of equilibria from the maximum likelihood estimation problem.

Theorem 17.

Assume that with probability at least we have for . Maximizing a lower bound (with high probability) of the log-likelihood in Eq. (5) is equivalent to maximizing the empirical proportion of equilibria:

| (7) |

furthermore, for all games such that for some , for sufficiently large and optimal mixture parameter , we have , where is the hypothesis space of non-trivial identifiable games and mixture parameters.

Proof.

By applying the lower bound in Lemma 16 in Eq. (5) to non-trivial games, we have . Since , we have . Therefore . Regarding the term , if , and if and approaches 0 when . Maximizing the lower bound of the log-likelihood becomes by removing the constant terms that do not depend on .

In order to prove we need to prove . For proving the first inequality , note that , and therefore has at least one equilibria. For proving the third inequality , note that . For proving the second inequality , we need to prove and . Since and , it suffices to prove . Similarly we need to prove . Putting both together, we have since and . Finally, . ∎

6.4 A Non-Concave Maximization Method: Sigmoidal Approximation

A very simple optimization approach can be devised by using a sigmoid in order to approximate the 0/1 function in the maximum likelihood problem of Eq. (5) as well as when maximizing the empirical proportion of equilibria as in Eq. (7). We use the following sigmoidal approximation:

| (8) |

The additional term ensures that for we get . We perform gradient ascent on these objective functions that have many local maxima. Note that when maximizing the “sigmoidal” likelihood, each step of the gradient ascent is NP-hard due to the “sigmoidal” true proportion of equilibria. Therefore, we propose the use of the sigmoidal maximum likelihood only for .

In our implementation, we add an -norm regularizer where to both maximization problems. The -norm regularizer encourages sparseness and attempts to lower the generalization error by controlling over-fitting.

6.5 Our Proposed Approach: Convex Loss Minimization

From an optimization perspective, it is more convenient to minimize a convex objective instead of a sigmoidal approximation in order to avoid the many local minima.

Note that maximizing the empirical proportion of equilibria in Eq. (7) is equivalent to minimizing the empirical proportion of non-equilibria, i.e., . Furthermore, . Denote by the 0/1 loss, i.e., . For LIGs, maximizing the empirical proportion of equilibria in Eq. (7) is equivalent to solving the loss minimization problem:

| (9) |

We can further relax this problem by introducing convex upper bounds of the 0/1 loss. Note that the use of convex losses also avoids the trivial solution of Eq. (9), i.e., (which obtains the lowest log-likelihood as discussed in Remark 10). Intuitively speaking, note that minimizing the logistic loss will make , while minimizing the hinge loss will make unlike the 0/1 loss that only requires in order to be minimized. In what follows, we develop four efficient methods for solving Eq. (9) under specific choices of loss functions, i.e., hinge and logistic.

In our implementation, we add an -norm regularizer where to all the minimization problems. The -norm regularizer encourages sparseness and attempts to lower the generalization error by controlling over-fitting.

6.5.1 Independent Support Vector Machines and Logistic Regression

We can relax the loss minimization problem in Eq. (9) by using the loose bound . This relaxation simplifies the original problem into several independent problems. For each player , we train the weights in order to predict independent (disjoint) actions. This leads to 1-norm SVMs of Bradley and Mangasarian [1998], Zhu et al. [2004] and -regularized logistic regression. We solve the latter with the -projection method of Schmidt et al. [2007a]. While the training is independent, our goal is not the prediction for independent players but the characterization of joint actions. The use of these well known techniques in our context is novel, since we interpret the output of SVMs and logistic regression as the parameters of an LIG. Therefore, we use the parameters to measure empirical and true proportion of equilibria, KL divergence and log-likelihood in our probabilistic model.

6.5.2 Simultaneous Support Vector Machines

While converting the loss minimization problem in Eq. (9) by using loose bounds allow to obtain several independent problems with small number of variables, a second reasonable strategy would be to use tighter bounds at the expense of obtaining a single optimization problem with a higher number of variables.

For the hinge loss , we have and the loss minimization problem in Eq. (9) becomes the following primal linear program:

| (10) |

where .

6.5.3 Simultaneous Logistic Regression

For the logistic loss , we could use the non-smooth loss directly. Instead, we chose a smooth upper bound, i.e., . The following discussion and technical lemma provides the reason behind our us of this simultaneous logistic loss.

Given that any loss is a decreasing function, the following identity holds . Hence, we can either upper-bound the function by the function or lower-bound the function by a negative . We chose the latter option for the logistic loss for the following reasons: Claim i of the following technical lemma shows that lower-bounding generates a loss that is strictly less than upper-bounding . Claim ii shows that lower-bounding generates a loss that is strictly less than independently penalizing each player. Claim iii shows that there are some cases in which upper-bounding generates a loss that is strictly greater than independently penalizing each player.

Lemma 18.

For the logistic loss and a set of numbers :

Proof.

Given a set of numbers , the function is bounded by the function by [Boyd and Vandenberghe, 2006]. Equivalently, the function is bounded by .

These identities allow us to prove two inequalities in Claim i, i.e., and . To prove the remaining inequality , note that for the logistic loss and . Since , strict inequality holds.

To prove Claim ii, we need to show that . This is equivalent to . Finally, we have because the exponential function is strictly positive.

To prove Claim iii, it suffices to find set of numbers for which . This is equivalent to . By setting , we reduce the claim we want to prove to . Strict inequality holds for . Furthermore, note that . ∎

Returning to our simultaneous logistic regression formulation, the loss minimization problem in Eq. (9) becomes

| (12) |

where .

7 On the True Proportion of Equilibria

In this section, we justify the use of convex loss minimization for learning the structure and parameters of LIGs. We define absolute indifference of players and show that our convex loss minimization approach produces games in which all players are non-absolutely-indifferent. We then provide a bound of the true proportion of equilibria with high probability. Our bound assumes independence of weight vectors among players, and applies to a large family of distributions of weight vectors. Furthermore, we do not assume any connectivity properties of the underlying graph.

Parallel to our analysis, Daskalakis et al. [2011] analyzed a different setting: random games which structure is drawn from the Erdős-Rényi model (i.e., each edge is present independently with the same probability ) and utility functions which are random tables. The analysis in Daskalakis et al. [2011], while more general than ours (which only focus on LIGs), it is at the same time more restricted since it assumes either the Erdős-Rényi model for random structures or connectivity properties for deterministic structures.

7.1 Convex Loss Minimization Produces Non-Absolutely-Indifferent Players

First, we define the notion of absolute indifference of players. Our goal in this subsection is to show that our proposed convex loss algorithms produce LIGs in which all players are non-absolutely-indifferent and therefore every player defines constraints to the true proportion of equilibria.

Definition 19.

Given an LIG , we say a player is absolutely indifferent if and only if , and non-absolutely-indifferent if and only if .

Next, we concentrate on the first ingredient for our bound of the true proportion of equilibria. We show that independent and simultaneous SVM and logistic regression produce games in which all players are non-absolutely-indifferent except for some “degenerate” cases. The following lemma applies to independent SVMs for and simultaneous SVMs for .

Lemma 20.

Given , the minimization of the hinge training loss guarantees non-absolutely-indifference of player except for some “degenerate” cases, i.e., the optimal solution if and only if and where is defined as , and .

Proof.

Let . By noting that , we can rewrite .

Note that has the minimizer if and only if belongs to the subdifferential set of the non-smooth function at . In order to maximize , we have , and . The previous rules simplify at the solution under analysis, since .

Let and . By making and , we get and . Finally, by noting that , we prove our claim. ∎

Remark 21.

Note that for independent SVMs, the “degenerate” cases in Lemma 20 simplify to and .

The following lemma applies to independent logistic regression for and simultaneous logistic regression for .

Lemma 22.

Given , the minimization of the logistic training loss guarantees non-absolutely-indifference of player except for some “degenerate” cases, i.e., the optimal solution if and only if and .

Proof.

Note that has the minimizer if and only if the gradient of the smooth function is at . Let and . By making and , we get and . Finally, by noting that , we prove our claim. ∎

Remark 23.

Note that for independent logistic regression, the “degenerate” cases in Lemma 22 simplify to and .