(eccv) Package eccv Warning: Package ‘hyperref’ is loaded with option ‘pagebackref’, which is *not* recommended for camera-ready version

22institutetext: Beijing Academy of Artificial Intelligence

22email: {ziluoding}@baai.ac.cn 33institutetext: College of Future Technology, Peking University

33email: {liumianzhi}@stu.pku.edu.cn

Learning to Robustly Reconstruct Dynamic Scenes from Low-light Spike Streams

Abstract

Spike camera with high temporal resolution can fire continuous binary spike streams to record per-pixel light intensity. By using reconstruction methods, the scene details in high-speed scenes can be restored from spike streams. However, existing methods struggle to perform well in low-light environments due to insufficient information in spike streams. To this end, we propose a bidirectional recurrent-based reconstruction framework to better handle such extreme conditions. In more detail, a light-robust representation (LR-Rep) is designed to aggregate temporal information in spike streams. Moreover, a fusion module is used to extract temporal features. Besides, we synthesize a reconstruction dataset for high-speed low-light scenes where light sources are carefully designed to be consistent with reality. The experiment shows the superiority of our method. Importantly, our method also generalizes well to real spike streams. Our project is: https://github.com/Acnext/Learning-to-Robustly-Reconstruct-Dynamic-Scenes-from-Low-light-Spike-Streams/.

Keywords:

Spike camera Reconstruction1 Introduction

As a neuromorphic sensor with high temporal resolution (40,000 Hz), spike camera [41, 14] has shown enormous potential for high-speed visual tasks, such as reconstruction [36, 42, 40, 37, 43, 3, 6, 5, 7], optical flow estimation [13, 39, 33], and depth estimation [35, 21, 19]. Different from event cameras [20, 4, 1], it can record per-pixel light intensity by accumulating photons and firing continuous binary spike streams. Correspondingly, high-speed dynamic scenes can be reconstructed from spike streams. Recently, many deep learning methods have advanced this field and shown great success in reconstructing more detailed scenes. However, existing methods struggle to perform well in low-light high-speed scenes due to insufficient information in spike streams.

A dilemma arises for visual sensors, that is, the quality of sampled data can greatly decrease in a low-light environment [11, 18, 17, 39, 10]. Low-quality data creates many difficulties for all kinds of vision tasks. Similarly, the reconstruction for the spike camera also suffers from this problem. To improve the performance of reconstruction in low-light high-speed scenes, two non-trivial matters should be carefully considered. First, constructing a low-light high-speed scene dataset for spike camera is crucial to evaluating different methods. However, due to the frame rate limitations of traditional cameras, it is difficult to capture images clearly in real high-speed scenes as supervised signals. Instead of it, a reasonable way is to synthesize datasets for spike camera [37, 43, 13, 35]. To ensure the reliability of the reconstruction dataset, synthetic low-light high-speed scenes should be as consistent as possible with the real world, e.g. light source. Second, as shown in Fig. 1, with the decrease of illuminance in the environment, the total number of spikes in spike streams decreases greatly which means the valid information in spike streams can greatly decrease. Fig. 1(a) shows that the state-of-the-art method often fail under low-light conditions since they have no choice but to rely on inadequate information.

In this work, we aim to address all two issues above-mentioned. In more detail, a reconstruction dataset for high-speed low-light scenes is proposed. We carefully design the scene by controlling the type and power of the light source and generating noisy spike streams based on [38]. Besides, we propose a light-robust reconstruction method as shown in Fig. 1(b). Specifically, to compensate for information deficiencies in low-light spike streams, we propose a light-robust representation (LR-Rep). In LR-Rep, the release time of forward and backward spikes is used to update a global inter-spike interval (GISI). Then, to further excavate temporal information in spike streams, LR-Rep is fused with forward (backward) temporal features. During the feature fusion process, we add alignment information to avoid the misalignment of motion from different timestamps. Finally, the scene is clearly reconstructed from fused features.

Empirically, we show the superiority of our reconstruction method. Importantly, our method also generalizes well to real spike streams. In addition, extensive ablation studies demonstrate the effectiveness of each component. The main contributions of this paper can be summarized as follows:

A reconstruction dataset for high-speed low-light scenes is proposed. We carefully construct varied low-light scenes that are close to reality.

We propose a bidirectional recurrent-based reconstruction framework where a light-robust representation, LR-Rep, and fusion module can effectively compensate for information deficiencies in low-light spike streams.

Experimental results on real and synthetic datasets have shown our method can more effectively handle spike streams in high-speed low-light scenes than previous methods.

2 Related Work

2.1 Low-light Vision

Low-light environment has always been a challenge not only for human perception but also for computer vision methods. For traditional cameras, some works [27, 15, 11, 29, 31, 2, 25, 9] mainly concern the enhancement of low-light images. Wei et al. [27] propose the LOL dataset containing low/normal-light image pairs and propose a deep Retinex-Net including a Decom-Net for decomposition and an Enhance-Net for illumination adjustment. Guo et al. [11] proposes Zero-DCE which formulates light enhancement as a task of image-specific curve estimation with a deep network. Retinexformer [2] formulates a simple yet principled One-stage Retinex-based Framework to light up low-light images. Besides, some work focuses on the robustness of vision tasks to low-light, e.g. object detection. Wang et al. [24] combines with the image enhancement algorithm to improve the accuracy of object detection. For spike camera, it is also affected by low-light environments. Dong et al. [8] propose a real low-light high-speed dataset for reconstruction. However, it lacks corresponding image sequences as ground truth. Besides, the concurrent work [44] synthesizes a low-light spike stream dataset. However, it only contains static scenes and cannot evaluate the performance of reconstruction methods during motion.

2.2 Reconstruction for Spike Camera

The reconstruction of high-speed dynamic scenes has been a popular topic for spike camera. Based on the statistical characteristics of spike stream, Zhu et al. [41] first reconstruct high-speed scenes. Zhao et al. [36] improved the smoothness of reconstructed scenes by introducing motion aligned filter. Zhu et al. [42] construct a dynamic neuron extraction model to distinguish the dynamic and static scenes. With the rise of spiking neural networks [30, 23, 22, 45], for enhancing reconstruction results, Zheng et al. [40] uses short-term plasticity mechanism to exact motion area. Zhao et al. [37] first proposes a deep learning-based reconstruction framework, Spk2ImgNet (S2I), to handle the challenges brought by both noise and high-speed motion. Chen et al. [3] build a self-supervised reconstruction framework by introducing blind-spot networks. It achieves desirable results compared with S2I. The reconstruction method [34] presents a novel Wavelet Guided Spike Enhancing (WGSE) paradigm. By using multi-level wavelet transform, the noise in the reconstructed results can be effectively suppressed. Besides, we would like to mention the concurrent work, RSIR [44]. In RSIR, the AST representation is used to adaptively extract the number of spike in a spike stream under different illuminations. Then, a multi-scale wavelet recurrent network can reconstruct images from the AST representation. However, AST compresses a spike stream into a spike number map which ignores dynamic information, resulting in more motion blur for high-speed low-light scenes. This greatly limits the contribution of RSIR to spike camera reconstruction, as the original intention of spike camera is to handle high-speed dynamic scenes. Unlike AST, our proposed representation, LR-Rep, first calculates global inter-spike interval map (GISI). It can better preserve dynamic information while aggregating temporal information.

2.3 Spike Camera Simulation

Spike camera simulation is a popular way to generate spike streams and accurate labels. Zhao et al. [37] first convert interpolated image sequences with high frame rate into spike stream. Based on [37], the simulators [43, 16, 38] add some random noise to generate spike streams more accurately. To avoid motion artifacts caused by interpolation, Hu et al. [13] presents the spike camera simulator (SPCS) combining simulation function and rendering engine tightly. Then, based on SPCS, optical flow datasets for spike camera are first proposed. Zhang et al. [35] generate the first spike-based depth dataset by the spike camera simulation. Zhang et al. [34] generate the first semantic segmentation spike streams dataset by the spike camera simulation.

3 Reconstruction Datasets

In order to train and evaluate the performance of reconstruction methods in low-light high-speed scenes, we propose two low-light spike stream datasets, Rand Low-Light Reconstruction (RLLR) and Low-Light Reconstruction (LLR) based on spike camera model. RLLR is used as our train dataset and LLR is carefully designed to evaluate the performance of different reconstruction methods as test dataset. We first introduce the spike camera model, and then introduce our datasets where noisy spike streams are generated by the spike camera model.

Spike camera model Each pixel on the spike camera model converts light signal into the current signal and accumulates the input current. For pixel , if the accumulation of input current reaches a fixed threshold , a spike is fired and then the accumulation can be reset as,

| (1) | |||

| (2) |

where is the accumulation at time , is the accumulation without reset before time , is the input current at time (proportional to light intensity) and is the main fixed pattern noise in spike camera, i.e. dark current [43, 38, 12]. Further, due to limitations of circuits, each spike is read out at discrete time ( is a micro-second level). Thus, the output of the spike camera is a spatial-temporal binary stream with size. The and are the height and width of the sensor, respectively, and is the temporal window size of the spike stream. According to the spike camera model, it is natural that the spikes (or information) in low-light spike streams are sparse because reaching the threshold is lengthy.

RLLR As shown in Fig. 2, RLLR includes 100 random low-light high-speed scenes where high-speed scenes are first generated by SPCS [13] and then the light intensity of all pixels in each scene is darkened by multiplying a random constant (-). Each scene in RLLR continuously records a spike stream with size and corresponding image sequence. Then, for each image, we clip a spike stream with size from the spike stream as input.

LLR As shown in Fig. 2, LLR includes 52 carefully designed high-speed scenes where we use the scenes with five kinds of motion (named Ball, Car, Cook, Fan, and Rotate) and each scene corresponds to two light sources (normal and low). To ensure the reliability of our scenes, different light sources are used, and the power of the light source is consistent with the real world. Besides, the motion in Ball, Cook, Fan, and Rotate is from [13] while the motion in Car is created based on vehicle speed in the real world. Hence, the motion of objects is close to the real world. Each scene in LLR continuously records 21 spike streams with size and 21 corresponding images. In the proposed datasets, we consider the noise of spike camera based on [38].

4 Method

4.1 Problem Statement

For simplicity, we write to denote a spike stream from time to ( is the fixed temporal window) and write to denote the instantaneous light intensity received in spike camera at time . Reconstruction is to use continuous spike streams, to restore the light intensity information at different time, . Generally, the temporal window is set as 41 which is the same with [37, 3, 34].

4.2 Overview

To overcome the challenge of low-light spike streams, i.e. the recorded information is sparse (see Fig.1), we propose a light-robust reconstruction method that can fully utilize temporal information of spike streams. It is beneficial from two modules: 1. A light-robust representation, LR-Rep. 2. A fusion module. As shown in Fig. 3, to recover the light intensity information at time , , we first calculate the light-robust representation at time , written as . Then, we use a ResNet module to extract deep features, , from . is fused with forward (backward) temporal features as (). Finally, we reconstruct the image at time , with and .

4.3 Light-robust Representation

As shown in Fig. 4, a light-robust representation, LR-Rep, is proposed to aggregate the information in low-light spike streams. LR-Rep mainly consists of two parts, GISI transform and feature extraction.

GISI transform Calculating the local inter-spike interval from the input spike stream is a common operation [3, 39] and we call it as LISI transform. Different from LISI transform, we propose a GISI transform that can utilize the release time of forward and backward spikes to obtain the global inter-spike interval . It needs to be performed twice, i.e. once forward and once backward respectively. Taking GISI transform backward as an example, it can be summarized as three steps as shown in Fig. 5. GISI transform can extract more temporal information from spike streams than LISI transform as shown in Fig. 6.

Feature extraction After GISI transform, we separately extract shallow features of and input spike stream, and through convolution block. Finally, is obtained by an attention module where and are integrated, i.e.

| (3) | |||

| (4) |

where denotes an attention block including 3-layer convolution with 3-layer activation function and is our LR-Rep at time .

4.4 Fusion and Reconstruction

We first extract the deep feature of through a ResNet with 16 layers. Then, as shown in Fig. 7(a), for forward, temporal features and are fused as temporal features of the input spike stream . For backward, temporal features and are fused as temporal features of the input spike stream . To avoid the misalignment of motion from different timestamps, we use a Pyramid Cascading and Deformable convolution (PCD) [26] to add alignment information to . The above process can be written as,

| (5) | |||

| (6) | |||

| (7) |

where denotes the feature extraction and denotes the PCD module. Finally, as shown in Fig. 7(b), we use forward and backward temporal features ( and ) to reconstruct the current scene at time , i.e.

| (8) | |||

| (9) |

where denotes 3-layer convolution with 2-layer ReLU, is the loss function, denotes 1-norm and is the number of continuous spike streams.

5 Experiment

5.1 Implementation Details

We train our method in the proposed dataset, RLLR. Consistent with previous work [37, 3, 34], the temporal window of each input spike stream is 41. The spatial resolution of input spike streams is randomly cropped the spike stream to during the training procedure and the batch size is set as 8. Besides, forward (backward) temporal features and the release time of spikes in our method are maintained from 21 continuous spike streams. We use Adam optimizer with and . The learning rate is initially set as 1e-4 and scaled by 0.1 after 70 epochs. The model is trained for 100 epochs on 1 NVIDIA A100-SXM4-80GB GPU.

5.2 Results

We compare our method with traditional reconstruction methods, i.e. TFI [41], STP [40], SNM [42] and deep learning-based reconstruction methods, i.e. SSML [3], Spk2ImgNet (S2I) [37], WGSE [34], concurrent work RSIR [44]. The supervised learning methods, S2I, WGSE and RSIR, are trained on RLLR. We evaluate methods on two kinds of data:

(1) The carefully designed synthetic dataset, LLR.

(2) The real spike streams dataset, PKU-Spike-High-Speed [37] and low-light high-speed spike streams dataset [8].

The reproduction of these methods is from their official source codes.

| Metric | TFI | RSIR | SSML | S2I | STP | SNM | WGSE | Ours |

|---|---|---|---|---|---|---|---|---|

| ICME,19 | MM,23 | IJCAI,22 | CVPR,21 | CVPR,21 | PAMI,22 | AAAI,23 | This paper | |

| PSNR | 31.409 | 34.121 | 38.432 | 40.883 | 24.882 | 25.741 | 42.959 | 45.075 |

| SSIM | 0.72312 | 0.88337 | 0.89942 | 0.95915 | 0.55537 | 0.80281 | 0.97066 | 0.98681 |

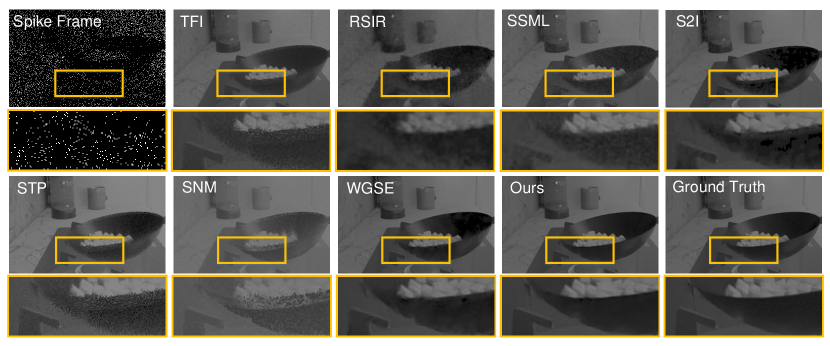

Results on our synthetic dataset As shown in Table. 1, we use the two reference image quality assessment (IQA) metrics, i.e. PSNR and SSIM to evaluate the performance of different methods on LLR. We can find that our method achieves the best reconstruction performance and has a PSNR gain over 2dB than the state-of-the-art reconstruction method, WGSE, which demonstrates its effectiveness. Fig. 8 shows the visualization results from different reconstruction methods. We can find that our method can better restore motion details in low-light motion regions than other methods. Besides, RSIR is designed to handle spike streams in static scenes and we find that it can suffer from large motion blur in low-light high-speed scenes.

Results on real datasets For real data, we test different methods on two spike stream datasets, PKU-Spike-High-Speed [37] and low-light spike streams [8]. PKU-Spike-High-Speed includes 4 high-speed scenes under normal-light conditions and [8] includes 5 high-speed scenes under low-light conditions. Fig. 9 shows the reconstruction results. Note that we apply the traditional enhancement method [32] to reconstruction results on [8] because scenes are too dark. We can find that, for high-speed scenes under normal-light conditions, deep learning-based methods (SSML, RSIR, S2I, WGSE, and Ours) can reconstruct scene details well. However, for high-speed scenes under low-light conditions, SSML and RSIR introduce a large amount of motion blur while S2I and WGSE may introduce some artifacts in dark backgrounds. Our method can more effectively restore the information in scenes, i.e., clear texture.

As shown in Fig. 10, we perform a user study written as US [28, 15] to quantify the visual quality of different methods. For each scene in datasets, we randomly select reconstructed images at the same time from different methods and display them on the screen (the image order is randomly shuffled). 20 human subjects (university degree or above) are invited to independently score the visual quality of the reconstructed image. The scores of visual quality range from 1 to 8 (worst to best quality). The average subjective scores for each spike stream dataset are shown in Fig. 10 and our method reaches the highest US score in all methods.

Temporal consistency of reconstructed results Our reconstruction method is stable to spike stream at different moments. Fig. 11 shows the continuous reconstructed results in a real high-speed low-light scene. We find that our method can recover scene details at different moments, while the state-of-the-art WGSE introduces temporal-varying artifacts. Besides, we also provide a reconstruction video in our supplementary material.

5.3 Ablation

Proposed modules To investigate the effect of the proposed light-robust representation LR-Rep, the adjacent (forward and backward) deep temporal features (ADF), i.e. and in our fusion module, the alignment information in our fusion module (AIF) and GISI transform in LR-Rep, we compare 5 baseline methods with our final method. (A) is the basic baseline without LR-Rep, ADF, and AIF. Table. 2 shows ablation results on the proposed dataset, LLR. The comparison between (A) and (C) ((B) and (D)) proves the effectiveness of LR-Rep. The comparison between (A) and (B) ((C) and (D)) proves the effectiveness of ADF. Further, by adding the alignment information in the fusion module i.e. AIF, our final method (E) appropriately reduces the misalignment of motion from different timestamps and can reconstruct high-speed scenes more accurately than (D). Besides, the comparison between (E) and (F) shows GISI has better performance than LISI. This is because GISI can extract more temporal information than LISI (see Fig. 6). More importantly, the cost of using GISI instead of LISI is negligible (we only need to use two 400250 matrices to store the time of the forward spike and the backward spike, respectively), which does not affect the parameter and efficiency of the network.

| Index | Effect of different network structures | PSNR | SSIM |

|---|---|---|---|

| (A) | Basic baseline | 42.743 | 0.97403 |

| (B) | Adding ADF to (A) | 44.151 | 0.98514 |

| (C) | Adding LR-Rep to (A) | 44.739 | 0.98636 |

| (D) | Adding ADF & LR-Rep to (A) | 44.956 | 0.98678 |

| (E) | Adding ADF & LR-Rep & AIF | 45.075 | 0.98681 |

| (F) | Replacing GISI with LISI in (E) | 44.997 | 0.98676 |

Comparison with other representation We compare the performance of different representations in our framework, i.e. (1) General representation of spike stream: TFI and TFP [41] (2) Tailored representation for reconstruction networks: AST in RSIR [44], AMIM [3] in SSML, SALI [37] in S2I and WGSE-1d [34] in WGSE. We replace LR-Rep in our method as the above representation. They are trained on the dataset, RLLR, and implementation details are the same as our method. As shown in Table. 3, our LR-Rep achieves the best performance which means LR-Rep can better adapt to our framework.

| Rep. | TFP | TFI | AST | AMIM | SALI | WGSE-1d | LR-Rep |

|---|---|---|---|---|---|---|---|

| ICME,19 | ICME,19 | MM,23 | IJCAI,22 | CVPR,21 | AAAI,23 | Ours | |

| PSNR | 38.615 | 37.617 | 37.997 | 41.950 | 43.314 | 42.302 | 45.075 |

| SSIM | 0.96641 | 0.93632 | 0.95463 | 0.97493 | 0.98304 | 0.97438 | 0.98681 |

| Metric | 20% | 40% | 60% | 80% | 100% |

|---|---|---|---|---|---|

| PSNR | 35.001 | 38.618 | 44.415 | 44.753 | 45.075 |

| SSIM | 0.93411 | 0.97113 | 0.98459 | 0.98581 | 0.98681 |

Train dataset size. The size of train datasets has an impact on the performance of our network. A larger train dataset typically provides more samples and a wider range of variations. In fact, proposed RLLR is enough for the reconstruction task of low-light spike streams. As shown in Table. 4, we find that as the dataset size increase, the performance of the model also improves. However, it is observed that the performance improvement becomes less significant after the dataset size reaches 60% of RLLR. It shows that the proposed RLLR is sufficient for training our network.

The number of continuous spike streams For solving the reconstruction difficulty caused by inadequate information in low-light scenes, the release time of spike in LR-Rep and temporal features in fusion module are maintained forward and backward in a recurrent manner. The number of continuous spike streams has an impact on our method performance. Fig. 12 shows its effect on the performance. We can find that, as the number increases, the performance of our method can greatly increase until convergence. This is because, as the number increases, our method can utilize more temporal information until sufficient. The reconstrued image from 21 continuous spike streams has more details in a shaded area.

6 Conclusion

We propose a bidirectional recurrent-based reconstruction framework for spike camera to better handle different light conditions. In our framework, a light-robust representation (LR-Rep) is designed to aggregate temporal information in spike streams. Moreover, a fusion module is used to extract temporal features. To evaluate the performance of different methods in low-light high-speed scenes, we synthesize a reconstruction dataset where light sources are carefully designed to be consistent with reality. The experiment on both synthetic data and real data shows the superiority of our method.

Acknowledgement This work was supported by the National Science and Technology Major Project (Grant No. 2022ZD0116305), the Beijing Natural Science Foundation (Grant No. JQ24023), and the Beijing Municipal Science & Technology Commission Project (No.Z231100006623010).

References

- [1] Brandli, C., Berner, R., Yang, M., Liu, S.C., Delbruck, T.: A 240 × 180 130 db 3 latency global shutter spatiotemporal vision sensor. IEEE Journal of Solid-State Circuits (JSSC) 49(10), 2333–2341 (2014)

- [2] Cai, Y., Bian, H., Lin, J., Wang, H., Timofte, R., Zhang, Y.: Retinexformer: One-stage retinex-based transformer for low-light image enhancement. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). pp. 12504–12513 (2023)

- [3] chen, S., Duan, C., Yu, Z., Xiong, R., Huang, T.: Self-supervised mutual learning for dynamic scene reconstruction of spiking camera. In: Proceedings of the International Joint Conference on Artificial Intelligence, IJCAI. pp. 2859–2866 (2022)

- [4] Delbrück, T., Linares-Barranco, B., Culurciello, E., Posch, C.: Activity-driven, event-based vision sensors. IEEE International Symposium on Circuits and Systems (ISCAS) pp. 2426–2429 (2010)

- [5] Dong, Y., Xiong, R., Zhang, J., Yu, Z., Fan, X., Zhu, S., Huang, T.: Super-resolution reconstruction from bayer-pattern spike streams. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 24871–24880 (2024)

- [6] Dong, Y., Xiong, R., Zhao, J., Zhang, J., Fan, X., Zhu, S., Huang, T.: Joint demosaicing and denoising for spike camera. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 38, pp. 1582–1590 (2024)

- [7] Dong, Y., Xiong, R., Zhao, J., Zhang, J., Fan, X., Zhu, S., Huang, T.: Learning a deep demosaicing network for spike camera with color filter array. IEEE Transactions on Image Processing 33, 3634–3647 (2024)

- [8] Dong, Y., Zhao, J., Xiong, R., Huang, T.: High-speed scene reconstruction from low-light spike streams. In: 2022 IEEE International Conference on Visual Communications and Image Processing (VCIP). pp. 1–5. IEEE (2022)

- [9] Fu, Z., Yang, Y., Tu, X., Huang, Y., Ding, X., Ma, K.K.: Learning a simple low-light image enhancer from paired low-light instances. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). pp. 22252–22261 (June 2023)

- [10] Graca, R., McReynolds, B., Delbruck, T.: Optimal biasing and physical limits of dvs event noise. arXiv preprint arXiv:2304.04019 (2023)

- [11] Guo, C., Li, C., Guo, J., Loy, C.C., Hou, J., Kwong, S., Cong, R.: Zero-reference deep curve estimation for low-light image enhancement. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 1780–1789 (2020)

- [12] Hu, L., Ma, L., Guo, Y., Huang, T.: Scsim: A realistic spike cameras simulator. arXiv preprint arXiv:2405.16790 (2024)

- [13] Hu, L., Zhao, R., Ding, Z., Ma, L., Shi, B., Xiong, R., Huang, T.: Optical flow estimation for spiking camera. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 17844–17853 (2022)

- [14] Huang, T., Zheng, Y., Yu, Z., Chen, R., Li, Y., Xiong, R., Ma, L., Zhao, J., Dong, S., Zhu, L., et al.: 1000 faster camera and machine vision with ordinary devices. Engineering (2022)

- [15] Jiang, Y., Gong, X., Liu, D., Cheng, Y., Fang, C., Shen, X., Yang, J., Zhou, P., Wang, Z.: Enlightengan: Deep light enhancement without paired supervision. IEEE transactions on image processing 30, 2340–2349 (2021)

- [16] Kang, Z., Li, J., Zhu, L., Tian, Y.: Retinomorphic sensing: A novel paradigm for future multimedia computing. In: Proceedings of the ACM International Conference on Multimedia (ACMMM). p. 144–152 (2021)

- [17] Li, C., Guo, C., Han, L., Jiang, J., Cheng, M.M., Gu, J., Loy, C.C.: Low-light image and video enhancement using deep learning: A survey. IEEE transactions on pattern analysis and machine intelligence 44(12), 9396–9416 (2021)

- [18] Li, C., Guo, C., Loy, C.C.: Learning to enhance low-light image via zero-reference deep curve estimation. IEEE Transactions on Pattern Analysis and Machine Intelligence 44(8), 4225–4238 (2021)

- [19] Li, J., Liu, J., Wei, X., Zhang, J., Lu, M., Ma, L., Du, L., Huang, T., Zhang, S.: Uncertainty guided depth fusion for spike camera. arXiv preprint arXiv:2208.12653 (2022)

- [20] Lichtsteiner, P., Posch, C., Delbruck, T.: A 128 × 128 120 db 15 latency asynchronous temporal contrast vision sensor. IEEE Journal of Solid-State Circuits (JSSC) 43(2), 566–576 (2008)

- [21] Liu, J., Zhang, Q., Li, J., Lu, M., Huang, T., Zhang, S.: Unsupervised spike depth estimation via cross-modality cross-domain knowledge transfer. arXiv preprint arXiv:2208.12527 (2022)

- [22] Shen, J., Ni, W., Xu, Q., Tang, H.: Efficient spiking neural networks with sparse selective activation for continual learning. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 38, pp. 611–619 (2024)

- [23] Shen, J., Xu, Q., Liu, J.K., Wang, Y., Pan, G., Tang, H.: Esl-snns: An evolutionary structure learning strategy for spiking neural networks. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 37, pp. 86–93 (2023)

- [24] Wang, J., Yang, P., Liu, Y., Shang, D., Hui, X., Song, J., Chen, X.: Research on improved yolov5 for low-light environment object detection. Electronics 12(14), 3089 (2023)

- [25] Wang, T., Zhang, K., Shen, T., Luo, W., Stenger, B., Lu, T.: Ultra-high-definition low-light image enhancement: A benchmark and transformer-based method. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 37, pp. 2654–2662 (2023)

- [26] Wang, X., Chan, K.C., Yu, K., Dong, C., Change Loy, C.: Edvr: Video restoration with enhanced deformable convolutional networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops (June 2019)

- [27] Wei, C., Wang, W., Yang, W., Liu, J.: Deep retinex decomposition for low-light enhancement. arXiv preprint arXiv:1808.04560 (2018)

- [28] Wilson, T.D.: On user studies and information needs. Journal of documentation 37(1), 3–15 (1981)

- [29] Wu, Y., Pan, C., Wang, G., Yang, Y., Wei, J., Li, C., Shen, H.T.: Learning semantic-aware knowledge guidance for low-light image enhancement. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 1662–1671 (2023)

- [30] Xu, Q., Gao, Y., Shen, J., Li, Y., Ran, X., Tang, H., Pan, G.: Enhancing adaptive history reserving by spiking convolutional block attention module in recurrent neural networks. Advances in Neural Information Processing Systems 36 (2024)

- [31] Xu, X., Wang, R., Lu, J.: Low-light image enhancement via structure modeling and guidance. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 9893–9903 (2023)

- [32] Ying, Z., Li, G., Gao, W.: A bio-inspired multi-exposure fusion framework for low-light image enhancement. arXiv preprint arXiv:1711.00591 (2017)

- [33] Zhai, M., Ni, K., Xie, J., Gao, H.: Spike-based optical flow estimation via contrastive learning. In: ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). pp. 1–5. IEEE (2023)

- [34] Zhang, J., Jia, S., Yu, Z., Huang, T.: Learning temporal-ordered representation for spike streams based on discrete wavelet transforms. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 37, pp. 137–147 (2023)

- [35] Zhang, J., Tang, L., Yu, Z., Lu, J., Huang, T.: Spike transformer: Monocular depth estimation for spiking camera. In: European Conference on Computer Vision (ECCV) (2022)

- [36] Zhao, J., Xiong, R., Huang, T.: High-speed motion scene reconstruction for spike camera via motion aligned filtering. In: International Symposium on Circuits and Systems (ISCAS). pp. 1–5 (2020)

- [37] Zhao, J., Xiong, R., Liu, H., Zhang, J., Huang, T.: Spk2imgnet: Learning to reconstruct dynamic scene from continuous spike stream. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 11996–12005 (2021)

- [38] Zhao, J., Zhang, S., Ma, L., Yu, Z., Huang, T.: Spikingsim: A bio-inspired spiking simulator. In: 2022 IEEE International Symposium on Circuits and Systems (ISCAS). pp. 3003–3007. IEEE (2022)

- [39] Zhao, R., Xiong, R., Zhao, J., Yu, Z., Fan, X., Huang, T.: Learninng optical flow from continuous spike streams. In: Proceedings of the Annual Conference on Neural Information Processing Systems (NeurIPS) (2022)

- [40] Zheng, Y., Zheng, L., Yu, Z., Shi, B., Tian, Y., Huang, T.: High-speed image reconstruction through short-term plasticity for spiking cameras. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 6358–6367 (2021)

- [41] Zhu, L., Dong, S., Huang, T., Tian, Y.: A retina-inspired sampling method for visual texture reconstruction. In: IEEE International Conference on Multimedia and Expo (ICME). pp. 1432–1437 (2019)

- [42] Zhu, L., Dong, S., Li, J., Huang, T., Tian, Y.: Ultra-high temporal resolution visual reconstruction from a fovea-like spike camera via spiking neuron model. IEEE Transactions on Pattern Analysis and Machine Intelligence 45(1), 1233–1249 (2022)

- [43] Zhu, L., Li, J., Wang, X., Huang, T., Tian, Y.: Neuspike-net: High speed video reconstruction via bio-inspired neuromorphic cameras. In: IEEE International Conference on Computer Vision (ICCV). pp. 2400–2409 (2021)

- [44] Zhu, L., Zheng, Y., Geng, M., Wang, L., Huang, H.: Recurrent spike-based image restoration under general illumination. In: Proceedings of the ACM International Conference on Multimedia (ACMMM). pp. 8251––8260 (2023)

- [45] Zhu, Y., Fang, W., Xie, X., Huang, T., Yu, Z.: Exploring loss functions for time-based training strategy in spiking neural networks. Advances in Neural Information Processing Systems 36 (2024)