Learning with Linear Function Approximations in Mean-Field Control††thanks: E. Bayraktar is partially supported by the National Science Foundation under grant DMS-2106556 and by the Susan M. Smith chair.

Abstract

The paper focuses on mean-field type multi-agent control problems with finite state and action spaces where the dynamics and cost structures are symmetric and homogeneous, and are affected by the distribution of the agents. A standard solution method for these problems is to consider the infinite population limit as an approximation and use symmetric solutions of the limit problem to achieve near optimality. The control policies, and in particular the dynamics, depend on the population distribution in the finite population setting, or the marginal distribution of the state variable of a representative agent for the infinite population setting. Hence, learning and planning for these control problems generally require estimating the reaction of the system to all possible state distributions of the agents. To overcome this issue, we consider linear function approximation for the control problem and provide coordinated and independent learning methods. We rigorously establish error upper bounds for the performance of learned solutions. The performance gap stems from (i) the mismatch due to estimating the true model with a linear one, and (ii) using the infinite population solution in the finite population problem as an approximate control. The provided upper bounds quantify the impact of these error sources on the overall performance.

1 Introduction

The goal of the paper is to present various learning methods for mean-field control problems under linear function approximations and to provide provable error bounds for the learned solutions.

1.1 Literature Review

Learning for multi agent control problems is a practically relevant and a challenging problem where there has been as a growing interest in recent years. A general solution methodology for multi-agent control problems is difficult to obtain and the solution, in general, is intractable except for special information structures between the agents. We refer the reader to the survey paper by [43] for a substantive summary of learning methods in the context of multi-agent decision making problems.

In this paper, we study a particular case of multi-agent problems in which both the agents and their interactions are symmetric and homogeneous. For these mean-field type decision making problems, the agents are coupled only through the so-called mean-field term. These problems can be broadly divided into two categories; mean-field game problems where the agents are competitive and interested in optimizing their self objective functions, and mean-field control problems, where the agents are interested in a common objective function optimization. We cite some papers by [21, 12, 11, 42, 26, 2, 18, 19, 24, 35, 40, 38, 39] and references therein, for papers in mean-field game setting. We do not discuss these in detail as our focus will be on mean-field control problems which are significantly different in both analysis and the nature of the problems of interest.

For mean-field control problems, where the agents are cooperative and work together to minimize (or maximize) a common cost (or reward) function, see [8, 17, 30, 14, 36, 13, 20, 7, 10] and references therein for the study of dynamic programming principle and learning methods in continuous time. In particular, we point out the papers [28, 17] which provide the justification for studying the centralized limit problem by rigorously connecting the large population decentralized setting and the infinite population limit problem.

For papers studying mean-field control in discrete time, we refer the reader to [33, 5, 22, 23, 32, 15]. [33, 32] study existence of solutions to the control problem in both infinite and finite population settings, and they rigorously establish the connection between the finite and infinite population problems. [5] studies the finite population mean-field control problems and their infinite population limit, and provide solutions of the ergodic control problems for some special cases.

In the context of learning, [22, 23] study dynamic programming principle and Q learning methods directly for the infinite population control problem. The value functions and the Q functions are defined for the lifted problem, where the state process is the measure-valued mean-field flow. They consider dynamics without common noise, and thus the learning problem from the perspective of a coordinator becomes a deterministic one.

[15] also considers the limit (infinite population) problem and studies different classes of policies that achieve optimal performance for the infinite population (limit problem) and focuses on Q learning methods for the problem after establishing the optimality of randomized feedback policies for the agents. The learning problem considers the state as the measure valued mean-field term and defines a learning problem over the set of probability measures where various approximations are considered to deal with the high dimension issues.

[4, 3] have studied learning methods for the mean-field game and control problems from a joint lens. However, for the control setup, they consider a different control objective compared to the previously cited papers. In particular, they aim to optimize the asymptotic phase of the control problem where the agents are assumed to reach to their stationary distributions under joint symmetric policies. Furthermore, the agents only use their local state variables, and thus the objective is to find a stationary measure for the agents where the cost is minimized under this stationary regime. Since the agents only use their local state variables (and not the mean-field term) for their control, the authors can define a Q function over the finite state and action spaces of the agents.

[34] consider a closely related problem to our setting, where they propose model-based learning methods for the mean-field control. Similar to [22, 23], they directly work with the infinite population dynamics without analyzing the approximation consistency between the finite-population dynamics and their infinite-population counterpart. Furthermore, they restrict the dynamics to the models with additive noise, and the optimality search is within deterministic and Lipschitz continuous controls.

We also note that there are various studies that focus on the application of the mean-field modeling using numerical methods based on machine learning techniques, see e.g. the works by [37, 1, 29].

In this paper, we will consider the learning problem using an alternative formulation where the state is represented as the measure valued mean-field term. To approximate this uncountable space, and the cost and transition functions, different from the previous works in the mean-field control setting, we will consider linear function approximation methods. These methods have been studied well for single agent discrete time stochastic control problems. We cite papers by [31, 16, 41, 27] in which reinforcement learning techniques are used to study Markov decision problems with continuous state spaces using linear function approximations.

Contributions.

-

•

In Section 2, we present the learning methods using linear function approximation. We focus on various scenarios.

-

–

We first consider the ideal case where we assume that the team has infinitely many agents. For this case, we study; (i) learning by a coordinator who has access to information about every agent in the team, and estimates a model from a data set by fitting a linear model that minimizes the distance between the training data and the estimate linear model, (ii) each agent estimates their own linear model using their local information via an iterative algorithm from a single sequence of data.

-

–

In Section 2.3, we consider the practical case, where the team has finitely many agents, and they aim to estimate a linear model from a single sequence of data, using their local information variables.

-

–

-

•

The methods we study in Section 2 minimize the distance between the learned linear model and the actual model under a probability measure that depends on the training data. However, to find upper bounds for the performance loss of the policies designed for the learned linear estimates in any scenario, we need uniform estimation errors rather than estimation errors. In Section 3, we generalize error bounds to uniform error bounds.

-

•

The proposed learning methods do not match the true model perfectly in general, due to linear approximation mismatch. Therefore, finally, in Section 4, we provide upper bounds on the performance of the policies that are designed for the learned models when they are applied on the true control problem. We note that the flow of the mean-field term is deterministic for infinitely many agents, and thus can be estimated using the dynamics without observing the mean-field term. Therefore, for the execution of the policies we focus on two methods, (i) open loop control, where the agents only observe their local states and estimate the mean-field term with the learned dynamics, (ii) closed loop control where the agents observe both their local information and the mean-field term. For each of these execution procedures, we provide upper bounds for the performance loss. As in Section 2, we first consider the ideal case where it is assumed that the system has infinitely many agents. In this case, the error bound depends on the uniform model mismatch between the learned model and the true model. We then consider the case with finitely many agents. We assume that each agent follows the policy that they calculate considering the limit (infinite population) model. In this case, the error upper bounds depend on both the uniform model mismatch, and an empirical concentration bound since we estimate the finitely many agent model with the infinite population limit problem.

1.2 Problem formulation.

The dynamics for the model are presented as follows: suppose agents (decision-makers or controllers) act in a cooperative way to minimize a cost function, and the agents share a common state and an action space denoted by and . We assume that and are finite. We refer the reader to the paper by [6], for finite approximations of mean-field control problems where the state and actions spaces of the agents are continuous. For any time step , and agent we have

| (1) |

for a measurable function , where denotes the i.i.d. idiosyncratic noise process.

Furthermore, denotes the empirical distribution of the agents on the state space such that for a given joint state of the team of agents

where represents the Dirac measure centered at . Throughout this paper, we use the notation

to denote the space of all joint state variables of the team equipped with the product topology on . We further define , the set of all empirical measures on constructed using sequences of states in , such that

Note that where denotes the set of all probability measures on equipped with the weak convergence topology.

Equivalently, the next state of the agent is determined by some stochastic kernel, that is, a regular conditional probability distribution:

| (2) |

At each time stage , each agent receives a cost determined by a measurable stage-wise cost function . If the state, action, and empirical distribution of the agents are given by , then the agent receives the cost.

For the remainder of the paper, by an abuse of notation, we will sometimes denote the dynamics in terms of the vector state and action variables, , and , and vector noise variables such that

For the initial formulation, every agent is assumed to know the state and action variables of every other agent. We define an admissible policy for an agent , as a sequence of functions , where is a -valued (possibly randomized) function which is measurable with respect to the -algebra generated by

| (3) |

Accordingly, an admissible team policy, is defined as , where is an admissible policy for the agent . In other words, agents share the complete information.

The objective of the agents is to minimize the following cost function

where denotes the expectation with respect to the probability measure induces by the team policy , and where

The optimal cost is defined by

| (4) |

where denotes the set of all admissible team policies.

We note that this information structure (3) will be our benchmark for evaluating the performance of the approximate solutions using simpler information structures presented in the paper. In other words, the value function that is achieved when the agents share full information and full history will be taken to be our reference point for simpler information structures.

For example, one immediate observation is that the problem under full information sharing can be reformulated as a centralized control problem where the state and action spaces are and . Therefore, one can consider Markov policies such that without loss of optimality.

However, if the problem is modeled as an MDP with state space and action space , we face some computational challenges:

-

(i)

the curse of dimensionality when is large, since and might be too large even when are of manageable size,

-

(ii)

the curse of coordination: even if the optimal team policy is found, its execution at the agent level requires coordination among the agents. In particular, the agents may need to follow asymmetric policies to achieve optimality, even though we assume full symmetry for the dynamics and the cost models. The following simple example from [9] shows that the agents may need to follow asymmetric policies to achieve optimality which requires coordination among the agents.

Example 1.1.

Consider a team control problem with two agents, i.e. . We assume that . The stage wise cost function of the agents is defined as

where

In words, the state distribution should be distributed equally over the state space for minimal stage-wise cost. For the dynamics we assume a deterministic model such that

In words, the action of an agent purely determines the next state of the same agent. The goal of the agents is to minimize

for some initial state values , by choosing policies . The expectation is over the possible randomization of the policies. We assume full information sharing such that every agent has access to the state and action information of the other agent.

We let the initial states be . An optimal policy for the agents for the problem is given by

which always spreads the agents equally over the state space. One can realize that, when the agents are positioned at either or , they have to use personalized policies to decide on which one to be placed at or .

For any symmetric policy , including the randomized ones, there will always be cases with strict positive probability, where the agents are positioned at the same state, and thus the performance will be strictly worse than the optimal performance.

A standard approach to deal with mean-field control problems when is large is to consider the infinite population problem, i.e. taking the limit . A propagation of chaos argument can be used to show that in the limit, the agents become asymptotically independent. Hence, the problem can be formulated from the perspective of a representative single agent. This approach is suitable to deal with coordination challenges, as the correlation between the agents vanish in the limit, and thus the symmetric policies can achieve optimal performance for the infinite population control problem. In particular, for Example 1.1 in the infinite population setting, the optimal policy is to follow a randomized policy such that . We will introduce the limit problem in Section 1.5 and make the connections between the limit problem and the finite population problem rigorous.

1.3 Preliminaries.

Recall that we assume that the state and action spaces of agents are finite (see [6] for finite approximations of continuous space mean-field control problems).

Note. Even though we assume that and are finite, we will continue using integral signs instead of summation signs for expectation computations due to notation consistency, by simply considering Dirac delta measures.

We metrize and so that if and otherwise. Note that with this metric, for any and for any coupling of , we have that

which in particular implies via the optimal coupling that

where denotes the first order Wasserstein distance, or the Kantorovich–Rubinstein metric, and denotes the total variation norm for signed measures.

Note further that for measures defined on finite spaces, we have that

| (5) |

Hence, in what follows we will simply write to refer to the distance between and , which may correspond to the total variation distance, the first order Wasserstein metric, or the normalized distance.

We also define the following Dobrushin coefficient for the kernel :

| (6) |

Realize that we always have . In certain cases, we can also have strict inequality, e.g. if there exists some such that

then one can show that

1.4 Measure Valued Formulation of the Finite Population Control Problem

For the remaining part of the paper, we will often consider an alternative formulation of the control problem for the finitely many agent case where the controlled process is the state distribution of the agents, rather than the state vector of the agents. We refer the reader to [6] for the full construction; in this section, we will give an overview.

We define an MDP for the distribution of the agents, where the control actions are the joint distribution of the state and action vectors of the agents.

We let the state space be which is the set of all empirical measures on that can be constructed using the state vectors of -agents. In other words, for a given state vector , we consider to be the new state variable of the team control problem.

The admissible set of actions for some state , is denoted by , where

| (7) |

that is, the set of actions for a state , is the set of all joint empirical measures on whose marginal on coincides with .

We equip the state space , and the action sets , with the norm (see (5)) .

One can show that [6, 9] the empirical distributions of the states of agents , and of the joint state and actions define a controlled Markov chain such that

| (8) |

where is the transition kernel of the centralized measure valued MDP, which is induced by the dynamics of the team problem.

We define the stage-wise cost function by

| (9) |

Thus, we have an MDP with state space , action space , transition kernel and the stage-wise cost function .

We define the set of admissible policies for this measured valued MDP as a sequence of functions such that at every time , is measurable with respect to the -algebra generated by the information variables

We denote the set of all admissible control policies by for the measure valued MDP.

In particular, we define the infinite horizon discounted expected cost function under a policy by

We also define the optimal cost by

| (10) |

The following result shows that this formulation is without loss of optimality:

Theorem 1 ([6]).

Under Assumption 1, for any that satisfies , that is for any with distribution , we have that

-

i.)

-

ii.)

There exists a stationary and Markov optimal policy for the measure valued MDP, and using , every agent can construct a policy such that for , we have that

That is, the policy obtained from the measure valued formulation attains the optimal performance for the original team control problem.

1.5 Mean-field Limit Problem

We now introduce the control problem for infinite population teams, i.e. for . For some agent , we define the dynamics as

where and is the law of the state at time . The agent tries to minimize the following cost function:

where is an admissible policy such that is measurable with respect to the information variables

Note that the agents are no longer correlated and they are indistinguishable. Hence, in what follows we will drop the dependence on when we refer to the infinite population problem.

The problem is now a single agent control problem; however, the state variable is not Markovian. However, we can reformulate the problem as an MDP by viewing the state variable as the measure valued .

We let the state space to be . Different from the measure valued construction we have introduced in Section 1.4, we let the action space to be . In particular, an action for the team is a randomized policy at the agent level. We equip with the product topology, where we use the weak convergence for each coordinate. We note that each action and state induce a distribution on , which we denote by .

Recall the notation in (2); at time , we can use the following stochastic kernel for the dynamics:

which is induced by the idiosyncratic noise . Hence, we can define

| (11) |

Note that the dynamics are deterministic for the infinite population measure valued problem. Furthermore, we can define the stage-wise cost function as

| (12) |

Hence, the problem is a deterministic MDP for the measure valued state process . A policy, say for the measure-valued MDP, can be written as

for some . That is, an agent observes and chooses their actions as an agent-level randomized policy .

We reintroduce the infinite horizon discounted cost of the agents for the measure valued formulation:

for some initial mean-field term and under some policy . Furthermore, the optimal policy is denoted by

At a given time , the pair can be used as sufficient information for decision making by the agent . Furthermore, if the model is fully known by the agents, then the mean-field flow can be perfectly estimated if every agent agrees and follows the same policy , since the dynamics of is deterministic.

We note that for the infinite population control problem, the coordination requirement between the agents may be relaxed, though cannot be fully abandoned in general (see Section 1.6). In particular, if the agents agree on a common policy , then for the execution of this policy, no coordination or communication is needed since every agent can estimate the mean-field term independently and perfectly. Furthermore, every agent can use the same agent-level policy symmetrically, without any coordination with the other agents.

The following result makes the connection between the finite population and the infinite population control problem rigorous [32, 5, 9].

Assumption 1.

- i.

-

ii

is Lipschitz in such that

for some .

Theorem 2.

Under Assumption 1, the following holds:

-

i.

For any ,

That is, the optimal value function of the finite population control problem converges to that of the infinite population control problem as .

-

ii.

Suppose each agent solves the infinite population control problem given in (11) and (12), and constructs their policies, say

If they follow the infinite population solution in the finite population control problem, for any we then have

That is, the symmetric policy constructed using the infinite population problem is near optimal for finite but sufficiently large populations.

Remark 1.

The result has significant implications for the computational challenges we have mentioned earlier. Firstly, the second part of the result states that if the number of agents is large enough, then the symmetric policy obtained from the limit problem is near optimal. Hence, the agents can use symmetric policies without coordination, solving their control problems as long as they have access to the mean-field term and their local state. Secondly, note that the flow of the mean-field term (11) is deterministic if there is no common noise affecting the dynamics. Thus, agents can estimate the marginal distribution of their local state variables , without observing the mean-field term if they know the dynamics. In particular, without the common noise, the local state of the agents and the initial mean-field term are sufficient information for near optimality.

However, as we will see in what follows, to achieve near optimal performance, agents must agree on a particular policy . In particular, if the optimal infinite population policy is not unique, and the agents apply different optimal policies without coordination, the results of the previous results might fail. Hence, coordination cannot be fully ignored.

1.6 Limitations of Full Decentralization

We have argued in the previous section that the team control problem can be solved near optimally by using the infinite population control solution. Furthermore, if the agents agree on the application of a common optimal policy, the resulting team policy can be executed independently in a decentralized way and achieves near-optimal performance.

The following example shows that if the agents do not coordinate on which policy to follow, i.e. if they are fully decentralized, then the resulting team policy will not achieve the desired outcome.

Example 1.2.

Consider a team control problem with infinite population where . The stage wise cost function of the agents is defined as

where

In words, the state distribution should be either be distributed equally over the state space or it should fully concentrate in state for minimal stage-wise cost. One can check that the cost function satisfies Assumption 1 for some (e.g. ). For the dynamics we assume a deterministic model such that

In words, the action of an agent purely determines the next state of the same agent. The goal of the agents is to minimize

where the initial distribution is given by .

It is easy to see that there are two possible optimal policies for the agents and where

If all the agents coordinate and apply either or all together, the realized costs will be , i.e.

However, if the agents do not coordinate and pick their policies from randomly, the cost incurred will be strictly greater than . For example, assume that any given agent decides to use with probability and the policy with probability . Then the resulting policy, say will be such that

Thus, at every time step , of the agents will be in state and of the agents will be in state , hence the total accumulated cost of the resulting policy will be

Thus, we see that if the optimal policy for the mean-field control problem is not unique, the agents cannot follow fully decentralized policies, and they need to coordinate at some level. For this problem, if they agree initially on which policy to follow, then no other communication is needed afterwards for the execution of the decided policy. Nonetheless, an initial agreement and coordination is needed to achieve the optimal performance.

We note that the issue with the previous example results from the fact that the optimal policy is not unique. If the optimal policy can be guaranteed to be unique, then the agents can act fully independently.

2 Learning for Mean-field Control with Linear Approximations

We have seen in the previous sections that in general there are limitations for full decentralization, and that a certain level of coordination is required for optimal or near optimal performance during control. In this section, we will study the learning problem in which neither the agents nor the coordinator know the dynamics and aims to learn the model or optimal decision strategies from the data.

We have observed that the limit problem introduced in Section 1.5 can be seen as a deterministic centralized control problem. In particular, if the model is known, and once it is coordinated which control strategy to follow, the agents do not need further communication or coordination to execute the optimal control. Each agent can simply apply an open-loop policy using only their local state information, and the mean-field term can be estimated perfectly, if every agent is following the same policy. However, to estimate the deterministic mean-field flow , the model must be known. For problems where the model is not fully known, the open-loop policies will not be applicable.

Our goal in this section is to present various learning algorithms to learn the dynamics and cost model of the control problem. We will first focus on the idealized scenario, where we assume that there exist infinitely many agents on the team. For this case, we provide two methods; (i) the first one where a coordinator has access to all information of every agent, and decides on the exploration policy, and (ii) the second one where each agent learns the model on their own by tracking their local state and the mean-field term. However, the agents need to coordinate for the exploration policy through a common randomness variable to induce stochastic dynamics for better exploration. Next, we study the realistic setting where the team has large but finitely many agents. For this case, we only consider an independent learning method where the agents learn the model on their own using their local information variables.

Before we present our learning algorithms, we note that the space is uncountable even under the assumption that is finite. Therefore, we will focus on finite representations of the cost function and the kernel . In particular, we will try to learn the functions of the following form

| (13) |

where , for a set of linearly independent functions for each pair , for some . We assume that the basis functions are known and the goal is to learn the parameters and . We assume , and is a vector of unknown signed measures on .

In what follows, we will assume that the basis functions, are uniformly bounded. Note that this is without loss of generality.

Assumption 2.

We assume that

for every pair, and for all .

For the rest of the paper, we will use and interchangeably; similarly we will use and interchangeably.

Remark 2.

We note that we do not assume that the model and the cost function have the linear form given in (2). However, we will aim to learn and estimate models among the class of linear functions presented in (2). We will later analyze error bounds for the case where the actual model is not linear and thus the learned model does not perfectly match the true model and study the performance loss when we apply policies that are learned for the linear model.

2.1 Coordinated Learning with Linear Function Approximation for Infinitely Many Players

In this section, we will consider an idealized scenario, where there are infinitely many agents, and a coordinator learns the model by linear function approximation.

Data collection. For this section, we assume that there exists a training set that consists of a time sequence of length . The training set is assumed to be coming from an arbitrary sequence of data. The data at each time stage contains

for all the agents present in the team, , where the agents’ states are distributed according to at the given time step. That is, every data point includes the current state and action, the one-step ahead state, the stage-wise cost realization, and the mean-field term for every agent. Furthermore, we assume the ideal scenario where there are infinitely many agents. Hence, at every time step, the coordinator has access to infinitely many data points where the spaces are finite. The coordinator then has access to infinitely many sample transitions observed under , and thus, the kernel can be perfectly estimated for every such that and , via empirical measures. Here, represents the exploration policy of the agents. We assume the following:

Assumption 3.

For any , the exploration policy for every agent puts positive probability on to every control action such that

We define the following sets for which the model and the cost functions can be learned perfectly within the training data: let , we define

| (14) |

denotes the set of probability measures which assign positive measure to a particular state that are also in the training data for the mean-field terms. In particular, for a given pair, the kernel and the cost can be learned perfectly for every with Assumption 3.

For a given , we denote by the number of mean-field terms within the set (see (14)). For every pair, the coordinator aims to find and such that

is minimized.

The least squares linear models can be estimated in closed form for and using the training data. We define the following vector and matrices to present the closed form solution in a more compact form: for each we introduce , and ,

| (15) |

Furthermore, we also define

| (16) |

Assuming that has linearly independent columns, i.e. and are linearly independent for , the estimates for and can be written as follows

| (17) |

Note that above, each row of represents a signed measure on .

2.2 Independent Learning with Linear Function Approximation for Infinitely Many Players

In this section, we will introduce a learning method where the agents perform independent learning to some extent. Here, rather than using a training set, we will focus on an online learning algorithm where at every time step, agent observes . That is, each agent has access to their local state, action, cost realizations, one-step ahead state, and the mean-field term. However, they do not have access to local information about the other agents.

We first argue that full decentralization is usually not possible in the context of learning either. Recall that the mean-field flow is deterministic if every agent follows the same independently randomized agent-level policy. Furthermore, the flow of the mean-field terms remains deterministic even when the agents choose different exploration policies if the randomization is independent. To see this, assume that each agent picks some policy randomly by choosing from some arbitrary distribution, where the mapping is predetermined. If the agents pick independently, the mean-field dynamics is given by

where is the distribution by which the agents perform their independent randomization for the policy selection. Hence, the mean-field term dynamics follow a deterministic flow. Note that for the above example, for simplicity, we assume that the agents pick according to the same distribution. In general, even if the agents follow different distributions for , the dynamics of the mean-field flow would remain deterministic according to a mixture distribution.

Deterministic behavior might cause poor exploration performance. There might be cases where the mean-field flow gets stuck at a fixed distribution without learning or exploring the ‘important’ parts of the space sufficiently. To overcome this issue, and to make sure that the system is stirred sufficiently well during the exploration, one option is to introduce a common randomness for the selection of the exploration policies. In particular, each agent follows a randomized policy where the common randomness is mutual information. Then the dynamics of the mean-field flow can be written as

| (18) |

The common noise variable ensures a level of coordination among the agents. However, this is still a significant relaxation compared to full coordination where the agents share their full state or control data. In this section, we show that agents can construct independent learning iterates that converge by coordinating through an arbitrary common source of randomness.

We assume the following for the mean-field flow during the exploration:

Assumption 4.

Consider the Markov chain whose dynamics are given by (18) We assume that has geometric ergodicity with a unique invariant probability measure such that

for some and some .

Remark 3.

We can establish some sufficient conditions on the transition kernel of the system to test the ergodicity. We note that ([25, p 56, 3.1.1]) a sufficient condition is the following: there exists a mean-field state, say such that

| (19) |

That is, we need to be able to find a set of common noise realizations whose induced randomized exploration policies can take the state distribution to independent of the starting distribution .

The assumption stated in this form indicates that the condition is of the stochastic reachability or controllability type. It requires that from any initial distribution , there exists a control policy that can steer the distribution of the system to some target measure . We also note that the above can be generalized to a -step transition requirement. Analyzing this stochastic controllability behavior for the mean field systems is beyond the scope of the current paper, however, we give some examples in what follows.

We look further into (19). We fix some , and we define the following values:

Note that is the average of the probabilities under the measure for the values. Furthermore, by selecting an appropriate randomized policy, we can control these values in the interval

Thus, if the intersection of these intervals is nonempty, i.e. if

| (20) |

then one can set to be a value in this intersection independent of . By doing this for all , we can set a reachable from any . As a result, if (20) holds for every , then (19), and thus Assumption 4 can be shown to hold.

A somewhat restrictive example for (20) is the following: assume that there exists a control action which can reset the state to some from any state and any mean-field term , that is

This means that for all when , and for all when . Hence, can be reached from any starting point by applying the policy for all . If this policy is among the set of exploration policies, the ergodicity assumption for the mean-field flow would be satisfied.

We note again that a general result would require more in depth analysis, however, the above gives some idea on the implications of this assumption on the controllability of the mean-field model.

We now define the trained measures, for each pair based on the invariant measure for the mean-field flow. Under Assumption 3, that is assuming for all pairs, we can write

| (21) |

Note that the trained sets of mean-field terms are independent of the control action , as the exploration policies are independent of the mean-field terms given the state . These sets have similar implications as the sets defined in (14). In particular, they indicate for which mean-field terms, one can estimate the kernel and the cost function via the training process.

We now summarize the algorithm used for each agent. We drop the dependence on agent identity , and summarize the steps for a generic agent. At every time step , every agent performs the following steps:

-

•

Observe the common randomness given by the coordinator, and pick an action such that

-

•

Collect where

-

•

For all

(22) -

•

Note that the signed measure vector consists signed measures defined on , we denote by the vector values of for where . For all and

(23)

We next show that the above algorithm converges if the learning rates are chosen properly. To show the convergence, we first present a convergence result for stochastic gradient descent algorithms with quadratic cost, where the error is Markov and stationary. We note that similar results have been established in the literature for the stochastic gradient iterations under Markovian noise processes under various assumptions; however, verifying most of these assumptions, such as the boundedness of the gradient, the boundedness of the iterates, or uniformly bounded variance, is not straightforward. Hence, we provide a proof in the appendix for completeness.

Proposition 1.

Let denote a Markov chain with the invariant probability measure where is a standard Borel space. We assume that has geometric ergodicity such that for some and some . Let be such that

for some , and for . We assume that are uniformly bounded. We denote by

Consider the iterations

where the gradient is with respect to . If the learning rates are such that and with probability one, we then have that almost surely.

Proof.

The proof can be found in Appendix A. ∎

Corollary 1.

Let Assumption 4 and Assumption 3 hold and let the learning rates be chosen such that unless . Furthermore, and with probability one for all . Then, the iterations given in (22) and (23) converge with probability 1. Furthermore, the limit points, say and are such that

for every pair and for every , where is the th column of . Furthermore, denotes the trained set based on the invariant measure of the mean-field flow under the exploration policy with common randomness (see (2.2)).

Proof.

We define the following stopping times

such that indicates the -th time the pair is visited.

For the iterations (22), Proposition 1 applies such that for each , , and and finally the noise process . Note that and are assumed to be uniformly bounded which also agrees with the assumptions in Propositions 1. Furthermore, with the strong Markov property, is also a Markov chain which is sampled when the state-action pair is . Thus, the invariant measure for the sampled process is as defined in (2.2).

For the iterations (23), Proposition 1 applies such that for each and each , , and . We note that (see (1)) where is the i.i.d. noise for the dynamics of agents. Thus, the noise process for iterations (23) can be taken to be the joint process where is an ergodic Markov process, and is an i.i.d. process. In particular, for every pair and for every , if we consider the expectation over where , we get

for every .

The algorithm in (23) minimizes

for each where is the distribution of the noise term. We can then open up the above term to write:

Hence, the algorithm minimizes

for each . ∎

2.3 Learning for Finitely Many Players

In this section, we will study the more realistic scenario in which the number of agents is large but finite. The learning methods presented in the previous sections have focused on the ideal case where the system has infinitely many players. Although the setting with the infinitely many agents helps us to fix the ideas for the learning in the mean-field control setup, we should note that it is only an artificial setup, and the infinite population setup is only used as an approximation for large population control problems. Hence, we need to study the actual setup for which the limit problem is argued to be a well approximation, that is the problem with very large but finitely many agents.

We will apply the independent learning algorithm presented for the infinite population case, and study the performance of the learned solutions for the finitely many player setting. In particular, we will assume that the agents follow the iterations given in (22) and (23). We note, however, that the agents will not need to use common randomness during exploration as the flow of the mean-field term is stochastic for finite populations without common randomness. The method remains valid under common randomness as well; in fact, the common randomness, in general, encourages the exploration of the state space. The method is identical to the one presented in Section 2.2. However, we present the method again since it has some subtle differences.

At every time step , agent performs the following steps:

-

•

Pick an action such that

-

•

Collect where and

-

•

For all

(24) -

•

Denoting by the vector values of for all where . For all and

(25)

Remark 4.

We note that the iterates and depend on the agent identity , in this case, as each agent can learn the model independently. Moreover, the learning rates and the basis functions might depend on the agent identity as well. However, we omit the dependence in the notation to reduce notational clutter.

Assumption 5.

Under the exploration team policy , the state vector process of the agents is irreducible and aperiodic and in particular admits a unique invariant measure, and thus the mean-field flow admits a unique invariant measure, say , as well.

Remark 5.

We note that a sufficient condition for the above assumption to hold is that there exists some such that for any and for any . In particular, this implies that

and thus [25, p 56, 3.1.1] implies that the process is geometrically ergodic.

The next result shows the convergence of the algorithm. Similar to the previous section, we first define the trained sets of mean-field terms for every pair using the stationary distribution of the mean-field terms. We assume that Assumption 3 holds for every policy such that for every pair. Denoting the invariant distribution of the joint state process by , for some , for agent , we can write

| (26) |

Note that the trained measure of mean-fields is independent of the control actions; however, it does depend on the agent identity as the agents follow distinct exploration policies.

Corollary 2.

Let Assumption 5 hold, and let Assumption 3 hold for each policy . Assume further that the learning rates of every agent satisfy the assumption of Corollary 1 such that and with probability one for all . Then, the iterations given in (24) and (25) converge with probability one. Furthermore, for agent , the limit points, say and are such that

for every pair and for every , where is the th column of . Furthermore, denotes the trained set based on the invariant measure of the mean-field flow under the exploration policy with common randomness (see (2.3)).

3 Uniform Error Bounds for Model Approximation

The learning methods we have presented in Section 2 minimize the distance between the true model and the linear approximate model, under the probability measure induced by the training data. In particular, denoting the learned parameters for a fixed pair by , and , we have that

| (27) |

for some probability measure . The measure depends on the learning method used.

- •

- •

-

•

Finally, for the individual learning method for finite populations in Section 2.3, depends on the agent identity , and thus denoted by . Similar to the infinite population setting, it represents the invariant measure of the process conditioned on the event , for agent where is the dimensional vector state of the team of agents. We note that each agent might have different trained sets of mean-field terms in this setting, since the policies may be distinct.

When the learned policy is executed, the flow of the mean-field is not guaranteed to stay in the support of the training measure . Hence, in what follows, we aim to generalize the performance of the learned models over the space .

In what follows, we will sometimes refer to as the training measure.

3.1 Ideal Case: Perfectly Linear Model

If the cost and the kernel are fully linear for a given set of basis functions then the linear model can be learned perfectly. That is, for the given basis functions , there exist and such that

The model can be learned perfectly with a coordinator under the method presented in Section 2.1 if

-

•

the training set is such that for each pair , there exist at least different data points. Furthermore, for a given data point of the form , the state-action distribution for this point is such that

-

•

and if the basis functions and are linearly independent for every that is if (see (2.1)) has independent columns.

3.2 Nearly Linear Models

In this section, we provide a result that states that if the true model can be approximated close to a linear model, then the models learned with the least square method can approximate the true model uniformly in the order of if the training set is informative enough.

The following assumption states that the true model is nearly linear.

Assumption 6.

We assume the existence of and with the following property: denoting by the th column of , for some , and

| (28) |

In particular, further assuming , the above implies that

for all .

We note that, in general, there is no guarantee that the learned dynamics constitute a proper stochastic kernel. This can be guaranteed when the model is fully linear as discussed in Section 3.1 or when we consider a discretization based approximation as described in Section 3.3. However, for general linear approximations, as in this section, we project the learned model onto the set of probability measures , i.e. the simplex over .

In particular, we use the following notation:

| (29) |

where and denote the learned models based on the least square method, see (3).

Proposition 2.

Proof.

We first note that since the learned minimizes the distance to the true model under the training measure , (6) implies that

In particular, via the triangle inequality (under the norm) we also have that

We can further write that

where is the minimum eigenvalue of . Thus, we have that

Finally, using the triangle inequality with the fact that

where we used since we assume that via Assumption 2.

For the proof of the error bound of the estimate kernel we follow identical steps, recalling that by construction minimizes the distance to and using Assumption 6, we write that

which yields

where is the minimum eigenvalue of .

Since is the projection of onto the space of probability measures, we have, by the definition of the projection, that

We then write the following using the triangle inequality

where we used with Assumption 6. ∎

3.3 A Special Case: Linear Approximation via Discretization

In this section, we show that the discretization of the space can be seen as a particular case of linear function approximation with a special class of basis functions. In particular, for this case, we can analyze the error bounds of the learned policy with mild conditions on the model.

Let be a disjoint set of quantization bins of such that . We define the basis functions for the linear approximation such that

for all pairs. Note that in general the quantization bins, ’s, can be chosen differently for every ; for the simplicity of the analysis, we will work with a discretization scheme which is the same for every . An important property of the discretization is that the basis functions form an orthonormal basis for any training measure with for each quantization bin . That is

for every pair. This property allows us to analyze the uniform error bounds of the discretization method more directly.

The linear fitted model (see (3) with the chosen basis functions becomes

| (30) |

In words, the learned coefficients are the averages of cost and transition realizations from the training set of the corresponding quantization bin.

The following then is an immediate result of Assumption 1.

4 Error Analysis for Control with Misspecified Models

In the previous section, we have studied the uniform mismatch bounds of the learned models. In this section, we will focus on what happens if the controllers designed for the linear estimates are used for the true dynamics. We will provide error bounds for the performance loss of the control designed for a possibly missepecified model.

We will analyze the infinite population and the finite population settings separately. We note that some of the following results (e.g. Lemma 3) have been studied in the literature to establish the connection between the -agent control problems and the limit mean-field control problem without the model mismatch aspect. That is, existing results study what happens if one uses the infinite population solution for the finite population control problem with perfectly known dynamics (see e.g. [33, 5, 32]). However, we present the proof of every result for completeness and because of the connections in the analysis we follow throughout the paper. Furthermore, the existing results are often stated under slightly different assumptions and settings such as being stated only for closed loop policies, or only for policies that are open loop in the sense that they are measurable with respect to the noise process.

4.1 Error Bounds for Infinitely Many Agents

As we have observed in Example 1.2, even when agents agree on the model knowledge, without coordination on which policy to follow, the optimality may not be achieved. Therefore, we assume that after the learning period, the team of agents collectively agrees on the cost and transition models given by and and designs policies for this model. We will assume that

| (31) |

for some and for all . That is represents the uniform model mismatch constant.

We will consider two different cases for the execution of the designed control.

-

•

Closed loop control: The team decides on a policy , and uses their local states and the mean-field term to apply the policy . That is, an agent observes the mean-field term , chooses and applies their control action according to with the local state . The important distinction is that the mean-field term is observed by every agent, and they decide on their agent-level policies with the observed mean-field term. Hence, we refer to this execution method to be the closed loop method since the mean-field term is given as a feedback variable.

-

•

Open loop control: We have argued earlier that the flow of the mean-field term is deterministic for the infinitely many agent case, see (11). In particular, the mean-field term can be estimated with the model information. Hence, for this case, we will assume that the agents only observe their local states, and estimates the mean-field term independent instead of observing it. That is, an agent estimates the mean-field term , and applies their control action according to with the local state . Note that if the model dynamics were perfectly known, this estimate would coincide with the true flow of the mean-field term. However, when the model is misspecified, the estimate and the correct mean-field term will deviate from each other, and we will need to study the effects of this deviation on the control performance, in addition to the incorrect computation of the control policy.

In what follows, previously introduced constants and will be used often. We refer the reader to Assumption 1 for , and equation (6) for .

For the results in this section, we will require that where . We note that this assumption is needed to show the Lipschitz continuity of the value function with respect to . The following provides an example where this bound is not satisfied, and the value function is not Lipschitz continuous.

Example 4.1.

Consider a control-free (without loss of optimality) dynamics, with a binary state space . We assume that

that is, the state process moves to with probability independent of the value of the state at the current step. We first notice that for the binary state space. Furthermore, we note that this kernel is Lipschitz continuous in with Lipschitz constant 2, that is . To see this, consider the following for

where we used the bound that which is the minimal uniform upper bound for all .

Hence, the kernel is Lipschitz continuous with constant 2. Furthermore, since the dynamics do not depend and , we have that , and thus .

The stage-wise cost is given by . We consider Lipschitz continuity of the value function around ,i.e. around . Note that for some initial distribution , one can iteratively show that

Hence, we can write the value function as

To show that this function is not Lipschitz continuous, we consider two points , without loss of generality assume that :

for some where we used the mean value theorem for . We can see that the above cannot be bounded uniformly when is around if , i.e. if . This implies that the value function cannot be Lipschitz continuous if .

4.1.1 Error Bounds for Closed Loop Control

We assume that the agents calculate an optimal policy, say , for the incorrect model ( and ), and observe the correct mean-field term say , at every time step . The agents then use

| (32) |

to select their control actions at time .

We denote the accumulated cost under this policy by , and we will compare this with the optimal cost that can be achieved, which is for some initial distribution .

Theorem 3.

Proof.

We start with the following upper-bound

| (33) |

where denotes the optimal value function for the mismatched model. We have an upper-bound for the second term by Lemma 1. We write the following Bellman equations for the first term:

We can then write

We note that and . Using Lemma 1 for the third and the last terms above, we get

Rearranging the terms and taking the supremum on the left hand side over , and noting that we can then write

Combining this bound, and Lemma 1 with (33), we can conclude the proof.

∎

4.1.2 Error Bounds for Open Loop Control

We assume that the agents calculate an optimal policy, say for the incorrect model, and estimate the mean-field flow under the incorrect model with the policy . That is, at every time step , the agents use

| (34) |

to select their control actions at time . Furthermore, is estimated with

| (35) |

where is the learned and possibly incorrect model. We are then interested in the optimality gap given by

where denotes the accumulated cost when the agents follow the open loop policy at every time . We note that the distinction from the closed loop control is that is not observed but estimated using the model .

Theorem 4.

Proof.

We start with the following upper-bound

| (36) |

We have an upper-bound for the second term by Lemma 1. We now focus on the first term:

where we write , and denotes the measure flow under the true dynamics with the incorrect policy , that is

We next claim that

We show this by induction. For , we have that

We now assume that the claim is true for :

where we used the induction argument at the last inequality. We now go back to:

For the term inside the summation, we write

Using this bound, we finalize our argument. In the following we denote by and to conclude:

This is the bound for the first term in (36), combining this with the upper-bound on the second term in (36) by Lemma 1, we can complete the proof. ∎

Lemma 1.

Proof.

The proof can be found in the appendix B. ∎

4.2 Error Bounds for Finitely Many Agents

We introduce the following constant to denote the expected distance of an empirical measure to its true distribution:

| (37) | |||

| (38) |

where is an empirical measure of the distribution , and the expectation is with respect to the randomness over the realizations of .

Remark 6.

We note that the constants can be bounded in terms of the population size . In particular, for the finite space and

where in general depends on the underlying space (or the space for ). Furthermore, for continuous state and action spaces, e.g. for , the empirical error term is in the order of .

4.2.1 Error Bounds for Open Loop Control

In this section, we will study the case where each agent in an -agent control system follows the open-loop control given by the solution of the infinite population control problem with mismatched model estimation. We summarize this for some agent as follows:

- •

-

•

Estimate the mean-field term at time according to (35) using the approximate model

-

•

Find the randomized agent level policy using

-

•

Observe local state , and apply action .

If every agent follows this policy, we have the following upperbound for the performance loss compared to the optimal value of the -population control problem,

Theorem 5.

Under Assumption 1, if each agent follows the steps summarized above, we then have that

where , and .

Proof.

Lemma 2.

Let be the state of the agent at time when each agent follows the open-loop policy in an -agent control dynamics. We denote by the vector of the states of agents at time . Under Assumption 1, we then have that

where , and where the expectation is with respect to the random dynamics of the player control system.

Proof.

The proof can be found in Appendix C. ∎

Lemma 3.

Under Assumption 1,

where and , for any that is for any that can be achieved with an empirical distribution of agents.

Proof.

The proof can be found in the appendix D. ∎

4.2.2 Error Bounds for Closed Loop Control

In this section, we will assume that the agents find and agree on an optimal policy for the control problem using the agreed-upon mismatched model with infinite agent dynamics. However, unlike open-loop control, to execute this policy, they observe the empirical state distribution of the team of -agents, say at time and apply . We summarize the application of this policy as follows:

- •

-

•

Observe the correct mean-field term .

-

•

Find the randomized agent level policy using

-

•

Observe local state , and apply action .

We denote the incurred cost under this policy by for some initial state distribution .

Theorem 6.

Proof.

The proof follows very similar steps to the results we have proved earlier. For some

| (39) |

The second term above is bounded by Lemma 1, the last term is bounded by Lemma 3. For the first term we write the Bellman equations:

We can then write

Using almost identical arguments that we have used in the proof of Lemma 3 and Lemma 2, we can bound the last term as

Re arranging the terms and noting that , we can write that

Combining this bound with (4.2.2), one can show that

∎

5 Numerical Study

We now present a numerical example to verify the results we have established in the earlier sections.

We consider a multi-agent taxi service model where each agent represents a taxi. The state and action spaces are binary such that . We assume that at any given time a given zone is in either a surge or a non-surge mode. The state variable represents the location of the agent

-

•

agent is in a surge zone (high demand)

-

•

agent is in a non-surge zone (low demand)

The action variable represents the movement decisions:

-

•

remains where they are

-

•

relocates to another area.

The cost structure is defined as follows:

-

•

If an agent is in a non-surge zone (), they incur a cost due to lost earnings

-

•

If an agent relocates (), they receive a cost , for movement expenses.

-

•

Furthermore, to encourage a balanced distribution, we penalize deviations from the %-% distribution by introducing a cost where is the fraction of agents in the surge zones.

For the dynamics, we assume that a non-surge area has a fixed probability of becoming a surge area in the next time step. Furthermore, we assume that a surge area has a probability of becoming a non-surge area, indicating that as more drivers there are in a surge area ( is high), the likelihood of it remaining a surge zone decreases (due to increased supply). This then defines the transition probabilities as follows:

We set the parameters as , and .

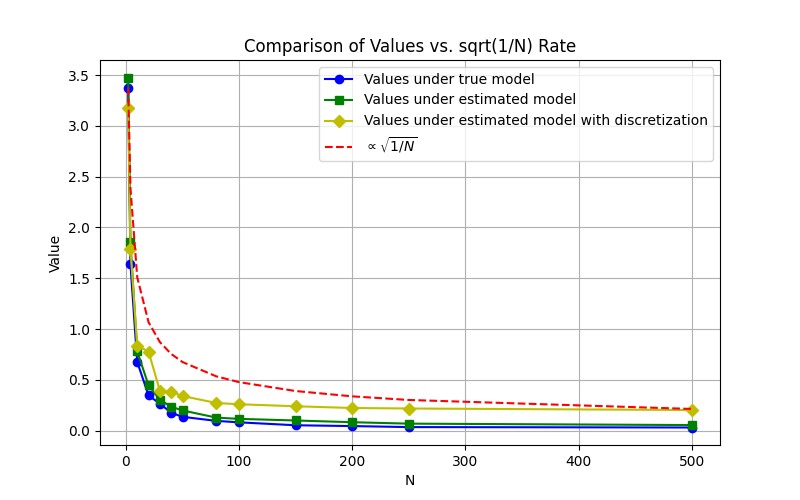

Near optimality of learned models and infinite population approximations. Figure 1 shows the value functions loss for different values , the number of agents in the system. We graph the loss functions under 3 settings:

-

•

The optimal policy for the infinite population model with perfect knowledge of transition dynamics and costs.

-

•

The estimate policy for the infinite population model, where the transition-cost function is learned using discretization basis functions based on the discretization of the measure space into 6 subsets (see Section 3.3).

-

•

The estimate policy for the infinite population model, where the transition-cost function is learned using a class of basis functions:

Note that the cost and the transitions are perfectly linear under the basis functions .

For the loss, we compare the value of the policy with the optimal value in an infinite population environment. Furthermore, we assume that the initial distribution is .

In the figure, we also plot a scaled line which represents the decay rate of the empirical consistency term defined in (37). As verified by the results, the loss in all cases decays at a rate similar to .

We also observe that the policies for the learned model with polynomial basis functions perform as well as the policies under perfect model knowledge, which is expected as the model is perfectly linear for these basis functions.

For the learned model under discretization, there is a small performance gap, which is also expected since the model is not perfectly linear under discretization basis functions. Thus, the learned model does not perfectly match the true model under discretization.

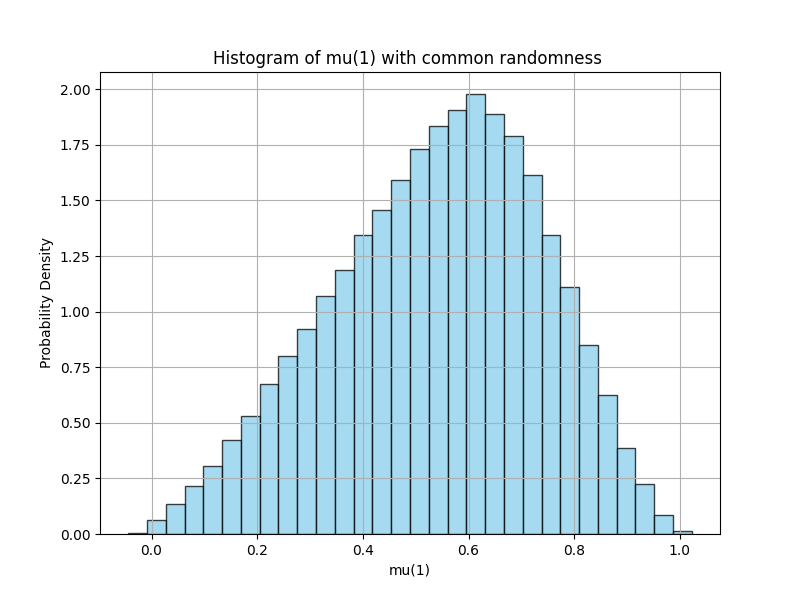

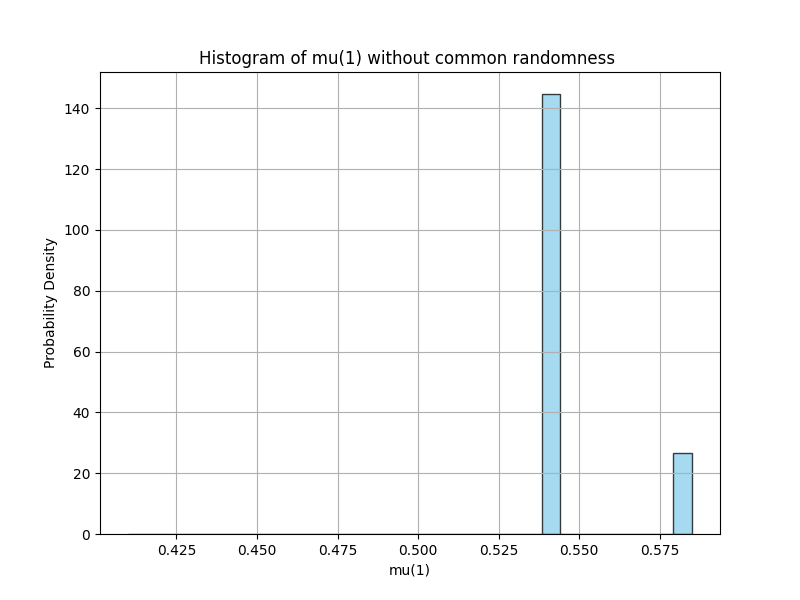

Lack of exploration without common randomness. Another significant observation from the previous sections about the exploration is also verified in this numerical study. In particular, when agents perform learning individually, we observe that the mean-field term tends to get stuck in certain regions without common randomness. However, if agents choose their actions based on a common randomness, then exploration becomes more efficient as seen in Figure 2. In the right graph, the agents follow a policy of the form where is an i.i.d. noise term that is independent across the agents which results in a deterministic flow of the mean-field term, and results in poor exploration. In the graph on the left, the agents follow exploration policies of the form where is a common noise that is shared by all agents. As a result, the flow of the mean-field term becomes stochastic and a better exploration is observed.

6 Conclusion

We have studied model-based learning methods for mean-field control problems using linear function approximations, focusing on both fully coordinated and independent learning approaches. We have observed that full decentralization is generally not possible even when the agents agree on a common model. For the independent learning method, although agents do not need to share their local state information for the convergence, a certain level of coordination is inevitable especially for the exploration phase of the control problem which is done using a common noise process. For the learned models, we have provided error analysis which stems from two main sources (i) modeling mismatch due to linear function approximation, (ii) error arising from the infinite population approximation.

We have observed that the exploration is a key challenge in the learning of mean-field control systems. The analysis in the paper suggests that the stochastic controllability of the mean-field systems is closely related to the exploration problem. A natural future direction is then to further analysis of the controllability and exploration properties of the mean-field control system.

Appendix A Proof of Proposition 1

Step 1. We first show that remains uniformly bounded over . We write

| (40) |

For we have that

where the generic constant may represent different values at different steps. Denoting by , if we take the expectation on both sides of (40) we can write

| (41) |

where at the last step we used the convexity of for every . We now introduce which are independent over and each is distributed according to . For the middle term above we write

| (42) |

where the expectation is with respect to the independent coupling between .

We denote by

For , we consider its absolute value to and write

| (43) |

where we used generic constant for the above analysis that might have different values at different steps. Furthermore, we used the inequality . We also assume that to use , note that this is without loss of generality as we are trying to show that is bounded, and for , the boundedness is immediate. For the following analysis, we will denote by

We now consider the series . Since is ergodic with a geometric rate with invariant measure and , we have that

| (44) |

We now go back to (A)

For the following we denote by

Note that one can show the infinite product converges if and only if the sum

converges. We have shown that the sum is convergent due to geometric ergodicity, and we also have that is summable. Thus, we write

for some . One can then iteratively show that

Consider ; since for all , and since , for all . Thus, we can simply remove terms to get a further upperbound. For , we have that

using an identical argument we used to show . In particular,

which shows that is bounded uniformly over , which also implies that is bounded.

Step 2. Now we have the boundedness, we go back to (A); using the bound on (only for the second in (A)), and summing over the terms, we can write

again using the boundedness of , and the fact that and sending , we get

We now introduce which are independent over and each is distributed according to . We then write

| (45) |

where we overwrite the definitions of , , and (only changing the signs of these terms, see (A)).

Recall the analysis for in (A), together with the uniform boundedness of over , we can write that

where we exchange the sum and expectation with monotone convergence theorem, and where the last step follows from what we have shown in (44).

For the last term similarly, we have that , from (44), since is geometrically ergodic with invariant measure and and is fixed.

Going back to (A), now that we have shown the last and the first terms are finite, we can write

Since is i.i.d and distributed according to , the above also implies that

which in turn implies that

almost surely. Furthermore, since is not summable, and (as achieves the minimum of ), we must have that

Appendix B Proof of Lemma 1

We begin the proof by writing the Bellman equations

where and are optimal agent-level policies that achieves the minimum at the right hand side of the Bellman equations respectively. We can use then use the same agent level policies by exchanging them to get the following upper-bound

| (46) |

We have that

Hence, by rearranging the terms in (B), we can write

Finally, a slight modification of [6, Lemma 6] for finite can be used to show that

which completes the proof that

Appendix C Proof of Lemma 2

We use the notation for the following analysis. Note that with stochastic realization results, there exists a random variable uniformly distributed on , and a measurable function such that

has the same distribution as , where we overwrite the notation for simplicity. Let denote a vector of size state variables that are distributed according to , i.e. such that for all . Furthermore, let denote a vector of size where each element is independent and distributed according to the law of . We then study the following conditional expected difference:

Let denote the vector of size for the noise variables of the agents at time . Note that we have where for each . We also introduce such that .

We further introduce another vector of noise variables where each element is independently distributed, and the distribution of agrees the with the kernel . In other words, we use the functional representation of where

for some measurable .

We denote by denote the distribution of the vector where it is assumed that and are independent for all . is also distributed according to . For the joint distribution of , we use a coupling of the form

That is, we assume independence over , however, an arbitrary coupling is assumed between the distribution of . We will later specify the particular selection of coordinate wise couplings , however, the following analysis will hold correct for a general selection of .

For given realizations of , we write

| (47) |

Note that is a vector of size where each entry is independent and distributed according to . Furthermore, , and thus for each . Thus, is an empirical measure for For the second term above, we then have:

| (48) |

see for the definition of .

For the first term in (C); we note that and are empirical measures, and thus for every given realization of and , the Wasserstein distance is achieved with a particular permutation of and combined together. That is, letting denote a permutation map for the vector . we have

We will however, consider a particular permutation where

| (49) |

For the following analysis, we will drop the permutation notation and assume that the given order of achieves the Wasserstein distance in (C). Furthermore, the coupling is assumed to have the same order of coordinate-wise coupling.

We then write

| (50) |

The analysis thus far, works for any coupling . In particular, the analysis holds for the coupling that satisfies

for every for some coordinate-wise coupling . Continuing from the term (C), we can then write

where the last step follows from the particular permutation we consider (see (C)).

Furthermore, we also have that

| (51) |

where for the first term we use the following bound:

Appendix D Proof of Lemma 3

We start by writing the Bellman equations:

where we assume that an optimal selector for the infinite population problem at is such that the agents should use the randomized agent-level policy . For the -agent problem, we assume that an optimal state-action distribution at is given by some , which can be achieved by some such that and .

We first assume that . For the infinite population problem, instead of using the optimal selector , we use a randomized agent-level policy from the finite population problem by writing , and letting the agents use . We emphasize that the optimal state action distribution for -agents is not achieved if each agent symmetrically use , in other words, is not an optimal agent-level policy for the -population problem. To have the equality the number of agents needs to tend to infinity. We can then write

Note that

Hence, we can continue:

| (52) |

We now focus on the term . We will follow a very similar methodoly as we have used in the proof of Lemma 2 with slight differences. We denote by denote the distribution of the vector where it is assumed that and are independent for all . Let such that and . We then have that

where . We now introduce where , which are different than the state action vectors and forms on empirical measure for whereas forms an empirical measure for . We further introduce . is also distributed according to . For the joint distribution of , we use a coupling of the form

That is, we assume independence over , however, an arbitrary coupling is assumed between the distribution of . We will later specify the particular selection of coordinate wise couplings . We write

where the expectation is with respect to the random realizations of . The first term corresponds to the expected difference between the empirical measures of and itself, and thus is bounded by .

For the second term, we note that and are empirical measures, and thus for every given realization of and , the Wasserstein distance is achieved with a particular permutation of and combined together. That is, letting denote a permutation map for the vector . we have

We will however, consider a particular permutation where

For the following analysis, we will drop the permutation notation and assume that the given order of achieves the Wasserstein distance above. Furthermore, the coupling is assumed to have the same order of coordinate-wise coupling.

We then write

The analysis thus far, works for any coupling . In particular, the analysis holds for the coupling that satisfies

for every for some coordinate-wise coupling . We can then write

We can then write that

where in the last step we used the fact that is an empirical measure for .

We then conclude that for the term (D):

| (53) |

We now assume that . To get an upper bound similar to (D), for the finite population problem, we let agents to use the randomized policy that is optimal for the infinite population problem, instead of choosing actions that achieves which is the optimal selection for the population problem for the state distribution . Let be such that , we introduce where for some i.i.d. . Denoting by , and following the steps leading to (D), we now write

| (54) |

Following almost identical steps as the first case, one can show that

where , and , , and the expectation above is with respect to the random selections of and . Note that and uses the same randomization , hence averaging over the distribution of , we can write that

In particular, we can conclude that the bound (D) can be concluded as:

| (55) |

Thus, noting that , and combining (D) and (55), we can write

Rearranging the terms and taking the supremum on the left hand side over , we can write

which proves the result together with and .

References

- [1] Yves Achdou, Pierre Cardaliaguet, François Delarue, Alessio Porretta, Filippo Santambrogio, Yves Achdou, and Mathieu Laurière. Mean field games and applications: Numerical aspects. Mean Field Games: Cetraro, Italy 2019, pages 249–307, 2020.

- [2] Berkay Anahtarci, Can Deha Kariksiz, and Naci Saldi. Q-learning in regularized mean-field games. Dynamic Games and Applications, pages 1–29, 2022.

- [3] Andrea Angiuli, Jean-Pierre Fouque, and Mathieu Laurière. Unified reinforcement q-learning for mean field game and control problems. Mathematics of Control, Signals, and Systems, 34(2):217–271, 2022.

- [4] Andrea Angiuli, Jean-Pierre Fouque, Mathieu Laurière, and Mengrui Zhang. Convergence of multi-scale reinforcement q-learning algorithms for mean field game and control problems. arXiv preprint arXiv:2312.06659, 2023.