Natural Language and Dialogue Systems Lab, University of California, Santa Cruz

11email: kkbowden, mawalker@ucsc.edu

Let’s Get Personal: Personal Questions Improve SocialBot Performance in the Alexa Prize

Abstract

There has been an increased focus on creating conversational open-domain dialogue systems in the spoken dialogue community. Unlike traditional dialogue systems, these conversational systems cannot assume any specific information need or domain restrictions, i.e., the only inherent goal is to converse with the user on an unknown set of topics. While massive improvements in Natural Language Understanding (NLU) and the growth of available knowledge resources can partially support a robust conversation, these conversations generally lack the rapport between two humans that know each other. We developed a robust open-domain conversational system, Athena, that real Amazon Echo users access and evaluate at scale in the context of the Alexa Prize competition. We experiment with methods intended to increase intimacy between Athena and the user by heuristically developing a rule-based user model that personalizes both the current and subsequent conversations and evaluating specific personal opinion question strategies in A/B studies. Our results show a statistically significant positive impact on perceived conversation quality and length when employing these strategies.

1 Introduction

There has recently been an increased focus on creating dialogue systems capable of open-domain social dialogue konrad2021alquist ; finch2020emora ; gunrock2018 ; fang2018sounding . Unlike traditional systems, these conversational dialogue systems cannot assume domain restrictions or any specific information need, i.e., the only inherent goal is to converse with the user. While modern systems have access to more information and better tools, foundational components of natural human-human conversation remain elusive, i.e., intimacy and agency. Combined, these should increase engagement in the conversation.

Our conversational open-domain dialogue system, Athena harrison2020athena ; walker-etal-2021-athena , is a competitor in the Amazon Alexa Prize gabriel2020further ; hu2021further , which gives real Amazon Echo users the ability to anonymously access and evaluate open-domain dialogue systems. These systems must interact socially, about any topic, with the goal of achieving a highly rated (>4 out of 5 average user rating) conversation that lasts longer than 20 minutes. Further detail on Athena’s technical components are available in the associated technical reports harrison2020athena ; walker-etal-2021-athena .

In general, there are three primary challenges with open-domain conversation:

-

P1:

There are an infinite number of valid topics we must support. While some topics are common and have been explored in previous dialogue research, e.g., Movies and Music, other topics reflect a user’s special interest. Moreover, since Athena is a live system, there is an implicit expectation that Athena has up-to-the minute live data.

-

P2:

We cannot rely on the user having an explicit goal or information need; they may just want to chat for entertainment.

-

P3:

The conversation must be social. This means that we cannot artificially bloat the length of the conversation by telling twenty jokes in a row. Instead we must be responsive to a user’s interest in different topics.

In this paper we focus on personalization as a partial solution for all three of these core problems. We cannot remove topics from the pool of valid topics in P1, however by learning more about the user’s interests we can direct the conversation to areas of Athena’s knowledge. To accomplish this, we can start the conversation with personal questions that help Athena learn about the user. Whether or not the user has an explicit goal in mind for P2, we can share control of the conversation by using personal opinion questions to engage the user on a level beyond pure fact retrieval. This process will help direct users to content about which Athena has opinions. Personal questions are also a crucial part of human-human social conversation, as in P3. Most human-human conversations would quickly end if one of the conversational partners feel their interests are ignored. It’s vital, then, that Athena displays high levels of responsiveness to demonstrate her listening abilities and show understanding reis2011familiarity ; reis1996attachment . Current research has also found in dyadic conversations that a conversational partner was more well liked if they ask more follow-up questions huang2017doesnthurttoask : a similar strategy could increase the rapport between the user and Athena.

Existing work also shows the impact of experience with dialogue systems when engaging with a system like Athena bowden2019entertaining . Besides varying levels of experience, there are several user pools with different needs: there are users who have never interacted with Athena vs. repeat users, kids vs. adults, and users with varied backgrounds and interests. The only way to effectively cater to all of them is to personalize each conversation for each user. For example, the personal opinion questions we discuss below are labeled with age appropriateness, e.g., when talking about Movies with a child, Athena talks about cartoons like Frozen. Participation in the Alexa Prize allows us to evaluate our hypotheses: asking questions at the start of the conversation allows Athena to populate a personalized user model, users are willing to give highly informative feedback if invited, and A/B testing with live traffic shows a statistically significant positive impact on both perceived conversation quality and length when using personal opinion questions.

The remainder of this paper is organized as follows: Section 2 examines the current state of open-domain conversational systems with a specific emphasis on the Amazon Alexa Prize. Section 3 demonstrates Athena’s strategy of front-loading the conversation with personal questions to quickly build a personalized user model. Subsequently, Section 3.1 analyzes the resultant trends established across a large pool of real Alexa users while Section 3.2 explores the utility of asking the users directly for feedback and inviting personal questions from users. Then, in Section 4 we investigate two specific personal opinion question strategies (asking topic related Would You Rather choices and open-ended Hypothetical questions), for which we see a statistically significant improvement in both rating and length when evaluated at scale. In Section 5 we conclude.

2 Related Work

Dialogue systems have been an area of interest for over 50 years weizenbaum1966eliza . Much previous work with dialogue systems has been centered around the explicit goal of completing a task, e.g., booking a flight, or booking a restaurant. Commonly, these systems anticipate an explicit goal or “information need” kiseleva2016predicting ; chuklin2015click ; Radlinski17 . Our goal of making a casual social dialogue system that is open domain is a very distinct task.

There have been many different approaches to open-domain dialogue ma2021unstructured . Recent work has seen an increased emphasis on trained end-to-end conversational systems sordoni2015neural ; vinyals2015neural ; mila2017 ; burtsev2018first . However, we believe that these models are not yet ready for real user interactions adiwardana2020meena ; zhang2020dialogpt ; roller2021recipes . Instead, many Alexa Prize systems take a hybrid approach of combining rules, retrieval, and generation to create a more robust system song2016twoAreBetterThanOne ; fedorenko2018avoiding ; zhou2020xiolice . These hybrid approaches closely resemble Athena’s design.

Other work that is focused on open-domain personalization has been focused on short exchanges yang2017personalizedResponseGenViaDomainAdaptation , or used Twitter and TV Script data as a source of utterances li2016persona . This is not adequate for Alexa Prize conversations bowden2018opendomain .

Athena’s unique environment, the Amazon Alexa Prize chandra2018conversational ; gabriel2020further , requires open-domain social chitchat with a spoken dialogue system. Over the last four years several competitive systems have emerged konrad2021alquist ; finch2021emora ; liang2020gunrock ; fang2018sounding . Personalization has become an increasingly important part of these social systems. IrisBot, Genuine2, and Caspr use repeat user detection to slightly alter some responses ahmadvand2018iris ; kinjal2021caspr ; rodriguezCantelar2021genuine . SoundingBoard associated their users with Big-5 personality traits and adapted the conversation to those traits fang2018sounding . Tartan and Emora personalized conversation by using user-demographic information with handcrafted rules to affect follow-up content and ask an increased number of personal questions chen2020tartan ; finch2021emora . Alana used user profiles to track the interests of returning users and asked personal questions to build rapport alana2018 . Gunrock maintained user profiles which were used to both affect follow-up content and change sub-topic discussions according to gender, i.e., Fashion liang2020gunrock . Audrey trained a reinforcement learning model tasked with personalizing topic switching hong2020audrey . Proto uses an unspecified slot extraction process during the natural language understanding phase to extract attributes later used when personalizing responses saha2021proto , while Dream used Reddit data with the BART language model lewis2020bart to create a topic-based vectorization of Reddit user personas, which was subsequently used to give topic recommendation to real users baymurzina2021Dream . Alquist experimented with personalized sub-dialogue selection konrad2021alquist , while Athena reported results using a personalized topic promotion strategy walker-etal-2021-athena . This work is closely related to our own; however none of these systems evaluate and report the direct results of asking personal opinion questions, nor do they present the trends captured by their user models/personas.

We targeted two playful personal opinion question strategies (Would You Rather and Hypothetical questions) because playfulness is considered important when building rapport between conversational agents and users shani2022alexasnowman and humor has been shown to increase likeability and trust meyer2015understanding . Would You Rather questions have also been used in previous therapeutic settings to encourage conversation fields2009would .

3 Gathering User Preferences, Interests, and Advice

| [A6] | Cool, what have you been out doing? |

| [U6] | swim |

| [A7] | swimming? I’m glad to hear that you’ve been able to engage in your interests. It seems like the world has completely changed over the last year. Have you been able to work from home? |

| [U7] | i don’t work but i’ve been able to do school |

| [A8] | Well, I hope you’re able to keep up with your school work remotely. Does it get hard at times? |

| [U8] | not really |

| [A9] | Hm. Well, thinking about it, I kind of have to stay at home all the time too. Hey, I know it’s probably not possible right now, but I’ve always wanted to go on a vacation and to travel. What’s a place that you’ve always wanted to travel to? |

| [U9] | hawaii |

| [A10] | I’ve heard that it’s very nice there. For me, I think the great wall of china is at the top of my list of travel destinations. It seems like it would be amazing to walk along it. So, Is there a particular reason why you want to go to hawaii? |

| [U10] | i’ve already been there and i really liked it |

| [A11] | Nice! Hey, I’m curious, What do you like the most about going somewhere? |

| [U11] | just bring out with to feel like i don’t have any responsibility there because it’s not my own house |

| [A12] | That’s an interesting answer. I really like the culture! For example, I would love to see all the paintings in the Louvre. Hmm, Did you go on family vacations as a kid? |

| [U12] | yeah |

| [A12] | That’s cool. While I would love to go on vacation, that isn’t really an option at the moment. But What do you normally like to do for fun. |

| [U13] | i play chess |

| [A13] | Oh, I see. chess sounds like a really interesting weekend activity! I wish I had more of a body, so that I was able to do some fun activities on the weekend. … Hey, is it alright if I ask for a little advice? Do you have any ideas how I could be more interesting? |

| [U15] | if you had a different voice all the time |

| [A15] | Thanks for the advice! You know, I realize I’ve been asking you a lot of questions, but how about me? Is there anything you want to know about me? |

| [U16] | how old are you |

| [A16] | I like to think of myself as being 20 something. |

Similar to when two humans are first meeting, it is challenging for Athena to break the ice with a new user; Athena has no idea where to direct the conversation in-order to engage the user. In this paper we argue that personalization increases the sense of intimacy and engagement. Therefore, Athena aims to build a user model as quickly as possible. To this end, Athena starts each new conversation with a carefully crafted introduction sequence that is explicitly designed to elicit user interests. Athena employs several different dialogue strategies to elicit this information. We ask the user for their name and learn about how the pandemic has impacted them. We also ask them about their hobbies, weekend activities, and vacation plans before inviting them to learn more about Athena by soliciting advice and inviting questions. By front-loading the conversation with these probing questions, we increase the chances of the user model picking up usable information.

In Figure 1 we provide an excerpt of a conversation and introductory sequence. During this conversation, the user model learns the user’s name, that their hobbies include swimming and chess (U6 and U13), that they are a student (U7), and that Hawaii is a travel destination of interest (U9). Some of this content will be immediately useful in the conversation, e.g., reaffirming their name and asking why they like Hawaii, while other pieces of information will be useful when initiating a new topic, e.g., talking about Sports because the user is a swimmer, or Board Games because of their interest in chess.

Athena supports 17 topics ranging from large general topics, e.g., Sports, to smaller niche topics. Each topic is associated with a set of referential expressions, for example, baseball, football, and Stephen Curry, all refer to the Sports topic. We also curated a gazetteer of 250 hobbies by analyzing the results of probing questions over several months. Each hobby is annotated with paraphrases, e.g., i like to paint, i like painting, and i painted when i was young will all match the painting hobby, and any associated topics when relevant, e.g., basketball and piano map to Sports and Music respectively.

3.1 Conversational Data Analysis

A key component when building a personalized user model is tracking hobbies and interests. Identifying common interests help practitioners select desirable topics in future conversational systems. Meanwhile, the knowledge Athena gains personalizes topic management which improves conversations walker-etal-2021-athena . By navigating to topics that the user likes, Athena is also able to ask additional personal questions later in the conversation that are more likely to interest the user (Section 4). The data presented was collected by conversing with anonymous users during the Alexa Prize competition over several weeks, i.e., several thousand conversations with a majority of users talking to Athena for the first time.

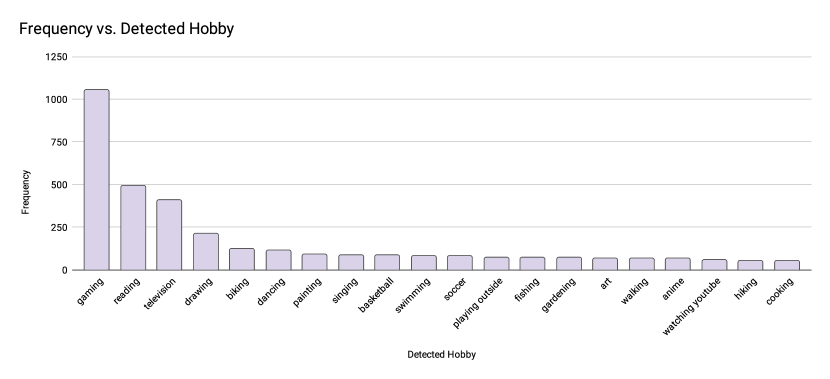

Figure 2 shows the distribution of the top 20 hobbies detected by the user model over a 32 day period of time. Only 42 out of 131 total detected hobbies were associated with more than 25 unique users. The top five hobbies, i.e., (video) gaming, reading, television, drawing, and biking, represent 48% of all detected hobbies over this period of time. Gaming in particular, represents 22% of all detected hobbies (1056 out of 4790).

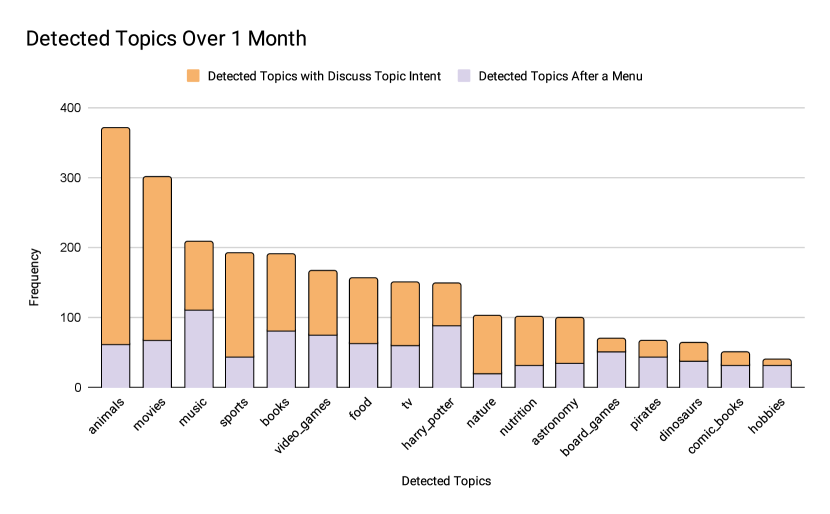

Figure 3 provides the number of explicit discuss topic requests per topic, e.g., out of the blue the user says let’s talk about movies, and the number of explicit topic requests following a menu of options provided by Athena, e.g., the user says something like let’s talk about music after Athena prompts them with are you interested in talking about animals, books, or music?. Figure 3 helps us understand which topics are most highly trafficked. Animals and Movies are the most frequent user-initiated topics (orange), likely because Movies is a commonly discussed topic in the Alexa Prize, and Animals is triggered by a user mentioning their pet. Meanwhile, Music and Harry Potter are the most common topic choice after the user has been given a menu of choices. This could indicate that users are interested in these topics, but do not necessarily expect that a socialbot will be able to discuss them. This trend is seen in other niche Athena topics, e.g., Comic Books and Dinosaurs.

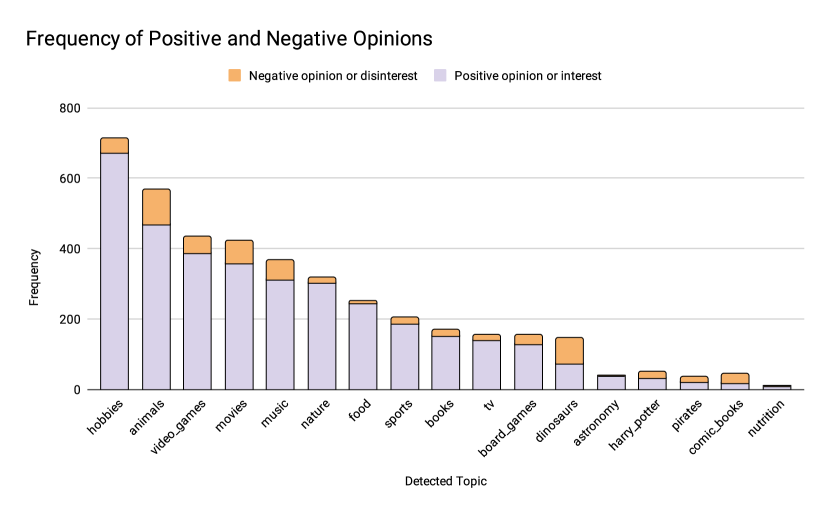

Over a 22 day period, we collected 11,415 opinions from 2,521 conversations. Users more commonly provided positive opinions than negative opinions (9,755 positive vs. 1,866 negative). Figure 4 shows the distribution of detected topics in positive and negative opinions. The trends are reasonable; people like talking about their hobbies and their pets, but may not have an opinion about niche topics.

3.2 User Advice and Questions

Athena further breaks the ice by asking the user one of three possible open-ended questions related to Athena’s self-improvement (e.g., A13 in Figure 1) and to learn which topics the user finds interesting. The question we ask is always prefaced by the statement: Hey, is it alright if I ask for a little advice?. The following represents Athena’s three open-ended questions:

-

IceQ1

Do you have any ideas how I could be more interesting?

-

IceQ2

I’m trying to figure out fun things to talk about. Would you mind telling me what kind of topics you like talking about with your friends?

-

IceQ3

I’m trying to figure out fun things to talk about. What are your personal interests and favorite conversational topics?

We collected 2,300 responses to these questions. While some users refused to participate or even became adversarial during this sequence, many others answered Athena’s call with genuine user feedback. From these responses we can estimate topics of interest to each specific user, and for future system improvements.

Responses to Athena’s first question, IceQ1, are particularly informative; a sampling of these responses can be seen in Figure 6. Some feedback is immediately useful for system development, such as reducing response latency and tweaking the way Athena talks through Speech Synthesis Markup Language (SSML), while other feedback helps us understand other desired functionalities of Athena, e.g., supporting commonly requested topics and “spirited debates”. Users are also directly stating they want a more “personable” experience where Athena asks “personal questions”, engages in topics of mutual interest, and discusses everyday events in their life such as work or school (as in our introductory excerpt in Figure 1).

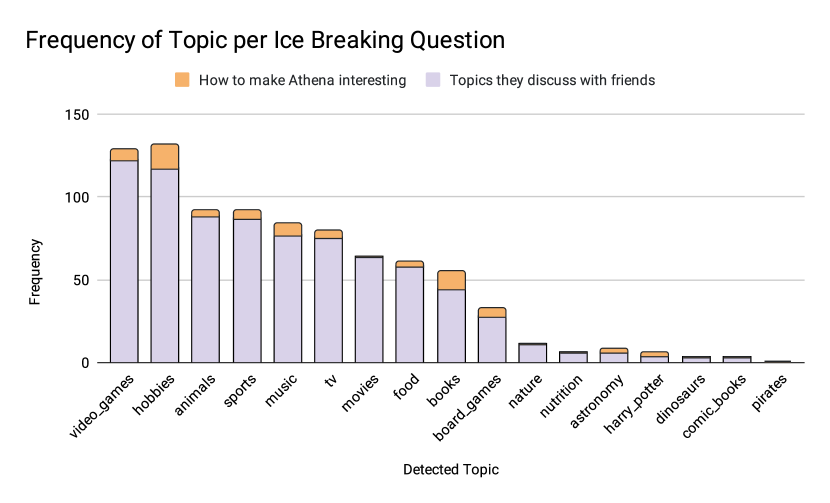

IceQ2 and IceQ3 both share a similar goal; we want the user to explicitly tell us the types of things they like talking about. The feedback related to specific hobbies (e.g., gardening) and topics (e.g., animals and hobbies) that Athena can identify will be leveraged throughout the conversation when Athena is initiating new topics. In Figure 5 we see the distribution of topics detected across the 2300 responses. IceQ1 is differentiated from IceQ2 and IceQ3, since the questions have different intentions. Summing these results, we find that 859 topics were identified in 691 responses (some responses provided more than one topic). This means that asking a single ice breaking question at the start of a conversation results in personalizable data for a system supporting similar topics to Athena 30% of the time.

An additional part of the ice breaking process is to explicitly invite the user to ask us a question, i.e., Athena states: You know, I realize I’ve been asking you a lot of questions, but how about me? Is there anything you want to know about me? (as in A15 from Figure 1). Again, some users were adversarial, but several users did ask questions, primarily about learning personal information about Athena. Ignoring bad questions, e.g., antagonistic questions or ASR errors, over 90% of questions asked were personal questions about Athena’s life and opinions. Figure 6 gives example user questions that were a result of Athena’s explicit invitation.

User Feedback User Questions can we ask questions and you answer them what’s next after the alexa bot competition i would like to develop emotional connections what is your favorite book be more funnier how old are you maybe you can tell more about yourself do robots and stuff like you have a birthday be more personable what is your favorite food and color by asking people how was your day do you have any friends maybe ask some more personal questions do you have any pets ask me personal questions what’s your favorite video game well you can have a hobby what do you do in your free time ask about people’s personal life instead of general questions do you listen to music i want you to ask about school or work what’s your name well you could ask people really weird questions do you ever get lonely good to talk about peoples families and the things they love oh yeah what’s your favorite superhero we can talk about our favorite colors and more about us would you rather be a strawberry or a cantaloupe you could learn what other people like and share with them if you could be any animal which would you be maybe ask like more out there questions where do you see yourself in twenty to thirty years

4 Experiments with Personal Opinion Questions

Inviting an exchange of opinions about the current topic of discussion creates a more intimate experience that increases user satisfaction. We have curated a dataset of topic-annotated personal opinion questions, which are inter-weaved throughout the conversation bowden2019entertaining . The personal opinion questions are split into two different strategies, Would You Rather choices (WYR) and open-ended Hypothetical questions (HYP). We picked these strategies because playfulness and humor are important when building rapport and trust shani2022alexasnowman ; meyer2015understanding . These strategies have also been characterized as good conversation starters fields2009would and several lists of questions exist across the internet111E.g., https://conversationstartersworld.com contains several lists. The wouldyourather and hypotheticalsituation Reddit communities also have 316k and 102k subscribers respectively.. These sequences can also be characterized as a game222E.g., https://psycatgames.com/app/would-you-rather/.; gamifying our personal opinion question strategies slightly may have a desirable impact since “gaming” is a popular hobby among users, i.e., Figure 2. As we will discuss in Section 4.1, it is also straightforward to crowdsource the collection of question/answer pairs per topic, and the limited scope of answer choices, especially true for WYR, makes it easier to build NLU that can understand the user’s responses.

Figure 7 demonstrates a sample conversation using these strategies. In this example, Athena initiates the Dinosaurs topic in A1, and proceeds to interweave 6 different dinosaur themed dialogue strategies: A1-A2 gives dinosaur facts, A3-A4 talks about dinosaur preferences, A4-A5 focuses on dinosaur movies, A6-A7 goes through a dinosaur WYR sequence, A7-A8 talks about why dinosaurs are extinct, and A9-A10 goes through a HYP sequence. Athena’s two personal opinion strategies extend topical depth by four turns. The user also provides us with long thoughtful responses, which indicate their engagement walker2021IWSDSParadise , furthering the sense of rapport built between Athena and the user.

| [A1] | But most people seem to find dinosaurs really interesting. There are dinosaur movies and whole floors of natural history museums dedicated to dinosaurs. Personally, I find it fascinating that modern birds are descended from a particular family of dinosaurs. |

| [U1] | hella cool |

| [A2] | Definitely. I was surprised to hear how much work went into creating the movie Land Before Time. Over 600 background paintings were made for the film. The artists had to create a believable environment in which there was almost no foliage. Most paintings depicted beautiful but barren wastelands. |

| [U2] | surprising |

| [A3] | Right? My favorite dinosaur is the Tyrannosaurus Rex. It’s huge and ferocious, but the little arms make it somewhat adorable. What about you? What’s your favorite dinosaur? |

| [U3] | i’m not sure i have to say a t. rex |

| [A4] | Okay. There are lots of movies about dinosaurs. One of the most famous dinosaur movies is Jurassic Park, old but classic. Are there any dinosaur movies that you love? |

| [U4] | i have to say jurassic park to adjust because that’s the only one i’ve seen |

| [A5] | Awesome! That one is well known! I seem to remember people saying the dinosaurs were very vivid |

| [U5] | i never knew that |

| [A6] | yeah, I love to collect these fun facts! But anyways, I’m interested in your opinion. Would you rather ride on the back of a pterodactyl or brontosaurus as your primary means of transportation? |

| [U6] | i have to say brontosaurus and i hate heights |

| [A7] | Choosing a Brontosaurus you say, great pick! I would rather ride a pterodactyl so I could experience flight as well as move around more efficiently. Riding a brontosaurus would be thrilling because of their size but less practical than a pterodactyl. So, okay. Do you know why dinosaurs are extinct? |

| [U7] | yeah cause i’m eat your head it right |

| [A8] | Ah nice, you must be super smart. Let me tell you what I heard.The mass extinction of dinosaurs is a scientific mystery, but most hypotheses include an extraterrestrial impact or a massive bout of volcanism. What do you think happened? |

| [U8] | i’d say |

| [A9] | I’d like to hear your ideas on this. Let’s say you are trapped on Jurassic World alone with the dinosaurs, what would you do to survive? |

| [U9] | i’m not sure i just say find sheltering something and start there |

| [A10] | It’s a hard question! I would try to become closer to the younger dinosaurs so that they would grow up with me and feel more comfortable with me. I would also try to help them by finding food and shelter for them. At any rate, Dinosaurs… |

4.1 Data Collection

We collected a novel dataset in which we asked crowdsourced workers to provide both questions and answers for specific topics such as Food, Nature, and Astronomy bowden2019entertaining . After filtering out low-quality and extraneous topics there are 635 pairs across 14 topics. We also annotate our data for kid-friendliness, which yields 342 question/answer pairs spanning all 14 supported topics marked as kid-friendly333Data available from https://nlds.soe.ucsc.edu/software..

When a response generator is deciding to use our personal opinion questions, it will first determine if the user is a child (over a 22 day period 11.44% (641) of users were identified as youths by our user model). While the age-appropriateness of some content may be subjective, Athena aims to err on the side of caution.

These questions were written and answered by human workers, which means the answer content inherently assumes human properties. However, users are quick to point out that, for example, Athena does not eat, go outside, or have a physical body, which can cause breakdowns mid-conversation. Therefore, we curated this dataset to ensure all of the system’s responses were realistic for an Echo device. For example, with Food Athena consistently reminds the user that she can only eat electricity.

4.2 Methodology

Personal opinion questions are used to increase topical depth. When a user is engaged with one of the 14 topics that has exchanges, one of each question type (WYR and HYP) can be asked per topic per conversation. After asking a question, we try to match the user’s answer to an expected answer. If Athena can make a match, we can customize the next grounding statement, otherwise we’ll fallback to a generic grounding statement. After acknowledging the user’s answer, the system will provide its own opinion. Finally, Athena appends a short transitional phrase to the end of its answer before initiating a new sub-dialogue. In Figure 7 we can see longer conversation snippets that use these personal opinion questions to increase topical depth. The dialogue manager has a preference to interleave personal questions with other types of exchange. We saw this preference in Figure 7, where the WYR (A6) and HYP (A9) questions are separated by other Dinosaur content.

| Comic Books | WYR | [A1] | What are your thoughts on this. If you wanted a mentor to help use your powers, would you rather choose tony stark, or doctor strange? |

| [U1] | doctor strange | ||

| Animals | WYR | [A2] | What are your thoughts on this. Would you rather tame and own a lion or a gorilla? |

| [U2] | i love both | ||

| Board Games | HYP | [A3] | What are your thoughts on this. If you had the power to become the world’s best board game player, what board game would you choose at excelling at? Why? |

| [U3] | i would choose every game because it’s always fun to play against other people and have good games | ||

| Food | HYP | [A4] | I’m super curious to hear your thoughts. If you could make one type of food healthy and have zero calories, carbs, etc what would it be? |

| [U4] | that’s a hard question |

In Figure 8, we have further examples of user responses. Responses vary between the two question strategies. In WYR sequences (U1-U2), the user tends to provide short but precise responses, e.g., doctor strange in U1, which often contain the exact text associated with an answer choice. In some cases, the user may answer without using the exact same text, or otherwise imprecisely as in U2. In HYP sequences (U3-U4), there are different answer trends. For example, some users embrace the open-ended nature of the questions by providing detailed responses, as in U3 while other users might provide precise responses, e.g., risk in an alternate response to A3, however, without the integration of extensive external knowledge resources, it is hard to determine the suitability of the answer. Meanwhile, other users may struggle to think of answer to an open-ended question on the fly, as in U4.

4.3 Evaluation

| Req. WYR | A convs. | B convs. | A rating | B rating | p-value | A length | B length | p-value |

| 0 | 763 | 232 | 3.71 | 3.78 | .709 | 22.49 | 22.05 | .774 |

| 1 | 313 | 232 | 3.94 | 3.78 | .125 | 37.39 | 22.05 | .000 |

| 2 | 111 | 232 | 4.27 | 3.78 | .000 | 58.26 | 22.05 | .000 |

| 3 | 51 | 232 | 4.38 | 3.78 | .002 | 71.77 | 22.05 | .000 |

| Req. HYP | A convs. | B convs. | A rating | B rating | p-value | A length | B length | p-value |

| 0 | 1980 | 681 | 3.70 | 3.72 | .734 | 21.92 | 21.02 | .303 |

| 1 | 804 | 681 | 3.84 | 3.72 | .085 | 35.98 | 21.02 | .000 |

| 2 | 282 | 681 | 3.92 | 3.72 | .032 | 53.84 | 21.02 | .000 |

| 3 | 104 | 681 | 4.03 | 3.72 | .034 | 75.14 | 21.02 | .000 |

We ran two A/B experiments with live traffic, i.e., any person with access to Alexa is a possible participant. In the A case (75% of traffic), just one type of the personal opinion question is enabled, while in the B case (25% of traffic) neither is enabled. The main evaluation criteria for the Alexa Prize is to create long and engaging conversations. Therefore, we evaluate the two dialogue strategies with respect to two metrics: overall user rating and conversation length. User rating is direct feedback - after the conversation ends, the user rates the system on a scale from 1-5 based on how interested they would be in talking to our system again. Length is evaluated automatically based on the number of exchanges in the conversation. In Table 1 and Table 2 we can see the Would You Rather (WYR) and Hypothetical (HYP) results respectively. In both cases, we only consider conversations that lasted longer than 6 exchanges, to account for early or accidental hang-ups at the start of the conversation, which can negatively bias results walker2021IWSDSParadise .

Our WYR evaluation ran over 5 days while HYP ran for 14 days. We initially planned to run both evaluations for 14 days, however, we felt we had a sufficient sample size with WYR after only 5 days. In both evaluations, we systematically vary a threshold for the minimum number of instances of personal opinion questions (POQs) in the conversation. Since the user rating only comes at the end of the conversation, varying this threshold should make it easier to observe the impact of our variables on the conversation. When the minimum Req. WYR/HYP value is 0, all conversations longer than six exchanges are included. Each POQ sequence includes two exchanges - one to ask the question and another to answer.

User ratings trend towards an improved experience when at last one POQ strategy is enabled, and becomes statistically significant once the threshold requires at least two POQ per conversation. When evaluating the Pearson correlational between the user ratings and the number of POQs, we see a weak, but statistically significant (p.001), correlation: .17 and .10 for WYR and HYP respectively. WYR results in slightly higher ratings than HYP, and we can see the correlation between HYP and the user ratings is also weaker than WYR. We speculate that the difference in rating may be related to the increased difficultly of NLU when acknowledging HYP answers. HYP questions are open-ended and designed to provoke innumerable valid answers, while WYR only has two valid answers. Therefore, it is easier for Athena to detect and signal an understanding of the user’s answer to a WYR question than a HYP question. An example of this scenario is in Figure 7. In A7 Athena repeats the user’s choice of brontosaurus in a WYR sequence, but when responding to a HYP sequence in A10, the complexity of the user’s answer forces Athena to answer without an explicit signal that acknowledges the user. This may suggest the importance of signalling when responding to personal questions, but requires further investigation.

While rating is direct user input, it only comes at the end of the conversation. Therefore, we also calculate how much of the conversation was part of a POQ sequence. On average, when 1 POQ is required, this translates to at least 5.5% of the conversation’s total exchanges being part of a POQ sequence. This increases to at least 7.2% of exchanges and 8.2% of exchanges when 2 POQ and 3 POQ are required, respectively. In other words, as our threshold increases, the required percentage of the conversation that is part of a POQ sequence also increases. Since both the rating and POQ contribution are increasing, we feel confident that the impact of Athena’s POQ strategies is not vanishing as length increases.

In both A/B tests the difference in conversational length becomes statistically significant when we require at least 1 personal opinion question. Additionally, both WYR and HYP see a strong Pearson correlation between the length of the conversation and the number of POQ: .82 and .80 respectively (p.001). Since conversation length is a good predictor of conversation quality walker2021IWSDSParadise , we interpret these results as confirmation that our POQs foster a more engaging user experience.

Over a 22 day period, 5,113 POQs were asked across the 14 supported topics. Of these 5,113 questions, 4,494 (about 88%) were answered in a way that allowed the conversation to continue on-topic. This validates the effectiveness of the POQ strategies at extending topical depth. Two topics (Nature and Food) make up the majority of the terminal cases, primarily due to their design.

5 Conclusion

In this paper we proposed three fundamental challenges practitioners face when building open-domain dialogue systems: covering an intractable landscape of topics, users who aimlessly meander through the conversation, and the core requirement of sociability. We propose a partial solution to these three problems lies in personalizing the conversation to each user. We implement a system, Athena, and deploy it at scale in the Amazon Alexa Prize, yielding several interesting results. Firstly, we front-load the conversation with a sequence of probing personal questions to rapidly model the user. By qualifying several thousand real user conversations we’re able to understand the types of topics users are interested in along with common user hobbies - informing future practitioners where to concentrate their efforts. Secondly, we ask the user for candid advice on how to improve Athena and invite the user to ask questions. The user input is surprising informative, helping to identify areas of improvement and future development. Moreover, the user input indicates a strong desire for a more personable experience as evidenced by direct user requests, e.g., be more personable, and by the fact that most user questions ( 90%) were personal questions about Athena’s life and opinions. This data further signals the possible benefits of asking the user candid questions during a conversation for future evaluations. Finally, we evaluated two personal opinion question strategies, Would You Rather choices and open-ended Hypothetical questions. A/B tests carried out over several days of live user traffic confirmed a statistically significant positive impact on both conversation rating and length when these questions were included in a conversation.

References

- (1) Adiwardana, D., Luong, M.T., So, D.R., Hall, J., Fiedel, N., Thoppilan, R., Yang, Z., Kulshreshtha, A., Nemade, G., Lu, Y., et al.: Towards a human-like open-domain chatbot. arXiv preprint arXiv:2001.09977 (2020)

- (2) Ahmadvand, A., Choi, I., Sahijwani, H., Schmidt, J., Sun, M., Volokhin, S., Wang, Z., Agichtein, E.: Emory irisbot: An open-domain conversational bot for personalized information access. Alexa Prize Proceedings (2018)

- (3) Basu, K., Wang Huaduo and, D.N., Li, X., Li, F., Chandra Varanasi, S., Gupta, G.: Caspr: A commonsense reasoning-based conversational socialbot. Alexa Prize Proceedings (2021)

- (4) Baymurzina, D., Kuznetsov, D., Evseev, D., Karpov, D., Sagirova, A., Peganov, A., Ignatov, F., Ermakova, E., Cherniavskii, D., Kumeyko, S., et al.: Dream technical report for the alexa prize 4. Alexa Prize Proceedings (2021)

- (5) Bowden, K.K., Oraby, S., Wu, J., Misra, A., Walker, M.A.: Combining search with structured data to create a more engaging user experience in open domain dialogue. CoRR abs/1709.05411 (2017). URL http://arxiv.org/abs/1709.05411

- (6) Bowden, K.K., Wu, J., Cui, W., Juraska, J., Harrison, V., Schwarzmann, B., Santer, N., Whittaker, S., Walker, M.: Entertaining and opinionated but too controlling: a large-scale user study of an open domain alexa prize system. In: Proceedings of the 1st International Conference on Conversational User Interfaces, pp. 1–10 (2019)

- (7) Burtsev, M., Logacheva, V., Malykh, V., Serban, I.V., Lowe, R., Prabhumoye, S., Black, A.W., Rudnicky, A., Bengio, Y.: The first conversational intelligence challenge. In: The NIPS’17 Competition: Building Intelligent Systems, pp. 25–46. Springer (2018)

- (8) Chen, C.Y., Yu, D., Wen, W., Yang, Y.M., Zhang, J., Zhou, M., Jesse, K., Austin, C., Bhowmick, A., Iyer, S., Sreenivasulu, G., Cheng, R., Bhandare, A., Yu, Z.: Gunrock: Building a human-like social bot by leveraging large scale real user data. Alexa Prize Proceedings (2018)

- (9) Chen, F., Chi, T.C., Lyu, S., Gong, J., Parekh, T., Joshi, R., Kaushik, A., Rudnicky, A.: Tartan: A two-tiered dialog framework for multi-domain social chitchat. Alexa Prize Proceedings (2020)

- (10) Chuklin, A., Markov, I., Rijke, M.d.: Click models for web search. Synthesis Lectures on Information Concepts, Retrieval, and Services 7(3), 1–115 (2015)

- (11) Curry, A.C., Papaiannou, I., Suglia, A., Agarwal, S., Shalyminov, I., Xu, X., Dusek, O., Eshghi, A., Konstas, I., Rieser, V., Lemon, O.: Alana v2: Entertaining and informative open-domain social dialogue using ontologies and entity linking. Alexa Prize Proceedings (2018)

- (12) Fang, H., Cheng, H., Sap, M., Clark, E., Holtzman, A., Choi, Y., Smith, N.A., Ostendorf, M.: Sounding board: A user-centric and content-driven social chatbot. arXiv preprint arXiv:1804.10202 (2018)

- (13) Fedorenko, D., Smetanin, N., Rodichev, A.: Avoiding echo-responses in a retrieval-based conversation system. In: Conference on Artificial Intelligence and Natural Language, pp. 91–97. Springer (2018)

- (14) Fields, D.: Would You Rather…?: 465 Provocative Questions to Get Teenagers Talking. Zondervan (2009)

- (15) Finch, S.E., Finch, J.D., Ahmadvand, A., Dong, X., Qi, R., Sahijwani, H., Volokhin, S., Wang, Z., Wang, Z., Choi, J.D., et al.: Emora: An inquisitive social chatbot who cares for you. arXiv preprint arXiv:2009.04617 (2020)

- (16) Finch, S.E., Finch, J.D., Huryn, D., Hutsell, W., Huang, X., He, H., Choi, J.D.: An approach to inference-driven dialogue management within a social chatbot. Alexa Prize Proceedings (2021)

- (17) Gabriel, R., Liu, Y., Gottardi, A., Eric, M., Khatri, A., Chadha, A., Chen, Q., Hedayatnia, B., Rajan, P., Binici, A., et al.: Further advances in open domain dialog systems in the third alexa prize socialbot grand challenge. Alexa Prize Proceedings (2020)

- (18) Harrison, V., Juraska, J., Cui, W., Reed, L., Bowden, K.K., Wu, J., Schwarzmann, B., Ebrahimi, A., Rajasekaran, R., Varghese, N., et al.: Athena: Constructing dialogues dynamically with discourse constraints. arXiv preprint arXiv:2011.10683 (2020)

- (19) Hong, C.H., Liang, Y., Roy, S.S., Jain, A., Agarwal, V., Draves, R., Zhou, Z., Chen, W., Liu, Y., Miracky, M., et al.: Audrey: A personalized open-domain conversational bot. arXiv preprint arXiv:2011.05910 (2020)

- (20) Hu, S., Liu, Y., Gottardi, A., Hedayatnia, B., Khatri, A., Chadha, A., Chen, Q., Rajan, P., Binici, A., Somani, V., et al.: Further advances in open domain dialog systems in the fourth alexa prize socialbot grand challenge. Alexa Prize Proceedings (2021)

- (21) Huang, K., Yeomans, M., Brooks, A.W., Minson, J., Gino, F.: It doesn’t hurt to ask: Question-asking increases liking. Journal of personality and social psychology 113(3), 430 (2017)

- (22) Juraska, J., Bowden, K., Reed, L., Harrison, V., Cui, W., Patil, O., Rajasekaran, R., Ramirez, A., Li, C., Zamora, E., Lee, P., Bheemanpally, J., Pandey, R., Ratnaparkhi, A., Walker, M.: Athena 2.0: Contextualized dialogue management for an Alexa Prize SocialBot. In: Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, pp. 124–133. Association for Computational Linguistics, Online and Punta Cana, Dominican Republic (2021). DOI 10.18653/v1/2021.emnlp-demo.15. URL https://aclanthology.org/2021.emnlp-demo.15

- (23) Khatri, C., Hedayatnia, B., Venkatesh, A., Nunn, J., Pan, Y., Liu, Q., Song, H., Gottardi, A., Kwatra, S., Pancholi, S., Cheng, M., Qinglang, C., Stubel, L., Gopalakrishnan, K., Bland, K., Gabriel, R., Mandal, A., Hakkani-Tur, D., Hwang, G., Michel, N., King, E., Prasad, R.: Advancing the state of the art in open domain dialog systems through the alexa prize. Alexa Prize Proceedings (2018)

- (24) Kiseleva, J., Williams, K., Hassan Awadallah, A., Crook, A.C., Zitouni, I., Anastasakos, T.: Predicting user satisfaction with intelligent assistants. In: Proceedings of the 39th International ACM SIGIR conference on Research and Development in Information Retrieval, pp. 45–54. ACM (2016)

- (25) Konrád, J., Pichl, J., Marek, P., Lorenc, P., Ta, V.D., Kobza, O., Hỳlová, L., Šedivỳ, J.: Alquist 4.0: Towards social intelligence using generative models and dialogue personalization. arXiv preprint arXiv:2109.07968 (2021)

- (26) Lewis, M., Liu, Y., Goyal, N., Ghazvininejad, M., Mohamed, A., Levy, O., Stoyanov, V., Zettlemoyer, L.: Bart: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. In: Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pp. 7871–7880 (2020)

- (27) Li, J., Galley, M., Brockett, C., Gao, J., Dolan, B.: A Persona-Based Neural Conversation Model. arXiv preprint arXiv:1603.06155 (2016)

- (28) Liang, K., Chau, A., Li, Y., Lu, X., Yu, D., Zhou, M., Jain, I., Davidson, S., Arnold, J., Nguyen, M., et al.: Gunrock 2.0: A user adaptive social conversational system. arXiv preprint arXiv:2011.08906 (2020)

- (29) Ma, L., Li, M., Zhang, W.N., Li, J., Liu, T.: Unstructured text enhanced open-domain dialogue system: A systematic survey. ACM Transactions on Information Systems (TOIS) 40(1), 1–44 (2021)

- (30) Meyer, J.C.: Understanding humor through communication: Why be funny, anyway? Lexington Books (2015)

- (31) Radlinski, F., Craswell, N.: A theoretical framework for conversational search. In: Proceedings of the 2017 Conference on Conference Human Information Interaction and Retrieval, CHIIR ’17, pp. 117–126. ACM, New York, NY, USA (2017). DOI 10.1145/3020165.3020183. URL http://doi.acm.org/10.1145/3020165.3020183

- (32) Reis, H.T., Maniaci, M.R., Caprariello, P.A., Eastwick, P.W., Finkel, E.J.: Familiarity does indeed promote attraction in live interaction. Journal of personality and social psychology 101(3), 557 (2011)

- (33) Reis, H.T., Patrick, B.C.: Attachment and intimacy: Component processes. (1996)

- (34) Rodriguez-Cantelar, M., de la Cal, D., Estecha, M., Grande, A., Martin, D., Rodriguez, N., Martinez, R., Fernando, L.: Genuine2: an open domain chatbot based on generative models. Alexa Prize Proceedings (2021)

- (35) Roller, S., Dinan, E., Goyal, N., Ju, D., Williamson, M., Liu, Y., Xu, J., Ott, M., Smith, E.M., Boureau, Y.L., et al.: Recipes for building an open-domain chatbot. In: Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, pp. 300–325 (2021)

- (36) Saha, S., Das, S., Soper, E., Pacquetet, E., Srihari, R.K.: Proto: A neural cocktail for generating appealing conversations. arXiv preprint arXiv:2109.02513 (2021)

- (37) Serban, I.V., Sankar, C., Germain, M., Zhang, S., Lin, Z., Subramanian, S., Kim, T., Pieper, M., Chandar, S., Ke, N.R., et al.: A deep reinforcement learning chatbot. arXiv preprint arXiv:1709.02349 (2017)

- (38) Shani, C., Libov, A., Tolmach, S., Lewin-Eytan, L., Maarek, Y., Shahaf, D.: “alexa, do you want to build a snowman?” characterizing playful requests to conversational agents. In: CHI Conference on Human Factors in Computing Systems Extended Abstracts, pp. 1–7 (2022)

- (39) Song, Y., Yan, R., Li, X., Zhao, D., Zhang, M.: Two are better than one: An ensemble of retrieval-and generation-based dialog systems. arXiv preprint arXiv:1610.07149 (2016)

- (40) Sordoni, A., Galley, M., Auli, M., Brockett, C., Ji, Y., Mitchell, M., Nie, J.Y., Gao, J., Dolan, B.: A Neural Network Approach to Context-Sensitive Generation of Conversational Responses. arXiv preprint arXiv:1506.06714 (2015)

- (41) Vinyals, O., Le, Q.: A Neural Conversational Model. arXiv preprint arXiv:1506.05869 (2015)

- (42) Walker, M., Harmon, C., Graupera, J., Harrison, D., Whittaker, S.: Modeling performance in open-domain dialogue with paradise. In: The 12th International Workshop on Spoken Dialog System Technology, 15-17 November 2021, Singapore. Springer (2021). URL InPress

- (43) Weizenbaum, J.: Eliza—a computer program for the study of natural language communication between man and machine. Communications of the ACM 9(1), 36–45 (1966)

- (44) Yang, M., Zhao, Z., Zhao, W., Chen, X., Zhu, J., Zhou, L., Cao, Z.: Personalized response generation via domain adaptation. In: Proceedings of the 40th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 1021–1024 (2017)

- (45) Zhang, Y., Sun, S., Galley, M., Chen, Y.C., Brockett, C., Gao, X., Gao, J., Liu, J., Dolan, W.B.: Dialogpt: Large-scale generative pre-training for conversational response generation. In: Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics: System Demonstrations, pp. 270–278 (2020)

- (46) Zhou, L., Gao, J., Li, D., Shum, H.Y.: The design and implementation of xiaoice, an empathetic social chatbot. Computational Linguistics 46(1), 53–93 (2020)