LFGCF: Light Folksonomy Graph Collaborative Filtering for Tag-Aware Recommendation

Abstract

Tag-aware recommendation is a task of predicting a personalized list of items for a user by their tagging behaviors. It is crucial for many applications with tagging capabilities like last.fm or movielens. Recently, many efforts have been devoted to improving Tag-aware recommendation systems (TRS) with Graph Convolutional Networks (GCN), which has become new state-of-the-art for the general recommendation. However, some solutions are directly inherited from GCN without justifications, which is difficult to alleviate the sparsity, ambiguity, and redundancy issues introduced by tags, thus adding to difficulties of training and degrading recommendation performance.

In this work, we aim to simplify the design of GCN to make it more concise for TRS. We propose a novel tag-aware recommendation model named Light Folksonomy Graph Collaborative Filtering (LFGCF), which only includes the essential GCN components. Specifically, LFGCF first constructs Folksonomy Graphs from the records of user assigning tags and item getting tagged. Then we leverage the simple design of aggregation to learn the high-order representations on Folksonomy Graphs and use the weighted sum of the embeddings learned at several layers for information updating. We share tags embeddings to bridge the information gap between users and items. Besides, a regularization function named TransRT is proposed to better depict user preferences and item features. Extensive hyperparameters experiments and ablation studies on three real-world datasets show that LFGCF uses fewer parameters and significantly outperforms most baselines for the tag-aware top-N recommendations.

keywords:

Recommendation systems , Tag-aware collaborative filtering , Graph neural networks , Knowledge graphMSC:

[2022] 05-23, editon: 1.21 Introduction

Tag-aware recommendation systems (TRS), to better depict user preferences and item features, have been widely deployed to bridge the information gap between users and items [1, 2]. The fundamental factor of TRS is to provide a folksonomy, where users can freely assign tags to items that they interacted with (e.g., movies, music, and bookmarks) [3]. These tags are composed of concise and comprehensive words or phrases that reflect users’ subjective preferences and items’ characteristics [4]. From this perspective, tags in folksonomy can bridge collaborative information between users and items. By exploring these collabortaive information in the tagging procedure, TRS is able to provide personalized item lists for users [5, 6, 4]. Therefore, folksonomy records can be introduced into recommendation systems to enhance interpretability and improve recommendation quality.

A common paradigm is to transform tags into a generic feature vector and feed them into feature-based models to integrate auxiliary folksonomy records. For example, CFA[5] used the sparse autoencoder (SAE) to obtain tag-based user latent representations and combines those with user-based collaborative filtering (CF). Besides, DSPR[7] and HDLPR[8] leveraged the multi-layer perceptron (MLP) to process such sparse feature vectors and extract abstract user and item representations, AIRec[9] provided a hybrid user model with hierarchical attention networks, which can depict user implicit preferences from explicit folksonomy records. Some researchers have organized the folksonomy records as a graph in recent years, utilizing graph neural networks (GNN) for TRS. TGCN[4] used graph convolutional networks (GCN) for TRS and outperformed other state-of-the-art TRS models. TA-GNN[10] leveraged two graph attention networks for embeddings aggregation and achieve higher quality recommendations as well.

Although the above method improves the recommendation performance in TRS, it comes with some unacceptable issues resulting from the sparsity of data and the redundancy and ambiguity of tags [11]. To be specific, the sparsity issues from most users assigning a few amounts of tags to the items they interacted with. The redundancy and ambiguity issues from the fact that several tags have the same or different meaning due to the lack of contextual semantics. For example, they are owing to the diversity in writing or expression styles of users. Some tags with different forms have the same meanings and indicate similar preferences, such as “world war 2” and “ww2” being two different tags in folksonomy, but usually assigned to the same movie “Schindler’s List” by users. Moreover, some tags consist of polysemous words, which means they have different understanding in different contexts. The tag “apple” could be misunderstood as a technology company by most tech-enthusiasts rather than a kind of fruit. Those issues increase training difficulty and finally degrade the recommendation systems’ effectiveness.

Several recent works which introduced GNN to TRS have demonstrated its effectiveness to deepen the use of subgraph with high-hop neighbors, such as TGCN[4], GNN-PTR[12] and TA-GNN[10]. However, those works have shown promising results; we argue that its designs are rather burdensome, directly inherited from GCN without justification.

This paper focuses on the issues mentioned above and proposes a GCN-based recommendation model for TRS. Specifically, we construct two sets of edges among user tagging records. The first set of edges reflects the initiative interaction between users and tags, and the second set of edges reflects passive interaction between items and tags. Therefore, the Folksonomy Graph (FG) can be constructed based on the above edges set. It is worth mentioning that relationships between users and items are removed because part of tagging behavior is a negative interaction. For example, some users assign the tag “worst movie ever” for some items. In this case, the FG is constructed based on the tagging and tagged information. Fig.1 illustrates an example. Then, we propose a Light Folksonomy Graph Collaborative Filtering (LFGCF) inspired by LightGCN[13], on which the Graph Convolutional Networks integrate topological structure for representation learning and share tag representations, which bridge the information gaps between users and items. Finally, we design a regularization function named TransRT to model the representation and perform joint learning with the recommendation task from a user tagging and item tagged perspective.

The main contributions of this paper are summarized as follows:

-

1.

We construct FG based on the user tagging records and item tagged records, respectively, which reflect users’ preferences and items’ characteristics. The interaction between users and items is not used to construct the graph structure;

-

2.

We leverage the GCN for representation learning, which is specific light designed for TRS, and jointly optimize TransRT for the Top-K recommendation task;

-

3.

We perform extensive experiments on three public datasets, demonstrating improvements of LFGCF over state-of-the-art GCN methods for the tag-aware Top-K recommendation.

The rest of this paper is organized as follows. Section 2 present the related words about TRS and GNN-based recommendation systems. Section 3 gives some background, including problem formulation and definition FG. In Section 4, we propose a recommendation method based on GCN is described in detail. Section 5 reports the hyperparameters experiments and ablation studies results. Finally, we conclude our contributions and give further work directions in Section 6.

2 Related Works

2.1 Tag-aware recommendations

Collaborative tagging recommendation allows users to assign tags freely on all kinds of items and then utilizes them to provide better-personalized recommendations. However, just like collaborative filtering recommendation, collaborative tagging recommendation suffers from the sparsity of interactions. Nevertheless, the redundancy and the ambiguity greatly compromise the performance of recommendations. By incorporating tagging information into collaborative filtering, Zhen et al. [14] came up with TagiCofi, which managed to characterize users’ similarities better and achieved better recommendations. To tackle the redundancy of tags, Shepisten et al. [11] used hierarchical clustering on social tagging systems. Peng et al. [15] further integrated the relationship between users, items, and tags and then came up with a Joint Item-Tag recommendation. Zhang et al. [16, 17] incorporated a user-tag-item tripartite graph, which turned out effective for improving the accuracy, diversity, and novelty of recommendations. FolkRank++ was brought up by Zhao et al. [18] to dig out the similarities among users and items fully. Focusing on personalized ranking recommendations in a collaborative tagging system, Rendle et al. [3] introduced matrix factorization and brought up RTF, which optimized ranking in personalized recommendations. Later, enlightened by BPR [19], Li et al. [6] came up with BPR-T for tag-aware recommendations. With the development of deep learning, Zuo et al. [5] used deep neural networks to extract tag information, which helped alleviate the sparsity, redundancy, and ambiguity of tags and generate accurate user profiles.

Despite the fact that much effort has been devoted to tag-aware recommendations, the implicit pattern under user tagging behavior are not fully extracted. We introduce the modified knowledge graph algorithm to tackle this problem.

2.2 GNN-based recommendations

Recent years have witnessed rapid development in graph neural networks, which perform excellently in node classifications and edges predictions. Berg et al. [20] brought up GCMC, in which autoencoder was used in a user-item bipartite graph to generate expressive embeddings. Ying et al. [21] focused on web-scale recommendation systems and came up with a GCN-based algorithm: PinSage. Results showed that Pinsage had excellent robustness and could generate high-quality recommendations. Wang et al. [22] incorporated GCN and came up with NGCF. Benefited by the multi-hop neighborhood connectivities, NGCF achieved good recommendations performance. Later in the research of He et al. [13], it is proven that some designs in NGCF are burdensome and compromising recommendation performance. So LightGCN was brought up to simplify the model design, achieving better recommendations. Focusing on click-through rate (CTR) predictions, Li et al. [23] came up with Fi-GNN, in which the gated recurrent units (GRU) was used for information aggregation. DG-ENN was brought up by Guo et al. [24] for the same task. By incorporating the attribute graph and the collaborative graph, DG-ENN was able to alleviate the feature and behavior sparsity problem. For certain factors in CTR tasks, Zheng et al. [25] taken price into account when coming up with a GCN-based model. Furthermore, Su et al. [26] used L0 regularization via GNN approach to distinguish valuable factors automatically. Focusing on the re-ranking task in recommendations, Liu et al. [27] developed IRGPR, which introduced an intent embedding network to embed user intents, and it is proven effective for re-ranking. Lately, several researchers have focused on applying graph neural networks to collaborative tagging systems. Chen et al. [4] used graph convolutional networks for tag-aware recommendations, and their TGCN model outperformed other state-of-the-art models. Huang et al. [10] used two graph attention networks for embeddings aggregation and achieved high-quality recommendations as well.

Few researchers use graph neural networks on tag-aware recommendations, while the model structure is quite complicated, making the training rather tricky. Our proposed method uses a relatively light and straightforward graph structure which suppose lowers the training cost and improves the performance.

3 Material

3.1 Problem Formulation

Folksonomy also named user tagging behavior, is the fundamental factor of the TRS. It is defined as a series of tags assigned by some users when interacting with certain items they are interested in. Generally, users interacting with certain items by operations such as clicking, tagging, or commenting could be viewed as folksonomy records. It is aggregated into a triplet, i.e, , which represents user assigned tag to item . These personalized tags reflect users’ subjective preferences and characteristics of items. This tagging procedure is rich in collaborative information. By exploring this collaborative information, TRS can further infer users’ preferences, summarize features of items and understand the connotation of tags, which hopefully improve the quality of recommendation systems.

Suppose the number of elements in user set , item set , tag set are , and , respectively. The folksonomy is a tuple , where is a record in a typical TRS. During the tagging procedure, user interacts with the target item through an explicit tagging feedback by tag , i.e., the user watching the movie ’Transformers’ because of the tag ’sci-fi’.But few researchers have addressed the problem of information leaks on folksonomy. We argue that directly modeling explicit tagging feedback as implicit feedback may leak information because a part of tagging behavior is negative feedback, i,e, user tagging movie as ’boring movies’. The leak is supposed to hinder the recommendation performance in the test dataset. Our approach to tackling the problem is to model user-tag tagging interactions and item-tag tagged interactions in separately, rather than model user-item interactions directly.

This paper focuses on recommending the personalized ranking list of items for each user on the TRS. By exploring the implicit feedback in user tagging assignments, leveraging collaborative information, and training a model to refine embeddings, we can generate the Top-K list of items for each user.

| (1) |

3.2 Folksonomies Graph

To prevent the information leak from happening, we construct two sets of edges and among the folksonomies records . Set reflects the assignments between users and tags. On the one hand, set indicates the passive tagged interactions between items and tags. In TRS scenario, each kind of edge from and is respectively defined as:

| (2) |

| (3) |

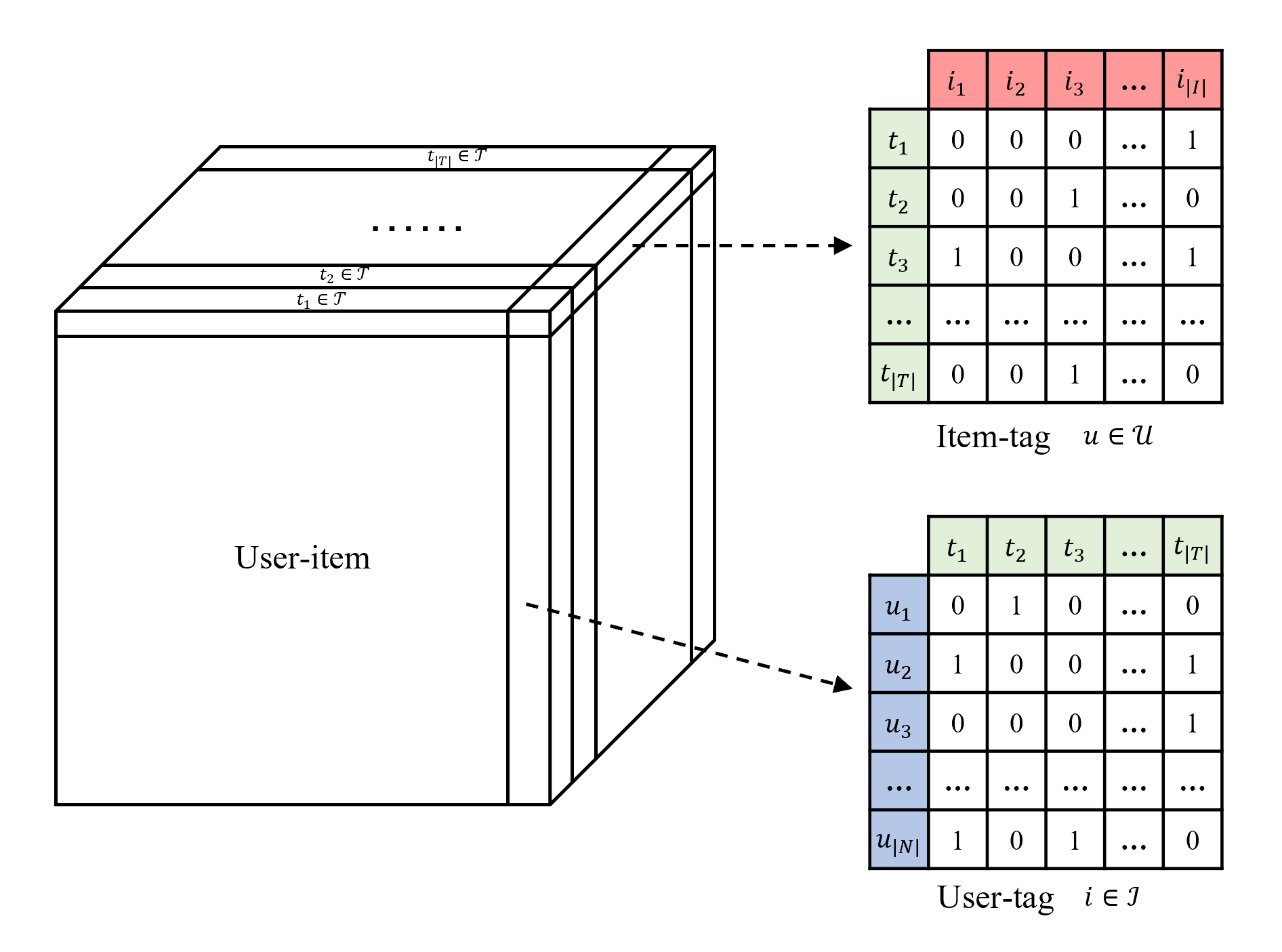

To make it easier to follow, the matrix form of folksonomy is shown in Fig. 1. Therefore, the FG is constructed based on user-tag tagging and item-tag tagged interactions according to the assignments set . Each set of edges can be respectively regarded as the set of edges in the bipartite graph , .

4 Method

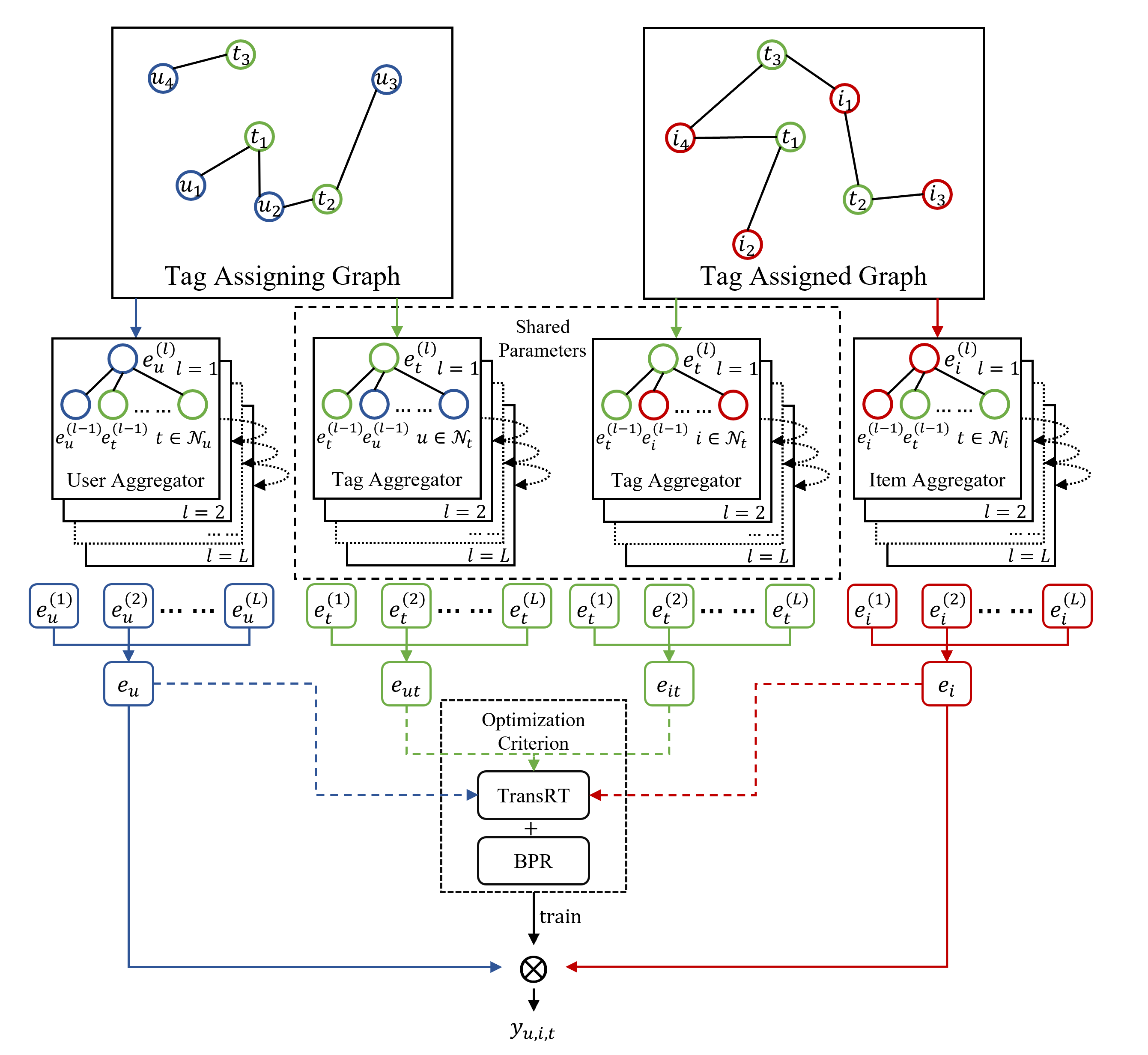

In this section, we first present the design of the Light Folksonomy Graph Collaborative Filtering (LFGCF) method, as illustrated in Fig.2, which is composed of two core modules: 1) Light Folksonomy Graph Convolutional Network, which leverages a light yet effective model by including the essential ingredients of GCN for constructing the FG from folksonomies records and GCN-based collaborative aggregated operations to capture higher-order semantic information under tagging graph and tagged graph, respectively; 2) TransR on Tags, which provides a regularization function named TransRT to bridge tagging graph and tagged graph by triplets preserved. The joint optimization details and how to do modeling training for Top-K recommendation in TRS will be discussed later in this section.

4.1 LFGCF

Mainstream GNN models, such as GCN[28] and GAT[29] were originally proposed for node or graph representation learning on attributed graphs. Specifically, each node has existing embeddings as input features, which are firstly transformed into a uniform shape by features transformation and then aggregated with its neighbors on the graph. In the end, embeddings are updated by nonlinear activations. Whereas from the view of the bipartite graph for Collaborative Filtering, each node (user or item) is only identified by a unique token without any concrete semantics as input features. In such a case, given unique token embeddings as input, performing multiple layers of feature transformation and nonlinear activations are keys to the success of modern neural networks[30]. However, these operations not only make little benefits to the performance of recommendation tasks but also increase the difficulties of representation training. Inspired by the ideas of LightGCN[13], we propose a light yet effective model by including the essential ingredients of GCN for a recommendation.

4.1.1 Light Assign Graph Convolutional Network

The fundamental factor of GCN is learning nodes recommendation by aggregating neighbors features over the graph[28]. To be specific, aggregating the features of neighbors is the new representation of nodes. The neighbors aggregated function can be universally defined as follows:

| (4) |

where the is the neighbors aggregation function, which takes -th layer’s representations of target nodes and their neighbors into consideration. Besides, the is the representations of users or item embeddings in and , respectively; the is the representations of tags and shared parameters between and . Many researchers have proposed different aggregation functions for neighbors aggregation, such as the weighted sum aggregator in GIN[31], LSTM aggregator in GraphSAGE[32], and bilinear interaction in BGNN[33]. However, most of them tie feature transformation or nonlinear activation with function, such as complex attention-based and CNN-based feature transformation in TGCN[4]. Although they all perform well on node or graph classification tasks with semantics input features and TRS recommendation tasks that only have token embeddings as input features, they could be burdensome for Top-K recommendation tasks.

4.1.2 Light Aggregation

We leverage the LFGCN in FG, which is constructed based on and . In LFCGN, we focus on the essential ingredients of GCN for recommendations. We adopt the light-weighted sum aggregator and abandon the use of feature transformation and nonlinear activation. The Light Aggregation function can be defined as follows:

| (5) | |||||

| (6) |

The symmetric normalization term follows the design of standard GCN[28], which can avoid the scale of embeddings increasing with graph convolution operations.

4.1.3 Layer Combination and Model Prediction

In our LFGCF, the only trainable parameters are the embeddings at the 0-layer. When thay are initialize, the higher layers can be computed via LFGCN defined by Equation (5-6). After K layers LFGCN, we propose layer combination function that further combine the embeddings obtained at each layer to form the final representation of nodes. The layer combination function can be defined as follows:

| (7) |

which is the layer combination function, which fusion all layers’ representation of specific type of nodes. is the number of layers.

The embeddings at different layers capture different semantics in FG. For example, the first layer enforces smoothness on users(items) and tags that have interactions, the second layer smooths users(items) that have overlap on interacted tags, and higher-layers capture higher-order proximity[22]. Thus, we do not design spectial componet, and the layer combination function can be further defined as follows:

| (8) |

which denotes the importance of the k-th layer embedding in constituting the final embedding. It can be treated as a hyperparameter to be tuned manually, or a model parameter optimized automatically. We set uniformly as , where is the number of layers. The reasons that we designded the layer combination function to get final representations are two-fold. (1) With the increasing of layers, the embeddings will be over-smoothed[34]. Thus only using the last layer is problematic. (2) Combining embeddings at different layers with different weight captures the effect of GCN with self-connections[13].

The model prediction is defined as the inner product of the user and item final representations:

| (9) |

where is used as the ranking score for recommendation generation.

4.2 TransRT

Knowledge graph embedding effectively parameterizes nodes as vector representations while keeping the graph’s topology. In this paper, we propose a new method for embedding a knowledge graph based on the idea of transformers. Here we propose a new regularization function, which is based on TransR[35], a widely used method in a knowledge graph. To be specific, it learns the embedding of each node by optimizing the translation principle , if a triplet . Herein, is the final embedding of user , item and tag , respectively, and are the projected representations of and in the tag’s space. Hence, for a given folksonomy record , its plausibility score could be defined as follows:

| (10) |

where are in the same d-dimension space, but not the same semantics space. A lower score of suggests that the folksonomy score is more likely to be reasonable and vice versa.

4.3 Jointly Optimization Details

The trainable parameters of LFGCF are only the embeddings of the 0-th layer, which combine with users, items, and tags in FG. In other words, the model complexity is the same as the standard matrix factorization (MF). To optain better ranking, we employ the Bayesian Personalized Ranking (BPR) loss[19], which is a pairwise loss that encourages the prediction of an observed record to be higher than its unobserved sampled counterpart. The BPR loss is defined as follows:

| (11) |

where indicates pairwsie observed in folksonomy records, and pairwise means that the user and item not observed in record, but uniformly sampled from the unobserved pairs.

To train TransRT, we minimize the plausibility score:

| (12) |

where controls the strength of the knowledge graph regularization, and is the plausibility score of the record .

To effective learning parameters for recommendation and preserve the regularization relationship among folksonomy records, we integrate the Top-K recommendation task and the TransRT by a jointly learning framework. Finally, the total objective function of LFGCF is defined as follows:

| (13) |

where controls the strength of regularization. We employ the Adam[36] optimize and and use it in a mini-batch manner. Besides, an early stopping strategy is also applied to avoid overfitting during training.

5 Experimental

In this section, we focus on the following questions:

RQ1

Does LFGCF outperform other tag-aware recommendation models in the Top-K recommendation task?

RQ2

Does it help improve the recommendation performance to remove some components in the GCN?

RQ3

Whether or not the implementation of TransRT solves the redundancy and ambiguity of tags?

5.1 Setup

In order to answer the questions above, a series of experiments are designed and carried out. We first show that LFGCF outperforms other state-of-the-art models, then elaborate on its expressiveness and explainability. Meanwhile, according to the investigation from [37], experiments of some other models are carried out with the different processes, which brings difficulties to repeating them. Hence, all the experiments in this paper are all under the uniformed experimental framework Recbole[38] to make fair comparisons.

5.1.1 Experiment Datasets

Extensive experiments are carried out to evaluate the proposed LFGCF based on three real-world datasets: MovieLens, LastFM, and Delicious. They were all released in HetRec [39].

-

MovieLens is a recommendation dataset that contains a list of tags assigned by users to various movies.

-

LastFM is a dataset from Last.FM music system with music listening information, tags assignments to artists, and user social networks.

-

Delicious is obtained from Del.icio.us and contains tagging information to web bookmarks.

Due to the sparsity of tagging information, some infrequently used tags exist. To rule out their negative influence of them, those tags used less than 5 times in MovieLens and LastFM and 15 times in Delicious are removed[5]. Basic statistic information of the datasets after preprocessing is summarized in Table.1

| Datasets | User | Item | Tag | Assignments | Sparsity |

|---|---|---|---|---|---|

| Last.FM | 1808 | 12212 | 2305 | 175641 | 99.20% |

| MovieLens | 1651 | 5381 | 1586 | 36728 | 99.59% |

| Delicious | 1843 | 65877 | 3508 | 330744 | 99.73% |

Since data we use are not time sequence data, so training sets, validation sets and test sets are randomly selected according to the proportion of {0.6, 0.2, 0.2}. The metrics that reflect model performances in the reminder part of the paper are all calculated from test sets.

5.2 Evaluation Metrics

The performance of TRS is directly related to the quality of Top-K recommendations, which are evaluated by the following metrics: Recall@N, Precision@N, Hit Ratio@N, NDCG@N, and MRR@N[40]. Empirically, higher metrics mean better performances. Each metric is elaborated:

-

Recall@N measures the percentage of the number of items in Top-K recommendations to the actual item set that user interact with.

(14) where denotes the Top-K recommendations and denotes the ground truth item set.

-

Precision@N measures the fraction of items the users would interact with among the Top-K recommendations.

(15) -

Hit Ratio@N measures the percentage of users who interact with the recommendations for at least one time.

(16) where is the indicator function.

-

NDCG@N reflects the quality of ranking by distinguishing the contributions of the accurately recommended items.

(17) where means the item in Top-K recommendations .

-

MRR@N computes the reciprocal rank of the first relevant item found by an rank algorithm.

(18) where means the rank position of the first relevant item in recommendations for a user.

5.3 Baselines and Parameters

To fairly evaluate the performance and effectiveness of LFGCF, we adopt some classic or state-of-the-art TRS models as baselines.

-

DSPR[7] leverages deep neural networks to learn tag-based features by mapping user and item profiles to deep latent space.

-

CFA[5] is a user-based collaborative filtering model which adopts a sparse autoencoder to extract latent features.

-

BPR-T[6] is a collaborative filtering model which incorporates tagging information and the Bayesian ranking optimization.

-

TGCN[4] is a collaborative filtering model which incorporates tagging information into GCN along with an attention mechanism.

In order to make impartial comparisons, each model is optimized with mini-batch Adam, while the batch size is set as 2048. The learning rate of each model is searched from {0.0001, 0.0005, 0.001, 0.005, 0.01, 0.05} and the regression weight is searched from {1e-5, 1e-4, 1e-3, 1e-2}. For the autoencoder in CFA and the graph structure in TGCN and LFGCF, the number of layers is searched from {1, 2, 3, 4}. For BPR-T, its three regression weight is searched from {1e-5, 1e-4, 1e-3, 1e-2, 1e-1}. Additionally, by further searching the coefficients from {1e-5, 1e-4}, we can analyze the sensibility of TransRT. The hyperparameter experiments are conducted under 10 random seeds. By conducting these experiments, all of the models are at their optimal performances, ensuring the fairness of the following comparisons.

5.4 Performance Analysis

The experiment results in this section are all achieved with the optimal hyperparameters. Best performance is in boldface, best baseline proformance is in underline and imp. means the improvement of LFGCF over the state-of-the-art baseline.

| Dataset | Metric | DSPR | CFA | BPRT | TGCN | LFGCF | imp | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| MovieLens |

|

|

|

|

|

|

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Last.FM |

|

|

|

|

|

|

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Delicious |

|

|

|

|

|

|

|

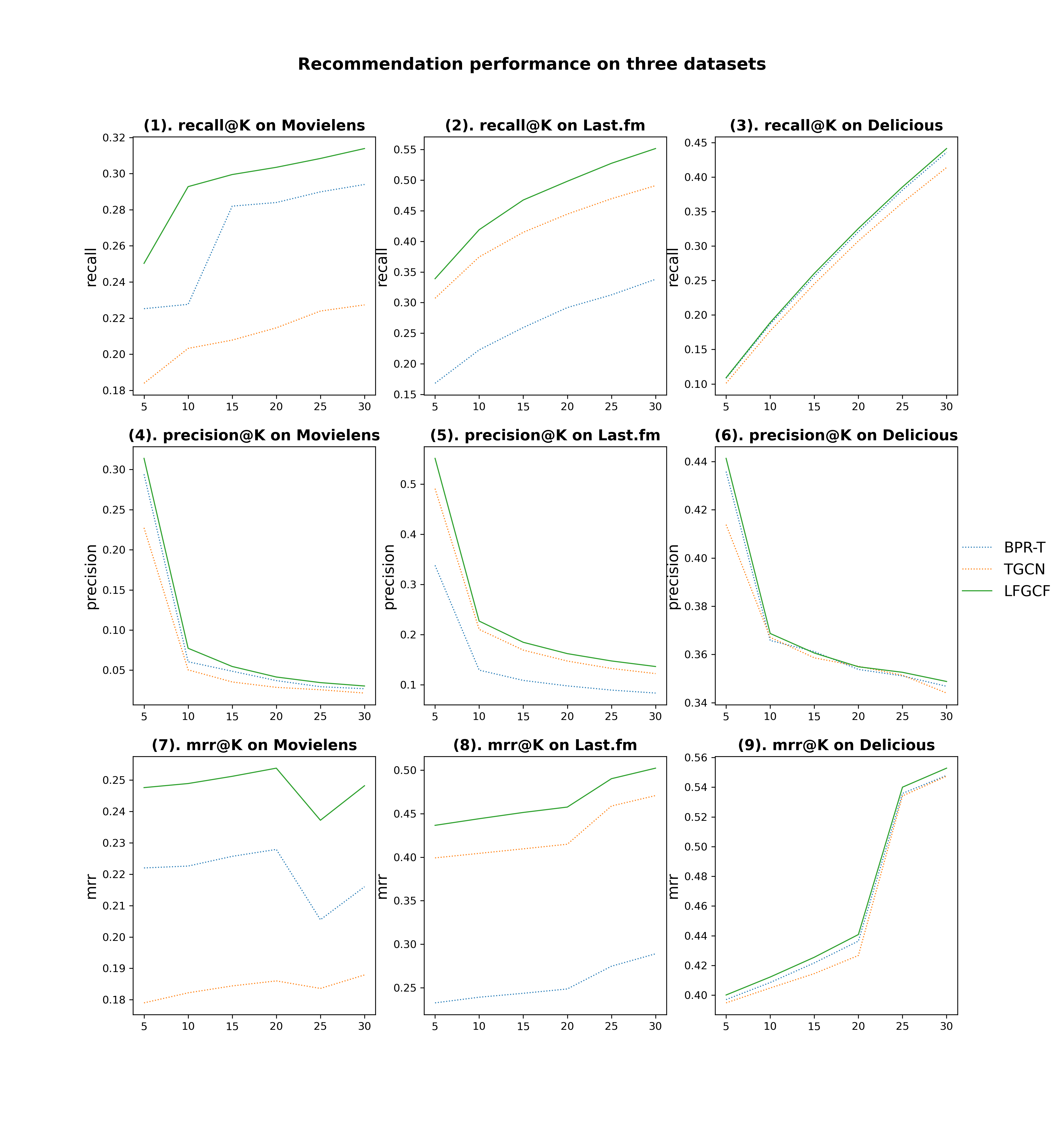

Table.LABEL:performance_comparison shows the Top-K recommendation performance metrics of LFGCF and other baselines in three datasets when . Fig.3 shows the Top-K recommendation performance of our LFGCF and other baselines in terms of Recall@N, Precision@N and MRR@N while N ranges from 5 to 30 with the interval of 5.

Fig.3 shows the Top-K recommendation performance of our LFGCF and other baselines in Recall@N, Precision@N, and MRR@N, while N ranges from 5 to 30 with an interval of 5.

The performance comparisons of our model and other baselines shows that LFGCF achieved the state-of-the-art performance while succcessfully alleviating the training difficulty with the help of light designed GCN. Among baseline models, TGCN and BPR-T outperform DSPR and CFA by a large margin.

We summarize why our LFGCF outperforms other baselines for the following reasons: (1) More effective GCN. Two LFGCN implemented for feature learning show better performances than deep neural networks in models CFA and DSPR. Effective representation propagation and aggregation benefit LFGCF by lifting performances and reducing training difficulties; (2) TransRT assists with extracting more expressive user and item representations. Recent years witnessed efforts devoted to the loss function based on BPR loss to better fit recommendation tasks. Experiments indicate that using the improved knowledge graph algorithm TransRT allows more efficient uses of collaborative tagging information.

5.5 Ablation Studies

To verify our summaries of the superior performance of LFGCF above, we conduct a series of ablation studies on LFGCF.

5.5.1 Effect of LFGCN

Some models take user-item interaction information into personalized recommendations in the research field. According to the research of He et al. [13], complex feature transformation and nonlinear activation not only bring difficulties to training but also compromise the performance. To verify the effectiveness of light designed GCN in tag-aware recommendation systems, We implement NGCFT as a baseline model based on NGCF [22]. Apart from the complex feature aggregation and propagation operations inherited from NGCF, NGCFT is identical to LFGCF in the loss function, recommendation generation, and parameter setting.

| Model | Recall@20 | Precision@20 | Hit@20 | NDCG@20 | MRR@20 |

| NGCFT | 0.2158 | 0.0227 | 0.3109 | 0.1383 | 0.1405 |

| LFGCF | 0.2767 | 0.0375 | 0.4176 | 0.2156 | 0.2199 |

| imp | 28.22% | 65.20% | 34.32% | 55.89% | 56.51% |

| Model | Recall@20 | Precision@20 | Hit@20 | NDCG@20 | MRR@20 |

| NGCFT | 0.5005 | 0.1454 | 0.7953 | 0.4543 | 0.5167 |

| LFGCF | 0.5069 | 0.1468 | 0.8035 | 0.4442 | 0.5058 |

| imp | 1.28% | 0.96% | 1.03% | -2.23% | -2.11% |

| Model | Recall@20 | Precision@20 | Hit@20 | NDCG@20 | MRR@20 |

| NGCFT | 0.3256 | 0.3574 | 0.9354 | 0.4150 | 0.5453 |

| LFGCF | 0.3341 | 0.3615 | 0.9404 | 0.4225 | 0.5572 |

| imp | 2.61% | 1.15% | 0.53% | 1.81% | 2.18% |

Generally, LFGCF outperforms NGCFT on three datasets. On MovieLens, LFGCF outperforms NGCFT by a large margin. This suggests that using the light graph structure effectively imporves the performance of recommendations.

5.5.2 Effect of TransRT

Modified knowledge graph algorithm TransRT is implemented along with BPR loss to help with model training and achieving more expressive representations. Theoretically speaking, TransRT allows for better-using tagging information to improve qualities of user and item representations, which would lift the performance of recommendations. To verify the effectiveness of TransRT, we remove TransRT and only train the LFGCF with BPR loss and name this baseline model LFGCF-RT.

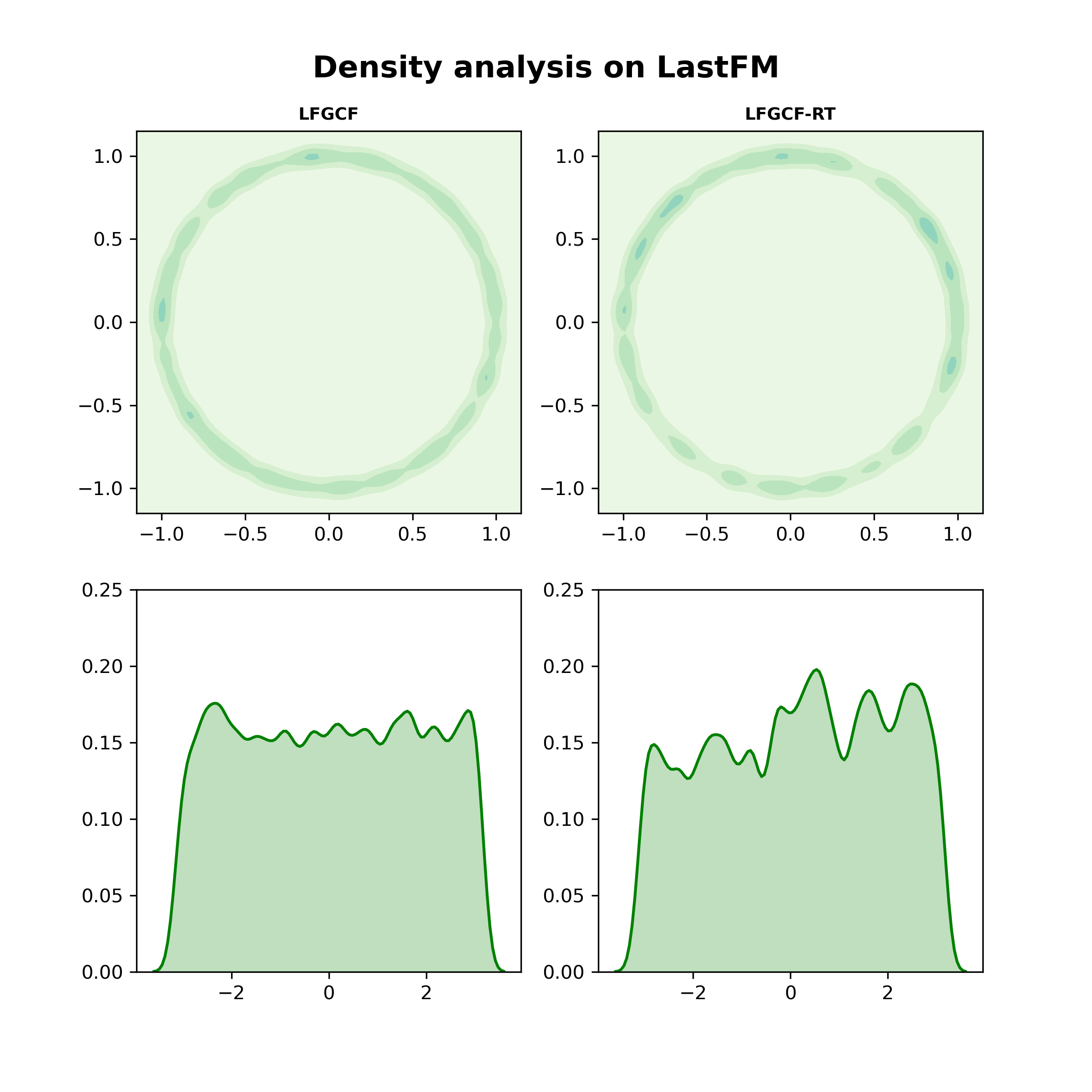

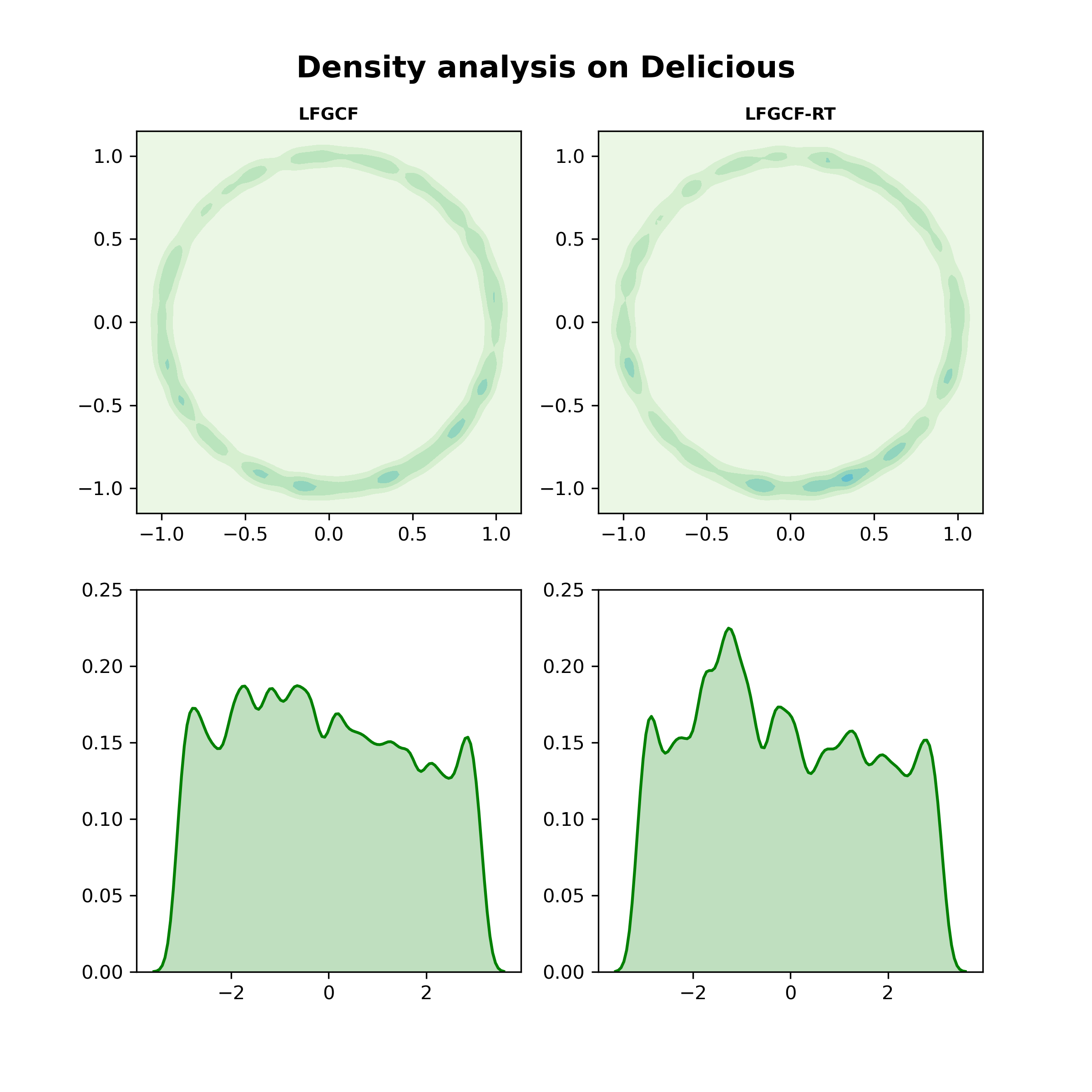

Apart from learning more expressive user and item representations, we believe that TransRT help learn tag representations as well. To verify this deduction, we follow the visualization method in [41]. Learned tag embeddings are first reduced to two dimensions using t-SNE [42]. Then 2-dimensional embeddings are normalized and mapped on the unit hypersphere (i.e., a circle with radius 1). To make the presentation, the density estimations on angles for each point on the hypersphere are visualized in Fig.4.

As it can be learned from the Fig.4, tag representations trained by LFGCF are much smoother than those learned by the baseline model without TransRT. It indicates that TransRT help promote the recommendation performance by smoothing the learned representations. To make a clear comparison between LFGCF and LFGCF-RT, the performances are shown in the Table.LABEL:transrt_ml

| Model | Recall@20 | Precision@20 | Hit@20 | NDCG@20 | MRR@20 |

| LFGCF-RT | 0.2739 | 0.0311 | 0.3782 | 0.2109 | 0.2096 |

| LFGCF | 0.2767 | 0.0375 | 0.4176 | 0.2156 | 0.2199 |

| imp | 1.02% | 20.58% | 10.42% | 2.23% | 4.91% |

| Model | Recall@20 | Precision@20 | Hit@20 | NDCG@20 | MRR@20 |

| LFGCF-RT | 0.4637 | 0.1358 | 0.7708 | 0.3995 | 0.4565 |

| LFGCF | 0.5069 | 0.1468 | 0.8035 | 0.4442 | 0.5058 |

| imp | 9.32% | 8.10% | 4.24% | 11.19% | 10.80% |

| Model | Recall@20 | Precision@20 | Hit@20 | NDCG@20 | MRR@20 |

| LFGCF-RT | 0.3161 | 0.3512 | 0.9223 | 0.4068 | 0.5516 |

| LFGCF | 0.3254 | 0.3526 | 0.9305 | 0.4122 | 0.5558 |

| imp | 2.94% | 0.40% | 0.89% | 1.33% | 0.76% |

Table.LABEL:transrt_ml shows the recommendation performance of LFGCF and LFGCF-RT. LFGCF-RT underperforms LFGCF in all three datasets, indicating that the removal of TransRT hurts LFGCF. It compromises the performance to the same level as BPR-T and TGCN. The conclusion can be drawn that the light convolutional graph is effective in learning user and item representations, but the implicit feedbacks are not fully extracted. Implementing TransRT allows for leveraging the information pattern inside user tagging behavior to make the representations more expressive.

6 Conclusions and Future Work

In this work, we explore folksonomy records with collaborative information in FG for a tag-aware recommendation. We devised a new method LFGCF, which leverages a light yet effective model to capture higher-order information in FG, then proposed a regularization function to bridge users and items in a folksonomy records triplet as its core is the GCN-based model LFGCN. It only consists of two essential components - light aggregation and information updating. In light aggregation, we remove feature transformation and nonlinear activation, which are burdensome. We construct the final representations for users and items as a weighted sum of their embeddings on several layers in information updating. For adequate modeling folksonomy records, which keep the topology information, we proposed TransRT regularization function and performed jointly learning to users’ subjective preferences and items’ characteristics. We argued that the specific light design and regularization for TRS alleviate the sparsity, redundancy, and ambiguity issues in folksonomy records. Extensive hyperparameter experiments and ablation studies on three real-world datasets demonstrate the effectiveness and rationality of LFGCN.

This work explores the specific design of GNN in TRS. We believe the insights of LFGCF are inspirational for future developments in TRS. With the prevalence of user tagging behaviors in real applications, GNN-based models are becoming increasingly crucial in TRS; by explicitly exploiting the interactions among entities in the system, they are advantageous to context-based supervised learning scheme[43] that models the interactions implicitly. Besides folksonomy, much other structural information can be extracted from real-world recommendation systems, such as social networks and knowledge graphs. For example, by integrating entity relationships with FG, we can capture the explicit information rather than collaborative information. In further work, we would like to exploit further self-supervised learning for TRS, which is a promising research direction.

References

-

[1]

A. Hotho, R. Jäschke, C. Schmitz, G. Stumme,

Information Retrieval

in Folksonomies: Search and Ranking, in: D. Hutchison, T. Kanade,

J. Kittler, J. M. Kleinberg, F. Mattern, J. C. Mitchell, M. Naor,

O. Nierstrasz, C. Pandu Rangan, B. Steffen, M. Sudan, D. Terzopoulos,

D. Tygar, M. Y. Vardi, G. Weikum, Y. Sure, J. Domingue (Eds.), The Semantic

Web: Research and Applications, Vol. 4011, Springer Berlin Heidelberg,

Berlin, Heidelberg, 2006, pp. 411–426, series Title: Lecture Notes in

Computer Science.

doi:10.1007/11762256_31.

URL http://link.springer.com/10.1007/11762256_31 -

[2]

K. Bischoff, C. S. Firan, W. Nejdl, R. Paiu,

Can all tags

be used for search?, in: Proceeding of the 17th ACM conference on

Information and knowledge mining - CIKM ’08, ACM Press, Napa Valley,

California, USA, 2008, p. 193.

doi:10.1145/1458082.1458112.

URL http://portal.acm.org/citation.cfm?doid=1458082.1458112 -

[3]

S. Rendle, L. Balby Marinho, A. Nanopoulos, L. Schmidt-Thieme,

Learning

optimal ranking with tensor factorization for tag recommendation, in:

Proceedings of the 15th ACM SIGKDD international conference on

Knowledge discovery and data mining - KDD ’09, ACM Press, Paris, France,

2009, p. 727.

doi:10.1145/1557019.1557100.

URL http://portal.acm.org/citation.cfm?doid=1557019.1557100 -

[4]

B. Chen, W. Guo, R. Tang, X. Xin, Y. Ding, X. He, D. Wang,

Tgcn: Tag graph convolutional

network for tag-aware recommendation, in: Proceedings of the 29th ACM

International Conference on Information and Knowledge Management, CIKM ’20,

Association for Computing Machinery, New York, NY, USA, 2020, pp. 155–164.

doi:10.1145/3340531.3411927.

URL https://doi.org/10.1145/3340531.3411927 -

[5]

Y. Zuo, J. Zeng, M. Gong, L. Jiao,

Tag-aware

recommender systems based on deep neural networks, Neurocomputing 204 (2016)

51–60.

doi:10.1016/j.neucom.2015.10.134.

URL https://linkinghub.elsevier.com/retrieve/pii/S0925231216301151 -

[6]

H. Li, X. Diao, J. Cao, L. Zhang, Q. Feng,

Tag-aware

recommendation based on Bayesian personalized ranking and feature mapping,

Intelligent Data Analysis 23 (3) (2019) 641–659.

doi:10.3233/IDA-193982.

URL https://www.medra.org/servlet/aliasResolver?alias=iospress&doi=10.3233/IDA-193982 -

[7]

Z. Xu, C. Chen, T. Lukasiewicz, Y. Miao, X. Meng,

Tag-aware personalized

recommendation using a deep-semantic similarity model with negative

sampling, in: Proceedings of the 25th ACM International on Conference on

Information and Knowledge Management, CIKM ’16, Association for Computing

Machinery, New York, NY, USA, 2016, p. 1921–1924.

doi:10.1145/2983323.2983874.

URL https://doi.org/10.1145/2983323.2983874 - [8] Z. Xu, T. Lukasiewicz, C. Chen, Y. Miao, X. Meng, Tag-aware personalized recommendation using a hybrid deep model, in: Proceedings of the 26th International Joint Conference on Artificial Intelligence, IJCAI’17, AAAI Press, 2017, p. 3196–3202.

-

[9]

B. Chen, Y. Ding, X. Xin, Y. Li, Y. Wang, D. Wang,

AIRec:

Attentive intersection model for tag-aware recommendation 421 105–114.

doi:10.1016/j.neucom.2020.08.018.

URL https://www.sciencedirect.com/science/article/pii/S0925231220312789 - [10] R. Huang, C. Han, L. Cui, Tag-aware attentional graph neural networks for personalized tag recommendation, in: 2021 International Joint Conference on Neural Networks (IJCNN), 2021, pp. 1–8. doi:10.1109/IJCNN52387.2021.9533380.

-

[11]

A. Shepitsen, J. Gemmell, B. Mobasher, R. Burke,

Personalized recommendation in

social tagging systems using hierarchical clustering, in: Proceedings of the

2008 ACM Conference on Recommender Systems, RecSys ’08, Association for

Computing Machinery, New York, NY, USA, 2008, p. 259–266.

doi:10.1145/1454008.1454048.

URL https://doi.org/10.1145/1454008.1454048 -

[12]

X. Chen, Y. Yu, F. Jiang, L. Zhang, R. Gao, H. Gao,

Graph Neural

Networks Boosted Personalized Tag Recommendation Algorithm, in:

2020 International Joint Conference on Neural Networks (IJCNN),

IEEE, Glasgow, United Kingdom, 2020, pp. 1–8.

doi:10.1109/IJCNN48605.2020.9207610.

URL https://ieeexplore.ieee.org/document/9207610/ -

[13]

X. He, K. Deng, X. Wang, Y. Li, Y. Zhang, M. Wang,

Lightgcn: Simplifying and

powering graph convolution network for recommendation, in: Proceedings of

the 43rd International ACM SIGIR Conference on Research and Development in

Information Retrieval, Association for Computing Machinery, New York, NY,

USA, 2020, p. 639–648.

URL https://doi.org/10.1145/3397271.3401063 -

[14]

Y. Zhen, W.-J. Li, D.-Y. Yeung,

Tagicofi: Tag informed

collaborative filtering, in: Proceedings of the Third ACM Conference on

Recommender Systems, RecSys ’09, Association for Computing Machinery, New

York, NY, USA, 2009, p. 69–76.

doi:10.1145/1639714.1639727.

URL https://doi.org/10.1145/1639714.1639727 -

[15]

J. Peng, D. D. Zeng, H. Zhao, F.-y. Wang,

Collaborative filtering in

social tagging systems based on joint item-tag recommendations, in:

Proceedings of the 19th ACM International Conference on Information and

Knowledge Management, CIKM ’10, Association for Computing Machinery, New

York, NY, USA, 2010, p. 809–818.

doi:10.1145/1871437.1871541.

URL https://doi.org/10.1145/1871437.1871541 -

[16]

Z.-K. Zhang, T. Zhou, Y.-C. Zhang,

Personalized

recommendation via integrated diffusion on user–item–tag tripartite

graphs, Physica A: Statistical Mechanics and its Applications 389 (1) (2010)

179–186.

doi:https://doi.org/10.1016/j.physa.2009.08.036.

URL https://www.sciencedirect.com/science/article/pii/S0378437109006839 -

[17]

Z.-K. Zhang, C. Liu, Y.-C. Zhang, T. Zhou,

Solving the Cold-Start Problem in

Recommender Systems with Social Tags, Expert Systems with

Applications 39 (12) (2012) 10990–11000, arXiv: 1004.3732.

doi:10.1016/j.eswa.2012.03.025.

URL http://arxiv.org/abs/1004.3732 -

[18]

FolkRank++: An Optimization

of FolkRank Tag Recommendation Algorithm Integrating User and

Item Information, KSII Transactions on Internet and Information Systems

15 (1) (Jan. 2021).

doi:10.3837/tiis.2021.01.001.

URL http://itiis.org/digital-library/24227 -

[19]

S. Rendle, L. Balby Marinho, A. Nanopoulos, L. Schmidt-Thieme,

Learning optimal ranking with

tensor factorization for tag recommendation, in: Proceedings of the 15th ACM

SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD

’09, Association for Computing Machinery, New York, NY, USA, 2009, p.

727–736.

doi:10.1145/1557019.1557100.

URL https://doi.org/10.1145/1557019.1557100 -

[20]

R. v. d. Berg, T. N. Kipf, M. Welling,

Graph Convolutional Matrix

Completion, arXiv:1706.02263 [cs, stat]ArXiv: 1706.02263 (Oct. 2017).

URL http://arxiv.org/abs/1706.02263 -

[21]

R. Ying, R. He, K. Chen, P. Eksombatchai, W. L. Hamilton, J. Leskovec,

Graph Convolutional Neural

Networks for Web-Scale Recommender Systems, in: Proceedings of the

24th ACM SIGKDD International Conference on Knowledge Discovery & Data

Mining, Association for Computing Machinery, New York, NY, USA, 2018, pp.

974–983, arXiv: 1806.01973.

doi:10.1145/3219819.3219890.

URL https://doi.org/10.1145/3219819.3219890 -

[22]

X. Wang, X. He, M. Wang, F. Feng, T.-S. Chua,

Neural graph collaborative

filtering, in: Proceedings of the 42nd International ACM SIGIR Conference on

Research and Development in Information Retrieval, SIGIR’19, Association for

Computing Machinery, New York, NY, USA, 2019, p. 165–174.

doi:10.1145/3331184.3331267.

URL https://doi.org/10.1145/3331184.3331267 -

[23]

Z. Li, Z. Cui, S. Wu, X. Zhang, L. Wang,

Fi-gnn: Modeling feature

interactions via graph neural networks for ctr prediction, in: Proceedings

of the 28th ACM International Conference on Information and Knowledge

Management, CIKM ’19, Association for Computing Machinery, New York, NY, USA,

2019, p. 539–548.

doi:10.1145/3357384.3357951.

URL https://doi.org/10.1145/3357384.3357951 -

[24]

W. Guo, R. Su, R. Tan, H. Guo, Y. Zhang, Z. Liu, R. Tang, X. He,

Dual Graph enhanced

Embedding Neural Network for CTR Prediction, in: Proceedings of

the 27th ACM SIGKDD Conference on Knowledge Discovery & Data

Mining, ACM, Virtual Event Singapore, 2021, pp. 496–504.

doi:10.1145/3447548.3467384.

URL https://dl.acm.org/doi/10.1145/3447548.3467384 -

[25]

Y. Zheng, C. Gao, X. He, Y. Li, D. Jin,

Price-aware

Recommendation with Graph Convolutional Networks, in: 2020 IEEE

36th International Conference on Data Engineering (ICDE), IEEE,

Dallas, TX, USA, 2020, pp. 133–144.

doi:10.1109/ICDE48307.2020.00019.

URL https://ieeexplore.ieee.org/document/9101532/ -

[26]

Y. Su, R. Zhang, S. Erfani, Z. Xu,

Detecting beneficial feature

interactions for recommender systems (2020).

doi:10.48550/ARXIV.2008.00404.

URL https://arxiv.org/abs/2008.00404 -

[27]

W. Liu, Q. Liu, R. Tang, J. Chen, X. He, P. A. Heng,

Personalized

Re-ranking with Item Relationships for E-commerce, in: Proceedings

of the 29th ACM International Conference on Information &

Knowledge Management, ACM, Virtual Event Ireland, 2020, pp. 925–934.

doi:10.1145/3340531.3412332.

URL https://dl.acm.org/doi/10.1145/3340531.3412332 -

[28]

T. N. Kipf, M. Welling,

Semi-supervised

classification with graph convolutional networks, in: 5th International

Conference on Learning Representations, ICLR 2017, Toulon, France, April

24-26, 2017, Conference Track Proceedings, OpenReview.net, 2017.

URL https://openreview.net/forum?id=SJU4ayYgl -

[29]

P. Velickovic, G. Cucurull, A. Casanova, A. Romero, P. Liò, Y. Bengio,

Graph attention networks, CoRR

abs/1710.10903 (2017).

arXiv:1710.10903.

URL http://arxiv.org/abs/1710.10903 -

[30]

K. He, X. Zhang, S. Ren, J. Sun, Deep

Residual Learning for Image Recognition, arXiv:1512.03385 [cs]ArXiv:

1512.03385 version: 1 (Dec. 2015).

URL http://arxiv.org/abs/1512.03385 -

[31]

K. Xu, W. Hu, J. Leskovec, S. Jegelka,

How powerful are graph

neural networks?, in: International Conference on Learning Representations,

2019.

URL https://openreview.net/forum?id=ryGs6iA5Km -

[32]

W. Hamilton, Z. Ying, J. Leskovec,

Inductive

representation learning on large graphs, in: I. Guyon, U. V. Luxburg,

S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, R. Garnett (Eds.),

Advances in Neural Information Processing Systems, Vol. 30, Curran

Associates, Inc., 2017.

URL https://proceedings.neurips.cc/paper/2017/file/5dd9db5e033da9c6fb5ba83c7a7ebea9-Paper.pdf - [33] H. Zhu, F. Feng, X. He, X. Wang, Y. Li, K. Zheng, Y. Zhang, Bilinear graph neural network with neighbor interactions, in: Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence, IJCAI’20, 2021.

-

[34]

Q. Li, Z. Han, X.-M. Wu, Deeper

Insights into Graph Convolutional Networks for Semi-Supervised

Learning, number: arXiv:1801.07606 arXiv:1801.07606 [cs, stat] (Jan.

2018).

URL http://arxiv.org/abs/1801.07606 - [35] Y. Lin, Z. Liu, M. Sun, Y. Liu, X. Zhu, Learning Entity and Relation Embeddings for Knowledge Graph Completion 7.

-

[36]

D. P. Kingma, J. Ba, Adam: A method for

stochastic optimization (2014).

doi:10.48550/ARXIV.1412.6980.

URL https://arxiv.org/abs/1412.6980 -

[37]

M. F. Dacrema, P. Cremonesi, D. Jannach,

Are we really making much

progress? a worrying analysis of recent neural recommendation approaches,

in: Proceedings of the 13th ACM Conference on Recommender Systems, RecSys

’19, Association for Computing Machinery, New York, NY, USA, 2019, p.

101–109.

doi:10.1145/3298689.3347058.

URL https://doi.org/10.1145/3298689.3347058 -

[38]

W. X. Zhao, S. Mu, Y. Hou, Z. Lin, Y. Chen, X. Pan, K. Li, Y. Lu, H. Wang,

C. Tian, Y. Min, Z. Feng, X. Fan, X. Chen, P. Wang, W. Ji, Y. Li, X. Wang,

J.-R. Wen, RecBole: Towards a

Unified, Comprehensive and Efficient Framework for Recommendation

Algorithms, arXiv:2011.01731 [cs]ArXiv: 2011.01731 (Aug. 2021).

URL http://arxiv.org/abs/2011.01731 -

[39]

I. Cantador, P. Brusilovsky, T. Kuflik,

Second workshop on information

heterogeneity and fusion in recommender systems (hetrec2011), in:

Proceedings of the Fifth ACM Conference on Recommender Systems, RecSys ’11,

Association for Computing Machinery, New York, NY, USA, 2011, p. 387–388.

doi:10.1145/2043932.2044016.

URL https://doi.org/10.1145/2043932.2044016 -

[40]

K. Järvelin, J. Kekäläinen,

Cumulated gain-based

evaluation of IR techniques, ACM Transactions on Information Systems

20 (4) (2002) 422–446.

doi:10.1145/582415.582418.

URL https://dl.acm.org/doi/10.1145/582415.582418 -

[41]

J. Yu, H. Yin, X. Xia, T. Chen, L. Cui, Q. V. H. Nguyen,

Are graph augmentations necessary?

simple graph contrastive learning for recommendationarXiv:2112.08679.

URL http://arxiv.org/abs/2112.08679 - [42] L. Van der Maaten, G. Hinton, Visualizing data using t-sne., Journal of machine learning research 9 (11) (2008).

-

[43]

X. He, T.-S. Chua, Neural

Factorization Machines for Sparse Predictive Analytics,

arXiv:1708.05027 [cs]ArXiv: 1708.05027 (Aug. 2017).

URL http://arxiv.org/abs/1708.05027