capbtabboxtable[][\FBwidth]

Lifting Weak Supervision To Structured Prediction

Abstract

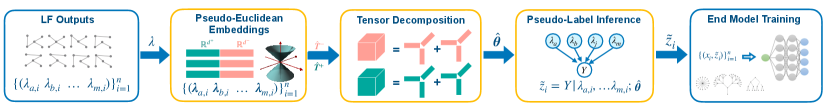

Weak supervision (WS) is a rich set of techniques that produce pseudolabels by aggregating easily obtained but potentially noisy label estimates from a variety of sources. WS is theoretically well understood for binary classification, where simple approaches enable consistent estimation of pseudolabel noise rates. Using this result, it has been shown that downstream models trained on the pseudolabels have generalization guarantees nearly identical to those trained on clean labels. While this is exciting, users often wish to use WS for structured prediction, where the output space consists of more than a binary or multi-class label set: e.g. rankings, graphs, manifolds, and more. Do the favorable theoretical properties of WS for binary classification lift to this setting? We answer this question in the affirmative for a wide range of scenarios. For labels taking values in a finite metric space, we introduce techniques new to weak supervision based on pseudo-Euclidean embeddings and tensor decompositions, providing a nearly-consistent noise rate estimator. For labels in constant-curvature Riemannian manifolds, we introduce new invariants that also yield consistent noise rate estimation. In both cases, when using the resulting pseudolabels in concert with a flexible downstream model, we obtain generalization guarantees nearly identical to those for models trained on clean data. Several of our results, which can be viewed as robustness guarantees in structured prediction with noisy labels, may be of independent interest. Empirical evaluation validates our claims and shows the merits of the proposed method111https://github.com/SprocketLab/WS-Structured-Prediction.

1 Introduction

Weak supervision (WS) is an array of methods used to construct pseudolabels for training supervised models in label-constrained settings. The standard workflow [26, 22, 10] is to assemble a set of cheaply-acquired labeling functions—simple heuristics, small programs, pretrained models, knowledge base lookups—that produce multiple noisy estimates of what the true label is for each unlabeled point in a training set. These noisy outputs are modeled and aggregated into a single higher-quality pseudolabel. Any conventional supervised end model can be trained on these pseudolabels. This pattern has been used to deliver excellent performance in a range of domains in both research and industry settings [9, 25, 27], bypassing the need to invest in large-scale manual labeling. Importantly, these successes are usually found in binary or small-cardinality classification settings.

While exciting, users often wish to use weak supervision in structured prediction (SP) settings, where the output space consists of more than a binary or multiclass label set [5, 14]. In such cases, there exists meaningful algebraic or geometric structure to exploit. Structured prediction includes, for example, learning rankings used for recommendation systems [13], regression in metric spaces [20], learning on manifolds [23], graph-based learning [12], and more.

An important advantage of WS in the standard setting of binary classification is that it sometimes yields models with nearly the same generalization guarantees as their fully-supervised counterparts. Indeed, the penalty for using pseudolabels instead of clean labels is only a multiplicative constant. This is a highly favorable tradeoff since acquiring more unlabeled data is easy. This property leads us to ask the key question for this work: does weak supervision for structured prediction preserve generalization guarantees? We answer this question in the affirmative, justifying the application of WS to settings far from its current use.

Generalization results in WS rely on two steps [24, 10]: (i) showing that the estimator used to learn the model of the labeling functions is consistent, thus recovering the noise rates for these noisy voters, and (ii) using a noise-aware loss to de-bias end-model training [18]. Lifting these two results to structured prediction is challenging. The only available weak supervision technique suitable for SP is that of [28]. It suffers from several limitations. First, it relies on the availability of isometric embeddings of metric spaces into —but does not explain how to find these. Second, it does not tackle downstream generalization at all. We resolve these two challenges.

We introduce results for a wide variety of structured prediction problems, requiring only that the labels live in some metric space. We consider both finite and continuous (manifold-valued) settings. For finite spaces, we apply two tools that are new to weak supervision. The approach we propose combines isometric pseudo-Euclidean embeddings with tensor decompositions—resulting in a nearly-consistent noise rate estimator. In the continuous case, we introduce a label model suitable for the so-called model spaces—Riemannian manifolds of constant curvature—along with extensions to even more general spaces. In both cases, we show generalization results when using the resulting pseudolabels in concert with a flexible end model from [7, 23].

Contributions:

-

•

New techniques for performing weak supervision in finite metric spaces based on isometric pseudo-Euclidean embeddings and tensor decomposition algorithms,

-

•

Generalizations to manifold-valued regression in constant-curvature manifolds,

-

•

Finite-sample error bounds for noise rate estimation in each scenario,

-

•

Generalization error guarantees for training downstream models on pseudolabels,

-

•

Experiments confirming the theoretical results and showing improvements over [28].

2 Background and Problem Setup

Our goal is to theoretically characterize how well learning with pseudolabels (built with weak supervision techniques) performs in structured prediction. We seek to understand the interplay between the noise in WS sources and the generalization performance of the downstream structured prediction model. We provide brief background and introduce our problem and some useful notation.

2.1 Structured Prediction

Structured prediction (SP) involves predicting labels in spaces with rich structure. Denote the label space by . Conventionally is a set, e.g., for binary classification. In the SP setting, has some additional algebraic or geometric structure. In this work we assume that is a metric space with metric (distance) . This covers many types of problems, including

-

•

Rankings, where , the symmetric group on , i.e., labels are permutations,

-

•

Graphs, where , the space of graphs with vertex set ,

-

•

Riemannian manifolds, including , the sphere, or , the hyperboloid.

Learning and Generalization in Structured Prediction

In conventional supervised learning we have a dataset of i.i.d. samples drawn from distribution over . As usual, we seek to learn a model that generalizes well to points not seen during training. Let be a family of functions from to . Define the risk for and as

| (1) |

For a large class of settings (including all of those we consider in this paper), [7, 23] have shown that the estimator is consistent:

| (2) |

where . Here, is the kernel matrix for a p.d. kernel , so that , , and is a regularization parameter. The procedure here is to first compute the weights and then to perform the optimization in (2) to make a prediction.

2.2 Weak Supervision

In WS, we cannot access any of the ground-truth labels . Instead we observe for each the noisy votes . These are weak supervision outputs provided by labeling functions (LFs) , where and . A two step process is used to construct pseudolabels. First, we learn a noise model (also called a label model) that determines how reliable each source is. That is, we must learn for —without having access to any samples of . Second, the noise model is used to infer a distribution (or its mode) for each point: .

We adopt the noise model from [28], which is suitable for our SP setting:

| (3) |

is the normalizing partition function, are canonical parameters, and is a set of correlations. The model can be described in terms of the mean parameters . Intuitively, if is large, the typical distance from to is small and the LF is reliable; if is small, the LF is unreliable. This model is appropriate for several reasons. It is an exponential family model with useful theoretical properties. It subsumes popular special cases of noise, including, for regression, zero-mean multivariate Gaussian noise; for permutations, a generalization of the popular Mallows model; for the binary case, it produces a close relative of the Ising model.

Our goal is to form estimates in order to construct pseudolabels. One way to build such pseudolabels is to compute . Observe how the estimated parameters are used to weight the labeling functions, ensuring that more reliable votes receive a larger weight.

We are now in a position to state the main research question for this work:

3 Noise Rate Recovery in Finite Metric Spaces

In the next two sections we handle finite metric spaces. Afterwards we tackle continuous (manifold-valued) spaces. We first discuss learning the noise parameters , then the use of pseudolabels.

Roadmap

For finite metric spaces with , we apply two tools new to weak supervision. First, we embed into a pseudo-Euclidean space [11]. These spaces generalize Euclidean space, enabling isometric (distance-preserving) embeddings for any metric. Using pseudo-Euclidean spaces make our analysis slightly more complex, but we gain the isometry property, which is critical.

Second, we form three-way tensors from embeddings of observed labeling functions. Applying tensor product decomposition algorithms [1], we can recover estimates of the mean parameters and ultimately . Finally, we reweight the model (2) to preserve generalization.

The intuition behind this approach is the following. First, we need a technique that can provide consistent or nearly-consistent estimates of the parameters in the noise model. Second, we need to handle any finite metric space. Techniques like the one introduced in [10] handle the first—but do not work for generic finite metric spaces, only binary labels and certain sequences. Techniques like the one in [28] handle any metric space—but only have consistency guarantees in highly restrictive settings (e.g., it requires an isometric embedding, that the distribution over the resulting embeddings is isomorphic to certain distributions, the true label only takes on two values). Pseudo-Euclidean embeddings used with tensor decomposition algorithms meet both requirements

3.1 Pseudo-Euclidean Embeddings

Our first task is to embed the metric space into a continuous space—enabling easier computation and potential dimensionality reduction. A standard approach is multi-dimensional scaling (MDS) [15], which embeds into . A downside of MDS is that not all metric spaces embed (isometrically) into Euclidean space, as the square distance matrix must be positive semi-definite.

A simple and elegant way to overcome this difficulty is to instead use pseudo-Euclidean spaces for embeddings. These pseudo-spaces do not require a p.s.d. inner product. As an outcome, any finite metric space can be embedded into a pseudo-Euclidean space with no distortion [11]—so that distances are exactly preserved. Such spaces have been applied to similarity-based learning methods [21, 17, 19]. A vector in a pseudo-Euclidean space has two parts: and . The dot product and the squared distance between any two vectors are and . These properties enable isometric embeddings: the distance can be decomposed into two components that are individually induced from p.s.d. inner products—and can thus be embedded via MDS. Indeed, pseudo-Euclidean embeddings effectively run MDS for each component (see Algorithm 1 steps 4-9). To recover the original distance, we obtain and and subtract.

Example: To see why such embeddings are advantageous, we compare with a one-hot vector representation (whose dimension is ). Consider a tree with a root node and three branches, each of which is a path with nodes. Let be the nodes in the tree with the shortest-hops distance as the metric. The pseudo-Euclidean embedding dimension is just ; see Appendix for more details. The one-hot embedding dimension is —arbitrarily larger!

Now we are ready to apply these embeddings to our problem. Abusing notation, we write and for the pseudo-Euclidean embeddings of , respectively. We have that , so that there is no loss of information from working with these spaces. In addition, we write the mean as and the covariance as . Our goal is to obtain an accurate estimate , which we will use to estimate the mean parameters . If we could observe , it would be easy to empirically estimate —but we do not have access to it. Our approach will be to apply tensor decomposition for multi-view mixtures [2].

3.2 Multi-View Mixtures and Tensor Decompositions

In a multi-view mixture model, multiple views of a latent variable are observed. These views are independent when conditioned on . We treat the positive and negative components and of our pseudo-Euclidean embedding as separate multi-view mixtures:

| (4) |

where , and are mean zero random vectors with covariances respectively. Here is a proxy variance whose use is described in Assumption 3.

We cannot directly estimate these parameters from observations of , due to the fact that is not observed. However, we can observe various moments of the outputs of the LFs such as tensors of outer products of LF triplets. We require that for each such a triplet exists. Then,

| (5) |

Here are the mixture probabilities (prior probabilities of ) and . We similarly define and . We then obtain estimates using an algorithm from [1] with minor modifications to handle pseudo-Euclidean rather than Euclidean space. The overall approach is shown in Algorithm 1. We have three key assumptions for our analysis,

Assumption 1.

The support of , i.e., and the label space is such that , for .

Assumption 2.

(Bounded angle between and ) Let denote the angle between any two vectors in a Euclidean space. We assume that , , and , for some sufficiently small such that , where is defined for some samples in (6).

These enable providing guarantees on recovering the mean vector magnitudes (1) and signs (2) and simplify the analysis (1), (3); all three can be relaxed at the expense of a more complex analysis.

Our first theoretical result shows that we have near-consistency in estimating the mean parameters in (3). We use standard notation ignoring logarithmic factors.

Theorem 1.

Let be the estimates of returned by Algorithm 1 with input constructed using isometric pseudo-Euclidean embeddings (in ). Suppose Assumptions 1 and 2 are met, a sufficiently large number of samples are drawn from the model in (3), and . Then there exists a constant such that with high probability and ,

where

| (6) |

We interpret Theorem 6. It is a nearly direct application of [2]. There are two noise cases for . In the high-noise case, is independent of dimension (and thus ). Intuitively, this means the average distance balls around each LF begin to overlap as the number of points grows—explaining the multiplicative term. If the noise scales down as we add more embedded points, this problem is removed, as in the low-noise case. In both cases, the second error term comes from using the algorithm of [1] and is independent of the sampling error. Since , this term goes down with . The first error term is due to sampling noise and goes to zero in the number of samples . Note the tradeoffs of using the embeddings. If we used one-hot encoding, , and in the high-noise case, we would pay a very heavy cost for . However, while sampling error is minimized when using a very small , we pay a cost in the second error term. This leads to a tradeoff in selecting the appropriate embedding dimension.

4 Generalization Error for Structured Prediction in Finite Metric Spaces

We have access to labeling function outputs for points and noise rate estimates . How can we use these to infer unobserved labels in (2)? Our approach is based on [18, 31],where the underlying loss function is modified to deal with noise. Analogously, we modify (2) in such a way that the generalization guarantee is nearly preserved.

4.1 Prediction with Pseudolabels

First, we construct the posterior distribution . We use our estimated noise model and the prior . We create pseudo-labels for each data point by drawing a random sample from the posterior distribution conditioned on the output of labeling functions: We thus observe where is sampled as above. To overcome the effect of noise we create a perturbed version of the distance function using the noise rates, generalizing [18]. This requires us to characterize the noise distribution induced by our inference procedure. In particular we seek the probability that when the true label is . This can be expressed as follows. Let denote the -fold Cartesian product of and let denote its entry. We write

| (7) |

We define using . is the noise distribution induced by the true parameters and is an approximation obtained from inference with the estimated parameters . With this terminology, we can define the perturbed version of the distance function and a corresponding replacement of (2):

| (8) |

| (9) |

We similarly define using the true noise distribution . The perturbed distance is an unbiased estimator of the true distance. However we do not know the true noise distribution hence we cannot use it for prediction. Instead we use . Note that is no longer an unbiased estimator—its bias can be expressed as function of the parameter recovery error bound in Theorem 6.

4.2 Bounding the Generalization Error

What can we say about the excess risk ? Note that compared to the prediction based on clean labels, there are two additional sources of error. One is the noise in the labels (i.e., even if we know the true , the quality of the pseudolabels is imperfect). The other is our estimation procedure for the noise distribution. We must address both sources of error.

Our analysis uses the following assumptions on the minimum and maximum singular values , and the condition number of true noise matrix and the function . Additional detail is provided in the Appendix.

Assumption 4.

(Noise model is not arbitrary) The true parameters are such that , and the condition number is sufficiently small.

Assumption 5.

(Normalized features) , for all .

Assumption 6.

(Proxy strong convexity) The function in (2) satisfies the following property with some . As we move away from the minimizer of , the function increases and the rate of increase is proportional to the distance between the points:

| (10) |

With these assumptions, we provide a generalization result for prediction with pseudolabels,

Theorem 2.

Implications and Tradeoffs:

We interpret each term in the bound. The first term is present even with access to the clean labels and hence unavoidable. The second term is the additional error we incur if we learn with the knowledge of the true noise distribution. The third term is due to the use of the estimated noise model. It is dominated by the noise rate recovery result in Theorem 6. If the third term goes to 0 (perfect recovery) then we obtain the rate , the same as in the case of access to clean labels. The third term is introduced by our noise rate recovery algorithm and has two terms: one dominated by and the other on (see discussion of Theorem 6). Thus we only pay an extra additive factor in the excess risk when using pseudolabels.

5 Manifold-Valued Label Spaces: Noise Recovery and Generalization

We introduce a simple recovery method for weak supervision in constant-curvature Riemannian manifolds. First we briefly introduce some background notation on these spaces, then provide our estimator and consistency result, then the downstream generalization result. Finally, we discuss extensions to symmetric Riemannian manifolds, an even more general class of spaces.

Background on Riemannian manifolds

The following is necessarily a very abridged background; more detail can be found in [16, 30]. A smooth manifold is a space where each point is located in a neighborhood diffeomorphic to . Attached to each point is a tangent space ; each such tangent space is a -dimensional vector space enabling the use of calculus.

A Riemannian manifold equips a smooth manifold with a Riemannian metric: a smoothly-varying inner product at each point . This tool allows us to compute angles, lengths, and ultimately, distances between points on the manifold as shortest-path distances. These shortest paths are called geodesics and can be parameterized as curves , where , or by tangent vectors . The exponential map operation takes tangent vectors to manifold points. It enables switching between these tangent vectors: implies that .

Invariant

Our first contribution is a simple invariant that enables us to recover the error parameters. Note that we cannot rely on the finite metric-space technique, since the manifolds we consider have an infinite number of points. Nor do we need an embedding—we have a continuous representation as-is. Instead, we propose a simple idea based on the law of cosines. Essentially, on average, the geodesic triangle formed by the latent variable and two observed LFs , is a right triangle. This means it can be characterized by the (Riemannian) version of the Pythagorean theorem:

Lemma 1.

For , a hyperbolic manifold, for some distribution on and labeling functions drawn from (3), , while for a spherical manifold,

These invariants enable us to easily learn by forming a triplet system. Suppose we construct the equation in Lemma 1 for three pairs of labeling functions. The resulting system can be solved to express in terms of . Specifically,

Note that we can estimate via the empirical versions of terms on the right , as these are based on observable quantities. This is a generalization of the binary case in [10] and the Gaussian (Euclidean) case in [28] to hyperbolic manifolds. A similar estimator can be obtained for spherical manifolds by replacing with .

Using this tool, we can obtain a consistent estimator for for each of . Let satisfy the following inequality ; that is, reflects the preservation of concentration when moving from distribution to . Then,

Theorem 3.

Let be a hyperbolic manifold. Fix and let . Then, there exists a constant so that with probability at least ,

As we hoped, our estimator is consistent. Note that we pay a price for a tighter bound: is large for smaller probability . It is possible to estimate the size of (more generally, it is a function of the curvature). We provide more details in the Appendix.

Next, we adapt the downstream model predictor (2) in the following way. Let . Let be such that and minimizes . Then, we set

We simply replace each of the true labels with a combination of the labeling functions. With this, we can state our final result. First, we introduce our assumptions.

Let , where the expectation is taken over the population level distribution and denotes the kernel at .

Assumption 7.

(Bounded Hugging Function c.f. [29]) Let be defined as above. For all , the hugging function at is given by . We assume that is lower bounded by .

Assumption 8.

(Kernel Symmetry) We assume that for all and all , .

The first condition provides control on how geodesic triangles behave; it relates to the curvature. We provide more details on this in the Appendix. The second assumption restricts us to kernels symmetric about the minimizers of the objective . Finally, suppose we draw and independently from . Set .

Theorem 4.

Let be a complete manifold and suppose the assumptions above hold. Then, there exist constants ,

Note that as both and grow, as long as our worst-quality LF has bounded variance, our estimator of the true predictor is consistent. Moreover, we also have favorable dependence on the noise rate. This is because the only error we incur is in computing sub-optimal coefficients. We comment on this suboptimality in the Appendix.

A simple corollary of Theorem 4 provides the generalization guarantees we sought,

Corollary 1.

Let be a complete manifold and suppose the assumptions above hold. Then, with high probability,

Extensions to Other Manifolds

First, we note that all of our approaches almost immediately lift to products of constant-curvature spaces. For example, we have that has metric , where are the projections of onto the th component.

6 Experiments

Finally, we validate our theoretical claims with experimental results demonstrating improved parameter recovery and end model generalization using our techniques over that of prior work [28]. We illustrate both the finite metric space and continuous space cases by targeting rankings (i.e., permutations) and hyperbolic spaces. In the case of rankings we show that our pseudo-Euclidean embeddings with tensor decomposition estimator yields stronger parameter recovery and downstream generalization than [28]. In the case of hyperbolic regression (an example of a Riemannian manifold), we show that our estimator yields improved parameter recovery over [28].

Finite metric spaces: Learning to rank

To experimentally evaluate our tensor decomposition estimator for finite metric spaces, we consider the problem of learning to rank. We construct a synthetic dataset whose ground truth comprises samples of two distinct rankings among the finite metric space of all length-four permutations. We construct three labeling functions by sampling rankings according to a Mallows model, for which we obtain pseudo-Euclidean embeddings to use with our tensor decomposition estimator.

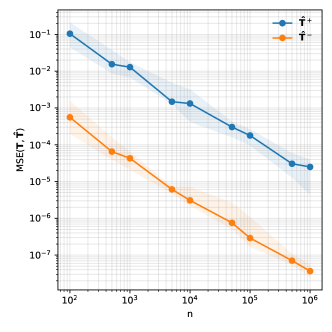

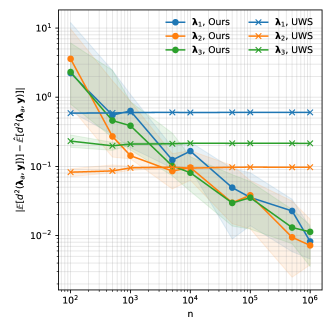

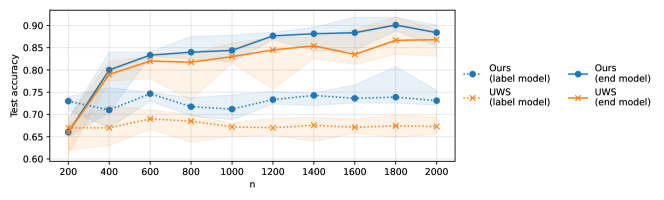

In Figure 2 (top left), we show that as we increase the number of samples, we can obtain an increasingly accurate estimate of —exhibiting the nearly-consistent behavior predicted by our theoretical claims. This leads to downstream improvements in parameter estimates, which also become more accurate as increases. In contrast, we find that the estimates of the same parameters given by [28] do not improve substantially as increases, and are ultimately worse (see Figure 2, top right). Finally, this leads to improvements in the label model accuracy as compared to that of [28], and translates to improved accuracy of an end model trained using synthetic samples (see Figure 2, bottom).

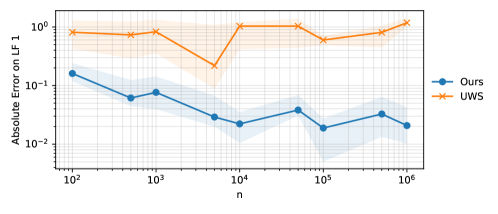

Riemannian manifolds: Hyperbolic regression

We similarly evaluate our estimator using synthetic labels from a hyperbolic manifold, matching the setting of Section 5. As shown in Figure 3, we find that our estimator consistently outperforms that of [28], often by an order of magnitude.

7 Conclusion

We studied the theoretical properties of weak supervision applied to structured prediction in two general scenarios: label spaces that are finite metric spaces or constant-curvature manifolds. We introduced ways to estimate the noise rates of labeling functions, achieving consistency or near-consistency. Using these tools, we established that with suitable modifications downstream structured prediction models maintain generalization guarantees. Future directions include extending these results to even more general manifolds and removing some of the assumptions needed in our analysis.

Acknowledgments

We are grateful for the support of the NSF (CCF2106707), the American Family Funding Initiative and the Wisconsin Alumni Research Foundation (WARF).

References

- AGH+ [14] Animashree Anandkumar, Rong Ge, Daniel Hsu, Sham M Kakade, and Matus Telgarsky. Tensor decompositions for learning latent variable models. Journal of Machine Learning Research, 15:2773–2832, 2014.

- AGJ [14] Animashree Anandkumar, Rong Ge, and Majid Janzamin. Sample complexity analysis for learning overcomplete latent variable models through tensor methods. arXiv preprint arXiv:1408.0553, 2014.

- AH [91] Helmer Aslaksen and Hsueh-Ling Huynh. Laws of trigonometry in symmetric spaces. Geometry from the Pacific Rim, 1991.

- BDDH [14] Richard Baraniuk, Mark A Davenport, Marco F Duarte, and Chinmay Hegde. An introduction to compressive sensing. 2014.

- BHS+ [07] Gükhan H. Bakir, Thomas Hofmann, Bernhard Schölkopf, Alexander J. Smola, Ben Taskar, and S. V. N. Vishwanathan. Predicting Structured Data (Neural Information Processing). The MIT Press, 2007.

- BS [16] R.B. Bapat and Sivaramakrishnan Sivasubramanian. Squared distance matrix of a tree: Inverse and inertia. Linear Algebra and its Applications, 491:328–342, 2016.

- CRR [16] Carlo Ciliberto, Lorenzo Rosasco, and Alessandro Rudi. A consistent regularization approach for structured prediction. In Advances in Neural Information Processing Systems 30 (NIPS 2016), volume 30, 2016.

- Dem [92] James Demmel. The component-wise distance to the nearest singular matrix. SIAM Journal on Matrix Analysis and Applications, 13(1):10–19, 1992.

- DRS+ [20] Jared A. Dunnmon, Alexander J. Ratner, Khaled Saab, Nishith Khandwala, Matthew Markert, Hersh Sagreiya, Roger Goldman, Christopher Lee-Messer, Matthew P. Lungren, Daniel L. Rubin, and Christopher Ré. Cross-modal data programming enables rapid medical machine learning. Patterns, 1(2), 2020.

- FCS+ [20] Daniel Y. Fu, Mayee F. Chen, Frederic Sala, Sarah M. Hooper, Kayvon Fatahalian, and Christopher Ré. Fast and three-rious: Speeding up weak supervision with triplet methods. In Proceedings of the 37th International Conference on Machine Learning (ICML 2020), 2020.

- Gol [85] Lev Goldfarb. A new approach to pattern recognition. Progress in Pattern Recognition 2, pages 241–402, 1985.

- GS [19] Colin Graber and Alexander Schwing. Graph structured prediction energy networks. In Advances in Neural Information Processing Systems 33 (NeurIPS 2019), volume 33, 2019.

- KAG [18] Anna Korba and Florence d’Alché-Buc Alexandre Garcia. A structured prediction approach for label ranking. In Advances in Neural Information Processing Systems 32 (NeurIPS 2018), volume 32, 2018.

- KL [15] Volodymyr Kuleshov and Percy S Liang. Calibrated structured prediction. In Advances in Neural Information Processing Systems 28 (NIPS 2015), 2015.

- KW [78] J.B. Kruskal and M. Wish. Multidimensional Scaling. Sage Publications, 1978.

- Lee [00] John M. Lee. Introduction to Smooth Manifolds. Springer, 2000.

- LRBM [06] Julian Laub, Volker Roth, Joachim M Buhmann, and Klaus-Robert Müller. On the information and representation of non-euclidean pairwise data. Pattern Recognition, 39(10):1815–1826, 2006.

- NDRT [13] Nagarajan Natarajan, Inderjit S. Dhillon, Pradeep Ravikumar, and Ambuj Tewari. Learning with noisy labels. In Proceedings of the 26th International Conference on Neural Information Processing Systems - Volume 1, NIPS’13, page 1196–1204, 2013.

- PHD+ [06] Elżbieta Pękalska, Artsiom Harol, Robert P. W. Duin, Barbara Spillmann, and Horst Bunke. Non-euclidean or non-metric measures can be informative. In Dit-Yan Yeung, James T. Kwok, Ana Fred, Fabio Roli, and Dick de Ridder, editors, Structural, Syntactic, and Statistical Pattern Recognition, pages 871–880, 2006.

- PM [19] Alexander Petersen and Hans-Georg Müller. Fréchet regression for random objects with euclidean predictors. Annals of Statistics, 47(2):691–719, 2019.

- PPD [01] Elżbieta Pękalska, Pavel Paclik, and Robert P.W. Duin. A generalized kernel approach to dissimilarity-based classification. Journal of Machine Learning Research, 2:175–211, 2001.

- RBE+ [18] Alexander Ratner, Stephen H. Bach, Henry Ehrenberg, Jason Fries, Sen Wu, and Christopher Ré. Snorkel: Rapid training data creation with weak supervision. In Proceedings of the 44th International Conference on Very Large Data Bases (VLDB), Rio de Janeiro, Brazil, 2018.

- RCMR [18] Alessandro Rudi, Carlo Ciliberto, GianMaria Marconi, and Lorenzo Rosasco. Manifold structured prediction. In Advances in Neural Information Processing Systems 32 (NeurIPS 2018), volume 32, 2018.

- RHD+ [19] A. J. Ratner, B. Hancock, J. Dunnmon, F. Sala, S. Pandey, and C. Ré. Training complex models with multi-task weak supervision. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, Hawaii, 2019.

- RNGS [20] Christopher Ré, Feng Niu, Pallavi Gudipati, and Charles Srisuwananukorn. Overton: A data system for monitoring and improving machine-learned products. In Proceedings of the 10th Annual Conference on Innovative Data Systems Research, 2020.

- RSW+ [16] A. J. Ratner, Christopher M. De Sa, Sen Wu, Daniel Selsam, and C. Ré. Data programming: Creating large training sets, quickly. In Proceedings of the 29th Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain, 2016.

- SLB [20] Esteban Safranchik, Shiying Luo, and Stephen Bach. Weakly supervised sequence tagging from noisy rules. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), pages 5570–5578, Apr. 2020.

- SLV+ [22] Changho Shin, Winfred Li, Harit Vishwakarma, Nicholas Carl Roberts, and Frederic Sala. Universalizing weak supervision. In International Conference on Learning Representations, 2022.

- Str [20] Austin J. Stromme. Wasserstein Barycenters: Statistics and Optimization. MIT, 2020.

- Tu [11] Loring W. Tu. An Introduction to Manifolds. Springer, 2011.

- vRW [18] Brendan van Rooyen and Robert C. Williamson. A theory of learning with corrupted labels. Journal of Machine Learning Research, 18(228):1–50, 2018.

- ZS [16] Hongyi Zhang and Suvrit Sra. First-order methods for geodesically convex optimization. In Conference on Learning Theory, COLT 2016, 2016.

Appendix

The Appendix is organized as follows. First, we provide a glossary that summarizes the notation we use throughout the paper. Afterwards, we provide the proofs for the finite-valued metric space cases. We continue with the proofs and additional discussion for the manifold-valued label spaces. Finally, we give some additional explanations for pseudo-Euclidean spaces.

Appendix A Glossary

The glossary is given in Table 1 below.

| Symbol | Definition |

|---|---|

| feature space | |

| label metric space | |

| support of prior distribution on true labels | |

| label metric (distance) function | |

| unlabeled datapoints from | |

| latent (unobserved) labels from | |

| labeling functions / sources | |

| output of labeling functions (LFs) | |

| pseudo-Euclidean embeddings of LFs outputs | |

| output of th LF on th data point | |

| pseudo-Euclidean embedding of output of th LF on th data point | |

| number of data points | |

| number of LFs | |

| size of the support of prior on i.e. | |

| size of for the finite case | |

| true and estimated canonical parameters of model in (3) | |

| true and estimated canonical parameters arranged as vectors | |

| mean parameters in (3) | |

| pseudo-Euclidean embedding mapping | |

| true noise model with true parameters | |

| estimated noise model with parameters , | |

| a random element in the -fold Cartesian product of | |

| th element in | |

| means of distributions in (4) corresponding to | |

| error in recovering the mean parameters (6) | |

| proxy noise variance in (4) | |

| the score function in (2) with true labels | |

| the score function in (9) with noisy labels from distributions and | |

| minimizer of defined in (2) | |

| minimizers of as defined in (2) | |

| maximum singular value of | |

| minimum singular value of | |

| the condition number of matrix | |

| angle between vectors |

Appendix B Proofs for Parameter Estimation Error in Discrete Spaces

We introduce results leading to the proofs of the theorems for the finite-valued metric space case.

Lemma 2.

([2]) Let be the third order observed moments for mutually independent labeling functions triplet, as defined in (5) using a sufficiently large number of i.i.d observations drawn from models in equation (4). Suppose there are sufficiently many such triplets to cover all labeling functions. Let be the estimated parameters returned by the algorithm 1 for all . Let be defined as above in equation (6), then the following holds with high probability for all labeling functions,

| (12) |

Proof.

The result follows by first showing that our setting and assumptions imply that the conditions of Theorems 1 and 5 in [2] are satisfied, which allows us to adopt their results. We then translate the result in order to state it in terms of the distance.

The tensor concentration result in Theorem 1 in [2] relies heavily on the noise matrices satisfying the Restricted Isometry Property (RIP) property. The authors make an explicit assumption that the noise model satisfies this condition. In our setting, we have a specific form of the noise model that allows us to show that this assumption is satisfied. The RIP condition is satisfied for sub-Gaussian noise matrices [4]. Our noise matrices are supported on a discrete space and have bounded entries, and so are sub-Gaussian.

The other required conditions on the norms of factor matrices and the number of latent factors are implied by Assumption 1. Thus, we can adopt the results on recovery of parameters and the prior weights from [2]. The result gives us for all ,

and

where is defined as follows. For any ,

Next, we translate the result to the Euclidean distance. For with , it is easy to see that

This notion of distance is oblivious to sign recovery. However, when sign recovery is possible then the Euclidean distance can be bounded as follows,

Next we make use of Assumption 2 to recover the signs of . The assumption bounds the angle between true and between for some sufficiently small such that , where is defined for some samples in equation (6). We measure and and claim that whichever makes an acute angle with has the correct sign.

We have that Let be the correct sign, then,

With the correct sign and so is . Thus with incorrect sign .

Hence, after disambiguating the signs we have,

and similarly for . Next with sufficiently large such that , the result holds for squared distances. ∎

See 1

Proof.

We prove this by using the bounds on errors in the estimates of and from Lemma 12. We proceed by bounding the errors in two parts for and separately and then combine them to get the bound on overall parameter estimation error.

We first bound the error for . The true mean parameter (i.e., the true expected squared distance) can be expanded as follows:

The estimate is computed empirically. The first term is estimated observed LF outputs, i.e. . The second term is computed by using the estimated prior on the labels and for the last term we plug in the estimate of computed using the tensor-decomposition algorithm. Putting them all together we have the following estimator:

We want to bound the error of our estimator i.e. the difference . For this first consider the following,

Here we used and and . Similarly,

| (13) |

Hence the parameter estimator error,

In the second step, we bound the first term by via standard concentration inequalities.

Doing the same calculations for , we obtain

The overall error in mean parameters is then

Next, we use a known relation between the mean and the canonical parameters of the exponential model to get the result in terms of the canonical parameters:

where is the log partition function of the label model in (3) and over the parameter space . For more details see Lemma 8 from [10] and Theorem 4.3 in [28]. Letting concludes the proof. ∎

Appendix C Proofs for Generalization Error in Discrete Space

In this section we give the proof for the generalization error bound in the discrete label spaces. We first show that the perturbed (noise-aware) distance function is an unbiased estimator of the true distance. Using this we show that the noise aware score function is a good uniform approximation of the score function . Then we show that the minimizer of is close to the minimizer and that this closeness depends on how well approximates . Next, showing that is a good uniform approximation of using the results from previous section on parameter recovery leads to the result on generalization error of .

Lemma 3.

Let the distribution be given by a transition probability matrix with and suppose is invertible. Let pseudo-distance then,

| (14) |

Proof.

Here we adopt the same ideas as in [18] to create the unbiased estimator as follows, First we write the equations for the expectations for each . Which gives us a system of linear equations and solving them for gives us the expression for the unbiased estimator.

Set with th entry given by and similarly define with . Then the above system of linear equations can be expressed as follows,

∎

Next, we show that the noisy score function concentrates around the true score function for all and with high probability.

Lemma 4.

Proof.

Let be the true labels of points and let the pseudo-label for th point drawn from the true noise model be . Let be a vector such that its entry is given as , and similarly and with . Recall the definitions of the score functions and for any and in ,

Taking their difference,

Here are fixed and the randomness is over , thus we can think of as random variable and take the expectation of over the distribution . From Lemma 14 we have and this implies .

Moreover, are independent random variables and . The are bounded as follows as long as the spectral decomposition of is not arbitrary,

Now using the fact that and properties of matrix norms we get,

Moreover, which gives us the magnitude of random variables is upper bounded by . Thus using Hoeffding’s inequality and union bound over all we get,

Note that, the statement holds for without requiring an explicit union bound over . It is because the above concentration depends only on the labels and the events that the above inequality does not hold for any distinct are the same. ∎

Now, we show that the distance between minimizer of and is bounded.

Lemma 5.

Proof.

Recall the definitions,

Let and let denote the ball of radius around .

From Lemma 15 we know for

From Assumption 10 we have,

Combining the two we get a lower bound on ,

We want to find a sufficiently large ball around such that the minimizer of does not lie outside this ball. To see this let and denote the above mentioned lower and upper bounds on ,

For and some such that

Thus considering the greatest lower bound, any with cannot be the minimizer of , since there exists some other with smaller distance from that has smaller value compared to . ∎

Next we show that a good estimate of true noise matrix by leads to being uniformly close to .

Lemma 6.

Proof.

Let be a vector such that its entry is given as , and similarly, let with and with . It is easy to see that and . Now consider the following expectation w.r.t ,

Let , and using standard matrix inversion results for small perturbations, [8], and we get the following. As , we have

∎

Lemma 7.

For and defined in (9) w.r.t. noise distributions and respectively, and let then we have w.h.p.

| (18) |

with and ,

Proof.

Recall, random variables , denote the noisy labels drawn from true and estimated noise distributions respectively and denote their draw for data point . Note that we do not know and in practice and we only know . Here we are using and to compare our actual estimates using samples against the estimates one could have obtained from .

Recall the definitions,

Then,

Thus,

Finally .

Lemma 8.

Proof.

The following lemmas bound the estimation error between noise matrices and using the estimation error in the canonical parameters.

Lemma 9.

The posterior distribution function is Lipshcitz continuous in for any

Proof.

Recall the definition of the posterior distribution,

For convenience let be such that its entry

Let then

Since distances are upper bounded by 1, , so

Now,

Thus .

Using the fact that for any gives us the result.

∎

Lemma 10.

The distribution function is Lipshcitz continuous in for any

Proof.

Doing the same steps as in the proof of Lemma 9 gives the result. ∎

Lemma 11.

For the noise distributions in (7) with parameters , respectively and restricted only to the elements with non-zero prior probability, the following holds,

Proof.

It is important to note that we are restricting the values of and to which is the set of with non-zero prior probability and by our assumption it is small.

Finally, we restate and prove our generalization error result: See 2

Appendix D Proofs for Continuous Label Spaces

Next we present the proofs for the results in the continuous (manifold-valued) label spaces. We restate the first result on invariance: See 1

Proof.

We start with the hyperbolic law of cosines, which states that

where is the angle between the sides of the triangle formed by and . We can rewrite this as follows. Let , be tangent vectors in . Then,

Next, we take the expectation conditioned on . The right-most term is then

where the last equality follows from the fact that and are independent conditioned on . This leaves us with the product terms. Taking expectation again with respect to gives the result.

The spherical version of the result is nearly identical, replacing hyperbolic sines and cosines with sines and cosines, respectively. ∎

Note, in addition, that it is easy to obtain a version of this result for curvatures that are not equal to in the hyperbolic case (or in the spherical case).

We will use this result for our consistency result, restated below. See 3

Proof.

First, we will condition on the event that the observed outputs have maximal distance (i.e., diameter) . This implies that our statements hold with high probability. Then, we use McDiarmid’s inequality. For each pair of distinct LFs , we have that

Integrating the expression above in , we obtain

| (20) |

Next, we use this to control the gap on our estimator. Recall that using the triplet approach, we estimate

For notational convenience, we write for , for its empirical counterpart, and and for the versions between pairs of LFs . Then, the above becomes

Note that , so that and similarly for the empirical versions. We also have that . With this, we can begin our perturbation analysis. Applying Lemma 1, we have that

To see why the last step holds, note that , while . Next, for , . This means that using (20).

Now we can continue, adding and subtracting as before. We have that

Putting it all together, with probability at least ,

| (21) |

Next, recall that satisfies . Thus,

This concludes the proof. ∎

Next, we will prove a simple result that is needed in the proof of Theorem 4. Consider the distribution of the quantities for some fixed . We can think of this as the population-level version of sample distances that are observed in the supervised version of the problem. We do not have access to it in our approach; it will be used only as an object in our proof. Recall we set to be the population-level minimizer. Here we use the notation to denote the corresponding kernel value at a point . Finally, let us denote to be the distribution over the quantities .

Lemma 12.

Proof.

We will use a simple symmetry argument. First, note that we can write in the following way,

Since is a symmetric manifold, if , there is an isometry sending to . Using this isometry and Assumption 8, we can also write

Our approach will be to formulate similar symmetric expressions for the minimizer, but this time for the loss over the distribution . We will then be able to show, using triangle inequality, that remains the minimizer.

We can similarly express the minimizer of the loss for as

Here we have broken down the expectation over by applying the tower law; the inner expectation is conditioned on point and runs over the labeling function outputs .

Again using Assumption 8, we can write the minimizer for the loss over as , where

Thus we can also write the minimizer as , where

With this, we can write

where denotes parallel transport from to .

Note that is on the geodesic between and . We exploit this fact by applying the following squared-distance inequality. For three points , from the triangle inequality,

Squaring both sides and applying

we obtain that

so that

Setting to be and to be in the above gives

Now we can apply the fact that is on the geodesic to rewrite this as

This is because the length of the geodesic connecting and is twice that of the geodesic connecting to .

Thus, we have

and we are done. ∎

Proof.

We use Lemma 12 and compute a bound on the expected distance from the empirical estimates to the common center. In both cases, the approach is nearly identical to that of [29] (proof of Theorem 3.2.1); we include these steps for clarity. Suppose that the minimum and maximum values of are and , respectively.

Then, letting we have that, using the hugging function assumption

We also have that

Then,

Now, multiply each of the equations by and sum over them. In that case, the different on the right side is non-positive, as is the empirical minimizer. This yields

Using the minimum and maximum values of , and setting , we get

We can apply Cauchy-Schwarz, simplify, then square, obtaining

What remains is to take expectation and use the fact that the tangent vectors summed up to form are independent. This yields

Thus we obtain

or

| (22) |

We use the same approach, but this apply it to the points given by the LFs drawn from distribution . This yields

where corresponds to the expected squared distance for LF to . We bound this with triangle inequality, obtaining , so that

or,

| (23) |

Now, again using triangle inequality,

Appendix E Additional Details on Continuous Label Space

We provide some additional details on the continuous (manifold-valued) case.

Computing

In Theorem 3, we stated the result in terms of , a quantity that trades off the probability of failure for the diameter of the largest ball that contains the observed points. Note that if we fix the curvature of the manifold, it is possible to compute an exact bound for this quantity by using formulas for the sizes of balls in -dimensional manifolds of fixed curvature.

Hugging number

Note that it is possible to derive a lower bound on the hugging number as a function of the curvature. The way to do so is to use comparison theorems that upper bound triangle edge lengths with those of larger-curvature triangles. This makes it possible to establish a concrete value for as a function of the curvature.

We note, as well, that an upper bound on the hugging number can be obtained by a simple rearrangement of Lemma 6 from [32]. This result follows from a curvature lower bound based on hyperbolic law of cosines; the bound we describe follows from the opposite—an upper bound based on spherical triangles.

Weights and Suboptimality

An intuitive way to think of the estimator we described is the following simple Euclidean version. Suppose we have labeling functions that are equal to , where . In this case, if we seek an unbiased estimator with lowest variance, we require a set of weights so that and is minimized. It is not hard to derive a closed-form solution for the coefficients as a function of the terms .

Now, suppose we use the same solution, but with noisy estimates instead. Our weights will yield a suboptimal variance, but this will not affect the scaling of the rate in terms of the number of samples .

Appendix F Extended Background on Pseudo-Euclidean Embeddings

We provide some additional background on pseudo-metric spaces and pseudo-Euclidean embeddings. Our roadmap is as follows. First, we note that pseudo-Euclidean spaces are a particular kind of pseudo-metric space, so we provide additional background and formal definitions for these pseudo-metric spaces. Afterwards, we explain some of the ideas behind pseudo-Euclidean spaces, comparing them to standard Euclidean spaces in the context of embeddings.

F.1 Pseudo-metric Spaces

Pseudo-metric spaces generalize metric spaces by removing the requirement that pairs of points at distance zero must be identical:

Definition 1.

(Pseudo-metric Space) A set along with a distance function is called pseudo-metric space if satisfies the following conditions,

| (24) | ||||

| (25) | ||||

| (26) |

These spaces have additional flexibility compared to standard metric spaces: note that while , does not imply that and are identical. The downside of using such spaces, however, is that conventional algebra may not produce the usual results. For example, limits where the distance between a sequence of points and a particular point tends to zero do not convey the same information as in standard metric spaces. However, these odd properties do not concern us, as we only use the spaces for representing a set of distances from our given metric space.

A finite pseudo-metric space has .

F.2 Pseudo-Euclidean Spaces

The following definitions are for finite-dimensional vector spaces defined over the field .

Definition 2.

(Symmetric Bilinear Form / Generalized Inner Product) For a vector space over the field , a symmetric bilinear form is a function satisfying the following properties :

-

P1)

-

P2)

-

P3)

.

Definition 3.

(Squared Distance w.r.t. ) Let be a real vector space equipped with generalized inner product , then the squared distance w.r.t. between any two vectors is defined as,

This definition also gives a notion of squared length for every ,

The inner product can also be expressed in terms of a basis of the vector space . Let the dimension of be , and be a basis of , then for any two vectors ,

The matrix is called the matrix of w.r.t the basis It gives a convenient way to express the inner product as . A symmetric bilinear form on a vector space of dimension , is said to be non-degenerate if the rank of w.r.t to some basis is equal to .

Example: For the dimensional euclidean space with standard basis and as dot product we get

Definition 4.

(Pseudo-euclidean Spaces) A real vector space of dimension , equipped with a non-degenerate symmetric bilinear form is called a pseudo-euclidean (or Minkowski) vector space of signature if the matrix of w.r.t a basis of , is given as,

Embedding Algorithms

The tool that ensures we can produce isometric embeddings is the following result:

Proposition 1.

([11]) Let be a finite pseudo-metric space equipped with distance function , and let be a collection of vectors in . Then is isometrically embeddable in if and only if,

| (27) |

This bilinear form is very similar to the one used for MDS embeddings [15]—it is closely related to the squared distance matrix. The main information needed is what the signature (i.e., how many positive, negative, and zero eigenvalues) of this bilinear form is. If the dimension of the pseudo-Euclidean space we choose to embed in is at least as large as the number of positive and negative eigenvalues, we can obtain isometric embeddings. Because we are working with finite metric spaces, this number is always finite, and, in fact, is never larger than the size of the metric space. This means we can always produce isometric embeddings.

The practical aspects of how to produce the embedding are shown in the first half of Algorithm 1. The basic idea is to do an eigendecomposition and capture eigenvectors corresponding to the positive and negative eigenvalues. These allow us to perfectly reproduce the positive and negative components of the distances separately; the resulting distance is the difference between the two components. The process of performing the eigendecomposition is standard, so that the overall procedure has the same complexity as running MDS. Compare this to MDS: there, we only capture the eigenvectors corresponding to the positive eigenvalues and ignore the negative ones. Otherwise the procedure is identical.

We note that in fact it is possible to embed pseudo-metric spaces isometrically into pseudo-Euclidean spaces, but we never use this fact. Our only application of this tool is to embed conventional metric spaces. However, our results directly lift to this more general setting.

The idea of using pseudo-Euclidean spaces for embeddings that can then be used in kernel-based or other classifiers or other approaches to machine learning is not new. For example, [21] used these spaces for kernel-based learning, [17] used them for generic pairwise learning, and [19] showed that they are among several non-standard spaces that provide high-quality representations. Our contribution is using these in the context of weak supervision and learning latent variable models.

Dimensionality

We also give more detail on the example we provided showing that pseudo-Euclidean embeddings can have arbitrarily better dimensionality compared to one-hot encodings. The idea here is simple. We start with a particular kind of tree with a root and three branches that are simply long chains (paths) and have nodes each, for a total of nodes. One-hot encodings have dimension that scales with the number of nodes, i.e., dimension .

Pseudo-euclidean embeddings enable us to embed such a tree into a space of finite (and in fact, very small) dimension while preserving the shortest-hops distances between each pair of nodes in the graph. As described above, the key question is what the number of positive and negative eigenvalues for the squared distance matrix (and thus the bilinear form) is. Fortunately, for such graphs, the signature of the squared-distance matrix is known (Theorem 20 in [6]). Applying this result shows that the pseudo-Euclidean dimension is just 3, a tiny fixed value regardless of the value of above.