figure \cftpagenumbersofftable

Lightweight starshade position sensing with convolutional neural networks and simulation-based inference

Abstract

Starshades are a leading technology to enable the direct detection and spectroscopic characterization of Earth-like exoplanets. To keep the starshade and telescope aligned over large separations, reliable sensing of the peak of the diffracted light of the occluded star is required. Current techniques rely on image matching or model fitting, both of which put substantial computational burdens on resource-limited spacecraft computers. We present a lightweight image processing method based on a convolutional neural network paired with a simulation-based inference technique to estimate the position of the spot of Arago and its uncertainty. The method achieves an accuracy of a few centimeters across the entire pupil plane, while only requiring 1.6 MB in stored data structures and 5.3 MFLOPs (million floating point operations) per image at test time. By deploying our method at the Princeton Starshade Testbed, we demonstrate that the neural network can be trained on simulated images and used on real images, and that it can successfully be integrated in the control system for closed-loop formation flying.

keywords:

Starshade, convolutional neural network, simulation-based inference, formation flying*Corresponding author, peter.melchior@princeton.edu

1 Introduction

Starshades have the potential to discover and characterize the atmospheres of Earth-like exoplanets in the habitable zone of nearby stars[1, 2, 3]. Their ability to achieve high contrast while maintaining high optical throughput and broad wavelength coverage make them the most promising technology to produce the first spectrum of an exo-Earth atmosphere. Starshades are currently in the technology development phase, with a targeted effort to advance critical starshade technologies (such as formation sensing and control) to Technology Readiness Level (TRL) 5[4]. A number of mission concepts have been proposed, including: the Starshade Rendezvous Mission[2] (SRM), which pairs a 26 meter diameter starshade with the 2.4 meter diameter Nancy Grace Roman Space Telescope (NGRST; formerly known as WFIRST) separated by 27,000 km; and the Habitable Exoplanet Explorer[3] (HabEx), which pairs a 52 meter diameter starshade with a 4 meter diameter telescope separated by 72,000 km. In this work, we use SRM as a case study to demonstrate our methodology, but it is applicable to a starshade mission of any size.

To maintain high contrast during observations, the telescope must remain in the deep shadow cast by the starshade; typically 2 meters in diameter larger than the size of the primary mirror. The size of the shadow can be designed to be arbitrarily large, but at the expense of a larger starshade farther away, which takes more fuel and time to switch between targets. Therefore, improved formation flying allows for a more efficient starshade architecture. It has long been recognized that the most difficult aspect to starshade formation flying is accurately sensing the relative position between the starshade and telescope over thousands of kilometers separation. Knowledge of the lateral position of the starshade relative to the telescope’s line of sight to the target star should be accurate to tens of centimeters in order to maintain position inside the typical meter tolerance. For instance, both SRM and HabEx set a lateral position sensing requirement of 30 cm . Provided accurate sensing information is available, controlling the starshade to maintain meter alignment is relatively straightforward: the starshade observatory operates at the Sun-Earth Lagrange point (L2) where the dominant disturbances, gravity gradients, and solar radiation pressure are relatively benign and easily handled with linear control[5, 6, 7].

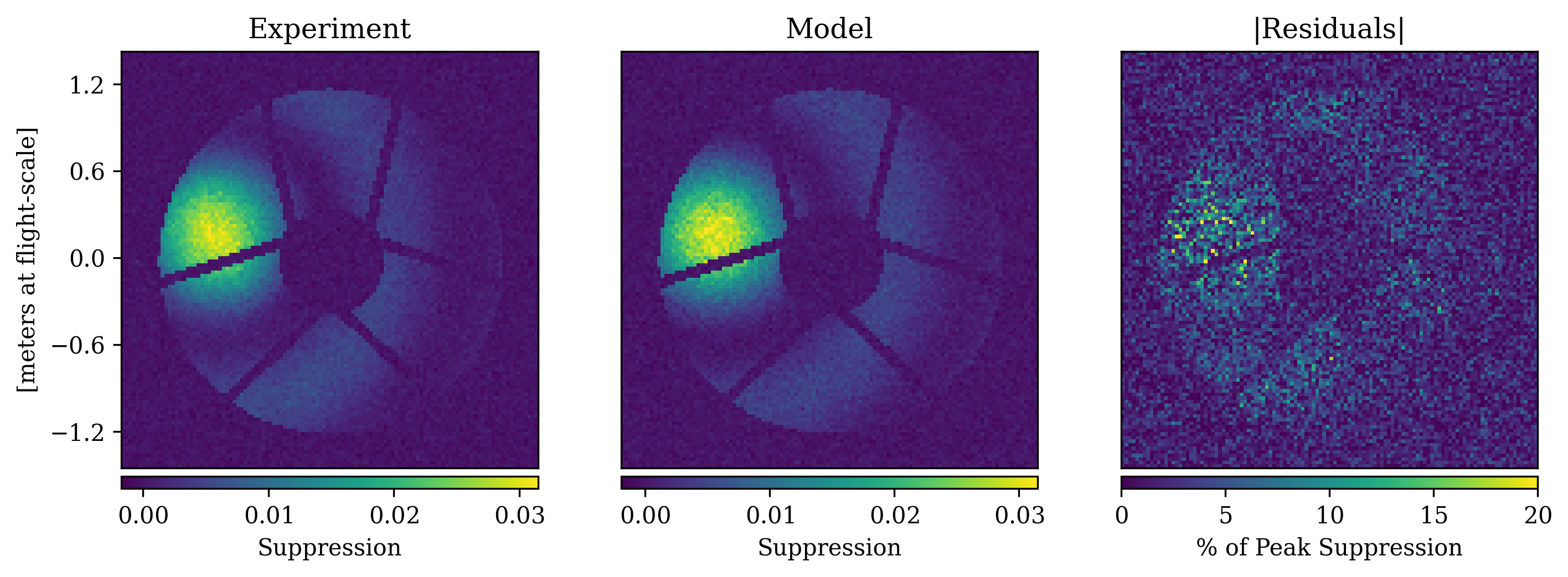

Sensing the starshade’s relative position to centimeter-level accuracy over 27,000 km is made possible by exploiting the nature of diffraction. At wavelengths outside of the starshade’s designed bandpass (“out-of-band light”), its suppression performance rapidly degrades and at the center of the shadow, a bright signal of diffracted starlight reemerges as the spot of Arago (see Figure 1). The position of this diffraction peak corresponds exactly with the position of the starshade. By imaging the diffraction pattern at the entrance pupil of the telescope, the starshade’s lateral position can thus be determined to high accuracy[8].

|

The formation sensing problem is now a matter of quickly, accurately, and reliably extracting the position of the spot of Arago from pupil plane images. Previous methods111Neural networks were also used in a very recent work[10]. that have demonstrated sufficient accuracy in this task include an image matching algorithm[11] and a non-linear least squares (NLLS) fit to a Bessel function model[7]. The image matching algorithm is slow to compute, which could limit high cadence updates to the spacecraft control. It also requires a large image library, which would occupy the limited memory of the spacecraft’s computer, and is limited by the finite resolution of the image library. The fit to the Bessel model is computationally intensive for state-of-the-art flight computers and requires an accurate initial guess to converge to the correct solution[12].

To overcome the limitations of these previous methods, we propose a convolutional neural network (CNN) to extract the starshade position from pupil plane images. In this paper we demonstrate through simulation and experiment that the CNN achieves accurate and robust position sensing with substantially reduced computing power and memory compared to previous methods. We then make use of the CNN to produce a summary statistic for a computationally efficient simulation-based inference (SBI) technique that yields an improved estimate of the starshade position and its uncertainty. We validate this method experimentally in the Princeton Starshade Testbed[9], where 10-10 contrast has been demonstrated on 1/1000 scale starshades at a flight-like Fresnel number.

The remainder of this paper is organized as follows. In Section 2 we pose the position sensing problem and describe the CNN architecture and training strategy. We show results on simulated and experimental data in Section 3 and Section 4, respectively. We further augment the CNN approach with a SBI technique in Section 5, and discuss our findings and future directions of this work in Section 6.

2 Position Sensing with CNNs

Imaging the telescope’s entrance pupil in out-of-band light shows the starshade’s diffraction pattern and provides a bright signal (out-of-band light is only suppressed by a factor of 103, compared to 1010 with in-band light) indicating the starshade position. As an example, Figure 1 shows a simulated pupil image of NGRST including obstructions from the secondary mirror and supports.

Telescopes equipped with a coronagraph typically have a camera that takes images of the pupil plane to allow low-order wavefront sensing for the coronagraph. Using pupil images therefore provides positional information without the need for additional hardware.

2.1 CNN Model Architecture

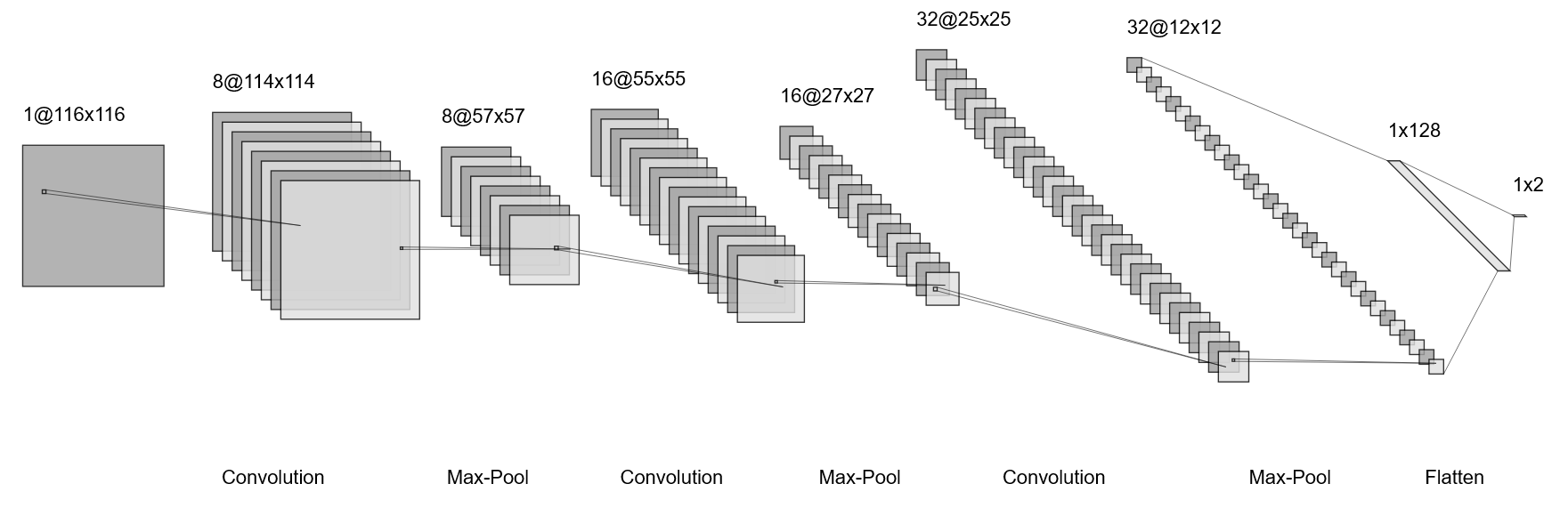

We decide to leverage this information by employing a CNN, a neural network architecture that is designed for image analysis and has shown excellent performance and accuracy for image classification [13, 14, 15], regression [16], and compression [17]. We thus seek to train a CNN on the supervised regression task with simulated pupil images at true starshade positions as input and estimated positions as outputs. To keep the computational burden low, we chose a relatively shallow CNN architecture, which is shown graphically in Figure 2 and summarized as:

-

•

Input: 9696 pupil-plane image

-

•

Convolutional Layer: 1 in-channel, 8 out-channels, 33 kernel, stride 1

-

•

Max-Pooling Layer: 22 kernel, stride 2

-

•

ReLU Activation Function

-

•

Convolutional Layer: 8 in-channels, 16 out-channels, 33 kernel, stride 1

-

•

Max-Pooling Layer: 22 kernel, stride 2

-

•

ReLU Activation Function

-

•

Convolutional Layer: 16 in-channels, 32 out-channels, 33 kernel, stride 1

-

•

Max-Pooling Layer: 22 kernel, stride 2

-

•

ReLU Activation Function

-

•

Flatten

-

•

Fully-Connected Layer: 4608 in-features, 128 out-features

-

•

Fully-Connected Layer: 128 in-features, 2 out-features

-

•

Output: estimated starshade position

|

2.2 Training on Simulated Images

Simulated images are generated using the latest diffraction models[18] that have been experimentally validated at high contrast[9]. The obstructions from NGRST’s secondary mirror and its support structure are superimposed on each image and simulated photon and detector noise are added. Figure 1 shows the agreement between a simulated image and experimental image taken in the Princeton Starshade Testbed. For now, we only simulate monochromatic light to match the experimental setup.

We want the CNN to yield accurate results for different target stars, in particular in terms of brightness and spectral type. This could be achieved by adding a conditioning variable to the last fully-connected layer, however, for this work we decided to fix the spectral type and train the CNN more robustly by varying the stellar magnitudes. We convert the pixel values to units of suppression, which is independent of the star’s brightness and exposure time. Suppression is calculated by normalizing the image by its exposure time and the photon rate of the target star without the starshade in place. The photon rate of the unblocked star is well known for all targets of interest and can be set during the pre-processing of each image. For a wider bandpass, the diffraction pattern will be slightly dependent on the spectral type of the star, but this can easily be included in the diffraction model; we defer examining the effect of a wider bandpass to a future study. In units of suppression, stars of different magnitudes have different signal-to-noise ratios (SNR) and different balances of photon noise to detector noise. We train the CNN on images with a wide range of SNR, extending to an SNR below that expected in flight to prevent testing the CNN outside of its training conditions. For this work, we define the “Peak SNR” as the mean SNR per pixel in the FWHM of the spot of Arago.

We define as loss function the usual L2 loss between the simulated and estimated positions. We also experimented with L1 loss, but found that the L2 loss function gave better results. As optimizer we choose the common Adam gradient descent scheme [19]. Adam is computationally efficient, uses little memory, and generally requires little hyperparameter tuning for convergence. Finally, we use a one-cycle learning rate policy [20], which adapts the learning rate throughout the training process, changing the value at every iteration. Starting at some initial learning rate, the policy anneals the learning rate to some maximum value, then anneals it back down to some minimum value, which is typically much lower than the initial learning rate.

The training set consists of draws from a square of 3.4 m by 3.4 m around the aligned position, which covers a wider range as the 1 m formation flying requirement, again to avoid testing the CNN outside of the training conditions. We generate 160,000 different positions, sampled uniformly from this square, and at each position the diffraction pattern is simulated and the telescope obstructions are overlaid. For each image, the Peak SNR is randomly pulled from a uniform distribution between 0.5 and 100, and noise is then added to the image. The CNN model is trained for 30 epochs using an initial learning rate of 0.001.

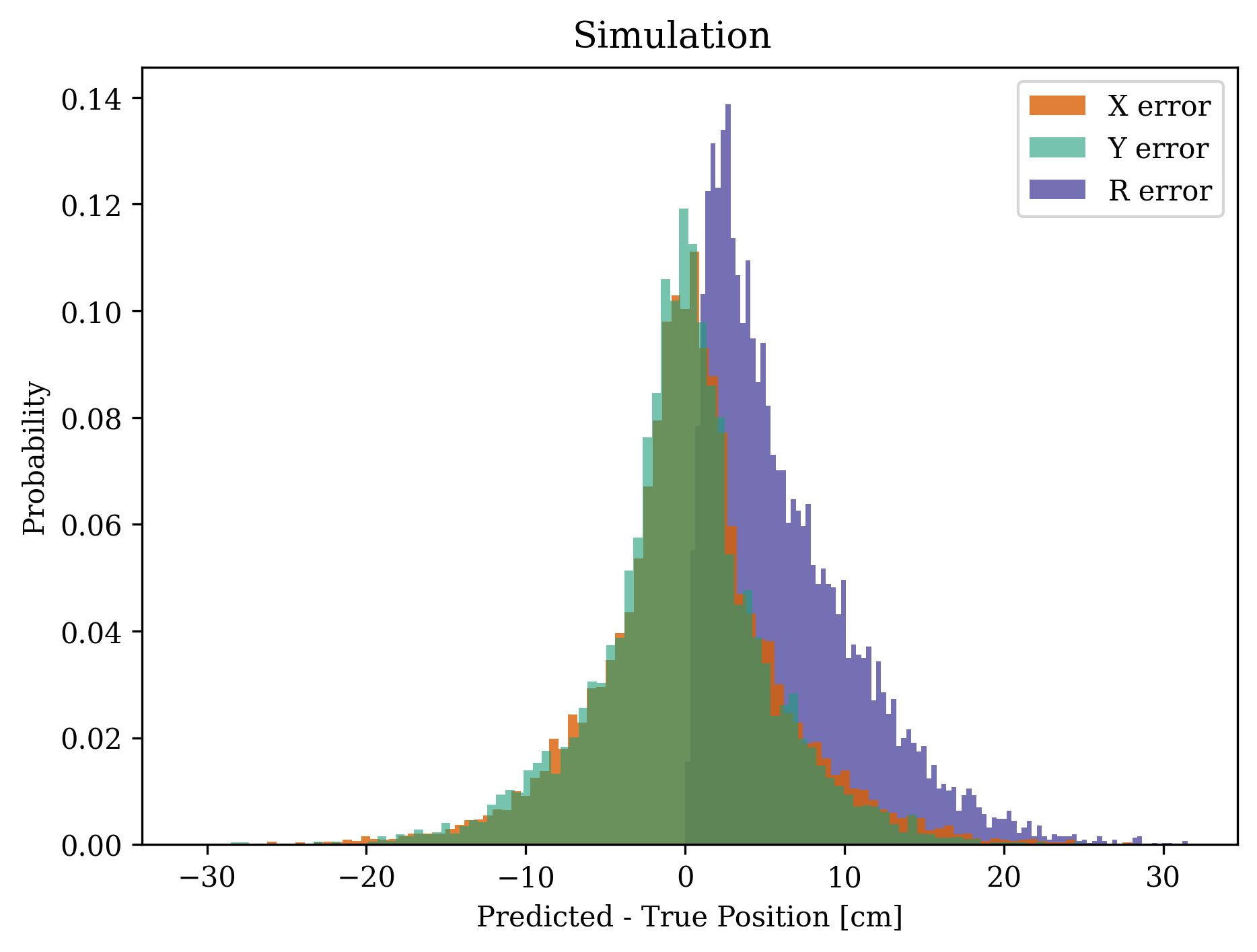

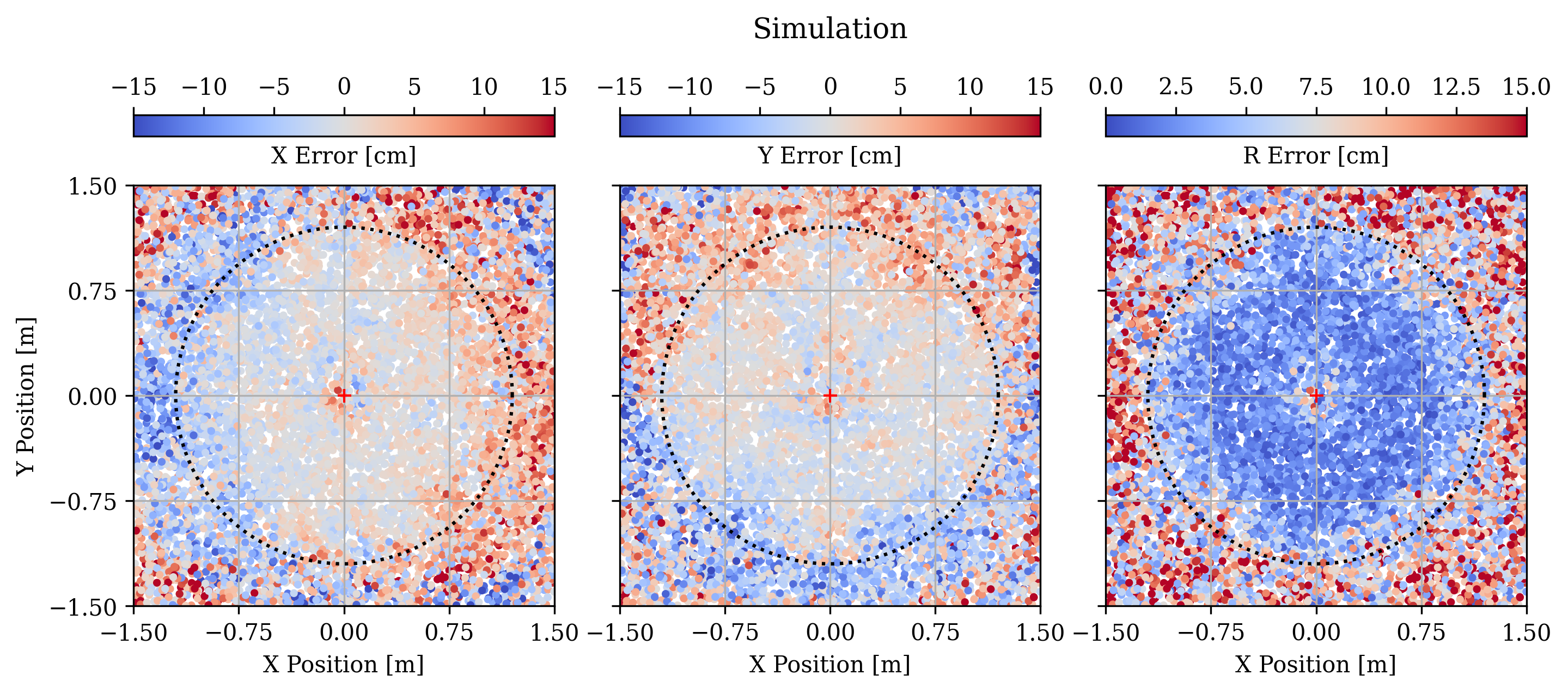

3 Simulation Results

We first investigate the performance of the CNN to estimate the starshade position. We run a baseline simulation with 9696 pixels sampling the telescope’s entrance aperture and a Peak SNR of 5, which corresponds to a 1 second exposure time of a 4 magnitude star with NGRST. We simulate 10,000 images with random starshade positions distributed over a 3 meter square. Figure 3 shows the distribution of the and magnitude position errors for the baseline simulation. The mean and standard deviation of the magnitude of the error are 6.3 cm and 4.8 cm, respectively; and 99.7% of the errors are less than 25 cm. Figure 4 plots these errors as a function of the starshade’s position. The error increases when the spot of Arago is either obscured by the secondary mirror in the center or off the edge of the aperture. The latter situation is responsible for most of the tail of the error distribution. We thus consider 6 cm to be a conservative estimate because with an active control system the starshade should never stray past meter offset during observations. If we only consider times when the offset is less than 1 meter, the mean error drops to 2.8 cm, and 99.7% are less than 10.5 cm.

It is promising to see the good performance outside of the telescope’s aperture, which suggests this method could be extended farther outside the meter control box and could be used earlier in the transition between acquisition and observation guiding modes[12]. We save the investigation into how far this method can be extended for a later date.

|

|

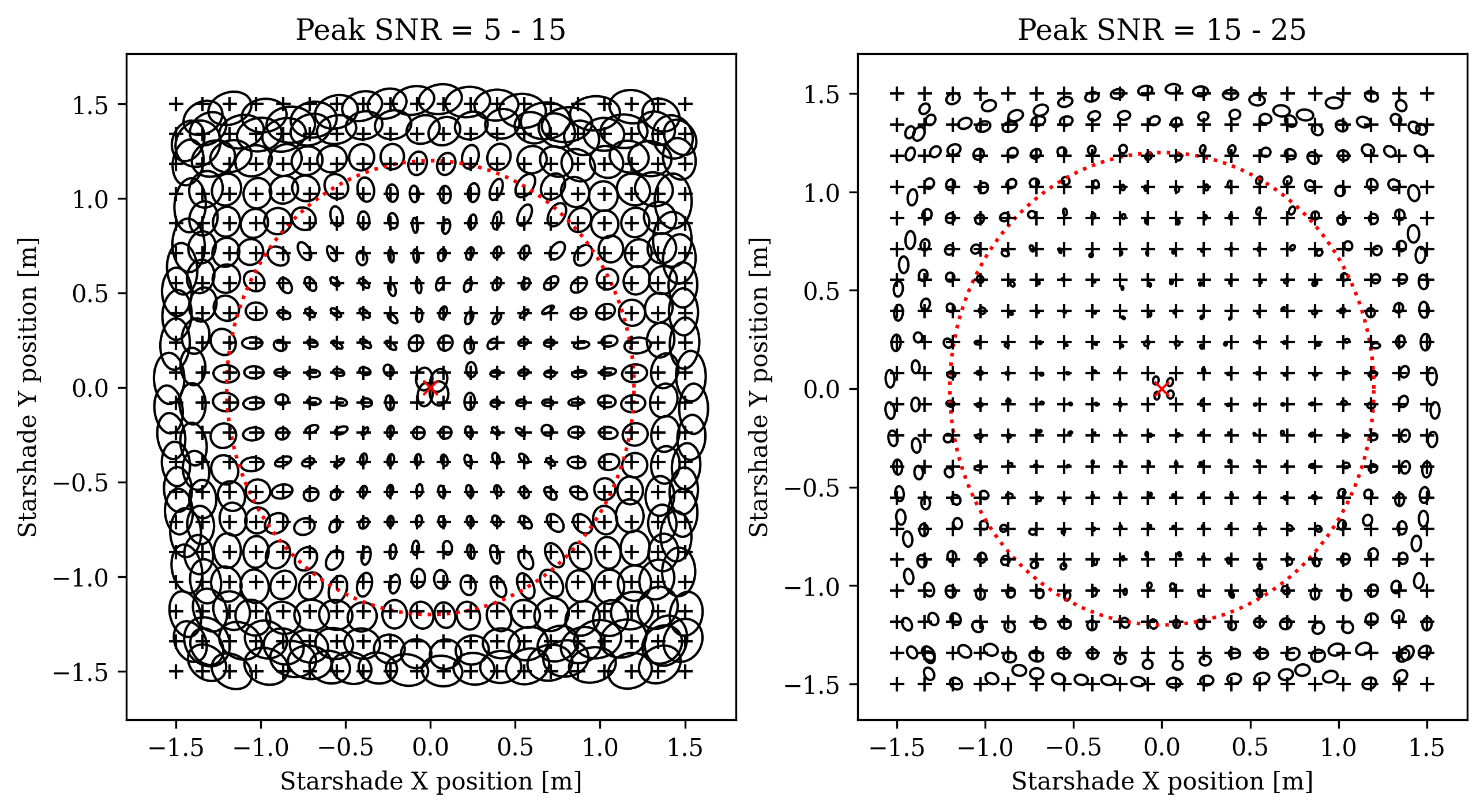

To examine the robustness of the CNN solution over the width of the shadow, we run a large number of simulations with the starshade positioned on a 20 x 20 grid over a 3 meter width. Each point on the grid, 5,000 simulations are run with the Peak SNR randomly pulled from a uniform distribution. Figure 5 shows the result of this Monte Carlo simulation: the ellipses show the covariance of predicted positions centered on the mean prediction; the grid of true starshade positions are marked with black plus signs. Inside the telescope aperture, the covariance is on the order of a few centimeters and radially correlated. The left panel of Figure 5 is the moderate SNR case with the Peak SNR drawn from 5 - 15; the right panel is the high SNR case with Peak SNR drawn from 15 - 25. There is a slight bias in the predicted positions towards the edge of the aperture, but generally this is smaller than the level. Outside the aperture, there are larger covariances and biases, with a strong inward bias at the corners. The higher Peak SNR reduces the covariances, but has little to no effect on the biases.

|

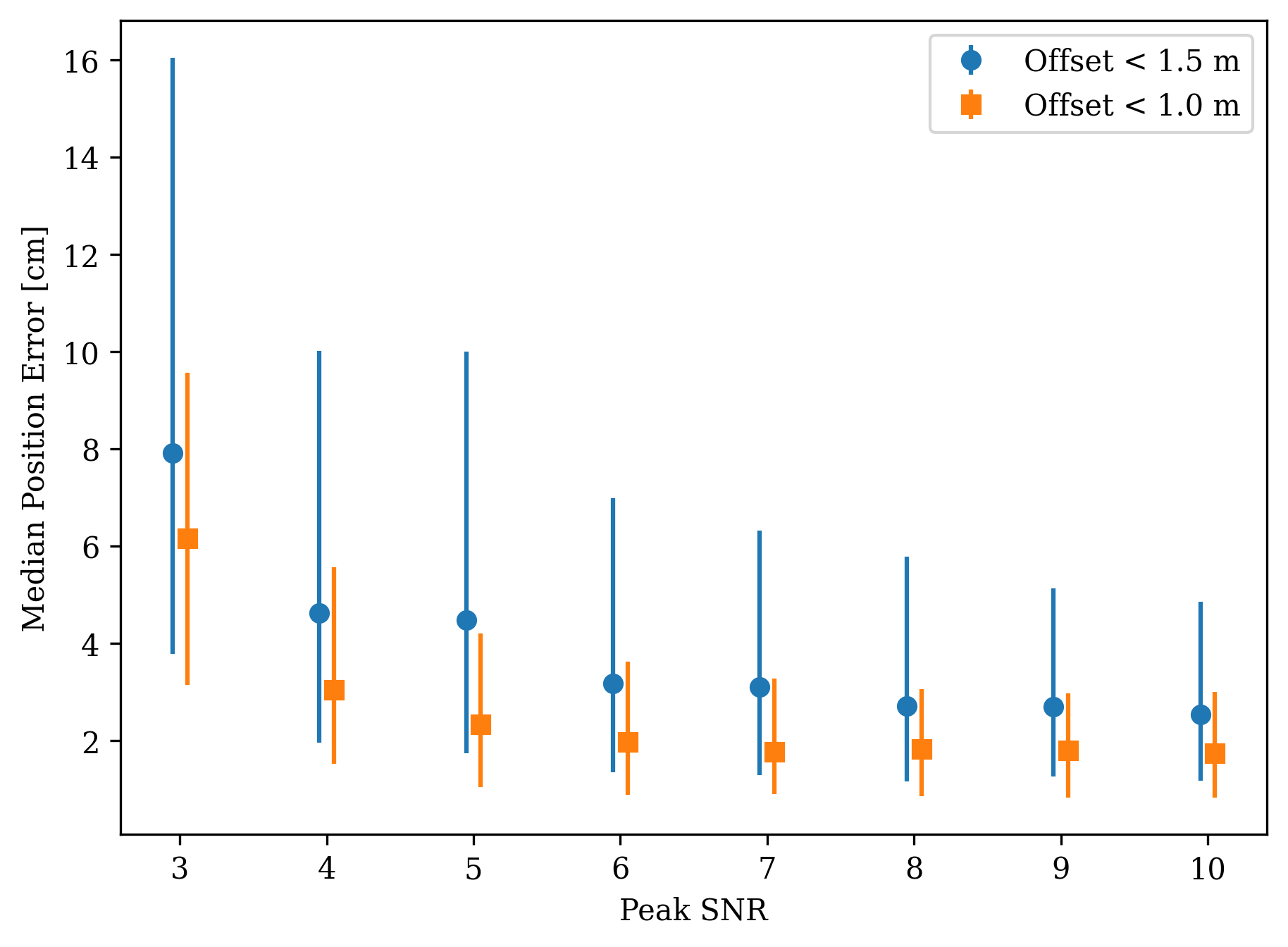

Figure 6 shows the distribution of position errors as a function of Peak SNR. For each Peak SNR, 1000 images were simulated with randomly distributed positions; two curves are shown which limit the testset to offsets of a certain radius. We find that above a Peak SNR of 5, there is little change in the position error. Limiting to offsets of 1 meter results in a 50% decrease in position error.

|

Finally, we examine the trend in performance with the number of pixels sampling the pupil. Figure 7 shows the distribution of position errors for CNNs trained on images with sizes 4848, 6464, and 9696. We find that the position error scales approximately linearly with the number of pixels sampling the pupil (in one dimension); the mean position error for 48, 64, and 96 pixels is 12.4 cm, 10.5 cm, and 6.3 cm, respectively. As the CNN is inherently lightweight and computationally fast, the cost of a larger image size is minimal compared to the image matching and model fitting methods, and the gain in performance is worth the cost. While the choice of pupil sampling size will ultimately depend on the optical layout of the pupil imaging system and its detector electronics, the CNN benefits from a larger image size.

4 Experimental Results

The Princeton Starshade Testbed[9] was built for optical model validation experiments at high contrast levels with a flight-like Fresnel number, i.e. the parameter governing optical diffraction, but at only of the scale of a real starshade. Properties of the lab and two flight configurations are listed in Table 1. The sub-scale starshades are lithographically etched from a silicon wafer and characterized well enough to predict the starshade performance at 10-9 contrast. As such, the optical model should be very accurate in predicting the out-of-band diffracted light at the 10-3 contrast level.

The testbed provides the unique opportunity to demonstrate that our approach can be trained on simulated data and then accurately be applied to experimental images. There are, however, a number of factors unique to the testbed that make the experimental results a conservative estimate of the on-orbit performance. First, the testbed operates in the atmosphere, and changes in the air temperature and density result in motion of the diffraction pattern and variation in the optical power incident on the starshade. Second, a low level background is observed from light scattering off aerosols as it propagates the 80 meters from laser to telescope. Third, the pupil imaging optics are off-the-shelf and not calibrated to the extent that the flight system will be, so any model mismatch seen in the lab is dominated by the pupil imaging optics and not the starshade. Lastly, the flight missions propose using an electron-multiplying CCD, while the lab detector (properties listed in Table 2) is operated with a conventional amplifier and thus experiences a larger contribution from read-out noise.

| Laboratory | SRM | HabEx | |

| Telescope diameter | 2.2 mm | 2.4 m | 4.0 m |

| Starshade diameter | 25.06 mm | 26 m | 52 m |

| Telescope - starshade sep. | 50.0 m | 26,000 km | 76,600 km |

| Source - starshade sep. | 27.45 m | parsec | parsec |

| Formation flying tolerance | mm | m | m |

| Guiding bandpass | 405 nm | 425 - 552 nm | 300 - 400 nm |

| Fresnel number (at nm) | 22 | 16 | 22 |

| Pupil image resolution | 23 m/pixel | 75 mm/pixel | 125 mm/pixel |

| Parameter | Value |

|---|---|

| Pixel size | 13m 13m |

| Inverse gain | 0.79 e- / count |

| Read noise | 4.8 e- / pixel / frame |

| Dark current | 710-4 e- / pixel / s |

| CIC noise | 2.510-3 e- / pixel / frame |

To correct for atmosphere-induced motions in the diffraction pattern and variations in the brightness, the true position and brightness of each experimental image is solved for via the NLLS algorithm [7]. Each image is normalized by the NLLS-solved brightness, and the NLLS-solved position is used as the true position when determining the accuracy of the CNN-DELFI position.

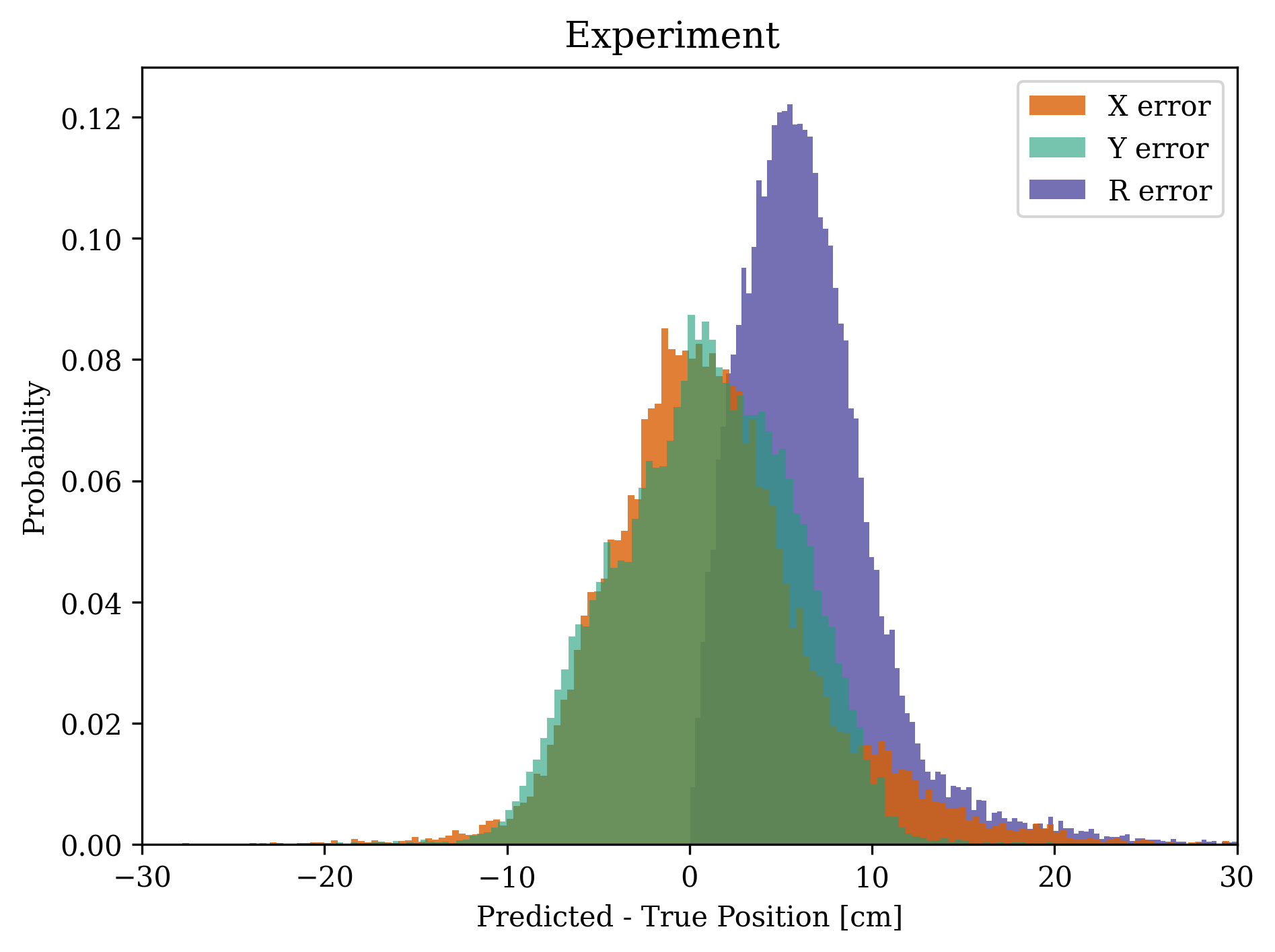

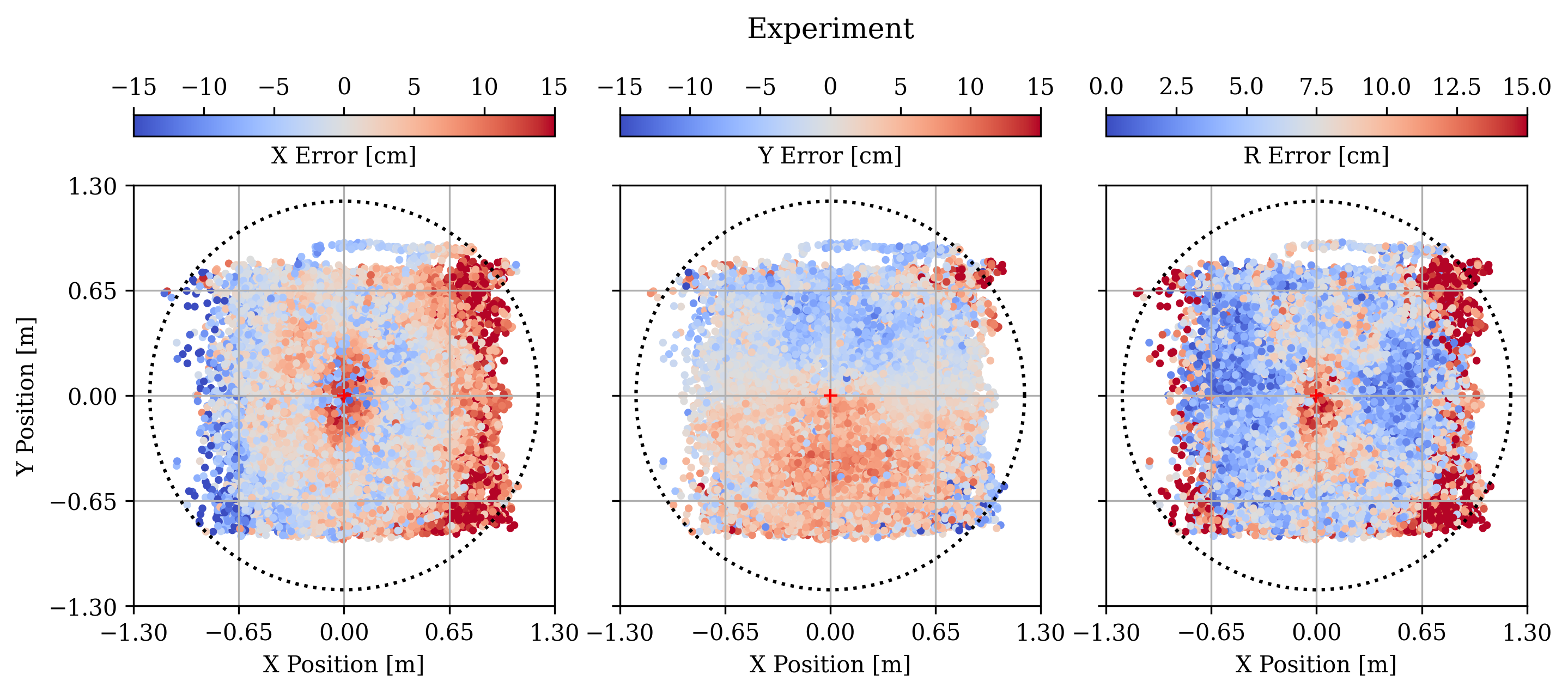

Figure 8 shows the distribution of the X, Y, and magnitude (R) position errors for 23,700 images with Peak SNR = 5 - 8. The mean and standard deviation of the magnitude of the error are 6.5 cm and 4.0 cm, respectively, and 99.7% of the errors are less than 25 cm. Figure 9 plots these errors as a function of the starshade’s position. The error follows the same structure as that of the simulation data, with the worst performance in the center and towards the edges, when the spot of Arago is blocked by the secondary mirror and moves off the edge of the aperture.

Sampled over the same region, the positional accuracy for experimental images is worse by about a factor of 2 compared to simulated images, likely due to the aforementioned issues with testing in the atmosphere, but the performance still exceeds our goal of 30 cm.

|

|

4.1 Hardware Demonstration of Closed-Loop Formation Flying

To further validate the CNN operating in the formation flying system, we implement the CNN into the closed-loop formation-flying demonstrations presented in Refs. 7, 12. During formation flying, the pupil camera takes an image every second and feeds it to the CNN to extract the starshade position. This position is filtered through an unscented Kalman filter (UKF) to estimate the relative position and velocity between the two spacecraft and a Linear Quadratic Regulator (LQR) controller determines the optimal control signal to minimize the starshade–telescope misalignment.

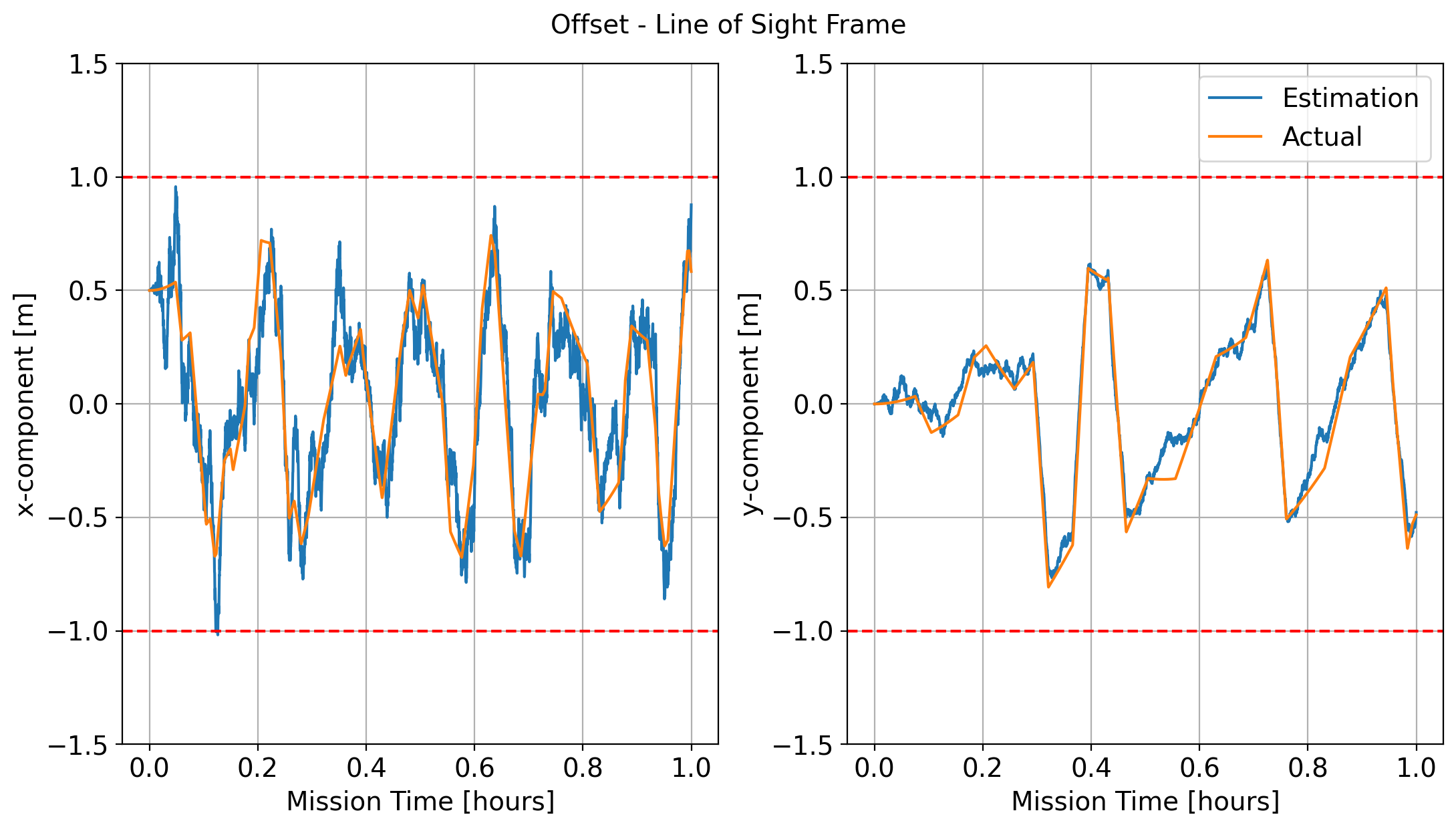

Figure 10 shows the results for an experiment with Peak SNR = 8 and with the starshade starting with a 0.5 m misalignment in the -direction. Shown are the offsets between starshade and telescope, the actual and that estimated by the UKF, in the two directions of the telescope’s line of sight. The estimated position tracks the truth well and the controller keeps the alignment within the meter requirement for the entire mission duration and with minimal thruster firings. The mean error from the CNN is 6.1 cm with the largest error at 23 cm. These results demonstrate the CNN can easily be integrated with existing control schemes and met the required tolerances.

|

5 Simulation-based Inference

The CNN produces an accurate point-estimate of the starshade position. Although it is entirely usable on its own, as we have demonstrated in the previous section, it lacks uncertainty estimates. These would be beneficial for the control system during formation flying because the UKF could then appropriately reduce the importance of the CNN predictions in regions of large uncertainty. We therefore couple the CNN with a subsequent calibration procedure, which provides a quantification of its associated uncertainty and yields an improved position estimate.

In keeping with the spirit of this work, we choose the lightweight method DELFI[21]. In short, it amounts to fitting a Gaussian mixture model (GMM) to pairs of true and CNN-estimated positions obtained from the same simulations we introduced in Section 2. With a pretrained CNN, this first step is done once and the resulting GMM is stored. At test time, the GMM is evaluated at the position as reported by the CNN, which yields a probability distribution . The mode of this distribution is the most likely true position, while the standard deviation provides an estimate of the uncertainty. Because of the mathematical structure of the GMM, the calculation at test time can be carried out analytically and require only matrix and vector operations in . We explain the approach in detail below.

5.1 Density Estimation

The first step of DELFI consists of fitting the joint distribution of true and CNN-estimated positions, i.e. . It is useful to rephrase this problem in the typical SBI terminology, namely that we fit the joint distribution of the parameters of the simulator and the summary statistic from the CNN. In principle, any summary statistic that carries information about each parameter can be used, and neural networks are often trained with the specific purpose of getting a summary from the simulated data that maximize this information[22]. The simplest approach is to train the network on the direct regression task, i.e. seeks to regress , as we have done in Section 2.

We fit the joint density with a GMM with components, using the python package pygmmis222https://github.com/pmelchior/pygmmis[23]. We repeat this process 5 times, and average the results for a GMM with components. This GMM is stored for subsequent use.

5.2 Inference

At test time, we seek to determine the probability distribution of the parameters given that we have obtained from an observed pupil image processed by the CNN. For a single Gaussian component, this operation can be carried out analytically:

| (1) |

where we make use of the definition of the marginal means and covariances for the joint 4-dimensional Gaussian:

| (2) |

For a multi-component GMM, we have to account for the relative contributions of each component at the test location , which yields a new GMM in :

| (3) |

where each component mean and covariance matrix is constructed exactly in the same way as in Equation 1, and the new mixture weights are

| (4) |

To determine an improved position estimate and its uncertainty, we can now compute the mean and covariance of this new mixture:

| (5) |

However, a GMM is essentially guaranteed to be multimodal, so we decide to use the mode, i.e. , instead of the mean as it refers to the most common true position given the observed summary. To speed up the process of finding the mode, we decide to simply adopt the center of the GMM component that has the highest amplitude as our improved position estimate.

5.3 DELFI Results

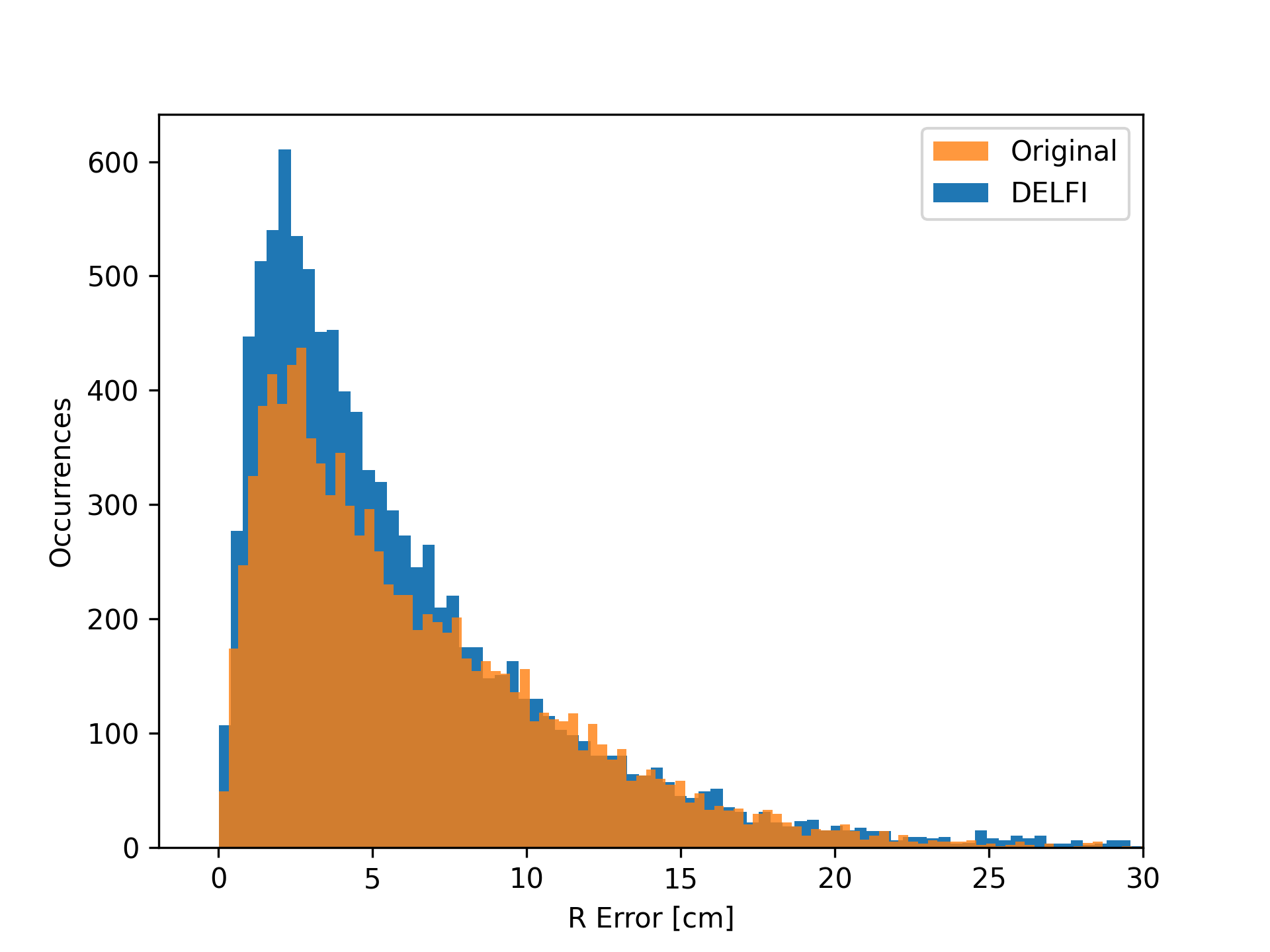

We carry out the same simulations as before for the CNN test in Section 3. Figure 11 shows a comparison of the error magnitude between the original CNN position estimates and the DELFI-corrected ones. It is evident that DELFI noticeably sharpens the peak at around cm by improving the estimates especially from regions where the CNN makes moderately large mistakes of 10 cm.

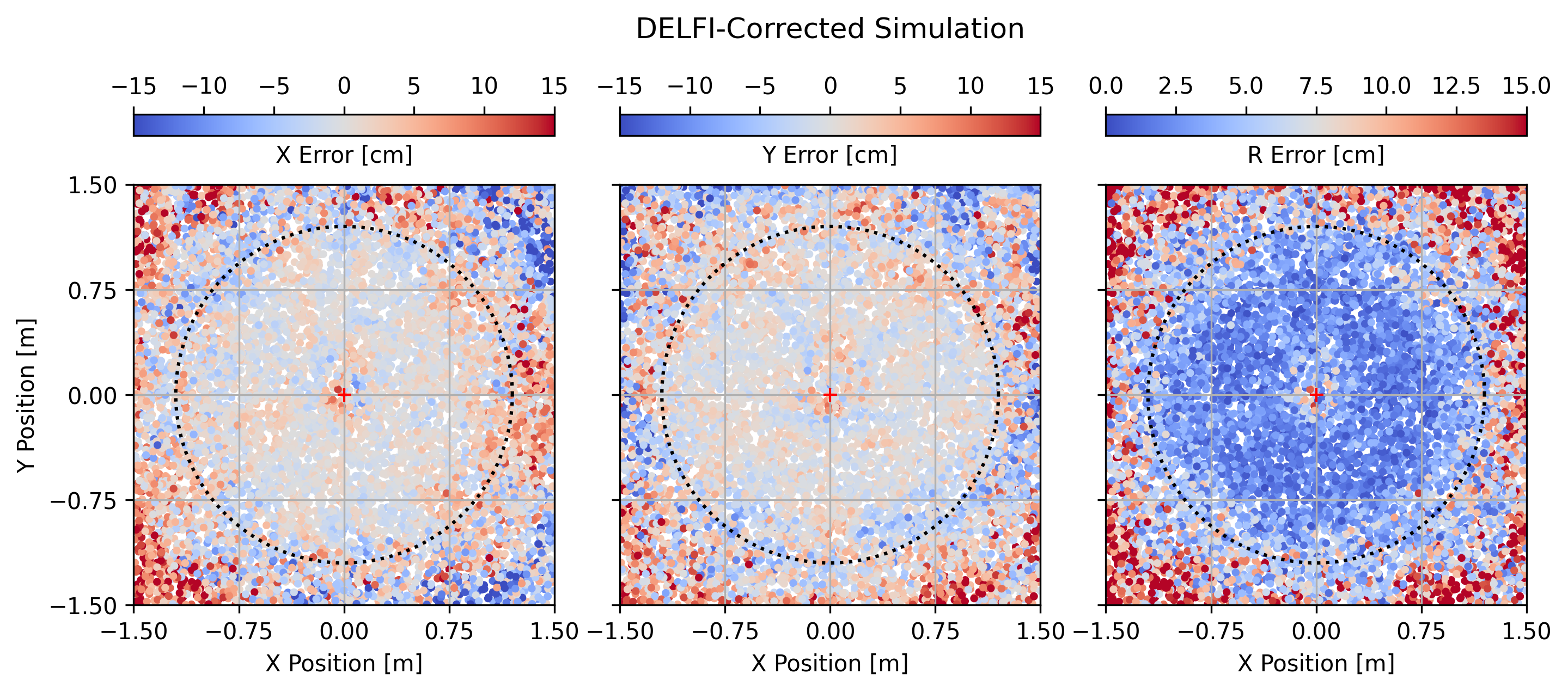

This behavior becomes more evident in Figure 12. When compared to Figure 4, the DELFI corrections show very little structure in the inner regions of the pupil plane and extend the range of position estimates with errors of less than 10 cm to well beyond the telescope aperture radius.

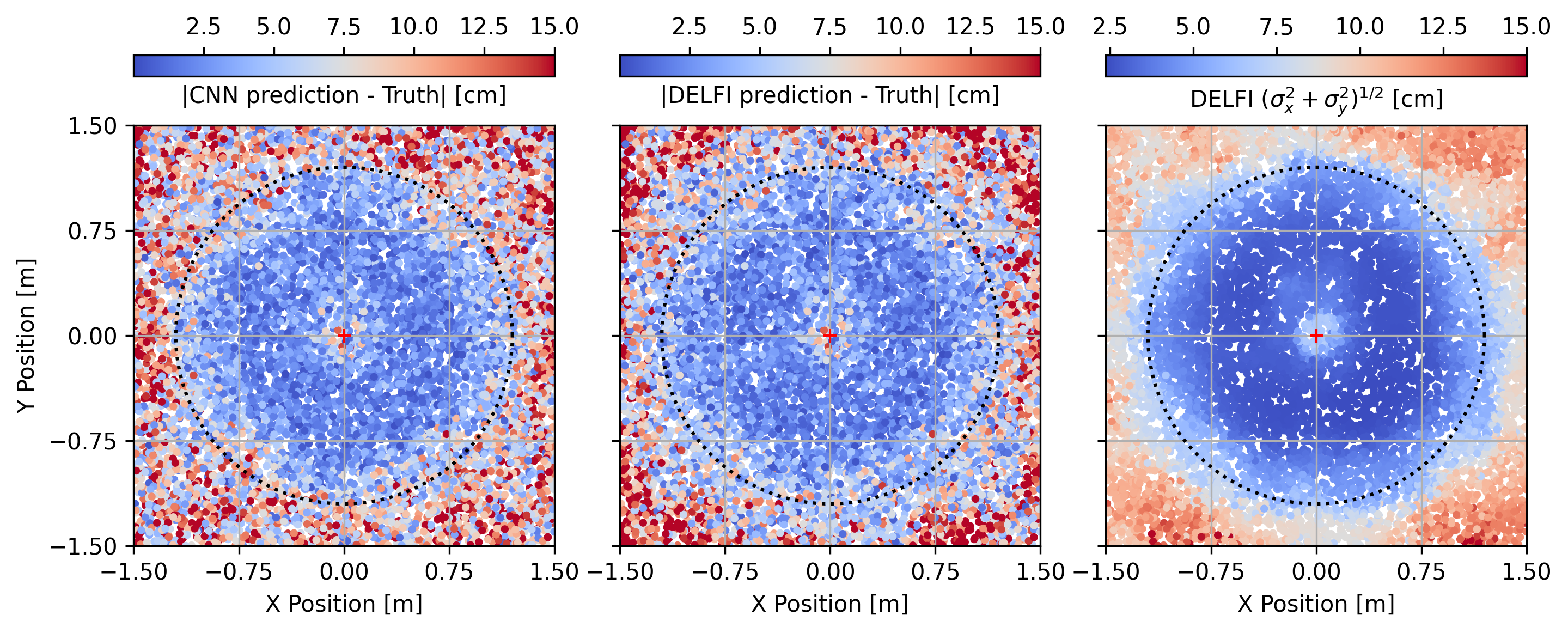

As final demonstrate of the calibration procedure, Figure 13 show the error magnitude as function of position offset for the CNN and for DELFI. The right panel shows the predicted magnitude uncertainty obtained from DELFI, which matches very well with the accuracy of its own estimates in the middle panel. The areas of large uncertainty remain those where the information from the pupil image is physically limited, namely when the spot of Arago is blocked either by the secondary mirror in the center or the outer perimeter of the telescope pupil. We can therefore conclude that DELFI provides well-calibrated position estimates and their uncertainties for further use by the control system for formation flying.

|

|

|

5.4 Impact on formation flying

To examine the impact of the DELFI provided uncertainty, we ran a suite of formation flying simulations[7] with the position uncertainty given either by the DELFI estimate, or by assuming a constant uncertainty of 2 cm. We created 100 random initial starting positions within a 1 meter offset and ran a simulated 1 hour mission for each uncertainty case. For the DELFI provided uncertainty, the average fuel consumption was 3% lower and the error in the UKF estimated position was 8% lower. With this quick check, we conclude that DELFI provides a better estimate of the measurement uncertainty that leads to a more efficient state estimation. While the effect size shown here is small, it is more important to note that this method provides a reasonable estimate of the uncertainty, and it is possible for future control schemes to utilize this information to its full extent.

6 Conclusion and Outlook

The results of Section 3 and Section 4 show that the CNN alone can easily meet the position estimation requirement of 30 cm set in mission designs[2, 3]. For the entirety of the observation phase, the control law should not allow the misalignment to exceed 1 meter, therefore we focus on results where the starshade position is less than 1 meter. When doing so, the 99.7 percentile position error for the baseline simulation is 10.5 cm, and for the experimental results is 21.5 cm.333The experimental results should be viewed as a conservative estimate of the performance as the mismatch between experimental and simulated training data is dominated by the effects of the atmosphere and uncharacterized pupil imaging optics. For an actual mission, atmospheric issues will not be present in space, and the imaging system will be better characterized on the ground and after launch for an accurate model of the instrument response. These results meet the 30 cm requirement that defines the formation sensing milestone for TRL 5[4]. As we demonstrate in Section 5, augmenting the CNN with our simulation-based calibration method DELFI further improves the estimation accuracy and pushed out typical errors of less than 10 cm to well beyond the telescope pupil.

Our results compare favorably to alternative approaches with image matching[11] and NLLS[7] algorithms, all of which have been shown to satisfy accuracy requirements. The most significant advantages of our approach is that it is lightweight in memory and fast to compute. The CNN trained on images of 9696 pixels has parameters and takes up 1.6 MB in disk space. A CNN trained on 3232 images only needs 92 kB of disk space, 500 less than the 46 MB required for the image library (3232) of the matching algorithm[11]. A DELFI model with Gaussian components also only occupies 42 kB. The CNN needs 5.3 MFLOPs (million floating point operations) to process a 9696 image; 0.36 MFLOPs to process a 3232 image. The computational cost of DELFI is entirely negligible. For the image library method, Ref. 11 reports 70 MFLOPs to search the whole library, but suggest that a reduced grid search around an initial guess could reduce that to 0.5 MFLOPs. The NLLS algorithm involves computing Equation 6 and its analytical Jacobian at each pixel once per iteration; with a good initial guess, the Levenberg-Marquardt solution typically converges within 15 iterations. The NLLS computation time is dominated by the calculation of the Bessel function and its derivative (, ), which we estimate to require FLOPs each ( is the number of terms kept in the Bessel function series expansion and is the total number of pixels). Therefore, the NLLS takes 14 MFLOPs to process a 9696 image; 1.6 MFLOPs to process a 3232 image.

Our method thus substantially reduces storage space and computation time over the image matching algorithm. It also produces a continuous position estimate, whereas the image matching results are discrete due to the finite offset sampling in the image library. Our method also outperforms the NLLS algorithm, though to a lesser degree, and provides the following extra benefits: 1) unlike NLLS, it does not require a valid initial guess to properly converge, and 2) the Bessel function model used by the NNLS is only valid out to an offset of 1.5 meters. Future work can investigate whether additional training of the CNN model and DELFI renders it effective for larger offsets.

One important aspect of practical applicability we did not address in this work is related to variations in the stellar spectrum. Due to the monochromatic laser in the experimental testbed, this study was limited to light of a single wavelength and therefore does not capture the effect of the target star’s spectral type . While we expect that CNN estimates to only weakly depend on ,444Over the 100 nm wide guiding bandpass, the diffraction pattern is well-approximated by the Bessel function model: (6) where is the intensity as a function radial coordinate , is the starshade radius, is the telescope–starshade separation, and is the wavelength of light. Inverting Equation 6, the width of the spot of Arago scales linearly with wavelength (as ). To first order, and due to the stellar flux increasing with wavelength on the Wien’s tail for solar-type stars, the diffraction pattern will be dominated by the spot of Arago broadened by the longest wavelength in the bandpass. As the CNN is trained on units of suppression and images are normalized by the unblocked star’s expected flux, the CNN is expected to be weakly dependent on spectral type .future works should be able to improve the accuracy for broadband images by training the CNN on image with a range of spectral types. For predictions it can then either implicitly marginalize over an unknown spectral type or, even better, condition its estimates on the known spectral type of the target star. The latter option can be achieved by adding the spectral type as an extra variable to the last fully connected layer of the CNN, at a minor increase of required memory. Our calibration method DELFI can also take the spectral type into account by evaluating , i.e. by modestly increasing the task of density estimation from a four- to a five-dimensional space.

We therefore conclude that position sensing with CNN and DELFI is a viable, accurate, and computationally efficient option for formation flying of future Starshade missions. To help with the adoption of our method, we publicly release the code at https://github.com/astro-data-lab/starshade-xy.

Disclosures

The authors have no disclosures to report.

Acknowledgements.

Part of this work was supported by NASA Technology Development for Exoplanet Missions (TDEM) award: NNX15AD31G. AH is supported by the Jet Propulsion Laboratory, California Institute of Technology under a contract with the National Aeronautics and Space Administration.References

- [1] W. Cash, “Detection of Earth-like planets around nearby stars using a petal-shaped occulter,” Nature 442, 51 – 53 (2006). [doi:10.1038/nature04930].

- [2] S. Seager, N. J. Kasdin, and S. R. P. Team, “Starshade Rendezvous Mission probe concept,” in American Astronomical Society Meeting Abstracts #231, American Astronomical Society Meeting Abstracts 231 (2018). https://smd-prod.s3.amazonaws.com/science-red/s3fs-public/atoms/files/Starshade2.pdf.

- [3] S. Gaudi, S. Seager, B. Mennesson, et al., “The Habitable Exoplanet Observatory HabEx mission concept study final report,” arXiv e-prints (2020). https://arxiv.org/abs/2001.06683.

- [4] P. Willems, “Starshade to TRL5 (S5) technology development plan,” Jet Propulsion Laboratory Publications (2018). https://exoplanets.nasa.gov/internal_resources/1033.

- [5] T. Flinois, M. Bottom, S. Martin, et al., “S5: Starshade technology to TRL5 Milestone 4 Final Report: Lateral formation sensing and control,” Jet Propulsion Laboratory Publications (2018).

- [6] T. L. B. Flinois, D. P. Scharf, C. R. Seubert, et al., “Starshade formation flying II: formation control,” Journal of Astronomical Telescopes, Instruments, and Systems 6(2), 1 – 28 (2020).

- [7] L. M. Palacios, A. Harness, and N. J. Kasdin, “Hardware-in-the-loop testing of formation flying control and sensing algorithms for starshade missions,” Acta Astronautica 171, 97–105 (2020).

- [8] M. C. Noecker, “Alignment of a terrestrial planet finder starshade at 20-100 megameters,” in Techniques and Instrumentation for Detection of Exoplanets III, D. R. Coulter, Ed., Society of Photo-Optical Instrumentation Engineers (SPIE) Conference Series 6693, 669306 (2007).

- [9] A. Harness, S. Shaklan, P. Willems, et al., “Optical verification experiments of sub-scale starshades,” Journal of Astronomical Telescopes, Instruments, and Systems 7(2), 1 – 32 (2021).

- [10] S. R. Martin and T. L. B. Flinois, “Simultaneous sensing of telescope pointing and starshade position,” Journal of Astronomical Telescopes, Instruments, and Systems 8(1), 1 – 18 (2022).

- [11] M. Bottom, S. Martin, E. Cady, et al., “Starshade formation flying I: optical sensing,” Journal of Astronomical Telescopes, Instruments, and Systems 6, 015003 (2020).

- [12] A. Harness, L. Palacios, and N. J. Kasdin, “Technology development for exoplanet missions final report: Formation flying for external occulters,” Jet Propulsion Laboratory Publications (2021).

- [13] Y. LeCun, C. Cortes, and C. Burges, “Mnist handwritten digit database,” ATT Labs [Online]. Available: http://yann.lecun.com/exdb/mnist 2 (2010).

- [14] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “Imagenet classification with deep convolutional neural networks,” in Advances in Neural Information Processing Systems, F. Pereira, C. J. C. Burges, L. Bottou, et al., Eds., 25, Curran Associates, Inc. (2012).

- [15] O. Russakovsky, J. Deng, H. Su, et al., “Imagenet large scale visual recognition challenge,” International Journal of Computer Vision 115, 211–252 (2015).

- [16] P. Fischer, A. Dosovitskiy, and T. Brox, “Image orientation estimation with convolutional networks,” in Pattern Recognition, 368–378, Springer International Publishing (2015).

- [17] L. Cavigelli, P. Hager, and L. Benini, “Cas-cnn: A deep convolutional neural network for image compression artifact suppression,” in 2017 International Joint Conference on Neural Networks (IJCNN), 752–759 (2017).

- [18] A. H. Barnett, “Efficient high-order accurate Fresnel diffraction via areal quadrature and the nonuniform fast Fourier transform,” Journal of Astronomical Telescopes, Instruments, and Systems 7(2), 1 – 19 (2021).

- [19] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” in 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, (2015).

- [20] L. N. Smith and N. Topin, “Super-convergence: Very fast training of neural networks using large learning rates,” (2018).

- [21] J. Alsing, B. Wandelt, and S. Feeney, “Massive optimal data compression and density estimation for scalable, likelihood-free inference in cosmology,” Monthly notices of the Royal Astronomical Society 477, 2874–2885 (2018).

- [22] T. Charnock, G. Lavaux, and B. D. Wandelt, “Automatic physical inference with information maximizing neural networks,” Physical Review D 97, 083004 (2018).

- [23] P. Melchior and A. D. Goulding, “Filling the gaps: Gaussian mixture models from noisy, truncated or incomplete samples,” Astronomy and Computing 25, 183–194 (2018).