Likelihood-based inference, identifiability and prediction using count data from lattice-based random walk models

Abstract

In vitro cell biology experiments are routinely used to characterize cell migration properties under various experimental conditions. These experiments can be interpreted using lattice-based random walk models to provide insight into underlying biological mechanisms, and continuum limit partial differential equation (PDE) descriptions of the stochastic models can be used to efficiently explore model properties instead of relying on repeated stochastic simulations. Working with efficient PDE models is of high interest for parameter estimation algorithms that typically require a large number of forward model simulations. Quantitative data from cell biology experiments usually involves non-negative cell counts in different regions of the experimental images, and it is not obvious how to relate finite, noisy count data to the solutions of continuous PDE models that correspond to noise-free density profiles. In this work we illustrate how to develop and implement likelihood-based methods for parameter estimation, parameter identifiability and model prediction for lattice-based models describing collective migration with an arbitrary number of interacting subpopulations. We implement a standard additive Gaussian measurement error model as well as a new physically-motivated multinomial measurement error model that relates noisy count data with the solution of continuous PDE models. Both measurement error models lead to similar outcomes for parameter estimation and parameter identifiability, whereas the standard additive Gaussian measurement error model leads to non-physical prediction outcomes. In contrast, the new multinomial measurement error model involves a lower computational overhead for parameter estimation and identifiability analysis, as well as leading to physically meaningful model predictions. Open access Julia software required to replicate the results in this study are available on GitHub.

1 Introduction

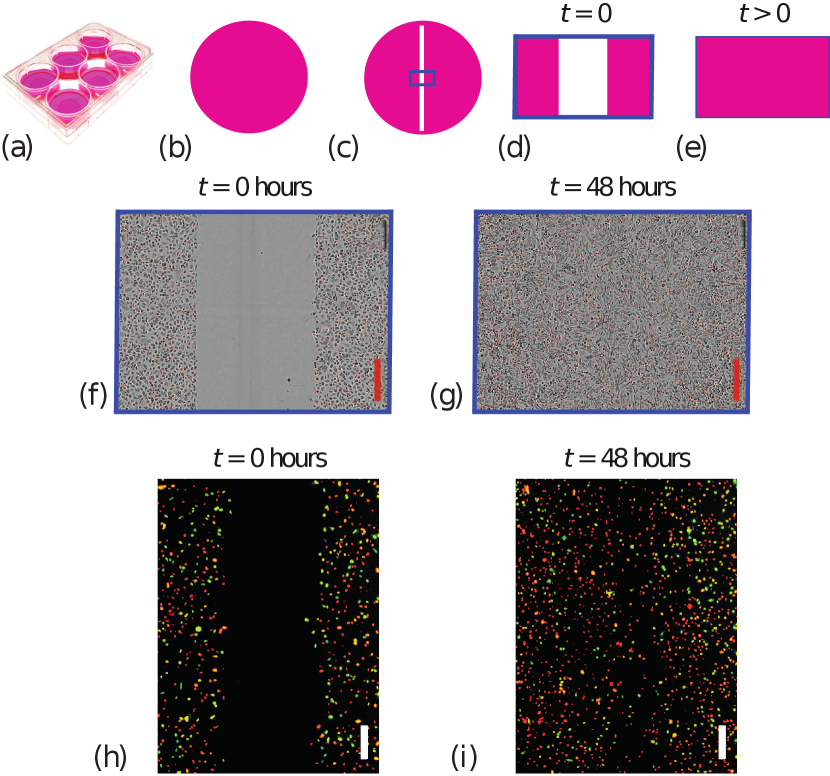

Two-dimensional cell migration assays, also called scratch assays, are routinely used to quantify how different populations of cells migrate [1]. These simple experiments, outlined in Figure 1, can be used to study potential drug treatments [2], different nutrient availability conditions [3], or interactions between different populations of cells [4]. Scratch assays involve growing uniform monolayers of cells on tissue culture plates before part of the monolayer is scratched away using a sharp-tipped instrument like a razor blade. The resulting recolonisation of the scratched region is then imaged, and the rate at which the scratch closes provides a simple measure of the ability of cells to migrate [1, 2]. Scratch assays conducted over relatively short periods of time (e.g. less than 24 hours) are often used to focus on cell migration [5], whereas experiments conducted over longer periods of time (e.g. greater than 24 hours) provide insight into the role of cell migration and cell proliferation combined [5].

One way of interpreting scratch assays is to implement a stochastic random walk model describing the motion of individual agents on a lattice [3, 6, 7, 8, 9]. The location of agents can be initialised to mimic the initial geometry of the scratch, and then agents are allowed to undergo a random walk, either biased or unbiased, with a simple exclusion mechanism to model crowding effects. Carefully choosing parameters in the random walk model to replicate experimental observations provides biological insight into the roles of directed and undirected migration among different subpopulations of cells within the experiment. Using a stochastic simulation model to interpret these experiments is advantageous because stochastic models allow us to keep track of individual cells within the population, as well as capturing the role of stochasticity in the experiments [10].

In the last decade there has been an increasing interest in parameter estimation for stochastic models of cell migration experiments [8, 9, 10]. When working with a discrete model of a spatially explicit biological process, such as cell migration, it has become customary to implement some form of Approximate Bayesian Computation (e.g. ABC rejection, ABC-MCMC) [8, 9, 10, 13], which is often motivated by noting that stochastic models are not associated with a tractable likelihood function. A similar trend has emerged in the spatial ecology literature, where parameter estimation has been dominated by different ABC methods [14, 15, 16, 17, 18]. In this work we demonstrate how to take a different approach by using likelihood-based methods for parameter estimation, parameter identifiability and model prediction [19]. Noting that scratch assays are often quantified by reporting cell counts in different spatial regions of experimental images [11, 12, 20], we explore how to use approximate continuum limit PDE models as a computationally efficient process model to describe the key mechanistic features in a scratch assay [21]. We work in a general setting by considering migration and crowding of a population composed of potentially distinct subpopulations of agents.

The main focus of our work is to compare two approaches for relating the solution of the PDE model to the observed noisy count data: (i) a traditional additive Gaussian measurement error model; and (ii) a new physically-motivated multinomial measurement error model. It is worth noting that applications of parameter estimation in biological applications involve working with an additive Gaussian measurement error model, and that this choice is often implemented without critically examining the implications of this assumption [22, 23]. Using maximum likelihood estimation and the profile likelihood [24, 25, 26, 27, 28], we obtain best-fit parameter estimates and likelihood-based confidence intervals that allow us to examine the practical identifiability of parameter estimates derived from noisy count data. Importantly, we also use a likelihood-based method to map the variability in parameter confidence sets to explore the variability in model predictions [29]. While both the additive Gaussian and multinomial measurement error models perform similarly in terms of parameter estimation and parameter identifiability, we show that the standard additive Gaussian measurement error model leads to unphysical predictions of negative agent counts, or counts of agents that locally exceed the maximum carrying capacity of the lattice. In contrast, the new physically-motivated multinomial measurement error model is simpler and faster to implement computationally compared to usual additive Gaussian measurement error model. Furthermore, the multinomial measurement error model leads to physically realistic model predictions while also avoiding the computational expense of a more standard ABC approach using a far more expensive stochastic process model.

This manuscript is organised as follows. In Section 2 we introduce the stochastic simulation model and the associated continuum-limit PDE. We also provide a general description of how noisy count data can be generated using the stochastic simulation algorithm to mimic experimental measurements for problems involving both single populations and two subpopulations of distinct agents. The two measurement error models are introduced along with methods for maximum likelihood estimation, practical identifiability analysis using the profile likelihood and likelihood-based prediction. In section 3 we present specific results for parameter estimation, parameter identifiability analysis and model prediction for a simple case of count data with one population of agents and a more realistic case of count data associated with a population composed of two subpopulations of agents. Finally, in Section 4 we summarise our findings and outline opportunities for future work.

2 Methods

2.1 Mathematical Models

In this study, we use two types of mathematical modelling frameworks. First, we use a stochastic lattice-based random walk model that will be described in Section 2.1.1. The motivation for using a computationally expensive stochastic model is that it provides a high-fidelity means of generating noisy data that is compatible with the kind of data generated experimentally. Second, we use a computationally efficient continuum limit description of the stochastic model. The continuum limit description takes the form of a system PDEs that will be described in Section 2.1.2.

2.1.1 Stochastic model

Experimental images from scratch assays shown in Figure 1 motivate our stochastic model. Scratch assay experiments are routinely used in experimental cell biology to quantify cell migration. For example, these experiments are often used to explore how different surface coatings or different putative drugs impact the ability of cells to migrate. Scratch assays are performed by growing a uniform population of cells in a tissue culture plate. A sharp-tipped instrument is used to create a scratch within the uniform monolayer, and individual cells within the population undergo random migration, where the motility of individual cells is influenced by crowding effects. The net outcome of this random migration is that cells move into the scratched region, and this closes the scratched region over time.

We use a lattice-based stochastic model to simulate the migration of distinct populations of agents on a two-dimensional lattice with lattice spacing [30]. In our simulations we think of each agent representing an individual cell in the experiment. The size of the lattice is , where is the width of the lattice and is the height of the lattice. Each site is indexed , and each site is associated with a unique Cartesian coordinate , where with and with .

Stochastic simulations are performed using a random sequential update method [31]. Simulations are initialised by placing a total of agents from distinct agent subpopulations on the lattice. If is the number of agents in the th subpopulation, then we have . To evolve the stochastic algorithm from time to time , agents are selected at random, one at a time, with replacement and given an opportunity to move [30]. When an agent belonging to subpopulation is chosen, that agent attempts to move with probability . The target site for potential motility events is chosen in the following way. The probability that a motile agent at site attempts to move to site is , whereas the probability that a motile agent at site attempts to move to site is . Here, is a bias parameter that influences the left-right directional bias in the horizontal direction. Setting indicates that there is no bias for agents in the th subpopulation. Any potential motility event that would place an agent on an occupied site is aborted. This means that our stochastic simulation algorithm is closely related to an exclusion process [31]. Periodic boundary conditions are applied along the horizontal boundaries, and reflecting boundary conditions are imposed along the vertical boundaries. Time steps in the simulations are indexed by so that for . To keep our simulation framework general we always work with a dimensionless simulations by setting . These simulations can be re-scaled using appropriate length and time scales to accommodate cells of different sizes and motility rates [6].

Although cells in the experiments in Figure 1 are free to move in any direction, scratching a uniform monolayer along the vertical direction means that the density of cells is independent of the vertical location within the image, and that the macroscopic density of cells varies with horizontal position. Therefore, these experimental images have been quantified by superimposing a series of uniformly-spaced columns across each image and counting the number of cells within each column [11, 12, 20]. This approach summarises the outcome of each experiment as a series of count data as a function of horizontal location of each column. The most standard way of reporting outcomes of a scratch assay is to image the experiment once at the end of the experiment and then counts of cells can be determined and reported. Alternatively, it is possible to repeat the imaging and counting process across a number of time points and report a time series of count data. Our modelling framework can be applied to either approach, but we will present our results for the more standard approach of working with data at one time point only, and in Section 4 we will explain how our methodology generalises to working with a time series of count data.

We will now describe how the stochastic random walk model can be used to generate count data in exactly the same way as count data are generated experimentally. In this work we always consider stochastic simulations that mimic the same geometry and design as in the experimental scratch assays. Therefore all simulations are initialised so that the expected occupancy status of lattice sites within the same column of the lattice is identical. Together with the boundary conditions, this ensures that the occupancy status of any lattice site is, on average, independent of vertical location at any time during the simulation.

The outcome of the stochastic simulations is to determine the occupancy of each lattice site for different subpopulations as a function of time. To quantify this we let denote the occupancy of site , for subpopulation after time steps. If site is occupied by an agent from subpopulation we have , otherwise . With this notation, the observed total agent count from subpopulation in column after time steps is

| (1) |

These counts are bounded since for all .

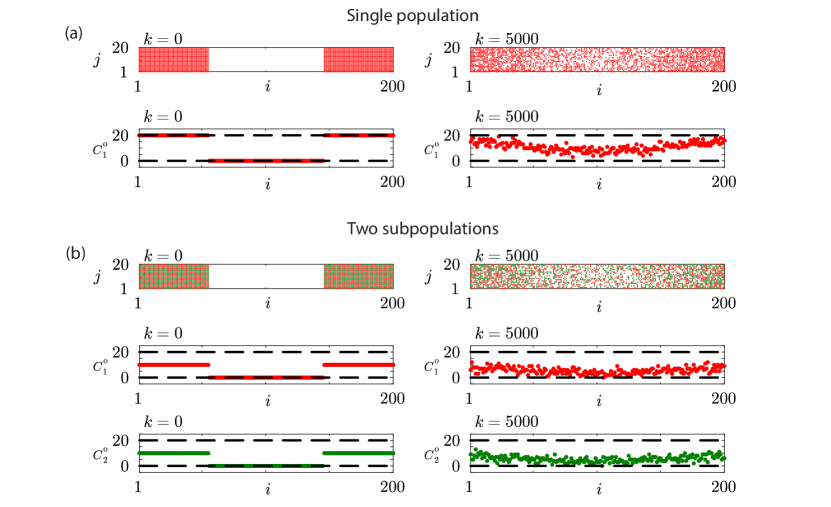

To mimic the scratch assay experiment in Figure 1(f)-(g) with a single population we consider stochastic simulations with on a lattice. Initially the lattice is populated so that each site with and is fully occupied. Agents move without directional bias by setting and . Results in Figure 2(a) show snapshots of the distribution of agents together with plots of the associated count data at the beginning and conclusion of the simulation. It is relevant to note that the count data at the end of the simulation, for , is noisy and exhibits large fluctuations across the columns of the lattice. These fluctuations are similar to the fluctuations observed in count data generated by quantifying images from a scratch assay performed using a single population of cells such as the experimental images in Figure 1(f)-(g).

We now explore how to use the stochastic model to mimic the scratch assay experiment in Figure 1(h)-(i) with two subpopulations of cells by initialising a lattice with two subpopulations of agents, . Stochastic simulations are initialised by randomly populating 50 of sites in each column with and with agents from subpopulation 1. The remaining sites in these columns are occupied by agents from subpopulation 2. Simulations are performed with and . For this choice of parameters it turns out that both subpopulations have the same migration rate and bias parameter which means that both subpopulations behave identically. Later in Section 2.2 we will show that the same ideas apply when the subpopulations are characterised by different migration rates and bias parameters. Results in Figure 2(b) show snapshots of the distribution of agents and plots of the associated count data. Since we are working with two subpopulations of agents we are able to generate two sets of count data per column, and for , after time steps. In this case we see that the count data at the end of the simulation are noisy and exhibit large fluctuations.

2.1.2 Continuum model

The stochastic model presented in Section 2.1.1 is well-suited to mimic noisy data from biological experiments. However, the stochastic model is computationally expensive, which means that it is not well-suited for parameter estimation, which can require a very large number of forward simulations. To make progress we will use a computationally efficient continuum limit approximations of the stochastic model. The continuum limit description of the stochastic model is given by a system of PDEs [30] that can be written in conservation form as

| (2) |

where is the dimensionless density of population at location and time , and the flux of subpopulation can be written as

| (3) |

where

| (4) |

In this study we focus on applications involving either one or two subpopulations. For the single population model , the continuum model simplifies to

| (5) |

where

| (6) |

For two subpopulations, , the continuum model simplifies to

| (7) |

with

| (8) |

| (9) |

where

| (10) |

An implicit assumption in the derivation of the continuum limit model is that we are dealing with a sufficiently large lattice such that fluctuations in count data are negligible. In this idealised scenario it is possible to relate count data to the solution of the continuum limit model by

| (11) |

where is the central position of the th column and indicates the occupancy of lattice site for subpopulation after time steps of the stochastic model. This relationship, which has been verified computationally [30], indicates that the solution of the continuum limit PDE model approaches the column-averaged density estimates obtained using count data only in the impractical situation where the height of the lattice (or the height of the experimental image) is sufficiently large. In practice, however, experiments always involve relatively small fields of view and consequently the associated count data can involve relatively large fluctuations that are similar to our simulation-derived count data in Figure 2 that is obtained using just . Under these practical conditions the relationship between the observed count data, for , and the solution of the continuum limit PDE, , is unclear. We will make progress in relating these two quantities by introducing two different types of measurement error models that account for the fluctuations in the count data in different ways [29].

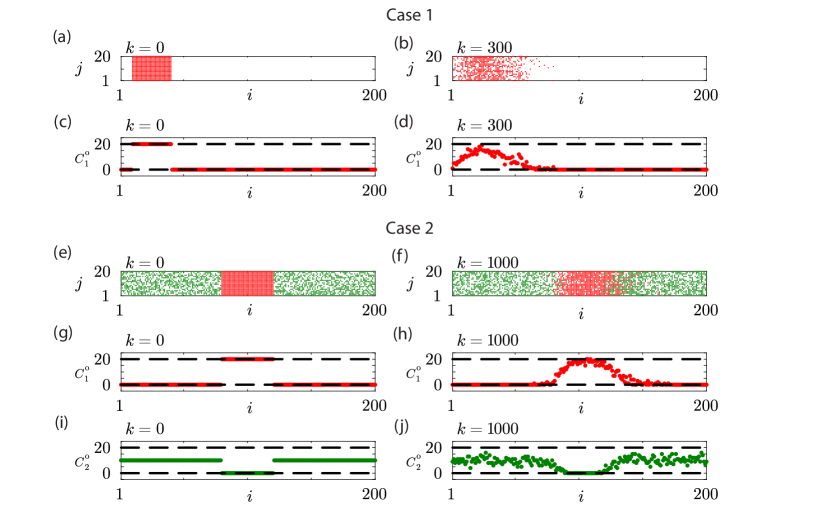

2.2 Data

For this simulation study we use synthetic data generated by stochastic model described in Section 2.1.1 to generate count data that has similar properties to count data obtained in a scratch assay. With this data we will explore different options for efficient likelihood-based parameter estimation, parameter identifiability analysis and model prediction. We will focus on two different cases: (i) Case 1 involves generating data at a single time point involving biased motility in the context of working with a single homogeneous population with ; and (ii) Case 2 involves generating data at a single time point involving biased motility with a population composed of two subpopulations with , All data are denoted using the vector . For Case 1, is a vector of length ; for Case 2, is a vector of length .

Case 1 involves a biased single population, , on a lattice where the motion of agents is biased, which corresponds to in the continuum model under idealised conditions where is sufficiently large. Since the motion is biased in the positive -direction, the initial placement of agents involves fully occupying sites with , as shown in Figure 3(a). The placement of agents towards the left boundary of the domain allows us to observe the biased motion in the positive -direction, and data collected after time steps gives rise to the snapshot shown in Figure 3(b). Count data in Figure 3(c)–(d) are given at the beginning and end of the simulation, respectively.

Case 2 involves a biased population composed of two subpopulations, , on a lattice with , corresponding to in the continuum model under idealised conditions where is sufficiently large. The initial placement of agents involves placing a group of agent from subpopulation 1 in the central region of the lattice so that all sites with are completely occupied by agents from subpopulation 1. All remaining lattice sites are randomly occupied by agents from subpopulation 2 with probability 0.5, as shown in Figure 3(e). This means that agents from subpopulation 1 will attempt to spread out from their original location with a small bias in their motion in the positive -direction. Since the motion of agents from subpopulation 1 are hindered by the presence of surrounding agents from subpopulation 2 we collect data after a larger number of time steps, , which gives rise to the snapshot in Figure 3(f). Count data for subpopulation 1 are given in Figure 3(g)–(h) whereas count data for subpopulation 2 are given in Figure 3(i)–(j).

2.3 Likelihood function

Our count data, summarised in Figure 3, are deliberately generated with so that the count data involve clear fluctuations and so the mathematical relationship between the discrete count data and the solution of the corresponding continuum limit model, Equation (2.1.2), is not guaranteed to hold. Therefore, we introduce two measurement error models [29] that allow us to relate the solution of the continuum model to the noisy count data in a probabilistic sense. In the first instance we take a standard approach and work with an additive Gaussian measurement error model because this is the most standard approach in the biological physics and mathematical biology literature. Secondly, we introduce a multinomial measurement error model which, as we will show, is a natural choice for working with count data.

The standard approach for relating data to solutions of differential equations is to assume that the data is normally distributed about the solution of the differential equation of interest. Throughout this work we will refer to this approach as working with an additive Gaussian measurement error model. For example, if we consider Case 1 where we have for and the solution of the continuum limit mathematical model is for , we make the standard assumption that for . Since we deal with counts across columns of the lattice, invoking a standard independence assumption gives us a log-likelihood function that can be written as

| (12) |

where is the Gaussian probability density function with mean and variance , and refers to the parameters in the continuum limit PDE model.

Working with an additive Gaussian measurement error model means that we have introduced additional parameters in the noise model, for . Throughout this work we will present results generated using the Gaussian additive noise model in terms of the standard deviation for . As we will demonstrate, data will be used to estimate the parameters in the continuum limit model description of the stochastic model as well as simultaneously estimating parameters in the measurement error model. For example, in Case 1 where we have we have two parameters in the continuum limit model and , and one parameter in the measurement error model . When working with the additive Gaussian measurement error model we will include both sets of parameters in the vector which means that with we have whereas with we have .

Instead of simply assuming that the data is normally distributed about the solution of the appropriate continuum limit model, we can take advantage of the structure of our count data and derive a more mechanistically-motivated measurement error model that we will refer to as a multinomial measurement error model. For example, when dealing with just a single population, , our count data simply consists of counts of agents within each column of the lattice, which implicitly defines a count of the vacant lattice sites in each column, meaning that our data takes the form and for . If we interpret at any given spatial location as corresponding to a finite number of independent samples from some underlying stochastic process, these samples can be thought of as an approximation of a continuous measurement of agent occupancy within that column. These data can be considered as samples from a distribution where the expected occupancy fraction is given by the solution of the continuum limit model . Together, these measurements imply a binomial likelihood,

| (13) |

Assuming all column counts, , are conditionally independent, given the continuum-limit solution, and taking the logarithm of the likelihood function gives us a log-likelihood function based on the binomial measurement error model for a single population with that can be written as

| (14) |

Similar arguments lead to a multinomial-based log-likelihood function for . Under these conditions our count data consists of counts of agents from each subpopulation within each column, for and . The geometry of the lattice means that counts of vacant sites is given by for . Again, we can interpret at any given spatial location as being generated by a finite number of independent samples from some underlying stochastic process, these samples can be thought of as an approximation of expected, noise-free measure of agent occupancy from the th subpopulation within that column. These data can be considered as samples from a distribution where the expected occupancy fraction is given by the solution of the continuum limit model, . Following the same arguments as above for , these measurements imply multinomial log-likelihood function which can be written as

| (15) |

This multinomial log-likelihood function relaxes to the binomial log-likelihood function, derived previously, when , and here we have written the data using the compact vector notation . In practice, when we evaluate the multinomial log-likelihood function we simply set the proportionality constant to unity. Unlike the additive Gaussian measurement error model, working with the multinomial measurement error model does not involve introducing any new parameters. When we work with we have , whereas involves .

2.4 Likelihood-based estimation and identifiability

Given a set of count data together with an appropriate process model and measurement error model with all unknown parameters summarised in the vector , we have access to a log-likelihood function, . Consequently, the choice of parameters that gives the best match to the data is given by which maximises the log-likelihood, giving rise to the maximum likelihood estimate (MLE),

| (16) |

Throughout this work we always use numerical optimization calculate . In particular all numerical optimization calculations are performed using the Nelder-Mead algorithm with simple bound constraints [32] implemented within the NLopt routine [33] in Julia. In general we find that our numerical optimization calculations are insensitive to the initial estimate and that the algorithm converges using default stopping criteria.

Given our estimate of , we use then the profile likelihood to quantify the precision of our parameter estimates by examining the curvature of the log-likelihood function [24, 25, 26, 27, 28]. To compare our results against asymptotic thresholds we work with a normalised log-likelihood function

| (17) |

so that . To proceed we partition the full parameter into interest parameters , and nuisance parameters , so that . In this study we restrict our attention to univariate profile likelihood functions which means that our interest parameter is always a single parameter. For a set of data , the profile log-likelihood for the interest parameter given the partition is

| (18) |

which implicitly defines a function of optimal values of for each value of . As for calculating the MLE, all profile likelihood functions in this work are calculated using the Nelder-Mead numerical optimization algorithm with the same bound constraints used to calculate . As a concrete example, if we work with the continuum limit model for a single population using the additive Gaussian measurement error model we have . In this scenario we can compute three univariate profile likelihood functions by choosing the interest parameter to be: (i) the diffusivity, and ; (i) the drift velocity, and ; and (iii) the standard deviation in the measurement error model, and . For all univariate profile likelihood calculations we work with a uniformly-discretized interval that contains the MLE. For example, if our interest parameter is we identify an interval, and evaluate across a uniform discretization of the interval to give a simple univariate function that we call the profile likelihood. The degree of curvature of the profile likelihood function provides information about the practical identifiability of the interest parameter. For example, if the profile log-likelihood function is flat then the interest parameter is non-identifiable. In contrast, when the profile log-likelihood function is curved the degree of curvature indicates inferential precision and we may determine likelihood-based confidence intervals where where denotes the th quantile of the distribution with degrees of freedom, which we take to be the relevant number of unknown parameters [34]. For example, identifying the interval where allows us to identify the asymptotic 95% confidence interval [24, 29] for a univariate profile likelihood function with one free parameter. This procedure gives us a simple way of identifying the width of the interval . A simple approach to determine suitable choices of and is to compute across some initial estimate of the interval and if the values of the profile log-likelihood do not intersect the relevant asymptotic threshold then we simply continue to compute the profile log-likelihood function on a wider interval. In contrast, if we compute the profile log-likelihood on some interval and find that this interval is very wide compared to the relevant asymptotic threshold we can simply re-compute the profile log-likelihood function on a narrower interval. Since these computations involve numerical optimisation calculations where we always have a reasonably good estimate to start the iterative calculations (e.g. ) we find that these computations are efficient.

2.5 Likelihood-based prediction

The methods outlined so far focus on taking noisy count data and exploring how to find the MLE parameter estimates, and to take an asymptotic log-likelihood threshold to define a parameter confidence set so that we can understand how variability in count data corresponds to variability in parameter estimates. In this section we will now explore how variability in parameter estimates maps to variability in model predictions. In particular, our focus will be to compare likelihood-based predictions using the traditional additive noise model with the multinomial noise model and explore differences in these approaches.

Given a set of count data, , and an associated normalised log-likelihood function, , we proceed by identifying the asymptotic 95% log-likelihood threshold , where is the number of free parameters. Using rejection sampling we obtain parameter sets that lie within the 95% log-likelihood threshold so that we have that satisfy for . For each within the confidence set we solve the relevant continuum model to give solutions that correspond to the mean trajectory prediction as defined by the relevant measurement error model [29]. Since all measurement error models considered in this work involve a parametric distribution, we can also construct various data realization predictions [29] by considering various measures of the width of that parameteric distribution about the mean. For simplicity we will consider the 5% and 95% quantiles of the relevant distributions as a simple measure of distribution width. To make a prediction using the single population model we denote the mean trajectory as to denote the solution of Equation (5) using the th parameter sample from within the parameter confidence set. To plot the solutions we discretize the spatial location so that we have , where for . For each of the mean trajectories we now have an interval for , where the lower bound corresponds to the 5% quantile of the measurement error noise model and the upper bound corresponds to the 95% quantile of the measurement error noise model. For a fixed parameter , the 5% and 95% quantiles of the additive Gaussian measurement error model are constants determined by . For the multinomial measurement error model the 5% and 95% quantiles are no longer constants but vary with the mean of the distribution. For the multinomial distribution relaxes to the binomial distribution and hence the bounds at location correspond to the 5% and 95% quantiles of the binomial distribution with trials with probability of success . For the multinomial measurement error model with the bounds for subpopulation at location correspond to the 5% and 95% quantiles of the th marginal distribution, again with trials with probability of success . After calculating these intervals around each mean trajectory we then take the union to define . Here the minimum value of and the maximum value of are computed for each fixed value of across the set of different parameter values, . This simple computational procedure gives us a complete picture of the prediction interval that can be interpreted as a form of tolerance interval [35] accounting for both parameter uncertainty and data realization uncertainty [21].

3 Results and Discussion

Case 1

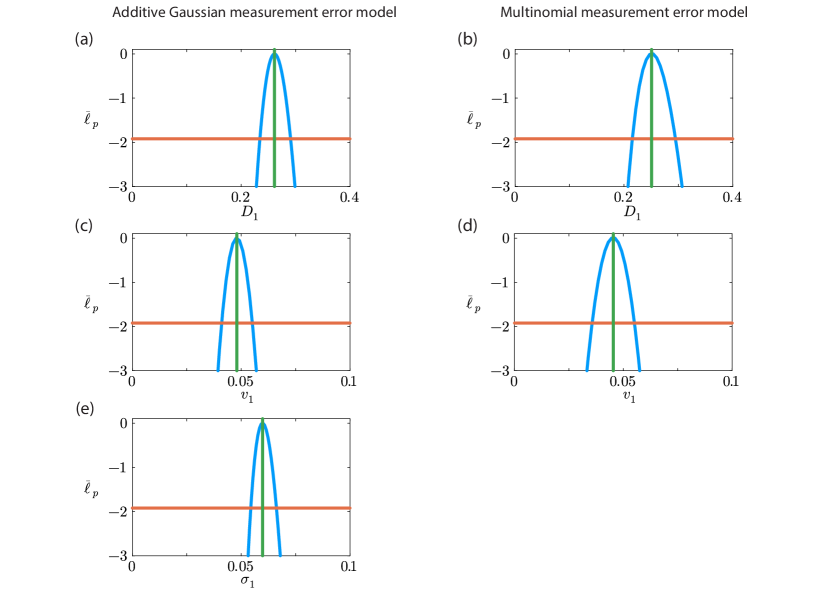

Working with the additive Gaussian measurement error model the MLE for the count data in Case 1 is . The estimates of the diffusivity and drift velocity differ slightly from the idealised result of and for perfect noise-free data with sufficiently large . We attribute these differences to the role of fluctuations in the count data that are obtained with just . When working with the multinomial measurement error model the MLE for the same count data is . Overall, comparing the MLE estimates of the diffusivity and drift velocity indicate that the different measurement error noise models lead to small differences in the MLE for and . Here, the only practical difference is that working with the additive Gaussian measurement error model involves additional computational effort required to estimate .

The broad similarity between our results for the two measurement error models can be partly explained by noting that the multinomial error model simplifies to a binomial error model when , and that the binomial distribution can be approximated by a Gaussian distribution under well-known conditions. The central limit theorem indicates that when both and are sufficiently large. In Case 1 we have on a lattice of size which means the average occupancy across all columns of the lattice is . On average this is equivalent to having 2 or 3 agents per column across the lattice. We will make progress with this estimate of despite the fact there is a very high variability in the number of agents per column in Figure 3(d) where we see that many columns contain zero agents, whereas there is one column containing 19 agents. Under this clearly questionable approximation, the normal approximation of the binomial distribution implies that , or for our data. Despite these assumptions, this estimate of is not dramatically different from our estimate of obtained by using the additive Gaussian measurement error model directly. This is consistent with our observations that our parameter estimates are not very sensitive to the choice of measurement error model.

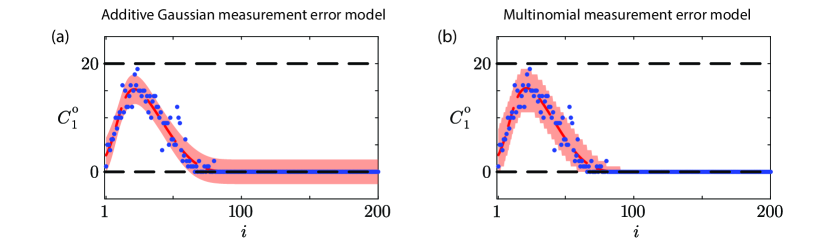

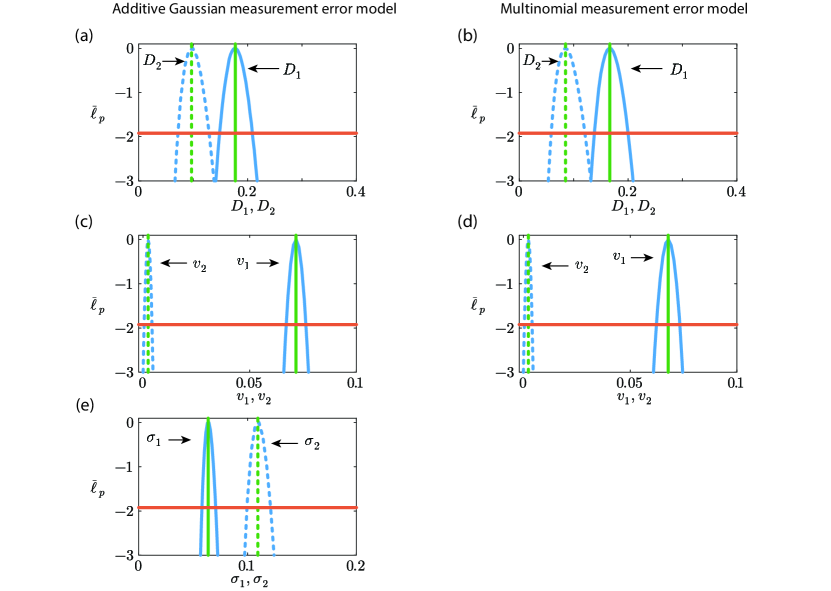

We now consider the identifiability of our parameter estimates by constructing various univariate profile likelihood functions for each parameter, as shown in Figure 4. Regardless of which measurement error model is used, overall we see that all univariate profiles are well formed about a single distinct peak at the MLE indicating that all parameters in both loglikelihood functions are practically identifiable. For all parameters we are able to identify an interval where to define a 95% asymptotic confidence interval [34]. For example, with the additive Gaussian measurement error model we have and the 95% confidence interval is , indicating that our estimate is reasonably precise. Since all profile likelihood functions are fairly narrow we conclude that all parameters are reasonably well identified by this data, and again the main difference between working with the additive Gaussian measurement error model and the multinomial measurement error model is the additional computational effort required to compute the profile likelihood for when working with the additive Gaussian measurement error model.

Results in Figure 5 illustrate the prediction intervals for data realizations for Case 1, and here we see a significant difference between the two measurement error models. Results in Figure 5(a) for the additive Gaussian measurement error model show that the prediction interval is a smooth interval about the solution evaluated at the MLE, and importantly in regions where the expected counts are zero (i.e. ), the lower bound of the prediction interval is negative. This result is unhelpful because count data are, by definition, non-negative. This is an important limitation of working with the standard additive Gaussian measurement error model, since the prediction interval does not necessarily obey the physical constraint that . Repeating this exercise using a different initial arrangement of agents on the lattice, or stopping the simulation at an earlier time can lead to prediction intervals that exceed . In contrast, results in Figure 5(b) for the multinomial measurement error model show that the prediction interval is not smooth, and this reflects the fact that count data are non-negative integers. Importantly, the prediction intervals derived using the multinomial measurement error model obey the physical constraint . Therefore, unlike our results in Figure 4 where the details of the measurement error model made little difference beyond computational efficiency, here in terms of prediction we see that the standard approach can lead to non-physical outcomes whereas the physically-motivated multinomial measurement error model is more computationally efficient to work with, as well as leading to physically meaningful prediction intervals. For the particular results in Figure 5 we have 94% of count data lying within the prediction interval for the additive Gaussian measurement error model and 97.5% of count data falling within the prediction interval for the multinomial measurement error model.

We will now repeat this exercise for Case 2 to explore the consequences within the context of working with a population composed of multiple interacting subpopulations.

Case 2

With the additive Gaussian measurement error model the MLE for the count data in Case 2 is . As for Case 1, these estimates of diffusivities and drift velocities differ from the idealised results. This difference is because we are working with noisy data with as well as the approximate nature of the mean-field PDE model. When working with the multinomial measurement error model the MLE is . Comparing the MLE estimates indicate that the different measurement error noise models only leads to small differences in the MLE, and main practical difference is that working with the additive Gaussian measurement error model involves additional computational effort required to estimate both and .

The identifiability of our parameter estimates is explored by constructing various univariate profile likelihood functions shown in Figure 6. Again, regardless of which measurement error model is used, all univariate profiles are well formed about a single distinct peak at the MLE indicating that all parameters in both loglikelihood functions are practically identifiable with reasonably narrow 95% asymptotic confidence intervals. Again the main difference between working with the additive Gaussian measurement error model and the multinomial measurement error model is the additional computational effort required to compute the profile likelihoods for and when working with the additive Gaussian measurement error model.

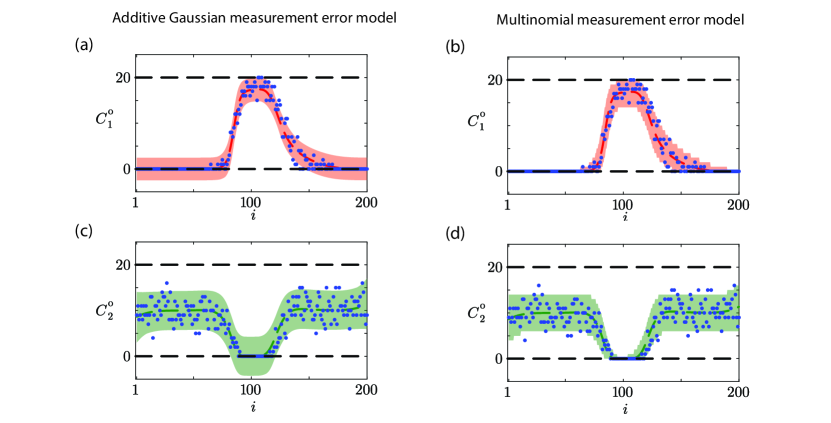

Results in Figure 7 illustrate the prediction intervals for data realizations for Case 2, and again we see a significant difference between the two measurement error models. Results in Figure 7(a) and (c) for the Gaussian measurement error model show smooth prediction intervals about the MLE solution, and in regions of low density the lower bound of the prediction interval is negative which is physically impossible. Alternatively, results in Figure 7(b) and (d) for the multinomial measurement error model leads to physically realistic, non-smooth predictions that reflect the fact that count data are non-negative integers that obey the physical constraint that and . For the particular results in Figure 7 we have 94.5% of count data lying within the prediction interval for the additive Gaussian measurement error model and 96.75% of count data falling within the prediction interval for the multinomial measurement error model.

4 Conclusion and Future Work

In this work we have presented a likelihood-based framework that can be used for parameter estimation, parameter identifiability analysis and model prediction for lattice-based random walk models. In particular, we focus on lattice-based models of biased migration of potentially distinct subpopulations of agents that can be relevant for interpreting cell biology experiments that involve multiple interacting subpopulations of cells. Many recent investigations of parameter estimation for these kinds of stochastic models have focused on various Approximate Bayesian Computation methods based on repeated stochastic simulations, and these approaches are often justified because likelihood models are not always considered. Here we take a different approach and note that count data from stochastic simulation algorithms can be described using several likelihood models and working with a continuum limit PDE approximation means that we have access to a computationally efficient PDE model together with a simple likelihood model that can facilitate maximum likelihood estimation, profile likelihood-based analysis of parameter identifiability and likelihood-based model prediction. Our approach can lead to significant computational improvements, for example computing the stochastic simulation data in Figure 3(a)-(d) for Case 1 is approximately ten times longer than solving the corresponding PDE model parameterised by with , and similar computational improvements hold for Case 2.

Many studies focusing on parameter estimation in the mathematical biology and biophysics literature often work with an additive Gaussian measurement error model to relate noisy observations to the solution of a continuous differential equation. Here we follow the same approach noting that this approach also leads to additional parameters for to be estimated along with the parameters in the process model. Alternatively, we note that count data obtained from the stochastic simulation model naturally leads to a multinomial measurement error model that can also be implemented and the main contribution of our study is to compare the performance of the likelihood-based parameter estimates, identifiability analysis and model prediction for the two measurement error models. In general we show that the two approaches can lead to very little differences in terms of parameter estimates and profile likelihood functions, however the outcomes in terms of model predictions is very different. In particular the standard approach of working with an additive Gaussian measurement error model can lead to non-physical model predictions where the lower bound of the prediction interval is negative and the upper bound of the prediction interval exceeds the maximum packing density of agents per column in the random walk model. In contrast the multinomial measurement error model leads to physically meaningful prediction intervals that obey physical constraints imposed by the lattice-based modelling framework.

The results presented in this study correspond to working with a stochastic model describing the motion of subpopulations of agents on a lattice, and the movement of agents in each subpopulation can be biased or unbiased, and this modelling framework can be described by a system of nonlinear PDEs for the non-dimensional density for . Our framework can also be applied to a broader set of mechanisms and more complicated PDE models. For example, incorporating a birth-death process into the stochastic model [36] leads to a more complicated stochastic model where can now change over time and the mean-field PDE description involves a source term,

| (19) |

where is the dimensionless density of population at location and time . Here denotes the flux of subpopulation , and this would remains the same as in (3) provided that the discrete motility mechanism remains the same, whereas denotes a source/sink term for subpopulation that models the impact of the birth-death process in the stochastic model, and we note that this source term is related to a generalised logistic growth term. Our approach for relating noisy count data from the stochastic model to the solution of a mean-field PDE model remains unchanged regardless of whether we consider incorporating a birth-death process. Clearly it is also possible to generalise the motility mechanism beyond working with the simple biased motility mechanisms explored in this work. Some interesting generalisations would be to incorporate cell pulling/pushing [37, 38] or cell swapping mechanisms [39] into the discrete model. Since the mean-field descriptions of such generalized mechanisms have been previously derived and validated [37, 38, 39] it is straightforward to incorporate these more detailed mechanisms into the same framework outlined in this study. It is worth noting some caution is warranted, however, as incorporating additional into the discrete model runs a risk of encountering identifiability issues as the size of the parameter space increases. Therefore, it is prudent to always construct the univariate profile likelihood functions to ensure that parameter estimates are sufficiently precise before biological mechanisms can be associated with parameter estimates.

A final comment is that we chose to present our Case studies in the typical scenario where data is obtained at one time point only. This simplification was motivated by the fact that it is fairly common in simple cell biology experiments to image the experiment after one fixed time point. It is conceptually and computationally straightforward to generalise our approach to work with data collected at time points simply by summing over these additional time points in the log-likelihood function, . For example, the multinomial log-likelihood function for count data collected at time points generalises to

| (20) |

where , and the data vector has length . With this modestly generalised log-likelihood function we can employ the same numerical optimization procedures to calculate and the associated profile likelihood functions, and the approach for calculating likelihood-based model predictions remains the same. A similar generalisation can also be implemented to work with the additive Gaussian measurement error model with data collected at time points.

An important feature of the modelling presented in this study is that we consider a two-dimensional stochastic model of a scratch assay where the density of agents at the beginning of the simulation is independent of vertical location and remains, on average, independent of vertical location during the simulation. This simplification is consistent with scratch assay design, and under these conditions we work with one-dimensional noisy count data, , that depends upon the horizontal position , and time . For other application we may be interested in genuinely two-dimensional count data and it is straightforward to generate and interrogate this kind of data using the same stochastic simulation model by performing a suite of identically-prepared realisations. If is a binary variable that denotes the occupancy status for subpopulation at site after time steps in the th identically-prepared realization, then is the noisy count data generated by considering identically-prepared realizations. Following the same ideas outlined in this work, we can solve a two-dimensional mean-field PDE model to give and use either an additive Gaussian measurement error model or a multinomial measurement error model to calculate , the associated profile likelihood-based confidence intervals, and likelihood-based model predictions. The key difference is that in the current work the count data is associated with trials where the expected column occupancy fraction is and is the height of the lattice. For applications where agent density varies with both vertical and horizontal position the count data is associated with trials where the expected site occupancy fraction is and is the number of identically-prepared realizations of the stochastic model. The same concepts apply to three-dimensional applications.

Appendix A Numerical methods

In this work we consider two different continuum models. For problems involving one population we generate numerical solutions of Equation (5), . For problems involving two subpopulations we generate numerical solutions of Equation (7), and . We will now describe how we obtain these numerical solutions. In brief we use a method-of-lines approach where we spatially discretize the PDE models using standard finite difference approximations for the spatial terms and then solve the resulting system of coupled ordinary differential equations (ODE) in time using the DifferentialEquations.jl package by taking advantage of automatic time stepping and temporal truncation error control [40].

To solve Equation (5) on we discretize the spatial terms on a uniform grid with grid spacing , such that for , where . At and , corresponding to mesh points and , respectively, we impose a zero flux boundary conditions to give

| (21) | ||||

| (22) | ||||

| (23) |

We solve the system of ODEs given by Equations (21)–(23) using Heun’s method with adaptive time-stepping [40]. All results presented in this work correspond to . To ensure our results are grid independent we considered a number of test cases and checked that numerical results with were indistinguishable from results with .

To solve Equation (7) on we discretize the spatial terms on a uniform grid with grid spacing , such that and for . No flux boundary conditions are imposed at and , leading to

| (24) | ||||

| (25) | ||||

| (26) |

References

- [1] Chun-Chi Liang, Ann Y Park and Jun-Lin Guan “ scratch assay: A convenient and inexpensive method for analysis of cell migration ” In Nature Protocols 2.2 Nature Publishing Group, 2007, pp. 329–333 DOI: 10.1038/nprot.2007.30

- [2] Ayman Grada et al. “Research techniques made simple: Analysis of collective cell migration using the wound healing assay” In Journal of Investigative Dermatology 137, 2017, pp. e11–e16 DOI: 10.1016/j.jid.2016.11.020

- [3] Evgeniy Khain et al. “Collective behavior of brain tumor cells: The role of hypoxia” In Physical Review E 83, 2011 DOI: 10.1103/PhysRevE.83.031920

- [4] Carles Falcó, Daniel.. Cohen, José.. Carrillo and Ruth.. Baker “Quantifying tissue growth, shape and collision via continuum models and Bayesian inference” In Journal of the Royal Society Interface 20, 2023 DOI: 10.1098/rsif.2023.0184

- [5] Stuart T Johnston, Matthew J Simpson and D… McElwain “How much information can be obtained from tracking the position of the leading edge in a scratch assay?” In Journal of the Royal Society Interface 11 Royal Society, 2014 DOI: 10.1098/rsif.2014.0325

- [6] M.. Simpson, K.. Landman and B.. Hughes “Cell invasion with proliferation mechanisms motivated by time-lapse data” In Physica A: Statistical Mechanics and its Applications 389, 2010, pp. 3779–3790 DOI: 10.1016/j.physa.2010.05.020

- [7] Thomas Callaghan, Evgeniy Khain, Leonard M. Sander and Robert M. Ziff “A stochastic model for wound healing” In Journal of Statistical Physics 122, 2006, pp. 909–924 DOI: 10.1007/s10955-006-9022-1

- [8] R.. Ross et al. “Using approximate Bayesian computation to quantify cell–cell adhesion parameters in a cell migratory process” In NPJ Systems Biology and Applications 3, 2017 DOI: 10.1038/s41540-017-0010-7

- [9] A.. Browning, S.. McCue and M.. Simpson “A Bayesian computational approach to explore the optimal duration of a cell proliferation assay” In Bulletin of Mathematical Biology 79, 2017, pp. 1888–1906 DOI: 10.1007/s11538-017-0311-4

- [10] B.. Vo, C.. Drovandi, A.. Pettitt and M.. Simpson “Quantifying uncertainty in parameter estimates for stochastic models of collective cell spreading using approximate Bayesian computation” In Mathematical Biosciences 263, 2015, pp. 133–142 DOI: 10.1016/j.mbs.2015.02.010

- [11] W. Jin et al. “Reproducibility of scratch assays is affected by the initial degree of confluence: Experiments, modelling and model selection” In Journal of Theoretical Biology 390, 2016, pp. 136–145 DOI: 10.1016/j.jtbi.2015.10.040

- [12] S.. Vittadello et al. “Mathematical models for cell migration with real-time cell cycle dynamics” In Biophysical Journal 114, 2018, pp. 1241–1253 DOI: 10.1016/j.bpj.2017.12.041

- [13] Brenda N. Vo, Christopher C. Drovandi, Anthony N. Pettitt and Graeme J. Pettet “Melanoma cell colony expansion parameters revealed by approximate Bayesian computation” In PLOS Computational Biology 11, 2015 DOI: 10.1371/journal.pcbi.1004635

- [14] Nikolai W F Bode “Parameter calibration in crowd simulation models using approximate Bayesian computation” In arXiv preprint, 2020 DOI: 10.48550/arXiv.2001.10330

- [15] Grant Hamilton et al. “Bayesian estimation of recent migration rates after a spatial expansion” In Genetics 170, 2005, pp. 409–417 DOI: 10.1534/genetics.104.034199

- [16] Rune Rasmussen and Grant Hamilton “An approximate Bayesian computation approach for estimating parameters of complex environmental processes in a cellular automata” In Environmental Modelling & Software 29, 2012, pp. 1–10 DOI: 10.1016/j.envsoft.2011.10.005

- [17] Elske van der Vaart, Mark A. Beaumont, Alice S.A. Johnston and Richard M. Sibly “Calibration and evaluation of individual-based models using Approximate Bayesian Computation” In Ecological Modelling 312, 2015, pp. 182–190 DOI: 10.1016/j.ecolmodel.2015.05.020

- [18] Elske van der Vaart, Dennis Prangle and Richard M. Sibly “Taking error into account when fitting models using Approximate Bayesian Computation” In Ecological Applications 28, 2018, pp. 267–274 DOI: 10.1002/eap.1656

- [19] Larry Wasserman “All of Statistics: A Concise Course in Statistical Inference” Springer, 2004

- [20] Alessio Gnerucci, Paola Faraoni, Elettra Sereni and Francesco Ranaldi “Scratch assay microscopy: A reaction–diffusion equation approach for common instruments and data” In Mathematical Biosciences 330, 2020 DOI: 10.1016/j.mbs.2020.108482

- [21] M.. Simpson, R.. Murphy and O.. Maclaren “Modelling count data with partial differential equation models in biology” In Journal of Theoretical Biology 580, 2024 DOI: 10.1016/j.jtbi.2024.111732

- [22] Keegan E Hines, Thomas R Middendorf and Richard W Aldrich “Determination of parameter identifiability in nonlinear biophysical models: A Bayesian approach” In Journal of General Physiology 143, 2014, pp. 401–416 DOI: 10.1085/jgp.201311116

- [23] Matthew J Simpson, Ruth E Baker, Sean T Vittadello and Oliver J Maclaren “Practical parameter identifiability for spatio-temporal models of cell invasion” In Journal of the Royal Society Interface 17, 2020 DOI: 10.1098/rsif.2020.0055

- [24] Y. Pawitan “In All Likelihood: Statistical Modelling and Inference Using Likelihood” Oxford University Press, 2001

- [25] A. Raue et al. “Structural and practical identifiability analysis of partially observed dynamical models by exploiting the profile likelihood” In Bioinformatics 25, 2009, pp. 1923–1929 DOI: 10.1093/bioinformatics/btp358

- [26] A. Raue, C. Kreutz, F.. Theis and J. Timmer “Joining forces of Bayesian and frequentist methodology: A study for inference in the presence of non-identifiability” In Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 371, 2013 DOI: 10.1098/rsta.2011.0544

- [27] A.. Villaverde, N. Tsiantis and J.. Banga “Full observability and estimation of unknown inputs, states and parameters of nonlinear biological models” In Journal of the Royal Society Interface 16, 2019 DOI: 10.1098/rsif.2019.0043

- [28] A.. Villaverde et al. “A protocol for dynamic model calibration” In Briefings in Bioinformatics 23, 2022 DOI: 10.1093/bib/bbab387

- [29] R. Murphy, O.. Maclaren and M.. Simpson “Implementing measurement error models with mechanistic mathematical models in a likelihood-based framework for estimation, identifiability analysis and prediction in the life sciences” In Journal of the Royal Society Interface 21, 2024 DOI: 10.1098/rsif.2023.0402

- [30] M.. Simpson, K.. Landman and B.. Hughes “Multi-species simple exclusion processes” In Physica A: Statistical Mechanics and its Applications 388, 2009, pp. 399–406 DOI: 10.1016/j.physa.2008.10.038

- [31] D. Chowdhury, A. Schadschneider and K. Nishinari “Physics of transport and traffic phenomena in biology: From molecular motors and cells to organisms” In Physics of Life Reviews 2, 2005, pp. 318–352 DOI: 10.1016/j.plrev.2005.09.001

- [32] J.. Nelder and R. Mead “A simplex method for function minimization” In The Computer Journal 7, 1965, pp. 308–313 DOI: 10.1093/comjnl/7.4.308

- [33] S.. Johnson “The NLopt module for Julia”, 2018 URL: https://github.com/JuliaOpt/NLopt.jl

- [34] P. Royston “Profile likelihood for estimation and confidence intervals” In The Stata Journal 7, 2007, pp. 376–387 DOI: 10.1177/1536867x0700700305

- [35] S.. Vardeman “What about the other intervals?” In The American Statistician 46, 1992, pp. 193–197 DOI: 10.1080/00031305.1992.10475882

- [36] Ruth E. Baker and Matthew J. Simpson “Correcting mean-field approximations for birth-death-movement processes” In Physical Review E 82, 2010 DOI: 10.1103/PhysRevE.82.041905

- [37] G. Chappelle and C.. Yates “Pulling in models of cell migration” In Physical Review E 99, 2019 DOI: 10.1103/PhysRevE.99.062413

- [38] C.. Yates, A. Parker and R.. Baker “Incorporating pushing in exclusion-process models of cell migration” In Physical Review E. 91, 2015 DOI: 10.1103/PhysRevE.91.052711

- [39] S.. Noureen, J.. Owen, R.. Mort and C.. Yates “Swapping in lattice-based cell migration models” In Physical Review E 107, 2023 DOI: 10.1103/PhysRevE.107.044402

- [40] C Rackauckas and Q Nie “DifferentialEquations.jl – A performant and feature-rich ecosystem for solving differential equations in Julia” In Journal of Open Research Software 5, 2017 DOI: 10.5334/jors.151