Likelihood free inference for Markov processes: a comparison

Abstract

Approaches to Bayesian inference for problems with intractable likelihoods have become increasingly important in recent years. Approximate Bayesian computation (ABC) and “likelihood free” Markov chain Monte Carlo techniques are popular methods for tackling inference in these scenarios but such techniques are computationally expensive. In this paper we compare the two approaches to inference, with a particular focus on parameter inference for stochastic kinetic models, widely used in systems biology. Discrete time transition kernels for models of this type are intractable for all but the most trivial systems yet forward simulation is usually straightforward. We discuss the relative merits and drawbacks of each approach whilst considering the computational cost implications and efficiency of these techniques. In order to explore the properties of each approach we examine a range of observation regimes using two example models. We use a Lotka–Volterra predator prey model to explore the impact of full or partial species observations using various time course observations under the assumption of known and unknown measurement error. Further investigation into the impact of observation error is then made using a Schlögl system, a test case which exhibits bi-modal state stability in some regions of parameter space.

School of Mathematics & Statistics, Newcastle University,

Newcastle upon Tyne, NE1 7RU, UK

Keywords: Markov processes; approximate Bayesian computation(ABC); pMCMC; stochastic kinetic model; systems biology; sequential Monte Carlo.

1 Introduction

Computational systems biology is concerned with the development of dynamic simulation models for complex biological processes (Kitano, 2002). Such models are useful for contributing to the quantitative understanding of the underlying process through in-silico experimentation that would be otherwise difficult, time consuming or expensive to undertake in a laboratory. Stochastic kinetic models describe the probabilistic evolution of a dynamical system made up of a network of reactions. Models of this type are increasingly used to describe the evolution of biological systems (Golightly & Wilkinson, 2005; Proctor et al., 2007; Boys et al., 2008; Wilkinson, 2009). Motivated by the need to incorporate intrinsic stochasticity in the underlying mechanics of the systems these models are naturally represented by a Markov jump process. Such systems are governed by a reaction network, each of which changes the state by a discrete amount and are hence naturally represented by a continuous time Markov process on a discrete state space. State transition densities for models of this type are analytically intractable but forward simulation is available through use of, for example, the Direct method described by Gillespie (1977). Models typically have a number of rate parameters which are important and of interest, but inference for these is an extremely challenging problem.

Parameter inference for Markov process models is often a computationally demanding problem due in part to the intractable likelihood function. Exact inference is possible through particle Markov chain Monte Carlo (pMCMC) (Andrieu & Roberts, 2009; Andrieu et al., 2010), computationally intensive methods that make use of sequential Monte Carlo sampling techniques, embedded in a MCMC scheme. PMCMC in this context requires running a sequential Monte Carlo filter, such as a bootstrap particle filter at each MCMC iteration, to provide an estimate to the likelihood. The bootstrap filter is dependent on multiple forward simulations from the model for reliable estimation, leading to an expensive algorithm.

Approximate Bayesian computation (ABC) techniques have also shown to be a useful development when tackling problems with intractable likelihood functions. They allow inference in this scenario via an approximation to the posterior distribution. As in pMCMC, the ABC framework depends on a large number of model realisations given proposed parameter vectors, retaining samples which yield simulated data that is deemed to be sufficiently close to the observed data set, see Tavare et al. (1997); Pritchard et al. (1999); Beaumont et al. (2002). Using a simple rejection sampling approach often leads to poor acceptance rates for tolerance thresholds that give an accurate approximation to the posterior, (Pritchard et al., 1999), meaning that a potentially very high number of data simulations must be made in order to obtain a good sample. Advancements within this framework have led to MCMC schemes and sequential Monte Carlo schemes, which typically return better acceptance rates than the simple rejection sampler, (Marjoram et al., 2003; Del Moral et al., 2006; Sisson et al., 2007; Toni et al., 2009).

Both particle MCMC, as in Golightly & Wilkinson (2011) and Wilkinson (2011), and ABC methods, (Drovandi & Pettitt, 2011; Fearnhead & Prangle, 2012), have been successfully applied in the context of stochastic kinetics, but it is unclear as to which approach is favorable. If exact posterior inference is desired we are limited to particle MCMC. However, if exact inference is not the primary concern and there are computational constraints, perhaps available CPU time, it is not obvious which approach should be employed. Is it the case that increased computational efficiency is an adequate trade–off for the reduction in accuracy? In this article we explore both approaches under a range of situations in an attempt to draw some preliminary conclusions on which inference scheme may perform most efficiently in the context of parameter inference for Markov processes. Efficiency will be considered under the restriction that we apply the notion of a computational budget on the allowed number of model realisations in order to make like for like comparisons. This is under the assumption that given infinite time, as well as other conditions to guarantee convergence, each of these approaches would yield the same target. The budget is set on the number of model realisations since it is often the case that the forward simulation constitutes the bulk of computational cost of algorithms of this type.

2 Stochastic kinetic models

Consider a network of reactions which involves a set of species and reactions where each reaction is given by

| (1) |

denotes the number of molecules of species that will be consumed in reaction . Similarly are the number of molecules of produced in the reaction. Letting be the matrix of ’s and the corresponding matrix of coefficients of products the reaction network can be summarised as

| (2) |

The stoichiometry matrix, defined

| (3) |

is a useful way to encode the information of the network as its columns represent the change of state caused by the different reaction events. Define as the vector denoting the number of species present at time .

Each reaction is assumed to have an associated constant and hazard function which gives the propensity for a reaction event of type at time to occur. We can consider the hazard function as arising due to interactions between species in a well mixed population. If and then full specification of the Markov process is complete given values for and .

Exact trajectories of the evolution of species counts of such a system can be obtained via the Direct method, Gillespie (1977). Algorithm 1 describes the procedure for forward simulation of a stochastic kinetic model given its stoichiometry matrix, , reaction rates , associated hazard function and initial state . Reactions simulated to occur in this way incorporate the stochastic nature and discrete state space of the system as reactions are chosen probabilistically and modify the state by discrete amounts. Whilst the Direct method allows exact simulation of the time and type of each reaction event that occurs, observed data are typically noisy, possibly partial, observations at discrete time intervals,

| (4) |

where are parameters associated with the measurement error that may also need to be inferred.

Less computationally expensive simulation algorithms such as the chemical Langevin equation (CLE) relax the restriction imposed by the discrete state space, Gillespie (2000). The stochasticity of the underlying mechanics of the system is retained but realisations of the evolution of species levels are approximate. However Gillespie (2014) show that such approximate simulators are not necessarily appropriate in all cases and that ensuring that they yield good approximations over the parameter space can be a problem. We therefore restrict ourselves to using the Direct method for the purposes of this article. For a comprehensive introduction into stochastic kinetic modelling see Wilkinson (2011).

-

1.

Set . Initialise the rate constants and initial states .

-

2.

Calculate the hazard function and .

-

3.

Set where

-

4.

Simulate the reaction index with probabilities

-

5.

Set where is the column of the stoichiometry matrix .

-

6.

If return to 2.

3 Bayesian inference for models with intractable likelihoods

3.1 Particle Markov chain Monte Carlo

-

1.

Initialise with a random starting value .

-

2.

Propose a move to a new candidate .

-

3.

Based on , compute an unbiased estimate of , ,

-

4.

Accept the move with probability

(5) else remain at .

-

5.

Return to 2.

Suppose we are interested in and that we wish to construct an MCMC algorithm whose invariant distribution is exactly this posterior. Using an appropriate proposal distribution we can construct a Metropolis Hastings algorithm to do this. However this is often impractical due to the likelihood term, , being unavailable. Andrieu & Roberts (2009) proposed a pseudo marginal approach to this issue. In order to overcome this problem of the intractable likelihood function, we replace this evaluation in the Metropolis Hastings acceptance ratio with a Monte Carlo estimate leading to the algorithm as described in algorithm 2. Provided that the resulting stationary distribution is exactly the desired target. Within the context of Markov processes it is natural to make use of sequential Monte Carlo techniques through use of a bootstrap particle filter, Doucet et al. (2001), described for this context in algorithm 3. The bootstrap filter gives unbiased estimates and hence the resultant MCMC scheme is “exact approximate”.

At time we have a set of particles . The filter assumes fixed parameters and so we drop the from our notation. for observed data with discrete time-point observations.

-

1.

Initialise at , a set of independent draws from our prior distribution on the state.

-

2.

At time , suppose we have a sample .

-

3.

Sample a set of indices for candidates for forward simulation, according to the weights .

-

4.

Simulate forward from the model the chosen paths, .

-

5.

Calculate weights, , and normalise to set .

Define , then .

The scheme as described here is a special case of the particle marginal Metropolis Hastings (PMMH) algorithm described in Andrieu et al. (2010) which can also be used to target the full joint posterior . It was noted in Andrieu & Roberts (2009) that the efficiency of this scheme was dependent on the variance of the estimated likelihoods. Increasing the number of particles yields estimates with a smaller variance at the expense of increased computation time. Optimal choices for were subject to interest in Pitt et al. (2012) and Doucet et al. (2012). The former suggest that the variance of the log–likelihood estimates should be around 1 in order to be optimal, however the latter argue that the efficiency penalty is small for values between 0.25 and 2.25.

3.2 Approximate Bayesian computation

Approximate Bayesian computation (ABC) techniques have increased in use in recent years due to their applicability to inference for a posterior distribution, , for problems in which evaluation of the likelihood function, , due to cost or analytical intractability, is unavailable. Such methods are typically computationally intensive due to their reliance on the ability to simulate realisations from the model. Ideally, given a collection of parameter vectors, , we would keep all vectors that gave rise to simulated data which is equivalent to our observed data set. In practice however, the probability that a candidate data set is almost 0. Hence an approximation to the target distribution is made through a collection of samples of parameters that lead to data simulation which is deemed to be sufficiently close to the observations. Simulated data, , is considered to be close if, for a given metric , the distance between simulated and observed data is smaller than some threshold . The simple rejection sampler is described in algorithm 4

-

1.

Generate a candidate parameter vector .

-

2.

Simulate a candidate data set .

-

3.

Calculate a measure of distance between the candidate data, , and the observed data , .

-

4.

Accept if for some predetermined, fixed, .

-

5.

Go to 1.

Instead of yielding the true posterior distribution, samples have the approximate distribution . Acceptance rates of ABC algorithms are often improved by employing dimension reduction techniques on the data. This approximation tends to the true target as when is a properly defined metric on sufficient statistics. Further approximations are made when, as is often the case, sufficient statistics are unavailable. In this situation one would choose a set of summary statistics that it is hoped is informative about the data. Blum et al. (2013) give a review of current techniques for choosing summary statistics. Over the past decade numerous proposals to improve the efficiency of ABC have been made. Favored schemes include the use of a sequential Monte Carlo sampler, which seeks to address the issue of poor acceptance rates through first allowing a high acceptance threshold and then gradually reducing the tolerance to improve the approximation to the target distribution. This algorithm is sequential in the sense that populations of simulated points (particles) are generated at each stage. The sample is then exploited as the basis for a proposal distribution used to target . Douc et al. (2007) showed that from the perspective of importance sampling this reliance on previous populations to improve proposals is entirely legitimate. Early approaches often used a geometric rate of decline for the tolerances, however adaptive schemes based on the distribution of the distances have been shown to work well (Drovandi & Pettitt, 2011). It is of note however that consideration must be given to the criteria by which we choose the new tolerance, as Silk et al. (2013) showed that convergence is not guaranteed in all cases. A sequential approach to inference within the ABC framework based on importance sampling is described in algorithm 5.

In practice there are a number of factors which contribute to the efficiency of the sequential scheme described here. A perturbation kernel , typically some Gaussian distribution, with a small variance usually leads to good acceptance rates but slow exploration of the parameter space. Conversely larger moves will explore the space more quickly but at the cost of reduced acceptance probability. Beaumont et al. (2009) consider use of an adaptive Gaussian proposal distribution, with variance equivalent to twice the empirical variance of the samples, . This was built on in an article by Filippi et al. (2013) who derived an optimal proposal variance, optimal in terms of jointly minimising the Kullback-Liebler divergence between proposal and target and maximising the acceptance rate, dependent on the current sample and the tolerance for the target. The sequence of tolerances and number of bridging distributions in the sequence also contribute to the overall effectiveness of the scheme. Intuitively, a slow decline in the threshold will lead to high acceptance rates for newly proposed parameter vectors, but posterior learning will be slow.

-

1.

Initialise and set the population indicator, .

-

2.

Set particle indicator, .

-

3.

If t = 0, sample

Else sample from the previous population with weights and perturb to obtain

If , return to 3.

Simulate a candidate dataset

If , return to 3. -

4.

Set and calculate weight for particle ,

If set , go to 3

-

5.

Normalise the weights, if , set and go to 2.

4 Basis for comparison

In order to create a framework in which we can make meaningful comparisons between these approaches to inference it is important to consider what makes a fair test, as well as some measure of efficiency of each sampler. In addition to this we are interested in the discrepancy between the resultant posterior and the true posterior. One of the primary motivations for the comparison is to determine which method is most appropriate with particular consideration to the notion of a restricted computational budget. In order to maintain as much consistency as possible over the various runs we use the Direct method, Gillespie (1977), for all realisations from a given model. We shall compare a pseudo-marginal Metropolis Hastings implementation of pMCMC with ABC approximations that use a Euclidean metric function over the full set of data points,

| (6) |

We make this choice to ensure that, in the limit, we are targeting the same posterior. Additionally, we have found that this metric performs competitively with other choices for the sample sizes considered in our simulations study. We repeat runs of each algorithm for a range of observation schemes on a number of data sets for each model, each using the same computational budget, comparing the results from each. We also include for each a long run of a pMCMC scheme which will provide us with the ‘true’ posterior of interest in each case.

4.1 Computational budget

We define our computational budget by considering each model realisation via the Direct method as 1 computational unit. We ignore the contribution to computation time of all other aspects of each algorithm as typically it is the path simulation that takes up the majority of computation time. In addition this choice ensures that the comparisons made are unaffected by certain computational optimisations, and coding tricks, which may distort the results in favor of one algorithm over another. For example ABC typically parallelises trivially and often yields almost perfect scaling between number of processors used and the speed factor gained, whereas particle MCMC does not benefit from parallel hardware in the same way, but can still be parallelised.

4.2 Initialisation

Each of the approaches to inference described above exhibit aspects which need to be chosen in some way, each of which has a bearing on the efficiency of the sampler. To make comparison as fair as possible we want each algorithm to be in some sense optimised using standard published methods. We now explain, for clarity, the way in which we have chosen various tuning parameters for each of the algorithms above. The cost of obtaining such parameters is collected and is deducted from our computational budget.

4.2.1 Particle MCMC

It has been well documented that the efficiency of random walk Metropolis algorithms is highly dependent on the choice of proposal kernel. A distribution which yields small deviations from the current state will ensure that a large number of moves are accepted but samples will be highly correlated. Large moves around the space on the other hand will often be rejected leading to the chain spending large amounts of time stuck at the same value. Under various assumptions about the target it has been shown that the optimal scaling for a Gaussian proposal kernel is

| (7) |

where is the covariance of the posterior distribution and is the number of parameters being estimated, (Roberts et al., 1997; Roberts & Rosenthal, 2001). The starting parameter vector, , of the chain also has an effect on the efficiency of the sampler. A choice of which is far from a region of non–negligible posterior density will lead to a chain which takes a long time to move toward the target distribution, whereas a chain initialised close to stationarity will yield useful samples sooner. This burn–in period can sometimes consume a sizeable fraction of the computational budget. Particle MCMC as described in section 3.1 also relies on a sequential Monte Carlo algorithm for approximation of the likelihood, . The bootstrap filter requires multiple model realisations, via a set of “particles” in order to achieve this approximation. In addition the approximation has to be calculated at every iteration of the MCMC algorithm, hence clearly the number of particles in the filter will greatly affect the runtime of the resultant algorithm. A small number of particles will result in a shorter computation time for the likelihood approximation but leads to larger variability in the estimated likelihood. This increased variability leads to decreased efficiency of the inference scheme, as noted by Andrieu & Roberts (2009). A large number of particles, useful for consistent estimates of will lead to slower posterior sampling in the chain. An optimal choice for the number of particles has been explored by Pitt et al. (2012) who suggest that the number of particles should be chosen such that the variance of the log–likelihood estimates is around 1. However Doucet et al. (2012) show that the efficiency of the scheme is good for variances between 0.25 and 2.25.

In practice, for the purpose of this comparison we choose an initial parameter vector, , as a random sample from the posterior distribution. The number of particles used in the particle filter, is then chosen by repeated runs of a particle filter with increasing until . We then use the covariance matrix of the posterior, to inform our choice for , the Gaussian random walk proposal variance,

| (8) |

During our first experiments with the pMCMC algorithm for these models we approached initialisation and tuning of the algorithm under the assumption of no knowledge of the posterior distribution of interest. However, this proved to be problematic as finding a sensible choice of , number of particles, , and proposal variance, , often used a large proportion of the allocated computational budget. Under the computational restrictions imposed by our budget choice this made pMCMC look completely uncompetitve. This problem itself is interesting as it highlights a potential drawback of using pMCMC in practice. We discuss this issue further in section 5.1.5.

4.2.2 ABC SMC

Initialisation of a sequential ABC algorithm as described in section 3.2 is somewhat less involved. This is due to the fact that optimal Gaussian proposal kernels for advancement to subsequent targets can be calculated during execution. In addition the sequence of tolerances is chosen adaptively throughout the algorithm. It remains that there is need to specify an initial tolerance value, . One could argue that tuning the choice of metric and summary statistics to be used is also of interest. Discussion of how one might do this is beyond the scope of this article however, since we are limiting ourselves to the choice in equation 6 so as to ensure that as the resultant posterior approximation tends toward the true posterior distribution of interest, . For an in depth discussion of methods in which to choose summary statistics see Blum et al. (2013). In order to choose a suitable for the scheme we simply calculate using a number of samples from . From this we take to be the value equivalent to the -ile of the resultant distribution of distances.

5 Case study

5.1 Lotka–Volterra predator prey model

5.1.1 Model description

The Lotka–Volterra predator prey system, Lotka (1925); Volterra (1926) is an example of a stochastic kinetic model that provides an ample starting point for investigation of parameter inference in models of this type. Although it is relatively simple, characterised by a set of 3 reactions on two species, it encompasses many of the difficulties associated with larger, more complex systems. Denoting the two species, prey, and predators, , evolution of the system is governed by the following three reactions:

| (12) |

These reactions can be thought of as prey birth, a predator prey interaction resulting in the death of a prey and a predator birth, and predator death respectively. Reaction events are dependent on the current state of the system as well as the reaction rate parameters. Hence the trajectory of the evolution of species counts presents a Markov process on a discrete state space. This reaction network is summarised by its stoichiometry matrix , and hazard function :

| (15) |

Realisations from the model conditional on a vector of rate parameters, , can be obtained exactly via algorithm 1, or approximated via a number of fast simulation algorithms.

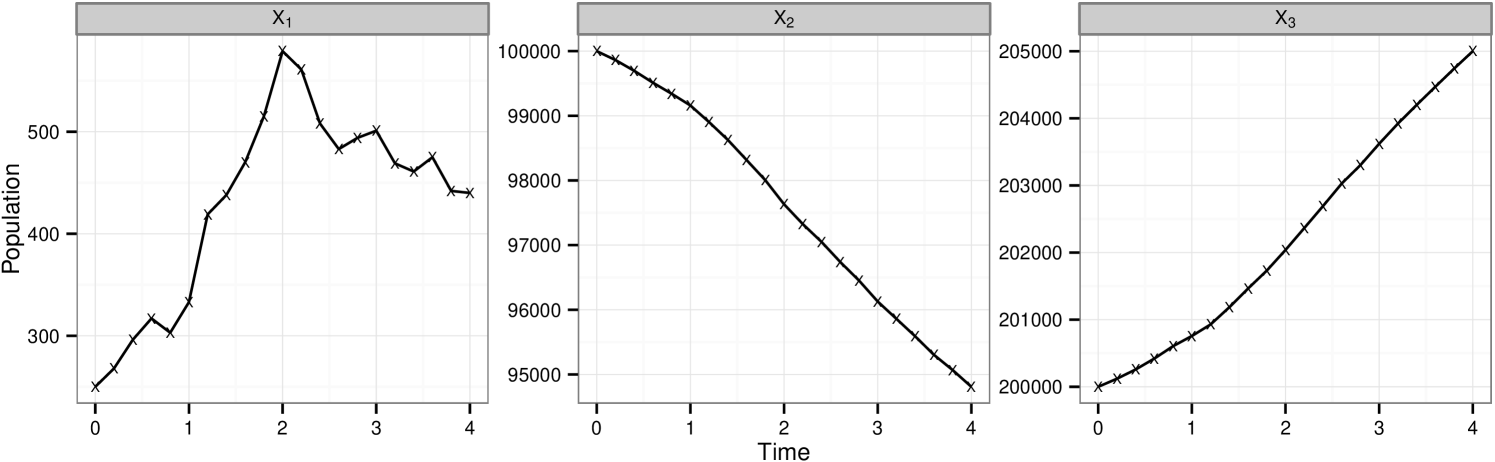

5.1.2 Synthetic data

For the purpose of making comparison we use a number of data sets over different observation regimes simulated using reaction rate vector . In each case we corrupt the with a Gaussian error with mean 0 and variance , . is used throughout. Plots of each of the data sets considered are in figure 1. Given this set of parameter values the model exhibits relatively stable oscillatory behavior for both species and provides an interesting starting point for our investigation. We shall use this model to explore posterior sampling efficiency given data sets of a range of sizes, under full and partial observation regimes, whilst also giving consideration to the effect of assuming known measurement or including this parameter in the set to be inferred. Data sets shown in figures 1b–d are denoted , and respectively. We introduce extra subscript notation such that implies the data set where predator observations have been discarded and symbolises treatment of under the assumption of unknown measurement error. In addition will be used as a reference to the collection of data sets and .

5.1.3 Inference set up

We now create a scenario in which prior parameter information is poor. We place uniform prior information on ,

| (16) |

and we place a Poisson prior distribution on the initial state

| (17) |

Where is not assumed to be known we use

| (18) |

For each repeat we allow a computational budget of model realisations from the Direct method, algorithm 1. We choose this budget based on the fact that given the our simulator achieves simulations of length equivalent to every 45-50 secs on our relatively fast Intel core i7-2600 clocked at 3.4 GHz. This yields an approximate total time spent simulating from the model of 14 hours plus some other comparably negligible computation costs for each individual inference run. Clearly improvement on simulation time can be made by parallelising the simulation of independent realisations from the direct method as well as other computational savings being made by clever optimisations in each algorithm. We have tried to disclude the effect of such algorithmic optimisation in our comparison as discussed in section 4. We include the information on approximate time here as a rough guide to practical implementation of inference for these types of models as well as the reasoning behind our particular budget choice.

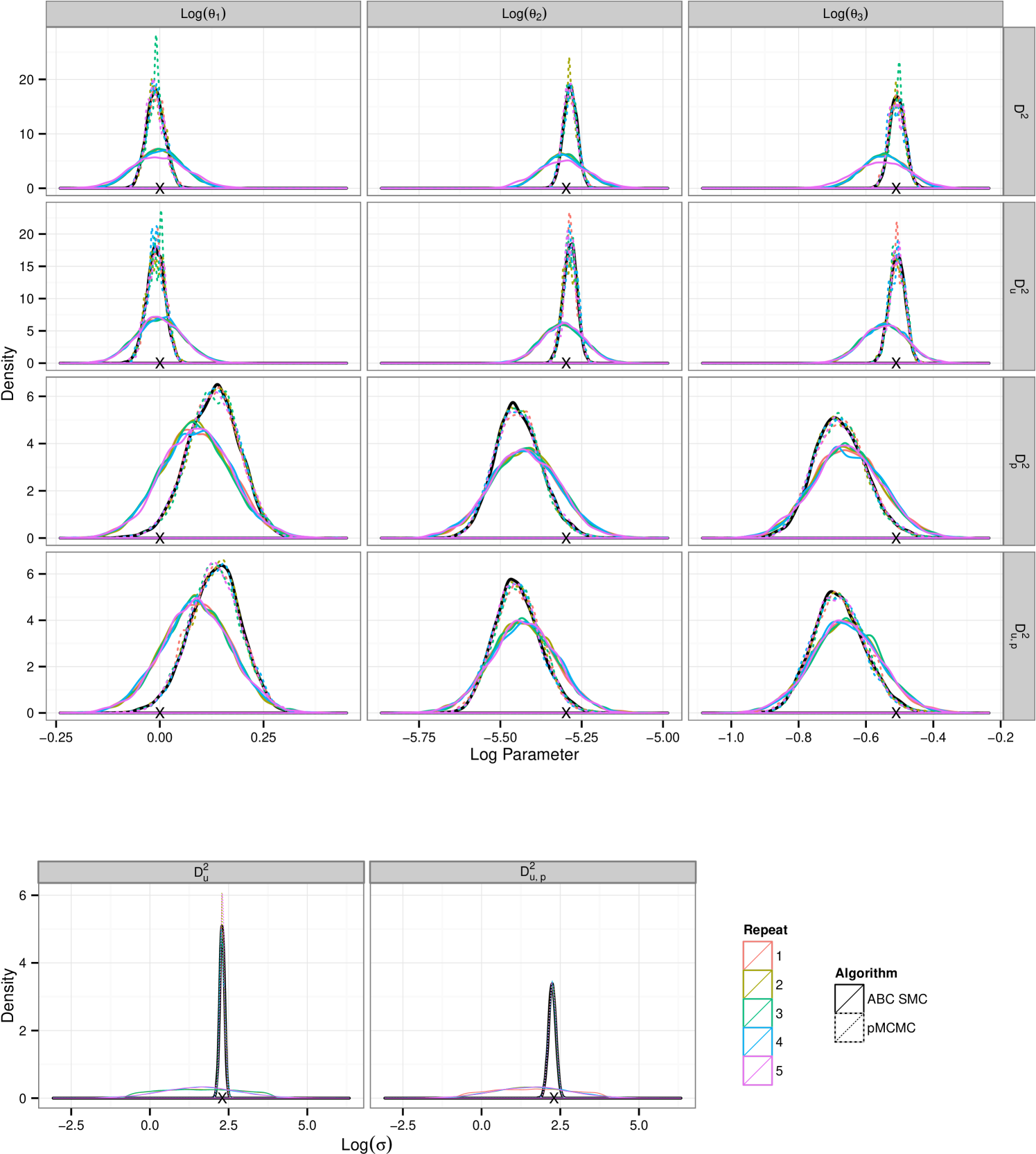

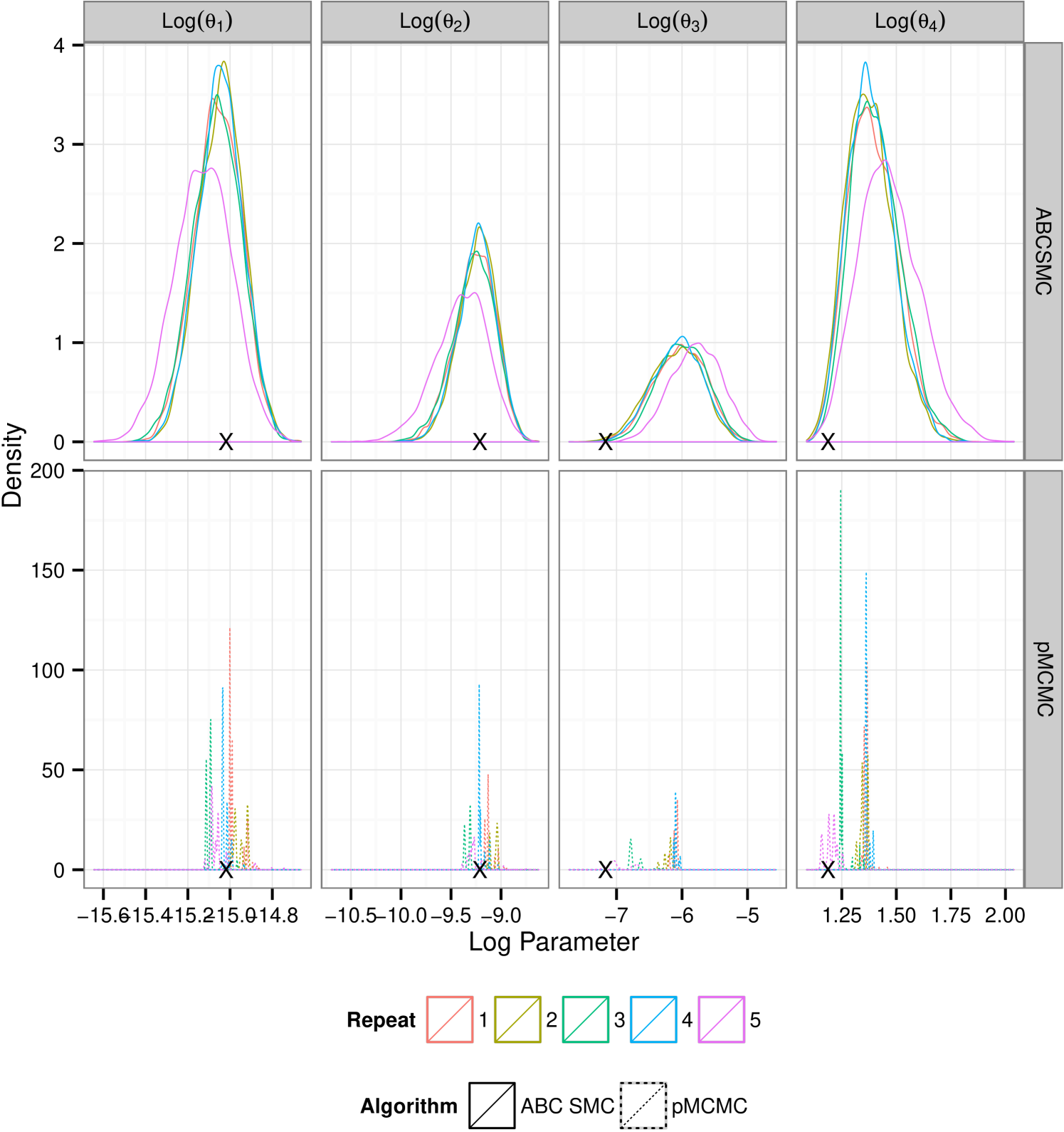

5.1.4 Discussion of results

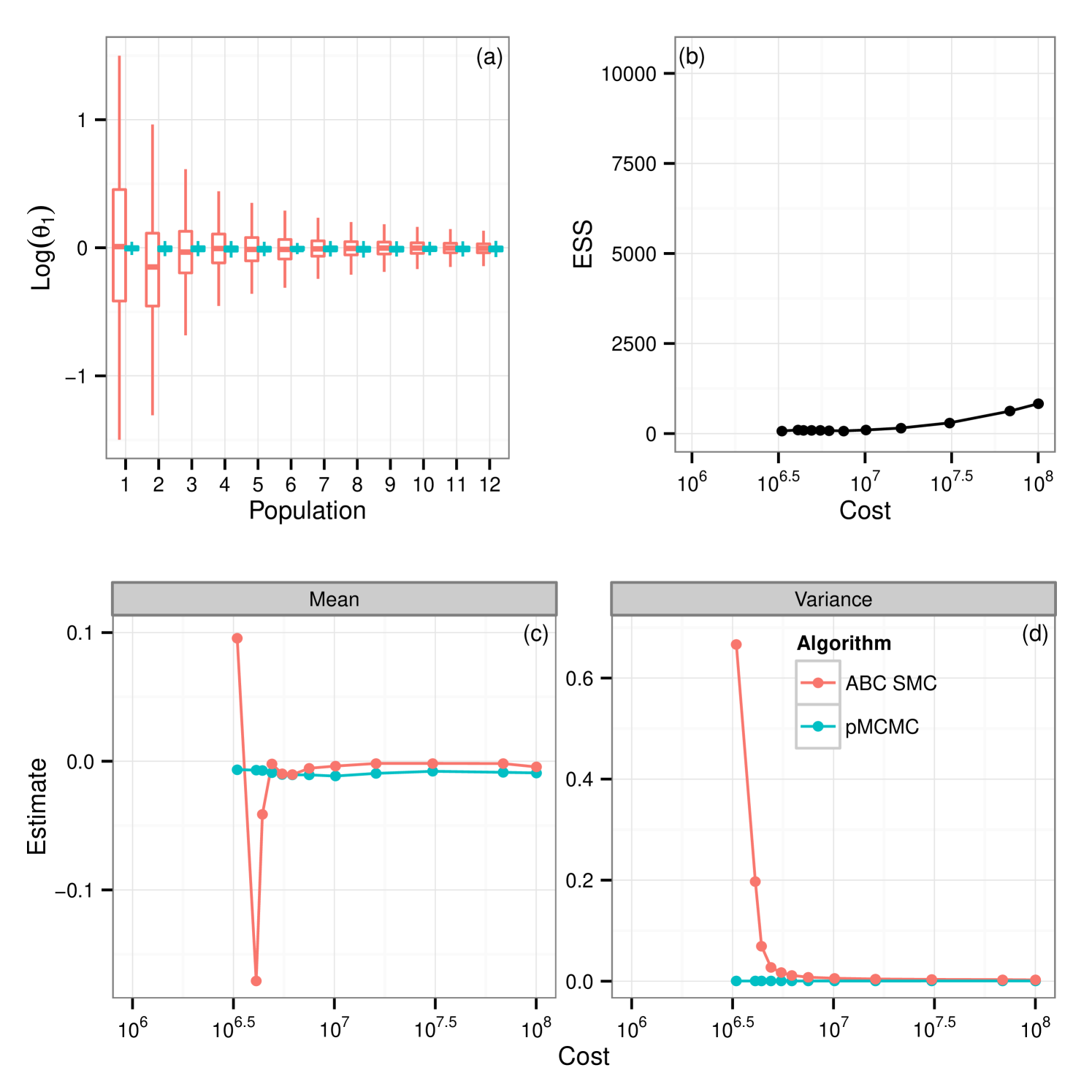

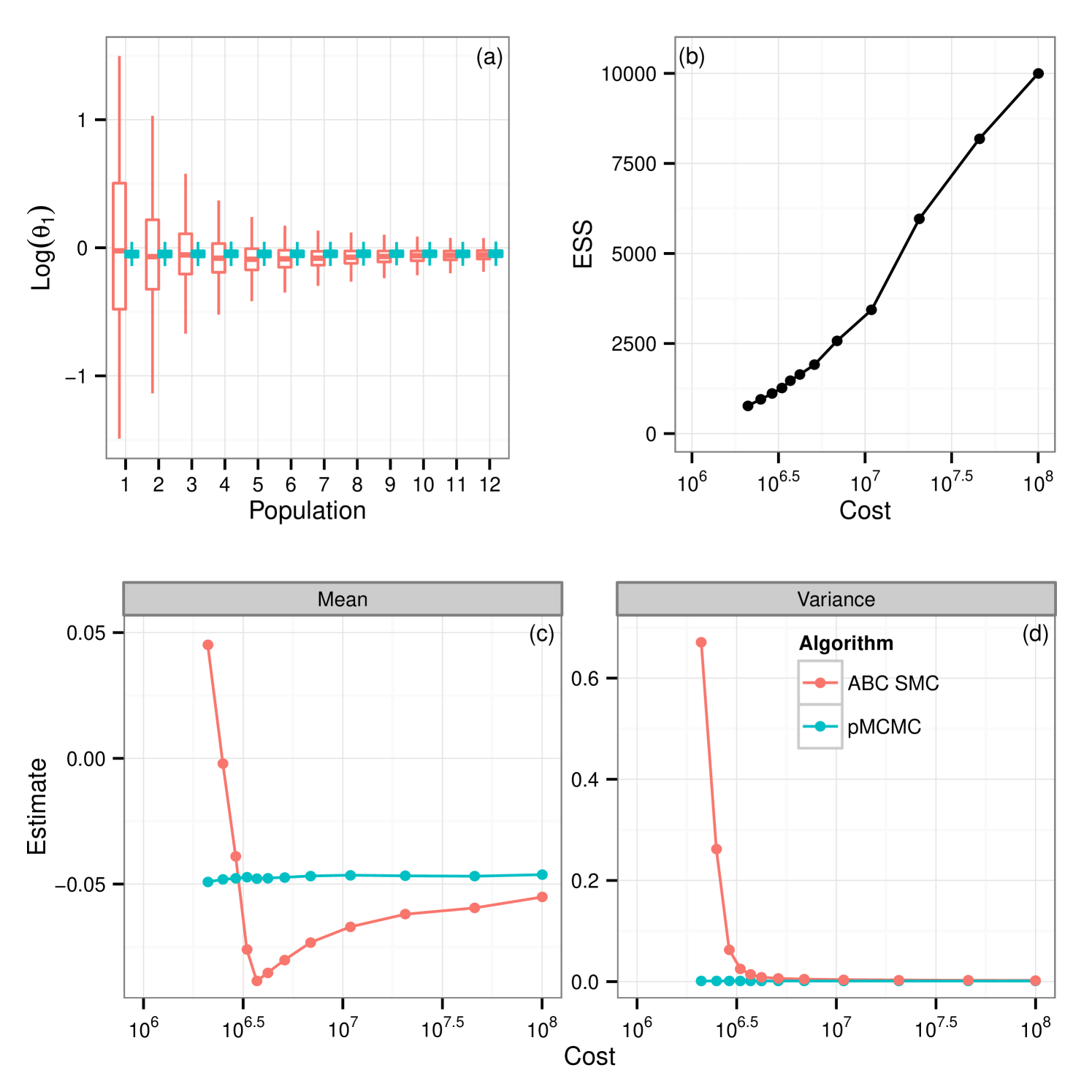

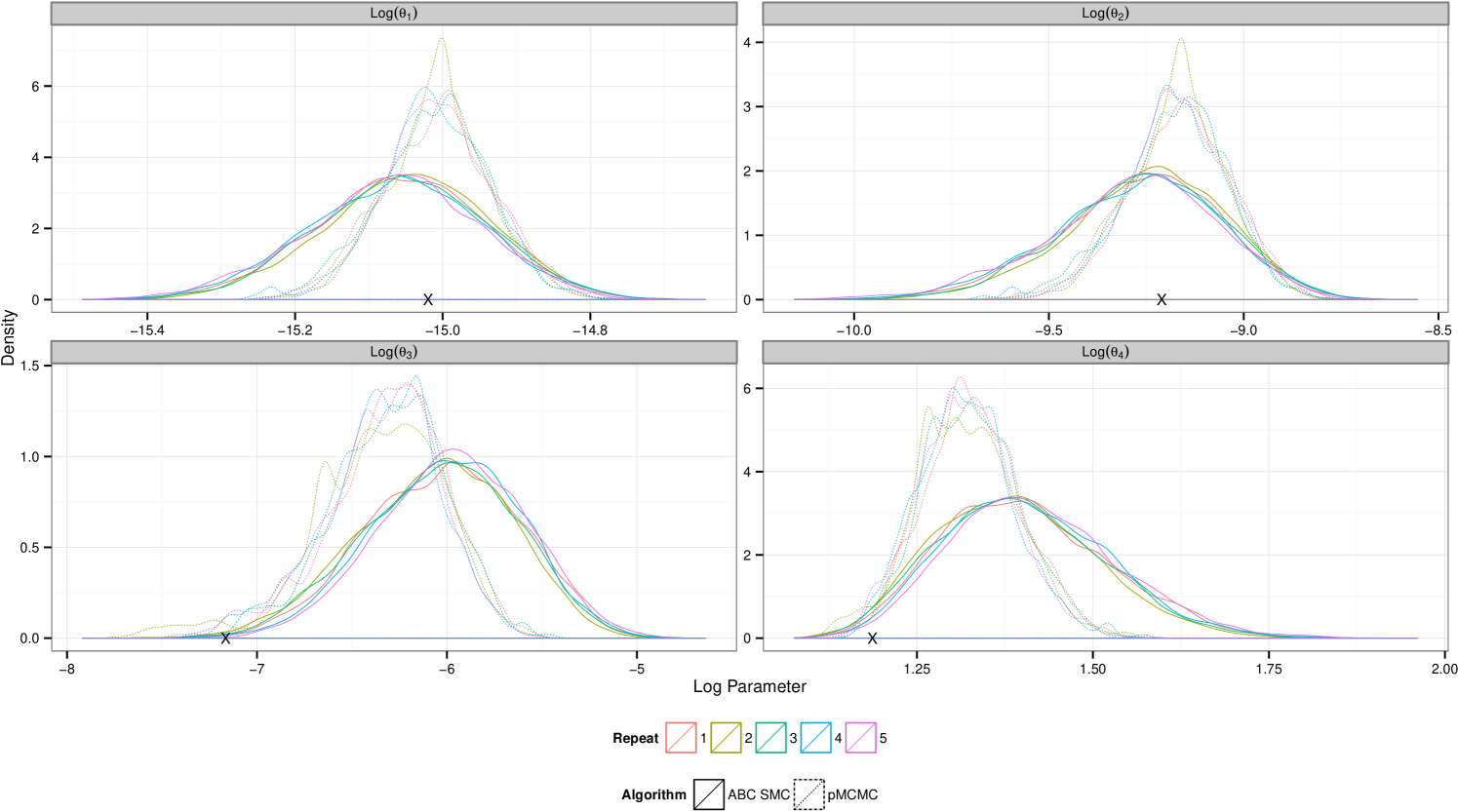

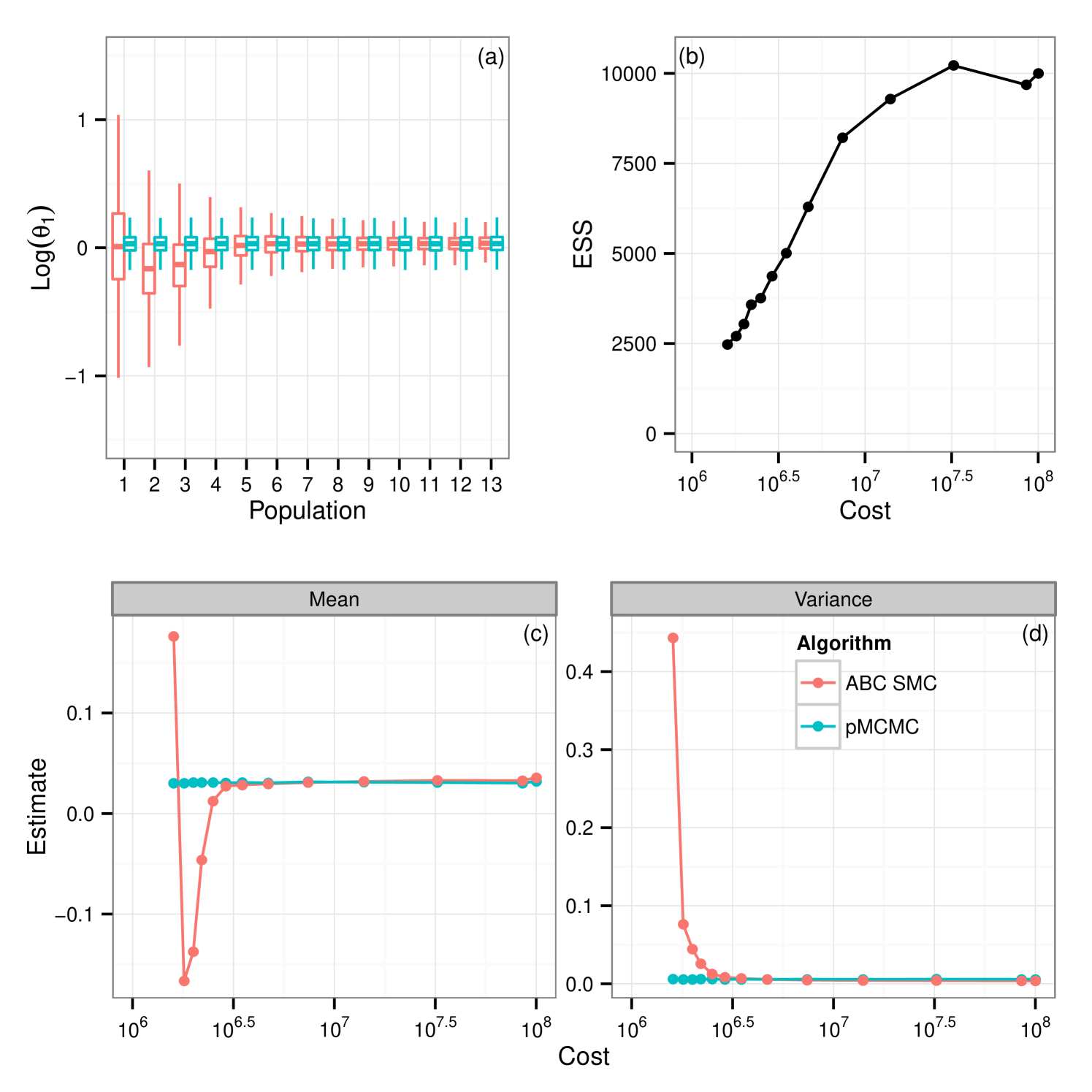

Results for data sets and are contained as supplementary material. We report results for data set , figure 1(c), shown in figure 2. Results in the supplementary material support those reported here, discrepancies between the two algorithms are reduced for and exacerbated given the longer time series of . The plots in figure 2 show that for there is a clear difference in the tails of the distribution. This feature is mirrored for . Additionally we see that, under the assumption of unknown measurement error ABC fails to identify the noise parameter, . Treatment of yields a much smaller difference in the resultant posteriors between the two schemes however reinforces the inability to infer using the ABC SMC scheme. Each density plotted is the result of samples being collected. In the case of the ABC SMC this was ensured by retaining samples at each population, giving rise to a sequence of between 12 and 14 populations in the fully observed runs and 12 populations in each partially observed run, the decreasing choice of chosen as described in section 3.2. For pMCMC we ran the sampler for the full budget and then thinned the resultant collection of vectors such that the final sample contained 10000. In the case of the average number of particles required was 132 which led to a chain that ran for approximately unthinned iterations which was then thinned by a factor of 75 to give the final sample.

From these results it would appear that given the use of the full budget pMCMC provides the better choice. Figure 3(a) shows the posterior learning experienced by the sequential ABC algorithm. The matching box plots for pMCMC is the posterior distribution using the pMCMC algorithm where we use only information gained under the same budget use as with ABC. i.e The budget used to obtain the first population of samples under ABC was . The corresponding box-plot for population 1 under pMCMC is a snapshot of the chain having used up the same budget of . It is interesting to note that the shape of the posterior distribution under pMCMC changes little over the sequence of populations leading one to believe that even with little computational expense the posterior distribution inferred by pMCMC is good. However this plot does not tell the whole story. Figure 3(b) shows estimates of the effective sample size of the posterior distributions for pMCMC in this sequence. Effective sample size is small for the populations with the lower computational expense showing that to obtain a good posterior sample from pMCMC, despite the fact that the overall shape of the distribution doesn’t change very much, we must still run for a long time, which is unsurprising. However, if we are only interested in obtaining posterior summaries, it would appear that the budget is less important. Figure 3(c-d) show posterior estimates of the mean and variance factored into the same computational expense groups as with figure 3(a) and figure 3(b). It is clear to see that for pMCMC the estimates are stable and remain reasonably constant. This is in stark contrast to estimates using the ABC approximations which appear less certain before tending toward those estimates gained using pMCMC. The overall trend here then seems to suggest that in this instance pMCMC is the favorable choice. The posterior estimates of mean and variance are stable even given a relatively short run time, and the shape of the distribution is also maintained. To obtain a large uncorrelated sample the chain must be run for a long time, although it is noted that running the ABC sampler for the same length of time does not yield better results. Posterior summaries appear consistent irrespective of the additional runtime. ABC here however is not so good. It could be argued that the posterior distributions are close given the full budget in some circumstances, notably , however with shorter runtime the approximation is much greater and hence inference is poorer as a result.

5.1.5 The tuning problem

The results presented here have ignored the issue of tuning the pMCMC algorithm. The posterior inference suggest that pMCMC is the better choice for learning about model parameters but we have tuned the pMCMC at the start, relying on knowledge of the posterior. This knowledge comes with its own expense and is in some sense self-defeating, something that is not so much of an issue for the ABC SMC. The true cost of the pMCMC inference then is somewhat higher than shown here. The ABC SMC proposal variance and tolerance sequence are chosen adaptively and so the initialisation and tuning cost is small. We made the choice to tune pMCMC using information from the posterior earlier due to the fact that in our initial experiments for this model we found that in a number of cases the computational budget used in initialising the pMCMC was large, often larger than our allocated budget. Choosing a sensible , and with little prior knowledge is difficult. Our initial attempts to find a involved sampling from the prior distribution, estimating the likelihoods and choosing the parameter vector which maximises the likelihood estimates. Due to the variability in the particle filter estimates away from the true parameter values this step typically involves a large number of particles and hence large expense. Conditional on this hopefully informative choice of we can then tune , the number of particles in the bootstrap filter, such that each estimate has less expense, by running a number of filters starting with a small number of particles and steadily increasing until the is suitably small. Again this has non-negligible expense. We want to try to find as small a value of as we can get away with for the main inference run and so this iterative procedure can be time consuming. On top of these two tuning steps we typically want to ensure that we choose a good . This often involves a pilot MCMC run using a very small proposal variance and then using the variance of the resulting distribution to inform the choice of . We found that, in practice, employing these steps to tune pMCMC was itself very expensive. Using 1000 particles in a particle filter to estimate likelihoods for 2000 parameter vectors drawn from the prior for and repeating each 10 times and maximising over the average to choose was not enough to guarantee that the resulting draw had posterior support. This alone uses 20% of the allocated budget without then tuning the number of particles. The number of particles needed to satisfy the log–likelihood estimate variance criteria is often much larger in the tails of the posterior than at the true values. Add to this estimating and then the appropriate burn–in period and it is easy to see that this operation becomes very expensive. Owen et al. (2014) showed that initialising pMCMC with a random draw from an uninformative prior does not guarantee convergence to the stationary distribution. This was due to the large variability in log-likelihood estimates given by the particle filter in regions of negligible posterior support, rather than any flaw in the theory. It is suggested that a good thing to do in situations in which prior knowledge is poor is to run an ABC SMC algorithm as an aid to tuning and initialising a pMCMC algorithm. This approach is amenable to parallelisation and exploits relative strengths of the two approaches.

5.2 Schlögl system

5.2.1 Model description

The Schlögl model is a well known test model which exhibits bimodal stability at certain parameter values. The system is characterised by a set of 4 reactions involving 3 species:

| (23) |

We can summarise this reaction network via its stoichiometry matrix and hazard function :

| (27) | ||||

| (28) |

This system provides an interesting case for which to investigate how influential the measurement error is on the efficiency of posterior sampling for each algorithm.

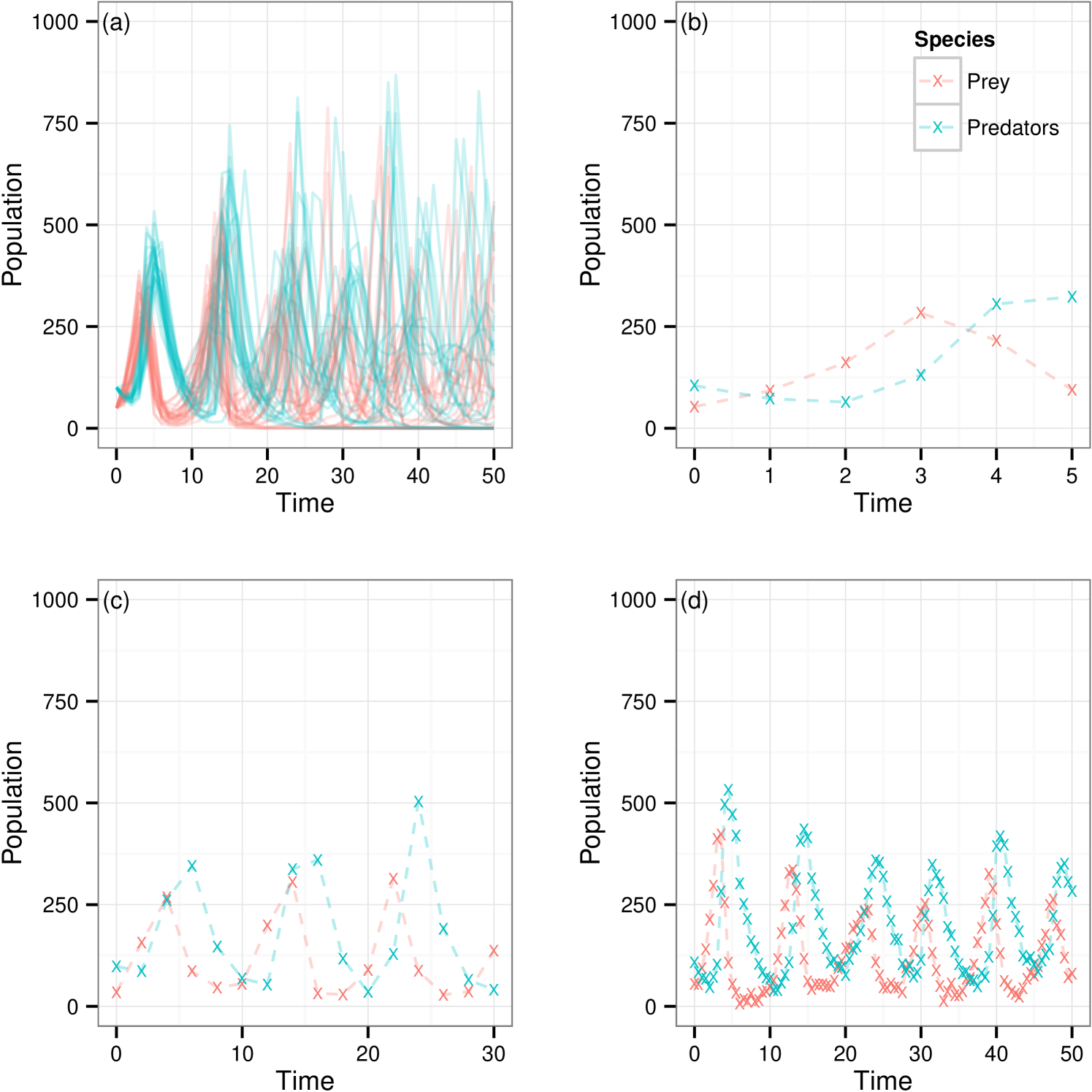

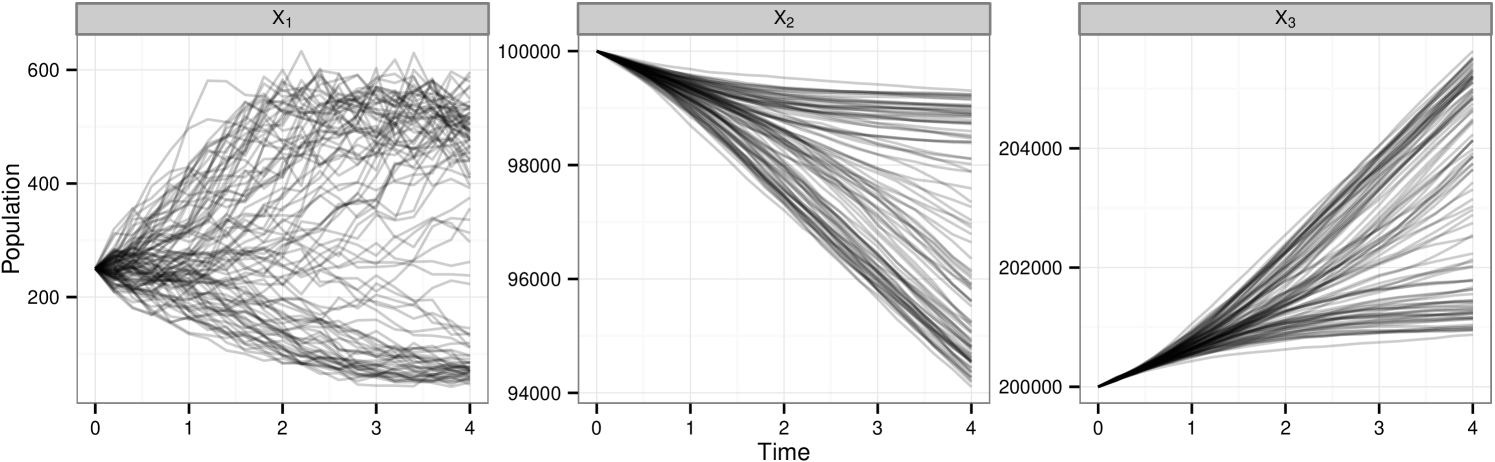

5.2.2 Synthetic data

Figure 4(a) shows 100 realisations from the model given and highlighting the bimodal characteristics given this set of parameter values. This poses an interesting challenge when it comes to parameter inference since it is not necessarily the case that all parameter vectors in a region of space closely surrounding this give the same behaviour. For our investigation of the inference methods being discussed we choose one of these data traces at random and corrupt with Gaussian error, , . A second copy of the same underlying trace is then corrupted with the same error distribution but with . We denote these data set and respectively. The chosen observed data set is shown in figure 4(b).

5.2.3 Inference set up

In contrast to the simulation study for the Lotka–Volterra model we make use of a set of more informative prior distributions, that is a Gaussian distribution on the log scale, centered at the true values with relatively small standard deviation, . In addition we assume knowledge of the initial count . For inference in this case we now restrict our focus to assuming known measurement error, and availability of observations of all 3 species in the model, these factors having been explored well in the previous example. The focus of this example is to determine whether the size of the measurement error informs our choice as to which algorithm is better.

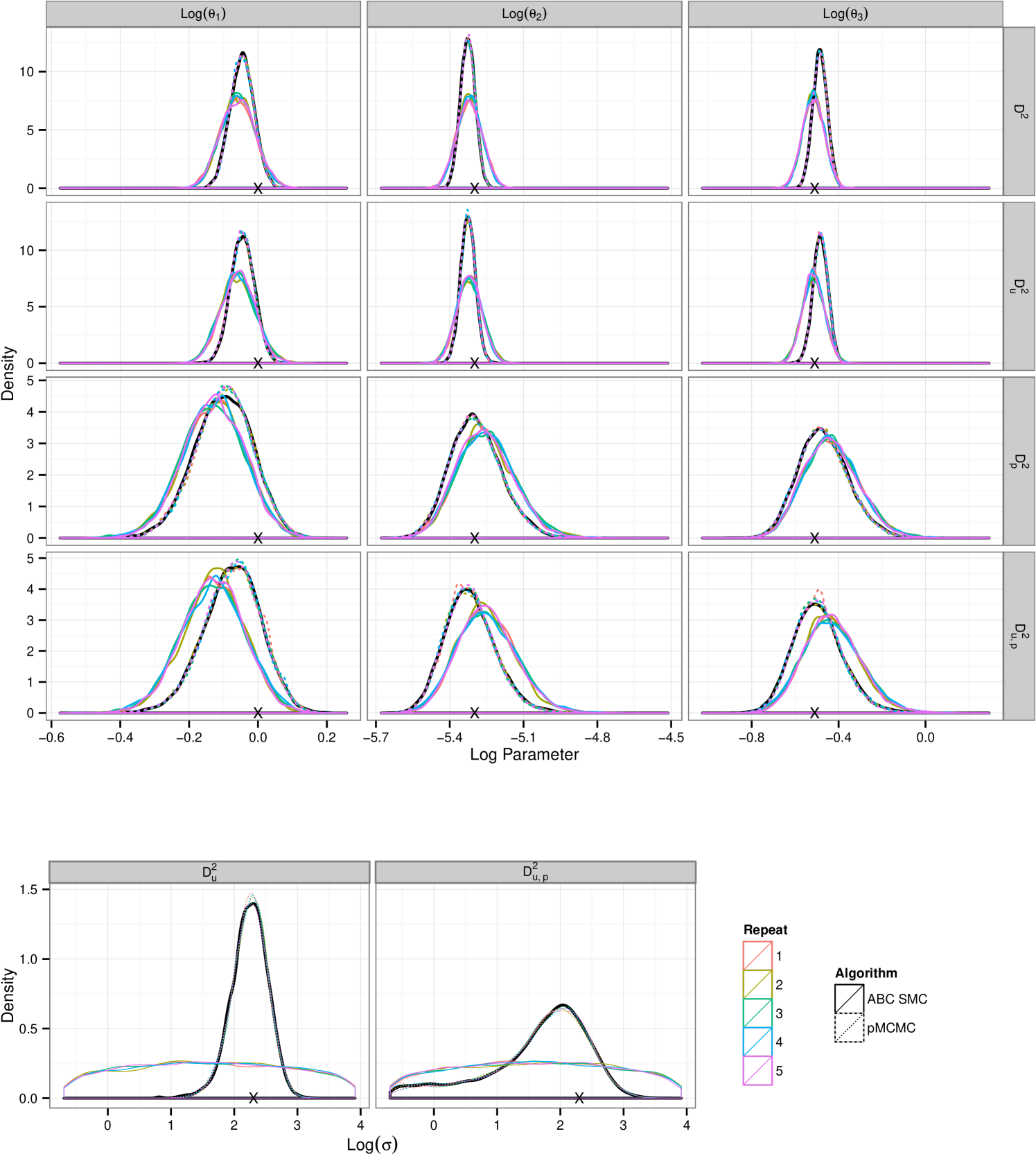

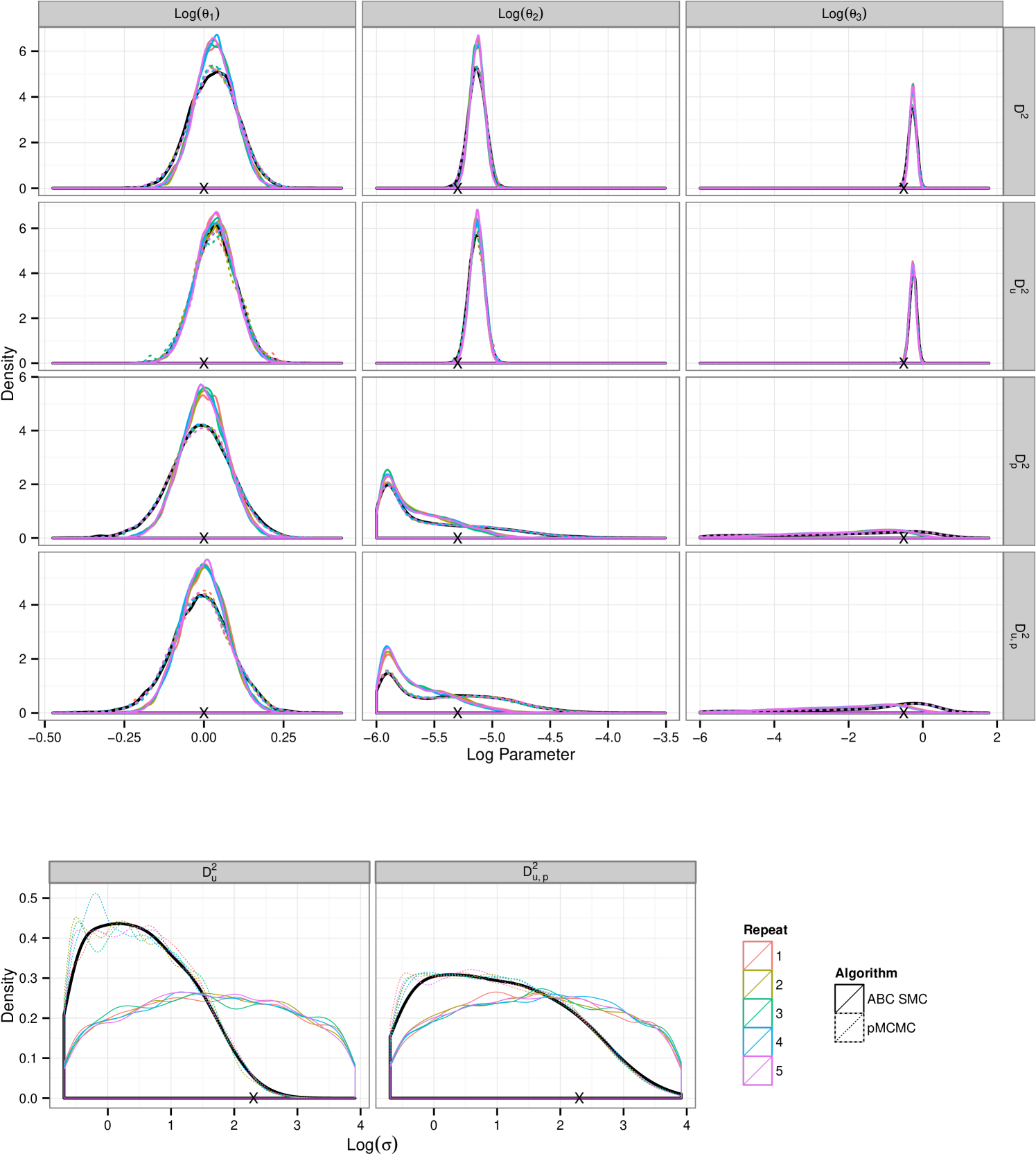

5.2.4 Discussion of results

Consistent with the results in section 5.1.4 ABC SMC yields posterior distribution with a larger mass in the tails. The plots in figure 5 show that under the larger measurement error, data set , comparative performance is similar to that seen in the previous example. However under small measurement error pMCMC struggles with this computational budget. The chain spends a large amount of time stuck at given parameter values. It is known that for likelihood free pMCMC to be efficient in this context the measurement error must be substantial. Development of pMCMC algorithms for informative observations are subject to ongoing research and typically involve bridging of the latent state conditional on the endpoints. Golightly & Wilkinson (2014) propose an approach to the problem of inference for a Markov jump process with informative observations on a discrete state space by conditioning the hazard function on the end points of the data observations.

6 Conclusions

The results in this article suggest that in most cases, for parameter inference for stochastic kinetic models with intractable likelihoods, particle MCMC is a better choice than ABC SMC provided that it can be well initialised. This distinction is less clear when applied to small data sets as seen in appendix A where posterior inference for the rate parameters are well matched. A longer time series highlights the benefit of using a particle filter whose re-sampling step ensures forward simulations are guided by the observations. ABC SMC seems to be poor at inferring the measurement error present in the model but proves to be a reasonable approach under informative observations, favorable to the likelihood free pMCMC implementation explored here. PMCMC, whilst being the better choice in most cases for inference is substantially more difficult to tune. Given that ABC methods parallelise easily and performs comparably when inferring rate parameters it poses a strong approach when measurement error is known or small, particularly for lower dimensional data and may yield good posterior distributions at a lower real time cost than pMCMC. The biggest trade-off here is finding an appropriate starting point for pMCMC, something that can be approached by using ABC SMC, as described in Owen et al. (2014).

References

- Andrieu et al. (2010) Andrieu, C., Doucet, A. & Holenstein, R. 2010 Particle Markov chain Monte Carlo methods. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 72 (3), 269–342.

- Andrieu & Roberts (2009) Andrieu, C. & Roberts, G. O. 2009 The pseudo-marginal approach for efficient Monte Carlo computations. The Annals of Statistics 37 (2), 697–725.

- Beaumont et al. (2009) Beaumont, M. A., Cornuet, J.-M., Marin, J.-M. & Robert, C. P. 2009 Adaptive approximate Bayesian computation. Biometrika 96 (4), 983–990.

- Beaumont et al. (2002) Beaumont, M. A., Zhang, W. & Balding, D. J. 2002 Approximate Bayesian computation in population genetics. Genetics 162 (4), 2025–2035.

- Blum et al. (2013) Blum, M. G., Nunes, M. A., Prangle, D., Sisson, S. A. et al. 2013 A comparative review of dimension reduction methods in approximate bayesian computation. Statistical Science 28 (2), 189–208.

- Boys et al. (2008) Boys, R. J., Wilkinson, D. J. & Kirkwood, T. B. 2008 Bayesian inference for a discretely observed stochastic kinetic model. Statistics and Computing 18 (2), 125–135.

- Del Moral et al. (2006) Del Moral, P., Doucet, A. & Jasra, A. 2006 Sequential Monte Carlo samplers. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 68 (3), 411–436.

- Douc et al. (2007) Douc, R., Guillin, A., Marin, J.-M., Robert, C. P. et al. 2007 Convergence of adaptive mixtures of importance sampling schemes. The Annals of Statistics 35 (1), 420–448.

- Doucet et al. (2001) Doucet, A., de Freitas, N. & Gordon, N. 2001 Sequential Monte Carlo methods in practice. Springer.

- Doucet et al. (2012) Doucet, A., Pitt, M. & Kohn, R. 2012 Efficient implementation of Markov chain Monte Carlo when using an unbiased likelihood estimator. arXiv:1210.1871 .

- Drovandi & Pettitt (2011) Drovandi, C. C. & Pettitt, A. N. 2011 Estimation of parameters for macroparasite population evolution using approximate Bayesian computation. Biometrics 67 (1), 225–233.

- Fearnhead & Prangle (2012) Fearnhead, P. & Prangle, D. 2012 Constructing summary statistics for approximate Bayesian computation: semi-automatic approximate Bayesian computation. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 74 (3), 419–474.

- Filippi et al. (2013) Filippi, S., Barnes, C. P., Cornebise, J. & Stumpf, M. P. 2013 On optimality of kernels for approximate bayesian computation using sequential monte carlo. Statistical applications in genetics and molecular biology 12 (1), 87–107.

- Gillespie (2014) Gillespie, C. S. 2014 Diagnostics for assessing the accuracy of approximate stochastic simulators. arXiv preprint arXiv:1409.1096 .

- Gillespie (1977) Gillespie, D. T. 1977 Exact stochastic simulation of coupled chemical reactions. The Journal of Physical Chemistry 81 (25), 2340–2361.

- Gillespie (2000) Gillespie, D. T. 2000 The chemical Langevin equation. The Journal of Chemical Physics 113 (1), 297–306.

- Golightly & Wilkinson (2005) Golightly, A. & Wilkinson, D. J. 2005 Bayesian inference for stochastic kinetic models using a diffusion approximation. Biometrics 61 (3), 781–788.

- Golightly & Wilkinson (2011) Golightly, A. & Wilkinson, D. J. 2011 Bayesian parameter inference for stochastic biochemical network models using particle Markov chain Monte Carlo. Interface Focus 1 (6), 807–820.

- Golightly & Wilkinson (2014) Golightly, A. & Wilkinson, D. J. 2014 Bayesian inference for markov jump processes with infromative observations. arXiv preprint arXiv:1409.4362 .

- Kitano (2002) Kitano, H. 2002 Computational systems biology. Nature 420 (6912), 206–210.

- Lotka (1925) Lotka, A. J. 1925 Elements of physical biology. Williams & Wilkins Baltimore.

- Marjoram et al. (2003) Marjoram, P., Molitor, J., Plagnol, V. & Tavaré, S. 2003 Markov chain Monte Carlo without likelihoods. Proceedings of the National Academy of Sciences 100 (26), 15324–15328.

- Owen et al. (2014) Owen, J., Wilkinson, D. J. & Gillespie, C. S. 2014 Scalable inference for markov processes with intractable likelihoods. arXiv preprint arXiv:1403.6886 .

- Pitt et al. (2012) Pitt, M. K., Silva, R. d. S., Giordani, P. & Kohn, R. 2012 On some properties of Markov chain Monte Carlo simulation methods based on the particle filter. Journal of Econometrics 171 (2), 134–151.

- Pritchard et al. (1999) Pritchard, J. K., Seielstad, M. T., Perez-Lezaun, A. & Feldman, M. W. 1999 Population growth of human y chromosomes: a study of y chromosome microsatellites. Molecular Biology and Evolution 16 (12), 1791–1798.

- Proctor et al. (2007) Proctor, C., Lydall, D., Boys, R., Gillespie, C., Shanley, D., Wilkinson, D. & Kirkwood, T. 2007 Modelling the checkpoint response to telomere uncapping in budding yeast. Journal of The Royal Society Interface 4 (12), 73–90.

- Roberts et al. (1997) Roberts, G. O., Gelman, A. & Gilks, W. R. 1997 Weak convergence and optimal scaling of random walk metropolis algorithms. The Annals of Applied Probability 7 (1), 110–120.

- Roberts & Rosenthal (2001) Roberts, G. O. & Rosenthal, J. S. 2001 Optimal scaling for various Metropolis-Hastings algorithms. Statistical science 16 (4), 351–367.

- Silk et al. (2013) Silk, D., Filippi, S. & Stumpf, M. P. 2013 Optimizing threshold-schedules for sequential approximate Bayesian computation: applications to molecular systems. Statistical applications in genetics and molecular biology 12 (5), 603–618.

- Sisson et al. (2007) Sisson, S., Fan, Y. & Tanaka, M. M. 2007 Sequential Monte Carlo without likelihoods. Proceedings of the National Academy of Sciences 104 (6), 1760–1765.

- Tavare et al. (1997) Tavare, S., Balding, D. J., Griffiths, R. & Donnelly, P. 1997 Inferring coalescence times from dna sequence data. Genetics 145 (2), 505–518.

- Toni et al. (2009) Toni, T., Welch, D., Strelkowa, N., Ipsen, A. & Stumpf, M. P. 2009 Approximate Bayesian computation scheme for parameter inference and model selection in dynamical systems. Journal of the Royal Society Interface 6 (31), 187–202.

- Volterra (1926) Volterra, V. 1926 Fluctuations in the abundance of a species considered mathematically. Nature 118, 558–560.

- Wilkinson (2009) Wilkinson, D. J. 2009 Stochastic modelling for quantitative description of heterogeneous biological systems. Nature Reviews Genetics 10 (2), 122–133.

- Wilkinson (2011) Wilkinson, D. J. 2011 Stochastic modelling for systems biology, 2nd edn., Chapman & Hall/CRC mathematical biology and medicine series, vol. 44. CRC press.

Appendix A Supplementary material

A.1 Analysing Lotka–Volterra

The differences between pMCMC and ABC SMC inference are less pronounced for and particularly for the reaction rate parameters, see figure 7. The analysis shown in figure 8 of imply that the posterior inferences made after a short time, less than 10% of the total allocated budget, are largely indistinguishable between the two approaches, estimates of the mean and variance are equivalent and the shape of the distribution consistent between the two approaches. If we take into consideration the cost of initialising pMCMC this would suggest that in this case ABC SMC proves a more favorable choice. However this effect is less pronounced when some information is removed. Posterior learning of the noise parameter appears to be poor, consistent with results in the text, but this is now also true for pMCMC. However the pMCMC runs subject to the budget constraints are in agreement with the long run, black, being used as the truth for comparison. This “truth” being the results of a long well thinned run using a large number of particles.

A.2 Analysing Lotka–Volterra

Inference for the larger data sets shows a larger discrepancy between the two algorithms, see figure 9 for density plots. ABC SMC consistently over–estimates the contribution in the tails of the distribution. With more abundant data pMCMC infers the noise parameter, , well whilst ABC SMC continues to do poorly in this respect, consistent with other results in this article. In all data observation regimes pMCMC results in a more peaked posterior distribution that closely matches our “truth” benchmark. Figure 10(a) shows that ABC SMC is tending in distribution to that we found by using pMCMC. Figure 10 shows that obtaining a diverse posterior sample using pMCMC is more computationally taxing for the larger dimensional data. Despite the difference between the posterior densities, estimates of the means and variances appear consistent after approximately 10% of the allowed budget.