Linear Convergent Decentralized Optimization with Compression

Abstract

Communication compression has become a key strategy to speed up distributed optimization. However, existing decentralized algorithms with compression mainly focus on compressing DGD-type algorithms. They are unsatisfactory in terms of convergence rate, stability, and the capability to handle heterogeneous data. Motivated by primal-dual algorithms, this paper proposes the first LinEAr convergent Decentralized algorithm with compression, LEAD. Our theory describes the coupled dynamics of the inexact primal and dual updates as well as the compression error, and we provide the first consensus error bound in such settings without assuming bounded gradients. Experiments on convex problems validate our theoretical analysis, and an empirical study on deep neural nets shows that LEAD is applicable to non-convex problems.


1 Introduction

Distributed optimization solves the following optimization problem

$$\min_{\mathbf{x}\in\mathbb{R}^d} f(\mathbf{x}) = \frac{1}{n}\sum_{i=1}^n f_i(\mathbf{x}) \tag{1}$$

with $n$ computing agents and a communication network. Each $f_i:\mathbb{R}^d\to\mathbb{R}$ is a local objective function of agent $i$, typically defined on the data stored at that agent. The data distributions can be heterogeneous depending on the application, as in federated learning. The variable $\mathbf{x}$ often represents model parameters in machine learning. A distributed optimization algorithm seeks an optimal solution $\mathbf{x}^*$ that minimizes the overall objective function $f$ collectively. According to the communication topology, existing algorithms can be conceptually categorized into centralized and decentralized ones. Specifically, centralized algorithms require global communication between agents (through central agents or parameter servers), while decentralized algorithms only require local communication between connected agents and are more widely applicable than centralized ones. In both paradigms, computation can be relatively fast with powerful computing devices; efficient communication is the key to improving algorithm efficiency and system scalability, especially when the network bandwidth is limited.

In recent years, various communication compression techniques, such as quantization and sparsification, have been developed to reduce communication costs. Notably, extensive studies (Seide et al., 2014; Alistarh et al., 2017; Bernstein et al., 2018; Stich et al., 2018; Karimireddy et al., 2019; Mishchenko et al., 2019; Tang et al., 2019b; Liu et al., 2020) have utilized gradient compression to significantly boost communication efficiency for centralized optimization. They enable efficient large-scale optimization while maintaining comparable convergence rates and practical performance with their non-compressed counterparts. This great success has suggested the potential and significance of communication compression in decentralized algorithms.

While extensive attention has been paid to centralized optimization, communication compression is relatively less studied in decentralized algorithms, because algorithm design and analysis are more challenging when general communication topologies must be covered. There are recent efforts to push this research direction. For instance, DCD-SGD and ECD-SGD (Tang et al., 2018a) introduce difference compression and extrapolation compression to reduce model compression error. (Reisizadeh et al., 2019a; b) introduce QDGD and QuanTimed-DSGD to achieve exact convergence with a small stepsize. DeepSqueeze (Tang et al., 2019a) directly compresses the local model and compensates the compression error in the next iteration. CHOCO-SGD (Koloskova et al., 2019; 2020) presents a novel quantized gossip algorithm that reduces compression error by difference compression and preserves the model average. Nevertheless, most existing works focus on the compression of primal-only algorithms, i.e., algorithms that reduce to DGD (Nedic & Ozdaglar, 2009; Yuan et al., 2016) or D-PSGD (Lian et al., 2017). They are unsatisfactory in terms of convergence rate, stability, and the capability to handle heterogeneous data. Part of the reason is that they inherit the drawback of DGD-type algorithms, whose convergence is slow in heterogeneous data scenarios where the data distributions differ significantly from agent to agent.

In the literature of decentralized optimization, it has been proved that primal-dual algorithms can achieve faster convergence rates and better support heterogeneous data (Ling et al., 2015; Shi et al., 2015; Li et al., 2019; Yuan et al., 2020). However, it is unknown whether communication compression is feasible for primal-dual algorithms and how fast the convergence can be with compression. In this paper, we attempt to bridge this gap by investigating communication compression for primal-dual decentralized algorithms. Our major contributions can be summarized as follows:

- We delineate two key challenges in the algorithm design for communication compression in decentralized optimization, i.e., data heterogeneity and compression error, and, motivated by primal-dual algorithms, we propose a novel decentralized algorithm with compression, LEAD.
- We prove that for LEAD, a constant stepsize in the range $(0, 2/L]$ is sufficient to ensure linear convergence for strongly convex and smooth objective functions. To the best of our knowledge, LEAD is the first linear convergent decentralized algorithm with compression. Moreover, LEAD provably works with unbiased compression of arbitrary precision.
- We further prove that if the stochastic gradient is used, LEAD converges linearly to a neighborhood of the optimum with a constant stepsize. LEAD is also able to achieve exact convergence to the optimum with a diminishing stepsize.
- Extensive experiments on convex problems validate our theoretical analyses, and the empirical study on training deep neural nets shows that LEAD is applicable to nonconvex problems. LEAD achieves state-of-the-art computation and communication efficiency in all experiments and significantly outperforms the baselines on heterogeneous data. Moreover, LEAD is robust to parameter settings and requires little effort for parameter tuning.

2 Related Works

Decentralized optimization can be traced back to the work by Tsitsiklis et al. (1986). DGD (Nedic & Ozdaglar, 2009) is the most classical decentralized algorithm. It is intuitive and simple but converges slowly because a diminishing stepsize is needed to obtain the optimal solution (Yuan et al., 2016). Its stochastic version D-PSGD (Lian et al., 2017) has been shown to be effective for training nonconvex deep learning models. Algorithms based on primal-dual formulations or gradient tracking have been proposed to eliminate the convergence bias in DGD-type algorithms and improve the convergence rate, such as D-ADMM (Mota et al., 2013), DLM (Ling et al., 2015), EXTRA (Shi et al., 2015), NIDS (Li et al., 2019), D² (Tang et al., 2018b), Exact Diffusion (Yuan et al., 2018), OPTRA (Xu et al., 2020), DIGing (Nedic et al., 2017), GSGT (Pu & Nedić, 2020), etc.

Recently, communication compression was applied to decentralized settings by Tang et al. (2018a), who propose two algorithms, DCD-SGD and ECD-SGD; both require high-accuracy compression and are unstable under aggressive compression. Reisizadeh et al. (2019a; b) introduce QDGD and QuanTimed-DSGD, which achieve exact convergence with a small stepsize but converge slowly. DeepSqueeze (Tang et al., 2019a) compensates the compression error in the compression of the next iteration. Motivated by quantized average consensus algorithms such as (Carli et al., 2010), the quantized gossip algorithm CHOCO-Gossip (Koloskova et al., 2019) converges linearly to the consensual solution. Combining CHOCO-Gossip and D-PSGD leads to a decentralized algorithm with compression, CHOCO-SGD, which converges sublinearly under strong convexity and bounded gradient assumptions. Its nonconvex variant is further analyzed in (Koloskova et al., 2020). A new compression scheme using the modulo operation is introduced in (Lu & De Sa, 2020) for decentralized optimization. A general algorithmic framework aiming to maintain the linear convergence of distributed optimization under compressed communication is considered in (Magnússon et al., 2020); it requires a contractive property that is not satisfied by many decentralized algorithms, including the algorithm in this paper.

3 Algorithm

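We first introduce the notation and definitions used in this work. We use bold upper-case letters such as $\mathbf{X}$ to denote matrices and bold lower-case letters such as $\mathbf{x}$ to denote vectors. Let $\mathbf{1}$ and $\mathbf{0}$ be vectors with all ones and all zeros, respectively; their dimensions will be provided when necessary. Given two matrices $\mathbf{X},\mathbf{Y}\in\mathbb{R}^{n\times d}$, we define their inner product as $\langle\mathbf{X},\mathbf{Y}\rangle = \operatorname{tr}(\mathbf{X}^\top\mathbf{Y})$ and the norm as $\|\mathbf{X}\| = \sqrt{\langle\mathbf{X},\mathbf{X}\rangle}$. We further define $\langle\mathbf{X},\mathbf{Y}\rangle_{\mathbf{M}} = \operatorname{tr}(\mathbf{X}^\top\mathbf{M}\mathbf{Y})$ and $\|\mathbf{X}\|_{\mathbf{M}} = \sqrt{\langle\mathbf{X},\mathbf{X}\rangle_{\mathbf{M}}}$ for any given symmetric positive semidefinite matrix $\mathbf{M}\in\mathbb{R}^{n\times n}$. For simplicity, we mostly use matrix notation in this work. For instance, each agent $i$ holds an individual estimate $\mathbf{x}_i\in\mathbb{R}^d$ of the global variable $\mathbf{x}\in\mathbb{R}^d$. Let $\mathbf{X}^k$ and $\nabla\mathbf{F}(\mathbf{X}^k)$ be the collections of $\{\mathbf{x}_i^k\}_{i=1}^n$ and $\{\nabla f_i(\mathbf{x}_i^k)\}_{i=1}^n$, defined below: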

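$$\mathbf{X}^k = \begin{bmatrix}\mathbf{x}_1^k & \cdots & \mathbf{x}_n^k\end{bmatrix}^\top \in\mathbb{R}^{n\times d}, \qquad \nabla\mathbf{F}(\mathbf{X}^k) = \begin{bmatrix}\nabla f_1(\mathbf{x}_1^k) & \cdots & \nabla f_n(\mathbf{x}_n^k)\end{bmatrix}^\top \in\mathbb{R}^{n\times d}. \tag{2}$$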

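We use $\nabla\mathbf{F}(\mathbf{X}^k;\xi^k)$ to denote the stochastic approximation of $\nabla\mathbf{F}(\mathbf{X}^k)$. With these notations, the update $\mathbf{X}^{k+1} = \mathbf{X}^k - \eta\nabla\mathbf{F}(\mathbf{X}^k;\xi^k)$ means that $\mathbf{x}_i^{k+1} = \mathbf{x}_i^k - \eta\nabla f_i(\mathbf{x}_i^k;\xi_i^k)$ for all $i$. In this paper, we need the average of all rows in $\mathbf{X}^k$ and $\nabla\mathbf{F}(\mathbf{X}^k;\xi^k)$, so we define $\bar{\mathbf{X}}^k = \frac{1}{n}\mathbf{1}_n^\top\mathbf{X}^k$ and $\overline{\nabla\mathbf{F}}(\mathbf{X}^k;\xi^k) = \frac{1}{n}\mathbf{1}_n^\top\nabla\mathbf{F}(\mathbf{X}^k;\xi^k)$. They are row vectors, and we take a transpose when a column vector is needed. The pseudoinverse of a matrix $\mathbf{M}$ is denoted as $\mathbf{M}^\dagger$. The largest, $i$th-largest, and smallest nonzero eigenvalues of a symmetric matrix $\mathbf{M}$ are $\lambda_{\max}(\mathbf{M})$, $\lambda_i(\mathbf{M})$, and $\lambda_{\min}^+(\mathbf{M})$.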

Assumption 1 (Mixing matrix).

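The connected network $\mathcal{G} = \{\mathcal{V},\mathcal{E}\}$ consists of a node set $\mathcal{V} = \{1,2,\ldots,n\}$ and an undirected edge set $\mathcal{E}$. The primitive symmetric doubly-stochastic matrix $\mathbf{W} = [w_{ij}]\in\mathbb{R}^{n\times n}$ encodes the network structure such that $w_{ij} = 0$ if nodes $i$ and $j$ are not connected and cannot exchange information.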

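Assumption 1 implies that $-1 < \lambda_n(\mathbf{W}) \le \lambda_{n-1}(\mathbf{W}) \le \cdots \le \lambda_2(\mathbf{W}) < \lambda_1(\mathbf{W}) = 1$ and $\mathbf{W}\mathbf{1} = \mathbf{1}$ (Xiao & Boyd, 2004; Shi et al., 2015). The matrix multiplication $\mathbf{X}^{k+1} = \mathbf{W}\mathbf{X}^k$ describes that agent $i$ takes a weighted sum from its neighbors and itself, i.e., $\mathbf{x}_i^{k+1} = \sum_{j\in\mathcal{N}_i\cup\{i\}} w_{ij}\mathbf{x}_j^k$, where $\mathcal{N}_i$ denotes the neighbors of agent $i$.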
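As a concrete instance of Assumption 1, the following NumPy sketch builds a mixing matrix for an eight-agent ring (the topology used in Section 5), assuming a weight of 1/3 on each agent and on each of its two neighbors. It checks the implied spectral properties and that row $i$ of $\mathbf{W}\mathbf{X}$ is agent $i$'s weighted neighborhood sum. The helper `ring_mixing_matrix` is a hypothetical name introduced only for this illustration.

```python
import numpy as np


def ring_mixing_matrix(n: int) -> np.ndarray:
    """Hypothetical helper: symmetric doubly-stochastic W for a ring of n >= 3 agents.

    Each agent puts weight 1/3 on itself and on each of its two ring neighbors.
    """
    W = np.zeros((n, n))
    for i in range(n):
        for j in (i - 1, i, i + 1):
            W[i, j % n] = 1.0 / 3.0
    return W


n, d = 8, 2
W = ring_mixing_matrix(n)
ones = np.ones(n)
eig = np.sort(np.linalg.eigvalsh(W))[::-1]  # lambda_1 >= lambda_2 >= ... >= lambda_n

print("symmetric:", np.allclose(W, W.T))
print("W 1 = 1:", np.allclose(W @ ones, ones))
print("lambda_1, lambda_2, lambda_n:", eig[0], eig[1], eig[-1])

# Row i of W @ X is agent i's weighted sum over its neighbors and itself.
X = np.arange(n * d, dtype=float).reshape(n, d)
i = 0
neighbors_and_self = [(i - 1) % n, i, (i + 1) % n]
manual = sum(W[i, j] * X[j] for j in neighbors_and_self)
print("gossip row matches:", np.allclose((W @ X)[i], manual))
```

With these weights the spectrum is $\frac{1}{3}(1 + 2\cos\frac{2\pi k}{n})$, so $\lambda_1 = 1$ is simple and $\lambda_n = -1/3 > -1$, as Assumption 1 requires.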

3.1 The Proposed Algorithm

The proposed algorithm LEAD for solving problem (1) is shown in Alg. 1 using matrix notation for conciseness. We will refer to its line numbers in the analysis. A complete algorithm description from the agent’s perspective can be found in Appendix A. Alg. 1 is designed to achieve two goals: (a) consensus ($\mathbf{x}_i^k - (\bar{\mathbf{X}}^k)^\top \to \mathbf{0}$) and (b) convergence ($(\bar{\mathbf{X}}^k)^\top \to \mathbf{x}^*$). We first discuss how goal (a) leads to goal (b) and then explain how LEAD fulfills goal (a).

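Algorithm 1 LEAD

Input: Stepsize $\eta$, parameters $(\alpha,\gamma)$, $\mathbf{X}^0$, $\mathbf{H}^1$, $\mathbf{D}^1 = (\mathbf{I}-\mathbf{W})\mathbf{Z}$ for any $\mathbf{Z}$

Output: $\mathbf{X}^K$ or $\frac{1}{n}\sum_{i=1}^n\mathbf{x}_i^K$

1: $\mathbf{H}_w^1 = \mathbf{W}\mathbf{H}^1$
2: $\mathbf{X}^1 = \mathbf{X}^0 - \eta\nabla\mathbf{F}(\mathbf{X}^0;\xi^0)$
3: for $k = 1, 2, \ldots, K-1$ do
4: $\quad\mathbf{Y}^k = \mathbf{X}^k - \eta\nabla\mathbf{F}(\mathbf{X}^k;\xi^k) - \eta\mathbf{D}^k$
5: $\quad\hat{\mathbf{Y}}^k, \hat{\mathbf{Y}}_w^k, \mathbf{H}^{k+1}, \mathbf{H}_w^{k+1} = \operatorname{COMM}(\mathbf{Y}^k, \mathbf{H}^k, \mathbf{H}_w^k)$
6: $\quad\mathbf{D}^{k+1} = \mathbf{D}^k + \frac{\gamma}{2\eta}(\hat{\mathbf{Y}}^k - \hat{\mathbf{Y}}_w^k)$
7: $\quad\mathbf{X}^{k+1} = \mathbf{X}^k - \eta\nabla\mathbf{F}(\mathbf{X}^k;\xi^k) - \eta\mathbf{D}^{k+1}$
8: end for
9: procedure COMM($\mathbf{Y}, \mathbf{H}, \mathbf{H}_w$)
10: $\quad\mathbf{Q} = \operatorname{Compress}(\mathbf{Y} - \mathbf{H})$ (compression)
11: $\quad\hat{\mathbf{Y}} = \mathbf{H} + \mathbf{Q}$
12: $\quad\hat{\mathbf{Y}}_w = \mathbf{H}_w + \mathbf{W}\mathbf{Q}$ (communication)
13: $\quad\mathbf{H} = (1-\alpha)\mathbf{H} + \alpha\hat{\mathbf{Y}}$
14: $\quad\mathbf{H}_w = (1-\alpha)\mathbf{H}_w + \alpha\hat{\mathbf{Y}}_w$
15: $\quad$Return: $\hat{\mathbf{Y}}, \hat{\mathbf{Y}}_w, \mathbf{H}, \mathbf{H}_w$
16: end procedure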
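Alg. 1 can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the objectives are hypothetical heterogeneous diagonal quadratics with full gradients, `Compress` is an unbiased 4-bit stochastic quantizer with $\infty$-norm scaling applied row by row (each row is one agent's message), the graph is a ring with weights 1/3, and the values $\eta = 0.5$, $\gamma = 1$, $\alpha = 0.5$ are illustrative choices rather than values derived from Theorem 1. The helper names `quantize_inf`, `compress_rows`, and `lead` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def quantize_inf(v, bits, rng):
    """Unbiased stochastic b-bit quantization scaled by ||v||_inf (assumed form)."""
    scale = np.max(np.abs(v))
    if scale == 0.0:
        return np.zeros_like(v)
    levels = 2 ** (bits - 1)
    u = rng.uniform(size=v.shape)
    return scale / levels * np.sign(v) * np.floor(levels * np.abs(v) / scale + u)


def compress_rows(M, bits, rng):
    # Each agent (row) compresses its own message independently.
    return np.stack([quantize_inf(row, bits, rng) for row in M])


def ring_mixing_matrix(n):
    W = np.zeros((n, n))
    for i in range(n):
        for j in (i - 1, i, i + 1):
            W[i, j % n] = 1.0 / 3.0
    return W


# Hypothetical heterogeneous objectives: f_i(x) = 0.5 * sum(a_i * x**2) - b_i @ x.
n, d = 8, 5
a = rng.uniform(1.0, 2.0, size=(n, d))   # curvatures, so mu >= 1 and L <= 2
b = rng.normal(size=(n, d))               # each agent has a different minimizer
x_star = b.sum(axis=0) / a.sum(axis=0)    # minimizer of (1/n) sum_i f_i


def grad(X):
    # Row i is grad f_i(x_i); full gradients, so sigma = 0.
    return a * X - b


def lead(W, eta, gamma, alpha, bits, iters, rng):
    X0 = np.zeros((n, d))
    X = X0 - eta * grad(X0)                     # Line 2
    D = np.zeros((n, d))                        # D^1 = (I - W) Z with Z = 0
    H = np.zeros((n, d))
    Hw = W @ H                                  # Line 1
    for _ in range(iters):
        g = grad(X)
        Y = X - eta * g - eta * D               # Line 4
        Q = compress_rows(Y - H, bits, rng)     # COMM: compress the difference
        Y_hat = H + Q
        Y_hat_w = Hw + W @ Q                    # COMM: the only communication
        H = (1 - alpha) * H + alpha * Y_hat
        Hw = (1 - alpha) * Hw + alpha * Y_hat_w
        D = D + gamma / (2 * eta) * (Y_hat - Y_hat_w)   # Line 6: dual update
        X = X - eta * g - eta * D               # Line 7: primal update
    return X, D


W = ring_mixing_matrix(n)
X, D = lead(W, eta=0.5, gamma=1.0, alpha=0.5, bits=4, iters=1000, rng=rng)
err = np.linalg.norm(X - x_star)  # x_star broadcasts against every row
print(f"distance to optimum: {err:.2e}")
print(f"max |column sum of D|: {np.abs(D.sum(axis=0)).max():.2e}")
```

Because $\mathbf{H}_w = \mathbf{W}\mathbf{H}$ is maintained, the dual update only adds terms of the form $(\mathbf{I}-\mathbf{W})\hat{\mathbf{Y}}^k$, so the column sums of `D` stay at zero up to rounding, and at convergence `D` matches $-\nabla\mathbf{F}(\mathbf{X}^*)$.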

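In essence, LEAD runs approximate SGD globally and reduces to exact SGD under consensus. One key property of LEAD is $\mathbf{1}_n^\top\mathbf{D}^k = \mathbf{0}$, regardless of the compression error in $\hat{\mathbf{Y}}^k$. It holds because, for the initialization, we require $\mathbf{D}^1 = (\mathbf{I}-\mathbf{W})\mathbf{Z}$ for some $\mathbf{Z}\in\mathbb{R}^{n\times d}$, e.g., $\mathbf{D}^1 = \mathbf{0}$, and because the update of $\mathbf{D}^k$ ensures $\mathbf{D}^k\in\operatorname{Range}(\mathbf{I}-\mathbf{W})$ for all $k$, while $\mathbf{1}_n^\top(\mathbf{I}-\mathbf{W}) = \mathbf{0}$, as we will explain later. Therefore, multiplying both sides of Line 7 by $\frac{1}{n}\mathbf{1}_n^\top$ leads to a global average view of Alg. 1: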

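$$\bar{\mathbf{X}}^{k+1} = \bar{\mathbf{X}}^k - \eta\,\overline{\nabla\mathbf{F}}(\mathbf{X}^k;\xi^k), \tag{3}$$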

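which does not contain the compression error. Note that this is an approximate SGD step because, as shown in (2), the gradient $\nabla\mathbf{F}(\mathbf{X}^k;\xi^k)$ is not evaluated on a global synchronized model $\bar{\mathbf{X}}^k$. However, if the solution converges to the consensus solution, i.e., $\mathbf{x}_i^k - (\bar{\mathbf{X}}^k)^\top \to \mathbf{0}$, then $\mathbb{E}_\xi[\overline{\nabla\mathbf{F}}(\mathbf{X}^k;\xi^k)]$ approaches the gradient of $f$ at the average model, and (3) gradually reduces to exact SGD.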
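The averaging argument behind (3) can be checked numerically. The sketch below assumes a ring mixing matrix with weights 1/3 and uses random stand-ins for the gradients and for the compressed message $\hat{\mathbf{Y}}^k$ (so it deliberately carries arbitrary compression error). It verifies that a dual update of the form in Line 6 keeps the row average of $\mathbf{D}$ at zero, so the averaged primal update is exactly the SGD-like step (3).

```python
import numpy as np

rng = np.random.default_rng(1)
n, d, eta, gamma = 8, 3, 0.1, 1.0

# Ring mixing matrix with weight 1/3 on self and both neighbors (assumed for illustration).
W = np.zeros((n, n))
for i in range(n):
    for j in (i - 1, i, i + 1):
        W[i, j % n] = 1.0 / 3.0
I = np.eye(n)


def avg(M):
    """Row average (1/n) 1^T M, returned as a 1-D array."""
    return M.mean(axis=0)


D = (I - W) @ rng.normal(size=(n, d))          # any D^k in Range(I - W)
Y_hat = rng.normal(size=(n, d))                # compressed message, error included
D_next = D + gamma / (2 * eta) * (Y_hat - W @ Y_hat)   # Line 6 with Y_hat_w = W Y_hat

X = rng.normal(size=(n, d))
G = rng.normal(size=(n, d))                    # stand-in for stochastic gradients
X_next = X - eta * G - eta * D_next            # Line 7

print(np.allclose(avg(D), 0.0), np.allclose(avg(D_next), 0.0))
print(np.allclose(avg(X_next), avg(X) - eta * avg(G)))  # equation (3)
```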

Having established how consensus leads to convergence, the remaining obstacle is how to achieve consensus under local communication and compression. This requires addressing two issues, i.e., data heterogeneity and compression error. To deal with these issues, existing algorithms, such as DCD-SGD, ECD-SGD, QDGD, DeepSqueeze, Moniqua, and CHOCO-SGD, need either a diminishing stepsize or a constant but small stepsize that depends on the total number of iterations. However, these choices unavoidably slow down convergence and make parameter tuning harder. In contrast, LEAD addresses these issues differently, as explained below.

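Data heterogeneity. Data heterogeneity among agents is common in distributed settings, especially in real-world applications where different agents collect data from different scenarios. In other words, we generally have $f_i \neq f_j$ for $i \neq j$. The optimality condition of problem (1) gives $\mathbf{1}_n^\top\nabla\mathbf{F}(\mathbf{X}^*) = \mathbf{0}$, where $\mathbf{X}^* = [\mathbf{x}^*,\ldots,\mathbf{x}^*]^\top$ is a consensual and optimal solution. The data heterogeneity and the optimality condition imply that there exist at least two agents $i$ and $j$ such that $\nabla f_i(\mathbf{x}^*) \neq \mathbf{0}$ and $\nabla f_j(\mathbf{x}^*) \neq \mathbf{0}$. As a result, a simple D-PSGD algorithm cannot converge to the consensual and optimal solution, because $\mathbf{W}\mathbf{X}^* = \mathbf{X}^*$ while $\nabla\mathbf{F}(\mathbf{X}^*)\neq\mathbf{0}$, so that $\mathbf{X}^* \neq \mathbf{W}\mathbf{X}^* - \eta\nabla\mathbf{F}(\mathbf{X}^*)$ even when the stochastic gradient variance is zero.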
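The failure of D-PSGD under heterogeneity can be reproduced on a toy problem. The sketch assumes hypothetical scalar objectives $f_i(x) = \frac{1}{2}(x - c_i)^2$ with distinct $c_i$, a ring mixing matrix with weights 1/3, and exact gradients (zero variance). The local gradients at $x^*$ sum to zero but are individually nonzero, and D-PSGD settles at its own fixed point, which is not $x^*$.

```python
import numpy as np

n, eta = 8, 0.1
c = np.linspace(-1.0, 1.0, n)   # hypothetical agent-specific minimizers
x_star = c.mean()               # minimizer of (1/n) sum_i 0.5 * (x - c_i)**2

# Ring mixing matrix with weight 1/3 on self and both neighbors (assumed).
W = np.zeros((n, n))
for i in range(n):
    for j in (i - 1, i, i + 1):
        W[i, j % n] = 1.0 / 3.0


def grad(x):
    # grad f_i(x_i) = x_i - c_i (scalar case, d = 1)
    return x - c


g_star = grad(np.full(n, x_star))
print("sum of gradients at x*:", g_star.sum())              # optimality condition
print("largest local gradient at x*:", np.abs(g_star).max())  # heterogeneity

# D-PSGD with exact gradients: x^{k+1} = W x^k - eta * grad(x^k)
x = np.zeros(n)
for _ in range(2000):
    x = W @ x - eta * grad(x)

# Its fixed point solves ((1 + eta) I - W) x = eta c, which is not x* 1.
x_fixed = np.linalg.solve((1.0 + eta) * np.eye(n) - W, eta * c)
print("D-PSGD reached its fixed point:", np.allclose(x, x_fixed))
print("max distance to x*:", np.abs(x - x_star).max())
```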

Gradient correction. Primal-dual algorithms and gradient tracking algorithms are able to converge much faster than DGD-type algorithms by handling the data heterogeneity issue, as introduced in Section 2. Specifically, LEAD is motivated by the design of the primal-dual algorithm NIDS (Li et al., 2019), and the relation becomes clear if we consider the two-step reformulation of NIDS adopted in (Li & Yan, 2019):

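$$\mathbf{D}^{k+1} = \mathbf{D}^k + \frac{\mathbf{I}-\mathbf{W}}{2\eta}\left(\mathbf{X}^k - \eta\nabla\mathbf{F}(\mathbf{X}^k) - \eta\mathbf{D}^k\right), \tag{4}$$

$$\mathbf{X}^{k+1} = \mathbf{X}^k - \eta\nabla\mathbf{F}(\mathbf{X}^k) - \eta\mathbf{D}^{k+1}, \tag{5}$$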

where $\mathbf{X}$ and $\mathbf{D}$ represent the primal and dual variables, respectively. The dual variable $\mathbf{D}$ plays the role of gradient correction. As $k\to\infty$, we expect $\mathbf{D}^k \to -\nabla\mathbf{F}(\mathbf{X}^*)$, and $\mathbf{X}^k$ will converge to $\mathbf{X}^*$ via the update in (5), since $\mathbf{D}^{k+1}$ corrects the nonzero gradient $\nabla\mathbf{F}(\mathbf{X}^k)$ asymptotically. The key design of Alg. 1 is to provide compression for the auxiliary variable defined as $\mathbf{Y}^k = \mathbf{X}^k - \eta\nabla\mathbf{F}(\mathbf{X}^k) - \eta\mathbf{D}^k$. Such design ensures that the dual variable $\mathbf{D}^k$ lies in $\operatorname{Range}(\mathbf{I}-\mathbf{W})$, which is essential for convergence. Moreover, it achieves implicit error compensation, as we will explain later. To stabilize the algorithm with the inexact dual update, we introduce a parameter $\gamma$ to control the stepsize in the dual update. Therefore, if we ignore the details of the compression, Alg. 1 can be concisely written as

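$$\mathbf{Y}^k = \mathbf{X}^k - \eta\nabla\mathbf{F}(\mathbf{X}^k;\xi^k) - \eta\mathbf{D}^k, \tag{6}$$

$$\mathbf{D}^{k+1} = \mathbf{D}^k + \frac{\gamma}{2\eta}(\mathbf{I}-\mathbf{W})\hat{\mathbf{Y}}^k, \tag{7}$$

$$\mathbf{X}^{k+1} = \mathbf{X}^k - \eta\nabla\mathbf{F}(\mathbf{X}^k;\xi^k) - \eta\mathbf{D}^{k+1}, \tag{8}$$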

where $\hat{\mathbf{Y}}^k$ represents the compression of $\mathbf{Y}^k$ and $\nabla\mathbf{F}(\mathbf{X}^k;\xi^k)$ denotes the stochastic gradients.
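To see the gradient correction at work, the sketch below runs the uncompressed two-step form (4)-(5), which is also what (6)-(8) reduce to when $\gamma = 1$ and $\hat{\mathbf{Y}}^k = \mathbf{Y}^k$ with full gradients. It assumes hypothetical scalar objectives $f_i(x) = \frac{1}{2}(x - c_i)^2$ with distinct $c_i$ and a ring mixing matrix with weights 1/3. On such heterogeneous data the iterates reach $x^*$ exactly, and $\mathbf{D}^k$ approaches $-\nabla\mathbf{F}(\mathbf{X}^*)$.

```python
import numpy as np

n, eta = 8, 0.5
c = np.linspace(-1.0, 1.0, n)      # hypothetical f_i(x) = 0.5 * (x - c_i)**2
x_star = c.mean()                  # minimizer of the average objective

# Ring mixing matrix with weight 1/3 on self and both neighbors (assumed).
W = np.zeros((n, n))
for i in range(n):
    for j in (i - 1, i, i + 1):
        W[i, j % n] = 1.0 / 3.0
I = np.eye(n)


def grad(x):
    return x - c


x = np.zeros(n)
D = np.zeros(n)                    # starts in Range(I - W)
for _ in range(2000):
    g = grad(x)
    D = D + (I - W) @ (x - eta * g - eta * D) / (2 * eta)   # (4)
    x = x - eta * g - eta * D                                # (5)

print("max |x_i - x*|:", np.abs(x - x_star).max())
print("max |D_i + grad f_i(x*)|:", np.abs(D + grad(np.full(n, x_star))).max())
```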

Nevertheless, it remains unknown how to compress the communication and how fast the algorithm can converge in the presence of compression error. In the following, we propose to carefully control the compression error by difference compression and error compensation such that the inexact dual update (Line 6) and primal update (Line 7) still guarantee convergence, as proved in Section 4.

Compression error. Unlike existing works, which typically compress the primal variable or its difference, LEAD first constructs an intermediate variable $\mathbf{Y}^k$ and applies compression to obtain its coarse representation $\hat{\mathbf{Y}}^k$, as shown in the procedure $\operatorname{COMM}(\mathbf{Y},\mathbf{H},\mathbf{H}_w)$:

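- Compress the difference between $\mathbf{Y}$ and the state variable $\mathbf{H}$ as $\mathbf{Q}$;
- $\mathbf{Q}$ is encoded into a low-bit representation, which enables the efficient local communication step $\hat{\mathbf{Y}}_w = \mathbf{H}_w + \mathbf{W}\mathbf{Q}$. This is the only communication step in each iteration.
- Each agent recovers its estimate $\hat{\mathbf{Y}}$ by $\hat{\mathbf{Y}} = \mathbf{H} + \mathbf{Q}$, and we have $\hat{\mathbf{Y}}_w = \mathbf{W}\hat{\mathbf{Y}}$.
- The states $\mathbf{H}$ and $\mathbf{H}_w$ are updated based on $\hat{\mathbf{Y}}$ and $\hat{\mathbf{Y}}_w$, respectively, i.e., $\mathbf{H} \leftarrow (1-\alpha)\mathbf{H} + \alpha\hat{\mathbf{Y}}$ and $\mathbf{H}_w \leftarrow (1-\alpha)\mathbf{H}_w + \alpha\hat{\mathbf{Y}}_w$. We have $\mathbf{H}_w = \mathbf{W}\mathbf{H}$.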
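The COMM procedure can be sketched as follows. To keep the example short, it uses an unbiased random-$k$ sparsifier as a stand-in compressor (an assumption, not the quantizer used in the paper's experiments), a ring mixing matrix with weights 1/3, and a fixed target $\mathbf{Y}$ so that the tracking behavior of $\mathbf{H}$ is visible. The function names `rand_k` and `comm` are hypothetical. The sketch checks the invariants $\hat{\mathbf{Y}}_w = \mathbf{W}\hat{\mathbf{Y}}$ and $\mathbf{H}_w = \mathbf{W}\mathbf{H}$, and that the compression error shrinks as $\mathbf{H}$ approaches $\mathbf{Y}$.

```python
import numpy as np

rng = np.random.default_rng(2)


def rand_k(v, k, rng):
    """Unbiased random-k sparsifier, used here only as a stand-in compressor."""
    d = v.size
    out = np.zeros_like(v)
    idx = rng.choice(d, size=k, replace=False)
    out[idx] = (d / k) * v[idx]
    return out


def comm(Y, H, Hw, W, alpha, rng, k=2):
    """Sketch of COMM(Y, H, H_w): only the compressed difference Q is communicated."""
    Q = np.stack([rand_k(row, k, rng) for row in Y - H])  # agent i compresses y_i - h_i
    Y_hat = H + Q              # local reconstruction of Y
    Y_hat_w = Hw + W @ Q       # weighted neighborhood sum, built from received Q only
    H = (1 - alpha) * H + alpha * Y_hat
    Hw = (1 - alpha) * Hw + alpha * Y_hat_w
    return Y_hat, Y_hat_w, H, Hw


n, d, alpha = 8, 6, 0.5
W = np.zeros((n, n))
for i in range(n):
    for j in (i - 1, i, i + 1):
        W[i, j % n] = 1.0 / 3.0

H = rng.normal(size=(n, d))
Hw = W @ H                      # invariant H_w = W H holds at initialization
Y = rng.normal(size=(n, d))     # a fixed target, to show that H tracks Y
for _ in range(200):
    Y_hat, Y_hat_w, H, Hw = comm(Y, H, Hw, W, alpha, rng)

print("Y_hat_w == W Y_hat:", np.allclose(Y_hat_w, W @ Y_hat))
print("H_w == W H:", np.allclose(Hw, W @ H))
print("compression error ||Y_hat - Y||:", np.linalg.norm(Y_hat - Y))
```

The momentum form $\mathbf{H} \leftarrow \mathbf{H} + \alpha\mathbf{Q}$ moves the state only part of the way toward the received estimate, which keeps the error $\mathbf{Y} - \mathbf{H}$, and hence the compressed message, shrinking even when a single compressed sample overshoots.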

With this procedure, we expect that when both $\mathbf{Y}^k$ and $\mathbf{H}^k$ converge to $\mathbf{X}^*$, the compression error vanishes asymptotically, owing to the assumption on the compression operator in Assumption 2.

Remark 1.

Note that difference compression is also applied in DCD-PSGD (Tang et al., 2018a) and CHOCO-SGD (Koloskova et al., 2019), but their state update is a simple integration of the compressed difference. We find this update is usually too aggressive and causes instability, as shown in our experiments. Therefore, we adopt a momentum update motivated by DIANA (Mishchenko et al., 2019), which reduces the compression error for gradient compression in centralized optimization.

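Implicit error compensation. On the other hand, even if compression error exists, LEAD essentially compensates for the error in the inexact dual update (Line 6), making the algorithm more stable and robust. To illustrate how it works, let $\mathbf{E}^k = \hat{\mathbf{Y}}^k - \mathbf{Y}^k$ denote the compression error and $\mathbf{e}_i^k$ be its $i$-th row. Since $\hat{\mathbf{Y}}_w^k = \mathbf{W}\hat{\mathbf{Y}}^k$, the update of $\mathbf{D}^k$ gives

$$\mathbf{D}^{k+1} = \mathbf{D}^k + \frac{\gamma}{2\eta}\left(\hat{\mathbf{Y}}^k - \hat{\mathbf{Y}}_w^k\right) = \mathbf{D}^k + \frac{\gamma}{2\eta}(\mathbf{I}-\mathbf{W})\mathbf{Y}^k + \frac{\gamma}{2\eta}\left(\mathbf{E}^k - \mathbf{W}\mathbf{E}^k\right),$$

where $-\mathbf{W}\mathbf{E}^k$ indicates that agent $i$ spreads the total compression error $-\sum_{j\in\mathcal{N}_i\cup\{i\}} w_{ji}\mathbf{e}_i^k = -\mathbf{e}_i^k$ to its neighbors and itself, and $\mathbf{E}^k$ indicates that each agent compensates this error locally by adding $\mathbf{e}_i^k$ back. This error compensation also explains why the global view in (3) does not involve compression error.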
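The decomposition of the dual update can be verified numerically. The sketch assumes random stand-ins for $\mathbf{Y}^k$, $\mathbf{D}^k$, and the compression error $\mathbf{E}^k$, a ring mixing matrix with weights 1/3, and $\hat{\mathbf{Y}}_w^k = \mathbf{W}\hat{\mathbf{Y}}^k$, which holds when $\mathbf{H}_w = \mathbf{W}\mathbf{H}$. It also checks that the error agent 0 spreads through $\mathbf{W}$ totals exactly $\mathbf{e}_0$, so the injected error has zero network average.

```python
import numpy as np

rng = np.random.default_rng(3)
n, d, eta, gamma = 8, 4, 0.1, 1.0

# Ring mixing matrix with weight 1/3 on self and both neighbors (assumed for illustration).
W = np.zeros((n, n))
for i in range(n):
    for j in (i - 1, i, i + 1):
        W[i, j % n] = 1.0 / 3.0

Y = rng.normal(size=(n, d))
E = 0.1 * rng.normal(size=(n, d))   # hypothetical compression error E^k = Y_hat^k - Y^k
Y_hat = Y + E
Y_hat_w = W @ Y_hat                 # what COMM returns when H_w = W H
D = rng.normal(size=(n, d))
D = D - D.mean(axis=0)              # any D^k with zero column sums

# Line 6 versus its split into an exact part and an error part
D_next = D + gamma / (2 * eta) * (Y_hat - Y_hat_w)
D_split = (D + gamma / (2 * eta) * (np.eye(n) - W) @ Y
           + gamma / (2 * eta) * (E - W @ E))
print("decomposition holds:", np.allclose(D_next, D_split))

# Agent 0 sends w_j0 * e_0 to each agent j; column 0 of W sums to one,
# so the total spread equals e_0, which agent 0 adds back through the +E term.
spread = W[:, [0]] * E[0]
print("total spread equals e_0:", np.allclose(spread.sum(axis=0), E[0]))
print("error term has zero average:", np.allclose((E - W @ E).mean(axis=0), 0.0))
```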

Remark 2.

Note that in LEAD, the compression error is compensated into the model through Line 6 and Line 7, such that the gradient computation in the next iteration is aware of the compression error. This differs in a subtle but important way from the error compensation or error feedback in (Seide et al., 2014; Wu et al., 2018; Stich et al., 2018; Karimireddy et al., 2019; Tang et al., 2019b; Liu et al., 2020; Tang et al., 2019a), where the error is stored in memory and only compensated after gradient computation and before compression.

4 Theoretical Analysis

In this section, we show the convergence rate for the proposed algorithm LEAD. Before showing the main theorem, we make some assumptions, which are commonly used for the analysis of decentralized optimization algorithms. All proofs are provided in Appendix E.

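Assumption 2 (Unbiased and $C$-contracted operator).

The compression operator $Q:\mathbb{R}^d\to\mathbb{R}^d$ is unbiased, i.e., $\mathbb{E}\,Q(\mathbf{x}) = \mathbf{x}$, and there exists $C \ge 0$ such that $\mathbb{E}\|\mathbf{x} - Q(\mathbf{x})\|_2^2 \le C\|\mathbf{x}\|_2^2$ for all $\mathbf{x}\in\mathbb{R}^d$.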
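Random-$k$ sparsification, which keeps $k$ of the $d$ coordinates at random and rescales them by $d/k$, is one standard operator satisfying Assumption 2, with $C = d/k - 1$. It is used here only as an illustration (the experiments in Section 5 use quantization instead). The Monte Carlo sketch below estimates both the bias and the constant $C$; the helper `rand_k` is a hypothetical name.

```python
import numpy as np

rng = np.random.default_rng(4)


def rand_k(v, k, rng):
    """Keep k random coordinates and rescale by d/k so that E[Q(v)] = v."""
    d = v.size
    out = np.zeros_like(v)
    idx = rng.choice(d, size=k, replace=False)
    out[idx] = (d / k) * v[idx]
    return out


d, k, trials = 10, 3, 20_000
x = rng.normal(size=d)
samples = np.stack([rand_k(x, k, rng) for _ in range(trials)])

mean_est = samples.mean(axis=0)                       # approaches x (unbiasedness)
C_est = np.mean(np.sum((samples - x) ** 2, axis=1)) / np.sum(x ** 2)
C_theory = d / k - 1                                  # exact value for rand-k
print("max |E[Q(x)] - x|:", np.abs(mean_est - x).max())
print("C estimate vs theory:", C_est, C_theory)
```

Smaller $k$ sends fewer coordinates but increases $C$; Remark 5 states that LEAD tolerates any finite $C$, provided its parameters are chosen accordingly.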

Assumption 3 (Stochastic gradient).

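The stochastic gradient $\nabla f_i(\mathbf{x};\xi_i)$ is unbiased, i.e., $\mathbb{E}_\xi\nabla f_i(\mathbf{x};\xi_i) = \nabla f_i(\mathbf{x})$, and the stochastic gradient variance is bounded: $\mathbb{E}_\xi\|\nabla f_i(\mathbf{x};\xi_i) - \nabla f_i(\mathbf{x})\|_2^2 \le \sigma_i^2$ for all $i\in\{1,\ldots,n\}$. Denote $\sigma^2 = \frac{1}{n}\sum_{i=1}^n\sigma_i^2$.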

Assumption 4.

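Each $f_i$ is $L$-smooth and $\mu$-strongly convex with $L \ge \mu > 0$, i.e., for $i = 1,\ldots,n$ and all $\mathbf{x},\mathbf{y}\in\mathbb{R}^d$, we have

$$f_i(\mathbf{y}) + \langle\nabla f_i(\mathbf{y}), \mathbf{x}-\mathbf{y}\rangle + \frac{\mu}{2}\|\mathbf{x}-\mathbf{y}\|^2 \le f_i(\mathbf{x}) \le f_i(\mathbf{y}) + \langle\nabla f_i(\mathbf{y}), \mathbf{x}-\mathbf{y}\rangle + \frac{L}{2}\|\mathbf{x}-\mathbf{y}\|^2.$$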

Theorem 1 (Constant stepsize).

Corollary 1 (Complexity bounds).

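Define the condition numbers of the objective function and the communication graph as $\kappa_f = \frac{L}{\mu}$ and $\kappa_g = \frac{\lambda_{\max}(\mathbf{I}-\mathbf{W})}{\lambda_{\min}^+(\mathbf{I}-\mathbf{W})}$, respectively. Under the same setting as in Theorem 1, we can choose $\eta$, $\gamma$, and $\alpha$ such that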

With full gradient (i.e., $\sigma = 0$), we obtain the following complexity bounds:

- LEAD converges to the $\epsilon$-accurate solution with the iteration complexity
- When $C = 0$ (i.e., there is no compression), we obtain the iteration complexity $O\big((\kappa_f + \kappa_g)\log\frac{1}{\epsilon}\big)$. This exactly recovers the convergence rate of NIDS (Li et al., 2019).
- For sufficiently small $C$, the asymptotic complexity is $O\big((\kappa_f + \kappa_g)\log\frac{1}{\epsilon}\big)$, which also recovers that of NIDS (Li et al., 2019) and indicates that compression does not harm the convergence in this case.
- With $C = 0$ (or sufficiently small $C$) and a fully connected communication graph (i.e., $\mathbf{W} = \frac{1}{n}\mathbf{1}\mathbf{1}^\top$), we have $\lambda_{\max}(\mathbf{I}-\mathbf{W}) = 1$ and $\kappa_g = 1$. Therefore, we obtain the complexity bound $O\big(\kappa_f\log\frac{1}{\epsilon}\big)$, which recovers the convergence rate of gradient descent (Nesterov, 2013).

Remark 4.

Under the setting in Theorem 1, LEAD converges linearly to a neighborhood of the optimum, and it converges linearly and exactly to the optimum if the full gradient is used, i.e., $\sigma = 0$. The linear convergence of LEAD holds when , but we omit the proof.

Remark 5 (Arbitrary compression precision).

Based on the compression-related constant $C$ and the network-related constant, the algorithm parameters can always be selected in certain ranges to achieve convergence. This suggests that LEAD supports unbiased compression with arbitrary precision, i.e., any $C > 0$.

Corollary 2 (Consensus error).

Theorem 2 (Diminishing stepsize).

Remark 6.

Compared with CHOCO-SGD, LEAD requires unbiased compression, and its convergence under biased compression has not been investigated yet. The analysis of CHOCO-SGD relies on the bounded gradient assumption, i.e., $\mathbb{E}\|\nabla f_i(\mathbf{x};\xi)\|^2 \le G^2$, which is restrictive because it conflicts with strong convexity, while LEAD does not need this assumption. Moreover, the theorem for CHOCO-SGD requires a specific choice of $\gamma$, while LEAD only requires $\gamma$ to be within a rather large range. This may explain the advantage of LEAD over CHOCO-SGD in terms of robustness to parameter settings.

5 Numerical Experiment

We consider three machine learning problems: $\ell_2$-regularized linear regression, logistic regression, and deep neural network training. The proposed LEAD is compared with QDGD (Reisizadeh et al., 2019a), DeepSqueeze (Tang et al., 2019a), CHOCO-SGD (Koloskova et al., 2019), and two non-compressed algorithms, DGD (Yuan et al., 2016) and NIDS (Li et al., 2019).

Setup. We consider eight machines connected in a ring topology network. Each agent can only exchange information with its two 1-hop neighbors. The mixing weight is simply set as 1/3. For compression, we use the unbiased $b$-bit quantization method with $\infty$-norm

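$$Q_\infty(\mathbf{x}) := \left(\|\mathbf{x}\|_\infty\, 2^{-(b-1)}\operatorname{sign}(\mathbf{x})\right) \odot \left\lfloor \frac{2^{(b-1)}|\mathbf{x}|}{\|\mathbf{x}\|_\infty} + \mathbf{u} \right\rfloor, \tag{14}$$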

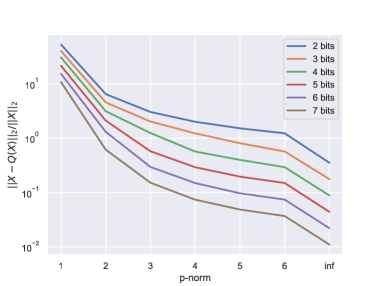

where $\odot$ is the Hadamard product, $|\mathbf{x}|$ is the elementwise absolute value of $\mathbf{x}$, and $\mathbf{u}$ is a random vector uniformly distributed in $[0,1]^d$. Only $\operatorname{sign}(\mathbf{x})$, the norm $\|\mathbf{x}\|_\infty$, and the integers in the bracket need to be transmitted. Note that this quantization method is similar to the quantization used in QSGD (Alistarh et al., 2017) and CHOCO-SGD (Koloskova et al., 2019), but we use $\infty$-norm scaling instead of $2$-norm scaling. This small change significantly improves compression precision, as justified both theoretically and empirically in Appendix C. In this section, we choose -bit quantization and quantize the data blockwise (block size = ).
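The quantizer and the effect of the scaling norm can be sketched as follows. The code assumes $2^{b-1}$ magnitude levels with one bit reserved for the sign (our reading of (14)), a hypothetical block of 512 Gaussian entries, and Monte Carlo estimates of $\mathbb{E}\|Q(\mathbf{x})-\mathbf{x}\|^2/\|\mathbf{x}\|^2$. It compares $\infty$-norm scaling with $2$-norm scaling at the same bit budget and checks unbiasedness; the function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(5)


def quantize(x, bits, norm_ord, rng):
    """Unbiased b-bit stochastic quantization with scale ||x||_p (p = np.inf or 2).

    Transmitted: sign(x), the scale, and the integers in 0..2**(bits - 1).
    """
    scale = np.linalg.norm(x, ord=norm_ord)
    if scale == 0.0:
        return np.zeros_like(x)
    levels = 2 ** (bits - 1)                   # one bit is reserved for the sign
    u = rng.uniform(size=x.shape)              # u ~ Uniform[0, 1)^d
    ints = np.floor(levels * np.abs(x) / scale + u)
    return (scale / levels) * np.sign(x) * ints


def relative_error(x, bits, norm_ord, trials, rng):
    """Monte Carlo estimate of E||Q(x) - x||^2 / ||x||^2."""
    errs = [np.sum((quantize(x, bits, norm_ord, rng) - x) ** 2) for _ in range(trials)]
    return np.mean(errs) / np.sum(x ** 2)


x = rng.normal(size=512)                       # one hypothetical block
err_inf = relative_error(x, bits=2, norm_ord=np.inf, trials=2000, rng=rng)
err_two = relative_error(x, bits=2, norm_ord=2, trials=2000, rng=rng)
mean_q = np.mean([quantize(x, 2, np.inf, rng) for _ in range(2000)], axis=0)
print("relative error, inf-norm scaling:", err_inf)
print("relative error, 2-norm scaling:  ", err_two)
print("max |E[Q(x)] - x| (inf-norm):", np.abs(mean_q - x).max())
```

With $2$-norm scaling, most entries satisfy $|x_j| \ll \|\mathbf{x}\|_2 / 2^{b-1}$, so they are rounded either to zero or to a comparatively large level, which inflates the variance; scaling by $\|\mathbf{x}\|_\infty$ spreads the entries over the available levels instead.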

For all experiments, we tune the stepsize $\eta$ over a fixed grid of candidate values. For QDGD, CHOCO-SGD, and DeepSqueeze, the additional parameter $\gamma$ is also tuned over a fixed grid. Note that different notations are used in their original papers; here we uniformly denote the stepsize as $\eta$ and the additional parameter in these algorithms as $\gamma$ for simplicity. For LEAD, we simply fix $\alpha$ and $\gamma$ for all experiments, since we find LEAD robust to parameter settings, as validated by the parameter sensitivity analysis in Appendix D.1. This indicates that little effort is needed to tune LEAD. Detailed parameter settings for all experiments are summarized in Appendix D.3.

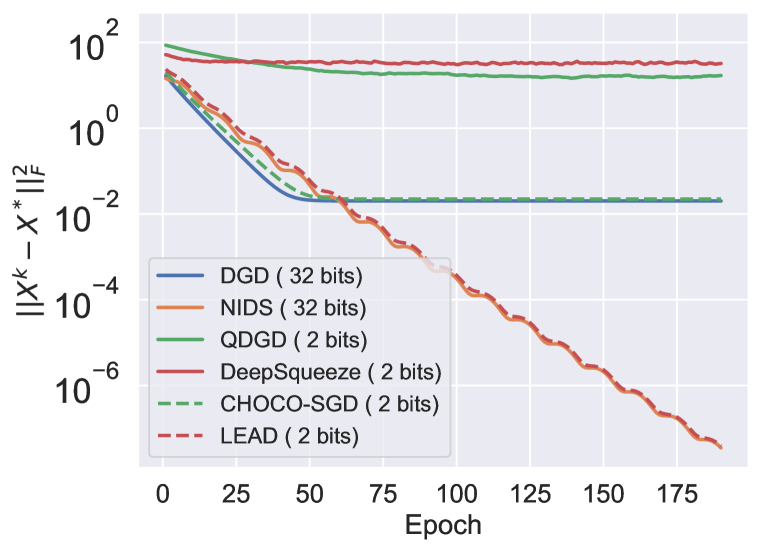

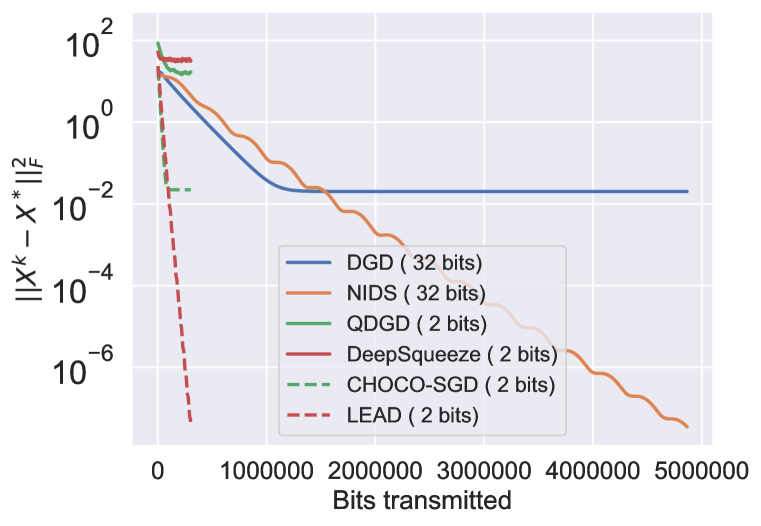

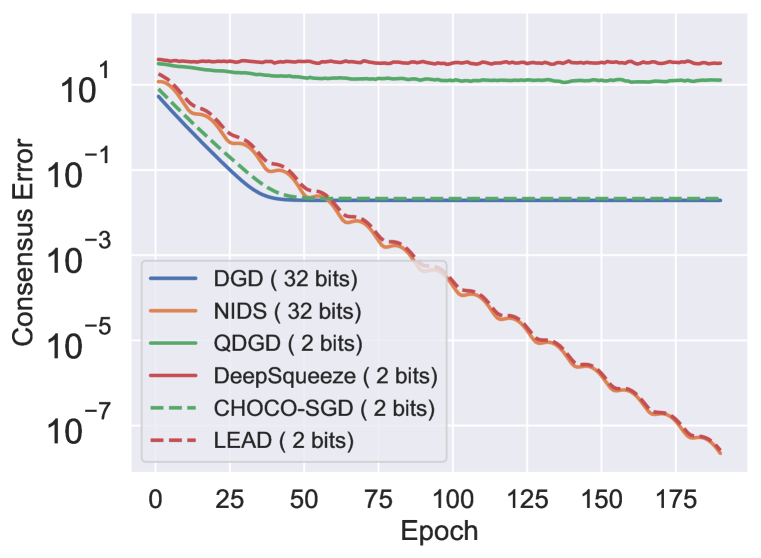

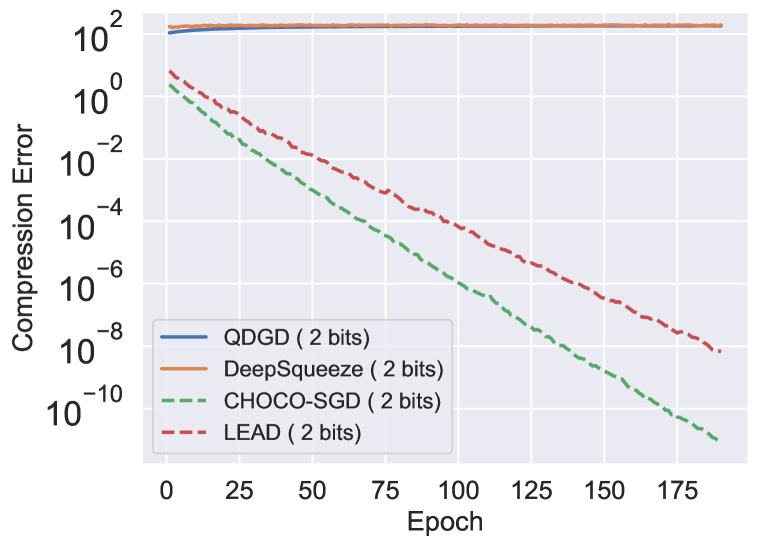

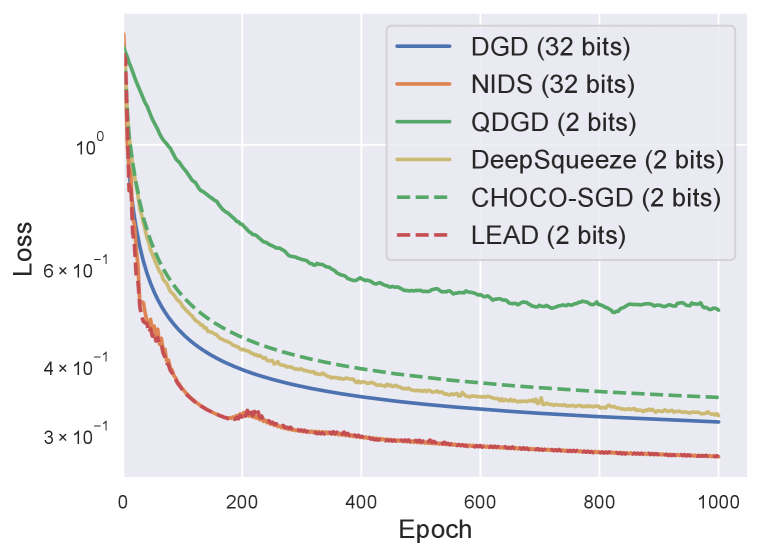

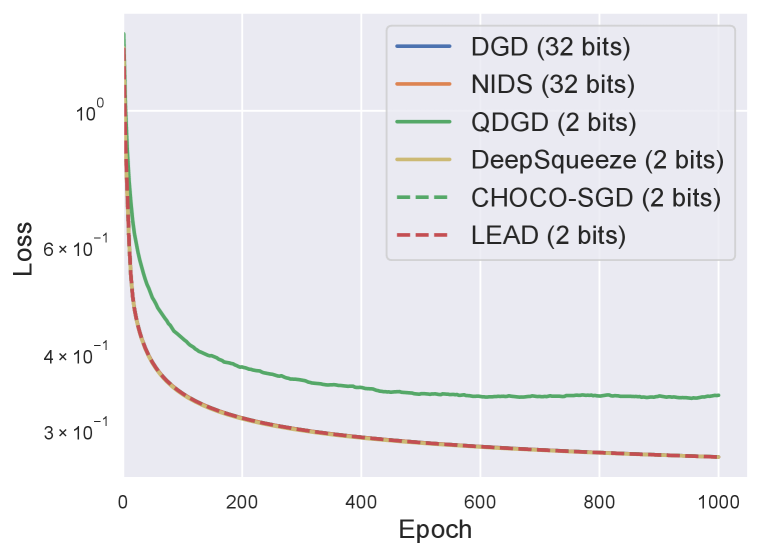

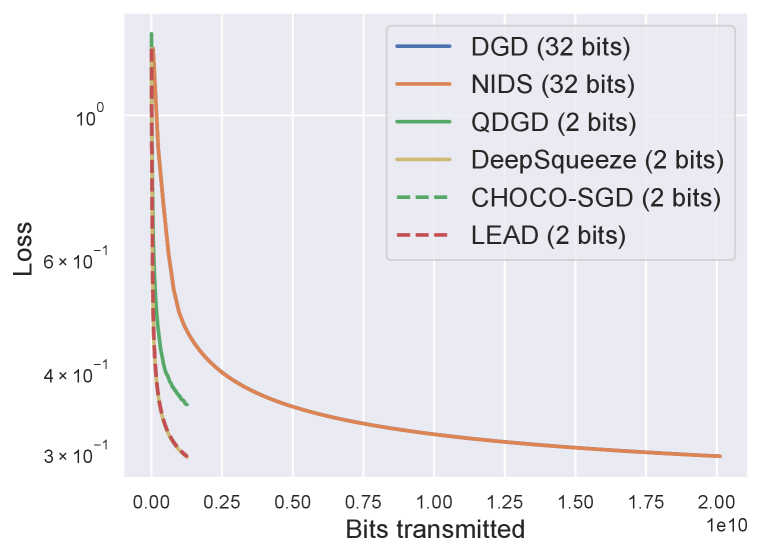

Linear regression. We consider the problem $\min_{\mathbf{x}}\sum_{i=1}^n\big(\|\mathbf{A}_i\mathbf{x} - \mathbf{b}_i\|^2 + \lambda\|\mathbf{x}\|^2\big)$. The data matrices $\mathbf{A}_i$ and the true solution $\mathbf{x}'$ are randomly synthesized. The values $\mathbf{b}_i$ are generated by adding Gaussian noise to $\mathbf{A}_i\mathbf{x}'$. We denote the optimal solution of the regularized problem by $\mathbf{x}^*$. We use the full-batch gradient to exclude the impact of gradient variance. The performance is shown in Fig. 1. The distance to $\mathbf{x}^*$ in Fig. 1a and the consensus error in Fig. 1c verify that LEAD converges exponentially to the optimal consensual solution. It significantly outperforms most baselines and matches NIDS well under the same number of iterations. Fig. 1b demonstrates the benefit of compression when considering the communication bits. Fig. 1d shows that the compression error vanishes for both LEAD and CHOCO-SGD, while it remains large for QDGD and DeepSqueeze because they directly compress the local models.

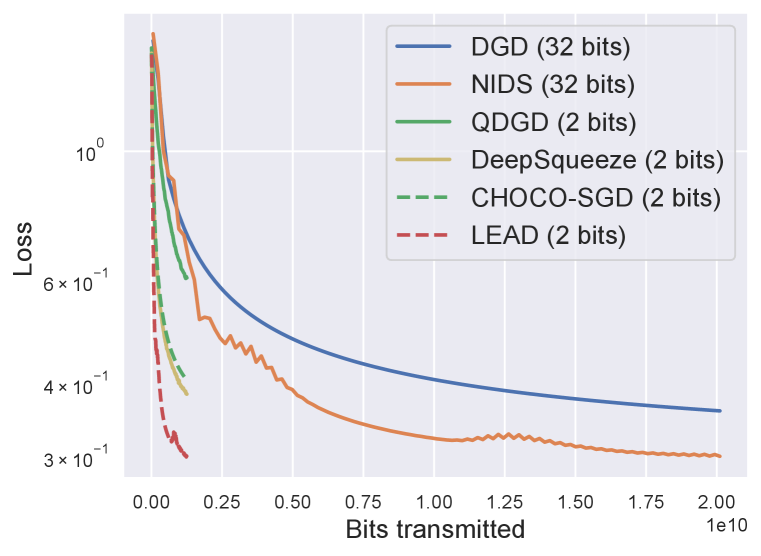

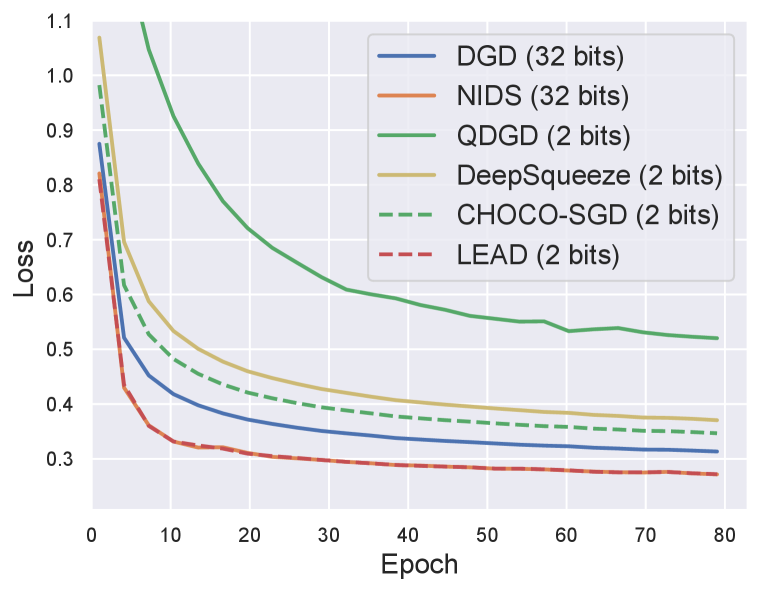

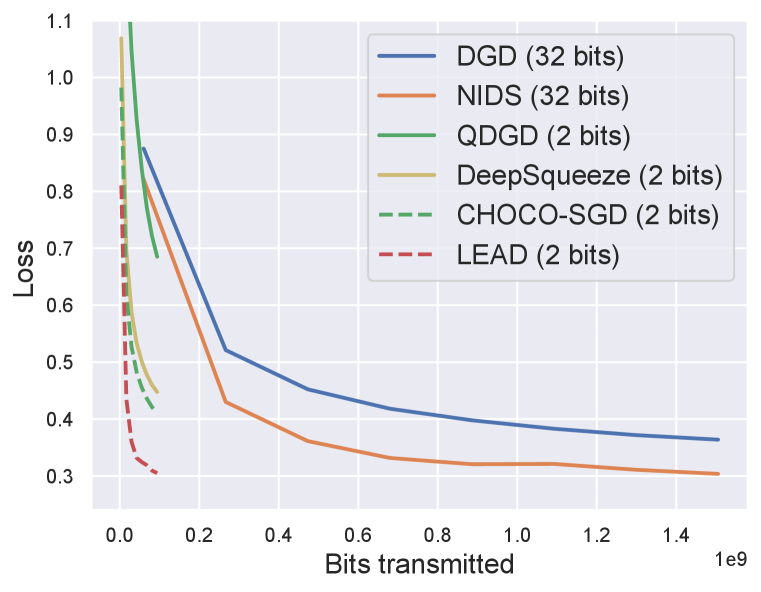

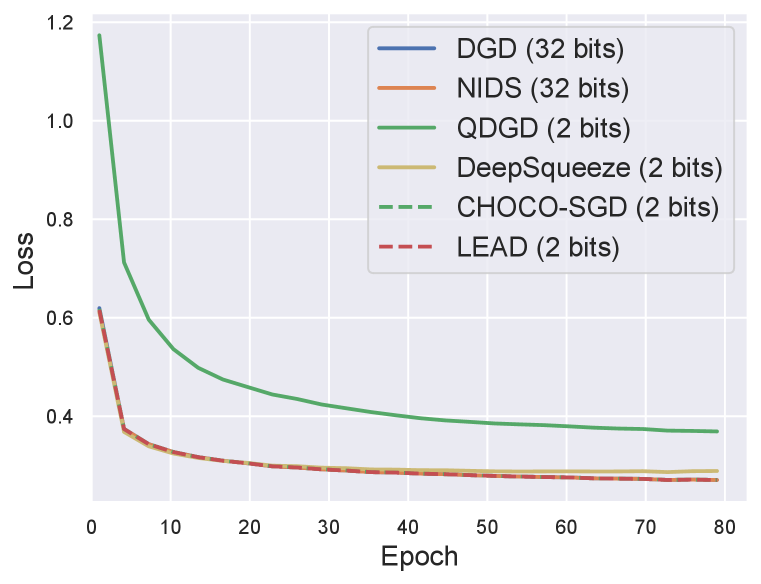

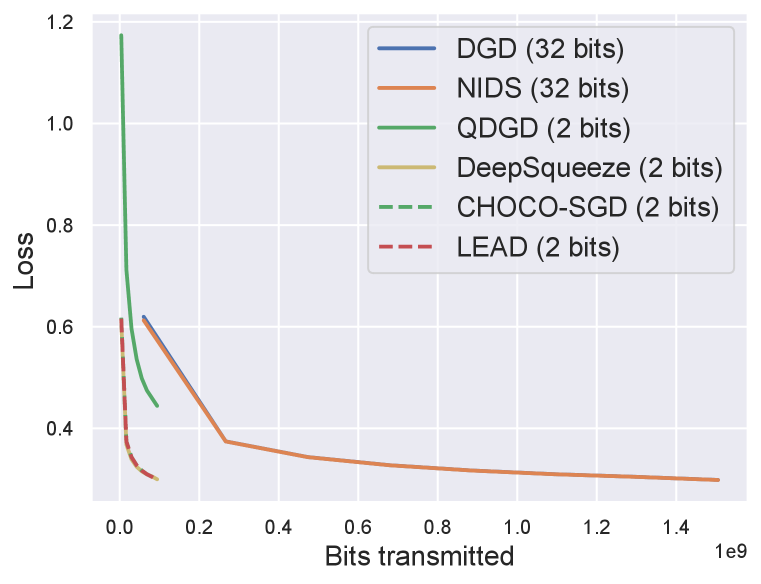

Logistic regression. We further consider a logistic regression problem on the MNIST dataset. The regularization parameter is . We consider both homogeneous and heterogeneous data settings. In the homogeneous setting, the data samples are randomly shuffled before being uniformly partitioned among all agents, so that the data distribution of each agent is very similar. In the heterogeneous setting, the samples are first sorted by their labels and then partitioned among agents. Due to the space limit, we mainly present the results in the heterogeneous setting here and defer the homogeneous setting to Appendix D.2. The results using full-batch gradient and mini-batch gradient (the mini-batch size is for each agent) are shown in Fig. 2 and Fig. 3, respectively, and both settings show the faster convergence and higher precision of LEAD.
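The two data splits can be sketched as below, with synthetic 10-class labels standing in for MNIST; the helper names and sizes are hypothetical. Sorting by label before splitting leaves each agent with only a few classes, whereas shuffling first gives every agent essentially all classes.

```python
import numpy as np


def partition(labels, n_agents, heterogeneous, rng):
    """Split sample indices among agents; returns one index array per agent."""
    idx = np.arange(labels.size)
    if heterogeneous:
        idx = idx[np.argsort(labels, kind="stable")]   # sort samples by label first
    else:
        idx = rng.permutation(idx)                      # shuffle uniformly first
    return np.array_split(idx, n_agents)


def classes_per_agent(labels, parts):
    return [np.unique(labels[p]).size for p in parts]


rng = np.random.default_rng(6)
labels = rng.integers(0, 10, size=6000)     # synthetic stand-in for 10-class labels
homo = partition(labels, 8, heterogeneous=False, rng=rng)
hetero = partition(labels, 8, heterogeneous=True, rng=rng)
print("homogeneous:", classes_per_agent(labels, homo))
print("heterogeneous:", classes_per_agent(labels, hetero))
```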

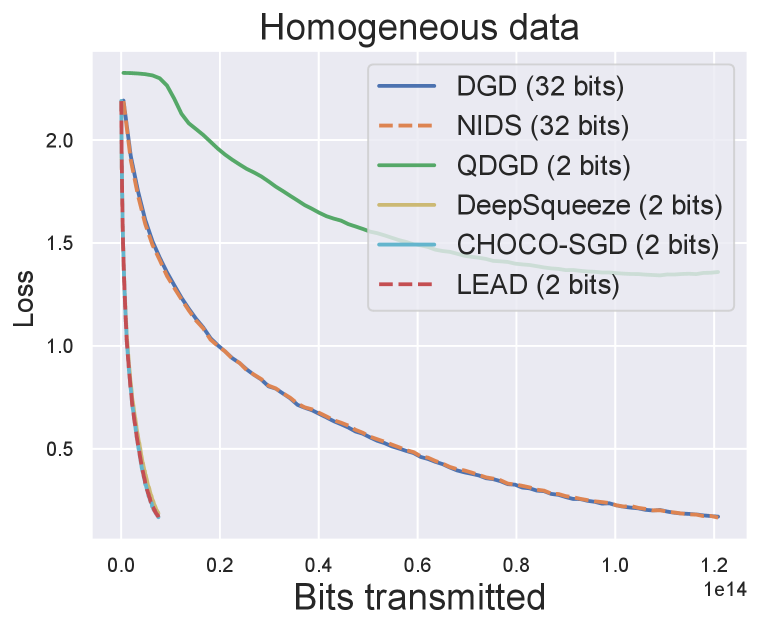

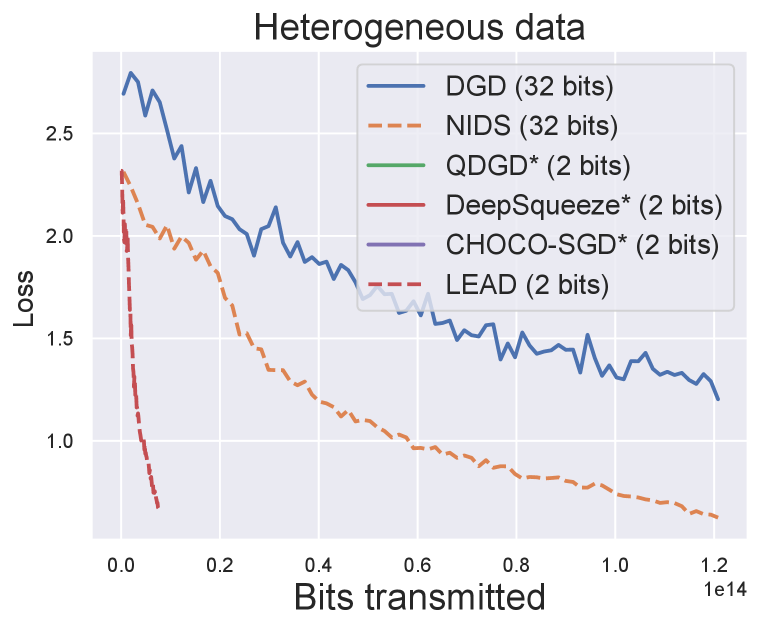

Neural network. We empirically study the performance of LEAD in optimizing deep neural networks by training AlexNet ( MB) on the CIFAR10 dataset. The mini-batch size is for each agent. Both the homogeneous and heterogeneous cases are shown in Fig. 4. In the homogeneous case, CHOCO-SGD, DeepSqueeze, and LEAD perform similarly and outperform the non-compressed variants in terms of communication efficiency, but CHOCO-SGD and DeepSqueeze need more effort for parameter tuning because their convergence is sensitive to the setting of $\gamma$. In the heterogeneous case, LEAD achieves the fastest and most stable convergence. Note that in this setting, sufficient information exchange is more important for convergence because models on different agents move in significantly different directions. In this case, DGD only converges with a smaller stepsize, and its communication-compressed variants, including QDGD, DeepSqueeze, and CHOCO-SGD, diverge in all parameter settings we tried.

In summary, our experiments verify our theoretical analysis and show that LEAD is able to handle data heterogeneity very well. Furthermore, the performance of LEAD is robust to parameter settings and needs less effort for parameter tuning, which is critical in real-world applications.

6 Conclusion

In this paper, we investigate communication compression in decentralized optimization. Motivated by primal-dual algorithms, we propose a novel decentralized algorithm with compression, LEAD, which achieves a faster convergence rate and better handles heterogeneous data while enjoying the benefit of efficient communication. Nontrivial analyses of the coupled dynamics of the inexact primal and dual updates as well as the compression error establish the linear convergence of LEAD when the full gradient is used, and linear convergence to a neighborhood of the optimum when the stochastic gradient is used. Extensive experiments validate the theoretical analysis and demonstrate the state-of-the-art efficiency and robustness of LEAD. LEAD is also applicable to non-convex problems, as empirically verified in the neural network experiments, but we leave the non-convex analysis as future work.

Acknowledgements

Xiaorui Liu and Dr. Jiliang Tang are supported by the National Science Foundation (NSF) under grant numbers CNS-1815636, IIS-1928278, IIS-1714741, IIS-1845081, IIS-1907704, and IIS-1955285. Yao Li and Dr. Ming Yan are supported by NSF grant DMS-2012439 and Facebook Faculty Research Award (Systems for ML). Dr. Rongrong Wang is supported by NSF grant CCF-1909523.

References

- Alistarh et al. (2017) Dan Alistarh, Demjan Grubic, Jerry Li, Ryota Tomioka, and Milan Vojnovic. QSGD: Communication-efficient SGD via gradient quantization and encoding. In Advances in Neural Information Processing Systems, pp. 1709–1720, 2017.

- Bernstein et al. (2018) Jeremy Bernstein, Yu-Xiang Wang, Kamyar Azizzadenesheli, and Animashree Anandkumar. SIGNSGD: compressed optimisation for non-convex problems. In Proceedings of the 35th International Conference on Machine Learning, pp. 559–568, 2018.

- Carli et al. (2010) Ruggero Carli, Fabio Fagnani, Paolo Frasca, and Sandro Zampieri. Gossip consensus algorithms via quantized communication. Automatica, 46(1):70–80, 2010.

- Karimireddy et al. (2019) Sai Praneeth Karimireddy, Quentin Rebjock, Sebastian Urban Stich, and Martin Jaggi. Error feedback fixes SignSGD and other gradient compression schemes. In Proceedings of the 36th International Conference on Machine Learning, pp. 3252–3261. PMLR, 2019.

- Koloskova et al. (2019) Anastasia Koloskova, Sebastian U. Stich, and Martin Jaggi. Decentralized stochastic optimization and gossip algorithms with compressed communication. In Proceedings of the 36th International Conference on Machine Learning, pp. 3479–3487. PMLR, 2019.

- Koloskova et al. (2020) Anastasia Koloskova, Tao Lin, Sebastian U Stich, and Martin Jaggi. Decentralized deep learning with arbitrary communication compression. In International Conference on Learning Representations, 2020.

- Li & Yan (2019) Yao Li and Ming Yan. On linear convergence of two decentralized algorithms. arXiv preprint arXiv:1906.07225, 2019.

- Li et al. (2019) Zhi Li, Wei Shi, and Ming Yan. A decentralized proximal-gradient method with network independent step-sizes and separated convergence rates. IEEE Transactions on Signal Processing, 67(17):4494–4506, 2019.

- Lian et al. (2017) Xiangru Lian, Ce Zhang, Huan Zhang, Cho-Jui Hsieh, Wei Zhang, and Ji Liu. Can decentralized algorithms outperform centralized algorithms? a case study for decentralized parallel stochastic gradient descent. In Advances in Neural Information Processing Systems, pp. 5330–5340, 2017.

- Ling et al. (2015) Qing Ling, Wei Shi, Gang Wu, and Alejandro Ribeiro. DLM: Decentralized linearized alternating direction method of multipliers. IEEE Transactions on Signal Processing, 63(15):4051–4064, 2015.

- Liu et al. (2020) Xiaorui Liu, Yao Li, Jiliang Tang, and Ming Yan. A double residual compression algorithm for efficient distributed learning. The 23rd International Conference on Artificial Intelligence and Statistics, 2020.

- Lu & De Sa (2020) Yucheng Lu and Christopher De Sa. Moniqua: Modulo quantized communication in decentralized SGD. In Proceedings of the 37th International Conference on Machine Learning, 2020.

- Magnússon et al. (2020) Sindri Magnússon, Hossein Shokri-Ghadikolaei, and Na Li. On maintaining linear convergence of distributed learning and optimization under limited communication. IEEE Transactions on Signal Processing, 68:6101–6116, 2020.

- Mishchenko et al. (2019) Konstantin Mishchenko, Eduard Gorbunov, Martin Takáč, and Peter Richtárik. Distributed learning with compressed gradient differences. arXiv preprint arXiv:1901.09269, 2019.

- Mota et al. (2013) Joao FC Mota, Joao MF Xavier, Pedro MQ Aguiar, and Markus Püschel. D-ADMM: A communication-efficient distributed algorithm for separable optimization. IEEE Transactions on Signal Processing, 61(10):2718–2723, 2013.

- Nedic & Ozdaglar (2009) Angelia Nedic and Asuman Ozdaglar. Distributed subgradient methods for multi-agent optimization. IEEE Transactions on Automatic Control, 54(1):48–61, 2009.

- Nedic et al. (2017) Angelia Nedic, Alex Olshevsky, and Wei Shi. Achieving geometric convergence for distributed optimization over time-varying graphs. SIAM Journal on Optimization, 27(4):2597–2633, 2017.

- Nesterov (2013) Yurii Nesterov. Introductory lectures on convex optimization: A basic course, volume 87. Springer Science & Business Media, 2013.

- Pu & Nedić (2020) Shi Pu and Angelia Nedić. Distributed stochastic gradient tracking methods. Mathematical Programming, pp. 1–49, 2020.

- Reisizadeh et al. (2019a) Amirhossein Reisizadeh, Aryan Mokhtari, Hamed Hassani, and Ramtin Pedarsani. An exact quantized decentralized gradient descent algorithm. IEEE Transactions on Signal Processing, 67(19):4934–4947, 2019a.

- Reisizadeh et al. (2019b) Amirhossein Reisizadeh, Hossein Taheri, Aryan Mokhtari, Hamed Hassani, and Ramtin Pedarsani. Robust and communication-efficient collaborative learning. In Advances in Neural Information Processing Systems, pp. 8388–8399, 2019b.

- Seide et al. (2014) Frank Seide, Hao Fu, Jasha Droppo, Gang Li, and Dong Yu. 1-bit stochastic gradient descent and application to data-parallel distributed training of speech DNNs. In Interspeech 2014, September 2014.

- Shi et al. (2015) Wei Shi, Qing Ling, Gang Wu, and Wotao Yin. EXTRA: An exact first-order algorithm for decentralized consensus optimization. SIAM Journal on Optimization, 25(2):944–966, 2015.

- Stich et al. (2018) Sebastian U. Stich, Jean-Baptiste Cordonnier, and Martin Jaggi. Sparsified SGD with memory. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, pp. 4452–4463, 2018.

- Tang et al. (2018a) Hanlin Tang, Shaoduo Gan, Ce Zhang, Tong Zhang, and Ji Liu. Communication compression for decentralized training. In Advances in Neural Information Processing Systems, pp. 7652–7662, 2018a.

- Tang et al. (2018b) Hanlin Tang, Xiangru Lian, Ming Yan, Ce Zhang, and Ji Liu. D²: Decentralized training over decentralized data. In Proceedings of the 35th International Conference on Machine Learning, pp. 4848–4856, 2018b.

- Tang et al. (2019a) Hanlin Tang, Xiangru Lian, Shuang Qiu, Lei Yuan, Ce Zhang, Tong Zhang, and Ji Liu. Deepsqueeze: Decentralization meets error-compensated compression. CoRR, abs/1907.07346, 2019a. URL http://arxiv.org/abs/1907.07346.

- Tang et al. (2019b) Hanlin Tang, Chen Yu, Xiangru Lian, Tong Zhang, and Ji Liu. DoubleSqueeze: Parallel stochastic gradient descent with double-pass error-compensated compression. In Proceedings of the 36th International Conference on Machine Learning, pp. 6155–6165, 2019b.

- Tsitsiklis et al. (1986) John Tsitsiklis, Dimitri Bertsekas, and Michael Athans. Distributed asynchronous deterministic and stochastic gradient optimization algorithms. IEEE transactions on automatic control, 31(9):803–812, 1986.

- Wu et al. (2018) Jiaxiang Wu, Weidong Huang, Junzhou Huang, and Tong Zhang. Error compensated quantized SGD and its applications to large-scale distributed optimization. In Proceedings of the 35th International Conference on Machine Learning, pp. 5325–5333, 2018.

- Xiao & Boyd (2004) Lin Xiao and Stephen Boyd. Fast linear iterations for distributed averaging. Systems & Control Letters, 53(1):65–78, 2004.

- Xu et al. (2020) Jinming Xu, Ye Tian, Ying Sun, and Gesualdo Scutari. Accelerated primal-dual algorithms for distributed smooth convex optimization over networks. In International Conference on Artificial Intelligence and Statistics, pp. 2381–2391. PMLR, 2020.

- Yuan et al. (2016) Kun Yuan, Qing Ling, and Wotao Yin. On the convergence of decentralized gradient descent. SIAM Journal on Optimization, 26(3):1835–1854, 2016.

- Yuan et al. (2018) Kun Yuan, Bicheng Ying, Xiaochuan Zhao, and Ali H Sayed. Exact diffusion for distributed optimization and learning—part i: Algorithm development. IEEE Transactions on Signal Processing, 67(3):708–723, 2018.

- Yuan et al. (2020) Kun Yuan, Wei Xu, and Qing Ling. Can primal methods outperform primal-dual methods in decentralized dynamic optimization? arXiv preprint arXiv:2003.00816, 2020.


Appendix A LEAD from the agent’s perspective

In the main paper, we described the algorithm in matrix notation for conciseness. Here we provide a complete description of the algorithm from the agents’ perspective.

input: stepsize , compression parameters (), initial values , ,

output: or
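The following NumPy sketch illustrates an agent-wise LEAD-style iteration. It rests on update rules that we assume for Alg. 1:

- $Y^k = X^k - \eta\nabla F(X^k) - \eta D^k$;
- each agent sends a compressed version of $y_i^k - h_i^k$ and tracks $h_i$ with rate $\alpha$;
- $D^{k+1} = D^k + \frac{\gamma}{2\eta}(\hat{Y}^k - \hat{Y}_w^k)$;
- $X^{k+1} = X^k - \eta\nabla F(X^k) - \eta D^{k+1}$.

Row $i$ of every matrix is agent $i$'s local vector. Each row operation therefore uses only agent $i$'s own data and the compressed messages $q_j$ from its neighbors. The function names (`lead`, `quantize_inf`, `ring_mixing_matrix`), the zero initialization of $h_i$ and $d_i$, the ring topology, the quadratic toy problem and the use of full gradients are all hypothetical choices, not the paper's settings.

```python
import numpy as np


def ring_mixing_matrix(n):
    """Symmetric, doubly stochastic ring mixing matrix (weight 1/3 on self and each neighbor); needs n >= 3."""
    W = np.zeros((n, n))
    for i in range(n):
        for j in (i - 1, i, i + 1):
            W[i, j % n] = 1.0 / 3.0
    return W


def quantize_inf(v, rng, bits=4):
    """Hypothetical Compress(): unbiased infinity-norm quantizer with random dithering."""
    norm = np.max(np.abs(v))
    if norm == 0.0:
        return np.zeros_like(v)
    levels = np.floor(2.0 ** (bits - 1) * np.abs(v) / norm + rng.uniform(size=v.shape))
    return norm * np.sign(v) * 2.0 ** (-(bits - 1)) * levels


def lead(grad, W, x0, eta, alpha, gamma, iters, compress, rng):
    """LEAD-style iteration; row i of every matrix is agent i's local vector (assumed form of Alg. 1)."""
    n = x0.shape[0]
    H = np.zeros_like(x0)   # h_i, also tracked by agent i's neighbors (assumed zero init)
    Hw = W @ H              # (h_w)_i = sum_j w_ij h_j
    D = np.zeros_like(x0)   # dual variable d_i; zero lies in the range of I - W
    X = x0 - eta * grad(x0)
    for _ in range(iters):
        g = grad(X)
        Y = X - eta * g - eta * D
        # Each agent compresses only y_i - h_i and sends q_i to its neighbors.
        Q = np.vstack([compress(Y[i] - H[i], rng) for i in range(n)])
        Y_hat = H + Q
        Y_hat_w = Hw + W @ Q  # row i only needs q_j from neighbors j with w_ij != 0
        H = (1 - alpha) * H + alpha * Y_hat
        Hw = (1 - alpha) * Hw + alpha * Y_hat_w
        D = D + gamma / (2 * eta) * (Y_hat - Y_hat_w)
        X = X - eta * g - eta * D
    return X


# Toy problem (hypothetical): agent i holds f_i(x) = 0.5 * ||x - b_i||^2, so x* = mean_i b_i.
rng = np.random.default_rng(0)
n, d = 5, 4
B = rng.normal(size=(n, d))


def grad(X):
    return X - B


W = ring_mixing_matrix(n)
X = lead(grad, W, np.zeros((n, d)), eta=0.1, alpha=0.5, gamma=1.0,
         iters=2000, compress=quantize_inf, rng=rng)
err = np.max(np.abs(X - B.mean(axis=0)))  # every agent's distance to the exact minimizer
print(err)
```

Passing an exact compressor such as `lambda v, r: v` turns the same function into the uncompressed iteration. This makes it easy to compare the effect of compression on a given problem.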

Appendix B Connections with existing works

The non-compressed variant of LEAD in Alg. 1 recovers NIDS (Li et al., 2019), D² (Tang et al., 2018b), and Exact Diffusion (Yuan et al., 2018), as shown in Proposition 1. In Corollary 3, we show that the convergence rate of LEAD exactly recovers the rate of NIDS when and .

Proposition 1 (Connection to NIDS, D², and Exact Diffusion).

When there is no communication compression (i.e., ) and , Alg. 1 recovers D²:

| (15) |

Furthermore, if the stochastic estimator of the gradient is replaced by the full gradient, it recovers NIDS and Exact Diffusion with specific settings.
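Proposition 1 can also be checked numerically. The NumPy sketch below assumes the uncompressed, full-gradient form of Alg. 1 with $\gamma = 1$:

- $Y^k = X^k - \eta\nabla F(X^k) - \eta D^k$;
- $D^{k+1} = D^k + \frac{1}{2\eta}(I - W)Y^k$;
- $X^{k+1} = X^k - \eta\nabla F(X^k) - \eta D^{k+1}$, with $D^1 = 0$.

It compares these iterates with the D² recursion $X^{k+1} = \frac{I+W}{2}\left(2X^k - X^{k-1} - \eta\nabla F(X^k) + \eta\nabla F(X^{k-1})\right)$, started from the same $X^0$ and $X^1$. The ring mixing matrix, the local gradients and the step size are illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(1)
n, d, eta, K = 4, 3, 0.2, 30
P = np.roll(np.eye(n), 1, axis=1)          # cyclic shift: row i has a 1 in column i+1
W = 0.5 * np.eye(n) + 0.25 * (P + P.T)     # ring mixing matrix (illustrative choice)
B = rng.normal(size=(n, d))


def grad(X):
    # Hypothetical smooth, strongly convex local objectives; any gradient map works for this identity.
    return np.tanh(X) + X - B


# Assumed LEAD updates without compression (Y_hat = Y, Y_hat_w = W @ Y), gamma = 1, full gradients.
X_prev = rng.normal(size=(n, d))           # X^0
D = np.zeros((n, d))                       # D^1 = 0
X = X_prev - eta * grad(X_prev)            # X^1
lead_iters = [X_prev, X]
for _ in range(K):
    g = grad(X)
    Y = X - eta * g - eta * D
    D = D + (Y - W @ Y) / (2 * eta)
    X = X - eta * g - eta * D
    lead_iters.append(X)

# D^2 recursion started from the same X^0 and X^1.
M = 0.5 * (np.eye(n) + W)
d2_iters = lead_iters[:2]
for k in range(1, K + 1):
    Xk, Xkm1 = d2_iters[k], d2_iters[k - 1]
    d2_iters.append(M @ (2 * Xk - Xkm1 - eta * grad(Xk) + eta * grad(Xkm1)))

gap = max(np.max(np.abs(a - b)) for a, b in zip(lead_iters, d2_iters))
print(gap)  # agreement up to floating-point rounding
```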

Corollary 3 (Consistency with NIDS).

When (no communication compression), and (full gradient), the convergence of LEAD is consistent with that of NIDS with :

| (16) |

See the proof in E.5.

Proof of Proposition 1.
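A short derivation follows. It assumes that Alg. 1 consists of three updates:

- $Y^k = X^k - \eta\nabla F(X^k;\xi^k) - \eta D^k$;
- $D^{k+1} = D^k + \frac{\gamma}{2\eta}(\hat{Y}^k - \hat{Y}_w^k)$;
- $X^{k+1} = X^k - \eta\nabla F(X^k;\xi^k) - \eta D^{k+1}$.

Without compression, $\hat{Y}^k = Y^k$ and $\hat{Y}_w^k = WY^k$. These forms are our assumption, not a quotation of Alg. 1.

```latex
\begin{aligned}
&\gamma = 1:\quad \eta\,(D^{k+1} - D^k) = \tfrac{1}{2}(I - W)\,Y^k,\\
&X^{k+1} = Y^k - \eta\,(D^{k+1} - D^k) = \tfrac{I+W}{2}\,Y^k,\\
&\text{$X$-update at step } k-1:\quad \eta D^k = X^{k-1} - \eta\nabla F(X^{k-1};\xi^{k-1}) - X^k,\\
&\Rightarrow\; Y^k = 2X^k - X^{k-1} - \eta\nabla F(X^k;\xi^k) + \eta\nabla F(X^{k-1};\xi^{k-1}),\\
&\Rightarrow\; X^{k+1} = \tfrac{I+W}{2}\left(2X^k - X^{k-1} - \eta\nabla F(X^k;\xi^k) + \eta\nabla F(X^{k-1};\xi^{k-1})\right).
\end{aligned}
```

The last line is the D² update with mixing matrix $\frac{I+W}{2}$. For $k = 1$, the identity for $\eta D^1$ holds when $D^1 = 0$ and $X^1 = X^0 - \eta\nabla F(X^0;\xi^0)$.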

Appendix C Compression method

C.1 p-norm b-bit quantization

Theorem 3 (p-norm b-bit quantization).

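Let us define the quantization operator $Q_p$ (with $Q_p(0) := 0$) as

$$Q_p(x) := \left(\|x\|_p\,\mathrm{sign}(x)\,2^{-(b-1)}\right) \odot \left\lfloor \frac{2^{b-1}|x|}{\|x\|_p} + u \right\rfloor, \tag{20}$$

where $\odot$ is the Hadamard product, $|x|$ is the elementwise absolute value and $u$ is a random dither vector uniformly distributed in $[0,1]^d$. $Q_p$ is unbiased, i.e., $\mathbb{E}\,Q_p(x) = x$, and the compression variance is upper bounded by

$$\mathbb{E}\|x - Q_p(x)\|^2 \le \frac{1}{4}\left\|\mathrm{sign}(x)\,2^{-(b-1)}\right\|^2 \|x\|_p^2 \le \frac{d}{4^b}\|x\|_p^2, \tag{21}$$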

which suggests that the $\infty$-norm provides the smallest upper bound for the compression variance, since $\|x\|_p \ge \|x\|_q$ for $1 \le p \le q \le \infty$.

Remark 7.

For the compressor defined in (20), we have the following compression constant:

Proof.

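Let $s$ denote $\|x\|_p\,\mathrm{sign}(x)\,2^{-(b-1)}$, $v = 2^{b-1}|x|/\|x\|_p$, and $l = \lfloor v \rfloor$ (all elementwise), so that $x = s \odot v$. We can rewrite $Q_p(x)$ as $s \odot \lfloor v + u \rfloor$.

For any coordinate $i$ such that $v_i \in \mathbb{Z}$, we have $\lfloor v_i + u_i \rfloor = v_i$ with probability 1. Hence $\mathbb{E}\,s_i\lfloor v_i + u_i\rfloor = s_i v_i = x_i$ and $\mathbb{E}\,(x_i - s_i\lfloor v_i + u_i\rfloor)^2 = 0$.

For any coordinate $i$ such that $v_i \notin \mathbb{Z}$, we have $\lfloor v_i + u_i \rfloor \in \{l_i, l_i + 1\}$, and $\mathbb{P}(\lfloor v_i + u_i \rfloor = l_i + 1) = \mathbb{P}(u_i \ge l_i + 1 - v_i) = v_i - l_i$.

Thus, we derive $\mathbb{E}\,s_i\lfloor v_i + u_i\rfloor = s_i\left(l_i + (v_i - l_i)\right) = x_i$

and $\mathbb{E}\,(x_i - s_i\lfloor v_i + u_i\rfloor)^2 = s_i^2\,(v_i - l_i)(l_i + 1 - v_i) \le \frac{s_i^2}{4}$.

Considering both cases, we have $\mathbb{E}\,Q_p(x) = x$ and $\mathbb{E}\|x - Q_p(x)\|^2 \le \frac{1}{4}\|s\|^2 = \frac{1}{4}\|\mathrm{sign}(x)\,2^{-(b-1)}\|^2\|x\|_p^2 \le \frac{d}{4^b}\|x\|_p^2$.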

∎
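A Monte Carlo check makes the two claims of Theorem 3 concrete: unbiasedness and the variance bound. The sketch below assumes the dithered operator $Q_p(x) = (\|x\|_p\,\mathrm{sign}(x)\,2^{-(b-1)}) \odot \lfloor 2^{b-1}|x|/\|x\|_p + u\rfloor$ with $u$ uniform on $[0,1]^d$. The function name `quantize_p`, the dimension, the bit width and the number of trials are illustrative choices.

```python
import numpy as np


def quantize_p(x, p, bits, rng):
    """Dithered p-norm b-bit quantizer (assumed form of (20)); Q_p(0) = 0."""
    norm = np.linalg.norm(x, ord=p)
    if norm == 0.0:
        return np.zeros_like(x)
    u = rng.uniform(size=x.shape)                     # dither, uniform on [0, 1)^d
    levels = np.floor(2.0 ** (bits - 1) * np.abs(x) / norm + u)
    return norm * np.sign(x) * 2.0 ** (-(bits - 1)) * levels


rng = np.random.default_rng(0)
d, bits, trials = 16, 3, 10000
x = rng.uniform(-1.0, 1.0, size=d)

results = {}
for p in (1, 2, np.inf):
    samples = np.array([quantize_p(x, p, bits, rng) for _ in range(trials)])
    bias = np.max(np.abs(samples.mean(axis=0) - x))        # close to 0 for an unbiased operator
    mse = np.mean(np.sum((samples - x) ** 2, axis=1))      # empirical E||x - Q_p(x)||^2
    bound = d / 4 ** bits * np.linalg.norm(x, ord=p) ** 2  # right-hand side of the bound (21)
    results[p] = (bias, mse, bound)
    print(f"p={p}: bias={bias:.4f}, mse={mse:.4f}, bound={bound:.4f}")
```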

C.2 Compression error

To verify Theorem 3, we compare the compression error of the quantization method defined in (20) with different norms $p$. Specifically, we uniformly generate 100 random vectors in and compute the average compression error. The result shown in Figure 5 agrees with Theorem 3: the compression error decreases as $p$ increases. This suggests that the $\infty$-norm provides the best compression precision under the same bit constraint.
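The comparison above can be sketched in a few lines. We assume the dithered form of (20) described in Theorem 3, vectors drawn uniformly from $[-1, 1]^{1000}$, and $b = 2$ bits. These sizes are hypothetical, so the numbers will not match Figure 5, but the ordering across norms should appear.

```python
import numpy as np


def quantize_p(x, p, bits, rng):
    """Dithered p-norm b-bit quantizer (assumed form of (20))."""
    norm = np.linalg.norm(x, ord=p)
    if norm == 0.0:
        return np.zeros_like(x)
    levels = np.floor(2.0 ** (bits - 1) * np.abs(x) / norm + rng.uniform(size=x.shape))
    return norm * np.sign(x) * 2.0 ** (-(bits - 1)) * levels


rng = np.random.default_rng(0)
num_vectors, d, bits = 100, 1000, 2        # hypothetical sizes
vectors = rng.uniform(-1.0, 1.0, size=(num_vectors, d))

avg_rel_error = {}
for p in (1, 2, np.inf):
    # relative error ||v - Q_p(v)||^2 / ||v||^2, averaged over the random vectors
    errs = [np.sum((v - quantize_p(v, p, bits, rng)) ** 2) / np.sum(v ** 2) for v in vectors]
    avg_rel_error[p] = float(np.mean(errs))
    print(f"p={p}: average relative error {avg_rel_error[p]:.4f}")
```

With small $p$, the scale $\|x\|_p$ is much larger than any single entry. Almost every coordinate is then rounded to 0 or to one large level, which is why the error grows as $p$ decreases.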

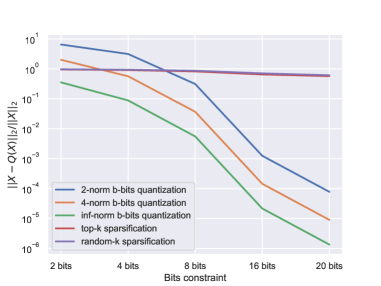

Under a similar setting, we also compare the compression error with other popular compression methods, such as top-k and random-k sparsification. The x-axis represents the average number of bits needed to represent each element of the vector. The result is shown in Fig. 6. Intuitively, top-k should perform better than random-k. However, top-k needs extra bits to transmit the indices, while random-k can avoid this by using a shared random seed. Therefore, top-k does not outperform random-k by much under the same communication budget. The result in Fig. 6 suggests that $\infty$-norm b-bit quantization provides significantly better compression precision than the others under the same bit constraint.
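The bit accounting behind this comparison can be made concrete with a small sketch. It relies on assumptions of our own:

- each kept value costs 32 bits;
- top-k also sends a $\lceil \log_2 d \rceil$-bit index per kept entry;
- random-k sends no indices, because sender and receiver regenerate them from a shared seed.

The helper names (`top_k`, `random_k`, `k_for_budget`) and the Gaussian test vector are hypothetical.

```python
import math

import numpy as np

FLOAT_BITS = 32  # assumption: kept values are sent as 32-bit floats


def top_k(x, k):
    """Keep the k largest-magnitude entries; the receiver also needs their indices."""
    out = np.zeros_like(x)
    idx = np.argpartition(np.abs(x), -k)[-k:]
    out[idx] = x[idx]
    return out


def random_k(x, k, rng):
    """Keep k uniformly random entries; the indices are reproducible from a shared seed."""
    out = np.zeros_like(x)
    idx = rng.choice(x.size, size=k, replace=False)
    out[idx] = x[idx]
    return out


def k_for_budget(d, bits_per_element, index_bits):
    """Largest k whose payload fits into bits_per_element * d bits."""
    return (bits_per_element * d) // (FLOAT_BITS + index_bits)


def rel_error(x, c):
    return float(np.sum((x - c) ** 2) / np.sum(x ** 2))


rng = np.random.default_rng(0)
d = 1024
x = rng.normal(size=d)
index_bits = math.ceil(math.log2(d))          # 10 bits address one of 1024 coordinates
k_top = k_for_budget(d, 2, index_bits)        # 2 bits/element budget: 2048 // 42 = 48 entries
k_rand = k_for_budget(d, 2, 0)                # same budget without indices: 2048 // 32 = 64 entries
print(k_top, k_rand)
print(rel_error(x, top_k(x, k_top)), rel_error(x, random_k(x, k_rand, rng)))
```

Under these assumptions, top-k keeps fewer entries than random-k for the same budget, which narrows its advantage. The exact curves in Fig. 6 depend on the paper's own accounting.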

Appendix D Experiments

D.1 Parameter sensitivity

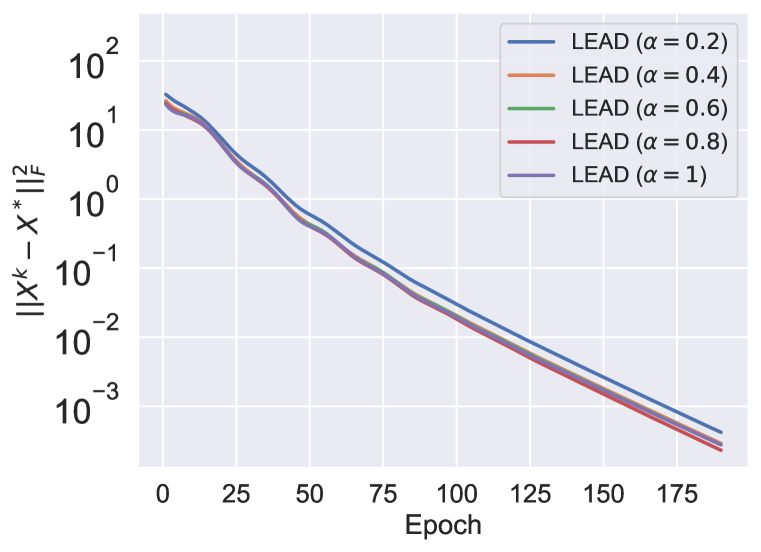

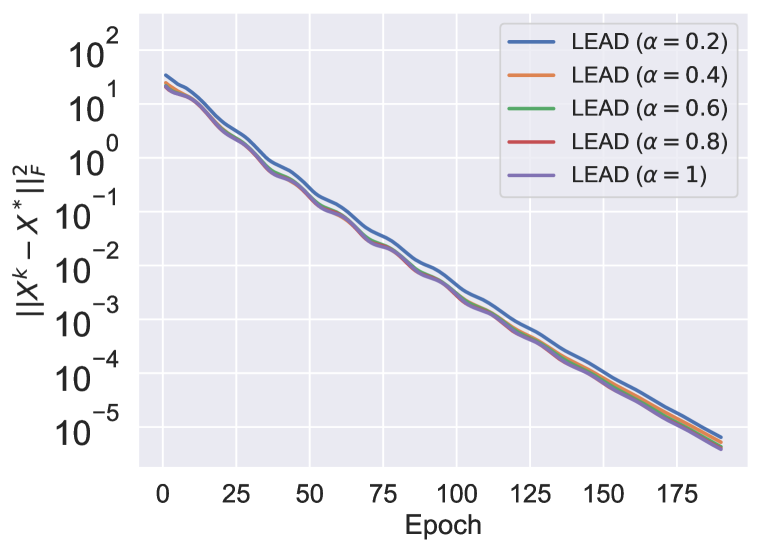

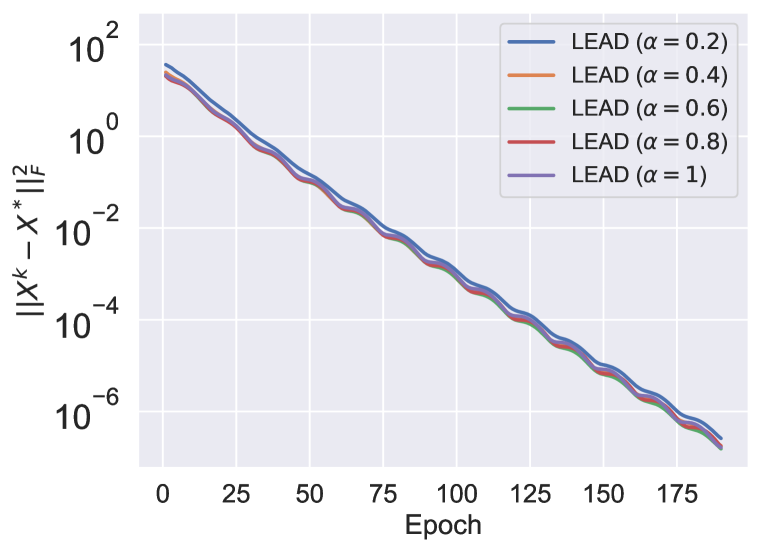

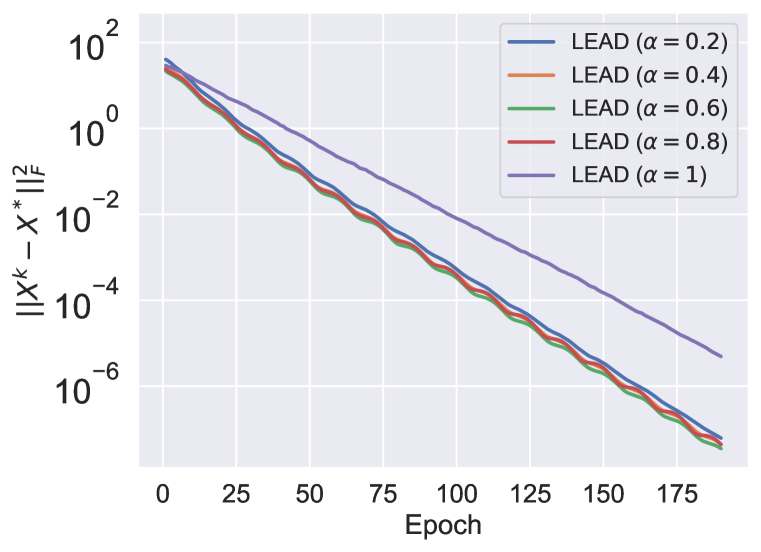

In the linear regression problem, we test the convergence of LEAD under different settings of and . The result shown in Figure 7 indicates that LEAD performs well in most settings and is robust to the parameter choice. Therefore, in this paper, we simply set and for LEAD in all experiments, which indicates that little effort is needed for parameter tuning.

D.2 Experiments in homogeneous setting

The experiments on the logistic regression problem in the homogeneous case are shown in Fig. 8 and Fig. 9. DeepSqueeze, CHOCO-SGD and LEAD converge similarly. However, DeepSqueeze and CHOCO-SGD require tuning a smaller consensus step size for convergence, as shown by the parameter settings in Section D.3. Generally, a smaller consensus step size decreases the model propagation between agents, since it changes the effective mixing matrix, and this may cause slower convergence. However, in the setting where data from different agents are very similar, the models move in similar directions, so the convergence is not affected much.
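The effect of a smaller consensus step size on propagation can be read off the spectral gap of the effective mixing matrix. The sketch below makes three hypothetical choices:

- it assumes the CHOCO-SGD-style effective mixing matrix $I + \gamma(W - I)$;
- $\gamma$ is our name for the consensus step size;
- the network is an 8-agent ring with weight 1/3 on each agent and its two neighbors.

The eigenvalues become $1 - \gamma(1 - \lambda_i(W))$, so the gap shrinks in proportion to $\gamma$.

```python
import numpy as np


def ring_mixing_matrix(n):
    """Symmetric, doubly stochastic ring mixing matrix with weight 1/3 on self and each neighbor."""
    W = np.zeros((n, n))
    for i in range(n):
        for j in (i - 1, i, i + 1):
            W[i, j % n] = 1.0 / 3.0
    return W


def spectral_gap(M):
    """1 minus the second-largest eigenvalue magnitude of a symmetric mixing matrix."""
    mags = np.sort(np.abs(np.linalg.eigvalsh(M)))
    return 1.0 - mags[-2]


n = 8
W = ring_mixing_matrix(n)
gaps = {}
for gamma in (1.0, 0.5, 0.1):
    W_eff = np.eye(n) + gamma * (W - np.eye(n))   # effective mixing matrix
    gaps[gamma] = spectral_gap(W_eff)
    print(f"gamma={gamma}: spectral gap {gaps[gamma]:.4f}")
```

A gap ten times smaller roughly means ten times as many gossip rounds to reach the same consensus accuracy. Homogeneous data partly masks this effect, because the local models already point in similar directions.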

D.3 Parameter settings

The best parameter settings found by our search for all algorithms and experiments are summarized in Tables 1–10. QDGD and DeepSqueeze are more sensitive to and CHOCO-SGD is slightly more robust. LEAD is the most robust to parameter settings, and it works well for the setting and in all experiments in this paper.

| Algorithm | | | |
|---|---|---|---|
| DGD | - | - | |
| NIDS | - | - | |
| QDGD | - | | |
| DeepSqueeze | - | | |
| CHOCO-SGD | - | | |
| LEAD | | | |

| Algorithm | | | |
|---|---|---|---|
| DGD | - | - | |
| NIDS | - | - | |
| QDGD | - | | |
| DeepSqueeze | - | | |
| CHOCO-SGD | - | | |
| LEAD | | | |

Homogeneous case

| Algorithm | | | |
|---|---|---|---|
| DGD | - | - | |
| NIDS | - | - | |
| QDGD | - | | |
| DeepSqueeze | - | | |
| CHOCO-SGD | - | | |
| LEAD | | | |

Heterogeneous case

| Algorithm | | | |
|---|---|---|---|
| DGD | - | - | |
| NIDS | - | - | |
| QDGD | - | | |
| DeepSqueeze | - | | |
| CHOCO-SGD | - | | |
| LEAD | | | |

Homogeneous case

| Algorithm | | | |
|---|---|---|---|
| DGD | - | - | |
| NIDS | - | - | |
| QDGD | - | | |
| DeepSqueeze | - | | |
| CHOCO-SGD | - | | |
| LEAD | | | |

Heterogeneous case

| Algorithm | | | |
|---|---|---|---|
| DGD | - | - | |
| NIDS | - | - | |
| QDGD | - | | |
| DeepSqueeze | - | | |
| CHOCO-SGD | - | | |
| LEAD | | | |

Homogeneous case

| Algorithm | | | |
|---|---|---|---|
| DGD | - | - | |
| NIDS | - | - | |
| QDGD | * | * | - |
| DeepSqueeze | * | * | - |
| CHOCO-SGD | * | * | - |
| LEAD | | | |

Heterogeneous case

Appendix E Proofs of the theorems

E.1 Illustrative flow

The following flow graph depicts the relations between the iterative variables and clarifies the range of the conditional expectations. and are two σ-algebras generated by the gradient sampling and the stochastic compression, respectively. They satisfy

The solid and dashed arrows in the top flow illustrate the dynamics of the algorithm. In the bottom flow, the arrows stand for the relation between successive σ-algebras. The downward arrows determine the range of the σ-algebras. E.g., up to , all random variables are in , and up to , all random variables are in with . Throughout the appendix, unless otherwise specified, is the expectation conditioned on the corresponding stochastic estimators given the context.

E.2 Two central Lemmas

Lemma 1 (Fundamental equality).

Let be the optimal solution, and denote the compression error in the th iteration, that is . From Alg. 1, we have

where and ensures the positive definiteness of over .

E.3 Proof of Lemma 1

Before proving Lemma 1, we let and introduce the following three Lemmas.

Lemma 3.

Let be the consensus solution. Then, from Lines 4-7 of Alg. 1, we obtain

| (22) |

Proof.

Lemma 4.

Let , we have

| (23) | ||||

| (24) |

where and ensures the positive definiteness of over .

Proof.

Since for any , we have

Similarly, we have

To make sure that is positive definite over , we need ∎

Lemma 5.

Taking the expectation conditioned on the compression in the th iteration, we have

Proof.

The proof is straightforward and omitted here. ∎

E.4 Proof of Lemma 2

E.5 Proof of Theorem 1

Proof of Theorem 1.

Combining Lemmas 1, 2, and 5, we have the expectation conditioned on the compression satisfying

| (31) |

where is a non-negative number to be determined. Then we deal with the three terms on the right-hand side separately. We want the terms and to be nonpositive. First, we consider . Note that . If we want , then we need , i.e., . Therefore, we have

where is the second smallest eigenvalue of . It means that we also need

which is equivalent to

| (32) |

Then we look at . We have

Because we have , we need

| (33) |

That is

| (34) | |||

| (35) |

Next, we look at . First, by the bounded variance assumption, the expectation conditioned on the gradient sampling in the th iteration satisfies

Then, with the smoothness and strong convexity from Assumption 4, we have the co-coercivity of with , which gives

When , we have

Therefore, we obtain

| (36) |
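The co-coercivity step can be sanity-checked numerically. As an illustration, the sketch below checks the standard bound for a $\mu$-strongly convex, $L$-smooth function:

$$\langle \nabla f(x)-\nabla f(y), x-y\rangle \ge \frac{\mu L}{\mu+L}\|x-y\|^2 + \frac{1}{\mu+L}\|\nabla f(x)-\nabla f(y)\|^2.$$

The check runs on a random quadratic $f(x) = \frac{1}{2}x^\top A x$ whose spectrum lies in $[\mu, L]$. That (36) uses exactly this form is our assumption, and the values of $\mu$, $L$ and the dimension are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
mu, L, d = 0.5, 4.0, 10

# Random quadratic f(x) = 0.5 x^T A x with spectrum in [mu, L], so f is mu-strongly convex and L-smooth.
Qmat, _ = np.linalg.qr(rng.normal(size=(d, d)))
A = Qmat @ np.diag(np.linspace(mu, L, d)) @ Qmat.T

min_slack = np.inf
for _ in range(1000):
    x, y = rng.normal(size=d), rng.normal(size=d)
    diff, gdiff = x - y, A @ (x - y)            # grad f(x) - grad f(y) = A (x - y)
    lhs = gdiff @ diff
    rhs = mu * L / (mu + L) * diff @ diff + gdiff @ gdiff / (mu + L)
    min_slack = min(min_slack, lhs - rhs)
print(min_slack)  # nonnegative up to rounding error
```

For a quadratic, the slack equals $\sum_i \frac{(\lambda_i-\mu)(L-\lambda_i)}{\mu+L} z_i^2$, where $z$ is $x-y$ expressed in the eigenbasis of $A$. It is nonnegative exactly because every eigenvalue $\lambda_i$ lies in $[\mu, L]$.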

Conditioned on the th iteration (i.e., conditioned on the gradient sampling in the th iteration), the inequality (31) becomes

| (37) |

if the step size satisfies . Rewriting (E.5), we have

| (38) |

and thus

| (39) |

Recall all the conditions on the parameters , and to make sure that :

| (41) | ||||

| (42) | ||||

| (43) | ||||

| (44) |

In the following, we show that there exist parameters that satisfy these conditions.

Since we can choose any , we let

such that

Then we have

When , the condition (45) is equivalent to

| (46) |

The first term can be simplified using

due to when

Note that implies , which ensures the positive definiteness of over in Lemma 4.

Note that ensures

| (47) |

So, we can simplify the bound for as

Lastly, taking the total expectation on both sides of (40) and using the tower property, we complete the proof for .

∎

Proof of Corollary 1.

Let’s first define and .

We can choose the stepsize such that the upper bound of is

due to when

Hence we can take .

The bound of is

When is chosen as , pick

| (48) |

When , the upper bound of is

In this case, we pick

| (49) |

Note that since is lower bounded by . Hence, in both cases (Eq. (48) and Eq. (49)), , and the third term of is upper bounded by

In two cases of , the second term of becomes

Before analysing the first term of , we look at in two cases of . When ,

When ,

In both cases, . Therefore, the first term of becomes

To summarize, we have

and therefore

With full-gradient (i.e., ), we get accuracy solution with the total number of iterations

When , i.e., there is no compression, the iteration complexity recovers that of NIDS,

When , the complexity improves to that of NIDS, i.e., the compression does not harm the convergence in terms of the order of the coefficients. ∎

Proof of Corollary 2.

Note that and , then

| (50) |

The last inequality holds because we have ∎

E.6 Proof of Theorem 2

Proof of Theorem 2.

In order to get exact convergence, we pick a diminishing step size, set , , and then

If we further pick diminishing and such that then

Notice that since is increasing in with limit at .

In this case we only need,

| (51) |

And

if and note that

We define

Hence

From we get

If we pick , then it’s sufficient to let

Hence if and let then guarantees the above discussion and

So far all restrictions for are

and

Let , and we claim that if we pick and some , by setting , we get

Induction:

When , it is obvious. Suppose the previous inequalities hold. Then

Multiplying both sides by , we get

Hence

This induction holds for any such that is feasible, i.e.

Here we summarize the definitions of the constants:

| (52) | ||||

| (53) | ||||

| (54) |

Therefore, let and we get

Since , we complete the proof.

∎