Linear optimal transport subspaces for point set classification

Abstract

Learning from point sets is an essential component in many computer vision and machine learning applications. The native, unordered, and permutation-invariant structure of set data is challenging to model, particularly for point set classification under spatial deformations. Here we propose a framework for classifying point sets that undergo certain types of spatial deformations, with a particular emphasis on datasets featuring affine deformations. Our approach employs the Linear Optimal Transport (LOT) transform to obtain a linear embedding of set-structured data. Using the mathematical properties of the LOT transform, we show that it can accommodate variations in point sets by constructing a convex data space, which simplifies point set classification problems. Our method, which employs a nearest-subspace algorithm in the LOT space, is label efficient, non-iterative, and requires no hyperparameter tuning. It achieves accuracies competitive with state-of-the-art methods across various point set classification tasks. Furthermore, our approach is robust in out-of-distribution scenarios where the training and test distributions differ in their deformation magnitudes.

Index Terms:

particle-LOT, subspace modeling, classification, optimal transport.

I Introduction

Point sets provide valuable information about object geometry, making them useful for a variety of applications, including object detection, recognition, segmentation, and tracking in fields such as robotics, autonomous vehicles, virtual reality, and computer vision, among others [1, 2, 3, 4, 5]. They represent the surface geometry of an object in an N-dimensional space as a set of points, obtained using scanning technologies such as LiDAR or photogrammetry [6, 7], or by sampling a continuous probability density function over an N-D space [8]. However, modeling the set structure space for classification is challenging because of the sparsity and noise in the data, the accumulation of spatial deformations (such as affine deformations) in real-world point set data, and the high dimensionality of point sets, among other factors [9, 10, 1, 11]. Furthermore, defining a metric or distance function for point set classification is difficult because point sets are permutation invariant: the order of points in a set is arbitrary [1, 12]. Despite these challenges, there has been growing interest in developing new algorithms and techniques for point set classification.

In recent years, several research efforts have focused on point set classification, resulting in various methods that address the challenges in this area. Over the last few decades, point set classification methods have evolved from relying on feature engineering [13, 14, 15, 16] to using deep neural networks that learn representations for classification tasks [3, 1, 17]. Neural networks have emerged as a leading classification framework for point sets, providing end-to-end learning and eliminating the need for hand-crafted features. They have been shown to achieve high accuracy in several classification tasks and are well suited to parallel implementation on graphics processing units (GPUs) [3, 1, 17]. However, the effectiveness of neural network-based methods is often limited by their high data requirements [18], high computational costs [1], and vulnerability to out-of-distribution samples, e.g., adversarial attacks [19, 20, 21].

While the conventional approach to modeling point sets processes their coordinates directly, an alternative and less commonly used approach is to represent a point set as a deformation of another point set [22]. Point set deformation models of this kind have been developed using the mathematics of optimal mass transport [22, 23]. These models treat a point set as the image of a reference point set under a smooth, nonlinear, and invertible transformation. Such models can be estimated with the linear optimal transport (LOT) transform [23], which provides a linear embedding of a point set that can be used to compare it with other point sets. The LOT transform has been combined with various machine learning techniques and used in many applications [23, 20].

This paper introduces a new method for classifying point sets that builds on LOT-based modeling frameworks. We start by introducing a transport generative model to define point set classes, where class elements are conceived as instances of an unknown template point set pattern under the effect of unknown spatial deformations. Using the mathematical properties of the LOT transform, we establish that, under our generative model (with certain conditions on the spatial deformations), these point set classes form approximately convex sets in the LOT space, which can be modeled by subspaces that accommodate the variations in point set data. We then propose a nearest subspace classifier in the LOT space for classifying point sets under the given generative model. Our model can also mathematically encode invariances by integrating prior knowledge of deformations known to be present in the data. In our experiments, we focus on datasets with affine deformations and demonstrate the effectiveness of our method compared to several state-of-the-art methods. Our approach is particularly strong when training data are limited and in the challenging out-of-distribution setting, where the training and test distributions differ in their deformation magnitudes.

II Preliminaries

II-A Linear optimal transport embeddings

The fundamental principle of optimal transport theory is to quantify the effort, measured as transported mass multiplied by a cost of the distance traveled (here, the squared Euclidean distance), required to rearrange one distribution into another; this gives rise to the Wasserstein metric between distributions. In the present study, we use a linearized version of this metric, as outlined in [23], which is constructed formally through a tangent space approximation of the underlying manifold.

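Following the construction in [23], we define the linear optimal transport transform for probability measures in $\mathcal{P}_2(\mathbb{R}^n)$, the set of absolutely continuous probability measures with finite second moments and bounded densities. (Footnote 1: Any $\mu\in\mathcal{P}_2(\mathbb{R}^n)$ has the following two properties: (i) bounded second moment, i.e., $\int_{\mathbb{R}^n}|x|^2\,d\mu(x)<\infty$; (ii) absolute continuity with respect to the Lebesgue measure on $\mathbb{R}^n$ with bounded density, i.e., $\mu$ has a density function $f_\mu$ defined on $\mathbb{R}^n$ with $\|f_\mu\|_\infty<\infty$.) For simplicity, let us fix a reference measure $\sigma$ as the normalized Lebesgue measure on a convex compact set $\Omega\subset\mathbb{R}^n$. By Brenier's theorem [24], there is a unique minimizer $T^{\sigma}_{\mu}$ to the following optimal transportation problem

$$T^{\sigma}_{\mu}=\arg\min_{T:\,T_\#\sigma=\mu}\int_{\mathbb{R}^n}|T(x)-x|^2\,d\sigma(x),\tag{1}$$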

where the push-forward (transport) relation is defined via $T_\#\sigma(A)=\sigma(T^{-1}(A))$ for any measurable set $A\subset\mathbb{R}^n$. The linear optimal transport (LOT) transform is given by the following correspondence

$$\mu\mapsto T^{\sigma}_{\mu},\tag{2}$$

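where each probability measure $\mu$ is identified with the optimal transport map $T^{\sigma}_{\mu}$ from the fixed reference $\sigma$ to $\mu$, which lies in a linear space. The square root of the minimum value in (1) is called the Wasserstein-2 distance $W_2(\sigma,\mu)$ between $\sigma$ and $\mu$ [25]. The LOT metric between two probability distributions $\mu,\nu\in\mathcal{P}_2(\mathbb{R}^n)$ is (Footnote 2: Note that $d_{LOT}(\sigma,\mu)=W_2(\sigma,\mu)$, since $T^{\sigma}_{\sigma}$ is the identity map.)

$$d_{LOT}(\mu,\nu)=\|T^{\sigma}_{\mu}-T^{\sigma}_{\nu}\|_{\sigma},\qquad \|f\|_{\sigma}^2=\int_{\mathbb{R}^n}|f(x)|^2\,d\sigma(x).\tag{3}$$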

For simplicity, we denote by $\hat{\mu}$ the LOT transform of $\mu$, i.e., $\hat{\mu}=T^{\sigma}_{\mu}$, where $\sigma$ is fixed.
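As a one-dimensional sanity check of (1)–(3), added here as an illustration rather than taken from the method: if $\sigma$ is the uniform measure on $[0,1]$, the optimal map $T^{\sigma}_{\mu}$ is the quantile function of $\mu$, so the LOT embedding can be sampled on a grid and $d_{LOT}$ computed as a discretized $L^2(\sigma)$ norm. In one dimension $d_{LOT}$ coincides with $W_2$, which for two Gaussians has the closed form $\sqrt{(m_1-m_2)^2+(s_1-s_2)^2}$. The sketch uses only Python's `statistics.NormalDist`; the function names and the grid size are arbitrary choices.

```python
import math
from statistics import NormalDist


def lot_embedding_1d(dist, num_grid=10_000):
    """Sample T^sigma_mu on a midpoint grid of [0, 1].

    With sigma = Uniform[0, 1] in one dimension, the optimal transport map
    from sigma to mu is the quantile function (inverse CDF) of mu.
    """
    grid = [(i + 0.5) / num_grid for i in range(num_grid)]
    return [dist.inv_cdf(t) for t in grid]


def lot_distance(emb_a, emb_b):
    """Discretized ||T_a - T_b||_sigma; sigma is uniform, so this is a plain mean."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(emb_a, emb_b)) / len(emb_a))


mu = NormalDist(mu=1.0, sigma=2.0)
nu = NormalDist(mu=-0.5, sigma=0.5)
d_lot = lot_distance(lot_embedding_1d(mu), lot_embedding_1d(nu))
# Closed-form W2 between 1D Gaussians.
w2_closed_form = math.hypot(mu.mean - nu.mean, mu.stdev - nu.stdev)
print(f"d_LOT ~ {d_lot:.4f}, W2 = {w2_closed_form:.4f}")
```

The grid-based value approaches the closed form as `num_grid` grows; in higher dimensions no such closed-form map is available, which is why the discrete construction of Section II-B is used.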

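It turns out that the linearization ability of LOT is closely related to the scope of the following so-called composition property [26, 27]:

$$\widehat{g_\#\mu}=g\circ\hat{\mu},\tag{4}$$

where $\mu\in\mathcal{P}_2(\mathbb{R}^n)$, $g\in\mathrm{Diff}(\mathbb{R}^n)$, and $\mathrm{Diff}(\mathbb{R}^n)$ is the set of all diffeomorphisms from $\mathbb{R}^n$ to $\mathbb{R}^n$. In particular, given a convex $\mathbb{G}\subset\mathrm{Diff}(\mathbb{R}^n)$, the LOT embeddings of measures deformed via maps in $\mathbb{G}$ form a convex set (Footnote 3: Note that in general the set $\{g_\#\mu : g\in\mathbb{G}\}$ itself is not convex, as a mixture $(1-t)\,g_{1\#}\mu+t\,g_{2\#}\mu$ is in general not of the form $g_\#\mu$ with $g\in\mathbb{G}$.) if every $g\in\mathbb{G}$ satisfies the composition property (4), as shown more formally below.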

Proposition II.1 (Lemma A.2 in [27]).

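Let $\mathbb{G}\subset\mathrm{Diff}(\mathbb{R}^n)$ be convex. Given $\mu\in\mathcal{P}_2(\mathbb{R}^n)$, define $\mathbb{S}_{\mu,\mathbb{G}}=\{g_\#\mu : g\in\mathbb{G}\}$. If (4) holds for all $g\in\mathbb{G}$, then $\hat{\mathbb{S}}_{\mu,\mathbb{G}}=\{\hat{\nu} : \nu\in\mathbb{S}_{\mu,\mathbb{G}}\}$ is convex in the LOT transform domain.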
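The composition property (4) and Proposition II.1 can be checked numerically for basic maps $g(x)=ax+b$ with $a>0$. The sketch below is an illustration under stated assumptions, not the authors' code: it uses the discrete, equal-cardinality LOT of Section II-B, stores each point set as an $N\times n$ NumPy array with one point per row, and solves the uniform-weight transport problem with SciPy's `linear_sum_assignment`. For such $g$ the optimal matching to the reference does not change, so $\widehat{g_\#\mu}$ and $g\circ\hat{\mu}$ agree to floating-point precision, and a convex combination of two embeddings equals the embedding of the correspondingly mixed map.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def lot_embed(x, z):
    """Discrete LOT embedding of point set x (N x n) w.r.t. reference z (N x n).

    Row i of the result is the point of x matched to z[i] by the optimal
    uniform-weight transport plan, computed as an assignment problem.
    """
    cost = ((z[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)  # cost[i, j] = |z_i - x_j|^2
    rows, cols = linear_sum_assignment(cost)
    return x[cols[np.argsort(rows)]]


def basic_map(a, b):
    """A basic map g(p) = a * p + b with a > 0, applied to every row."""
    return lambda pts: a * pts + b


rng = np.random.default_rng(0)
N, n = 50, 2
z = rng.normal(size=(N, n))        # reference point set
x = rng.normal(size=(N, n)) + 1.0  # point set defining mu
x_hat = lot_embed(x, z)

a1, b1 = 1.5, np.array([0.3, -1.0])
a2, b2 = 0.6, np.array([2.0, 0.5])
g1, g2 = basic_map(a1, b1), basic_map(a2, b2)

# Composition property (4): LOT(g1_# mu) equals g1 applied to LOT(mu).
composition_gap = np.abs(lot_embed(g1(x), z) - g1(x_hat)).max()

# Proposition II.1: the convex combination of two embeddings is the embedding
# of g_t # mu, where g_t = (1 - t) g1 + t g2 is again a basic map.
t = 0.3
g_t = basic_map((1 - t) * a1 + t * a2, (1 - t) * b1 + t * b2)
mixed = (1 - t) * lot_embed(g1(x), z) + t * lot_embed(g2(x), z)
convexity_gap = np.abs(mixed - lot_embed(g_t(x), z)).max()
print(composition_gap, convexity_gap)
```

The exactness comes from the cost structure: for $a>0$, $|z_i-(ax_j+b)|^2$ differs from $|z_i-x_j|^2$ only by terms that are constant over matchings and a positive rescaling of the cross term, so the optimal permutation is unchanged (ties aside).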

When the dimension $n\ge 2$, it is shown in [26] that $g$ can only be a "basic" transformation (more specifically, a translation, an isotropic scaling, or a composition of the two) for the composition property (4) to hold for arbitrary $\mu$. Fortunately, [27] proposes an approximate composition property for perturbations of these basic transformations, whose set we denote by $\mathcal{E}=\{x\mapsto ax+b : a>0,\ b\in\mathbb{R}^n\}$.

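Property 1 (Approximate composition, p. 388 in [27]; this property is referred to as $\varepsilon$-compatibility in [27].) Let $\mu\in\mathcal{P}_2(\mathbb{R}^n)$ and $\varepsilon>0$. Let $g\in\mathrm{Diff}(\mathbb{R}^n)$ be such that $\|g-h\|_\infty\le\varepsilon$ for some $h\in\mathcal{E}$. Then there exists some $\delta>0$ such that

$$\|T^{\sigma}_{g_\#\mu}-g\circ T^{\sigma}_{\mu}\|_{\sigma}\le\delta.\tag{5}$$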

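Remark: Using the notation $\hat{\mu}$ for the LOT transform of $\mu$, (5) reads

$$\|\widehat{g_\#\mu}-g\circ\hat{\mu}\|_{\sigma}\le\delta,\quad\text{i.e.,}\quad \widehat{g_\#\mu}\approx g\circ\hat{\mu}.\tag{6}$$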

With the above approximate composition property, one can show the following approximate convexity analog of Proposition II.1 using Lemmas A.3 and A.4 of [27]:

Proposition II.2.

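Let $\varepsilon>0$ and let $\mathbb{G}\subset\mathrm{Diff}(\mathbb{R}^n)$ be convex such that for any $g\in\mathbb{G}$ there exists some $h\in\mathcal{E}$ with $\|g-h\|_\infty\le\varepsilon$. Given $\mu\in\mathcal{P}_2(\mathbb{R}^n)$, the set $\hat{\mathbb{S}}_{\mu,\mathbb{G}}$ is $2\delta$-convex in the LOT transform domain, where $\delta$ is given by the approximate composition property above. In particular, for any $g_1,g_2\in\mathbb{G}$ and $t\in[0,1]$,

$$\big\|(1-t)\,\widehat{g_{1\#}\mu}+t\,\widehat{g_{2\#}\mu}-\widehat{g_{t\#}\mu}\big\|_{\sigma}\le 2\delta,\tag{7}$$

where $g_t=(1-t)\,g_1+t\,g_2\in\mathbb{G}$.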
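For deformations outside the basic class, such as anisotropic scaling or shear, (4) generally fails, and the defects appearing in (5) and (7) can be measured directly. The sketch below is illustrative only (same assumed $N\times n$ array layout and SciPy assignment solver; the specific matrices are arbitrary): it computes the composition defects $\delta_1$, $\delta_2$, $\delta_t$ of two linear maps and of their convex combination, and the convexity defect on the left of (7). Since $(1-t)\,g_1\circ\hat{\mu}+t\,g_2\circ\hat{\mu}=g_t\circ\hat{\mu}$, the triangle inequality bounds the convexity defect by $(1-t)\delta_1+t\delta_2+\delta_t$, i.e., by twice the largest composition defect.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def lot_embed(x, z):
    """Discrete LOT embedding: rows of x reordered to match the reference rows z[i]."""
    cost = ((z[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)
    rows, cols = linear_sum_assignment(cost)
    return x[cols[np.argsort(rows)]]


def lot_norm(f):
    """||f||_{mu_z}: root-mean-square of the per-point Euclidean norms."""
    return float(np.sqrt(np.mean(np.sum(f ** 2, axis=1))))


def composition_defect(A, x, z, x_hat):
    """||LOT(g_# mu) - g o LOT(mu)|| for the linear map g(p) = A p."""
    return lot_norm(lot_embed(x @ A.T, z) - x_hat @ A.T)


rng = np.random.default_rng(1)
N, n = 60, 2
z = rng.normal(size=(N, n))
x = rng.normal(size=(N, n)) * np.array([1.0, 0.4])
x_hat = lot_embed(x, z)

A1 = np.diag([1.0, 1.8])                 # anisotropic scaling
A2 = np.array([[1.0, 0.6], [0.0, 1.0]])  # shear
t = 0.5
At = (1 - t) * A1 + t * A2               # g_t = (1 - t) g1 + t g2 is again linear

d1, d2, dt = (composition_defect(A, x, z, x_hat) for A in (A1, A2, At))
convexity_defect = lot_norm(
    (1 - t) * lot_embed(x @ A1.T, z) + t * lot_embed(x @ A2.T, z) - lot_embed(x @ At.T, z)
)
print(f"delta_1={d1:.3f}, delta_2={d2:.3f}, delta_t={dt:.3f}, convexity defect={convexity_defect:.3f}")
```

The defects depend on the data and on how far the maps are from the basic class; the sketch does not attempt to reproduce the quantitative bounds of [27].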

II-B Discrete implementation for point sets

For the analysis of discrete point set data, a discrete version of the linear optimal transport (LOT) embedding is required. In this case, both the reference and the target are discrete probability measures represented by point sets in $\mathbb{R}^n$. A point set in an $n$-dimensional space is a finite set of points in $\mathbb{R}^n$. A point set with $N$ points can be thought of as the image of an injective map $x:\{1,\dots,N\}\to\mathbb{R}^n$. (Footnote 5: Note that a point set may be associated with many injective maps, e.g., the image sets of $x$ and $x\circ\pi$ are the same for any permutation $\pi$ of $\{1,\dots,N\}$.) Given a point set with $N$ points, we define the discrete probability distribution associated with it as

$$\mu_x=\frac{1}{N}\sum_{i=1}^{N}\delta_{x(i)}.\tag{8}$$

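Given a diffeomorphism $g:\mathbb{R}^n\to\mathbb{R}^n$, the push-forward distribution of $\mu_x$ under $g$ is given as

$$g_\#\mu_x=\frac{1}{N}\sum_{i=1}^{N}\delta_{g(x(i))}=\mu_{g\circ x}.\tag{9}$$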

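Let $\mathcal{I}_N$ denote the collection of injective maps from $\{1,\dots,N\}$ to $\mathbb{R}^n$. Given $x,y\in\mathcal{I}_N$, the optimal transportation (Wasserstein-2) distance between the associated distributions $\mu_x$ and $\mu_y$ can be obtained by solving the following linear programming problem:

$$W_2^2(\mu_x,\mu_y)=\min_{P}\sum_{i=1}^{N}\sum_{j=1}^{N}P_{ij}\,|x(i)-y(j)|^2,\tag{10}$$

where $P=[P_{ij}]\in\mathbb{R}^{N\times N}$, $P_{ij}\ge 0$, and $\sum_{j=1}^{N}P_{ij}=\sum_{j=1}^{N}P_{ji}=\frac{1}{N}$ for all $i$. Let us fix some $z\in\mathcal{I}_N$ and use $\mu_z$ as a reference. The feasible set of (10) consists of doubly stochastic matrices scaled by $\frac{1}{N}$, whose extreme points are scaled permutation matrices (Birkhoff–von Neumann theorem); hence (10) always admits a minimizer that is $\frac{1}{N}$ times a permutation matrix [25]. In other words, there is a permutation $\pi$ of $\{1,\dots,N\}$ such that

$$W_2^2(\mu_z,\mu_x)=\frac{1}{N}\sum_{i=1}^{N}|z(i)-x(\pi(i))|^2.$$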

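Hence, with $z$ fixed, an optimal transport map from $\mu_z$ to $\mu_x$ is determined by $x$ and $\pi$: it sends $z(i)$ to $x(\pi(i))$. The LOT transform of $\mu_x$ is defined as [23] (Footnote 6: Note that one can write $\hat{\mu}_x=x\circ\pi$. Note also that $\pi$ may not be unique in general; we follow the implementation in [23] to estimate one of them.)

$$\hat{\mu}_x=\big[x(\pi(1)),\,x(\pi(2)),\,\dots,\,x(\pi(N))\big]\in\mathbb{R}^{n\times N},\tag{11}$$

and the LOT distance between two point set measures is

$$d_{LOT}(\mu_x,\mu_y)=\|\hat{\mu}_x-\hat{\mu}_y\|_{\mu_z},\tag{12}$$

where $\|f\|_{\mu_z}^2=\frac{1}{N}\sum_{i=1}^{N}|f(i)|^2$ for $f=[f(1),\dots,f(N)]\in\mathbb{R}^{n\times N}$.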
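The discrete construction in (10)–(12) can be sketched in a few lines. The example below is an illustration under stated assumptions (point sets stored as $N\times n$ arrays with one point per row, SciPy solvers, random data, helper names of our own choosing): it solves (10) once as a generic linear program with `scipy.optimize.linprog` and once as an assignment problem with `linear_sum_assignment`, confirming that the optimal values agree because an optimal plan can be taken to be a scaled permutation matrix. It then forms the LOT embedding and distance and checks two consequences of the definitions: $d_{LOT}(\mu_z,\mu_x)=W_2(\mu_z,\mu_x)$, and $d_{LOT}(\mu_x,\mu_y)\ge W_2(\mu_x,\mu_y)$, because composing the two matchings through $z$ gives a feasible, but not necessarily optimal, matching between $x$ and $y$.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment, linprog


def sq_cost(x, y):
    """Matrix of squared distances |x_i - y_j|^2 between the rows of x and y."""
    return ((x[:, None, :] - y[None, :, :]) ** 2).sum(axis=-1)


def w2_squared_lp(x, y):
    """Solve (10) as a generic LP over N x N plans with all marginals equal to 1/N."""
    N = len(x)
    row_sums = np.kron(np.eye(N), np.ones((1, N)))  # sum_j P_ij for each i
    col_sums = np.kron(np.ones((1, N)), np.eye(N))  # sum_i P_ij for each j
    res = linprog(sq_cost(x, y).ravel(), A_eq=np.vstack([row_sums, col_sums]),
                  b_eq=np.full(2 * N, 1.0 / N), bounds=(0, None), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.fun


def matching(x, z):
    """Permutation pi such that z[i] is matched to x[pi[i]] (assignment form of (10))."""
    rows, cols = linear_sum_assignment(sq_cost(z, x))
    return cols[np.argsort(rows)]


def w2(x, y):
    """W2(mu_x, mu_y) computed from an optimal permutation."""
    pi = matching(y, x)  # x[i] is matched to y[pi[i]]
    return float(np.sqrt(np.mean(sq_cost(x, y)[np.arange(len(x)), pi])))


def lot_embed(x, z):
    """Discrete LOT embedding as an N x n array: row i is x(pi(i))."""
    return x[matching(x, z)]


def lot_distance(x, y, z):
    """d_LOT in (12): root-mean-square distance between the two embeddings."""
    diff = lot_embed(x, z) - lot_embed(y, z)
    return float(np.sqrt(np.mean(np.sum(diff ** 2, axis=1))))


rng = np.random.default_rng(2)
N, n = 12, 2
z, x, y = (rng.normal(size=(N, n)) for _ in range(3))

print("LP vs assignment:", w2_squared_lp(z, x), w2(z, x) ** 2)
print("d_LOT(z, x) vs W2(z, x):", lot_distance(z, x, z), w2(z, x))
print("d_LOT(x, y) vs W2(x, y):", lot_distance(x, y, z), w2(x, y))
```

The dense LP has $N^2$ variables and is only practical for tiny $N$; the assignment solver is the natural choice for equal-size, uniform-weight point sets.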

III Transport based Classification Problem statement

In this section, we present a generative model-based problem statement for the type of classification problems we discuss in this paper, building upon the preliminaries established earlier. Our focus is on point set classification, where every class can be viewed as a collection of instances of a prototype point set pattern (a template) observed under unknown spatial deformations. To formalize this concept, we introduce a generative model that provides a formal approach to characterizing point set data of this type.

Generative model: Let $\mathbb{G}\subset\mathrm{Diff}(\mathbb{R}^n)$ be a set of smooth one-to-one transformations in an $n$-dimensional space. The mass-preserving generative model for the $k$-th class is defined to be the set

$$\mathbb{S}^{(k)}=\big\{s^{(k)}_j \;\big|\; s^{(k)}_j=g_{j\#}\varphi^{(k)},\ g_j\in\mathbb{G}\big\},\tag{13}$$

where $\varphi^{(k)}$ corresponds to the point set distribution of the prototype template pattern for the $k$-th class and $s^{(k)}_j$ represents the point set distribution of the $j$-th sample from the $k$-th class in $\mathbb{S}^{(k)}$. With these definitions, we can now give a formal mathematical statement of the generative-model-based point set classification problem.
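To make the generative model (13) concrete, the sketch below draws samples $s_j=g_{j\#}\varphi$ from a template point set by pushing every template point through a random affine map that combines anisotropic scaling, shear, and translation, the deformation types used in the experiments of Section V. The helper names and the parameter ranges (`max_shift`, `scale_range`, `max_shear`) are hypothetical choices for illustration, not values from the paper; the points of each sample are shuffled to emphasize that a point set carries no intrinsic order.

```python
import numpy as np


def random_affine(rng, n, max_shift=0.5, scale_range=(0.7, 1.3), max_shear=0.3):
    """Draw a hypothetical affine deformation g(p) = A p + b."""
    scaling = np.diag(rng.uniform(*scale_range, size=n))  # anisotropic scaling
    shear = np.eye(n)
    off_diag = ~np.eye(n, dtype=bool)
    shear[off_diag] = rng.uniform(-max_shear, max_shear, size=n * n - n)  # shear factors
    translation = rng.uniform(-max_shift, max_shift, size=n)
    return shear @ scaling, translation


def sample_class(template, num_samples, rng):
    """Return num_samples point sets s_j = g_j # phi for random affine maps g_j."""
    samples = []
    for _ in range(num_samples):
        A, b = random_affine(rng, template.shape[1])
        deformed = template @ A.T + b                             # apply g to every point (row)
        samples.append(deformed[rng.permutation(len(deformed))])  # arbitrary point order
    return samples


rng = np.random.default_rng(3)
theta = np.linspace(0, 2 * np.pi, 40, endpoint=False)
phi = np.stack([np.cos(theta), np.sin(theta)], axis=1)  # template: 40 points on a circle
class_samples = sample_class(phi, num_samples=5, rng=rng)
print(len(class_samples), class_samples[0].shape)
```

Because every point carries mass $1/N$, pushing the points through $g_j$ is exactly the push-forward (9) of the template distribution.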

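Classification problem: Let $\mathbb{G}\subset\mathrm{Diff}(\mathbb{R}^n)$, and let the sets of point set distributions $\mathbb{S}^{(k)}$ be given as in equation (13). Given training samples $\{s^{(1)}_1,s^{(1)}_2,\dots\}$ (class 1), $\{s^{(2)}_1,s^{(2)}_2,\dots\}$ (class 2), $\dots$ as training data, determine the class of an unknown distribution $s$.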

Note that the generative model in equation (13) describes set-structured point set data, which are difficult to compare directly because of their permutation invariance. Moreover, the class $\mathbb{S}^{(k)}$ is not guaranteed to be convex, which makes effective classification with machine learning techniques harder. In the following sections, we solve the above classification problem by first embedding the point sets with the linear optimal transport (LOT) transform and then approximating the resulting (approximately) convex sets with subspaces, as done in many image [21, 28], signal [29, 30], and gradient distribution [31] classification problems.

IV Proposed solution

The LOT transform described in Section II can significantly simplify the classification problem above by providing a linear embedding of the set-structured point set data in which the classes are (approximately) convex. Let us first examine the generative model in equation (13) in the LOT transform space. Applying the approximate composition property (equation (6)) to the generative model in equation (13), we obtain the following LOT-space generative model:

$$\hat{\mathbb{S}}^{(k)}=\big\{\hat{s}^{(k)}_j \;\big|\; \hat{s}^{(k)}_j\approx g_j\circ\hat{\varphi}^{(k)},\ g_j\in\mathbb{G}\big\}.\tag{14}$$

Here, $\hat{s}^{(k)}_j$ and $\hat{\varphi}^{(k)}$ refer to the LOT embeddings of $s^{(k)}_j$ and $\varphi^{(k)}$, respectively, with respect to a reference structure $\mu_z$ (see equation (11)). Based on the preliminary results in Section II (Property 1, Proposition II.2, and related results), the set $\hat{\mathbb{S}}^{(k)}$ can be shown to be convex up to a certain bound, under certain constraints on $\mathbb{G}$. Furthermore, because the LOT transform is one-to-one, if $\mathbb{S}^{(k)}\cap\mathbb{S}^{(p)}=\emptyset$ for $k\neq p$, then $\hat{\mathbb{S}}^{(k)}\cap\hat{\mathbb{S}}^{(p)}=\emptyset$ [21].

IV-A Training phase

Based on the theoretical discussion above, we propose a simple, non-iterative training procedure for the classifier. It consists of computing, for each class $k$, a projection matrix that maps each sample in the LOT space onto the subspace $\mathbb{V}^{(k)}$ (as outlined in [21]) generated by the $2\delta$-convex set $\hat{\mathbb{S}}^{(k)}$. Specifically, we estimate the projection matrix as follows.

Given a set of training samples $\{s^{(k)}_1,\dots,s^{(k)}_{N_k}\}$, the first step of the proposed method is to apply the LOT transform to them using a reference distribution $\mu_z$. This yields the transformed samples $\{\hat{s}^{(k)}_1,\dots,\hat{s}^{(k)}_{N_k}\}$. The reference distribution is obtained by selecting a point set at random from the training set and applying random perturbations to it. Subsequently, we estimate $\mathbb{V}^{(k)}$ as follows:

$$\mathbb{V}^{(k)}=\operatorname{span}\big\{\hat{s}^{(k)}_1,\hat{s}^{(k)}_2,\dots,\hat{s}^{(k)}_{N_k}\big\}.\tag{15}$$
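Equation (15) can be implemented by stacking the vectorized LOT embeddings of a class as columns and taking an orthonormal basis of their span. The sketch below does this with an SVD and, as one possible reading of the 99%-variance rule described in Section V-A, keeps the leading singular vectors whose squared singular values account for that fraction of the total. The function name `fit_subspace` and the `energy` parameter are our own labels, and the random arrays in the usage lines only stand in for real LOT embeddings.

```python
import numpy as np


def fit_subspace(embeddings, energy=0.99):
    """Orthonormal basis (d x r) approximating span{s_hat_1, ..., s_hat_Nk}.

    Columns are left singular vectors of the d x N_k data matrix; r is the
    smallest count whose squared singular values reach `energy` of the total.
    """
    X = np.stack([e.ravel() for e in embeddings], axis=1)  # one embedding per column
    U, svals, _ = np.linalg.svd(X, full_matrices=False)
    cumulative = np.cumsum(svals ** 2) / np.sum(svals ** 2)
    r = min(int(np.searchsorted(cumulative, energy)) + 1, U.shape[1])
    return U[:, :r]


rng = np.random.default_rng(4)
train_embeddings = [rng.normal(size=(30, 2)) for _ in range(5)]  # stand-ins for LOT embeddings
B = fit_subspace(train_embeddings)
print(B.shape)
```

With `energy=1.0` the basis spans all training embeddings exactly; smaller values discard weak directions, trading a little fidelity for robustness to noise.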

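The proposed method also provides a way to mathematically encode invariances with respect to deformations that are known to be present in the data [21, 28]. In this paper, we prescribe how to encode invariances with respect to a set of affine transformations: translation, isotropic and anisotropic scaling, and shear. The deformation types and the corresponding spanning sets are described below. Throughout, $e_p$ denotes the $p$-th standard basis vector of $\mathbb{R}^n$, $E_{pq}=e_pe_q^{\top}$, and $\mathbf{1}_N$ is the all-ones vector in $\mathbb{R}^N$. Because the template $\hat{\varphi}^{(k)}$ is unknown, the data-dependent spanning sets are formed from the training embeddings $\hat{s}^{(k)}_j$, to which the same argument applies.

1. Translation: Let $s=g_\#\varphi$ be the translation of $\varphi$ by $x_0=(x_{0,1},\dots,x_{0,n})^{\top}\in\mathbb{R}^n$, where $g(x)=x+x_0$. Using equation (6), we have that $\hat{s}\approx\hat{\varphi}+x_0\mathbf{1}_N^{\top}=\hat{\varphi}+\sum_{p=1}^{n}x_{0,p}\,e_p\mathbf{1}_N^{\top}$. Therefore, the spanning set for translation is defined as $\mathbb{U}_T=\{e_1\mathbf{1}_N^{\top},\dots,e_n\mathbf{1}_N^{\top}\}$.

2. Isotropic scaling: Let $s=g_\#\varphi$ be the normalized isotropic scaling of $\varphi$ by $a$, where $g(x)=ax$ and $a>0$. Using equation (6), we have that $\hat{s}\approx a\hat{\varphi}$. As in [21, 28], an additional spanning set for isotropic scaling is not required, as the subspace containing $\hat{\varphi}$ naturally contains its scalar multiple $a\hat{\varphi}$. Therefore, the spanning set for isotropic scaling is defined as $\mathbb{U}_D=\emptyset$.

3. Anisotropic scaling: Let $s=g_\#\varphi$ be the normalized anisotropic scaling of $\varphi$, where $g(x)=Ax$, $A=\operatorname{diag}(a_1,\dots,a_n)$, and $a_p>0$ for $p=1,\dots,n$. Using equation (6), we have that $\hat{s}\approx A\hat{\varphi}$. Consequently,

$$\hat{s}\approx\sum_{p=1}^{n}a_p\,E_{pp}\hat{\varphi}.$$

Therefore, the spanning set for anisotropic scaling is defined as

$$\mathbb{U}_A^{(k)}=\big\{E_{pp}\hat{s}^{(k)}_j \;:\; p=1,\dots,n,\ j=1,\dots,N_k\big\}.$$

4. Shear: Let $s=g_\#\varphi$ be the normalized shear of $\varphi$, where $g(x)=Hx$ and $H=I_n+\sum_{p\neq q}h_{pq}E_{pq}$. Here, the shear matrix $H$ is constructed using the shear factors $h_{pq}$, which are located at the off-diagonal positions of $H$. Using equation (6), we have that $\hat{s}\approx H\hat{\varphi}$. Consequently,

$$\hat{s}\approx\hat{\varphi}+\sum_{p\neq q}h_{pq}\,E_{pq}\hat{\varphi}.$$

Therefore, the spanning set for shear is defined as

$$\mathbb{U}_H^{(k)}=\big\{E_{pq}\hat{s}^{(k)}_j \;:\; p\neq q,\ j=1,\dots,N_k\big\}.$$

Finally, in light of the preceding discussion, we can approximate the enriched subspace as

$$\mathbb{V}^{(k)}_A=\operatorname{span}\big(\mathbb{V}^{(k)}\cup\mathbb{U}_T\cup\mathbb{U}_D\cup\mathbb{U}_A^{(k)}\cup\mathbb{U}_H^{(k)}\big),\tag{16}$$

where $\mathbb{V}^{(k)}$ is given in equation (15).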
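Invariance encoding amounts to appending extra generators to the training embeddings before orthonormalizing. The following sketch assumes that the translation generators are constant fields with a one in a single coordinate, and that the anisotropic-scaling and shear generators are copies $E_{pq}\hat{s}_j$ of each training embedding ($p=q$ for scaling, $p\neq q$ for shear); with point sets stored as $N\times n$ arrays, $E_{pq}\hat{s}_j$ is the array whose column $p$ holds column $q$ of $\hat{s}_j$ and is zero elsewhere. The function names and the rank tolerance are illustrative. Because every matrix is a combination of the $E_{pq}$, the enriched span contains $A\hat{s}_j+b$ for any affine map, which the usage lines check.

```python
import numpy as np


def enriched_basis(embeddings, rel_tol=1e-10):
    """Orthonormal basis of span(V + U_T + U_A + U_H) for N x n embeddings."""
    N, n = embeddings[0].shape
    generators = [e.ravel() for e in embeddings]  # V^(k): training embeddings
    for i in range(n):                            # U_T: translation along axis i
        field = np.zeros((N, n))
        field[:, i] = 1.0
        generators.append(field.ravel())
    for e in embeddings:                          # U_A (p == q) and U_H (p != q)
        for p in range(n):
            for q in range(n):
                mixed = np.zeros((N, n))
                mixed[:, p] = e[:, q]             # E_pq applied to every point of e
                generators.append(mixed.ravel())
    X = np.stack(generators, axis=1)
    U, svals, _ = np.linalg.svd(X, full_matrices=False)
    rank = int(np.sum(svals > rel_tol * svals[0]))  # drop numerically dependent directions
    return U[:, :rank]


def residual(v, B):
    """Norm of the component of v orthogonal to span(B)."""
    return float(np.linalg.norm(v - B @ (B.T @ v)))


rng = np.random.default_rng(5)
train = [rng.normal(size=(30, 2)) for _ in range(3)]  # stand-ins for LOT embeddings
B = enriched_basis(train)
A = np.array([[1.4, 0.3], [0.0, 0.8]])                # anisotropic scaling + shear
b = np.array([2.0, -1.0])                             # translation
deformed = (train[0] @ A.T + b).ravel()               # affine image of a training embedding
unrelated = rng.normal(size=(30, 2)).ravel()
print(residual(deformed, B), residual(unrelated, B))
```

The SVD rank cut removes redundant directions (for instance, each training embedding equals the sum of its $E_{pp}$ copies), so the returned basis has orthonormal, linearly independent columns.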

IV-B Testing phase

To classify a given test sample $s$, we first apply the LOT transform to $s$ to obtain its LOT-space representation $\hat{s}$ with respect to the reference $\mu_z$ (which was pre-selected during the training phase). Assuming that the test samples originate from the generative model in equation (13) (or equation (14)), we determine the class of an unknown test sample using the following expression:

$$\text{class of } s=\arg\min_{k}\, d^2\big(\hat{s},\mathbb{V}^{(k)}_A\big),\tag{17}$$

where $d^2(\hat{s},\mathbb{V}^{(k)}_A)$ is the squared Euclidean distance between the test sample $\hat{s}$ and the trained subspace $\mathbb{V}^{(k)}_A$ in the LOT transform space. This distance can be computed as $\|\hat{s}-B^{(k)}B^{(k)\top}\hat{s}\|^2$, where $\hat{s}$ is vectorized and the matrix $B^{(k)}$ contains an orthonormal basis of the subspace $\mathbb{V}^{(k)}_A$ arranged in its columns.
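The decision rule (17) reduces to comparing projection residuals. The sketch below assumes each class is represented by a matrix with orthonormal columns (for example, the output of an SVD-based subspace fit) and that test embeddings are flattened the same way as the training embeddings; `subspace_distance` and `classify` are illustrative names. Random three-dimensional subspaces of $\mathbb{R}^{40}$ stand in for trained LOT subspaces in the usage lines.

```python
import numpy as np


def subspace_distance(s_hat, B):
    """Squared distance ||s - B B^T s||^2 from an embedding to span(B), B orthonormal."""
    v = np.ravel(s_hat)
    return float(np.sum((v - B @ (B.T @ v)) ** 2))


def classify(s_hat, bases):
    """Nearest-subspace rule: return the class index k with the smallest distance."""
    return int(np.argmin([subspace_distance(s_hat, B) for B in bases]))


rng = np.random.default_rng(6)
bases = [np.linalg.qr(rng.normal(size=(40, 3)))[0] for _ in range(2)]  # stand-in class subspaces
test_0 = bases[0] @ rng.normal(size=3) + 0.01 * rng.normal(size=40)    # near class-0 subspace
test_1 = bases[1] @ rng.normal(size=3) + 0.01 * rng.normal(size=40)    # near class-1 subspace
print(classify(test_0, bases), classify(test_1, bases))
```

Since only matrix-vector products are needed, testing costs $O(d\,r)$ per class for a $d$-dimensional embedding and an $r$-dimensional subspace, with no iterative optimization.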

V Results

V-A Experimental setup

Our objective is to analyze how the proposed method compares with state-of-the-art approaches in terms of classification accuracy, required amount of training data, and robustness in out-of-distribution scenarios under limited training data. To this end, we created training sets of varying sizes from the original training set of each dataset under examination. We then trained the models on these training sets and assessed their performance on the original test set. Each training split was generated by randomly selecting samples (without replacement) from the original training set, and we repeated the experiments for each split size ten times. The same train-test samples were used for all algorithms in each split.

To assess the effectiveness of the proposed approach, we used several comparison methods: PointNet [1], DGCNN [17], and a multilayer perceptron (MLP) [32] in the FSpool feature embedding space [16]. We also compared against various conventional machine learning techniques in different set feature embedding spaces, namely logistic regression (LR), kernel support vector machine (k-SVM), MLP, and nearest subspace (NS) classifiers [32] in the GeM1, GeM2, GeM4 [13], COVpool [14, 15], and FSpool [16] embedding spaces. In addition to these comparisons, we performed out-of-distribution experiments. In the proposed method, we selected the number of basis vectors for each class subspace such that the chosen basis vectors explain 99% of the total variance of the $k$-th class.

To assess the relative performance of the methods, we evaluated them on several datasets, including Point cloud MNIST [33, 34], ModelNet [35], and ShapeNet [36] datasets. We additionally applied random translations, anisotropic scaling, and shear transformations to both the training and test sets of the datasets. For the ShapeNet dataset, we tested the methods under two experimental setups: the regular setup, where both the training and test sets contained point sets at the same deformation magnitude level, and the out-of-distribution setup, where the training and test sets contained point sets at different deformation magnitude levels.

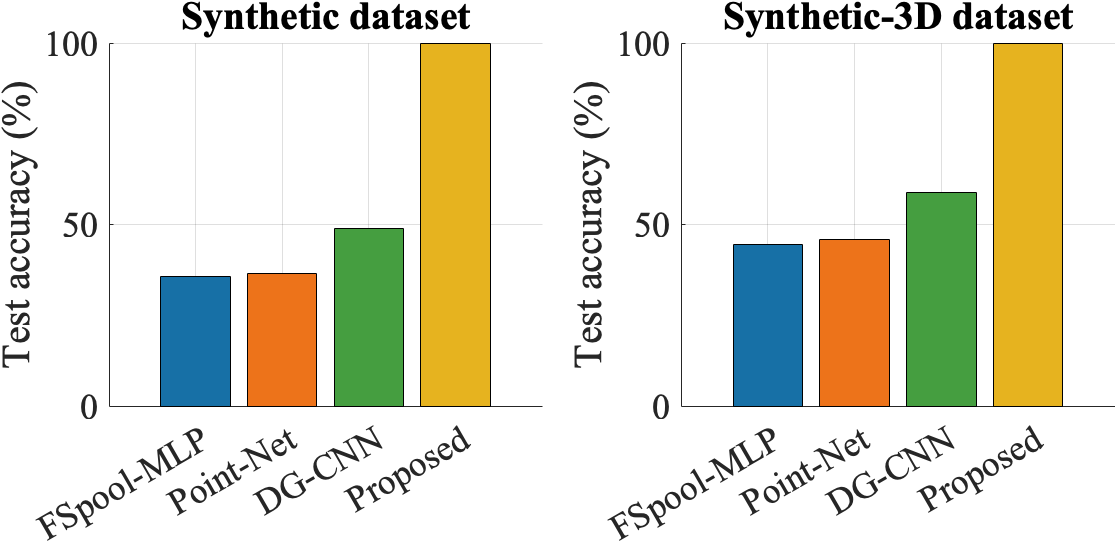

V-B Accuracy in synthetic case

We first evaluated the effectiveness of the proposed method by comparing it with other state-of-the-art techniques on two synthetic datasets. The synthetic datasets were generated by selecting one sample per class from the point cloud MNIST and ShapeNet datasets, followed by introducing random translations, anisotropic scaling, and shear transformations to each selected sample to generate training and test sets. Specifically, the training set consisted of two samples per class, while the test set comprised 25 samples per class. The obtained comparative results are displayed in Fig. 1. As observed, the proposed method substantially outperformed the other methods in this synthetic scenario.

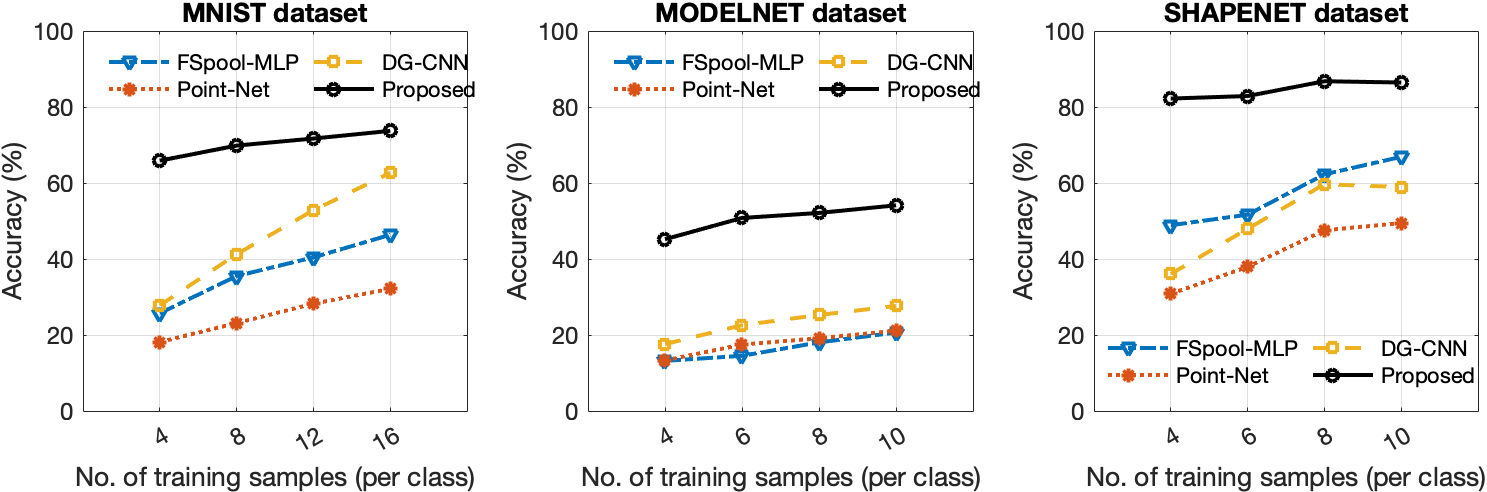

V-C Accuracy and efficiency in real datasets

We evaluated the proposed method by comparing it with several state-of-the-art techniques, including PointNet, DGCNN, and MLP in the FSpool feature embedding space, on the MNIST, ShapeNet, and ModelNet datasets. Fig. 2 presents the average test accuracy obtained for different numbers of training samples per class. The results show that our proposed method outperformed the other methods across the range of training sample sizes used to train the models. Notably, in most cases the accuracy vs. training size curves of the proposed method were smoother than those of the other methods.

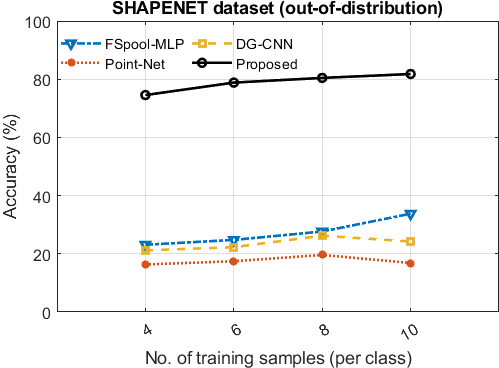

V-D Out-of-distribution robustness

To assess the effectiveness of the proposed method under the out-of-distribution setting, we introduced a gap between the magnitudes of deformations in the training and test sets. Specifically, the deformations in the ‘in-distribution’ training set were drawn from a set of small deformation magnitudes, while those in the ‘out-distribution’ test set were drawn from a set of larger deformation magnitudes. We trained the models using the ‘in-distribution’ data and tested them using the ‘out-distribution’ data. For this experiment, we used the ShapeNet dataset with small deformations as the ‘in-distribution’ training set and the ShapeNet dataset with larger deformations as the ‘out-distribution’ test set (see Fig. 3). The results show that the proposed method outperformed the other methods by an even larger margin under this challenging out-of-distribution setup, as shown in Fig. 3. Under this setup, the proposed method obtained accuracies close to those in the standard experimental setup (i.e., ShapeNet in Fig. 2), whereas the accuracies of the other methods declined significantly (see the ShapeNet results in Figs. 2 and 3).

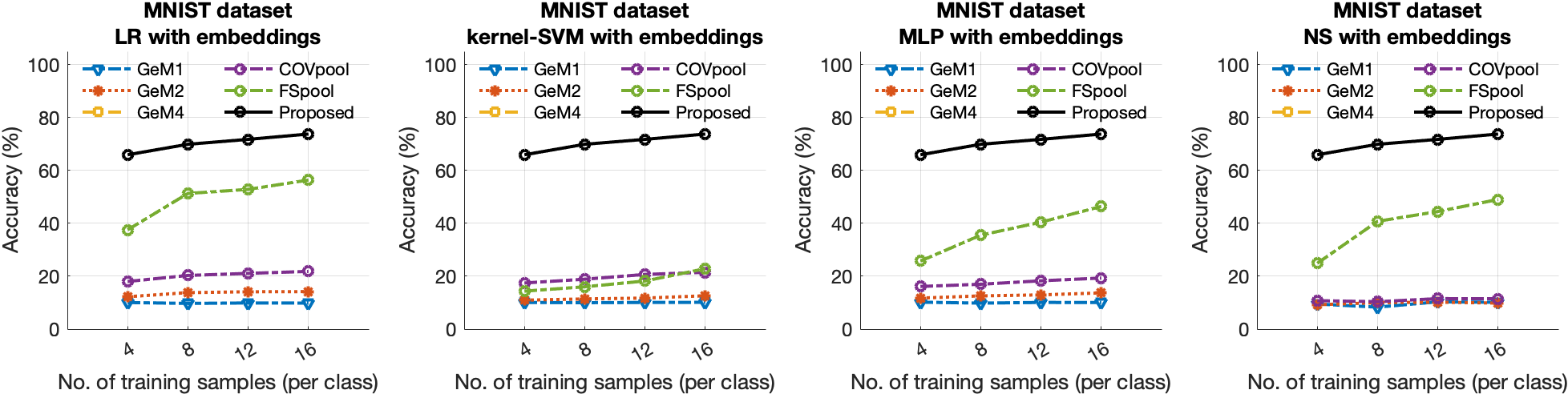

V-E Comparison with set-embedding-based methods

We further evaluated the proposed method against various set embedding-based approaches combined with classical machine learning methods. Specifically, we compared it with LR, k-SVM, MLP, and NS classifiers [32] applied to several set-to-vector embeddings: GeM (1, 2, 4) [13], COVpool [14, 15], and FSpool [16]. Fig. 4 shows the percentage test accuracy of these methods along with that of the proposed method. As shown in Fig. 4, the proposed method outperformed all of these models in terms of test accuracy.

VI Discussion

This paper presents a new method for classifying point sets using linear optimal transport (LOT) subspaces. Our method is appropriate for problems where the data at hand can be represented as instances of prototype template point set patterns observed under smooth, nonlinear, and one-to-one transformations. The results achieved in different experimental scenarios indicate that our proposed approach can deliver accuracy results comparable to state-of-the-art methods, provided that the data adheres to the generative model specified in equation (13). Additionally, the nearest LOT subspace technique was shown to be more data-efficient in these cases, meaning that it can attain higher accuracy levels using fewer training samples.

Our proposed method maintains high classification accuracy even under challenging out-of-distribution conditions, as depicted in Fig. 3, whereas the accuracy of the other methods declines sharply. These results suggest that our method provides a better overall representation of the underlying data distribution, resulting in robust classification performance. The key to its better accuracy under out-of-distribution conditions is that our method learns not only the deformations present in the data but also the underlying data model, including the types of deformations (such as translation, scaling, and shear) and their respective magnitudes. These deformation types can be learned from just a few training samples containing those deformations, and potentially also from the mathematically prescribed invariances proposed in [28].

Our proposed method, which uses the nearest subspace classifier in the LOT domain, is better suited to classification problems in the above category than general set embedding methods combined with classical machine learning classifiers, as demonstrated by its classification performance. Typically, point set data classes in their original domain do not form convex sets, and, as the results indicate, commonly used set-to-vector representation techniques do not produce effective embeddings for them. This presents a significant challenge for any machine learning approach. In contrast, the subspace model is appropriate in the LOT domain, since the LOT transform provides a linear embedding with convex data geometry. Moreover, using the subspace model in the LOT space enhances the generative nature of our classification method by implicitly including data points formed by convex combinations of the training data points.

VII Conclusions

In this paper, we propose an end-to-end classification system designed for a specific category of point set classification problems, where a data class can be considered as a collection of instances of a template pattern observed under a set of spatial deformations. If these deformations are appropriately modeled as a collection of smooth, one-to-one, and nonlinear transformations, then the data classes become easily separable in the transform space, specifically the LOT space, due to the properties outlined in the paper. These properties also enable the approximation of data classes as convex subspaces in the LOT space, resulting in a more suitable data model for the nearest subspace method. As we observed in our experiments, this approach yields high accuracy and robustness against out-of-distribution conditions. Many point set classification problems can be formulated in this way, and therefore, our proposed solution has wide applicability.

Finally, we note that there are many potential adaptations of the proposed method. For instance, the linear subspace method in the LOT space could be adjusted to incorporate alternative assumptions regarding the set that best represents each class. While some problems might benefit from a linear subspace method like the one described above, where all linear combinations are allowed, other problems may require constraining the model using convex hulls. Additionally, investigating the sliced-Wasserstein distance using the discrete CDT (as proposed in [31]) in conjunction with subspace models is another promising avenue for future research.

Our proposed approach provides promising results in point set classification and serves as a basis for further exploration in this domain. As the amount of 3D (or N-D) data continues to increase and accurate object recognition and scene understanding become more crucial, we believe that the combination of linear optimal transport embeddings and subspace modeling in the transform space will become increasingly significant in this context. We anticipate that our proposed method will inspire further research in this direction and lead to novel developments in recognizing 3D (or N-D) objects or distributions.

Acknowledgments

This work was supported in part by NIH grant GM130825, NSF grant 1759802, and CSBC grant U54-CA274499.

References

- [1] C. R. Qi, H. Su, K. Mo, and L. J. Guibas, “Pointnet: Deep learning on point sets for 3d classification and segmentation,” in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 652–660, 2017.

- [2] H. Zhao, L. Jiang, C.-W. Fu, and J. Jia, “Pointweb: Enhancing local neighborhood features for point cloud processing,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp. 5565–5573, 2019.

- [3] Y. Li, R. Bu, M. Sun, W. Wu, X. Di, and B. Chen, “Pointcnn: Convolution on x-transformed points,” Advances in neural information processing systems, vol. 31, 2018.

- [4] X. Chen, H. Ma, J. Wan, B. Li, and T. Xia, “Multi-view 3d object detection network for autonomous driving,” in Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, pp. 1907–1915, 2017.

- [5] Y. Zhou and O. Tuzel, “Voxelnet: End-to-end learning for point cloud based 3d object detection,” in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 4490–4499, 2018.

- [6] Y. Xu and U. Stilla, “Toward building and civil infrastructure reconstruction from point clouds: A review on data and key techniques,” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 14, pp. 2857–2885, 2021.

- [7] Q. Wang, Y. Tan, and Z. Mei, “Computational methods of acquisition and processing of 3d point cloud data for construction applications,” Archives of computational methods in engineering, vol. 27, pp. 479–499, 2020.

- [8] L. Zhou, Y. Du, and J. Wu, “3d shape generation and completion through point-voxel diffusion,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 5826–5835, 2021.

- [9] F. Pomerleau, F. Colas, R. Siegwart, et al., “A review of point cloud registration algorithms for mobile robotics,” Foundations and Trends® in Robotics, vol. 4, no. 1, pp. 1–104, 2015.

- [10] X. Wang, M. H. Ang Jr, and G. H. Lee, “Cascaded refinement network for point cloud completion,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 790–799, 2020.

- [11] J. Zeng, G. Cheung, M. Ng, J. Pang, and C. Yang, “3d point cloud denoising using graph laplacian regularization of a low dimensional manifold model,” IEEE Transactions on Image Processing, vol. 29, pp. 3474–3489, 2019.

- [12] Y. Lu, X. Liu, A. Soltoggio, and S. Kolouri, “Slosh: Set locality sensitive hashing via sliced-wasserstein embeddings,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 2566–2576, 2024.

- [13] F. Radenović, G. Tolias, and O. Chum, “Fine-tuning cnn image retrieval with no human annotation,” IEEE transactions on pattern analysis and machine intelligence, vol. 41, no. 7, pp. 1655–1668, 2018.

- [14] Q. Wang, J. Xie, W. Zuo, L. Zhang, and P. Li, “Deep cnns meet global covariance pooling: Better representation and generalization,” IEEE transactions on pattern analysis and machine intelligence, vol. 43, no. 8, pp. 2582–2597, 2020.

- [15] D. Acharya, Z. Huang, D. Pani Paudel, and L. Van Gool, “Covariance pooling for facial expression recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition workshops, pp. 367–374, 2018.

- [16] Y. Zhang, J. Hare, and A. Prügel-Bennett, “Fspool: Learning set representations with featurewise sort pooling,” arXiv preprint arXiv:1906.02795, 2019.

- [17] Y. Wang, Y. Sun, Z. Liu, S. E. Sarma, M. M. Bronstein, and J. M. Solomon, “Dynamic graph cnn for learning on point clouds,” ACM Transactions on Graphics (TOG), vol. 38, no. 5, pp. 1–12, 2019.

- [18] W. Liu, J. Sun, W. Li, T. Hu, and P. Wang, “Deep learning on point clouds and its application: A survey,” Sensors, vol. 19, no. 19, p. 4188, 2019.

- [19] F. Liao, M. Liang, Y. Dong, T. Pang, X. Hu, and J. Zhu, “Defense against adversarial attacks using high-level representation guided denoiser,” in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 1778–1787, 2018.

- [20] S. Basu, S. Kolouri, and G. K. Rohde, “Detecting and visualizing cell phenotype differences from microscopy images using transport-based morphometry,” Proceedings of the National Academy of Sciences, vol. 111, no. 9, pp. 3448–3453, 2014.

- [21] M. Shifat-E-Rabbi, X. Yin, A. H. M. Rubaiyat, S. Li, S. Kolouri, A. Aldroubi, J. M. Nichols, and G. K. Rohde, “Radon cumulative distribution transform subspace modeling for image classification,” Journal of Mathematical Imaging and Vision, vol. 63, pp. 1185–1203, 2021.

- [22] S. Kolouri, S. R. Park, M. Thorpe, D. Slepcev, and G. K. Rohde, “Optimal mass transport: Signal processing and machine-learning applications,” IEEE signal processing magazine, vol. 34, no. 4, pp. 43–59, 2017.

- [23] W. Wang, D. Slepčev, S. Basu, J. A. Ozolek, and G. K. Rohde, “A linear optimal transportation framework for quantifying and visualizing variations in sets of images,” International journal of computer vision, vol. 101, pp. 254–269, 2013.

- [24] Y. Brenier, “Polar factorization and monotone rearrangement of vector-valued functions,” Commun. Pure Appl. Math., vol. 44, no. 4, pp. 375–417, 1991.

- [25] C. Villani, Topics in Optimal Transportation. No. 58, American Mathematical Soc., 2003.

- [26] A. Aldroubi, S. Li, and G. K. Rohde, “Partitioning signal classes using transport transforms for data analysis and machine learning,” Sampl. Theory Signal Process. Data Anal., vol. 19, no. 6, 2021.

- [27] C. Moosmüller and A. Cloninger, “Linear optimal transport embedding: Provable wasserstein classification for certain rigid transformations and perturbations,” Information and Inference: A Journal of the IMA, vol. 12, no. 1, pp. 363–389, 2023.

- [28] M. Shifat-E-Rabbi, Y. Zhuang, S. Li, A. H. M. Rubaiyat, X. Yin, and G. K. Rohde, “Invariance encoding in sliced-wasserstein space for image classification with limited training data,” Pattern Recognition, vol. 137, p. 109268, 2023.

- [29] A. H. M. Rubaiyat, M. Shifat-E-Rabbi, Y. Zhuang, S. Li, and G. K. Rohde, “Nearest subspace search in the signed cumulative distribution transform space for 1d signal classification,” in ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 3508–3512, IEEE, 2022.

- [30] A. H. M. Rubaiyat, S. Li, X. Yin, M. Shifat-E-Rabbi, Y. Zhuang, and G. K. Rohde, “End-to-end signal classification in signed cumulative distribution transform space,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024.

- [31] Y. Zhuang, S. Li, M. Shifat-E-Rabbi, X. Yin, A. H. M. Rubaiyat, G. K. Rohde, et al., “Local sliced-wasserstein feature sets for illumination-invariant face recognition,” arXiv preprint arXiv:2202.10642, 2022.

- [32] F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, et al., “Scikit-learn: Machine learning in python,” the Journal of machine Learning research, vol. 12, pp. 2825–2830, 2011.

- [33] C. Garcia, “Point cloud mnist 2d,” 2020.

- [34] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, “Gradient-based learning applied to document recognition,” Proceedings of the IEEE, vol. 86, no. 11, pp. 2278–2324, 1998.

- [35] Z. Wu, S. Song, A. Khosla, F. Yu, L. Zhang, X. Tang, and J. Xiao, “3d shapenets: A deep representation for volumetric shapes,” in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 1912–1920, 2015.

- [36] A. X. Chang, T. Funkhouser, L. Guibas, P. Hanrahan, Q. Huang, Z. Li, S. Savarese, M. Savva, S. Song, H. Su, et al., “Shapenet: An information-rich 3d model repository,” arXiv preprint arXiv:1512.03012, 2015.