LIPE: Learning Personalized Identity Prior for Non-rigid Image Editing

Abstract

Although recent years have witnessed significant advancements in image editing thanks to the remarkable progress of text-to-image diffusion models, the problem of non-rigid image editing still presents its complexities and challenges. Existing methods often fail to achieve consistent results due to the absence of unique identity characteristics. Thus, learning a personalized identity prior might help with consistency in the edited results. In this paper, we explore a novel task: learning the personalized identity prior for text-based non-rigid image editing. To address the problems in jointly learning prior and editing the image, we present LIPE, a two-stage framework designed to customize the generative model utilizing a limited set of images of the same subject, and subsequently employ the model with learned prior for non-rigid image editing. Experimental results demonstrate the advantages of our approach in various editing scenarios over past related leading methods in qualitative and quantitative ways.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/fac861f3-b250-47c3-9a5a-736c721fa363/teaser.png)

1 Introduction

Non-rigid image editing, such as altering the subject’s posture, expression, or view angles, is a longstanding problem in computer vision and graphics [52, 30]. It meets a wide spectrum of requirements in our daily life: adjusting the subject (e.g., human, pets, everyday object) in the captured image before sharing it on social media platforms [55].

However, this is challenging due to the necessity of generating an outcome consistent with the original subject [9]. Given the redundancy of images for the same identity from our smartphone’s album and Internet, leveraging these photos could be highly beneficial in making an identity-preserved result in non-rigid image editing. Therefore, in this work, we target a novel problem setting: given a small set (3-5) of reference images from the same identity, can we learn a personalized identity prior that facilitates the non-rigid editing of a test image while maintaining the unique properties of the identity?

This problem has not been fully explored, and the closest works to ours are [9, 30, 22, 57], which adopt a general domain prior from the large-scale text-to-image (T2I) model for one image editing. These approaches underperform in our task for mainly two reasons. 1) Despite impressive results powered by the remarkable generative capabilities of the T2I model, they usually fail to faithfully preserve the identity’s characteristics in the input image due to the lack of personalized identity prior. 2) They mainly rely on the less controllable text prompts with inversion [73, 45, 26] for image editing, hence tend to modify the unwanted image regions. In addition, some recent works [51, 85] customize the personalized face prior with at least dozens of face images for portrait image editing on pretrained StyleGAN [16], requiring more reference images (100) and incapable of achieving non-rigid editing of more general subjects beyond faces.

A viable solution to this task involves integrating the personalizing generative prior method with the non-rigid image editing technique. However, previous personalizing generative priors methods excel in creating customized subjects but falter in generating subjects’ fine-grained actions that align with text prompts, which are not suitable for image editing task. Additionally, when modifying the target subject, directly using the generative model to edit images often alters other attributes—such as background, lighting, and appearance—which are intended to remain constant.

To address the aforementioned issues, this study introduces a novel, unified solution based on a pre-trained T2I model termed LIPE, standing for Learning personalized Identity Prior for non-rigid image Editing. For personalized identity prior learning, we identify that the critical element is utilizing the high-quality detailed text-image pairs to augment the model’s capacity for depicting fine-grained actions or views. To achieve this, we devise a pipeline that leverages the large language-and-vision assistant and large language model to augment existing data. For precisely controlling edited images, we find that introducing some masks referring to the target is beneficial. However, acquiring a mask covering the target subject before generating the final image is challenging. To overcome this, we utilize the property that attention maps can outline the general areas of the objects in the generated image in the diffusion model. Based on this, we propose an editing paradigm called Identity-aware mask blend (NIMA). NIMA automatically extracts an identity-aware mask and uses it to blend the latents of the target and source images during the denoising process, thereby allowing for more controlled non-rigid image editing.

To evaluate the effectiveness of our framework and facilitate further research, we introduce a new dataset specifically tailored to this novel task. This dataset encompasses a diverse range of classes, spanning from everyday objects (cups and toys), animals (cats and dogs) to human faces. For each class, there is one image for testing edits and several images for learning identity prior. This comprehensive dataset enables a thorough assessment of model quality. We conduct experimental comparisons between our proposed approach and related baselines. Experimental results demonstrate that our approach excels in preserving the original identity while achieving the desired edit effect.

We summarize our key contributions as follows: (1) We introduce a novel task: personalized identity prior for text-based non-rigid image editing; (2) We propose a novel solution, namely LIPE, short for Learning personalized Identity prior and Practicing non-rigid image Editing, to tackle the associated technical challenges effectively; (3) We establish a new dataset to facilitate a comprehensive assessment of related works, aiming to advance research progress within the community.

2 Method

Due to space limitations, please refer to the appendix for the related work section. Given a few reference images of the same identity, our purpose is to learn a personalized identity prior and apply it to edit the subject’s non-rigid properties (e.g., pose, viewpoint, expression) in the test image, guided by the text prompt while preserving the subject’s characteristics with high fidelity and consistency.

Our approach can be divided into two parts. First, we fine-tune the T2I model, SDXL [61] in implementation, to acquire the personalized identity prior using limited reference images like dreambooth [69] (Subsection 2.1). After that, the non-rigid image editing via identity-aware mask blend is executed on the test image (Subsection 2.2).

2.1 Personalized Identity Prior

In order to learn the personalized identity prior, many approaches [15, 69] proposed to fine-tune the pre-trained text-to-image (T2I) model , provided a limited number () of reference images. However, they relied on less detailed text-image pairs during fine-tuning, leading to a personalized identity prior suitable for subject-driven generation but less effective for the non-rigid image editing task. Although the fine-tuned model could generate images of the target subject, it struggles to generate the same subject engaged in a given specific action. This indicates that the capability of fine-tuned models to depict specific non-rigid properties needs improvement. Therefore, we aim to enhance the model’s capacity to comprehend the non-rigid properties of this identity and thus augment the model’s efficacy in the downstream non-rigid image editing task. Based on this, we have adopted a new pipeline for data augmentation, enhancing the quality of data from the ID dataset to the regularized dataset, as detailed below and shown in Fig. 2.

Editing-oriented data augmentation. Inspired by the recent progress [7] that fine-grained image captions are the key to generative models’ controllability, to enable more accurate text-to-image mapping ability for non-rigid image properties, we propose to construct more delicate editing-oriented text-image pairs to learn the personalized identity prior. Our paired data consists of two main datasets: the identity and regularization datasets.

The identity dataset is derived from the reference images and designed to learn the unique characteristics of the target identity. Motivated by the large multi-modal models [42, 83, 11], which is able to generate more flexible and customized captions than the traditional methods [38, 37, 25], we utilize the recent large language-and-vision assistant (LLaVa) [42] to generate the caption counterparts for the reference images. We have specifically designed a prompt for LLaVa in the hope that it can offer the caption we need, which includes accurate and comprehensive descriptions of the non-rigid properties of the subject, as well as other features of the image, illustrated in Fig. 2(a).

The regularization dataset is designed to address the image drift issue [69], where a diffusion model finetuned for a specific task tends to lose the general semantic knowledge and lack diversity. Different from the previous methods that solely relied on coarse prompts describing what the instance is, we produce finely detailed prompts delineating the non-rigid properties of the instance with the help of GPT-4 [1] and further utilize the recent large-scale T2I model, SDXL [61], to generate the corresponding images, as shown in Fig. 2(b). The resultant identity and regularization datasets both serve as the database to learn the identity prior. Please see the supplementary material for the details of prompt design for the large language model.

2.2 Non-rigid Image Editing via Identity-aware Mask Blend

Having learned the personalized identity prior, our objective shifts towards leveraging it for precise non-rigid image editing. To precisely control edited images, we leverage masks that represent the target objects before denoising the final outputs. To this end, we propose a non-rigid image editing pipeline that presciently extracts identity-aware masks before denoising the final output and blends the source and target information in the latent level for editing. The entire process is depicted in Fig 3. Initially, we invert the test image to acquire the inverted latents with the information of source prompt (which is obtained by a captioner in Subsection 2.1). Subsequently, we produce the target image from the inverted latent using the target prompt and utilize masks to guide the editing.

Identity-aware mask extraction. We aim to determine a precise mask for the target subject before generating the final target image. Inspired by the observation that cross-attention maps delineate areas corresponding to each semantic text token [22, 74], we consider the cross-attention maps for some text tokens related to identity (referred to as identity-aware object prompt ) as an approximate mask. To implement this, we add an extra identity-aware branch in the diffusion denoising process to calculate the identity-aware attention maps, as illustrated in Fig 4. To represent the identity, the identity-aware object prompt is often defined as a specific object, such as “penguin”, “bird”, or “cat” with the rare token . Thus in the cross-attention module of the model, apart from computing the attention map between the intended text prompt () and the image, the attention map between the identity-aware text prompt and the image is also computed to obtain a mask. It is worth noting that the identity-aware branch does not interfere with the original generation process.

To be specific, we first average the identity-aware attention maps across all layers and all previous steps to obtain a rough mask , formulated as:

| (1) |

where is the identity-aware attention map in layer and timestep .

Considering that self-attention maps reflect the layout and grouping regions [22, 75, 58, 47, 77], to refine the mask , we multiply it with averaged self-attention maps to acquire the final identity-aware mask .

| (2) |

where is the average self-attention maps across all layers and all previous steps and is the hyper-parameter controlling the extent of sharpening.

In NIMA, we obtain two types of identity-aware masks : source mask for the original test image and target mask for the target image. The source mask is obtained at the last step in the reconstruction process and the target mask is obtained during the denoising process dynamically. Target is updated at each denoising timestep .

Inverted latent blending. At each timestep , we utilize the two masks and inverted latents to control the generation process. First, mask is computed and serves as a mask guidance.

| (3) |

The union of two masks can prevent the phenomenon of overlapping two subjects in a single image.

Unlike previous editing methods [4, 3, 58], we blend the two latents generated by the inversion process instead, avoiding the inconsistent foregrounds and noticeable boundaries. For the area outside the mask, we maintain the test image’s inverted latent to preserve the original image’s background information.

| (4) |

3 Experiments

In this section, we first introduce the new dataset (LIPE) which is brought to assess different related methods (Subsection 3.1). Then, we display the comprehensive comparisons with previous related methods in both qualitative and quantitative ways (Subsection 3.2). Finally, we further analyze the role of each technical component from LIPE (Subsection 3.3). Please see the supplementary material for full implementation details and more experimental results.

3.1 Dataset

We present a novel dataset, LIPE, designed specifically for this task. LIPE comprises a total of 28 subjects, encompassing a diverse range from everyday objects and animals to human faces, facilitating the exploration of various non-rigid properties editing (e.g., poses, viewpoints, and expressions). Each instance within the LIPE dataset is mostly accompanied by 3-5 reference images for learning the identity prior, along with one image designated for testing edits. Please see the supplementary material for more details about this dataset.

3.2 Comparisons with Previous Works

We mainly make comparisons with several current leading related works specializing in non-rigid image editing tasks [9, 51, 30]. Moreover, we also design a baseline combining two leading approaches [9, 69] from identity prior learning and non-rigid image editing, denoted as DreamCtrl. DDPM inversion [26] is employed for all methods with inversion process.

We elaborate on all the related methods below:

-

MasaCtrl [9]. It performs the non-rigid image editing on the T2I model without any personalized identity prior. To better enhance the generation quality and ensure a fair comparison, source prompt is utilized in the inversion branch instead of null. This method justifies the necessity of adding personalized identity prior to the non-rigid image editing task.

-

DreamCtrl. Because personalized identity prior for text-based non-rigid image editing is a novel task, we design a baseline merging two leading methods in identity prior learning and non-rigid image editing, DreamBooth [69] and MasaCtrl [9]. Specifically, the T2I model is fine-tuned in the DreamBooth [69]’s way, and then image editing is performed on the fine-tuned model in the MasaCtrl [9]’s way. DreamCtrl shares the same regularized dataset with LIPE to enhance this method’s learning but has no editing-oriented captions.

-

MyStyle [51]. It learns a personalized identity prior from about 100 reference images and leverages it for human face image editing based on a pre-trained StyleGAN [29]. It relies on several pre-extracted semantic directions from typical StyleGAN [29] to modify the latent to achieve semantic editing. During comparison, we use directions that align closely with our target prompt’s meaning. To improve the reconstruction of the test image, we also follow the optional fine-tuning process on the test image. Moreover, because this method only focuses on the human face domain, all evaluations are considered only on human face results.

3.2.1 Qualitative Results on General Objects

The results on general objects are shown in Fig 5. Our method exhibits the flexible capacity for non-rigid transformation and high-quality preservation of background information, outperforming other approaches. Based on these outcomes, we claim that the personalized identity prior is essential for preserving the identity’s characteristics in non-rigid image editing, as the edited results of Imagic [30] and MasaCtrl [9] indicate. Compared to merely amalgamating the two existing methodologies—specifically, DreamCtrl—our approach significantly enhances the generation of specified actions while preserving the integrity of the background.

3.2.2 Qualitative Results on Human Faces

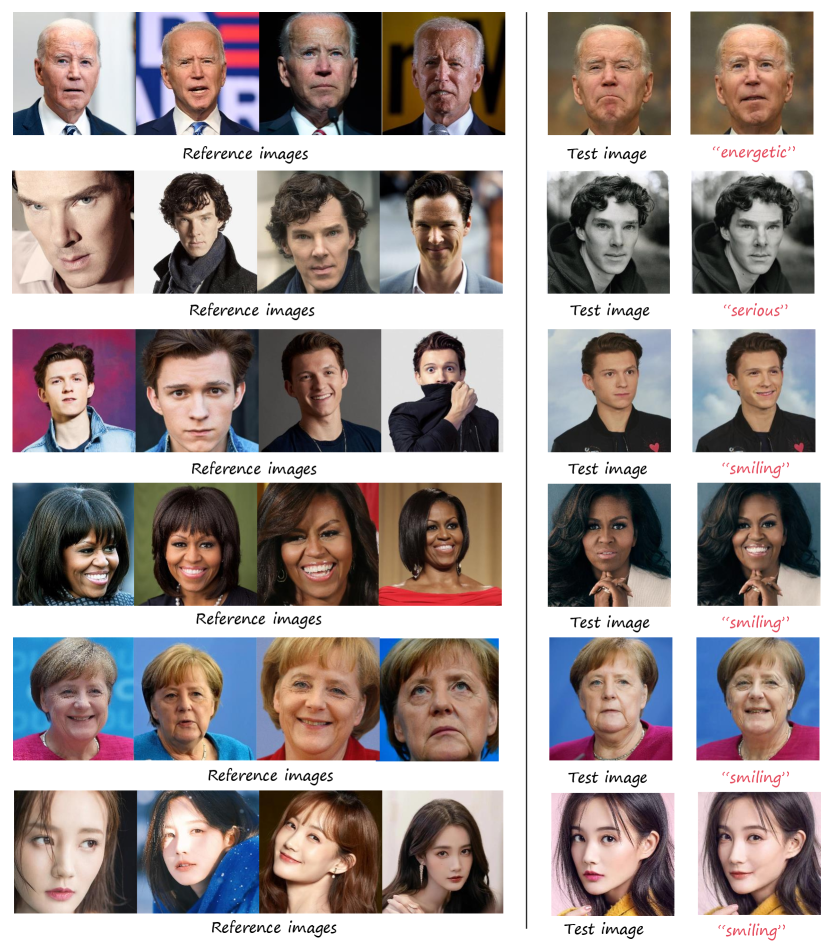

The results on human faces are shown in Fig 6. Editing human faces poses greater challenges compared to general subjects due to the complex and subtle nature of their unique characteristics. And the experimental results on human faces further corroborate the claim from our study on general subjects that only general prior is not enough for non-rigid editing. Moreover, our approach demonstrates better background preservation and efficiently outputs prompt-compliant images compared to DreamCtrl. MyStyle [51] struggles to perform effectively when trained on very limited data (less than 5 images, see 2,3,4 rows) and to preserve the information of the original image when trained on few images (less than 10 images, see 1,5 row).

| Identity | Background | Editing | Overall | |

|---|---|---|---|---|

| Imagic [30] | 0.4545 | 0.5908 | 0.1818 | 0.4090 |

| MasaCtrl [9] | 0.1785 | 0.4714 | 0.3964 | 0.3488 |

| DreamCtrl | 0.7535 | 0.6892 | 0.6464 | 0.6964 |

| MyStyle [51] | 0.7500 | 0.8100 | 0.4400 | 0.6666 |

| LIPE(Ours) | 0.9464 | 0.8857 | 0.7357 | 0.8559 |

| DINOv2-I | CLIP-I | CLIP-T | CLIP-D | |

|---|---|---|---|---|

| Imagic [30] | 0.6961 | 0.7356 | 0.2310 | 0.0138 |

| MasaCtrl [9] | 0.6027 | 0.7425 | 0.2628 | 0.0178 |

| DreamCtrl | 0.7804 | 0.8517 | 0.2304 | 0.0433 |

| MyStyle [51] | 0.8148 | 0.7371 | 0.1892 | 0.0251 |

| LIPE(Ours) | 0.8848 | 0.9247 | 0.2331 | 0.0807 |

3.2.3 User study

To evaluate the effectiveness of algorithms from the perspective of human aesthetics, we conducted a user study on the LIPE dataset. We engaged 30 users to evaluate the outcomes based on three key aspects: (1) Identity Consistency: how effectively the edited result retains the distinctive characteristics of the original identity. (2) Background Consistency: how completely the edited result maintains the background of the test image. (3) Editing Satisfaction: whether the required non-rigid transformation is successfully achieved. Users were asked to assign scores from choices in [0,0.5,1], where higher scores indicate better performance. Subsequently, the scores for each aspect were averaged across users for each method. The overall aspect score was then calculated as the mean of the scores for the three aspects. The results are reported in Table 1. Our method demonstrated significant advantages over other methods across all three aspects.

3.2.4 Quantitative Evaluation

We quantitatively evaluated our method with other methods, employing four metrics to assess identity preservation and text-image alignment. The DINOv2-I metric calculates the pairwise cosine similarity of test images and edited images in the space of DINOv2 [54] on average. Similarly, the CLIP-I metric computes the pairwise cosine similarity of two images in CLIP [62] space. These two metrics serve as indicators of identity preservation. CLIP-T, introduced by [69], evaluates text-image alignment by computing the pairwise cosine similarity between the edited images and the target prompts in the CLIP [62] space, which often measures the model’s performance for generation task. The CLIP text-image direction similarity metric (CLIP-D), introduced by [16], assesses the alignment of text changes with corresponding image alterations. It often serves as a metric for image editing tasks [48]. Results in Tab. 2 indicate LIPE achieves superior performance in identity preservation and exhibits strong alignment between text descriptions and image changes. MasaCtrl [9] displays higher CLIP-I scores, likely due to its tendency to generate the image aligning with the target prompt instead of editing the image.

3.3 Ablation Study

We conducted an ablation study to delineate the roles of detailed captions and masks in our method for non-rigid image editing. As shown in the Fig. 7, without detailed action-descriptive captions to train the model’s priors, it is challenging to make the subject perform specific actions. Moreover, the mask precisely helps control the consistency of the background before and after editing.

4 Conclusion

We have introduced a novel problem: learning a personalized identity prior and utilizing it for non-rigid image editing. To address this challenge, our method (LIPE) leverages a set of reference images to learn a personalized identity prior, which greatly benefits the editing task. Subsequently, non-rigid image editing is performed using identity-aware mask blending to achieve precise editing. Experiments show that our method outperforms previous methods in both qualitative and quantitative ways. We believe that our work opens up new possibilities in the realm of non-rigid image editing and serves as a catalyst for future research in this area.

References

- Achiam et al. [2023] Josh Achiam, Steven Adler, Sandhini Agarwal, Lama Ahmad, Ilge Akkaya, Florencia Leoni Aleman, Diogo Almeida, Janko Altenschmidt, Sam Altman, Shyamal Anadkat, et al. 2023. Gpt-4 technical report. arXiv preprint arXiv:2303.08774.

- Alaluf et al. [2022] Yuval Alaluf, Omer Tov, Ron Mokady, Rinon Gal, and Amit Bermano. 2022. Hyperstyle: Stylegan inversion with hypernetworks for real image editing. In CVPR, pages 18511–18521.

- Avrahami et al. [2023] Omri Avrahami, Ohad Fried, and Dani Lischinski. 2023. Blended latent diffusion. ACM Transactions on Graphics (TOG), 42(4):1–11.

- Avrahami et al. [2022] Omri Avrahami, Dani Lischinski, and Ohad Fried. 2022. Blended diffusion for text-driven editing of natural images. In ICCV, pages 18208–18218.

- Bar-Tal et al. [2022] Omer Bar-Tal, Dolev Ofri-Amar, Rafail Fridman, Yoni Kasten, and Tali Dekel. 2022. Text2live: Text-driven layered image and video editing. In ECCV, pages 707–723. Springer.

- Bau et al. [2020] David Bau, Hendrik Strobelt, William Peebles, Jonas Wulff, Bolei Zhou, Jun-Yan Zhu, and Antonio Torralba. 2020. Semantic photo manipulation with a generative image prior. arXiv preprint arXiv:2005.07727.

- Betker et al. [2023] James Betker, Gabriel Goh, Li Jing, Tim Brooks, Jianfeng Wang, Linjie Li, Long Ouyang, Juntang Zhuang, Joyce Lee, Yufei Guo, et al. 2023. Improving image generation with better captions. Computer Science. https://cdn. openai. com/papers/dall-e-3. pdf, 2(3):8.

- Brooks et al. [2023] Tim Brooks, Aleksander Holynski, and Alexei A Efros. 2023. Instructpix2pix: Learning to follow image editing instructions. In CVPR, pages 18392–18402.

- Cao et al. [2023] Mingdeng Cao, Xintao Wang, Zhongang Qi, Ying Shan, Xiaohu Qie, and Yinqiang Zheng. 2023. MasaCtrl: Tuning-Free Mutual Self-Attention Control for Consistent Image Synthesis and Editing. In ICCV.

- Chang et al. [2022] Huiwen Chang, Han Zhang, Lu Jiang, Ce Liu, and William T Freeman. 2022. Maskgit: Masked generative image transformer. In CVPR, pages 11315–11325.

- Chen et al. [2023] Lin Chen, Jisong Li, Xiaoyi Dong, Pan Zhang, Conghui He, Jiaqi Wang, Feng Zhao, and Dahua Lin. 2023. Sharegpt4v: Improving large multi-modal models with better captions. arXiv preprint arXiv:2311.12793.

- Couairon et al. [2022] Guillaume Couairon, Jakob Verbeek, Holger Schwenk, and Matthieu Cord. 2022. Diffedit: Diffusion-based semantic image editing with mask guidance. arXiv preprint arXiv:2210.11427.

- Dhariwal and Nichol [2021] Prafulla Dhariwal and Alexander Nichol. 2021. Diffusion models beat gans on image synthesis. NIPS, 34:8780–8794.

- Ding et al. [2021] Ming Ding, Zhuoyi Yang, Wenyi Hong, Wendi Zheng, Chang Zhou, Da Yin, Junyang Lin, Xu Zou, Zhou Shao, Hongxia Yang, et al. 2021. Cogview: Mastering text-to-image generation via transformers. NIPS, 34:19822–19835.

- Gal et al. [2022a] Rinon Gal, Yuval Alaluf, Yuval Atzmon, Or Patashnik, Amit H Bermano, Gal Chechik, and Daniel Cohen-Or. 2022a. An image is worth one word: Personalizing text-to-image generation using textual inversion. arXiv preprint arXiv:2208.01618.

- Gal et al. [2022b] Rinon Gal, Or Patashnik, Haggai Maron, Amit H Bermano, Gal Chechik, and Daniel Cohen-Or. 2022b. StyleGAN-NADA: CLIP-guided domain adaptation of image generators. ACM Transactions on Graphics (TOG), 41(4):1–13.

- Geng et al. [2023] Zigang Geng, Binxin Yang, Tiankai Hang, Chen Li, Shuyang Gu, Ting Zhang, Jianmin Bao, Zheng Zhang, Han Hu, Dong Chen, et al. 2023. Instructdiffusion: A generalist modeling interface for vision tasks. arXiv preprint arXiv:2309.03895.

- Goodfellow et al. [2014] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative adversarial nets. NIPS, 27.

- Gu et al. [2022] Shuyang Gu, Dong Chen, Jianmin Bao, Fang Wen, Bo Zhang, Dongdong Chen, Lu Yuan, and Baining Guo. 2022. Vector quantized diffusion model for text-to-image synthesis. In CVPR, pages 10696–10706.

- Gupta et al. [2023] Agrim Gupta, Lijun Yu, Kihyuk Sohn, Xiuye Gu, Meera Hahn, Li Fei-Fei, Irfan Essa, Lu Jiang, and José Lezama. 2023. Photorealistic video generation with diffusion models. arXiv preprint arXiv:2312.06662.

- Han et al. [2023] Ligong Han, Yinxiao Li, Han Zhang, Peyman Milanfar, Dimitris Metaxas, and Feng Yang. 2023. Svdiff: Compact parameter space for diffusion fine-tuning. arXiv preprint arXiv:2303.11305.

- Hertz et al. [2023] Amir Hertz, Ron Mokady, Jay Tenenbaum, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. 2023. Prompt-to-prompt image editing with cross attention control. In ICLR.

- Ho et al. [2020] Jonathan Ho, Ajay Jain, and Pieter Abbeel. 2020. Denoising diffusion probabilistic models. Advances in neural information processing systems, 33:6840–6851.

- Ho and Salimans [2022] Jonathan Ho and Tim Salimans. 2022. Classifier-free diffusion guidance. arXiv preprint arXiv:2207.12598.

- Hu et al. [2022] Xiaowei Hu, Zhe Gan, Jianfeng Wang, Zhengyuan Yang, Zicheng Liu, Yumao Lu, and Lijuan Wang. 2022. Scaling up vision-language pre-training for image captioning. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 17980–17989.

- Huberman-Spiegelglas et al. [2023] Inbar Huberman-Spiegelglas, Vladimir Kulikov, and Tomer Michaeli. 2023. An Edit Friendly DDPM Noise Space: Inversion and Manipulations. arXiv preprint arXiv:2304.06140.

- Jiang et al. [2022] Wei Jiang, Kwang Moo Yi, Golnoosh Samei, Oncel Tuzel, and Anurag Ranjan. 2022. Neuman: Neural human radiance field from a single video. In ECCV, pages 402–418. Springer.

- Ju et al. [2023] Xuan Ju, Ailing Zeng, Yuxuan Bian, Shaoteng Liu, and Qiang Xu. 2023. Direct inversion: Boosting diffusion-based editing with 3 lines of code. arXiv preprint arXiv:2310.01506.

- Karras et al. [2019] Tero Karras, Samuli Laine, and Timo Aila. 2019. A style-based generator architecture for generative adversarial networks. In CVPR, pages 4401–4410.

- Kawar et al. [2023] Bahjat Kawar, Shiran Zada, Oran Lang, Omer Tov, Huiwen Chang, Tali Dekel, Inbar Mosseri, and Michal Irani. 2023. Imagic: Text-based real image editing with diffusion models. In CVPR.

- Kim et al. [2022] Gwanghyun Kim, Taesung Kwon, and Jong Chul Ye. 2022. Diffusionclip: Text-guided diffusion models for robust image manipulation. In CVPR, pages 2426–2435.

- Kingma and Ba [2014] Diederik P Kingma and Jimmy Ba. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

- Kumari et al. [2023] Nupur Kumari, Bingliang Zhang, Richard Zhang, Eli Shechtman, and Jun-Yan Zhu. 2023. Multi-concept customization of text-to-image diffusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 1931–1941.

- Li et al. [2019] Bowen Li, Xiaojuan Qi, Thomas Lukasiewicz, and Philip Torr. 2019. Controllable text-to-image generation. NIPS, 32.

- Li et al. [2020] Bowen Li, Xiaojuan Qi, Thomas Lukasiewicz, and Philip HS Torr. 2020. Manigan: Text-guided image manipulation. In CVPR, pages 7880–7889.

- Li et al. [2024] Dongxu Li, Junnan Li, and Steven Hoi. 2024. Blip-diffusion: Pre-trained subject representation for controllable text-to-image generation and editing. NIPS, 36.

- Li et al. [2023a] Junnan Li, Dongxu Li, Silvio Savarese, and Steven Hoi. 2023a. Blip-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. arXiv preprint arXiv:2301.12597.

- Li et al. [2022a] Junnan Li, Dongxu Li, Caiming Xiong, and Steven Hoi. 2022a. Blip: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In ICML, pages 12888–12900. PMLR.

- Li et al. [2023b] Tianle Li, Max Ku, Cong Wei, and Wenhu Chen. 2023b. DreamEdit: Subject-driven Image Editing. arXiv preprint arXiv:2306.12624.

- Li et al. [2022b] Xiaoming Li, Shiguang Zhang, Shangchen Zhou, Lei Zhang, and Wangmeng Zuo. 2022b. Learning Dual Memory Dictionaries for Blind Face Restoration. TPAMI, 45(5):5904–5917.

- Li et al. [2023c] Zhen Li, Mingdeng Cao, Xintao Wang, Zhongang Qi, Ming-Ming Cheng, and Ying Shan. 2023c. Photomaker: Customizing realistic human photos via stacked id embedding. arXiv preprint arXiv:2312.04461.

- Liu et al. [2024] Haotian Liu, Chunyuan Li, Qingyang Wu, and Yong Jae Lee. 2024. Visual instruction tuning. NIPS, 36.

- Meng et al. [2021] Chenlin Meng, Yutong He, Yang Song, Jiaming Song, Jiajun Wu, Jun-Yan Zhu, and Stefano Ermon. 2021. Sdedit: Guided image synthesis and editing with stochastic differential equations. arXiv preprint arXiv:2108.01073.

- Miyake et al. [2023] Daiki Miyake, Akihiro Iohara, Yu Saito, and Toshiyuki Tanaka. 2023. Negative-prompt Inversion: Fast Image Inversion for Editing with Text-guided Diffusion Models. arXiv preprint arXiv:2305.16807.

- Mokady et al. [2023] Ron Mokady, Amir Hertz, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. 2023. Null-text inversion for editing real images using guided diffusion models. In CVPR, pages 6038–6047.

- Nam et al. [2018] Seonghyeon Nam, Yunji Kim, and Seon Joo Kim. 2018. Text-adaptive generative adversarial networks: manipulating images with natural language. NIPS, 31.

- Nguyen et al. [2024a] Quang Nguyen, Truong Vu, Anh Tran, and Khoi Nguyen. 2024a. Dataset diffusion: Diffusion-based synthetic data generation for pixel-level semantic segmentation. NIPS, 36.

- Nguyen et al. [2024b] Thao Nguyen, Yuheng Li, Utkarsh Ojha, and Yong Jae Lee. 2024b. Visual Instruction Inversion: Image Editing via Image Prompting. NIPS, 36.

- Nichol et al. [2021] Alex Nichol, Prafulla Dhariwal, Aditya Ramesh, Pranav Shyam, Pamela Mishkin, Bob McGrew, Ilya Sutskever, and Mark Chen. 2021. Glide: Towards photorealistic image generation and editing with text-guided diffusion models. arXiv preprint arXiv:2112.10741.

- Nichol and Dhariwal [2021] Alexander Quinn Nichol and Prafulla Dhariwal. 2021. Improved denoising diffusion probabilistic models. In ICML, pages 8162–8171. PMLR.

- Nitzan et al. [2022] Yotam Nitzan, Kfir Aberman, Qiurui He, Orly Liba, Michal Yarom, Yossi Gandelsman, Inbar Mosseri, Yael Pritch, and Daniel Cohen-Or. 2022. Mystyle: A personalized generative prior. ACM Transactions on Graphics (TOG), 41(6):1–10.

- Oh et al. [2001] Byong Mok Oh, Max Chen, Julie Dorsey, and Frédo Durand. 2001. Image-based modeling and photo editing. In Proceedings of the 28th annual conference on Computer graphics and interactive techniques, pages 433–442.

- OpenAI [2024] OpenAI. 2024. Video Generation Models as World Simulators. https://openai.com/research/video-generation-models-as-world-simulators.

- Oquab et al. [2023] Maxime Oquab, Timothée Darcet, Théo Moutakanni, Huy Vo, Marc Szafraniec, Vasil Khalidov, Pierre Fernandez, Daniel Haziza, Francisco Massa, Alaaeldin El-Nouby, et al. 2023. Dinov2: Learning robust visual features without supervision. arXiv preprint arXiv:2304.07193.

- Pan et al. [2023] Xingang Pan, Ayush Tewari, Thomas Leimkühler, Lingjie Liu, Abhimitra Meka, and Christian Theobalt. 2023. Drag your gan: Interactive point-based manipulation on the generative image manifold. In ACM SIGGRAPH 2023, pages 1–11.

- Pan et al. [2021] Xingang Pan, Xiaohang Zhan, Bo Dai, Dahua Lin, Chen Change Loy, and Ping Luo. 2021. Exploiting deep generative prior for versatile image restoration and manipulation. TPAMI, 44(11):7474–7489.

- Parmar et al. [2023] Gaurav Parmar, Krishna Kumar Singh, Richard Zhang, Yijun Li, Jingwan Lu, and Jun-Yan Zhu. 2023. Zero-shot image-to-image translation. In SIGGRAPH 2023, pages 1–11.

- Patashnik et al. [2023] Or Patashnik, Daniel Garibi, Idan Azuri, Hadar Averbuch-Elor, and Daniel Cohen-Or. 2023. Localizing Object-level Shape Variations with Text-to-Image Diffusion Models. arXiv preprint arXiv:2303.11306.

- Patashnik et al. [2021] Or Patashnik, Zongze Wu, Eli Shechtman, Daniel Cohen-Or, and Dani Lischinski. 2021. Styleclip: Text-driven manipulation of stylegan imagery. In ICCV, pages 2085–2094.

- Peebles and Xie [2023] William Peebles and Saining Xie. 2023. Scalable diffusion models with transformers. In ICCV, pages 4195–4205.

- Podell et al. [2023] Dustin Podell, Zion English, Kyle Lacey, Andreas Blattmann, Tim Dockhorn, Jonas Müller, Joe Penna, and Robin Rombach. 2023. Sdxl: Improving latent diffusion models for high-resolution image synthesis. arXiv preprint arXiv:2307.01952.

- Radford et al. [2021] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. 2021. Learning transferable visual models from natural language supervision. In ICML, pages 8748–8763. PMLR.

- Ramesh et al. [2022] Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. 2022. Hierarchical text-conditional image generation with clip latents. arXiv preprint arXiv:2204.06125, 1(2):3.

- Ramesh et al. [2021] Aditya Ramesh, Mikhail Pavlov, Gabriel Goh, Scott Gray, Chelsea Voss, Alec Radford, Mark Chen, and Ilya Sutskever. 2021. Zero-shot text-to-image generation. In ICML, pages 8821–8831. PMLR.

- Reed et al. [2016] Scott Reed, Zeynep Akata, Xinchen Yan, Lajanugen Logeswaran, Bernt Schiele, and Honglak Lee. 2016. Generative adversarial text to image synthesis. In ICML, pages 1060–1069. PMLR.

- Roich et al. [2022] Daniel Roich, Ron Mokady, Amit H Bermano, and Daniel Cohen-Or. 2022. Pivotal tuning for latent-based editing of real images. TOG, 42(1):1–13.

- Rombach et al. [2022] Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. 2022. High-resolution image synthesis with latent diffusion models. In CVPR, pages 10684–10695.

- Ronneberger et al. [2015] Olaf Ronneberger, Philipp Fischer, and Thomas Brox. 2015. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III 18, pages 234–241. Springer.

- Ruiz et al. [2023] Nataniel Ruiz, Yuanzhen Li, Varun Jampani, Yael Pritch, Michael Rubinstein, and Kfir Aberman. 2023. Dreambooth: Fine tuning text-to-image diffusion models for subject-driven generation. In CVPR, pages 22500–22510.

- Saharia et al. [2022] Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily L Denton, Kamyar Ghasemipour, Raphael Gontijo Lopes, Burcu Karagol Ayan, Tim Salimans, et al. 2022. Photorealistic text-to-image diffusion models with deep language understanding. NIPS, 35:36479–36494.

- Shen et al. [2020] Yujun Shen, Jinjin Gu, Xiaoou Tang, and Bolei Zhou. 2020. Interpreting the latent space of gans for semantic face editing. In CVPR, pages 9243–9252.

- Shen and Zhou [2021] Yujun Shen and Bolei Zhou. 2021. Closed-form factorization of latent semantics in gans. In CVPR, pages 1532–1540.

- Song et al. [2020] Jiaming Song, Chenlin Meng, and Stefano Ermon. 2020. Denoising diffusion implicit models. arXiv preprint arXiv:2010.02502.

- Tang et al. [2022] Raphael Tang, Linqing Liu, Akshat Pandey, Zhiying Jiang, Gefei Yang, Karun Kumar, Pontus Stenetorp, Jimmy Lin, and Ferhan Ture. 2022. What the daam: Interpreting stable diffusion using cross attention. arXiv preprint arXiv:2210.04885.

- Tumanyan et al. [2023] Narek Tumanyan, Michal Geyer, Shai Bagon, and Tali Dekel. 2023. Plug-and-play diffusion features for text-driven image-to-image translation. In CVPR, pages 1921–1930.

- Vaswani et al. [2017] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. NIPS, 30.

- Wang et al. [2023] Jinglong Wang, Xiawei Li, Jing Zhang, Qingyuan Xu, Qin Zhou, Qian Yu, Lu Sheng, and Dong Xu. 2023. Diffusion model is secretly a training-free open vocabulary semantic segmenter. arXiv preprint arXiv:2309.02773.

- Wang et al. [2024] Qixun Wang, Xu Bai, Haofan Wang, Zekui Qin, and Anthony Chen. 2024. InstantID: Zero-shot Identity-Preserving Generation in Seconds. arXiv preprint arXiv:2401.07519.

- Wu et al. [2022] Chenfei Wu, Jian Liang, Lei Ji, Fan Yang, Yuejian Fang, Daxin Jiang, and Nan Duan. 2022. Nüwa: Visual synthesis pre-training for neural visual world creation. In ECCV, pages 720–736. Springer.

- Xie et al. [2023] Shaoan Xie, Yang Zhao, Zhisheng Xiao, Kelvin CK Chan, Yandong Li, Yanwu Xu, Kun Zhang, and Tingbo Hou. 2023. DreamInpainter: Text-Guided Subject-Driven Image Inpainting with Diffusion Models. arXiv preprint arXiv:2312.03771.

- Xu et al. [2018] Tao Xu, Pengchuan Zhang, Qiuyuan Huang, Han Zhang, Zhe Gan, Xiaolei Huang, and Xiaodong He. 2018. Attngan: Fine-grained text to image generation with attentional generative adversarial networks. In CVPR, pages 1316–1324.

- Ye et al. [2023a] Hu Ye, Jun Zhang, Sibo Liu, Xiao Han, and Wei Yang. 2023a. Ip-adapter: Text compatible image prompt adapter for text-to-image diffusion models. arXiv preprint arXiv:2308.06721.

- Ye et al. [2023b] Qinghao Ye, Haiyang Xu, Guohai Xu, Jiabo Ye, Ming Yan, Yiyang Zhou, Junyang Wang, Anwen Hu, Pengcheng Shi, Yaya Shi, et al. 2023b. mplug-owl: Modularization empowers large language models with multimodality. arXiv preprint arXiv:2304.14178.

- Yu et al. [2022] Jiahui Yu, Yuanzhong Xu, Jing Yu Koh, Thang Luong, Gunjan Baid, Zirui Wang, Vijay Vasudevan, Alexander Ku, Yinfei Yang, Burcu Karagol Ayan, et al. 2022. Scaling autoregressive models for content-rich text-to-image generation. arXiv preprint arXiv:2206.10789, 2(3):5.

- Zeng et al. [2023] Libing Zeng, Lele Chen, Yi Xu, and Nima Khademi Kalantari. 2023. MyStyle++: A Controllable Personalized Generative Prior. In SIGGRAPH Asia 2023, pages 1–11.

- Zhang et al. [2023a] Chenshuang Zhang, Chaoning Zhang, Mengchun Zhang, and In So Kweon. 2023a. Text-to-image diffusion model in generative ai: A survey. arXiv preprint arXiv:2303.07909.

- Zhang et al. [2017] Han Zhang, Tao Xu, Hongsheng Li, Shaoting Zhang, Xiaogang Wang, Xiaolei Huang, and Dimitris N Metaxas. 2017. Stackgan: Text to photo-realistic image synthesis with stacked generative adversarial networks. In ICCV, pages 5907–5915.

- Zhang et al. [2023b] Zhixing Zhang, Ligong Han, Arnab Ghosh, Dimitris N Metaxas, and Jian Ren. 2023b. Sine: Single image editing with text-to-image diffusion models. In CVPR, pages 6027–6037.

Appendix A Appendix / supplemental material

We start with section B to introduce recent related research. Subsequently, section C shows background about the diffusion model and inversion technique. Next, in Section D and Section E, we show the details about our method and experiments, respectively. And section F provides additional examples, demonstrating our method across diverse subjects and backgrounds. Finally, section G discusses the limitations of our method.

Appendix B Related Work

B.1 Text-to-image generation

During the early stages, text-to-image models primarily relied on generative adversarial networks (GANs) with a generator-discriminator structure, trained on small-scale datasets [18, 65, 87, 81, 34]. Subsequently, auto-regressive methods were explored on large-scale data [64, 14, 79, 84]. However, these methods faced challenges of high computation costs and sequential error accumulation [86]. Recently, diffusion models [23, 73] have gradually become prominent in this field, leveraging large-scale models on large-scale data [67, 49, 63, 70, 19]. These models denoise images by modeling images from Gaussian distributions through a Markov-chain process and exhibit significant capabilities in generating photorealistic images. Presently, several works [60, 20, 53] employ the transformer architecture [76] as the backbone of the diffusion model instead of U-Net [68], aiming to scale it and produce high-quality images or videos.

B.2 Text-guided image editing

Before diffusion models emerged as the predominant approach in image generation tasks, numerous methods were devised to manipulate images within the latent space of Generative Adversarial Netwofrks (GANs) [2, 59, 71, 72, 46, 35, 59, 88]. They utilize the decoupling properties of features in the StyleGAN’s latent space to discover some editing directions. Apart from GAN-based methods, certain techniques employ other deep learning-based systems for image editing [5, 10].

More recently, with the popularity of diffusion models, various approaches have been devised to utilize diffusion models for image editing [8, 31, 49, 17, 43, 4, 12, 22, 75, 9, 57]. Some methods focus on general type of edits [22, 8, 12], while others specialize in image style transfer [75, 88], inpainting [4, 80], or object replacement [57]. Presently, the non-rigid edits become a noteworthy direction [9, 30]. Imagic [30] optimizes the text embedding and model’s weight to hold the original image’s information. Masactrl [9] copies the key and value features from the original generation process to keep the color and texture information of the original subject in a training-free way. However, it is hard for these approaches to achieve non-rigid edits while holding the original subject since they only use the general prior without specific identity information. Therefore, in this work, we consider adding the personalized identity prior to preserve the key features of the target subject from the test image.

B.3 Personalized Generative Prior

The problem of learning a personalized prior with the pre-trained generative model was first researched on GANs [2, 66, 56, 6]. Based on pivotal tuning [66], MyStyle [51] learns the domain prior trained on the large-scale face dataset (FFHQ [29]) and the identity prior on approximately 100 face images for portrait image editing.

Different from MyStyle [51], our work focuses on general subject editing and only requires 3-5 images to learn the personalized identity prior.

In recent years, many methods have tried to personalize the text-to-image diffusion models by fine-tuning text embedding [15], full weights[69], a subset of cross-attention layers [33] or singular values [21]. Other works incorporate extra adapters to receive a reference image and generate the image with the same subject [82, 36, 41, 78]. Based on dreambooth [69], DreamEdit [39] first learns a personalized identity prior and then employs it to do editing. However, it only handles rigid image editing tasks, such as subject replacement and subject addition. To the best of our knowledge, ours is the first paradigm to apply the personalized identity prior to a non-rigid image editing task for general subjects, based on the state-of-the-art T2I model.

Appendix C Background

C.1 Diffusion model

Diffusion models [13, 23, 67, 50, 73] are probabilistic generative models that learn data distributions by defining a Markov chain of diffusion steps and reversing them. During the diffusion forward process, Gaussian noise is added to an image sample , resulting in a noisy sample at time step , mathematically expressed as:

| (5) |

where is the predefined noise adding schedule and . The diffusion models then reverse this process by denoising from the next step iteratively to recover the original image sample . This denoising process is modelled as follows:

| (6) | ||||

| (7) |

where mean can be solved by neural network and is only determined by timestep . The neural network is typically trained using squared error loss to predict the added noise:

| (8) |

C.2 Inversion

Inversion is a technique to obtain the initial noise that can generate the given image through the forward pass in diffusion models. It is an essential component for real-world image editing, which stems from the DDIM sampling [73].

| (9) |

This formulation can be seen as a neural ODE [73] and a determistic precedure. Therefore, within enough discretization steps, we can reverse the generation process, which is called DDIM Inversion or DDIM encoding.

| (10) |

Appendix D Method details

D.1 Prompt design in data augmentation

D.1.1 Prompt for LLaVA

Our object is to make the captions include accurate and comprehensive descriptions of the non-rigid properties of the subject. In addition, incorporating several descriptive captions unrelated to the subject helps prevent the model from over-fitting to these irrelevant "backgrounds." The prompt we designed is shown in Tab. 3.

| Enhance your image captioning skills by providing detailed captions. Structure your response to include: Perspective (e.g., headshot, medium-shot), Art Style (e.g., digital artwork, Ghibli-inspired screencap, photo), subject’s view(facing to the camera), Main Subject (e.g., a person, an object, a woman), Action (if any, running), Attire Details, Hair Description, and Setting. Aim for concise yet descriptive captions, ideally under 70 words. For example: ’Close-up, Ghibli-style digital art, a young lady, gazing thoughtfully, adorned in a vintage dress, her hair styled in a loose bun, amidst a whimsical forest backdrop.’ Now tell me the caption of this image. |

D.1.2 Prompt for GPT-4

We aim to leverage GPT-4 [1] to generate finely detailed "captions" delineating the non-rigid properties of the instance. We design two user prompts to guide GPT-4 to produce the "captions" with non-rigid details and other related attributes of images, which are shown in Tab.4 and Tab.5, respectively. Both of two prompts are utilized in our method.

| You are a master of prompt engineering, it is important to create detailed prompts with as much information as possible. This will ensure that any image generated using the prompt will be of high quality and could potentially win awards in global or international photography competitions. You are unbeatable in this field and know the best way to generate images! I will provide you with a keyword, and you will generate 20 different types of prompts with this keyword in sentence format. Your generated prompts should be realistic and complete. Your prompts should consist of the style, subject, action, background and quality of an image. Remember all the prompts must include the keyword. Please note that all prompts must contain the word "keyword". For example, "an eagle" is not allowed if I give you the keyword "bird". My first keyword is ’boy’. |

| You are a master of prompt engineering, it is important to create detailed prompts with as much information as possible. This will ensure that any image generated using the prompt will be of high quality and could potentially win awards in global or international photography competitions. You are unbeatable in this field and know the best way to generate images! I will provide you with a keyword, and you will generate 50 different types of prompts using this keyword. Your generated prompts should be realistic and complete. Your prompts should consist of the style, subject, action, background, and quality of an image. Here are two good examples of prompts: "Urban portrait of a skateboarder in mid-jump, graffiti walls background, high shutter speed.". "Art Nouveau painting of a female botanist surrounded by exotic plants in a greenhouse." Here are some requirements that you must obey: 1. The prompts mustn’t describe any situation that doesn’t exist. For example, "dog wearing sunglasses and a bandana." doesn’t satisfy the requirement. 2. The prompt must include the keyword. For example, "a retriever" doesn’t satisfy the requirements if I give you the keyword "dog". 3. Only one subject is included in every prompt. For example,"a dog and a cat" doesn’t satisfy the requirements. My first keyword is ‘dog‘. |

Appendix E Experiment details

E.1 Dataset

We show the contents of our dataset LIPE in Fig. 8, consisting of a wide variety of categories, including backpacks, toys, pets, wild animals, and humans. These images were collected from other datasets [69, 33, 40, 27], online sources with Creative Commons licenses, and data taken by authors. We will make our dataset publicly available in the future. All experiments can be done on a single A100.

E.2 Baselines

The implementation details for each comparing method are included in this section. Apart from MyStyle [51], the version of the diffusion model used in the editing methods is the state-of-the-art SDXL-base-1.0 [61] and the inversion method is DDPM inversion [26] with 100 timesteps. All images will be resized to before training and inference to match the resolution of SDXL [61].

E.2.1 MasaCtrl [9]

We use the official open-source code and follow the default hyperparameters to generate the edited results. In some cases, we modify the default hyperparameters if they can’t produce reasonable results. The null text is replaced by the source prompt to improve the inversion quality.

E.2.2 Imagic [30]

Since there is no official open source code, we use the community version of diffusers for this algorithm. The steps for tuning the textual embedding and model are both 500. The learning rates are 0.001 and 5e-4, respectively. The interpolation parameter alpha is set to 1.

E.2.3 Dreamctrl

When tuning from the pre-trained stable diffusion model, we employ AdamW [32] with learning rates of 5e-6 for the text encoder and U-Net. In order to avoid OOM (Out Of Memory in Cuda), we have utilized the Xformers framework. The total fine-tuning epochs are 1000.

E.2.4 MyStyle [51]

We follow the preprocessing from MyStyle [51] steps to filter, align, and crop the images because we have found that not doing so would harm the generation efficacy of the model. Furthermore, we found that fine-tuning the model on the test images is necessary to reconstruct the test image better. We also followed the fine-tuning process on the test images as well. When applying the MyStyle method utilizing the human face domain StyleGAN pre-trained on the FFHQ dataset, we employ the 5e-2 and 3e-3 learning rates for projection and fine-tuning, respectively. Other than that, all the parameters are inherited from the official open-source code as the default parameters.

E.2.5 LIPE

When tuning from the pre-trained stable diffusion model, we also employ AdamW [32] for updating the text encoder and UNet simultaneously. The learning rates for both of them are 5e-4. And the total fine-tuning epochs are also 1000. To save GPU memory and prevent overfitting, we only optimize the attention layers of the model.

E.3 Evaluation details

We will elaborate our evaluation metrics on the experiments part in this section.

E.3.1 DINOv2-I

This is the average of the cosine similarities between the source image’s DINO embedding and the edited image’s DINO embedding. The version of the used DINO is "dinov2-base".

E.3.2 CLIP-I

This is the average of the cosine similarities between the source image’s CLIP embedding and the edited image’s CLIP embedding. The version of the used CLIP in this and the following metrics is "clip-vit-large-patch14".

E.3.3 CLIP-T

| (11) |

where and are CLIP’s image and text encoders, respectively. And , are the target domain text and image, respectively. This metric is used to represent the model’s performance in the image generation task.

E.3.4 CLIP-D

| (12) |

where . And , are the source domain text and image, respectively. This metric measures the alignment between the direction in two images and the direction in two texts.

Appendix F Additional results

We conduct experiments on more data and present supplementary results on human faces in Fig. 9 and animal examples in Fig. 10. These illustrations reinforce the effectiveness of our editing technique. Our approach achieves controlled, flexible, and faithful editing outcomes. From the results on human faces (see Fig. 9), it becomes evident that the editing outcomes are indeed correlated with personalized identity priors, which inherently encompass several non-rigid properties. Furthermore, our method demonstrates flexibility in achieving rich editing of non-rigid properties on animal subjects, as shown in Fig. 10.

Appendix G Limitations and Discussion

Our method sometimes generates results with mismatched foreground and background, as illustrated in Fig 11. This is because editing the subject’s actions occasionally produces closer-up perspectives, and our method’s strict control over the background can lead to a mismatch between the foreground and background perspectives. This failure case may be caused by the reference images containing closer-up perspectives of dogs, while the test image features dogs in distant perspectives.