Local Hyper-Flow Diffusion

Abstract

Recently, hypergraphs have attracted a lot of attention due to their ability to capture complex relations among entities. The surge in the use of hypergraphs has resulted in data of increasing size and complexity that exhibit interesting small-scale and local structure, e.g., small-scale communities and localized node-ranking around a given set of seed nodes. Popular and principled ways to capture the local structure are the local hypergraph clustering problem and the related seed set expansion problem. In this work, we propose the first local diffusion method that achieves an edge-size-independent Cheeger-type guarantee for the problem of local hypergraph clustering while applying to a rich class of higher-order relations that covers many previously studied special cases. Our method is based on a primal-dual optimization formulation where the primal problem has a natural network flow interpretation, and the dual problem has a cut-based interpretation using the $\ell_2$-norm penalty on associated cut-costs. We demonstrate that the new technique is significantly better than state-of-the-art methods on both synthetic and real-world data.

1 Introduction

Hypergraphs [8] generalize graphs by allowing a hyperedge to consist of multiple nodes that capture higher-order relationships in complex systems and datasets [36]. Hypergraphs have been used for music recommendation on Last.fm data [10], news recommendation [29], sets of product reviews on Amazon [37], and sets of co-purchased products at Walmart [1]. Beyond the internet, hypergraphs are used for analyzing higher-order structure in neuronal, air-traffic and food networks [6, 30].

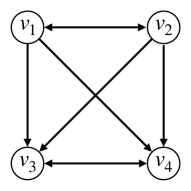

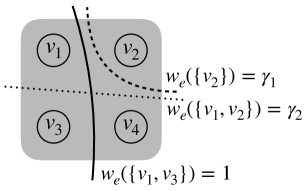

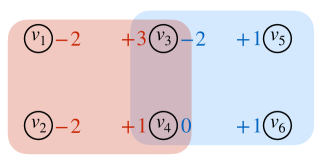

In order to explore and understand higher-order relationships in hypergraphs, recent work has made use of cut-cost functions that are defined by associating each hyperedge with a specific set function. These functions assign specific penalties for separating the nodes within individual hyperedges. They generalize the notion of hypergraph cuts and are crucial for determining small-scale community structure [30, 33]. In order of increasing capability to model complex multiway relationships, the most popular cut-cost functions are the unit cut-cost [28, 27, 23], the cardinality-based cut-cost [43, 44] and the general submodular cut-cost [31, 47]. An illustration of a hyperedge and the associated cut-cost function is given in Figure 1. In the simplest setting, where all cut-cost functions take value either 0 or 1 (a special case of the cut-cost in Figure 1(b)), we obtain a unit cut-cost hypergraph. In a slightly more general setting, where the cut-costs are determined solely by the number of nodes on either side of the hyperedge cut (another special case in Figure 1(b)), we obtain a cardinality-based hypergraph. We refer to hypergraphs associated with arbitrary submodular cut-cost functions (e.g., the general setting of Figure 1(b)) as general submodular hypergraphs.

Hypergraphs that arise from data science applications contain interesting small-scale local structure such as local communities [33, 42]. Exploiting this structure is central to the above-mentioned applications on hypergraphs and to related applications in machine learning and applied mathematics [7]. We consider local hypergraph clustering as the task of finding a community-like cluster around a given set of seed nodes, where nodes in the cluster are densely connected to each other while relatively isolated from the rest of the hypergraph. One of the most powerful primitives for the local hypergraph clustering task is graph diffusion. Diffusion on a graph is the process of spreading a given initial mass from some seed node(s) to neighbor nodes using the edges of the graph. Graph diffusions have been successfully employed in industry; for example, both Pinterest and Twitter use diffusion methods for their recommendation systems [16, 17, 22], and Google uses diffusion methods to perform clustering query refinements [40]. Another prominent example is PageRank [9, 39], Google's model for its search engine.

Empirical and theoretical performance of local diffusion methods is often measured on the problem of local hypergraph clustering [45, 20, 33]. Existing local diffusion methods only directly apply to hypergraphs with the unit cut-cost [26, 42]. For the slightly more general cardinality-based cut-cost, they rely on graph reduction techniques which result in a rather pessimistic edge-size-dependent approximation error [46, 26, 33, 43]. Moreover, none of the existing methods is capable of processing general submodular cut-costs. In this work, we are interested in designing a diffusion framework that (i) achieves stronger theoretical guarantees for the problem of local hypergraph clustering, (ii) is flexible enough to work with general submodular hypergraphs, and (iii) permits computationally efficient algorithms. We propose the first local diffusion method that simultaneously accomplishes these goals.

In what follows we describe our main contributions and previous work. In Section 2 we provide preliminaries and notation. In Section 3 we introduce our diffusion model from a combinatorial flow perspective. In Section 4 we discuss the local hypergraph clustering problem and a Cheeger-type quadratic approximation error. In Section 5 we present an alternating minimization algorithm for solving the diffusion problem. In Section 6 we perform experiments using both synthetic and real datasets.

1.1 Our main contributions

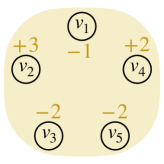

In this work we propose a generic local diffusion model that applies to hypergraphs characterized by a rich class of cut-cost functions that covers many previously studied special cases, e.g., unit, cardinality-based and submodular cut-costs. We provide the first edge-size-independent Cheeger-type approximation error for the problem of local hypergraph clustering using any of these cut-costs. In particular, assume that there exists a cluster $C$ with conductance $\Phi(C)$, and assume that we are given a set of seed nodes that reasonably overlaps with $C$; then the proposed diffusion model can be used to find a cluster with conductance at most $O(\sqrt{\Phi(C)})$ (in the appendix we show that an $\ell_p$-norm version of the proposed model can achieve a constant approximation error asymptotically). Our hypergraph diffusion model is formulated as a convex optimization problem. It has a natural combinatorial flow interpretation that generalizes the notion of network flows over hyperedges. We show that the optimization problem can be solved efficiently by an alternating minimization method. In addition, we prove that the number of nonzero nodes in the optimal solution is independent of the size of the hypergraph and depends only on the size of the initial mass. This key property ensures that our algorithm scales well in practice to large datasets. We evaluate our method using both synthetic and real-world data. We show that our method improves accuracy significantly for hypergraphs with unit, cardinality-based and general submodular cut-costs for local clustering.

1.2 Previous work

Recently, clustering methods on hypergraphs have received renewed interest. Different methods require different assumptions about the hyperedge cut-cost, which can be roughly categorized into unit cut-cost, cardinality-based (and submodular) cut-cost and general submodular cut-cost. Moreover, existing methods can be either global, where the output is not localized around a given set of seed nodes, or local, where the output is a small cluster around a set of seed nodes. Local algorithms, which are our main focus, are the only ones that scale to large hypergraphs. Many works propose global methods, and thus they are not scalable to large hypergraphs [49, 3, 25, 34, 6, 48, 11, 30, 31, 12, 47, 32, 13, 24, 42]. Local diffusion-based methods are more relevant to our work [26, 33, 43]. In particular, iterative hypergraph min-cut methods can be adopted for the local hypergraph clustering problem [43]. However, these methods require, both in theory and in practice, a large seed set, i.e., they are not expansive and thus cannot work with a single seed node. The expansive combinatorial diffusion [45] has been generalized to hypergraphs [26], and it can detect a target cluster using only one seed node. However, combinatorial methods are strongly biased towards low-conductance clusters rather than towards the target cluster [18]. The most relevant paper to our work is [33]. However, the methods proposed in [33] depend on a reduction from hypergraphs to directed graphs. This results in an approximation error for clustering that is proportional to the size of hyperedges and causes performance degradation when the hyperedges are large. In fact, none of the above approaches (including global and local ones) has an edge-size-independent approximation error bound even for simple cardinality-based hypergraphs. Moreover, existing local approaches do not work for general submodular hypergraphs.

2 Preliminaries and Notations

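Submodular function. Given a set $S$, we denote by $2^S$ the power set of $S$ and by $|S|$ the cardinality of $S$. A submodular function $F: 2^S \to \mathbb{R}$ is a set function such that $F(A) + F(B) \geq F(A \cup B) + F(A \cap B)$ for any $A, B \subseteq S$.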

Submodular hypergraph. A hypergraph $H = (V, E)$ is defined by a set of nodes $V$ and a set of hyperedges $E \subseteq 2^V$, i.e., each hyperedge $e \in E$ is a subset of $V$. A hypergraph is termed submodular if every $e \in E$ is associated with a submodular function $w_e: 2^e \to \mathbb{R}_{\geq 0}$ [31]. The weight $w_e(S)$ indicates the cut-cost of splitting the hyperedge $e$ into two subsets, $S$ and $e \setminus S$. This general form allows us to describe potentially complex higher-order relations among multiple nodes (Figure 1). A proper hyperedge weight $w_e$ should satisfy $w_e(\emptyset) = w_e(e) = 0$. To ease notation we extend the domain of $w_e$ to $2^V$ by setting $w_e(S) := w_e(S \cap e)$ for any $S \subseteq V$. We assume without loss of generality that $w_e$ is normalized by $\vartheta_e := \max_{S \subseteq e} w_e(S)$, so that $w_e(S) \in [0, 1]$ for any $S \subseteq V$. For the sake of simplicity in presentation, we assume that $\vartheta_e = 1$ for all $e \in E$. (This is without loss of generality: in the appendix we show that our method works with arbitrary $\vartheta_e$.) A submodular hypergraph is written as $H = (V, E, \mathcal{W})$ where $\mathcal{W} := \{(w_e, \vartheta_e)\}_{e \in E}$. Note that when $w_e(S) = 1$ for any $S \in 2^e \setminus \{\emptyset, e\}$, the definition reduces to unit cut-cost hypergraphs. When $w_e(S)$ only depends on $|S|$, it reduces to cardinality-based cut-cost hypergraphs.
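To make the two special cases concrete, here is a small Python sketch, assuming hyperedges with at least 2 nodes and the normalization $w_e(S) \in [0, 1]$. The cardinality cut-cost is assumed here to take the normalized form $\min\{|S \cap e|, |e \setminus S|\}/\lfloor |e|/2 \rfloor$, and all helper names are illustrative, not taken from the paper's code. The brute-force check enumerates all pairs of subsets, so it is only meant for tiny hyperedges.

```python
from itertools import combinations


def powerset(items):
    """All subsets of `items`, as frozensets."""
    items = list(items)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]


def unit_cut_cost(e):
    """w_e(S) = 1 if S splits e into two nonempty parts, else 0 (uses S & e)."""
    e = frozenset(e)
    return lambda S: 1.0 if 0 < len(e & frozenset(S)) < len(e) else 0.0


def cardinality_cut_cost(e):
    """Assumed normalized form w_e(S) = min(|S & e|, |e - S|) / floor(|e| / 2).

    Requires |e| >= 2; the cost depends on S only through |S & e|.
    """
    e = frozenset(e)

    def w(S):
        k = len(e & frozenset(S))
        return min(k, len(e) - k) / (len(e) // 2)
    return w


def is_submodular(w, ground, tol=1e-12):
    """Brute force: w(A) + w(B) >= w(A | B) + w(A & B) for all A, B."""
    subsets = powerset(ground)
    return all(w(A) + w(B) + tol >= w(A | B) + w(A & B)
               for A in subsets for B in subsets)


def is_proper_weight(w, e):
    """A proper cut-cost assigns zero cost to the trivial cuts."""
    return w(frozenset()) == 0 and w(frozenset(e)) == 0
```

Both cut-costs are concave functions of $|S \cap e|$, which is why the submodularity check passes for them; a convex function such as $|S|^2$ fails it.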

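Vector/Function on $V$ or $E$. For a set of nodes $S \subseteq V$, we denote by $\mathbf{1}_S$ the indicator vector of $S$, i.e., $[\mathbf{1}_S]_v = 1$ if $v \in S$ and 0 otherwise. For a vector $x \in \mathbb{R}^{|V|}$, we write $x(S) := \sum_{v \in S} x_v$, where $x_v$ is the entry in $x$ that corresponds to $v \in V$. We define the support of $x$ as $\mathrm{supp}(x) := \{v \in V : x_v \neq 0\}$. The support of a vector in $\mathbb{R}^{|E|}$ is defined analogously. We refer to a function over nodes and its explicit representation as a $|V|$-dimensional vector interchangeably.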

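Volume, cut, conductance. Given a submodular hypergraph $H = (V, E, \mathcal{W})$, the degree of a node $v$ is defined as $d_v := \sum_{e \in E : v \in e} \vartheta_e$ (with $\vartheta_e = 1$, this is the number of hyperedges containing $v$). We reserve $d$ for the vector of node degrees and $D := \mathrm{diag}(d)$. We refer to $\mathrm{vol}(S) := d(S)$ as the volume of $S \subseteq V$. A cut is treated as a proper subset $C \subset V$, or a partition $(C, \bar{C})$ where $\bar{C} := V \setminus C$. The cut-set of $C$ is defined as $\partial C := \{e \in E : e \cap C \neq \emptyset, e \cap \bar{C} \neq \emptyset\}$; the cut-size of $C$ is defined as $\mathrm{vol}(\partial C) := \sum_{e \in \partial C} \vartheta_e w_e(C)$. The conductance of a cut $C$ in $H$ is $\Phi(C) := \mathrm{vol}(\partial C) / \min\{\mathrm{vol}(C), \mathrm{vol}(V \setminus C)\}$.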
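The definitions of degree, volume, cut-size and conductance can be checked on a toy example. Below is a minimal Python sketch, assuming every hyperedge has weight scale 1 (so the degree of $v$ is the number of hyperedges containing $v$) and using the unit cut-cost; the function names and the toy hypergraph are hypothetical.

```python
def unit_cut_cost(e):
    """Unit cut-cost: 1 if S splits hyperedge e, else 0 (S is intersected with e)."""
    e = frozenset(e)
    return lambda S: 1.0 if 0 < len(e & frozenset(S)) < len(e) else 0.0


def degrees(nodes, hyperedges):
    """d_v = number of hyperedges containing v (all weight scales equal to 1)."""
    d = {v: 0 for v in nodes}
    for e in hyperedges:
        for v in e:
            d[v] += 1
    return d


def conductance(C, nodes, hyperedges, cut_costs):
    """Phi(C) = vol(dC) / min(vol(C), vol(V - C)).

    cut_costs[i] is w_e for hyperedges[i]. Because w_e(C) = 0 whenever e is not
    split by C, summing w_e(C) over all hyperedges gives the cut-size vol(dC).
    """
    C = frozenset(C)
    d = degrees(nodes, hyperedges)
    vol_C = sum(d[v] for v in C)
    vol_rest = sum(d[v] for v in nodes if v not in C)
    cut_size = sum(w(C) for w in cut_costs)
    return cut_size / min(vol_C, vol_rest)


# Toy hypergraph: two dense groups joined by the single hyperedge {2, 3}.
nodes = range(6)
hyperedges = [{0, 1, 2}, {0, 1}, {3, 4, 5}, {4, 5}, {2, 3}]
cut_costs = [unit_cut_cost(e) for e in hyperedges]
phi = conductance({0, 1, 2}, nodes, hyperedges, cut_costs)
```

On this toy hypergraph only the hyperedge {2, 3} crosses the cut {0, 1, 2}, both sides have volume 6, and `phi` evaluates to 1/6.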

Flow. A flow routing over a hyperedge $e$ is a function $r_e: e \to \mathbb{R}$ where $r_e(v)$ specifies the amount of mass that flows from $v$ to the other nodes of $e$ over $e$. To ease notation we extend the domain of $r_e$ to $V$ by identifying $r_e(v) = 0$ for $v \notin e$, so $r_e$ is treated as a function $r_e: V \to \mathbb{R}$ or equivalently a $|V|$-dimensional vector. The net (out)flow at a node $v$ is given by $\sum_{e \in E} r_e(v)$. Given a routing function $r_e$ and a set of nodes $S \subseteq V$, a directional routing on $e$ with direction $S \to e \setminus S$ is represented by $r_e(S)$, which specifies the net amount of mass that flows from $S$ to $e \setminus S$. A routing $r_e$ is called proper if it obeys flow conservation, i.e., $r_e^T \mathbf{1}_e = 0$. Our flow definition generalizes the notion of network flows to hypergraphs. We provide concrete illustrations in Figure 2.
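A routing can be stored sparsely, keeping only the nodes of its hyperedge. The Python sketch below assumes each routing is a dictionary mapping the nodes of a hyperedge to the net amount of mass they send out over it (positive means outflow), with missing nodes treated as zero. It computes the net outflow at a node, the directional routing for a node set, and checks flow conservation; the function names and toy numbers are hypothetical.

```python
def net_outflow(routings, v):
    """Net (out)flow at v: sum over hyperedges of r_e(v), zero when v is not in e."""
    return sum(r_e.get(v, 0.0) for r_e in routings)


def directional_flow(r_e, S):
    """r_e(S): net mass moving from S to e - S over hyperedge e."""
    return sum(r_e.get(v, 0.0) for v in S)


def is_proper(r_e, tol=1e-12):
    """Flow conservation over e: the entries of r_e sum to zero."""
    return abs(sum(r_e.values())) <= tol


# Node 'a' sends 2 units over e1 = {a, b, c}; 'b' and 'c' each receive 1.
r1 = {"a": 2.0, "b": -1.0, "c": -1.0}
# Node 'c' forwards half a unit over e2 = {c, d}.
r2 = {"c": 0.5, "d": -0.5}
```

Here node `c` receives 1 unit over `e1` and sends 0.5 over `e2`, so its net outflow is -0.5, i.e., it keeps half a unit.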

3 Diffusion as an Optimization Problem

In this section we provide details of the proposed local diffusion method. We view diffusion as the task of spreading mass from a small set of seed nodes to a larger set of nodes. More precisely, given a hypergraph $H = (V, E, \mathcal{W})$, we assign each node $v \in V$ a sink capacity specified by a sink function $T: V \to \mathbb{R}_+$, i.e., node $v$ is allowed to hold at most $T(v)$ amount of mass. In this work we focus on the setting where $T(v) = d_v$, so that a high-degree node that is part of many hyperedges can hold more mass than a low-degree node that is part of few hyperedges. Moreover, we assign each node $v \in V$ some initial mass specified by a source function $\Delta: V \to \mathbb{R}_+$, i.e., node $v$ holds $\Delta(v)$ amount of mass at the start of the diffusion. In order to encourage the spread of mass in the hypergraph, the initial mass on the seed nodes is larger than their capacity. This forces the seed nodes to diffuse mass to neighbor nodes to remove their excess mass. In Section 4 we discuss the local hypergraph clustering problem in detail, together with the choice of $\Delta$ that yields good theoretical guarantees for it.

Given a set of proper flow routings $r_e$ for $e \in E$, recall that $\sum_{e \in E} r_e(v)$ specifies the amount of net (out)flow at node $v$. Therefore, the vector $\Delta - \sum_{e \in E} r_e$ gives the amount of net mass at each node after routing. The excess mass at a node $v$ is $\max\{\Delta(v) - \sum_{e \in E} r_e(v) - d_v, 0\}$. In order to force the diffusion of initial mass we could simply require that $\Delta(v) - \sum_{e \in E} r_e(v) \leq d_v$ for all $v \in V$, or equivalently, $\Delta - \sum_{e \in E} r_e \leq d$. But to provide additional flexibility in the diffusion dynamics, we introduce a hyper-parameter $\sigma \geq 0$ and impose a softer constraint, in which an additional optimization variable, scaled by $\sigma$, controls how much excess mass is allowed on each node. In the context of numerical optimization, we show in Section 5 that $\sigma > 0$ allows a reformulation that makes the optimization problem amenable to efficient alternating minimization schemes.
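The bookkeeping in this paragraph (net mass after routing, excess, and the hard capacity constraint) can be sketched in a few lines of Python. This sketch assumes sink capacities equal to node degrees and routings stored as dictionaries from nodes to net outflow (missing entries are zero); it takes the excess at $v$ to be $\max\{\Delta(v) - \sum_e r_e(v) - d_v, 0\}$ and does not model the softer, $\sigma$-dependent constraint. Function names and numbers are illustrative.

```python
def net_mass(source, routings, nodes):
    """Mass held by each node after routing: Delta(v) - sum_e r_e(v)."""
    return {v: source.get(v, 0.0) - sum(r.get(v, 0.0) for r in routings)
            for v in nodes}


def excess(source, routings, sink):
    """ex(v) = max(net mass at v - sink capacity T(v), 0)."""
    m = net_mass(source, routings, sink)
    return {v: max(m[v] - sink[v], 0.0) for v in sink}


def satisfies_capacity(source, routings, sink, tol=1e-12):
    """Hard constraint Delta - sum_e r_e <= T, i.e. no excess anywhere."""
    return all(ex <= tol for ex in excess(source, routings, sink).values())


# Seed 'a' starts with 6 units but can hold only d_a = 2.
sink = {"a": 2.0, "b": 2.0, "c": 2.0}   # T(v) = d_v
source = {"a": 6.0}                      # Delta
r1 = {"a": 3.0, "b": -1.5, "c": -1.5}    # 'a' pushes 3 units over {a, b, c}
```

With `r1`, node `a` still holds 3 units against a capacity of 2, so one unit of excess remains; pushing 4 units instead (2 to each neighbor) removes all excess.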

Note that so far we have not discussed how specific higher-order relations among nodes within a hyperedge affect the flow routings over it. Simply requiring that the $r_e$'s obey flow conservation (i.e., $r_e^T \mathbf{1}_e = 0$), as in the standard graph setting, is not enough for hypergraphs: unlike graph edge flows, hyperedge flows require additional constraints on $r_e$. To this end, we require $r_e \in \phi_e B_e$ for some scale $\phi_e \geq 0$, where

$$B_e := \{\rho_e \in \mathbb{R}^{|V|} : \rho_e(S) \leq w_e(S) \text{ for all } S \subseteq V, \ \rho_e(V) = w_e(V)\}$$

is the base polytope [4] for the submodular cut-cost $w_e$ associated with hyperedge $e$. It is straightforward to see that $r_e^T \mathbf{1}_e = 0$ for every $\phi_e \geq 0$ and $r_e \in \phi_e B_e$ (every $\rho_e \in B_e$ vanishes outside $e$ and satisfies $\rho_e(V) = w_e(e) = 0$), so $r_e$ defines a proper flow routing over $e$. Moreover, for any $S \subseteq V$, recall that $r_e(S)$ represents the net amount of mass that moves from $S$ to $e \setminus S$ over hyperedge $e$. Therefore, the constraints $r_e \in \phi_e B_e$ for $e \in E$ mean that the directional flows $r_e(S)$ are upper bounded by the scaled submodular function $\phi_e w_e(S)$. Intuitively, one may think of $\phi_e$ and $B_e$ as the scale and the shape of $r_e$, respectively.
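The base polytope constraint can be checked directly on small hyperedges. The Python sketch below tests whether a routing $r$ lies in $\phi B_e$ by enumerating every subset of $e$; this is exponential in $|e|$ and only meant for illustration. It assumes the unit cut-cost and dictionary-valued routings, and the names are hypothetical.

```python
from itertools import combinations


def unit_cut_cost(e):
    """Unit cut-cost: 1 if S splits hyperedge e, else 0."""
    e = frozenset(e)
    return lambda S: 1.0 if 0 < len(e & frozenset(S)) < len(e) else 0.0


def in_scaled_base_polytope(r, w, e, phi, tol=1e-9):
    """Brute-force test of r in phi * B_e for a hyperedge e with cut-cost w.

    Checks that r vanishes outside e, r(e) = phi * w(e) (= 0 for a proper
    cut-cost), and r(S) <= phi * w(S) for every nonempty proper subset S of e.
    Subsets S of V that are not inside e reduce to S & e, so they add nothing.
    """
    e = list(e)
    if any(abs(val) > tol for v, val in r.items() if v not in e):
        return False
    if abs(sum(r.get(v, 0.0) for v in e) - phi * w(frozenset(e))) > tol:
        return False
    for k in range(1, len(e)):
        for S in combinations(e, k):
            if sum(r.get(v, 0.0) for v in S) > phi * w(frozenset(S)) + tol:
                return False
    return True
```

For example, with the unit cut-cost on $e = \{0, 1, 2\}$, the routing in which node 0 sends 2 units split equally to nodes 1 and 2 lies in $2 B_e$ but not in $B_e$, since the directional flow out of $\{0\}$ is 2 while $w_e(\{0\}) = 1$.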

The goal of our diffusion problem is to find low-cost routings $r_e \in \phi_e B_e$ for $e \in E$ such that the capacity constraint is satisfied. We consider the (weighted) $\ell_2$-norm of $\phi$ and of the excess-control variable as the cost of diffusion. In the appendix we show that one can readily extend the $\ell_2$-norm to the $\ell_p$-norm for any $p \geq 2$. Formally, we arrive at the following convex optimization formulation (input: the source function $\Delta$, the hypergraph $H$, and a hyper-parameter $\sigma$):

| (1) |

We name problem (1) Hyper-Flow Diffusion (HFD) for its combinatorial flow interpretation we discussed above. The dual problem of (1) is:

| (2) |

where $f_e$ in (2) is the support function of the base polytope $B_e$, given by $f_e(x) := \max_{\rho_e \in B_e} \rho_e^T x$. The function $f_e$ is also known as the Lovász extension of the submodular function $w_e$.
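Because $B_e$ is the base polytope of a submodular function, the maximum defining the Lovász extension can be evaluated without solving a linear program, using the classical greedy algorithm (Edmonds): visit the nodes of $e$ in order of decreasing value and accumulate the increments of $w_e$ along that order. The Python sketch below assumes cut-costs given as functions on node sets; the cardinality cut-cost is assumed to be normalized as $\min\{|S \cap e|, |e \setminus S|\}/\lfloor |e|/2 \rfloor$, and all names are illustrative.

```python
def unit_cut_cost(e):
    """Unit cut-cost: 1 if S splits hyperedge e, else 0."""
    e = frozenset(e)
    return lambda S: 1.0 if 0 < len(e & frozenset(S)) < len(e) else 0.0


def cardinality_cut_cost(e):
    """Assumed normalized form min(|S & e|, |e - S|) / floor(|e| / 2)."""
    e = frozenset(e)

    def w(S):
        k = len(e & frozenset(S))
        return min(k, len(e) - k) / (len(e) // 2)
    return w


def lovasz_extension(w, e, x):
    """f_e(x) = max over rho in B_e of <rho, x>, via the greedy algorithm.

    Visiting the nodes of e in order of decreasing x and assigning
    rho(v_i) = w(S_i) - w(S_{i-1}), where S_i holds the first i visited nodes,
    gives a maximizing vertex of the base polytope when w is submodular.
    """
    order = sorted(e, key=lambda v: x[v], reverse=True)
    visited, prev, value = set(), w(frozenset()), 0.0
    for v in order:
        visited.add(v)
        cur = w(frozenset(visited))
        value += x[v] * (cur - prev)
        prev = cur
    return value


e = [0, 1, 2, 3]
x = {0: 0.9, 1: 0.4, 2: 0.1, 3: 0.0}   # node "heights"
```

For the unit cut-cost this evaluates to $\max_{v \in e} x_v - \min_{v \in e} x_v$ (0.9 for the heights above), and it is 0 whenever all nodes of $e$ have the same height; the assumed cardinality cut-cost gives 0.6 here.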

We provide a combinatorial interpretation for (2) and leave algebraic derivations to the appendix. For the dual problem, one can view the solution $x$ as assigning heights to nodes, and the goal is to separate/cut the nodes with source mass from the rest of the hypergraph. Observe that the linear term in the dual objective function encourages raising $x$ higher on the seed nodes and setting it lower on others. The cost $f_e(x)$ captures the discrepancy in node heights over a hyperedge $e$ (for the unit cut-cost, $f_e(x) = \max_{u \in e} x_u - \min_{v \in e} x_v$) and encourages smooth height transitions over adjacent nodes. The dual solution embeds nodes into the nonnegative real line, and this embedding is what we actually use for local clustering and node ranking.

4 Local Hypergraph Clustering

In this section we discuss the performance of the primal-dual pair (1)-(2) in the context of local hypergraph clustering. We consider a generic hypergraph $H = (V, E, \mathcal{W})$ with submodular hyperedge weights $\mathcal{W}$. Given a set of seed nodes $S \subset V$, the goal of local hypergraph clustering is to identify a target cluster $C \subset V$ that contains or overlaps well with $S$. This generalizes the definition of local clustering over graphs [19]. To the best of our knowledge, we are the first to consider this problem for general submodular hypergraphs. We consider a subset of nodes with low conductance to be a good cluster, i.e., these nodes are well-connected internally and well-separated from the rest of the hypergraph. Following prior work on local hypergraph clustering, we assume the existence of an unknown target cluster $C$ with conductance $\Phi(C)$. We prove that applying a sweep-cut to an optimal solution of (2) returns a cluster whose conductance is at most quadratically worse than $\Phi(C)$, i.e., $O(\sqrt{\Phi(C)})$. Note that this result resembles Cheeger-type approximation guarantees of spectral clustering in the graph setting [2], and it is the first such result that is independent of hyperedge size for general hypergraphs. We keep the discussion at a high level and defer details to the appendix, where we prove a more general and stronger result, namely a constant approximation error, when the primal problem (1) is penalized by the $\ell_p$-norm.

In order to start a diffusion process we need to provide the source mass $\Delta$. Similar to the $\ell_p$-norm flow diffusion in the graph setting [20], we let

| (3) |

where $S$ is a set of seed nodes. Below, we assume that the seed set $S$ and the target cluster $C$ have some overlap (Assumption 1), that an amount of mass equal to a constant factor times $\mathrm{vol}(C)$ is initially trapped in $C$ (Assumption 2), and that the hyper-parameter $\sigma$ is not too large (Assumption 3). Note that Assumption 2 is without loss of generality: if the right scaling of the seed mass in (3) is not known a priori, we can always employ binary search to find a good choice. Assumption 3 is very weak as it allows $\sigma$ to take any value in an interval containing 0.

Assumption 1.

and for some .

Assumption 2.

The source mass as specified in (3) satisfies , so .

Assumption 3.

satisfies .

Let $x^*$ be an optimal solution for the dual problem (2). For $h > 0$ define the sweep sets $S_h := \{v \in V : x^*_v \geq h\}$. We state the approximation property in Theorem 1.
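The sweep-cut step can be sketched as follows: sort the nodes in the support of $x^*$ by decreasing value, form each level set, and keep the one with the lowest conductance. This Python version assumes unit cut-costs, unit weight scales and a dual solution given as a dictionary (the values below are made up, not produced by HFD). For clarity it recomputes each cut from scratch rather than updating it incrementally, and all names are hypothetical.

```python
def unit_cut_cost(e):
    """Unit cut-cost: 1 if S splits hyperedge e, else 0."""
    e = frozenset(e)
    return lambda S: 1.0 if 0 < len(e & frozenset(S)) < len(e) else 0.0


def sweep_cut(x, nodes, hyperedges, cut_costs):
    """Return (S_h, Phi(S_h)) minimizing conductance over the sweep sets
    S_h = {v : x_v >= h} with h > 0."""
    d = {v: 0 for v in nodes}
    for e in hyperedges:            # degree = number of incident hyperedges
        for v in e:
            d[v] += 1
    total_vol = sum(d.values())
    order = sorted((v for v in nodes if x.get(v, 0.0) > 0),
                   key=lambda v: x[v], reverse=True)
    best_set, best_phi = None, float("inf")
    S, vol_S = set(), 0
    for i, v in enumerate(order):
        S.add(v)
        vol_S += d[v]
        # A sweep set contains every node at its threshold, so skip ties.
        if i + 1 < len(order) and x[order[i + 1]] == x[v]:
            continue
        denom = min(vol_S, total_vol - vol_S)
        if denom == 0:
            continue
        phi = sum(w(S) for w in cut_costs) / denom
        if phi < best_phi:
            best_set, best_phi = frozenset(S), phi
    return best_set, best_phi


nodes = range(6)
hyperedges = [{0, 1, 2}, {0, 1}, {3, 4, 5}, {4, 5}, {2, 3}]
cut_costs = [unit_cut_cost(e) for e in hyperedges]
x_star = {0: 1.0, 1: 0.8, 2: 0.5, 3: 0.1}   # hypothetical dual solution
best, phi = sweep_cut(x_star, nodes, hyperedges, cut_costs)
```

Here the four sweep sets have conductances 1, 1/4, 1/6 and 1/4, so the sweep returns {0, 1, 2}.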

One of the challenges we face in establishing the result in Theorem 1 is making sure that our diffusion model enjoys both good clustering guarantees and practical algorithmic advantages at the same time. This is achieved by introducing the hyper-parameter $\sigma$ to our diffusion problem. We will demonstrate how $\sigma$ helps with algorithmic development in Section 5, but from a clustering perspective, the additional flexibility given by $\sigma$ complicates the underlying diffusion dynamics, making it more difficult to analyze. Another challenge is connecting the Lovász extension in (2) with the conductance of a cluster. We resolve these challenges by combining a generalized Rayleigh quotient result for submodular hypergraphs [31], primal-dual convex conjugate relations between (1) and (2), and a classical property of the Choquet integral/Lovász extension.

Let be an optimal solution for the primal problem (1). We state the following lemma on the locality (i.e., sparsity) of the optimal solutions, which justifies why HFD is a local diffusion method.

Lemma 2.

; moreover, if .

5 Optimization algorithm for HFD

We use a simple Alternating Minimization (AM) [5] method that efficiently solves the primal diffusion problem (1). For , we define a diagonal matrix such that if and 0 otherwise. Denote . The following Lemma 1 allows us to cast problem (1) to an equivalent separable formulation amenable to the AM method.

Lemma 1.

The AM method for problem (4) is given in Algorithm 1. The first sub-problem corresponds to computing projections onto a group of cones, where all the projections can be computed in parallel. The computation of each projection depends on the choice of base polytope $B_e$. If the hyperedge weight $w_e$ is the unit cut-cost, $B_e$ has special structure and the projection can be computed in $O(|e| \log |e|)$ time [32]. For general $w_e$, a conic Fujishige-Wolfe minimum norm algorithm can be adopted to obtain the projection [32]. The second sub-problem in Algorithm 1 can be easily computed in closed form. We provide more information about Algorithm 1 and its convergence properties in the appendix.

Initialization:

For do:

We remark that the reformulation (4) for $\sigma > 0$ is crucial from an algorithmic point of view. If $\sigma = 0$, then the primal problem (1) has complicated coupling constraints that are hard to deal with. In this case, one has to resort to the dual problem (2). However, problem (2) has a nonsmooth objective function, which rules out optimization methods designed for smooth objectives. Although a subgradient method could be applied, we have observed empirically that its convergence is extremely slow for our problem, and early stopping results in poor-quality output.

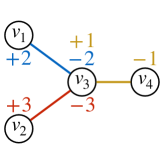

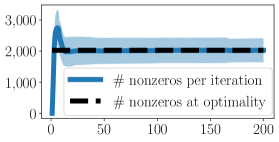

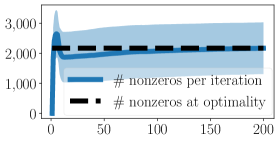

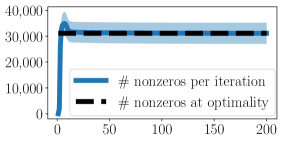

Lastly, as noted in Lemma 2, the number of nonzeros in the optimal solution is upper bounded by a quantity that depends only on the initial mass, not on the size of the hypergraph. In Figure 3 we plot the number of nodes having positive excess (which equals the number of nonzeros in the dual solution $x$) at every iteration of Algorithm 1. Figure 3 indicates that Algorithm 1 is strongly local, meaning that it works only on a small fraction of nodes (and their incident hyperedges) as opposed to producing dense iterates. This key empirical observation has enabled our algorithm to scale to large datasets by simply keeping track of all active nodes and hyperedges. Proving that the worst-case running time of AM depends only on the number of nonzero nodes at optimality, as opposed to the size of the whole hypergraph, is an open problem, which we leave for future work.

6 Empirical Results

In this section we evaluate the performance of HFD for local clustering. First, we carry out experiments on synthetic hypergraphs with varying target cluster conductances and varying hyperedge sizes. For the unit cut-cost setting, we show that HFD is more robust and has better performance when the target cluster is noisy; for a cardinality-based cut-cost setting, we show that the edge-size-independent approximation guarantee is important for obtaining good recovery results. Second, we carry out experiments using real-world data. We show that HFD significantly outperforms existing state-of-the-art diffusion methods for both unit and cardinality-based cut-costs. Moreover, we provide a compelling example where specialized submodular cut-cost is necessary for obtaining good results. Code that reproduces all results is available at https://github.com/s-h-yang/HFD.

6.1 Synthetic experiments using hypergraph stochastic block model (HSBM)

The generative model. We generalize the standard $k$-uniform hypergraph stochastic block model (HSBM) [21] to allow different types of inter-cluster hyperedges to appear with possibly different probabilities according to the cardinality of the hyperedge cut. Let $V$ be a set of nodes and let $k$ be the required constant hyperedge size. We consider HSBM with parameters , , , , . The model samples a $k$-uniform hypergraph according to the following rules: (i) the community label of each node in $V$ is chosen uniformly at random (we consider two blocks for simplicity; in general the model applies to any number of blocks); (ii) each size-$k$ subset of $V$ appears independently as a hyperedge with probability

If or all ’s are the same, then we obtain the standard two-block HSBM. We use this setting to evaluate HFD for unit cut-cost. If ’s are different, then we obtain a cardinality-based HSBM. In particular, when , it models the scenario where hyperedges containing similar numbers of nodes from each block are rare, while mildly noisy hyperedges (e.g., hyperedges that have one or two nodes in one block and all the rest in the other block) are more frequent. We use , , to evaluate HFD for cardinality-based cut-cost. There are other random hypergraph models, for example the Poisson degree-corrected HSBM [14] that deals with degree heterogeneity and edge size heterogeneity. In our experiments we focus on HSBM because it allows stronger control over hyperedge sizes. We provide details on data generation in the appendix.
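To illustrate the generative process, here is a brute-force Python sampler for a two-block, $k$-uniform model. The edge-probability rule is an assumption of this sketch: a $k$-subset becomes a hyperedge with probability `p` if all of its nodes share a label, and with probability `q[j - 1]` if the smaller side of its split contains `j` nodes, which follows the idea that probabilities depend on the cardinality of the hyperedge cut. Parameter names are hypothetical, and enumerating all $\binom{n}{k}$ subsets only scales to small `n`.

```python
import random
from itertools import combinations


def sample_cardinality_hsbm(n, k, p, q, seed=None):
    """Brute-force sampler for a two-block k-uniform HSBM (assumed parametrization).

    A k-subset e of {0, ..., n-1} becomes a hyperedge independently with
    probability p if all its nodes share a label, and q[j - 1] if the smaller
    side of the split of e has j nodes, for 1 <= j <= k // 2.
    """
    if len(q) != k // 2:
        raise ValueError("q needs one probability per cut cardinality 1..k//2")
    rng = random.Random(seed)
    labels = [rng.randint(0, 1) for _ in range(n)]   # uniform community labels
    hyperedges = []
    for e in combinations(range(n), k):
        ones = sum(labels[v] for v in e)
        j = min(ones, k - ones)                      # cardinality of the hyperedge cut
        prob = p if j == 0 else q[j - 1]
        if rng.random() < prob:
            hyperedges.append(frozenset(e))
    return labels, hyperedges


# Balanced splits (j = 2) are rarer than mildly noisy ones (j = 1).
labels, hyperedges = sample_cardinality_hsbm(n=20, k=4, p=0.05, q=[0.01, 0.001], seed=0)
```

Setting all entries of `q` equal recovers a standard two-block model in which every inter-cluster hyperedge is equally likely.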

Task and methods. We consider the local hypergraph clustering problem. We assume that we are given a single labelled node and the goal is to recover all nodes having the same label. Using a single seed node is the most common (and sought-after) practice for local graph clustering tasks. We compare HFD with two other methods: (i) Localized Quadratic Hypergraph Diffusions (LH) [33], which can be seen as a hypergraph analogue of Approximate Personalized PageRank (APPR); (ii) ACL [2], which is used to compute APPR vectors on a standard graph obtained from reducing a hypergraph through star expansion [50]. (There are other heuristic methods which first reduce a hypergraph to a graph by clique expansion [6] and then apply diffusion methods for standard graphs. We did not compare with this approach because clique expansion often results in a dense graph and consequently makes the computation slow. Moreover, it has been shown in [33] that clique expansion did not offer significant performance improvement over star expansion.)

Cut-costs and parameters. We consider both the unit cut-cost, i.e., $w_e(S) = 1$ if $S \cap e \neq \emptyset$ and $e \setminus S \neq \emptyset$, and the cardinality cut-cost $w_e(S) = \min\{|S \cap e|, |e \setminus S|\}/\lfloor |e|/2 \rfloor$. HFD variants that use unit and cardinality cut-costs are denoted by U-HFD and C-HFD, respectively. LH also works with both unit and cardinality cut-costs, and we denote the corresponding variants by U-LH and C-LH, respectively. For HFD, we initialize the seed mass so that the total seed mass is a constant factor times the volume of the target cluster. We set . We extensively tune LH by performing binary search over its parameters and picking the output cluster with the lowest conductance. For ACL we use the same parameter choices as in [33]. Details on parameter setting are provided in the appendix.

Results. For each hypergraph, we randomly pick a block as the target cluster. We run the methods 50 times. Each time we choose a different node from the target cluster as the single seed node.

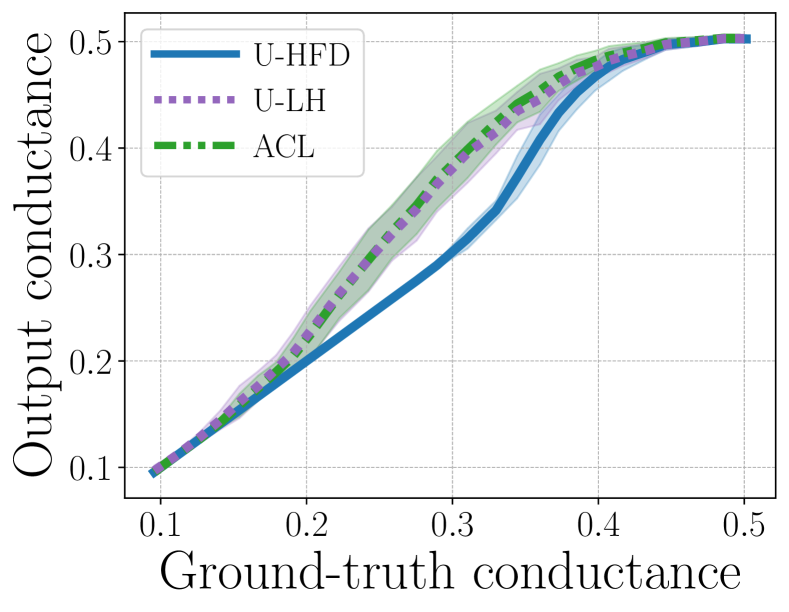

Unit cut-cost results. Figure 4 shows local clustering results when we fix but vary the conductance of the target cluster (i.e., constant but varying ). Observe that the performance of all methods becomes worse as the target cluster becomes noisier, but U-HFD has significantly better performance than both U-LH and ACL when the conductance of the target cluster is between 0.2 and 0.4. U-HFD performs better in part because it requires much weaker conditions for its theoretical conductance guarantee to hold. In contrast, LH assumes an upper bound on the conductance of the target cluster [33]. This upper bound is dataset-dependent and can become very small in many cases, leading to poor practical performance. We provide more details on this point in the appendix. ACL with star expansion is a heuristic method that has no performance guarantee.

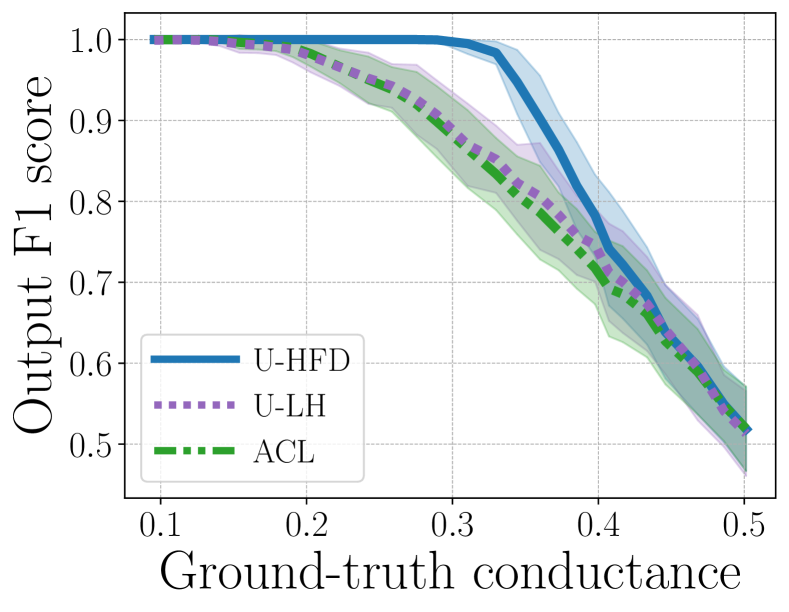

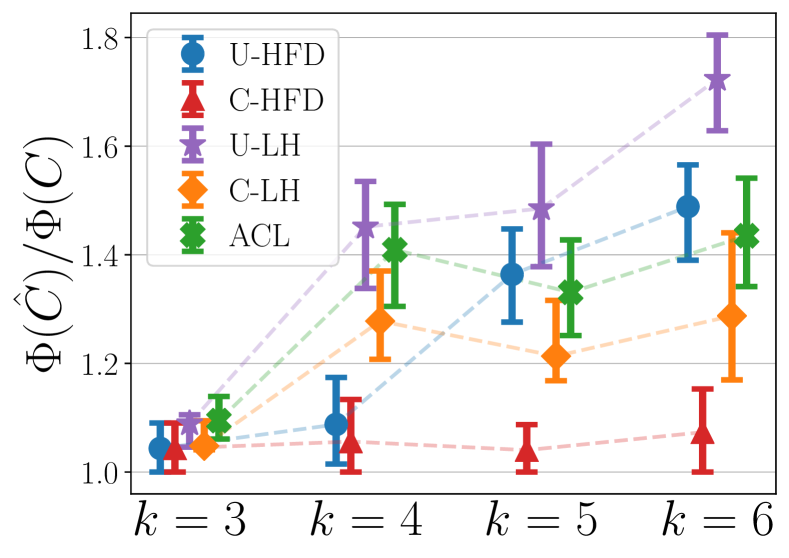

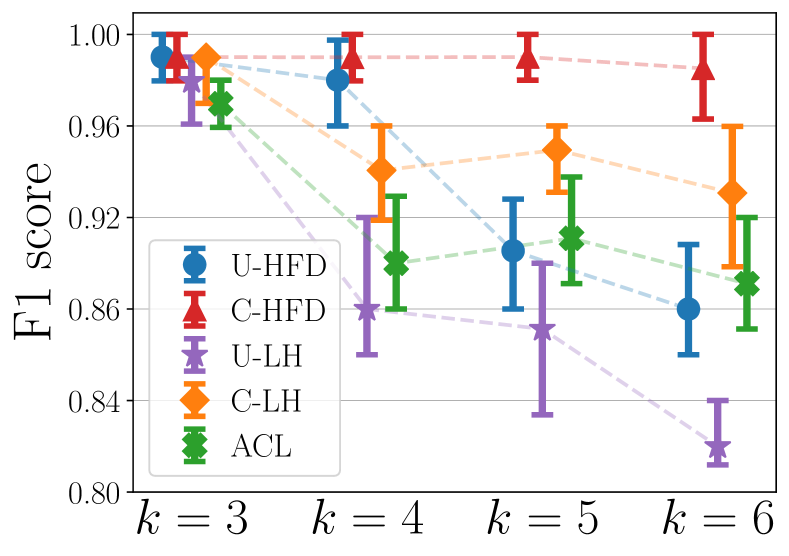

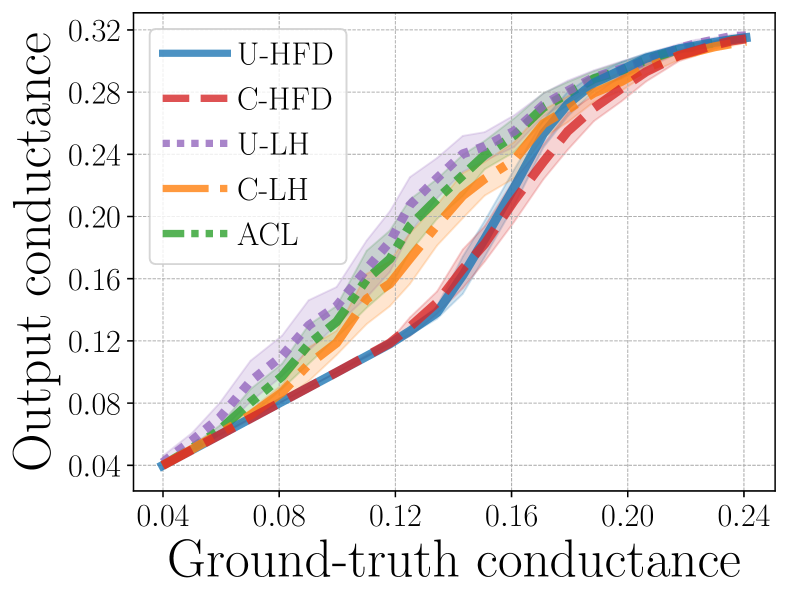

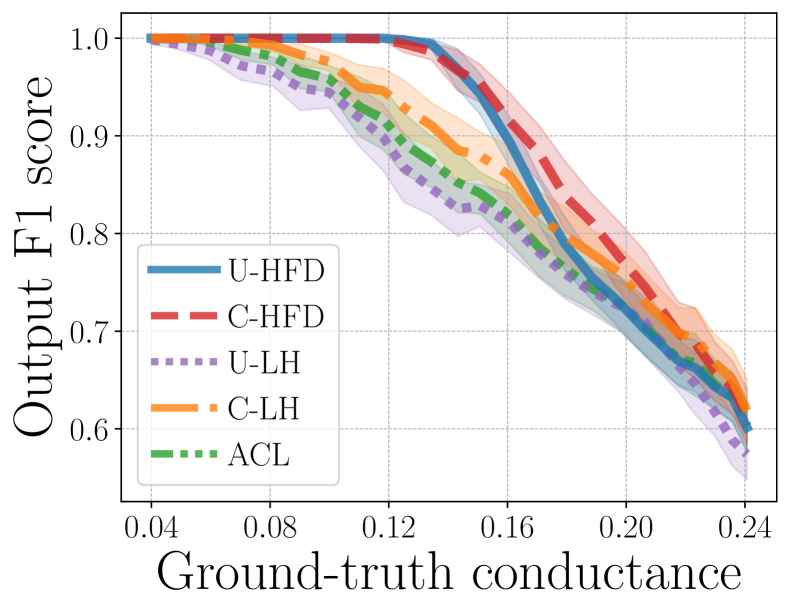

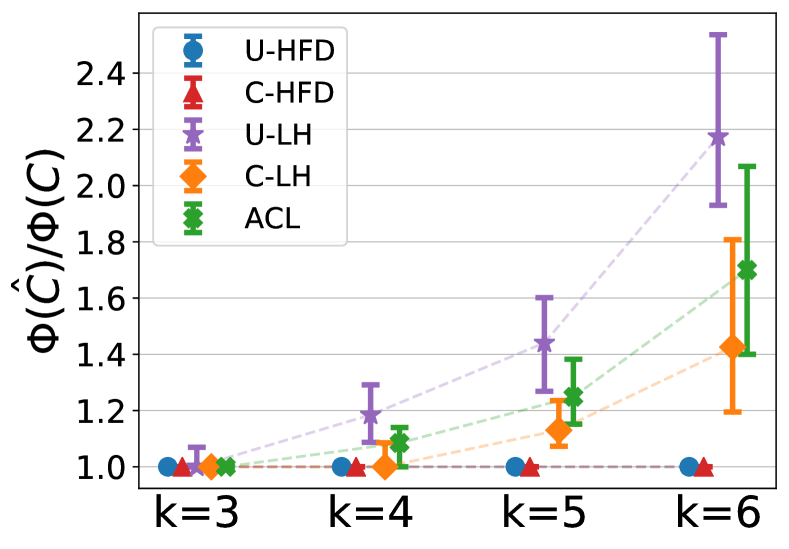

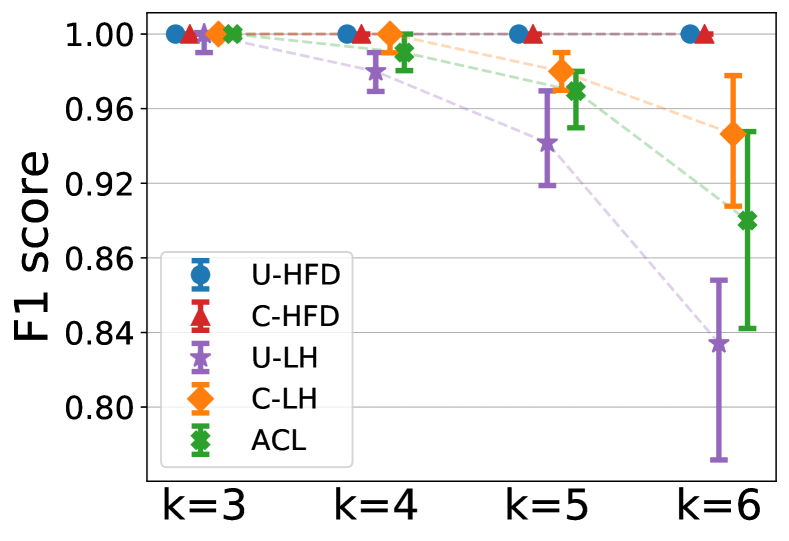

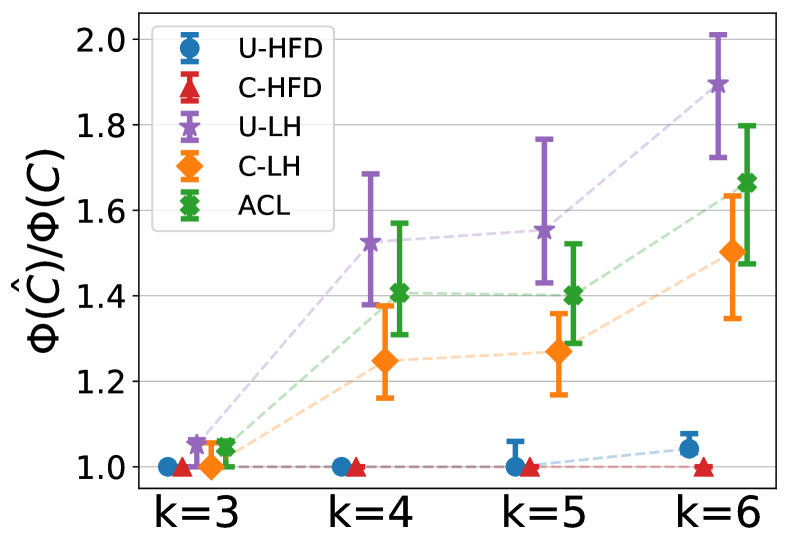

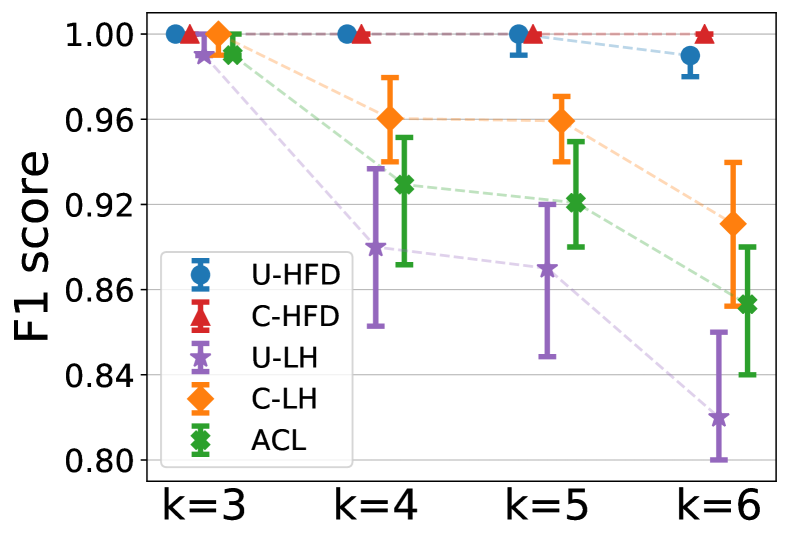

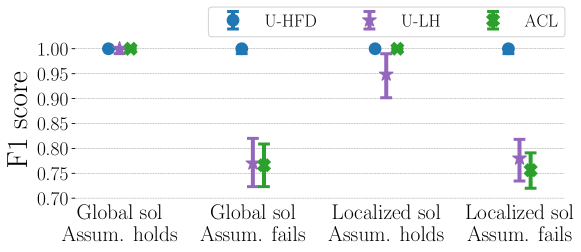

Cardinality cut-cost results. Figure 5 shows the median (markers) and the 25th to 75th percentiles (lower and upper bars) of conductance ratios (i.e., the ratio between output conductance and ground-truth conductance; lower is better) and F1 scores for different methods for . The target cluster for each has conductance around 0.3. (See the appendix for similar results when we fix the target cluster conductances around 0.2 and 0.25, respectively.) These cover a reasonably wide range of scenarios in terms of the target conductance and illustrate the performance of the algorithms for different levels of noise. For , unit and cardinality cut-costs are equivalent, therefore all methods have similar performance. As the hyperedge size increases, the cardinality cut-cost provides better performance than the unit cut-cost in both conductance and F1. However, since the theoretical approximation guarantee of C-LH depends on hyperedge size [33], there is a noticeable performance degradation for C-LH when we increase to . On the other hand, the performance of C-HFD appears to be independent of , which aligns with our conductance bound in Theorem 1.
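The evaluation metrics used in these experiments are simple to compute. Below is a minimal Python sketch, assuming clusters are given as sets of node IDs; `f1_score` is the standard harmonic mean of precision and recall with respect to the ground-truth cluster, and `median_f1` aggregates one output cluster per seed node. The names are illustrative, not the evaluation code of the released repository.

```python
from statistics import median


def f1_score(output, target):
    """Harmonic mean of precision and recall of `output` w.r.t. `target`."""
    output, target = set(output), set(target)
    tp = len(output & target)
    if tp == 0:
        return 0.0
    precision = tp / len(output)
    recall = tp / len(target)
    return 2 * precision * recall / (precision + recall)


def conductance_ratio(phi_output, phi_target):
    """Output conductance over ground-truth conductance (lower is better)."""
    return phi_output / phi_target


def median_f1(outputs, target):
    """Median F1 over runs, e.g. one output cluster per choice of seed node."""
    return median(f1_score(out, target) for out in outputs)
```

For instance, recovering {1, 2, 3, 4} when the target is {3, 4, 5, 6} gives precision and recall 0.5, hence F1 = 0.5.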

6.2 Experiments using real-world data

We conduct extensive experiments using real-world data. First, we show that HFD achieves better local clustering performance than existing methods for both unit and cardinality-based cut-costs. Then, we show that the general submodular cut-cost (recall that HFD is the only method that applies to this setting) can be necessary for capturing complex higher-order relations in the data, improving F1 scores by up to 20% for local clustering and providing the only meaningful result for node ranking. In the appendix we show local clustering experiments on two more datasets, where our method improves F1 scores by 8% on average for 13 different target clusters.

Datasets. We provide basic information about the datasets used in our experiments. Complete descriptions are provided in the appendix.

Amazon-reviews ($|V|$ = 2,268,264, $|E|$ = 4,285,363) [38, 43]. In this hypergraph each node represents a product. A set of products are connected by a hyperedge if they are reviewed by the same person. We use product category labels as ground truth cluster identities. We consider all clusters with fewer than 10,000 nodes.

Trivago-clicks ($|V|$ = 172,738, $|E|$ = 233,202) [14]. The nodes in this hypergraph are accommodations/hotels. A set of nodes are connected by a hyperedge if a user performed a “click-out” action on them during the same browsing session. We use geographical locations as ground truth cluster identities. There are 160 such clusters, and we filter them using cluster size and conductance.

Florida Bay food network ($|V|$ = 128, $|E|$ = 141,233) [30]. Nodes in this hypergraph correspond to different species or organisms that live in the Bay, and hyperedges correspond to transformed motifs (Figure 1) of the original dataset. Each species is labelled according to its role in the food chain: producers, low-level consumers, or high-level consumers.

Methods and parameters. We compare HFD with LH and ACL. (We also tried a flow-improve method for hypergraphs [43], but the method was very slow in our experiments, so we only used it for small datasets; see the appendix for results. The flow-improve method did not improve the performance of existing methods, so we omitted it from comparisons on larger datasets.) There is a heuristic nonlinear variant of LH which is shown to outperform linear LH in some cases [33]. Therefore we also compare with the same nonlinear variant considered in [33]. We denote the linear and nonlinear versions by LH-2.0 and LH-1.4, respectively. We set for HFD and we set the parameters for LH-2.0, LH-1.4 and ACL as suggested by the authors [33]. More details on parameter choices appear in the appendix. We prefix methods that use unit and cardinality-based cut-costs by U- and C-, respectively.

Experiments for unit and cardinality cut-costs. For each target cluster in Amazon-reviews and Trivago-clicks, we run the methods multiple times, each time using a different node as the single seed node. (We show additional results using seed sets of more than one node in the appendix.) We report the median F1 scores of the output clusters in Table 1 and Table 2. For Amazon-reviews, we only compare methods with the unit cut-cost because it is both shown in [33] and verified by our experiments that the unit cut-cost is more suitable for this dataset. Observe that U-HFD obtains the highest F1 scores for nearly all clusters. In particular, U-HFD significantly outperforms other methods for clusters 12, 18 and 24, where the F1 score increases by up to 0.52 (52 percentage points). For Trivago-clicks, C-HFD has the best performance for all but one cluster. Among the remaining methods, U-HFD has the highest F1 scores for nearly all clusters. Moreover, observe that for each method (i.e., HFD, LH-2.0, LH-1.4), the cardinality cut-cost leads to higher F1 than its unit cut-cost counterpart for most clusters.

Table 1: Median F1 scores for local clustering in Amazon-reviews (columns are target cluster IDs).

| Method | 1 | 2 | 3 | 12 | 15 | 17 | 18 | 24 | 25 |
|---|---|---|---|---|---|---|---|---|---|
| U-HFD | 0.45 | 0.09 | 0.65 | 0.92 | 0.04 | 0.10 | 0.80 | 0.81 | 0.09 |
| U-LH-2.0 | 0.23 | 0.07 | 0.23 | 0.29 | 0.05 | 0.06 | 0.21 | 0.28 | 0.05 |
| U-LH-1.4 | 0.23 | 0.09 | 0.35 | 0.40 | 0.00 | 0.07 | 0.31 | 0.35 | 0.06 |
| ACL | 0.23 | 0.07 | 0.22 | 0.25 | 0.04 | 0.05 | 0.17 | 0.20 | 0.04 |

Table 2: Median F1 scores on Trivago-clicks. Columns are target clusters.

| Method | KOR | ISL | PRI | UA-43 | VNM | HKG | MLT | GTM | UKR | EST |
|---|---|---|---|---|---|---|---|---|---|---|
| U-HFD | 0.75 | 0.99 | 0.89 | 0.85 | 0.28 | 0.82 | 0.98 | 0.94 | 0.60 | 0.94 |
| C-HFD | 0.76 | 0.99 | 0.95 | 0.94 | 0.32 | 0.80 | 0.98 | 0.97 | 0.68 | 0.94 |
| U-LH-2.0 | 0.70 | 0.86 | 0.79 | 0.70 | 0.24 | 0.92 | 0.88 | 0.82 | 0.50 | 0.90 |
| C-LH-2.0 | 0.73 | 0.90 | 0.84 | 0.78 | 0.27 | 0.94 | 0.96 | 0.88 | 0.51 | 0.83 |
| U-LH-1.4 | 0.69 | 0.84 | 0.80 | 0.75 | 0.28 | 0.87 | 0.92 | 0.83 | 0.47 | 0.90 |
| C-LH-1.4 | 0.71 | 0.88 | 0.84 | 0.78 | 0.27 | 0.88 | 0.93 | 0.85 | 0.50 | 0.85 |
| ACL | 0.65 | 0.84 | 0.75 | 0.68 | 0.23 | 0.90 | 0.83 | 0.69 | 0.50 | 0.88 |
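The evaluation protocol described above (one run per single seed node, then the median F1 of the output cluster against the target cluster) can be sketched as follows. This is a minimal illustration, not the authors' evaluation code: `local_cluster` is a hypothetical placeholder for any of the compared methods (HFD, LH, ACL) that maps a seed node to an output cluster, and F1 is taken to be the standard harmonic mean of precision and recall.

```python
from statistics import median
from typing import Callable, Hashable, Iterable, Set

Node = Hashable


def f1_score(output: Set[Node], target: Set[Node]) -> float:
    """F1 = 2 * precision * recall / (precision + recall) of output vs. target."""
    tp = len(output & target)
    if tp == 0:
        return 0.0
    precision = tp / len(output)
    recall = tp / len(target)
    return 2 * precision * recall / (precision + recall)


def median_f1_over_seeds(
    local_cluster: Callable[[Node], Set[Node]],  # hypothetical clustering routine
    target: Set[Node],
    seeds: Iterable[Node],
) -> float:
    """Run the method once per single seed node and report the median F1."""
    return median(f1_score(local_cluster(s), target) for s in seeds)
```

Using the median rather than the mean keeps a few unlucky seed nodes (for example, seeds on the boundary of the target cluster) from dominating the reported score.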

Experiments for general submodular cut-cost. To understand the importance of specialized general submodular hypergraphs, we study the node-ranking problem for the Florida Bay food network using the hypergraph modelling shown in Figure 1. We compare HFD using unit (U-HFD, ), cardinality-based (C-HFD, and ) and submodular (S-HFD, and ) cut-costs. Our goal is to find the species most similar to a queried species based on the food-network structure. Table 3 shows that S-HFD is the only method that provides meaningful node-ranking results. Intuitively, when , separating the preys from the predators incurs zero cost. This encourages S-HFD to diffuse mass only among preys or only among predators, rather than crossing from a predator to a prey or vice versa. As a result, similar species receive similar amounts of mass and are thus ranked similarly. In the local clustering setting, Table 3 compares HFD using different cut-costs. By exploiting specialized higher-order relations, S-HFD further improves F1 scores by up to 20% over U-HFD and C-HFD. This is not surprising, given the poor node-ranking results of the other cut-costs. In the appendix we show another application of submodular cut-cost for node-ranking in an international oil trade network.

Table 3: Top-2 node-ranking results and local clustering F1 scores on the Florida Bay food network.

| Method | Top-2 ranking (query: Raptors) | Top-2 ranking (query: Gray Snapper) | F1 (Prod.) | F1 (Low) | F1 (High) |
|---|---|---|---|---|---|
| U-HFD | Epiphytic Gastropods, Detriti. Gastropods | Meiofauna, Epiphytic Gastropods | 0.69 | 0.47 | 0.64 |
| C-HFD | Epiphytic Gastropods, Detriti. Gastropods | Meiofauna, Epiphytic Gastropods | 0.67 | 0.47 | 0.64 |
| S-HFD | Gruiformes, Small Shorebirds | Snook, Mackerel | 0.69 | 0.62 | 0.84 |
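To make the difference between the cut-cost families compared above concrete, the sketch below encodes a unit cut-cost and a cardinality-based cut-cost for a single hyperedge and checks submodularity by brute force. It is illustrative only: the cost profiles passed as `g` are hypothetical examples, and the parameters of the food-network cut-cost from Figure 1(b) are not reproduced. For a cardinality-based cost of the form w(S) = g(|S & e|), submodularity is equivalent to discrete concavity of g (non-increasing increments), which the checker confirms on small hyperedges.

```python
from itertools import combinations
from typing import Callable, FrozenSet, List, Sequence

CutCost = Callable[[FrozenSet[int]], float]


def all_subsets(elems: Sequence[int]) -> List[FrozenSet[int]]:
    """Every subset of `elems` (2^len(elems) of them), as frozensets."""
    return [frozenset(c) for r in range(len(elems) + 1) for c in combinations(elems, r)]


def unit_cut_cost(edge: FrozenSet[int]) -> CutCost:
    """w_e(S) = 1 if S splits the hyperedge e, and 0 otherwise."""
    def w(S: FrozenSet[int]) -> float:
        k = len(S & edge)
        return 0.0 if k in (0, len(edge)) else 1.0
    return w


def cardinality_cut_cost(edge: FrozenSet[int], g: Sequence[float]) -> CutCost:
    """w_e(S) = g[|S & e|]; g is assumed symmetric with g[0] = g[|e|] = 0."""
    assert len(g) == len(edge) + 1
    def w(S: FrozenSet[int]) -> float:
        return float(g[len(S & edge)])
    return w


def is_submodular(w: CutCost, edge: FrozenSet[int], tol: float = 1e-12) -> bool:
    """Brute-force check of w(A) + w(B) >= w(A | B) + w(A & B) over subsets of e."""
    subs = all_subsets(sorted(edge))
    return all(w(A) + w(B) >= w(A | B) + w(A & B) - tol for A in subs for B in subs)
```

For a 4-node hyperedge, the profile g = [0, 1, 2, 1, 0] is concave and passes the check, while g = [0, 1, 0, 1, 0] fails it (take A = {0, 1} and B = {1, 2}).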

References

- [1] I. Amburg, N. Veldt, and A. R. Benson. Clustering in graphs and hypergraphs with categorical edge labels. In Proceedings of the Web Conference, 2020.

- [2] R. Andersen, F. Chung, and K. Lang. Local graph partitioning using pagerank vectors. FOCS ’06 Proceedings of the 47th Annual IEEE Symposium on Foundations of Computer Science, pages 475–486, 2006.

- [3] Sanjeev Arora, Satish Rao, and Umesh Vazirani. Expander flows, geometric embeddings and graph partitioning. JACM, 56(2), April 2009.

- [4] F. Bach. Learning with submodular functions: A convex optimization perspective. Foundations and Trends in Machine Learning, 6(2-3):145–373, 2013.

- [5] A. Beck. On the convergence of alternating minimization for convex programming with applications to iteratively reweighted least squares and decomposition schemes. SIAM Journal on Optimization, 25(1):185–209, 2015.

- [6] A. R. Benson, D. F. Gleich, and J. Leskovec. Higher-order organization of complex networks. Science, 353(6295):163–166, 2016.

- [7] Austin Benson, David Gleich, and Desmond Higham. Higher-order network analysis takes off, fueled by classical ideas and new data. SIAM News (online), 2021.

- [8] Claude Berge. Hypergraphs: combinatorics of finite sets, volume 45. Elsevier, 1984.

- [9] S. Brin and L. Page. The anatomy of a large-scale hypertextual web search engine. Computer Networks and ISDN Systems, 30(1–7):107–117, 1998.

- [10] J. Bu, S. Tan, C. Chen, C. Wang, H. Wu, L. Zhang, and X. He. Music recommendation by unified hypergraph: combining social media information and music content. In MM ’10: Proceedings of the 18th ACM international conference on Multimedia, 2010.

- [11] T.-H. Hubert Chan, Anand Louis, Zhihao Gavin Tang, and Chenzi Zhang. Spectral properties of hypergraph laplacian and approximation algorithms. JACM, 65(3), March 2018.

- [12] Eli Chien, Pan Li, and Olgica Milenkovic. Landing probabilities of random walks for seed-set expansion in hypergraphs, 2019.

- [13] Uthsav Chitra and Benjamin Raphael. Random walks on hypergraphs with edge-dependent vertex weights. In Kamalika Chaudhuri and Ruslan Salakhutdinov, editors, Proceedings of the 36th International Conference on Machine Learning, volume 97 of Proceedings of Machine Learning Research, pages 1172–1181. PMLR, 09–15 Jun 2019.

- [14] Philip S. Chodrow, Nate Veldt, and Austin R. Benson. Generative hypergraph clustering: from blockmodels to modularity, 2021.

- [15] Ivar Ekeland and Roger Témam. Convex Analysis and Variational Problems. Society for Industrial and Applied Mathematics, 1999.

- [16] C. Eksombatchai, P. Jindal, J. Z. Liu, Y. Liu, R. Sharma, C. Sugnet, M. Ulrich, and J. Leskovec. Pixie: A system for recommending billion items to million users in real-time. WWW ’18: Proceedings of the 2018 World Wide Web Conference, pages 1775–1784, 2018.

- [17] C. Eksombatchai, J. Leskovec, R. Sharma, C. Sugnet, and M. Ulrich. Node graph traversal methods. U.S. Patent 10 762 134 B1, Sep. 2020, 2020.

- [18] K. Fountoulakis, M. Liu, D. F. Gleich, and M. W. Mahoney. Flow-based algorithms for improving clusters: A unifying framework, software, and performance. arXiv:2004.09608, 2020.

- [19] K. Fountoulakis, F. Roosta-Khorasani, J. Shun, X. Cheng, and M. W. Mahoney. Variational perspective on local graph clustering. Mathematical Programming B, pages 1–21, 2017.

- [20] K. Fountoulakis, D. Wang, and S. Yang. p-norm flow diffusion for local graph clustering. In Proceedings of the 37th International Conference on Machine Learning, 2020.

- [21] Debarghya Ghoshdastidar and Ambedkar Dukkipati. Consistency of spectral partitioning of uniform hypergraphs under planted partition model. In Z. Ghahramani, M. Welling, C. Cortes, N. Lawrence, and K. Q. Weinberger, editors, Advances in Neural Information Processing Systems, volume 27. Curran Associates, Inc., 2014.

- [22] P. Gupta, A. Goel, J. Lin, A. Sharma, D. Wang, and R. Zadeh. WTF: the who to follow service at twitter. WWW ’13: Proceedings of the 22nd international conference on World Wide Web, pages 505–514, 2013.

- [23] Scott W. Hadley. Approximation techniques for hypergraph partitioning problems. Discrete Appl. Math., 59(2):115–127, May 1995.

- [24] Koby Hayashi, Sinan G. Aksoy, Cheong Hee Park, and Haesun Park. Hypergraph random walks, laplacians, and clustering. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, CIKM ’20, pages 495–504, New York, NY, USA, 2020. Association for Computing Machinery.

- [25] Matthias Hein, Simon Setzer, Leonardo Jost, and Syama Sundar Rangapuram. The total variation on hypergraphs-learning on hypergraphs revisited. In Proceedings of the 26th International Conference on Neural Information Processing Systems-Volume 2, pages 2427–2435, 2013.

- [26] R. Ibrahim and D. F. Gleich. Local hypergraph clustering using capacity releasing diffusion. PLOS ONE, 15(12):1–20, 12 2020.

- [27] Edmund Ihler, Dorothea Wagner, and Frank Wagner. Modeling hypergraphs by graphs with the same mincut properties. Information Processing Letters, 45(4):171–175, 1993.

- [28] E. L. Lawler. Cutsets and partitions of hypergraphs. Networks, 3(3):275–285, 1973.

- [29] L. Li and T. Li. News recommendation via hypergraph learning: encapsulation of user behavior and news content. In WSDM ’13: Proceedings of the sixth ACM international conference on Web search and data mining, 2013.

- [30] P. Li and O. Milenkovic. Inhomogeneous hypergraph clustering with applications. In Advances in Neural Information Processing Systems, 2017.

- [31] P. Li and O. Milenkovic. Submodular hypergraphs: p-laplacians, cheeger inequalities and spectral clustering. In Proceedings of the 35th International Conference on Machine Learning, 2018.

- [32] Pan Li, Niao He, and Olgica Milenkovic. Quadratic decomposable submodular function minimization: Theory and practice. Journal of Machine Learning Research, 21(106):1–49, 2020.

- [33] M. Liu, N. Veldt, H. Song, P. Li, and D. F. Gleich. Strongly local hypergraph diffusions for clustering and semi-supervised learning. In TheWebConf 2021, 2021.

- [34] Anand Louis. Hypergraph markov operators, eigenvalues and approximation algorithms. STOC, pages 713–722, New York, NY, USA, 2015. Association for Computing Machinery.

- [35] Rossana Mastrandrea, Julie Fournet, and Alain Barrat. Contact patterns in a high school: A comparison between data collected using wearable sensors, contact diaries and friendship surveys. PLOS ONE, 10(9):e0136497, 2015.

- [36] Ron Milo, Shai Shen-Orr, Shalev Itzkovitz, Nadav Kashtan, Dmitri Chklovskii, and Uri Alon. Network motifs: simple building blocks of complex networks. Science, 298(5594):824–827, 2002.

- [37] J. Ni, J. Li, and J. McAuley. Justifying recommendations using distantly-labeled reviews and fine-grained aspects. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pages 188–197, 2019.

- [38] Jianmo Ni, Jiacheng Li, and Julian McAuley. Justifying recommendations using distantly-labeled reviews and fine-grained aspects. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pages 188–197, 2019.

- [39] L. Page, S. Brin, R. Motwani, and T. Winograd. The pagerank citation ranking: Bringing order to the web. Technical report, Stanford, 1999. Technical Report 1999-66, Stanford InfoLab.

- [40] E. Sadikov, J. Madhavan, L. Wang, and A. Halevy. Clustering query refinements by user intent. WWW ’10: Proceedings of the 19th international conference on World wide web, pages 841–850, 2010.

- [41] Arnab Sinha, Zhihong Shen, Yang Song, Hao Ma, Darrin Eide, Bo-June (Paul) Hsu, and Kuansan Wang. An overview of microsoft academic service (MAS) and applications. In Proceedings of the 24th International Conference on World Wide Web, 2015.

- [42] Yuuki Takai, Atsushi Miyauchi, Masahiro Ikeda, and Yuichi Yoshida. Hypergraph clustering based on pagerank. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pages 1970–1978, 2020.

- [43] N. Veldt, A. R. Benson, and J. Kleinberg. Minimizing localized ratio cut objectives in hypergraphs. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), 2020.

- [44] Nate Veldt, Austin R. Benson, and Jon Kleinberg. Hypergraph cuts with general splitting functions, 2020.

- [45] D. Wang, K. Fountoulakis, M. Henzinger, M. W. Mahoney, and S. Rao. Capacity releasing diffusion for speed and locality. Proceedings of the 34th International Conference on Machine Learning, 70:3607–2017, 2017.

- [46] Hao Yin, Austin R Benson, Jure Leskovec, and David F Gleich. Local higher-order graph clustering. In Proceedings of the 23rd ACM SIGKDD international conference on knowledge discovery and data mining, pages 555–564, 2017.

- [47] Yuichi Yoshida. Cheeger inequalities for submodular transformations. In Proceedings of the Thirtieth Annual ACM-SIAM Symposium on Discrete Algorithms, pages 2582–2601. SIAM, 2019.

- [48] Chenzi Zhang, Shuguang Hu, Zhihao Gavin Tang, and TH Hubert Chan. Re-revisiting learning on hypergraphs: confidence interval and subgradient method. In International Conference on Machine Learning, pages 4026–4034. PMLR, 2017.

- [49] Dengyong Zhou, Jiayuan Huang, and Bernhard Schölkopf. Learning with hypergraphs: Clustering, classification, and embedding. Advances in neural information processing systems, 19:1601–1608, 2006.

- [50] J. Y. Zien, M. D. F. Schlag, and P. K. Chan. Multilevel spectral hypergraph partitioning with arbitrary vertex sizes. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 18(9):1389–1399, 1999.

Appendices for: Local Hyper-Flow Diffusion

Outline of the Appendix:

- Appendix A contains supplementary material to Section 3 and Section 4 of the paper:
  - mathematical derivation of the dual diffusion problem;
  - proofs of Theorem 1 and Lemma 2.
- Appendix B contains supplementary material to Section 5 of the paper:
  - proof of Lemma 3;
  - convergence properties of Algorithm 1;
  - specialized algorithms for alternating minimization sub-problems of Algorithm 1.
- Appendix C contains supplementary material to Section 6 of the paper:
  - additional synthetic experiments using the -uniform hypergraph stochastic block model;
  - complete information about the real datasets considered in Section 6 of the paper;
  - experiments for local clustering using seed sets that contain more than one node;
  - experiments using 3 additional real datasets that are not discussed in the main paper;
  - parameter settings and implementation details.

Appendix A Approximation guarantee for local hypergraph clustering

In this section we prove a generalized and stronger version of Theorem 1 in the main paper, where the primal and dual diffusion problems are penalized by -norm and -norm, respectively, where and . Moreover, we consider a generic hypergraph with general submodular weights for any nonzero . All claims in the main paper are therefore immediate special cases when and for all .

Unless otherwise stated, we use the same notation as in the main paper. We generalize the definition of the degree of a node as

Note that when for all , the above definition reduces to , which is the number of hyperedges to which the node belongs.

Given where , , and a hyperparameter , our primal Hyper-Flow Diffusion (HFD) problem is written as

| (A.1) |

where

is the base polytope of . The vector gives the net amount of mass after routing. Note that we multiply by because we have normalized by in its definition.

Lemma 1.

The following optimization problem is dual to (A.1):

| (A.2) |

where is the support function of base polytope .

Proof.

Using convex conjugates, for , we have

| (A.3a) | ||||

| (A.3b) | ||||

Applying the definition of , we can write (A.3a) as

Therefore,

In the above derivations, we may exchange the order of minimization and maximization to arrive at the second-to-last equality, due to Proposition 2.2, Chapter VI, in [15]. The last equality follows from

∎

Notation. For the rest of this section, we reserve the notation and for optimal solutions of (A.1) and (A.2) respectively. If , we simply treat .

The next lemma relates primal and dual optimal solutions. We make frequent use of this relation throughout our discussion.

Lemma 2.

We have that for all . Moreover, if , then for all .

Proof.

Diffusion setup. Recall that we pick a scalar and set the source as

| (A.4) |

For convenience we restate the assumptions below.

Assumption A.1.

and for some .

Assumption A.2.

The source mass as specified in (A.4) satisfies , which gives .

Assumption A.3.

satisfies .

A.1 Technical lemmas

In this subsection we state and prove some technical lemmas that will be used for the main proof in the next subsection.

The following lemma characterizes the maximizers of the support function for a base polytope.

Lemma 3 (Proposition 4.2 in [4]).

Let be a submodular function such that . Let , with unique values , taken at sets (i.e., and , , ). Let be the associated base polytope. Then is optimal for if and only if for all , .
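Lemma 3 is the optimality condition behind Edmonds' greedy algorithm: sorting the entries of x in decreasing order and setting each coordinate of r to the marginal value of F along the resulting chain of level sets yields a maximizer of the inner product with x over the base polytope, and that maximizer is tight on every level set. The sketch below assumes F is submodular with F(empty set) = 0 and is supplied as a Python callable on frozensets; the helper names are hypothetical. It also cross-checks the answer against every greedy vertex, since for submodular F the vertices of the base polytope are exactly the greedy vertices over all orderings.

```python
from itertools import permutations
from typing import Callable, FrozenSet, List, Sequence, Tuple

SetFn = Callable[[FrozenSet[int]], float]


def greedy_vertex(F: SetFn, order: Sequence[int], n: int) -> List[float]:
    """Vertex of the base polytope B(F) obtained by adding nodes in `order`."""
    r = [0.0] * n
    prefix: FrozenSet[int] = frozenset()
    prev = F(prefix)  # assumed to equal 0
    for j in order:
        prefix = prefix | {j}
        cur = F(prefix)
        r[j] = cur - prev  # marginal value of adding node j to the current level set
        prev = cur
    return r


def support_maximizer(F: SetFn, x: Sequence[float]) -> Tuple[List[float], float]:
    """Maximizer r of <x, r> over B(F) (greedy on decreasing x) and the support value."""
    order = sorted(range(len(x)), key=lambda j: -x[j])
    r = greedy_vertex(F, order, len(x))
    return r, sum(xi * ri for xi, ri in zip(x, r))


def brute_force_support(F: SetFn, x: Sequence[float]) -> float:
    """Max of <x, r> over all greedy vertices; exponential, for sanity checks only."""
    n = len(x)
    return max(
        sum(xi * ri for xi, ri in zip(x, greedy_vertex(F, p, n)))
        for p in permutations(range(n))
    )
```

For the unit cut-cost of a single hyperedge, the greedy vertex puts +1 on the node with the largest entry of x and -1 on the node with the smallest, so the support value is max(x) - min(x).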

Recall that and denote the optimal solutions of (A.1) and (A.2) respectively. We start with a lemma on the locality of the optimal solutions.

Lemma 4 (Lemma 2 in the main paper).

We have

Moreover, if , then .

Proof.

To see the first inequality, note that if for every for some , then . By Lemma 2, this means . Thus, only if there is some such that . Therefore, we have that

To see the last inequality, note that, by the first-order optimality condition of (A.2), if then we must have

| (A.5) |

Denote and . Note that , and , that is, contain all hyperedges that are incident to some node in . Moreover, we have that for any ,

where for follows from Lemma 3, since for and for . The equality for follows from because and for all .

Taking sums over on both sides of equation (A.5) we obtain

The second equality follows from for all . This proves .

Finally, if , then it follows from Lemma 2 that for all . ∎

The following inequality is a special case of Hölder’s inequality for degree-weighted norms. It will be useful later.

Lemma 5.

For and we have that

Proof.

Let . Applying Hölder’s inequality, we have

∎

Lemma 6 (Lemma I.2 in [31]).

For any and , one has

where

Recall that the objective function of our primal diffusion problem (A.1) consists of two parts. The first part is and penalizes the cost of flow routing; the second part is and penalizes the cost of excess mass. An immediate consequence of Lemma 6 is the inequality in Lemma 7, which relates the cost of optimal flow routing to the cost of excess mass at optimality.

For , recall that the sweep sets are defined as .

Let and denote . That is, for some and for all .

Lemma 7.

For and we have that

Given a vector and a set , recall that we write . This actually defines a modular set-function taking input on subsets of . The Lovász extension of modular function is simply [4]. Since all modular functions are also submodular, we arrive at the following lemma that follows from a classical property of the Choquet integral/Lovász extension.

Lemma 8.

We have that

Proof.

Recall that, by definition, where is the degree vector. and are modular functions on and is a submodular function on . The Lovász extension of and are and , respectively. The Lovász extension of is . The results then follow immediately from representing the Lovász extensions using Choquet integrals. See, e.g., Proposition 3.1 in [4]. ∎
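Lemma 8 rests on the Choquet-integral representation of the Lovász extension: for nonnegative x and F(empty set) = 0, the extension equals the integral over t >= 0 of F({v : x_v >= t}), so it can be read off the sweep (level) sets. The minimal sketch below compares this level-set form with the usual sorted (greedy) form. F is assumed to be a Python callable on frozensets with F(empty set) = 0, and the modular example in the usage note uses made-up degrees.

```python
from typing import Callable, FrozenSet, Sequence

SetFn = Callable[[FrozenSet[int]], float]


def lovasz_greedy(F: SetFn, x: Sequence[float]) -> float:
    """Sum over k of x_{j_k} * [F(S_k) - F(S_{k-1})], with x sorted decreasingly."""
    order = sorted(range(len(x)), key=lambda j: -x[j])
    total, prefix, prev = 0.0, frozenset(), F(frozenset())
    for j in order:
        prefix = prefix | {j}
        cur = F(prefix)
        total += x[j] * (cur - prev)
        prev = cur
    return total


def lovasz_level_sets(F: SetFn, x: Sequence[float]) -> float:
    """Choquet form for x >= 0: integral over t >= 0 of F({v : x_v >= t}).

    The level set is constant for t in (t_{k+1}, t_k], so the integral is a finite sum.
    """
    if any(v < 0 for v in x):
        raise ValueError("this form is stated here for nonnegative x")
    ts = sorted(set(x), reverse=True) + [0.0]
    total = 0.0
    for hi, lo in zip(ts, ts[1:]):
        level = frozenset(v for v in range(len(x)) if x[v] >= hi)
        total += (hi - lo) * F(level)
    return total
```

For a modular function such as the degree-weighted volume F(S) = sum of d_v over v in S, both forms reduce to the inner product of d and x; for example, with d = [2, 1, 4] and x = [3, 1, 2] both return 15.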

A.2 Proof of Theorem 1 in the main paper

Let us recall that the sweep sets are defined as .

Recall that is such that for some and for all . We will assume without loss of generality that , as otherwise and the statement in Theorem 9 already holds.

Denote , the cost of optimal flow routing. The following claim states that must be large.

Claim A.1.

Proof.

The proof of this claim follows from a case analysis on the total amount of excess mass at optimality. Intuitively, if the excess is small, then naturally there must be a large amount of flow in order to satisfy the primal constraint; if the excess is large, then Lemma 7 and Lemma 5 guarantee that flow is also large. We give details below.

Suppose that . Note that this also includes the case where . By Assumption A.2, there is at least amount of source mass trapped in at the beginning. Moreover, the primal constraint ensures that the nodes in can settle at most amount of mass. Therefore, the remaining (at least) amount of mass needs to get out of using the hyperedges in . That is, the net amount of mass that moves from to satisfies . We focus on the cost of restricted to these hyperedges alone. It is easy to see that

| (A.6a) | ||||

| (A.6b) | ||||

| (A.6c) | ||||

The first inequality follows because restricted to is a feasible solution in problem (A.6a). The second inequality follows because implies , therefore every feasible solution for (A.6a) is also a feasible solution for (A.6b). The third inequality follows because . Let be an optimal solution of problem (A.6c). The optimality condition of (A.6c) is given by (we may assume the factor in the gradient of is absorbed into multipliers and )

| (A.7) |

If , then the conditions in (A.7) imply that , but then by complementary slackness we would obtain for all , which would violate feasibility. Therefore we must have , and consequently, we have that

| (A.8) |

Moreover, the conditions in (A.7) imply that for , if and only if , and hence we have that

| (A.9) |

Rearranging (A.9), we get

Substituting the above into (A.8),

this gives

Therefore, the solution for (A.6c) is given by

and hence,

where the last inequality follows because and .

To connect with , we define the length of a hyperedge as

The next claim follows from simple algebraic computations and the locality of solutions in Lemma 4.

Claim A.2.

.

Proof.

For , define . Then . Moreover,

The first inequality follows from the fact that only if , and by Lemma 2, if and only if . The second and the third inequalities are due to Lemma 4. The second-to-last equality follows from the diffusion setting (A.4) and Assumption A.2 that . The last inequality follows from Assumption A.1. Therefore,

where the last equality follows from Lemma 2 that . ∎

By the strong duality between (A.1) and (A.2), we know that

Hence, by Lemma 2, we get

It then follows that

| (A.10) |

We can write the left-most ratio in (A.10) in its integral form, as follows. By Lemma 8, we have

and

where the last equality follows from the fact that for . Therefore, we get

which means that there exists such that

| (A.11) |

Appendix B Optimization algorithm for HFD

In this section we give details on an Alternating Minimization (AM) algorithm [5] that solves the primal problem (A.1). In Algorithm B.1 we write the basic AM steps in a slightly more general form than what is given by Algorithm 1 in the main paper. The key observation is that the AM method provides a unified framework to solve HFD, when the objective function of the primal problem (A.1) is penalized by any -norm for .

Initialization:

For do:
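To illustrate the alternating-minimization template of [5] that Algorithm B.1 instantiates (exactly minimize over one block of variables with the other held fixed, then swap), here is a toy sketch on a two-variable strongly convex quadratic. The objective `f` is a hypothetical stand-in chosen so that both block updates have closed forms; it is not the HFD objective in (B.4), whose block updates come from Lemma 1 and problem (B.7). As with Algorithm B.1, exact block minimization means the objective never increases from one iteration to the next.

```python
from typing import List, Tuple


def f(x: float, y: float) -> float:
    """Toy strongly convex objective; its unique minimizer is x = y = 1/3."""
    return x * x + y * y + x * y - x - y


def alternating_minimization(
    iters: int, x: float = 0.0, y: float = 0.0
) -> Tuple[float, float, List[float]]:
    """Exact block minimization: update x with y fixed, then y with x fixed."""
    history = [f(x, y)]
    for _ in range(iters):
        x = (1.0 - y) / 2.0  # argmin over x: solves df/dx = 2x + y - 1 = 0
        y = (1.0 - x) / 2.0  # argmin over y: solves df/dy = 2y + x - 1 = 0
        history.append(f(x, y))
    return x, y, history
```

Here each full sweep shrinks the distance to the minimizer by a factor of 4, so a few dozen iterations reach machine precision; for HFD, Theorem 3 below only guarantees a sub-linear rate.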

Let us remind the reader of the definitions and notation that we will use. We consider a generic hypergraph where are submodular hyperedge weights. For each , we define a diagonal matrix such that if and 0 otherwise. We use the notation to represent a vector in the space , where each corresponds to a block in indexed by . For a vector , is the entry in that corresponds to . For a vector , where the maximum is taken entry-wise.

We denote .

We will prove the equivalence between the primal diffusion problem (A.1) and its separable reformulation shortly, but let us start with a simple lemma that gives closed-form solution for one of the AM sub-problems.

Lemma 1.

The optimal solution to the following problem

| (B.1) |

is given by

| (B.2) |

Proof.

Rewrite (B.1) as

| s.t. | |||

Then it is immediate to see that (B.1) decomposes into sub-problems indexed by ,

| (B.3) |

where is the set of hyperedges incident to , and we use for the entry in that corresponds to . Let denote the optimal solution for (B.3). We have that if and otherwise. Therefore, it suffices to find for . The optimality condition of (B.3) is given by

where

There are two cases for . We show that in both cases the solution given by (B.2) is optimal.

Case 1. If , then we must have that for all (otherwise, the stationarity condition would be violated). This means that for all , that is, for every . Denote . Because , by complementarity we have

which implies that . Note that because and for all . Therefore we have that

Case 2. If , then we have that for all , which implies for all . Then we must have

Therefore we still have that

The required result then follows from the definition of and . ∎

We are now ready to show that the primal problem (A.1) can be cast into an equivalent separable formulation, which can then be solved by the AM method in Algorithm B.1. We give the reformulation under general -norm penalty and arbitrary .

Lemma 2 (Lemma 3 in the main paper).

Proof.

We will show the forward direction and the converse follows from exactly the same reasoning. Let and denote the optimal objective value of problems (A.1) and (B.4), respectively. Let be an optimal solution for (A.1). Define for . We show that is an optimal solution for (B.4).

Because for all , by the definition of , we know that for all . Moreover,

so

Therefore, is a feasible solution for (B.4). Furthermore,

This means that attains objective value in (B.4). Hence .

In order to show that is indeed optimal for (B.4), it remains to show that . Let be an optimal solution for (B.4). Then we know that

| (B.5) |

According to Lemma 1, we know that

| (B.6) |

Define . Then . Moreover, we have that

so

Therefore, is a feasible solution for (A.1). Furthermore,

This means that attains objective value in (A.1). Hence . ∎

Remark. The constructive proof of Lemma 2 means that, given an optimal solution for problem (B.4), one can recover an optimal solution for our original primal formulation (A.1) via . It then follows from Lemma 2 that the dual optimal solution is given by . Therefore, a sweep cut rounding procedure readily applies to the solution of problem (B.4).
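The sweep-cut rounding mentioned in the remark can be sketched as follows: scan the level sets {v : x_v >= t} of the dual vector x over decreasing positive thresholds t and return the one with the smallest conductance. This is a minimal illustration, not the authors' implementation. It assumes unit cut-costs and unit hyperedge weights (so a node's degree is the number of hyperedges containing it) and takes conductance to be cut(S) / min(vol(S), vol(V \ S)); the function names are hypothetical.

```python
from typing import FrozenSet, List, Sequence, Set, Tuple

Hyperedge = FrozenSet[int]


def unit_cut(S: Set[int], edges: Sequence[Hyperedge]) -> int:
    """Unit cut-cost: number of hyperedges with nodes both inside and outside S."""
    return sum(1 for e in edges if (e & S) and (e - S))


def unit_degrees(edges: Sequence[Hyperedge], n: int) -> List[int]:
    """Unit-weight degree: number of hyperedges containing each node."""
    d = [0] * n
    for e in edges:
        for v in e:
            d[v] += 1
    return d


def sweep_cut(x: Sequence[float], edges: Sequence[Hyperedge], n: int) -> Tuple[Set[int], float]:
    """Best sweep set {v : x_v >= t} over positive thresholds t, by conductance."""
    d = unit_degrees(edges, n)
    total_vol = sum(d)
    best_set: Set[int] = set()
    best_phi = float("inf")
    for t in sorted({xv for xv in x if xv > 0}, reverse=True):
        S = {v for v in range(n) if x[v] >= t}  # tied nodes enter together
        vol = sum(d[v] for v in S)
        denom = min(vol, total_vol - vol)
        if denom == 0:  # conductance undefined when one side has zero volume
            continue
        phi = unit_cut(S, edges) / denom
        if phi < best_phi:
            best_set, best_phi = S, phi
    return best_set, best_phi
```

Only nodes with positive x are scanned, which mirrors the locality in Lemma 4: the rounding touches only the support of the dual solution rather than the whole hypergraph.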

Let denote the objective function of problem (B.4) and let denote its optimal objective value.

The following theorem gives the convergence rate of Algorithm B.1 applied to (B.4), when its objective function is penalized by -norm for .

Theorem 3 ([5]).

Let be the sequence generated by Algorithm B.1. Then for any ,

where

where and denote the feasible set and set of optimal solutions, respectively, , and .

Remark. When , as considered in the main paper, the objective function has Lipschitz continuous gradient with constant . When , the gradient of is not generally Lipschitz continuous. However, the sub-linear convergence rate in Theorem 3 applies as long as is block Lipschitz smooth in the sub-level sets containing the iterates generated by Algorithm B.1. We give more details in Subsection B.1.

B.1 Block Lipschitz smoothness over sub-level set

Recall that denotes the objective function of problem (B.4). Lemma 4 concerns the specific setting in which problem (B.4) is penalized by the -norm for some .

Lemma 4 (Block Lipschitz smoothness).

The partial gradient is Lipschitz continuous over the sub-level sets (given any fixed )

with constant such that

where and . The partial gradient is Lipschitz continuous over the sub-level sets (given any fixed )

with constant .

Proof.

Fix and consider

The function is coordinate-wise separable, and hence its second-order derivative is a diagonal matrix. Therefore, the largest eigenvalue of is the largest coordinate-wise second-order partial derivative, that is,

So it suffices to upper bound and for all . We have that

where . It follows that for all ,

because otherwise we would have . Hence,

Finally, by the symmetry between and in , we know that . ∎

Remark. Because the iterates generated by Algorithm B.1 monotonically decrease the objective function value, we have in particular that

for any . Therefore, the sequence of iterates lives in the sub-level sets. As a result, for any , the block Lipschitz smoothness within sub-level sets suffices to obtain the sub-linear convergence rate for the AM method [5].

B.2 Alternating minimization sub-problems

We now discuss how to solve the sub-problems in Algorithm B.1 efficiently. By Lemma 1, we know that the sub-problem with respect to ,

has closed-form solution

For the sub-problem with respect to ,

note that it decomposes into independent problems that can be minimized separately. That is, for , we have

| (B.7) |

The above problem (B.7) is strictly convex so it has a unique minimizer.

We focus on first. In this case, problem (B.7) can be solved in sub-linear time using either the conic Frank-Wolfe algorithm or the conic Fujishige-Wolfe minimum norm algorithm studied in [32]. Notice that the dimension of problem (B.7) is the size of the corresponding hyperedge. Therefore, as long as the hyperedge is not extremely large, we can easily obtain a good update .

If has a special structure, for example, if the hyperedge weight models unit cut-cost, then an exact solution for (B.7) can be computed in time [32]. For completeness we transfer the algorithmic details in [32] to our setting and list them in Algorithm B.2. The basic idea is to find optimal dual variables achieving dual optimality, and then recover primal optimal solution from the dual. We refer the reader to [32] for detailed justifications. Given , , and , denote

Define

Now we discuss the case in (B.7). The dual of (B.7) is written as

| (B.8) |

Let and be optimal solutions of (B.7) and (B.8), respectively. Then one has

Both the derivation of (B.8) and the above relations between and follow from reasoning and algebraic computations similar to those used in the proofs of Lemma 1 and Lemma 2. Therefore, we can use a subgradient method to compute first and then recover and . For special cases like the unit cut-cost, an approach similar to Algorithm B.2 can be adopted to obtain an almost exact solution (up to a binary-search tolerance), by modifying Steps 2-6 to work with the general -norm and replacing Step 10 with a binary search. See Algorithm B.3 for details.

Caution. To simplify notation in Algorithm B.3, for and , is to be interpreted as , where we treat . For , we define

Appendix C Empirical set-up and results

C.1 Experiments using synthetic data

In this subsection we provide details about how we generate synthetic hypergraphs using the -uniform stochastic block model and how we set the parameters for the algorithms used in our experiments. We also provide additional synthetic experiments that demonstrate or explain the robustness of our method.

Data generation. We generate four sets of hypergraphs using the generalized HSBM described in the main paper. All hypergraphs have nodes. For simplicity, we require that each block in the hypergraph has constant size 50.

1st set of hypergraphs. We generate the first set of hypergraphs with , constant and varying . Recall that for there is only one possible inter-cluster probability . We pick so the expected number of intra-cluster hyperedges is 1500 for each block of size 50. We set a wide range for so that the interval covers both extremes, i.e., when the ground-truth target cluster is very clean or very noisy. These hypergraphs are used to evaluate the performance of algorithms for the unit cut-cost setting when the target cluster conductance varies. Figure 4 in the main paper uses the local clustering results on these hypergraphs.
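The choice of the intra-cluster probability above is simple arithmetic: a block of 50 nodes contains C(50, k) candidate k-node hyperedges, so an expected 1500 intra-cluster hyperedges per block requires p = 1500 / C(50, k); for example, p = 1500/19600 ≈ 0.0765 when k = 3. The sketch below computes p and samples a two-block k-uniform hypergraph. It is a simplifying assumption relative to the generalized HSBM of the main paper: every inter-cluster k-subset here uses a single probability q, regardless of how it is split between blocks, and the function names are hypothetical.

```python
import random
from itertools import combinations
from math import comb
from typing import List, Tuple


def intra_probability(expected_intra: float, block_size: int, k: int) -> float:
    """p such that one block has `expected_intra` intra-cluster k-edges in expectation."""
    p = expected_intra / comb(block_size, k)
    if p > 1:
        raise ValueError("block too small for the requested number of hyperedges")
    return p


def sample_k_uniform_hsbm(
    n_blocks: int, block_size: int, k: int, p: float, q: float, seed: int = 0
) -> Tuple[List[Tuple[int, ...]], List[int]]:
    """Include each k-subset with prob. p if it lies inside one block, else with prob. q."""
    rng = random.Random(seed)
    n = n_blocks * block_size
    block = [v // block_size for v in range(n)]  # node v belongs to block v // block_size
    edges: List[Tuple[int, ...]] = []
    for e in combinations(range(n), k):  # enumerates all C(n, k) candidates
        same = all(block[v] == block[e[0]] for v in e)
        if rng.random() < (p if same else q):
            edges.append(e)
    return edges, block
```

Enumerating all C(n, k) candidates is only practical for small n and k; it keeps the sketch obviously correct rather than fast.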

2nd set of hypergraphs. For the second set of hypergraphs, we vary . Moreover, we set , so every inter-cluster hyperedge contains a single node on one side and the rest on the other side. In this setting, separating the two ground-truth communities will incur a small penalty using the cardinality cut-cost, but a large penalty using the unit cut-cost. Therefore, methods that exploit appropriate cardinality-based cut-cost should perform better. The hypergraphs are sampled so that the conductance of a block stays the same across different ’s. We compute the conductance based on the unit cut-cost when generating the hypergraphs, because the scale of conductance based on the unit cut-cost is less affected by than the scale of conductance based on the cardinality cut-cost. See details below for how the scale of conductance based on the cardinality cut-cost is affected by . The second set of hypergraphs is used to evaluate the performance of algorithms for both unit and cardinality cut-costs when the hyperedge size varies. Figure 5 in the main paper (and Figure C.3 and Figure C.4 in the appendix) uses the local clustering results on these hypergraphs.

3rd set of hypergraphs. For the third set of hypergraphs, we set . We consider constant or , constant and varying . These hypergraphs are used to evaluate the performance of algorithms for both unit and cardinality cut-costs when the target cluster conductance varies. Figure C.1 and Figure C.2 in the appendix are based on the local clustering results on these hypergraphs.

4th set of hypergraphs. This set consists of two hypergraphs generated with , and . The ground-truth target cluster in the first hypergraph has conductance 0.05, while the ground-truth target cluster in the second hypergraph has conductance 0.3. These two hypergraphs are used to compare the performance of algorithms in the unit cut-cost setting when the theoretical assumptions of LH hold (first hypergraph) or fail (second hypergraph).

Parameters. For HFD, for all synthetic experiments, we initialize the seed mass so that is three times the volume of the target cluster (recall from Assumption 2 that this is without loss of generality). We set . We tune the parameters for LH as suggested by the authors [33]. Specifically, LH has a regularization parameter and we let where is the ratio between the number of seed node(s) and the size of the target cluster. We perform a binary search on and find that gives good results for the synthetic hypergraphs. An important parameter for LH is . When it models the unit cut-cost, and when it models the cardinality-based cut-cost with an upper bound [33]. We consider both cases (U-LH) and (C-LH). In principle, for -uniform hypergraphs LH should produce the same result for any , so one could simply set for C-LH. However, in our experiments we find that the value that gives the best clustering results can be much larger than . In order to get the best performance out of C-LH, we run C-LH for , . Among the 13 output clusters from C-LH, we pick the one with the lowest conductance. For ACL, we use the same set of parameter values as in [33] because that parameter setting also produces good results in our synthetic experiments.

Scale of cardinality-based conductance. To see how the ground-truth conductance (computed using the cardinality cut-cost) scales with the hyperedge size , let us assume that a hypergraph , having nodes and two blocks where each block contains 50 nodes, is generated from , and . In this case, the hypergraph consists of all and only inter-cluster hyperedges. Let denote a target cluster, that is, is either one of the two ground-truth blocks. Since we have nodes and each of the two blocks contains nodes, the total number of hyperedges is

Let denote the cardinality-based cut-cost given by . Then for each we have that . Moreover, the volume of is

and hence we have

This means that, for any , , , let be one of the two blocks in , then for , for , and for . This explains why the ranges of ground-truth conductance we consider in Figure C.1 and Figure C.2 are different from the range of ground-truth conductance in Figure 4 in the main paper. For each we try to make the range of conductance (i.e., -axis) as wide as possible, but due to the different scales of cardinality-based conductance for different , the ranges vary accordingly.
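The sketch below checks this kind of scaling numerically on a scaled-down instance. It rests on our reading of the construction, because the exact generator parameters are not reproduced in this appendix. The assumptions are:

- Every k-subset with exactly one node in one block and k - 1 in the other is present.
- A node's degree is its number of incident hyperedges.
- Conductance is the total cut-cost of C divided by min(vol(C), vol(V \ C)).
- The cardinality cut-cost is the normalized min(|e ∩ C|, |e \ C|) / floor(|e|/2).

Under these assumptions, the unit conductance of a block is 2/k and the cardinality-based conductance is 2/(k * floor(k/2)) for any block size of at least k - 1. The second scale therefore shrinks much faster with k.

```python
from itertools import combinations

def unit_cut_cost(edge, S):
    inside = len(edge & S)
    return 1.0 if 0 < inside < len(edge) else 0.0

def cardinality_cut_cost(edge, S):
    # Assumed normalized cardinality cut-cost (hyperedges have >= 2 nodes).
    inside = len(edge & S)
    return min(inside, len(edge) - inside) / (len(edge) // 2)

def one_vs_rest_hypergraph(m, k):
    """All k-subsets with exactly one node in one block and k - 1 in the other."""
    A, B = range(m), range(m, 2 * m)
    edges = set()
    for X, Y in ((A, B), (B, A)):
        for u in X:
            for rest in combinations(Y, k - 1):
                edges.add(frozenset((u, *rest)))
    return edges

def conductance(edges, C, cut_cost):
    """Total cut-cost of C divided by min(vol(C), vol(complement of C))."""
    cut = sum(cut_cost(e, C) for e in edges)
    vol_C = sum(len(e & C) for e in edges)     # sum of degrees inside C
    vol_rest = sum(len(e - C) for e in edges)  # sum of degrees outside C
    return cut / min(vol_C, vol_rest)

m = 8              # block size, scaled down from 50 so enumeration stays cheap
C = set(range(m))  # target cluster = first block
for k in (3, 4, 6):
    E = one_vs_rest_hypergraph(m, k)
    print(k, conductance(E, C, unit_cut_cost), conductance(E, C, cardinality_cut_cost))
```

With m = 8 the loop should report 2/3 and 2/3 for k = 3, 0.5 and 0.25 for k = 4, and 1/3 and 1/9 for k = 6 (up to floating-point rounding).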

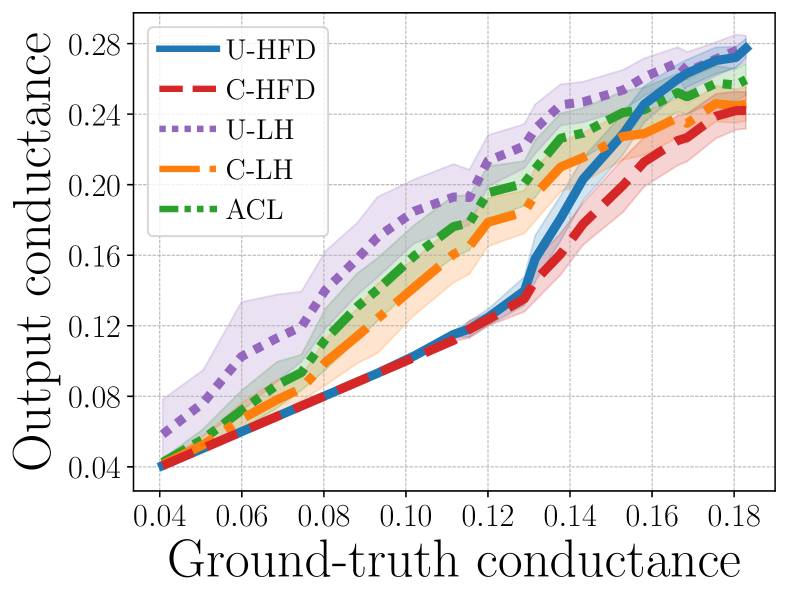

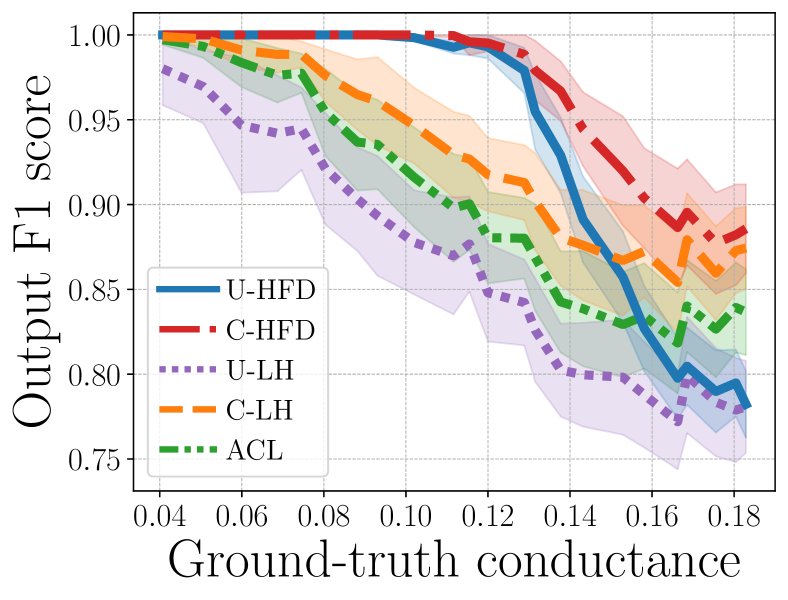

Additional results. Figure C.1 and Figure C.2 show how the algorithms perform on -uniform hypergraphs for , respectively, as we vary the target cluster conductance. The plots show that as the target cluster becomes noisier, the performance of all methods degrades. However, C-HFD is better in terms of both conductance and F1 score, especially when the target cluster is noisy but not pure noise (i.e., the ground-truth conductance is high but not too high). For and the high-conductance regime, methods that use the unit cut-cost, e.g., U-HFD, perform poorly because they find low-conductance clusters based on the unit cut-cost as opposed to the cardinality cut-cost. In general, lower unit cut-cost conductance does not necessarily translate to lower cardinality-based conductance or a higher F1 score. For both Figure C.1 and Figure C.2, the ground-truth conductance is computed using the cardinality-based cut-cost; therefore, the ground-truth conductances (on the -axes) have different scales and ranges. Figure C.3 and Figure C.4 show the median (markers) and the 25th-75th percentiles (lower-upper bars) of conductance ratios and F1 scores for . The target clusters have unit cut-cost conductance around 0.2 for Figure C.3 and 0.25 for Figure C.4. Notice that when the target clusters are less noisy (cf. Figure 5 in the main paper, where the target clusters are noisier, with unit conductance around 0.3), U-HFD and C-HFD are significantly better than other methods. The performance of U-HFD is slightly affected by the hyperedge size when the target clusters have unit conductance around 0.25, while the performance of C-HFD stays the same across all ’s.
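The summaries in these figures combine two per-run metrics. The sketch below assumes F1 is the usual harmonic mean of precision and recall between the output cluster and the target cluster. It also assumes the conductance ratio is the output conductance divided by the ground-truth conductance; that definition is our assumption. The percentiles use Python's `statistics.quantiles` with its default method, which may differ slightly from the plotting code used for the figures.

```python
from statistics import median, quantiles

def f1_score(found: set, truth: set) -> float:
    """Harmonic mean of precision and recall of a recovered cluster."""
    tp = len(found & truth)
    if tp == 0:
        return 0.0
    precision = tp / len(found)
    recall = tp / len(truth)
    return 2 * precision * recall / (precision + recall)

def conductance_ratio(phi_found: float, phi_truth: float) -> float:
    """Assumed definition: output conductance over ground-truth conductance."""
    return phi_found / phi_truth

def summarize(values):
    """(25th percentile, median, 75th percentile) of repeated-run results."""
    q1, _, q3 = quantiles(values, n=4)  # default 'exclusive' method
    return q1, median(values), q3

truth = set(range(50))
runs = [set(range(s, s + 50)) for s in (0, 5, 10, 20, 25)]  # toy outputs
print(summarize([f1_score(found, truth) for found in runs]))
```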

Why is the empirical performance of U-HFD better than U-LH? For the unit cut-cost setting, the local clustering guarantee for HFD holds under much weaker assumptions than those required for LH. The assumptions for LH can fail in many cases. Consequently, U-HFD performs significantly better than U-LH in the experiments with both synthetic and real data.

More specifically, the theoretical framework for LH assumes that the node embeddings are global (i.e., the solution is dense). However, to obtain a localized algorithm, the authors use a regularization parameter to impose sparsity in the solution. The localized algorithm computes a sparse approximation to the original global solution, but it can also introduce some clustering errors. In general, this does not seem to be a major issue: localization affects the clustering performance only slightly, as shown in Figure C.5.

A more crucial limitation of LH is that its approximation guarantee relies on a strong condition: the conductance of the target cluster must be upper bounded by , where is a tuning parameter and is a constant that depends on both and a specific sampling strategy for selecting a seed node from the target cluster. In our experiments, we find that this assumption often breaks. The following simple example with synthetic hypergraphs illustrates this. First, we sample a sequence of hypergraphs using HSBM with nodes, two ground-truth communities each consisting of 50 nodes, constant , varying and . For each hypergraph, we identify one ground-truth community as the target cluster and select a seed node uniformly at random from it. We compute the quantity and find that it is always less than 0.12 for any . Hence, for the assumption of LH to hold, the target cluster must have conductance no more than 0.12. This is a very strict requirement that does not hold in general.
To compare the performance of LH when its assumption holds and when it fails, we pick two hypergraphs that correspond to these two scenarios (i.e., the fourth set of hypergraphs that we generate). Their target clusters have conductance 0.05 and 0.3, respectively. Therefore, the assumption for LH holds for the first hypergraph but fails for the second.

We consider both global and localized solutions for LH. The global solution shows the performance of LH under the required theoretical framework. The localized solution shows what happens in practice when one uses a sparse approximation for computational efficiency. For LH, we compute the global solution by simply setting the regularization parameter to 0. For the localized solution, we tune and set , where is the ratio between the number of seed node(s) and the size of the target cluster. The way we pick is similar to the authors’ choice for LH. For HFD, we set and an initial mass of 3 times the volume of the target cluster.

We run both methods multiple times, each time using a different node from the target cluster as the single seed node. The median and the lower and upper quantiles of F1 scores are shown in Figure C.5. For LH, observe two things:

(i) On both hypergraphs, whether the assumption holds or fails, localizing the solution slightly reduces the F1 score.

(ii) For both global and localized solutions, LH performs much worse on the hypergraph where its assumption does not hold.

On the other hand, HFD perfectly recovers the target clusters in both settings.

C.2 Experiments using real-world data

C.2.1 Datasets and ground-truth clusters

We provide complete details on the real hypergraphs we used in the experiments. The last three datasets are used for additional experiments in the appendix only.

Amazon-reviews [38, 43]. This is a hypergraph constructed from Amazon product review data, where each node represents a product. A set of products is connected by a hyperedge if they were reviewed by the same person. We use product category labels as ground-truth cluster identities. In total there are 29 product categories. Because we are mostly interested in local clustering, we consider all clusters consisting of fewer than 10,000 nodes.

Trivago-clicks [14]. The nodes in this hypergraph are accommodations/hotels. A set of nodes is connected by a hyperedge if a user performed a “click-out” action on each of them during the same browsing session, which means the user was forwarded to a partner site. We use geographical locations as ground-truth cluster identities. There are 160 such clusters. We consider all clusters in this dataset that consist of fewer than 1,000 nodes and have conductance less than 0.25.

Florida Bay food network [30]. Nodes in this hypergraph correspond to different species or organisms that live in the Bay, and hyperedges correspond to transformed network motifs of the original dataset. Each species is labelled according to its role in the food chain.

High-school-contact [35, 14]. Nodes in this hypergraph represent high school students. A group of people is connected by a hyperedge if they were all in proximity of one another at a given time, based on data from sensors worn by students. We use the classroom to which a student belongs as ground truth. In total there are 9 classrooms.

Microsoft-academic [41, 1]. The original co-authorship network is a subset of the Microsoft Academic Graph, where nodes are authors and each hyperedge corresponds to a publication and contains its authors. We take the dual of the original hypergraph by converting hyperedges to nodes and nodes to hyperedges. After constructing the dual hypergraph, we removed all hyperedges containing just one node and kept the largest connected component. In the resulting hypergraph, each node represents a paper and is labelled by its publication venue. A set of papers is connected by a hyperedge if they share a common coauthor. We combine similar computer science conferences into four broader categories: Data (KDD, WWW, VLDB, SIGMOD), ML (ICML, NeurIPS), TCS (STOC, FOCS), CV (ICCV, CVPR).
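The dualization and cleanup steps can be sketched as follows. The sketch assumes the original co-authorship data is a list of author sets, one per paper. Each author becomes a hyperedge over the papers they coauthored, and single-node hyperedges are dropped. Only hyperedges in the largest connected component, found with a union-find, are kept. The function names are hypothetical, and ties between equally large components are broken arbitrarily.

```python
from collections import defaultdict

def dual_hypergraph(papers):
    """papers: list of author sets, one per paper (the original hyperedges).

    In the dual, paper i becomes node i and each author becomes a hyperedge
    over the papers they coauthored; single-node hyperedges are dropped.
    """
    papers_of = defaultdict(set)
    for paper_id, authors in enumerate(papers):
        for author in authors:
            papers_of[author].add(paper_id)
    return [frozenset(p) for p in papers_of.values() if len(p) > 1]

def largest_component(hyperedges):
    """Keep only hyperedges in the largest connected component (union-find)."""
    if not hyperedges:
        return []
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for e in hyperedges:
        anchor = find(next(iter(e)))  # merge every node of e into one set
        for v in e:
            root = find(v)
            if root != anchor:
                parent[root] = anchor
    components = defaultdict(set)
    for v in list(parent):
        components[find(v)].add(v)
    keep = max(components.values(), key=len)
    return [e for e in hyperedges if next(iter(e)) in keep]

papers = [{"a", "b"}, {"b", "c"}, {"b", "d"}, {"e"}, {"e", "f"}]
print(largest_component(dual_hypergraph(papers)))  # [frozenset({0, 1, 2})]
```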

Oil-trade network. This hypergraph is constructed using the 2017 international oil trade records from the UN Comtrade Dataset. We adopt a modelling approach similar to Figure 1 in the main paper. Each node represents a country, and form a hyperedge if the trade surplus from each of to each of exceeds 10 million USD (roughly the 80th percentile of country-wise oil export values). Therefore, two countries belong to the same hyperedge if they share important trading partners. We use this network for the node-ranking problem.

Table C.1 provides summary statistics about the hypergraphs. Table C.2 includes the statistics of all ground truth clusters that we used in the experiments.

| Dataset | Number of nodes | Number of hyperedges | Maximum hyperedge size | Maximum node degree | Median / Mean hyperedge size | Median / Mean node degree |
|---|---|---|---|---|---|---|
| Amazon-reviews | 2,268,231 | 4,285,363 | 9,350 | 28,973 | 8.0 / 17.1 | 11.0 / 32.2 |
| Trivago-clicks | 172,738 | 233,202 | 86 | 588 | 3.0 / 4.1 | 2.0 / 5.6 |
| Florida-Bay | 126 | 141,233 | 4 | 19,843 | 4.0 / 4.0 | 3,770.5 / 4,483.6 |
| Microsoft-academic | 44,216 | 22,464 | 187 | 21 | 3.0 / 5.4 | 2.0 / 2.7 |
| High-school-contact | 327 | 7,818 | 5 | 148 | 2.0 / 2.3 | 53.0 / 55.6 |
| Oil-trade | 229 | 100,639 | 4 | 16,394 | 4.0 / 4.0 | 175.0 / 1,757.9 |
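The statistics in Table C.1 can be computed directly from a hyperedge list. The sketch below assumes an unweighted hypergraph, in which a node's degree is the number of hyperedges containing it. It also assumes that only nodes appearing in at least one hyperedge are counted. The function name is hypothetical.

```python
from collections import Counter
from statistics import mean, median

def summary_stats(hyperedges):
    """Table C.1-style statistics for a list of hyperedges (sets of nodes)."""
    sizes = [len(e) for e in hyperedges]
    degree = Counter(v for e in hyperedges for v in e)  # incident hyperedge counts
    degrees = list(degree.values())
    return {
        "nodes": len(degree),
        "hyperedges": len(hyperedges),
        "max_size": max(sizes),
        "max_degree": max(degrees),
        "size_median_mean": (median(sizes), mean(sizes)),
        "degree_median_mean": (median(degrees), mean(degrees)),
    }

print(summary_stats([{1, 2, 3}, {2, 3}, {3, 4, 5, 6}]))
```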

| Dataset | Cluster | Size | Volume | Conductance |
|---|---|---|---|---|
| Amazon-reviews | 1 - Amazon Fashion | 31 | 3042 | 0.06 |
| | 2 - All Beauty | 85 | 4092 | 0.12 |
| | 3 - Appliances | 48 | 183 | 0.18 |
| | 12 - Gift Cards | 148 | 2965 | 0.13 |
| | 15 - Industrial & Scientific | 5334 | 72025 | 0.14 |
| | 17 - Luxury Beauty | 1581 | 28074 | 0.11 |
| | 18 - Magazine Subs. | 157 | 2302 | 0.13 |
| | 24 - Prime Pantry | 4970 | 131114 | 0.10 |
| | 25 - Software | 802 | 11884 | 0.14 |
| Trivago-clicks | KOR - South Korea | 945 | 3696 | 0.24 |
| | ISL - Iceland | 202 | 839 | 0.21 |
| | PRI - Puerto Rico | 144 | 473 | 0.25 |
| | UA-43 - Crimea | 200 | 1091 | 0.24 |
| | VNM - Vietnam | 832 | 2322 | 0.24 |
| | HKG - Hong Kong | 536 | 4606 | 0.24 |
| | MLT - Malta | 157 | 495 | 0.24 |
| | GTM - Guatemala | 199 | 652 | 0.24 |
| | UKR - Ukraine | 264 | 648 | 0.24 |
| | SET - Estonia | 158 | 850 | 0.23 |
| Florida-Bay | Producers | 17 | 10781 | 0.70 |
| | Low-level consumers | 35 | 173311 | 0.58 |
| | High-level consumers | 70 | 375807 | 0.54 |
| Microsoft-academic | Data | 15817 | 45060 | 0.06 |
| | ML | 10265 | 26765 | 0.16 |
| | TCS | 4159 | 10065 | 0.08 |
| | CV | 13974 | 38395 | 0.08 |
| High-school-contact | Class 1 | 36 | 1773 | 0.25 |
| | Class 2 | 34 | 1947 | 0.29 |
| | Class 3 | 40 | 2987 | 0.20 |
| | Class 4 | 29 | 913 | 0.41 |
| | Class 5 | 38 | 2271 | 0.26 |
| | Class 6 | 34 | 1320 | 0.26 |
| | Class 7 | 44 | 2951 | 0.16 |
| | Class 8 | 39 | 2204 | 0.19 |
| | Class 9 | 33 | 1826 | 0.25 |
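The size, volume and conductance columns of Table C.2 can be computed in a similar way. The same code can apply the size and conductance filters described for Amazon-reviews and Trivago-clicks. The sketch assumes node labels are given as a dictionary, volume is the sum of node degrees, and conductance uses the unit cut-cost. The datasets' actual cut-costs and any hyperedge weights are not reproduced, and the helper names are hypothetical.

```python
from collections import defaultdict

def cluster_stats(hyperedges, labels):
    """Map each label to (size, volume, conductance) under the unit cut-cost.

    hyperedges: list of frozensets; labels: dict node -> ground-truth label.
    Volume is the sum of degrees (incident hyperedge counts) over the cluster.
    """
    degree = defaultdict(int)
    for e in hyperedges:
        for v in e:
            degree[v] += 1
    total_vol = sum(degree.values())
    members = defaultdict(set)
    for node, label in labels.items():
        members[label].add(node)
    stats = {}
    for label, C in members.items():
        vol = sum(degree.get(v, 0) for v in C)
        cut = sum(1 for e in hyperedges if 0 < len(e & C) < len(e))  # split hyperedges
        denom = min(vol, total_vol - vol)
        stats[label] = (len(C), vol, cut / denom if denom > 0 else float("nan"))
    return stats

def select_clusters(stats, max_size, max_conductance=float("inf")):
    """Labels with fewer than max_size nodes and conductance below max_conductance."""
    return sorted(label for label, (size, _, phi) in stats.items()
                  if size < max_size and phi < max_conductance)

edges = [frozenset({1, 2, 3}), frozenset({3, 4}), frozenset({4, 5, 6}), frozenset({1, 2})]
labels = {1: "a", 2: "a", 3: "a", 4: "b", 5: "b", 6: "b"}
print(cluster_stats(edges, labels))
```

For example, `select_clusters(stats, max_size=10_000)` mirrors the Amazon-reviews rule, and `select_clusters(stats, max_size=1_000, max_conductance=0.25)` mirrors the Trivago-clicks rule.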

C.2.2 Methods and parameter setting

HFD. We use for all experiments. We set the total amount of initial mass to a constant factor times the volume of the target cluster. For Amazon-reviews, on the smaller clusters 1, 2, 3, 12, 18, we used ; on the larger clusters 15, 17, 24, 25, we used . For Trivago-clicks, High-school-contact, and Microsoft-academic, we used . For the Florida Bay food network, we used for clusters 1, 2, 3, respectively. In all experiments, we choose so that the diffusion process covers some part of the target cluster and incurs a high cost in the objective function. For the single seed node setting, we simply set the initial mass on the seed node to . For the multiple seed nodes setting, where we are given a seed set , for each we set the initial mass on to .