Local Patch AutoAugment with Multi-Agent Collaboration

Abstract

Data augmentation (DA) plays a critical role in improving the generalization of deep learning models. Recent works on automatically searching for DA policies from data have achieved great success. However, existing automated DA methods generally perform the search at the image level, which limits the exploration of diversity in local regions. In this paper, we propose a more fine-grained automated DA approach, dubbed Patch AutoAugment, to divide an image into a grid of patches and search for the joint optimal augmentation policies for the patches. We formulate it as a multi-agent reinforcement learning (MARL) problem, where each agent learns an augmentation policy for each patch based on its content together with the semantics of the whole image. The agents cooperate with each other to achieve the optimal augmentation effect of the entire image by sharing a team reward. We show the effectiveness of our method on multiple benchmark datasets of image classification and fine-grained image recognition (e.g., CIFAR-10, CIFAR-100, ImageNet, CUB-200-2011, Stanford Cars and FGVC-Aircraft). Extensive experiments demonstrate that our method outperforms the state-of-the-art DA methods while requiring fewer computational resources. The code is available at https://github.com/LinShiqi047/PatchAutoAugment.

1 Introduction

Data Augmentation (DA) has been widely used to alleviate the overfitting risk in training deep neural networks by appropriately enriching the diversity of training data (Shorten & Khoshgoftaar, 2019; Gontijo-Lopes et al., 2020). Notable DA methods improve the performance and robustness of the neural networks, such as rotation, Mixup (Zhang et al., 2017) and Cutmix (Yun et al., 2019). But these approaches are typically handcraft and require human prior knowledge, which causes weak transferability of DA across different datasets. To relieve the dependence on manual design and further explore more adaptive augmentation, AutoAugment (AA) (Cubuk et al., 2018), as a new DA paradigm, is proposed to automate the search of the optimal DA policies (i.e., DA operation, probability and magnitude) from the training dataset. To be specific, AA trains a proxy model with the augmentation policy generated by a controller, which is updated through reinforcement learning using validation accuracy as the reward signal. In spite of the superior performance of AA, its optimization procedure is computationally intensive due to the need to evaluate thousands of policies. Therefore, to reduce the search costs, multiple automated DA approaches (Lim et al., 2019; Ho et al., 2019; Hataya et al., 2019; Zhang et al., 2019b; Lin et al., 2019) have been proposed. For example, (Lim et al., 2019) employs density matching as a search method to accelerate the policy search, and (Zhang et al., 2019b) introduces adversarial learning to organize the target network training and augmentation policies search in an online manner.

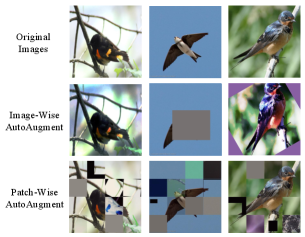

Yet the aforementioned automated DA methods all search for policies at the image level. They ignore the exploration of diversity in local regions, which may result in insufficient diversity of dataset and limit the benefits of DA (Gontijo-Lopes et al., 2020). In addition, due to this coarse-grained augmentation, they may damage key semantic information and introduce ambiguity into the training process (see a simple example in Figure 1 row 2, column 2). With those in mind, it is necessary to automatically search for the optimal augmentation policies for different regions by taking regional diversity into account. One straightforward idea is to directly apply image-wise automated DA methods in different regions. But such an intuitive solution ignores the contextual relationship between regions, which may lead to the non-globally optimal effectiveness of DA policies across the entire image. Besides, it may encounter an extremely high computational cost due to the need of optimizing multiple policies for regions respectively.

In this paper, we propose a new approach, named Patch AutoAugment (PAA) (see the last row of Figure 1), to address the above-mentioned problems. We first divide an image into a grid of patches to increase the flexibility of representations for different regions and take “patch” as the basic control unit. Then, we model the search for the augmentation policies of patches as a fully cooperative multi-agent task, and we leverage a multi-agent reinforcement learning (MARL) algorithm where agents cooperatively learn the policies. Specifically, based on the content of each patch and the semantics of the entire image, the agent searches for a policy in terms of choosing which transformation to apply out of the pre-defined DA operations. To encourage our policy networks to adaptively learn the beneficial augmentation policies for the target network, we use the feedback of the target network as the team reward signal to guide the policy networks to learn on the fly. All agents wind up benefiting from two mechanisms (i.e., parameter sharing and centralized training with decentralized execution) in MARL. In this way, all agents collaboratively and parallelly learn policies to further achieve the joint optimal DA policy across the whole image and alleviate the computational cost.

In summary, our contributions can be summarized as follows: 1) We pinpoint that exploring diversity in local regions is important for automated learned DA approaches. To our best knowledge, we are the first to propose a more fine-grained automated DA approach, that searches for the optimal policies for the patches according to the content of the patch and the semantics of the entire image. 2) To further achieve the joint optimal policy across the image, we model the DA policy search of patches as a fully cooperative multi-agent task, and adopt a multi-agent reinforcement learning algorithm for Patch AutoAugment by considering the contextual relationship between the patches. 3) Our visualization results provide some insights to the DA community on which augmentation operation should be chosen for patches with different content during the whole training process.

2 Related Work

2.1 Data Augmentation

Despite the remarkable performance of deep learning models in computer vision tasks, they often suffer from overfitting. Data augmentation (DA) as an effective technique has been proved to improve the generalization ability of deep learning models (Shorten & Khoshgoftaar, 2019). Previous works (Cubuk et al., 2020; Gontijo-Lopes et al., 2020) indicate that the main benefit of DA arises from increasing the diversity of images. Popular techniques, such as rotation, flipping, color transformation, have been performed as commonly used augmentation methods. Recently, thanks to the skillful design of human experts, many DA methods (e.g., Cutout (DeVries & Taylor, 2017), Mixup (Zhang et al., 2017) and CutMix (Yun et al., 2019)) have been proposed and show significant performance. However, these manually designed methods require additional human prior knowledge on the dataset and sometimes they are limited to certain datasets and target tasks. Naturally, automatically finding DA methods from data have emerged to overcome the limitations of dependence on manual cumbersome exploration. Some works use generative adversarial networks (GANs) to directly generate training data (Shrivastava et al., 2017; Tran et al., 2017).

Furthermore, recent studies aim to automate the search for augmentation policies that choose the optimal transformations for training images. AutoAugment (AA) (Cubuk et al., 2018) adopts a controller to generate an augmentation policy which is used to train a proxy network, then gets the validation accuracy as the reward signal to update the controller using reinforcement learning. Unfortunately, the evaluation of thousands of policies makes AA computationally expensive. Therefore, multiple automated DA approaches focus on reducing the huge complexity and have achieved great progress. For example, PBA (Ho et al., 2019) employs hyperparameter optimization, Fast AA (Lim et al., 2019) uses a density matching algorithm and Adversarial AA (Zhang et al., 2019b) proposes an adversarial framework to jointly optimize the target network and the augmentation network. However, these automated augmentation methods perform the search at the image level, i.e., they use the same policy on the whole image. It inevitably ignores the diversity of different regions in an image, which limits the diversity of data increased by data augmentation, and sometimes causes the damage of critical semantic information. In contrast, our method takes diversity in local regions and contextual relationship into account, aiming to search for augmentation policies for multiple regions and achieve the joint optimal DA effect across the entire image through MARL.

Our proposed method is conceptually orthogonal to most region-based DA methods where DA transformations are performed in a non-automated way. For example, RandomErasing (Zhong et al., 2020), CutMix (Yun et al., 2019) perform cropping or replacement operation on a randomly selected rectangle region. Some works further exploit class activation map (Zhou et al., 2016) or saliency map (Zhou et al., 2015) to select representative regions which are augmented by a randomly selected DA operation (e.g., SaliencyMix (Uddin et al., 2020), SnapMix (Huang et al., 2020)) and KeepAugment (Gong et al., 2020)). Yet our proposed method automatically searches for the augmentation transformations based on the given datasets and tasks.

2.2 Multi-Agent Reinforcement Learning

The most significant characteristic of MARL is the cooperation between agents (Tampuu et al., 2017; Ma & Cameron, 2008; Foerster et al., 2017) which is distinct from directly applying reinforcement learning (RL) algorithm to multi-agent systems. Due to the limited observation and action of a single agent, cooperation is necessary in the reinforced multi-agent system to achieve the common goal. Compared with independent agents, cooperative agents can improve the efficiency and robustness of the model (Neto, 2005; Zhang et al., 2019a; Busoniu et al., 2008). Many vision tasks use MARL to interact with the public environment to make decisions, with the goal of maximizing the expected total return of all agents, such as image segmentation (Liao et al., 2020; Han et al., 2019; Ma et al., 2020), image processing (Furuta et al., 2019).

3 Proposed Method

As above mentioned, we aim to search augmentation policies for the patches to explore more augmentation diversity. Due to the need to consider the effectiveness of DA, it is of great necessity to take the patch content and the contextual relationship between patches into account. Therefore, we model the search problem as a multi-agent Markov decision process and use multi-agent reinforcement learning (MARL) algorithm. Specifically, the search for patch policies is based on the content of the patch together with the semantics of the image, and policies are encouraged to coordinate to achieve the joint optimal DA policy across the whole image. In this section, we first describe the preliminaries of MARL, then elaborate on our augmentation policy formulation and modelling. Furthermore, we summarize the framework of Patch AutoAugment.

3.1 Preliminaries of Multi-Agent Reinforcement Learning

We first introduce the preliminaries of reinforcement learning (RL). RL models the decision-making problem as a Markov decision process (MDP) which is presented with a tuple . In RL framework, given the state , the agent takes an according to its policy and then receives a reward . The environment moves to the next state with a transition function denoted as . is a discount factor and is a time horizon. The agent aims to maximize the long-term reward over steps to learn the optimal policy .

Furthermore, multi-agent reinforcement learning (MARL) considers a group of agents, denoted as , operating cooperatively in a shared environment towards a common goal. It can be formulated as a multi-agent MDP (MAMDP) (Boutilier, 1996) represented with a tuple . Here, describes the shared state space and is a set of observations for agents. In MARL configuration, each agent receives a private observation correlated with part of state, i.e., . According to the global state and its observation , the agent takes its action based on its policy , is the action space for the -th agent and denotes the joint action space. Then, the joint action produces next state according to transition function and the environment gives the team reward to agents . The objective of each agent is to maximize the total accumulative reward to cooperatively learn the globally optimal policy that consists of the optimal policy of each agent. In this paper, as mentioned before, we employ MARL to search optimal policy for each patch, and achieve the optimal effectiveness of DA across the entire image to further improve the performance of the target network as possible.

3.2 Patch AutoAugment

In our proposed Patch AutoAugment (PAA), we formulate the task of policy search for the patches as a cooperative multi-agent decision-making problem and adopt multi-agent reinforcement learning (MARL) to solve it. In the following, We clarify the detailed formulation ) the state, observation and action modeling for the policy, ) an effective team reward function design and ) the detailed MARL algorithm for policy learning) of Patch AutoAugment.

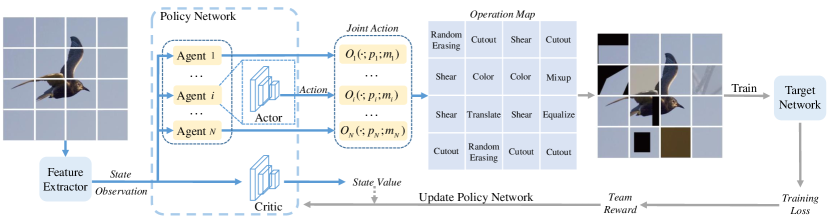

Policy Modeling. As illustrated in Figure 2, given the original input batch and the corresponding label (i.e., and is the batch size), we divide an image into equal-sized and non-overlapping patches, denoted as where is the -th patch of the image . In our proposed method, we aim to search the augmentation policy for each patch. Therefore, in MARL formulation, the augmentation policy of the patch is controlled by an agent and we detail the state, observation and action for the augmentation policy as below.

State. As aforementioned, the selection of augmentation operation for a patch is closely bound up with the contextual relationship between regions. Therefore, the augmentation policy needs to perceive the image semantics and we take the deep features of the whole image extracted by a backbone (e.g., ImageNet pre-trained ResNet-18 (He et al., 2016)) as the global state which is visible to all agents.

Observation. Apart from capturing state (i.e., the global information), the agent only use their own observation (i.e., the local information) which is invisible to other agents. The augmentation policy seeks to choose augmentation operation based on the content of the patch and the observation is generally part of the state. Considering all these factors, we utilize the deep features of the -th patch as observation , which are extracted by the same backbone as the state extractor.

Action. The augmentation policy is responsible for choosing which transformations to apply from pre-defined operations. Following the previous automated DA methods (Cubuk et al., 2018; Lim et al., 2019), we define the fifteen operation functions (i.e., ShearX/Y, TranslateX/Y, Rotate, Invert, Equalize, Solarize, Posterize, Contrast, Color, Brightness, Sharpness, RandomErasing, Cutout, Mixup, Cutmix) to construct the action space . Given the state and the observation , according to policy , each agent determines an action , which is the operation performed on the patch. Each operation is associated with two hyperparameters: probability and magnitude . In order to dramatically and effectively reduce the action space for augmentation policy, similar with (Cubuk et al., 2020; Ho et al., 2019; Lim et al., 2019), we take a fixed probability and magnitude schedule. Among them, the probability of applying the operation is sampled from the uniform distribution (i.e., . Following (Ho et al., 2019), we employ the same linear scale to be the magnitude schedule. In summary, the processed -th patch in image is denoted as with the probability and the magnitude of is , otherwise .

Note that most operations are label-invariant, except Mixup (Zhang et al., 2017) and CutMix (Yun et al., 2019) are label-disturbing operations that combine different patches as well as their labels. We take Mixup as an example, then with probability , where is a patch from another image , , for , and the one-hot label is modified as . Until all patches are processed by the corresponding operations chosen by the augmentation policies, we obtain the image augmented by our PAA, denoted as , and the final label . More operation details are shown in Appendix A.

Reward Function. The reward function is of importance to guide the agents to learn so that they follow desired behaviors. The previous work, Adversarial AA (AdvAA) (Zhang et al., 2019b), attempts to increase the training loss of the target network to generate harder augmentation policies and explore the weakness of the target network. Inspired by AdvAA, we reformulate the reward design appropriately under our configuration. In our proposed PAA, the common objective of all agents is to improve the performance of the mainstream target task through enhancing the benefits of DA. Therefore, we compare the feedback of target network on the augmented data processed by our proposed PAA with the original data and take their difference on the training losses as the reward for the policy in MARL, as in Eq. (1):

| (1) |

where and denote raw inputs and labels in supervision tasks. In our PAA model, all agents are encouraged to cooperate to achieve the common goal, thus we adopt the team reward function design (i.e., the shared reward mechanism in MARL) as Eq. (1) for all agents to make the joint augmentation policy achieve the optimal effectiveness.

Policy Learning. Here, we introduce the training for the augmentation policies mentioned above. Considering that the action space is discrete, we adopt multi-agent Advantage Actor-Critic algorithm (Lowe et al., 2017) to learn the augmentation policies and encourage the coordination behaviors. In MARL, the framework of centralized training with decentralized execution (Foerster et al., 2018; Rashid et al., 2018) is generally adopted. More concretely, each agent has an actor which is to learn discrete policy and agents share a common critic which aims to estimate the value of global state . And we use the centralized critic to train decentralised actors. Here, we reformulate it appropriately for our task. We model the centralized action-value Q function that takes the actions of all agents in addition to state information and outputs the Q-value for the team, formulated as:

| (2) |

where is the joint action of all agents and is the long-term discounted reward. Then, the advantage function on the augmentation policy is given as follows:

| (3) |

And we use to denote the critic network parameters. We take the square value of the advantage function as the loss function to update :

| (4) |

Besides, to further achieve the ability to cooperate, similar to (Foerster et al., 2018; Rashid et al., 2018), the parameters of the actor networks of all agents are shared, denoted as . In addition, the loss function for updating is defined as:

| (5) |

Framework Summary. In this part, we summarize the overall framework of our proposed PAA. As shown in Figure 2, PAA first divides an image into a grid of patches. Then, we use a feature extractor to obtain the deep features of the whole image as the state. Each agent draws its individual observation, i.e., the deep features of the patch. According to the global state (i.e., the semantics of the entire image) and the local observation (i.e., the content of the patch), the actor networks output the augmentation operations of patches to further construct the joint operation map performed on the whole image. The augmented mini-batch is processed by our proposed PAA and then we input it to the target task network for parameters updating. Moreover, the feedback of the target network is used as the team reward signal to update the policy network.

| Dataset | Model | Baseline | CutOut | Mixup | CutMix | AA | FastAA | PAA |

|---|---|---|---|---|---|---|---|---|

| CIFAR-10 | WRN-28-10 | 96.1 | 96.9 | 97.1 | 97.2 | 97.4 | 97.3 | 97.5 |

| SS(26 2x32d) | 96.4 | 97.0 | 97.2 | 97.3 | 97.5 | 97.3 | 97.6 | |

| SS(26 2x96d) | 97.1 | 97.4 | 97.7 | 97.8 | 97.7 | 97.7 | 98.1 | |

| SS(26 2x112d) | 97.2 | 97.4 | 98.0 | 98.0 | 98.1 | 98.0 | 98.1 | |

| Pyramid+SD | 97.3 | 97.7 | 98.0 | 98.1 | 98.5 | 98.2 | 98.6 | |

| CIFAR-100 | WRN-28-10 | 81.2 | 81.6 | 82.1 | 82.8 | 82.9 | 82.7 | 83.4 |

| SS(26 2x96d) | 82.9 | 84.0 | 85.4 | 85.6 | 85.7 | 85.1 | 85.9 | |

| Pyramid+SD | 86.0 | 87.8 | 88.5 | 88.9 | 89.3 | 88.1 | 89.2 |

| Method | Baseline | Mixup | CutMix | AA | FastAA | PAA |

|---|---|---|---|---|---|---|

| ResNet-50 | 76.3 / 93.1 | 77.0 / 93.4 | 77.2 / 93.5 | 77.6 / 93.8 | 77.6 / 93.7 | 78.3 / 94.1 |

| ResNet-200 | 78.5 / 94.2 | 79.6 / 94.8 | 79.9 / 94.9 | 80.1 / 95.0 | 80.6 / 95.3 | 81.0 / 95.2 |

4 Experiments

4.1 Experiment Overview

In this section, to study the effectiveness of Patch AutoAugment (PAA), we experiment with core image classification and fine-grained image recognition tasks. Exactly, we focus on CIFAR-10, CIFAR-100 (Krizhevsky et al., 2009) and ImageNet (Deng et al., 2009) datasets as well as three fine-grained object recognition datasets, i.e., CUB-200-2011 (Wah et al., 2011), Stanford Cars (Krause et al., 2013) and FGVC-Aircraft (Maji et al., 2013). We describe the datasets in detail in Appendix B. We compare PAA with baseline pre-processing, Cutout (DeVries & Taylor, 2017), Mixup (Zhang et al., 2017), Cutmix (Yun et al., 2019), AutoAugment (AA) (Cubuk et al., 2018) and Fast AutoAugment (FastAA) (Lim et al., 2019). The baseline follows (Zoph et al., 2018; Yamada et al., 2018; Gastaldi, 2017): standardizing the data, horizontally flipping with 0.5 probability, zero-padding and random cropping. More details about our policy network architectures and model hyperparameters are supplied in Appendix C and D, respectively. Moreover, we set the number of patches to for CIFAR tasks, and for ImageNet together with fine-grained recognition datasets. To ensure the reliability of our experiments, we run each experiment four times using different random seeds.

4.2 Results and Analysis

Classification Results on CIFAR-10 and CIFAR-100. For CIFAR-10 and CIFAR-100, we examine on Wide-ResNet-28-10 (WRN-28-10) (Zagoruyko & Komodakis, 2016), Shake-Shake (SS) (Gastaldi, 2017) and Pyramid-Net+ShakeDrop (Pyramid+SD) (Han et al., 2017; Yamada et al., 2018) models. The results are reported in Table 1, which shows the proposed approach consistently outperforms several state-of-the-art DA methods. We observe that the improvement of performance is relatively slight, due to the small image size of CIFAR which is . In the following, we further apply our proposed PAA on datasets with larger image sizes and other networks.

Classification Results on ImageNet. As shown in Table 2, we evaluate our method on ResNet-50 and ResNet-200 (He et al., 2016) backbone on ImageNet, and our PAA significantly improves the performance of the target networks. The results further demonstrate that our proposed method is an effective DA technique for consistent and expressive benefits for datasets with larger image sizes.

Effectiveness of Fine-grained Classification. Furthermore, we evaluate our proposed method on fine-grained image recognition tasks. According to previous work (Du et al., 2020; Chen et al., 2019), we take ResNet-50 and ResNet-101 as the backbones. The results are shown in Table 4, which illustrates that the performance of PAA is consistently better than other methods and PAA has achieved remarkable performance on these challenging fine-grained tasks.

| Dataset | GPU hours | AA | FastAA | AdvAA | PAA |

|---|---|---|---|---|---|

| CIFAR-10 | Search | 5000 | 3.5 | 0 | 0 |

| Train | 6 | 6 | - | 7.5 | |

| Total | 5006 | 9.5 | - | 7.5 | |

| ImageNet | Search | 15000 | 450 | 0 | 0 |

| Train | 160 | 160 | 1280 | 270 | |

| Total | 15160 | 610 | 1280 | 270 |

| Dataset | Model | Baseline | Mixup | CutMix | AA | FastAA | PAA |

|---|---|---|---|---|---|---|---|

| CUB | ResNet-50 | 85.5 | 86.2 | 86.1 | 86.8 | 86.5 | 87.5 |

| ResNet-101 | 85.6 | 87.7 | 87.9 | 88.1 | 87.9 | 88.3 | |

| Cars | ResNet-50 | 93.0 | 93.9 | 94.1 | 94.2 | 94.0 | 94.3 |

| ResNet-101 | 93.1 | 94.1 | 94.2 | 94.2 | 93.8 | 94.5 | |

| Aircraft | ResNet-50 | 91.0 | 92.0 | 92.2 | 92.3 | 92.2 | 92.6 |

| ResNet-101 | 91.6 | 92.9 | 92.3 | 92.8 | 92.9 | 93.5 |

4.3 Complexity Analysis

In this section, in order to further demonstrate the performance of PAA in terms of complexity, we compare the policy search time and training time of PAA with AA (Cubuk et al., 2018), FastAA (Lim et al., 2019) and AdvAA (Zhang et al., 2019b), as illustrated in Table 3. As shown in Table 3, compared to the previous works, PAA requires the fewest total computational resources, and the search time is almost negligible. As for the parameters, the total number of PAA model parameters (about 0.23M) is less than 1 of the target network (e.g., ResNet50: about 25.5M).

In summary, the main reasons to reduce the computational cost lie in three aspects: 1) The augmentation policy network is jointly optimized with the target network, similar to (Zhang et al., 2019b; Lin et al., 2019). Namely, our proposed method searches for policies in an online manner, obviating thousands of policies validation on a small proxy network and the requirement for retraining the target network. Besides, we use fixed schedules for the two corresponding hyperparameters (i.e., probability and magnitude) of each transformation to effectively reduce the search space, which makes it easier to search for effective policies. By these means, PAA compresses most of the policy search time 2) As mentioned before, a MARL algorithm is adopted, in which all agents parallelly learn the augmentation policies, to reduce the training time. 3) Compared with the previous DA methods using image-by-image sequential transformations, we perform parallel transformations on tensor. Specifically, we pick the patches in a batch performing the same operation to reconstruct a new tensor. And we use Kornia111Kornia (Riba et al., 2020) is a differentiable computer vision library for PyTorch. We use it to accelerate augmentation operation on tensors. to realize tensor transformations on GPU to further reduce the processing time which is included in the training time.

4.4 Ablation Study

In this section, we study the effectiveness of MARL and also discuss the design of patch numbers, through several ablation experiments.

| Method | Policy | Dataset | ||||

|---|---|---|---|---|---|---|

| random | SARL | MARL | CIFAR-10 | CIFAR-100 | ImageNet | |

| PRA | ✓ | 97.1 | 83.0 | 77.9 / 93.9 | ||

| PSAA | ✓ | 97.3 | 83.1 | 78.0 / 93.9 | ||

| PAA(Ours) | ✓ | 97.5 | 83.4 | 78.3 / 94.1 | ||

| N | 4 | 16 | 64 | 256 | 1024 |

|---|---|---|---|---|---|

| CIFAR-10 | 97.5 | 97.3 | 97.2 | 97.1 | - |

| CUB | 87.2 | 87.5 | 87.3 | 87.1 | 86.8 |

| ImageNet | 78.1 | 78.3 | 78.2 | 78.0 | 78.0 |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/af041df8-c967-4fbd-851e-f157374d9650/x3.png) |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/af041df8-c967-4fbd-851e-f157374d9650/x4.png) |

Performance of random policies. We randomize the augmentation policy for each patch, dubbed Patch RandAugment and compare it with our proposed PAA. To be specific, the augmentation policy is randomly selected from predefined transformations with uniform probability. As shown in Table 5, Patch RandAugment (PRA) leads to considerable appreciable improvements. However, our Patch AutoAugment with meaningful guidance significantly surpasses the random patch policies.

Performance of the policies searched using single-agent RL. Furthermore, we directly use the same single-agent RL to search for each patch’s policy, termed as Patch SingleAutoAugment (PSAA) and compare it to our PAA, where the search task is a cooperative multi-agent problem. In short, PSAA ignores the contextual relationship between patches, where patches are non-cooperative. The results (see Table 5) indicate that taking the contextual information into account with cooperative RL-agents has improved the joint effectiveness of DA.

Impact of the number of patches. We explore the effect of the number of patches on the target model performance. As shown in Table 6, we respectively set the number of patches . The results show that on ImageNet / CUB-200-2011, when patch numbers increase, the performance accuracy first increases and then decreases. In addition, when , PAA achieves the best performance. We analyze that the small number of patches may cause PAA to be unable to effectively explore local diversity, and its advantages would be limited. In particular, when , PAA degenerates into image-wise automated DA. In contrast, under too larger values (e.g., the extreme case is to search for DA policy for each pixel), the local semantic consistency is broken and the benefit brought by the consideration of contextual relationship between patches is gradually overtaken.

4.5 Visualization

Policy Visualization. Grad-CAM (Selvaraju et al., 2017) is used to localize the important regions in the image. Therefore we can calculate the importance score of each patch, and we divide the importance scores into four bins, i.e., very important, important, normal and not important. Then, we categorize patches into four groups and draw four stacked area charts showing the percentages of operations selected by PAA augmentation policies over time. We take ResNet-50 backbone trained on CUB-200-2011 as an example.

Since our proposed PAA searches for patch policies in an online manner, the strategies change dynamically over time as shown in Figure 4. At the beginning of the training process, the selected actions are messy since the MARL network is in the exploratory stage. At the tail end of the training, the target network has converged, causing the percentage of all operations to be almost the same and the percentage to flatten out.

In addition, we have some interesting findings which may provide some insights to the DA community. ) The optimal augmentation strategies of patches vary by their important levels. Therefore, it is necessary to take the image content into account when performing data augmentation. ) In the middle of the training process, different types of patches prefer to select different augmentation operations. Concretely, as illustrated in Figure 4, for the important patches, color transformation is mostly picked. For the unimportant patches, RandomErasing and Cutout are usually chosen by PAA. Important patches commonly take along semantic information that is related to mainstream tasks. It is better to choose the mild transformations (e.g., color) for them, which can effectively protect the semantic information from being damaged. In contrast, unimportant patches typically carry unexpected features (Singla et al., 2021) which are causally unrelated to the desired class. Severe transformations (e.g., Cutout, RandomErasing) could be chosen for them, which introduce noise and disturbance to reduce the impact of unexpected features on the target network learning.

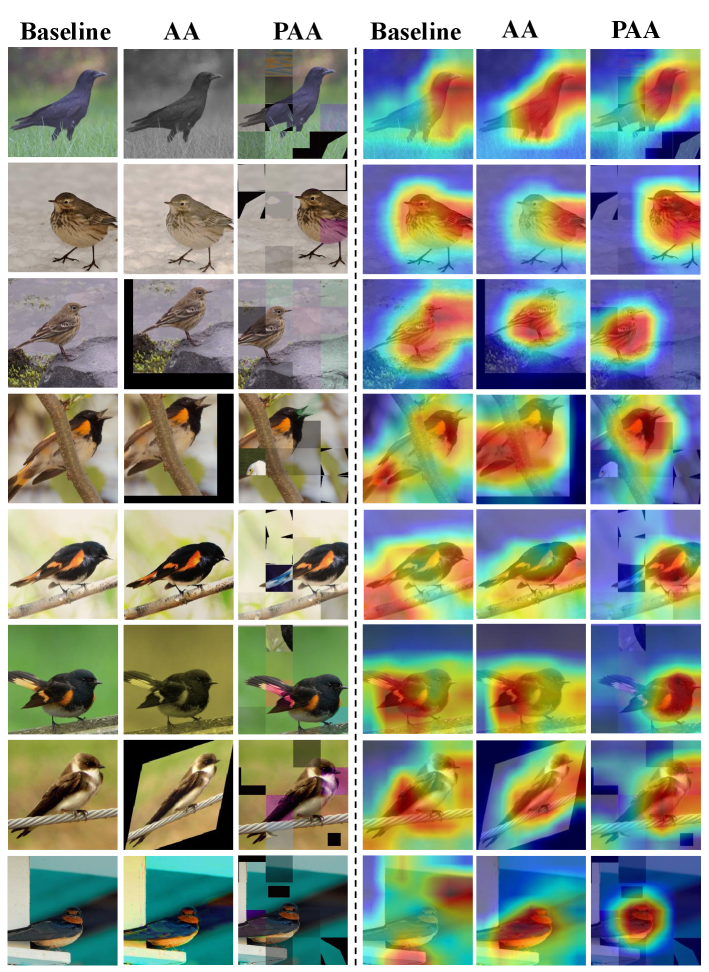

Grad-CAM Visualization. Here, we adopt Grad-CAM (Selvaraju et al., 2017) to visualize the learned features to intuitively show the impact of PAA, as shown in Figure 4. We take the ResNet-50 as the backbone which is trained with dataset processed by 1) Baseline 2) AutoAugment and 3) Patch AutoAugment, respectively. We observe that the model trained with PAA focus on more task-related areas rather than spurious correlations (e.g., the branch where the bird stands) or overemphasized features (e.g., birds’ claws). More visualization results are provided in the Appendix E.

5 Conclusion

In this paper, we propose Patch AutoAugment (PAA), a more fine-grained automated data augmentation approach. Our method adopts multi-agent reinforcement learning to automatically search for the optimal augmentation policies for patches, and encourages agents to cooperate with each other to further achieve the joint optimal policy across the entire image. Extensive experiments demonstrate that PAA improves the target network performance with low computational cost in various tasks. Meanwhile, we use visualization to show that PAA is beneficial for the target network to localize more class-related cues. Furthermore, we hope that our visual observations of policies will be useful for future development. In future work, we will investigate different schemes on dividing different regions. Furthermore, our method is naturally aligned with the patch token mechanism in the current vision transformers (Dosovitskiy et al., 2020; Touvron et al., 2021; Liu et al., 2021) and the data augmentation specific to vision transformers has not been extensively studied. Therefore, we leave the automated data augmentation for vision transformers to future work, which may provide some interesting insights to the community.

References

- Boutilier (1996) Craig Boutilier. Planning, learning and coordination in multiagent decision processes. In TARK, volume 96, pp. 195–210. Citeseer, 1996.

- Busoniu et al. (2008) Lucian Busoniu, Robert Babuska, and Bart De Schutter. A comprehensive survey of multiagent reinforcement learning. IEEE Transactions on Systems, Man, and Cybernetics, Part C (Applications and Reviews), 38(2):156–172, 2008.

- Chen et al. (2019) Yue Chen, Yalong Bai, Wei Zhang, and Tao Mei. Destruction and construction learning for fine-grained image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5157–5166, 2019.

- Cubuk et al. (2018) Ekin D Cubuk, Barret Zoph, Dandelion Mane, Vijay Vasudevan, and Quoc V Le. Autoaugment: Learning augmentation policies from data. arXiv preprint arXiv:1805.09501, 2018.

- Cubuk et al. (2020) Ekin D Cubuk, Barret Zoph, Jonathon Shlens, and Quoc V Le. Randaugment: Practical automated data augmentation with a reduced search space. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, pp. 702–703, 2020.

- Dabouei et al. (2021) Ali Dabouei, Sobhan Soleymani, Fariborz Taherkhani, and Nasser M Nasrabadi. Supermix: Supervising the mixing data augmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 13794–13803, 2021.

- Deng et al. (2009) Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Li Fei-Fei. Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition, pp. 248–255. Ieee, 2009.

- DeVries & Taylor (2017) Terrance DeVries and Graham W Taylor. Improved regularization of convolutional neural networks with cutout. arXiv preprint arXiv:1708.04552, 2017.

- Dosovitskiy et al. (2020) Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- Du et al. (2020) Ruoyi Du, Dongliang Chang, Ayan Kumar Bhunia, Jiyang Xie, Zhanyu Ma, Yi-Zhe Song, and Jun Guo. Fine-grained visual classification via progressive multi-granularity training of jigsaw patches. In European Conference on Computer Vision, pp. 153–168. Springer, 2020.

- Foerster et al. (2018) Jakob Foerster, Gregory Farquhar, Triantafyllos Afouras, Nantas Nardelli, and Shimon Whiteson. Counterfactual multi-agent policy gradients. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 32, 2018.

- Foerster et al. (2017) Jakob N Foerster, Richard Y Chen, Maruan Al-Shedivat, Shimon Whiteson, Pieter Abbeel, and Igor Mordatch. Learning with opponent-learning awareness. arXiv preprint arXiv:1709.04326, 2017.

- Furuta et al. (2019) Ryosuke Furuta, Naoto Inoue, and Toshihiko Yamasaki. Pixelrl: Fully convolutional network with reinforcement learning for image processing. IEEE Transactions on Multimedia, 2019.

- Gastaldi (2017) Xavier Gastaldi. Shake-shake regularization. arXiv preprint arXiv:1705.07485, 2017.

- Gong et al. (2020) Chengyue Gong, Dilin Wang, Meng Li, Vikas Chandra, and Qiang Liu. Keepaugment: A simple information-preserving data augmentation approach. arXiv preprint arXiv:2011.11778, 2020.

- Gontijo-Lopes et al. (2020) Raphael Gontijo-Lopes, Sylvia J Smullin, Ekin D Cubuk, and Ethan Dyer. Affinity and diversity: Quantifying mechanisms of data augmentation. arXiv preprint arXiv:2002.08973, 2020.

- Han et al. (2017) Dongyoon Han, Jiwhan Kim, and Junmo Kim. Deep pyramidal residual networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 5927–5935, 2017.

- Han et al. (2019) Lei Han, Peng Sun, Yali Du, Jiechao Xiong, Qing Wang, Xinghai Sun, Han Liu, and Tong Zhang. Grid-wise control for multi-agent reinforcement learning in video game ai. In International Conference on Machine Learning, pp. 2576–2585. PMLR, 2019.

- Hataya et al. (2019) Ryuichiro Hataya, Jan Zdenek, Kazuki Yoshizoe, and Hideki Nakayama. Faster autoaugment: Learning augmentation strategies using backpropagation. arXiv preprint arXiv:1911.06987, 2019.

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778, 2016.

- Ho et al. (2019) Daniel Ho, Eric Liang, Xi Chen, Ion Stoica, and Pieter Abbeel. Population based augmentation: Efficient learning of augmentation policy schedules. In International Conference on Machine Learning, pp. 2731–2741, 2019.

- Huang et al. (2020) Shaoli Huang, Xinchao Wang, and Dacheng Tao. Snapmix: Semantically proportional mixing for augmenting fine-grained data. arXiv preprint arXiv:2012.04846, 2020.

- Krause et al. (2013) Jonathan Krause, Michael Stark, Jia Deng, and Li Fei-Fei. 3d object representations for fine-grained categorization. In Proceedings of the IEEE international conference on computer vision workshops, pp. 554–561, 2013.

- Krizhevsky et al. (2009) Alex Krizhevsky, Geoffrey Hinton, et al. Learning multiple layers of features from tiny images. 2009.

- Liao et al. (2020) Xuan Liao, Wenhao Li, Qisen Xu, Xiangfeng Wang, Bo Jin, Xiaoyun Zhang, Yanfeng Wang, and Ya Zhang. Iteratively-refined interactive 3d medical image segmentation with multi-agent reinforcement learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9394–9402, 2020.

- Lim et al. (2019) Sungbin Lim, Ildoo Kim, Taesup Kim, Chiheon Kim, and Sungwoong Kim. Fast autoaugment. In Advances in Neural Information Processing Systems, pp. 6665–6675, 2019.

- Lin et al. (2019) Chen Lin, Minghao Guo, Chuming Li, Xin Yuan, Wei Wu, Junjie Yan, Dahua Lin, and Wanli Ouyang. Online hyper-parameter learning for auto-augmentation strategy. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 6579–6588, 2019.

- Liu et al. (2021) Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, and Baining Guo. Swin transformer: Hierarchical vision transformer using shifted windows. arXiv preprint arXiv:2103.14030, 2021.

- Lowe et al. (2017) Ryan Lowe, Yi Wu, Aviv Tamar, Jean Harb, Pieter Abbeel, and Igor Mordatch. Multi-agent actor-critic for mixed cooperative-competitive environments. arXiv preprint arXiv:1706.02275, 2017.

- Ma et al. (2020) Chaofan Ma, Qisen Xu, Xiangfeng Wang, Bo Jin, Xiaoyun Zhang, Yanfeng Wang, and Ya Zhang. Boundary-aware supervoxel-level iteratively refined interactive 3d image segmentation with multi-agent reinforcement learning. IEEE Transactions on Medical Imaging, 2020.

- Ma & Cameron (2008) Jie Ma and Stephen Cameron. Combining policy search with planning in multi-agent cooperation. In Robot Soccer World Cup, pp. 532–543. Springer, 2008.

- Maji et al. (2013) Subhransu Maji, Esa Rahtu, Juho Kannala, Matthew Blaschko, and Andrea Vedaldi. Fine-grained visual classification of aircraft. arXiv preprint arXiv:1306.5151, 2013.

- Neto (2005) Gonçalo Neto. From single-agent to multi-agent reinforcement learning: Foundational concepts and methods. Learning theory course, 2005.

- Rashid et al. (2018) Tabish Rashid, Mikayel Samvelyan, Christian Schroeder, Gregory Farquhar, Jakob Foerster, and Shimon Whiteson. Qmix: Monotonic value function factorisation for deep multi-agent reinforcement learning. In International Conference on Machine Learning, pp. 4295–4304. PMLR, 2018.

- Riba et al. (2020) Edgar Riba, Dmytro Mishkin, Daniel Ponsa, Ethan Rublee, and Gary Bradski. Kornia: an open source differentiable computer vision library for pytorch. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 3674–3683, 2020.

- Selvaraju et al. (2017) Ramprasaath R Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, Devi Parikh, and Dhruv Batra. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE international conference on computer vision, pp. 618–626, 2017.

- Shorten & Khoshgoftaar (2019) Connor Shorten and Taghi M Khoshgoftaar. A survey on image data augmentation for deep learning. Journal of Big Data, 6(1):1–48, 2019.

- Shrivastava et al. (2017) Ashish Shrivastava, Tomas Pfister, Oncel Tuzel, Joshua Susskind, Wenda Wang, and Russell Webb. Learning from simulated and unsupervised images through adversarial training. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 2107–2116, 2017.

- Singla et al. (2021) Sahil Singla, Besmira Nushi, Shital Shah, Ece Kamar, and Eric Horvitz. Understanding failures of deep networks via robust feature extraction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 12853–12862, 2021.

- Tampuu et al. (2017) Ardi Tampuu, Tambet Matiisen, Dorian Kodelja, Ilya Kuzovkin, Kristjan Korjus, Juhan Aru, Jaan Aru, and Raul Vicente. Multiagent cooperation and competition with deep reinforcement learning. PloS one, 12(4):e0172395, 2017.

- Touvron et al. (2021) Hugo Touvron, Matthieu Cord, Matthijs Douze, Francisco Massa, Alexandre Sablayrolles, and Hervé Jégou. Training data-efficient image transformers & distillation through attention. In International Conference on Machine Learning, pp. 10347–10357. PMLR, 2021.

- Tran et al. (2017) Toan Tran, Trung Pham, Gustavo Carneiro, Lyle Palmer, and Ian Reid. A bayesian data augmentation approach for learning deep models. arXiv preprint arXiv:1710.10564, 2017.

- Uddin et al. (2020) AFM Uddin, Mst Monira, Wheemyung Shin, TaeChoong Chung, Sung-Ho Bae, et al. Saliencymix: A saliency guided data augmentation strategy for better regularization. arXiv preprint arXiv:2006.01791, 2020.

- Wah et al. (2011) Catherine Wah, Steve Branson, Peter Welinder, Pietro Perona, and Serge Belongie. The caltech-ucsd birds-200-2011 dataset. 2011.

- Yamada et al. (2018) Yoshihiro Yamada, Masakazu Iwamura, and Koichi Kise. Shakedrop regularization. 2018.

- Yun et al. (2019) Sangdoo Yun, Dongyoon Han, Seong Joon Oh, Sanghyuk Chun, Junsuk Choe, and Youngjoon Yoo. Cutmix: Regularization strategy to train strong classifiers with localizable features. In Proceedings of the IEEE International Conference on Computer Vision, pp. 6023–6032, 2019.

- Zagoruyko & Komodakis (2016) Sergey Zagoruyko and Nikos Komodakis. Wide residual networks. arXiv preprint arXiv:1605.07146, 2016.

- Zhang et al. (2017) Hongyi Zhang, Moustapha Cisse, Yann N Dauphin, and David Lopez-Paz. mixup: Beyond empirical risk minimization. arXiv preprint arXiv:1710.09412, 2017.

- Zhang et al. (2019a) Kaiqing Zhang, Zhuoran Yang, and Tamer Başar. Multi-agent reinforcement learning: A selective overview of theories and algorithms. arXiv preprint arXiv:1911.10635, 2019a.

- Zhang et al. (2019b) Xinyu Zhang, Qiang Wang, Jian Zhang, and Zhao Zhong. Adversarial autoaugment. arXiv preprint arXiv:1912.11188, 2019b.

- Zhong et al. (2020) Zhun Zhong, Liang Zheng, Guoliang Kang, Shaozi Li, and Yi Yang. Random erasing data augmentation. In AAAI, pp. 13001–13008, 2020.

- Zhou et al. (2016) Bolei Zhou, Aditya Khosla, Agata Lapedriza, Aude Oliva, and Antonio Torralba. Learning deep features for discriminative localization. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 2921–2929, 2016.

- Zhou et al. (2015) Li Zhou, Zhaohui Yang, Qing Yuan, Zongtan Zhou, and Dewen Hu. Salient region detection via integrating diffusion-based compactness and local contrast. IEEE Transactions on Image Processing, 24(11):3308–3320, 2015.

- Zoph et al. (2018) Barret Zoph, Vijay Vasudevan, Jonathon Shlens, and Quoc V Le. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 8697–8710, 2018.

Appendix

| Operation Name | Description | magnitudes | ||

|---|---|---|---|---|

| Brightness |

|

brightness=(0.5, 0.95) | ||

| Contrast |

|

contrast=(0.5, 0.95) | ||

| CutMix |

|

- | ||

| Cutout | Set all pixels in this patch to the average value of the patch. | - | ||

| Invert | Invert the pixels of the patch | - | ||

| Mixup |

|

|||

| Posterize | Reduce the number of bits for each pixel to magnitude bits. | bits=3 | ||

| Solarize | Invert all pixels above a threshold value of magnitude. | thresholds=0.1 | ||

| RandomErasing | Erases a random rectangle region in a patch. |

|

||

| Rotation | Rotate the patch magnitude degrees. | degrees=30.0 | ||

| Sharpness |

|

sharpness=0.5 | ||

| Shear(X/Y) |

|

shear=(-30, 30) | ||

| Translate(X/Y) |

|

translate=(0.4, 0.4) | ||

| Color | Adjust the color balance of the image. | hue=(-0.3, 0.3) | ||

| Equalize | Equalize the image histogram. | - |

| Layer | Actor network | Critic network |

|---|---|---|

| 1 | ReLU,Conv2D(32,64,3,1,1),BN | FC(1568,256) |

| 2 | ReLU,Conv2D(64,64,3,1,1),BN | ReLU |

| 3 | ReLU,Conv2D(64,15,3,2,1),Softmax | FC(256,1) |

Appendix A Operations Details

Following (Cubuk et al., 2018; Ho et al., 2019; Lim et al., 2019; Zhang et al., 2019b), we define the fifteen common augmentation operations to form the action space. Here, we detail the description of these operations, as illustrated in Table 7. In addition, we give magnitudes range of augmentation operations corresponding to hyperparameters of the functions in the Kornia PyTorch library. Some operations, such as Cutout and CutMix, have no parameters.

Additionally, in our implementation, we pick out the patches that perform the same operation and put them into a new tensor. Then, we speed up the process by performing parallel transformations on the tensor. Tensor transformations on GPU can be realized by Kornia (Riba et al., 2020) to reduce the computational costs. In particular, when performing the label-disturbing operations (i.e., Mixup and CutMix), a patch needs to mix with another patch that is randomly selected from the new tensor. Namely, a patch is mixed with another patch that performs the same operation.

| Dataset | Model | BatchSize | LR | WD | LD | LRstep | LR-A2C | Epoch |

| CIFAR-10 | WRN-28-10 | 128 | 0.1 | 5e-4 | cosine | - | 1e-3 | 200 |

| SS(26 2x32d) | 128 | 0.2 | 1e-4 | cosine | - | 1e-4 | 600 | |

| SS(26 2x96d) | 128 | 0.2 | 1e-4 | cosine | - | 1e-4 | 600 | |

| SS(26 2x112d) | 128 | 0.2 | 1e-4 | cosine | - | 1e-4 | 600 | |

| Pyramid+SD | 128 | 0.1 | 1e-4 | cosine | - | 1e-4 | 600 | |

| CIFAR-100 | WRN-28-10 | 128 | 0.1 | 5e-4 | cosine | - | 1e-4 | 200 |

| SS(26 2x96d) | 128 | 0.1 | 5e-4 | cosine | - | 1e-4 | 1200 | |

| Pyramid+SD | 128 | 0.5 | 1e-4 | cosine | - | 1e-4 | 1200 | |

| ImageNet | ResNet-50 | 512 | 0.1 | 1e-4 | multistep | [30,60,90,120,150] | 1e-4 | 270 |

| ResNet-200 | 512 | 0.1 | 1e-4 | multistep | [30,60,90,120,150] | 1e-4 | 270 | |

| CUB-200-2011 | ResNet-50 | 512 | 1e-3 | 1e-4 | multistep | [30,60,90] | 1e-4 | 200 |

| ResNet-101 | 512 | 1e-3 | 1e-4 | multistep | [30,60,90] | 1e-4 | 200 | |

| Stanford Cars | ResNet-50 | 512 | 1e-3 | 1e-4 | multistep | [30,60,90] | 1e-4 | 200 |

| ResNet-101 | 512 | 1e-3 | 1e-4 | multistep | [30,60,90] | 1e-4 | 200 | |

| FGVC-Aircraft | ResNet-50 | 512 | 1e-3 | 1e-4 | multistep | [30,60,90] | 1e-4 | 200 |

| ResNet-101 | 512 | 1e-3 | 1e-4 | multistep | [30,60,90] | 1e-4 | 200 |

Appendix B Datasets

We evaluate Patch AutoAugment (PAA) on the following datasets: CIFAR-10 (Krizhevsky et al., 2009), CIFAR-100 (Krizhevsky et al., 2009), ImageNet (Deng et al., 2009) and three fine-grained image recognition datasets (CUB-200-2011 (Wah et al., 2011), Stanford Cars (Krause et al., 2013) and FGVC-Aircraft (Maji et al., 2013)).

To be specific, both CIFAR-10 and CIFAR-100 have 50,000 training examples. Each image of size belongs to one of 10 categories. ImageNet dataset has about 1.2 million training images and 50,000 validation images with 1000 classes. Original ImageNet data have different sizes and we resize them to . In addition, we evaluate the performance of our proposed PAA on three standard fine-grained object recognition datasets. CUB-200-2011 (Wah et al., 2011) consists of 6,000 train and 5,800 test bird images distributed in 200 categories. Stanford Cars (Krause et al., 2013) contains 16,185 images in 196 classes. The FGVC-Aircraft dataset contains 10,200 images of aircraft, with 100 images for each of 102 different aircraft model variants, most of which are airplanes. The image size in the above three datasets is .

Appendix C Model Architecture

Here, we provide the detailed model architecture for each component in our PAA augmentation policy model, including feature extractor network, actor network and critic network. We use the pre-trained on ImageNet ResNet-18 backbone (excluding the final avgpool and softmax layer) to extract the deep features of the image and the patch, which are denoted as the state and the observation respectively. As for the actor network and the critic network, the detailed model architectures are shown in Table 8.

Appendix D Hyperparameters

We detail various target models hyperparameters (e.g., batch size, learning rate and training epochs) on CIFAR-10, CIFAR-100, ImageNet, CUB-200-2011, Stanford Cars and FGVC-Aircraft in Table 9. We do not specifically tune these hyperparameters, and all of these are consistent with previous works (Cubuk et al., 2018; Lim et al., 2019; Dabouei et al., 2021; Du et al., 2020; Chen et al., 2019). In our PAA model, we set time horizon , i.e., the augmentation policies take actions at every time step for more precise control. In addition, we use the SGD optimizer with an initial learning rate of 1e-4 to train the actor network and the critic network.

Appendix E More Grad-CAM Visualization

In this section, we provide more Grad-CAM (Selvaraju et al., 2017) results of ResNet-50 models trained using baseline augmented data, AA (Cubuk et al., 2018) and our proposed PAA, respectively, as shown in Figure 5. The visualization results demonstrate that our proposed PAA improves the localization ability of the target network and tends to help the target network focus on more parts of the foreground object. In short, our proposed PAA make the target network focus on the important or representative regions closely related to the class within an image.