Machine Learning Infused Distributed Optimization for Coordinating Virtual Power Plant Assets

Abstract

Amid growing interest in deploying Distributed Energy Resources (DERs), the Virtual Power Plant (VPP) has emerged as a pivotal tool for aggregating diverse DERs and facilitating their participation in wholesale energy markets. These deployments have been fueled by the Federal Energy Regulatory Commission’s Order 2222, which makes DERs and VPPs competitive across market segments. However, the diversity and decentralized nature of DERs present significant challenges to the scalable coordination of VPP assets. To address efficiency and speed bottlenecks, this paper presents a novel machine learning-assisted distributed optimization method to coordinate VPP assets. Our method, Learning to Optimize the Optimization Process for Multi-agent Coordination, adopts a multi-agent coordination perspective in which each VPP agent manages multiple DERs and uses neural network approximators to expedite the solution search. The method employs a gauge map to guarantee strict compliance with local constraints, effectively reducing the need for additional post-processing steps. Our results highlight the advantages of the proposed method, showing faster solution times per iteration and significantly reduced convergence times. The method outperforms conventional centralized and distributed optimization methods in optimization tasks that require repetitive and sequential execution.

Index Terms:

Virtual Power Plants (VPPs), Alternating Direction Method of Multipliers (ADMM), Distributed Optimization, Distributed Energy Resources (DERs), Learning to Optimize the Optimization Process (LOOP), Collaborative Problem-solving

I Introduction

I-A Motivation

As global energy sectors transition towards sustainability, the role of Distributed Energy Resources (DERs) has become increasingly significant. However, the participation of DERs in competitive electricity markets remains a challenge [1]. While many DERs are capable of providing wholesale market services, they often individually fall short of the minimum size thresholds established by Independent System Operators (ISOs) and may not meet performance requirements [2]. As a solution to these challenges, Virtual Power Plants (VPPs) have emerged to aggregate diverse DERs, creating a unified operating profile for participation in wholesale markets and providing services to system operators [3]. Further promoting the aggregation of DERs, the Federal Energy Regulatory Commission’s (FERC’s) Order 2222, issued in September 2020, allowed DERs to compete on equal terms with other resources in ISO energy, capacity, and ancillary service markets [2]. The FERC regulatory advancement strengthens the position of DERs and VPPs in the market.

Despite their promising potential, the massive, decentralized, diverse, heterogeneous, and small-scale nature of DERs poses significant challenges to traditional centralized approaches, especially in terms of computational efficiency and speed. Centralized controls for VPPs require global information from all DERs, making them susceptible to catastrophic failures if centralized nodes fail and potentially compromising the privacy of DER owners’ information. To address these issues, there is a growing demand for efficient, scalable, distributed and decentralized optimization techniques. Our study aims to tackle these challenges and develop a solution that can efficiently harness the benefits of DERs, thereby unlocking the full potential of VPPs.

I-B Related Work

I-B1 VPP Functionalities and Objectives

VPPs act as aggregators for a variety of DERs, playing a pivotal role in mitigating integration barriers between DERs and grid operations [4]. In what follows, we highlight recent insights from extensive research on strategies for coordinating DERs within VPPs. For instance, optimization schemes for coordinating DERs within VPPs can be customized to achieve various objectives, including:

I-B2 Shortcomings of Centralized Coordination Methods

Today’s centralized optimization methods are not designed to cope with the decentralized, diverse, heterogeneous, and small-scale nature of DERs. Recent studies have shown that integrating DERs at scale may adversely affect the operational efficiency and speed of today’s tools [21].

Major challenges of centralized management strategies include:

- Scalability issues, which become more pronounced as more DERs are added to the network, because managing a growing set of variables increases computational demands.

- Security and privacy risks, as centralized decision-making models require comprehensive data from all DERs [22].

- Severe system disruptions resulting from dependence on a single centralized node, since a failure in that node poses a significant operational risk.

- Significant delays in the decision-making process due to strain on the communication infrastructure, a situation worsened by continuous data communication and the intermittent nature of DERs.

- Adaptability challenges, as centralized systems struggle to respond in a timely manner to network changes. This limitation stems from their need for a comprehensive understanding of the entire system to make informed decisions [23].

- Logistical and political challenges of fitting diverse and intricate DERs into a comprehensive centralized optimization strategy that spans various regions and utilities [24].

In response to these challenges, there is a growing demand and interest in the development and implementation of efficient, scalable, and decentralized optimization approaches.

I-B3 State-of-the-art in Distributed Coordination

Distributed coordination methods organize DERs into clusters, with each one treated as an independent agent with capabilities for communication, computation, data storage, and operation, as demonstrated in previous work [25]. A distributed configuration enables DERs to function efficiently without dependence on a central controller. Distributed coordination paradigms, which leverage the autonomy of individual agents, have played a crucial role in the decentralized dispatch of DERs, as highlighted in recent surveys [22].

Among the numerous distributed optimization methods proposed in power systems, the Alternating Direction Method of Multipliers (ADMM) has gained popularity for its versatility across different optimization scenarios. Recent examples include a distributed model that minimizes the dispatch cost of DERs in VPPs while accounting for network constraints [18]. Another noteworthy contribution is a fully distributed methodology that combines ADMM and consensus optimization protocols to address transmission line limits in VPPs [21]. Li et al. [26] introduced a decentralized algorithm for demand response optimization of electric vehicles within a VPP. To improve the robustness of VPPs, another decentralized algorithm based on message queuing has been proposed to enhance system resilience, particularly in cases of coordinator disruptions [27].

I-B4 Challenges of Existing Distributed Coordination Methods

Despite their many advantages, most distributed optimization techniques, even those with convergence guarantees, require significant parameter tuning to ensure numerical stability and practical convergence. Real-time energy markets impose operational constraints that require frequent updates, sometimes as often as every five minutes throughout the day, as indicated by [28]. Consequently, the DER dispatch problem within a VPP must be re-solved frequently and in a timely manner. Nevertheless, the iterative nature of these optimization techniques can significantly increase computation time, restricting their utility in time-sensitive scenarios. Moreover, optimization performance does not necessarily improve even when identical or analogous dispatch problems are encountered frequently, which leads to computational inefficiency.

To address these limitations, Machine Learning (ML) has been deployed to enhance the efficiency of optimization procedures, as discussed in [29]. The utilization of neural networks can expedite the search process and reduce the number of iterations needed to identify optimal solutions. Furthermore, neural approximators can continually enhance their performance as they encounter increasingly complex optimization challenges, as demonstrated in [30].

ML-assisted distributed optimizers can be broadly divided into three categories: supervised learning, unsupervised learning, and reinforcement learning. In the realm of supervised learning, [31] presents a data-driven method to expedite the convergence of ADMM in solving the distributed DC optimal power flow (DC-OPF) problem, using penalty-based techniques to achieve local feasibility. We have also proposed an ML-based ADMM method for the DC-OPF problem that provides rapid solutions to the primal and dual sub-problems in each iteration [32]. Additional applications of supervised learning appear in [33] and [34], where ML algorithms provide warm-start points for ADMM. Unsupervised learning is exemplified by [35], which proposes a learning-assisted asynchronous ADMM method that leverages k-means for anomaly detection. Reinforcement learning has been applied to train neural network controllers for DER voltage control [36], frequency control [37], and optimal transactions [38].

Although these studies showcase the potential of ML for adaptive, real-time DER optimization in decentralized VPP models, they do not fully develop ML-infused distributed optimization methods to improve computation speed while ensuring solution feasibility.

I-C Contributions

In this paper, we propose an ML-assisted method that replaces the building blocks of the ADMM-based distributed optimization technique with neural approximators. Our method is referred to as Learning to Optimize the Optimization Process for Multi-agent Coordination. We employ it to find a multi-agent solution to the power dispatch problem in DER coordination within a VPP. In the multi-agent VPP configuration, each agent may control multiple DERs. The proposed method enables each agent to predict its local power profiles by communicating with its neighbors. All agents collaborate to reach a near-optimal power dispatch solution while adhering to both local and system-level constraints.

The use of neural networks expedites the search process and reduces the number of iterations required to identify optimal solutions. Additionally, unlike restoration-based methods, the approach does not require post-processing steps to enhance feasibility, because local constraints are inherently enforced through a gauge mapping method [39] and coupled constraints are penalized through ADMM iterations. This paper advances our recent work in [32], which focused on speeding up ADMM-based DC-OPF calculations through efficient approximation of the primal and dual sub-problems. While [32] tackled the DC-OPF problem, the present paper extends that model to incorporate individual VPP assets, addressing the DER coordination problem. In terms of methodology, [32] employs ML to facilitate both the primal and dual updates of ADMM, which requires neighboring agents to share updated global variables, local copies of global variables, and Lagrangian multipliers. This work instead replaces the two ADMM update procedures with a single data infusion step that reduces the agents’ communication and computation burden.

II Problem Formulation

II-A Compact Formulation

II-A1 The compact formulation of the original optimization problem

The centralized optimization function is:

| (1) |

where represents the collection of optimization variables across all agents. Note that denotes vector concatenation, and indicates the optimization variable vector of agent . Similarly, encompasses all input parameters across agents, with indicating agent ’s input parameter vector. The overall objective function is captured by , where stands for agent ’s objective. Lastly, is the collection of all agents’ constraint sets.

II-A2 The compact formulation at the multi-agent level

Here, we introduce the agent-based method to distribute computation responsibilities among agents. Let the variable vector of each agent, , consist of both local and global variables, which can be partitioned as . Here, captures the local variables of agent , while encapsulates the global variables shared among neighboring agents. To enable distributed computations, each agent maintains a local copy vector of other agents’ variables, , which mimics the global variables owned by neighboring agents.

Agent-level computations

| (2a) | ||||

| s.t. | (2b) | |||

| (2c) | ||||

where denotes agent ’s local constraint set. Here, denotes the global variables owned by neighboring agents, and is an element selector matrix. The distributed optimization process and inter-agent information exchange drive the local copies into agreement with the shared global variables.

Inter-agent Information Exchange

| (3) | |||

| (4) |

The dual update procedure (3) adjusts the Lagrangian multipliers , which enforce consensus between agent and its neighbors. Here, represents the differences between agent ’s local copies and the global variables from neighboring agents, and is a penalty parameter.

In (4), captures the compact form of an optimization problem that reduces the gap between local copies of global variables while respecting the constraints of individual agents.
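A minimal sketch of the dual step in (3), assuming the consensus residual is the elementwise difference "local copy minus neighbor's global value" and that multipliers move by the penalty parameter times that residual (the usual ADMM form). The function name and the plain-list representation are illustrative, not the authors' implementation.

```python
from typing import List


def dual_update(lam: List[float], local_copies: List[float],
                neighbor_globals: List[float], rho: float) -> List[float]:
    """One multiplier step in the spirit of (3): lam <- lam + rho * (copy - global)."""
    if not (len(lam) == len(local_copies) == len(neighbor_globals)):
        raise ValueError("vectors must have equal length")
    return [l + rho * (c - g) for l, c, g in zip(lam, local_copies, neighbor_globals)]


# Two consensus pairs: the first copy already agrees, the second lags by 0.5 kW.
new_lam = dual_update([0.0, 0.0], [10.0, 4.5], [10.0, 5.0], rho=2.0)
print(new_lam)  # [0.0, -1.0]
```

Only pairs that disagree change their multiplier, which is how the penalty steers local copies toward the neighbors' values over the iterations.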

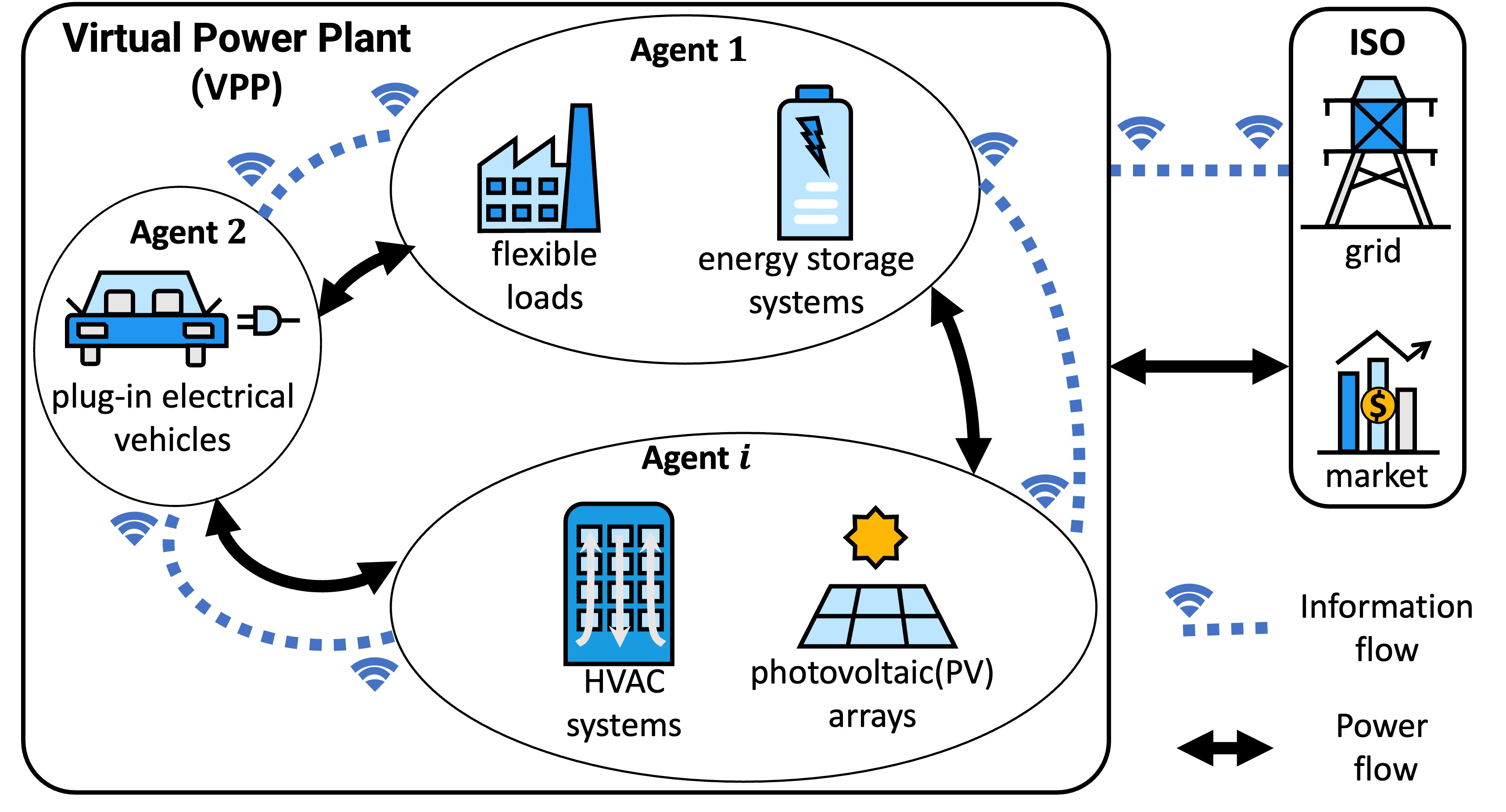

II-B VPP Model

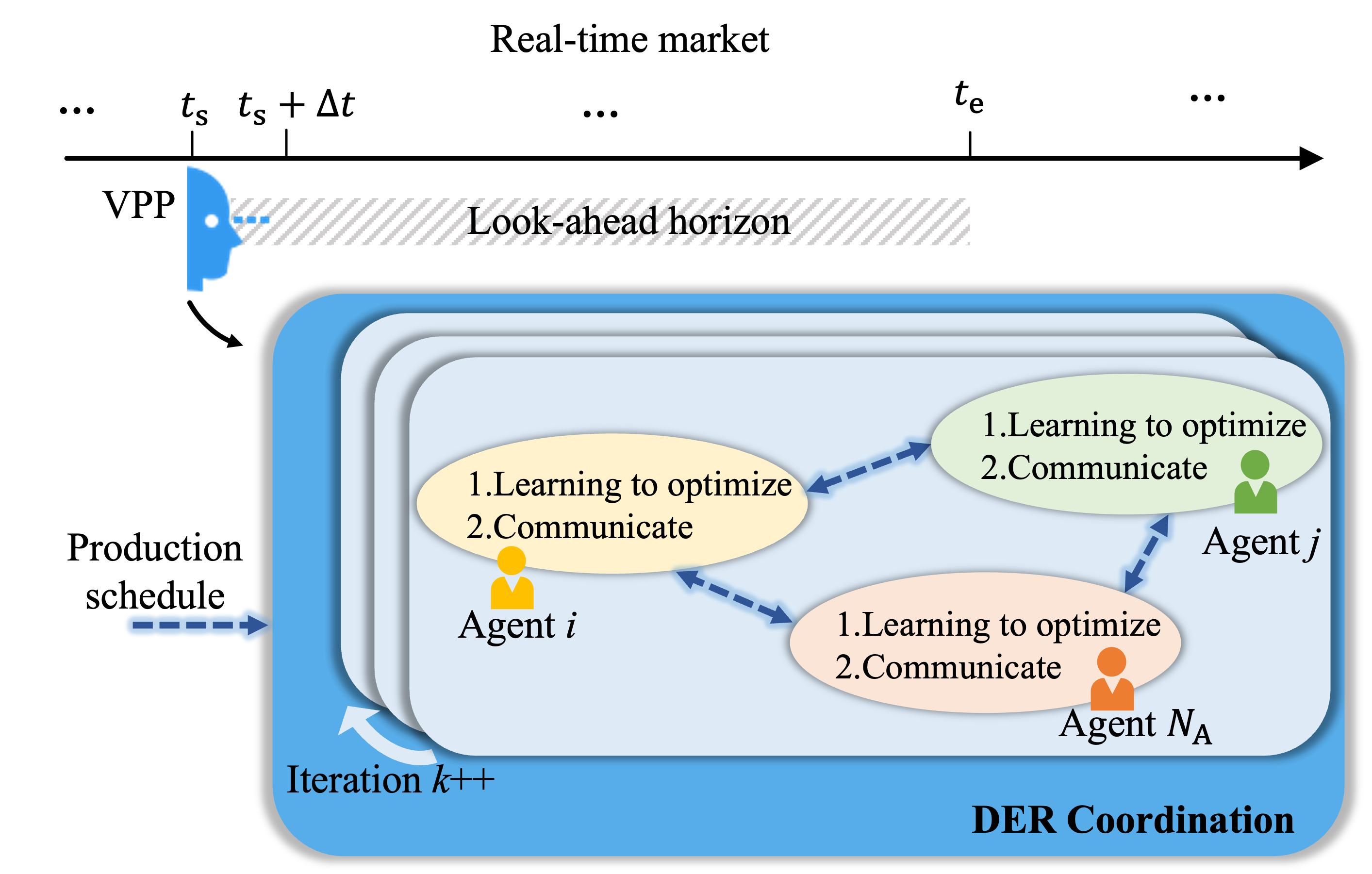

The considered VPP consists of a number of agents, each denoted by index . Every agent aggregates a diverse set of DERs, including flexible loads (FLs), energy storage systems (ESSs), heating, ventilation, and air conditioning (HVAC) systems, plug-in electric vehicles (PEVs), and photovoltaic (PV) arrays, as shown in Fig. 1. These agents might be connected to the networks of different utilities. The primary objective of the VPP is to optimize the aggregate behavior of all agents while accounting for the agents’ utility functions.

In this paper, we assume that the VPP operates within a two-settlement energy market composed of a day-ahead and a real-time market. Upon clearing of the day-ahead market, the VPP decides on hourly production schedules. The real-time market, also known as the imbalance market, is designed to settle deviations from day-ahead commitments. Real-time production schedules are set in 5-minute increments and are denoted as .

The proposed method is designed for the real-time market, where a VPP solves a dispatch optimization across its assets (agents) to honor its commitment over a given time scale, , where and represent the starting and ending times, respectively. In other words, the VPP must fulfill the production schedule while minimizing the agents’ overall cost. Typically, the VPP uses 5-minute binding intervals ( h) for the real-time market and adopts a look-ahead horizon , ranging from 5 minutes up to 2 hours [40], for the real-time dispatch optimization. The detailed dispatch optimization problem is presented next.
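To give a sense of the workload these settings imply, the counts below assume the dispatch problem is re-solved at every 5-minute binding interval and that the look-ahead horizon spans a whole number of intervals; here $T$ denotes the horizon length (our notation for illustration).

```latex
\Delta t = 5\ \text{min} = \tfrac{1}{12}\ \text{h}, \qquad
\frac{24\ \text{h}}{\Delta t} = 288\ \text{dispatch problems per day}, \qquad
\frac{T}{\Delta t} \in \left\{ \frac{5\ \text{min}}{5\ \text{min}}, \ldots, \frac{120\ \text{min}}{5\ \text{min}} \right\} = \{1, \ldots, 24\}\ \text{intervals per horizon}.
```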

II-C Centralized Formulation of the VPP Coordination Problem

This subsection presents the centralized form of the power dispatch problem solved by a VPP over various assets for every time step . The asset constraints are:

II-C1 Constraints Pertaining to Flexible Loads

The power of a flexible load should be within a pre-defined operation range , , :

| (5) |

II-C2 Constraints Pertaining to Energy Storage Systems

and , the charging (or discharging ) power of the energy storage system must not exceed , as indicated in (6). Also, (7) defines the state of charge (SoC), and (8) bounds it. Here, and denote the charging and discharging efficiencies, and denotes the storage capacity.

| (6) | ||||

| (7) | ||||

| (8) |
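A candidate storage schedule can be checked against the power and SoC limits with a few lines of code. This sketch assumes the common recursion $SoC_{t+1} = SoC_t + (\eta^c P^c_t - P^d_t/\eta^d)\,\Delta t / E$ with SoC expressed as a fraction of capacity $E$; the exact form of (7), the function names, and all numbers are assumptions for illustration.

```python
from typing import List


def soc_trajectory(soc0: float, p_ch: List[float], p_dis: List[float],
                   eta_ch: float, eta_dis: float, capacity_kwh: float,
                   dt_h: float) -> List[float]:
    """SoC (fraction of capacity) under the assumed recursion standing in for (7)."""
    soc = [soc0]
    for pc, pd in zip(p_ch, p_dis):
        # Charging stores eta_ch * pc; discharging pd draws pd / eta_dis from the cells.
        soc.append(soc[-1] + (eta_ch * pc - pd / eta_dis) * dt_h / capacity_kwh)
    return soc


def ess_feasible(p_ch: List[float], p_dis: List[float], soc: List[float],
                 p_max: float, soc_min: float, soc_max: float, tol: float = 1e-9) -> bool:
    """Check power limits in the spirit of (6) and SoC bounds in the spirit of (8)."""
    power_ok = all(-tol <= p <= p_max + tol for p in p_ch + p_dis)
    soc_ok = all(soc_min - tol <= s <= soc_max + tol for s in soc)
    return power_ok and soc_ok


# Hypothetical 20 kWh unit, two 5-minute steps: charge at 10 kW, then discharge at 9 kW.
p_ch, p_dis = [10.0, 0.0], [0.0, 9.0]
soc = soc_trajectory(0.5, p_ch, p_dis, eta_ch=0.9, eta_dis=0.9, capacity_kwh=20.0, dt_h=5 / 60)
feasible = ess_feasible(p_ch, p_dis, soc, p_max=15.0, soc_min=0.1, soc_max=0.9)
```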

II-C3 Constraints Pertaining to Heating, Ventilation, and Air Conditioning Systems

The inverter-based heating, ventilation, and air conditioning model [41] is presented below with consumption power denoted as .

| (9) |

where is the indoor temperature at time , is the forecasted outdoor temperature, is the inertia factor, is the coefficient of performance, and is the thermal conductivity. Equation (10) imposes the adaptive comfort model , and (11) limits the HVAC power to the rated size of the air-conditioning unit .

| (10) | |||

| (11) |

II-C4 Constraints Pertaining to Plug-in Electric Vehicles (PEV)

and , the PEV charging power must remain within the range given in (12). Further, (13) requires agent ’s cumulative charging energy to meet the energy needed for the daily commute [42].

| (12) | |||

| (13) |

II-C5 Constraints Pertaining to Photovoltaic Arrays

The photovoltaic power generation, given by (14), is determined by the solar irradiance-to-power conversion function, where represents the solar radiation intensity, denotes the surface area, and is the conversion efficiency.

| (14) |
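As a numerical illustration, assume (14) takes the usual product form of efficiency, surface area, and irradiance; the symbols below are our notation and the values are hypothetical, not taken from the case study.

```latex
P^{\mathrm{pv}}_t = \eta^{\mathrm{pv}}\, S\, I_t, \qquad
\eta^{\mathrm{pv}} = 0.2,\; S = 100\ \mathrm{m}^2,\; I_t = 0.8\ \mathrm{kW/m^2}
\;\Rightarrow\; P^{\mathrm{pv}}_t = 0.2 \times 100 \times 0.8 = 16\ \mathrm{kW}.
```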

II-C6 Constraints of Network Sharing

The net power of agent , , is given below. Note that denotes the inflexible load.

| (15) |

Local distribution utility constraints are enforced by (16), while (17) guarantees that the VPP’s output honors the production schedules of both energy markets.

| (16) | |||

| (17) |

II-C7 Objective Function

The objective function for the power dispatch problem, i.e., (18), includes:

Minimizing the operation and maintenance costs of energy storage systems

represents the unit maintenance cost.

Minimizing deviations between the actual and preferred consumption profiles of flexible loads

is the inconvenience coefficient. Here, specifies the preferred consumption level [43].

Mitigating thermal discomfort costs for HVAC systems

is the cost coefficient, indicates the optimal comfort level, and the binary variable denotes the occupancy state (1 if occupied, 0 if vacant).

| (18) |

II-C8 Centralized Optimization Problem

Combining constraints (5)-(17) and the objective function (18), we formulate the power dispatch problem. Note that this problem must be re-solved at each time instance in the real-time market. For a given agent , the optimization variables over the time interval are denoted by , while its inputs over the same interval are represented as :

| (19) | |||

| (20) |

Let and . The DER coordination problem can be formulated as (21) or as follows,

| (21a) | ||||

| s.t. | (21b) | |||

| (21c) | ||||

II-D Agent-based Model for the VPP Coordination Problem

Agent-based problem-solving lends itself well to addressing the computational needs of the VPP coordination problem. In this subsection, we focus on finding a distributed solution for (21) (or (1)). While each sub-problem optimizes the operation of individual agents, communication enables individual agents to collectively find the system-level optimal solution.

In the context of distributed problem-solving, it is important to point out the unique challenges posed by coupling constraints such as (17). These constraints tie some variables of agent to those of agent , creating intricate relationships among several agents. Such coupling prevents (21) from being separated into disjoint sub-problems.

As discussed in Section II-A, we define the variables that appear in multiple agents’ constraints as global variables, ,

| (22) |

In contrast, variables that appear only in non-overlapping constraints are referred to as local variables. That is, . We refer to agents whose variables are intertwined in a constraint as neighboring agents.

The ADMM method finds a decentralized solution to (21) by creating local copies of neighboring agents’ global variables and adjusting these copies iteratively to satisfy both the local and consensus constraints. The adjustment continues until the local copies align with the original global variables; for convex problems, the point reached is then a global minimum, found in a decentralized manner.

In the power dispatch problem, we introduce , which is owned by agent and represents a copy of . Then, the coupled constraint (17) becomes a local constraint (23) and a consensus constraint (24):

| (23) | |||

| (24) |

Let denote all local copies owned by agent that imitate other neighboring agents’ global variables. Then, problem (21) can be reformulated in terms of and as,

| (25a) | |||

| (25b) | |||

| (25c) | |||

| (25d) | |||

where, , , , , , and in (25b) and (25c) capture the compact form of constraints (5)-(16) and (23), and (25d) is the compact form of constraint (24). Here, is the element selector matrix that maps elements from vector to vector based on the consensus constraint (24). Each row of contains a single 1 at the position corresponding to the desired element of and 0s elsewhere. Therefore, represents the vector of global variables that agent must imitate.
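The element selector matrix can be sketched with plain lists: each row holds a single 1, so multiplying it with a neighbor's variable vector extracts only the entries that must be imitated. The ordering of the neighbor's vector (two net-power entries followed by an internal SoC value) and the helper names are hypothetical.

```python
from typing import List, Sequence


def selector_matrix(indices: Sequence[int], n_cols: int) -> List[List[int]]:
    """0/1 matrix whose r-th row has a single 1 in column indices[r]."""
    rows = []
    for idx in indices:
        if not 0 <= idx < n_cols:
            raise IndexError(f"index {idx} outside 0..{n_cols - 1}")
        row = [0] * n_cols
        row[idx] = 1
        rows.append(row)
    return rows


def matvec(matrix: List[List[int]], x: Sequence[float]) -> List[float]:
    """Plain matrix-vector product."""
    return [sum(m * v for m, v in zip(row, x)) for row in matrix]


# Hypothetical neighbor vector: [net power at t1, net power at t2, an internal SoC value].
neighbor_x = [12.0, 15.5, 0.6]
A = selector_matrix([0, 1], n_cols=3)     # pick only the two shared (global) entries
print(matvec(A, neighbor_x))              # [12.0, 15.5]
```

In practice the selection would be done by indexing rather than a dense product; the matrix form is kept here only to mirror the notation of (25d).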

II-E Updating Rules Within Agents

The standard form of ADMM solves problem (25) (or (2)) through the augmented Lagrangian function :

| (26a) | ||||

| (26b) | ||||

where is a positive penalty constant, and denotes the vector of Lagrangian multipliers for the consensus equalities between agent ’s copies and neighboring agent ’s global variables.

The search for a solution to (26) is performed through an iterative process (indexed by ). All agents will execute this process simultaneously and independently before communicating with neighboring agents. At the agent level, these updates manifest themselves as follows,

| (27) | |||

| (28) |

The dual update (27) adjusts the Lagrangian multipliers in proportion to the discrepancies between an agent’s local copies of variables (designed to emulate the global variables of its neighbors) and the actual global variables held by those neighbors. Subsequently, (28) solves the agent’s local optimization problem using data from other agents’ previous iteration.

Note that agent does not require all the updated values from other agents to evaluate (27) and (28). Agent primarily needs:

- Neighboring agents’ global variables: . In the context of the distributed DER problem, agent requires values of from its neighboring agent .

- Neighboring agents’ local copies mirroring agent ’s global variables: , where functions as a selector matrix. In the distributed DER context, agent requires from its neighboring agent .

We use to represent the set of variables that are owned by other agents but needed by agent to update (27) and (28). Finally, the agent-level updates are represented by (4) and (3).
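The mechanics of local copies, local constraints, consensus constraints, and dual updates can be seen on a two-agent toy problem. This sketch assumes quadratic costs $a_1(x_1-c_1)^2 + a_2(x_2-c_2)^2$ and a single coupling constraint $x_1 + x_2 = D$ standing in for (17); agent 1 owns $x_1$ and a copy $y$ of agent 2's $x_2$, so the coupling becomes the local constraint $x_1 + y = D$ (cf. (23)) plus the consensus constraint $y = x_2$ (cf. (24)). Note that it uses the textbook sequential (Gauss-Seidel) ADMM ordering, in which agent 2 sees agent 1's fresh copy, rather than the simultaneous updates described above; all names and numbers are hypothetical.

```python
from typing import Tuple


def clip(v: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, v))


def toy_two_agent_admm(c1: float, c2: float, demand: float, a1: float = 1.0,
                       a2: float = 1.0, rho: float = 2.0,
                       bounds: Tuple[float, float] = (0.0, 10.0),
                       iters: int = 50) -> Tuple[float, float, float, float]:
    """ADMM on min a1(x1-c1)^2 + a2(x2-c2)^2 s.t. x1 + x2 = demand, box bounds."""
    lo, hi = bounds
    x1, y, x2, lam = 0.0, 0.0, 0.0, 0.0      # zero initialization
    for _ in range(iters):
        # Agent 1: substitute y = demand - x1 (local constraint), minimize the
        # resulting 1-D quadratic in closed form, then clip to the box (exact in 1-D).
        x1 = clip((2 * a1 * c1 + lam + rho * (demand - x2)) / (2 * a1 + rho), lo, hi)
        y = demand - x1
        # Agent 2: receives y (agent 1's copy of x2) and updates its own variable.
        x2 = clip((2 * a2 * c2 + lam + rho * y) / (2 * a2 + rho), lo, hi)
        # Dual update on the consensus residual y - x2.
        lam += rho * (y - x2)
    return x1, y, x2, lam


x1, y, x2, lam = toy_two_agent_admm(c1=3.0, c2=5.0, demand=10.0)
print(round(x1, 6), round(x2, 6), round(lam, 6))  # 4.0 6.0 2.0
```

The exchanged quantities match the list above: agent 1 needs agent 2's global variable $x_2$, and agent 2 needs agent 1's copy $y$ of its own variable.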

The standard form of ADMM guarantees the feasibility of local constraints through (4) and penalizes violations of consensus constraints by iteratively updating the Lagrangian multipliers as in (3). In what follows, we propose an ML-based method to accelerate ADMM for decentralized DER coordination. The ADMM iterations will guide the consensus protocol, while the gauge map [39] is adopted to enforce hard local constraints.

III Proposed Methodology

III-A Overview of the Method

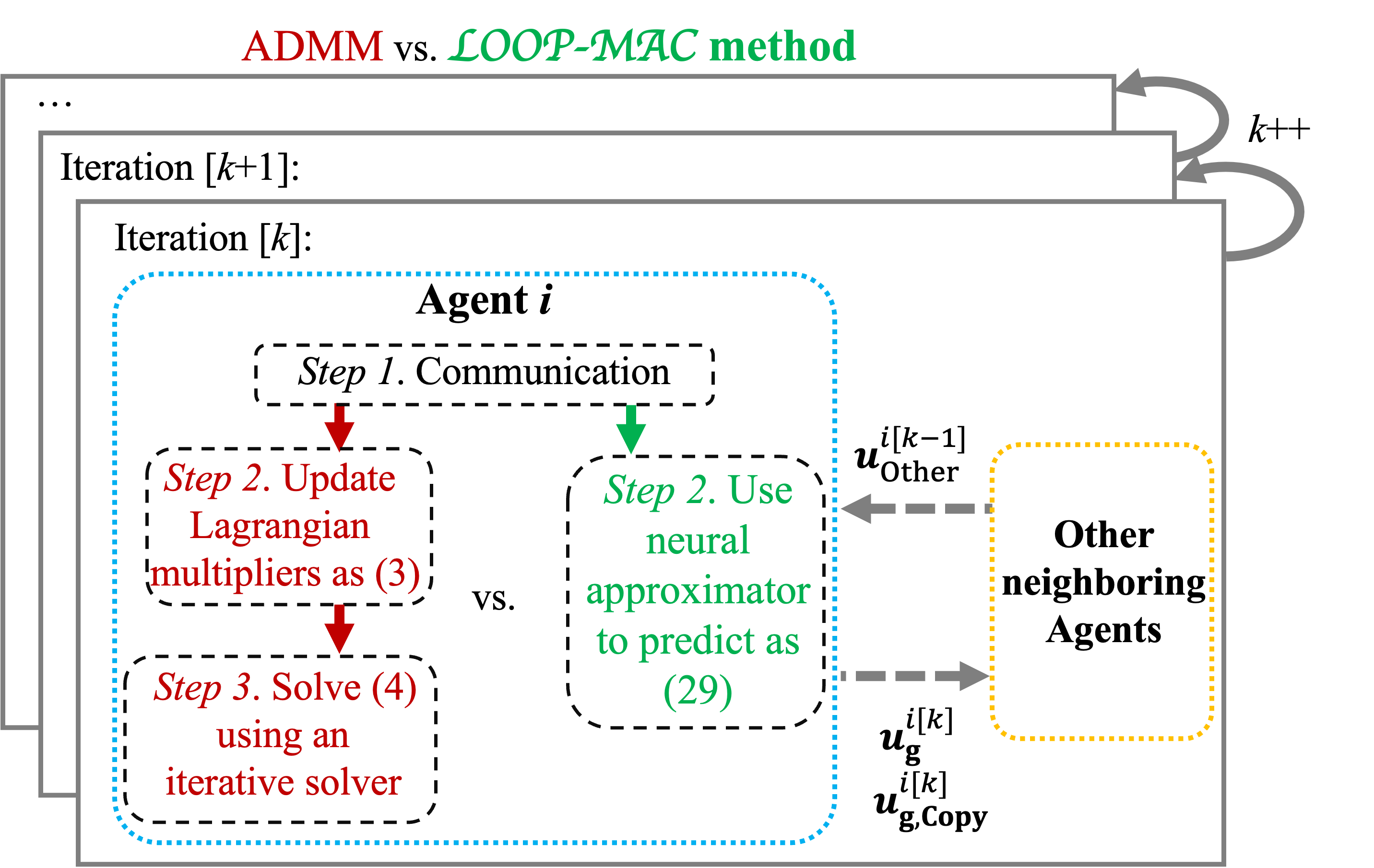

This section provides a high-level overview of how the method incorporates ML to accelerate the ADMM algorithm. As shown in Fig. 2, instead of solving the agent-level local optimization problems (4) with an iterative solver, we train agent-level neural approximators to map inputs directly to the optimized values of each agent’s optimization variables in a single feed-forward pass. The resulting prediction of each agent , denoted as , is trained to approximate the optimal solution of (2).

| (29) |

Pseudocode of the proposed method is given in Algorithm 1. The method includes two steps in each iteration. First, each agent receives the previous-iteration variables from its neighboring agents. Second, each agent uses its neural approximator to predict its optimal values.
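The two-step loop can be sketched as follows, assuming each trained approximator is exposed as a plain callable that takes the agent's own inputs and the neighbor variables received from the previous iteration. The signature, names, and the stand-in averaging "approximators" in the usage lines are hypothetical placeholders for trained networks.

```python
from typing import Callable, Dict, List

Vector = List[float]
# Hypothetical signature: (own inputs, {neighbor id: neighbor vars at k}) -> own vars at k+1
Approximator = Callable[[Vector, Dict[int, Vector]], Vector]


def run_learned_iterations(approximators: Dict[int, Approximator],
                           inputs: Dict[int, Vector],
                           neighbors: Dict[int, List[int]],
                           init: Dict[int, Vector],
                           n_iters: int) -> Dict[int, Vector]:
    state = {n: list(v) for n, v in init.items()}
    for _ in range(n_iters):
        # Step 1: each agent receives its neighbors' variables from the previous iteration.
        received = {n: {m: state[m] for m in neighbors[n]} for n in approximators}
        # Step 2: every agent runs one forward pass; all agents use the same snapshot.
        state = {n: approximators[n](inputs[n], received[n]) for n in approximators}
    return state


# Stand-in "approximators" that average the agent's own input with the neighbor's value.
approx = {
    1: lambda u, nb: [0.5 * (u[0] + nb[2][0])],
    2: lambda u, nb: [0.5 * (u[0] + nb[1][0])],
}
out = run_learned_iterations(approx, inputs={1: [4.0], 2: [8.0]}, neighbors={1: [2], 2: [1]},
                             init={1: [0.0], 2: [0.0]}, n_iters=30)
print(round(out[1][0], 4), round(out[2][0], 4))  # 5.3333 6.6667
```

Building the `received` snapshot before any agent updates keeps the updates simultaneous, matching the description of Algorithm 1.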

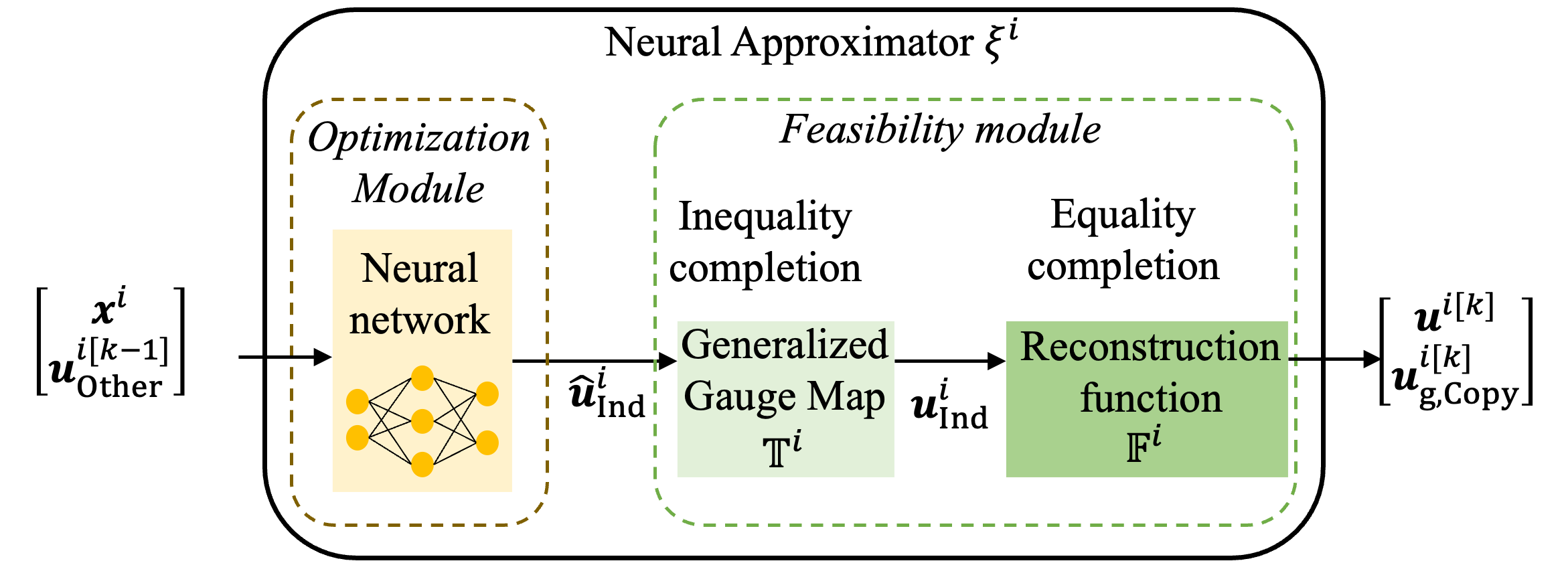

III-B Design of Neural Approximators Structures

Violations of consensus constraints are penalized by the ADMM iterations. In addition, we design each neural approximator’s structure to guarantee that its output satisfies the local constraints, i.e., . We adopt the Learning to Optimize the Optimization Process with Linear Constraints model proposed in [39] to develop each neural approximator . This model learns to solve optimization problems with hard linear constraints, applying variable elimination for equality completion and gauge mapping for inequality completion, and produces a feasible, near-optimal solution. In what follows, we present the main components of this model and how it applies to the VPP coordination problem.

III-B1 Variable Elimination

Based on the equality constraints given in (25b), the variables and can be categorized into two sets: the dependent variables and the independent variables . The dependent variables are inherently determined by the independent variables. For instance, in (9), the variable is dependent on ; hence, once is derived, can be calculated.

The function is introduced to establish the relationship between and , such that , shown in Fig. 3. A comprehensive derivation of can be found in [39]. By integrating into the definition of and substituting , the optimization problem of (4) can be restructured as a reduced-dimensional problem with as the primary variable. The corresponding constraint set for this reformulated problem is denoted by and presented as,

| (30) |

Therefore, as long as the prediction of the reformulated problem ensures , will produce the full-size vectors satisfying local constraints by concatenating and .
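To illustrate variable elimination with the HVAC example, the sketch below assumes (9) takes the common equivalent-thermal-parameter form $T_{t+1} = \varepsilon T_t + (1-\varepsilon)(T^{\mathrm{out}}_t - \eta P_t / A)$ for cooling; the exact form of (9), the function names, and all numbers are assumptions. Given the independent HVAC powers, the dependent indoor temperatures follow by forward substitution, and the full vector is the concatenation of both parts.

```python
from typing import List


def eliminate_indoor_temperature(t0: float, p_hvac: List[float], t_out: List[float],
                                 eps: float, cop: float, conductivity: float) -> List[float]:
    """Dependent indoor temperatures from independent HVAC powers (assumed cooling model)."""
    temps = [t0]
    for p, t_o in zip(p_hvac, t_out):
        temps.append(eps * temps[-1] + (1.0 - eps) * (t_o - cop * p / conductivity))
    return temps


def full_vector(independent: List[float], dependent: List[float]) -> List[float]:
    """Concatenate independent and dependent parts into the full-size variable vector."""
    return independent + dependent


p_hvac = [2.0, 2.0]                       # independent variables (kW), hypothetical
temps = eliminate_indoor_temperature(24.0, p_hvac, [30.0, 31.0],
                                     eps=0.9, cop=2.5, conductivity=0.5)
x_full = full_vector(p_hvac, temps[1:])   # [P_1, P_2, T_1, T_2]; T_0 is a given input
```

Because every dependent entry is computed from the independent ones, any prediction of the independent part automatically satisfies this equality constraint.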

III-B2 Gauge Map

After variable elimination, our primary objective is to predict such that it satisfies the constraint set . Instead of solving this problem directly, we use a neural network to find a virtual prediction that lies within the ℓ∞-norm unit ball (denoted as ), a set defined by upper and lower bounds. The architecture of the neural network ensures that the resulting remains confined within . We then introduce a bijective gauge mapping, represented as , to transform from to . As presented in [39], is a predefined function with an explicit closed-form representation:

| (31) |

The function is the Minkowski gauge of the set , while represents an interior point of . Moreover, the shifted set, , is defined as,

| (32) |

with representing the Minkowski gauge on this set.
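For a bounded polytope $\{x : Ax \le b\}$ with a strictly interior point $x_0$, the gauge map as we understand its construction in [39] scales a point $z$ of the ℓ∞ unit ball by the ratio of its ℓ∞ norm (the gauge of the ball) to the gauge of the shifted polytope, then shifts by $x_0$. For the shifted polytope $\{d : Ad \le b - Ax_0\}$, the gauge at $z$ is $\max_i a_i^\top z / (b_i - a_i^\top x_0)$, clipped below at zero. The function names and the toy constraint set are ours.

```python
from typing import List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def shifted_polytope_gauge(z: Sequence[float], A: List[List[float]],
                           b: Sequence[float], x0: Sequence[float]) -> float:
    """Minkowski gauge of {d : A d <= b - A x0} evaluated at z."""
    ratios = []
    for a_i, b_i in zip(A, b):
        slack = b_i - dot(a_i, x0)
        if slack <= 0:
            raise ValueError("x0 must lie strictly inside {x : A x <= b}")
        ratios.append(dot(a_i, z) / slack)
    return max(0.0, max(ratios))


def gauge_map(z: Sequence[float], A: List[List[float]],
              b: Sequence[float], x0: Sequence[float]) -> List[float]:
    """Map z from the l_inf unit ball onto the bounded polytope {x : A x <= b}."""
    z_norm = max(abs(v) for v in z)          # gauge of the l_inf unit ball is the l_inf norm
    if z_norm == 0.0:
        return list(x0)                      # the centre of the ball maps to the interior point
    g = shifted_polytope_gauge(z, A, b, x0)
    if g == 0.0:
        raise ValueError("polytope is unbounded along z")
    return [(z_norm / g) * v + x for v, x in zip(z, x0)]


# Hypothetical constraint set: 0 <= x1 <= 10, 0 <= x2 <= 4, x1 + x2 <= 12.
A = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0], [1.0, 1.0]]
b = [10.0, 0.0, 4.0, 0.0, 12.0]
x0 = [2.0, 1.0]                              # a strictly interior point
x = gauge_map([1.0, 1.0], A, b, x0)          # approximately [5.0, 4.0], on the facet x2 = 4
```

Points on the boundary of the unit ball land on the boundary of the polytope, and interior points land in its interior, so a network whose output stays in the ball always yields a feasible point.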

III-C Training the Neural Approximators

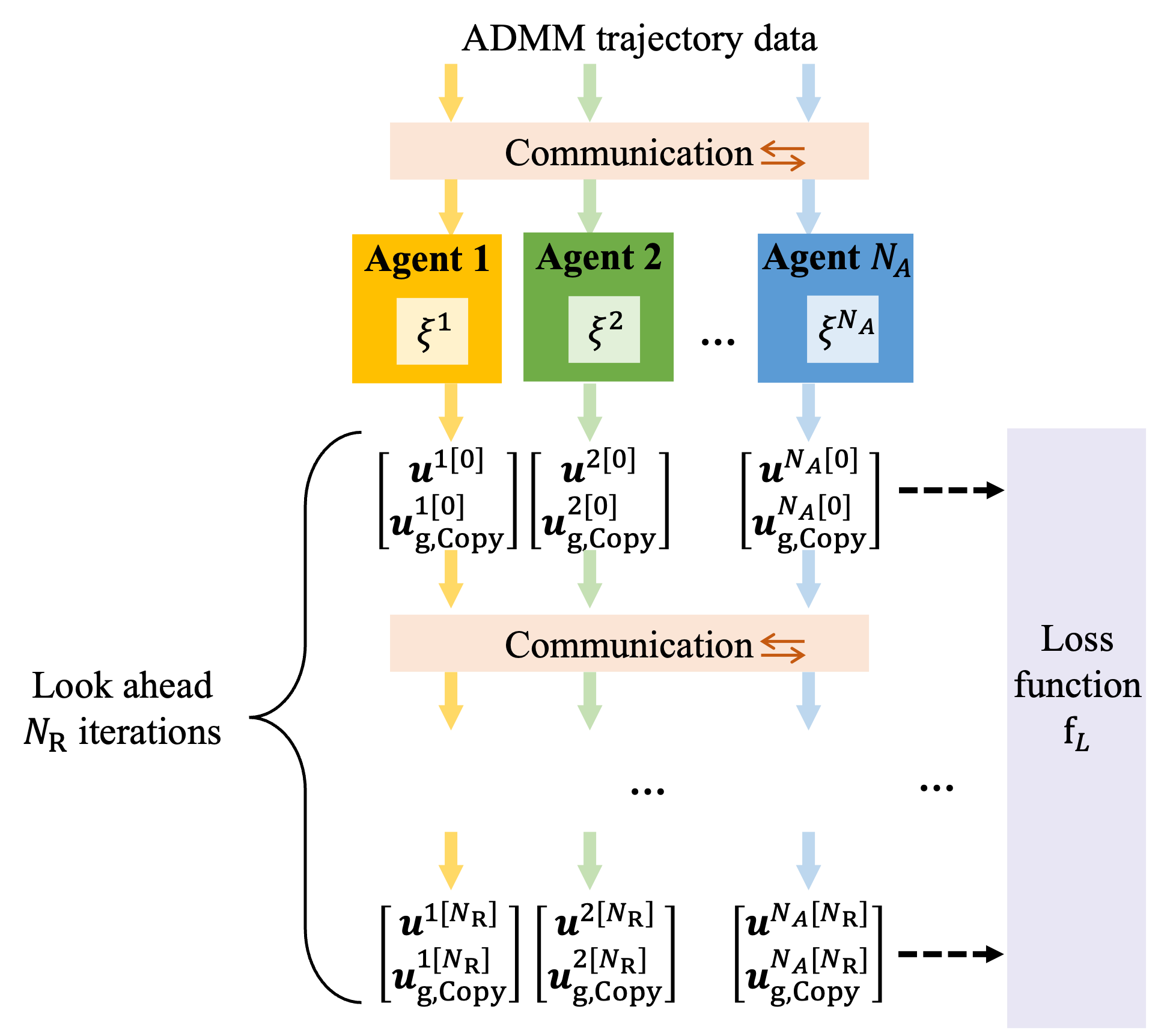

We use historical ADMM trajectories (i.e., ADMM applied to historical power demands) for training. Predicting the converged ADMM values is a time-series prediction problem: outputs from a given iteration are required by the subsequent iterations. This relationship implies that are contingent upon , derived from other agents’ outputs in the prior iteration. To capture this temporal dependency, our training approach adopts a look-ahead format that trains all neural approximators jointly in a recurrent manner, so that prior outputs from different agents are fed in as current inputs (see Fig. 4).

Suppose there are training data points, indexed and associated with their respective output by the superscript . As an initial step, ADMM is employed to generate all values of optimization variables required for training. Concurrently, the optimal solution pertaining to (2) is calculated. Subsequently, for recurrent steps, the loss function is defined as the cumulative distance between the prediction and the optimal solution . This summation spans all agents, every recurrent step, every iteration (), and all data points, as delineated in (33).

| (33) |
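Assuming the distance in (33) is the squared Euclidean distance (the text does not fix the norm), the nested sum over data points, iterations, recurrent steps, and agents can be written as below. The nesting order of the arrays is our choice for illustration.

```python
def recurrent_loss(pred, target) -> float:
    """Sum of squared distances over data points, iterations, recurrent steps, agents.

    pred[s][k][r][n] and target[s][k][r][n] are vectors for data point s, ADMM
    iteration k, recurrent step r, and agent n (a hypothetical nesting order).
    """
    total = 0.0
    for p_s, t_s in zip(pred, target):
        for p_k, t_k in zip(p_s, t_s):
            for p_r, t_r in zip(p_k, t_k):
                for p_n, t_n in zip(p_r, t_r):
                    total += sum((a - b) ** 2 for a, b in zip(p_n, t_n))
    return total


# Hypothetical sizes: 1 data point, 1 ADMM iteration, 2 recurrent steps, 2 agents.
pred = [[[[[1.0, 2.0], [0.0]], [[1.5, 2.0], [1.0]]]]]
target = [[[[[1.0, 1.0], [0.0]], [[1.0, 2.0], [1.0]]]]]
print(recurrent_loss(pred, target))  # 1.25
```

Note that `zip` silently truncates mismatched shapes, so a real training loop would validate array sizes first.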

IV Experimental Results

IV-A Experiment Setup

IV-A1 Test Systems

We examine a VPP consisting of three distinct agents, as illustrated in Fig. 5.

- Agent 1 manages inflexible loads, flexible loads, and energy storage systems.

- Agent 2 is responsible for inflexible loads and the operations of plug-in electric vehicles.

- Agent 3 oversees inflexible loads, heating, ventilation, and air conditioning systems, in addition to photovoltaics.

We derive the load profile from data recorded in central New York on July 24th, 2023 [44]. Both preferred flexible and inflexible loads typically range from 10 to 25 kW. The production schedule is set between 45 and 115 kW.

For plug-in electric vehicles, our reference is the average hourly public L2 charging station utilization on weekdays in March 2022 reported by Borlaug et al. [45]. In [45], the profile for ranges between 10 and 22 kW.

With regard to the heating, ventilation, and air conditioning systems, the target indoor temperature is maintained at . Guided by the ASHRAE (American Society of Heating, Refrigerating, and Air-Conditioning Engineers) standards [46], the acceptable summer comfort range is set to and . Outdoor temperature readings for New York City’s Central Park on July 24th, 2023 were obtained from the National Weather Service [47].

IV-A2 Training Data

A total of 20 ADMM iterations are considered, i.e., . This results in a dataset of data points. For model validation, data from odd time steps is designated for training, whereas even time steps are reserved for testing. The DER coordination problem includes 192 optimization variables alongside 111 input variables.

IV-A3 ADMM Configuration

The ADMM initialization values are set to zero. In our ADMM implementation, the parameter is set to . Optimization computations are carried out using the commercial solver Gurobi [49].

IV-A4 Neural Network Configuration

Our neural network models consist of a single hidden layer with 500 hidden units, using the Rectified Linear Unit (ReLU) activation function to introduce non-linearity. To ensure that resides within (the unit ball), the output layer uses the hyperbolic tangent (TanH) activation. Furthermore, 3 recurrent steps are considered, i.e., .
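A forward pass with this configuration (one hidden layer of 500 ReLU units and a TanH output layer) can be sketched in pure Python. The input and output sizes, the uniform initialization, and the function names are hypothetical; the point is that TanH keeps every output entry inside the ℓ∞ unit ball expected by the gauge map.

```python
import math
import random
from typing import List, Tuple

Layer = Tuple[List[List[float]], List[float]]


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Uniform(-1/sqrt(n_in), 1/sqrt(n_in)) weights and zero biases (a hypothetical choice)."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(layer: Layer, x: List[float]) -> List[float]:
    weights, bias = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def approximator_forward(x: List[float], hidden: Layer, output: Layer) -> List[float]:
    h = [max(0.0, v) for v in dense(hidden, x)]        # ReLU hidden layer
    return [math.tanh(v) for v in dense(output, h)]    # TanH bounds each entry to [-1, 1]


rng = random.Random(0)
n_in, n_hidden, n_out = 8, 500, 4    # 500 hidden units as stated; 8 inputs and 4 outputs are hypothetical
hidden_layer = init_layer(n_in, n_hidden, rng)
output_layer = init_layer(n_hidden, n_out, rng)
z = approximator_forward([rng.uniform(-1.0, 1.0) for _ in range(n_in)], hidden_layer, output_layer)
```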

IV-B Runtime Results

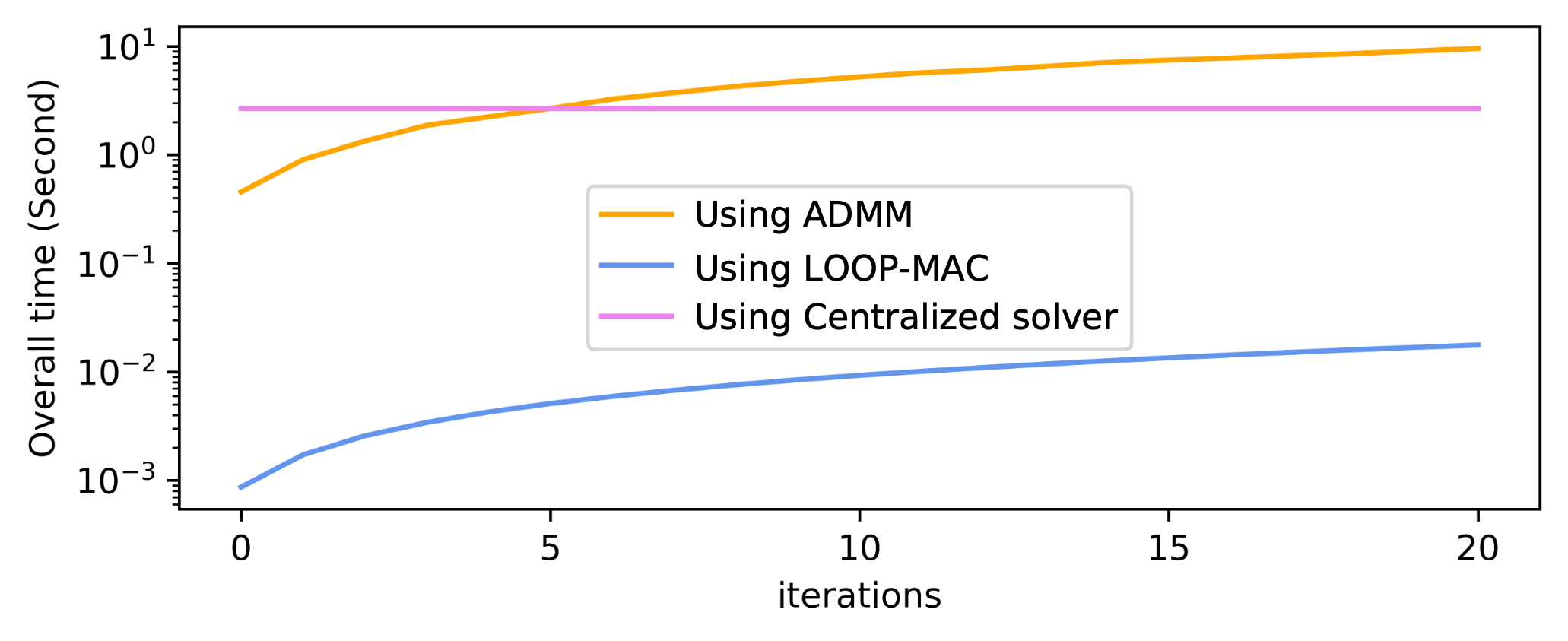

Fig. 6 illustrates the cumulative computation time across all agents and test data points over iterations. The performance comparison is conducted among the decentralized setup employing ADMM solvers, our proposed method, and traditional centralized solvers.

From the case study, we observe that the cumulative computation time of the classical ADMM solver exceeds the centralized solver’s solution time after approximately five iterations. Our proposed method significantly outperforms classical ADMM, achieving a roughly 500x speedup per iteration, and even surpasses the centralized solver in computation speed.

Table II reports the average computation time of a single iteration on a single data point. These results suggest that the proposed method would require around 3300 iterations to match the computation time of the centralized solver. However, as the convergence analysis below shows, the proposed method converges in only 10 iterations.

| Method | Time (ms) |
|---|---|
| Proposed method | 0.0060 |
| ADMM using Gurobi [49] | 3.2966 |
| Centralized formulation using Gurobi [49] | 19.4496 |
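As a quick arithmetic check on the rounded Table II averages, the per-iteration speedup over ADMM, the number of learned iterations whose total time equals one centralized solve, and the number of ADMM iterations whose total time equals one centralized solve are:

```latex
\frac{3.2966\ \text{ms}}{0.0060\ \text{ms}} \approx 549, \qquad
\frac{19.4496\ \text{ms}}{0.0060\ \text{ms}} \approx 3.24 \times 10^{3}, \qquad
\frac{19.4496\ \text{ms}}{3.2966\ \text{ms}} \approx 5.9 .
```

The first ratio corresponds to the roughly 500x per-iteration speedup, and the last is consistent with the crossover after approximately five to six ADMM iterations in Fig. 6.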

IV-C Optimality and Feasibility Results

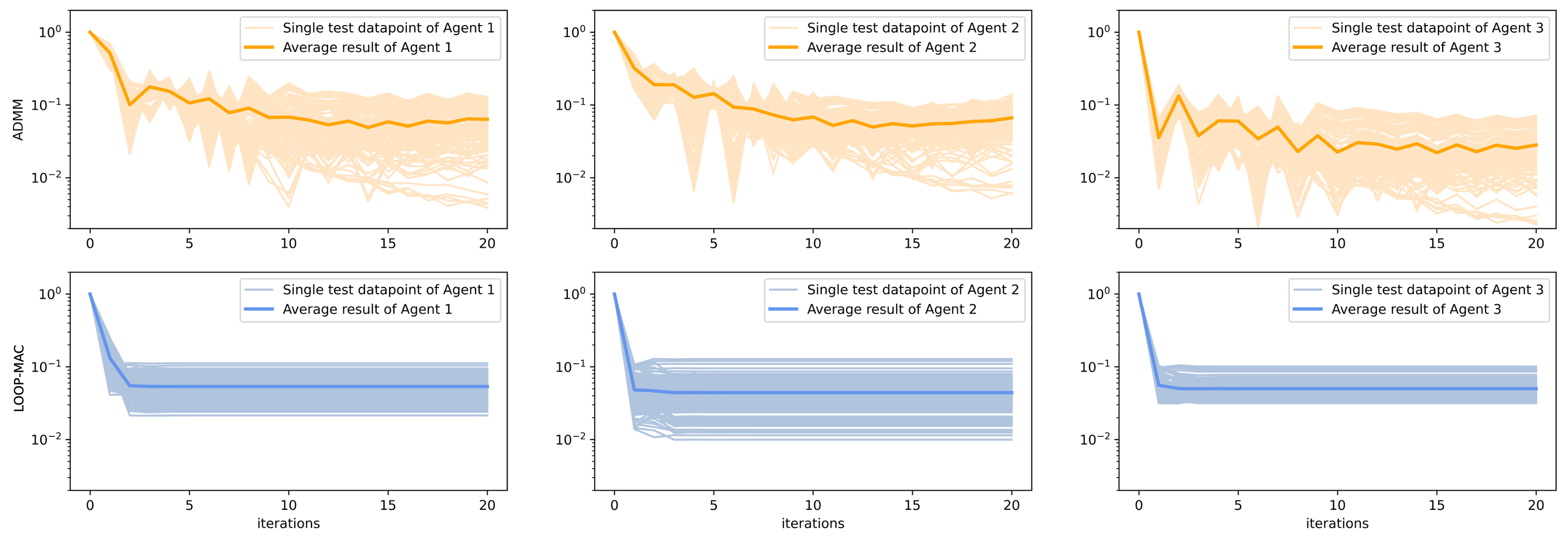

Fig. 7 presents the optimality deviation rate for both the traditional ADMM algorithm and the proposed method. This metric quantifies how far the operational profiles of the DERs deviate from the optimal profiles obtained by solving the centralized problem. The proposed method converges faster and reduces the deviation rate more quickly than the standard ADMM approach.

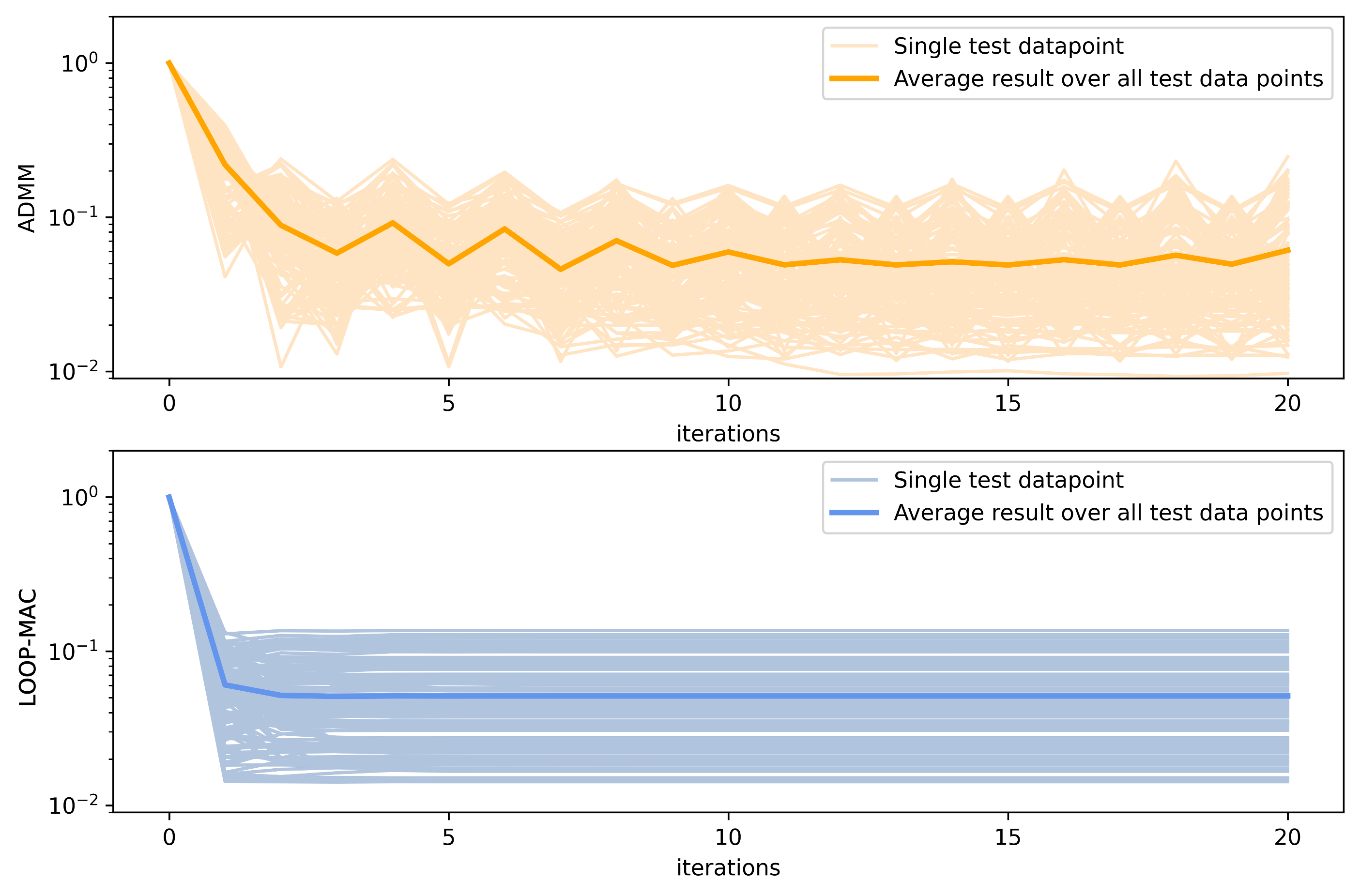

Similarly, Fig. 8 shows the VPP schedule deviation rate for ADMM and the proposed method. This rate measures the difference between the actual VPP production schedule and its planned output; in our optimization problem, it equals the feasibility gap rate of the coupled constraints, as shown in (17). The proposed method converges faster and more stably: its VPP schedule deviation rate declines more rapidly and then remains steady, whereas traditional ADMM oscillates more and converges more slowly.
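Because the VPP schedule deviation rate is a feasibility gap of the coupled constraints, it can be evaluated from the aggregate DER output alone. The sketch below does not reproduce (17); it assumes a coupled constraint of the form "sum of DER outputs equals the planned VPP output in each period" and a relative ℓ2 norm, and the data layout and values are hypothetical.

```python
import numpy as np


def vpp_schedule_deviation_rate(p: np.ndarray, schedule: np.ndarray) -> float:
    """Relative gap between aggregate DER output and the planned VPP schedule.

    p: shape (n_der, T), power of each DER over T periods (hypothetical layout).
    schedule: shape (T,), planned VPP output.
    ASSUMPTION: coupled constraint sum_i p[i, t] == schedule[t]; relative l2 norm.
    """
    aggregate = p.sum(axis=0)  # actual VPP production in each period
    return float(np.linalg.norm(aggregate - schedule) / np.linalg.norm(schedule))


p = np.array([[1.0, 2.0],
              [2.0, 2.5]])       # two DERs over two periods (hypothetical)
schedule = np.array([3.0, 5.0])  # planned VPP output (hypothetical)
gap_rate = vpp_schedule_deviation_rate(p, schedule)
```

Here the first period is met exactly and the second falls 0.5 short, so the rate is 0.5 divided by the norm of the schedule.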

Table III summarizes post-convergence metrics for both algorithms across all agents, iterations, and test data points. The minimum deviation rates achieved by the proposed method are slightly higher than those of classical ADMM, but its average deviation is lower, its variance is much lower, and its maximum deviation is lower, markedly so for the VPP schedule (0.1363 vs. 0.2471). These smaller variances and maxima indicate that the proposed method is robust across problem instances, which matters most when similar optimization problems must be solved repeatedly. In summary, the proposed method speeds up each ADMM iteration by roughly 500× and needs fewer iterations to converge, so its overall run time is significantly shorter.

| Metric | Statistic | ADMM | Proposed method |
|---|---|---|---|
| Optimality deviation rate | Average | 0.0527 | 0.0492 |
| | Variance | 0.0012 | 0.0003 |
| | Maximum | 0.1396 | 0.1278 |
| | Minimum | 0.0023 | 0.0099 |
| VPP schedule deviation rate | Average | 0.0611 | 0.0512 |
| | Variance | 0.0026 | 0.0008 |
| | Maximum | 0.2471 | 0.1363 |
| | Minimum | 0.0097 | 0.0142 |
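To make the size of these differences explicit, the calculation below computes the relative change of each Table III statistic for the proposed method with respect to ADMM. It uses only the tabulated values.

```python
# Post-convergence statistics from Table III: (ADMM, proposed method).
table_iii = {
    ("optimality", "average"): (0.0527, 0.0492),
    ("optimality", "variance"): (0.0012, 0.0003),
    ("optimality", "maximum"): (0.1396, 0.1278),
    ("optimality", "minimum"): (0.0023, 0.0099),
    ("vpp_schedule", "average"): (0.0611, 0.0512),
    ("vpp_schedule", "variance"): (0.0026, 0.0008),
    ("vpp_schedule", "maximum"): (0.2471, 0.1363),
    ("vpp_schedule", "minimum"): (0.0097, 0.0142),
}

# Relative change of the proposed method with respect to ADMM (negative = lower).
relative_change = {key: (new - old) / old for key, (old, new) in table_iii.items()}

for (metric, stat), change in relative_change.items():
    print(f"{metric:>12} {stat:>8}: {change:+.1%}")
```

Variance drops by about 75% (optimality) and 69% (VPP schedule), and the maximum VPP schedule deviation by about 45%, whereas the maximum optimality deviation falls by only about 8%. The minima rise by less than 0.01 in absolute terms.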

V Conclusion

In this work, we introduced a novel ML-based method that significantly improves the performance of distributed optimization, and we evaluated it on the DER coordination problem solved by a VPP. Our multi-agent framework for VPP decision-making allows each agent to manage multiple DERs. Key to the approach is each agent's ability to predict its local power profile and to communicate strategically with neighboring agents. Through this collaborative problem-solving, the agents reach a near-optimal power dispatch that complies with both local and system-level constraints.
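To illustrate how agents coordinate through a coupled constraint, the sketch below runs the exchange (sharing) form of ADMM described by Boyd et al. (2011) on a toy single-period dispatch. This is a classical ADMM baseline, not the proposed learning-assisted method. Three hypothetical agents have quadratic costs and box limits and must jointly meet a VPP target `D`. The closed-form local step stands in for the per-agent solve that classical ADMM would hand to an optimization solver and that the proposed method accelerates with neural network approximators. For simplicity, the sketch uses a global average, whereas the agents in our framework exchange information with neighbors. All costs, limits, and names are hypothetical.

```python
import numpy as np

# Hypothetical agents: cost a_i * p^2 + b_i * p with limits lo_i <= p_i <= hi_i.
a = np.array([1.0, 2.0, 0.5])
b = np.array([1.0, 1.0, 1.0])
lo = np.zeros(3)
hi = np.full(3, 10.0)
D = 7.0  # VPP target: coupled constraint sum_i p_i == D


def exchange_admm(a, b, lo, hi, D, rho=1.0, iters=500):
    """Exchange-form ADMM (Boyd et al., 2011) for the constraint sum_i p_i == D."""
    n = len(a)
    p = np.zeros(n)
    u = 0.0  # scaled dual variable of the coupled constraint
    for _ in range(iters):
        residual = p.mean() - D / n  # averaged violation of the coupled constraint
        v = p - residual - u  # point each agent's proximal term pulls toward
        # Local step: argmin a p^2 + b p + (rho/2)(p - v)^2, then clip to the limits.
        # Clipping the unconstrained minimizer is exact for this 1-D convex problem.
        p = np.clip((rho * v - b) / (2 * a + rho), lo, hi)
        u += p.mean() - D / n  # dual update on the coupled constraint
    return p, u


p_opt, u_opt = exchange_admm(a, b, lo, hi, D)
```

At convergence the agents' outputs sum to `D`, and −ρu equals their common marginal cost 2a_i p_i + b_i (5 in this toy case, giving outputs of 2, 1, and 4).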

A key contribution of this work is the development of neural network approximators and their integration into distributed decision-making. These approximators significantly accelerate the solution search and reduce the number of iterations required for convergence. Unlike restoration-centric methods, the proposed method needs no auxiliary post-processing to achieve feasibility. It uses a two-pronged approach: local constraints are satisfied by construction through the gauge map, and coupled constraints are penalized over the ADMM iterations.
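The gauge-map idea can be sketched for a bounded polytope {x : Ax ≤ b} with a strictly interior point x0. A point z in the ℓ∞ unit ball is rescaled by the ratio of the ball's gauge ‖z‖∞ to the polytope's Minkowski gauge and shifted by x0, so every network output lands inside the feasible set. This is a minimal sketch under those assumptions; the choice of interior point and other details may differ from our implementation, and the name `gauge_map` and the example polytope are hypothetical.

```python
import numpy as np


def gauge_map(z: np.ndarray, A: np.ndarray, b: np.ndarray, x0: np.ndarray) -> np.ndarray:
    """Map z in the l-inf unit ball [-1, 1]^n into the polytope {x : A x <= b}.

    ASSUMPTIONS: the polytope is bounded and x0 is strictly interior (A x0 < b).
    """
    slack = b - A @ x0  # positive because x0 is strictly interior
    if np.any(slack <= 0):
        raise ValueError("x0 must be strictly inside the polytope")
    gauge_ball = np.max(np.abs(z))  # gauge of the l-inf unit ball
    if gauge_ball == 0.0:
        return x0.copy()  # the center of the ball maps to x0
    # Minkowski gauge of the shifted polytope {y : A y <= slack}, evaluated at z;
    # it is positive for any nonzero z because the polytope is bounded.
    gauge_poly = np.max(A @ z / slack)
    return x0 + (gauge_ball / gauge_poly) * z


# Hypothetical local feasible set: 0 <= x <= 1 and x1 + x2 <= 1.5.
A = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0], [1.0, 1.0]])
b = np.array([1.0, 1.0, 0.0, 0.0, 1.5])
x0 = np.array([0.4, 0.4])

zs = np.random.default_rng(0).uniform(-1.0, 1.0, size=(1000, 2))  # e.g., tanh outputs
xs = np.array([gauge_map(zi, A, b, x0) for zi in zs])
feasible = bool(np.all(xs @ A.T <= b + 1e-12))  # every mapped point satisfies A x <= b
```

Since the gauge of the mapped point equals ‖z‖∞ ≤ 1, feasibility holds by construction, which is why no restoration step is required for the local constraints.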

The proposed method reduces the solution time per iteration by a factor of roughly 500 relative to classical ADMM. Combined with requiring fewer iterations to converge, this yields a drastic reduction in overall convergence time while respecting the problem constraints and maintaining the quality of the resulting solution.

Acknowledgement

We thank Dr. Erik Blasch (Fellow member) for discussions on the concept. This research is funded under AFOSR grants #FA9550-24-1-0099 and #FA9550-23-1-0203.

References

- [1] Q. Wang, C. Zhang, Y. Ding, G. Xydis, J. Wang, and J. Østergaard, “Review of real-time electricity markets for integrating distributed energy resources and demand response,” Applied Energy, vol. 138, pp. 695–706, 2015.

- [2] E. B.C. and A. Somani, “Impact of FERC Order 2222 on DER participation rules in US electricity markets,” tech. rep., Pacific Northwest National Laboratory, Richland, WA, 2022.

- [3] B. Goia, T. Cioara, and I. Anghel, “Virtual power plant optimization in smart grids: A narrative review,” Future Internet, vol. 14, no. 5, p. 128, 2022.

- [4] T. Navidi, A. El Gamal, and R. Rajagopal, “Coordinating distributed energy resources for reliability can significantly reduce future distribution grid upgrades and peak load,” Joule.

- [5] H. Pandžić, J. M. Morales, A. J. Conejo, and I. Kuzle, “Offering model for a virtual power plant based on stochastic programming,” Applied Energy, vol. 105, pp. 282–292, 2013.

- [6] E. Dall’Anese, S. S. Guggilam, A. Simonetto, Y. C. Chen, and S. V. Dhople, “Optimal regulation of virtual power plants,” IEEE Transactions on Power Systems, vol. 33, no. 2, pp. 1868–1881, 2017.

- [7] M. Vasirani, R. Kota, R. L. Cavalcante, S. Ossowski, and N. R. Jennings, “An agent-based approach to virtual power plants of wind power generators and electric vehicles,” IEEE Transactions on Smart Grid, vol. 4, no. 3, pp. 1314–1322, 2013.

- [8] M. Mohammadi, J. Thornburg, and J. Mohammadi, “Towards an energy future with ubiquitous electric vehicles: Barriers and opportunities,” Energies, vol. 16, no. 17, p. 6379, 2023.

- [9] M. Mohammadi and A. Mohammadi, “Empowering distributed solutions in renewable energy systems and grid optimization,” in Distributed Machine Learning and Optimization: Theory and Applications, pp. 1–17, Springer, 2023.

- [10] N. Ruiz, I. Cobelo, and J. Oyarzabal, “A direct load control model for virtual power plant management,” IEEE Transactions on Power Systems, vol. 24, no. 2, pp. 959–966, 2009.

- [11] A. Bagchi, L. Goel, and P. Wang, “Adequacy assessment of generating systems incorporating storage integrated virtual power plants,” IEEE Transactions on Smart Grid, vol. 10, no. 3, pp. 3440–3451, 2018.

- [12] A. Mnatsakanyan and S. W. Kennedy, “A novel demand response model with an application for a virtual power plant,” IEEE Transactions on Smart Grid, vol. 6, no. 1, pp. 230–237, 2014.

- [13] A. Thavlov and H. W. Bindner, “Utilization of flexible demand in a virtual power plant set-up,” IEEE Transactions on Smart Grid, vol. 6, no. 2, pp. 640–647, 2014.

- [14] A. Cherukuri and J. Cortés, “Distributed coordination of DERs with storage for dynamic economic dispatch,” IEEE Transactions on Automatic Control, vol. 63, no. 3, pp. 835–842, 2017.

- [15] E. G. Kardakos, C. K. Simoglou, and A. G. Bakirtzis, “Optimal offering strategy of a virtual power plant: A stochastic bi-level approach,” IEEE Transactions on Smart Grid, vol. 7, no. 2, pp. 794–806, 2015.

- [16] M. Giuntoli and D. Poli, “Optimized thermal and electrical scheduling of a large scale virtual power plant in the presence of energy storages,” IEEE Transactions on Smart Grid, vol. 4, no. 2, pp. 942–955, 2013.

- [17] A. G. Zamani, A. Zakariazadeh, and S. Jadid, “Day-ahead resource scheduling of a renewable energy based virtual power plant,” Applied Energy, vol. 169, pp. 324–340, 2016.

- [18] H. Wang, Y. Jia, C. S. Lai, and K. Li, “Optimal virtual power plant operational regime under reserve uncertainty,” IEEE Transactions on Smart Grid, vol. 13, no. 4, pp. 2973–2985, 2022.

- [19] S. Hadayeghparast, A. S. Farsangi, and H. Shayanfar, “Day-ahead stochastic multi-objective economic/emission operational scheduling of a large scale virtual power plant,” Energy, vol. 172, pp. 630–646, 2019.

- [20] S. R. Dabbagh and M. K. Sheikh-El-Eslami, “Risk assessment of virtual power plants offering in energy and reserve markets,” IEEE Transactions on Power Systems, vol. 31, no. 5, pp. 3572–3582, 2015.

- [21] G. Chen and J. Li, “A fully distributed ADMM-based dispatch approach for virtual power plant problems,” Applied Mathematical Modelling, vol. 58, pp. 300–312, 2018.

- [22] D. K. Molzahn, F. Dörfler, H. Sandberg, S. H. Low, S. Chakrabarti, R. Baldick, and J. Lavaei, “A survey of distributed optimization and control algorithms for electric power systems,” IEEE Transactions on Smart Grid, vol. 8, no. 6, pp. 2941–2962, 2017.

- [23] T. Yang, X. Yi, J. Wu, Y. Yuan, D. Wu, Z. Meng, Y. Hong, H. Wang, Z. Lin, and K. H. Johansson, “A survey of distributed optimization,” Annual Reviews in Control, vol. 47, pp. 278–305, 2019.

- [24] Y. Wang, S. Wang, and L. Wu, “Distributed optimization approaches for emerging power systems operation: A review,” Electric Power Systems Research, vol. 144, pp. 127–135, 2017.

- [25] A. H. Fitwi, D. Nagothu, Y. Chen, and E. Blasch, “A distributed agent-based framework for a constellation of drones in a military operation,” in 2019 Winter Simulation Conference (WSC), pp. 2548–2559, IEEE, 2019.

- [26] Z. Li, Q. Guo, H. Sun, and H. Su, “ADMM-based decentralized demand response method in electric vehicle virtual power plant,” in 2016 IEEE Power and Energy Society General Meeting (PESGM), pp. 1–5, IEEE, 2016.

- [27] L. Dong, S. Fan, Z. Wang, J. Xiao, H. Zhou, Z. Li, and G. He, “An adaptive decentralized economic dispatch method for virtual power plant,” Applied Energy, vol. 300, p. 117347, 2021.

- [28] DER Task Force, “DER integration into wholesale markets and operations,” tech. rep., Energy Systems Integration Group, Reston, VA, 2022.

- [29] F. Darema, E. P. Blasch, S. Ravela, and A. J. Aved, “The dynamic data driven applications systems (DDDAS) paradigm and emerging directions,” Handbook of Dynamic Data Driven Applications Systems: Volume 2, pp. 1–51, 2023.

- [30] E. Blasch, H. Li, Z. Ma, and Y. Weng, “The powerful use of AI in the energy sector: Intelligent forecasting,” arXiv preprint arXiv:2111.02026, 2021.

- [31] D. Biagioni, P. Graf, X. Zhang, A. S. Zamzam, K. Baker, and J. King, “Learning-accelerated ADMM for distributed DC optimal power flow,” IEEE Control Systems Letters, vol. 6, pp. 1–6, 2020.

- [32] M. Li, S. Kolouri, and J. Mohammadi, “Learning to optimize distributed optimization: ADMM-based DC-OPF case study,” in 2023 IEEE Power & Energy Society General Meeting (PESGM), pp. 1–5, IEEE, 2023.

- [33] T. W. Mak, M. Chatzos, M. Tanneau, and P. Van Hentenryck, “Learning regionally decentralized AC optimal power flows with ADMM,” IEEE Transactions on Smart Grid, 2023.

- [34] G. Tsaousoglou, P. Ellinas, and E. Varvarigos, “Operating peer-to-peer electricity markets under uncertainty via learning-based, distributed optimal control,” Applied Energy, vol. 343, p. 121234, 2023.

- [35] A. Mohammadi and A. Kargarian, “Learning-aided asynchronous ADMM for optimal power flow,” IEEE Transactions on Power Systems, vol. 37, no. 3, pp. 1671–1681, 2021.

- [36] W. Cui, J. Li, and B. Zhang, “Decentralized safe reinforcement learning for inverter-based voltage control,” Electric Power Systems Research, vol. 211, p. 108609, 2022.

- [37] W. Cui, G. Shi, Y. Shi, and B. Zhang, “Leveraging predictions in power system frequency control: an adaptive approach,” arXiv e-prints, pp. arXiv–2305, 2023.

- [38] M. Al-Saffar and P. Musilek, “Distributed optimization for distribution grids with stochastic DER using multi-agent deep reinforcement learning,” IEEE Access, vol. 9, pp. 63059–63072, 2021.

- [39] M. Li, S. Kolouri, and J. Mohammadi, “Learning to solve optimization problems with hard linear constraints,” IEEE Access, 2023.

- [40] T. Cioara, M. Antal, and C. Pop, “Deliverable D3.3: Consumption flexibility models and aggregation techniques,” tech. rep., H2020 eDREAM, 2019.

- [41] Y.-Y. Hong, J.-K. Lin, C.-P. Wu, and C.-C. Chuang, “Multi-objective air-conditioning control considering fuzzy parameters using immune clonal selection programming,” IEEE Transactions on Smart Grid, vol. 3, no. 4, pp. 1603–1610, 2012.

- [42] Y. Wang, Z. Yang, M. Mourshed, Y. Guo, Q. Niu, and X. Zhu, “Demand side management of plug-in electric vehicles and coordinated unit commitment: A novel parallel competitive swarm optimization method,” Energy Conversion and Management, vol. 196, pp. 935–949, 2019.

- [43] S. Cui, Y.-W. Wang, and J.-W. Xiao, “Peer-to-peer energy sharing among smart energy buildings by distributed transaction,” IEEE Transactions on Smart Grid, vol. 10, no. 6, pp. 6491–6501, 2019.

- [44] New York Independent System Operator, “Real-time load data for New York City’s Central Park,” 2023.

- [45] B. Borlaug, F. Yang, E. Pritchard, E. Wood, and J. Gonder, “Public electric vehicle charging station utilization in the united states,” Transportation Research Part D: Transport and Environment, vol. 114, p. 103564, 2023.

- [46] ANSI/ASHRAE, “Thermal environmental conditions for human occupancy,” ANSI/ASHRAE Standard 55, 1992.

- [47] National Weather Service, “Weather data for New York City’s Central Park,” 2023.

- [48] T. Stoffel and A. Andreas, “NREL Solar Radiation Research Laboratory (SRRL): Baseline Measurement System (BMS); Golden, Colorado (data),” tech. rep., National Renewable Energy Lab. (NREL), Golden, CO (United States), 1981.

- [49] Gurobi Optimization, LLC, “Gurobi Optimizer Reference Manual.”

- [50] H. Wang and J. Huang, “Incentivizing energy trading for interconnected microgrids,” IEEE Transactions on Smart Grid, vol. 9, no. 4, pp. 2647–2657, 2016.

- [51] G. Li, D. Wu, J. Hu, Y. Li, M. S. Hossain, and A. Ghoneim, “HELOS: Heterogeneous load scheduling for electric vehicle-integrated microgrids,” IEEE Transactions on Vehicular Technology, vol. 66, no. 7, pp. 5785–5796, 2016.