Maneuver-Aware Pooling for Vehicle Trajectory Prediction

Abstract

Autonomous vehicles should be able to predict the future states of its environment and respond appropriately. Specifically, predicting the behavior of surrounding human drivers is vital for such platforms to share the same road with humans. Behavior of each of the surrounding vehicles is governed by the motion of its neighbor vehicles. This paper focuses on predicting the behavior of the surrounding vehicles of an autonomous vehicle on highways. We are motivated by improving the prediction accuracy when a surrounding vehicle performs lane change and highway merging maneuvers. We propose a novel pooling strategy to capture the inter-dependencies between the neighbor vehicles. Depending solely on Euclidean trajectory representation, the existing pooling strategies do not model the context information of the maneuvers intended by a surrounding vehicle. In contrast, our pooling mechanism employs polar trajectory representation, vehicles orientation and radial velocity. This results in an implicitly maneuver-aware pooling operation. We incorporated the proposed pooling mechanism into a generative encoder-decoder model, and evaluated our method on the public NGSIM dataset. The results of maneuver-based trajectory predictions demonstrate the effectiveness of the proposed method compared with the state-of-the-art approaches. Our “Pooling Toolbox” code is available at https://github.com/m-hasan-n/pooling.

I INTRODUCTION

Human drivers need to predict the intentions of other road users to safely drive vehicles and navigate through other traffic. This is critical when making decisions like yielding to a coming vehicle or merging into traffic [1]. Similarly, to be fully autonomous, an Autonomous Vehicle (AV) should be able to predict the future states of its environment and respond appropriately. This is crucial for the planning and control of such self-driving platforms. Specifically, predicting the behavior of human drivers becomes necessary for AVs to share the same road with humans [2].

Human driver behavior on a highway is highly influenced by the other neighboring drivers. While experienced human drivers are able to confidently predict future behaviors of other drivers, it is still an open problem for AVs due to the inherent challenges of maneuver prediction. Some of these challenges can be arising from: (1) trajectories tend to be non-linear over long time horizons, (2) the inherent uncertainty about latent variables such as drivers’ motivations and goals, and (3) the intrinsic multi-modality nature of the decision-making processes, reflected in the multiple possible decisions under the same traffic condition [3].

We address the problem of trajectory prediction of the human-driven vehicles surrounding an autonomous vehicle on highways. In line with the existing work [4, 3], this is achieved by iteratively predicting the trajectory of each surrounding vehicle around the AV. We call the subject surrounding vehicle to be predicted as “ego” and the vehicles around the ego vehicle as “neighbors”. Specifically, given the observed motion trajectories of human drivers for the immediate past (e.g. 3 seconds) of an ego vehicle and all its neighbor vehicles, predict the future trajectory of the ego vehicle considering all its neighboring vehicles.

This problem has been tackled in the literature (for both vehicle and pedestrian agents) from two broad aspects. First, encoding the status of the neighbors by a pooling vector [4, 3, 2] that captures their inter-dependencies. Second, using generative models that output multi-modal probability distribution over the future trajectory of the ego agent [1, 3, 2, 5, 6].

We focus on the first aspect and propose a novel pooling mechanism to capture the interaction between the neighbor vehicles. Previous pooling strategies represent vehicle trajectories using longitudinal and lateral positions. It is reasonable to assume that human drivers employ higher-order information to reason over the surrounding traffic. Thus, We hypothesize that the prediction accuracy can be improved by incorporating higher-order information like vehicles orientation and velocity in the pooling operation.

We are motivated by improving the motion prediction accuracy when vehicles perform lane change and highway merging maneuvers. Thus, we introduce a pooling strategy that is based on signals from the orientation and radial velocity of the vehicles. Hence, the resulting pooling vector is implicitly aware of the maneuvers intended by the surrounding vehicles.

We incorporated the proposed pooling strategy into a generative encoder-decoder model, that outputs a multi-modal distribution of predictions conditioned on a set of semantic maneuvers. The overall model is evaluated on the public NGSIM dataset [7, 8] and the results are compared to state-of-the-art pooling approaches. In addition to the traditional overall evaluation of the prediction accuracy, we perform a finer, maneuver-based evaluation where the accuracy is also reported for lane change and highway merging maneuvers.

The main contribution of our work is a maneuver-aware pooling mechanism that outperforms the current pooling approaches in terms of prediction accuracy as shown over an extensive maneuver-based evaluation. To help reproduce the proposed and other [4, 3, 2] pooling approaches, our “Pooling Toolbox” code is publicly available.

II Related Work and Background

II-A Generative Encoder-Decoder Models

Recurrent Neural Networks (RNNs) represent a rich class of dynamic models that extend feedforward networks for sequence generation in diverse domains like speech recognition [9], machine translation [10] and image captioning [11]. Long-Short Term Memory (LSTM) is an instance of RNNs that has been proved successful in sequence learning and generation tasks [12, 4, 11]. Since motion prediction can be considered as a sequence generation task, and inspired by the LSTM success in this domain, a number of RNN-based approaches have been proposed for trajectory prediction [4, 2, 3, 6, 13, 14, 15] of both pedestrians and vehicles.

In their seminal work Social LSTM, Alahi et al. [4] extended RNNs for human trajectory prediction using a social pooling layer that models nearby pedestrians. Gupta et al. proposed a Generative Adversarial Networks [16] (GAN): a RNN Encoder-Decoder generator and a RNN based encoder discriminator, to predict socially-acceptable multimodal pedestrian trajectories [2]. Social LSTM approach was further improved in [3] by using convolutional social pooling applied to vehicle motion prediction on highways. LSTM network is also used to predict the location of vehicles in an occupancy grid [17] at different future intervals. Convolutional networks and LSTM were combined to predict multi-modal trajectories for an agent on a bird’s eye view image [18].

II-B Multi-modal Predictive Distribution

Deo et al. proposed a model [3] that outputs a multi-modal predictive distribution over future trajectories based on three lateral and two longitudinal maneuver classes. MultiPath model [1] can predict a discrete distribution over a set of future state-sequence anchors and output multi-modal future distributions. The GAN based encoder-decoder architecture in [2] encourages diverse multimodal predictions of pedestrian trajectories with the introduced variety loss. A multi-modal probabilistic prediction approach was presented in [5] based on a Conditional Variational Autoencoder and is capable of jointly predicting sequential motions of each pair of interacting vehicles. Zyner et al. [6] presented a model based on RNN with a mixture density network output layer, for predicting driver intent at urban single-lane roundabouts through multi-modal trajectory prediction with uncertainty.

II-C Pooling Mechanisms

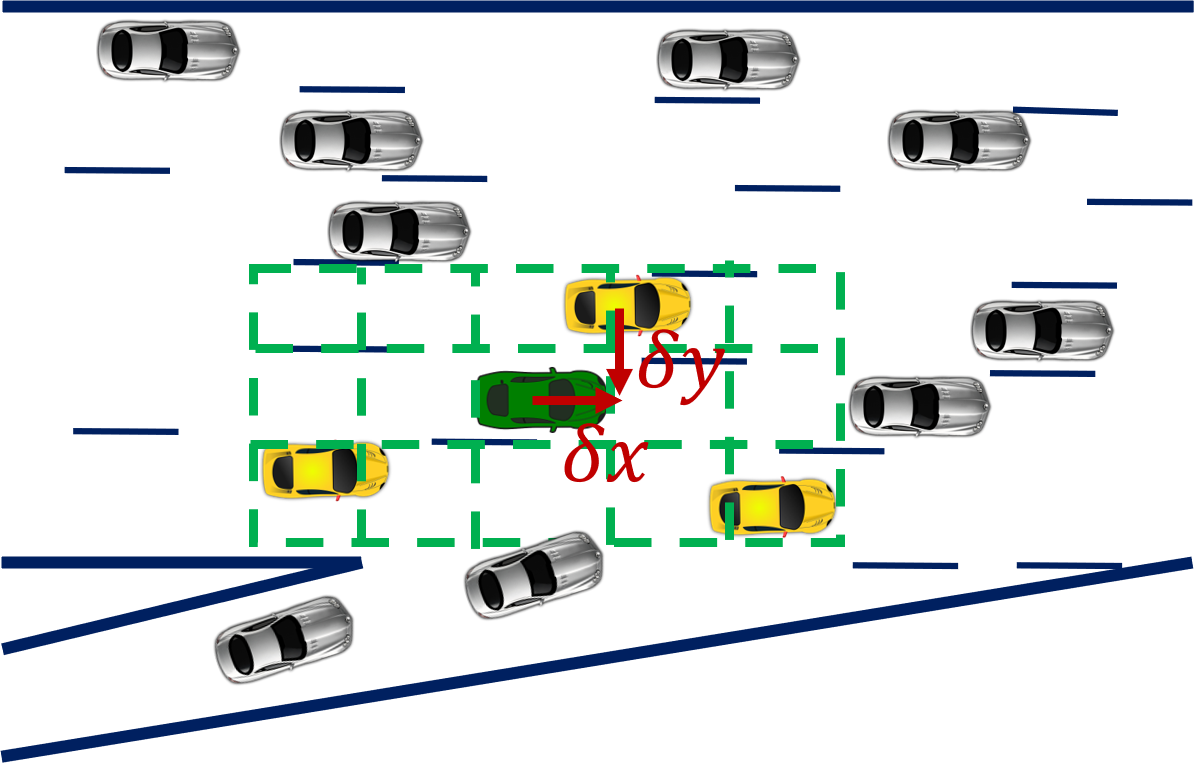

Social LSTM (S-LSTM) has been introduced for pedestrian motion prediction [4], where one LSTM is assigned to each person in a scene. To capture the interaction of people in a neighborhood, neighboring LSTMs are connected through a “Social” tensor. This tensor is constructed by defining a spatial grid around the agent being predicted (Fig. 1 left), and populating the grid with LSTM states of this agent and its neighbors based on their spatial configuration. A fully connected and a SUM pooling layers are then applied to the social tensor to produce the pooling vector.

Deo et al. introduced convolutional social pooling (CSP) [3] to predict vehicle motion behaviors on highways. They extended the work of [4] improving the pooling layer by using convolutional and Max-pooling (rather-than a fully-connected and SUM-pooling) layers to embed the LSTM states populated in the social tensor.

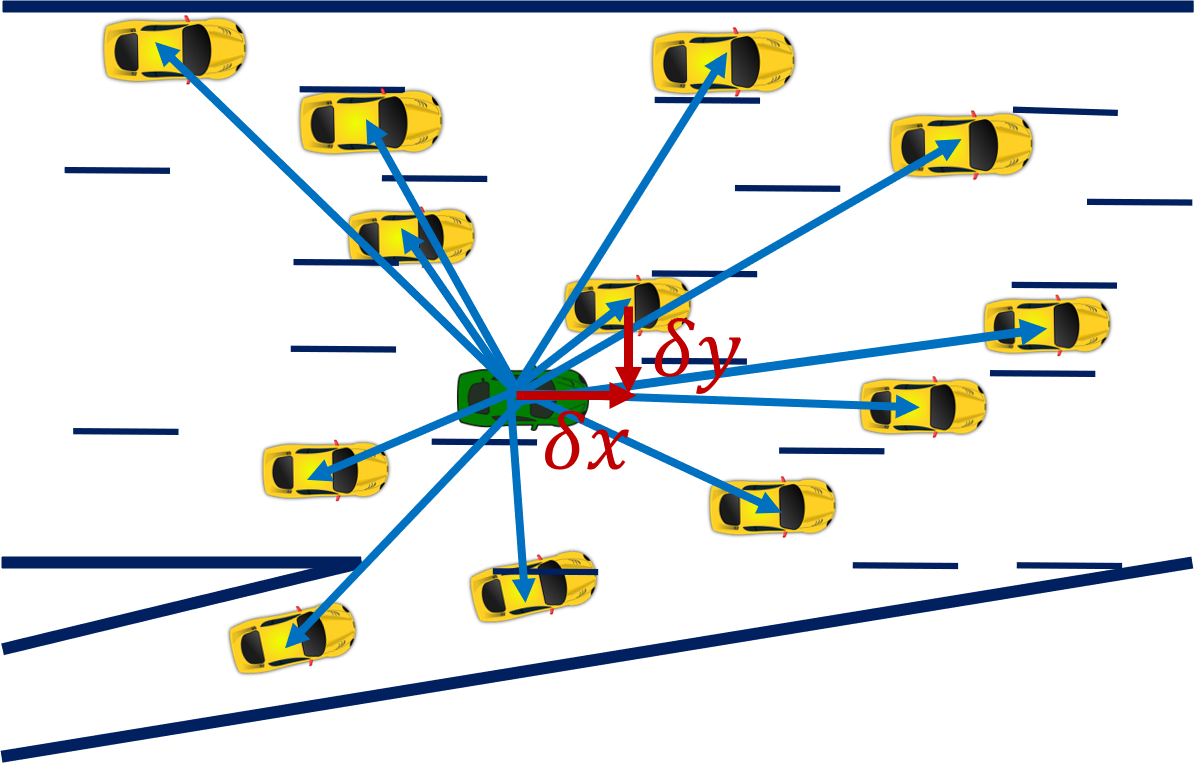

In their Social-GAN (S-GAN) work [2], Gupta et al. argued that the grid-based pooling scheme presented in Social Pooling [4, 3] is a hand-crafted solution that is slow and fails to capture global context. They proposed to concatenate the relative position between an agent and all its neighbors (Fig. 1 center) with the LSTM state of each agent. The concatenated tensor is then processed independently by a Multi-Layer Perceptron and a Max-pooling layer.

The previous approaches depend solely on the lateral and longitudinal positions to represent the vehicle trajectories. In contrast, we employ polar coordinates, vehicles orientation and radial motion velocity to represent the vehicle trajectories. Using such higher-order information results in an implicitly maneuver-aware pooling strategy, that proved to outperform the state-of-art pooling approaches in terms of the prediction accuracy.

III Problem Formulation

Inline with the previous approaches [4, 3, 2, 1], We formulate the problem of vehicle trajectory prediction as estimating the probability distribution of the possible future trajectories of an ego vehicle conditioned on its track history and track histories of the neighbor vehicles around it, at each discrete time step . Given observations in the form of past trajectories of the ego and its neighbor vehicles, our model seeks to provide a distribution over future trajectories of the ego vehicle .

III-A Inputs and Outputs

The inputs to our model are track histories:

| (1) | ||||

where is a fixed (history) time horizon, and at any time instant ,

| (2) | ||||

represents the position (represented by a distance and an angle ) and radial velocity of the ego vehicle (subscript ), and the neighbor vehicles (subscripts to ), represented in polar coordinates.

The output of the model is a probability distribution over:

| (3) | ||||

where is a fixed (future) time horizon, and at any time instant ,

| (4) | ||||

represents the future position and velocity of the ego vehicle being predicted.

III-B Multi-modal Trajectory Prediction

The multi-modal distribution over future trajectories can be hierarchically factorized [3, 1]. First, estimate the intent uncertainty over a set of maneuver classes. Second, given the intended maneuvers, predict the vehicle future trajectory.

The intentions of human drivers are modelled using two discrete sets of maneuver types: (1) location-wise maneuvers , and (2) acceleration-wise maneuvers . We model the uncertainty over each discrete set of maneuvers using a softmax distribution.

Given the intended maneuvers , the uncertainty over the future trajectory is modelled as a Gaussian distribution:

| (5) | ||||

that is parameterized by [3]:

| (6) | ||||

which are the parameters of a multivariate Gaussian distribution at each time step in the future. At any time , this is given by the Gaussian parameters: , corresponding to the mean and variance of the future vehicle position and velocity.

The multi-modal output conditional distribution over future trajectories can now be expanded in terms of the maneuvers:

| (7) | ||||

which yields a Gaussian Mixture Model distribution (GMM) [1]. The mixture weights are defined by the probabilities of the maneuvers.

IV Maneuver-Aware Pooling

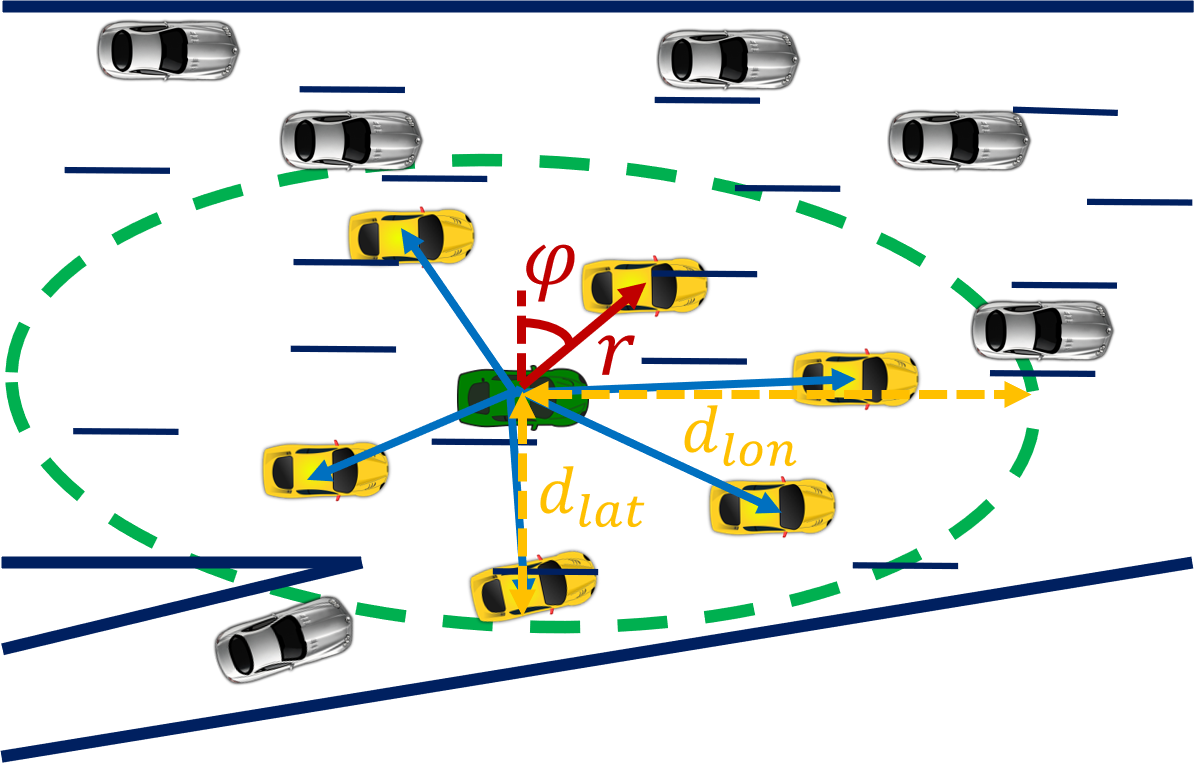

The objective of a pooling strategy is to summarize the context of interaction between the ego and surrounding vehicles within a certain neighborhood. While this neighborhood is defined by a spatial grid in Social Pooling [4, 3], it includes all agents in a given scene in the work of S-GAN [2]. Similar to S-GAN, we do not restrict the neighborhood to the handcrafted structure of the spatial grid. However, we limit it by lateral and longitudinal distances (Fig. 1 Right) around the ego vehicle, considered as hyperparameters.

To be aware of vehicle maneuvers, we represent trajectories in polar form and use information from vehicles orientation and radial motion velocity (2). Relative coordinates are used for translation invariance. The origin of a stationary frame of reference is fixed at the ego vehicle position at time . We assume that the sensors and/or computers onboard the AV can measure and/or compute the parameters required for our solution. For example, if the position history of the ego vehicle is measured in terms of lateral () and longitudinal () positions as , the resulting polar-representation is given by , where at any time step :

| (8) |

Accordingly, the angle defines the orientation of the ego vehicle at time . The same representation is also used to compute the relative position between each neighbor and the ego vehicle.

We augment vehicle positions by radial motion velocity to better represent the trajectories. Computing radial velocity is based on the vehicle velocity and orientation, which are important signals to provide information of the intended maneuver. Specifically, the radial velocity of the -th neighbor vehicle at time is defined relative to the ego as:

| (9) |

where is defined similarly to (8), denotes the linear velocity of the neighbor vehicle relative to the ego linear velocity at the the origin , and the angle defines the orientation of the neighbor vehicle.

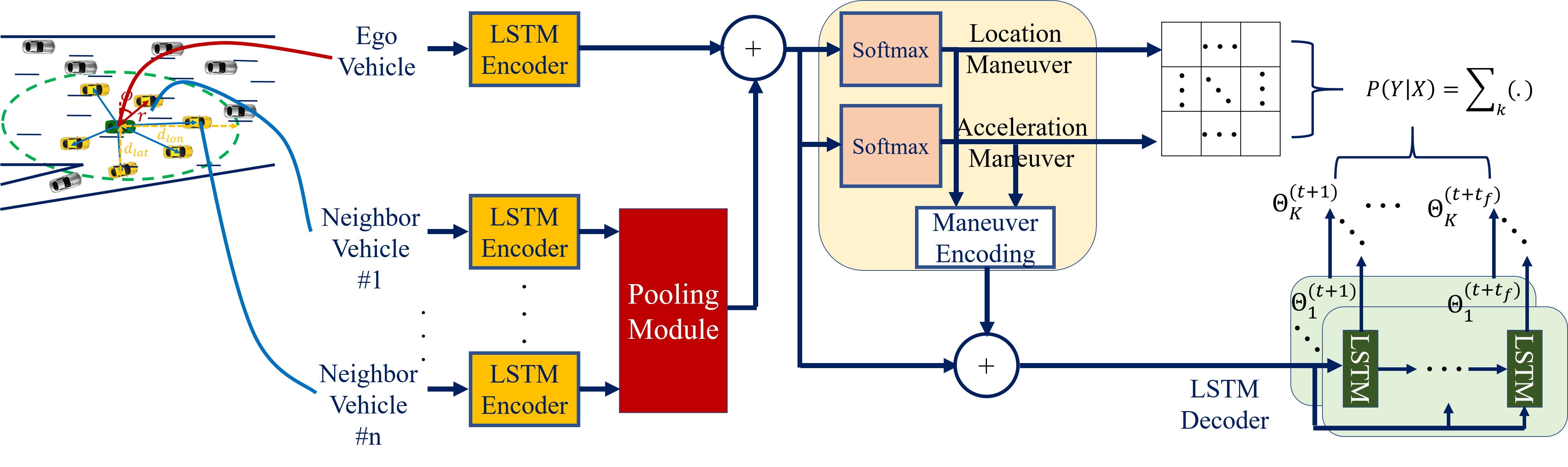

V Model

The proposed pooling mechanism is incorporated in the model shown in Fig. 2 consisting of an LSTM encoder, a pooling module, a maneuver recognition module and an LSTM decoder.

V-A Encoder

We encode the state of motion of each vehicle using an LSTM encoder. At any time , a sequence of time steps of the track history is passed through the encoder. The LSTM states for each vehicle are updated frame by frame over the past frames [3]. The LSTM weights are shared across the sequences of all vehicles [4].

V-B Pooling Module

The interaction of vehicles in a given scene is captured by the pooling module. This is achieved by pooling the LSTM states of the neighbor vehicles around the ego vehicle. The output is a pooling vector summarizing the information needed by the ego vehicle to make a maneuver decision. We extend the pooling mechanism in [2] to implement our pooling strategy detailed in Sec. IV.

First, at any time step the neighbor vehicles around the ego vehicle are identified. The relative position and radial velocity of the ego vehicle and between each neighbor and the ego are then computed (relative to the position and velocity at the origin . Second, the relative position and velocity are concatenated with each vehicle’s LSTM hidden state, and processed independently by a Multi-Layer Perceptron (MLP). Finally, the MLP output is Max-pooled element-wise to compute the pooling vector of the ego vehicle. This method can capture the inter-dependencies of the motion of neighbor vehicles, without being restricted to a specific grid size. More importantly, being based on vehicle orientation and radial motion velocity, the pooling operation becomes more aware of the intended maneuvers.

V-C Maneuver Recognition

The location-wise maneuvers (Sec. III-B) include three classes: lane keeping, left lane-change and right lane-change, which correspond to semantic location-change concepts on highways. Similarly, the acceleration-wise maneuvers include: constant speed, speeding up and slowing down classes. A vehicle is annotated with one of the lane-change classes if it changes its lane during the prediction horizon, while marked under the lane-keeping class otherwise. The NGSIM dataset provides the acceleration of each vehicle. A vehicle is annotated with a slowing/speeding class if its mean future acceleration is less/larger than / threshold, while marked under the constant-speed class otherwise.

The maneuver recognition module consists of two softmax layers to recognize the location and acceleration classes. The input to this module is the LSTM state of the ego vehicle augmented by the pooling vector from the pooling module. Each softmax layer outputs the probability of each maneuver class. The maneuver encoding block in Fig. 2 encodes the output from each softmax layer into a one-hot vector. Both one-hot vectors are concatenated with the trajectory encoding and the resulting tensor is passed to the decoder module.

V-D Decoder

An LSTM decoder is used to generate the conditional distribution over future trajectories of the ego vehicle. At any time , The decoder generates the future trajectories over the next time steps. At each time step, the decoder outputs a multi-modal distribution conditioned on the maneuver classes.

VI Experiments and Results

VI-A NGSIM Dataset

The NGSIM public dataset is used for our experiments. This dataset consists of two subsets: US-101 [7] and I-80 [8] of trajectories of real freeway traffic captured at 10 Hz. Each subset consists of three 15-min segments recorded over a time span of 45 minutes. The recorded segments represent mild, moderate and congested traffic conditions.

The dataset provides the longitudinal and lateral co-ordinates of vehicles projected to a local co-ordinate system, in addition to other data like velocity and acceleration. We divide the complete NGSIM dataset into training , validation and testing sets. All sets are randomly sampled from both US-101 and I-80 subsets.

Vehicle trajectories are split into segments of 8 s, where we use s of track history and a s prediction horizon. These 8 s segments are sampled at the dataset sampling rate of 10 Hz, and then downsampled by a factor of 2 before feeding them to the LSTMs, to reduce the model complexity [3].

| Prediction | ||||||

|---|---|---|---|---|---|---|

| Horizon (s) | S-LSTM | CSP | S-GAN | |||

| w/ | w/o | w/ | w/o | w/ | w/o | |

| 1 | 0.33 | 0.33 | 0.34 | 0.34 | 0.34 | 0.34 |

| 2 | 0.97 | 0.98 | 0.98 | 0.99 | 0.98 | 0.98 |

| 3 | 1.72 | 1.79 | 1.73 | 1.77 | 1.73 | 1.76 |

| 4 | 2.65 | 2.85 | 2.68 | 2.79 | 2.66 | 2.78 |

| 5 | 3.83 | 4.19 | 3.87 | 4.11 | 3.84 | 4.09 |

| Prediction | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Horizon (s) | overall | keep | ||||||||

| S-LSTM | CSP | S-GAN | Polar | Polar- | S-LSTM | CSP | S-GAN | Polar | Polar- | |

| 1 | 0.33 | 0.34 | 0.34 | 0.34 | 0.25 | 0.37 | 0.38 | 0.38 | 0.38 | 0.29 |

| 2 | 0.97 | 0.98 | 0.98 | 0.96 | 0.84 | 1.01 | 1.02 | 1.02 | 1.01 | 0.89 |

| 3 | 1.72 | 1.73 | 1.73 | 1.71 | 1.58 | 1.72 | 1.76 | 1.75 | 1.72 | 1.62 |

| 4 | 2.65 | 2.68 | 2.66 | 2.66 | 2.53 | 2.57 | 2.64 | 2.61 | 2.58 | 2.51 |

| 5 | 3.83 | 3.87 | 3.84 | 3.85 | 3.75 | 3.60 | 3.72 | 3.66 | 3.63 | 3.62 |

| Prediction | |||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Horizon (s) | merge | left | right | ||||||||||||

| S-LSTM | CSP | S-GAN | Polar | Polar- | S-LSTM | CSP | S-GAN | Polar | Polar- | S-LSTM | CSP | S-GAN | Polar | Polar- | |

| 1 | 0.35 | 0.36 | 0.37 | 0.34 | 0.25 | 0.54 | 0.53 | 0.54 | 0.48 | 0.37 | 0.60 | 0.62 | 0.58 | 0.54 | 0.39 |

| 2 | 1.03 | 1.06 | 1.07 | 0.99 | 0.91 | 1.50 | 1.50 | 1.54 | 1.41 | 1.22 | 1.93 | 1.87 | 1.76 | 1.72 | 1.38 |

| 3 | 1.89 | 1.94 | 1.95 | 1.82 | 1.76 | 2.70 | 2.72 | 2.77 | 2.61 | 2.38 | 3.53 | 3.46 | 3.38 | 3.28 | 2.83 |

| 4 | 2.93 | 2.96 | 2.93 | 2.75 | 2.81 | 4.14 | 4.18 | 4.23 | 4.09 | 3.88 | 5.47 | 5.46 | 5.35 | 5.16 | 4.63 |

| 5 | 4.19 | 4.18 | 4.08 | 3.85 | 4.04 | 5.87 | 5.88 | 5.93 | 5.83 | 5.69 | 7.59 | 7.76 | 7.64 | 7.32 | 6.74 |

VI-B Implementation Details

We extended the model in [3] to: (1) incorporate our benchmark pooling strategies, and (2) use multi-variate Gaussian distribution. The SUM pooling layer used in S-LSTM [4] is implemented using a two-dimensional kernel of size (4,3). The sizes of the convolutional social pooling layers of CSP are set as given in [3]. The MLP used in the proposed pooling module (and in S-GAN pooling [2]) has a size of 256 and is followed by a leaky-ReLU layer. The acceleration threshold in Sec.V-C is set to . The spatial grid in Fig. 1 is set to a 13 × 3 size, where each column corresponds to a single lane, and the rows are separated by a distance of 15 feet which approximately equals one car length [3]. To emphasize the effectiveness of the proposed pooling strategy, we set the distances and to the same width and length, respectively of the spatial grid. The model is implemented using PyTorch [19] and trained end-to-end.

VI-C Evaluation metric

All results are reported in terms of the root of the mean squared error (RMSE) of the predicted trajectories with respect to the ground truth future trajectories, over the prediction horizon. Since the LSTM models generate bi-variate Gaussian distributions, the means of the Gaussian components are used for RMSE calculation.

VI-D Baselines

The results of the following pooling approaches are compared:

-

•

S-LSTM: Social Pooling [4].

-

•

CSP: Convolutional Social Pooling [3].

-

•

S-GAN: the pooling strategy used in S-GAN [2].

-

•

Polar: the proposed pooling strategy where trajectories are modelled by position only in the polar coordinates and , and the model outputs a bi-variate Gaussian distribution.

-

•

Polar-: the full proposed pooling strategy presenting trajectories by position and radial velocity in polar coordinates (), and the model outputs a multi-variate Gaussian distribution.

While we employ polar representation of vehicle trajectories, other baselines (S-LSTM, CSP, S-GAN) use Euclidean lateral and longitudinal positions. The same model and testing data are used to evaluate every pooling strategy. The maneuver recognition module (Sec. V-C) was incorporated in the Polar- model only. At the evaluation time, this model outputs the maximum a posteriori probability (MAP) trajectory estimate corresponding to the maneuver classes having the maximum probability.

VI-E Results and Discussion

Besides reporting the overall prediction accuracy, we evaluate the baselines on the following maneuvers: (1) car-following by keeping the same lane (keep), (2) merging from the onramp lane (merge), (3) left lane-change (left), and (4) right lane-change (right). The overall performance of the baseline approaches in Table I implies two findings111We also noted the same findings for the maneuver-based evaluation.: the baselines have comparable prediction accuracy, and RMSE accuracy was not improved by using the maneuver recognition module. While the former motivates for a different direction of improvement (other than the pooling layer type and the neighborhood structure), the latter suggests feeding higher-order information to the pooling operation.

RMSE results of the maneuver-based evaluation are reported in Tables II (overall and keep) and III (merge, left and right). We note that the overall prediction accuracy is dominated by the keep maneuver accuracy. This is due to the inherent biasness in the data: as drivers tend to keep their lanes most of the time, the dataset provides fewer lane-change examples. However, Our proposed solution tackled this problem without changing the dataset (e.g. by augmentation).

Representing trajectories using relative distance and angle (Polar) is shown to outperform the Euclidean representation adopted by the state-of-the-art strategies, especially at the lane-change and merging maneuvers. The main reason of this improvement is employing the vehicle orientation which makes the pooling operation more maneuver-aware and hence the learning process more efficient.

The maneuver-recognition module estimates the location and acceleration maneuver classes. The input to this module is the embedding of the vehicle dynamics encoded by the LSTM hidden states. It is reasonable to expect that a single-layer LSTM can encode the change in vehicle location into states that are informative for estimating the change in location. However, the accuracy of such networks degrades when encoding the higher-order dynamics necessary for estimating the acceleration. Therefore, we augment the trajectory representation by the vehicle velocity. More importantly and inline with our proposed polar representation, we employ radial motion velocity that encodes vehicle orientation. This defines our full proposed model Polar- that further improved the prediction accuracy.

VII CONCLUSIONS

We introduced a novel pooling mechanism to improve the accuracy of predicting vehicle behaviors when performing lane change and highway merging maneuvers. To be able to capture a maneuver-aware interaction, our pooling strategy employs the orientation and radial motion velocity of the interacting vehicles. We demonstrated how incorporating such higher-order information leads to a more efficient learning process. Our maneuver-aware pooling mechanism outperforms the state-of-the-art pooling approaches in predicting vehicle trajectories in both the overall and maneuver-based evaluation.

One limitation of this work is predicting the future of a single agent based on pooling the states of its neighbors. This can be improved by jointly predicting the future maneuvers and trajectories of all the agents involved in a scene. Extending the current model to perform such joint estimation will be addressed in our future work.

ACKNOWLEDGMENT

The work described in this paper is supported by VeriCAV project, which is funded by the Centre for Connected and Autonomous Vehicles, via Innovate UK (Grant number 104527). This work was undertaken on ARC4, part of the High Performance Computing facilities at the University of Leeds, UK.

References

- [1] Y. Chai, B. Sapp, M. Bansal, and D. Anguelov, “Multipath: Multiple probabilistic anchor trajectory hypotheses for behavior prediction,” arXiv preprint arXiv:1910.05449, 2019.

- [2] A. Gupta, J. Johnson, L. Fei-Fei, S. Savarese, and A. Alahi, “Social gan: Socially acceptable trajectories with generative adversarial networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018.

- [3] N. Deo and M. M. Trivedi, “Convolutional social pooling for vehicle trajectory prediction,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2018.

- [4] A. Alahi, K. Goel, V. Ramanathan, A. Robicquet, L. Fei-Fei, and S. Savarese, “Social lstm: Human trajectory prediction in crowded spaces,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016.

- [5] Y. Hu, W. Zhan, L. Sun, and M. Tomizuka, “Multi-modal probabilistic prediction of interactive behavior via an interpretable model,” in IEEE Intelligent Vehicles Symposium. IEEE, 2019.

- [6] A. Zyner, S. Worrall, and E. Nebot, “Naturalistic driver intention and path prediction using recurrent neural networks,” IEEE transactions on intelligent transportation systems, 2019.

- [7] J. Colyar and J. Halkias, “Us highway 101 dataset,” Federal Highway Administration (FHWA), Tech. Rep. FHWA-HRT-07-030, 2007.

- [8] ——, “Us highway i-80 dataset,” Federal Highway Administration (FHWA), Tech. Rep. FHWA-HRT-07-030, pp. 27–69, 2007.

- [9] J. Chorowski, D. Bahdanau, K. Cho, and Y. Bengio, “End-to-end continuous speech recognition using attention-based recurrent nn: First results,” arXiv preprint arXiv:1412.1602, 2014.

- [10] J. Chung, K. Kastner, L. Dinh, K. Goel, A. Courville, and Y. Bengio, “A recurrent latent variable model for sequential data,” arXiv preprint arXiv:1506.02216, 2015.

- [11] O. Vinyals, A. Toshev, S. Bengio, and D. Erhan, “Show and tell: A neural image caption generator,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2015.

- [12] A. Graves, “Generating sequences with recurrent neural networks,” arXiv preprint arXiv:1308.0850, 2013.

- [13] F. Altché and A. de La Fortelle, “An lstm network for highway trajectory prediction,” in IEEE International Conference on Intelligent Transportation Systems. IEEE, 2017.

- [14] S. H. Park, B. Kim, C. M. Kang, C. C. Chung, and J. W. Choi, “Sequence-to-sequence prediction of vehicle trajectory via lstm encoder-decoder architecture,” in IEEE Intelligent Vehicles Symposium. IEEE, 2018.

- [15] H. Xue, D. Q. Huynh, and M. Reynolds, “Ss-lstm: A hierarchical lstm model for pedestrian trajectory prediction,” in IEEE Winter Conference on Applications of Computer Vision (WACV). IEEE, 2018.

- [16] I. J. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial networks,” arXiv preprint arXiv:1406.2661, 2014.

- [17] B. Kim, C. M. Kang, J. Kim, S. H. Lee, C. C. Chung, and J. W. Choi, “Probabilistic vehicle trajectory prediction over occupancy grid map via recurrent neural network,” in IEEE International Conference on Intelligent Transportation Systems, 2017.

- [18] A. Bhattacharyya, B. Schiele, and M. Fritz, “Accurate and diverse sampling of sequences based on a “best of many” sample objective,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018.

- [19] A. Paszke, S. Gross, S. Chintala, G. Chanan, E. Yang, Z. DeVito, Z. Lin, A. Desmaison, L. Antiga, and A. Lerer, “Automatic differentiation in pytorch,” Conference on Neural Information Processing Systems, 2017.