Mapping “Brain Terrain” Regions on Mars using Deep Learning

Abstract

One of the main objectives of the Mars Exploration Program is to search for evidence of past or current life on the planet. To achieve this, Mars exploration has focused on regions that may have liquid or frozen water. A set of critical areas may have seen cycles of ice thawing in the relatively recent past in response to periodic changes in the obliquity of Mars. In this work, we use convolutional neural networks (CNNs) to detect surface regions containing “Brain terrain”, a landform on Mars whose similarity in morphology and scale to sorted stone circles on Earth suggests that it may have formed as a consequence of freeze/thaw cycles. We use large images (100–1000 megapixels) from the Mars Reconnaissance Orbiter to search for these landforms at resolutions of a few tens of centimeters per pixel (25–50 cm). We searched over 58,000 images (28 TB), covering 5% of the Martian surface, and found detections in 201 images. To expedite processing, we apply a classifier network in the Fourier domain before segmentation; it takes advantage of JPEG compression by operating on blocks of discrete cosine transform coefficients instead of decoding the entire image at full spatial resolution. The hybrid pipeline maintains 93% accuracy while cutting 95% of the total processing time compared to running the segmentation network at full resolution on every image.

1 Introduction

Brain terrain is a geologically young terrain that has the potential to enhance our understanding of the role of water in the recent geological history of Mars. “Brain terrain” is a descriptive term given to a decameter-scale surface texture on Mars that consists of labyrinthine ridges and troughs occurring in flat terrains, typically in topographic lows. The name reflects its resemblance to the human brain or to aquatic brain coral species. It is found primarily at mid-latitudes and is often associated with lineated valley fill (LVF), concentric crater fill (CCF), and lobate debris aprons (LDA) (e.g., Squyres 1978; Malin & Edgett 2001; Carr 2001; Mangold 2003). This terrain type shares morphological similarities with sorted stone circles on Earth, which are thought to form as the result of numerous freeze/thaw cycles of rock-bearing soil (Noe Dobrea et al., 2007). In Earth’s arctic environments, sorted stone circles and labyrinths form in a rock-bearing, water-rich soil layer that undergoes heave/contraction cycles during freeze-thaw processes (Taber 1929; Taber 1930; Williams et al. 1989), whereby rock-soil segregation can systematically occur (Konrad & Morgenstern 1980) and lead to pattern formation (e.g., Werner 1999; Kessler & Werner 2003). The convective kinematics of this process are reasonably well explored (Goldthwait 1976; Williams et al. 1989; Hallet 2013) through the analysis of trenches, seasonal data from tilt meters, and other field studies (e.g., Hallet & Waddington 2020). However, while the morphologies of brain terrain and sorted stone circles are similar, similarity in form does not necessarily imply the same underlying process. Competing hypotheses attribute brain terrain to sublimation lag, polygon inversion, or stone sorting by freeze-thaw (Mangold 2003; Noe Dobrea et al. 2007; Levy et al. 2009), and its origin remains unresolved.
Multiple classes of young geomorphic features on Mars suggest that thawing may have occurred in the recent geologic past and may still be ongoing, although significant controversy remains (McEwen et al. 2011). It is therefore important to test the available hypotheses (Mangold 2003; Milliken et al. 2003; Costard et al. 2002; Kreslavsky et al. 2008; Cheng et al. 2021; Hibbard et al. 2022) through a careful and detailed study of this terrain.

Sorted stone circles on Earth are thought to form from cyclic freezing and thawing in permafrost regions, which drives the convection of stones, soil, and water in the active layer (Mangold, 2005). In western Spitsbergen, the stone circles consist of a central 2-3 m wide plug of soil surrounded by a 0.5-1 m wide ring of stones, with the plug domed up to 0.1 m and the stones rising up to 0.5 m (Kessler et al., 2001). Though stones are often cm-scale, meter-sized clasts in circles up to 20 m across occur as well (Trombotto 2000; Balme et al. 2009). Comparatively, we measure brain terrain cells on Mars to be 5-15 m with ridges 0.2-2 m tall, resembling terrestrial circles in scale (Kessler et al. 2001; Kääb et al. 2014). On Mars, brain terrain is relatively young based on crater counts (Morgan et al. in prep), though rigorous analysis is still needed.

The Mars Reconnaissance Orbiter (MRO) has collected images of the Martian surface for over 15 years and amassed over 50 terabytes of data. The High Resolution Imaging Science Experiment (HiRISE; 0.3 m/pixel resolution; McEwen et al. 2007) and the Context Camera (CTX; 6 m/pixel resolution; Malin & Edgett 2001) are two instruments onboard MRO that are routinely used to study geological landforms. The total volume of data from this mission poses new challenges for the planetary remote-sensing community. For example, each image includes limited metadata about its content, and it is time-consuming to manually analyze each image by eye. Therefore, there is a need for computational techniques to search the HiRISE and CTX image databases and discover new content.

Many algorithms can classify image content, often requiring preprocessing such as edge detection or histograms of oriented gradients (Dalal & Triggs, 2005). For pattern recognition tasks like object classification, convolutional neural networks (CNNs) have emerged as a powerful approach and can now match human performance in object recognition (He et al. 2015; Ioffe & Szegedy 2015). CNNs are particularly well suited to image classification because they learn optimal filters, or feature detectors, directly from the training data, eliminating the need for hand-crafted features or preprocessing. For planetary mapping, CNNs outperform other classifiers such as support vector machines (Palafox et al., 2017) and can be fine-tuned to identify landforms in Martian images (Wagstaff et al. 2018; Nagle-McNaughton et al. 2020). Other relevant approaches for planetary exploration include terrain segmentation to inform navigation (Dai et al., 2022) and caption generation for planetary images (Qiu et al., 2020). To further improve computational efficiency when dealing with large image archives, recent work has explored operating on frequency-domain representations of images. Image compression schemes such as JPEG rely on frequency-domain transforms like the discrete cosine transform to remove redundant data, and recent deep learning approaches have used these compressed representations for efficient, near state-of-the-art object classification (Russakovsky et al. 2015; Gueguen et al. 2018; Chamain & Ding 2019). Working directly with frequency-domain data saves significant time on large archives by avoiding full decoding, enabling timely surveys that can inform landing site selection and identify rare features such as potential astrobiology targets.

We are interested in automating the detection of brain terrain in MRO/HiRISE images because its resemblance to terrestrial periglacial features makes it a promising target for further investigation in the search for past or present life on Mars. To expedite processing, we apply a classifier network in the Fourier domain before segmentation; it takes advantage of JPEG compression by operating on blocks of discrete cosine transform coefficients instead of decoding the entire image at full spatial resolution. This hybrid pipeline reduces the total processing time compared to running a segmentation network at full resolution on every image. In the sections below, we describe how the training data were constructed and what goes into each of the networks we tested, and then quantify their performance and accuracy in predicting brain terrain.

2 Observations and Training Data

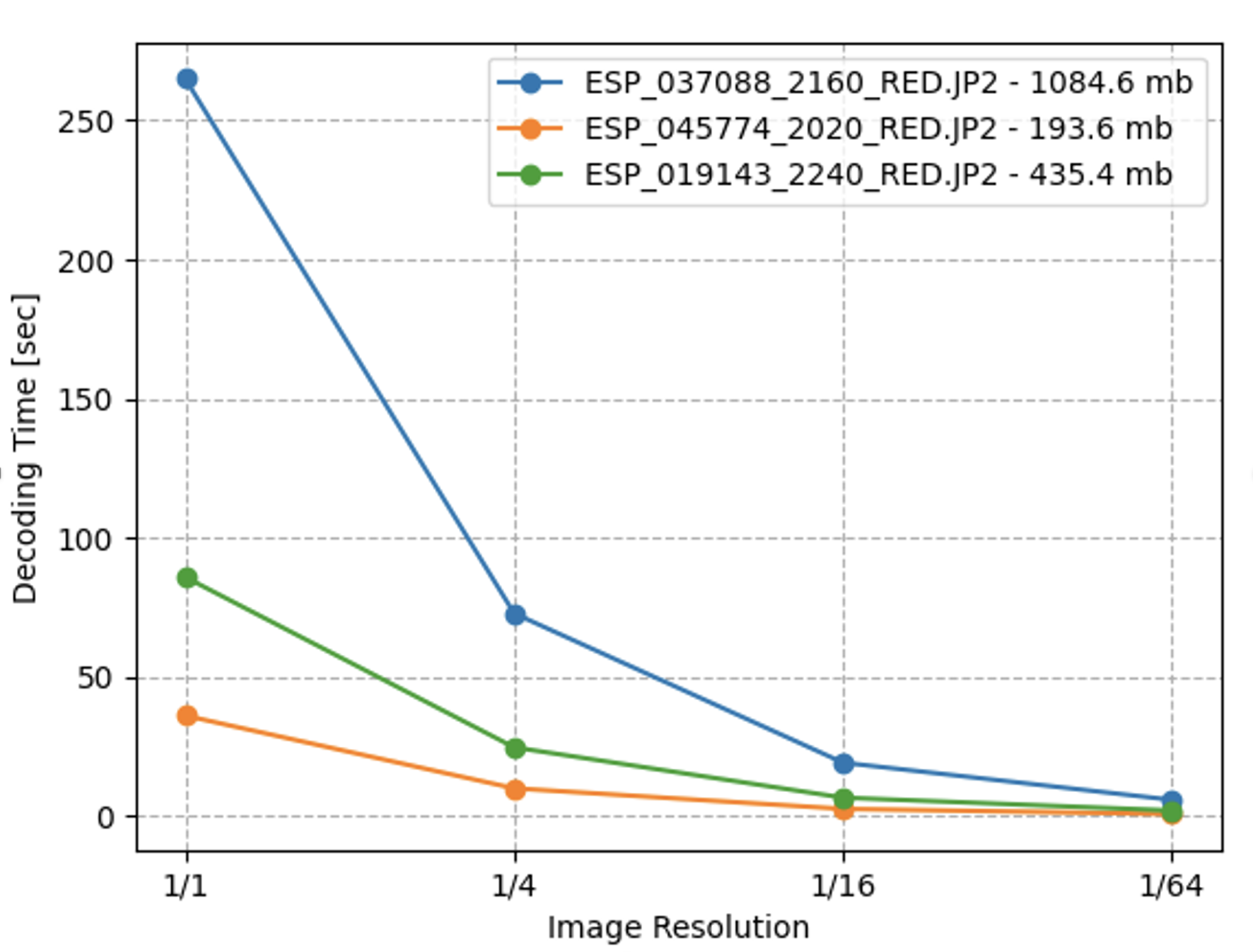

We analyze observations of the Martian surface from the High Resolution Imaging Science Experiment (HiRISE) onboard the Mars Reconnaissance Orbiter (MRO) (McEwen et al., 2007). Images from the primary and extended missions were used and are publicly available online (https://hirise.lpl.arizona.edu/). HiRISE consists of a 0.5 m telescope and an optical camera; for the purposes of our study, we use images from the red channel, which spans 550–800 nm. The MRO spacecraft orbits Mars at an altitude of around 300 km and, depending on the pointing, the HiRISE camera images the surface at resolutions between 25 and 50 cm/px. The camera reads out swaths of data while tracking the ground, in spans of 20,000 pixels, and combined images are typically on the order of 100,000 pixels long. The image data are stored with 10-bit precision in the JPEG2000 format. The encoding algorithm can compress images to 5–10% of their original size; e.g., 10.8 GB of data at full spatial resolution compresses into as little as 675 MB on disk. Decoding an image at full resolution can take up to a few minutes on a CPU, depending on the image size (see Figure 1). The HiRISE archive contains over 50 TB of compressed data spanning 85,000 images (as of this writing); for our study, we used a local snapshot acquired in 2020 containing 58,209 images. Processing terabytes of images requires a non-negligible amount of time for decoding, in addition to applying any computer vision algorithm afterward.
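The compression figures quoted above (10.8 GB at full resolution compressing into as little as 675 MB) can be checked with a few lines of arithmetic. This is a minimal sketch assuming decimal units (1 GB = 10^9 bytes, 1 MB = 10^6 bytes); the helper name `compression_summary` is hypothetical and not part of any HiRISE tool.

```python
def compression_summary(raw_bytes, compressed_bytes):
    """Return (compression ratio, compressed size as a percentage of the raw size)."""
    ratio = raw_bytes / compressed_bytes
    return ratio, 100.0 / ratio


# Example from the text, assuming decimal units: 10.8 GB raw -> 675 MB on disk.
ratio, percent = compression_summary(10.8e9, 675e6)
print(f"{ratio:.1f}x smaller, {percent:.2f}% of the original size")
```

The example corresponds to a factor of 16, i.e., the compressed file occupies 6.25% of the space of the fully decoded image.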

To automate the detection of landforms on the Martian surface, we need a computer vision algorithm capable of learning the ideal features for detection. The advantage of a neural network is that it can be trained to identify subtle features (e.g., in pixel or elevation data) inherent in a large data set. This learning is accomplished by adjusting the weights and biases to minimize the difference (here, the cross entropy) between the output of the network and the expected value from the training data. Our training data are separated into two classes: a generic ‘background’ class that represents most types of terrain and a ‘brain terrain’ class for our terrain of interest. The training data are split in a 3:1 ratio (background to brain terrain) in order to provide a diverse range of background samples. These diverse negative samples enable the network to discriminate brain terrain from visually similar terrains (e.g., aeolian ridges or deflationary terrain) while minimizing false positives. We tested different ratios and found that larger ratios (more background) produced a higher rate of false negatives, while smaller ratios, including a 1:1 split, yielded more false positives. The moderately imbalanced split also mimics deployment conditions: brain terrain occurrences are sparse yet scientifically compelling across the vast Martian surface, and learning from limited labeled examples forces the model to learn highly discriminative features for precise identification rather than defaulting to the majority class.
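A minimal sketch of the two ingredients described above: assembling a 3:1 background-to-brain-terrain training set and the binary cross-entropy loss that training minimizes. Building the split by randomly subsampling a larger background pool is our assumption, and the function names are hypothetical.

```python
import random

import numpy as np


def sample_training_set(positives, negatives, ratio=3, seed=0):
    """Subsample background tiles so that background:brain terrain is ratio:1.

    Returns a shuffled list of (tile, label) pairs with label 1 = brain terrain.
    """
    rng = random.Random(seed)
    n_background = min(len(negatives), ratio * len(positives))
    background = rng.sample(list(negatives), n_background)
    data = [(tile, 1) for tile in positives] + [(tile, 0) for tile in background]
    rng.shuffle(data)
    return data


def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross entropy; probabilities are clipped to avoid log(0)."""
    y = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(y_prob, dtype=float), eps, 1.0 - eps)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))


# Toy usage: 10 brain terrain tiles and a pool of 100 candidate background tiles.
train = sample_training_set([f"bt{i}" for i in range(10)], [f"bg{i}" for i in range(100)])
labels = [label for _, label in train]
print(len(train), sum(labels))  # 40 tiles, 10 of them brain terrain
```

Changing `ratio` is one way to reproduce the kind of ratio sweep described above.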

When we started this study, we had only two hand-labeled examples of brain terrain, so we used active learning to build a more robust training set. The two initial examples came from the images published in Noe Dobrea et al. (2007). We used those labeled regions to train an initial classifier to identify similar structures in other HiRISE images. We then manually vetted the classifier’s detections, adding false positives to the background training set and missed detections of true brain terrain to the positive training set. This cycle of training, manual vetting, and augmenting the training sets was iterated to steadily improve the classifier’s performance. Each new image added to the brain terrain training data was masked manually to avoid biasing the labels with outputs from a preliminary algorithm. The full list of images used to generate training samples is given in Table 1.
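A minimal sketch of the active learning loop described above, assuming the training routine, the classifier, and the human vetting step can each be wrapped as a function. All names are hypothetical; `vet` stands in for the manual inspection, and the toy threshold "model" exists only to make the loop runnable.

```python
def active_learning(train, detect, vet, positives, negatives, pool, n_rounds=3):
    """Hypothetical train -> detect -> vet -> augment loop.

    train(positives, negatives) -> model
    detect(model, item) -> bool   # does the classifier flag this item?
    vet(item) -> bool             # human judgment: is it really brain terrain?
    """
    positives, negatives = list(positives), list(negatives)
    for _ in range(n_rounds):
        model = train(positives, negatives)
        for item in pool:
            flagged, is_brain_terrain = detect(model, item), vet(item)
            if flagged and not is_brain_terrain and item not in negatives:
                negatives.append(item)  # false positive -> background set
            elif is_brain_terrain and not flagged and item not in positives:
                # missed detection -> positive set (masked by hand in practice)
                positives.append(item)
    return positives, negatives


# Toy 1-D example: items are numbers and only items >= 7 are "brain terrain".
def toy_train(positives, negatives):
    return (max(negatives) + min(positives)) / 2  # threshold between classes


def toy_detect(threshold, item):
    return item >= threshold


def toy_vet(item):
    return item >= 7


pos, neg = active_learning(toy_train, toy_detect, toy_vet, [9], [0], range(10))
print(sorted(pos), sorted(neg))  # [7, 9] [0, 5, 6]
```

In the toy run, the first round adds the false positives 5 and 6 to the background set, the second adds the missed item 7 to the positive set, and the third round makes no errors.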

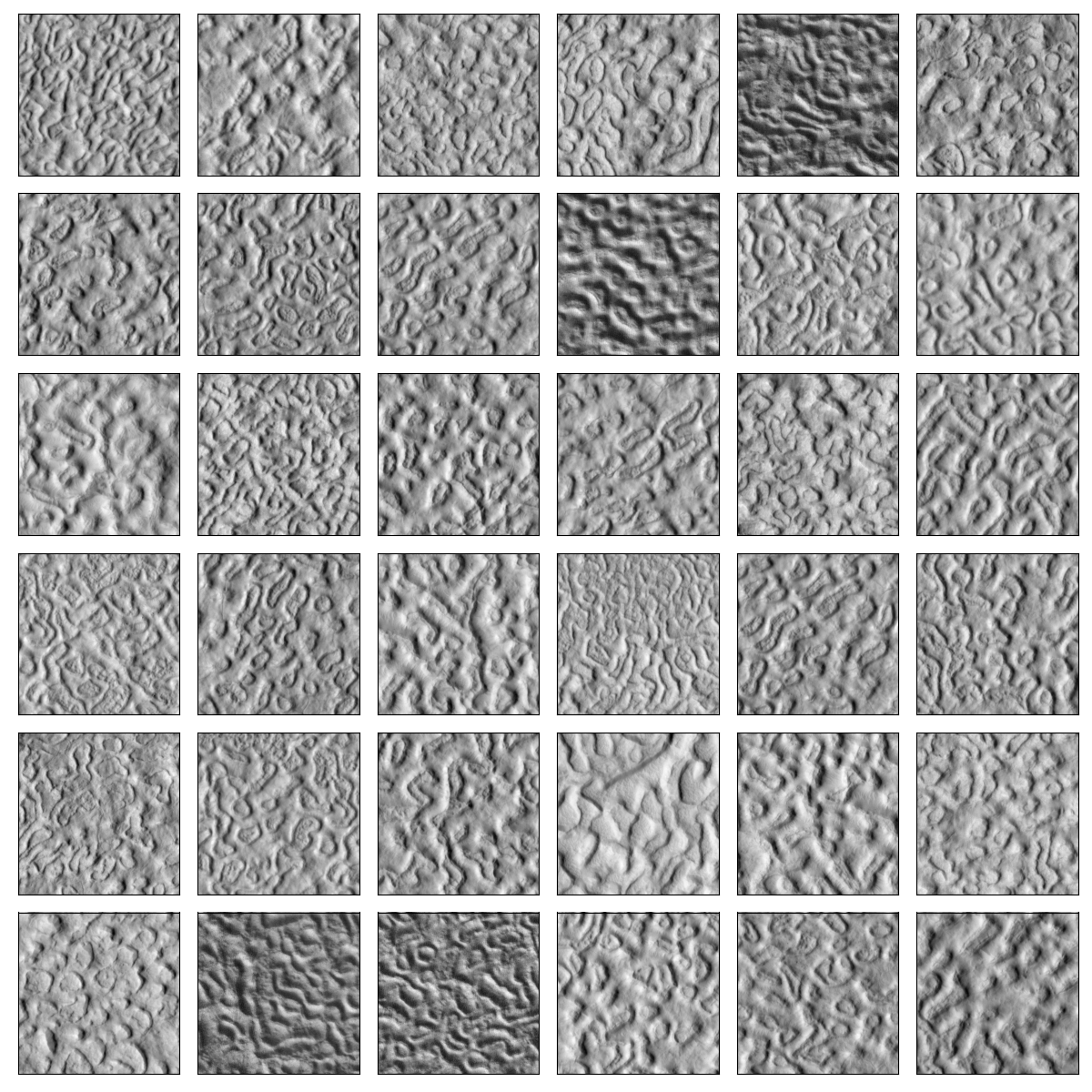

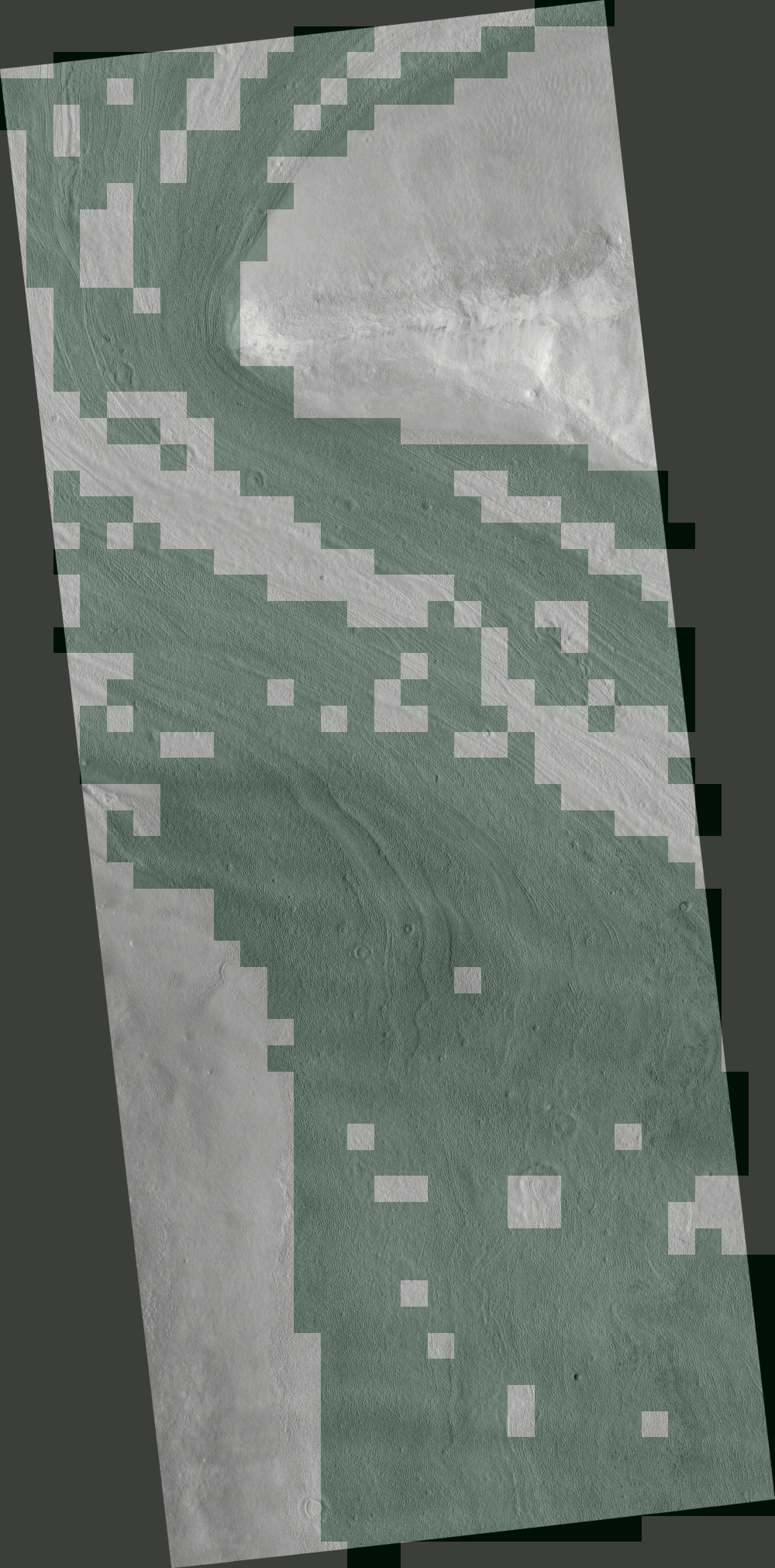

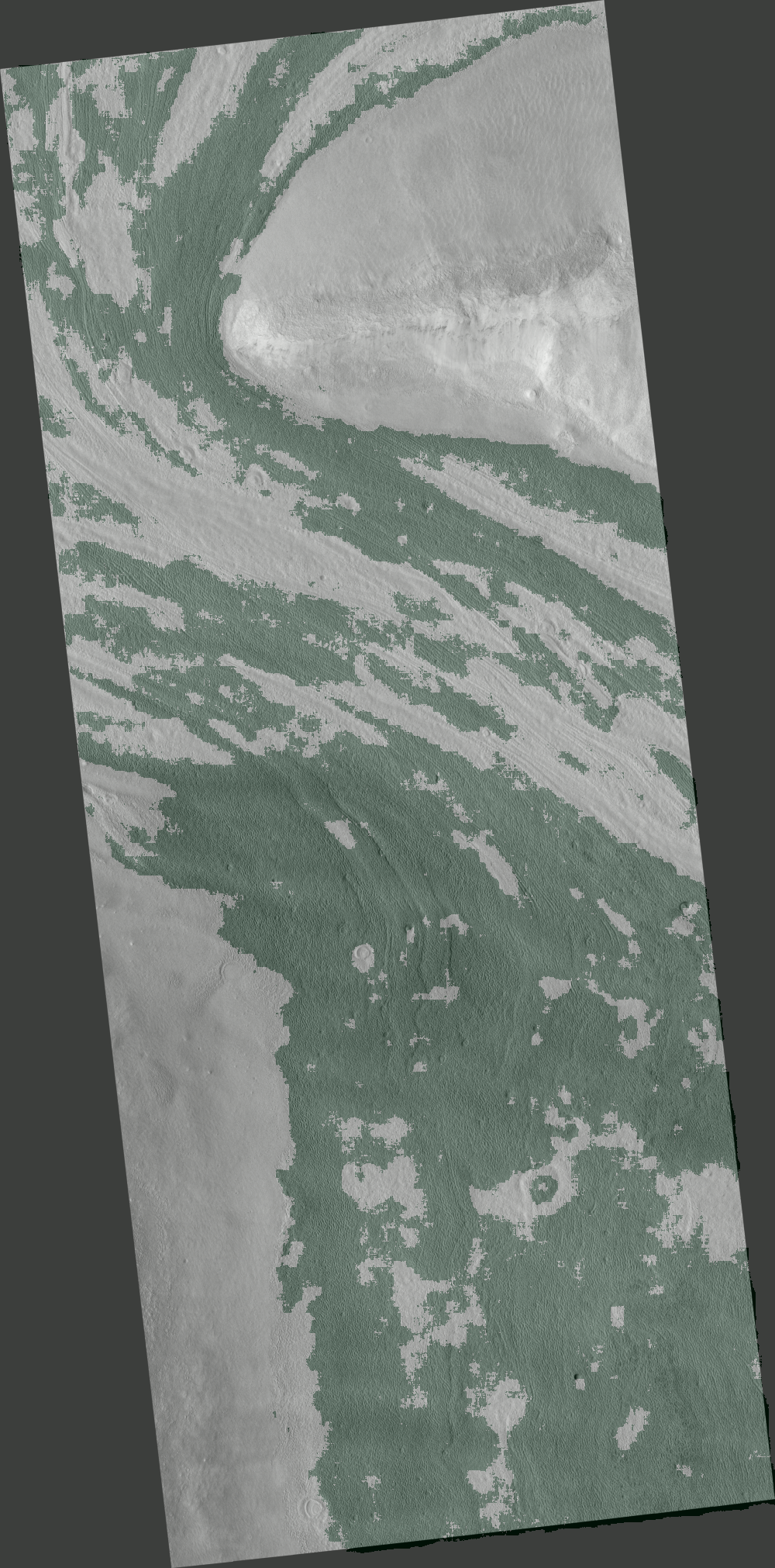

It is important to train on a diverse population of background images, since the network must generalize to the roughly 60,000 images in the HiRISE archive snapshot used in this study. We trained multiple networks and used the one with the best results. We found that shrinking the input window below 128 pixels usually degrades performance; therefore, all of the networks we tested have larger windows (128, 256, or 512 pixels; see Table 2). The networks are trained on data between 25 and 50 cm/px, and our results support the conclusion of other studies that, owing to convolution and pooling operations, such networks are partially scale invariant (Xu et al. 2014; Xu et al. 2019; Wimmer et al. 2023). This breaks down if a brain terrain feature is larger than the window size, in which case the network needs a larger input window. We generate training tiles from the map-projected images and ignore portions of each image containing the black border. A subset of our brain terrain training samples is shown in Figure 2.
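Tile generation that skips the black border of a map-projected image can be sketched as below. This assumes border pixels are stored as exactly 0 (the `nodata` value) and that windows do not overlap (`stride = size`); because dark shadows could also contain zeros, `max_nodata_fraction` allows some tolerance. The function name and parameters are hypothetical.

```python
import numpy as np


def extract_tiles(image, size=128, stride=None, nodata=0, max_nodata_fraction=0.0):
    """Cut an image into size x size windows, skipping windows on the no-data border.

    Returns a list of (row, col, tile) tuples. A window is kept only if the
    fraction of pixels equal to `nodata` is at most `max_nodata_fraction`.
    """
    stride = stride or size
    height, width = image.shape
    tiles = []
    for row in range(0, height - size + 1, stride):
        for col in range(0, width - size + 1, stride):
            tile = image[row:row + size, col:col + size]
            if np.mean(tile == nodata) > max_nodata_fraction:
                continue  # window touches the black border of the map projection
            tiles.append((row, col, tile))
    return tiles


# Toy map-projected image whose left 128 columns are black border.
image = np.ones((512, 512), dtype=np.uint16)
image[:, :128] = 0
tiles = extract_tiles(image, size=128)
print(len(tiles))  # 12: four rows times the three border-free columns
```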

| Name | Class | Description |
|---|---|---|
| ESP_016215_2190 | brain terrain | Area West of Erebus Montes |
| ESP_016287_2205 | brain terrain | Lineated Valley Floor Material in Terrain North of Arabia Region |
| ESP_018707_2205 | brain terrain | Candidate New Impact Site Formed between January 2010 and June 2010 |
| ESP_022629_2170 | brain terrain | Lobate Deposits in Crater in Arabia Terra |
| ESP_042725_2210 | brain terrain | Lineated Valley Floor Material in Terrain North of Arabia Region |
| ESP_052675_2215 | brain terrain | Region Near Erebus Montes |
| ESP_057317_2210 | brain terrain | Crater with Preferential Ejecta Distribution on Possible Glacial Unit |
| ESP_061141_2195 | brain terrain | Candidate Landing Site for SpaceX Starship in Arcadia Region |
| PSP_001426_2200 | brain terrain | Lobate Apron Feature in Deuteronilus Mensae Region |
| PSP_007531_2195 | brain terrain | Striated Flows in Canyon |
| ESP_019385_2210 | brain terrain | Lineated Valley Floor Material in Terrain North of Arabia Region |
| ESP_036917_2210 | brain terrain | Doubly-Terraced Elongated Crater in Arcadia Planitia |
| ESP_052385_2205 | brain terrain | Sample Region Near Erebus Montes |
| ESP_055146_2220 | brain terrain | Candidate Recent Impact Site |
| ESP_060698_2220 | brain terrain | Subliming Ice |
| ESP_061075_2195 | brain terrain | Candidate Landing Site for SpaceX Starship in Arcadia Region |
| ESP_077488_2205 | brain terrain | Lineated Valley Floor Material in Terrain North of Arabia Region |
| PSP_001410_2210 | brain terrain | Impact Crater Filled with Layered Deposits |
| PSP_001466_2215 | brain terrain | Fretted Terrain Valleys and Apron Materials |
| PSP_009740_2200 | brain terrain | Debris Aprons in Eastern Erebus Montes |
| ESP_011428_2200 | Background | Candidate New Impact Site Formed between January and October 2008 |
| ESP_011571_2270 | Background | Very Fresh Small Impact Crater |
| … | … | … |
| PSP_008158_1825 | Background | Region North of Nicholson Crater |
| PSP_010346_1570 | Background | Graben in Memnonia Fossae |

Note. — The full table consists of 149 images and is hosted online.

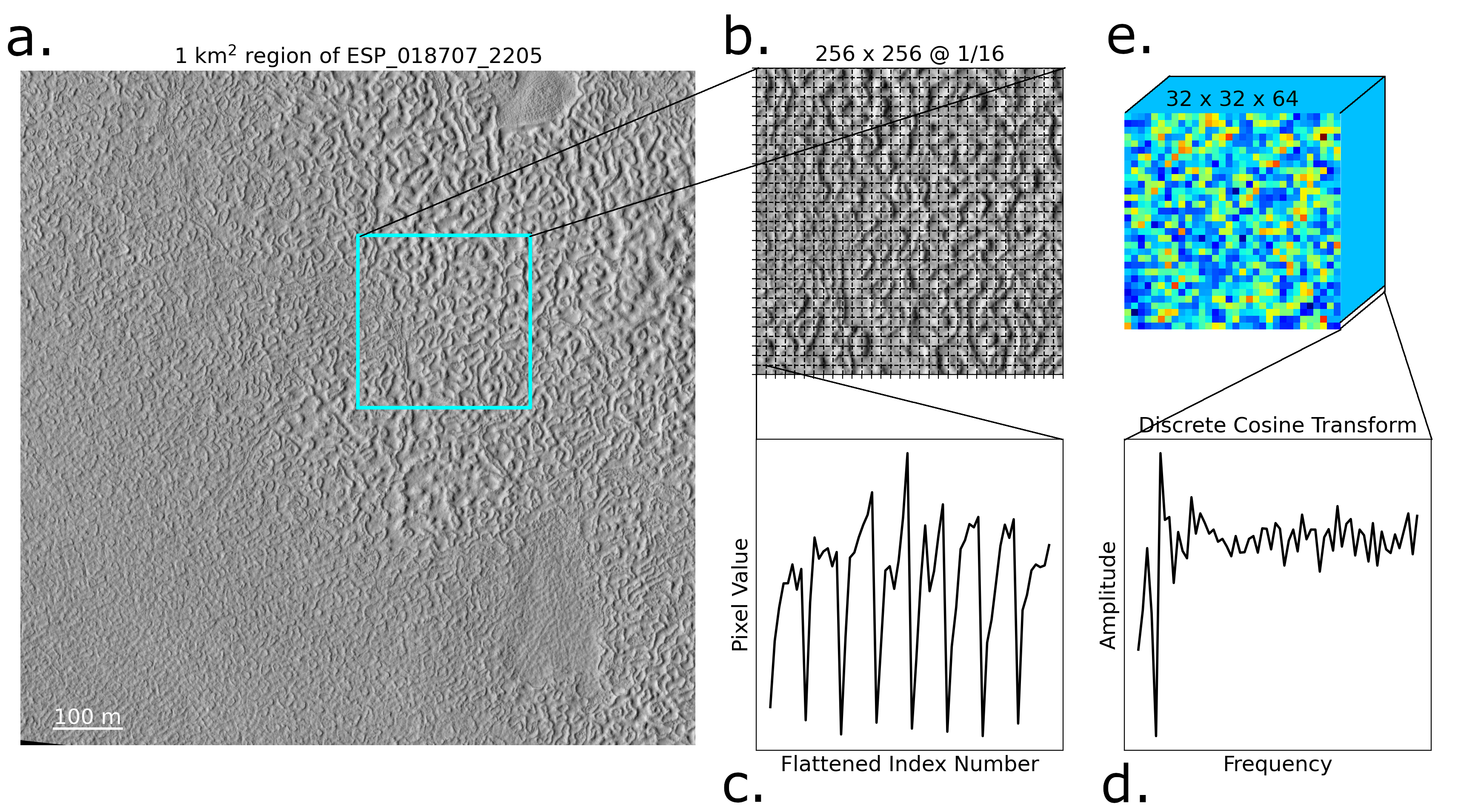

3 Cross-Validation and Algorithm Comparison

A hybrid pipeline allows us to save processing time by evaluating data at lower resolution while retaining most of the accuracy of a full-resolution network. The pipeline starts with a classifier that quickly screens images, followed by a segmentation network for full-resolution, pixel-scale inference. We tested multiple classifiers with different architectures and input sizes, which lead to differences in accuracy and evaluation speed (see Table 2). We tested two types of classifiers: one in the Fourier domain, which operates on blocks of coefficients from an encoding process similar to JPEG, and a spatial classifier for comparison. Both classifiers work with data at 1/16 resolution. The spatial classifier uses a normalization layer to transform the input to zero mean and unit standard deviation. The Fourier-domain classifier follows the JPEG encoding process: it divides the input into 8x8 tiles, applies a discrete cosine transform to each tile, and reshapes the result into a smaller but deeper block for the network (see Figure 3). For example, the tiling and encoding process transforms a 256x256 input image into a data cube of size 32x32x64. The smaller spatial size is advantageous for convolutional neural networks: the first layer usually reduces the number of channels from 64 to 32, and fewer spatial positions require fewer operations per layer, which speeds up inference. With a similar architecture applied to spatial data, by contrast, the first layer increases the dimensionality of the input, since there is only one intensity channel instead of 64 frequency channels. Early in our study we experimented with random forests and multi-layer perceptrons, but quickly found more benefit in using larger input sizes, which are better suited to convolutional networks.
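The 8x8 tiling-and-transform step can be sketched in NumPy. This is a minimal sketch assuming a single-channel image whose sides are multiples of 8; it computes an orthonormal 8x8 DCT-II directly from pixels rather than reading coefficients out of a compressed bitstream, and it omits JPEG's level shift and quantization. The function names are hypothetical, not taken from the authors' code.

```python
import numpy as np


def dct_matrix(n=8):
    """Orthonormal DCT-II basis: row k holds the k-th cosine frequency."""
    k = np.arange(n)[:, None]
    x = np.arange(n)[None, :]
    basis = np.cos(np.pi * (2 * x + 1) * k / (2 * n)) * np.sqrt(2.0 / n)
    basis[0, :] /= np.sqrt(2.0)  # DC row scaled to 1/sqrt(n)
    return basis


def blockwise_dct(image, block=8):
    """Split a 2-D image into block x block tiles and DCT each tile.

    An (H, W) image becomes an (H/block, W/block, block*block) cube, so each
    spatial position carries one tile's frequency coefficients as channels.
    """
    height, width = image.shape
    if height % block or width % block:
        raise ValueError("image dimensions must be multiples of the block size")
    d = dct_matrix(block)
    # (H, W) -> (H/b, b, W/b, b) -> (H/b, W/b, b, b): one tile per grid cell
    tiles = image.reshape(height // block, block, width // block, block).transpose(0, 2, 1, 3)
    # 2-D DCT of every tile at once: D @ tile @ D.T
    coeffs = np.einsum("ij,abjk,lk->abil", d, tiles.astype(float), d)
    return coeffs.reshape(height // block, width // block, block * block)


cube = blockwise_dct(np.random.default_rng(0).random((256, 256)))
print(cube.shape)  # (32, 32, 64)
```

Obtaining the coefficients from an existing compressed encoding, instead of computing them from decoded pixels as here, is where the time savings described in the text come from; this sketch only reproduces the 256x256 to 32x32x64 data layout.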
We found a negative correlation between the input size and the number of false positives with every algorithm we tested and ultimately settled on window sizes of 128x128 or 256x256, depending on the classifier. The advantage of using a smaller window size like 128x128 is that we can make more training data for a given set of HiRISE images (see Table 2).

While the classifier network demonstrated high accuracy and computational efficiency, it lacked the spatial precision required for detailed surface measurements. Detecting brain terrain at sub-meter scales requires a network that operates at the native HiRISE image resolution (0.25–0.5 m/pixel). To this end, we designed a custom U-Net architecture based on MobileNetV3 (Howard et al., 2019) to build a segmentation network for pixel-level predictions. MobileNet was chosen because it achieves state-of-the-art accuracy on the ImageNet classification benchmark while requiring less training time and fewer computational resources than larger CNN architectures such as ResNet (He et al., 2015) and VGG. MobileNet was designed for mobile devices, which typically use ARM-based processors; such processors are growing in popularity for space-based operations due to their energy efficiency (Dunkel et al., 2022).

The U-Net architecture is widely adopted for segmentation tasks due to its ability to learn both image context and object boundaries (Ronneberger et al. 2015; Garcia-Garcia et al. 2017). It consists of a contracting encoder path and an expanding decoder path. The encoder uses a series of convolutional and pooling layers to downsample the input image, extracting higher-level semantic features at deeper layers. The decoder then upsamples these feature maps to produce a pixel-wise segmentation mask at the same resolution as the input. Crucially, the decoder combines high-level semantic features from the encoder’s deepest layers with higher-resolution features from earlier layers via skip connections. This allows the U-Net to integrate local information (from early layers) with global context (from deep layers) to make accurate pixel-wise predictions. Compared to standard convolutional architectures such as VGG (a deep stack of 3x3 convolutional layers), the U-Net’s decoder path and skip connections enable much more precise segmentation around the boundaries of objects and regions, which is highly beneficial for delineating the intricate patterns of brain terrain at high spatial resolution. We chose MobileNetV3 as the encoder because its efficient design achieves high accuracy at low computational cost (Howard et al., 2019), which allowed us to fit a high-resolution U-Net within memory constraints.
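The requirement that a skip connection join encoder and decoder feature maps of the same spatial size can be made concrete with a little bookkeeping. This pure-Python sketch assumes each decoder stage upsamples by a factor of 2 and lists which encoder resolution each decoder stage could draw a skip connection from; the layer names are hypothetical placeholders, not real MobileNetV3 layer names.

```python
def plan_skip_connections(input_size, bottleneck_size, encoder_features):
    """Pair each decoder upsampling step with an encoder feature map of equal size.

    encoder_features maps spatial size -> name of an encoder layer at that size.
    Returns (decoder output size, skip source or None) for every x2 upsampling.
    """
    if input_size % bottleneck_size:
        raise ValueError("input size must be a multiple of the bottleneck size")
    factor = input_size // bottleneck_size
    if factor & (factor - 1):
        raise ValueError("downsampling factor must be a power of two")
    plan, size = [], bottleneck_size
    while size < input_size:
        size *= 2  # each decoder stage doubles height and width
        plan.append((size, encoder_features.get(size)))
    return plan


# 512x512 input and 32x32 encoder output (the sizes used in the text);
# the encoder layer names are placeholders.
features = {256: "enc_stage1", 128: "enc_stage2", 64: "enc_stage3"}
for size, source in plan_skip_connections(512, 32, features):
    print(size, source)
```

With these sizes, four upsampling stages are needed. Because no encoder map is listed at 512x512 in this example, the final full-resolution stage has no skip source, which illustrates how the decoder depth constrains the choice of encoder layers.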

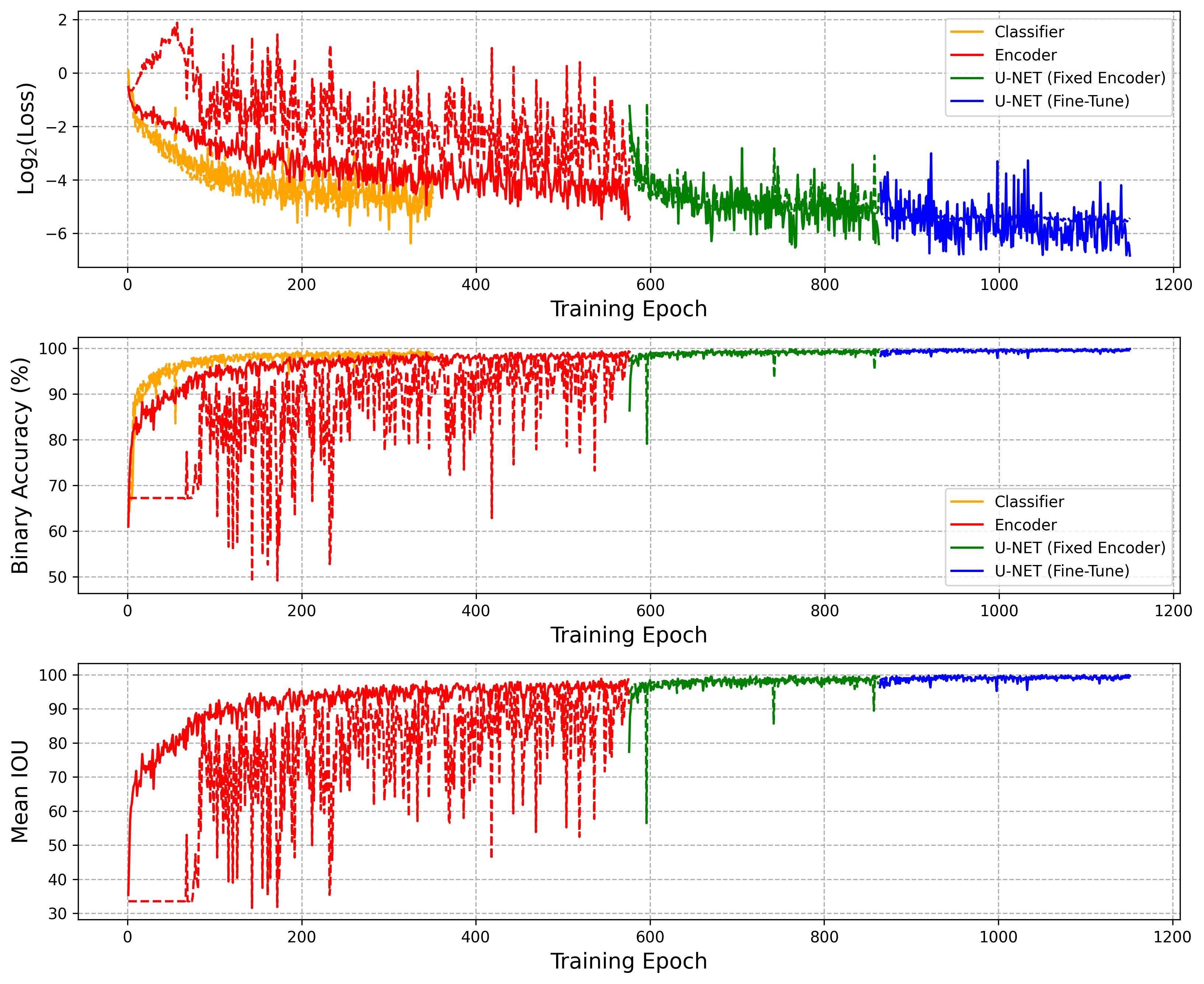

Due to the size and complexity of the U-Net architecture, we found that training the network in multiple stages improved performance. First, the encoder portion of the U-Net was trained to predict low-resolution (32x32) segmentation masks from 512x512 input images. As shown by the jitter in testing accuracy (Figure 4), the encoder alone did not generalize adequately to the testing data. Next, the full U-Net was assembled by appending the decoder and freezing the encoder weights. Only the decoder was trained in this stage, learning to upscale the low-resolution encoder outputs to the native image resolution. Following the U-Net design, skip connections link selected encoder layers to decoder layers of equal depth. Since the base network (MobileNet) is not a U-Net, choosing which layers to use for skip connections required empirical tuning, and the number of upsampling layers in the decoder constrained the possible skip connection sources in the encoder. Finally, the full U-Net was fine-tuned end to end using a learning rate an order of magnitude lower than in the previous training stages. We trained with the Adam optimizer and a binary cross-entropy loss, since there are only two classes of interest. A comparison of the classifier and segmentation network is shown in Figure 5.
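The three training stages can be summarized as a schedule of which sub-network is trainable and at what learning rate. This is a framework-agnostic sketch: the base learning rate of 1e-3 (the common Adam default) is our assumption, not a value reported here. In a framework such as Keras, each stage would correspond to toggling the encoder's `trainable` attribute and recompiling the model with the new learning rate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    trainable: frozenset  # parts of the network that receive gradient updates
    learning_rate: float


def staged_schedule(base_lr=1e-3):
    """Three-stage schedule following the text; base_lr is an assumed value."""
    return [
        Stage("encoder -> 32x32 masks", frozenset({"encoder"}), base_lr),
        Stage("decoder only, encoder frozen", frozenset({"decoder"}), base_lr),
        # End-to-end fine-tuning uses a learning rate an order of magnitude lower.
        Stage("end-to-end fine-tuning", frozenset({"encoder", "decoder"}), base_lr / 10),
    ]


for stage in staged_schedule():
    print(f"{stage.name:32s} trainable={sorted(stage.trainable)} lr={stage.learning_rate:g}")
```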

To evaluate the performance of the different architectures for automating the detection of brain terrain, we used the F1 score as the primary metric. The F1 score is the harmonic mean of precision (the fraction of detected regions that are true positives) and recall (the fraction of actual brain terrain regions successfully detected). It ranges from 0 to 1, where 1 represents perfect precision and recall. As shown in Table 2, the unet-512-spatial model achieved the highest F1 score, 0.998, on the test dataset, indicating excellent overall accuracy in identifying brain terrain while minimizing both false positive and false negative detections. Because it balances the trade-off between reducing false alarms and avoiding missed detections, maximizing the F1 score ensures that the automated maps have high fidelity for subsequent scientific analysis of the spatial distribution and morphology of brain terrain.
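The F1 definition can be written out directly. Using the TP, FP, and FN rate columns of Table 2 as if they were counts (which amounts to assuming equally many positive and negative test samples) reproduces the reported F1 values for the rows checked here. The helper name is hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and their harmonic mean (F1 = 2TP / (2TP + FP + FN))."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return precision, recall, f1


# Rate columns (TP, FP, FN) for three rows of Table 2, used as if they were counts.
rows = {
    "cnn-256-dct": (90.6, 4.3, 9.4),
    "cnn-128-dct": (90.4, 7.9, 9.6),
    "MobileNet-256-dct": (93.6, 30.4, 6.4),
}
for name, (tp, fp, fn) in rows.items():
    print(name, round(precision_recall_f1(tp, fp, fn)[2], 3))
```

The MobileNet-256-dct row shows why F1 is a useful summary: its recall is high, but its large false positive rate pulls precision, and therefore F1, down.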

| Model name | Training size | Testing size | F1 score | TP (%) | TN (%) | FP (%) | FN (%) | 1K×1K windows/s |
|---|---|---|---|---|---|---|---|---|
| unet-512-spatial | 58641 | 6516 | 0.998 | 99.8 | 99.7 | 0.2 | 0.3 | 6.5 |
| cnn-128-spatial | 58641 | 6516 | 0.996 | 99.6 | 99.6 | 0.4 | 0.4 | 33.9 |
| resnet-128-spatial | 58641 | 6516 | 0.995 | 99.7 | 99.4 | 0.6 | 0.3 | 21.7 |
| cnn-256-spatial | 11493 | 1277 | 0.991 | 99.2 | 99.0 | 1.0 | 0.8 | 46.4 |
| resnet-256-spatial | 13736 | 1527 | 0.990 | 99.6 | 98.5 | 1.5 | 0.4 | 35.1 |
| MobileNet-128-spatial | 58641 | 6516 | 0.988 | 98.6 | 99.0 | 1.0 | 1.4 | 97.7 |
| MobileNet-256-spatial | 13736 | 1527 | 0.977 | 96.0 | 99.5 | 0.5 | 4.0 | 169.0 |
| cnn-256-dct | 13736 | 1527 | 0.930 | 90.6 | 95.7 | 4.3 | 9.4 | 228.4 |
| cnn-128-dct | 58641 | 6516 | 0.912 | 90.4 | 92.1 | 7.9 | 9.6 | 134.2 |
| resnet-256-dct | 13736 | 1527 | 0.844 | 87.3 | 80.5 | 19.5 | 12.7 | 130.0 |
| MobileNet-256-dct | 13736 | 1527 | 0.836 | 93.6 | 69.6 | 30.4 | 6.4 | 159.8 |

Note. — All metrics are computed using the test data. The last column gives the number of 1K × 1K windows each model can process per second on an RTX 3090 GPU. The number in each model name is the input window size in pixels. TP = true positive, TN = true negative, FP = false positive, FN = false negative; TP and FN are percentages of the brain terrain test samples, and TN and FP are percentages of the background test samples.

4 Results and Discussion

The HiRISE camera on MRO has been acquiring data of the Martian surface for over a decade. We acquired a snapshot of the archive in 2021 that contains 58,209 images occupying over 28.1 TB on disk. Our problem is to find a rather rare terrain among many images, and we need pixel-level boundaries in order to accurately measure its spatial extent (see Figure 6). Figure 1 shows the time required to decode a single HiRISE image as a function of decoding resolution and image size. The estimated time to decode the entire archive at native resolution is about 170.3 hours, and segmenting the dataset adds another 145.4 hours. These numbers are based on interpolating our measured timings as a function of image size and number of images. In total, processing the entire archive with the segmentation algorithm alone would take about 14 days. A hybrid pipeline, with a classifier that operates on lower-resolution data, proved to be about 90% as accurate as our segmentation algorithm (see Table 2). Since the classifier is about 15× faster than the segmentation network and evaluates images at 1/16 resolution, we process the entire archive in 21 hours.
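The gating logic behind the hybrid pipeline, and why it saves time, can be sketched as follows. The callables stand in for the low-resolution classifier and the full-resolution segmentation network and are hypothetical; the cost model ignores decoding overheads, and the numbers in the usage example are illustrative only, not the timings reported above.

```python
def hybrid_pipeline(image_ids, classify_lowres, segment_fullres):
    """Screen every image cheaply; segment at full resolution only if flagged.

    classify_lowres(image_id) -> bool   # fast classifier on reduced-resolution data
    segment_fullres(image_id) -> mask   # slow segmentation on the decoded full image
    """
    masks = {}
    for image_id in image_ids:
        if classify_lowres(image_id):
            masks[image_id] = segment_fullres(image_id)
    return masks


def pipeline_hours(n_images, hours_classify, hours_segment, flagged_fraction):
    """Cost model: every image is classified, only the flagged fraction is segmented."""
    return n_images * (hours_classify + flagged_fraction * hours_segment)


# Toy usage: 100 images, of which the classifier flags every tenth one.
calls = []


def toy_segment(image_id):
    calls.append(image_id)
    return f"mask-{image_id}"


masks = hybrid_pipeline(range(100), lambda i: i % 10 == 0, toy_segment)
print(len(masks), len(calls))  # 10 10
```

The cost model makes the design choice explicit: the expensive segmentation term is multiplied by the flagged fraction, so the savings grow as the target terrain becomes rarer, provided the classifier's false negative rate stays acceptable.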

While our methodology is quick, it also needs to be trustworthy and accurate. Manually evaluating every image that passes through our pipeline is too time-consuming, but we can inspect the positive detections since they are far less numerous. Of the images flagged by both algorithms, we manually vetted a portion to ensure that we had at least some reliable results to follow up. Our vetting consisted of inspecting the full-resolution images with the brain terrain segmentation mask overlaid. We inspected 456 positive detections and rejected 255 as false positives. We can use the rejections to estimate a false positive rate of 0.4% (255/58,209), a value remarkably similar to our estimate in Table 2, which comes from a more idealized dataset. This outcome highlights the importance of including a diverse range of images in the training data to minimize false positives and ensure accurate results. One caveat of training on false positives (see Section 2 for our active learning process) is that they can sometimes adversely affect accuracy if their features are similar to brain terrain and there are few positive samples. We do not have a good prescription for systematically testing this, other than inspecting the image embeddings or using some form of hold-out validation during training.

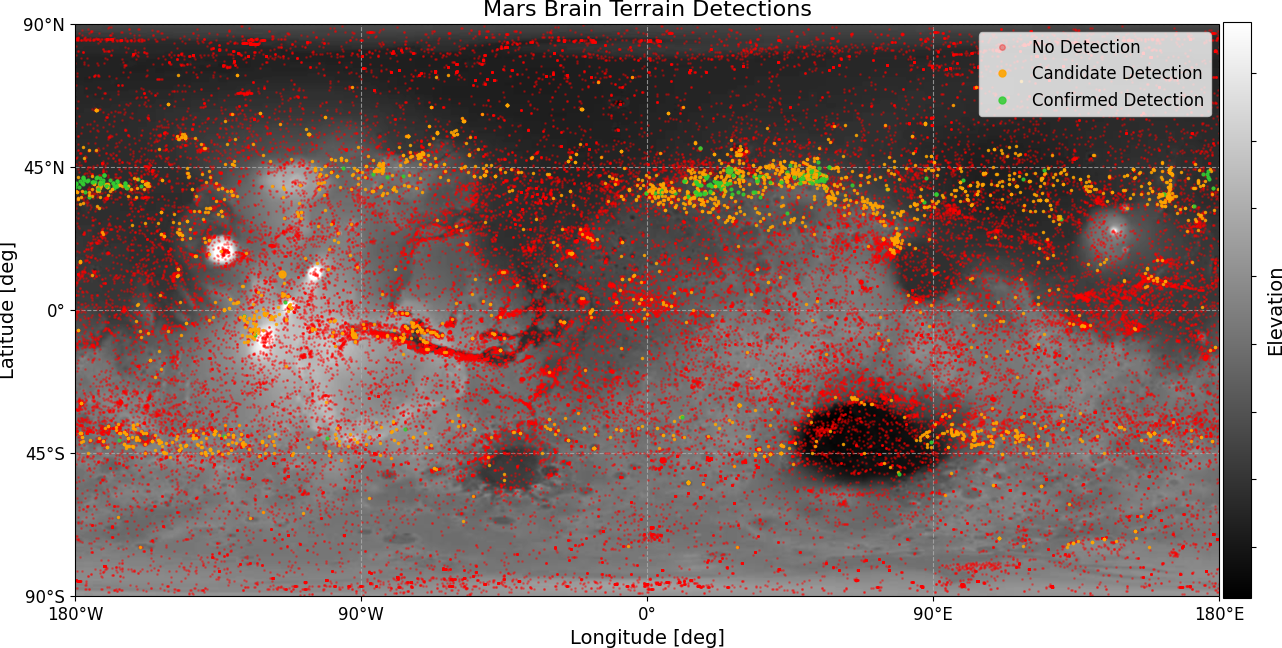

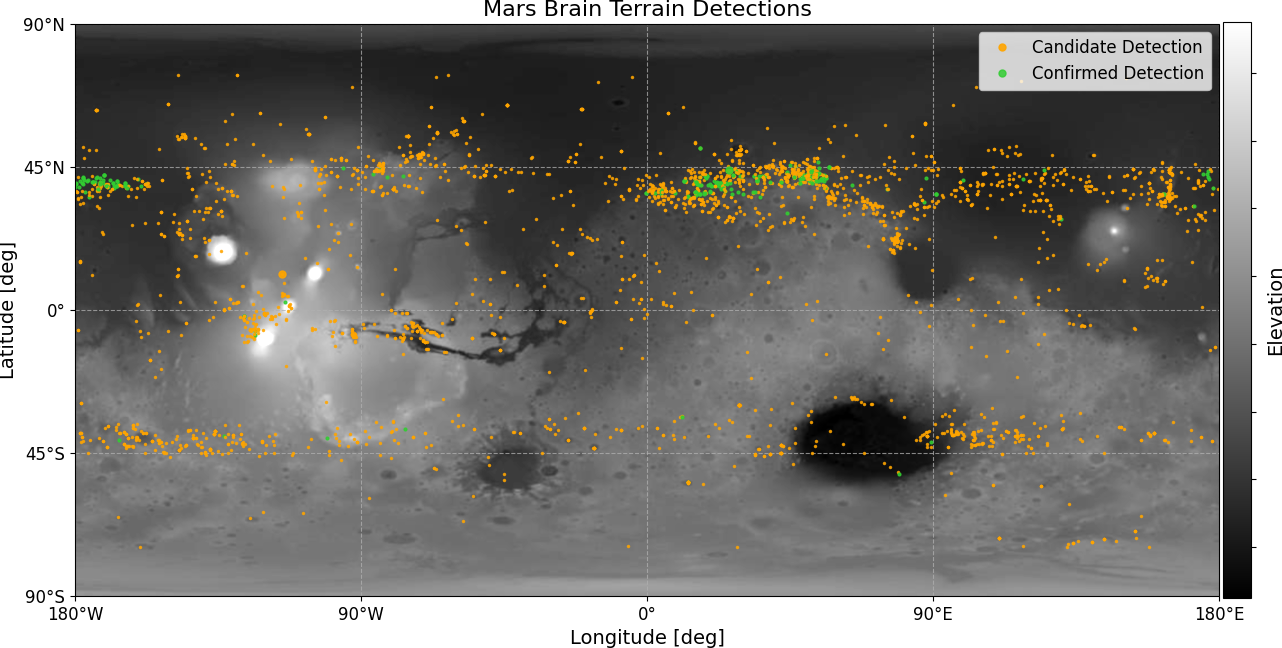

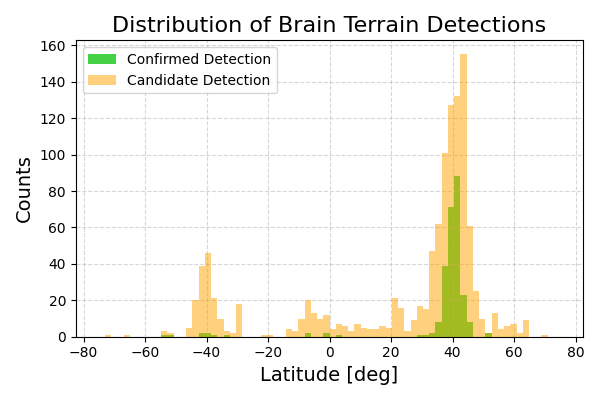

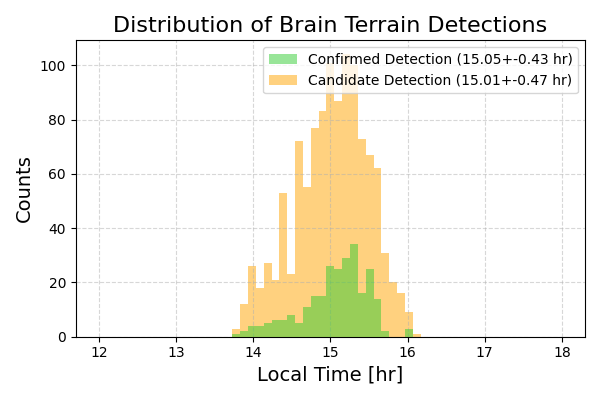

Identifying the processes responsible for the relatively young brain terrain on Mars has significant implications. The confirmed brain terrain detections are centered primarily around 40°N (see Figure 7). This preferential distribution in latitude can provide important clues about the climate conditions necessary for brain terrain to form. The majority of detections in the southern hemisphere are centered around 40°S and are classified as candidates (images flagged by both our classifier and segmentation network) because they still require manual confirmation. Because there are too many candidates for us to check by hand, we are releasing an interactive table on GitHub (https://github.com/pearsonkyle/Mars-Brain-Coral-Network) to help with labeling the roughly 1100 candidates. A future study by Noe et al. (in prep.) will explore correlations between brain terrain locations and climate models from periods when Mars had a different obliquity. This preferential distribution suggests that the formation of brain terrain may be correlated with specific climate conditions, such as those related to past changes in Mars’ obliquity. If brain terrain formed through freeze-thaw processes similar to those that produce patterned stone circles on Earth, it would imply that cryoturbation has redistributed rock near the Martian surface and that this process is largely confined to a narrow latitudinal band. Integrating multiple remote sensing datasets, including high-resolution imagery, crater counts, thermal data, and rock counts, can provide robust tests of the competing hypotheses for brain terrain formation.

5 Conclusion

We developed a hybrid pipeline using convolutional neural networks to automate the detection of rare brain terrain in a large dataset of 58,209 HiRISE images of Mars. A Fourier-domain classifier operating on compressed imagery allowed rapid screening before pixel-level segmentation mapped landform boundaries, enabling us to process the 28 TB archive in just 21 hours rather than the roughly 14 days it would otherwise have taken. We identified 201 new images containing brain terrain, along with an additional 1141 candidates that require manual vetting to be confirmed. An interactive table of the results is available on GitHub for the community to explore and build upon (https://github.com/pearsonkyle/Mars-Brain-Coral-Network). The confirmed brain terrain detections are centered primarily around 40°N, with another small group around 40°S. This preferential distribution in latitude may correlate with certain climate conditions in Mars’ history, but more work is required to quantify the correlation. If brain terrain formed by freeze-thaw cycles similar to the processes that create patterned stone circles on Earth (Kessler et al. 2001; Kääb et al. 2014), it would imply that freeze-thaw processes redistribute rocks near the Martian surface and that this process is largely limited to a narrow latitudinal band. In a future study, we will map rock distributions in HiRISE images using shadow measurements to derive rock size and abundance, similar to Huertas et al. (2006) and Golombek et al. (2012). Quantifying rock distributions will help test hypotheses related to possible cryoturbation and freeze-thaw processes in brain terrain formation. Integrating multiple remote sensing datasets, such as high-resolution imagery, crater counts, thermal data, and rock counts from shadow analysis, can provide robust tests of the competing hypotheses for brain terrain formation.
This, in turn, can reveal valuable insights into Mars’ recent climate, geology, and potential habitability, which have important implications for future landing site selection and the study of these rare Martian landforms (Gallagher et al., 2011).

6 Acknowledgements

The research described in this publication was carried out in part at the Jet Propulsion Laboratory, California Institute of Technology, under a contract with the National Aeronautics and Space Administration. This research has made use of the High Resolution Imaging Science Experiment on the Mars Reconnaissance Orbiter, under contract with the National Aeronautics and Space Administration. We acknowledge funding support from the National Aeronautics and Space Administration (NASA) Mars Data Analysis Program (MDAP) Grant Number NNH19ZDA001N.

References

- Balme et al. (2009) Balme, M., Gallagher, C., Page, D., Murray, J., & Muller, J.-P. 2009, Icarus, 200, 30, doi: 10.1016/j.icarus.2008.11.010

- Carr (2001) Carr, M. H. 2001, J. Geophys. Res., 106, 23571, doi: 10.1029/2000JE001316

- Chamain & Ding (2019) Chamain, L. D., & Ding, Z. 2019, arXiv e-prints, arXiv:1909.05638, doi: 10.48550/arXiv.1909.05638

- Cheng et al. (2021) Cheng, R.-L., He, H., Michalski, J. R., Li, Y.-L., & Li, L. 2021, Icarus, 363, 114434, doi: 10.1016/j.icarus.2021.114434

- Costard et al. (2002) Costard, F., Forget, F., Mangold, N., & Peulvast, J. P. 2002, Science, 295, 110, doi: 10.1126/science.1066698

- Dai et al. (2022) Dai, Y., Zheng, T., Xue, C., & Zhou, L. 2022, Remote Sensing, 14, doi: 10.3390/rs14246297

- Dalal & Triggs (2005) Dalal, N., & Triggs, B. 2005, in 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), Vol. 1, 886–893 vol. 1, doi: 10.1109/CVPR.2005.177

- Dunkel et al. (2022) Dunkel, E., Swope, J., Towfic, Z., et al. 2022, in IGARSS 2022 - 2022 IEEE International Geoscience and Remote Sensing Symposium, 5301–5304, doi: 10.1109/IGARSS46834.2022.9884906

- Gallagher et al. (2011) Gallagher, C., Balme, M., Conway, S., & Grindrod, P. 2011, Icarus, 211, 458, doi: 10.1016/j.icarus.2010.09.010

- Garcia-Garcia et al. (2017) Garcia-Garcia, A., Orts-Escolano, S., Oprea, S., Villena-Martinez, V., & Garcia-Rodriguez, J. 2017, arXiv e-prints, arXiv:1704.06857, doi: 10.48550/arXiv.1704.06857

- Goldthwait (1976) Goldthwait, R. P. 1976, Quaternary Research, 6, 27, doi: 10.1016/0033-5894(76)90038-7

- Golombek et al. (2012) Golombek, M., Huertas, A., Kipp, D., & Calef, F. 2012, International Journal of Mars Science and Exploration, 7, 1, doi: 10.1555/mars.2012.0001

- Gueguen et al. (2018) Gueguen, L., Sergeev, A., Kadlec, B., Liu, R., & Yosinski, J. 2018, in Advances in Neural Information Processing Systems, ed. S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, & R. Garnett, Vol. 31 (Curran Associates, Inc.). https://proceedings.neurips.cc/paper_files/paper/2018/file/7af6266cc52234b5aa339b16695f7fc4-Paper.pdf

- Hallet (2013) Hallet, B. 2013, Philosophical Transactions of the Royal Society of London Series A, 371, 20120357, doi: 10.1098/rsta.2012.0357

- Hallet & Waddington (2020) Hallet, B., & Waddington, E. 2020, Buoyancy Forces Induced by Freeze-thaw in the Active Layer: Implications for Diapirism and Soil Circulation, 251–279, doi: 10.4324/9781003028901-11

- He et al. (2015) He, K., Zhang, X., Ren, S., & Sun, J. 2015, arXiv e-prints, arXiv:1512.03385, doi: 10.48550/arXiv.1512.03385

- Hibbard et al. (2022) Hibbard, S. M., Osinski, G. R., Godin, E., Williams, N. R., & Golombek, M. P. 2022, in LPI Contributions, Vol. 2678, 53rd Lunar and Planetary Science Conference, 2551

- Howard et al. (2019) Howard, A., Sandler, M., Chu, G., et al. 2019, arXiv e-prints, arXiv:1905.02244, doi: 10.48550/arXiv.1905.02244

- Huertas et al. (2006) Huertas, A., Cheng, Y., & Madison, R. 2006, in 2006 IEEE Aerospace Conference, 14 pp. https://api.semanticscholar.org/CorpusID:20565326

- Ioffe & Szegedy (2015) Ioffe, S., & Szegedy, C. 2015, arXiv e-prints, arXiv:1502.03167, doi: 10.48550/arXiv.1502.03167

- Kääb et al. (2014) Kääb, A., Girod, L., & Berthling, I. 2014, The Cryosphere, 8, 1041, doi: 10.5194/tc-8-1041-2014

- Kessler et al. (2001) Kessler, M. A., Murray, A. B., Werner, B. T., & Hallet, B. 2001, Journal of Geophysical Research: Solid Earth, 106, 13287, doi: 10.1029/2001JB000279

- Kessler & Werner (2003) Kessler, M. A., & Werner, B. T. 2003, Science, 299, 380, doi: 10.1126/science.1077309

- Konrad & Morgenstern (1980) Konrad, J.-M., & Morgenstern, N. R. 1980, Canadian Geotechnical Journal, 17, 473, doi: 10.1139/t80-056

- Kreslavsky et al. (2008) Kreslavsky, M. A., Head, J. W., & Marchant, D. R. 2008, Planet. Space Sci., 56, 289, doi: 10.1016/j.pss.2006.02.010

- Levy et al. (2009) Levy, J. S., Head, J. W., & Marchant, D. R. 2009, Icarus, 202, 462, doi: 10.1016/j.icarus.2009.02.018

- Malin & Edgett (2001) Malin, M. C., & Edgett, K. S. 2001, J. Geophys. Res., 106, 23429, doi: 10.1029/2000JE001455

- Mangold (2003) Mangold, N. 2003, Journal of Geophysical Research (Planets), 108, 8021, doi: 10.1029/2002JE001885

- Mangold (2005) —. 2005, Icarus, 174, 336, doi: 10.1016/j.icarus.2004.07.030

- McEwen et al. (2007) McEwen, A. S., Eliason, E. M., Bergstrom, J. W., et al. 2007, Journal of Geophysical Research (Planets), 112, E05S02, doi: 10.1029/2005JE002605

- McEwen et al. (2011) McEwen, A. S., Ojha, L., Dundas, C. M., et al. 2011, Science, 333, 740, doi: 10.1126/science.1204816

- Milliken et al. (2003) Milliken, R. E., Mustard, J. F., & Goldsby, D. L. 2003, Journal of Geophysical Research (Planets), 108, 5057, doi: 10.1029/2002JE002005

- Nagle-McNaughton et al. (2020) Nagle-McNaughton, T., McClanahan, T., & Scuderi, L. 2020, Remote Sensing, 12, doi: 10.3390/rs12213607

- Noe Dobrea et al. (2007) Noe Dobrea, E. Z., Asphaug, E., Grant, J. A., Kessler, M. A., & Mellon, M. T. 2007, in LPI Contributions, Vol. 1353, Seventh International Conference on Mars, ed. LPI Editorial Board, 3358

- Palafox et al. (2017) Palafox, L. F., Hamilton, C. W., Scheidt, S. P., & Alvarez, A. M. 2017, Computers and Geosciences, 101, 48, doi: 10.1016/j.cageo.2016.12.015

- Qiu et al. (2020) Qiu, D., Rothrock, B., Islam, T., et al. 2020, Planetary and Space Science, 188, 104943, doi: 10.1016/j.pss.2020.104943

- Ronneberger et al. (2015) Ronneberger, O., Fischer, P., & Brox, T. 2015, arXiv e-prints, arXiv:1505.04597, doi: 10.48550/arXiv.1505.04597

- Russakovsky et al. (2015) Russakovsky, O., Deng, J., Su, H., et al. 2015, International Journal of Computer Vision, 115, 211, doi: 10.1007/s11263-015-0816-y

- Squyres (1978) Squyres, S. W. 1978, Icarus, 34, 600, doi: 10.1016/0019-1035(78)90048-9

- Taber (1929) Taber, S. 1929, Journal of Geology, 37, 428, doi: 10.1086/623637

- Taber (1930) —. 1930, Journal of Geology, 38, 303, doi: 10.1086/623720

- Trombotto (2000) Trombotto, D. 2000, Revista do Instituto Geológico, 21, 33, doi: 10.5935/0100-929X.20000004

- Wagstaff et al. (2018) Wagstaff, K. L., Lu, Y., Stanboli, A., et al. 2018, in Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence and Thirtieth Innovative Applications of Artificial Intelligence Conference and Eighth AAAI Symposium on Educational Advances in Artificial Intelligence, AAAI’18/IAAI’18/EAAI’18 (AAAI Press)

- Werner (1999) Werner, B. T. 1999, Science, 284, 102, doi: 10.1126/science.284.5411.102

- Williams et al. (1989) Williams, G. D., Powell, C. M., & Cooper, M. A. 1989, Geological Society of London Special Publications, 44, 3, doi: 10.1144/GSL.SP.1989.044.01.02

- Wimmer et al. (2023) Wimmer, T., Golkov, V., Dang, H. N., et al. 2023, arXiv e-prints, arXiv:2304.05864, doi: 10.48550/arXiv.2304.05864

- Xu et al. (2019) Xu, X., Wang, G., Sullivan, A., & Zhang, Z. 2019, arXiv e-prints, arXiv:1909.00114, doi: 10.48550/arXiv.1909.00114

- Xu et al. (2014) Xu, Y., Xiao, T., Zhang, J., Yang, K., & Zhang, Z. 2014, arXiv e-prints, arXiv:1411.6369, doi: 10.48550/arXiv.1411.6369