Mapping suburban bicycle lanes using street scene images and deep learning

Declaration

This thesis contains work that has not been submitted previously, in whole or in part, for any other academic award and is solely my original research, except where acknowledged.

This work has been carried out since March 2021, under the supervision of Dr. Dhirendra Singh.

Tyler Saxton

School of Computing Technologies

Royal Melbourne Institute of Technology

Acknowledgements

First and foremost, I would like to thank Dr. Dhirendra Singh for inspiring this research and supervising me throughout the year. I also greatly appreciate the input provided by Dr. Ron van Schyndel and the “Research Methods” class of Semester 1 2021, as I worked to develop a detailed research proposal.

To Dr. Sophie Bittinger, Dr. Logan Bittinger, Amy Pendergast, Laura Pritchard, thank you for your encouragement, and your assistance with the editing process.

Summary

Many policy makers around the world wish to encourage cycling, for health, environmental, and economic reasons. One significant way they can do this is by providing appropriate infrastructure, including formal on-road bicycle lanes. It is important for policy makers to have access to accurate information about the existing bicycle network, in order to plan and prioritise upgrades. Cyclists also benefit when good maps of the bicycle network are available to help them to plan their routes. This thesis presents an approach to constructing a map of all bicycle lanes within a survey area, based on computer analysis of street scene images sourced from Google Street View or “dash cam” footage.

Abstract

On-road bicycle lanes improve safety for cyclists, and encourage participation in cycling for active transport and recreation. With many local authorities responsible for portions of the infrastructure, official maps and datasets of bicycle lanes may be out-of-date and incomplete. Even “crowdsourced” databases may have significant gaps, especially outside popular metropolitan areas. This thesis presents a method to create a map of bicycle lanes in a survey area by taking sample street scene images from each road, and then applying a deep learning model that has been trained to recognise bicycle lane symbols. The list of coordinates where bicycle lane markings are detected is then correlated to geospatial data about the road network to record bicycle lane routes. The method was applied to successfully build a map for a survey area in the outer suburbs of Melbourne. It was able to identify bicycle lanes not previously recorded in the official state government dataset, OpenStreetMap, or the “biking” layer of Google Maps.

Chapter 1 Introduction

The benefits of “active transport”, such as walking and cycling, have been well documented in previous studies. Participants’ health may improve due to their increased physical activity. There are environmental benefits due to reduced emissions and pollution. And there are economic benefits, including a reduced burden on the health system, and reduced transportation costs for participants [1] [2].

Federal and state government policy makers in Australia therefore wish to encourage cycling [3] [4]. However, the share of cycling for trips to work in Melbourne is only 1.5% [5]. For many commuters, a perceived lack of safety of cycling is a major barrier to adoption. Other significant factors are the availability of shared bicycle schemes and storage facilities, and the risk of theft [6]. Cycling infrastructure has a significant impact on real and perceived cyclist safety, and this research project will focus on that issue. Important safety factors include the presence and width of a bicycle lane, the presence of on-street parking, downhill and uphill grades, and the quality of the road surface [7] [8]. A comprehensive dataset of cycling infrastructure would help policy makers prioritize areas in need of improvement to safety.

In Victoria, Australia, the state government publishes a “Principal Bicycle Network” dataset to assist with planning [9], however, during an exploration of the dataset, it was found to be significantly out of date. (See section 2.3.) Individual Local Government Areas may produce their own maps of bicycle routes, but availability is inconsistent [10].

The aim of this research project was to construct a dataset or map of bicycle lanes in a local area, by collecting street scene images at known coordinates, and then using a “deep learning” model to detect locations where bicycle lane markings are found. Detection locations were matched to an existing dataset of roads in the area, to infer bicycle lane routes along stretches of road where markings were consistently found. If a baseline map of bicycle lane routes can be built this way, then the process could be extended in future to gather information about other significant factors, such as how frequently the bicycle lane is obstructed by parked vehicles, or the presence of debris or damage to the road surface.

Google Street View has been chosen as a source of street scene image data due to its wide geographical coverage, and the accessibility of the data via a public API. However, a significant limiting factor is that the Google Street View images for any given location might be several years out of date. Therefore, the use of images collected from a “dash cam” was also explored. A local government that is responsible for building and maintaining bicycle lanes could use dash cameras to gather its own images, at regular intervals, for more up-to-date data.

1.1 Research questions

-

•

RQ1: Can a “deep learning” model be used to identify bicycle lane markings in street scene images sourced from Google Street View, in at least 80% of images where those markings appear, with a precision of at least 80%?

-

•

RQ2: Can the model then be used to correctly detect and map all bicycle lane routes across all streets in a survey area with Google Street View coverage?

-

•

RQ3: Can a similar process be applied to correctly detect and map bicycle lane routes from street scene images collected from dash camera video footage in a survey area?

-

•

RQ4: Can the approach used to map bicycle lane routes from dash camera video footage be re-used to visually survey other details about the infrastructure?

Models from the TensorFlow 2 Model Garden were first trained to detect bicycle lane markings in a custom dataset of Google Street View images. These initial models generalised well enough to detect bicycle lane markings in dash camera footage, so they were used to gather additional images from the dash camera to include in the dataset for further training and validation.

The remainder of this thesis is laid out as follows: Chapter 2 is a literature review, providing motivations for the research, and a background in relevant techniques; chapter 3 describes the approach that was taken; chapter 4 reports the results and discusses the limitations and findings; chapter 5 discusses the overall conclusions of the research. Please see Appendix A for instructions on how to access and use the code and data assets produced as part of this research project.

Chapter 2 Literature Review

2.1 Motivating literature

Prior research has clearly shown health, economic, and environmental benefits from active transport. Lee et al., 2012 [1] analysed World Health Organization survey data from 2008, and showed that physical inactivity significantly increased the relative risk of coronary heart disease, type 2 diabetes, breast cancer, colon cancer, and all-cause mortality, across dozens of countries. Rabl & de Nazelle, 2012 [2] demonstrated that active transport by walking or cycling improves those relative risks for participants. Moderate to vigorous cycling activity for 5 hours a week reduced the all-cause mortality relative risk by more than 30%. They estimated an economic gain from improved participant health and reduced pollution, offset slightly by the cost of cycling accidents.

In Australia, federal and state governments are committed to the principle of supporting active transport through the provision of cycling infrastructure, declaring their commitment through public statements on their official websites [3] [4]. Many other governments around the world have adopted similar policies.

Taylor & Thompson, 2019 [5] surveyed the use of active transport in Melbourne, to establish a baseline of current commuter behaviour. They found that cycling only accounted for 1.5% of trips to work in the area. It could therefore be argued that there is room for improvement.

Schepers et al., 2015 [11] produced a summary of literature related to cycling infrastructure and how it can encourage active transport, resulting in the aforementioned benefits. The paper found that providing cycling infrastructure that is perceived as being safer does encourage participation. Other papers such as Wilson et al., 2018 [6] agreed.

Other researchers have examined which factors affect the perceived and actual safety of cycling routes, in a variety of settings.

Klobucar & Fricker, 2007 [7] surveyed a group of cyclists in Indiana, USA, asking them to ride a particular route and rate the safety of each road segment along the route, then asking them to review video footage of other routes and rate the safety of those routes, too. A regression model was created to predict the cyclists’ likely safety ratings for other routes. The creation of the model led to a list of road segment characteristics that were apparently most influential in the area.

Tescheke et al., 2012 [8] surveyed patients who attended hospital emergency rooms in Toronto and Vancouver in Canada, due to their involvement in a cycling accident. Details of the circumstances of each accident were gathered, along with the outcomes. The dataset was analysed to determine which factors increased (or decreased) the relative odds of a cyclist being involved in an accident.

Malik et al., 2021 [12] modelled cyclist safety in Tyne and Wear County in north-east England, a more rural setting.

The factors that contribute to cyclist safety vary by locality. For example, cyclists in one city might be concerned by the hazard of tram tracks, whereas this might not be a relevant concern in another city, or a less built-up area. The common themes among the aforementioned papers were: the presence and width of a bicycle lane; the presence of on-street parking; downhill or uphill slopes; the volume, speed and profile of motor vehicle traffic; the quality of the road surface; lighting; and the presence of construction work.

Most of these factors can be influenced by infrastructure and road design. Therefore, it would be valuable to quantify as many of these factors as possible in a dataset, to assist policy makers in deciding what changes to make, and where, to provide a safer network of cycling infrastructure.

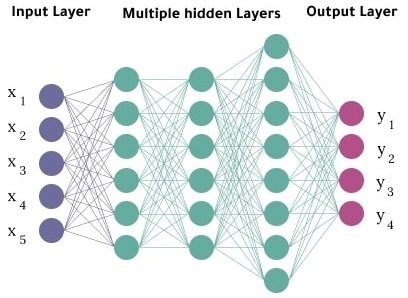

2.2 Relevant literature in machine learning

“Deep Learning” is a paradigm by which computational models can be constructed to tackle many problems, including visual object recognition. A Deep Learning model can be trained to perform visual object recognition tasks by supplying it with a “training” dataset of images where the objects it must recognise have been pre-labelled. During training, the model processes its training dataset over and over again, and with each iteration the weights in its multi-layer neural network are refined through the use of a “backpropagation” algorithm [13]. With a sufficient number of training “epochs”, the model hopefully develops the ability to detect if and where the objects of interest appear in each image, to an acceptable level of performance. See figure 2.1.

Tensorflow [15] [16] and PyTorch [17] are two dominant frameworks for building, training, evaluating, and applying Deep Learning models. Within these frameworks, researchers have progressively developed new models for visual object recognition, typically with a focus on either improving the accuracy of the results, increasing speed to allow “real time” processing of video streams, or creating models that will work on low-cost hardware. Significant Deep Learning models considered within this research project include: R-CNN, proposed by Ren et al., 2015 [18]; SSD, proposed by Liu et al., 2016 [19]; ResNet, proposed by He et al., 2016 [20]; YOLO and subsequent variants, first proposed by Redmon et al., 2016 [21]; MobileNetV2, proposed by Sandler et al., 2018 [22]; a nd CenterNet, proposed by Duan et al., 2019 [23].

The Tensorflow 2 Model Garden [24] provides access to many of these models, pre-trained on the COCO 17 (“Microsoft COCO: Common Objects in Context”) dataset. It therefore provides a convenient library of Deep Learning models that have already received a significant amount of training for the general problem of visual object recognition, and can be re-used to recognise new classes of objects through a process of “transfer learning” [25] [26].

Semantic image segmentation of street scene images is an important and active area of computer science research. “Deep Learning” models that can understand sequences of images in real time are essential for self-driving vehicles or similar driver-assistance systems, so many papers have focussed on that area. In order to train a Deep Learning model that specialises in understanding street scene images, a labelled dataset of street scene images is required. Papers by Cordts et al., 2016 [27] and Zhou et al., 2019 [28] announced the publication of the “Cityscapes” and “ade20k” image datasets, respectively. These datasets each contain many street scene images, with objects of interest labelled in a format suitable for training deep learning models to understand similar on-road scenarios. Many papers have used these datasets in order to train new machine learning models. Chen et al., 2018 [29] is one example of a heavily-cited paper where “CityScapes” data has been used to train a “real time” model to understand street scene images. Unfortunately, neither of the datasets has cycling infrastructure labelled. The “CityScapes” dataset has labelled bicycle lanes under the “sidewalks” category. This is useful to train a car not to drive there, but a cyclist might not be legally allowed to ride on a sidewalk designed for pedestrian traffic.

In another branch of research, deep learning tools have been used to manage roadside infrastructure and assets. Campbell et al., 2019 [30] used an “SSD MobileNet” model to detect “Stop” and “Give Way” signs on the side of the road in Google Street View images, to help build a database of road sign assets. An application called “RectLabel” was used to label 500 sample images for each type of sign. Photogrammetry was used to estimate a location for each detected sign, based on the Google Street View camera’s position and optical characteristics and the bounding box of the detected sign within the image. Zhang et al., 2018 [31] performed a similar exercise, detecting road-side utility poles using a “ResNet” model.

In Australia, the “Supplement to Australian Standard AS 1742.9:2000” sets out the official standards for how bicycle lanes must be constructed and marked. Generally, a lane marking depicting a bicycle should be painted on the road inside the bicycle lane within 15 metres before and after each intersection, and at intervals of up to 200 metres[32]. Therefore, it is proposed that a Deep Learning model could be trained to detect these markings, similar to how Campbell et al., 2019 [30] trained a model to detect road signs, and Zhang et al., 2018 [31] trained a model to detect utility poles, using pre-trained models from the TensorFlow 2 Model Garden [24] as a starting point.

The use of satellite imagery was also considered. Li et al., 2016 [33] showed that a road network could be extracted from satellite imagery using a convolutional neural network (CNN), and this is particularly useful in rural areas where maps are not already available. However the resolution of publicly available satellite image data would not be sufficient to identify a bicycle lane on a road, and it would not be able to distinguish a standard bicycle lane from a paved shoulder. Satellite imagery may have a role to play in detecting off-street bicycle tracks in “green” parkland area, but it was decided to exclude off-street routes from the scope of this research, and focus on on-road infrastructure.

Aerial photography may be useful, where it is available with sufficient detail. However it would require a different data source for every jurisdiction. Ning et al., 2021 [34] had success extracting sidewalks from local aerial photography using a “YOLACT” model. Areas of uncertainty caused by tree cover were filled in using Google Street View images. The model appeared to rely on a concrete sidewalk having a very different colour to the adjacent bitumen road. The approach might not be able to distinguish bitumen bicycle lanes.

Aside from formal bicycle lanes, cyclist safety can also be improved by a paved shoulder, or by creating lanes that are wide enough to safely accommodate a vehicle passing a cyclist. It may be possible to detect and map these arrangements by recognising lane markings and road boundaries. A common method of detecting lane boundaries is through the combination of a Canny edge detector [35] and a Hough transformation [36]. The OpenCV library provides a frequently used implementation [37]. If the Canny-Hough approach struggles with a poorly defined road boundary or “noise” from roadside objects, a Deep Learning approach may help: Mamidala et al., 2019 [38] successfully used a “CNN” model to detect the outer boundary of roads in Google Street View images.

During the literature review, one other paper was identified where the authors had applied Deep Learning techniques in the domain of cyclist safety: Rita, 2020 [39] used the “MS Coco” and “CityScapes” datasets to train “YOLOv5” and “PSPNet101” models to identify various classes of object (Bicycle, Car, Truck, Fire Hydrant, etc.) in Google Street View images of London. A matrix of correlations between objects was calculated. This was used to infer the circumstances where cyclists might feel the most safe. For example, there was a high correlation between “Person” and “Bicycle” which “suggests pedestrians and cyclists feel safe occupying the same space”. This is research did not involve creating maps.

2.3 Cycling infrastructure datasets and standards

The Victorian State Government in Australia started publishing an official “Principal Bicycle Network” dataset on data.gov.au in 2020 [9]. It is an official dataset that is intended to help policy makers with their planning. It includes “existing” and “planned” bicycle routes. The dataset only covers formal bicycle lanes, so it generally excludes roads with a simple paved shoulder. In some country areas, the routes did not appear to be formally marked as standard bicycle lanes. It was found that some existing bicycle lanes are still marked as “planned” in the dataset, or not listed at all. There is a field to record when each entry was last validated, but the most recent timestamp was in 2014. The data appears to be incomplete and out of date. Its scope is limited to the State of Victoria.

“OpenStreetMap” [40] is a source of crowdsourced map data. It provides detailed information about road networks worldwide, and the attributes of each road segment. “Cycleway” tags can be used to mark not just where a bicycle lane is, but other interesting attributes, such as whether the cycleway is shared with public transport, whether there is a specially marked area for cyclists to stop at each intersection, and how wide the bicycle lane is. Given that the data is crowdsourced, the quality and availability of the data may vary by location.

“Google Maps” provides a “biking layer” [41]. This includes off-street bicycle paths and on-street bicycle lanes. It only gives a “yes” or “no” opinion about whether a route is especially suited to bicycles, with no further information provided. But that may be of assistance in scouting locations to use in a dataset of Google Street View images.

Chapter 3 Methods

This section describes the methodology that was used to address each of the research questions. To assist with reproducibility, all code has been published on GitHub and FigShare, and the dash camera footage and data has been published on FigShare. For detailed instructions on how to access the code and data, and how to run the code via the provided Jupyter Notebooks, please refer to appendix A.

3.1 RQ1: Training a model to identify bicycle lanes in Google Street View images

A review of the relevant Australian standards for bicycle lanes [32] suggested that the best way to search for bicycle lanes in street scene images would be to focus on the bicycle lane markings that are painted on the road surface. See figure 3.1 for an example marking.

This marking is universal across all standard bicycle lanes in Australia, and can distinguish a bicycle lane from other parts of the road. It may sometimes appear on a green surface, and occasionally it may be partially occluded due to limited space or wear and tear. Similar markings exist in many other jurisdictions outside Australia.

In order to train a deep learning model to recognise bicycle lane markings in street scene images, it was necessary to first create a dataset of labelled images for training and validation. This could then be used to apply a variety of pre-trained models from the “TensorFlow 2 Model Garden” to the problem, through a process of transfer learning.

3.1.1 Creating a labelled dataset of Google Street View images

The official Australian design standards for bicycle lanes specify that bicycle lane markings should appear within 15m of each intersection [32]. Therefore, intersections along known bicycle lane routes are locations where example images are likely to be found.

3.1.1.1 Identifying candidate intersections to sample

Two possible sources of information about “known” bicycle lane routes were considered for use in the sampling process: The “Principal Bicycle Dataset”, and XML extracts from the OpenStreetMap database. These datasets are discussed in section 2.3, with further details of the structure of the OpenStreetMap data provided in Appendix B. The “Principal Bicycle Network” dataset was chosen, as the incumbent official dataset for local policy makers. Due to the age of the data, it was also less likely to suggest bicycle routes not yet captured in the Google Street View images. Using OpenStreetMap as a source of “known” bicycle lane routes would generalise to other jurisdictions, therefore tools were created to support both approaches, and their operation is described in appendix A.1.1 to A.1.3. Given a list of “known” bicycle lane routes from either of these sources, each route can be looked up in an OpenStreetMap database to identify its intersections.

OpenStreetMap data can be downloaded for an individual country via the “GeoFabrik” website [42] and then sliced into a smaller area, if necessary, using the “osmium” command line tool [43]. For more background on concepts in the OpenStreetMap data, please refer to Appendix B. Briefly, an OpenStreetMap XML extract file contains “ways”, which represent lines on a map, such as road segments, and “nodes”, which represent points with latitude/longitude coordinates that make up the “ways” and thereby describe the shape of each line on a map. A list of candidate intersections along “known” bicycle lane routes can be extracted from OpenStreetMap XML data by looking for “nodes” that are common to two or more “ways” that are roads and have distinct names.

Generating a list of intersections from the “Principal Bicycle Network” dataset involves additional work, because it only lists bicycle lane routes, and does not have any information about intersecting roads. Each point in the dataset is “reverse-geocoded” to find the name of the road using an API call to a local instance of a “Nominatim” server, as described in appendix C.2. An OpenStreetMap XML extract for Victoria is then searched for all “ways” with a matching name, to find the corresponding road segments in the OpenStreetMap data. The bounding box around each “way” is compared to the bounding box of the “Principal Bicycle Network” route, to ensure they roughly overlap, and remove matches for other roads with the same name, in a completely different area. Once matching “ways” have been found for the route, they can be checked for intersections as per the OpenStreetMap process.

For every intersection identified, it is useful to calculate a “heading” for the road at the intersection, to help gather Google Street View images that are aligned to the direction of the road. The Python “geographiclib” library can calculate a heading from one point to the next. It is assumed that the heading at each intersection is the average of the heading from the previous “node” and the heading to the next “node”.

3.1.1.2 Downloading sample Google Street View images for the dataset

Google provides an API for downloading Google Street View images of up to 640x640 pixels, at a cost of $7.00 USD per 1,000 images. Volume discounts are available, with a reduced cost of $5.60 USD per 1,000 image for volumes from 100,001 to 500,000 images. Beyond that, further volume discounts can be negotiated with Google’s sales team [44].

Google Street View images were cached locally, to avoid unnecessary costs when requesting an image that has previously been downloaded.

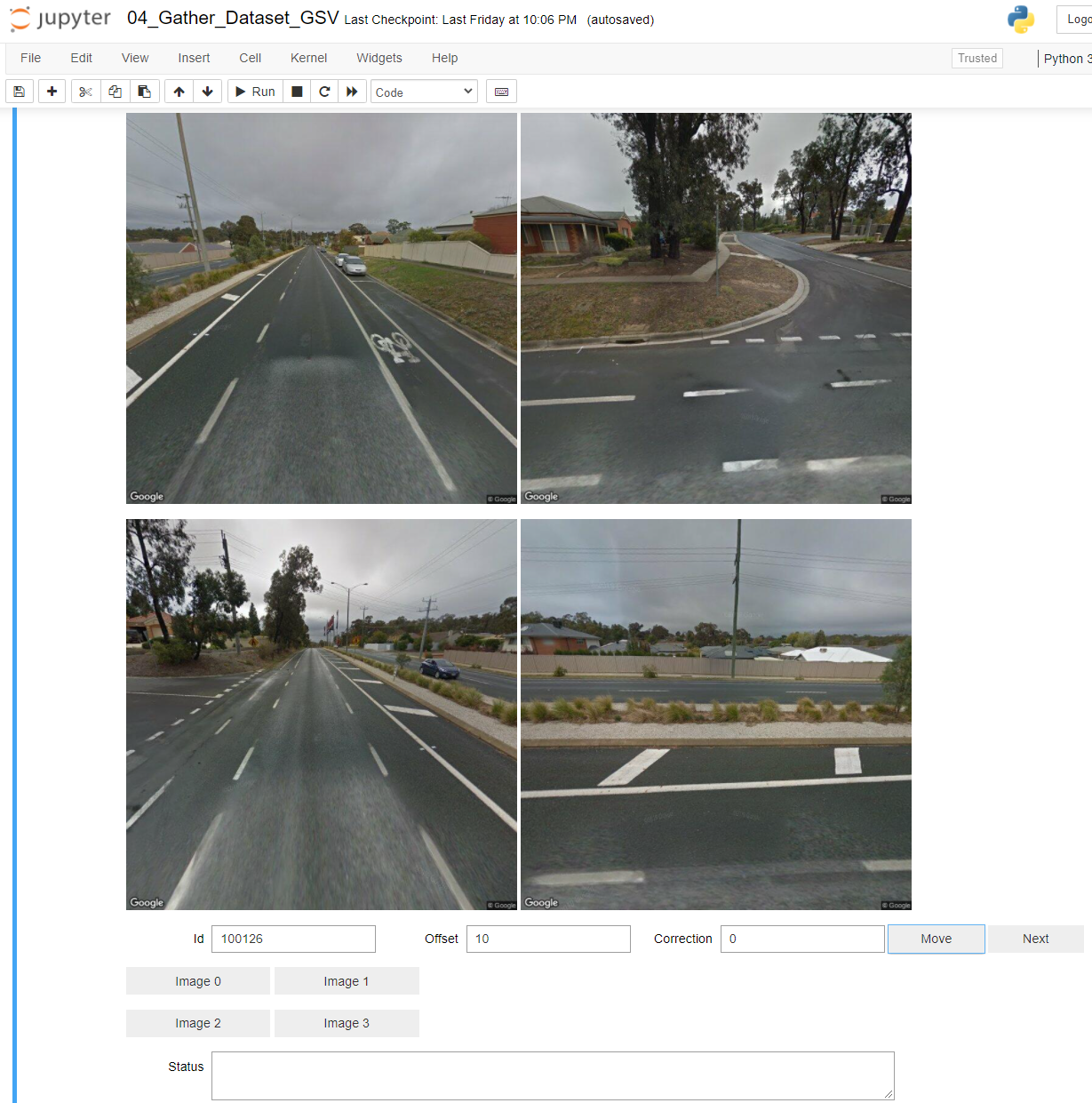

The Google Street View API allows images to be requested for a location (latitude/longitude) with a desired heading, field-of-view angle, and camera angle relative to the “horizon”. A Jupyter Notebook with an “ipywidgets” GUI was created to: Randomly select a sample location from the list of candidate intersections in Victoria; download four Google Street View images at 0, 90, 180, and 270 degrees relative to the heading of the road, with a 90 degree field of view, and the camera angled downwards 20 degrees towards the ground to focus on any nearby road markings; allow the operator to press buttons to record which images should be included in the dataset due to the presence of an interesting feature. Please see appendix A.1.4 for screenshots and detailed instructions on how to use the tool.

Bicycle lane markings are not always visible from the middle of an intersection, therefore, options were provided to allow the operator to move the camera up and down the road, 10 metres at a time, to get a better view of a marking that is just visible in the distance.

If the operator could not clearly tell that a white marking in an image was a bicycle lane marking, then the image was not included in the dataset.

The GUI allowed multiple candidate intersections to be assessed per minute. An initial set of 256 images was collected over the course of approximately 4 hours, based on a random sample of points across the State of Victoria.

3.1.1.3 Labelling the dataset

Once a suitable number of Google Street View images containing bicycle lane markings had been collected, they were copied to a single folder and then labelled using the open-source tool “labelImg” [45]. Please refer to appendix A.1.5 for more information on how to perform the labelling process. The “labelImg” tool allows the operator to examine each image in a folder, draw bounding boxes around objects of interest in each image, and assign a label to each bounding box to categorise its contents. The output of the process was one XML file per image, in a format that could be understood by TensorFlow 2 training tools and scripts.

3.1.2 Training and evaluating candidate models from the TensorFlow 2 Model Garden

Training and evaluation of candidate models was conducted on local infrastructure, as described in Appendix C.1. TensorFlow was configured for GPU-accelerated development, using CUDA and cuDNN libraries from NVIDIA.

The dataset that was collected in section 3.1.1.2 and labelled in section 3.1.1.3 was randomly split into “training” and “test” datasets according to an 80:20 ratio. There were 205 images in the “training” dataset, and 51 images in the “test” dataset.

The Jupyter Notebook described in appendix A.1.6 was used to download a candidate pre-trained model from the TensorFlow 2 Object Garden and configure it to search for bicycle lane markings based on the collected dataset. The Jupyter Notebook printed out commands that could be used from the command line, to train the model for a specified number of training steps against the “training” dataset, evaluate its performance against the “test” dataset, and create a “frozen” copy of the model as-at the current level of training.

Three significant factors were found to influence the performance of the model: The size of the dataset available for “training” and “test” purposes; the number of “epochs” or “training steps” spent training the model on the data; and the “confidence score ” threshold, from 0% to 100%, at which the model declares that a bicycle lane marking has been detected.

The initial size of the dataset was set based on previous experience in other papers such as Campbell et al., 2019 [30], where models had been successfully trained with around 500 images. An initial dataset of 256 images was gathered, and further images were added later in response to model performance.

During the training process, the TensorFlow 2 training script reported “loss” performance metrics every 100 training steps. This performance metric typically gradually improved as more training was conducted over the dataset, until a point of diminishing returns was reached. Every 2000 training steps, the training was paused, and an evaluation script was run to test the performance of the model against the independent “test” dataset that had been withheld from the training process. When training reached the point that further training steps did not significantly improve performance, training was halted.

The “confidence” threshold was adjusted manually, by reviewing the false positives and false negatives produced by the model, and adjusting it to provide a balance between over-confidence and conservatism. As part of the evaluation process, copies of each image were written to disk with an overlay showing any detected bounding box and its confidence score. These outputs were reviewed as part of the threshold tuning process. The boundary boxes of detections were also checked to ensure that the results were sensible.

Multiple pre-trained models from the TensorFlow 2 Model Garden were trialled, with selections guided by their benchmark “mAP” (mean Average Precision) metrics for processing of the “COCO 17” dataset. The process of building a map of bicycle lanes for an area can be considered a “batch” process, with no fixed time constraints, therefore mean Average Precision was prioritized over benchmark “speed”.

The TensorFlow 2 Model Garden provided an efficient way to test multiple models via a single framework. There are popular object detection models, such as the “YOLO” series of models, that are not supported by it. For the purposes of this research, it was not considered necessary to perform an exhaustive search of all possible models across multiple frameworks. A different model (e.g. YOLOv3) or a different framework (e.g. PyTorch) could be substituted if required.

Please refer to Appendix A.1.6 for instructions on how to run the training process.

3.2 RQ2: Building a map of bicycle lane routes from Google Street View images in a survey area

In section 3.1, a model was trained to detect bicycle lane markings in one street view image at a time. To generate a map or dataset of bicycle lanes in an area, the first step is to determine a list of locations for which Google Street View images should be downloaded and processed by the model. A batch process can then used to process each image and record whether or not a bicycle lane marking was found. Finally, the list of sample locations where there was a “hit” must be correlated to geospatial data about the road network to infer routes, draw them on a map, and compare them to other sources.

3.2.1 Sampling strategy for Google Street View images

In section 3.1.1.2, it was noted that Google charges up to $7.00 USD per 1,000 Google Street View images, and that the most likely place to find a bicycle lane marker is in the area immediately surrounding an intersection. To reduce costs, a strategy of sampling only intersections and their immediate vicinity was adopted. Samples could also be taken at regular intervals along each road, if initial results suggested that this was necessary to compensate for missed detections.

The Jupyter Notebook described in appendix A.1.8 was used to load an OpenStreetMap XML extract file for the survey area into memory, and then traverse it to produce a batch file with the coordinates of every point within 20 metres of an intersection, at intervals of 10 metres. The length of the batch file was inspected, to assess potential costs, and then the required images were downloaded via the Google Street View API, if they were not found in the local cache.

It was discovered that some intersections would be missing on roads at the very margins of the survey area, if the intersecting road existed entirely outside the bounds of the OpenStreetMap XML extract for the survey area. A second extract, capturing bounding box with an extra margin of 200 metres around the survey area, was used to rectify the missing intersection issue. The 200 metres margin was found to be sufficient to ensure that the adjoining road was included in the extract by the osmium tool. Roads in the second extract were not considered part of the survey, but data about them was taken into account to ensure that intersections at the margin of the survey area were not missed.

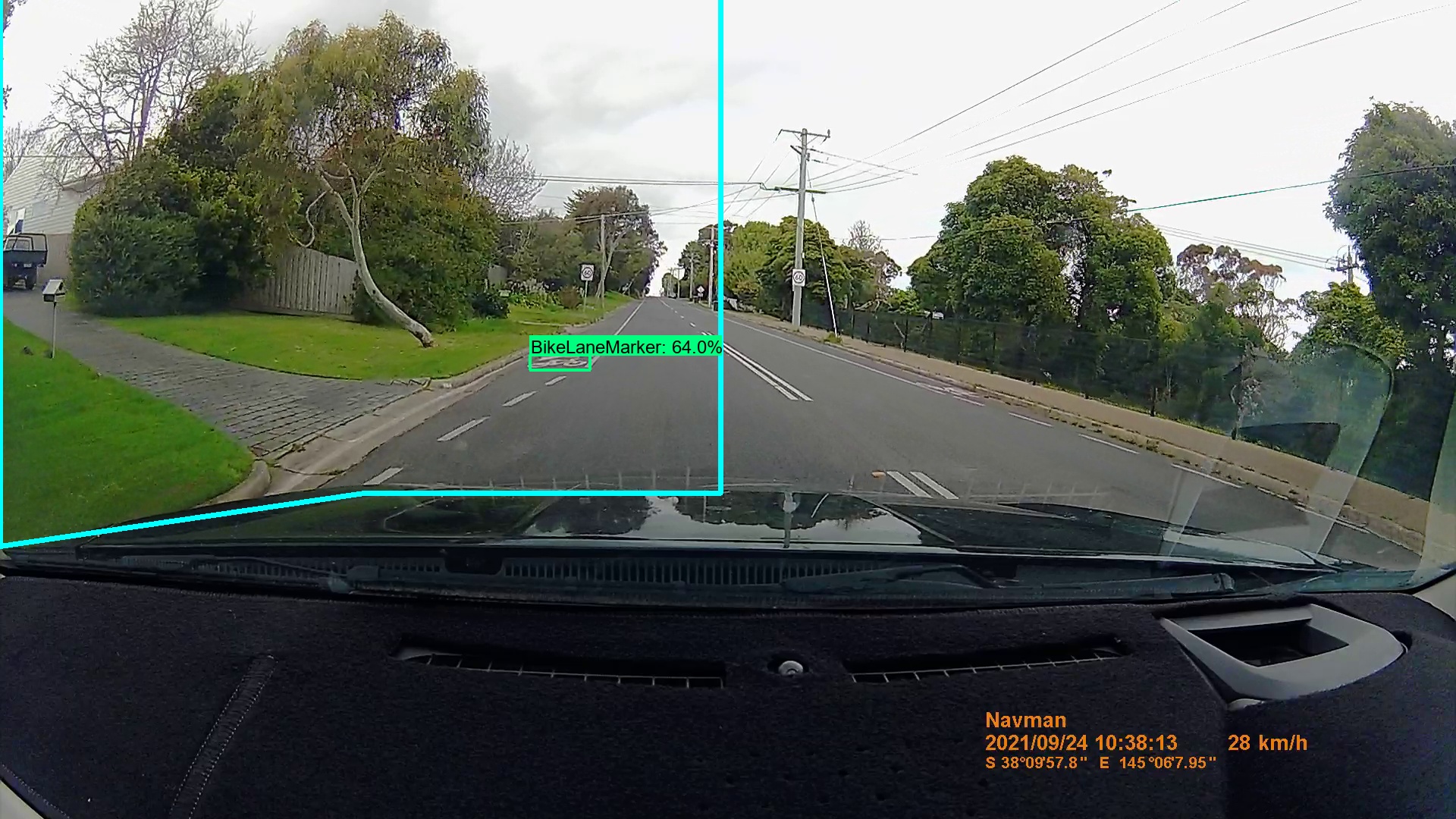

3.2.2 Applying a detection model to a batch of Google Street View images

The Jupyter Notebook described in appendix A.1.10 was also used to apply the detection model to the sampled Google Street View images, and produce an output CSV with the coordinates of every point where a bicycle lane marking was detected. If one or more bicycle lane markings were detected in an image, a copy of the image was saved to a folder of “hits” with a bounding box and confidence score overlay. An example image with detection overlay is shown in figure 3.2. If no bicycle lane marking was found, a copy of the image was instead placed into a “miss” folder. Partitioning the result images into “hits” and “misses” allows the operator to quickly browse the images to check for any false positives or false negatives.

3.2.3 Inferring bicycle lane routes from Google Street View detection points

The result of the batch process in section 3.2.2 is a list of locations where a bicycle lane marking was found, linked to the OpenStreetMap “way” IDs and “node” IDs that they were sampled from. The next step is to use those detection points to infer contiguous routes that can be drawn as lines on a map.

Four Google Street View images were sampled at each sample point in the survey, providing a 360 degree view. An approximate heading was calculated for each road at each intersection, but occasionally that did not appear to match the retrieved images. Therefore, if a bicycle lane marking was detected at an intersection, it could be difficult to accurately decide which road it belonged to.

The proposed solution was to infer routes from the detection points based on the assumption that if markings were detected at two or more consecutive intersections along a named road, there is a bicycle lane along that segment. A configuration option was provided to allow for one or more intersections with “missing” detections before assuming that the bicycle lane route has been interrupted.

If a street shared an intersection with a road that had a bicycle lane, it would not usually be reported as having a bicycle lane unless it had another intersection with a bicycle lane at the next intersection.

A continuous road may be represented in OpenStreetMap as multiple “way” segments if any of its characteristics change from one segment to the next, such as a change of speed limit. Before inferring bicycle lane routes along a road from the detection points, it was necessary to link adjacent “way” segments from a single named road back up into a single chain. Otherwise, the option to allow for intersections with “missing” detections would not always work as intended.

Each inferred route was drawn as a line in a geojson file, which can be drawn on a map or measured as per section 3.2.4 below.

This approach was intended to draw a map of bicycle lane routes irrespective of which side of the road the bicycle lane is on. If additional information is required about roads where bicycle lanes are only present on one side of the road, the process would need to be refined to take into account the heading of the road, the heading of the camera angle, and exactly where in the frame the bicycle lane marking was detected.

3.2.4 Comparing results to other data sources

Once a geojson file has been produced to describe the detected bicycle lane routes, it can be drawn on a map in a Jupyter Notebook using the Python “ipyleaflet” library. See appendix A.1.11.

The OpenStreetMap XML extract for the survey area can be filtered down to “ways” that are roads with the “cycleway” tag, and then converted into an equivalent geojson file. The detected routes and the routes that are tagged in OpenStreetMap can be viewed side-by-side on a map.

To allow more detailed comparisons, the geojson files were drawn simulaneously, in a single process. For each road where a bicycle lane marking was detected, or tagged in OpenStreetMap, a process “walks” down “nodes” in order. If the nodes are part of a “way” that has been tagged as a “cycleway”, their coordinates are added to a line in the “OpenStreetMap” geojson. If a detection point is encountered at two intersections in a row, then the intersection nodes and the nodes in between them are added to a line in the “detected routes” geojson. At the same time, additional geojson files are drawn to show the route sections where both sources agree that there is a bicycle lane, and route sections where one source registers a bicycle lane and the other does not.

The total length of all routes in a geojson file can be calculated by using the Python “geographiclib” library to measure the distance between each pair of points along the route. Therefore, the geojson files can not only be used to compare routes on a map, but to measure the similarities and differences in metres.

The “Principal Bicycle Network” dataset is available in a geojson format. This allows a quick visual comparison with OpenStreetMap and the detected routes. It is possible to align the “Principal Bicycle Network” dataset to the OpenStreetMap data to perform a detailed comparison with measurements, however this was not attempted. The “Principal Bicycle Network” dataset had significant gaps in the survey areas, so significant that quantifying the differences in metres would not have been very meaningful. Please see the results section 4.2.

3.3 RQ3: Building a map of bicycle lane routes from dash camera footage captured in a survey area

The use of Google Street View images for detection of bicycle lanes has both advantages and disadvantages to consider. Google Street View image data is readily available for a wide area across many jurisdictions internationally, and it can be collected without a physical presence on location. However, usage comes at a cost, which may be a significant factor if mapping is required across a large area. The image resolution is limited to 640x640 per image, though perhaps this can be worked around by requesting many more images per location, each with a tighter field of view, at additional cost. It appears that distinct images are only available every 10 metres. The images may be several years out of date. The position of the camera cannot be controlled, and it might miss service roads or the other side of a divided road. With only one image available for each location, a bicycle lane marking might be obscured due to the presence of other traffic.

A local authority might prefer to gather their own footage, to address these shortcomings. For the third research question, we explore whether bicycle lanes can be detected using dash camera footage instead, perhaps captured from vehicles that must regularly traverse the roads in the area to provide other services, such as waste disposal or deliveries.

3.3.1 Choice of dash camera hardware

For these experiments, the “Navman MiVue 1100 Sensor XL DC Dual Dash Cam” was self-installed inside a sedan, at a cost of $529 AUD. This model was selected because it provided location metadata in NMEA files accompanying the MP4 video recordings. For each minute of footage recorded, the device produced an MP4 file and a corresponding NMEA file. The NMEA file provided the latitude, longitude, heading, and altitude at one second intervals.

Video footage was recorded at 60 frames per second, at 1920x1080 resolution. Only images from the front-facing camera were used.

The camera features a “wide angle” lens to capture a good view of the road and its general surroundings, however optical specifications such as focal length were not available from the manufacturer’s website.

3.3.2 Gathering dash camera footage

On the 3rd of October, 2021, Melbourne set the record for the longest “COVID lockdown” in the world [46]. Severe restrictions were placed on residents being more than 5km from home, gradually easing to 10km and then 15km for a period of many months during the course of the research[47]. Permitted reasons to leave home were restricted. Therefore it was only possible to experiment with dash camera footage from a confined area.

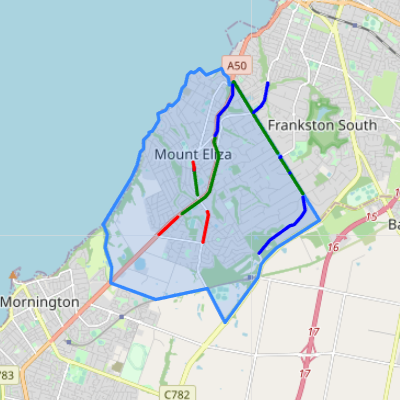

Approximately 100 minutes of footage was gathered by driving within a local area around the outer metropolitan suburbs of Mount Eliza, Frankston, Langwarrin and Baxter in Melbourne, Victoria, Australia. Not every road in the area was covered. Roads were selected for inclusion in the footage to include all known bicycle lanes in the area, based on local knowledge and a review of the OpenStreetMap data. A variety of other roads were included, to cover roads with and without a paved shoulder, major divided roads and small local roads, and a mix of residential and commercial areas.

The dash camera video footage and corresponding NMEA files were transferred to a computer and processed using the Jupyter Notebook described in appendix A.1.12.

For each pair of files, the NMEA file was loaded into memory, then the video file was split into a series of images. The 60 frame per second footage was reduced to 5 frames per second by only sampling every 12th image. Sampling images at a higher frequency than this did not appear to be necessary, due to the high similarity between adjacent frames. Each extracted frame was assigned a location by extrapolating the readings from the two nearest measurements in the NMEA file. An entry was then added to an output “batch” CSV file with the path to the image file, and its location metadata.

3.3.3 Training the bicycle lane detection model

The dash camera footage was initially processed using the original model that was trained exclusively on Google Street View images in section 3.1, without any retraining based on dash camera images. The original model was able to detect every bicycle lane marking in the test footage, however further training and refinement was required to address some performance issues:

-

•

Although the Google Street View-trained model was able to detect every marking during initial trials, it typically only did so when the marking was very close to the camera vehicle. Further training was apparently needed on footage from the dash camera in order to allow it to detect markings that were further away, but still close enough to be clearly visible.

-

•

There were also issues with false positives that had not been apparent when using the Google Street View model on Google Street View images. The model would sometimes yield false positives in the following situations:

-

–

Other white markings on the road, such as turning arrows, diagonal stripes to represent a traffic island, “keep clear” signs, or dashed lines advising traffic to “give way” at an intersection

-

–

Pale road defects or other anomalies such as manhole covers

-

–

Reflections from the bonnet of the camera vehicle

-

–

Clouds or random objects well outside the boundary of the road

-

–

See figure 3.4 for examples.

A decision was made that the detection model needed to be trained with additional data from the dash camera footage. The footage was divided up into a “training area” consisting of footage captured in the Frankston, Langwarrin, and Baxter area, and “test area” footage from Mount Eliza.

The Jupyter Notebook documented in appendix A.1.16 was used to process the dash camera footage from the “training area” with the initial detection model that had been trained exclusively on Google Street View images. Every image that yielded a detection – whether a true positive or a false positive – was then labelled and allocated to the “training dataset” and “test dataset” according to an 80:20 ratio. A total of 342 images were added to the datasets, supplementing the existing Google Street View images.

The original Google Street View training images were labelled with a single class “BikeLaneMarker”. The new training images were labelled with the following additional classes, to encourage the detection model to think of many of the sources of false positives as something other than a bicycle lane marking: “ArrowMarker”, “IslandMarker”, “RoadWriting”, “GiveWayMarker”, and “RoadDefect”.

The Google Street View images that had already been included in the dataset were not re-labelled to consider the additional classes, though this was considered as an option if required to improve performance.

To reduce false positives due to reflections from the bonnet of the camera vehicle, or clouds and other random objects well away from the road, a detection mask was defined so that bicycle lane markings would only count as detections if they fell into an area on the left hand side of the road, in front of the bonnet of the car. See figure 3.5 for a visualization of this mask in an example image.

The new images for the enhanced “training” and “test” datasets were gathered and labelled via the Jupyter Notebook documented in appendix A.1.13 and the “labelImg” tool. A new model was then trained and evaluated via the Jupyter Notebook documented in appendix A.1.14, and its predictions for the “training” and “test” datasets were analysed in detail using the Jupyter Notebook documented in appendix A.1.15. Finally, the selected model was applied to the dash camera footage from the “test” area of Mount Eliza via the Jupyter Notebook documented in appendix A.1.16.

3.3.4 Mapping dash camera detections and comparing to other sources

Section 3.2.4 described the process that was used to produce geojson files to compare routes that were detected from Google Street View images to the routes that are recorded in OpenStreetMap. When sampling images from Google Street View, each sample location can be traced back to a “way” and a “node” in OpenStreetMap, where the latitude and longitude details came from. Therefore any detections are already precisely aligned to points in the OpenStreetMap extract.

Images that were collected from dash camera footage take their coordinates from the dash camera’s GPS receiver, and they are sampled from locations anywhere along the road. To infer routes from bicycle lane markings detected in dash camera images, each detection location is mapped to the nearest intersection “node” in the OpenStreetMap data. Once this has been done, the rest of the process is the same as for detections from Google Street View images.

To find the nearest intersection node to a point, the Python “shapely” library is first used to find the nearest “way” to the point. The “shapely” library appears to use bounding boxes to accelerate this process: It was significantly faster than a brute-force search of all nodes in the OpenStreetMap data. Once the closest “way” is found, it is traversed to find the nearest “node” that is an intersection. At the same time, the process records the nearest “node” of any type, to help with traceability in case the detection occurred a significant distance away from the nearest intersection.

Wherever OpenStreetMap represented a highway or other divided road as multiple parallel ways, the above process was able to distinguish the side of the road that was closest to the camera position. It is important to gather footage from both sides of a divided road when measuring the differences between the generated map and OpenStreetMap. Otherwise, OpenStreetMap might count a bicycle lane on two “ways” for the divided road, where the generated map only counts one.

3.4 RQ4: Surveying other infrastructure details using dash camera footage

Further work was conducted to demonstrate how the approach of sampling images, processing them with a model, and correlating the results back to a map, might be re-used to survey other details of interest. A process was created to map any apparent “paved shoulders” on the side of the road in the survey area. Previous studies such as Klobucar & Fricker, 2007 [7] have observed that a paved shoulder can improve cyclist safety, even if it is not formally marked as a bicycle lane.

For a discussion of other areas of potential for future work, please refer to section 4.6.

To identify a paved shoulder, it is necessary to detect lane markings and the edge of the road on the side of the road that vehicles drive on. For the dash camera footage collected in this research project, this is the left-hand side of the road.

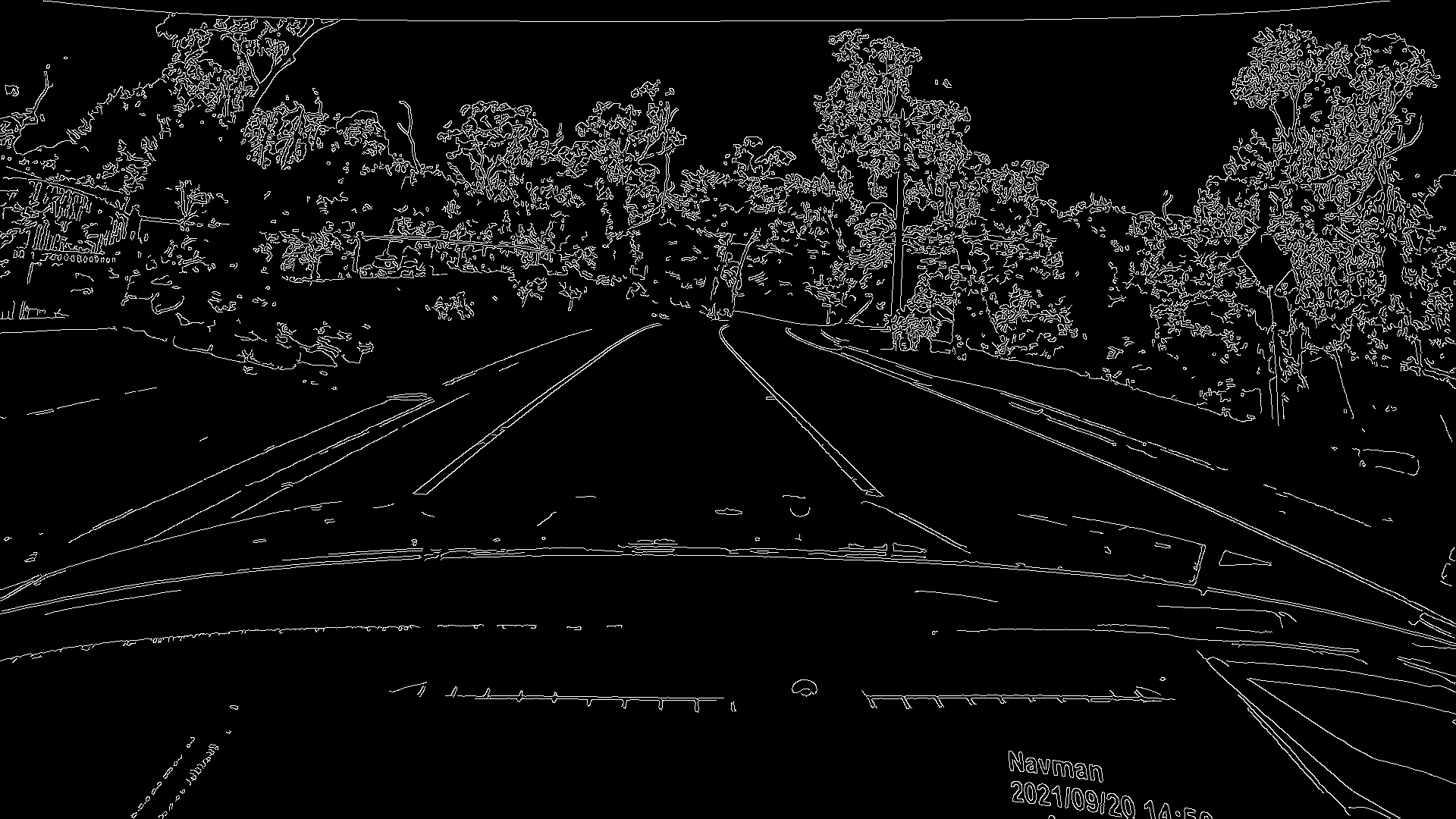

3.4.1 Correcting dash camera images for lens distortion

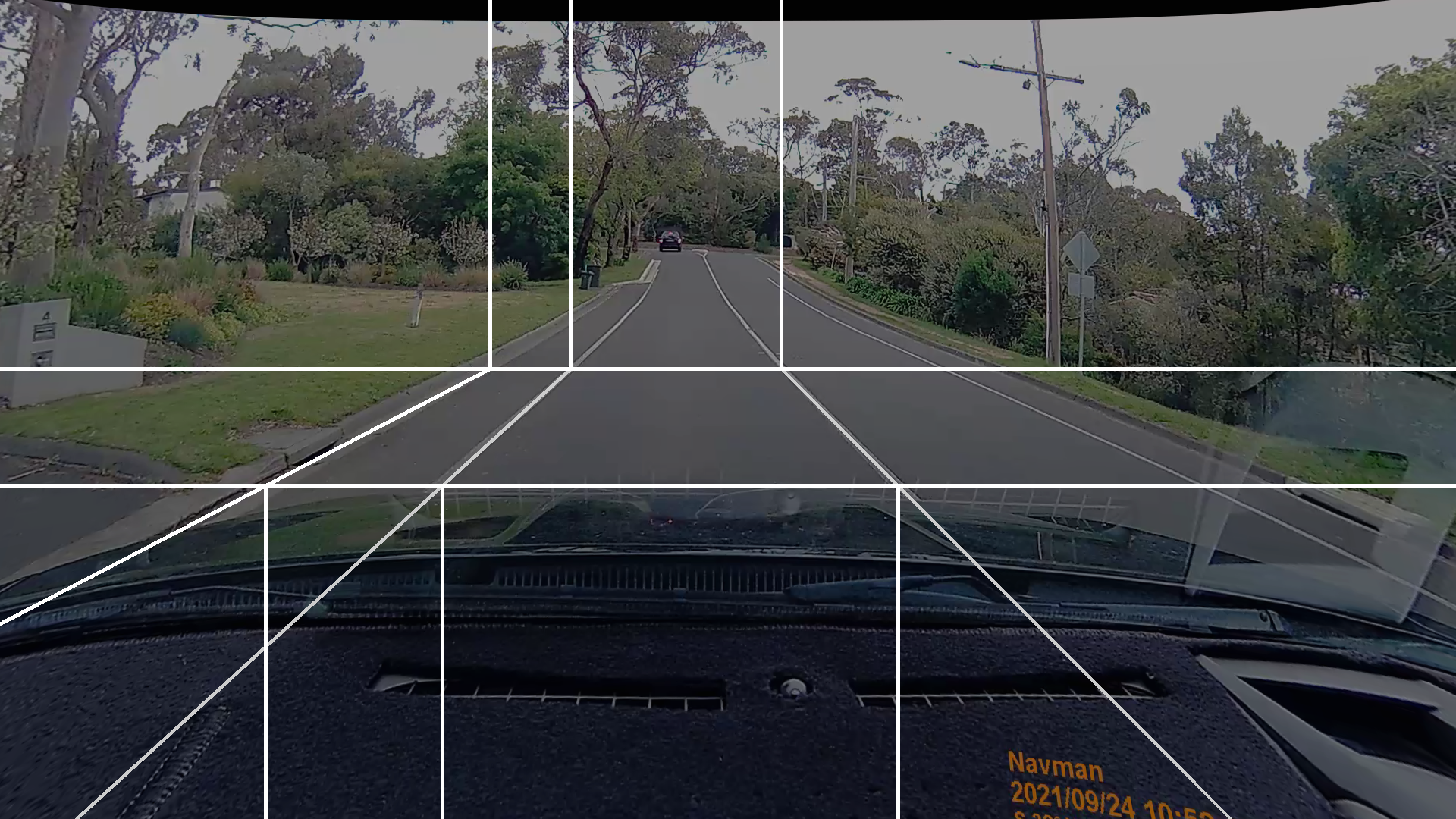

Lens distortion is where the optical properties of a camera’s lens causes straight lines to appear curved in an image. The “OpenCV” library provides a method to correct distortion for a specific camera [48]. The camera is used to capture a range of images where a special calibration tool appears in frame. See figure 3.6 for some example images. An image of a “chessboard” pattern of known dimensions is held in front of the camera in different positions.

After the calibration images are collected, they are processed by OpenCV to create a mathematical model of the camera’s lens distortion. With this model, OpenCV can apply a transformation to images from the same camera, to correct for lens distortion.

When searching for paved shoulders in a survey area, we are looking for lines in an image that are often straight. The dash camera being used to collect images was therefore calibrated, and in this exercise, all images were processed to correct for distortion. See figure 3.7 for an example raw image from the dash camera, and a corresponding corrected version.

There was not much difference between the corrected and uncorrected images. The most obvious sign that the correction has been applied in figure 3.7 is that the top edge of the corrected image is bent downwards slightly, and the location/speed/time information that usually appears in the bottom right corner has been shifted. Although the operation to correct for lens distortion did not appear to have a significant impact when it came to detecting paved shoulders, it was retained in the pipeline to support any future photogrammetry work to estimate the widths of the lanes.

3.4.2 Detecting lane markings

A common solution to the problem of detecting lane markings involves applying a combination of the Canny edge detection algorithm proposed by Canny, 1987 [35] and the Hough transformation proposed by Hough, 1972 [36]. This general approach has been followed in many papers such as Li et al, 2016 [49] and Chai et al, 2014 [50], and it is frequently used in capstone projects in the self-driving car domain. The approach does not involve any machine learning or deep learning techniques.

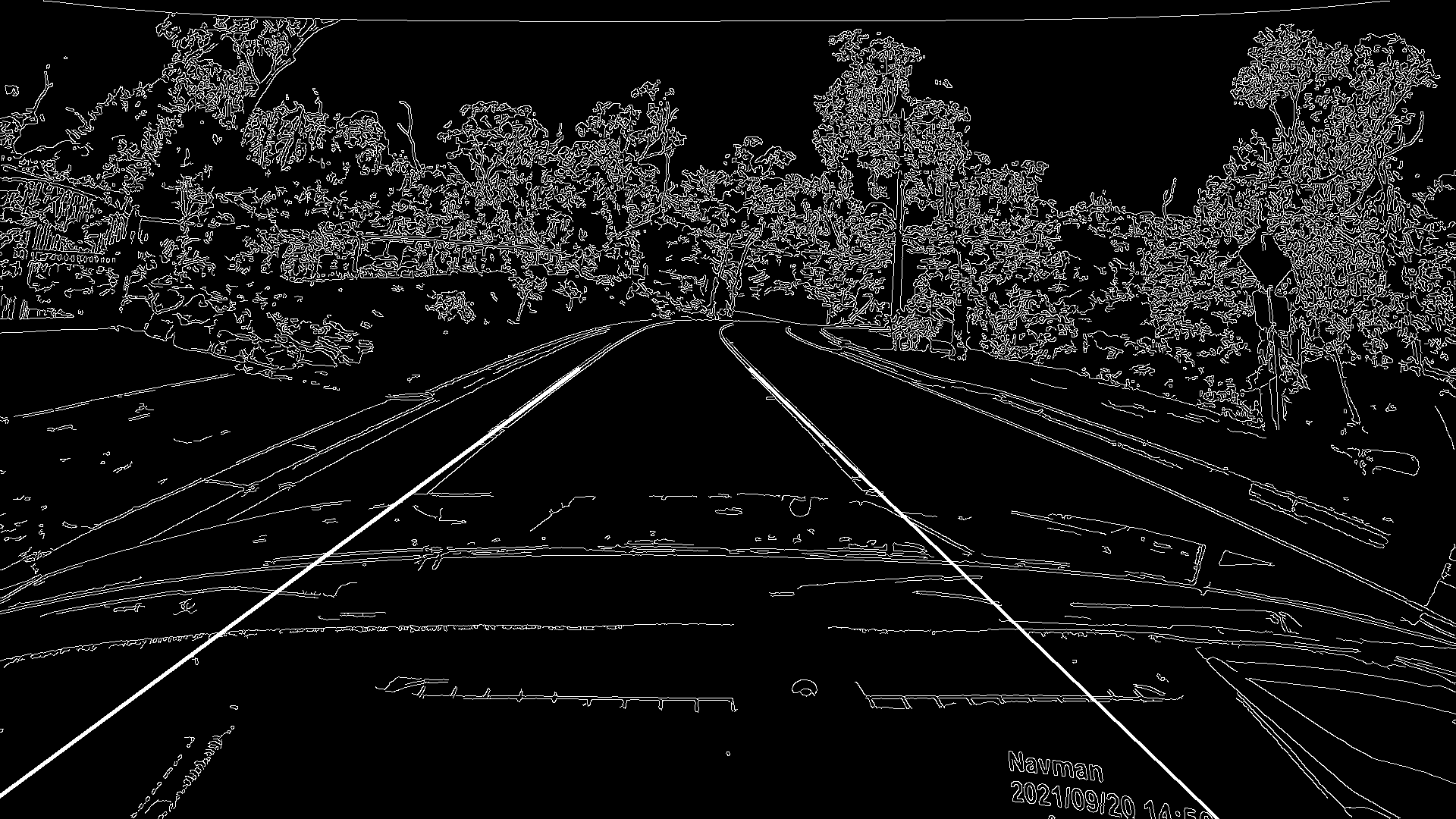

The Canny-Hough approach typically works as follows:

-

a.

Correct the image for lens distortion.

-

b.

Apply Canny edge detection to detect edges in an image [35].

-

c.

Apply a mask to exclude edges outside a triangle representing the area immediately in front of the vehicle.

-

d.

Apply a Hough transform [36] to convert these edges into lines, each line having a slope and an intercept.

-

e.

Partition the lines into two groups: Lines that slope upwards when scanning from left to right, and lines that slope in the opposite direction. For each group of lines, take the average slope and intercept. These represent the assumed lane boundaries. Optionally redraw the image with the detected lines drawn as an overlay, to visualize them.

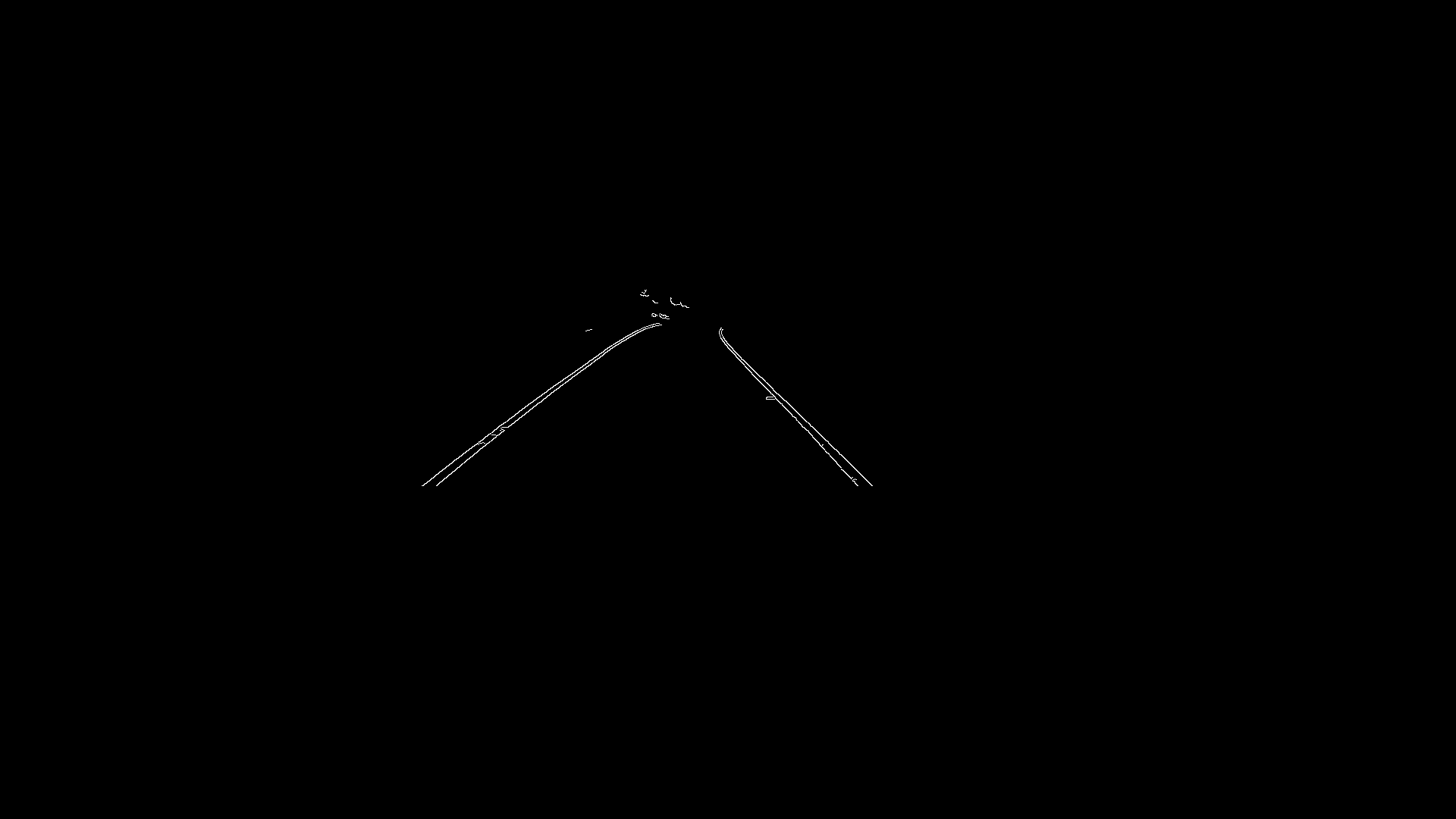

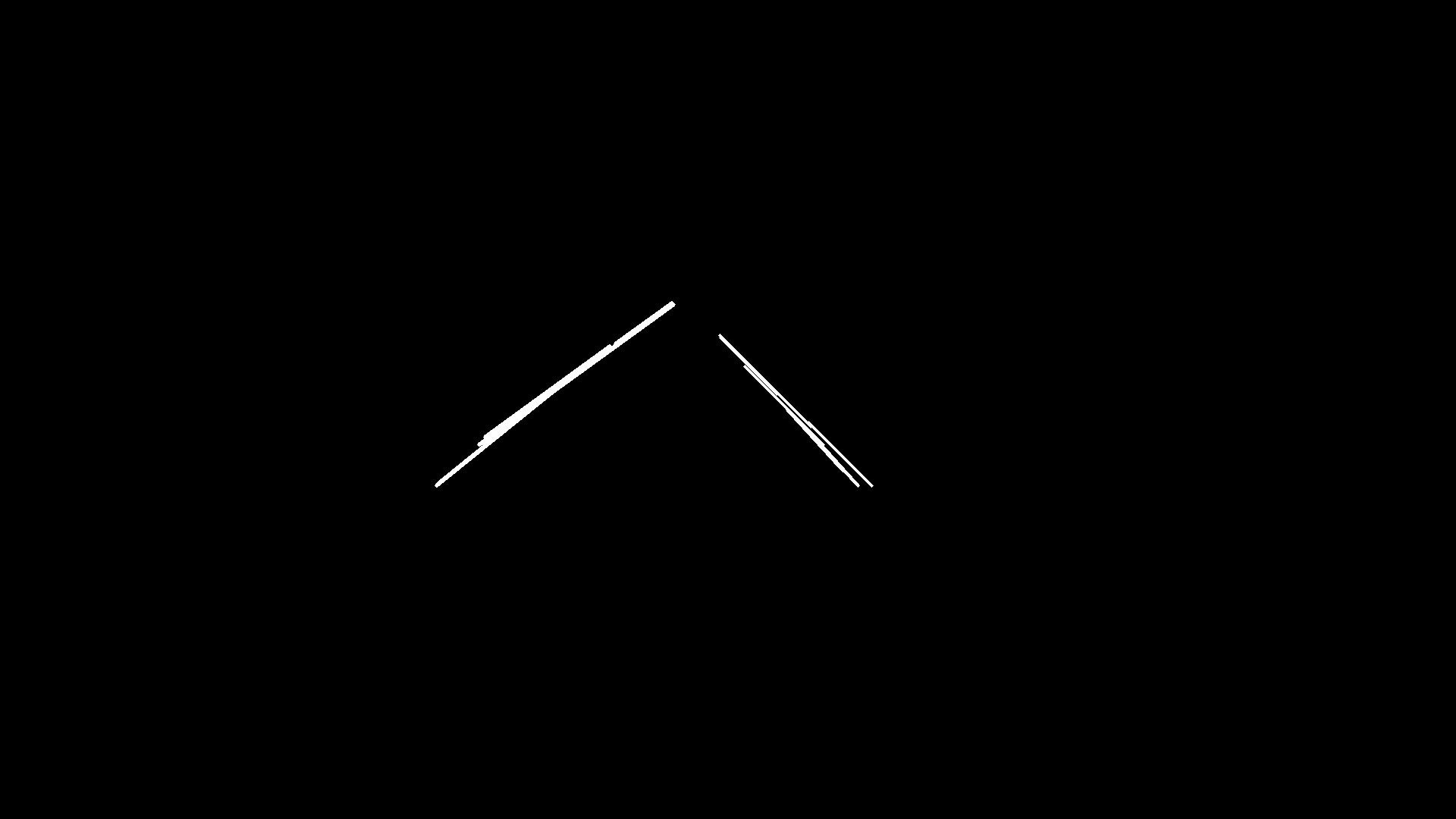

Please see figure 3.8 for example images to demonstrate the process.

The “OpenCV” library provides convenient tools to implement the required transformations.

Typically, the Canny-Hough process is used to detect the camera vehicle’s own lane. In order to detect a paved shoulder, we first want to detect the boundaries of our vehicle’s own lane, then perform a second pass at the image where the mask has shifted to focus exclusively on areas further to the edge of the road.

A paved shoulder will be defined by two lines, each with a “slope” and an “intercept”. One line will shared with a boundary of the camera’s own lane. The other line will be the edge of the road. To detect this extra line, we apply a mask to the Canny edge detection image just to the left of the camera’s own lane boundary that was found in the first step. We then apply the same Hough transformation to detect lines, and average the ones with the expected “left” slope, to find a slope and intercept for the outer boundary of the paved shoulder. See figure 3.9 for an example.

If the Canny-Hough approach cannot find another line to the left of the camera vehicle’s own lane, it is a strong sign that there is no clearly defined paved shoulder on the road at that location. If a line is found, it could be a paved shoulder, or it could be a false positive caused by “noise” from something on the side of the road, but within the area included by the mask.

Please see figure 3.10 below for example images where lines were detected for a possible paved shoulder. In each of the example figures, two horizontal lines have been drawn. The lower horizontal line shows where the detected lines intersect a horizontal line just above the bonnet of the car, the nearest point in the frame with a clear view. The upper horizontal line shows where they intersect at an arbitrary point further into the distance. Vertical lines are also drawn to highlight the intersection points. Together, the horizontal and vertical lines are a visual aid to help assess the width and stability of any detected lanes, as the operator views the images quickly in sequence.

Figure 3.10(a) shows a true positive match, where the boundaries of a paved shoulder have been correctly identified. Figure 3.10(b) shows a situation where a concrete gutter has resulted in a very narrow area being detected. This potential for this type of false positive can be mitigated by requiring a minimum width for any detected lane. Figure 3.10(c) shows a false positive match, where “noise” from a low wooden fence parallel to the road has caused the average of lines to the left of the camera’s old lane to appear to be a paved shoulder, off-road and in the grass. This phantom line is not “stable” and only appears for a few frames. This type of false positive can be reduced using metrics that require a paved shoulder to be consistently detected across most images in a road segment, and/or requiring that the slope and position of the line not vary too much from frame to frame.

3.4.3 Mapping paved shoulders across a survey area

The Canny-Hough lane detection method can be applied to all sample frames from the survey area, using a similar batch process to the bicycle lane detection process in section 3.3 and substituting a different detection model. The challenge is setting robust criteria for flagging a “detection” that will result in a sensible map. The simplest criteria would be to look for frames where lines were detected for both boundaries of a paved shoulder, but when it was applied to the training area footage, the results were very inconsistent. On roads where there was a paved shoulder or bicycle lane, the boundaries of that lane remained relatively stable from frame to frame, and the width between the boundaries of the detected area was relatively wide. There were few “skipped” frames where the boundaries were not detected. In contrast, on roads without a paved shoulder or bicycle lane, there may be many “skipped” frames, the slopes of the detected boundaries were inconsistent from frame-to-frame, and the detected boundary lines often had an intersection point much closer to the bottom of the frame.

The following solution was proposed:

-

•

Each frame would be linked to its two nearest intersection “nodes” in the OpenStreetMap data. All frames associated with a single stretch of road between two intersections would be assessed as a group.

-

•

If the model fails to find both boundaries of a paved shoulder in more than a threshold percentage of frames along a stretch of road, then it is assumed that there isn’t one.

-

•

Frames from locations within 30 metres of an intersection were excluded from consideration, to account for routine tapering of paved shoulders at intersections.

-

•

A horizontal line is drawn across the frame towards a “horizon” as per figure 3.10. The width of the space between the boundary lines is measured at this height within the frame, in pixels. The mean width is calculated across all frames in the stretch of road where boundary lines were detected. If the mean is less than a threshold number of pixels, then the paved shoulder is too narrow, and it is assumed not to exist. This accounts for very narrow margins such a gutter that are of no use to a cyclist.

-

•

If the model finds both left and right boundary lines for a possible paved shoulder, use the slopes and intercepts to calculate an (x, y) coordinate relative to the bitmap image where those two boundary lines intercept. Calculate the standard deviation of the x and y intersection values across all frames in the stretch of road where both boundary lines are defined. If the standard deviation in either the x or y dimension is greater than a threshold number of pixels, then the boundary lines are considered to be too inconsistent across images. The stretch of road is assumed not to have a paved shoulder.

The thresholds described above were tuned by analysing the dash camera footage from the “training” area and producing a CSV file with one record per frame, in chronological order. Each frame was mapped to the nearest “way” and the two nearest intersection “nodes” in the OpenStreetMap data, to associate it with a particular stretch of road, from intersection-to-intersection. It was also labelled with the name of the road, for traceability. The required summary statistics were calculated for each group of frames associated with a stretch of road, and these were attached to the CSV file as additional columns. Finally the footage was reviewed, and using the road names, changes of heading and “node” IDs as a guide, each record was labelled to record whether there was really a paved shoulder there or not.

A manual tuning process was performed, filtering the rows based on the column recording the “ground truth”, to find thresholds that appeared to correctly predict the outcome as often as possible.

The model was then applied to the footage from the Mount Eliza “testing” area to see how it performed with the selected thresholds.

To construct a map of detected paved shoulders, the dataset was summarised to a list of distinct combinations of “way” ID and intersection “node” IDs for each road section where a paved shoulder was predicted. This information was cross-referenced to the OpenStreetMap data for each “way” ID, and a line was “drawn” in a geojson file for all “nodes” on the way between the two intersection nodes, including the intersection nodes themselves. The geojson file could then be drawn on map in a Jupyter Notebook using the Python “ipyleafelet” library as per sections 3.2 and 3.3.

In this exercise, lane detection was attempted from the dash camera footage only, as a demonstration of how the techniques developed to address the first three research questions could be re-used to gather other information visually. It could be applied to Google Street View images, however it was only attempted with the dash camera footage, where it is easier to ensure that each image is taken with a heading that is consistent with the direction of the road.

Chapter 4 Results and Discussion

4.1 RQ1: Training a model to identify bicycle lanes in Google Street View images

Initial performance targets of 80% recall and 80% precision were chosen for this research question, to provide a solid baseline before attempting to infer and map routes from the detection points as part of the subsequent phases of research. To infer routes from detection points with the method proposed in section 3.2, we need to be confident that bicycle lane markings will be detected at most intersections, if present, otherwise the missed detections would cause an incorrect discontinuity in the inferred route. A recall of 80% would mean that we can expect to detect the markings most of the time. We also want to limit false positives, because two adjacent false positives could cause us to infer a route where there is none. A precision of 80% would mean that only a small minority of our detections are false positives, and we would be very unlucky to infer a long route spanning multiple intersections that is not real.

An initial dataset of 256 Google Street View images was gathered, with the option to gather more, if necessary, to achieve acceptable performance for the first two research questions. They were labelled and split into 205 “training” images and 51 “test” images, according to an 80:20 split. One of the 51 “test” images did not contain any bicycle lane markings. It was initially included in the dataset in error, however it was retained because it proved useful for quick sanity checks, to ensure the model was not blindly flagging bicycle lane markings in every image.

Five pre-trained object detection models from the TensorFlow 2 Model Garden were trained and evaluated using these “training” and “test” datasets, through a process of transfer learning. Each candidate model was trained for 2,000 steps at a time, with training paused every 2,000 steps to evaluate performance against the “test” dataset. Training continued until it yielded no significant improvement to the mean Average Precision of bounding box detection when evaluated against the “test” dataset.

Table 4.1 lists the five pre-trained object detection models that were tested, and the mean Average Precision of their bounding box detection when evaluated on the “test” dataset after 2,000 training steps. The “EfficientDet D1 640x640” and “SSD ResNet101 V1 FPN 640x640” were unable to detect any bicycle lane markings even after 4,000 training steps, so training on them was ceased, to focus on the other three models that were producing more immediate results.

| Pre-Trained Model | Steps | Evaluation mean Average Precision |

|---|---|---|

| CenterNet HourGlass104 512x512 | 2,000 | 0.691116 |

| Faster R-CNN ResNet50 V1 640x640 | 2,000 | 0.476506 |

| SSD MobileNet V1 FPN 640x640 | 2,000 | 0.599674 |

| EfficientDet D1 640x640 | n/a | n/a |

| SSD ResNet101 V1 FPN 640x640 | n/a | n/a |

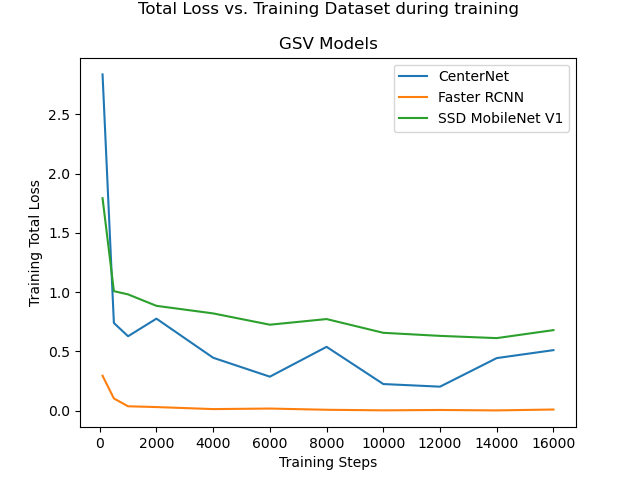

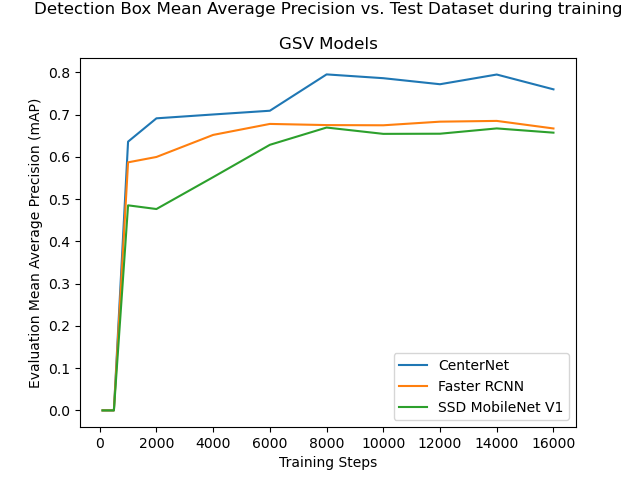

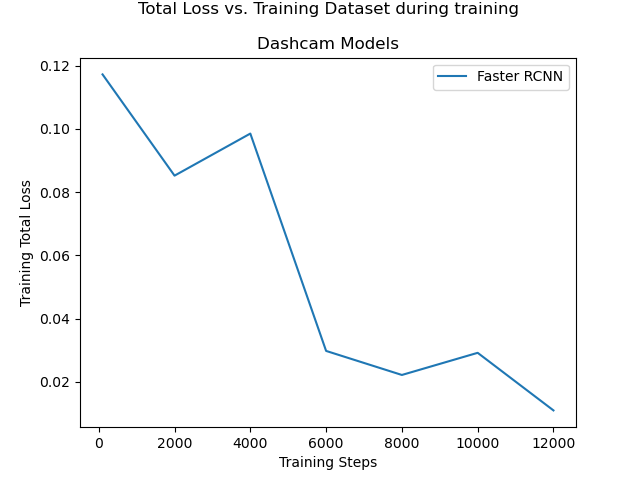

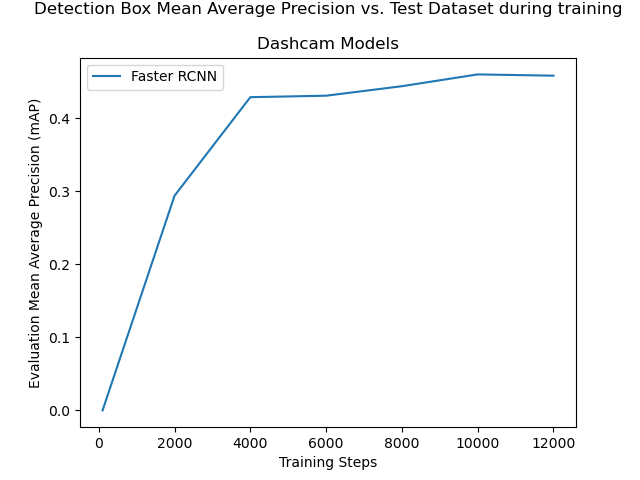

Figure 4.1(a) shows the “Total Loss” reported during training of the three remaining models. All three models reached a point of diminishing returns at 2,000 training steps.

Figure 4.1(b) shows the Bounding Box mean Average Precision during evaluation with the “test” dataset. Continuing the training beyond 2,000 training steps yielded small improvements in that metric. However, the most important measure of success for this model was whether it could correctly identify whether a bicycle lane marking appeared in an image or not. It was not necessary to know precisely where the bounding box was, so improvements to the Bounding Box mean Average Precision are only useful up to a point. Using the tool described in appendix A.1.8, each of the three models were applied to the “training” dataset and the “test” dataset, to inspect its predictions for each image. The images were examined to confirm that the bounding boxes were drawn in a sensible location, and to confirm whether the model had successfully found a bicycle lane marking in each image. The results are recorded in table 4.2.

| Pre-Trained Model | Train | Test | ||

|---|---|---|---|---|

| False Posn | False -ve | False Posn | False -ve | |

| CenterNet HourGlass104 512x512 | 0 | 11 | 0 | 13 |

| Faster R-CNN ResNet50 V1 640x640 | 1 | 0 | 4 | 0 |

| SSD MobileNet V1 FPN 640x640 | 8 | 30 | 4 | 9 |

The “Faster R-CNN ResNet50 V1 640x640” model was able to successfully locate a bicycle lane marking in every single image in “training” and “test” datasets where one appeared. It was not fooled by a solitary image with no bicycle lane markings that had been included in the “test” dataset. It produced a bounding box in the wrong position for four images in the test dataset. It easily met the target performance metrics, with 100% recall and 92% precision.

The “Faster R-CNN ResNet50 V1 640x640” model, trained to 2,000 training steps, was chosen as the preferred model, and used to create a bicycle lane route maps for research question 2.

The initial dataset of 256 images appeared to be sufficient to get reliable detection results with this model, so no further images were collected from Google Street View for the “training” and “test” datasets at this stage. If better performance was required to obtain sensible results when drawing maps of bicycle routes in the next section, we would address that by increasing the number of training steps, the number of training images available, add labels to common sources of false positive results, then re-assess the candidate models after further training.

4.2 RQ2: Building a map of bicycle lane routes from Google Street View images in a survey area

4.2.1 Mount Eliza

The model that was selected in section 4.1 was applied to a sample survey area in the vicinity of Mount Eliza. This area was chosen due to its accessibility: It was one of very few areas with bicycle lanes that was reachable within the COVID-19 lockdown restrictions that were in place at the time [46] [47]. It was therefore possible to validate the results via an in-person survey, in addition to comparing the results to OpenStreetMap and the “Principal Bicycle Network” dataset. It also allowed for comparison with results from dash camera footage in section 4.3.

A total of 1,113 locations were sampled from the survey area, at points within 20 metres of every intersection. Four images were downloaded via the Google Street View API at each location, a total of 4,452 images at a cost of approximately $31 USD. The exact survey area within Mount Eliza was chosen to include a mix of roads with and without bicycle lanes, ranging from no-through roads to a highway.

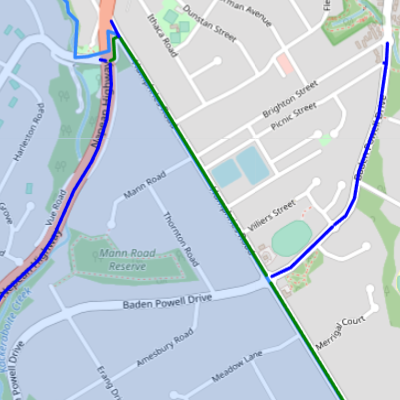

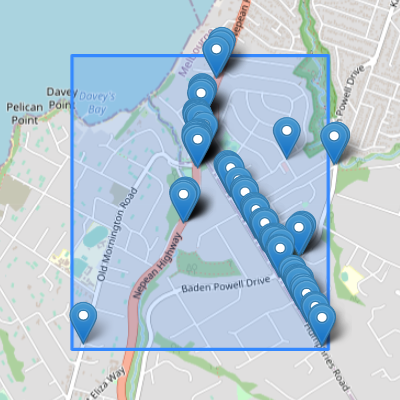

Figure 4.2 is a screenshot from an interactive map in the Jupyter Notebook described in appendix A.1.11, showing existing and planned bicycle routes in the survey area according to the “Principal Bicycle Network” dataset. The blue bounding box shows the survey area. The red lines show “existing” routes, and the blue lines show “planned” routes. The “Principal Bicycle Network” dataset is out of date. It correctly records that there is an existing route on the Nepean Highway, in red. However, the long, straight “planned” route, Humphries Road, has existed for at least several years, and so has the route to the north-east of it, on Baden Powell Drive. The planned route on Baden Powell Drive to the west of Humphries Road does not yet exist.

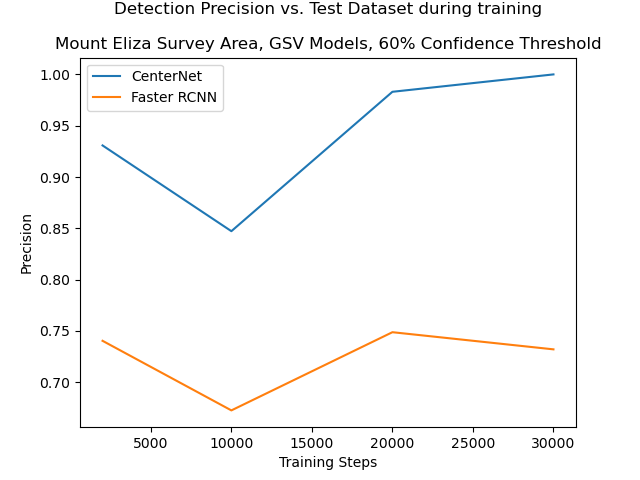

In section 4.1, the models were trained for 2,000 training steps, beyond which further training did not appear to yield any improvements when the model was evaluated against the small 51 image “test” dataset. The Faster R-CNN model appeared to be the most promising. However, when that model was applied to Google Street View images from the Mount Eliza survey area, the initial results were poor. Precision was significantly lower than it had been in the initial evaluation, with many false positive detections for random objects such as bushes, or leaves on the road. The models received further training on the original “training” dataset, up to 30,000 training steps, and the CenterNet model had the best performance, producing zero false positive detections after 30,000 training steps. It was therefore used to construct maps from the Google Street View images in the survey areas. See figure 4.3 for training statistics.

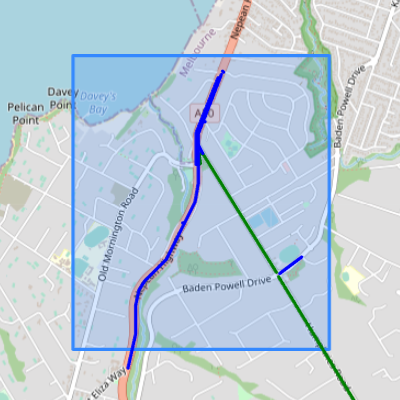

Figure 4.4(a) shows the individual points that were detected by the model from Google Street View images sampled near intersections in the survey area. Figure 4.4(b) shows the bicycle lane routes that were inferred from those detections, according to the method described in section 3.2.3. The routes are colour-coded to highlight areas of agreement and disagreement between the detection model and OpenStreetMap. Where both the detection model and OpenStreetMap agree that there is a bicycle lane, routes are coloured green. They agree that there is a bicycle lane on Humphries Road. Routes that were detected by the model but not recorded with a “cycleway” tag in OpenStreetMap are coloured blue. The OpenStreetMap data is missing the cycleway on the Nepean Highway, and the fragment of the Baden Powell Drive cycleway that was detected near the boundary of the survey area. Routes that are recorded in OpenStreetMap but were not detected would be coloured red, however there were no such routes in this survey.

Table 4.3 shows a comparison between the routes detected for Mount Eliza from the Google Street View images to what is recorded in OpenStreetMap, in metres. The two sources agreed upon 2,344 metres of bicycle lane routes. The detection model found an additional 2,787 metres that were not recorded in OpenStreetMap, including parts of the divided Nepean Highway where both sides of the road were counted due to them being mapped in OpenStreetMap separately. Distances are approximate due to the level of precision available when calculating distances between each point in the geojson files.

| Route source | Metres |

|---|---|

| Detection Model | 5,216m |

| OpenStreetMap | 2,344m |

| Both | 2,344m |

| Detection model only | 2,787m |

| OpenStreetMap only | 0m |

Based on an in-person survey of the area, and inspection of the Google Street View images that were downloaded, the detection model correctly detected all bicycle lanes within the survey area, with no false positives. It identified two routes that had not been recorded in OpenStreetMap.

4.2.2 Heidelberg

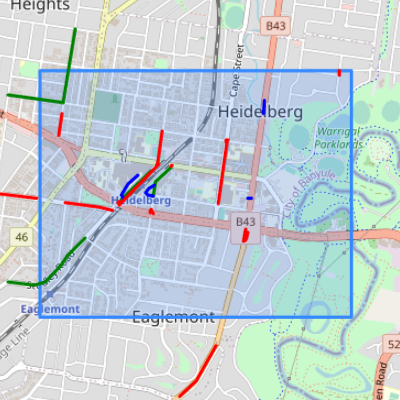

After surveying Mount Eliza with the Google Street View approach, the exercise was repeated for a survey area in Heidelberg, Victoria. The purpose of this exercise was to assess how well the detection models generalised to a more heavily built-up area, closer to the city. It included both residential and commercial areas. See figure 4.5 for the results.

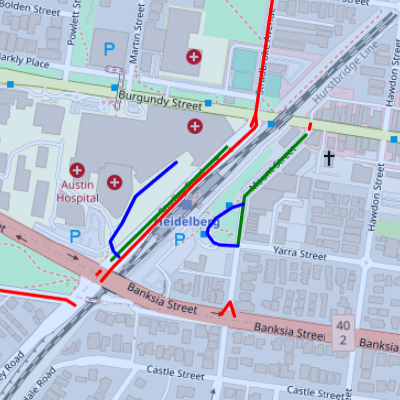

The red lines in the map again represent routes that are recorded in OpenStreetMap but not detected. Any red lines outside the bounding box describing the survey area can be ignored. The Google Street View images for the red lines within the survey area were examined manually, to assess whether the model had produced sensible results based on the input. Zooming into the red diagonal line on Studley Road near the Heidelberg train station, it can be seen that the model did correctly predict that there was a bicycle lane, but it only marked it on one side of the road, whereas it is tagged separately on both sides of a divided road in OpenStreetMap. The remainder of the red lines are routes where there is no bicycle lane visible in the Google Street View images, and the model has produced a sensible result. See figure 4.6 for example images from Cape Street and Stradbroke Street. It is possible that this is caused by out-of-date Google Street View images, however the images for Stradbroke Street were only six months old, and the streets did not seem wide enough to easily accommodate the addition of a bicycle lane. These routes might be errors in the OpenStreetMap data. An on-site survey by a local resident with access to the area confirmed this.

The blue lines near the Austin Hospital and Heidelberg Station are false positives. They are caused by the road being close enough to see a bicycle lane marking on a nearby road.

There is a very short segment where OpenStreetMap records a cycleway on Lower Heidelberg Road near the intersection of Banksia Street. This route would be barely larger than the nearby intersection, and it is almost certainly an error in OpenStreetMap.

A comparison between the improved detection model results and OpenStreetMap in terms of route lengths can be found in table 4.4.

| Route source | Metres |

|---|---|

| Detection Model | 4,563m |

| OpenStreetMap | 5,317m |

| Both | 2,094m |

| Detection model only | 469m |

| OpenStreetMap only | 2,536m |

The model was able to identify all bicycle lane routes that were recorded in OpenStreetMap and visible in the Google Street View images. It produced 469 metres worth of false positives, due to bicycle lane markings on one road being visible from multiple points on a nearby road. An approach using dash camera footage could help to address this issue, by ensuring that the camera is always correctly oriented to face the direction of the road, thereby allowing detection masks to be used to eliminate bicycle lane markings from nearby roads.

4.3 RQ3: Building a map of bicycle lane routes from dash camera footage captured in a survey area

4.3.1 Re-training the model for dash camera footage

Due to COVID-19 restrictions [46] [47], dash camera footage could only be gathered in a circular area with a 15km radius, much of which was a body of water. Therefore, the range of areas available to gather dash camera footage for research question 3 was restricted to a handful of outer metropolitan suburbs. Footage from the Frankston, Langwarrin and Baxter area was used to train a the detection model to work with dash camera footage, and footage from Mount Eliza was used to test it.

Training was conducted as per the approach documented in section 3.3. Initially, the original model from section 4.2 that had been trained exclusively on Google Street View images was used to process the dash camera footage from the training area. The initial positive matches – whether true positives or false positives – were added to the “training” and “test” datasets according to an 80:20 split. The resulting “training” dataset consisted of 205 Google Street View images and 252 dash camera images. The “test” dataset consisted of 51 Google Street View images and 85 dash camera images.

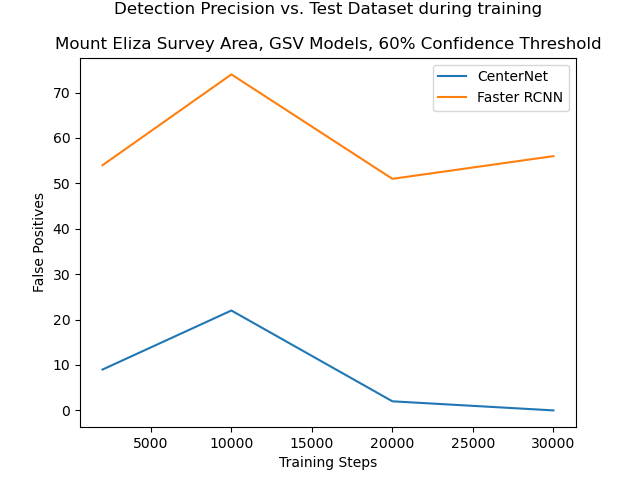

Another “Faster R-CNN ResNet50 V1 640x640” model was then trained and evaluated using the combined Google Street View + dash camera footage datasets. To deal with remaining false positives, the dash camera images were labelled with additional classes to distinguish common misleading features from bicycle lane markings, and a detection mask was applied to remove reflections from the bonnet of the car, and distractions from the right hand side of the road. Please see section 3.3 for a full discussion of these enhancements. The results observed from the model after every 2,000 training steps are recorded in figure 4.7 and table 4.5.

| Model | Steps | Train | Test | ||||

|---|---|---|---|---|---|---|---|

| Total Loss | False +ve | False -ve | mAP | False +ve | False -ve | ||

| Faster R-CNN | 2,000 | 0.0852 | 1 | 8 | 0.293945 | 3 | 9 |

| Faster R-CNN | 4,000 | 0.0985 | 0 | 8 | 0.428875 | 1 | 2 |

| Faster R-CNN | 6,000 | 0.0298 | 0 | 0 | 0.430906 | 3 | 3 |

| Faster R-CNN | 8,000 | 0.0222 | 0 | 0 | 0.443913 | 1 | 2 |

| Faster R-CNN | 10,000 | 0.0292 | 0 | 0 | 0.46007 | 2 | 2 |

| Faster R-CNN | 12,000 | 0.011 | 0 | 0 | 0.458306 | 0 | 1 |

Figures 4.7(a) and 4.7(b) show that there were diminishing returns from further training beyond 4,000 to 6,000 training steps, in terms of both the “Total Loss” reported in the training process, and the “mean Average Precision” of the bounding boxes reported from the evaluation process against the “test” dataset.

The model was applied to the “training” and “test” images via the tool documented in appendix A.1.15 to examine the bounding boxes it chose to draw, and whether it believed there was a detection or not. The results of this review are found in the “False +ve” and “False -ve” columns reported in table 4.5. After 6,000 training steps, the model was able correctly detect all bicycle lane images in the “training” data, with no false positives, false negatives, or misplaced bounding boxes. In the test data, there were a total of 3 false positives and 3 false negatives out of 136 test images. These false results came from a mix of Google Street View images and dash camera images. After 8,000 training steps, the performance improved further, in that there was 1 false positive and 2 false negatives when analysing the test data, and these were all for challenging Google Street View images. See figure 4.8.