Mass-Matrix Differential-Algebraic Equation Formulation for Transient Stability Simulation

Abstract

This letter proposes a mass-matrix differential-algebraic equation (DAE) formulation for transient stability simulation. The formulation has two prominent advantages: it is compatible with a multitude of implicit DAE solvers, and it can be conveniently implemented on top of the traditional formulation, for example, by moving the parameters in the denominators to the diagonal of the mass matrix. It also allows the dynamics to be reduced by setting time constants to zero. Benchmark studies on the time and accuracy of 17 implicit solvers are presented for the proposed formulation using Kundur’s two-area system and a 2,000-bus system.

Index Terms:

Differential-algebraic equations, mass-matrix formulation, transient stability simulation, numerical integration.

I Introduction

Power system transient stability programs traditionally employ the following explicit differential-algebraic equations (DAEs) for transient stability analysis:

$$\dot{x} = f(x, y, u), \qquad 0 = g(x, y, u) \tag{1}$$

where $f$ and $g$ are the differential and the algebraic equations, respectively, $x$ are the differential states, $y$ are the algebraic variables, and $u$ are discontinuous states from discrete models.

Recent works such as [1] propose an explicit formulation that can convert differential states into algebraic ones. This is referred to as the flexible formulation given by

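$$\Gamma \dot{x} = f(x, y, u), \qquad 0 = g(x, y, u) \tag{2}$$

where $\Gamma$ is a time-invariant diagonal matrix with diagonal satisfying

$$\Gamma_{i,i} = \begin{cases} 1, & \text{if the } i\text{-th equation is differential} \\ 0, & \text{if the } i\text{-th equation is algebraic} \end{cases} \tag{3}$$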

More recently, a semi-implicit formulation was proposed in [2] with the abilities to 1) increase the sparsity of Jacobians, 2) reduce computational effort, and 3) enable state-to-algebraic switching, given by

$$T \dot{x} = f(x, y, u), \qquad R \dot{x} = g(x, y, u) \tag{4}$$

where $T$ and $R$ are $n \times n$ and $m \times n$ time-variant, highly sparse, non-diagonal, and non-full-rank matrices, with $n$ and $m$ being the numbers of differential states and algebraic variables. This formulation, however, can be challenging to implement due to the complexity and effort associated with implementing custom solvers and porting models from (1) to (4), although the porting can be carried out incrementally.

This letter proposes a mass-matrix formulation that can take advantage of numerous DAE solvers and is straightforward to implement. It also allows for converting differential states to algebraic ones by setting associated parameters to zero.

II Mass-Matrix Explicit DAE Formulation

II-A Mass-Matrix DAE Formulation

The proposed mass-matrix formulation is given by

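$$M \dot{x} = \tilde{f}(x, y, u), \qquad 0 = g(x, y, u) \tag{5}$$

where $M$ is a possibly diagonal matrix that forms the upper-left block of the overall (singular) mass matrix $\begin{bmatrix} M & 0 \\ 0 & 0 \end{bmatrix}$ acting on $[\dot{x}^{T}, \dot{y}^{T}]^{T}$. $\tilde{f}$ is chosen based on $f$ such that $\tilde{f} = M f$, and this choice determines whether $M$ is diagonal.

The choice of $\tilde{f}$ is not unique. For transient stability simulations, the simplest choice of $\tilde{f}_i$ is the numerator of $f_i$ if $f_i$ is a fraction with a constant parameter as the denominator. In such a case, $M$ becomes a time-invariant diagonal matrix with the $i$-th diagonal element being

$$M_{i,i} = \begin{cases} \text{the constant denominator of } f_i, & \text{if } f_i \text{ is such a fraction} \\ 1, & \text{otherwise} \end{cases} \tag{6}$$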

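The proposed mass-matrix formulation in (5) has the following relationships with the existing ones:

1. The traditional formulation (1) is the special case of (5) in which the mass matrix is the identity matrix.

2. The flexible formulation (2) is the special case of (5) in which the mass matrix is diagonal with entries restricted to 0 or 1.

3. The proposed formulation (5) is the special case of the semi-implicit formulation (4) in which the matrix multiplying $\dot{x}$ in the algebraic equations is zero.

Such relationships can be represented as:

$$(1) \subset (2) \subset (5) \subset (4) \tag{7}$$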

The main advantages of (5) are two-fold:

1. As a general formulation, it is directly supported by a broad spectrum of full-fledged DAE solvers. Power system researchers can thus focus on formulating models without worrying about the details of solution techniques.

2. Simple to implement. The only required change to the traditional formulation is to move the parameters (typically, time or mass constants) from the denominators of the differential equations into the mass matrix. Mixing models in the mass-matrix and the traditional formulations is allowed, as the latter is a special case.

In addition, the mass-matrix formulation shares the same advantage as the semi-implicit formulation that allows model simplification by setting some time constants to zero.
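As a minimal sketch of the implementation step described above, the following Python snippet moves hypothetical time constants from the denominators of a two-state toy model into a diagonal mass matrix and checks that both forms describe the same dynamics. The toy model, the function names, and the numerical values are assumptions made for illustration and are not taken from any of the tools discussed in this letter.

```python
# Toy model (an assumption for illustration): x_dot_i = (y_i - x_i) / T_i.

def f_traditional(x, y, T):
    """RHS of the traditional formulation (1): parameters stay in the denominators."""
    return [(yi - xi) / Ti for xi, yi, Ti in zip(x, y, T)]


def f_mass_matrix(x, y):
    """RHS of the mass-matrix formulation (5): only the numerators remain."""
    return [yi - xi for xi, yi in zip(x, y)]


def mass_diagonal(T):
    """Diagonal of the mass matrix: the constants removed from the denominators."""
    return list(T)


T = [0.5, 2.0]          # hypothetical time constants
x = [1.0, -1.0]         # differential states
y = [3.0, 0.5]          # inputs taken from algebraic variables

M = mass_diagonal(T)
f = f_traditional(x, y, T)
f_tilde = f_mass_matrix(x, y)

# Both forms describe the same dynamics: M_ii * f_i equals f_tilde_i.
mismatch = [Mi * fi - fti for Mi, fi, fti in zip(M, f, f_tilde)]

# Setting a time constant to zero turns that state into an algebraic variable
# and lowers the rank of the (diagonal) mass matrix by one.
M_reduced = mass_diagonal([0.0, 2.0])
rank_reduced = sum(1 for m in M_reduced if m != 0.0)

print(mismatch, rank_reduced)
```

Note that the traditional RHS cannot be evaluated for a zero time constant, whereas the mass-matrix RHS is unaffected, which is what makes the model reduction possible.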

II-B Modeling Examples

This section presents some basic transfer functions and synchronous generators in the mass-matrix formulation, in order to demonstrate the implementation simplicity.

II-B1 First-order lag block

The conventional formulation of the lag block, with input $v$, output state $x$, gain $K$, and time constant $T$, has one differential equation given by

$$\dot{x} = \frac{K v - x}{T} \tag{8}$$

Using the proposed formulation, the numerator can be chosen as the right-hand side (RHS) by moving $T$ to the mass matrix diagonal:

$$T \dot{x} = K v - x \tag{9}$$

which allows using $T = 0$ to convert the lag block to a pure gain. This formulation is the same as the one obtained with the semi-implicit formulation.
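The effect of a zero time constant can be illustrated with a short, self-contained Python sketch. It assumes the lag block is written as $T \dot{x} = K v - x$ with gain $K$, time constant $T$, and input $v$, and integrates it with a fixed-step implicit trapezoidal rule. The function names, the input signals, and the parameter values are hypothetical.

```python
import math


def simulate_lag(T, K, v, x0, h, n_steps):
    """Integrate T * dx/dt = K * v(t) - x with the implicit trapezoidal rule.

    T may be zero, in which case the equation is purely algebraic.
    """
    t, x = 0.0, x0
    f_prev = K * v(t) - x              # RHS (numerator only) at the previous step
    trajectory = [x]
    for _ in range(n_steps):
        t += h
        kv = K * v(t)
        # Residual T*(x_new - x) - h/2*((kv - x_new) + f_prev) = 0 is linear in x_new.
        x_new = (T * x + 0.5 * h * (kv + f_prev)) / (T + 0.5 * h)
        f_prev = kv - x_new
        x = x_new
        trajectory.append(x)
    return trajectory


K, h, n_steps = 2.0, 0.01, 200


def step_input(t):
    return 1.0


def sine_input(t):
    return 1.0 + 0.5 * math.sin(t)


# T > 0: ordinary lag block with step response K * (1 - exp(-t / T)).
lag = simulate_lag(T=0.5, K=K, v=step_input, x0=0.0, h=h, n_steps=n_steps)
exact = K * (1.0 - math.exp(-(h * n_steps) / 0.5))

# T = 0: pure gain. The initial value must be consistent, x0 = K * v(0).
gain = simulate_lag(T=0.0, K=K, v=sine_input, x0=K * sine_input(0.0),
                    h=h, n_steps=n_steps)

print(lag[-1], exact, gain[-1], K * sine_input(h * n_steps))
```

The same routine handles both cases without a special branch for $T = 0$. With an inconsistent initial value, the trapezoidal rule applied to the algebraic equation would oscillate around the solution, so the sketch initializes the pure-gain case at $x_0 = K v(0)$.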

II-B2 Lead-lag block

The lead-lag block can be implemented in the following serial approach:

| (10) |

The mass-matrix formulation can be given by

| (11) |

where is an auxiliary parameter given by

| (12) |

The auxiliary parameter can be pre-calculated before simulation and thus does not increase the number of operations during simulation. This implementation retains the ability to convert the lead-lag block to a lag block (when the lead time constant is zero) or to a pass-through block (when both time constants are zero).

II-B3 Synchronous Generator Model

The round-rotor generator model [3] is conventionally given by

| (13) |

where and are calculated algebraically. The proposed mass-matrix DAE formulation is

| (14) |

Generator model reductions can be achieved by setting the corresponding time constants to zero. For example, the one d- and one q-axis flux-decay model can be obtained by setting the subtransient time constants to zero, $T''_{d0} = T''_{q0} = 0$.

II-C Implicit Trapezoidal Method for Mass-Matrix DAE

The mass-matrix formulation of DAEs can be solved by a variety of numerical integration methods. Implicit numerical integration schemes are known for their good performance on stiff ordinary differential equations (ODEs) and DAEs, which is the case for transient stability simulation. At each step, the implicit trapezoidal method (ITM) solves a set of nonlinear equations consisting of both the differential and the algebraic equations simultaneously through Newton iterations. This subsection discusses the form of the iteration for the mass-matrix formulation.

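The nonlinear equations to solve for (1) at time $t$ are given by

$$\begin{aligned} 0 &= x - x_0 - \frac{h}{2}\left(f(x, y, u) + f_0\right) \\ 0 &= g(x, y, u) \end{aligned} \tag{15}$$

where $h$ is the step size, and $x_0$ and $f_0$ are the differential states and equation RHS computed at the previous time ($t - h$). Note that for $g$, the sign and the scaling factor can be chosen arbitrarily, and a small scaling factor improves the convergence. Accordingly, solutions are obtained by iteratively updating variables using (16), where the increments are calculated using (17).

$$\begin{bmatrix} x^{(k+1)} \\ y^{(k+1)} \end{bmatrix} = \begin{bmatrix} x^{(k)} \\ y^{(k)} \end{bmatrix} + \begin{bmatrix} \Delta x^{(k)} \\ \Delta y^{(k)} \end{bmatrix} \tag{16}$$

$$\begin{bmatrix} I - \frac{h}{2} f_x & -\frac{h}{2} f_y \\ g_x & g_y \end{bmatrix} \begin{bmatrix} \Delta x^{(k)} \\ \Delta y^{(k)} \end{bmatrix} = - \begin{bmatrix} x^{(k)} - x_0 - \frac{h}{2}\left(f^{(k)} + f_0\right) \\ g^{(k)} \end{bmatrix} \tag{17}$$

where $f_x$, $f_y$, $g_x$, and $g_y$ are the Jacobian blocks evaluated at the $k$-th iterate.

Comparing (5) with (1), we can observe that the mass-matrix formulation can be derived by left-multiplying both sides of the differential equations by the mass matrix $M$, so that $\tilde{f} = M f$. Therefore, left-multiplying the differential rows of (17) by $M$ yields

$$\begin{bmatrix} M - \frac{h}{2} \tilde{f}_x & -\frac{h}{2} \tilde{f}_y \\ g_x & g_y \end{bmatrix} \begin{bmatrix} \Delta x^{(k)} \\ \Delta y^{(k)} \end{bmatrix} = - \begin{bmatrix} M \left(x^{(k)} - x_0\right) - \frac{h}{2}\left(\tilde{f}^{(k)} + \tilde{f}_0\right) \\ g^{(k)} \end{bmatrix} \tag{18}$$

The Jacobian matrix for calculating increments is given by:

$$A_c = \begin{bmatrix} M - \frac{h}{2} \tilde{f}_x & -\frac{h}{2} \tilde{f}_y \\ g_x & g_y \end{bmatrix} \tag{19}$$

Comparing (19) to (17), one can notice that the only required change to the solver is to substitute the identity matrix with the mass matrix $M$. Therefore, numerical integration routines can be readily adapted for the proposed formulation.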
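The Newton iteration described in this subsection can be sketched in a few lines of Python for a system with one differential state and one algebraic variable. The sketch assumes the residual $M (x - x_0) - \frac{h}{2}(\tilde{f} + \tilde{f}_0)$ for the differential equation and the unscaled $g$ for the algebraic equation, which is one standard way of writing the ITM. The test system, the function names, and the tolerances are hypothetical and only serve to make the example runnable.

```python
def itm_step(M, h, x0, y0, f_tilde, g, jac, tol=1e-10, max_iter=20):
    """One implicit-trapezoidal step for  M * dx/dt = f_tilde(x, y),  0 = g(x, y)."""
    f0 = f_tilde(x0, y0)
    x, y = x0, y0                                  # previous solution as initial guess
    for _ in range(max_iter):
        q = M * (x - x0) - 0.5 * h * (f_tilde(x, y) + f0)   # differential residual
        r = g(x, y)                                          # algebraic residual
        if max(abs(q), abs(r)) < tol:
            return x, y
        fx, fy, gx, gy = jac(x, y)
        # Jacobian [[M - h/2*fx, -h/2*fy], [gx, gy]]: M replaces the identity.
        a11, a12 = M - 0.5 * h * fx, -0.5 * h * fy
        det = a11 * gy - a12 * gx
        # Solve the 2x2 system A * [dx, dy] = -[q, r] by Cramer's rule.
        dx = (-q * gy + a12 * r) / det
        dy = (-a11 * r + gx * q) / det
        x, y = x + dx, y + dy
    raise RuntimeError("Newton iteration did not converge")


# Hypothetical test system: 0.5 * dx/dt = y - x,  0 = y + 0.1*y^3 + x - 2.
def f_tilde(x, y):
    return y - x


def g(x, y):
    return y + 0.1 * y**3 + x - 2.0


def jac(x, y):
    """Return (d f_tilde/dx, d f_tilde/dy, dg/dx, dg/dy)."""
    return -1.0, 1.0, 1.0, 1.0 + 0.3 * y * y


M, h = 0.5, 0.01
x, y = 0.9, 1.0            # satisfies g = 0 up to rounding
for _ in range(1000):      # simulate 10 s
    x, y = itm_step(M, h, x, y, f_tilde, g, jac)

print(x, y)
```

For larger systems, the 2x2 solve would be replaced by a sparse linear solver, but the structure of the residual and of the Jacobian stays the same.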

II-D Remarks on the Computational Complexity

In terms of computational complexity, (5) is not more demanding than (1). When one uses a constant diagonal mass-matrix formulation, the number of division operations can be slightly reduced when evaluating the differential equations and the subsequent Jacobian elements. This effect, however, is negligible in actual test cases, mostly because the number of saved operations is small relative to the total number of operations.

III Case Studies

III-A Solvers and Simulation Setup

The most significant advantage of the mass-matrix formulation is its compatibility with fine-tuned DAE solver packages, which usually come with a multitude of solution methods and error control mechanisms and have undergone rigorous tests. Such compatibility allows power system researchers to focus on modeling while utilizing the state-of-the-art numerical solvers.

Table I: Overview of the interfaced solvers

| Solver Name | Order | Stability | Remarks |
|---|---|---|---|
| Rosenbrock methods (for small-stiffness problems) | | | |
| Ros4LStab | 4 | A | |
| Rodas4 | 4 | A | Order 3 interpolant |
| Rodas42 | 4 | A | Order 3 interpolant |
| Rodas4P | 4 | A | |
| Rodas5 | 5 | A | Hermite interpolant |
| Rosenbrock-Wanner methods (allow approximate Jacobian) | | | |
| Rosenbrock23 | 2/3 | L | |
| ROS34PW1a | 4 | L | |
| ROS34PW1b | 4 | L | |
| ROS34PW2 | 4 | L | |
| ROS34PW3 | 4 | A | |
| Implicit Runge-Kutta methods (for stiff problems, low accuracy) | | | |
| Trapezoid | 2 | A | Adaptive time step |
| ImplicitEuler | 1 | L | Adaptive time step |
| Multistep methods (for stiff problems) | | | |
| QNDF1 | 1 | L | Quasi-constant time step |
| QNDF2 | 2 | L | Quasi-constant time step |
| QBDF1 | 1 | L | |
| QBDF2 | 2 | L | |
| QNDF | 1–5 | L | |

For example, the DifferentialEquations.jl package [5] provides four categories of methods that naturally support the mass-matrix formulation: Rosenbrock methods and Rosenbrock-Wanner methods (for small stiffness systems), and Implicit Runge-Kutta methods and multistep methods (for stiff problems). An overview of the interfaced solvers in this work is shown in Table I, where “A” or “L” in the stability column indicates an algorithm being A-stable or L-stable [6]. Details of the solvers can be found in [5] and the accompanying documentation.

The proposed work has been implemented in ANDES [4], a Python-based hybrid symbolic-numeric library for power systems, and interfaced with the solvers in the Julia language with the Jacobian callback provided. Case studies utilize Python 3.7.6, ANDES 1.0.8, Julia 1.5.0, and DifferentialEquations 6.15.0. Simulations are executed on an Intel Xeon W-2133 CPU with 32 GiB of RAM running Ubuntu 16.04 LTS.

III-B Solver Benchmarks and Statistics

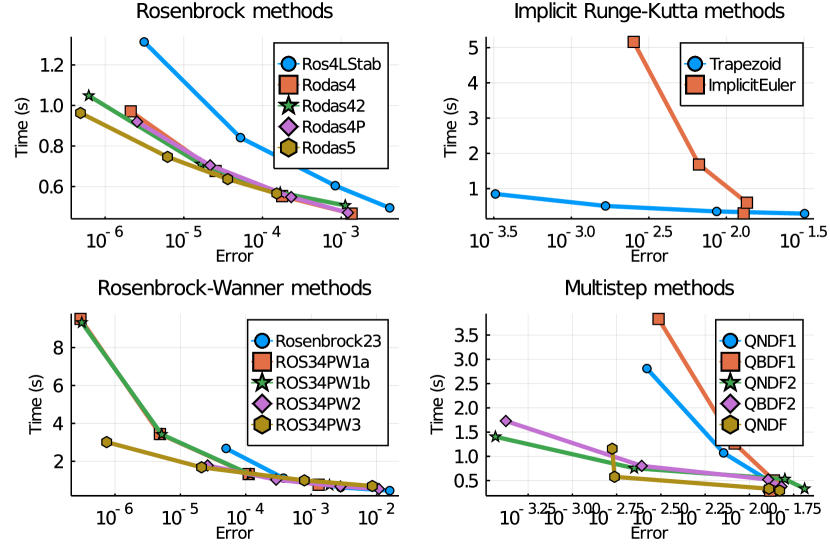

First, Kundur’s system modeled in the proposed formulation is used for solver benchmarking. The system contains 52 differential states and 140 algebraic variables, and the rank of the mass matrix is 48 (two generators are reduced by setting time constants to zero). The response following a line trip at s and a reconnection after 50 ms is simulated for 5 s. The baseline “accurate” solution is obtained using Rodas5, which is efficient at high accuracy, with both absolute and relative tolerances set to . Next, each solver is given four pairs of absolute and relative tolerances, chosen from , and , respectively. Errors are obtained as the difference between the accurate and the actual solutions at the final simulation step. Each case is run five times to compute the average time. The results are shown in Figure 1, and some observations are:

1. The commonly used Trapezoid method is balanced in speed and accuracy. ImplicitEuler is neither efficient nor accurate.

2. Depending on the stiffness, the Rosenbrock methods can be faster than the Trapezoid method at some accuracy levels.

3. The Rosenbrock-Wanner methods allow approximate Jacobians. When accurate Jacobians are used (in our case and most other transient stability simulation tools), the Rosenbrock-Wanner methods tend to be slower than the Rosenbrock ones.

4. The QNDF method has similar speed and accuracy to the Trapezoid method. QBDF1 performs similarly to ImplicitEuler.
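The benchmarking procedure (error at the final simulation step against an accurate baseline, and time averaged over five runs) can be sketched as follows. This is only an illustration under stated assumptions: a hand-written fixed-step implicit trapezoidal integrator for a hypothetical lag block stands in for the interfaced solvers, step sizes stand in for the tolerance pairs because the toy integrator has no error control, and a very fine step stands in for the tight-tolerance baseline. All names and values are hypothetical.

```python
import time


def itm_lag_final(h, t_end=2.0, T=0.5, K=2.0):
    """Final state of T * dx/dt = K - x, x(0) = 0, by fixed-step implicit trapezoid."""
    n_steps = round(t_end / h)
    x = 0.0
    for _ in range(n_steps):
        x = (T * x + 0.5 * h * (2.0 * K - x)) / (T + 0.5 * h)
    return x


def benchmark(solver, settings, reference, repeats=5):
    """Return (setting, final-step error, average wall time) for each setting."""
    rows = []
    for setting in settings:
        elapsed = []
        for _ in range(repeats):
            start = time.perf_counter()
            result = solver(setting)
            elapsed.append(time.perf_counter() - start)
        rows.append((setting, abs(result - reference), sum(elapsed) / repeats))
    return rows


# Baseline "accurate" solution from a much finer step (assumption of this sketch).
reference = itm_lag_final(1e-4)
rows = benchmark(itm_lag_final, [0.1, 0.05, 0.025, 0.0125], reference)

for setting, error, seconds in rows:
    print(f"h = {setting:<7} error = {error:.2e}  time = {seconds:.2e} s")
```

Plotting the error against the average time for each solver and setting gives a work-precision diagram of the kind used to compare solvers.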

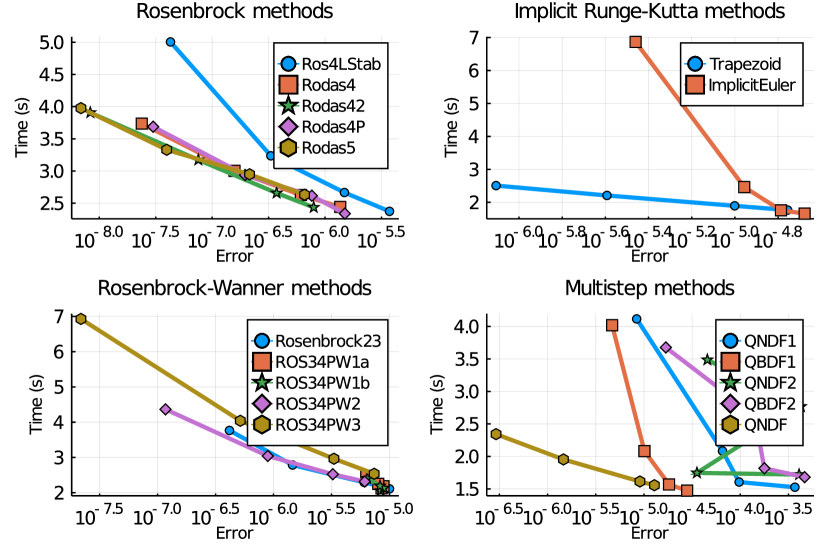

Next, the Great Britain (GB) 2,224-bus system [7] with dynamics is used for benchmarking. The system contains 788 state variables and 9,176 algebraic variables. A disturbance of line trip at s and a reconnection after 100 ms is simulated for 5 s. Benchmark results are shown in Figure 2. The observations from Kundur’s system also apply to this case. For a different system, one can perform similar benchmarks to identify the solvers that best satisfy the speed and accuracy requirements.

IV Conclusions

This letter proposes a mass-matrix formulation for transient stability simulation with the advantages of being compatible with the traditional formulation, compatible with a multitude of solvers, and simple to implement. Modeling examples for common transfer functions and synchronous generators are shown, and the implicit trapezoidal method is derived for solving DAEs in the mass-matrix formulation. The solver compatibility is verified using 17 solvers benchmarked on two test systems. In conclusion, the compatibility and simplicity make the proposed method highly suitable for transient stability simulation.

References

- [1] P. Aristidou, D. Fabozzi, and T. Van Cutsem, “Dynamic simulation of large-scale power systems using a parallel Schur-complement-based decomposition method,” IEEE Transactions on Parallel and Distributed Systems, vol. 25, no. 10, pp. 2561–2570, 2013.

- [2] F. Milano, “Semi-implicit formulation of differential-algebraic equations for transient stability analysis,” IEEE Transactions on Power Systems, vol. 31, no. 6, pp. 4534–4543, 2016.

- [3] J. H. Chow and J. J. Sanchez-Gasca, Power System Modeling, Computation, and Control. John Wiley & Sons, 2020.

- [4] H. Cui, F. Li, and K. Tomsovic, “Hybrid symbolic-numeric library for power system modeling and analysis,” arXiv preprint arXiv:2002.09455, 2020.

- [5] C. Rackauckas and Q. Nie, “DifferentialEquations.jl – A performant and feature-rich ecosystem for solving differential equations in Julia,” Journal of Open Research Software, vol. 5, no. 1, 2017.

- [6] E. Hairer and G. Wanner, Solving Ordinary Differential Equations II. Springer Berlin Heidelberg, 1996.

- [7] The University of Edinburgh. (2020, Aug.) GB network. [Online]. Available: https://www.maths.ed.ac.uk/optenergy/NetworkData/fullGB/