Maximum Volume Subset Selection for Anchored Boxes

Abstract

Let $B$ be a set of $n$ axis-parallel boxes in $\mathbb{R}^d$ such that each box has a corner at the origin and the other corner in the positive quadrant of $\mathbb{R}^d$, and let $k$ be a positive integer. We study the problem of selecting $k$ boxes in $B$ that maximize the volume of the union of the selected boxes. This research is motivated by applications in skyline queries for databases and in multicriteria optimization, where the problem is known as the hypervolume subset selection problem. It is known that the problem can be solved in polynomial time in the plane, while the best known running time in any dimension $d \ge 3$ is $\Omega\big(\binom{n}{k}\big)$. We show that:

• The problem is NP-hard already in 3 dimensions.

• In 3 dimensions, we break the bound $\Omega\big(\binom{n}{k}\big)$ by providing an $n^{O(\sqrt{k})}$ algorithm.

• For any constant dimension $d$, we present an efficient polynomial-time approximation scheme.

1 Introduction

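An anchored box is an orthogonal range of the form $\mathrm{box}(p) := [0,p_1] \times \ldots \times [0,p_d] \subseteq \mathbb{R}^d_{\ge 0}$, spanned by the point $p \in \mathbb{R}^d_{>0}$. This paper is concerned with the problem Volume Selection: Given a set $P$ of $n$ points in $\mathbb{R}^d_{>0}$, select $k$ points in $P$ maximizing the volume of the union of their anchored boxes. That is, we want to compute

$$\mathrm{VolSel}(P,k) := \max_{S \subseteq P,\, |S| = k} \mathrm{vol}\Big(\bigcup_{p \in S} \mathrm{box}(p)\Big),$$

as well as a set $S \subseteq P$ of size $k$ realizing this value. Here, vol denotes the usual volume.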

Motivation

This geometric problem is of key importance in the context of multicriteria optimization and decision analysis, where it is known as the hypervolume subset selection problem (HSSP) [2, 3, 4, 24, 12, 13]. In this context, the points in $P$ correspond to solutions of an optimization problem with $d$ objectives, and the goal is to find a small subset of $P$ that “represents” the set $P$ well. The quality of a representative subset $S \subseteq P$ is measured by the volume of the union of the anchored boxes spanned by points in $S$; this is also known as the hypervolume indicator [36]. Note that with this quality indicator, finding the optimal size-$k$ representation is equivalent to our problem Volume Selection. In applications, such bounded-size representations are required in archivers for non-dominated sets [23] and for multicriteria optimization algorithms and heuristics [3, 10, 7]. (We remark that in these applications the anchor point is often not the origin; however, by a simple translation we can move our anchor point from $(0,\ldots,0)$ to any other point in $\mathbb{R}^d$.) Besides, the problem has recently received attention in the context of skyline operators in databases [17].

In 2 dimensions, the problem can be solved in polynomial time [2, 13, 24], which is used in applications such as analyzing benchmark functions [2] and efficient postprocessing of multiobjective algorithms [12]. A natural question is whether efficient algorithms also exist in dimension $d \ge 3$, and thus whether these applications can be pushed beyond two objectives.

In this paper, we answer this question negatively, by proving that Volume Selection is NP-hard already in 3 dimensions. We then consider the question whether the previous $\Omega\big(\binom{n}{k}\big)$ bound can be improved, which we answer affirmatively in 3 dimensions. Finally, for any constant dimension, we improve the best-known $(1-1/e)$-approximation to an efficient polynomial-time approximation scheme (EPTAS). See Section 1.2 for details.

1.1 Further Related Work

Klee's Measure Problem

Computing the volume of the union of $n$ (not necessarily anchored) axis-aligned boxes in $\mathbb{R}^d$ is known as Klee's measure problem. The fastest known algorithm takes time $O(n^{d/2})$, which can be improved if all boxes are cubes [15]. (In $O$-notation, we always assume $d$ to be a constant, and $\log(x)$ is to be understood as $\max\{1, \log(x)\}$.) By a simple reduction [8], the same running time as on cubes can be obtained on anchored boxes, which can be improved to $O(n \log n)$ for $d = 3$ [6]. These results are relevant to this paper because Klee's measure problem on anchored boxes (spanned by the points in $P$) is a special case of Volume Selection (obtained by choosing $k = |P|$).

Chan [14] gave a reduction from $k$-Clique to Klee's measure problem in $2k$ dimensions. This proves NP-hardness of Klee's measure problem when $d$ is part of the input (and thus $d$ can be as large as $n$). Moreover, since $k$-Clique has no $f(k) \cdot n^{o(k)}$-time algorithm under the Exponential Time Hypothesis [16], Klee's measure problem has no $f(d) \cdot n^{o(d)}$-time algorithm under the same assumption. The same hardness results also hold for Klee's measure problem on anchored boxes, by a reduction in [8] (NP-hardness was first proven in [11]).

Finally, we mention that Klee's measure problem has a very efficient randomized $(1 \pm \varepsilon)$-approximation algorithm with error probability $\delta$ [9].

Known Results for Volume Selection

As mentioned above, 2-dimensional Volume Selection can be solved in polynomial time; the initial algorithm [2] was later improved [13, 24]. In higher dimensions, by enumerating all size-$k$ subsets and solving an instance of Klee's measure problem on anchored boxes for each one, there is an algorithm whose running time is at least proportional to the number $\binom{n}{k}$ of such subsets. For small $n-k$, this can be improved [10]. Volume Selection is NP-hard when $d$ is part of the input, since the same holds already for Klee's measure problem on anchored boxes. However, this does not explain the exponential dependence on $k$ for constant $d$.
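To illustrate why the planar case is tractable, here is a minimal sketch of a straightforward quadratic-time dynamic program. It is not necessarily the algorithm of [2], [13] or [24]; the function name `vol_sel_2d` and the table layout are assumptions made for this example. After discarding dominated points, the remaining points form a staircase, and the area of a selection decomposes into vertical strips, one per chosen point.

```python
def vol_sel_2d(points, k):
    """Best area of the union of at most k anchored rectangles (illustrative sketch)."""
    # Keep only non-dominated points: scan by decreasing x, keep strictly rising y.
    front, best_y = [], 0
    for x, y in sorted(set(points), key=lambda p: (-p[0], -p[1])):
        if y > best_y:
            front.append((x, y))
            best_y = y
    front.reverse()  # now x is strictly increasing and y strictly decreasing
    m = len(front)
    if m == 0 or k <= 0:
        return 0

    # prev[i]: best area using exactly j points whose rightmost chosen point is front[i].
    prev = [x * y for x, y in front]
    best = max(prev)
    for _ in range(2, min(k, m) + 1):
        cur = [float("-inf")] * m
        for i, (x, y) in enumerate(front):
            for i2 in range(i):
                # Adding front[i] to the right of front[i2] contributes a strip
                # of width x - x_{i2} and height y.
                cand = prev[i2] + (x - front[i2][0]) * y
                if cand > cur[i]:
                    cur[i] = cand
        best = max(best, max(cur))
        prev = cur
    return best
```

The sketch runs in time $O(kn^2)$ after sorting; it relies on the fact that selecting a dominated point never increases the area.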

Since the volume of the union of boxes is a submodular function (see, e.g., [33]), the greedy algorithm for submodular function maximization [28] yields a $(1-1/e)$-approximation of the optimal value. This algorithm solves $O(nk)$ instances of Klee's measure problem on at most $k$ anchored boxes, which determines its running time. Using [9], this running time improves, at the cost of decreasing the approximation ratio to $1-1/e-\varepsilon$ and introducing an error probability $\delta$. See [20] for related results in low dimensions.
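The greedy strategy can be sketched as follows. This is an illustration under simplifying assumptions: the helper `union_volume` uses a naive coordinate-compressed grid rather than any of the fast algorithms for Klee's measure problem cited above, and both function names are hypothetical. In each of at most $k$ rounds, the point with the largest marginal gain in volume is added.

```python
from itertools import product


def union_volume(points):
    """Volume of the union of anchored boxes, via a coordinate-compressed grid."""
    points = list(points)
    if not points:
        return 0.0
    d = len(points[0])
    # Breakpoints along each axis: 0 and every coordinate that occurs.
    axes = [sorted({0.0} | {float(p[i]) for p in points}) for i in range(d)]
    total = 0.0
    for idx in product(*(range(1, len(a)) for a in axes)):
        upper = [axes[i][j] for i, j in enumerate(idx)]
        # A grid cell is covered iff some point dominates its upper corner.
        if any(all(p[i] >= upper[i] for i in range(d)) for p in points):
            cell = 1.0
            for i, j in enumerate(idx):
                cell *= axes[i][j] - axes[i][j - 1]
            total += cell
    return total


def greedy_volume_selection(points, k):
    """Pick up to k points, each time adding the one with the largest volume gain."""
    chosen, remaining, current = [], list(points), 0.0
    for _ in range(min(k, len(remaining))):
        gains = [(union_volume(chosen + [p]) - current, i)
                 for i, p in enumerate(remaining)]
        best_gain, best_index = max(gains, key=lambda g: g[0])
        chosen.append(remaining.pop(best_index))
        current += best_gain
    return current, chosen
```

Each round evaluates the volume once per remaining candidate, which matches the count of $O(nk)$ volume computations on at most $k$ boxes.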

A problem closely related to Volume Selection is Convex Hull Subset Selection: Given $n$ points in $\mathbb{R}^d$, select $k$ points that maximize the volume of their convex hull. For this problem, NP-hardness was recently announced in the case $d = 3$ [30].

1.2 Our Results

In this paper we push forward the understanding of Volume Selection. We prove that Volume Selection is NP-hard already for $d = 3$ (Section 3). Previously, NP-hardness was only known when $d$ is part of the input and thus can be as large as $n$. Moreover, this establishes Volume Selection as another example of a problem that can be solved in polynomial time in the plane but is NP-hard in three or more dimensions (see also [5, 26]).

In the remainder, we focus on the regime where $d \ge 3$ is a constant and $k \ll n$. All known algorithms (explicitly or implicitly) enumerate all size-$k$ subsets of the input set and thus take time $\Omega\big(\binom{n}{k}\big)$. In 3 dimensions, we break this time bound by providing an $n^{O(\sqrt{k})}$ algorithm (Section 4). To this end, we project the 3-dimensional Volume Selection to a 2-dimensional problem and then use planar separator techniques.

Finally, in Section 5 we design an EPTAS for Volume Selection. More precisely, we present a $(1-\varepsilon)$-approximation algorithm for any constant dimension $d$. Note that the “combinatorial explosion” is restricted to $d$ and $\varepsilon$; for any constant $d$ and $\varepsilon$, the running time is polynomial in $n$ and $k$. This improves the previously best-known $(1-1/e)$-approximation, even in terms of running time.

2 Preliminaries

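All boxes considered in the paper are axis-parallel and anchored at the origin. For points $p = (p_1,\ldots,p_d), q = (q_1,\ldots,q_d) \in \mathbb{R}^d$, we say that $p$ dominates $q$ if $p_i \ge q_i$ for all $1 \le i \le d$. For $p = (p_1,\ldots,p_d) \in \mathbb{R}^d_{>0}$, we let $\mathrm{box}(p) := [0,p_1] \times \ldots \times [0,p_d]$. Note that $\mathrm{box}(p)$ is the set of all points $q \in \mathbb{R}^d_{\ge 0}$ that are dominated by $p$. A point set $P$ is a set of points in $\mathbb{R}^d_{>0}$. We denote the union $\bigcup_{p \in P} \mathrm{box}(p)$ by $U(P)$. The usual Euclidean volume is denoted by vol. With this notation, we set

$$\mu(P) := \mathrm{vol}(U(P)) = \mathrm{vol}\Big(\bigcup_{p \in P} \mathrm{box}(p)\Big).$$

We study Volume Selection: Given a point set $P$ of size $n$ and $0 \le k \le n$, compute

$$\mathrm{VolSel}(P,k) := \max_{S \subseteq P,\, |S| = k} \mu(S).$$

Note that we can relax the requirement $|S| = k$ to $|S| \le k$ without changing this value.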
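As a concrete reference point for these definitions, the following sketch evaluates the volume of a union of anchored boxes by inclusion-exclusion and solves Volume Selection by exhaustive enumeration. The function names are hypothetical, and the code is exponential in the input size; it is meant only to make the objective precise on tiny instances. It uses the fact that the intersection of anchored boxes is again an anchored box, spanned by the coordinate-wise minimum.

```python
from itertools import combinations


def union_volume(points):
    """Volume of the union of anchored boxes, by inclusion-exclusion."""
    points = list(points)
    total = 0.0
    for r in range(1, len(points) + 1):
        sign = 1.0 if r % 2 == 1 else -1.0
        for subset in combinations(points, r):
            # The boxes in `subset` intersect in the anchored box spanned by
            # the coordinate-wise minimum of their spanning points.
            vol = 1.0
            for coords in zip(*subset):
                vol *= min(coords)
            total += sign * vol
    return total


def vol_sel_bruteforce(points, k):
    """Try every subset of size min(k, n) and return (best volume, best subset)."""
    points = list(points)
    best_value, best_subset = 0.0, ()
    for subset in combinations(points, min(k, len(points))):
        value = union_volume(subset)
        if value > best_value:
            best_value, best_subset = value, subset
    return best_value, best_subset
```

For example, for the planar points $(1,4)$, $(2,2)$, $(4,1)$ and $k = 2$, the best choice is $\{(1,4), (4,1)\}$ with area $4 + 4 - 1 = 7$.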

3 Hardness in 3 Dimensions

We consider the following decision variant of 3-dimensional Volume Selection.

3d Volume Selection

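Input: A triple $(P, k, V)$, where $P$ is a set of points in $\mathbb{R}^3_{>0}$, $k$ is a positive integer and $V$ is a positive real value.

Question: Is there a subset $S \subseteq P$ of $k$ points such that $\mathrm{vol}\big(\bigcup_{p \in S} \mathrm{box}(p)\big) \ge V$?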

We are going to show that the problem is NP-complete. First, we show that an intermediate problem about selecting a large independent set in a given induced subgraph of the triangular grid is NP-hard. The reduction for this problem is from independent set in planar graphs of maximum degree $3$. Then we argue that this problem can be embedded using boxes whose points lie in two parallel planes. One plane is used to define the triangular-grid-like structure and the other is used to encode the subset of vertices that describe the induced subgraph of the grid.

3.1 Triangular Grid

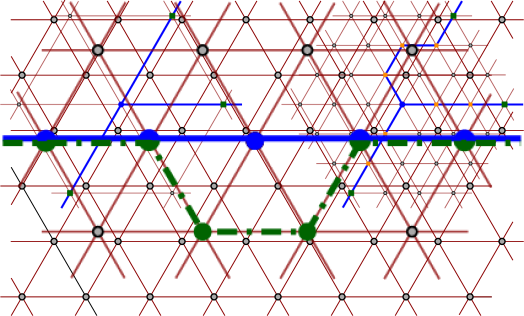

Let be the infinite graph with vertex set and edge set (see Figure 1)

First we show that the following intermediate problem, which is closely related to independent set, is NP-hard.

Independent Set on Induced Triangular Grid

Input: A pair , where is a subset of and is a positive integer.

Question: Is there a subset of size such that no two vertices in are connected by an edge of ?

Lemma 3.1.

Independent Set on Induced Triangular Grid is NP-complete.

Proof.

It is obvious that the problem is in NP.

Garey and Johnson [19] show that the problem Vertex Cover is NP-complete for planar graphs of degree at most $3$. Since a subset $U \subseteq V(G)$ is a vertex cover of a graph $G$ if and only if $V(G) \setminus U$ is an independent set of $G$, it follows that the problem Independent Set is NP-complete for planar graphs of degree at most $3$. For the rest of the proof, let $G$ be a planar graph of degree at most $3$.

Let us define a -representation of to be a pair , where and is a mapping, with the following properties:

• Each vertex of is mapped to a distinct vertex of .

• Each edge of is mapped to a simple path contained in and connecting to .

• For each two distinct edges and of , the paths and are disjoint except at the common endpoints .

• The graph is precisely the union of and over all vertices and edges of .

Note that if is a -representation of then is a subdivision of . The map identifies which parts of correspond to which parts of .

A planar graph with vertices and maximum degree (and also ) can be drawn in a square grid of polynomial size, and such a drawing can be obtained in polynomial time, see, e.g., the results by Storer [31] or by Tamassia and Tollis [32]. Applying the shear mapping to the plane, the square grid becomes a subgraph of . Therefore, we can obtain a -representation of of polynomial size. Note that we only use edges of that are horizontal or have positive slope; edges of with negative slope are not used.

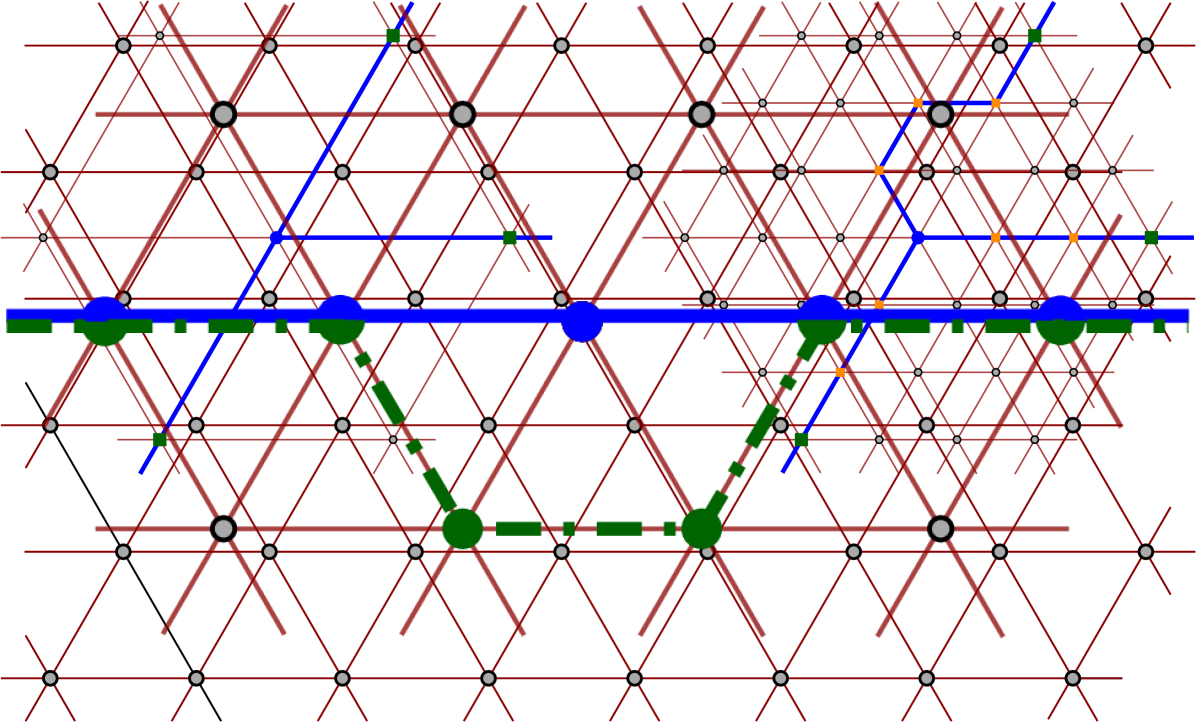

Next, we obtain another -representation such that is an induced subgraph of . Induced means that two vertices of are connected with an edge in if and only if the edge exists in . For this, we first scale up the -representation by a factor so that each edge of becomes a 2-edge path. The new vertices used in the subdivision have degree and its incident edges have the same orientation. After the subdivision, vertices of degree look like in Figure 2. Scaling up the figure by a factor of , and rerouting within a small neighbourhood of each vertex that was already in , we obtain a -representation such that is an induced subgraph of . See Figure 2 for an example of such a local transformation.

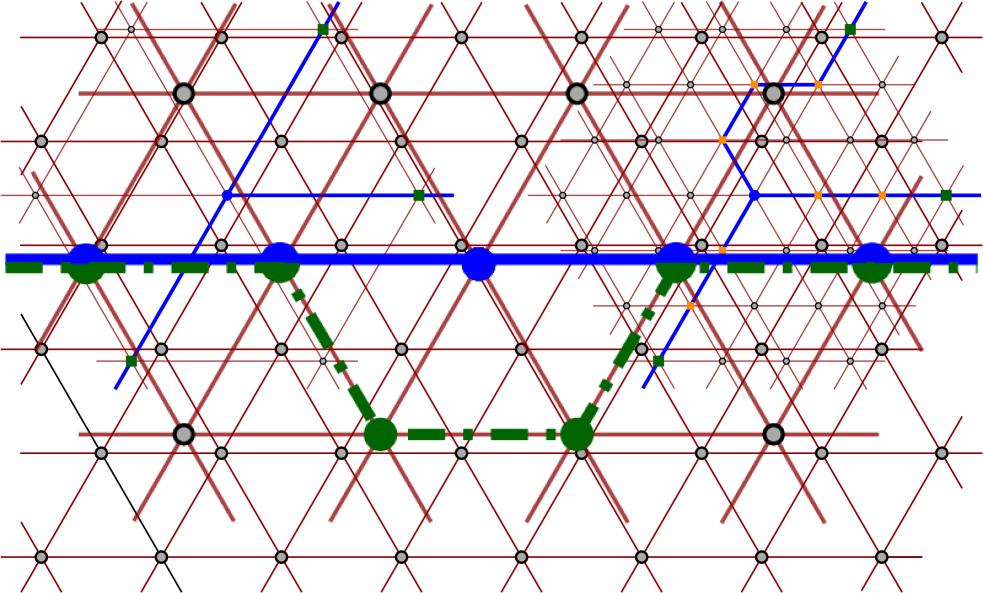

Now we have a -representation such that is an induced subgraph of . We want to obtain another -representation where for each edge the path uses an even number of interior edges. For this, we can slightly reroute each path that has an odd number of interior points, see Figure 3. To make sure that the graph is still induced, we can first scale up the situation by a factor , and then reroute all the edges that use an odd number of interior vertices. (This is actually all the edges because of the scaling.) Let be the resulting -representation of . Note that is an induced subgraph of and it is a subdivision of where each edge is subdivided an even number of times.

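Let $\alpha(H)$ denote the size of the largest independent set in a graph $H$. For each edge $e$ of $G$, let $k_e$ be the number of internal vertices in the path representing $e$; by construction, each $k_e$ is even. Then the largest independent set of the subdivided graph has size $\alpha(G) + \sum_{e} k_e/2$, where the sum ranges over the edges of $G$. Indeed, we can obtain the subdivided graph from $G$ by repeatedly replacing an edge by a $3$-edge path, i.e., making 2 subdivisions on the same edge. Moreover, any such replacement increases the size of the largest independent set by exactly 1.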

It follows that the problem Independent Set is NP-complete in induced subgraphs of the triangular grid . This is precisely the problem Independent Set on Induced Triangular Grid, where we take to be the set of vertices defining the induced subgraph. ∎
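The counting step used in the proof above, namely that subdividing an edge twice increases the independence number by exactly 1, can be checked by brute force on small graphs. The sketch below is only a sanity check under hypothetical names (`independence_number`, `subdivide_twice`), not part of the reduction itself.

```python
from itertools import combinations


def independence_number(vertices, edges):
    """Size of a largest independent set, by exhaustive search (tiny graphs only)."""
    vertices = list(vertices)
    edge_set = {frozenset(e) for e in edges}
    for size in range(len(vertices), 0, -1):
        for cand in combinations(vertices, size):
            if all(frozenset(pair) not in edge_set
                   for pair in combinations(cand, 2)):
                return size
    return 0


def subdivide_twice(vertices, edges, edge):
    """Replace `edge` = (u, v) by a 3-edge path u - a - b - v with new vertices a, b."""
    u, v = edge
    a, b = ("sub", u, v, 1), ("sub", u, v, 2)
    new_edges = [e for e in edges if frozenset(e) != frozenset(edge)]
    new_edges += [(u, a), (a, b), (b, v)]
    return list(vertices) + [a, b], new_edges
```

For instance, a triangle has independence number 1, and subdividing one of its edges twice gives a 5-cycle, whose independence number is 2.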

3.2 The Point Set

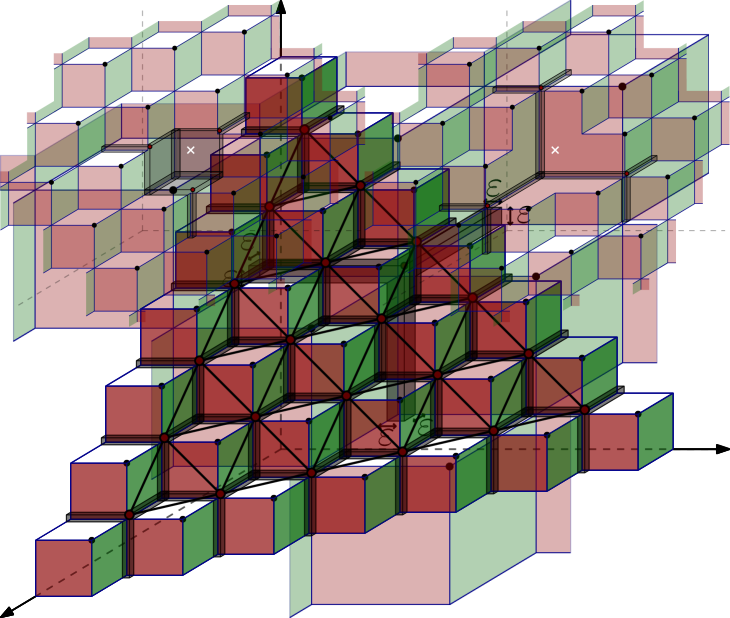

Let be an arbitrary integer and consider the point set defined by (see Figure 4)

Standard induction shows that the set has points and that

This last number appears as sequence A000292, tetrahedral (or triangular pyramidal) numbers, in [27].

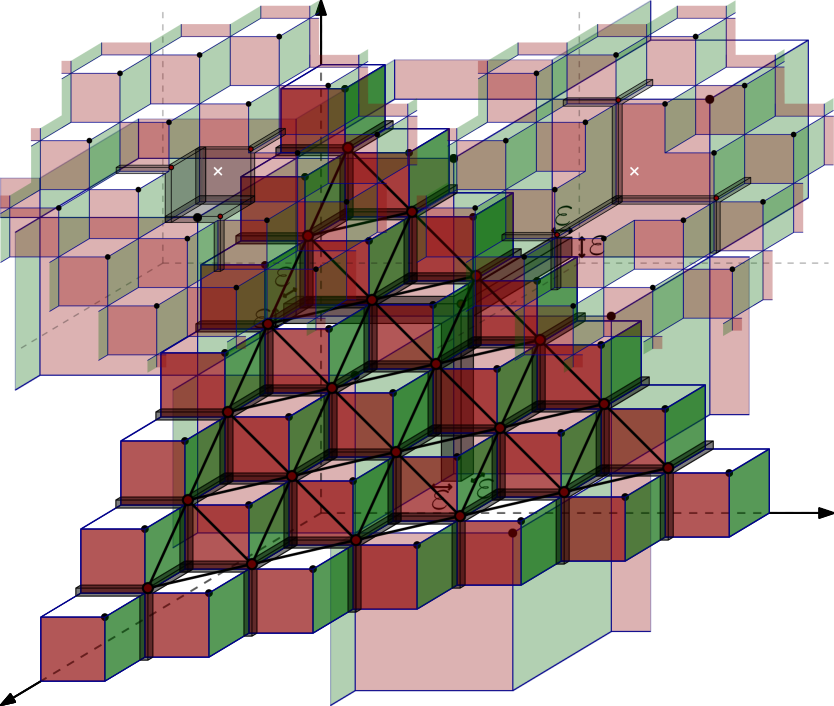

Consider the real number , and define the vector . Note that is much smaller than . For each point , consider the point , see Figure 5. Let us define the set to be

It is clear that has points, for . The points of lie on the plane .

For each point of define

Note that is the union of boxes of size and a cube of size , see Figure 5. To get the intuition for the following lemma, see Figure 6.

Lemma 3.2.

Consider any .

• If the sets , for all , are pairwise disjoint, then .

• If contains two points and such that and intersect, then .

Proof.

Note that for each we have

If the sets are pairwise disjoint then

Consider now the case when contains two points and such that and intersect. The geometry of the point set implies that and intersect in a cube of size , see Figure 6. Therefore, we have

∎

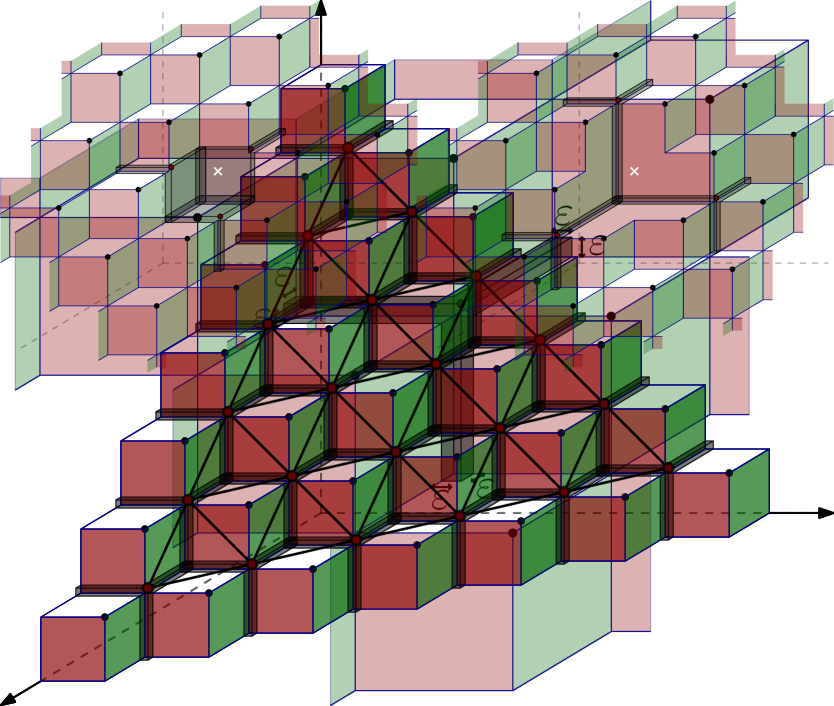

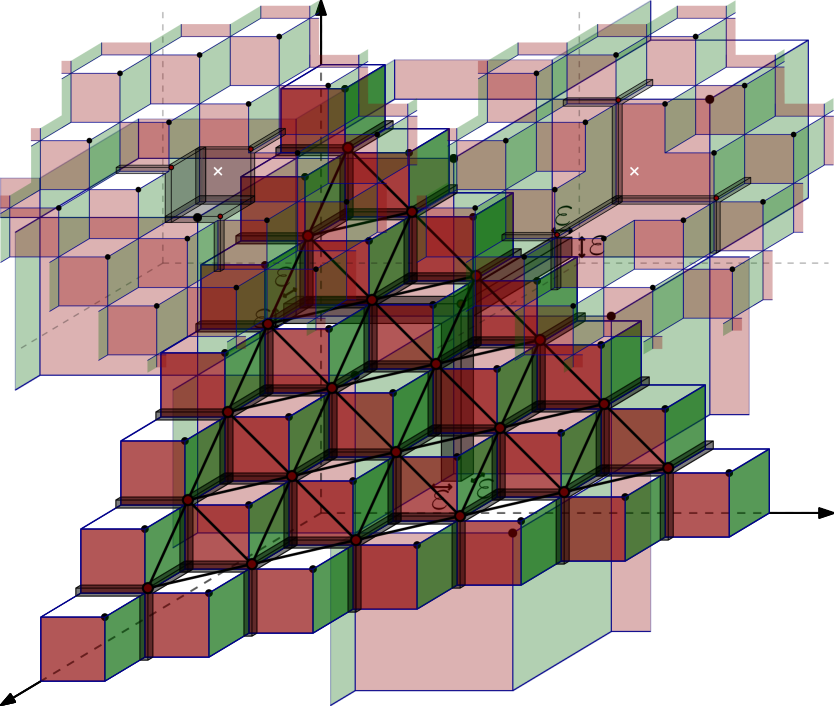

We can define naturally a graph on the set by using the intersection of the sets . The vertex set of is , and two points define an edge of if and only if and intersect, see Figure 7. Simple geometry shows that is isomorphic to a part of the triangular grid . Thus, choosing large enough, we can get an arbitrarily large portion of the triangular grid . Note that a subset of vertices is independent in if and only if the sets are pairwise disjoint.

We next show that picking points in has higher priority than picking points in .

Lemma 3.3.

Let be a subset of such that is not empty. Then .

Proof.

Assume that contains exactly one point, denoted by . Having a smaller set can only decrease the value of . Then

Consider the sets of points

Figure 8 is useful for the following computations. For each point we have

For each point we have

Using that because , we get

For all points of we have

We thus have

where the last step uses . ∎

3.3 The Reduction

We are now ready to prove NP-completeness of 3d Volume Selection.

Theorem 3.4.

The problem 3d Volume Selection is NP-complete.

Proof.

It is obvious that the problem is in NP. To show hardness we reduce from the problem Independent Set on Induced Triangular Grid, shown to be NP-complete in Lemma 3.1.

Consider an instance to Independent Set on Induced Triangular Grid, where is a subset of the vertices of the triangular grid and is an integer. Take large enough so that is isomorphic to an induced subgraph of that contains . Recall that . For each vertex of let be the corresponding vertex of . For each subset of , let be the subset of that corresponds to , that is, .

Consider the set of points , the parameter , and the value . We claim that is a yes-instance for Independent Set on Induced Triangular Grid if and only if is a yes-instance for 3d Volume Selection.

If is a yes-instance for Independent Set on Induced Triangular Grid, there is a subset of independent vertices in . This implies that is an independent set in , that is, the sets are pairwise disjoint. Lemma 3.2 then implies that

Therefore is a subset of with points such that . It follows that is a yes-instance for 3d Volume Selection.

Assume now that is a yes-instance for 3d Volume Selection. This means that contains a subset of points such that

Because of Lemma 3.3, it must be that is contained in , as otherwise we would have . Since we have and , we obtain that is for some . Moreover, . By Lemma 3.2, if is not an independent set in , we have

which contradicts the assumption that . Therefore it must be that is an independent set in . It follows that has size and forms an independent set in , and thus is a yes-instance for Independent Set on Induced Triangular Grid. ∎

4 Exact Algorithm in 3 Dimensions

In this section we design an algorithm to solve Volume Selection in 3 dimensions in time $n^{O(\sqrt{k})}$. The main insight is that, for an optimal solution $S^*$, the boundary of the union of the boxes spanned by $S^*$ is a planar graph with $O(k)$ vertices, and therefore has a balanced separator with $O(\sqrt{k})$ vertices. We would like to guess the separator, break the problem into two subproblems, and solve each of them recursively. This basic idea raises a few technical challenges. One obstacle is that the subproblems must be truly independent, because we do not want to double count some covered parts. Essentially, a separator in the graph-theoretic sense does not imply independent subproblems in our context. Another technicality is that some of the subproblems that we encounter recursively cannot be solved optimally; we can only get a lower bound on the optimal value. However, for the subproblems that define the optimal solution at the higher level of the recursion, we do compute an optimal solution.

Let be a set of points in the positive quadrant of . Through our discussion, we will assume that is fixed and thus drop the dependency on and from the notation. We can assume that no point of is dominated by another point of . Using an infinitesimal perturbation of the points, we can assume that all points have all coordinates different. Indeed, we can replace each point by the point , where is a different integer for each point of and is an infinitesimal value or a value that is small enough.
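The assumption that no input point is dominated by another can be enforced by a simple preprocessing pass. The quadratic-time sketch below uses the hypothetical name `non_dominated` and is meant only to illustrate the dominance relation from Section 2; it does not perform the infinitesimal perturbation described above.

```python
def non_dominated(points):
    """Return the points that are not dominated by any other distinct point."""
    pts = list(set(points))  # identical copies of a point are merged

    def dominates(p, q):
        # p dominates q if p is at least as large as q in every coordinate.
        return p != q and all(a >= b for a, b in zip(p, q))

    return [q for q in pts if not any(dominates(p, q) for p in pts)]
```

Dropping dominated points does not change the optimal value, since the box of a dominated point is contained in the box of the point dominating it.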

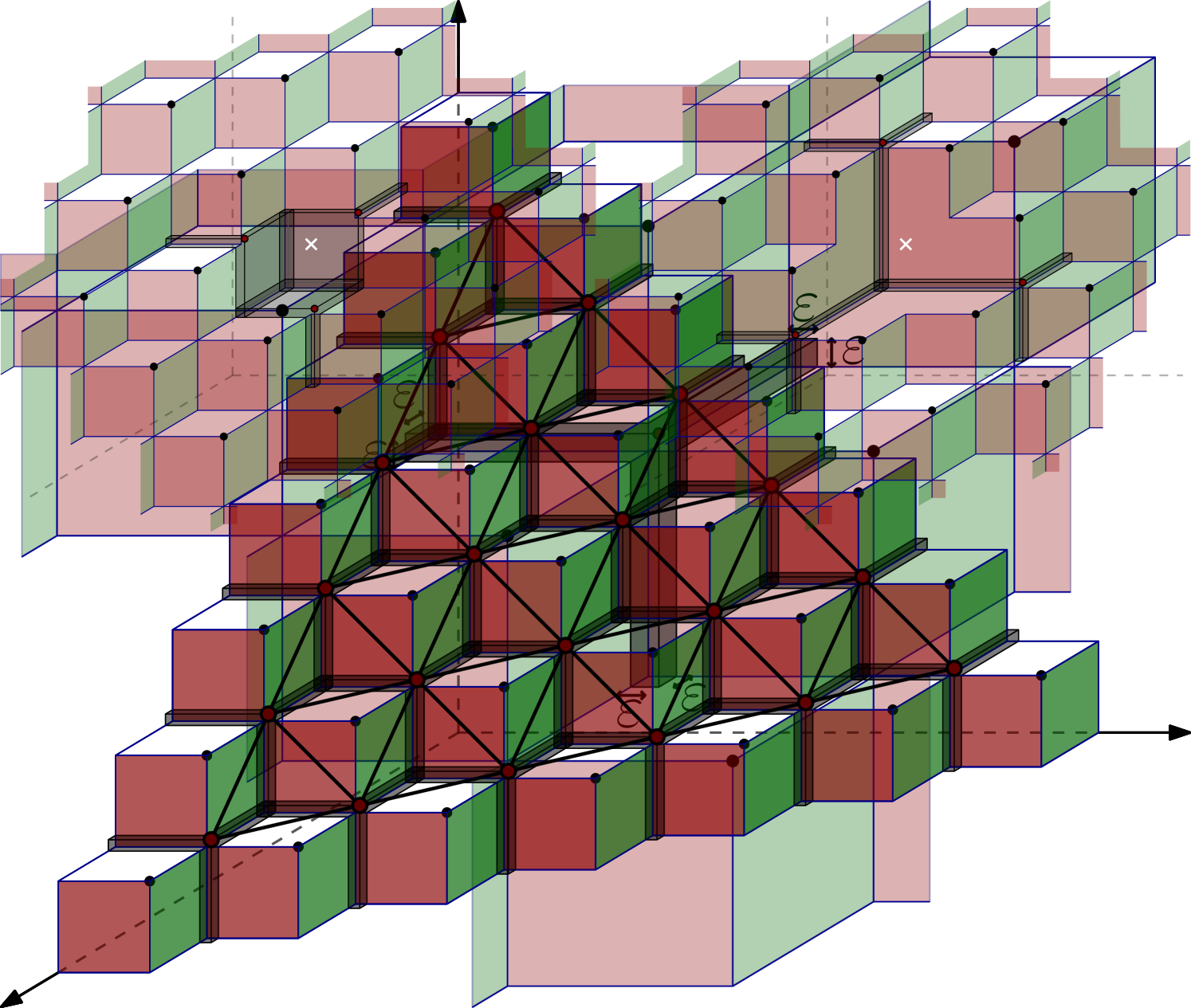

Let be the largest - or -coordinate in , thus . We define to be the square in defined by . It has side length .

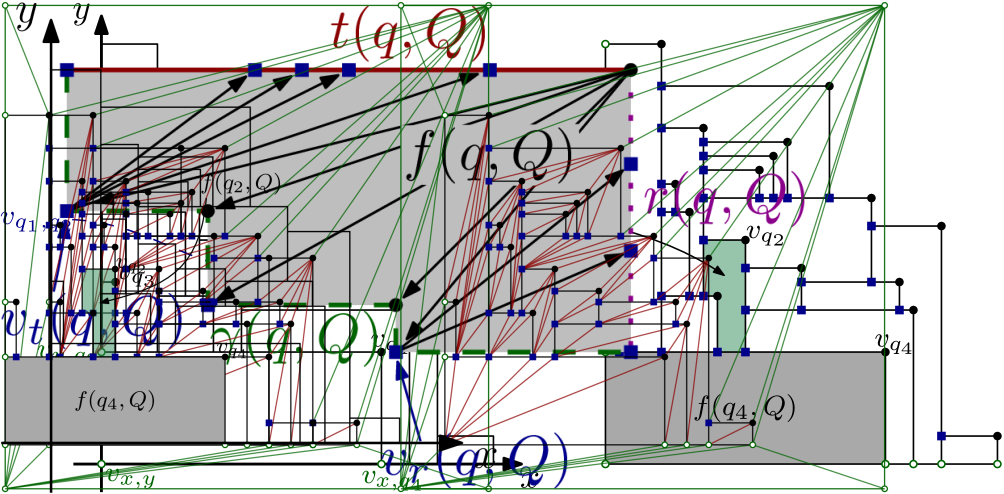

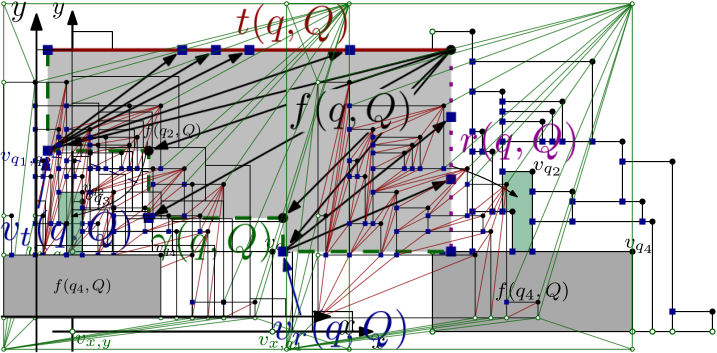

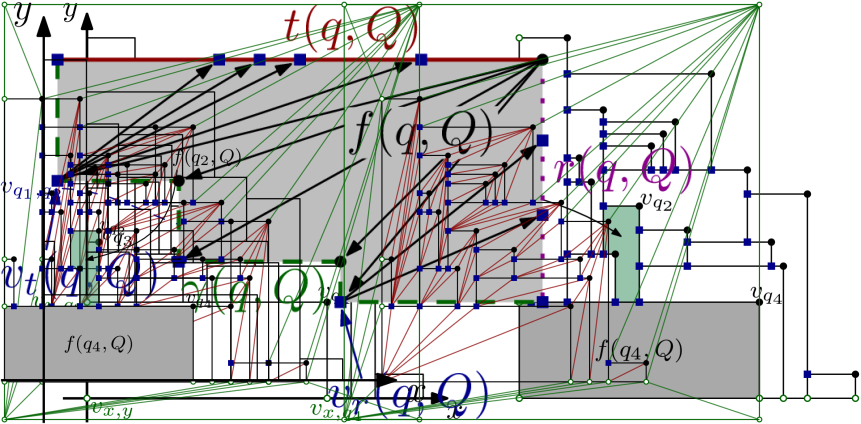

For each subset of , consider the projection of onto the -plane. This defines a plane graph, which we denote by , and which we define precisely in the following, see Figure 9. We consider as a geometric, embedded graph where each vertex is a point and each edge is (drawn as) a straight-line segment, in fact, a horizontal or vertical straight-line segment on the -plane. There are different types of vertices in . The projection of each point defines a vertex, which we denote by . When for two distinct points the boundary of the projection of the boxes and the boundary of the projection of intersect outside the - and -axis, then they do so exactly once because of our assumption on general position, and this defines a vertex that we denote by . (Not all pairs define such a vertex.) Additionally, each point defines a vertex at position and a vertex at position . Finally, we have a vertex placed at the origin. The vertices of are connected in a natural way: the boundary of the visible part of connects the points that appear on that boundary. In particular, the vertices on the -axis are connected and so do those on the -axis. Since we assume general position, each vertex uniquely determines the boxes that define it. Each vertex defines a bounded face in . This is the projection of the face on the boundary of contained in the plane , see Figure 9, right. In fact, each bounded face of is for some .

We triangulate each bounded face of canonically, as follows, see Figure 10. The boundary of a bounded face is made of a top horizontal segment (which may contain several edges of the graph), a right vertical segment (which may contain several edges of the graph), and a monotone path from the top, left corner to the bottom, right corner. Such a monotone path alternates horizontal and vertical segments and has non-decreasing -coordinates and non-increasing -coordinates. Let be the first interior vertex of and let be the last interior vertex of . Note that is the vertex where and meet. We add diagonals from to all interior vertices of , diagonals from to all the interior vertices of and diagonals from to all the interior vertices of . This is the canonical triangulation of the face , and we apply it to each bounded face of .

The outer face of may also have many vertices. We place on top the square , with vertices . From the vertices at and we add all possible edges, while keeping planarity. From the vertex we add the edges to , to , and to the highest vertex on the -axis. Similarly, from the vertex we add the edges to , to , and to the rightmost vertex on the -axis. With such an operation, the outer face is defined by the boundary of the square .

Let be the resulting geometric, embedded graph, see Figure 11. The graph is a triangulation of the square with internal vertices. It is easy to see that and have vertices and edges. For example, one can argue that has faces and no parallel edges, and the graph is a triangulation of with additional vertices. To treat some extreme cases, we also define , as a graph, with the diagonal of positive slope.

A polygonal domain is a subset of the plane defined by a polygon where we remove the interior of some polygons, which form holes. The combinatorial complexity of a domain , denoted by , is the number of vertices and edges used to define it. We say that a polygonal curve or a family of polygonal curves in is -compliant if the edges of of the curves are also edges of . A polygonal domain is -compliant if its boundary is -compliant. Note that a -compliant polygonal domain has combinatorial complexity because the graph has edges.

Consider a set and a -compliant polygonal curve . Let be the points of that participate in the definition of the vertices on . Thus, if is in , we add to ; if is in , we add and to ; if is in , we add to , and so on. Since each vertex on contributes vertices to , we have . For a family of polygonal curves we define . For a polygonal domain with boundary we then have .

Lemma 4.1.

If is a -compliant polygonal curve then, for each with , the curve is also -compliant.

Proof.

For each edge of , the edge is also contained in for all that contain . It follows that has all the edges contained in , and thus contains . ∎

We are going to use dynamic programming based on planar separators of for an optimal solution . A valid tuple to define a subproblem is a tuple , where , is an -compliant polygonal domain, and is a positive integer. The tuple models a subproblem where the points of are already selected to be part of the feasible solution, is a -compliant domain so that we only care about the volume inside the cylinder , and we can still select points from . We have two different values associated to each valid tuple, depending on which subsets of vertices from can be selected:

Obviously, we have for all valid tuples

On the other hand, we are interested in the valid tuple , for which we have .

We would like to get a recursive formula for or using planar separators. More precisely, we would like to use a separator in for an optimal solution, and then branch on all possible such separators. However, none of the two definitions seem good enough for this. If we would use , then we divide into domains that may have too much freedom and the interaction between subproblems gets complex. If we would use , then merging the problems becomes an issue. Thus, we take a mixed route where we argue that, for the valid tuples that are relevant for finding the optimal solution, we actually have .

We start showing how to compute in the obvious way. This will be used to solve the base cases of the recursion.

Lemma 4.2.

We can compute in time.

Proof.

We enumerate all the subsets of with points, and for each such we proceed as follows. We first build and check whether is contained in the edge set of . If it is not, then is not -compliant and we move to the next subset . Otherwise, we compute , its restriction to , and its volume. Standard approaches can be used to do this in time, for example working with the projection onto the -plane. (The actual degree of the polynomial is not relevant.) This procedure enumerates subsets of and for each one spends time. The result follows. ∎

A valid partition of is a collection of valid tuples such that

• for some set ;

• ;

• the domains ,, have pairwise disjoint interiors and ;

• ; and

• for each .

Let be the family of valid partitions for the tuple . We remark that different valid partitions may have different cardinality.

Lemma 4.3.

For each valid tuple we have

Proof.

For any valid partition , let be the smallest set such that for all tuples . This means that for an arbitrary . For each such tuple , let be an optimal solution to , and define

Then from the properties of valid partitions we have

Obviously, is contained in because contains .

We have seen that for each valid partition the set is a feasible solution considered in the problem . Therefore

Using that the interiors of are pairwise disjoint, and then using that is contained in for all , we obtain

Since is optimal for , we obtain the desired

Lemma 4.4.

For each valid tuple we have

Proof.

Let be the optimal solution defining . Thus, has at most points, is -compliant, and

Consider the triangulation . This is a -connected planar graph. Recall that the boundary of is contained in because is -compliant. Note that has vertices.

Assign weight to the vertices , , and weight to the rest of vertices in . The sum of the weights is obviously . Because of the cycle-separator theorem of Miller [25], there is a cycle in with vertices, such that the interior of has at most vertices of and the exterior of has at most vertices of .

Since has vertices, the set also has vertices. Note that . Take , so that is the disjoint union of and . Because of Lemma 4.1, the domain and the cycle are -compliant.

The cycle breaks the domain into at least domains. Let be those domains. Since the boundary of each domain is contained in , each domain is -compliant. For each domain , let and let . Since the interior of is either in the interior or the exterior of , we have for each . Moreover, because the points of that could be counted twice have the corresponding vertex on , but then they also belong to and thus cannot belong to .

The properties we have derived imply that is a valid partition of , and thus . Moreover is a feasible solution for the problem . Indeed, since is -compliant and -compliant, Lemma 4.1 implies that is also -compliant.

Note that, for each in the partition we have

| (1) |

Indeed, for a point , may contribute to the union , but when projected onto the -plane it lies outside the domain because the face lies outside .

Therefore we obtain

where we used . With equation (1), and then using that is feasible for , we get

The statement now follows since . ∎

Our dynamic programming algorithm closely follows the inequality of Lemma 4.4. Specifically, we define for each valid tuple the value

Lemma 4.5.

For each valid tuple we have

Proof.

We show this by induction on . When , then from the definitions we have

This covers the base cases. For larger values of , we use Lemma 4.4, the induction hypothesis, and the definition of to derive

Also for larger values of , we use the definition of , the induction hypothesis, and Lemma 4.3, to derive

∎

Since we know that , Lemma 4.5 implies that . Hence, it suffices to compute using its recursive definition. In the remainder, we bound the running time of this algorithm.

Theorem 4.6.

In 3 dimensions, Volume Selection can be solved in time .

Proof.

We compute using its recursive definition. We need a bound on the number of different subproblems, defined by valid tuples that appear in the recursion. We will see that there are different subproblems.

Starting with , consider a sequence of valid tuples , , such that, for , the tuple appears in some valid partition of . Because of the properties of valid partitions, we have and .

Let be the first index with . Consider first the indices , where . Then and it follows by induction that

where we have used that . By definition of , for we have . Therefore, for all indices we have .

For each valid tuple that appears in the recursive computation of , there is some sequence of valid tuples, as considered before, that contains it. It follows that, for all valid tuples considered through the algorithm we have .

Let us give an upper bound on the valid tuples that appear in the computation. There are choices for the set . Once we have fixed , the domain has to be -compliant, and this means that we have to select edges in the triangulated graph . Since has vertices and edges, there are at most possible choices for . Finally, we have options for the value . Therefore, there are at most

valid tuples that appear in the recursion.

We next bound how much time we spend for each tuple. Consider a valid tuple that appears through the recursion. If , we compute using Lemma 4.2 in time. Otherwise, to compute we have to iterate over all the valid partitions . There are such valid partitions. Indeed, we have to select the subset with vertices and then the partitioning of into regions that are -compliant. This can be bounded by . (Alternatively, we can iterate over the possible options to define the separating cycle used in the proof of Lemma 4.4.)

We conclude that in the computation of we have to consider valid tuples and for each one of them computing takes time. The result follows. ∎

We only described an algorithm that computes , i.e., the maximal volume realized by any size- subset of . It is easy to augment the algorithm with appropriate bookkeeping to also compute an actual optimal subset.

5 Efficient Polynomial-Time Approximation Scheme

In this section we design an approximation algorithm for Volume Selection.

Theorem 5.1.

Given a point set of size in , , and , we can compute a -approximation of in time . We can also compute a set of size at most such that is a -approximation of in the same time.

We also discuss an improvement to time in Section 5.4.

The approach is based on the shifting technique of Hochbaum and Maass [21]. However, there are some non-standard aspects in our application. It is impossible to break the problem into independent subproblems because all the anchored boxes intersect around the origin. We instead break the input into subproblems that are almost independent. To achieve this, we use an exponential grid, instead of the usual regular grid with equal-size cells. Alternatively, this could be interpreted as using a regular grid in a log-log plot of the input points.

Throughout this section we need two numbers . Specifically, we define as the smallest integer larger than , and as the smallest power of larger than . We consider a partitioning of the positive quadrant into regions of the form

On top of this partitioning we consider a grid, where each grid cell contains regions and the grid boundaries are thick, i.e., two grid cells do not touch but have a region in between. More precisely, for any offset , we define the grid cells

Note that each grid cell indeed consists of regions, and the space not contained in any grid cell (i.e., the grid boundaries) consists of all regions with for some .

Our approximation algorithm now works as follows (cf. the pseudocode given below).

(1) Iterate over all grid offsets . This is the key step of the shifting technique of Hochbaum and Maass [21].

(2) For any choice of the offset , remove all points not contained in any grid cell, i.e., remove points contained in the thick grid boundaries. This yields a set of remaining points.

(3) The grid cells now induce a partitioning of into sets , where each is the intersection of with a grid cell (with for some ). Note that these grid cell subproblems are not independent, since any two boxes have a common intersection near the origin, no matter how different their coordinates are. However, we will see that we may treat as independent subproblems since we only want an approximation.

(4) We discretize by rounding down all coordinates of all points in to powers of . (Here we use that is a power of , to ensure that rounded points are contained in the same cells as their originals.) We can remove duplicate points that are rounded to the same coordinates. This yields sets . Note that within each grid cell in any dimension the largest and smallest coordinate differ by a factor of at most . Hence, there are at most different rounded coordinates in each dimension, and thus the total number of points in each is .

(5) Since there are only few points in each , we can precompute all Volume Selection solutions on each set , i.e., for any and any we precompute . We do so by exhaustively enumerating all subsets of , and for each one computing by inclusion-exclusion in time (see, e.g., [34, 35]). This runs in total time .

(6) It remains to split the points that we want to choose over the subproblems . As we treat these subproblems independently, we compute

Note that if the subproblems were independent, then this expression would yield the exact result. We argue below that the subproblems are sufficiently close to being independent that this expression yields a -approximation of . Observe that the expression can be computed efficiently by dynamic programming, where we compute for each and the following value:

The following rule computes this table (see the pseudocode below for further details):

(7) Finally, we optimize over the offset by returning the maximal .
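Step (5) above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the function names `union_volume` and `best_volumes` are hypothetical, and the table is indexed by the exact number of selected points. The inclusion-exclusion formula uses the fact that the intersection of anchored boxes is again an anchored box, spanned by the coordinate-wise minimum.

```python
from itertools import combinations
from math import prod

def union_volume(points):
    """Volume of the union of the anchored boxes spanned by `points`, by inclusion-exclusion."""
    points = list(points)
    total = 0.0
    for r in range(1, len(points) + 1):
        sign = 1 if r % 2 == 1 else -1
        for subset in combinations(points, r):
            # Intersection of anchored boxes = anchored box of the coordinate-wise minimum.
            total += sign * prod(min(cs) for cs in zip(*subset))
    return total

def best_volumes(points, k):
    """table[j] = largest union volume over subsets of exactly j points, for j = 0..min(k, n)."""
    points = list(points)
    table = [0.0]
    for size in range(1, min(k, len(points)) + 1):
        table.append(max(union_volume(s) for s in combinations(points, size)))
    return table
```

Both functions take time exponential in the number of points, which is acceptable only because each rounded subproblem is small.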

This finishes the description of the approximation algorithm. In pseudocode, this yields the following procedure.

(1) Iterate over all offsets :
   (2) . Delete any from that is not contained in any grid cell .
   (3) Partition into , where for some grid cell .
   (4) Round down all coordinates to powers of and remove duplicates, obtaining .
   (5) Compute for all , .
   (6) Compute by dynamic programming:
      – Initialize for all , .
      – For , for , and for :
         – Set
      – Set .
(7) Return .
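The dynamic program of step (6) in the pseudocode above can be written in Python along the following lines. This is a sketch under assumptions: `values[i][j]` is taken to be the precomputed volume for selecting `j` points in the `i`-th subproblem (with `values[i][0] == 0`), and the names `dp_table`, `values`, and `T` are hypothetical.

```python
def dp_table(values, k):
    """T[i][b] = best total volume using the first i subproblems and at most b points."""
    m = len(values)
    T = [[0.0] * (k + 1) for _ in range(m + 1)]   # initialization to zero
    for i in range(1, m + 1):
        vals = values[i - 1]
        for budget in range(k + 1):
            # `take` points go to subproblem i, the rest to the earlier subproblems.
            for take in range(min(budget, len(vals) - 1) + 1):
                T[i][budget] = max(T[i][budget], vals[take] + T[i - 1][budget - take])
    return T
```

The value for a fixed offset is then `dp_table(values, k)[len(values)][k]`; the three nested loops mirror the loop structure of the pseudocode.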

5.1 Running Time

Step (1) yields a factor in the running time. Since the grid cell containing a given point can be computed in constant time, step (2) runs in time . For the partitioning in step (3), we use a dictionary data structure storing all with nonempty . Then we can assign any point to the other points in its cell by one lookup in the dictionary, in time . Thus, step (3) can be performed in time . Step (4) runs within the same time bound. For step (5) we already argued above that it can be performed in time . Finally, from the pseudocode for step (6) we read off a running time of . The total running time is thus
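The dictionary-based partitioning of steps (2) and (3) might look as follows in Python. This is a minimal sketch: `partition_by_cell` and the callback `cell_of` are hypothetical names, and the callback is assumed to return a hashable cell identifier, or `None` for a point on a grid boundary. Python's built-in dictionary is hash-based with expected constant-time lookups; a comparison-based dictionary would cost logarithmic time per lookup instead.

```python
from collections import defaultdict

def partition_by_cell(points, cell_of):
    """Group points by grid cell, dropping points for which cell_of returns None."""
    cells = defaultdict(list)
    for p in points:
        key = cell_of(p)
        if key is not None:          # step (2): discard points on the thick boundaries
            cells[key].append(p)     # step (3): one dictionary lookup per point
    return dict(cells)
```

Only nonempty cells ever appear as keys, so the size of the dictionary is bounded by the number of input points.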

5.2 Correctness

The following lemmas show that the above algorithm indeed computes a -approximation of . Reducing by an appropriate constant factor then yields a -approximation.

Lemma 5.2 (Removing grid boundaries).

Let be a point set and let . Remove all points contained in grid boundaries with offset to obtain the point set . Then for all we have

and for some we have

Proof.

Since we only remove points, the first inequality is immediate. For the second inequality we use a probabilistic argument. Consider an optimal solution, i.e., a set of size at most with . Let . For a uniformly random offset , consider the probability that a fixed point survives, i.e., we have . Consider the region containing point , where . Recall that the grid boundaries consist of all regions with for some . For a uniformly random , for fixed the equation holds with probability . By a union bound, the probability that at least one of these equations holds for is at most (by definition of as the smallest integer larger than ). Hence, survives with probability at least .

Now for each point identify a point dominating . Since survives in with probability at least , the point is dominated by with probability at least . By integrating over all we thus obtain an expected volume of

It follows that for some we have . For this we have

where the first inequality uses and the definition of VolSel as maximizing over all subsets, and the last inequality holds since we picked as an optimal solution, realizing . ∎
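The survival probability used in this proof can be checked by brute force for small parameters. The sketch below makes assumptions that are ours: offsets range over all vectors in `{0, ..., K-1}^d`, and a region with index vector `idx` lies on a boundary when some coordinate is congruent to the offset modulo `K`. The name `survival_fraction` is hypothetical.

```python
from itertools import product

def survival_fraction(idx, K):
    """Fraction of offsets in {0,...,K-1}^d for which region index `idx` avoids every boundary."""
    d = len(idx)
    survived = 0
    for offset in product(range(K), repeat=d):
        if all((x - l) % K != 0 for x, l in zip(idx, offset)):
            survived += 1
    return survived / K ** d
```

Under these assumptions the exact fraction is `(1 - 1/K)**d`, which is at least `1 - d/K`, in line with the union bound in the proof.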

Lemma 5.3 (Rounding down coordinates).

Let be a point set, and let be the same point set after rounding down all coordinates to powers of . Then for any

Proof.

Let be the set with all coordinates scaled down by a factor . By a simple scaling invariance, we have . Note that for any point the corresponding point dominates , and the corresponding point is dominated by . Now pick any subset of of size , and let be the corresponding subsets of . Then we have , which implies , and thus

In the proof of the next lemma it becomes important that we have used the thick grid boundaries, with a separating region, when defining the grid cells.

Lemma 5.4 (Treating subproblems as independent I).

For any offset , let be point sets contained in different grid cells with respect to offset . Then we have

Proof.

The second inequality is essentially the union bound. Specifically, for any sets the volume of is at most the sum over all volumes of for . In particular, this statement holds with , which yields the second inequality.

For the first inequality, observe that we obtain the total volume of all points dominated by by summing up the volume of all points dominated by but not by any , , for each , i.e., we have

| (2) |

Now let be the grid cell containing for , where . We may assume that these cells are ordered in non-decreasing order of . Observe that in this ordering, for any we have for some . Recall that . It follows that each point in has -th coordinate at most for some . Setting , we thus have , which yields

| (3) |

Let be the -dimensional volume of the intersection of with the plane . Since all points in have -th coordinate at least , we have . Moreover, has -dimensional volume . Together, this yields . With (2) and (3), we thus obtain

since . ∎

Lemma 5.5 (Treating subproblems as independent II).

For any offset , let be point sets contained in different grid cells, and . Set . Then we have

Proof.

Consider an optimal solution of and let for . Then by choice of as an optimal solution, and by Lemma 5.4, we have

Since VolSel maximizes over all subsets and , we further obtain

This shows the second inequality.

For the first inequality, we pick sets , where for all and , realizing . We then argue analogously:

Note that the above lemmas indeed prove that the algorithm returns a -approximation to the value . In step (2) we delete the points contained in the grid boundaries, which yields an approximation for some choice of the offset by Lemma 5.2. As we iterate over all possible choices for and maximize over the resulting volume, we obtain an approximation. In step (4) we round down coordinates, which yields an approximation by Lemma 5.3. Finally, in step (6) we solve the problem , which yields an approximation to by Lemma 5.5. All other steps do not change the point set or the considered problem. The final approximation factor is .

5.3 Computing an Output Set

The above algorithm only gives an approximation for the value , but does not yield a subset of size realizing this value. However, by tracing the dynamic programming table we can reconstruct the values with . By storing in step (5) not only the values but also corresponding subsets , we can thus construct a subset with . Lemma 5.4 now implies that

By storing in step (4) for each rounded point an original point, we can construct a set corresponding to the rounded points such that

and thus is a subset of of size at most yielding a -approximation of the optimal volume .
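Keeping an original point for each rounded point, as described for step (4), can be sketched in Python as follows. The rounding base is passed in as a parameter `base` (assumed to be greater than 1), and the names `round_down_to_power` and `round_points` are hypothetical.

```python
import math

def round_down_to_power(c, base):
    """Largest integer power of `base` that is at most c, for c > 0 and base > 1."""
    e = math.floor(math.log(c) / math.log(base))
    # Correct a possible off-by-one caused by floating-point logarithms.
    if base ** e > c:
        e -= 1
    elif base ** (e + 1) <= c:
        e += 1
    return base ** e

def round_points(points, base):
    """Map each distinct rounded point to one original point that rounds to it."""
    representative = {}
    for p in points:
        q = tuple(round_down_to_power(c, base) for c in p)
        representative.setdefault(q, p)   # duplicates keep the first original seen
    return representative
```

The keys of the returned dictionary form the deduplicated rounded set, and looking up a selected rounded point yields an original point that dominates it.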

Note that we do not compute the exact volume of the output set . Instead, the value is only a -approximation of . To explain this effect, recall that exactly computing for any given set takes time (under the Exponential Time Hypothesis). As our running time is for any constant , we cannot expect to compute exactly.
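The tracing of the dynamic programming table described in this subsection can be sketched as follows. The code assumes a filled table `table[i][b]` holding the best total over the first `i` subproblems with budget `b` (with `table[0][b] == 0`), and per-subproblem values `values[i][j]` for taking `j` points; the name `trace_split` and this table layout are assumptions made for illustration.

```python
def trace_split(table, values, k):
    """Recover how many points each subproblem contributes to the optimum of the table."""
    counts, b = [0] * len(values), k
    for i in range(len(values), 0, -1):
        vals = values[i - 1]
        takes = range(min(b, len(vals) - 1) + 1)
        # Pick the number of points that attains the maximum in the DP recurrence.
        best_take = max(takes, key=lambda t: table[i - 1][b - t] + vals[t])
        counts[i - 1] = best_take
        b -= best_take
    return counts
```

Combining the recovered counts with subsets stored alongside the precomputed values gives the selected rounded points in each subproblem.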

5.4 Improved Algorithm

The following improvement was suggested to us by Timothy Chan. For constant and the algorithm shown above runs in time . The bottleneck for the -term is step (6): Given for all , , we want to compute

Note that it suffices to compute an -approximation to this value, to end up with an -approximation overall.

This problem is an instance of the multiple-choice 0/1 knapsack problem, where we are given a budget and items with corresponding weights and profits , as well as a partitioning , and the task is to compute the maximum over all sets satisfying and for all . In order to cast the above problem as an instance of multiple-choice 0/1 knapsack, we simply set and define and for all . We also set . Note that now the constraint corresponds to and the objective corresponds to .
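The casting into multiple-choice 0/1 knapsack can be illustrated by the Python sketch below. The names `to_multiple_choice_knapsack` and `solve_by_enumeration` are hypothetical, and the solver is a plain exhaustive reference, exponential in the number of classes; it is not the approximation scheme of Rhee [29]. The sketch assumes that each class contributes exactly one item and that the weight-0 item of a class models selecting no point from it.

```python
from itertools import product

def to_multiple_choice_knapsack(values, k):
    """Build (budget, classes); class i holds one (weight, profit) item per count j.

    values[i][j] is the volume for picking j points in subproblem i, so the item
    for (i, j) gets weight j and profit values[i][j].
    """
    classes = [[(j, v) for j, v in enumerate(vals)] for vals in values]
    return k, classes

def solve_by_enumeration(budget, classes):
    """Exact reference solver: try every way of picking one item per class."""
    best = 0.0
    for pick in product(*classes):
        if sum(w for w, _ in pick) <= budget:
            best = max(best, sum(p for _, p in pick))
    return best
```

On small inputs the result agrees with the dynamic program of step (6), which is a convenient sanity check for the reduction.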

For the multiple-choice 0/1 knapsack problem there are known PTAS techniques. In particular, in his Master's thesis, Rhee [29, Section 4.2] claims a time bound of . In our case, we have and . Moreover, . This yields a time of .

Plugging this solution for step (6) into the algorithm from the previous sections, we obtain time

This can be simplified to , which is bounded by .

6 Conclusions

We considered the volume selection problem, where we are given points in and want to select of them that maximize the volume of the union of the spanned anchored boxes. We show: (1) Volume selection is NP-hard in dimension 3 (previously this was only known when the dimension is part of the input). (2) In 3 dimensions, we design an algorithm (the previously best was ). (3) We design an efficient polynomial-time approximation scheme for any constant dimension (previously only a -approximation was known).

We leave it as an open problem to improve our NP-hardness result to a matching lower bound under the Exponential Time Hypothesis, e.g., to show that in any algorithm takes time and in any constant dimension any algorithm takes time . Alternatively, there could be a faster algorithm, e.g., in time . Finally, we leave open the optimal dependence on of a -approximation algorithm.

Moving away from the applications, one could also study volume selection on general axis-aligned boxes in , i.e., not necessarily anchored boxes. This problem General Volume Selection is an optimization variant of Klee's measure problem and thus might be theoretically motivated. However, General Volume Selection is probably much harder than the restriction to anchored boxes, by analogy with the problem of computing a maximum independent set of boxes, which is not known to have a PTAS [1]. In particular, General Volume Selection is NP-hard already in 2 dimensions, which follows from NP-hardness of computing a maximum independent set in a family of congruent squares in the plane [18, 22].

Acknowledgements

This work was initiated during the Fixed-Parameter Computational Geometry Workshop at the Lorentz Center, 2016. We are grateful to the other participants of the workshop and the Lorentz Center for their support. We are especially grateful to Günter Rote for several discussions and related work.

References

- [1] A. Adamaszek and A. Wiese. Approximation schemes for maximum weight independent set of rectangles. In Proc. of the 54th IEEE Symp. on Found. of Comp. Science (FOCS), pages 400–409. IEEE, 2013.

- [2] A. Auger, J. Bader, D. Brockhoff, and E. Zitzler. Investigating and exploiting the bias of the weighted hypervolume to articulate user preferences. In Proc. of the 11th Conf. on Genetic and Evolutionary Computation (GECCO), pages 563–570. ACM, 2009.

- [3] A. Auger, J. Bader, D. Brockhoff, and E. Zitzler. Hypervolume-based multiobjective optimization: Theoretical foundations and practical implications. Theoretical Comp. Science, 425:75–103, 2012.

- [4] J. Bader. Hypervolume-based search for multiobjective optimization: theory and methods. PhD thesis, ETH Zurich, Zurich, Switzerland, 1993.

- [5] F. Barahona. On the computational complexity of Ising spin glass models. J. of Physics A: Mathematical and General, 15(10):3241, 1982.

- [6] N. Beume, C. M. Fonseca, M. López-Ibáñez, L. Paquete, and J. Vahrenhold. On the complexity of computing the hypervolume indicator. IEEE Trans. on Evolutionary Computation, 13(5):1075–1082, 2009.

- [7] N. Beume, B. Naujoks, and M. Emmerich. SMS-EMOA: Multiobjective selection based on dominated hypervolume. European J. of Operational Research, 181(3):1653–1669, 2007.

- [8] K. Bringmann. Bringing order to special cases of Klee’s measure problem. In Proc. of the 38th Int. Symp. on Mathematical Foundations of Comp. Science (MFCS), pages 207–218. Springer, 2013.

- [9] K. Bringmann and T. Friedrich. Approximating the volume of unions and intersections of high-dimensional geometric objects. Computational Geometry, 43(6):601–610, 2010.

- [10] K. Bringmann and T. Friedrich. An efficient algorithm for computing hypervolume contributions. Evolutionary Computation, 18(3):383–402, 2010.

- [11] K. Bringmann and T. Friedrich. Approximating the least hypervolume contributor: NP-hard in general, but fast in practice. Theoretical Comp. Science, 425:104–116, 2012.

- [12] K. Bringmann, T. Friedrich, and P. Klitzke. Generic postprocessing via subset selection for hypervolume and epsilon-indicator. In Proc. of the 13th Int. Conf. on Parallel Problem Solving from Nature (PPSN), pages 518–527. Springer, 2014.

- [13] K. Bringmann, T. Friedrich, and P. Klitzke. Two-dimensional subset selection for hypervolume and epsilon-indicator. In Proc. of the 16th Conf. on Genetic and Evolutionary Comput. (GECCO), pages 589–596. ACM, 2014.

- [14] T. M. Chan. A (slightly) faster algorithm for Klee's measure problem. Computational Geometry, 43(3):243–250, 2010.

- [15] T. M. Chan. Klee's measure problem made easy. In Proc. of the 54th IEEE Symp. on Found. of Comp. Science (FOCS), pages 410–419. IEEE, 2013.

- [16] J. Chen, X. Huang, I. A. Kanj, and G. Xia. Linear FPT reductions and computational lower bounds. In Proc. of the 36th ACM Symp. on Theory of Computing (STOC), pages 212–221. ACM, 2004.

- [17] M. Emmerich, A. H. Deutz, and I. Yevseyeva. A Bayesian approach to portfolio selection in multicriteria group decision making. Procedia Comp. Science, 64:993–1000, 2015.

- [18] R. J. Fowler, M. S. Paterson, and S. L. Tanimoto. Optimal packing and covering in the plane are NP-complete. Information Processing Lett., 12(3):133–137, 1981.

- [19] M. R. Garey and D. S. Johnson. The rectilinear Steiner tree problem is NP-complete. SIAM J. of Applied Math., 32:826–834, 1977.

- [20] A. P. Guerreiro, C. M. Fonseca, and L. Paquete. Greedy hypervolume subset selection in low dimensions. Evolutionary Computation, 24(3):521–544, 2016.

- [21] D. S. Hochbaum and W. Maass. Approximation schemes for covering and packing problems in image processing and VLSI. J. ACM, 32(1):130–136, 1985.

- [22] H. Imai and T. Asano. Finding the connected components and a maximum clique of an intersection graph of rectangles in the plane. J. of Algorithms, 4(4):310–323, 1983.

- [23] J. D. Knowles, D. W. Corne, and M. Fleischer. Bounded archiving using the Lebesgue measure. In Proc. of the 2003 Congress on Evolutionary Computation (CEC), volume 4, pages 2490–2497. IEEE, 2003.

- [24] T. Kuhn, C. M. Fonseca, L. Paquete, S. Ruzika, M. M. Duarte, and J. R. Figueira. Hypervolume subset selection in two dimensions: Formulations and algorithms. Evolutionary Computation, 2015.

- [25] G. L. Miller. Finding small simple cycle separators for 2-connected planar graphs. J. Comput. Syst. Sci., 32(3):265–279, 1986.

- [26] J. S. B. Mitchell and M. Sharir. New results on shortest paths in three dimensions. In Proc. of the 20th ACM Symp. on Computational Geometry (SoCG), pages 124–133, 2004.

- [27] N. J. A. Sloane, editor. The on-line encyclopedia of integer sequences. Published electronically at https://oeis.org. Visited November 19, 2016.

- [28] G. L. Nemhauser, L. A. Wolsey, and M. L. Fisher. An analysis of approximations for maximizing submodular set functions—I. Mathematical Programming, 14(1):265–294, 1978.

- [29] D. Rhee. Faster fully polynomial approximation schemes for knapsack problems, 2015. Master's thesis. https://dspace.mit.edu/handle/1721.1/98564.

- [30] G. Rote, K. Buchin, K. Bringmann, S. Cabello, and M. Emmerich. Selecting points that maximize the convex hull volume (extended abstract). In JCDCG3 2016; The 19th Japan Conf. on Discrete and Computational Geometry, Graphs, and Games, pages 58–60, September 2016. http://www.jcdcgg.u-tokai.ac.jp/JCDCG3_abstracts.pdf.

- [31] J. A. Storer. On minimal-node-cost planar embeddings. Networks, 14(2):181–212, 1984.

- [32] R. Tamassia and I. G. Tollis. Planar grid embedding in linear time. IEEE Trans. on Circuits and Systems, 36(9):1230–1234, 1989.

- [33] T. Ulrich and L. Thiele. Bounding the effectiveness of hypervolume-based (μ + λ)-archiving algorithms. In Learning and Intelligent Optimization, pages 235–249. Springer, 2012.

- [34] L. While, P. Hingston, L. Barone, and S. Huband. A faster algorithm for calculating hypervolume. IEEE Trans. on Evolutionary Computation, 10(1):29–38, 2006.

- [35] J. Wu and S. Azarm. Metrics for quality assessment of a multiobjective design optimization solution set. J. of Mechanical Design, 123(1):18–25, 2001.

- [36] E. Zitzler, L. Thiele, M. Laumanns, C. M. Fonseca, and V. G. Da Fonseca. Performance assessment of multiobjective optimizers: an analysis and review. IEEE Trans. on Evolutionary Computation, 7(2):117–132, 2003.