MC-Blur: A Comprehensive Benchmark for Image Deblurring

Abstract

Blur artifacts can seriously degrade the visual quality of images, and numerous deblurring methods have been proposed for specific scenarios. However, in most real-world images, blur is caused by different factors, e.g., motion, and defocus. In this paper, we address how other deblurring methods perform in the case of multiple types of blur. For in-depth performance evaluation, we construct a new large-scale multi-cause image deblurring dataset (MC-Blur), including real-world and synthesized blurry images with different blur factors. The images in the proposed MC-Blur dataset are collected using other techniques: averaging sharp images captured by a -fps high-speed camera, convolving Ultra-High-Definition (UHD) sharp images with large-size kernels, adding defocus to images, and real-world blurry images captured by various camera models. Based on the MC-Blur dataset, we conduct extensive benchmarking studies to compare SOTA methods in different scenarios, analyze their efficiency, and investigate the buildataset’s capacity. These benchmarking results provide a comprehensive overview of the advantages and limitations of current deblurring methods, revealing our dataset’s advances. The dataset is available to the public at https://github.com/HDCVLab/MC-Blur-Dataset.

Index Terms:

Deblurring benchmark, Large-scale multi-cause dataset, Motion deblur, UHD deblur, Defocus deblur, Real-world deblurI Introduction

Image deblurring is an important problem in computer vision and image processing, which aims to restore a sharp image from an observed blurry input [1]. Deblurring has been widely used in medical image analysis, computational photography, and video enhancement applications. Conventional methods formulate the task as an inverse filtering problem using the uniform blur model:

| (1) |

where is the observed blurry image, is the latent sharp image, is the unknown blur kernel, is the additive noise, and is the convolution operation. Image deblurring is a well-known ill-posed problem, and numerous priors, such as natural image statistics, have been employed to constrain the solution space. However, estimating based on this formulation typically involves iterative and time-consuming estimation processes.

Numerous deep models have recently been applied to image deblurring within the supervised learning framework. These models require a large number of paired sharp and blurry images to train networks in an end-to-end manner. Several datasets have been created by averaging continuous frames, convolving with blur kernels, or directly taking photos with two cameras with different shutter durations to obtain pairs of images. Although these datasets have advanced the deep deblurring models, there remain unaddressed issues with these datasets: 1) As shown in [2], averaging sharp images of low frame rate to synthesize blurry images can cause unnatural blur. For datasets that include motion blur, images are usually generated by averaging continuous frames captured with a relatively slow and fixed shutter speed (e.g., the GoPro dataset (240 fps)), or from images in interpolated high fps videos (e.g., the REDS dataset [2]); 2) For datasets containing non-uniform blur, e.g., the dataset by Köhler et al. [3], the number of images is insufficient for training deep networks, images are not of high definition, and the kernel size is relatively small. With an increasing number of devices being able to capture Ultra-High-Definition (UHD) images, existing datasets are not suitable for training models capable of handling such images; 3) Datasets of real-world blurry images typically require additional processing steps such as accurate alignment [4]; 4) While defocus is a common cause of blurry images, few datasets are explicitly developed for this type of blur. In addition, existing ones like [5] are usually of small scale or lack images of heavy defocus blur, making them infeasible for studying heavy defocus deblurring.

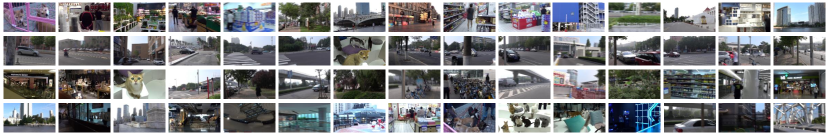

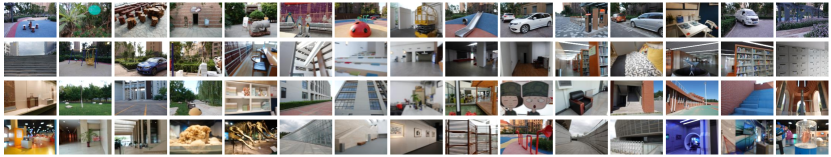

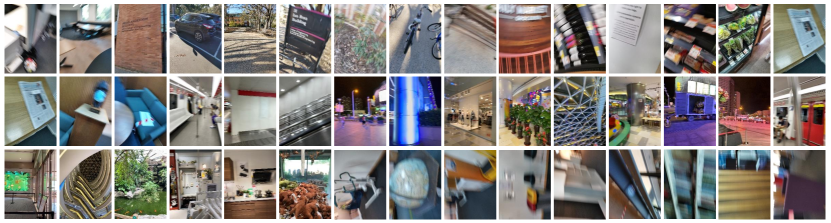

To overcome these limitations, we construct a comprehensive and large-scale multi-cause dataset, including blurry images caused by multiple factors, named MC-Blur dataset (See Fig. 1). This dataset is composed of four subsets. The first one, Real High-fps based Motion-blurred subset (RHM), includes images averaged from sharp frames to synthesize motion blur. Unlike existing datasets, sharp frames in RHM are captured with various ultra-high-speed cameras (iPhone, Samsung, Sony, etc.) at different frame rates (, , and fps). With different types of devices and frame rates, this subset mimics various motion blur in the real world. The second one, the large-kernel UHD Motion-blurred subset (UHDM), contains motion blur based on convolving sharp images with blur kernels. Due to the increasing number of high-definition cameras, we capture many UHD images at 4K+ resolution. These UHD images are convolved with large blur kernels. The third subset, LSD (Large-Scale Defocus), is specific to defocus blur. We capture images with various heavy defocus effects by manually changing the focus setting. The fourth one, the Real Mixed Blurry Qualitative subset (RMBQ), comprises real-world blurry images captured by different types of devices, e.g., mobile cameras. While no sharp images are available as ground truth, this subset is included for qualitative performance evaluation in real-world scenarios.

The main contributions of this paper are summarized as follows.

-

•

We build a large-scale and comprehensive dataset (MC-Blur) of images containing blur artifacts due to multiple causes:

-

–

The RHM subset provides motion-blurred images synthesized from real, higher-and-unfixed fps video frames without artificially interpolated technologies. Experimental studies demonstrate its superior generalization potential compared with the widely employed ones.

-

–

The UHDM subset is the first large-scale UHD image deblurring dataset expected to drive future research regarding the problem of single UHD image deblurring.

-

–

The LSD subset is the largest defocus blurry dataset, providing blurry images from the real world of heavier defocus artifacts compared with existing ones. This large amount of images of more serious artifacts will benefit our community in exploring the problem of heavy defocus deblurring.

-

–

The RMBQ subset provides large-scale, real blurry images captured by various mobile devices, serving as a credible testing bench for the qualitative study of future research in terms of real-world scenarios.

-

–

-

•

We carry out extensive benchmarking analysis of recent state-of-the-art image deblurring methods on our MC-Blur dataset. The benchmark study, including evaluating main-stream image deblurring methods, efficiency analysis, and effectiveness of cross-dataset learning, provides a comprehensive understanding of the SOTA methods in various scenarios.

The subsequent sections are structured as follows. Section II provides an overview of related works on image deblurring datasets and methods. In Section III, the specifics of the proposed MC-Blur dataset are presented. Section IV showcases the benchmarking results of existing deblurring approaches on the aforementioned MC-Blur dataset. Finally, Section V summarizes the findings and conclusions of this study.

II Related Work

In this section, we provide an overview of the datasets commonly employed for the image deburring task. Subsequently, we review existing image deblurring methods in the literature.

II-A Image Deblurring Datasets

Several datasets have been developed for advances in image deblurring [6, 7, 3, 8, 9, 10, 11, 12, 13, 14, 15]. For example, motion blur is simulated by convolving images with uniform blur kernels by Levin et al. [6] and Sun et al. [7], or with non-uniform kernels by Köhler et al. [3]. In [8], Lai et al. introduce a dataset including images of both real-world and synthetic blur (uniform blur kernels). However, the size of this dataset is still relatively small. Even when synthesizing blurry images via realistic blur kernels, the scale of the datasets mentioned above is small, making them difficult for deep learning-based deblurring methods. Furthermore, the blur kernels are relatively small, making them less effective for deblurring Ultra-High-Definition (UHD) images.

Considering that images are captured within the duration of camera exposure, blurry images can be modeled by the integration of neighboring frames [16],

| (2) |

where is the exposure time period and is the Camera Response Function (CRF). To model this process [16] and alleviate the problem of alignment [4], several deblurring datasets have been created based on the discrete formulation,

| (3) |

where is the number of frames.

In particular, the GoPro dataset [9] has been widely used for training deep models. Its sharp images are captured by a GoPro Hero4Black camera with a shutter speed of fps. Blurry images are generated by averaging continuous sharp frames over a time window. Similarly, based on this method, the HIDE [10] and REDS [2] datasets are created.

Rim et al. [4] develop a dataset containing real blurry images and the corresponding sharp images. Two different cameras take image pairs with varying times of exposure. While the blur is realistic, this work requires an additional image alignment step to generate image pairs, which causes the problem of imprecise alignment. In addition, this dataset also lacks defocus blurry images or UHD images, which is of great interest for real-world scenarios. On the other hand, Abuolaim and Brown [5] capture images with defocus blur, but this number is small compared to the recent large-scale deblurring datasets. Moreover, the extent of defocus blur on their blurry images is relatively slight. The details of the existing representative datasets and the proposed MC-Blur dataset are listed in Table I.

II-B Deblurring Methods

In the computer vision community, the problem of image deblurring has garnered significant attention owing to its inherently ill-posed nature. Consequently, numerous image deblurring methods have emerged in the literature. Broadly speaking, these methods can be categorized into two main groups: 1) conventional deblurring methods and 2) deep learning-based deblurring methods. We introduce these methods in the following.

Conventional Deblurring Methods. Early deblurring methods refer to traditional approaches that address the image deblurring problem by incorporating constraints on blur kernels or latent images [17, 18, 19]. Consequently, lots of effective priors have been proposed, including the sparse gradients distribution model [20], dark channel prior [21], Local Maximum Gradient prior [22], structure prior [17], superpixel segmentation prior [19], and more. However, most image deblurring methods primarily focus on mitigating blur caused by camera movement. At the same time, real dynamic scenes involve additional complexities, such as camera movement, object movement (rigid or non-rigid), and variations in scene depth. Consequently, these methods often encounter challenges when handling blur within dynamic scenes.

Deep Deblurring Methods. In recent years, numerous deep learning methods have been proposed to address various computer vision tasks [23, 24, 25], which also include single image deblurring [26, 27, 28, 29, 30], and video deblurring [31, 32, 33, 34]. Deep deblurring methods typically train neural networks in an end-to-end manner, using blurry images as inputs and updating network parameters by comparing the outputs and the ground truth sharp images [35, 36, 37, 11]. The idea of multi-scale processing and an adversarial loss is used in [9] for image deblurring. Kupyn et al. [38] adopt a conditional GAN for the deblurring task, resulting in the DeblurGAN model. An enhancement in terms of accuracy and speed is introduced with DeblurGAN-v2 [39]. In [27], patch-level deblurring is carried out to obtain an initial global estimation. The blur kernel is estimated, and the final result is obtained via deconvolution. Motivated by Spatial Pyramid Matching, a multi-patch scheme is applied to learn hierarchical representations in [40]. A CNN is combined with an RNN in [41] to deblur images of spatial-variant blur in dynamic scenes. Similarly, an LSTM with a CNN is employed in [42]. More recently, Zamir et al. [43] introduce a multi-stage architecture that gradually learns restoration functions for the degraded inputs, thus decomposing the overarching recovery process into a series of more manageable steps. They [44] also develop an efficient Transformer-based model designed to address the limitations of convolutional neural networks (CNNs) and enable effective image restoration. In addition, new architectures including skip connections [45, 46, 47, 48, 49, 50, 51], reblurring networks [52, 53, 54], unsupervised/self-supervised learning [55, 56, 57], attention blocks [58, 59, 60, 61, 62, 63, 64, 65, 66], NAS [67] and Nerf [68], are developed to improve the image deblurring performance.

| Dataset | Sharp | Blurred | Motion | Defocus | Real | Aligned |

| Levin et al. [6] | 4 | 32 | ||||

| Sun et al. [7] | 80 | 640 | ||||

| Köhler et al. [3] | 4 | 48 | ||||

| Lai et al. [8] | 108 | 300 | ||||

| GoPro [9] | 3,214 | 3,214 | ||||

| HIDE [10] | 8,422 | 8,422 | ||||

| Blur-DVS [11] | 2,178 | 2,918 | ||||

| Abuolaim et al. [5] | 500 | 500 | ||||

| RealBlur [4] | 9476 | 9476 | ||||

| RHM-250fps | 25,000 | 25,000 | ||||

| RHM-500fps | 25,000 | 25,000 | ||||

| RHM-1000fps | 37,500 | 37,500 | ||||

| UHDM | 2,000 | 10,000 | ||||

| LSD | 2,800 | 2,800 | ||||

| RMBQ | - | 10,000 | ||||

| MC-Blur dataset | 92,300 | 110,300 |

III MC-Blur Dataset

The advances in learning-based methods for image deblurring rely heavily on the quality and scale of datasets. To benchmark the state-of-the-art image deblurring methods in various conditions, we construct the large-scale multi-cause (MC-Blur) dataset. It consists of four blur types: uniform blur, motion blur by averaging continuous frames, heavy defocus blur, and real-world blur. In addition, the MC-Blur dataset includes many images captured during day and night time. We collect these images from over 1000 diverse scenes such as buildings, city scenes, vehicles, natural landscapes, people, animals, and sculptures. The four subsets are introduced in the following.

RHM Subset. Averaging continuous frames within a time window to generate motion-blurred images is a common practice for synthesizing images with motion blur. For example, in the GoPro dataset, images captured at fps are used to produce blurry images [9]. However, if the frame rate of the images to be averaged is not sufficiently high, the synthesized motion blur can be unnatural [2]. As such, Nah et al. [2] record videos at fps and interpolate them to fps by CNNs. We capture sharp images using high-frame-rate cameras to remove this potential error source to create the real high fps-based motion-blurred dataset, RHM. Images in RHM are captured in three settings. The first setting corresponds to the highest fps, up to fps. The sharp videos are recorded using a Sony RX10 camera. This subset contains images for training and for testing. The sharp images in the second setting are captured with the same camera at fps. Ultra-high-speed (UHS) cameras usually adopt the MEMC (motion estimation and compensation) module, i.e., frame interpolation, to increase the frame rate. In this paper, all UHS frames are captured without using MEMC. The training and testing sets contain and images, respectively. The third setting corresponds to images captured at fps with mobile devices such as iPhone, Huawei, and Sony RX10 cameras. For training and testing, this set contains and images, respectively. All images are resized via bicubic downsampling to reduce noise. The image resolutions in this set are and pixels.

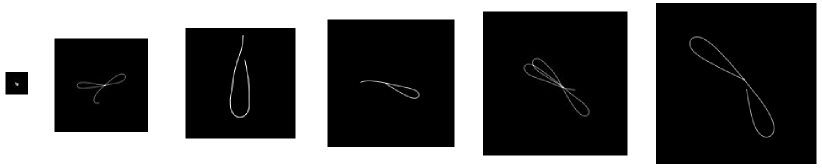

UHDM Subset. Another way to synthesize degraded images caused by motion blur is to convolve images with kernels. Existing datasets based on this approach use low-resolution images or small blur kernels. For example, when the image resolution is lower than pixels, the size of the blur kernels is usually set within the range from to pixels. We note that deblurring 4K+ images requires restoration with more details, which is challenging if the models are trained with low-resolution images. To address this critical concern and ensure our dataset mirrors real-world scenarios, we capture sharp images of 4K-6K resolutions to create the large-kernel UHD motion-blurred set, UHDM. In our quest for realism, we employ blur kernels of varying sizes — , , , , and — to convolve with the sharp images. This meticulous attention to diverse kernel sizes imbues our dataset’s heightened sense of authenticity. The training and testing sets contain and images, respectively. Blur kernels are generated via 3D camera trajectories [69]. The blur kernels with different sizes are shown in Fig. 2.

| Method | DeepDeblur [9] | DeblurGAN [38] | SRN [42] | DeblurGAN-v2 [39] |

|---|---|---|---|---|

| 250fps | 30.38/0.8766 | 24.89/0.6364 | 30.57/0.8799 | 26.99/0.8061 |

| 500fps | 31.08/0.8974 | 24.66/0.6748 | 31.54/0.9051 | 27.67/0.8320 |

| 1000fps | 32.41/0.8966 | 25.20/0.6535 | 32.69/0.9016 | 29.81/0.8461 |

| DMPHN [40] | DBGAN [52] | MPRNet [43] | Restormer [44] | MIMO-UNet [70] |

| 30.42/0.8768 | 27.89/0.8191 | 31.52/0.9239 | 32.02/0.9285 | 31.42/0.9211 |

| 31.43/0.9018 | 28.36/0.8388 | 32.08/0.9300 | 30.98/0.9160 | 32.89/0.9398 |

| 32.41/0.9096 | 29.66/0.8318 | 33.36/0.9332 | 32.77/0/9264 | 33.75/0.9360 |

LSD Subset. A few datasets on defocus image deblurring have recently been developed. To create the Dual-Pixel [5] dataset, Abuolaim et al. capture pairs of images of the same static scene at two aperture sizes via a Canon EOS 5D Mark IV DSLR camera. Focus distance and focal length differ across captured pairs to capture various defocus blurry images. However, this dataset is mainly designed for the dual-pixel problem. As a result, it provides only pairs of blurry images and their corresponding all-in-focus images, and the scale is small for approaches based on deep learning models. Meanwhile, these blurry images contain large sharp patches, and their extent of defocus blur is relatively slight. Given existing deep deblurring networks mainly take patches cropped from blurry images as input, the role of these sharp areas is less important during the training stage.

We collect a Large-Scale heavy Defocus blurred set, LSD, consisting of image pairs of sharp images and blurry images with the defocus effect in the training set, and image pairs for testing. The image resolution is at least pixels. For the convenience of training, we crop sixteen patches () without overlap from each image during the training and testing stages. Unlike the DPDD dataset, whose blurry images (about ) contain large sharp regions, images in our LSD are completely defocused without any sharp region. We manually control the focus to obtain the heavily blurry images and their corresponding sharp ones.

RMBQ Subset. The above three sets contain different kinds of real and synthesized blurry images. In the real world, blur artifacts can be more complex and difficult to approximate. For instance, real-world blur is caused by multiple reasons, such as the blur caused by both camera shake and object movement. Thus, it is difficult to guarantee the generalization of models trained with images containing only a specific kind of blur. Therefore, we capture another set of blurry images with various devices, including high-end digital cameras and mobile phones (iPhone, Samsung, and Huawei). There are images in this real mixed blurry qualitative set, RMBQ. This set is designed only for qualitative evaluation, as no sharp ground truth images are available.

IV Benchmarking and Analysis

In this section, we present the benchmarking results for established deblurring approaches using the proposed MC-Blur dataset. We begin by introducing the evaluated deblurring methods in Sec. IV-A and assess their performance across various categories of blurry images in Sec. IV-B. Subsequently, we conduct an efficiency analysis on UHD blurry images in Sec. IV-C. Following that, we present benchmark studies involving cross-dataset learning in Sec. IV-D, IV-E, and IV-F. Finally, we summarize the key insights derived from these benchmarking experiments in Sec. IV-G.

IV-A Evaluated Deblurring Methods

We evaluate nine state-of-the-art deblurring methods on the proposed MC-Blur dataset, including the multi-scale architectures (DeepDeblur [9], SRN [42] and MIMO-UNet [70]), GAN based frameworks (DeblurGAN [38], DeblurGAN-v2 [39], and DBGAN [52]), multi-patch networks (DMPHN [40] and MPRNet [43]), and attention-based networks (Restormer [44]). We employ PSNR and SSIM as quantitative metrics to evaluate the deblurring methods while also comparing their performance qualitatively on synthesized and real blurry images. In addition, we use NIQE and SSEQ to evaluate the deblurring methods’ performance on real-world blur images.

| Method | PSNR | SSIM |

|---|---|---|

| DeepDeblur [9] | 22.23 | 0.6322 |

| DeblurGAN [38] | 20.39 | 0.5568 |

| SRN [42] | 22.28 | 0.6346 |

| DeblurGAN-v2 [39] | 21.03 | 0.5839 |

| DMPHN [40] | 22.20 | 0.6378 |

| DBGAN [52] | 21.52 | 0.6025 |

| MPRNet [43] | 23.70 | 0.7472 |

| Restormer [44] | 22.39 | 0.7356 |

| MIMO-UNet [70] | 22.97 | 0.7317 |

| Method | PSNR | SSIM |

|---|---|---|

| DeepDeblur [9] | 20.73 | 0.7218 |

| DeblurGAN [38] | 20.04 | 0.6335 |

| SRN [42] | 21.66 | 0.7664 |

| DeblurGAN-v2 [39] | 21.13 | 0.6964 |

| DMPHN [40] | 21.23 | 0.7519 |

| DBGAN [52] | 21.56 | 0.7536 |

| MPRNet [43] | 21.32 | 0.7897 |

| Restormer [44] | 22.35 | 0.8072 |

| MIMO-UNet [70] | 22.56 | 0.8265 |

| Method | DeepDeblur [9] | DeblurGAN [38] | SRN [42] | DeblurGAN-v2 [39] |

|---|---|---|---|---|

| Speed (sec.) | 26.76 | 2.46 | 28.41 | 3.63 |

| Params (M) | 11.72 | 6.07 | 6.88 | 7.84 |

| DMPHN [40] | DBGAN [52] | MPRNet [43] | Restormer [44] | MIMO-UNet [70] |

| 17.63 | 31.62 | 27.91 | 42.77 | 2.45 |

| 21.69 | 11.59 | 20.13 | 26.10 | 6.81 |

IV-B Benchmarking on the MC-Blur Dataset

RHM Subset. To evaluate image deblurring methods’ performance, we first conduct experiments on RHM. During the training stage, samples from all the training subsets of RHM are used together. We test them separately on the 250fps, 500fps, and 1000fps sets in the test stage. Table II shows that DeepDeblur, SRN, DMPHN, MPRNet, Restormer, and MIMO-UNet perform well in terms of PSNR and SSIM. One contributing factor is the utilization of pixel-level loss functions in these methods, which significantly contributes to achieving elevated values in full-reference pixel-based metrics. The DeblurGAN, DeblurGAN-v2, and DBGAN models use discriminators to help synthesize more realistic deblurred images. These models are not only enforced to focus on pixel-wise measures (L1 or L2) but also pay attention to the whole image. Deblurring networks focusing on pixel-wise measures are expected to achieve higher values of PSNR and SSIM. MIMO-UNet and Restormer consistently outperform other methods, demonstrating the remarkable efficacy of their multi-scale schemes and transformer blocks. We also show a visual comparison of RHM in Fig. 3.

UHDM Subset. In this part, the same deblurring methods are evaluated on the UHDM images. The training and testing samples are all from UHDM. Table III shows that all methods’ PSNR and SSIM values are significantly lower than those in Table II. It could be attributed to several reasons. One reason is that we use large-size blur kernels to synthesize blurry images, making the UHDM set more difficult. Another reason is that compared with non-UHD image deblurring, deblurring UHD images require recovering more details. Therefore, UHD image deblurring is a more challenging task. In addition, all the benchmarking deblurring networks are proposed for non-UHD image deblurring, which is also a reason causing their performance drop on UHD image deblurring. Among these methods, MPRNet stands out as the top performer, underscoring the effectiveness of its multi-stage architecture. Furthermore, MIMO-UNet and Restormer also demonstrate strong performance in the UHD image deblurring setting. We show qualitative results corresponding to the large-kernel blur caused in Fig. 4.

| Train | Test-1 (PSNR/SSIM) | Test-2 (NIQE/SSEQ) | |||||

|---|---|---|---|---|---|---|---|

| GoPro | RealBlur-J | RHM | GoPro | RealBlur-J | RHM | RMBQ | RWBI |

| ✓ | 30.05/0.9329 | 26.52/0.8635 | 29.52/0.8914 | 6.0430/28.0522 | 5.6065/37.1958 | ||

| ✓ | 23.45/0.8385 | 28.73/0.9011 | 24.67/0.8251 | 6.3704/34.9025 | 6.4757/40.2203 | ||

| ✓ | 30.04/0.9313 | 26.78/0.8732 | 32.47/0.9297 | 5.5654/25.5820 | 5.0310/35.0606 | ||

| ✓ | ✓ | 27.62/0.8970 | 28.71/0.8993 | 27.96/0.8632 | 5.6983/29.7583 | 6.0287/38.8343 | |

| ✓ | ✓ | ✓ | 30.75/0.9395 | 29.80/0.9208 | 32.63/0.9313 | 5.5039/26.6139 | 4.9222/34.3614 |

LSD Subset. To investigate the performance of the SOTA deblurring methods in the case of defocus blur, we conduct a benchmark study on the LSD subset. All the training and test samples are from LSD. Quantitative results are reported in Table IV, and qualitative results are shown in Fig. 5. We note that defocus image deblurring is a more complex problem compared with deblurring of motion-blurred images. This is because defocus blur happens when the camera lens cannot converge all incoming light onto one sensor point, causing loss of vital image info, making it difficult to observe details and sharpen edges. Unlike motion blur, defocus severity is closely tied to object depth. Objects at varying distances experience different blurriness. This depth-dependent blur requires precise spatially varying kernel estimation for accurate deblurring. While the SOTA deep deblurring methods can restore high-quality motion-deblurred images synthesized by averaging neighboring frames, the performance of defocus deblurring is significantly lower. Among these methods, MIMO-UNet, as a multi-scale deblurring approach, excels in performance, providing further evidence of the effectiveness of its multi-scale deblurring scheme for enhancing defocused image quality.

RMBQ Subset. In addition, we evaluate the performance of deblurring methods in real-world scenarios. The deblurred images by the evaluated methods (trained on RHM and tested on RMBQ) are shown in Fig. 6 and Fig. 7. Overall, the deblurring methods trained on the proposed MC-Blur dataset generate sharp images given the input, recovering sharp text or image structure. Among these methods, MIMO-UNet exhibits superior qualitative performance in real-world blurry images. For instance, in Fig. 6, the word ‘SPECIAL,’ and in Fig. 7, ‘WET PAINT,’ appear sharper than all other methods. These results suggest that MIMO-UNet can outperform the benchmarking methods in real-world scenarios.

IV-C Efficiency Analysis on UHD Images

Efficiency should be considered when the image resolution is high, especially for UHD images. Table V shows the efficiency evaluation results on UHD images. These experiments are carried out using a standard platform with a P40 GPU. The DeepDeblur [9], SRN [42], DMPHN [40], DBGAN [52], MPRNet [43] and Restormer [44] models require more than ten seconds to process one UHD image. On the other hand, it takes , , seconds for DeblurGAN [38], DeblurGAN-v2 [39] and MIMO-UNet [70] models, respectively, to process one UHD image. We evaluate the speed and parameter efficiency of state-of-the-art deblurring methods. As such, we offer valuable insights into the efficiency of current methods in handling blurry images. Furthermore, this evaluation serves as a benchmark for the research community and underscores the practical applicability of our dataset.

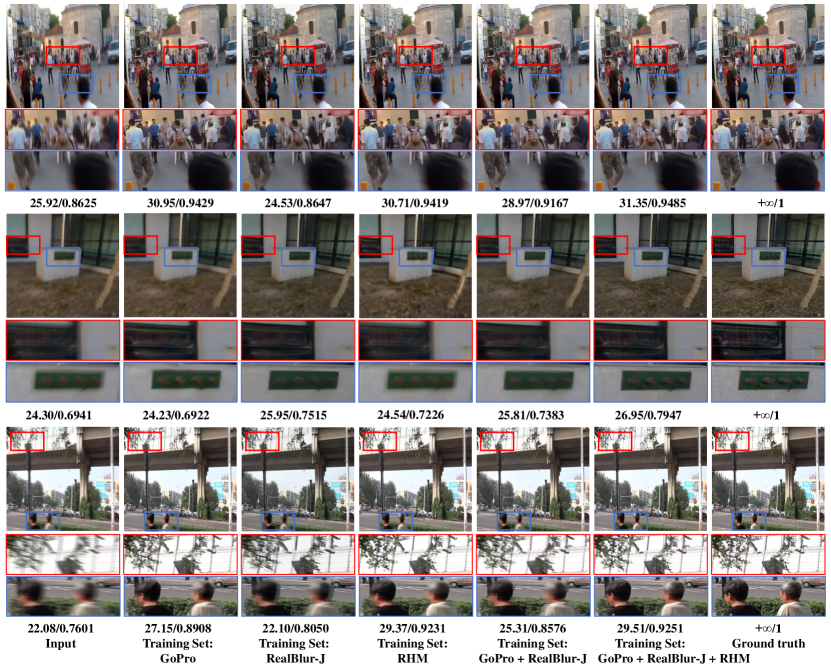

IV-D Cross-dataset Learning for Motion Deblurring

To illustrate the advantages of our RHM over the existing ones, we conduct an analysis with a cross-dataset learning protocol in this section. Specifically, we select a main-stream deblurring method [43] and train this identical deblurring network using five combinations of different training sets. Then we evaluate the trained different versions of [43] on various testing sets. Note that all parameter settings are set as the original paper, except that the epoch is set as . Table VI shows the results.

Based on the top three rows in Table VI, the deblurring network is expected to achieve the best performance when the training and testing datasets come from the same source. It is also observed that our RHM provides better generalization potential to the deblurring network when trained with a single source than its counterparts. For instance, when tested on different test datasets, the network trained with RHM consistently achieves the best performance (see the italic values in the table). If the test set is not constrained to be different from the training source, the best performance will be achieved when the training and the test data sources are identical.

When trained with multiple sources (the bottom two rows in Table VI), the performance is significantly improved if our RHM is taken as an additional training source. Fig. 8 demonstrates exemplar testing results corresponding to Test-1 in Table VI by the deblurring network [43] trained with the five settings of training source. These qualitative results coincide with our analysis from Table VI.

In addition, we also evaluate on other real blurry datasets (i.e., the proposed RMBQ and RWBI datasets [52]), which do not provide ground-truth sharp images. The results in Table VI demonstrate that the network trained with our RHM performs better in real-world scenery.

| Train | Test (PSNR/SSIM) | |||

|---|---|---|---|---|

| LSD | DPDD | LSD | DPDD | LSD&DPDD |

| ✓ | 24.58/0.8311 | 20.77/0.7830 | 22.68/0.8071 | |

| ✓ | 23.77/0.8053 | 21.32/0.7897 | 22.55/0.7975 | |

IV-E The Effectiveness of The LSD Set

As mentioned above, there exists a high-quality real defocus blurry DPDD dataset. Compared to the DPDD dataset, the proposed LSD set consists of heavier defocus blurry images; thus, there is a significant difference between them. In this section, to verify the effectiveness of the proposed LSD, we conduct another experimental study.

Similarly, we train an identical deblurring method [43], using our proposed LSD and DPDD respectively as training sets and then evaluate the trained different versions on different testing sets, including LSD, DPDD and the joint of LSD and DPDD. Table VII shows that, though both LSD and DPDD consist of real defocus blurry images, a model trained on one dataset cannot perform well on the other. Specifically, the model trained on LSD performs better for heavy-defocus deblurring, while the model trained on DPDD performs better on non-heavy-defocus blurry images. It is also important to observe that, for the testing set consisting of the joint of LSD and DPDD, the model trained with LSD performs better than the one prepared with DPDD. This suggests the utility advance of LSD over DPDD. We attribute this to the heavy defocus blurry images in the LSD set.

IV-F Cross-set Learning Regarding Blur Factors

To further study how different blur factors influence the behavior of SOTA methods, we examine how methods trained on the motion blur subset (i.e., RHM) behave on subsets of other factors (i.e., LSD and UHDM). We train MPRNet, Restormer, and MIMO-UNet on the RHM and test these methods respectively on UHDM and LSD.

Experimental results in Table LABEL:table:cross-sets_2 show that models trained on one type of blurry images perform poorly on recovering sharp images from other types of blur. Therefore, proposing a method that can recover sharp images from various kinds of blurry images is still an open topic.

IV-G Discussion

The benchmarking results on different sets of the proposed MC-Blur dataset, reveal several interesting findings. First, GAN-based networks achieve lower values of PSNR & SSIM for motion-blurred images than methods without using the GAN framework. However, the two networks (with and without using the GAN framework) show fewer differences for defocus images. This indicates that paying attention to the whole images (e.g., the adversarial loss function), rather than just considering the pixel level (e.g., L1 and L2 loss functions), may be a direction for defocus deblurring. The results in Tables II, IV support this finding. Second, current deep deblurring networks can generate high-quality images for motion-blurred images. However, it is difficult for them to achieve similar performance on large kernel-based Ultra-High-Definition blurry images (Tables II, III). Since increasing numbers of modern mobile devices allow capturing UHD images, it may be a meaningful direction for researchers to study UHD image deblurring. Third, current deep methods can deblur a non-UHD image in two seconds [40]. However, as shown in Table V, handling a UHD image takes significantly longer. Therefore, generating deblurred UHD images at a high rate while maintaining deblurring performance is still an open problem. In addition, this work has limitations. First, the proposed dataset is mainly for benchmarking single-image deblurring; thus, we do not evaluate video deblurring methods. Second, the MC-Blur dataset does not include human faces, so it is unsuitable for face restoration. Third, except the RMBQ subset, all the other subsets focus on a single blur factor.

V Conclusion

We establish the first large-scale multi-cause image deblurring dataset, MC-Blur, to benchmark deblurring methods on images with blur caused by various factors. The MC-Blur dataset includes a real high fps-based motion-blurred set, a large-kernel Ultra-High-Definition motion-blurred set, a large-scale heavy defocus blurry set and a real mixed blurry set. Based on these unique sets of images, the current SOTA image deblurring approaches are benchmarked to study their advances and limitations in diverse scenarios. Cross-dataset benchmarking is also carried out to verify the advantage of the proposed MC-Blur dataset. As such, we supply a comprehensive understanding of the SOTA image deblurring methods. The established MC-Blur is expected to drive the community’s research of multi-cause image deblurring. In the future, we plan to expand our efforts by generating additional datasets for evaluating video deblurring methods, assessing the performance of current techniques on images afflicted by more than two degrading factors, and exploring the potential advantages of deblurring in applications such as video segmentation and tracking.

References

- [1] K. Zhang, W. Ren, W. Luo, W.-S. Lai, B. Stenger, M.-H. Yang, and H. Li, “Deep image deblurring: A survey,” International Journal of Computer Vision, vol. 130, no. 9, pp. 2103–2130, 2022.

- [2] S. Nah, S. Baik, S. Hong, G. Moon, S. Son, R. Timofte, and K. M. Lee, “Ntire 2019 challenge on video deblurring and super-resolution: Dataset and study,” in IEEE Conference on Computer Vision and Pattern Recognition Workshop, 2019.

- [3] R. Köhler, M. Hirsch, B. Mohler, B. Schölkopf, and S. Harmeling, “Recording and playback of camera shake: Benchmarking blind deconvolution with a real-world database,” in European Conference on Computer Vision, 2012.

- [4] J. Rim, H. Lee, J. Won, and S. Cho, “Real-world blur dataset for learning and benchmarking deblurring algorithms,” in European Conference on Computer Vision, 2020.

- [5] A. Abuolaim and M. S. Brown, “Defocus deblurring using dual-pixel data,” in European Conference on Computer Vision, 2020.

- [6] A. Levin, Y. Weiss, F. Durand, and W. T. Freeman, “Understanding and evaluating blind deconvolution algorithms,” in IEEE Conference on Computer Vision and Pattern Recognition, 2009.

- [7] L. Sun and J. Hays, “Super-resolution from internet-scale scene matching,” in IEEE International Conference on Computational Photography, 2012.

- [8] W.-S. Lai, J.-B. Huang, Z. Hu, N. Ahuja, and M.-H. Yang, “A comparative study for single image blind deblurring,” in IEEE Conference on Computer Vision and Pattern Recognition, 2016.

- [9] S. Nah, T. Hyun Kim, and K. Mu Lee, “Deep multi-scale convolutional neural network for dynamic scene deblurring,” in IEEE Conference on Computer Vision and Pattern Recognition, 2017.

- [10] Z. Shen, W. Wang, X. Lu, J. Shen, H. Ling, T. Xu, and L. Shao, “Human-aware motion deblurring,” in IEEE International Conference on Computer Vision, 2019.

- [11] Z. Jiang, Y. Zhang, D. Zou, J. Ren, J. Lv, and Y. Liu, “Learning event-based motion deblurring,” in IEEE Conference on Computer Vision and Pattern Recognition, 2020.

- [12] S. Su, M. Delbracio, J. Wang, G. Sapiro, W. Heidrich, and O. Wang, “Deep video deblurring for hand-held cameras,” in IEEE Conference on Computer Vision and Pattern Recognition, 2017.

- [13] M. Hradiš, J. Kotera, P. Zemcık, and F. Šroubek, “Convolutional neural networks for direct text deblurring,” in British Machine Vision Conference, 2015.

- [14] Z. Shen, W.-S. Lai, T. Xu, J. Kautz, and M.-H. Yang, “Deep semantic face deblurring,” in IEEE Conference on Computer Vision and Pattern Recognition, 2018.

- [15] S. Zhou, J. Zhang, W. Zuo, H. Xie, J. Pan, and J. S. Ren, “Davanet: Stereo deblurring with view aggregation,” in IEEE Conference on Computer Vision and Pattern Recognition, 2019.

- [16] M. Hirsch, C. J. Schuler, S. Harmeling, and B. Schölkopf, “Fast removal of non-uniform camera shake,” in IEEE International Conference on Computer Vision, 2011.

- [17] Y. Bai, H. Jia, M. Jiang, X. Liu, X. Xie, and W. Gao, “Single-image blind deblurring using multi-scale latent structure prior,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 30, no. 7, pp. 2033–2045, 2019.

- [18] F. Wen, R. Ying, Y. Liu, P. Liu, and T.-K. Truong, “A simple local minimal intensity prior and an improved algorithm for blind image deblurring,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 31, no. 8, pp. 2923–2937, 2020.

- [19] B. Luo, Z. Cheng, L. Xu, G. Zhang, and H. Li, “Blind image deblurring via superpixel segmentation prior,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 32, no. 3, pp. 1467–1482, 2021.

- [20] J. Pan, Z. Hu, Z. Su, and M.-H. Yang, “Deblurring text images via l0-regularized intensity and gradient prior,” in IEEE Conference on Computer Vision and Pattern Recognition, 2014.

- [21] J. Pan, D. Sun, H. Pfister, and M.-H. Yang, “Deblurring images via dark channel prior,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 40, no. 10, pp. 2315–2328, 2017.

- [22] L. Chen, F. Fang, T. Wang, and G. Zhang, “Blind image deblurring with local maximum gradient prior,” in IEEE Conference on Computer Vision and Pattern Recognition, 2019.

- [23] S. Wang, C. Li, Y. Li, Y. Yuan, and G. Wang, “Self-supervised information bottleneck for deep multi-view subspace clustering,” IEEE Transactions on Image Processing, vol. 32, pp. 1555–1567, 2023.

- [24] C. Li, X. Wang, W. Dong, J. Yan, Q. Liu, and H. Zha, “Joint active learning with feature selection via cur matrix decomposition,” IEEE transactions on pattern analysis and machine intelligence, vol. 41, no. 6, pp. 1382–1396, 2018.

- [25] C. Li, F. Wei, W. Dong, X. Wang, Q. Liu, and X. Zhang, “Dynamic structure embedded online multiple-output regression for streaming data,” IEEE transactions on pattern analysis and machine intelligence, vol. 41, no. 2, pp. 323–336, 2018.

- [26] J. Sun, W. Cao, Z. Xu, and J. Ponce, “Learning a convolutional neural network for non-uniform motion blur removal,” in IEEE Conference on Computer Vision and Pattern Recognition, 2015.

- [27] A. Chakrabarti, “A neural approach to blind motion deblurring,” in European Conference on Computer Vision, 2016.

- [28] T. M. Nimisha, A. Kumar Singh, and A. N. Rajagopalan, “Blur-invariant deep learning for blind-deblurring,” in IEEE International Conference on Computer Vision, 2017.

- [29] X. Xu, J. Pan, Y.-J. Zhang, and M.-H. Yang, “Motion blur kernel estimation via deep learning,” IEEE Transactions on Image Processing, vol. 27, no. 1, pp. 194–205, 2017.

- [30] M. Jin, G. Meishvili, and P. Favaro, “Learning to extract a video sequence from a single motion-blurred image,” in IEEE Conference on Computer Vision and Pattern Recognition, 2018.

- [31] T. Hyun Kim, K. Mu Lee, B. Scholkopf, and M. Hirsch, “Online video deblurring via dynamic temporal blending network,” in IEEE International Conference on Computer Vision, 2017.

- [32] M. Aittala and F. Durand, “Burst image deblurring using permutation invariant convolutional neural networks,” in European Conference on Computer Vision, 2018.

- [33] S. Nah, S. Son, and K. M. Lee, “Recurrent neural networks with intra-frame iterations for video deblurring,” in IEEE Conference on Computer Vision and Pattern Recognition, 2019.

- [34] X. Wang, K. C. Chan, K. Yu, C. Dong, and C. Change Loy, “EDVR: Video restoration with enhanced deformable convolutional networks,” in IEEE Conference on Computer Vision and Pattern Recognition Workshop, 2019.

- [35] J. Mustaniemi, J. Kannala, S. Särkkä, J. Matas, and J. Heikkila, “Gyroscope-aided motion deblurring with deep networks,” in IEEE Winter Conference on Applications of Computer Vision, 2019.

- [36] R. Aljadaany, D. K. Pal, and M. Savvides, “Douglas-rachford networks: Learning both the image prior and data fidelity terms for blind image deconvolution,” in IEEE Conference on Computer Vision and Pattern Recognition, 2019.

- [37] A. Kaufman and R. Fattal, “Deblurring using analysis-synthesis networks pair,” in IEEE Conference on Computer Vision and Pattern Recognition, 2020.

- [38] O. Kupyn, V. Budzan, M. Mykhailych, D. Mishkin, and J. Matas, “Deblurgan: Blind motion deblurring using conditional adversarial networks,” in IEEE Conference on Computer Vision and Pattern Recognition, 2018.

- [39] O. Kupyn, T. Martyniuk, J. Wu, and Z. Wang, “Deblurgan-v2: Deblurring (orders-of-magnitude) faster and better,” in IEEE International Conference on Computer Vision, 2019.

- [40] H. Zhang, Y. Dai, H. Li, and P. Koniusz, “Deep stacked hierarchical multi-patch network for image deblurring,” in IEEE Conference on Computer Vision and Pattern Recognition, 2019.

- [41] J. Zhang, J. Pan, J. Ren, Y. Song, L. Bao, R. W. Lau, and M.-H. Yang, “Dynamic scene deblurring using spatially variant recurrent neural networks,” in IEEE Conference on Computer Vision and Pattern Recognition, 2018.

- [42] X. Tao, H. Gao, X. Shen, J. Wang, and J. Jia, “Scale-recurrent network for deep image deblurring,” in IEEE Conference on Computer Vision and Pattern Recognition, 2018.

- [43] S. W. Zamir, A. Arora, S. Khan, M. Hayat, F. S. Khan, M.-H. Yang, and L. Shao, “Multi-stage progressive image restoration,” in IEEE Conference on Computer Vision and Pattern Recognition, 2021.

- [44] S. W. Zamir, A. Arora, S. Khan, M. Hayat, F. S. Khan, and M.-H. Yang, “Restormer: Efficient transformer for high-resolution image restoration,” in IEEE Conference on Computer Vision and Pattern Recognition, 2022.

- [45] H. Gao, X. Tao, X. Shen, and J. Jia, “Dynamic scene deblurring with parameter selective sharing and nested skip connections,” in IEEE Conference on Computer Vision and Pattern Recognition, 2019.

- [46] D. Ren, K. Zhang, Q. Wang, Q. Hu, and W. Zuo, “Neural blind deconvolution using deep priors,” in IEEE Conference on Computer Vision and Pattern Recognition, 2020.

- [47] S. Zhou, J. Zhang, J. Pan, H. Xie, W. Zuo, and J. Ren, “Spatio-temporal filter adaptive network for video deblurring,” in IEEE International Conference on Computer Vision, 2019.

- [48] J. Pan, H. Bai, and J. Tang, “Cascaded deep video deblurring using temporal sharpness prior,” in IEEE Conference on Computer Vision and Pattern Recognition, 2020.

- [49] K. Zhang, W. Luo, Y. Zhong, L. Ma, W. Liu, and H. Li, “Adversarial spatio-temporal learning for video deblurring,” IEEE Transactions on Image Processing, vol. 28, no. 1, pp. 291–301, 2018.

- [50] W. Niu, K. Zhang, W. Luo, and Y. Zhong, “Blind motion deblurring super-resolution: When dynamic spatio-temporal learning meets static image understanding,” IEEE Transactions on Image Processing, vol. 30, pp. 7101–7111, 2021.

- [51] W. Niu, K. Zhang, W. Luo, Y. Zhong, and H. Li, “Deep robust image deblurring via blur distilling and information comparison in latent space,” Neurocomputing, vol. 466, pp. 69–79, 2021.

- [52] K. Zhang, W. Luo, Y. Zhong, B. Stenger, L. Ma, W. Liu, and H. Li, “Deblurring by realistic blurring,” in IEEE Conference on Computer Vision and Pattern Recognition, 2020.

- [53] H. Chen, J. Gu, O. Gallo, M.-Y. Liu, A. Veeraraghavan, and J. Kautz, “Reblur2deblur: Deblurring videos via self-supervised learning,” in IEEE International Conference on Computational Photography, 2018.

- [54] J. Rim, G. Kim, J. Kim, J. Lee, S. Lee, and S. Cho, “Realistic blur synthesis for learning image deblurring,” in European Conference on Computer Vision, 2022.

- [55] T. Madam Nimisha, K. Sunil, and A. Rajagopalan, “Unsupervised class-specific deblurring,” in European Conference on Computer Vision, 2018.

- [56] B. Lu, J.-C. Chen, and R. Chellappa, “Unsupervised domain-specific deblurring via disentangled representations,” in IEEE Conference on Computer Vision and Pattern Recognition, 2019.

- [57] J. Li, W. Wang, Y. Nan, and H. Ji, “Self-supervised blind motion deblurring with deep expectation maximization,” in IEEE Conference on Computer Vision and Pattern Recognition, 2023.

- [58] K. Purohit and A. Rajagopalan, “Region-adaptive dense network for efficient motion deblurring,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 07, 2020, pp. 11 882–11 889.

- [59] M. Suin, K. Purohit, and A. Rajagopalan, “Spatially-attentive patch-hierarchical network for adaptive motion deblurring,” in IEEE Conference on Computer Vision and Pattern Recognition, 2020.

- [60] P. D. Park, D. U. Kang, J. Kim, and S. Y. Chun, “Multi-temporal recurrent neural networks for progressive non-uniform single image deblurring with incremental temporal training,” in European Conference on Computer Vision, 2020.

- [61] X. Zhang, T. Wang, R. Jiang, L. Zhao, and Y. Xu, “Multi-attention convolutional neural network for video deblurring,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 32, no. 4, pp. 1986–1997, 2021.

- [62] L. Sun, C. Sakaridis, J. Liang, Q. Jiang, K. Yang, P. Sun, Y. Ye, K. Wang, and L. V. Gool, “Event-based fusion for motion deblurring with cross-modal attention,” in European Conference on Computer Vision, 2022.

- [63] L. Kong, J. Dong, J. Ge, M. Li, and J. Pan, “Efficient frequency domain-based transformers for high-quality image deblurring,” in IEEE Conference on Computer Vision and Pattern Recognition, 2023.

- [64] A. Dudhane, S. W. Zamir, S. Khan, F. S. Khan, and M.-H. Yang, “Burstormer: Burst image restoration and enhancement transformer,” IEEE Conference on Computer Vision and Pattern Recognition, 2023.

- [65] D. Park, B. H. Lee, and S. Y. Chun, “All-in-one image restoration for unknown degradations using adaptive discriminative filters for specific degradations,” in IEEE Conference on Computer Vision and Pattern Recognition. IEEE, 2023.

- [66] D. Li, C. Xu, K. Zhang, X. Yu, Y. Zhong, W. Ren, H. Suominen, and H. Li, “Arvo: Learning all-range volumetric correspondence for video deblurring,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 7721–7731.

- [67] X. Hu, W. Ren, K. Yu, K. Zhang, X. Cao, W. Liu, and B. Menze, “Pyramid architecture search for real-time image deblurring,” in IEEE International Conference on Computer Vision, 2021.

- [68] P. Wang, L. Zhao, R. Ma, and P. Liu, “Bad-nerf: Bundle adjusted deblur neural radiance fields,” in IEEE Conference on Computer Vision and Pattern Recognition, 2023.

- [69] G. Boracchi and A. Foi, “Modeling the performance of image restoration from motion blur,” IEEE Transactions on Image Processing, 2012.

- [70] S.-J. Cho, S.-W. Ji, J.-P. Hong, S.-W. Jung, and S.-J. Ko, “Rethinking coarse-to-fine approach in single image deblurring,” in IEEE International Conference on Computer Vision, 2021.