MDEAW: A Multimodal Dataset for Emotion Analysis through EDA and PPG signals from wireless wearable low-cost off-the-shelf Devices

Abstract

We present MDEAW, a multimodal database consisting of Electrodermal Activity (EDA) and Photoplethysmography (PPG) signals recorded during the exams for a course taught at Eurecat Academy, Sabadell, Barcelona, in order to elicit emotional reactions from students in a classroom scenario. Signals from 10 students were recorded along with the students’ self-assessment of their affective state after each stimulus, in terms of six basic emotion states. All signals were captured using portable, wearable, wireless, low-cost, off-the-shelf equipment that has the potential to allow the use of affective computing methods in everyday applications. A baseline for student-wise affect recognition using EDA- and PPG-based features, as well as their fusion, was established through ReMECS, Fed-ReMECS and Fed-ReMECS-U. These results indicate the prospects of using low-cost devices for affective state recognition applications. The proposed database will be made publicly available in order to allow researchers to achieve a more thorough evaluation of the suitability of these capturing devices for emotion state recognition applications.

Index Terms:

Affective Computing, Dataset for Emotion Recognition, E-learning Dataset for Emotion Analysis, Federated Learning, Data Streaming, Students’ Emotion State Analysis

I Motivation

The main motivation for conducting this experiment at Eurecat’s Augmented Workplace is to see how emotions affect students’ learning outcomes. The hypothesis is that positive emotions promote good learning outcomes, while negative emotions could be a reason for bad results. From a common-sense perspective, this hypothesis sounds reasonable; the goal of this study is to verify it formally. Also, to the best of our knowledge, there is no suitable dataset in the emotion research domain that relates to emotional state analysis in a real-life e-learning or education context.

II Experimental Protocol

II-A Experimental environment

The experiment was conducted in a classroom at Eurecat Academy, Sabadell, Barcelona. It was a regular classroom, fully equipped with machinery and other instruments. Fig. 1 shows the actual classroom where the experiment was conducted.

II-B Learning exercise

The stimuli used in this experiment are the exams for a course taught at Eurecat Academy, Sabadell, Barcelona. They were used to elicit emotional reactions from the students in a classroom scenario while Electrodermal Activity (EDA) and Photoplethysmography (PPG) data were recorded. The exam was designed by the tutor of the course and was divided into smaller exercises (see Appendix A for the examination exercises).

Each exercise set was allotted a 5-minute slot and there were 12 sets in total. Between sets we gave the students a 2-minute gap to “cool down”, so the whole exam session lasted 84 minutes, which is consistent with 12 × (5 + 2) minutes. Students were also advised that their annotations would not affect their evaluations, to avoid any possible bias. Exercise Sets 1 to 7, 11 and 12 had four questions each, while Sets 8 to 10 had one subjective question each. The exercise sets are included in the additional materials for further reference.

Before starting the exam, we explained the whole exam pattern to the students. They were asked to report the emotional state they felt after answering each question. For this input we used the six basic discrete emotions model [1] (https://www.paulekman.com/universal-emotions/) developed by Paul Ekman, a widely accepted theory of basic emotions and their expressions. The emotion labels are as follows:

1. Sadness
2. Happiness
3. Fear
4. Anger
5. Surprise
6. Disgust

II-C Students

Ten students took part in the exam. All of them were male, and their ages ranged from 23 to 57 years.

II-D Data Acquisition

The EDA and PPG data were recorded with the Consensys Bundle Development Kit from Shimmer Sensing. The sensor is pictured in Fig. 2.

Before starting the exam, we fitted the sensors on the students, as shown in Fig. 3, to collect the EDA and PPG data. The sensors were placed on the non-dominant hand of each student (for example, on the left hand of a right-handed student). The whole experiment was carried out in two sessions, with five students in each session.

Fig. 4 shows the class during the experiment.

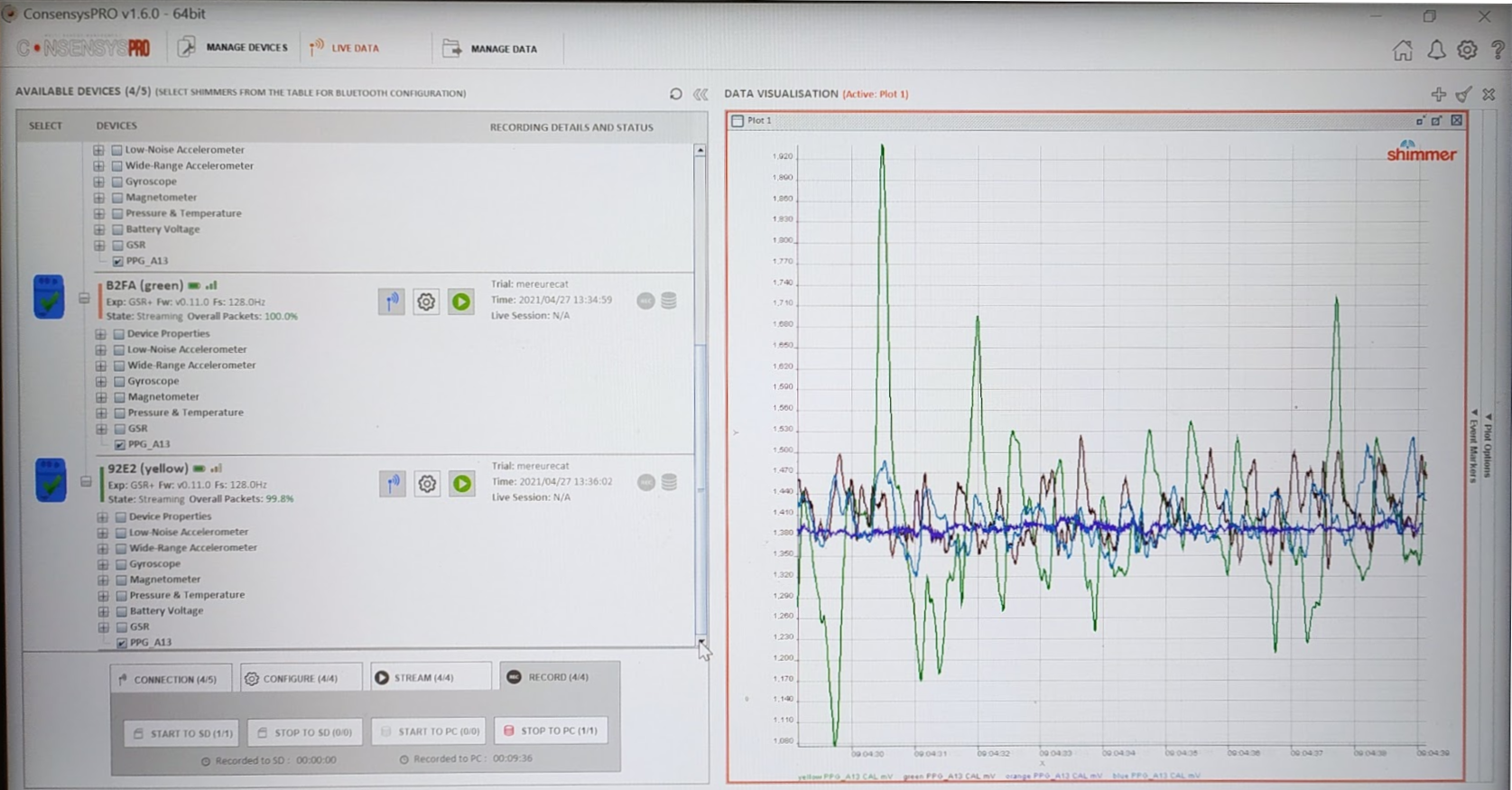

Data from the multiple Shimmer sensors were collected using Shimmer’s Consensys software, which records and saves all the data streams. Fig. 5 shows the software interface during the experiment.

II-E Data Formatting

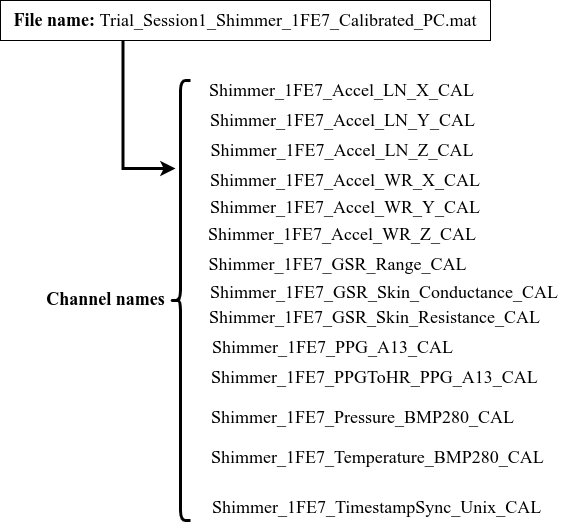

The data recorded in Shimmer’s Consensys software are exported in two formats, .csv and .mat. In each file, the data are saved in the following format (Fig. 6):

Each .mat or .csv file contains the channels available on the Shimmer sensor. From the filename shown in Fig. 6, we can see that the Consensys software names the file in the following format:

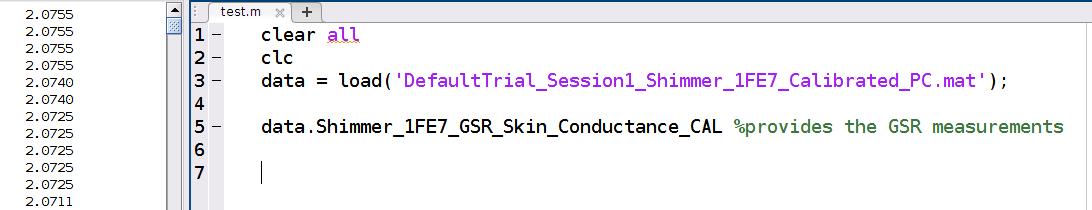

Individual sensor recordings can be accessed by channel name using the dot (.) operator, as illustrated in Fig. 7.

The emotion labels were saved in a .csv file whose name is composed of [session name + student ID + type of data (EDA/PPG)].

II-F Data Set

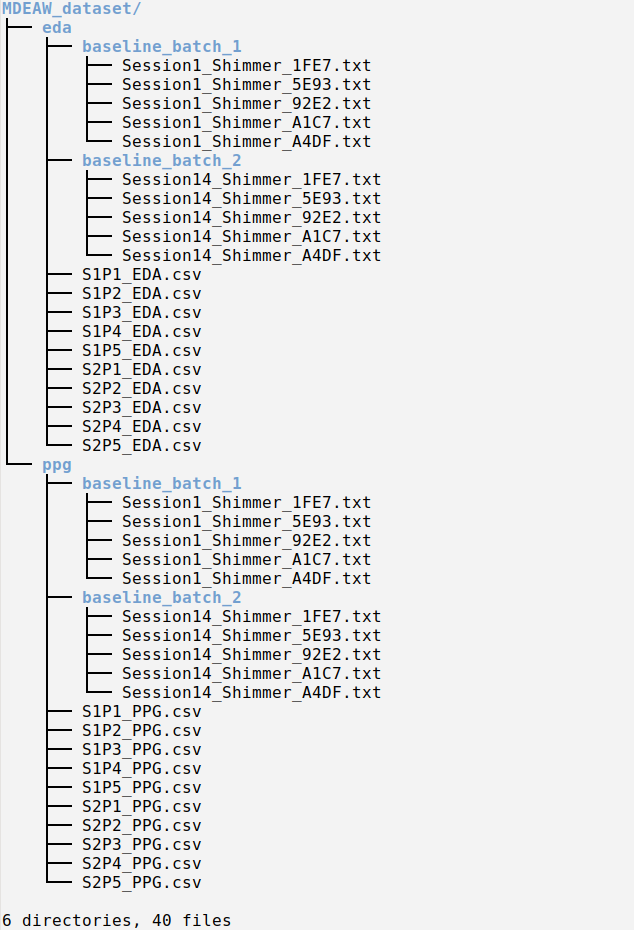

For easy accessibility and better understandability, we have extracted only the EDA and PPG data from the recordings saved in Shimmer’s Consensys software. The final dataset, containing the EDA and PPG recordings together with the emotion labels, is saved in .csv files. The folder structure of the MDEAW dataset is shown in Fig. 8.

Inside each csv file the EDA and PPG recordings are organised as follows:

The MDEAW dataset will be made publicly available.

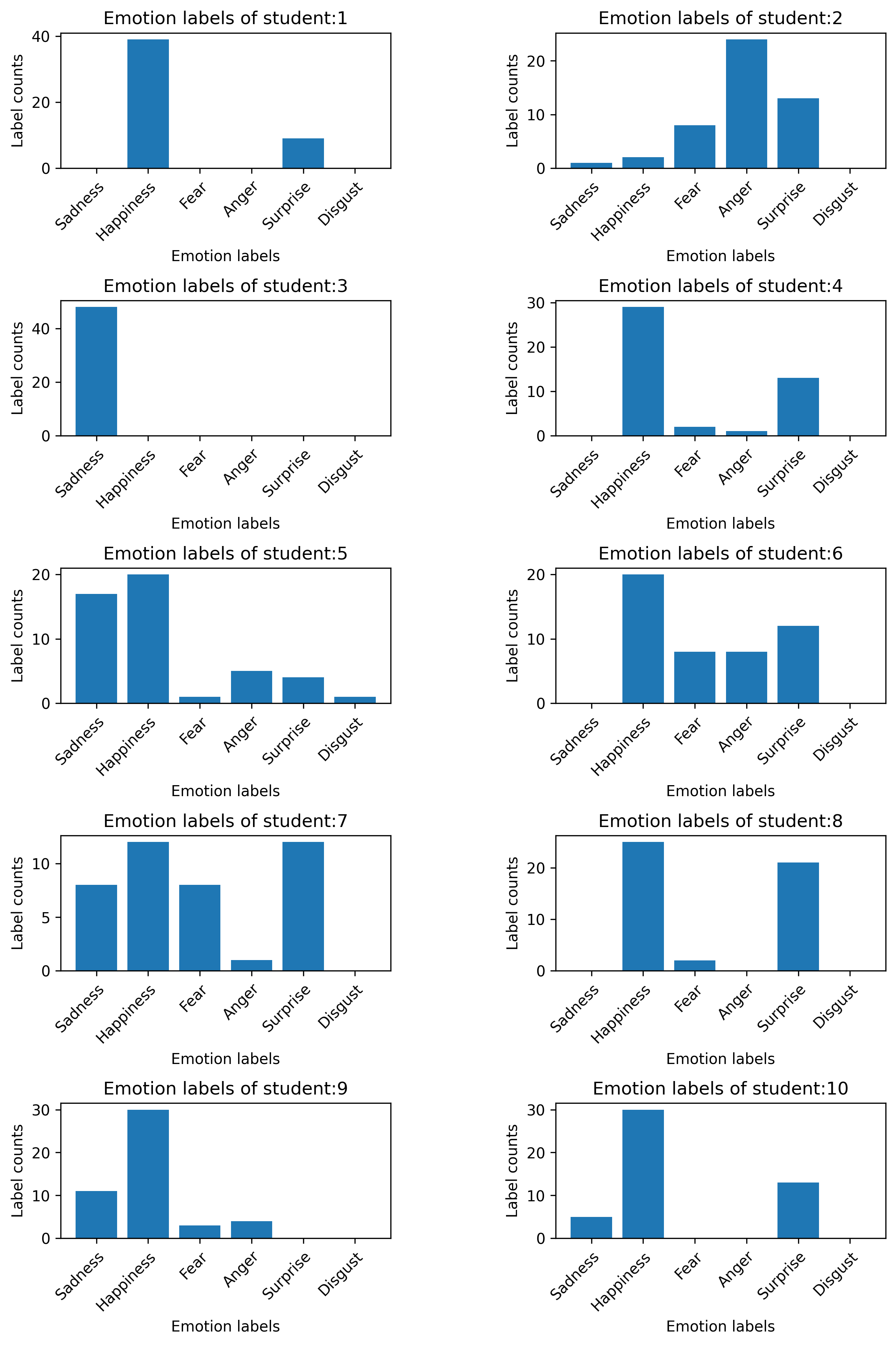

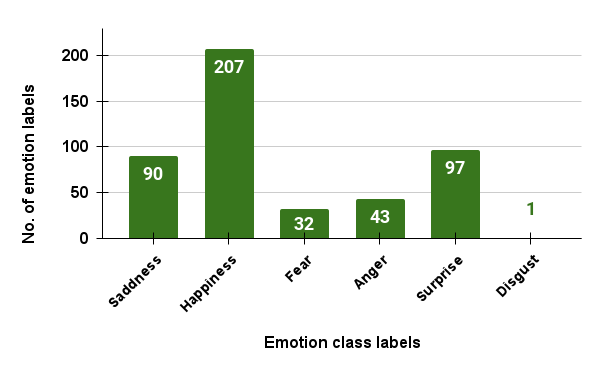

Fig. 9 presents the emotion class frequencies per student, and Fig. 10 presents the overall emotion class frequencies for the MDEAW dataset. Together, these figures show the class distribution of the MDEAW dataset.
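Class frequencies of this kind can be tallied directly from the label files. The sketch below is illustrative only: the column names `student_id` and `emotion` and the sample rows are assumptions made for the example and do not reflect the actual layout of the MDEAW files.

```python
import csv
import io
from collections import Counter, defaultdict

# Hypothetical label records; the real MDEAW column names and values may differ.
SAMPLE_LABELS = """student_id,emotion
S01,Happiness
S01,Happiness
S01,Fear
S02,Sadness
S02,Happiness
"""


def class_frequencies(csv_text):
    """Return (per-student counts, overall counts) of the emotion labels."""
    per_student = defaultdict(Counter)
    overall = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        per_student[row["student_id"]][row["emotion"]] += 1
        overall[row["emotion"]] += 1
    return per_student, overall


per_student, overall = class_frequencies(SAMPLE_LABELS)
```

With real data, `io.StringIO(csv_text)` would be replaced by an open file handle for each label file, and the per-student counters would be accumulated across files.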

III Analysis of the Data Set

III-A Feature Extraction

It is important to extract features from the physiological signals (such as EDA and PPG) in order to retrieve relevant information that effectively represents emotion states. The emotion classifier is trained and tested on the extracted features.

The most popular and widely used time-frequency analysis technique for extracting features from signals such as EDA and PPG is Wavelet Decomposition (WD) [2]. Its popularity is due to its localized (i.e., time-frequency) analysis, multi-rate filtering, and multi-scale zooming, which make it better suited to non-stationary signals such as EDA and PPG [3]. The most frequently used wavelet base functions are the Meyer, Morlet, Haar and Daubechies wavelets [4]. The features most frequently extracted from each sub-band of EDA and PPG are entropy, median, mean, standard deviation, variance, the 5th, 25th, 75th and 95th percentile values, root mean square value, zero crossing rate, and mean crossing rate [5, 6, 7].

In our experiment we extracted these features and used them for emotion classification. Wavelet feature extraction is applied to the multi-modal signal streams (the EDA and PPG signals from the dataset), with the Daubechies 4 (Db4) wavelet as the base function. Each of the two signals, EDA and PPG, is decomposed into three levels.
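The per-sub-band statistics listed above can be computed with the Python standard library alone. The following is a minimal sketch, not the authors' implementation: the function names, the histogram-based entropy with 10 bins, the linear-interpolation percentile and the use of population variance are all assumptions made for illustration. The coefficient list stands in for one sub-band of a three-level Db4 decomposition, which could be obtained, for example, with PyWavelets via `pywt.wavedec(signal, "db4", level=3)`.

```python
import math
import statistics
from collections import Counter


def percentile(sorted_vals, q):
    """Linear-interpolation percentile (q in [0, 100]) of a sorted list."""
    pos = (len(sorted_vals) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (pos - lo)


def crossing_rate(values, level=0.0):
    """Fraction of consecutive pairs lying strictly on opposite sides of `level`."""
    if len(values) < 2:
        return 0.0
    crossings = sum(
        1 for a, b in zip(values, values[1:]) if (a - level) * (b - level) < 0
    )
    return crossings / (len(values) - 1)


def shannon_entropy(values, bins=10):
    """Shannon entropy (in nats) of a histogram of the values (bin count assumed)."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0  # avoid a zero width for constant input
    counts = Counter(min(int((v - lo) / width), bins - 1) for v in values)
    n = len(values)
    return -sum((c / n) * math.log(c / n) for c in counts.values())


def subband_features(coeffs):
    """Statistical features of one wavelet sub-band (a list of coefficients)."""
    ordered = sorted(coeffs)
    mean = statistics.fmean(coeffs)
    return {
        "entropy": shannon_entropy(coeffs),
        "median": statistics.median(coeffs),
        "mean": mean,
        "std": statistics.pstdev(coeffs),
        "variance": statistics.pvariance(coeffs),
        "p5": percentile(ordered, 5),
        "p25": percentile(ordered, 25),
        "p75": percentile(ordered, 75),
        "p95": percentile(ordered, 95),
        "rms": math.sqrt(sum(v * v for v in coeffs) / len(coeffs)),
        "zero_crossing_rate": crossing_rate(coeffs, 0.0),
        "mean_crossing_rate": crossing_rate(coeffs, mean),
    }


# Toy coefficients standing in for one sub-band of a decomposed signal.
features = subband_features([1.0, -1.0, 1.0, -1.0])
```

Applying `subband_features` to every sub-band of both modalities and concatenating the resulting values gives one feature vector per modality.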

III-A1 Fusion of EDA and PPG-based features

The use of features based on multiple modalities has been shown to provide increased classification accuracy compared to approaches based on a single modality. In order to evaluate the performance of the combined EDA- and PPG-based features, the two feature vectors $F_{EDA}$ and $F_{PPG}$ are fused as follows. First, the values of each feature vector are normalised to the range [0, 1] in order to compensate for differences in numerical range. Then, the two normalised feature vectors $\hat{F}_{EDA}$ and $\hat{F}_{PPG}$ are concatenated into the final feature vector $F = [\hat{F}_{EDA}, \hat{F}_{PPG}]$.
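The two fusion steps, normalisation followed by concatenation, can be sketched as below. This is an illustration under stated assumptions: min-max scaling is applied within each feature vector, a constant vector is mapped to zeros, and the function names and sample values are hypothetical.

```python
def min_max_normalise(vec):
    """Scale the values of one feature vector to the range [0, 1]."""
    lo, hi = min(vec), max(vec)
    if hi == lo:
        # Assumption: a constant vector carries no range information.
        return [0.0 for _ in vec]
    return [(v - lo) / (hi - lo) for v in vec]


def fuse(eda_features, ppg_features):
    """Concatenate the normalised EDA and PPG feature vectors."""
    return min_max_normalise(eda_features) + min_max_normalise(ppg_features)


# Toy vectors with very different numerical ranges.
fused = fuse([2.0, 4.0, 6.0], [100.0, 300.0])
```

After normalisation both modalities contribute values on the same scale, so neither dominates the fused vector merely because of its units.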

IV Emotion Recognition Results for the Data Set

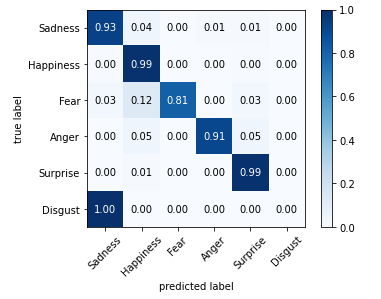

For the emotion recognition results, we used our previously developed Real-time Multimodal Emotion Classification System (ReMECS) [8], which is based on a Feed-Forward Neural Network trained in an online fashion using the Incremental Stochastic Gradient Descent algorithm. The average accuracy and F1-score, along with their standard deviation (STD), are presented in Table I. More results can be found in Appendix B.
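To make the idea of online training concrete, the sketch below updates a small feed-forward network one sample at a time with stochastic gradient descent. It is not the ReMECS implementation: the class `TinyFFNN`, its single tanh hidden layer, the learning rate, the test-then-train evaluation loop and the synthetic two-class stream are all assumptions chosen to keep the example short.

```python
import math
import random


class TinyFFNN:
    """One-hidden-layer network updated one sample at a time (incremental SGD)."""

    def __init__(self, n_in, n_hidden, n_out, lr=0.2, seed=0):
        rng = random.Random(seed)
        self.lr = lr
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def _forward(self, x):
        # tanh hidden layer followed by a numerically stable softmax.
        h = [math.tanh(sum(w * v for w, v in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        z = [sum(w * v for w, v in zip(row, h)) + b
             for row, b in zip(self.w2, self.b2)]
        top = max(z)
        exps = [math.exp(v - top) for v in z]
        total = sum(exps)
        return h, [e / total for e in exps]

    def predict(self, x):
        _, probs = self._forward(x)
        return probs.index(max(probs))

    def partial_fit(self, x, y):
        # One SGD step on the cross-entropy loss of a single (x, y) pair.
        h, probs = self._forward(x)
        dz = [p - (1.0 if k == y else 0.0) for k, p in enumerate(probs)]
        dh = [sum(dz[k] * self.w2[k][j] for k in range(len(dz))) * (1.0 - h[j] ** 2)
              for j in range(len(h))]
        for k, g in enumerate(dz):
            self.w2[k] = [w - self.lr * g * hj for w, hj in zip(self.w2[k], h)]
            self.b2[k] -= self.lr * g
        for j, g in enumerate(dh):
            self.w1[j] = [w - self.lr * g * xi for w, xi in zip(self.w1[j], x)]
            self.b1[j] -= self.lr * g


def prequential_accuracy(model, stream):
    """Predict each incoming sample first, then train on it (test-then-train)."""
    correct = total = 0
    for x, y in stream:
        correct += int(model.predict(x) == y)
        model.partial_fit(x, y)
        total += 1
    return correct / total


# Synthetic two-class stream standing in for fused EDA/PPG feature vectors.
rng = random.Random(1)
prototypes = {0: [1.0, 0.0], 1: [0.0, 1.0]}
stream = [(prototypes[y], y) for y in (rng.randrange(2) for _ in range(1000))]
model = TinyFFNN(n_in=2, n_hidden=8, n_out=2)
accuracy = prequential_accuracy(model, stream)
```

Because every sample is used for prediction before it is used for training, the running accuracy reflects performance on unseen data without a separate hold-out set.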

| Dataset | Avg. accuracy (STD) | Avg. F1-score (STD) |
|---|---|---|
| MDEAW | 0.9583 (0.1998) | 0.9583 (0.1998) |

Also, the confusion matrix is presented in Fig. 11.

V A New Round of the Experiment

We have planned a new round of the experiment to extend the dataset. The reason for conducting another round is to make the MDEAW dataset more diverse and to increase the number of data samples by increasing the number of participants. Diversity benefits every stage of the machine learning process: diversity of the training data ensures that the data provide more discriminative information for the model; diversity of the learned model (diversity in the parameters of each model or among different base models) lets each parameter or model capture unique or complementary information; and diversity in inference provides multiple choices, each of which corresponds to a specific plausible locally optimal result [9]. Having a larger number of diverse data samples in the MDEAW dataset will help ML/DL models to generalize better, so that the accuracy and efficiency of emotion recognition models increase.

In the new round of the experiment, we will follow the same design as in the first experiment for collecting EDA and PPG data from the students, but the course and the subject may be different or the same, based on availability. We add the term “based on availability” because we run the experiment with real students on real courses taught at Eurecat Academy.

Right now, the dataset contains data from 10 students, which is rather small; if we train any ML/DL model on it in offline mode, the model may easily overfit. By increasing the number of data samples in the MDEAW dataset we can mitigate this issue, so that ML/DL models can learn and generalize properly and the trained models can be used for emotion classification from new EDA and PPG signals. We also aim to make the MDEAW dataset more diverse and balanced, so that ML/DL models can achieve good accuracy for emotion classification.

Acknowledgement

The authors would like to thank the tutors and students at Eurecat - Centro Tecnológico de Cataluña for their participation and collaboration in the experimental study.

This study is carried out as part of the Ph.D. work of Arijit Nandi and has been funded by ACCIÓ, Catalunya, Spain (Pla d’Actuació de Centres Tecnològics 2021) under the project TutorIA for three years, from December 2019 to December 2022.

Disclaimer

While every care has been taken to ensure the accuracy of the data included in the MDEAW dataset, the authors and Eurecat - Centro Tecnológico de Cataluña, Barcelona, Spain do not provide any guarantees and disclaim all responsibility and all liability (including, without limitation, liability in negligence) for all expenses, losses, damages (including indirect or consequential damage) and costs which you might incur as a result of the provided data being inaccurate or incomplete in any way and for any reason. © 2022, Eurecat - Centro Tecnológico de Cataluña, Barcelona, Spain.

Contact

For any questions regarding the MDEAW database please contact: mail@eurecat.org Eurecat - Centro Tecnológico de Cataluña, Barcelona, Spain.

References

- [1] P. Ekman, “An argument for basic emotions,” Cognition and Emotion, vol. 6, no. 3-4, pp. 169–200, 1992. [Online]. Available: https://doi.org/10.1080/02699939208411068

- [2] M. R. Islam and M. Ahmad, “Wavelet analysis based classification of emotion from EEG signal,” in Int’l Conf. on Electrical, Computer and Comm. Eng., 2019, pp. 1–6.

- [3] J. Zhang, Z. Yin, P. Chen, and S. Nichele, “Emotion recognition using multi-modal data and machine learning techniques: A tutorial and review,” Information Fusion, vol. 59, pp. 103–126, 2020.

- [4] A. Subasi, “EEG signal classification using wavelet feature extraction and a mixture of expert model,” Expert Systems with Applications, vol. 32, no. 4, pp. 1084–1093, 2007.

- [5] P. Bota, C. Wang, A. Fred, and H. Silva, “Emotion assessment using feature fusion and decision fusion classification based on physiological data: Are we there yet?” Sensors, vol. 20, no. 17, 2020.

- [6] D. Ayata, Y. Yaslan, and M. E. Kamasak, “Emotion recognition from multimodal physiological signals for emotion aware healthcare systems,” J. of Medical and Biological Eng., pp. 149–157, 2020.

- [7] D. Ayata, Y. Yaslan, and M. Kamaşak, “Emotion recognition via random forest and galvanic skin response: Comparison of time based feature sets, window sizes and wavelet approaches,” in Medical Technologies National Congress, 2016, pp. 1–4.

- [8] A. Nandi, F. Xhafa, L. Subirats, and S. Fort, “Real-time multimodal emotion classification system in e-learning context,” in Proceedings of the 22nd Engineering Applications of Neural Networks Conference. Cham: Springer International Publishing, 2021, pp. 423–435.

- [9] Z. Gong, P. Zhong, and W. Hu, “Diversity in machine learning,” IEEE Access, vol. 7, pp. 64323–64350, 2019.

- [10] A. Nandi and F. Xhafa, “A federated learning method for real-time emotion state classification from multi-modal streaming,” Methods, vol. 204, pp. 340–347, 2022. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S104620232200072X

- [11] A. Nandi, F. Xhafa, L. Subirats, and S. Fort, “Federated learning with exponentially weighted moving average for real-time emotion classification,” in Proceedings of the 13th International Symposium on Ambient Intelligence Conference. Cham: Springer International Publishing, 2022, pp. 423–435.

Appendix A Learning Examination Exercises

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2cc34876-5a1a-4078-a6f6-db047718a3ff/page-0001.jpg)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2cc34876-5a1a-4078-a6f6-db047718a3ff/page-0002.jpg)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2cc34876-5a1a-4078-a6f6-db047718a3ff/page-0003.jpg)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2cc34876-5a1a-4078-a6f6-db047718a3ff/page-0004.jpg)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2cc34876-5a1a-4078-a6f6-db047718a3ff/page-0005.jpg)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2cc34876-5a1a-4078-a6f6-db047718a3ff/page-0006.jpg)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2cc34876-5a1a-4078-a6f6-db047718a3ff/page-0007.jpg)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2cc34876-5a1a-4078-a6f6-db047718a3ff/page-0008.jpg)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2cc34876-5a1a-4078-a6f6-db047718a3ff/page-0009.jpg)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2cc34876-5a1a-4078-a6f6-db047718a3ff/page-0010.jpg)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2cc34876-5a1a-4078-a6f6-db047718a3ff/page-0011.jpg)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2cc34876-5a1a-4078-a6f6-db047718a3ff/page-0012.jpg)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2cc34876-5a1a-4078-a6f6-db047718a3ff/page-0013.jpg)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2cc34876-5a1a-4078-a6f6-db047718a3ff/page-0014.jpg)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2cc34876-5a1a-4078-a6f6-db047718a3ff/page-0015.jpg)

Appendix B Further Data Analysis Results

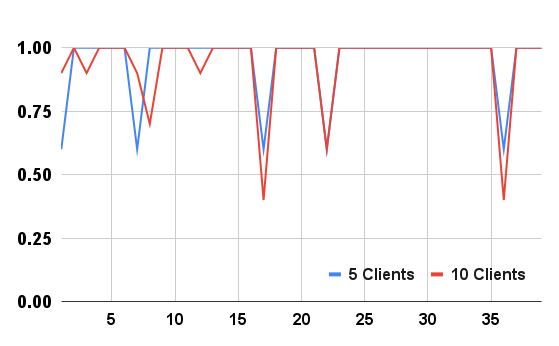

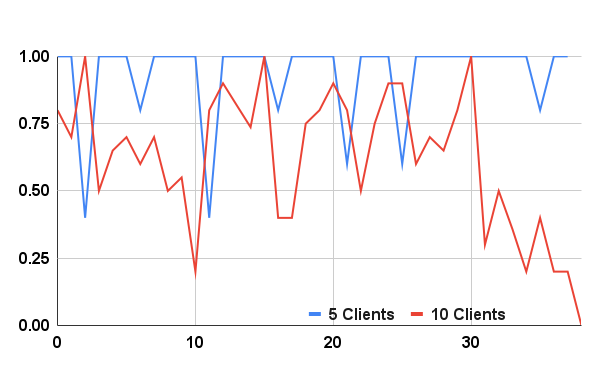

We have applied our previously developed frameworks Fed-ReMECS [10] and Fed-ReMECS-U [11] to the MDEAW dataset. Some of the results are shown here.

B-A Results from Fed-ReMECS Framework

| No. of clients | Accuracy |
|---|---|
| 5 | 0.9315 (0.16) |
| 10 | 0.6195 (0.25) |

The global model performance for Fed-ReMECS is shown in Fig. 12.

B-B Results from Fed-ReMECS-U Framework

| No. of clients | Accuracy |
|---|---|
| 5 | 0.9487 (0.13) |
| 10 | 0.9410 (0.15) |

The global model performance for Fed-ReMECS-U is shown in Fig. 13.