Memory Approximate Message Passing

Abstract

Approximate message passing (AMP) is a low-cost iterative parameter-estimation technique for certain high-dimensional linear systems with non-Gaussian distributions. However, AMP only applies to independent identically distributed (IID) transform matrices and may become unreliable for other matrix ensembles, especially ill-conditioned ones. To handle this difficulty, orthogonal/vector AMP (OAMP/VAMP) was proposed for general right-unitarily-invariant matrices. However, the Bayes-optimal OAMP/VAMP requires a high-complexity linear minimum mean square error (LMMSE) estimator. To overcome the disadvantages of AMP and OAMP/VAMP, this paper proposes a memory AMP (MAMP), in which a long-memory matched filter is proposed for interference suppression. The complexity of MAMP is comparable to that of AMP. The asymptotic Gaussianity of estimation errors in MAMP is guaranteed by the orthogonality principle. A state evolution is derived to asymptotically characterize the performance of MAMP. Based on the state evolution, the relaxation parameters and damping vector in MAMP are optimized. For all right-unitarily-invariant matrices, the optimized MAMP converges to OAMP/VAMP, and thus is Bayes-optimal if it has a unique fixed point. Finally, simulations are provided to verify the validity and accuracy of the theoretical results.

I Introduction

Consider the problem of signal reconstruction for a noisy linear system:

$$\mathbf{y} = \mathbf{A}\mathbf{x} + \mathbf{n}, \qquad (1)$$

where $\mathbf{y}$ is an $M \times 1$ vector of observations, $\mathbf{A}$ is an $M \times N$ transform matrix, $\mathbf{x}$ is an $N \times 1$ vector to be estimated, and $\mathbf{n}$ is a vector of Gaussian additive noise samples. The entries of $\mathbf{x}$ are independent and identically distributed (IID) with zero mean and unit variance. In this paper, we consider a large system with $M, N \to \infty$ and a fixed $\delta = M/N$ (compression ratio). In the special case when $\mathbf{x}$ is Gaussian, the optimal solution can be obtained using standard linear minimum mean square error (MMSE) methods. Otherwise, the problem is in general NP-hard [2, 3].

I-A Background

Approximate message passing (AMP) has attracted extensive research interest for this problem [4, 5]. AMP adopts a low-complexity matched filter (MF), so its complexity is as low as $O(MN)$ per iteration. Remarkably, the asymptotic performance of AMP can be described by a scalar recursion called state evolution, derived heuristically in [5] and proved rigorously in [4]. State evolution analysis in [4] implies that AMP is Bayes-optimal for zero-mean IID sub-Gaussian sensing matrices when the compression rate is larger than a certain threshold [7]. Spatial coupling [7, 8, 9, 10] can be used to make AMP Bayes-optimal for any compression rate.
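As a point of reference, the sketch below shows classical AMP in the form popularized by [5]: a matched-filter step, a scalar denoiser, and an Onsager correction on the residual, so each iteration costs two matrix-vector products, i.e., $O(MN)$. It assumes a real-valued $\mathbf{A}$ with IID $\mathcal{N}(0, 1/M)$ entries and a soft-thresholding denoiser; the threshold rule $\alpha\tau_t$ with the hypothetical default `alpha=1.5` is an illustrative choice and is not taken from this paper.

```python
import numpy as np

def soft_threshold(u, theta):
    """Elementwise soft-thresholding denoiser eta(u; theta)."""
    return np.sign(u) * np.maximum(np.abs(u) - theta, 0.0)

def amp_soft_threshold(y, A, n_iter=30, alpha=1.5):
    """Real-valued AMP with a soft-thresholding denoiser (illustrative sketch).

    Assumes A has IID N(0, 1/M) entries. The threshold alpha * tau_t, with tau_t
    estimated from the residual norm, is a common heuristic assumed here.
    """
    M, N = A.shape
    delta = M / N
    x = np.zeros(N)
    z = y.copy()
    for _ in range(n_iter):
        tau = np.sqrt(np.mean(z ** 2))    # effective noise level estimate
        r = x + A.T @ z                    # matched-filter (MF) step, O(MN)
        theta = alpha * tau
        x_new = soft_threshold(r, theta)
        # Onsager term: (1/delta) times the average derivative of the denoiser
        onsager = np.mean(np.abs(r) > theta) / delta
        z = y - A @ x_new + onsager * z    # corrected residual, O(MN)
        x = x_new
    return x
```

The Onsager term relies on the IID structure of $\mathbf{A}$; for other ensembles this loop may perform poorly or diverge, as noted in [11, 12, 13].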

A basic assumption of AMP is that $\mathbf{A}$ has IID Gaussian entries [5, 4]. For matrices with correlated entries, AMP may perform poorly or even diverge [11, 12, 13]. It was discovered in [14, 15] that a variant of AMP based on a unitary transformation, called UTAMP, performs well for difficult (e.g., correlated) matrices $\mathbf{A}$. Independently, orthogonal AMP (OAMP) was proposed in [16] for unitarily invariant $\mathbf{A}$. As observed in [20, 21], OAMP is related to a variant of the expectation propagation algorithm [17] (called diagonally-restricted expectation consistent inference in [18] or scalar expectation propagation in [19]). A closely related algorithm, MMSE-based vector AMP (VAMP) [21], is equivalent to expectation propagation in its diagonally-restricted form [18]. The accuracy of state evolution for such expectation-propagation-type algorithms (including VAMP and OAMP) was conjectured in [16] and proved in [21, 20]. The Bayes optimality of OAMP was derived in [21, 20, 16] when the compression rate is larger than a certain threshold, and the advantages of AMP-type algorithms over conventional turbo receivers [23, 22] were demonstrated in [25, 24].

The main weakness of OAMP/VAMP is the high complexity incurred by the linear MMSE (LMMSE) estimator. Singular-value decomposition (SVD) was used in [21] to avoid computing the LMMSE estimator in each iteration, but the complexity of the SVD itself is as high as that of the LMMSE estimator. The performance of OAMP/VAMP degrades significantly when the LMMSE estimator is replaced by the low-complexity MF used in AMP [16]. This limits the applicability of OAMP/VAMP in large-scale systems, for which LMMSE estimation is too complex.

In summary, the existing Bayes-optimal AMP-type algorithms are either limited to IID matrices (e.g., AMP) or require a high-complexity LMMSE estimator (e.g., OAMP/VAMP). Hence, a low-complexity Bayes-optimal message passing algorithm for unitarily invariant matrices is desired.

A long-memory AMP algorithm was originally constructed in [26] to solve the Thouless-Anderson-Palmer (TAP) equations for Ising models with general invariant random matrices. The results in [26] were rigorously justified via state evolution in [27]. Recently, Takeuchi proposed convolutional AMP (CAMP), in which the Onsager term of AMP is replaced by a convolution of all preceding messages [28]. CAMP has low complexity, applies to unitarily invariant matrices, and is proved to be Bayes-optimal if it converges to a unique fixed point [28]. However, CAMP has been found to converge slowly and may fail to converge, particularly for matrices with high condition numbers. In addition, a heuristic damping was used to improve the convergence of CAMP. However, this damping is performed on the a-posteriori outputs, which breaks the orthogonality and the asymptotic Gaussianity of estimation errors [28].

I-B Contributions

To overcome the difficulties of AMP, OAMP/VAMP and CAMP, we propose a memory AMP (MAMP) using a low-complexity long-memory MF. Due to the correlated long memory, a stricter orthogonality is required to guarantee the asymptotic Gaussianity of estimation errors in MAMP [20, 28]. Specifically, the step-by-step orthogonalization between the current input and output estimation errors used in OAMP/VAMP is not sufficient; instead, the current output estimation error must be orthogonal to all preceding input estimation errors. A covariance-matrix state evolution is established for MAMP. Based on the state evolution, the relaxation parameters and a damping vector, which preserve the orthogonality (and hence the asymptotic Gaussianity of estimation errors), are analytically optimized to guarantee and improve the convergence of MAMP. The main properties of MAMP are summarized as follows.

- MAMP has comparable complexity to AMP and much lower complexity than OAMP/VAMP.

- MAMP converges to the same fixed point as that of OAMP/VAMP for all unitarily invariant matrices. As a result, it is Bayes-optimal if it has a unique fixed point.

II Preliminaries

II-A Problem Formulation

Fig. 1(a) illustrates the system in (1) with two constraints:

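$$\Gamma:\ \mathbf{y} = \mathbf{A}\mathbf{x} + \mathbf{n}, \qquad \Phi:\ x_i \sim P_x,\ \forall i. \qquad (2)$$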

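Our aim is to use the AMP-type iterative approach in Fig. 1(b) to find an MMSE estimate of $\mathbf{x}$, i.e., an estimate whose MSE converges to

$$\mathrm{mmse}\{\mathbf{x}\,|\,\mathbf{y},\mathbf{A}\} = \tfrac{1}{N}\,\mathrm{E}\{\|\hat{\mathbf{x}}_{\rm post} - \mathbf{x}\|^2\}, \qquad (3)$$

where $\hat{\mathbf{x}}_{\rm post} = \mathrm{E}\{\mathbf{x}\,|\,\mathbf{y},\mathbf{A}\}$ is the a-posteriori mean of $\mathbf{x}$.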

Definition 1 (Bayes Optimality)

An iterative approach is said to be Bayes optimal if its MSE converges to the MMSE of the system in (1).

II-B Assumptions

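Let the singular value decomposition of $\mathbf{A}$ be $\mathbf{A} = \mathbf{U}\mathbf{\Sigma}\mathbf{V}^{\rm H}$, where $\mathbf{U}$ and $\mathbf{V}$ are unitary (orthogonal in the real-valued case) matrices, and $\mathbf{\Sigma}$ is a rectangular diagonal matrix. We assume that is known and that $\mathbf{A}$ is right-unitarily-invariant, i.e., $\mathbf{U}$, $\mathbf{\Sigma}$ and $\mathbf{V}$ are independent, and $\mathbf{V}$ is Haar distributed. Let and , where $\lambda_{\min}$ and $\lambda_{\max}$ denote the minimal and maximal eigenvalues of $\mathbf{A}\mathbf{A}^{\rm H}$, respectively. We assume that , and are known. This assumption can be relaxed using specific approximations (see [1]).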
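A right-unitarily-invariant matrix can be generated by drawing a Haar-distributed $\mathbf{V}$ and fixing the singular values. The sketch below is real-valued, takes $\mathbf{U} = \mathbf{I}$, and uses the standard QR-with-sign-correction method to sample a Haar orthogonal matrix; the helper names and the singular value profile are hypothetical. It also shows that $\lambda_{\min}$ and $\lambda_{\max}$ of $\mathbf{A}\mathbf{A}^{\rm H}$ follow directly from the singular values.

```python
import numpy as np

def haar_orthogonal(n, rng):
    """Sample an n x n Haar-distributed orthogonal matrix via QR with sign correction."""
    Q, R = np.linalg.qr(rng.normal(size=(n, n)))
    # Rescale column j by sign(R[j, j]) so that Q is exactly Haar distributed
    return Q * np.sign(np.diag(R))

def right_invariant_matrix(singular_values, N, rng):
    """Return A = Sigma V^T (i.e., U = I) with Haar V; assumes M = len(singular_values) <= N."""
    M = len(singular_values)
    Sigma = np.zeros((M, N))
    Sigma[np.arange(M), np.arange(M)] = singular_values
    V = haar_orthogonal(N, rng)
    return Sigma @ V.T

rng = np.random.default_rng(1)
s = np.linspace(1.0, 0.1, 50)           # hypothetical singular values
A = right_invariant_matrix(s, 80, rng)
eig = np.linalg.eigvalsh(A @ A.T)       # eigenvalues of A A^T, ascending
lam_min, lam_max = eig[0], eig[-1]      # equal s.min()**2 and s.max()**2
```

Because $\mathbf{A}\mathbf{A}^{\rm H} = \mathbf{\Sigma}\mathbf{\Sigma}^{\rm H}$ here, its spectrum does not depend on $\mathbf{V}$; only the right singular vectors are random.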

II-C Non-memory Iterative Process and Orthogonality

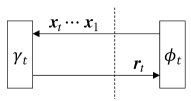

Non-memory Iterative Process (NMIP): Fig. 1(b) illustrates an NMIP consisting of a linear estimator (LE) and a non-linear estimator (NLE): Starting with ,

| (4) |

where and process the two constraints and separately, based only on their current inputs and respectively. Let

| (5a) | |||

where and indicate the estimation errors with zero means and variances:

| (5b) | |||

The asymptotic IID Gaussian property of an NMIP was conjectured in [16] and proved in [20, 21].

Lemma 1 (Orthogonality and Asymptotic IID Gaussianity)

Assume that is unitarily invariant with and the following orthogonality holds for all :

| (6) |

Then, for Lipschitz-continuous [29] and separable-and-Lipschitz-continuous , we have: ,

| (7a) | ||||

| (7b) | ||||

where and are independent of .

II-D Overview of OAMP/VAMP

OAMP/VAMP [16, 21]: Let , be an MMSE estimator, and be an estimator of defined as

| (8) |

An OAMP/VAMP is then defined as: Starting with , and ,

| (9a) | ||||

| (9b) | ||||

where

| (9c) | ||||

| (9d) | ||||

| (9e) | ||||

| (9f) | ||||

and is independent of .

We assume that is an MMSE estimator given by

| (10) |

It was proved in [20] that OAMP/VAMP satisfies the orthogonality in (6). Hence, the IID Gaussian property in (7) holds for OAMP/VAMP.
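For concreteness, the sketch below implements a symbol-by-symbol MMSE estimator for the NLE under assumptions not fixed in this section: the Bernoulli-Gaussian prior of Section VI (nonzero with probability `mu`, variance $1/\mu$ when nonzero) and an observation $r = x + w$ with $w \sim \mathcal{N}(0, v)$. It returns the posterior mean and variance; the orthogonalization step of OAMP/VAMP is not included, and the function name is hypothetical.

```python
import numpy as np

def bg_mmse_denoiser(r, v, mu):
    """Posterior mean and variance of x given r = x + w, w ~ N(0, v).

    Prior (assumed): x = 0 w.p. 1 - mu, x ~ N(0, 1/mu) w.p. mu.
    """
    s2 = 1.0 / mu                          # variance of a nonzero entry
    # Log-likelihood ratio of "nonzero" versus "zero" for each observation
    llr = (np.log(mu / (1.0 - mu))
           - 0.5 * np.log((s2 + v) / v)
           + 0.5 * r ** 2 * s2 / (v * (s2 + v)))
    pi = 0.5 * (1.0 + np.tanh(0.5 * llr))  # P(x != 0 | r) via a stable sigmoid
    gain = s2 / (s2 + v)                   # Wiener gain for a nonzero entry
    mean = pi * gain * r
    second_moment = pi * (gain * v + (gain * r) ** 2)
    return mean, second_moment - mean ** 2
```

Because the estimator acts entry by entry, its cost is linear in $N$.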

Lemma 2 (Bayes Optimality [32, 30, 16, 31])

Assume that $M, N \to \infty$ with a fixed ratio $\delta = M/N$, and that OAMP/VAMP satisfies the unique fixed point condition. Then, OAMP/VAMP is Bayes optimal for right-unitarily-invariant matrices.

Complexity: The NLE in OAMP/VAMP is a symbol-by-symbol estimator, whose time complexity is as low as $O(N)$. The complexity of OAMP/VAMP is dominated by the LMMSE-LE, which costs $O(M^2N + M^3)$ time per iteration for matrix multiplication and matrix inversion.
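The complexity gap can be seen directly in code. The sketch below contrasts an LMMSE-type linear estimate, which needs $\mathbf{A}\mathbf{A}^{\rm T}$ and a linear solve, with the matched-filter step used in AMP, which needs only two matrix-vector products. It is real-valued and uses the generic form $\mathbf{x}_{\rm in} + \mathbf{A}^{\rm T}(\rho\mathbf{I} + \mathbf{A}\mathbf{A}^{\rm T})^{-1}(\mathbf{y} - \mathbf{A}\mathbf{x}_{\rm in})$ with a scalar regularizer `rho` (assumed to be a noise-to-signal variance ratio); the exact normalization and orthogonalization in (8) and (9) are not reproduced, and the function names are hypothetical.

```python
import numpy as np

def lmmse_le(y, A, x_in, rho):
    """LMMSE-type estimate x_in + A^T (rho I + A A^T)^{-1} (y - A x_in).

    Forming A A^T costs O(M^2 N) and the solve costs O(M^3).
    """
    M = A.shape[0]
    residual = y - A @ x_in
    # Solve rather than form an explicit inverse (same order, more stable)
    return x_in + A.T @ np.linalg.solve(rho * np.eye(M) + A @ A.T, residual)

def mf_le(y, A, x_in):
    """Matched-filter step x_in + A^T (y - A x_in): two mat-vec products, O(MN)."""
    return x_in + A.T @ (y - A @ x_in)
```

When `rho` changes from one iteration to the next, the solve must be redone each time, which is what makes the LMMSE-LE the bottleneck.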

III Memory AMP

III-A Memory Iterative Process and Orthogonality

Memory Iterative Process (MIP): Fig. 2 illustrates an MIP based on a long-memory linear estimator (LMLE) and a non-linear estimator (NLE) defined as: Starting with ,

| (11a) | |||||

| (11b) | |||||

We call (11) MIP since contains long memory . It should be emphasized that the step-by-step orthogonalization between current input and output estimation errors is not sufficient to guarantee the asymptotic IID Gaussianity for MIP. Thus, a stricter orthogonality is required, i.e., the estimation error of is required to be orthogonal to all preceding estimation errors [28, 33].

Lemma 3 (Orthogonality and Asymptotic IID Gaussianity [28, 33])

Assume that is unitarily invariant with and the following orthogonality holds for all :

| (12) |

Then, for Lipschitz-continuous [29] and separable-and-Lipschitz-continuous , we have: ,

| (13a) | |||

| (13b) | |||

where with and with . Besides, and are independent of .

III-B Memory AMP (MAMP)

Memory AMP: Let , and consider

| (14) |

An MAMP process is defined as: Starting with and ,

| (15a) | ||||

where is the same as that in OAMP/VAMP (see (9)).

The intuitions behind MAMP are as follows.

- In the LMLE, all preceding messages are utilized to guarantee the orthogonality in (12). In the NLE, at most preceding messages are utilized (i.e., damping) to guarantee and improve the convergence of MAMP.

- ensures that MAMP has the same fixed point as OAMP/VAMP.

We call (15) memory AMP because it involves a long memory at the LMLE, unlike the non-memory LE in OAMP/VAMP. Only matrix-vector multiplications, rather than matrix inversions, are involved. Thus, the complexity of MAMP is comparable to that of AMP, i.e., as low as $O(MN)$ per iteration.
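To see why a method built only from matrix-vector products can still emulate an inverse, consider the following generic illustration, which is not the MAMP recursion in (15): a truncated Neumann series for $(\rho\mathbf{I} + \mathbf{A}\mathbf{A}^{\rm T})^{-1}\mathbf{r}$ in powers of $\mathbf{B} = \lambda^{\dagger}\mathbf{I} - \mathbf{A}\mathbf{A}^{\rm T}$ with $\lambda^{\dagger} = (\lambda_{\max} + \lambda_{\min})/2$. The choice of $\lambda^{\dagger}$ and the function name are assumptions of this sketch; $\lambda_{\min}$ and $\lambda_{\max}$ are the extreme eigenvalues of $\mathbf{A}\mathbf{A}^{\rm T}$ (real-valued case).

```python
import numpy as np

def neumann_inverse_apply(A, r, rho, lam_min, lam_max, n_terms):
    """Approximate (rho I + A A^T)^{-1} r using only matrix-vector products.

    With lam_dag = (lam_max + lam_min) / 2 and B = lam_dag I - A A^T,
    rho I + A A^T = c (I - B / c) with c = rho + lam_dag, hence
    (rho I + A A^T)^{-1} = (1 / c) * sum_k (B / c)^k.
    The series converges since ||B / c||_2 = (lam_max - lam_min) / (2 c) < 1.
    """
    lam_dag = 0.5 * (lam_max + lam_min)
    c = rho + lam_dag
    term = r / c                 # k = 0 term
    total = term.copy()
    for _ in range(n_terms - 1):
        # Apply B / c to the previous term: two mat-vec products, O(MN)
        term = (lam_dag * term - A @ (A.T @ term)) / c
        total += term
    return total
```

Each extra term costs $O(MN)$, so a $K$-term approximation costs $O(KMN)$, compared with $O(M^2N + M^3)$ for forming and solving the $M \times M$ system directly.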

IV Main Properties of MAMP

IV-A Orthogonality and Asymptotic IID Gaussianity

Let . For , we define

| (15pa) | ||||

| (15pb) | ||||

For ,

| (15q) |

Furthermore, if .

Proposition 1

The in (15) and the corresponding errors can be expanded to

| (15ra) | |||

where

| (15rb) | |||

IV-B State Evolution

Using the IID Gaussian property in Theorem 1, we establish a state evolution for the dynamics of the MSE of MAMP. The main challenge is the correlation among the long-memory inputs of the LMLE, which requires a covariance-matrix state evolution to track the MSE dynamics.

Define the covariance matrices as follows:

| (15sa) | |||

where

| (15sb) | |||

Proposition 2 (State Evolution)

The covariance matrices of MAMP can be tracked by the following state evolution: Starting with ,

| (15t) |

The details of and are provided in [1].

IV-C Convergence and Bayes Optimality

The following theorem gives the convergence and Bayes optimality of the optimized MAMP. The proof is provided in Appendix F of [1].

Theorem 2 (Convergence and Bayes Optimality)

Assume that $M, N \to \infty$ with a fixed ratio $\delta = M/N$, and that $\mathbf{A}$ is right-unitarily-invariant. The MAMP with optimized parameters (see Section V) converges to the same fixed point as OAMP/VAMP, and thus is Bayes optimal if it has a unique fixed point.

IV-D Complexity Comparison

Table I compares the time and space complexity of MAMP, CAMP, AMP and OAMP/VAMP, where is the number of iterations. MAMP and CAMP have similar time and space complexity. OAMP/VAMP has higher complexity than AMP, CAMP and MAMP, while MAMP and CAMP have complexity comparable to that of AMP for . For more details, refer to Section IV-D in [1].

V Parameter Optimization

The parameters are optimized step-by-step for each iteration assuming that the parameters in previous iterations are fixed. More specifically, we first optimize . Then, given , we optimize . Finally, given and , we optimize .

V-A Optimization of

V-B Optimization of

Proposition 3

For fixed , the optimal that minimizes is given by and for ,

| (15ag) |

where

| (15aha) | ||||

| (15ahb) | ||||

| (15ahc) | ||||

V-C Optimization of

Let be the covariance matrix of the input errors of , i.e. .

Proposition 4 (Optimal damping)

For fixed and , the optimal that minimizes is given by

| (15ai) |

It is easy to see that, with optimized damping, the MSE of the current iteration is no worse than that of the previous iteration, because reusing the previous estimate is a special case of . Hence, the MSE of MAMP with optimized damping is monotonically non-increasing over the iterations.
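The monotonicity argument can be checked numerically. Assuming the damped estimate is an affine combination of preceding estimates with weights summing to one, and that their error covariance matrix $\mathbf{V}$ is known and positive definite, the weights minimizing the resulting error variance have the standard closed form $\boldsymbol{\zeta} = \mathbf{V}^{-1}\mathbf{1}/(\mathbf{1}^{\rm T}\mathbf{V}^{-1}\mathbf{1})$. This is a sketch of that standard minimum-variance combination, not a transcription of (15ai), and the function name is hypothetical. Since putting all weight on a single estimate is feasible, the optimum never exceeds the smallest diagonal entry of $\mathbf{V}$, in particular the variance of the most recent estimate.

```python
import numpy as np

def optimal_damping(V):
    """Minimize zeta^T V zeta subject to sum(zeta) == 1.

    V: symmetric positive-definite error covariance of the combined estimates.
    Returns the weights and the achieved variance 1 / (1^T V^{-1} 1).
    """
    ones = np.ones(V.shape[0])
    w = np.linalg.solve(V, ones)   # V^{-1} 1
    denom = ones @ w               # 1^T V^{-1} 1
    return w / denom, 1.0 / denom

# Two estimates with error variances 1.0 (older) and 0.8 (latest), correlation 0.5
V = np.array([[1.0, 0.5], [0.5, 0.8]])
zeta, var = optimal_damping(V)     # zeta = [0.375, 0.625], var = 0.6875 <= 0.8
```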

VI Simulation Results

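We study a compressed sensing problem where $\mathbf{x}$ follows a symbol-wise Bernoulli-Gaussian distribution, i.e., for all $i$,

$$x_i \sim \begin{cases} 0, & \text{with probability } 1-\mu, \\ \mathcal{N}(0, \mu^{-1}), & \text{with probability } \mu, \end{cases} \qquad (15al)$$

where $\mu$ is the probability of a nonzero entry. The variance of $x_i$ is thus normalized to 1. The signal-to-noise ratio (SNR) is defined as .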
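Samples from the Bernoulli-Gaussian prior can be drawn as below; `mu` denotes the probability of a nonzero entry, and the function name is hypothetical. Nonzero entries have variance $1/\mu$, so $\mathrm{E}\{x_i^2\} = \mu \cdot \mu^{-1} = 1$, matching the unit-variance normalization.

```python
import numpy as np

def bernoulli_gaussian(n, mu, rng):
    """Draw n IID samples: 0 w.p. 1 - mu, N(0, 1/mu) w.p. mu (unit variance overall)."""
    active = rng.random(n) < mu
    return np.where(active, rng.normal(0.0, 1.0 / np.sqrt(mu), size=n), 0.0)
```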

Let the SVD of $\mathbf{A}$ be $\mathbf{A} = \mathbf{U}\mathbf{\Sigma}\mathbf{V}^{\rm H}$. The system model in (1) can be rewritten as [16, 21]:

$$\mathbf{U}^{\rm H}\mathbf{y} = \mathbf{\Sigma}\mathbf{V}^{\rm H}\mathbf{x} + \mathbf{U}^{\rm H}\mathbf{n}. \qquad (15am)$$

Note that $\mathbf{U}^{\rm H}\mathbf{n}$ has the same distribution as $\mathbf{n}$. Thus, we can assume $\mathbf{U} = \mathbf{I}$ without loss of generality. To reduce the computational complexity of OAMP/VAMP, we approximate a large random unitary matrix by the product of a random permutation matrix and a discrete Fourier transform (DFT) matrix. Note that all the algorithms involved here admit fast implementations for this matrix model. The eigenvalues are generated as: for and , where . Here, controls the condition number of . Note that MAMP does not require the SVD structure of $\mathbf{A}$; it only needs the right-unitary invariance of $\mathbf{A}$.
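The fast matrix model can be sketched as follows, assuming $\mathbf{U} = \mathbf{I}$ and $\mathbf{A} = \mathbf{S}\mathbf{P}\mathbf{F}$, where $\mathbf{F}$ is the unitary $N$-point DFT, $\mathbf{P}$ a random permutation, and $\mathbf{S}$ an $M \times N$ matrix carrying the singular values on its diagonal. Both $\mathbf{A}$ and $\mathbf{A}^{\rm H}$ are then applied in $O(N \log N)$ time without storing the matrix. The geometrically spaced singular values, with ratio `kappa` between the largest and the smallest, are a hypothetical stand-in for the eigenvalue rule above, and the names are illustrative.

```python
import numpy as np

def make_fast_operator(M, N, kappa, rng):
    """Return (A_mul, AH_mul, s) for A = S P F with U = I (illustrative sketch)."""
    perm = rng.permutation(N)                     # random permutation P
    s = kappa ** (-np.arange(M) / (M - 1))        # s[0] = 1, s[-1] = 1/kappa (needs M >= 2)

    def A_mul(x):
        # F x (unitary DFT), permute with P, keep the first M entries scaled by s
        return s * np.fft.fft(x, norm="ortho")[perm][:M]

    def AH_mul(z):
        # Adjoint F^H P^T S^T z: zero-pad, undo the permutation, inverse unitary DFT
        u = np.zeros(N, dtype=complex)
        u[:M] = s * z
        v = np.zeros(N, dtype=complex)
        v[perm] = u
        return np.fft.ifft(v, norm="ortho")

    return A_mul, AH_mul, s
```

In this sketch the condition number of $\mathbf{A}$ (largest over smallest nonzero singular value) equals `kappa`, so that of $\mathbf{A}\mathbf{A}^{\rm H}$ is `kappa**2`.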

VI-A Influence of Relaxation Parameters and Damping

Fig. 3 shows the influence of the relaxation parameters and damping. Without damping, the convergence of MAMP is not guaranteed. In addition, optimizing the relaxation parameters significantly improves the MSE of MAMP. That is,

(i) damping guarantees the convergence of MAMP, and

(ii) the relaxation parameters do not change the fixed point of MAMP, but they can be optimized to improve the convergence speed.

VI-B Comparison with AMP and CAMP

Fig. 4 shows MSE versus the number of iterations for AMP, CAMP, OAMP/VAMP and MAMP. To improve convergence, both AMP and CAMP are damped. As can be seen, for an ill-conditioned matrix with , the MSE performance of AMP is poor. CAMP converges to the same performance as OAMP/VAMP. However, the state evolution (SE) of CAMP is inaccurate, since damping is performed on the a-posteriori outputs, which breaks the Gaussianity of the estimation errors. MAMP converges to OAMP/VAMP faster than CAMP does. Furthermore, the state evolution of MAMP is accurate, since damping is performed on the orthogonal outputs, which preserves the Gaussianity of the estimation errors.

VI-C Influence of High Condition Number and Damping Length

Fig. 5 shows MSE versus the number of iterations for MAMP with different damping lengths. As can be seen, MAMP converges to OAMP/VAMP for the matrix with high condition number , and the state evolution (SE) of MAMP matches the simulated MSE well. Note that CAMP diverges when (see Fig. 4 in [28]). In addition, when the condition number is large, MAMP with (damping length) converges significantly faster than with . It should be mentioned that is generally sufficient for MAMP, since the MSEs of MAMP are almost the same when .

VII Conclusions

This paper proposes a low-cost MAMP for high-dimensional linear systems with unitarily invariant transform matrices. The proposed MAMP is Bayes-optimal if it has a unique fixed point, and its complexity is comparable to that of AMP. Specifically, long memory and orthogonalization are used to achieve the Bayes-optimal solution with a low-complexity MF. The convergence of MAMP is improved by optimizing the relaxation parameters and a damping vector. The optimized MAMP is guaranteed to converge to the same fixed point as the high-complexity OAMP/VAMP for all right-unitarily-invariant matrices.

References

- [1] L. Liu, S. Huang, and B. M. Kurkoski, “Memory approximate message passing,” arXiv preprint arXiv:2012.10861, Dec. 2020. [Online] Available: arxiv.org/abs/2012.10861.

- [2] D. Micciancio, “The hardness of the closest vector problem with preprocessing,” IEEE Trans. Inf. Theory, vol. 47, no. 3, pp. 1212-1215, Mar. 2001.

- [3] S. Verdú, “Optimum multi-user signal detection,” Ph.D. dissertation, Department of Electrical and Computer Engineering, University of Illinois at Urbana-Champaign, Urbana, IL, Aug. 1984.

- [4] M. Bayati and A. Montanari, “The dynamics of message passing on dense graphs, with applications to compressed sensing,” IEEE Trans. Inf. Theory, vol. 57, no. 2, pp. 764–785, Feb. 2011.

- [5] D. L. Donoho, A. Maleki, and A. Montanari, “Message-passing algorithms for compressed sensing,” Proc. Nat. Acad. Sci., vol. 106, no. 45, Nov. 2009.

- [6] T. Richardson and R. Urbanke, Modern Coding Theory. New York: Cambridge University Press, 2008.

- [7] K. Takeuchi, T. Tanaka, and T. Kawabata, “Performance improvement of iterative multiuser detection for large sparsely-spread CDMA systems by spatial coupling,” IEEE Trans. Inf. Theory, vol. 61, no. 4, pp. 1768-1794, Apr. 2015.

- [8] S. Kudekar, T. Richardson, and R. Urbanke, “Threshold saturation via spatial coupling: Why convolutional LDPC ensembles perform so well over the BEC,” IEEE Trans. Inf. Theory, vol. 57, no. 2, pp. 803–834, Feb. 2011.

- [9] F. Krzakala, M. Mézard, F. Sausset, Y. F. Sun, and L. Zdeborová, “Statistical-physics-based reconstruction in compressed sensing,” Phys. Rev. X, vol. 2, pp. 021005-1–021005-18, May 2012.

- [10] D. L. Donoho, A. Javanmard, and A. Montanari, “Information-theoretically optimal compressed sensing via spatial coupling and approximate message passing,” IEEE Trans. Inf. Theory, vol. 59, no. 11, pp. 7434-7464, Nov. 2013.

- [11] A. Manoel, F. Krzakala, E. W. Tramel, and L. Zdeborová, “Sparse estimation with the swept approximated message-passing algorithm,” arXiv preprint arXiv:1406.4311, 2014.

- [12] S. Rangan, A. K. Fletcher, P. Schniter, and U. S. Kamilov, “Inference for generalized linear models via alternating directions and Bethe free energy minimization,” IEEE Trans. Inf. Theory, vol. 63, no. 1, pp. 676–697, Jan. 2017.

- [13] J. Vila, P. Schniter, S. Rangan, F. Krzakala, and L. Zdeborová, “Adaptive damping and mean removal for the generalized approximate message passing algorithm,” in Acoustics, Speech and Signal Processing (ICASSP), 2015 IEEE International Conference on, 2015, pp. 2021–2025.

- [14] Q. Guo and J. Xi, “Approximate message passing with unitary transformation,” CoRR, vol. abs/1504.04799, 2015. [Online]. Available: http://arxiv.org/abs/1504.04799

- [15] Z. Yuan, Q. Guo and M. Luo, “Approximate message passing with unitary transformation for robust bilinear Recovery,” IEEE Trans. Signal Process., doi: 10.1109/TSP.2020.3044847.

- [16] J. Ma and L. Ping, “Orthogonal AMP,” IEEE Access, vol. 5, pp. 2020–2033, 2017, preprint arXiv:1602.06509, 2016.

- [17] T. P. Minka, “Expectation propagation for approximate Bayesian inference,” in Proceedings of the Seventeenth Conference on Uncertainty in Artificial Intelligence, 2001, pp. 362–369.

- [18] M. Opper and O. Winther, “Expectation consistent approximate inference,” Journal of Machine Learning Research, vol. 6, no. Dec, pp. 2177–2204, 2005.

- [19] B. Çakmak and M. Opper, “Expectation propagation for approximate inference: Free probability framework,” arXiv preprint arXiv:1801.05411, 2018.

- [20] K. Takeuchi, “Rigorous dynamics of expectation-propagation-based signal recovery from unitarily invariant measurements,” IEEE Trans. Inf. Theory, vol. 66, no. 1, pp. 368–386, Jan. 2020.

- [21] S. Rangan, P. Schniter, and A. Fletcher, “Vector approximate message passing,” IEEE Trans. Inf. Theory, vol. 65, no. 10, pp. 6664-6684, Oct. 2019.

- [22] M. Tüchler, A. C. Singer, and R. Koetter, “Minimum mean squared error equalization using a priori information,” IEEE Trans. Signal Process., vol. 50, no. 3, pp. 673–683, Mar. 2002.

- [23] L. Liu, Y. Chi, C. Yuen, Y. L. Guan, and Y. Li, “Capacity-achieving MIMO-NOMA: Iterative LMMSE detection,” IEEE Trans. Signal Process., vol. 67, no. 7, pp. 1758–1773, Apr. 2019.

- [24] J. Ma, L. Liu, X. Yuan, and L. Ping, “On orthogonal AMP in coded linear vector systems,” IEEE Trans. Wireless Commun., vol. 18, no. 12, pp. 5658–5672, Dec. 2019.

- [25] L. Liu, C. Liang, J. Ma, and L. Ping, “Capacity optimality of AMP in coded systems,” Submitted to IEEE Trans. Inf. Theory, 2019. [Online] Available: arxiv.org/pdf/1901.09559.pdf

- [26] M. Opper, B. Çakmak, and O. Winther, “A theory of solving TAP equations for Ising models with general invariant random matrices,” J. Phys. A: Math. Theor., vol. 49, no. 11, p. 114002, Feb. 2016.

- [27] Z. Fan, “Approximate message passing algorithms for rotationally invariant matrices,” arXiv:2008.11892, 2020.

- [28] K. Takeuchi, “Bayes-optimal convolutional AMP,” IEEE Trans. Inf. Theory, 2021, DOI: 10.1109/TIT.2021.3077471. [Online] arXiv preprint arXiv:2003.12245v3, 2020.

- [29] R. Berthier, A. Montanari, and P. M. Nguyen, “State evolution for approximate message passing with non-separable functions,” arXiv preprint arXiv:1708.03950, 2017.

- [30] A. M. Tulino, G. Caire, S. Verdú, and S. Shamai (Shitz), “Support recovery with sparsely sampled free random matrices,” IEEE Trans. Inf. Theory, vol. 59, no. 7, pp. 4243–4271, Jul. 2013.

- [31] K. Takeda, S. Uda, and Y. Kabashima, “Analysis of CDMA systems that are characterized by eigenvalue spectrum,” Europhys. Lett., vol. 76, no. 6, pp. 1193-1199, 2006.

- [32] J. Barbier, N. Macris, A. Maillard, and F. Krzakala, “The mutual information in random linear estimation beyond i.i.d. matrices,” arXiv preprint arXiv:1802.08963, 2018.

- [33] K. Takeuchi, “A unified framework of state evolution for message-passing algorithms,” arXiv:1901.03041, 2019.