Mini-Batch Gradient-Based MCMC for Decentralized Massive MIMO Detection

Abstract

Massive multiple-input multiple-output (MIMO) technology has significantly enhanced spectral and power efficiency in cellular communications and is expected to further evolve towards extra-large-scale MIMO. However, centralized processing for massive MIMO faces practical obstacles, including excessive computational complexity and a substantial volume of baseband data to be exchanged. To address these challenges, decentralized baseband processing has emerged as a promising solution. This approach involves partitioning the antenna array into clusters with dedicated computing hardware for parallel processing. In this paper, we investigate the gradient-based Markov chain Monte Carlo (MCMC) method—an advanced MIMO detection technique known for its near-optimal performance in centralized implementation—within the context of a decentralized baseband processing architecture. This decentralized design mitigates the computation burden at a single processing unit by utilizing computational resources in a distributed and parallel manner. Additionally, we integrate the mini-batch stochastic gradient descent method into the proposed decentralized detector, achieving remarkable performance with high efficiency. Simulation results demonstrate substantial performance gains of the proposed method over existing decentralized detectors across various scenarios. Moreover, complexity analysis reveals the advantages of the proposed decentralized strategy in terms of computation delay and interconnection bandwidth when compared to conventional centralized detectors.

Index Terms:

Massive MIMO, decentralized baseband processing, data detection, Markov chain Monte Carlo, mini-batch stochastic gradient descent.I Introduction

MASSIVE multiple-input multiple-output (MIMO) technology has emerged as a promising solution to address the increasing demand for high data rates and improved spectral efficiency in wireless communication systems of the fifth generation and beyond [2]. By deploying a large number of antennas at the base station (BS), it becomes feasible to achieve a substantial enhancement in network capacity and throughput. However, the utilization of an extremely large antenna array introduces high-dimensional signal processing tasks, particularly challenging in MIMO uplinks, where accurate detection of signals from the users is required to fulfill the potential of massive MIMO [3, 4].

Many existing MIMO detection schemes, such as the optimal maximum likelihood (ML) detector and various linear and nonlinear detectors [3], are designed according to the conventional centralized baseband processing architecture. However, this architecture faces several challenges when applied to extremely large-scale MIMO systems. In this setup, a central unit (CU) assumes responsibility for the entire symbol detection process, imposing stringent demands on the computational capability, baseband interconnection bandwidth, and storage capacity of a single unit [5, 6, 7]. Notably, existing MIMO detectors inherently involve matrix operations, such as matrix multiplications or inversions, which become impractical on a single computing device as the antenna count increases due to substantial computational complexity. Additionally, for centralized MIMO detection, the transfer of global channel state information and raw baseband data from all BS antennas to the CU is required. This process demands significant interconnection bandwidth, often surpassing data rate limits of existing interconnection standards or chip I/O interfaces [8, 6, 5].

To address these challenges, decentralized baseband processing (DBP) has been proposed and gained significant attention recently [6]. The concept behind DBP is to partition the large-scale antenna array into a few smaller disjoint antenna clusters, each with local radio frequency (RF) chains and independent baseband processing modules located in distributed units (DUs). The DUs handle local baseband data received from RF chains within their respective clusters. This DBP approach distributes the demanding signal processing tasks across parallel procedures on multiple computing units, like field-programmable gate arrays [9] or graphics processing units [10], substantially reducing computation demands at the CU and overall processing latency. Moreover, the requirement for raw baseband data transfer between each baseband unit and the corresponding RF circuitry is considerably diminished compared to the centralized architecture, as only a subset of BS antennas needs to transmit their data to the relevant processing module.

Despite the potential advantages of the DBP architecture, research into customized decentralized MIMO detection schemes within this framework remains incomplete. Specifically, the exploration of high-performance decentralized detectors is as important as it is currently lacking. Pioneering work [6] decentralized the conjugate gradient algorithm and the alternating direction method of multipliers (ADMM), resulting in iterative consensus-sharing schemes approaching the performance of the centralized linear minimum mean square error (LMMSE) method. However, the latter is widely known to be suboptimal. Similar endeavors were pursued in [9] and [11] to decentralize the Newton and coordinate descent methods, respectively, aiming to bypass the intricate matrix inversions involved in centralized linear detectors. Furthermore, authors of [12] developed decentralized feedforward architectures [5, 13] for classical maximum ratio combining, zero-forcing, and LMMSE detectors, considerably reducing system latency. Nonetheless, these linear detectors are markedly suboptimal, and the fully decentralized scheme experiences substantial performance degradation with an increasing number of clusters.

Instead of decentralizing linear detectors, an alternative research direction explores the decentralized design of nonlinear message passing-based detection methods. Specifically, authors of [12] also applied the large-MIMO approximate message passing (LAMA) algorithm within partially and fully decentralized feedforward architectures. However, LAMA’s instability arises when channel fading coefficients deviate from the independent and identically distributed (IID) Gaussian distribution. In [14], a decentralized detector based on expectation propagation (EP) was proposed, demonstrating performance advantages over various decentralized linear detectors [6, 10, 11]. Nonetheless, the decentralized EP method necessitates iterative matrix inversions at each DU, posing challenges for achieving computational efficiency with increasing user numbers. Other message passing detector variants tailored for the decentralized architecture have been explored [15, 16, 17]. Regrettably, these endeavors have yet to strike a desirable balance between performance and complexity.

As evident from the discussion above, existing decentralized MIMO detectors often struggle with suboptimal trade-offs between performance and complexity—particularly in scenarios with high user density or strong correlation channels. These limitations underscore the need to explore advanced detection techniques for formulating more competitive decentralized detectors. Recently, Markov chain Monte Carlo (MCMC) has demonstrated its potential to overcome the limitations of deterministic Bayesian inference approaches (e.g., EP) [18, 19]. MCMC-based MIMO detectors are favored for their near-optimal performance [20, 21] and hardware-friendly architecture [22]. Furthermore, the combination of gradient descent (GD) optimization and MCMC offers a framework to efficiently solve the MIMO detection optimum search problem [23, 24, 25, 26]. This scheme employs GD to explore important regions of the discrete search space and utilizes the random walk and acceptance test of MCMC to generate samples conforming to the posterior distribution of transmit symbols [27]. Thus, the gradient-based MCMC method holds the potential to achieve near-ML performance, unattainable by LMMSE or message passing-based detectors, while maintaining acceptable complexity. Notably, recent work [27] combines MCMC with Nesterov’s accelerated gradient (NAG) method [28] to derive a NAG-aided MCMC (NAG-MCMC) detector with near-optimal performance, high efficiency, and excellent scalability. However, existing literature has not explored the decentralized design of potent MCMC-based MIMO detectors and their gradient-based variations.

Motivated by the potential benefits of the DBP architecture and the limited exploration of decentralized detectors within this framework, this study seeks to establish a decentralized gradient-based MCMC detector operating within the DBP framework. The central goal is to mitigate computational and interconnection bandwidth limitations associated with conventional centralized processing, all while upholding near-optimal detection performance. The contributions of this paper are summarized as follows:

-

•

Decentralized Gradient-Based MCMC Detector for DBP Architecture: This study pioneers the decentralization of the gradient-based MCMC method for MIMO detection within the DBP architecture, employing both star and daisy-chain topologies. The proposed decentralized detector inherits the remarkable detection performance and scalability inherent in the original gradient-based MCMC algorithm. Decentralization enables distributed and parallel computations, efficiently redistributing the computation burden from the CU to multiple DUs. Consequently, our proposed detector exhibits high computational efficiency in terms of latency.

-

•

Mini-Batch-Based Gradient Updating for Efficiency Enhancement: We introduce a mini-batch-based gradient updating rule for the decentralized gradient-based MCMC detector, resulting in the Mini-NAG-MCMC algorithm. This innovation significantly reduces computation and interconnection demands by selecting only a mini-batch of DUs for gradient computations per iteration. The integration of stochastic gradients within the updating rule synergizes with the MCMC technique, facilitating escape from local minima and achieving near-optimal performance. Additionally, we leverage the asymptotically favorable propagation property of massive MIMO systems, incorporating an approximation into the proposed detector to further reduce overhead.

-

•

Comprehensive Analysis and Performance Validation: We conduct a comprehensive analysis of the proposed method’s computational complexity and interconnection bandwidth, showcasing its superiority over centralized schemes. Furthermore, extensive simulations validate the method’s performance superiority over existing decentralized detectors across diverse scenarios, including IID Rayleigh fading MIMO channels and realistic 3rd Generation Partnership Project (3GPP) channel models. These results affirm the proposed method’s effective balance between performance and complexity.

Notations: Boldface letters denote column vectors or matrices. denotes a zero vector, and denotes an identity matrix. , , and denote the transpose, conjugate transpose, and inverse of the matrix , respectively. generates a diagonal matrix by assigning zero values to the off-diagonal elements of . is norm, and is Frobenius norm. represents the expectation of random variables. denotes a circularly symmetric complex Gaussian distribution with mean and variance . indicates a uniform distribution between . and are the sets of real and complex numbers, respectively. The set contains all nonnegative integers up to .

II System Model

In this section, we initially present the uplink MIMO system model and its associated MIMO detection problem. Subsequently, we elaborate on the particulars of the DBP architecture for MIMO uplink and discuss the fundamental aspects of designing decentralized MIMO detectors.

II-A Uplink MIMO System Model

Consider an uplink massive MIMO system with single-antenna users and a BS equipped with receiving antennas, as shown in Fig. 1. Each user independently encodes its information bit stream, modulates the coded bits using the quadrature amplitude modulation (QAM) scheme, and transmits the modulated symbols over the wireless channels to the BS. The input-output relationship111We consider a flat-fading channel in the system model; however, the proposed method can be readily generalized to handle frequency-selectivity by using orthogonal frequency division multiplexing (OFDM). In this approach, MIMO detection is independently conducted on each flat-fading subcarrier. of this uplink transmission is given by

| (1) |

where is the received signal at the BS, is the uplink channel matrix, consists of transmit symbols from each user, with representing the QAM lattice set having a cardinality of and unit symbol energy, and represents the IID circular complex Gaussian noise with zero-mean and variance entries. The signal-to-noise ratio (SNR) is given by . The objective of uplink MIMO detection is to estimate the transmit vector given the received signal and the knowledge of the uplink channel matrix . The optimal ML solution is given by

| (2) |

Note that the finite set of makes the optimal MIMO detection an integer least-squares problem, known to be non-deterministic polynomial-time hard (NP-hard) [3].

II-B Decentralized Baseband Processing

To deal with the heavy interconnection and computation burdens due to the use of extra-large-scale antenna arrays, DBP has been proposed as a promising alternative to traditional centralized processing [6]. Throughout the paper, we assume that the massive MIMO BS possesses the DBP architecture as shown in Fig. 1. The BS antenna array is partitioned into antenna clusters, where the -th cluster is associated with antennas (), and . Without loss of generality, we assume that each cluster is of equal size, i.e., . Each cluster is equipped with local RF chains that are connected to dedicated hardware referred to as the DU.

We consider two widely accepted DBP topologies, namely star and daisy-chain topologies [6, 7, 9, 29, 30], which are depicted in Fig. 1. We assume data links among the CU and DUs are bidirectional for both topologies. The star topology is featured by all DUs connected to the CU (solid lines). Thus, the information exchange between the CU and each DU is in parallel. The daisy-chain topology is featured by DUs connected sequentially [7] (dashed lines), with a single connection to the CU at the end. Hence, communication is limited solely to adjacent units in the daisy-chain topology and the information exchange is performed in a sequential manner. Both these topologies have their distinct strengths in the trade-off among throughput, latency, and interconnection bandwidth [9]. The star topology is well recognized for the low data transfer latency owing to the simultaneous information exchange between the CU and each DU, while the daisy-chain topology is known for the constant interconnection bandwidth regardless of the number of DUs, as will be shown in Sec. IV-B.

Under the DBP architecture, the uplink channel matrix, received signal, and noise vector are partitioned row-wise as , , and , respectively. Hence, the signal model at the -th antenna cluster can be written as

| (3) |

where is the channel matrix between the users and the -th antenna cluster. and represent the local received signal and noise vector of the -th antenna cluster, respectively.

Each DU exclusively utilizes its local channel matrix and the received signal to compute intermediate detection results.222We assume that the local channel matrix is perfectly known at each DU since we focus on MIMO detector design in this paper. In realistic scenarios, this knowledge can be obtained by performing local channel estimation. The CU works as a coordinator for periodically aggregating the intermediate information from the DUs and forming the global consensus [6], which is then broadcast to the DUs for subsequent updates, and finally producing the estimate. In this decentralized scheme, the heavy computation burden at the CU can be offloaded to DUs and be performed in a parallel and distributed fashion. Hence, the overall computation latency would be significantly reduced. Meanwhile, the DUs can process the locally stored data to obtain low-dimensional intermediate information instead of directly transmitting full-dimensional data, thus saving interconnection bandwidth.

III Mini-Batch Gradient-Based MCMC

In this section, we first outline the basics of the gradient-based MCMC method from [27]. Then, we detail the development of the decentralized gradient-based MCMC detector, Mini-NAG-MCMC, customized for the DBP architecture.

III-A Basics of Gradient-Based MCMC

The MCMC method samples from the target posterior distribution using a Markov chain given by , where is the number of sampling iterations. The state transition in this chain is regulated by a transition kernel , specifically designed to align the chain’s stationary distribution with the target distribution. Hence, the realization of this chain can be utilized to (approximately) generate samples from the target distribution, facilitating statistical inference. Notably, the integration of MCMC and the GD method has recently emerged as a promising approach for resolving nonconvex optimization challenges [23]. The underlying concept of this gradient-based MCMC approach involves leveraging gradients and the Metropolis-Hastings (MH) criterion [31, 25] to design the transition kernel, expediting the Markov chain’s convergence to the target distribution. When using gradient-based MCMC for MIMO detection, each state of the Markov chain corresponds to a sample of over the discrete space . The initial sample is either randomly generated or transferred from another detector. The transition from to , i.e., the generation of the -th sample , includes the following steps [25, 27]:

III-A1 GD

The constraint on the transmit vector can be relaxed to the continuous complex space to allow the use of gradients. With this relaxation, the GD method can be performed to provide efficient navigation to important regions of the discrete search space. Initializing , where is the previous sample, the GD iteration takes the basic form as follows:

| (4) |

where is the index of GD iteration, is the total number of GD iterations, is the learning rate, is the objective function of MIMO detection, which is generally chosen as the residual norm from the estimate, , and is the gradient of the objective function.

III-A2 Random Walk and QAM Mapping

After a preset number of GD steps, we generate the MCMC candidate sample as follows:

| (5) |

where is the output of GD, is a random Gaussian vector that represents the perturbation introduced by the random walk, and the function maps each element of its input to the closest QAM lattice point. The random walk prevents the algorithm from getting trapped in local minima along the GD direction. The QAM mapping discretizes the continuous update to obtain valid candidate samples.

III-A3 Acceptance Test

The MH criterion [25] is applied to accept the candidate sample with a probability given by

| (6) |

which approximately aligns the Markov chain’s stationary distribution with the target posterior distribution. This acceptance probability of can be achieved by the following rule:

| (7) |

where is drawn from the uniform distribution .

Running the gradient-based MCMC sampling for several iterations, a sample list containing important samples for inference would be identified. This sample list can be used to generate the hard decision by choosing the best sample according to

| (8) |

III-B Mini-Batch Gradient-Based MCMC for Decentralized MIMO Detection

Next, we present the decentralized gradient-based MCMC algorithm tailored for the DBP architecture. The central concept revolves around distributing the resource-intensive gradient computations across multiple DUs, thereby diminishing computation latency and bolstering overall efficiency.

We first provide some useful notations for ease of illustration. The local objective function at each DU is defined as

| (9) |

Therefore, the local gradient is given by

| (10) |

The optimal MIMO detection problem in (2) is rewritten as

| (11) |

where the global objective function is given by

| (12) |

The decomposition of the global objective function into multiple local objective functions allows the calculation of gradients on each DU as shown in (10). These local gradients can then be collected at the CU to derive the full gradient , which enables the GD-based optimization in (4). The distributed computations alleviate the computation burden of directly calculating the full gradient at the CU.

However, the decentralization of the standard full-batch GD in (4) does not lead to a reduction in the overall computation cost. Moreover, this decentralized full-batch GD requires information exchange between the CU and all DUs in each iteration, resulting in high interconnection costs. To further mitigate the computation and interconnection requirements associated with the full-batch GD, we turn to the mini-batch stochastic GD method [32], which shows promise in efficiently optimizing objective functions in finite-sum form as (12). This method serves as an efficient alternative to the full-batch GD in (4), offering the potential for rapid convergence and overhead reduction. Specifically, we introduce a mini-batch-based gradient updating rule tailored to the DBP architecture, as detailed below.

In each GD iteration , the estimate is not updated using the full gradient . Instead, a subset of DUs, termed a mini-batch and denoted as , is randomly selected for the update. The mini-batch contains elements. Here, represents the batch size, and we assume that the number of antenna clusters is divisible by . The mini-batch-based GD iteration operates as

| (13) |

where denotes the estimated gradient based on the mini-batch . This is expressed as

| (14) |

where the scaling factor ensures the unbiasedness of the mini-batch gradient as an estimator of the full gradient [32], denoted as,

| (15) |

with the expectation taken across any possible selections of mini-batch . This guarantees the asymptotic convergence to the minimum of the continuous-relaxed version of problem (11) via the mini-batch GD method.

Remark 1

As shown in (14), only a portion of all DUs are needed for gradient computations and information exchange with the CU per mini-batch GD iteration, thus providing significant design flexibility for balancing performance and complexity by adjusting the mini-batch size. Moreover, simulation results reveal that employing mini-batch-based gradients with a sufficiently large mini-batch size (e.g., ) leads to virtually identical performance compared to using full gradients. Hence, the proposed approach enables substantial savings in computational resources and interconnection bandwidth.

With the proposed mini-batch-based gradient updating rule in mind, we next introduce the Mini-NAG-MCMC algorithm for decentralized MIMO detection. For ease of presentation, in the remainder of this paper, we consider the star topology as displayed in Fig. 2 unless noted otherwise. We assume that a total of samples are generated to perform the final inference for MIMO detection. The generation of each sample is divided into two stages as detailed in the following.

III-B1 Mini-Batch GD Stage

In the mini-batch GD stage, we select NAG as the GD component due to its fastest convergence rate among the first-order gradient-based optimization algorithms [28]. NAG incorporates momentum in the update rule to expedite and stabilize convergence. To integrate the NAG method into the DBP architecture, in each GD iteration , the incorporation of momentum is performed at the CU to derive the intermediate point as

| (16) |

where is the previous update stored at the CU (). The momentum factor determines the proportion of the previous update that is added to the current update.

The intermediate point is then broadcast to DUs belonging to the randomly chosen mini-batch . Upon receiving the broadcast, each DU computes the local gradient in parallel based on (10). These local gradients are then uploaded and aggregated for performing the descent from and updating the momentum at the CU:

| (17) | ||||

| (18) |

The NAG procedure is iteratively conducted until a preset number of iterations is reached. The output of NAG, , is then used as the input for the subsequent MCMC sampling.

One critical parameter that requires careful selection in the NAG is the learning rate . From [28], a general learning rate choice for an -Lipschitz smooth objective function is

| (19) |

where is the Lipschitz constant, and choosing the upper bound is beneficial for convergence. For the objective function in (12), we have

| (20) |

where is the global Gram matrix, and takes the largest eigenvalue of [27, 33]. To avoid the computationally expensive eigenvalue decomposition, it is recommended in [27] to replace with its upper bound and set the learning rate as

| (21) |

A straightforward way to derive in (21) under the DBP architecture is to accumulate the local Gram matrices from all DUs, forming the global Gram matrix at the CU for calculating (21). Specifically, during the preprocessing period, each DU computes the local Gram matrix,

| (22) |

which is then collected at the CU to derive the global Gram matrix, .

Under the so-called favorable propagation in massive MIMO systems [34], the computation of can be further simplified. In such cases, we have

| (23) |

where denotes the -th column vector of . This equation indicates that, for MIMO uplink with a large number of BS antennas, the Gram matrix is diagonally dominant and can be approximated by its diagonal counterpart given as

| (24) |

where is the diagonal counterpart of the local Gram matrix and can be calculated using the column vector of :

| (25) |

Finally, the learning rate in (21) can be approximated as

| (26) |

Remark 2

With the approximation , the complexity on the order of for calculating (22) at each DU is substituted by the low complexity of for calculating (25). Meanwhile, only the real-valued diagonal elements of are required to be exchanged and accumulated, thereby alleviating the high interconnection bandwidth to convey the entire Gram matrix.

III-B2 MCMC Sampling Stage

After a sufficient GD procedure to refine the estimate, the candidate sample is generated at the CU by adding random perturbation to and mapping the perturbed estimate to the QAM lattice using (5). The random Gaussian vector in (5) is given by

| (27) |

where is the magnitude of the random perturbation, i.e., step size of the random walk, is the Cholesky factor specifying the covariance of the random perturbation as , and follows . The step size is selected to enable sufficient ability to escape local minima while maintaining a reasonable acceptance probability [35]. Moreover, according to MCMC literature [36, 37], can be set to specify a covariance proportional to the inverse of the Hessian matrix of the objective function. However, this setting is computationally expensive. Experimental observations indicate that using an identity matrix for causes almost no performance degradation compared to the former setting, while substantially reducing the computational cost.

Subsequently, the CU performs the MH acceptance test, where the candidate sample is accepted with a probability computed according to (6). This probability can be alternatively expressed as

| (28) |

Evaluation of the global objective function requires the knowledge of and , which is not directly accessible to the CU. Instead, we evaluate the local objective function at each DU and then aggregate them at the CU. Specifically, for the acceptance test of the -th sampling iteration, the CU first broadcasts the candidate sample to all the DUs. Then, each DU independently evaluates the local objective function in (9) and uploads the value to the CU for aggregation to derive

| (29) |

This global objective function value is subsequently used to compute the acceptance probability in (28). The value is stored or discarded based on the acceptance or rejection of the candidate sample.

| (30) |

Finally, these global objective function values for the drawn samples are used for generating the final estimate.

The above two stages are alternatively executed times for the Markov chain to converge to the steady state and derive a sufficient number of samples that follow the posterior distribution, forming the sample list for statistical inference at the CU. Moreover, parallel samplers can be deployed to reduce sampling latency and correlation. In hard decision, the final estimate can be obtained by selecting the sample from that minimizes the objective function . Details of the Mini-NAG-MCMC algorithm are summarized in Algorithm 1. In the Appendix, we provide the proof of the convergence of the proposed algorithm in a simplified setting.

Remark 3

It is worth mentioning that the mini-batch gradient-based MCMC method is also applicable to the daisy-chain topology. The difference lies in the exchange of information among processing units. Without loss of generality, we assume DU is within the selected mini-batch in both the star and daisy-chain topologies. For the daisy-chain topology, we assume the DUs are connected in the same order as shown in Fig. 1. Taking the information upload stage as an instance, DU in the star topology directly uploads its local gradient to the CU as shown in Fig. 2; By contrast, DU in the daisy-chain topology needs to accumulate the gradients of its own with those from the former DUs within the mini-batch to derive and then uploads it to the CU. This sequential information exchange results in an increased data transfer latency.

Remark 4

Remark 5

Although the use of multiple computing units in decentralized detection inevitably results in additional energy consumption, the proposed decentralized detector allows for energy-efficient implementation by significantly reducing the computation of each DU. This enables DUs to operate in a more energy-efficient region with a lower computational density compared to the CU in the centralized scheme. Moreover, the mini-batch-based gradient updating rule can further increase energy efficiency by involving only a subset of DUs in gradient computations per iteration while keeping others inactive.

IV Complexity Analysis

In this section, we analyze the complexity of the proposed Mini-NAG-MCMC from two aspects: computational complexity and interconnection bandwidth.

| Algorithms | Computational complexity | ||

|---|---|---|---|

| Each DU | CU | ||

| Decentralized | Mini-NAG-MCMC | ||

| DeADMM | |||

| DeADMM | |||

| DeLAMA | |||

| DeEP | |||

| DeNewton | |||

| Centralized | LMMSE | ||

| -best | |||

| NAG-MCMC | |||

-

Note: is the number of iterations of the iterative detection schemes; is the list size of the -best detector; is used for NAG-MCMC and Mini-NAG-MCMC.

IV-A Computational Complexity

Table I presents the computational complexity of Mini-NAG-MCMC compared with various centralized and decentralized detection algorithms. The decentralized baselines include the decentralized ADMM (DeADMM) [6], LAMA (DeLAMA)333We consider the fully decentralized version of the LAMA detector proposed in [12]. [12], EP (DeEP) [14], and Newton (DeNewton) [9] detectors. Centralized LMMSE [38], -best sphere decoding [40], and NAG-MCMC [27] algorithms are also compared. For the considered decentralized schemes, operations in the CU and each DU are counted separately. We only present the count of a single DU to reflect the parallel processing of the DBP architecture. We remark that while this count is lower than the total computational cost from all local processing units, it reflects the computation latency of the algorithm.

In the comparison, the complexity of a matrix inversion is , where denotes the standard asymptotic notation [39, Ch. 3.1] to indicate the order of complexity. The complexity of Mini-NAG-MCMC is dominated by gradient computations and objective function evaluation, costing and at each DU, respectively. The other operations in the MCMC sampling stage, such as the random walk, QAM mapping, and acceptance test, incur a modest cost of multiplications at the CU. The comparison in Table I shows that Mini-NAG-MCMC is highly efficient due to avoiding high-complexity matrix multiplications and inversions.

Table I also reveals that the proposed Mini-NAG-MCMC effectively relieves the computation burden at the CU compared to the centralized algorithms by shifting the computationally intensive gradient computations to the DUs. As a result, the computational complexity at each processing unit is independent of the number of BS antennas , which is beneficial for reducing computation latency. Furthermore, the proposed Mini-NAG-MCMC possesses the advantage that only a portion of the DUs is required to perform computations in each mini-batch GD iteration of the algorithm. Thus, the total computational cost can be saved to a large extent compared to other decentralized schemes.

Note that the number of iterations also has a significant impact on the complexity of the proposed method and other iterative detection schemes in Table I. However, it is practically difficult to provide an analytical estimate of the number of iterations required to reach a certain performance level. Hence, we resort to empirically evaluating the computational complexity of the detectors listed in Table I and meanwhile taking into account the achieved performance. Detailed results are shown in Sec. V.

IV-B Interconnection Bandwidth

The interconnection bandwidth is measured by the number of bits transferred from and to the CU within a symbol period. Denote by the bit-width of each real value (real/imaginary parts of a complex scalar number) in the implementation. Note that the representation of each QAM symbol in the derived samples only requires bits, which can be significantly smaller than . For centralized schemes, the entities required to be uploaded to the CU include the full channel matrix and received signal , resulting in the bandwidth

| (31) |

For the star topology-based Mini-NAG-MCMC, the CU receives and real values during preprocessing and sampling iterations, respectively. The CU also broadcasts continuous-valued real numbers and QAM symbols during the sampling. For the daisy-chain topology-based Mini-NAG-MCMC, the numbers that are required to be transferred are identical to those in the star topology. However, the CU requires communication with only one directly connected DU, thereby significantly reducing interconnection costs. Hence, the interconnection bandwidth of Mini-NAG-MCMC with star and daisy-chain topologies are respectively given by

| (32) | ||||

| (33) |

The results of both topologies do not depend on the BS antenna count , a notable advantage over centralized schemes.

We note that if we consider the interconnection cost on all links in the daisy-chain topology, the total interconnection bandwidth would be comparable to that of the star topology. However, the daisy-chain architecture can uniformly allocate the bandwidth demand among all links, resulting in a significant reduction in the individual requirements on each link [7]. Notwithstanding, the price to pay is extended processing latency as a result of the sequential processing architecture.

V Simulation Results

In this section, we present numerical results to evaluate the proposed Mini-NAG-MCMC in terms of error rate performance, computational complexity, and interconnection cost.

V-A Simulation Setup

Both IID Rayleigh fading and realistic 3GPP channel models are considered in the simulation. The Rayleigh fading MIMO channel has elements that follow the Gaussian distribution . For realistic channels, the 3D channel model outlined in the 3GPP 36.873 technical report [41] is utilized. This model has been widely adopted for its accurate representation of real-world channel environments. We consider both uncoded and coded MIMO systems. For uncoded systems, we evaluate the bit error rate (BER) performance until bits are transmitted or the number of bit errors exceeds 1000. Different independent channel realizations are considered for each symbol transmission. For coded systems, we employ a rate- low-density parity-check (LDPC) code and the OFDM scheme, wherein each user’s coded bits are converted to QAM symbols and mapped onto 216 subcarriers for data transmission. The detector calculates soft outputs using max-log approximation [20]. Belief propagation decoding is then utilized with a maximum number of 10 iterations. The block error rate (BLER) is evaluated until coded blocks are transmitted with independent channel realizations.

We compare our method with the centralized LMMSE and NAG-MCMC [27] schemes and representative decentralized detector baselines as listed in Table I. The optimal ML [3] and near-optimal -best [40] detectors are used as performance benchmarks. For parameter settings of NAG-MCMC and the proposed Mini-NAG-MCMC, the number of NAG iterations is set to . The choice of momentum factor follows the recommended setting in [28]:

| (34) |

The random walk step size is empirically set to . To ensure computational efficiency, we set in (27). The initial sample for both NAG-MCMC and Mini-NAG-MCMC is randomly selected from .

V-B Convergence Analysis

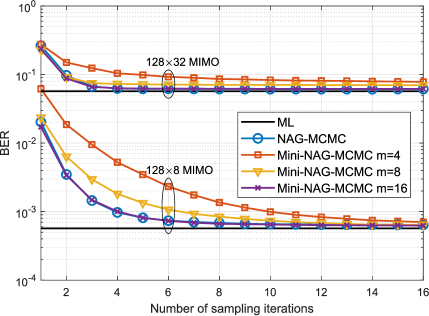

Fig. 3 presents the convergence performance of Mini-NAG-MCMC in terms of the BER versus the number of sampling iterations.444Note that conducting a rigorous theoretical analysis of convergence is challenging for gradient-based MCMC methods in discrete search spaces [22], [26]. Therefore, we have attempted to empirically evaluate the convergence property via numerical simulations, aiming to provide some valuable insights. The MIMO system is configured with and or . The system employs 16-QAM modulation and operates under IID Rayleigh fading channels.

Fig. 3(a) shows the convergence behavior under different choices of mini-batch sizes. We also provide the convergence performance of the centralized NAG-MCMC algorithm as a reference. The results indicate that Mini-NAG-MCMC converges faster as the mini-batch size increases in both and cases. This result is in accordance with expectation because the variance of the mini-batch gradient approximation in (14) is a decreasing function of [42]. Meanwhile, the convergence speed is virtually unaffected by the number of users, revealing the robustness of Mini-NAG-MCMC to varying user loads.

Remarkably, the Mini-NAG-MCMC with achieves virtually the same convergence performance as the centralized NAG-MCMC that uses full gradients. This phenomenon can be attributed to the sufficient accuracy of the mini-batch approximation when is sufficiently large (e.g., in this simulation) and the synergistic effect of stochastic gradients and MCMC in escaping local minima. Consequently, the proposed method achieves equivalent performance to the centralized counterpart that computes full gradients, while offering the benefit of reduced computational cost since only half of all the DUs are utilized for computations per iteration.

Fig. 3(b) compares the convergence performance under different numbers of clusters, with the mini-batch size fixed as . The figure reveals that the convergence speed shows minimal variation with respect to the number of clusters, which is a notable advantage of the proposed Mini-NAG-MCMC. Hence, the proposed method can accommodate to different levels of decentralization, allowing for trade-offs between the computational complexity at each DU and the number of interconnection interfaces (as well as bandwidth) by flexibly adjusting the system parameter according to different requirements.

V-C Uncoded Detection Performance

In this subsection, we investigate the proposed method under Rayleigh fading MIMO channels in uncoded systems. Fig. 4 provides the BER performance and computational complexity for a massive MIMO system with BS antennas, users, clusters, and 16-QAM modulation. The mini-batch size for Mini-NAG-MCMC is . We compare the proposed method with centralized LMMSE [38], -best [40], and NAG-MCMC [27] detectors and two representative decentralized baselines, namely the DeADMM [6] and DeEP [14] detectors.555For the implementation of the DeADMM scheme, we use the code released at https://github.com/VIP-Group/DBP. The list size for the -best detector is set to , serving as a near-optimal baseline. The number of iterations for DeADMM and DeEP is set as and , respectively, which is necessitated by the convergence of the algorithm in the considered system setup.

Fig. 4(a) shows that the LMMSE and DeADMM detectors have a significant performance gap to the ML detector. In contrast, Mini-NAG-MCMC achieves a noticeable performance gain over LMMSE and DeADMM when the number of sampling iterations is and further approaches the ML performance with a slight increase in . Furthermore, Mini-NAG-MCMC obtains comparable BER performance to NAG-MCMC when both methods are equipped with the same .

Fig. 4(b) illustrates the complexity bar chart of different detectors in terms of the number of real-valued multiplications required per detection. The number of multiplications at the CU for Mini-NAG-MCMC is much lower than the counterparts at the CU for the centralized LMMSE, -best, and NAG-MCMC detectors. We remark that despite the total computational cost of Mini-NAG-MCMC being comparable to the centralized LMMSE and NAG-MCMC, the proposed decentralized algorithm offers dual advantages: First, the complexity at each DU is notably low, effectively leading to a reduced computation latency. Second, the distribution of complexity among many DUs allows the use of cheap computing hardware within each processing unit, in contrast to the centralized algorithms that exert the entire complexity burden on the CU. Furthermore, the computational cost of Mini-NAG-MCMC is also similar to that of the DeADMM and substantially lower than that of the DeEP.

Considering its near-ML performance and low complexity at each unit, as shown in Fig. 4, our proposed Mini-NAG-MCMC demonstrates high promise in achieving an attractive trade-off between performance and complexity.

Fig. 5 shows the results when the number of users is increased to , with the other system settings remaining unchanged compared to Fig. 4. Fig. 5(a) indicates that the performance of LMMSE, DeADMM, and DeEP further degrades as compared to the near-optimal -best detector when the user number increases. However, Mini-NAG-MCMC demonstrates significant performance improvement over LMMSE when and achieves comparable performance to the -best when , consistent with the results presented in Fig. 4(a). This finding reflects that the proposed Mini-NAG-MCMC and the associated learning rate approximation are robust to the increased user load. Moreover, upon comparing Fig. 5(b) to Fig. 4(b), it is observed that the complexity increase of Mini-NAG-MCMC is subtle in contrast to the LMMSE, -best, and DeEP detectors. Specifically, the complexity increase is 50% for Mini-NAG-MCMC, whereas the corresponding values for LMMSE, -best, and DeEP are 165%, 125%, and 230%, respectively, demonstrating the outstanding scalability of Mini-NAG-MCMC as revealed in Sec. IV-A.

Fig. 6 presents the BER performance under high-order 64-QAM/256-QAM modulation schemes. The other system configurations are the same as Fig. 5. In this experimental setup, the -best detector is configured with to provide a near-ML baseline. The DeADMM and DeEP detectors execute for and iterations, respectively. The proposed Mini-NAG-MCMC is set up with iterations and a mini-batch size of . The figure illustrates that the proposed method achieves a performance gain of more than 1.5 dB when compared to the LMMSE detector and the decentralized baselines and approaches the performance of the near-optimal -best. These results demonstrate that the proposed decentralized detector scales to high-order modulation.

V-D Coded Detection Performance

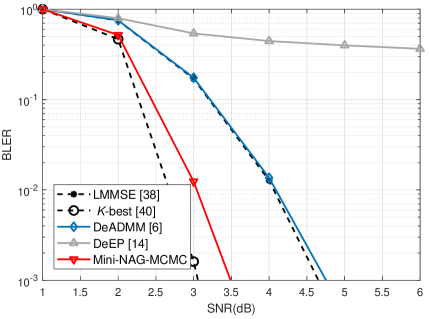

We further investigate the proposed method under the more realistic 3GPP 3D MIMO channel model [41] with coded transmissions. We examine the urban macrocell non-line-of-sight scenario. For the simulation of this scenario, the QuaDRiGa simulator [43] is employed. In the considered scenario, the BS is equipped with antennas, which are uniformly partitioned into clusters, to serve users. The BS adopts a uniform planar antenna array with half-wavelength antenna spacing. The users are evenly dropped within a cell sector centered around the BS, spanning a radius ranging from 10 m to 500 m. The carrier frequency is set as 2.53 GHz, and the channel spans over 20 MHz bandwidth. To cope with the complicated channel environments, the -best detector is set up with . The number of iterations for DeADMM and DeEP is selected as and , respectively. The number of iterations and mini-batch size for Mini-NAG-MCMC are and , respectively.

As shown in Fig. 7, Mini-NAG-MCMC demonstrates stable performance, exhibiting significant robustness to the realistic channel model. Specifically, Mini-NAG-MCMC outperforms LMMSE and other decentralized detectors, achieving an approximate 1 dB gain under both 16-QAM and 64-QAM modulation. Furthermore, the gap between Mini-NAG-MCMC and the near-optimal performance achieved under centralized schemes by the -best detector is a mere 0.4 dB. Considering the distribution of complexity across DUs, achieving near-optimal performance in this context is indeed promising.

V-E Interconnection Bandwidth Comparison

We evaluate the interconnection cost of the proposed Mini-NAG-MCMC in this subsection. The bit-width is selected as in the evaluation. The BER performance versus interconnection bandwidth of Mini-NAG-MCMC with star and daisy-chain topologies for a MIMO system with , , and is presented in Fig. 8. The mini-batch size for Mini-NAG-MCMC is set to . In the figure, the -axis represents the percentage of interconnection bandwidth of Mini-NAG-MCMC relative to the centralized algorithm, calculated according to (31)-(33) by setting the number of sampling iterations to . The -axis represents the performance gap between Mini-NAG-MCMC and the centralized ML algorithm in terms of the SNR required to achieve a target . From the figure, we have the following observations:

-

•

Mini-NAG-MCMC achieves a flexible trade-off between performance and interconnection bandwidth.

-

•

The daisy-chain topology provides lower interconnection bandwidth than the star topology and is more appreciated in interconnection bandwidth-constrained scenarios, despite a larger processing delay.

Furthermore, Fig. 9 presents the interconnection bandwidth of Mini-NAG-MCMC compared with the centralized algorithm as a function of the number of BS antennas when and . The mini-batch size and the number of sampling iterations for Mini-NAG-MCMC are and , respectively. The figure shows that the proposed method with both star and daisy-chain topologies exhibits significantly reduced interconnection cost compared to the centralized processing as increases. This result confirms the scalability of the proposed method to massive MIMO systems with a large number of BS antennas.

V-F Trade-off between Performance and Complexity

Fig. 10 presents the performance-complexity trade-off of various detectors for a massive MIMO system with , , , 16-QAM modulation, and Rayleigh fading channels. All the detectors are implemented using the star DBP topology. The figure reveals that the proposed Mini-NAG-MCMC () achieves the most promising results among the considered detectors in the trade-off between BER performance, computational complexity, and interconnection bandwidth. Despite the higher interconnection bandwidth of Mini-NAG-MCMC compared to DeLAMA and DeEP, the gap can be narrowed by deploying the proposed detector using the daisy-chain topology. This result highlights the potential of the proposed method as a highly implementable and desirable solution for massive MIMO detectors under DBP architectures.

VI Conclusion

In this paper, the gradient-based MCMC method has been utilized for developing a novel decentralized detector for massive MIMO systems operating within the DBP architecture. Our approach involves decentralizing the gradient-based MCMC technique, distributing the computationally demanding gradient computations among multiple DUs for parallel processing. These DUs are coordinated by a CU that engages in MCMC sampling to derive a list of important samples for MIMO detection. This design enables a balanced allocation and efficient use of computational resources. We have also incorporated the concept of mini-batch GD in the design of the decentralized detector, leading to reduced computation and interconnection demands across DUs while preserving high performance. Through extensive evaluation, we have demonstrated the clear superiority of the proposed Mini-NAG-MCMC over existing decentralized detectors, notably in terms of BER. Furthermore, a complexity analysis solidifies the proposed method’s edge in effectively balancing performance, computational overhead, and interconnection bandwidth.

[Convergence of the Proposed Algorithm] We illustrate the proposed algorithm’s convergence in a simplified setting where the algorithm uses , as employed in the experiments. Specifically, at each sampling iteration, a candidate state is generated as follows

| (35) |

where denotes the previous state. By setting the global objective function as , we target the tempered posterior distribution given by

| (36) |

where is the temperature parameter [20, 44]. Assuming that the prior distribution is equiprobable, the standard MH criterion [31] calculates the acceptance probability of as

| (37) |

However, the proposal probability in (37), which corresponds to (35), is computationally expensive. Specifically, considering the discrete space , the proposal probability can be expressed as [45]

| (38) |

where is the -th element of , denotes an indicator function that returns one only if and zero otherwise, and denotes a normalization constant given by

| (39) |

which is generally intractable due to the requirement for traversal over the entire space of size .

To address this challenge and simplify computation, we choose to omit the proposal probability ratio in (37), by noting that at the latter iterations of the algorithm as the chain reaches the high probability region of the state space. Given mild assumptions from [46], this strategy is supported by the following proposition.

Proposition 1

Assume the following:

-

1.

.

-

2.

The learning rate for large , where denotes the standard asymptotic notation for higher-order infinitesimals [39, Ch. 3.1].

-

3.

There exists a such that for all , where:

-

•

denotes the supremum over all possible selections of ,

-

•

denotes the Hessian matrix on mini-batch .

-

•

Then, we have

| (40) |

Proof:

Defining and by the assumption , we have

| (41) |

Note that

| (42) |

Using the Taylor series expansion for some between and , and by the assumption , we have

| (43) | ||||

| (44) |

where the first inequality in (44) follows from the Cauchy-Schwarz inequality. Then by the assumption for large , which is approximately satisfied by the learning rate selection in (26), it follows that (42) is of the same order as . Therefore, the proposal probability ratio in (41) becomes

| (45) |

completing the proof. ∎

After omitting the proposal probability ratio, we derive the modified acceptance probability as follows

| (46) |

which we used in (28) for our proposed algorithm. By accepting as the new state with this probability, the Markov chain approximately satisfies detailed balance, which is a sufficient condition to align the stationary distribution of the Markov chain with the target posterior distribution [18, 47].

Moreover, for any two states , the transition probability of the Markov chain is given by

| (49) |

where the second branch holds because the chain remains in state either when the candidate is or if the candidate is but gets rejected. With this transition probability, it is easy to show that

| (50) |

implying the chain’s irreducibility by definition [48], and

| (51) |

where denotes the set of positive integers, and “gcd” represents the greatest common divisor, verifying the chain’s aperiodicity by definition [48]. Using these properties, it can be shown that is a unique stationary distribution of the proposed algorithm’s underlying Markov chain [49, Corollary 1.17]. This chain approximately converges to the target posterior distribution according to the convergence theorem of MCMC [49, Th. 4.9], thereby verifying the convergence of the proposed algorithm.

References

- [1] X. Zhou, L. Liang, J. Zhang, C.-K. Wen, and S. Jin, “Decentralized massive MIMO detection using mini-batch gradient-based MCMC,” in Proc. IEEE Int. Workshop Signal Process. Adv. Wireless Commun. (SPAWC), Lucca, Italy, Sep. 2024, pp. 1–5.

- [2] E. Björnson, J. Hoydis, and L. Sanguinetti, “Massive MIMO networks: Spectral, energy, and hardware efficiency,” Foundations and Trends® in Signal Processing, vol. 11, no. 3-4, pp. 154–655, 2017.

- [3] S. Yang and L. Hanzo, “Fifty years of MIMO detection: The road to large-scale MIMOs,” IEEE Commun. Surv. Tuts., vol. 17, no. 4, pp. 1941–1988, 2015.

- [4] E. Björnson, L. Sanguinetti, H. Wymeersch, J. Hoydis, and T. L. Marzetta, “Massive MIMO is a reality—What is next?: Five promising research directions for antenna arrays,” Digit. Signal Process., vol. 94, pp. 3–20, Nov. 2019.

- [5] A. Puglielli et al., “Design of energy- and cost-efficient massive MIMO arrays,” Proc. IEEE, vol. 104, no. 3, pp. 586–606, Mar. 2016.

- [6] K. Li, R. R. Sharan, Y. Chen, T. Goldstein, J. R. Cavallaro, and C. Studer, “Decentralized baseband processing for massive MU-MIMO systems,” IEEE J. Emerg. Sel. Topics Circuits Syst., vol. 7, no. 4, pp. 491–507, Dec. 2017.

- [7] J. Rodríguez Sánchez, F. Rusek, O. Edfors, M. Sarajlić, and L. Liu, “Decentralized massive MIMO processing exploring daisy-chain architecture and recursive algorithms,” IEEE Trans. Signal Process., vol. 68, pp. 687–700, Jan. 2020.

- [8] “Common public radio interface,” [Online]. Available: http://www.cpri.info, 2019.

- [9] A. Kulkarni, M. A. Ouameur, and D. Massicotte, “Hardware topologies for decentralized large-scale MIMO detection using Newton method,” IEEE Trans. Circuits Syst. I, Reg. Papers, vol. 68, no. 9, pp. 3732–3745, Sep. 2021.

- [10] K. Li, Y. Chen, R. Sharan, T. Goldstein, J. R. Cavallaro, and C. Studer, “Decentralized data detection for massive MU-MIMO on a Xeon Phi cluster,” in Proc. Asilomar Conf. Signals, Syst. Comput., Nov. 2016, pp. 468–472.

- [11] K. Li, O. Castañeda, C. Jeon, J. R. Cavallaro, and C. Studer, “Decentralized coordinate-descent data detection and precoding for massive MU-MIMO,” in Proc. IEEE Int. Symp. Circuits Syst., May 2019, pp. 1–5.

- [12] C. Jeon, K. Li, J. R. Cavallaro, and C. Studer, “Decentralized equalization with feedforward architectures for massive MU-MIMO,” IEEE Trans. Signal Process., vol. 67, no. 17, pp. 4418–4432, Sep. 2019.

- [13] E. Bertilsson, O. Gustafsson, and E. G. Larsson, “A scalable architecture for massive MIMO base stations using distributed processing,” in Proc. Asilomar Conf. Signals, Syst., Comput., Nov. 2016, pp. 864–868.

- [14] H. Wang, A. Kosasih, C.-K. Wen, S. Jin, and W. Hardjawana, “Expectation propagation detector for extra-large scale massive MIMO,” IEEE Trans. Wireless Commun., vol. 19, no. 3, pp. 2036–2051, Mar. 2020.

- [15] Z. Zhang, H. Li, Y. Dong, X. Wang, and X. Dai, “Decentralized signal detection via expectation propagation algorithm for uplink massive MIMO systems,” IEEE Trans. Veh. Technol., vol. 69, no. 10, pp. 11 233–11 240, Oct. 2020.

- [16] Y. Dong, H. Li, C. Gong, X. Wang, and X. Dai, “An enhanced fully decentralized detector for the uplink M-MIMO system,” IEEE Trans. Veh. Technol., vol. 71, no. 12, pp. 13 030–13 042, Dec. 2022.

- [17] Z. Zhang, Y. Dong, K. Long, X. Wang, and X. Dai, “Decentralized baseband processing with Gaussian message passing detection for uplink massive MU-MIMO systems,” IEEE Trans. Veh. Technol., vol. 71, no. 2, pp. 2152–2157, Feb. 2022.

- [18] C. M. Bishop, Pattern recognition and machine learning. New York, NY, USA: Springer, 2006.

- [19] S. Brooks, A. Gelman, G. Jones, and X.-L. Meng, Handbook of Markov chain Monte Carlo. Boca Raton, FL, USA: Chapman & Hall, 2011.

- [20] B. Farhang-Boroujeny, H. Zhu, and Z. Shi, “Markov chain Monte Carlo algorithms for CDMA and MIMO communication systems,” IEEE Trans. Signal Process., vol. 54, no. 5, pp. 1896–1909, May 2006.

- [21] J. C. Hedstrom, C. H. Yuen, R.-R. Chen, and B. Farhang-Boroujeny, “Achieving near MAP performance with an excited Markov chain Monte Carlo MIMO detector,” IEEE Trans. Wireless Commun., vol. 16, no. 12, pp. 7718–7732, Dec. 2017.

- [22] S. A. Laraway and B. Farhang-Boroujeny, “Implementation of a Markov chain Monte Carlo based multiuser/MIMO detector,” IEEE Trans. Circuits and Syst. I: Reg. Papers, vol. 56, no. 1, pp. 246–255, Jan. 2009.

- [23] Y.-A. Ma, Y. Chen, C. Jin, N. Flammarion, and M. I. Jordan, “Sampling can be faster than optimization,” Proc. Nat. Acad. Sci., vol. 116, no. 42, pp. 20 881–20 885, Sep. 2019.

- [24] M. Welling and Y. W. Teh, “Bayesian learning via stochastic gradient Langevin dynamics,” in Proc. 28th Int. Conf. Mach. Learn. (ICML), pp. 681-688, 2011.

- [25] N. M. Gowda, S. Krishnamurthy, and A. Belogolovy, “Metropolis-Hastings random walk along the gradient descent direction for MIMO detection,” in Proc. IEEE Int. Conf. Commun. (ICC), Montreal, QC, Canada, Jun. 2021, pp. 1–7.

- [26] N. Zilberstein, C. Dick, R. Doost-Mohammady, A. Sabharwal, and S. Segarra, “Annealed Langevin dynamics for massive MIMO detection,” IEEE Trans. Wireless Commun., vol. 22, no. 6, pp. 3762–3776, Jun. 2023.

- [27] X. Zhou, L. Liang, J. Zhang, C.-K. Wen, and S. Jin, “Gradient-based Markov chain Monte Carlo for MIMO detection,” IEEE Trans. Wireless Commun., vol. 23, no. 7, pp. 7566–7581, Jul. 2024.

- [28] Y. Nesterov, Introductory lectures on convex optimization. Springer Science+Business Media, 2004.

- [29] H. Li, Y. Dong, C. Gong, X. Wang, and X. Dai, “Decentralized groupwise expectation propagation detector for uplink massive MU-MIMO systems,” IEEE Internet Things J., vol. 10, no. 6, pp. 5393–5405, Mar. 2023.

- [30] X. Zhao, M. Li, B. Wang, E. Song, T.-H. Chang, and Q. Shi, “Decentralized equalization for massive MIMO systems with colored noise samples,” May 2023. [Online] Available: http://arxiv.org/abs/2305.12805.

- [31] W. K. Hastings, “Monte Carlo sampling methods using Markov chains and their applications,” Biometrika, vol. 57, no. 1, pp. 97–109, Apr. 1970.

- [32] L. Bottou, F. E. Curtis, and J. Nocedal, “Optimization methods for large-scale machine learning,” SIAM Rev., vol. 60, no. 2, pp. 223–311, Jan. 2018.

- [33] B. O’Donoghue and E. Candès, “Adaptive restart for accelerated gradient schemes,” Found. Comput. Math., vol. 15, no. 3, pp. 715–732, Jun. 2015.

- [34] H. Q. Ngo, E. G. Larsson, and T. L. Marzetta, “Aspects of favorable propagation in massive MIMO,” in Proc. Eur. Signal Process. Conf. (EUSIPCO), Sep. 2014, pp. 76–80.

- [35] A. Barbu and S.-C. Zhu, “Hamiltonian and Langevin Monte Carlo,” in Monte Carlo methods, A. Barbu and S.-C. Zhu, Eds. Singapore: Springer, 2020, pp. 281–325.

- [36] J. Martin, C. L. Wilcox, C. Burstedde, and O. Ghattas, “A stochastic Newton MCMC method for large-scale statistical inverse problems with application to seismic inversion,” SIAM J. Sci. Comput., vol. 34, no. 3, pp. 1460–1487, Jan. 2012.

- [37] K. P. Murphy, Probabilistic machine learning: Advanced topics., Cambridge, MA, USA: MIT Press, 2023.

- [38] P. W. Wolniansky, G. J. Foschini, G. D. Golden, and R. A. Valenzuela, “V-BLAST: An architecture for realizing very high data rates over the rich-scattering wireless channel,” Proc. Int. Symp. Signals Syst. Electron. (ISSSE), Oct. 1998, pp. 295–300.

- [39] T. H. Cormen, C. E. Leiserson, R. L. Rivest, and C. Stein, Introduction to Algorithms, 2nd ed. Cambridge, Massachusetts: MIT Press, 2001.

- [40] Z. Guo and P. Nilsson, “Algorithm and implementation of the K-best sphere decoding for MIMO detection,” IEEE J. Sel. Areas Commun., vol. 24, no. 3, pp. 491–503, Mar. 2006.

- [41] 3GPP TR 36.873, “Study on 3D channel model for LTE (Release 12),” Tech. Rep., Jun. 2017. [Online]. Available: https://www.3gpp.org/ftp/Specs/archive/36_series/36.873/.

- [42] X. Qian and D. Klabjan, “The impact of the mini-batch size on the variance of gradients in stochastic gradient descent,” Apr. 2020. [Online] Available: http://arxiv.org/abs/2004.13146.

- [43] S. Jaeckel, L. Raschkowski, K. Börner, and L. Thiele, “QuaDRiGa: A 3-D multi-cell channel model with time evolution for enabling virtual field trials,” IEEE Trans. Antennas Propag., vol. 62, no. 6, pp. 3242–3256, Jun. 2014.

- [44] B. Hassibi, M. Hansen, A. G. Dimakis, H. A. J. Alshamary, and W. Xu, “Optimized Markov chain Monte Carlo for signal detection in MIMO systems: An analysis of the stationary distribution and mixing time,” IEEE Trans. Signal Process., vol. 62, no. 17, pp. 4436–4450, Sep. 2014.

- [45] R. Zhang, X. Liu, and Q. Liu, “A Langevin-like sampler for discrete distributions,” in Proc. Int. Conf. Mach. Learn. (ICML), Jun. 2022, pp. 26 375–26 396.

- [46] T.-Y. Wu, Y. X. R. Wang, and W. H. Wong, “Mini-batch Metropolis-Hastings with reversible SGLD proposal,” J. Amer. Stat. Assoc., vol. 117, no. 537, pp. 386–394, Jan. 2022.

- [47] A. Doucet and X. Wang, “Monte Carlo methods for signal processing: A review in the statistical signal processing context,” IEEE Signal Process. Mag., vol. 22, no. 6, pp. 152–170, Nov. 2005.

- [48] J. R. Norris, Markov Chains. Cambridge, U.K.: Cambridge Univ. Press, 1997.

- [49] D. A. Levin, Y. Peres, and E. L. Wilmer, Markov Chains and Mixing Times. Providence, RI, USA: Amer. Math. Soc., 2008.