Minimum Gamma Divergence for

Regression and Classification Problems

Preface

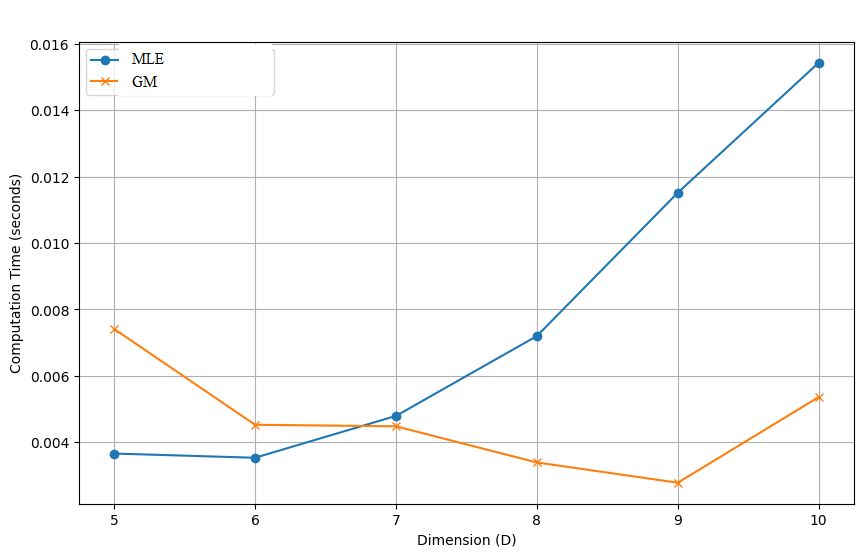

In an era where data drives decision-making across diverse fields, the need for robust and efficient statistical methods has never been greater. As a researcher deeply involved in the study of divergence measures, I have witnessed firsthand the transformative impact these tools can have on statistical inference and machine learning. This book aims to provide a comprehensive guide to the class of power divergences, with a particular focus on the -divergence, exploring their theoretical underpinnings, practical applications, and potential for enhancing robustness in statistical models and machine learning algorithms.

The inspiration for this book stems from the growing recognition that traditional statistical methods often fall short in the presence of model misspecification, outliers, and noisy data. Divergence measures, such as the -divergence, offer a promising alternative by providing robust estimation techniques that can withstand these challenges. This book seeks to bridge the gap between theoretical development and practical application, offering new insights and methodologies that can be readily applied in various scientific and engineering disciplines.

The book is structured into four main chapters. Chapter 1 introduces the foundational concepts of divergence measures, including the well-known Kullback-Leibler divergence and its limitations. It then presents a detailed exploration of power divergences, such as the , , and -divergences, highlighting their unique properties and advantages. Chapter 2 explores minimum divergence methods for regression models, demonstrating how these methods can improve robustness and efficiency in statistical estimation. Chapter 3 extends these methods to Poisson point processes, with a focus on ecological applications, providing a robust framework for modeling species distributions and other spatial phenomena. Finally, Chapter 4 explores the use of divergence measures in machine learning, including applications in Boltzmann machines, AdaBoost, and active learning. The chapter emphasizes the practical benefits of these measures in enhancing model robustness and performance.

By providing a detailed examination of divergence measures, this book aims to offer a valuable resource for statisticians, machine learning practitioners, and researchers. It presents a unified perspective on the use of power divergences in various contexts, offering practical examples and empirical results to illustrate their effectiveness. The methodologies discussed in this book are designed to be both insightful and practical, enabling readers to apply these concepts in their work and research.

This book is the culmination of years of research and collaboration. I am grateful to my colleagues and students whose questions and feedback have shaped the content of this book. Special thanks to Hironori Fujisawa, Masayuki Henmi, Takashi Takenouchi, Osamu Komori, Kenichi Hatashi, Su-Yun Huang, Hung Hung, Shogo Kato, Yusuke Saigusa and Hideitsu Hino for their invaluable support and contributions.

I invite you to explore the rich landscape of divergence measures presented in this book. Whether you are a researcher, practitioner, or student, I hope you find the concepts and methods discussed here to be both insightful and practical. It is my sincere wish that this book will contribute to the advancement of robust statistical methods and inspire further research and innovation in the field.

Tokyo, 2024 Shinto Eguchi

Chapter 1 Power divergence

We present a mathematical framework for discussing the class of divergence measures, which are essential tools for quantifying the difference between two probability distributions. These measures find applications in various fields such as statistics, machine learning, and data science. We begin by discussing the well-known Kullback-Leibler (KL) Divergence, highlighting its advantages and limitations. To address the shortcomings of KL-divergence, the paper introduces three alternative types: , , and -divergences. We emphasize the importance of choosing the right ”reference measure,” especially for and divergences, as it significantly impacts the results.

1.1 Introduction

We provide a comprehensive study of divergence measures that are essential tools for quantifying the difference between two probability distributions. These measures find applications in various fields such as statistics, machine learning, and data science [1, 6, 19, 79, 20]. See also [98, 17, 58, 34, 72, 80, 50].

We present the , , and divergence measures, each characterized by distinctive properties and advantages. These measures are particularly well-suited for a variety of applications, offering tailored solutions to specific challenges in statistical inference and machine learning. We further explore the practical applications of these divergence measures, examining their implementation in statistical models such as generalized linear models and Poisson point processes. Special attention is given to selecting the appropriate ’reference measure,’ which is crucial for the accuracy and effectiveness of these methods. The study concludes by identifying areas for future research, including the further exploration of reference measures. Overall, the paper serves as a valuable resource for understanding the mathematical and practical aspects of divergence measures.

In recent years, a number of studies have been conducted on the robustness of machine learning models using the -divergence, which was proposed in [33]. This book highlights that the -divergence can be defined even when the power exponent is negative, provided certain integrability conditions are met [26]. Specifically, one key condition is that the probability distributions are defined on a set of finite discrete values. We demonstrate that the -divergence with is intimately connected to the inequality between the arithmetic mean and the geometric mean of the ratio of two probability mass functions, thus terming it the geometric-mean (GM) divergence. Likewise, we show that the -divergence with can be derived from the inequality between the arithmetic mean and the harmonic mean of the mass functions, leading to its designation as the harmonic-mean (HM) divergence.

1.2 Probabilistic framework

Let be a random variable with a set of possible values in . We denote as a -finite measure, referred to as the reference measure. The reference measure is typically either the Lebesgue measure for a case where is a continuous random variable or the counting measure for a case where is a discrete one. Let us define as the space encompassing all probability measures ’s that are absolutely continuous with respect to each other. The probability for an event can be expressed as:

where is referred to as the Radon-Nicodym (RN) derivative. Specifically, is referred to as the probability density function (pdf) and the probability mass function (pmf) if is a continuous and discrete random variable, respectively.

Definition 1.

Let denote a functional defined on . Then, we call a divergence measure if for all and of , and means .

Consider two normal distributions and . If both distributions have the same mean and variance , they are identical, and their divergence is zero. However, as the mean and variance of one distribution diverge from those of the other, the divergence measure increases, quantifying how one distribution is different from the other. Thus, a divergence measure quantifies how one probability distribution diverges from another. The key properties are non-negativity, asymmetry and zero when identical. The asymmetry of the divergence measure helps one to discuss model comparisons, variational inferences, generative models, optimal control policies and so on. Researchers have proposed various divergence measures in statistics and machine learning to compare two models or to measure the information loss when approximating a distribution. It is more appropriately termed ‘information divergence’, although here is simply called ‘divergence’ for simplicity. As a specific example, the Kullback-Leibler (KL) divergence is given by the following equation:

| (1.1) |

where and . The KL-divergence is essentially independent of the choice since it is written without as

This implies that any properties for the KL-divergence directly can be thought as intrinsic properties between probability measures and regardless of the RN-derivatives with respect to the reference measure. The definition (1.1) implicitly assumes the integrability. This implies that the integrals of their products with the logarithm of their ratio must be finite. Such assumptions are almost acceptable in the practical applications in statistics and the machine learning. However, if we assume a Cauchy distribution as and a normal distribution , then is not a finite value. Thus, the KL-divergence is associated with instable behaviors, which arises non-robustness for the minimum KL-divergence method or the maximum likelihood. This aspect will be discussed in the following chapter.

If we write the cross-entropy as

| (1.2) |

then the KL-divergence is written by the difference as

The KL-divergence is a divergence measure due to the convexity of the negative logarithmic function. In foundational statistics, the Neyman-Pearson lemma holds a pivotal role. This lemma posits that the likelihood ratio test (LRT) is the most powerful method for hypothesis testing when comparing a null hypothesis distribution against an alternative distribution . In this context, the KL-divergence can be interpreted as the expected value of the LRT under the null hypothesis distribution . For a more in-depth discussion of the close relationship between and the Neyman-Pearson lemma, the reader is referred to [25].

In the context of machine learning, KL-divergence is often used in algorithms like variational autoencoders. Here, KL-divergence helps quantify how closely the learned distribution approximates the real data distribution. Lower KL-divergence values indicate better approximations, thus helping in the model’s optimization process.

1.3 Power divergence measures

The KL-divergence is sometimes referred to as log divergence due to its definition involving the logarithmic function. Alternatively, a specific class of power divergence measures can be derived from power functions characterized by the exponent parameters , , and , as detailed below. Among the numerous ways to quantify the divergence or distance between two probability distributions, ‘power divergence measure’ occupies a unique and significant property. Originating from foundational concepts in information theory, these measures have been extended and adapted to address various challenges across statistics and machine learning. As we strive to make better decisions based on data, understanding the nuances between different divergence measures becomes crucial. This section introduces the power divergence measures through three key types: , , and divergences, see [9] for a comprehensive review. Each of these offers distinct advantages and limitations, and serves as a building block for diverse applications ranging from robust parameter estimation to model selection and beyond.

(1) -divergence:

where belongs to , cf. [8, 1] for further details. Let us introduce

as a generator function for . Then the -divergence is written as

Note that . Equality is achieved if and only if , indicating that is a convex function. This implies with equality if and only if . This shows that is a divergence measure. The log expression [8] is given by

The -divergence is associated with the Pythagorean identity in the space . Assume that a triple of , and satisfies

This equation reflects a Pythagorean relation, wherein the triple forms a right triangle if is considered the squared Euclidean distance between and . We define two curves and in such that the RN-derivatives of and is given by and

respectively, where is a normalizing constant. We then observe that the Pythagorean relation remains unchanged for the triple , as illustrated by the following equation:

In accordance with this, The -divergence allows for to be as if it were an Euclidean space. This property plays a central role in the approach of information geometry. It gives geometric insights for statistics and machine learning [73].

For example, consider a multinomial distribution MN with a probability mass function (pmf):

| (1.3) |

for with , where . The -divergence between multinomial distributions and can be expressed as follows:

| (1.4) |

where is the counting measure.

The -divergence is independent of the choice of since

This indicates that is independent of the choice of , as for any . Consequently, equation (1.4) is also independent of . In general, the Csisar class of divergence is independent of the choice of the reference measure [26].

(2) -divergence:

| (1.5) |

where belongs to . For more details, refer to [2, 65]. Let us consider a generator function defined as follows:

It follows from the convexity of that This concludes that is a divergence measure due to

We also observe the property preserving the Pythagorean relation for the -divergence. When , and form a right triangle by the -divergence, then the right triangle is preserved for the triple , anf .

It’s worth noting that the -divergence is dependent on the choice of reference measure . For instance, if we choose as the reference measure, then the -divergence is given by:

Here, and . This can be rewritten as

| (1.6) |

where . Hence, the integrands of are given by the integrands of multiplied by . The choice of the reference measure gives a substantial effect for evaluating the -divergence.

We again consider a multinomial distribution MN defined in (1.3). Unfortunately, the -divergence with the counting measure would have an intractable expression. Therefore, we select in a way that the Radon-Nikodym (RN) derivative is defined as

| (1.7) |

as a reference measure. Accordingly, , and hence

which is equal to . Using this approach, a closed form expression for -divergence can be derived:

| (1.8) |

due to (1.6). In this way, the expression (1.8) has a tractable form, in which this is reduced to the standard one of -divergence when . Subsequent discussions will explore the choice of reference measure that provides the most accurate inference within statistical models, such as the generalized linear model and the model of inhomogeneous Poisson point processes.

(3) -divergence [33]:

| (1.9) |

If we define the -cross entropy as:

then the -divergence is written by the difference:

It is noteworthy that the cross -entropy is a convex-linear functional with respect to the first argument:

where ’s are positive weights with and . This property gives explicitly the empirical expression for the -entropy given data set . Consider the empirical distribution as , where is the Dirac measure at the atom . Then

If we assume that is a sequence of identically and independently distributed with , then

and hence almost surely converges to due to the strong law of large numbers. Subsequently, this will be used to define the empirical loss based on the dataset. Needless to say, the empirical expression of the cross entropy in (1.2) is the negative log likelihood. The -diagonal entropy is proportional to the Lebesgue norm with exponent as

Considering the conjugate exponent , the Hölder inequality for and states

This holds for any with the equality if and only if . This implies the -divergence satisfies the definition of ‘divergence measure’ for any . It should be noted that the Hölder inequality is employed for not the pair and but that of and , which yields the property ‘zero when identical’ as a divergence measure. Also, the -divergence approaches the KL-divergence in the limit:

This is because for all as well as the and divergence measures.

We observe a close relationship between and divergence measures. Consider a maximum problem of the -divergence: . By definition, if we write , then for all . Thus, the maximizer of is given by

If we confuse with , then the close relationship is found in

In accordance with this, the -divergence can be viewed as the -divergence interpreted in a projective geometry [27]. Similarly, consider a dual problem: . Then, the maximizer of is given by

Hence, the scale adjusted divergence is given by

| (1.10) |

Thus, we get a dualistic version of the -divergence as

| (1.11) |

We refer to as the dual -divergence. If we define the dual -entropy as

then . In effect, the -divergence and its dual are connected as follows.

1.4 The -divergence and its dual

In the evolving landscape of statistical divergence measures, a lesser-explored but highly potent member of the family is the divergence. This divergence serves as an interesting alternative to the more commonly used and divergences, with unique properties and advantages that make it particularly suited for certain classes of problems. The dual divergence offers further flexibility, allowing for nuanced analysis from different perspectives. The following section is dedicated to a deep-dive into the mathematical formulations and properties of these divergences, shedding light on their invariance characteristics, relationships to other divergences, and potential applications. Notably, we shall establish that divergence is well-defined even for negative values of the exponent , and examine its special cases which connect to the geometric and harmonic mean divergences. This comprehensive treatment aims to illuminate the role that and its dual can play in advancing both theoretical and applied aspects of statistical inference and machine learning [28].

Let us focus on the -divergence in power divergence measures. We define a power-transformed function as follows:

| (1.12) |

which we refer to as the -expression of , where . Thus, the measure having the RN-derivative belongs to since . We can write

and

These equations directly yield an observation: and are scale-invariant, but only with respect to one of the two arguments.

| (1.13) |

while

The power exponent is usually assumed to be positive. However, we extend to be a real number in this discussion, see [23] for a brief discussion.

Proposition 1.

Proof.

We introduce two generator functions defined as:

| (1.14) |

for . By definition, the divergence can be expressed as:

| (1.15) |

Due to the convexity of in for any , we have

| (1.16) |

with equality if and only if . The right-hand-side of (1.16) can be rewritten as:

The second term identically vanishes since and have both total mass one. Similarly, we observe for any real number that

| (1.17) |

which is equal or greater than and the equality holds if and only if due the convexity of . Therefore, and are both divergence measures for any real number . ∎

We will discuss with a negative power exponent in a context of statistical inferences. The -divergence (1.9) is implicitly assumed to be integrable as well as the KL-divergence, in which the integrability condition for the -divergence with is presented. Let us look into the case of a multinomial distribution Bin defined in (1.3) with the reference measure given by (1.7). An argument similar to that on the -divergence yields

as the -divergence of the log expression in (1.21), where and are cell probability vectors of dimension. The -divergence with the counting measure would also have no closed expression. Therefore, careful consideration is needed when choosing the reference measure for divergence. Let be the RN-derivative of with respect to the Lebesgue measure . Then, the -divergence (1.9) with

where . Our key objective is to identify a that ensures stable and smooth behavior under a given statistical model and dataset.

We discuss a generalization of the -divergence. Let be a convex function. Then, -divergence is defined by

| (1.18) |

where is a normalizing constant satisfying and the function satisfies

| (1.19) |

It is derived from the assumption of the convexity of that

which is equal to

| (1.20) |

up to the proportional factor due to (1.19). By the definition of normalizing constants, the integral in (1.20) vanishes. Hence, becomes a divergence measure. Specifically, for due to the normalizing constant . For example, if as in (1.14), reduces to the -divergence. There are various examples of other than (1.14), for example,

which is related to the -entropy discussed in a physical context [52, 78]. We do not go further into this topic as it is beyond the scope of this paper.

We investigate a notable property of the dual divergence. There exists a strong relationship between the generalized mean of probability measures and the minimization of the average dual divergence. Subsequently, we will explore its applications in active learning.

Proposition 2.

Consider an average of dual -divergence measures as

Let . Then, the Radon-Nikodym (RN) derivative of is uniquely determined as follows:

where and is the normalizing constant.

Proof.

If we write by , then

which is equal to

This expression simplifies to . Therefore, and the equality holds if and only if This is due to the property of as a divergence measure. ∎

The optimal distribution can be viewed as the consensus one integrating committee members’ distributions ’s into the average of divergence measures with importance weights . We adopt a ”query by committee” approach and examine the robustness against variations in committee distributions. Proposition 2 leads to an average version of the Pythagorean relations:

We refer to as the power mean of the set . In general, a generalized mean is defined as

where is a one-to-one function on . We confirm that, if , then that is the arithmetic mean, or the mixture distribution of ’s with mixture proportions ’s. If , then

which is the harmonic mean of ’s with weights ’s. As goes to , the dual -divergence converges to the dual KL-divergence defined by . The minimizer is given by

which is the harmonic mean of ’s with weights ’s. We will discuss divergence measures using the harmonic and geometric means of ratios for RN-derivatives in a later section.

We often utilize the logarithmic expression for the divergence, given by

| (1.21) |

We find a remarkable property such that

for all and , noting the log expression is written by

| (1.22) |

by the use of -expression defined in (1.12). This implies that measures not a departure between ad but an angle between them. When , this is the negative log cosine similarity for and . In effect, the cosine for and are in is defined by

This is closely related to in a discrete space when . which is closely related to on discrete space when . We will discuss an extension of to be defined on a space of all signed measures that comprehensively gives an asymmetry in measuring the angle.

In summary, the exploration of power divergence measures in this section has illuminated their potential as versatile tools for quantifying the divergence between probability distributions. From the foundational Kullback-Leibler divergence to the more specialized , , and divergence measures, we have seen that each type has its own strengths and limitations, making them suited for particular classes of problems. We have also underscored the mathematical properties that make these divergences unique, such as invariance under different conditions and applicability in empirical settings. As the field of statistics and machine learning continues to evolve, it’s evident that these power divergence measures will find even broader applications, providing rigorous ways to compare models, make predictions, and draw inferences from increasingly complex data.

1.5 GM and HM divergence measures

We discuss a situation where a random variable is discrete, taking values in a finite set of non-negative integers denoted by . Let be the space of all probability measures on . In the realm of statistical divergence measures, arithmetic, geometric, and harmonic means for a probability measure of receives less attention despite their mathematical elegance and potential applications. For this, consider the RN-derivative of a probability measure relative to that equals a ratio of probability mass functions (pmfs) and induced by and in . Then, there is well-known inequality between the arithmetic and geometric means:

| (1.23) |

and that between the arithmetic and harmonic means:

| (1.24) |

where is a weight function that is arbitrarily a fixed pmf on . Equality in (1.23) or (1.24) holds if and only if . This well-known inequality relationships among these means serve as the mathematical bedrock for defining new divergence measures. Specifically, the Geometric Mean (GM) and Harmonic Mean (HM) divergences are inspired by inequalities involving these means and ratios of probabilities as follows.

First, we define the GM-divergence as

transforming the expression (1.23), where , and are the pmfs with respect to , and , respectively. Note that is a divergence measure on as defined in Definition 1. We restrict to be a finite discrete set for this discussion; however, our results can be generalized. In effect, the GM-divergence has a general form:

| (1.25) |

For comparison, we have a look at the -divergence

| (1.26) |

by selecting a probability measure as a reference measure.

Proof.

We write the GM-divergence by the difference of the cross and diagonal entropy measures: , where

The GM-divergence has a log expression:

We note that is equal to taking the limit of to . Here we discuss the case of the Poisson distribution family. We choose a Poisson distribution Po as the reference measure. Thus, the GM-divergence of the log-form is given by

Second, we introduce the HM-divergence. Suppose in the inequality (1.24). Then, (1.24) is written as

| (1.28) |

We define the harmonic-mean (HM ) divergence arranging the inequality (1.28) as

Here

is the cross entropy, where and is the pmfs of and , respectively. The (diagonal) entropy is given by the harmonic mean of ’s:

Note that qualifies as a divergence measure on , as defined in Definition 1, due to the inequality (1.28). When , is equal to in (1.9) with the counting measure . The log form is given by

The GM-divergence provides an insightful lens through which we can examine statistical similarity or dissimilarity by leveraging the multiplicative nature of probabilities. The HM-divergence, on the other hand, focuses on rates and ratios, thus providing a complementary perspective to the GM-divergence, particularly useful in scenarios where rate-based analysis is pivotal. By extending the divergence measures to include GM and HM divergence, we gain a nuanced toolkit for quantifying divergence, each with unique advantages and applications. For instance, the GM-divergence could be particularly useful in applications where multiplicative effects are prominent, such as in network science or econometrics. Similarly, the HM-divergence might be beneficial in settings like biostatistics or communications, where rate and proportion are of prime importance. This framework, rooted in the relationships among arithmetic, geometric, and harmonic means, not only expands the class of divergence measures but also elevates our understanding of how different mathematical properties can be tailored to suit the needs of diverse statistical challenges.”

1.6 Concluding remarks

In summary, this chapter has laid the groundwork for understanding the class of power divergence measures in a probabilistic framework. We study that divergence measures quantify the difference between two probability distributions and have applications in statistics and machine learning. It begins with the well-known Kullback-Leibler (KL) divergence, highlighting its advantages and limitations. To address limitations of KL-divergence, three types of power divergence measures are introduced.

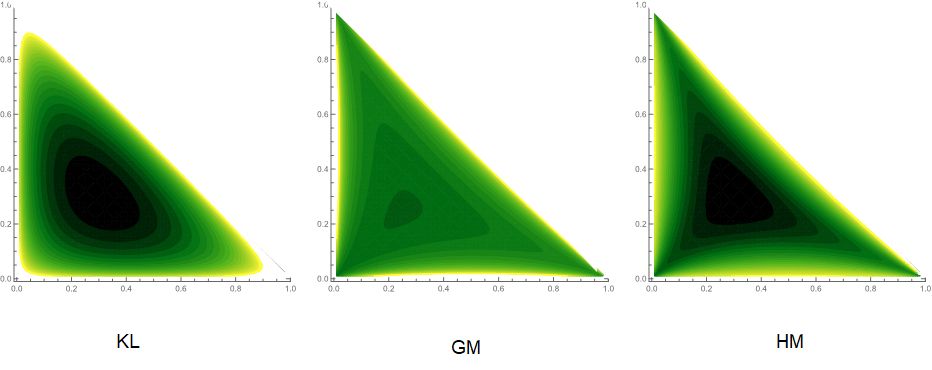

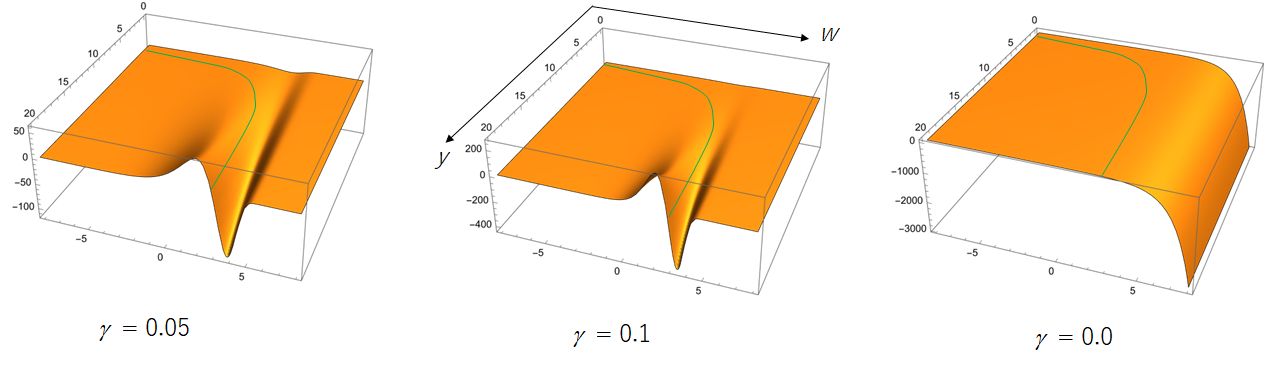

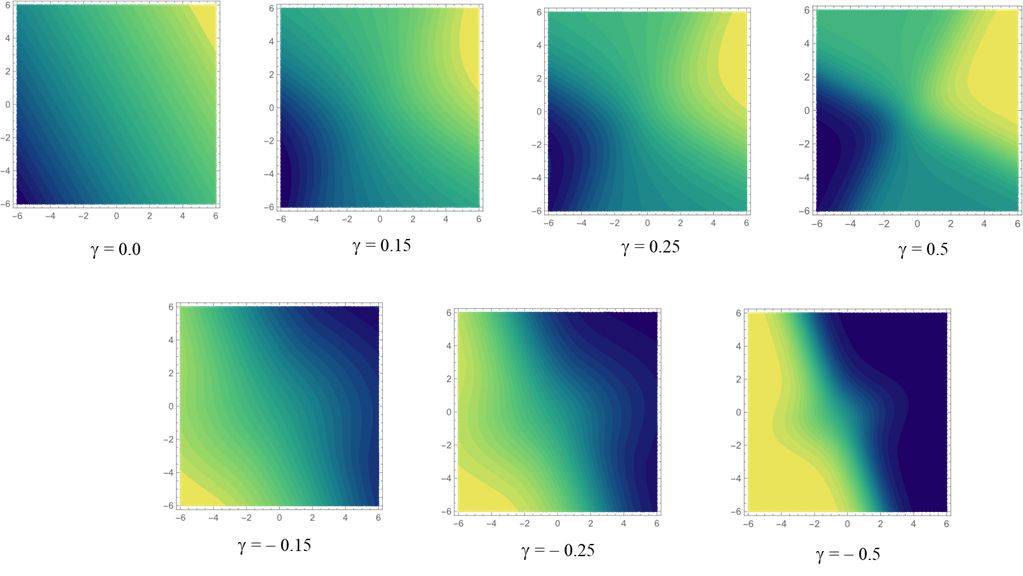

Let us look at the , , and -divergence measures for a Poisson distribution model. Let denote a Poisson distribution with the RN-derivative

| (1.29) |

with respect to the reference measure having . Seven examples of power divergence between Poisson distributions and are listed in Table 1.1. Note that this choice of the reference measure enable us to having such an tractable form of the and divergences as well as its variants. Here we use a basic formula to obtain these divergence measures:

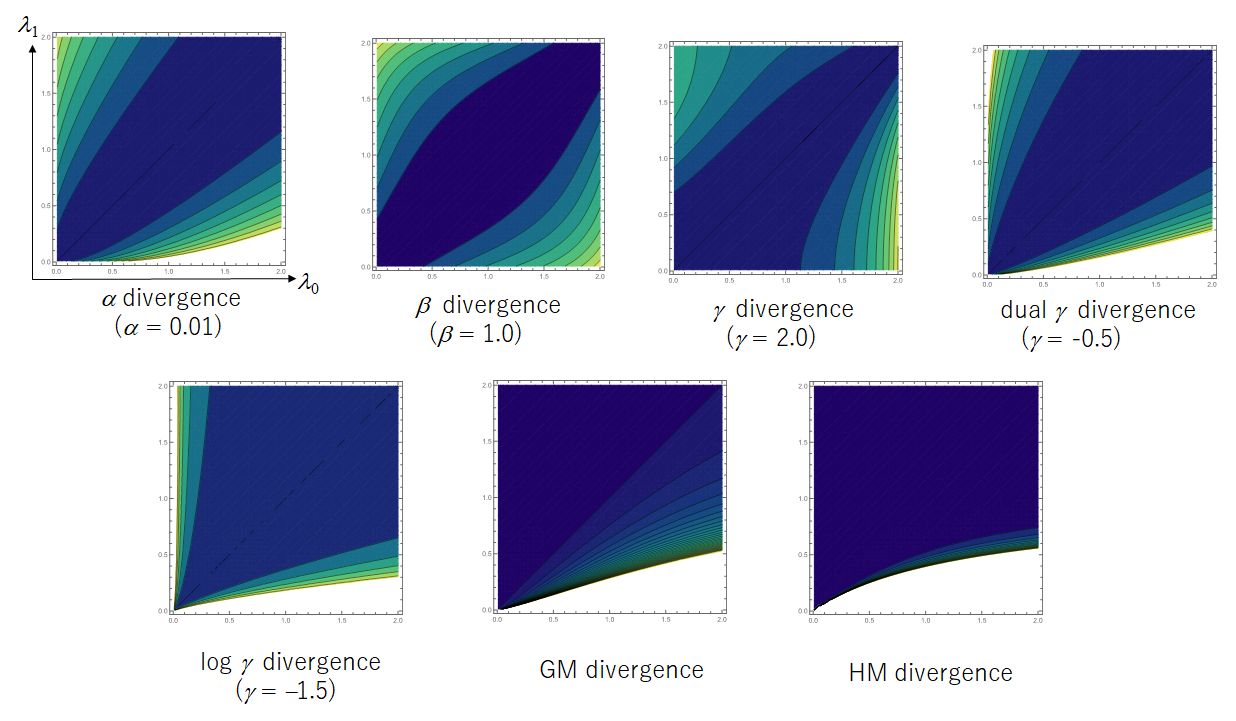

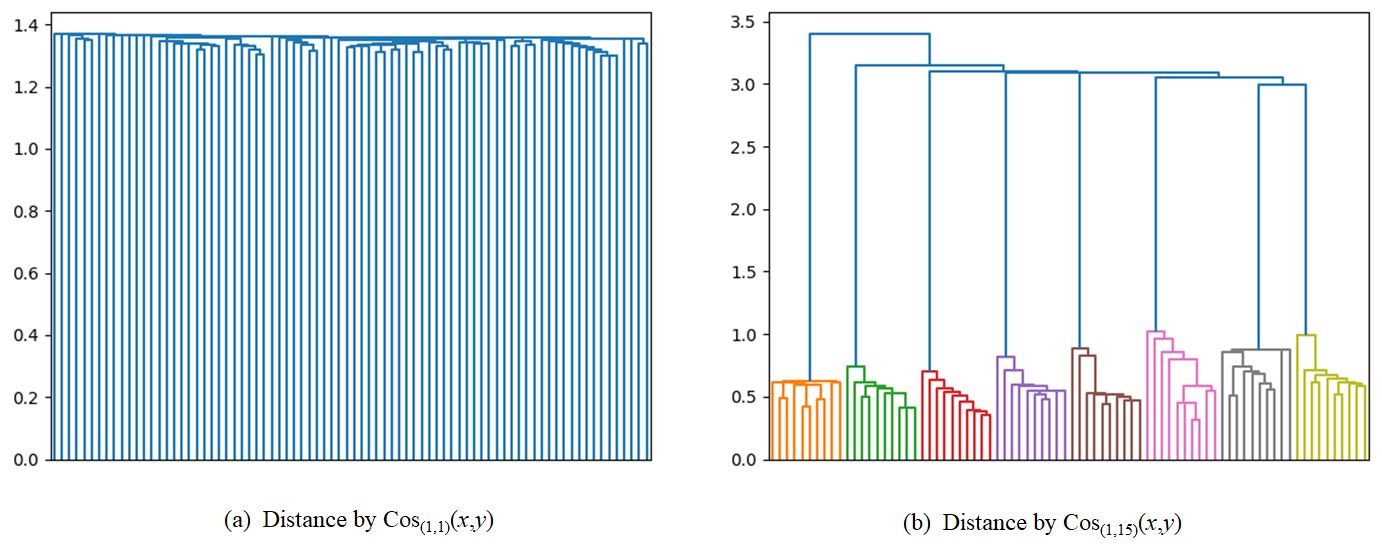

for an exponent of . The contour sets of seven divergences between Poisson distributions are plotted in Figure 1.1. All the divergences attain the unique minimum in the diagonal line . The contour set of GM and HM divergences are flat compared to those of other divergences.

| -divergence | |

|---|---|

| -divergence | |

| -divergence | |

| dual -divergence | |

| log -divergence | |

| GM-divergence | |

| HM-divergence |

The -divergence is intrinsic to assess the divergence between two probability measures. One of the most important properties is invariance with the choice of the reference measure that expresses the Radon-Nicodym derivatives for the two probability measure . The invariance provides direct understandings for the intrinsic properties beyond properties for the probability density or mass functions. A serious drawback is pointed out that an empirical counterpart is not available for a given data set in most practical situations. This makes difficult for applications for statistical inference for estimation and prediction. In effect, the statistical applications are limited to the curved exponential family that is modeled in an exponential family. See [18] for the statistical curvature characterizing the second order efficiency.

The -divergence and the -divergence are not invariant with the choice of the reference measure. We have to determine the reference measure from the point of the application to statistics and machine learning. Subsequently, we discuss the appropriate selection of the reference measure in both cases of and divergences. Both divergence measures are efficient for applications in areas of statistics and machine learning since the empirical loss function for a parametric model distribution is applicable for any dataset. For example, the -divergence is utilized as a cost function to measure the difference between a data matrix and the factorized matrix in the nonnegative matrix factorization. Such applications the minimum -divergence method is more robust than the maximum likelihood method which can be viewed as the minimum KL-divergence method. In practice, the is not scale invariant in the space of all finite measures that involves that of all the probability measures. We will study that the lack of the scale invariance does not allow simple estimating functions even under a normal distribution model.

Alternatively, the -divergence is scale-invariant with respect to the second argument. The -divergence provides a simple estimating function for the minimum -estimator. This property enables to proposing an efficient learning algorithm for solving the estimating equation. For example, the -divergence is used for the clustering analysis. The cluster centers are determined by local minimums of the empirical loss function defined by the -divergence, see [75, 74] for the learning architecture. A fixed-point type of algorithm is proposed to conduct a fast detection for the local minimums. Such practical properties in applications will be explored in the following section. We consider the dual -divergence that is invariant for the first argument. We will explore the applicability for defining the consensus distribution in a context of active learning. It is confirmed that the -divergence is well defined even for negative value of the exponent . The -divergences with and are reduced to the GM and HM divergences, respectively. In a subsequent discussion, special attentions to the GM and HM divergences are explored for various objectives in applications, see [26] for the application to dynamic treatment regimes in the medical science.

Chapter 2 Minimum divergence for regression model

This chapter explores statistical estimation within regression models. We introduce a comprehensive class of estimators known as Minimum Divergence Estimators (MDEs), along with their empirical loss functions under a parametric framework. Standard properties such as unbiasedness, consistency, and asymptotic normality of these estimators are thoroughly examined. Additionally, the chapter addresses the issue of model misspecification, which can result in biases, inaccurate inferences, and diminished statistical power, and highlights the vulnerability of conventional methods to such misspecifications. Our primary goal is to identify estimators that remain robust against potential biases arising from model misspecification. We place particular emphasis on the -divergence, which underpins the -estimator known for its efficiency and robustness.

2.1 Introduction

We study statistical estimation in a regression model including a generalized linear model. The maximum likelihood (ML) method is widely employed and developed for the estimation problem. This estimation method has been standard on the basis of the solid evidence in which the ML-estimator is asymptotically consistent and efficient when the underlying distribution is correctly specified a regression model. See [16, 62, 7, 39]. The power of parametric inference in regression models is substantial, offering several advantages and capabilities that are essential for effective statistical analysis and decision-making. The ML method has been a cornerstone of parametric inference. This principle yields estimators that are asymptotically unbiased, consistent, and efficient, given that the model is correctly specified. Specifically, generalized linear models (GLMs) extend linear models to accommodate response variables that have error distribution models other than a normal distribution, enabling the use of ML estimation across binomial, Poisson, and other exponential family distributions.

However, we are frequently concerned with model misspecification, which occurs when the statistical model does not accurately represent the data-generating process. This could be due to the omission of important variables, the inclusion of irrelevant variables, incorrect functional forms, and wrong error structures. Such misspecification can lead to biases, inaccurate inferences, and reduced statistical power because of misspecification for the parametric model. See [95] for the critical issue of model misspecification. A misspecified model is more sensitive to outliers, often resulting in more biased estimates. Outliers can also obscure true relationship between variables, making it difficult to detect model misspecification by overshadowing the true relationships between variables. Unfortunately, the performance of the ML-estimator is easily degraded in such difficult situations because of the excessive sensitivities to model misspecification. Such limitations in the face of model misspecification and complex data structures have prompted the development of a broad spectrum of alternative methodologies. In this way, we take the MDE approach other than the maximum likelihood.

We discuss a class of estimating methods through minimization of divergence measure [2, 65, 69, 67, 21, 66, 22, 55, 53, 54, 26]. These are known as minimum divergence estimators (MDEs). The empirical loss functions for a given dataset are discussed in a unified perspective under a parametric model. Thus, we derive a broad class of estimation methods via MDEs. Our primary objective is to find estimators that are robust against potential biases in the presence of model misspecification. MDEs can be applied to a case where the outcome is a continuous variable in a straightforward manner, in which the reference measure to define the divergence is fixed by the Lebesgue measure. Alternatively, more consideration is needed regarding the choice of a reference measure in a discrete variable case for the outcome. In particular, and divergence measures are strongly associated with a specific dependence for the choice of a reference measure. We explore effective choices for the reference measure to ensure that the corresponding divergences are tractable and can be expressed feasibly. We focus on the -divergence as a robust MDE through an effective choice of the reference measure.

This chapter is organized as follows. Section 2.4 gives an overview of M-estimation in a framework of generalized linear model. In Section 2.3 the -divergence is introduced in a regression model and the -loss function is discussed. In Section 2.4 we focus on the -estimator in a normal linear regression model. A simple numerical example demonstrates a robust property of the -estimator compared to the ML-estimator. Section 2.5 discusses a logistic model for a binary regression. The -loss function is shown to have a robust property where the Euclidean distance of the estimating function to the decision boundary is uniformly bounded when is in a specific range. In Section 2.6 extends the result in the binary case to a multiclass case. Section 2.7 considers a Poisson regression model focusing on a log-linear model. The -divergence is given by a specific choice of the reference measure. The robustness for the -estimator is confirmed for any in the specific range. A simple numerical experiment is conducted. Finally, concluding remarks for geometric understandings are given in 2.8.

2.2 M-estimators in a generalized linear model

Let us establish a probabilistic framework for a -dimensional covariate variable in a subset of , and an outcome with a value of a subset of in a regression model paradigm. The major objective is to estimate the regression function

based on a given dataset. In a paradigm of prediction, is often called a feature vector, where to build a predictor defined by a function from to is one of the most important tasks. Let be a space of conditional probability measures conditioned on . For any event in the conditional probability given is written by

where is the RN-derivative of given with a reference measure . A statistical model embedded in is written as

| (2.1) |

where is a parameter of a parameter space . Then, the Kullback-Leibler (KL) divergence on is given by

with the cross entropy,

Note that the KL-divergence is independent of the choice of reference measure as discussed in Chapter 1. Let be a random sample drawn from a distribution of . The goal is to estimate the parameter in in (2.1). Then, the negative log-likelihood function is defined by

where is the RN-derivative of with respect to . Note that, for any measure equivalent to the negative log-likelihood functions up to a constant. The expectation of under the model distribution of is equal to the cross entropy:

| (2.2) |

where and is the true value of the parameter and is the conditional expectation under the model distribution ’s. Hence,

which can be viewed as an empirical analogue of the Pythagorean equation. Due to the property of the KL-divergence as a divergence measure,

By definition, the ML-estimator is the minimizer of in ; while the true value is the minimizer of in . The continuous mapping theorem reveals the consistency of the ML-estimator for the true parameter, see [61, 94] The estimating function is defined by the gradient of the negative log-likelihood function

Hence, the ML-estimator is a solution of the estimating equation, under regularity conditions. We note that the solution of the expected estimating function under the distribution with the true value is itself, that is,

This implies that the continuous mapping theorem again concludes the consistency of the ML-estimator for the true value .

The framework of a generalized linear model (GLM) is suitable for a wide range of data types other than the ordinary linear regression model, see [62]. While the ordinary linear regression usually assumes that the response variable is normally distributed, GLMs allow for response variables that have different distributions, such as the Bernoulli, categorical, Poisson, negative binomial distributions and exponential families in a unified manner. In this way, GLMs provide excellent applicability for a wide range of data types, including count data, binary data, and other types of skewed or non-continuous data. A GLM consists of three main components:

-

1

Random Component: Specifies the probability distribution of the response variable . This is typically a member of the exponential family of distributions (e.g., normal, exponential, binomial, Poisson, etc.).

-

2

Systematic Component: Represents the linear combination of the predictor variables, similar to ordinary linear regression. It is usually expressed as .

-

3

Link Function: Provides the relationship between the random and systematic components. The expected value of given , or the regression function is one-to-one with the linear combination of predictors through the link function .

In the framework of the GLM, an exponential dispersion model is employed as

with respect to a reference measure , where and is called the canonical and the dispersion parameters, respectively, see [51]. Here we assume that can be defined in . This allows for a linear modeling with a flexible form of the link function . Specifically, if is an identity function, then is referred to as the canonical link function. This formulation involves most of practical models in statistics such as the logistic and the log linear models. In practice, the dispersion parameter is usually estimated separately from , and hence, we assume is known to be for simplicity. This leads to a generalized linear model:

| (2.3) |

with as the conditional RN-derivative of given . The regression function is given by

due to the Bartlett identity.

Let us consider M-estimators for a parameter in the linear model (2.3). Originally, the M-estimator is introduced to cover robust estimators of a location parameter, see [47] for breakthrough ideas for robust statistics, and [83] for robust regression. We define an M-type loss function for the GLM defined in (2.3):

| (2.4) |

for a given dataset and we call

the M-estimator. Here the generator function is assumed to be convex with respect to . If , then the M-estimator is nothing but the ML estimator. Thus, the estimating function is given by

| (2.5) |

where . If we confine the generator function to a form of , then this formulation reduces to the original form of M-estimation [48, 93]. In general, the estimating function is characterized by . Hereafter we assume that

where is the expectation under . This assumption leads to consistency for the estimator . We note that the relationship between the loss function and the estimating function is not one-to-one. Indeed, there exist many choices of the estimating function for obtaining the estimator other than (2.5). We have a geometric discussion for an unbiased estimating function.

We investigate the behavior of the score function of the -estimator. By definition, the -estimator is the solution such that the sample mean of the score function is equated to zero. We write a linear predictor as , where . We call

| (2.6) |

the prediction boundary. Then, the following formula is well-known in the Euclidean geometry.

Proposition 4.

Let and be the Euclidean distance from to the prediction boundary defined in (2.6). Then,

| (2.7) |

Proof.

Let be the projection of onto . Then, , where denotes the Euclidean norm. There exists a non zero scalar such that noting that a normal vector to the hyperplane is given by . Hence, and

| (2.8) |

which concludes (2.7) since due to .

∎

Thus, a covariate vector of is decomposed into the orthogonal and horizontal components as , where

| (2.9) |

We note that and . Due to the orthogonal decomposition (2.9) of , the estimating function is also decomposed into

where

Here we use a property: . Thus, in , and are strongly connected each other; in , and are less connected.

The estimating function (2.5) is decomposed into a sum of the orthogonal and horizontal components,

where

We consider a specific type of contamination in the covariate space .

Proposition 5.

Let and , where with arbitrarily a fixed scalar depending on . Then, , and

Proof.

By definition, and due to . These imply the conclusion. ∎

We observe that and have both no influence with the contamination in . Alternatively, has a substantial influence by scalar multiplication. Hence, we can change the definition of the horizontal component as

choosing as . Then, it has a mild behavior such that

In this way, the estimating function (2.5) of M-estimator can be written as

| (2.10) |

Proposition 6.

Assume there exists a constant such that

Then, the estimating function in (2.10) of the M-estimator is bounded with respect to any dataset .

Proof.

It follows from the assumption such that there exists a constant such that since

Therefore, we observe

which is equal to .

∎

On the other hand, suppose another type of contamination , where with a fixed scalar depending on . Then, and have both strong influences; has no influence.

The ML-estimator is a standard estimator that is defined by maximization of the likelihood for a given data set . In effect, the negative log-likelihood function is defined by

The likelihood estimating function is given by

| (2.11) |

Here the regression parameter is of our main interests. We note that the ML-estimator can be obtained without the nuisance parameter even if it is unknown. In effect, there are some methods for estimating using the deviance and the Pearson divergence in a case where is unknown. The expected value of the negative log-likelihood conditional on is given by

up to a constant since the conditional expectation is given by due to a basic property of the exponential dispersion model (2.3).

2.3 The -loss function and its variants

Let us discuss the the -divergence in the framework of regression model based on the discussion in the general distribution setting of the preceding section. The -divergence is given by

with the cross entropy,

The loss function derived from the -divergence is

where is the -expression of , that is

| (2.12) |

We define the -estimator for the parameter by . By definition, the -estimator reduces to the ML-estimator when is taken a limit to .

Remark 1.

Let us discuss a behavior of the -loss function when becomes larger in which the outcome is finite-discrete in . For simplicity, we define the loss function as

Let and . Then, the -expression satisfies

Similarly,

Hence, is equivalent to the 0-1 loss function ; while

| (2.13) |

This is the number of ’s equal to the worst predictor . If we focus on a case of , then is nothing but the 0-1 loss function since . In principle, the minimization of the 0-1 loss is hard due to the non-differentiability. The -loss function smoothly connects the log-loss and the 0-1 loss without the computational challenge. See [31, 71] for detailed discussion for the 0-1 loss optimization.

In a subsequent discussion, the -expression will play an important role on clarifying the statistical properties of the -estimator. In fact, the -expression function is a counterpart of the log model function: in . Here we have a note as one of the most basic properties that

| (2.14) |

Equation (2.14) yields

and, hence,

| (2.15) |

This implies

Thus, we observe due to the discussion similar to that for the ML-estimator and the KL-divergence that consistent for . The -estimating function is defined by

Then, we have a basic property that the -estimating function should satisfy in general.

Proposition 7.

The true value of the parameter is the solution of the expected -estimating equation under the expectation of the true distribution. That is, if ,

| (2.16) |

where is the conditional expectation under the true distribution ’s given .

Proof.

By definition,

Here we note

up to a proportionality constant. Hence,

If , then this vanishes identically due to the total mass one of . ∎

The -estimator is a solution of the estimating equation; while true value is the solution of the expected estimating equation under the true distribution with . Similarly, this shows the consistency of the -estimator for the true value of the parameter. The -estimating function is said to be unbiased in the sense of (2.16). Such a unbiased property leads to the consistency of the estimator. However, if the underlying distribution is misspecified, then we have to evaluate the expectation in (2.16) under the misspecified distribution other than the true distribution. Thus, the unbiased property is generally broken down, and the Euclidean norm of the estimating function may be divergent at the worst case. We will investigate such behaviors in misspecified situations later.

Now, we consider the MDEs via the GM and HM divergences introduced in Chapter 1. First, consider the loss function defined by the GM-divergence:

where is the reference probability measure in . We define as , which we refer to as the GM-estimator. The -estimating equation is given by

| (2.17) |

where . Secondly, consider the loss function defined by the HM-divergence:

The -model can be viewed as an inverse-weighted probability model on the account of

We define the HM estimator by . The -estimating equation is given by

We note from the discussion in Section 2 that and are equal to and with ; and are equal to and with . We will discuss the dependence on the reference measure , in which we like to elucidate which choice of gives a reasonable performance in the presence of possible model misspecification.

We focus on the GLM framework in which we look into the formula on the -divergence. Then, the choice of the reference measure should be paid attentions to the -divergence. The original reference measure is changed to such that Hence, the model is given by withr respect to . We note that is a probability measure since the RN-derivative is equal to defined in (2.3) when is a zero vector. This makes the model more mathematically tractable and allows us to use standard statistical methods for estimation and inference. Then, the -expression for is given by

| (2.18) |

This property of reflexiveness is convenient the analysis based on the -divergence. First of all, the -loss function is given by

| (2.19) |

due to the -expression (2.18). The -estimating function is given by

| (2.20) | ||||

We note that the change of the reference measure from to is the key for the minimum -divergence estimation. In fact, the -loss function would not have a closed form as (2.19) unless the reference measure is changed. Here, we remark that the -loss function is a specific example of M-type loss function in (2.4) with a relationship of

The expected loss function is given by

where denotes the expectation under the true distribution . This function attains a global minimum at as discussed around (2.15) in the general framework. Similarly, the GM-loss function is written by

where . The HM loss function is written by

since the -expression becomes when . In accordance with these, all the formulas for the loss functions defined in the general model (2.1) are reasonably transported in GLM. Subsequently, we go on the specific model of GLM to discuss deeper properties.

We have discussed the generalization of the -divergence in the preceding section. The generalized divergence defined in (1.18) in Chapter 2 yields the loss function by

where is a normalizing factor satisfying

| (2.21) |

The similar discussion as in the above conducts

The estimating function is written as

due to assumption (1.19). This implies

which vanishes since all ’s have total mass one as in (2.21). Consequently, we can derive the MD estimator based on the generalized divergence with the -divergence as the standard. In Section 3, we will consider another candidate of for estimation under a Poisson point process model.

2.4 Normal linear regression

Linear regression, one of the most familiar and most widely used statistical techniques, dates back to the 19-th century in the mathematical formulation by Carolus F. Gauss [96]. It originally emerged from the eminent observation of Francis Galton on regression towards the mean at the begging of the 20-th century. Thus, the ordinary least squares method is evolved with the advancement of statistical theory and computational methods. As the application of linear regression expanded, statisticians recognized its sensitivity to outliers. Outliers can significantly influence the regression model’s estimates, leading to misleading results. To address these limitations, robust regression methods were developed. These methods aim to provide estimates that are less affected by outliers or violations of model assumptions like normality of errors or homoscedasticity.

Let be an outcome variable in and be a covariate vector in a subset of . Assume the conditional probability density function (pdf) of given as

| (2.22) |

Thus, the normal linear regression model (2.22) is one of the simplest examples of GLM with an identity link function where is a dispersion parameter. Indeed, is a crucial parameter for assessing model fit. We will discuss the estimation for the parameter later. The KL-divergence between normal distributions is given by

For a given dataset , the negative log-likelihood function is as follows:

The estimating function for is

where is assumed to be known. In fact, it is estimated in a practical situation where is unknown. Equating the estimating function to zero gives the likelihood equations in which the ML-estimator is nothing but the least square estimator. This is a well-known element in statistics with a wide range of applications, where several standard tools for assessing model fit and diagnostics have been established.

On the other hand, robust regression robust methods aim to provide estimates that are less affected by outliers or violations of model assumptions like normality of errors. The key is the introduction of M-estimators, which generalize maximum likelihood estimators. They work by minimizing a sum of a loss function applied to the residuals. The choice of the loss function (such as Huber’s winsorized loss or Tukey’s biweight loss [3]) determines the robustness and efficiency of the estimator. The M-estimator, , of a parameter is obtained by minimizing an objective function, typically defined by a sum of ’s applied to the adjusted residuals:

| (2.23) |

The estimating equation is given by

where .

Here are typical examples:

(1). Quadratic loss: , which is equivalent to the log-likelihood function

(2). Huber’s loss:

(3). Tukey’s loss:

where and are hyper parameters.

We return the discussion for the -estimator. The -divergence is given by

where . The -expression of the normal linear model is given by

where is a normal density function with mean and variance . Hence, the -loss function is given by

which is written as

| (2.24) |

up to a scalar multiplication. Consequently, the -loss function is a specific example of -loss function in (2.23) viewing as . We note that the -estimator is one of M-estimators. The -estimating function is defined as where the score function is defined by

| (2.25) |

The generator function is given as as an M-estimator.

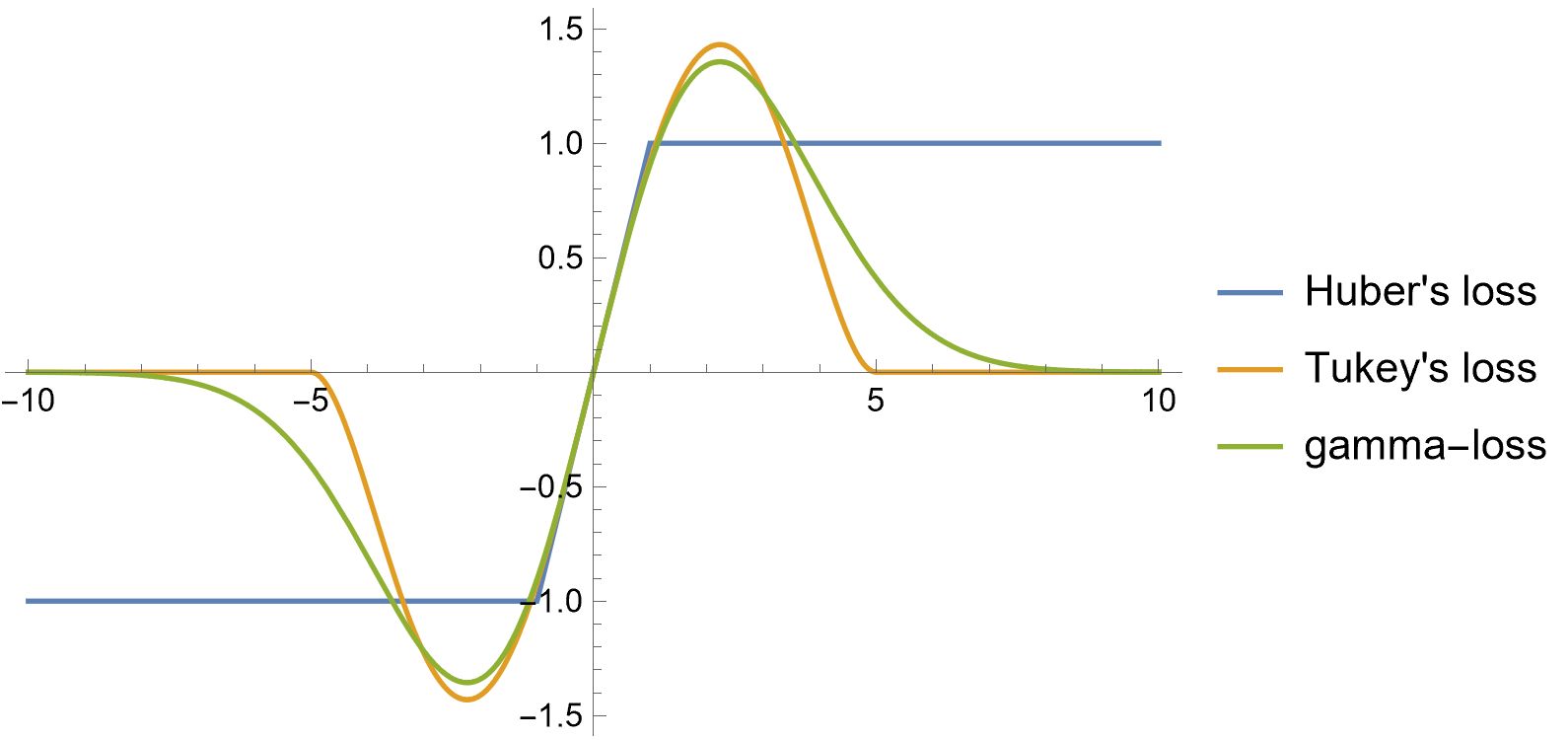

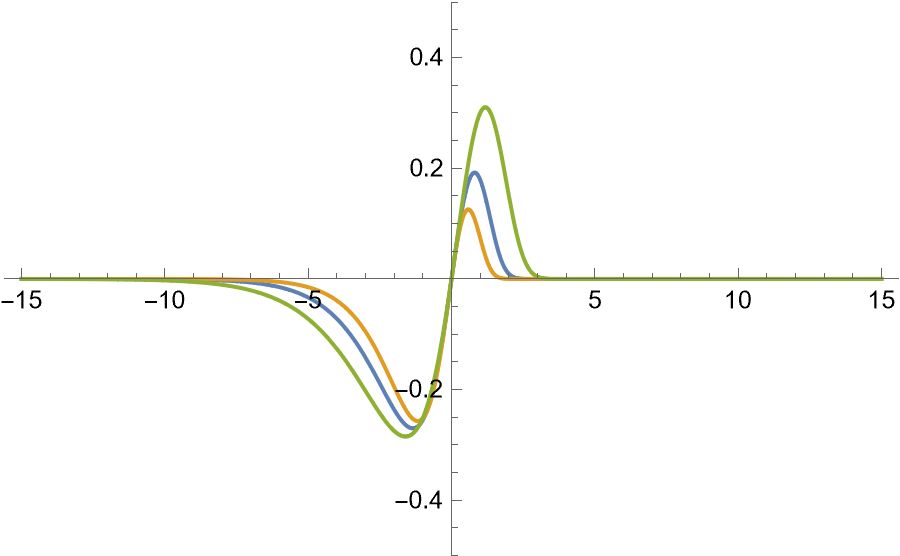

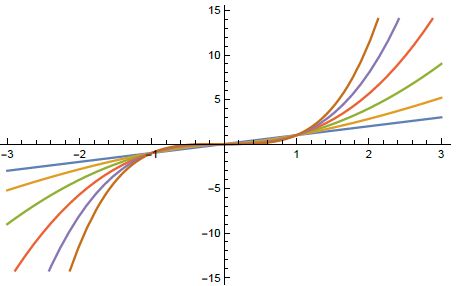

Fig 2.1 displays the plots of the generator functions:

(1). -loss, ,

(2). Huber’s loss,

(3). Tukey’s loss, .

It is observed the generator functions of the -loss and Tukey’s loss are both redescending. This means the influence of each data point on the estimation decreases to zero beyond a certain threshold, effectively eliminating the impact of extreme outliers.

Unlike the quadratic loss and Huber’s loss functions, such redescending loss functions are non-convex. This characteristic makes it more robust but also introduces challenges in optimization, as it can lead to multiple local minimums.

The variance parameter in the normal regression model is referred to as a dispersion parameter in GLM. In a situation where is unknown the likelihood method is similar to the known case. The ML-estimator for is derived by

plugging in . Alternatively, the -estimator for is is derived by the solution of the joint estimating equation combining

with the estimating equation for . Similarly, we can find that the boundedness property for the -score function for holds.

Let us apply the geometric discussion associated with the decision boundary in (2.6) to the normal regression model. We write the estimating function of M-estimator in (2.23) as

for a given dataset . Due to the orthogonal decomposition of , the estimating function is also decomposed into a sum of the orthogonal and horizontal components, , where

We note that this decomposition is the same as that for the GLM in Section . We consider a specific type of contamination in the covariate space such that , where with a fixed scalar depending on . As in the discussion for the general setting of the GLM, and have both strong influences; has no influence. Let us investigate a preferable property for the -estimator applying the decomposition formula above.

Proposition 8.

Let be the -score function defined in (2.25). Then,

| (2.26) |

for any fixed of and any , where is the Euclidean distance.

Proof.

It is written that

where . Therefore,

which is bounded by

This is simplified as

Therefore, (2.26) is concluded for the fixed . ∎

It follows from Proposition 8 that all the estimating scores of the -estimator appropriately lies in a tubular neighborhood

| (2.27) |

surrounding . As a result, the distance from the estimating function to the boundary is bounded, that is,

However, in the limit case of or the ML-estimator, this boundedness property for covariate outlying is broken down. Tukey’s biweight loss estimating function satisfies the boundedness; Huber’s loss estimating function does not satisfy that.

We have a brief study for numerical experiments. Assume that covariate vectors ’s are generated from a bivariate normal distribution , where denotes a 3-dimensional identity matrix. This simulation was designed based on a scenario about the conditional distribution of the response variables ’s as follows.

- Specified model

-

- Misspecified model

-

Here parameters were set as , and with ; with .

We compared the estimates the ML-estimator and the -estimator with , where the simulation was conducted by 300 replications. In the the case of specified model, the ML-estimator was slightly superior to the -estimator in a point of the root means square estimate (rmse), however the superiority is almost negligible. Next, we suppose a mixture distribution of two normal regression modes in which one was the same model as the above with the mixing probability ; the other was still a normal regression model but the the minus slope vector with the mixing probability . Under such a misspecified setting, -estimator was crucially superior to the ML-estimator, where the rmse of the ML-estimator is more than double that of the -estimator. Thus, the ML-estimator is sensitive to the presence of such a heterogeneous subgroup; the -estimator is robust. Proposition 8 suggests that the effect of the subgroup is substantially suppressed in the estimating function of the -estimator. See Table 2.1 and Figure 2.2 for details.

(a). The case of specified model

| Method | estimate | rmse |

|---|---|---|

| ML-estimate | ||

| -estimate |

(b). The case of misspecified model

| Method | estimate | rmse |

|---|---|---|

| ML-estimate | ||

| -estimate |

2.5 Binary logistic regression

We consider a binary outcome with a value in and a covariate in a subset of . The probability distribution is characterized by a probability mass function (pmf) or the RN-derivative with respect to a counting measure :

which is referred to as the Bernoulli distribution , where is the probability of . A binary regression model is defined by a link function of the systematic component into the random component: so that the conditional pmf given with a linear model is given by

| (2.28) |

The KL-divergence between Bernoulli distributions is given by

For a given dataset , the negative log-likelihood function is given by

and the likelihood equation is written by

| (2.29) |

On the other hand, the -divergence is given by

where is the counting measure on . Note that this depends on the choice of as the reference measure on . The -expression of the logistic model (2.28) is given by

Hence, the -loss function is written by

| (2.30) |

and the -estimating function is written as

where

| (2.31) |

see [49] for the discussion for robust mislabel. See [24, 69, 90, 92, 91, 55] for other type of MDE approaches than the estimation.

The -divergence on the space of Bernoulli distributions is well defined for all real number . Let us fix as , and thus the GM-divergence between Bernoulli distributions is given by

where the reference measure is chosen by . Hence, the GM-loss function is given by

The GM-loss function with the reference measure is equal to the exponential loss function for AdaBoost algorithm discussed for an ensemble learning [30]. The integrated discrimination improvement index via odds [40] is based on the GM-loss function to assess prediction performance. We will give a further discussion in a subsequent chapter. The GM-estimating function is written by

due to for . Therefore, this estimating function is unbiased for any , that is, the expected estimating function conditional on under the logistic model (2.28) is equal to a zero vector.

We discuss which is effective for practical problems in logistic regression applications. In particular, we focus on a problem of imbalanced samples that is an important issue in the binary regression. An imbalanced dataset is one where the distribution of samples across these two classes is not equal. For example, in a medical diagnosis dataset, the number of patients with a rare disease (class 1) may be significantly lower than those without it (class 0). In this way, it is characterized as

There are difficult issues for the model bias, the poor generalization and the inaccurate performance metrics for the prediction. Imbalanced samples can lead to biased or inconsistent estimators, affecting hypothesis tests and confidence intervals. For these problem resampling techniques have been exploited by oversampling the minority class or undersampling the majority class can balance the dataset. Also, the cost-sensitive Learning introduces a cost matrix to penalize misclassification of the minority class more heavily. The asymmetric logistic regression is proposed introducing a new parameter to account for data complexity [55]. They observe that this parameter controls the influence from imbalanced sampling. Here we tackle with this problem by the GM-estimator choosing an appropriate reference distribution in the GM-loss function. We select as the reference measure, where is the proportion of the negative sample, namely . Then, the resultant loss function is given by

| (2.32) |

We refer this to as the inverse-weighted GM-loss function since the weight

Hence, the estimating function is given by

Equating the estimating function to zero gives the equality between two sums of positive and negative samples:

Alternatively, the likelihood estimating equation is written as

Both of estimating equations are unbiased, however the weightings are contrast each other.

We conduct a brief study for numerical experiments. Assume that covariate vectors are generated from a mixture of bivariate normal distributions as

where denotes a 2-dimensional identity matrix. Here, we set as , and the mixture ratio will be taken by some fixed values. The outcome variables are generated from Bernoulli distributions as , where

where we set as and This simulation is designed to have imbalanced samples such that the positive sample proportion approximately becomes near .

We compared the ML-estimator with the inverse-weighted GM estimator with 30 replications. Thus, we observe that the GM estimator have a better performance over the ML-estimator in the sense of true positive rate. Table LABEL:TPRTNR is the list of the true positive and negative rates based on test samples with size . Note that two label conditional distributions are are . These are set to be sufficiently separated from each other. Hence, the classification problem becomes extremely an easy task when is a moderate value. Both ML-estimator and GM estimator have good performance in cases of . Alternatively, we observe that the true positive rate for GM estimator is considerably higher than that of ML-estimator in a situation of imbalance samples as in either case of .

| MLE | GME | |

|---|---|---|

denotes a pair of the true positive and negative rates and .

We next focus on the HM-divergence (-divergence, ):

where the reference measure is determined by . The HM-loss function is derived as

for the logistic model (2.28). Note that the HM-loss function is the -loss function with , which the -expression is reduced to

Hence, the HM-estimating function is written as

This is a weighted likelihood score function with the conditional variance of as the weight function. We will observe that this weighting has an effective key for the HM estimator to be robust for covariate outliers.

Let us investigate the behavior of the estimating function of the -estimator. In general, is unbiased, that is, under the conditional expectation with the true distribution with the pmf . However, this property easily violated if the expectation is taken by a misspecified distribution with the pmf other than the true distribution [12, 13, 53]. Hence, we look into the expected estimating function under the misspecified model.

Proposition 9.

Proof.

It is written from (2.31) that

| (2.34) |

Hence, if , then

where

| (2.35) |

We observe that, if or , then

This concludes (2.33).

∎

.

We note that Proposition 9 focuses only on the logistic model (2.28), however such a boundedness property holds in both the probit model and the complementary log-log model.

We consider a geometric understanding for the bounded property in (2.33). In GLM, the linear predictor is written by , where and are referred to as a slope vector and intercept term, respectively. The decision boundary is defined as as in (2.6). The Euclidean distance of into ,

is referred to as the margin of from the decision boundary , which plays.a central role on the support vector machine [14]. Let

| (2.36) |

This is the -tubular neighborhood including . In this perspective, Proposition 9 states for any that the conditional expectation of -estimating function is in the tubular neighborhood with probability one even under the misspecified distribution outside the parametric model (2.28). On the other hands, the likelihood estimating function does not satisfy such a stable property because the margin of the conditional expectation becomes unbounded. Therefore, we result that the -estimator is robust for misspecification for the model for or ; while the ML-estimator is not robust.

We observe in the Euclidean geometric view that, for a feature vector of , the decision hyperplane decompose into orthogonal and tangential components as , where and . Note and . In accordance with this geometric view, we give more insights on the robust performance for the -estimator class. We write the -estimating function (2.31) by . Then,

| (2.37) |

Therefore, we conclude that

| (2.38) |

Thus, we observe a robust property of the -estimator in a more direct perspective.

Proposition 10.

Assume or . Then, the -estimating function based on a dataset satisfies

| (2.39) |

Proof.

The log-likelihood estimating function is given by

| (2.41) |

Hence, is unbounded in since either of two terms in (2.41) diverges to infinity as goes to infinity. The GM-estimating function is written by

This implies that is unbounded.

We have a brief study for numerical experiments in two types of sampling. One is based on the covariate distribution conditional on the outcome , which is widely analyzed in case-control studies. The other is based on the conditional distribution of given the covariate vector , which is common in cohort-control studies. First, we consider a model of an outcome-conditional distribution. Assume that the conditional distribution of given is a bivariate normal distribution , where is a 2-dimensional identity matrix. Then, the marginal distribution of is written as , where . The the conditional pmf of given is given by

due to the Bayes formula, where and . Let The simulation was conducted based on a scenario about the positive and negative samples with and , respectively, as follows.

(a). Specified model: and

(b). Misspecified model:

and

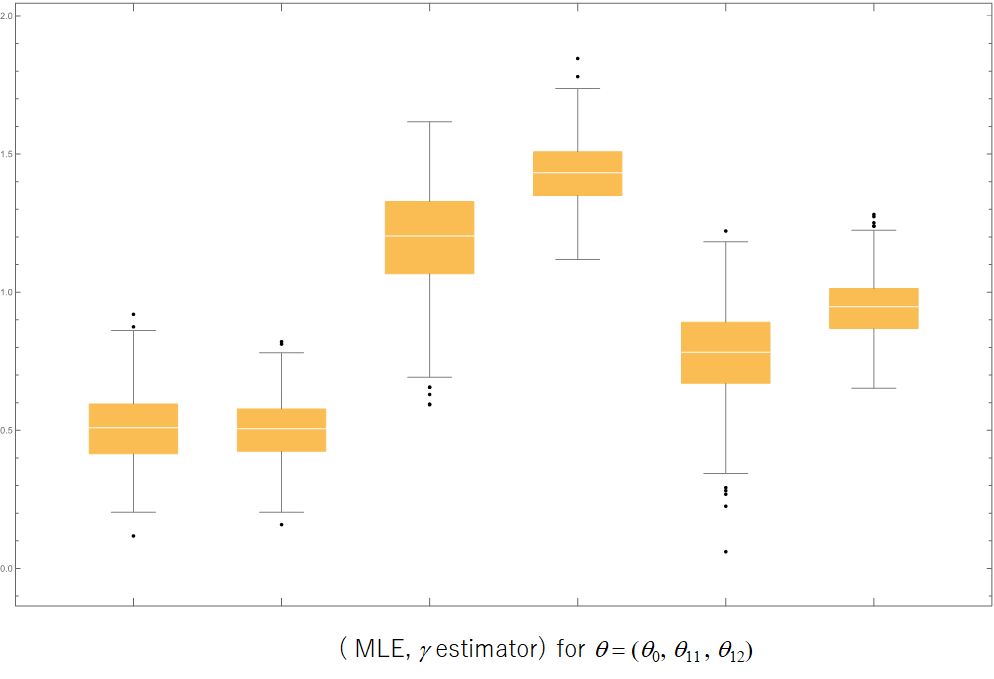

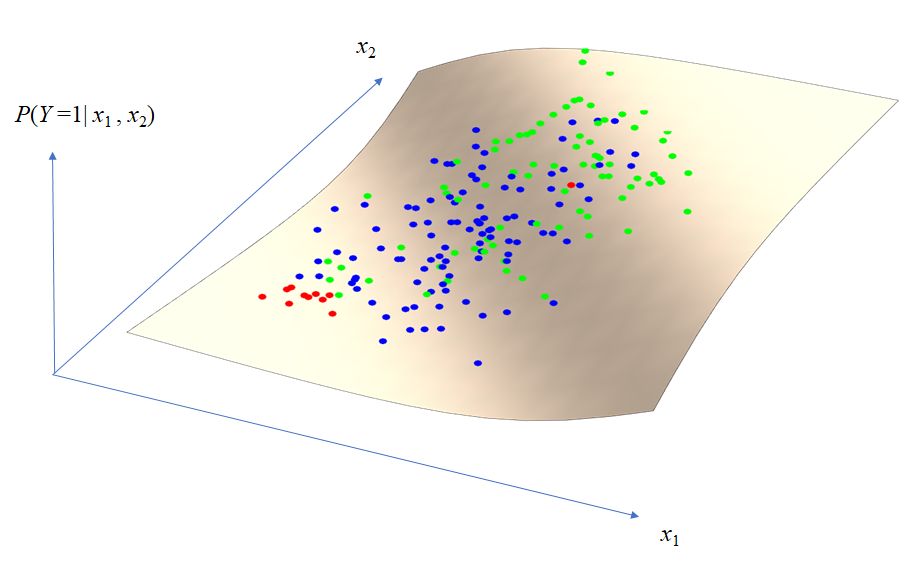

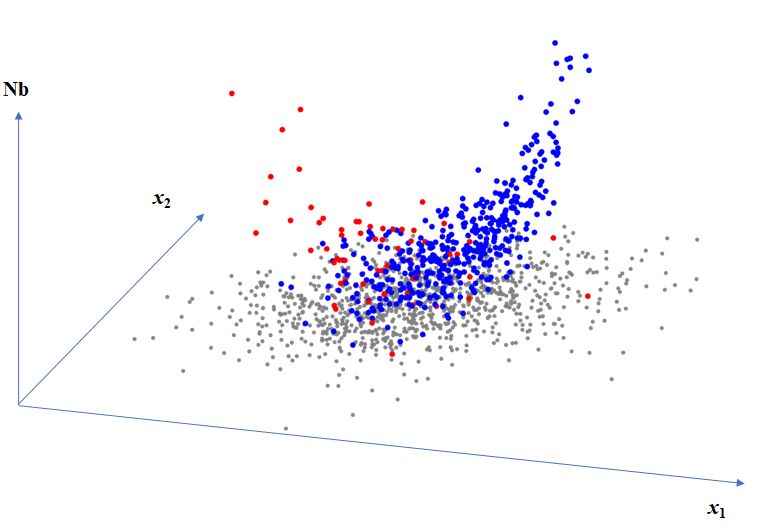

Here parameters were set as , , and , so that . Figure 2.3 shows the plot of 103 negative samples (Blue), 87 negative samples (Green), 10 negative outliers (Red) on the logistic model surface . Thus, 10 negative outliers are away from the hull of 87 negative samples.

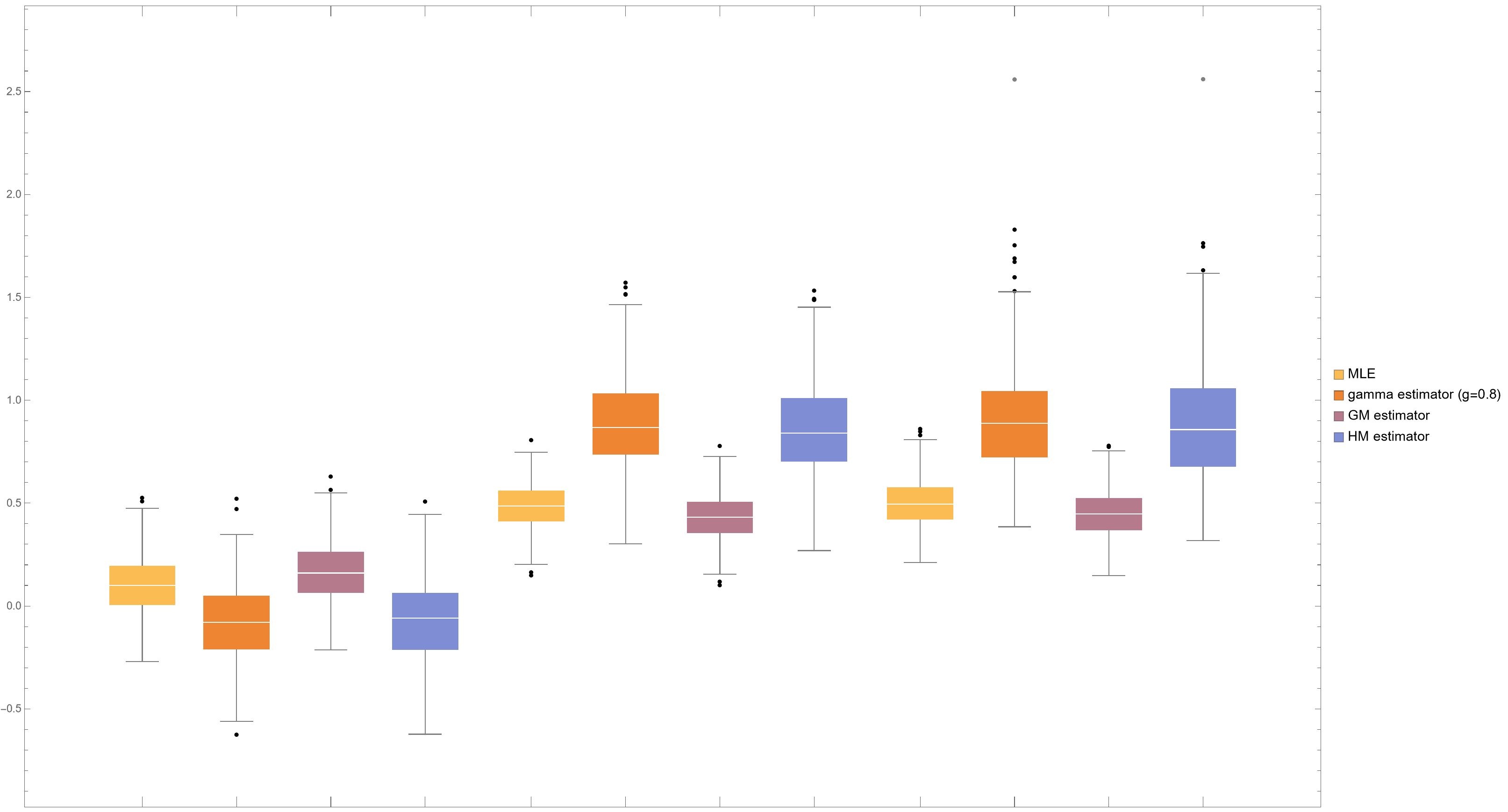

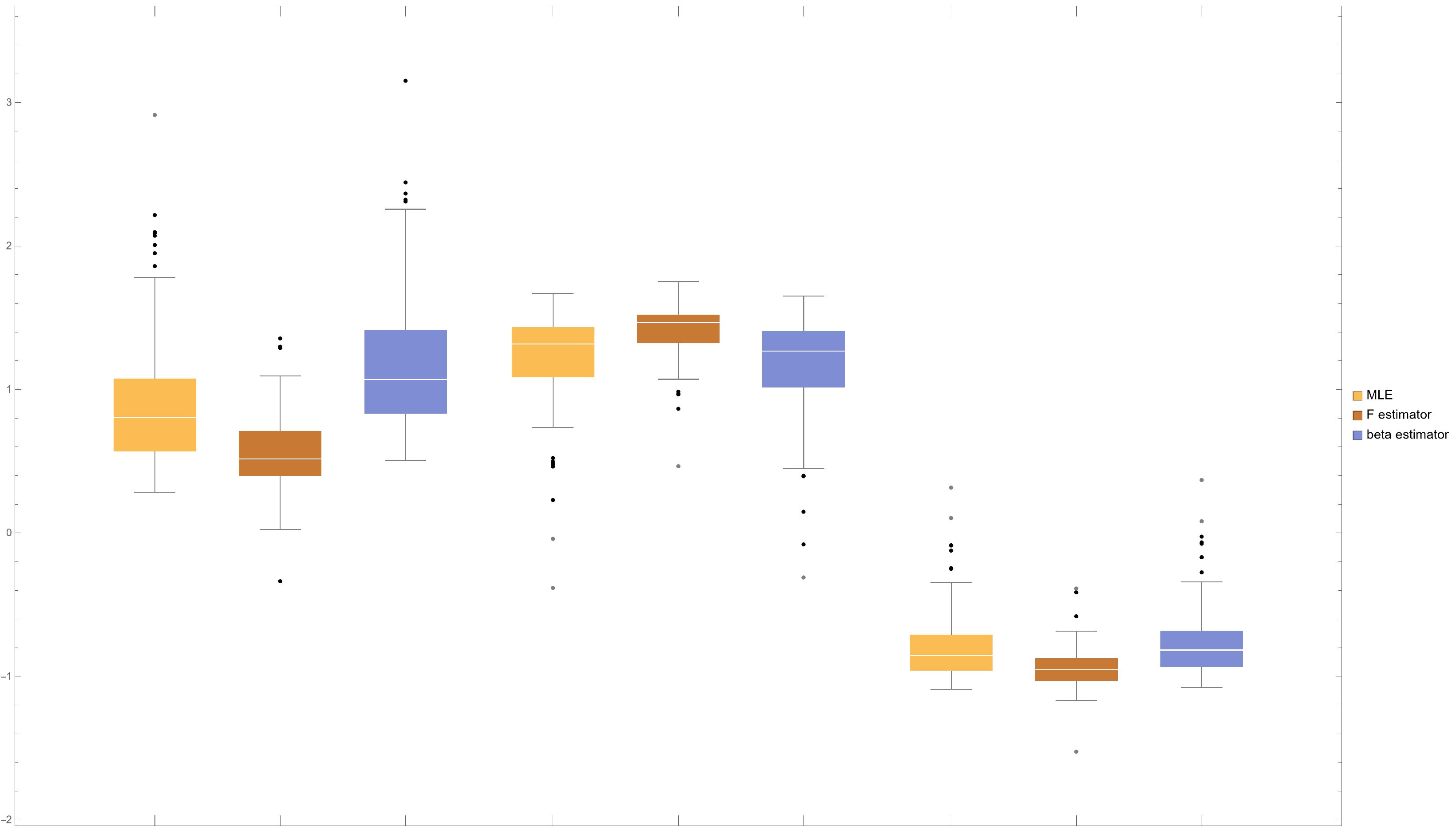

We compared the estimates the ML-estimator , the -estimator with , the GM-estimator and -estimator , where the simulation was conducted by 300 replications. See Table 2.3 for the performance of four estimators in case (a) and (b) and Figure 2.4 for the box-whisker plot in case (b). In the case (a) of specified model, the ML-estimator was superior to other estimators in a point of the root means square estimate (rmse), however the superiority is subtle. Next, we observe for case (b) of misspecified model in which the conditional distribution given is contaminated with a normal distribution with mixing ratio . Under this setting, -estimator and the HM -estimator were substantially robust; the ML-estimator and GM-estimator were sensitive to the misspecification. Upon closer observation, it becomes apparent that -estimator and the HM -estimator were superior to the ML-estimator and GM-estimator in the bias behaviors rather than the variance ones as shown in Figure 2.4. This observation is consistent with what Proposition (10) asserts: The estimator has a boundedness property if or . Because the ML-estimator, the GM-estimator and the HM-estimator equal the -estimators with , respectively.

(a). The case of specified model

| Method | estimate | rmse |

|---|---|---|

| ML-estimator | ||

| -estimate | ||

| GM-estimate | ||

| HM-estimate |

(b). The case of misspecified model

| Method | estimate | rmse |

|---|---|---|

| ML-estimator | ||

| -estimate | ||

| GM-estimate | ||

| HM-estimate |

Second, we consider a model of a covariate-conditional distribution of . Assume that follows a standard normal distribution and a conditional distribution of given follows a logistic model

The simulation was conducted based on a scenario as follows.

(a). Specified model:

(b). Misspecified model:

Here parameters were set as . Figure 2.3 shows the plot of 103 negative samples (Blue), 87 negative samples (Green), 10 negative outliers (Red) on the logistic model surface . Thus, 10 negative outliers are away from the hull of 87 negative samples.

Similarly, a comparison among the ML-estimator , the -estimator with , the GM-estimator and -estimator with replications. See Table 2.4. In the case (a), the ML-estimator was slightly superior to other estimators. For case (b), -estimator and the HM -estimator were more robust; the ML-estimator and GM-estimator, which was the same tendency as the case of the outcome-conditional model.

(a). The case of specified model

| Method | estimate | rmse |

|---|---|---|

| ML-estimator | ||

| -estimate | ||

| GM-estimate | ||

| HM-estimate |

(b). The case of misspecified model

| Method | estimate | rmse |

|---|---|---|

| ML-estimator | ||

| -estimate | ||

| GM-estimate | ||

| HM-estimate |

2.6 Multiclass logistic regression

We consider a situation where an outcome variable has a value in and a covariate with a value in a subset of . The probability distribution is given by a probability mass function (pmf)

which is referred to as the categorical distribution , where is the probability vector, with being .

Remark 2.

We begin with a simple case of estimating without any covariates. Let be a random sample drawn from . Then, the estimators discussed here equal the observed frequency vector as follows. First of all, the ML-estimator is the observed frequency vector with components , where . Next, the -loss function

is written as . We observe

up to a constant. Therefore, the -estimator for is equal to for all . Similarly, the -estimator is equal to for all . However the -estimator does not satisfy that except for the limit case of to , or the ML-estimator.

We return the discussion for the regression model with a covariate vector . A multiclass logistic regression model is defined by a soft max function as a link function of the systematic component into the random component. The conditional pmf given is given by

| (2.44) |

which is referred as a multinomial logistic model, where and . The KL-divergence between categorical distributions is given by

For a given dataset , the negative log-likelihood function is given by

where we set if . The likelihood equation is written by the -th component:

for . The -divergence is given by

We remark that the -expression defined in (2.12) is given by

where is in the multi logistic model (2.44). Hence, the -loss function is given by

and the -estimating equation is written as

where is defined by

| (2.45) |

The GM-divergence between categorical distributions:

where the reference distribution is chosen by . Hence, the GM-loss function is given by

where . We will have a further discussion later such that the GM-loss is closely related to the exponential loss for Multiclass AdaBoost algorithm. The GM-estimating function is given by

Finally, the HM-divergence is

The HM-loss function is derived as

for the logistic model (2.44) noting . This is the sum of squared probabilities of the inverse label. Hence, the HM-estimating function is written as

Let us have a brief look at the behavior of the -estimating function in the presence of misspecification of the parametric model in the multiclass logistic distribution (2.44). Basically, most of properties are similar to those in the Bernoulli logistic model.

Proposition 11.

Consider the -estimating function under a multiclass logistic model (2.44). Assume or . Then,

| (2.46) |

where

| (2.47) |

with

Proof.

The -th linear predictor is written by with the slope vector and the intercept . The -th decision boundary is given by

| (2.49) |

In a context of prediction, a predictor for the label based on a given feature vector is give by

which is equal to the Bayes rule under the multiclass logistic model, where in the parametrization as in (2.44). We observe through the discussion similar to Proposition 9 in the situation of the Bernoulli logistic model that is uniformly bounded in even under any misspecified distribution outside the parametric model. Therefore, we result that the -estimator has such a stable behavior for all the sample if is in a range . The ML-estimator and the GM-estimators equals -estimators with and , respectively. Therefore, both ’s are outside the range, which suggests they are suffered from the unboundedness.

We next study ordinal regression, also known as ordinal classification. Consider an ordinal outcome having values in . The probability of falling into a certain category or lower is modeled as

| (2.50) |

for , where . The model (2.50) is referred to as ordinal logistic model noting with a logistic distribution . Here, the thresholds are assumed to ensure that the probability statement (2.50) makes sense. Each threshold effectively sets a boundary point on the latent continuous scale, beyond which the likelihood of higher category outcomes increases. The difference between consecutive thresholds also gives insight into the ”distance” or discrimination between adjacent categories on the latent scale, governed by the predictors.

For a given observations the negative log-likelihood function

where . Similarly, the -loss function can be given in a straightforward manner. However, these loss functions seem complicated since the conditional probability is introduced indirectly as a difference between the cumulative distribution functions ’s.

To address this issue, it is treated that each threshold as a separate binarized response, effectively turning the ordinal regression problem into multiple binary regression problems. Let and be cumulative distribution functions on . We define a dichotomized cross entropy

This is a sum of the cross entropys between a Bernoulli distributions and . The KL divergence is given as . Thus, the dichotomized log-likelihood function is given by

where . Note , where denotes the expectation under the distribution and . Under the ordinal logistic model (2.50),

On the other hand, the dichotomized -loss function is given by

where is the -expression for , that is,

Under the ordinary logistic model (2.50),