Mixed effects models for extreme value index regression

Abstract

Extreme value theory (EVT) provides an elegant mathematical tool for the statistical analysis of rare events. When data are collected from multiple population subgroups, because some subgroups may have less data available for extreme value analysis, a scientific interest of many researchers would be to improve the estimates obtained directly from each subgroup. To achieve this, we incorporate the mixed effects model (MEM) into the regression technique in EVT. In small area estimation, the MEM has attracted considerable attention as a primary tool for producing reliable estimates for subgroups with small sample sizes, i.e., “small areas.” The key idea of MEM is to incorporate information from all subgroups into a single model and to borrow strength from all subgroups to improve estimates for each subgroup. Using this property, in extreme value analysis, the MEM may contribute to reducing the bias and variance of the direct estimates from each subgroup. This prompts us to evaluate the effectiveness of the MEM for EVT through theoretical studies and numerical experiments, including its application to the risk assessment of a number of stocks in the cryptocurrency market.

Keywords: Extreme value index regression; Extreme value theory; Mixed effects model; Pareto-type distribution; Risk assessment

1 Introduction

Statistical analysis of rare events is crucial for risk assessment in various fields, including meteorology, environment, seismology, finance, economics and insurance. Extreme value theory (EVT) provides an elegant mathematical tool for the analysis of rare events.

In the framework of univariate EVT, the generalized extreme value distribution (see, Fisher and Tippett 1928; Gumbel 1958) and generalized Pareto distribution (GPD, see, Davison and Smith 1990) are standard models for fitting extreme value data. In addition, the class of GPDs with unbounded tails is called the Pareto-type distribution, which has been recognized as an important model for analyzing heavy-tailed data (see, Beirlant et al. 2004). As an important direction of development in EVT, these models have been extended to regression models to incorporate covariate information into extreme value analysis (see, Davison and Smith 1990; Beirlant and Goegebeur 2003; Beirlant et al. 2004; Wang and Tsai 2009). However, few regression techniques have been developed for unit-level data in extreme value analysis.

The data category of interest is denoted by , where is the number of population subgroups, is the sample size from the th population subgroup, is the response of interest, and is the vector of relevant covariates. In much of the literature on small area estimation (SAE), this data category is referred to as unit-level data, and each subgroup is then called an “area” (see, Rao and Molina 2015; Sugasawa and Kubokawa 2020; Molina, Corral, and Nguyen 2022). In particular, an area with a small sample size is the so-called “small area,” which is described in detail by Jiang (2017). Examples of an area include a geographic region such as a state, county or municipality, or a demographic group such as a specific age-sex-race group. From the definition on p. 173 of Rao and Molina (2015), unit-level data means that the number of areas is known, and each observation is explicitly assigned to one of the areas. This study aims to develop the regression technique of extreme value analysis for unit-level data.

Our purpose is not to pool data from multiple areas into a single area by clustering, nor to create a new set of areas with heterogeneous characteristics (see, Bottolo et al. 2003; Rohrbeck and Tawn 2021; de Carvalho, Huser, and Rubio 2023; Dupuis, Engelke, and Trapin 2023 and references therein). Unlike these approaches, our goal is to enhance the accuracy of extreme value analysis by using information from all areas simultaneously, instead of building models by area. However, if the heterogeneity between all areas is modeled as parameters, the number of parameters depends on the number of areas . Accordingly, for large , the fully parametric model can lead to a large bias in the parameter estimates (see, Section 4 of Ruppert, Wand, and Carroll 2003; Broström and Holmberg 2011). Thus, we want to develop a model for extreme value analysis that does not require many parameters for unit-level data. To this end, we incorporate the mixed effects model (MEM) into EVT. The MEM has been described in Jiang (2007), Wu (2009), Jiang (2017), and references therein. This model captures the heterogeneity between areas as a latent structure rather than as parameters. In SAE (see, Torabi 2019; Sugasawa and Kubokawa 2020), the efficiency of MEM is well known for its so-called “borrowing of strength” property (see, Dempster, Rubin, and Tsutakawa 1981). Dempster, Rubin, and Tsutakawa (1981) described the “borrowing of strength” as follows:

Using concepts of variability between and within units, one can improve estimates based on the data from a single unit by the appropriate use of data from the remaining units. (Dempster, Rubin, and Tsutakawa 1981, p. 341)

In other words, the “borrowing of strength” indicates that direct estimates based only on area-specific data are improved by using information from other areas (see, Section 1 of Diallo and Rao 2018). For small areas, the “borrowing of strength” would be particularly helpful because the accuracy of their direct estimates is not sufficiently guaranteed (see, Molina and Rao 2010; Diallo and Rao 2018). In extreme value analysis, we use only data with small or large values; hence, the effective sample size tends to be small for some areas. Thus, the MEM would be crucial to obtain more efficient estimators for extreme value analysis. However, to the best of our knowledge, there are no results for combining the MEM with EVT. Therefore, it would be important to show that the “borrowing of strength” is also valid for extreme value analysis. We will reveal such considerations theoretically and numerically.

In this study, we incorporate the MEM into the extreme value index (EVI) of the Pareto-type distribution. For this model, we first pick the extreme value data for each area using the peak-over-threshold method. Then, the regression parameters are estimated by the maximum likelihood method. In addition, the random components of the MEM, called random effects, which correspond to the latent area-wise differences in the regression coefficients, are predicted by the conditional mode. We investigate the asymptotic normality of the proposed estimator (see, Section 3.2). From this asymptotic normality, we find that the variance of the estimator improves as the number of areas increases. In other words, the proposed estimator is generally stable even when the effective sample size is small for certain areas. Owing to this property, the proposed estimator can reduce the severe bias of Pareto-type modeling by setting reasonably higher thresholds while achieving its stable behavior. Furthermore, we show numerically through the Monte Carlo simulation study that our estimates for each area, obtained by combining the proposed estimator and predictor, not only have significantly smaller variances than the direct estimates, but are also less biased (see, Section 1.3 of our supplementary material). Surprisingly, in the context of EVT, the “borrowing of strength” of the MEM contributes to reducing both bias and variance.

As an application, we analyze the returns of 413 cryptocurrencies. Since their risks as assets may vary by stock, an accurate risk assessment for each stock would be highly desirable. Along with some analysis, we will demonstrate the effectiveness of the MEM in analyzing the risks of many cryptocurrency stocks. We note that considering many stocks is equivalent to the mathematical situation that the number of areas is large. Thus, the theoretical study of the proposed estimator will be established under .

The remainder of this article is organized as follows. Section 2 proposes the regression model using the MEM for the Pareto-type distribution. Section 3 examines its asymptotic properties. As a real data example, Section 4 analyzes a cryptocurrency dataset. Section 5 summarizes this study and discusses future research. The simulation studies for the proposed model and technical details of the asymptotic theory in Section 3 are described in our supplementary material.

2 Model and method

Let be defined as the set of all positive real numbers. Throughout this article, we consider the unit-level data

| (1) |

where is the number of areas, is the sample size from the th area, is the continuous random variable corresponding to the response of interest, and is the random vector representing the associated predictors. Here, is regarded as the observation for the th unit in the th area. We denote the index sets by and . In the following Sections 2.1-2.4, we describe the proposed MEM and associated estimation and prediction methods.

2.1 Mixed effects model under Pareto-type distribution

Let be an independent and identically distributed (i.i.d.) random sample from some distribution. Subsequently, we assume that for each and , the response is conditionally independently obtained from a certain conditional distribution for the given , where is determined for each . In this study, we are interested in the right tail behavior of each . Here, the right tail of each is modeled by the Pareto-type distribution as

| (2) |

where is a positive function called EVI, and is a conditional slowly varying function with respect to given , i.e., for any and , as . The EVI function , which determines the heaviness of the right tail of , is assumed here to be the classical linear model formulated as follows:

| (3) |

where , and and are regression coefficient vectors. Note that is different between areas, whereas is common to all areas. When , the above model is reduced to that of Wang and Tsai (2009). The purpose of the model (2) with (3) is to estimate the parameter vectors and . However, this model has parameters; hence, if is large, the associated estimators may be severely biased (see, Section 4 of Ruppert, Wand, and Carroll 2003; Broström and Holmberg 2011). To overcome this bias problem, we use the MEM instead of the fully parametric model (2) with (3).

For , we introduce the random effects such that

| (4) |

where refers to the multivariate normal distribution with zero mean vector and unknown covariance matrix . The MEM uses these random effects to express the differences in between areas as a latent structure. Let be the conditional distribution function of given and . In this expression, the information about the differences in between areas is assigned to by . Note that the random effects are not observed as data.

As an alternative model to (2) with (3), the Pareto-type distribution using the MEM is defined as follows:

| (5) |

where conditional on and is a slowly varying function with respect to . Then, as an extension of (3) to the MEM, the EVI function is assumed to be

| (6) |

Compared to (3), the area-wise differences in the slopes of log-EVI with respect to are represented by as a latent structure. Thus, the total number of parameters in the model (5) using (6) is , which is independent of and less than that of the fully parametric model (2) with (3) when is large. Here, is the number of parameters included in .

The simplest model of (6) is the location-shifting MEM with and , denoted and by the scalars and ,

| (7) |

which can be regarded as an EVI regression version of the nested error regression model (see, Battese, Harter, and Fuller 1988). The model (7) indicates that the intercept of accepts the heterogeneity between areas, although each covariate has the common slope of across all areas. The nested error regression model is useful for SAE (see, Diallo and Rao 2018; Sugasawa and Kubokawa 2020). The application in Section 4 demonstrates the analysis using the model (7) and verifies its effectiveness numerically. Alternatively, the case yields the most complicated model of (6), indicating that the slope of with respect to each covariate varies across areas.

2.2 Approximate maximum likelihood estimator

In this section, we construct estimators of the unknown parameters included in the model (5) with (6).

Let be the conditional distribution function given , and , where are thresholds. In this paper, we assume that belongs to the Hall class (see, Hall 1982), which is mentioned in (A1) of Section 3.1. From this, we have

| (8) |

for sufficiently large . Using (8) instead of (5), we can remove for the estimation of . Similarly, from (8) and the assumption (A1), the density of given , and is obtained as follows:

| (9) |

Wang and Tsai (2009) considered the similar approximated distribution (8) and density (9) in linear extreme value index regression. Estimation using data exceeding thresholds is the so-called peak-over-threshold method (see, Hill 1975; Wang and Tsai 2009).

We assume that and are independent for and . Furthermore, we assume that given and is conditionally independent for and and has the distribution function (5) (see, Jiang, Wand, and Bhaskaran 2022). Using (9), we then define the likelihood of as

where denotes the expectation over the random effects distribution, is any vector corresponding to , and is any positive definite matrix corresponding to . The above is derived from the standard definition of the likelihood for the MEM, because are unobserved random variables, unlike the data (1) (see, Section 2 of Wu 2009). From (4), (6), (9) and (A1), the log-likelihood of can be expressed as

| (10) | ||||

where is an indicator function that returns 1 if and 0 otherwise, is a density function of , and is a suitable constant independent of . Again, because are not observed as data, the log-likelihood (10) includes the integral over the domain of the random effects. We denote the approximate maximum likelihood estimator of by , which is the maximizer of the right-hand side of (10).

2.3 Prediction of random effects

In the proposed model (5) using (6), we are not only interested in estimating the parameters , but also in predicting the random effects . Here, we propose the conditional mode method to predict these random effects (see, Santner and Duffy 1989; Section 11 of Wu 2009). Now, the conditional density function of given the data (1) is proportional to

as a function of . Then, the predictor of is defined as the mode of this conditional distribution, i.e.,

| (11) |

where and included in are replaced by (9) and the estimator , respectively.

2.4 Threshold selection

The thresholds in (10) are tuning parameters that balance between the quality of the approximation (9) and the amount of data exceeding the thresholds. By setting higher thresholds, we can generally improve the estimation bias, but the estimator becomes more unstable. Conversely, if we lower the thresholds, the estimator will behave more stably, but may be more biased. Therefore, these thresholds should be chosen appropriately, considering this trade-off relationship. Here, for each area, we apply the discrepancy measure (see, Wang and Tsai 2009), which considers the goodness of fit of the model, to select the area-wise optimal threshold. In the simulation studies in Section 1.2 of our supplementary material, we verify the performance of the proposed estimator using this threshold selection method.

3 Asymptotic properties

We investigate the asymptotic properties of the proposed estimator . In general, the following three types of asymptotic scenarios may be considered:

-

(i)

remains finite while tend to infinity.

-

(ii)

remain finite while tends to infinity.

-

(iii)

and tend to infinity.

In applications using the peak-over-threshold method, the sample size of threshold exceedances is often small for some areas. Therefore, we want to use as many related area sources as possible. Such a scenario can be expressed mathematically as . Thus, (i) does not match the background of using the MEM for extreme value analysis. Meanwhile, if the thresholds as well as the sample sizes exceeding the thresholds are fixed, the consistency of the proposed estimator would not be shown because the bias occurring from the approximation (9) cannot be improved. Ignoring such a bias is outside the concept of EVT (see, Theorems 2 and 4 of Wang and Tsai 2009). This implies that (ii) is also not realistic in our study. To evaluate the impact of the choice of thresholds and bias of the proposed estimator, we must consider the case where and the sample sizes exceeding the thresholds also tend to infinity, which can be taken under . Consequently, (iii) is most important for establishing EVT for the MEM, and we assume this case in the following Sections 3.1 and 3.2.

Nie (2007) and Jiang, Wand, and Bhaskaran (2022) showed the asymptotic normality of the maximum likelihood estimator of the generalized mixed effects model under (iii). Thus, we can say that the following Theorem 1 extends their results from the generalized mixed effects model to the MEM for EVT.

3.1 Conditions

Let , which is the sample size exceeding the threshold for the th area. Additionally, we define as the average of the effective sample sizes of all areas. Note that and are random variables, not constants. In the case (iii) defined above, we assume that for each , the threshold diverges to infinity in tandem with the sequence of and the th within-area sample size . Accordingly, we denote by . The asymptotic properties of the proposed estimator rely on the following assumptions (A1)-(A6):

-

(A1)

in (5) belongs to the Hall class (see, Hall 1982), that is,

(12) where , , and are continuous and bounded. Furthermore, satisfies

-

(A2)

There exists a bounded and continuous function such that

-

(A3)

As and ,

-

(A4)

There exist some bounded and continuous functions such that under given , uniformly for all and as and , where the symbol “” represents convergence in probability.

-

(A5)

as and .

-

(A6)

There exist some bounded and continuous functions such that

as and , where

and refers to the Euclidean norm.

(A1) and (A2) regularize the tail behavior of the conditional response distribution (5) (see, Wang and Tsai 2009; Ma, Jiang, and Huang 2019). (A3)-(A6) impose the constraints on the divergence rates of the thresholds . (A3) implies that for each , the effective sample size asymptotically diverges to infinity. Under (A4), are not critically different. Furthermore, (A5) means that the number of areas is relatively larger than the effective sample sizes . (A5) mathematically links the divergence rates of and . (A6) is related to the asymptotic bias of the proposed estimator. If (A6) fails, the consistency of the proposed estimator may not be guaranteed.

3.2 Asymptotic normality

Let be a matrix of zeros and ones such that for all symmetric matrices , where is a vector operator, and is a vector half operator that stacks the lower triangular half of a given square matrix into the single vector of length (see, Magnus and Neudecker 1988). The Moore-Penrose inverse of is .

For the maximum likelihood estimator , we obtain the following Theorem 1.

Theorem 1.

Suppose that (A1)-(A6) hold. Then, as and ,

where the symbol “” denotes convergence in distribution, s are zero matrices of appropriate size, and and are defined as follows:

where for , and is the Kronecker product.

Remark 1.

From Theorem 1, and are -consistent, and is -consistent. Furthermore, , and are asymptotically independent. If and are sufficiently large, the covariance matrix of the proposed estimator is obtained as

Theorem 1 also reveals the asymptotic bias of the proposed estimator caused by the approximation (9). If and are sufficiently large, it can be approximated as

As shown in (A6), depends on the EVI function , and the proposed estimator is more biased for larger , that is, the heavier the right tail of the response distribution (5). Furthermore, is also affected by defined in (12), and the proposed estimator is more biased for smaller . Meanwhile, in (12), which is the scaling constant to ensure that the upper bound of (5) is equal to one, is not related to the asymptotic bias of the proposed estimator.

Remark 2.

From Theorem 1, we can confirm the good compatibility between the MEM and EVT as follows. In extreme value analysis, we want to set the threshold as high as possible to ensure a good fit with the Pareto distribution, as shown in (9). However, the estimator may have a large variance because the amount of available data becomes small. Meanwhile, the variance of the proposed estimator for (5) with (6) depends strongly on the number of areas and improves as increases, as described in Remark 1. Note that the magnitude of is unaffected by the choice of thresholds , unlike . Therefore, even if the threshold is high for some areas, the proposed estimator is expected to remain stable as long as is sufficiently large. Note that estimating the bias of the proposed estimator is a difficult problem because and in (12) must be estimated. However, if is sufficiently large, by setting reasonably high thresholds, we may avoid this bias estimation problem while ensuring the stability of the estimator. Such phenomena are numerically confirmed in the simulation study in Section 1.2 of our supplementary material.

Remark 3.

Theorem 1 is directly applicable to confidence interval construction and statistical hypothesis testing on the parameters , and . To obtain more efficient estimates, the choice of covariates is crucial. Alternatively, including too many meaningless covariates in the model will adversely affect the parameter estimates, and “borrowing of strength” will not be effective. Therefore, we must check the efficiency of the selected explanatory variables. Hypothesis testing is useful for this purpose. The typical statement of such a hypothesis test is whether or not each component of and is significantly different from zero. When we organize this test, we must estimate , which can be naturally estimated by

| (13) |

where . In Section 4.3, the hypothesis test on is demonstrated for a real dataset (see, Section 1.1 of our supplementary material).

As described in Section 2.1, an important example of (6) is the type of nested error regression model (7). For the model (7), Theorem 1 can be simplified to the following Corollary 1. Let define for the random effects and denote its proposed estimator as .

Corollary 1.

Suppose that (A1)-(A6) hold. Then, as and ,

where , , and are defined as follows:

where is with and , , and is defined in Theorem 1.

4 Application

4.1 Background

In this section, we analyze the returns of a large number of cryptocurrencies. Over the past decade, the cryptocurrency market has grown rapidly, led by Bitcoin. As of January 2024, the number of active cryptocurrency stocks is estimated to be over 9,000 (see, https://www.statista.com/statistics/863917/number-crypto-coins-tokens/). However, despite the large number of stocks, most studies are limited to a few major cryptocurrencies such as Bitcoin. Gkillas and Katsiampa (2018) studied the extreme value analysis of the returns of five cryptocurrencies, namely Bitcoin, Ethereum, Ripple, Litecoin and Bitcoin Cash. We extend their work to a larger number of stocks including these five cryptocurrencies. Our goal is to demonstrate the advantage of simultaneously analyzing many cryptocurrency stocks using the MEM. In Section 4.3, we report the interpretation of our model. In Section 4.4, we compare the performance of our method with that of stock-by-stock analysis using the method of Wang and Tsai (2009).

4.2 Dataset

The cryptocurrency dataset is available on CoinMarketCap (see, https://coinmarketcap.com/). We use this dataset from the last ten years, from January 1, 2014 to December 31, 2023. Then, we cover the 413 stocks that are in the top 500 on CoinMarketCap as of February 2, 2024 and have returns of 364 days or more. From the daily closing prices for each stock, the returns can be calculated as , where refers to the closing price on the th day in the th cryptocurrency. Let the non-missing returns of the th cryptocurrency be denoted as for . The sample size of the th cryptocurrency is primarily determined by the launch date. Note that the index does not represent the same date for all stocks.

We examine the tail behavior of both the negative and positive returns for each stock. In many applications of EVT, the sample kurtosis has been used to check the effectiveness of using the Pareto-type distribution (see, Wang and Tsai 2009; Ma, Jiang, and Huang 2019). In this study, the sample kurtosis of was greater than zero for each , and the minimum sample kurtosis for the 413 stocks was 1.833, implying that the returns of each cryptocurrency are heavily distributed. Therefore, we use the Pareto-type distribution instead of the GPD to analyze high threshold exceedances for the both negative and positive returns. The following Sections 4.3 and 4.4 describe our method only for positive returns. However, using the same method by replacing with , we can also implement the analysis of extreme negative returns.

For each pair of the 413 stocks, we estimated the tail dependence parameter from returns above the 95th percentile for the both negative and positive returns (see, Reiss and Thomas 2007). The results showed that the percentage of combinations with a tail dependence over 0.7 was only 0.074% for negative returns and only 0.032% for positive returns. Therefore, in this analysis, we do not consider the dependence between each pair of stocks.

4.3 Analysis by our model

In many cryptocurrency applications, dummy variables such as year, month, and day of the week have often been utilized as covariates to account for the non-stationarity of returns. In Longin and Pagliardi (2016) and Gkillas and Katsiampa (2018), the returns were adjusted in terms of variance using some dummy variables and were analyzed under the assumption that the EVI of each cryptocurrency remained constant over the period, despite the dramatic growth of the market. However, in their approach, it is unclear which variable influences returns to what extent. Therefore, in our application, we employ EVI regression to examine the effects of dummy variables with year (9 variables), month (11 variables) and day of the week (6 variables) on the tail behavior of the distribution of returns. The second column of Table 1 shows the assignment of 26 dummy variables. We denote the vector of these dummy variables as for and . For our model in Section 2.1, we set and and denote the random effects as , where is an unknown variance. Then, for the underlying Pareto-type distribution (5), the EVI (6) conditional on and is modeled as

| (14) |

where and are unknown coefficients. Below, we analyze the returns of the 413 cryptocurrencies simultaneously using (14). For the implementation, we then use the glmer() function in the package lme4 (see, Bates et al. 2015) within the R computing environment (see, R Core Team 2021). A more detailed explanation is given in Section 1 of our supplementary material.

| Estimate | Rejection | p-value | |||||||

| Negative | Positive | Negative | Positive | Negative | Positive | ||||

| 2014 | 1 | 1 | |||||||

| 2015 | 1 | 1 | |||||||

| 2016 | 1 | 1 | |||||||

| 2017 | 1 | 1 | |||||||

| 2018 | 1 | 1 | |||||||

| 2019 | 1 | 1 | |||||||

| 2020 | 1 | 1 | |||||||

| 2021 | 1 | 1 | |||||||

| 2022 | 1 | 0 | |||||||

| 2023 | |||||||||

| Jan | 1 | 1 | |||||||

| Feb | 1 | 1 | |||||||

| Mar | 1 | 1 | |||||||

| Apr | 0 | 0 | |||||||

| May | 1 | 1 | |||||||

| Jun | 1 | 1 | |||||||

| Jul | 1 | 1 | |||||||

| Aug | 1 | 0 | |||||||

| Sep | 1 | 0 | |||||||

| Oct | 1 | 0 | |||||||

| Nov | 1 | 1 | |||||||

| Dec | |||||||||

| San | 0 | 0 | |||||||

| Mon | 1 | 0 | |||||||

| Tue | 1 | 0 | |||||||

| Wed | 1 | 0 | |||||||

| Thu | 1 | 1 | |||||||

| Fri | 0 | 0 | |||||||

| Sat | |||||||||

First, we estimate the parameters in (14). The third and fourth columns of Table 1 showed the maximum likelihood estimates of these parameters for negative returns and positive returns, respectively. In these columns, the values in parentheses show the widths of the confidence intervals calculated from Corollary 1. According to the estimates in Table 1, for any combination of our dummy variables, the EVI (14) was higher for positive returns than for negative returns. This result suggests that positive returns may have had a greater impact on the cryptocurrency market than negative returns. Accordingly, this seems to support the evidence of the overall growth of the market. Meanwhile, the effects of dummy variables for year reduced the EVI over the years, implying that many cryptocurrency stocks were less risky as assets than in the past.

Second, we conduct the Wald hypothesis tests to check whether each of our covariates has a significant effect in the model (14). The hypothesis tests of interest are expressed as follows:

for , where is the null hypothesis, is the alternative hypothesis, and is the th component of . From Corollary 1, we define the test statistic as

| (15) |

where is the entry of , and and are the th components of and , respectively. In (15), we estimate by (13) and assume that is a zero vector. Under the null hypothesis , the distribution of can be approximated by . Therefore, for a given significance level , we reject the null hypothesis if , where is the -th percentile point of . The fifth and sixth columns of Table 1 show the test results with for our real dataset, where “1” indicates that the null hypothesis was rejected. In addition, the seventh and eighth columns show the associated p-values. From the fifth column of Table 1, which had many “1”, we can see that for negative returns, many dummy variables for year, month, and day of the week cannot be ignored as covariates for predicting the EVI. The sixth column of Table 1 implies that for positive returns, the effects of days of the week may be meaningless in our model (14). For both negative and positive returns, the dummy variables for year may be particularly important as covariates because their p-values were quite small.

Third, we predict the random effects in (14). Since citing all for is too voluminous, we only present the results for the major cryptocurrencies studied in Gkillas and Katsiampa (2018), namely Bitcoin, Ethereum, Ripple, Litecoin and Bitcoin Cash. Table 2 shows the predicted random effects for these five cryptocurrencies. Based on the model (14), the results in Table 2 mean that Bitcoin Cash had the largest EVI among the five stocks for both negative and positive returns. Thus, Bitcoin Cash may have been the riskiest of the above five cryptocurrencies in the sense that its returns were the most heavily distributed.

| Bitcoin | Ethereum | Ripple (XRP) | Litecoin | Bitcoin Cash | ||

|---|---|---|---|---|---|---|

| Negative | ||||||

| Positive | ||||||

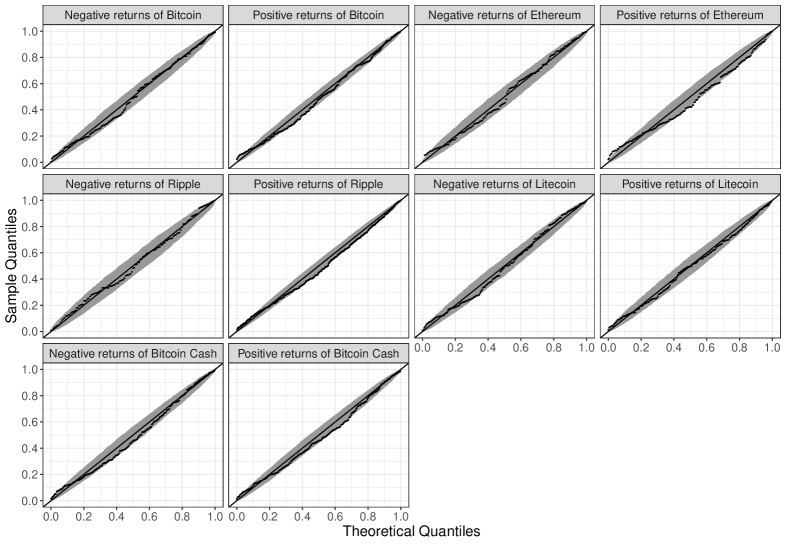

Finally, we evaluate the goodness of fit of the model using the similar method appeared in Wang and Tsai (2009). From (9), the distribution of

conditional on and can be approximated by the uniform distribution on (see, Wang and Tsai 2009). Let be with , and . Then, for each , can be considered as a uniformly distributed random sample. Figure 1 shows the Q-Q plots of against equally divided points on for the five representative cryptocurrencies discussed above, where the bands are the 95% pointwise confidence bands constructed by the function geom_qq_band() with bandType="boot" in the package qqplotr (see, Almeida, Loy, and Hofmann 2018) within R. In each panel of Figure 1, the points were approximately aligned on a straight line with an intercept of 0 and slope of 1. This means that our model fits both negative and positive returns well for each of the five stocks.

4.4 Comparison

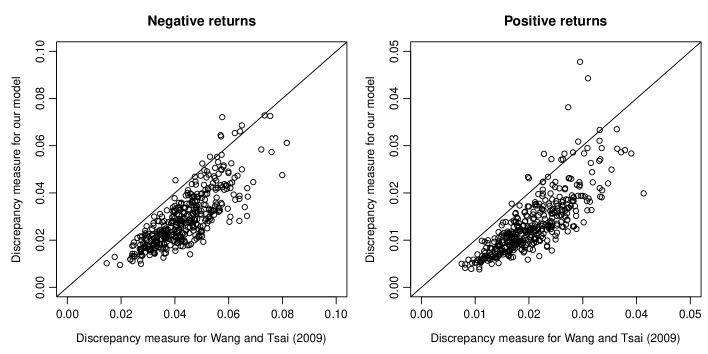

In this section, we compare our model (14) with the method proposed by Wang and Tsai (2009). The latter competitor applies the EVI regression to data stock by stock. To compare these two methods, we iteratively compute the following 2-fold cross-validation criterion. At each iteration step, the returns for each stock are randomly divided into training and test data. As a criterion, we adapt the discrepancy measure proposed by Wang and Tsai (2009). For each of our model and the model of Wang and Tsai (2009), the discrepancy measure for each stock is computed from the test data using the estimated parameters, predicted random effects, and selected thresholds from the training data. Note that our model and the model proposed by Wang and Tsai (2009) use the same threshold exceedances (see, Section 2.4). After 100 iterations, we take the average of the discrepancy measures obtained for each stock. Each panel of Figure 2 shows the scatter plot of averaged discrepancy measures between our model and the model of Wang and Tsai (2009) for the 413 stocks. We can see from the results that the discrepancy measures of our model are smaller than those of Wang and Tsai (2009) for many stocks for both negative and positive returns, suggesting that our model provides better performance.

5 Discussion

In this study, we investigated the MEM for the EVI in the Pareto-type distribution for unit-level data. In other words, this study incorporated the method of SAE into EVT. As explained in Section 2, the parameters of the proposed model were estimated by the maximum likelihood method, and the random effects were predicted by the conditional mode. In Section 3, we established the asymptotic normality of the estimator. Together with the simulation studies in Section 1 of our supplementary material and real data example in Section 4, we can conclude the following advantages of using the MEM for EVI regression. First, in extreme value analysis, the sample size is generally small for some areas because of the peak over threshold. However, as described in Sections 1.3 of our supplementary material and Section 4, the common parametric part in the MEM can adequately guide the differences between areas. Interestingly, the “borrowing of strength” of the MEM is effective for EVI regression because it improves the bias and variance of the peak-over-threshold method (see, Section 1.3 of our supplementary material). Thus, the proposed model provides a significantly efficient tool that is an alternative to direct estimates from each area. Second, the proposed model is effective even when the number of areas is large. This is shown theoretically in Theorem 1 of Section 3.2, while Section 1.2 of our supplementary material proves this property numerically. Furthermore, as a result supporting the use of the proposed model, in Section 1.3 of our supplementary material, the extreme value analysis using the MEM provided more reasonable results than the fully parametric model. Finally, in extreme value analysis, general EVI estimators sometimes have a large bias resulting from the approximation of the peak over threshold. However, from Theorem 1, we found that when is large, the proposed estimator can be designed to reduce the bias while maintaining its stable variance. This is a somewhat surprising result, because a large number of areas typically leads to poor performance of the estimator in the fully parametric model. Thus, the MEM may be one of the effective approaches to overcome the severe problem of bias in extreme value analysis.

We describe future research using the MEM for extreme value analysis. The first work of interest is the development of models such as the simultaneous autoregressive model and the conditional autoregressive model to explain the dependencies between areas (see, Rao and Molina 2015 and references therein). Such models may help provide better estimates in some applications, including spatial data. Second, it may be feasible to extend the MEM to other EVT models such as the generalized extreme value distribution and GPD. In this study, the methods derived from these models and their theoretical results were not clarified and thus require future detailed study. Third, we expect to extend the MEM to extreme quantile regression (see, Wang, Li, and He 2012; Wang and Li 2013). Finally, although this paper studied the MEM with Gaussian random effects, it may be important to consider other distributions of the random effects (see, Section 9.2 of Wu 2009 and Yavuz and Arslan 2018). The development of the MEM with non-Gaussian random effects is also an interesting future work in EVT.

Acknowledgments

We would like to thank Editage (https://www.editage.jp/) for the English language editing.

Data availability statement

The dataset analyzed during this study was processed from those obtained from CoinMarketCap (see, https://coinmarketcap.com/). This dataset and the associated code of R (https://www.r-project.org/) are available from the corresponding author on reasonable request.

References

- [1] Almeida, S., A. Loy, and H. Hofmann. 2018. ggplot2 compatible quantile-quantile plots in R. The R Journal 10 (2):248-61. doi:10.32614/RJ-2018-051.

- [2] Bates, D., M. Mächler, B. Bolker, and S. Walker. 2015. Fitting linear mixed-effects models using lme4. Journal of Statistical Software 67 (1):1-48. doi:10.18637/jss.v067.i01.

- [3] Battese, G., R. Harter, and W. Fuller. 1988. An error-components model for prediction of county crop areas using survey and satellite data. Journal of the American Statistical Association 83 (401):28-36. doi:10.1080/01621459.1988.10478561.

- [4] Beirlant, J., and Y. Goegebeur. 2003. Regression with response distributions of Pareto-type. Computational Statistics & Data Analysis 42 (4):595-619. doi:10.1016/S0167-9473(02)00120-2.

- [5] Beirlant, J., Y. Goegebeur, J. Teugels, and J. Segers. 2004. Statistics of extremes: Theory and applications. New Jersey: John Wiley & Sons.

- [6] Bottolo, L., G. Consonni, P. Dellaportas, and A. Lijoi. 2003. Bayesian analysis of extreme values by mixture modeling. Extremes 6 (1):25-47. doi:10.1023/A:1026225113154.

- [7] Broström, G., and H. Holmberg. 2011. Generalized linear models with clustered data: Fixed and random effects models. Computational Statistics & Data Analysis 55 (12):3123-34. doi:10.1016/j.csda.2011.06.011.

- [8] Davison, A. C., and R. L. Smith. 1990. Models for exceedances over high thresholds. Journal of the Royal Statistical Society: Series B (Methodological) 52 (3):393-425. doi:10.1111/j.2517-6161.1990.tb01796.x.

- [9] de Carvalho, M., R. Huser, and R. Rubio. 2023. Similarity-based clustering for patterns of extreme values. Stat 12 (1):e560. doi:10.1002/sta4.560.

- [10] Dempster, A. P., D. B. Rubin, and R. K. Tsutakawa. 1981. Estimation in covariance components models. Journal of the American Statistical Association 76 (374):341-53. doi:10.1080/01621459.1981.10477653.

- [11] Diallo, M., and J. N. K. Rao. 2018. Small area estimation of complex parameters under unit-level models with skew-normal errors. Scandinavian Journal of Statistics 45 (4):1092-116. doi:10.1111/sjos.12336.

- [12] Dupuis, D. J., S. Engelke, and L. Trapin. 2023. Modeling panels of extremes. The Annals of Applied Statistics 17 (1):498-517. doi:10.1214/22-AOAS1639.

- [13] Fisher, R., and L. Tippett. 1928. Limiting forms of the frequency distribution of the largest or smallest member of a sample. Mathematical Proceedings of the Cambridge Philosophical Society 24 (2):180-90. doi:10.1017/S0305004100015681.

- [14] Gkillas, K., and P. Katsiampa. 2018. An application of extreme value theory to cryptocurrencies. Economics Letters 164:109-11. doi:10.1016/j.econlet.2018.01.020.

- [15] Gumbel, E. J. 1958. Statistics of extremes. West Sussex: Columbia University Press.

- [16] Hall, P. 1982. On some simple estimates of an exponent of regular variation. Journal of the Royal Statistical Society: Series B (Methodological) 44 (1):37-42. doi:10.1111/j.2517-6161.1982.tb01183.x.

- [17] Hill, B. M. 1975. A simple general approach to inference about the tail of a distribution. The Annals of Statistics 3 (5):1163-74. doi:10.1214/aos/1176343247.

- [18] Jiang, J. 2007. Linear and generalized linear mixed models and their applications. New York: Springer.

- [19] Jiang, J. 2017. Asymptotic analysis of mixed effects models: Theory, applications, and open problems. Boca Raton, Florida: CRC Press.

- [20] Jiang, J., M. P. Wand, and A. Bhaskaran. 2022. Usable and precise asymptotics for generalized linear mixed model analysis and design. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 84 (1):55-82. doi:10.1111/rssb.12473.

- [21] Longin, F., and G. Pagliardi. 2016. Tail relation between return and volume in the US stock market: An analysis based on extreme value theory. Economics Letters 145:252-4. doi:10.1016/j.econlet.2016.06.026.

- [22] Ma, Y., Y. Jiang, and W. Huang. 2019. Tail index varying coefficient model. Communications in Statistics - Theory and Methods 48 (2):235-56. doi:10.1080/03610926.2017.1406519.

- [23] Magnus, J. R., and H. Neudecker. 1988. Matrix differential calculus with applications in statistics and econometrics. New Jersey: John Wiley & Sons.

- [24] Molina, I., P. Corral, and M. Nguyen. 2022. Estimation of poverty and inequality in small areas: Review and discussion. TEST 31 (4):1143-66. doi:10.1007/s11749-022-00822-1.

- [25] Molina, I., and J. N. K. Rao. 2010. Small area estimation of poverty indicators. The Canadian Journal of Statistics 38 (3):369-85. https://www.jstor.org/stable/27896031.

- [26] Nie, L. 2007. Convergence rate of MLE in generalized linear and nonlinear mixed-effects models: Theory and applications. Journal of Statistical Planning and Inference 137 (6):1787-804. doi:10.1016/j.jspi.2005.06.010.

- [27] R Core Team. 2021. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing.

- [28] Rao, J. N. K., and I. Molina. 2015. Small area estimation. Hoboken, New Jersey: John Wiley & Sons.

- [29] Reiss, R. D., and M. Thomas. 2007. Statistical analysis of extreme values: With applications to insurance, finance, hydrology and other fields. Basel, Switzerland: Birkhäuser Basel.

- [30] Rohrbeck, C., and J. Tawn. 2021. Bayesian spatial clustering of extremal behavior for hydrological variables. Journal of Computational and Graphical Statistics 30 (1):91-105. doi:10.1080/10618600.2020.1777139.

- [31] Ruppert, D., M. P. Wand, and R. J. Carroll. 2003. Semiparametric regression. New York: Cambridge University Press.

- [32] Santner, T. J., and D. E. Duffy. 1989. The statistical analysis of discrete data. New York: Springer.

- [33] Sugasawa, S., and T. Kubokawa. 2020. Small area estimation with mixed models: A review. Japanese Journal of Statistics and Data Science 3 (2):693-720. doi:10.1007/s42081-020-00076-x.

- [34] Torabi, M. 2019. Spatial generalized linear mixed models in small area estimation. Canadian Journal of Statistics 47 (3):426-37. doi:10.1002/cjs.11502.

- [35] Wang, H. J., and D. Li. 2013. Estimation of extreme conditional quantiles through power transformation. Journal of the American Statistical Association 108 (503):1062-74. doi:10.1080/01621459.2013.820134.

- [36] Wang, H. J., D. Li, and X. He. 2012. Estimation of high conditional quantiles for heavy-tailed distributions. Journal of the American Statistical Association 107 (500):1453-64. doi:10.1080/01621459.2012.716382.

- [37] Wang, H., and C. L. Tsai. 2009. Tail index regression. Journal of the American Statistical Association 104 (487):1233-40. doi:10.1198/jasa.2009.tm08458.

- [38] Wu, L. 2009. Mixed effects models for complex data. Boca Raton, Florida: CRC Press.

- [39] Yavuz, F. G., and O. Arslan. 2018. Linear mixed model with Laplace distribution (LLMM). Statistical Papers 59 (1):271-289. doi:10.1007/s00362-016-0763-x.

Supplemental online material for

“Mixed effects models for extreme value index regression”

This supplementary material supports our main article entitled “Mixed effects models for extreme value index regression” and is organized as follows. Section 1 provides some Monte Carlo simulation studies to verify the finite sample performance of the model proposed in Section 2 of our main article. Section 2 describes the technical details of the proof of Theorem 1 in Section 3.2 of our main article. Note that we use many of the symbols defined in our main article.

1 Simulation

From Eq. (9) of our main article, we can approximate the distribution of conditional on , and by the exponential distribution, which belongs to the gamma distribution (see, Wang and Tsai 2009). Therefore, our estimator and predictor proposed in Section 2 of our main article can be easily implemented by using the function glmer() with family=Gamma(link="log") in the package lme4 (see, Bates et al. 2015) within the R computing environment (see, R Core Team 2021). In the function glmer(), when , the integral in the log-likelihood defined in Eq. (10) of our main article is approximated by the adaptive Gauss-Hermite quadrature, and and are then optimized by “bobyqa” and “Nelder Mead”, respectively.

In the following Sections 1.1-1.3, we investigate the performance of our estimator and predictor through some simulation studies using the above package.

1.1 Practicality of asymptotic normality of the estimator

In this section, we illustrate the applicability of our asymptotic normality constructed in Corollary 1 of our main article to finite samples. This is positioned as a preliminary study for hypothesis testing on a real data example in Section 4 of our main article.

We simulate the dataset as follows. Let denote and set for and . First, we independently generate from the standard normal distribution or uniform distribution on . Note that in both covariate cases, has zero mean and unit variance. In the next step, we obtain an independent sample from with . Finally, for each and , we generate using a given conditional response distribution . The same data generation procedure will be used in Section 1.2. Here, to obtain , we use the Pareto distribution

| (16) |

and apply the nested error regression type model formulated as Eq. (7) of our main article with and , i.e.,

| (17) |

where . Let us denote the proposed estimator of by . Because the Pareto distribution (16) satisfies Eq. (12) of our main article with , the estimator does not have the asymptotic bias described in Remark 1 in Section 3.2 of our main article. Therefore, we do not use the thresholds , and thus the effective sample size for each is unchanged from .

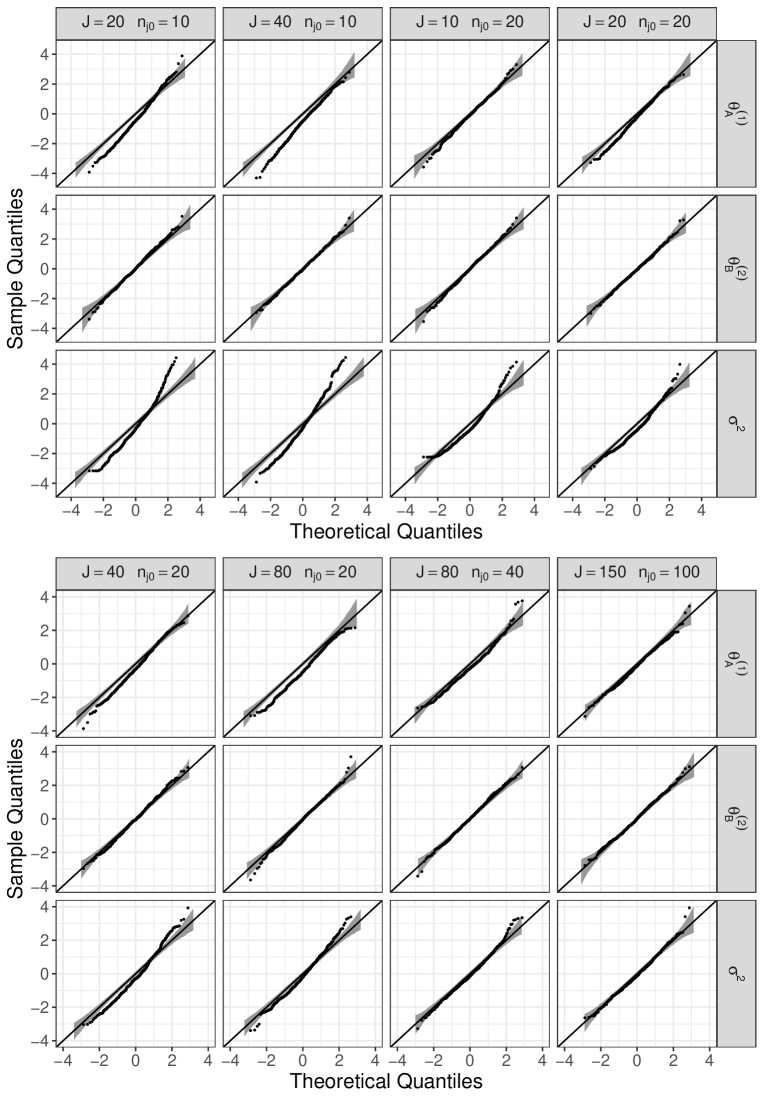

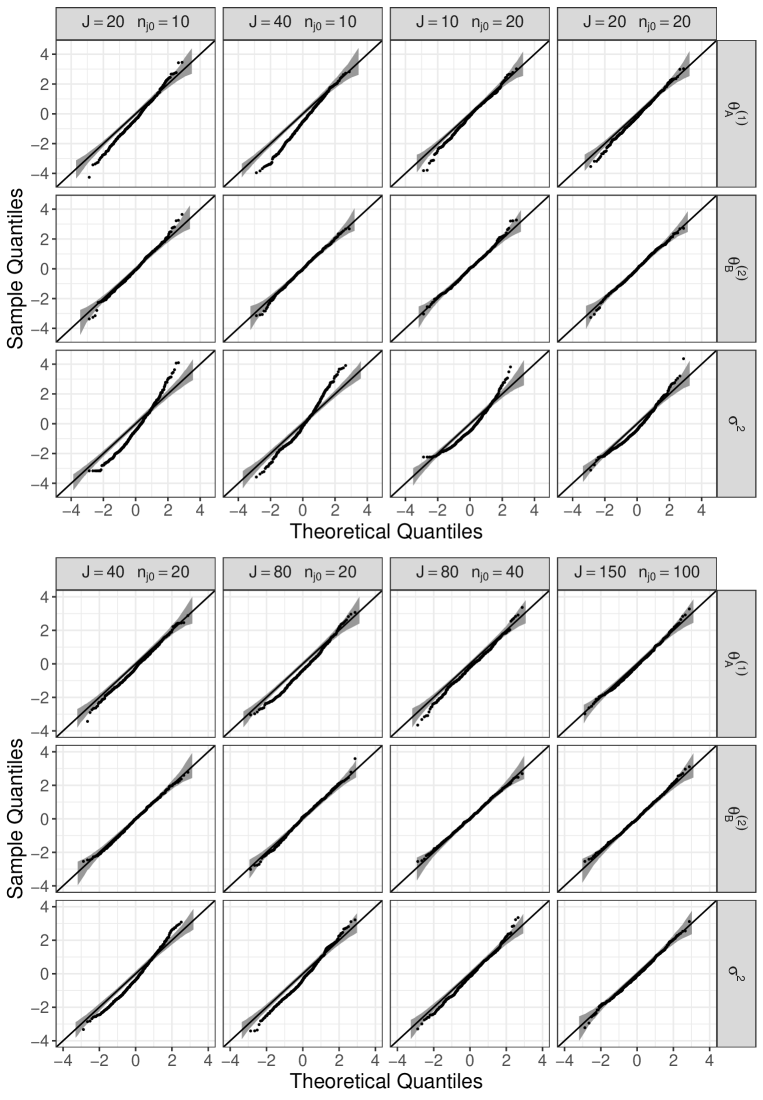

Under the above model setups, from Corollary 1 of our main article, , and are asymptotically distributed as . Note that this simulation setting satisfies in Corollary 1 of our main article. To obtain the empirical distributions of the above standardized estimators, we use 500 datasets and repeatedly estimate the parameters from each dataset. Figures 3 and 4 show the Q-Q plots for the obtained standardized estimates against for the normal covariate and uniform covariate, respectively. In these figures, varies by column as , , , , , , , and . Furthermore, the bands in each panel are the 95% pointwise confidence bands constructed by the function geom_qq_band() with bandType="boot" in the package qqplotr (see, Almeida, Loy, and Hofmann 2018) within R. If all generated are close to each other, may be estimated to be zero by glmer(). Thus, for and , the Q-Q plot contained several equal values for . Comparing Figures 3 and 4, the type of the distribution of did not significantly affect the results. We can see from Figures 3 and 4 that for and , the empirical distribution of the standardized estimators had heavier tails than , but this tendency disappeared with increasing and . In contrast to and , the Q-Q plot for was good for all pairs of . Consequently, these results reflect the claims of Corollary 1 of our main article.

1.2 Behavior of the estimator for numerous areas

In this section, we examine the numerical performance of the estimator for large . To obtain the dataset according to the procedure in Section 1.1, we use (17) and the following conditional distribution (a) or (b) instead of (16):

-

(a)

Student’s -distribution

with , where is a gamma function. For Eq. (12) of our main article, this distribution belongs to the Pareto-type distribution with .

-

(b)

Burr distribution

with , and . The Burr distribution satisfies Eq. (12) of our main article with .

For (a), we obtain a sample directly from the -distribution for a given non-integer degree of freedom. As described in Remark 1 of our main article, the proposed estimator is more biased for smaller .

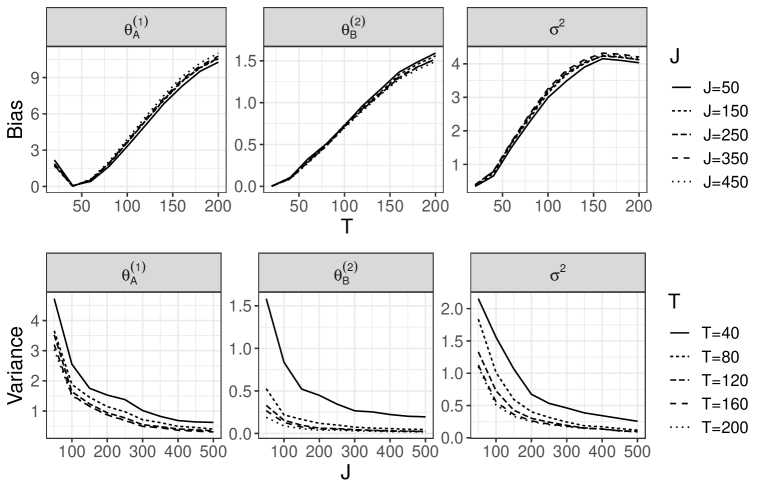

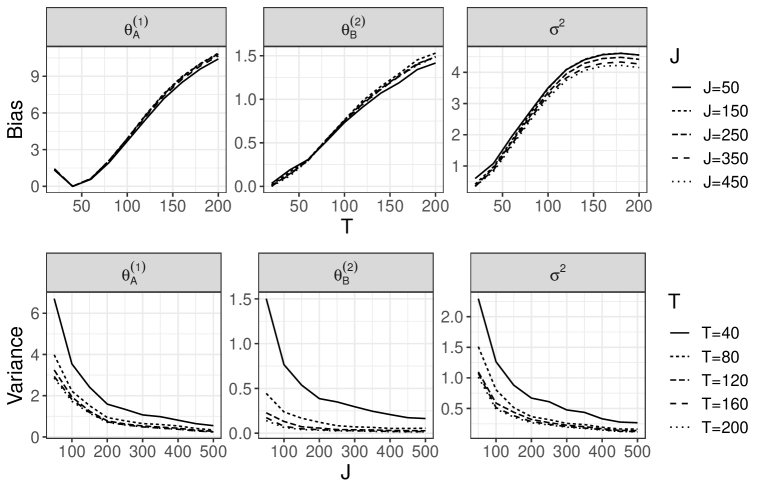

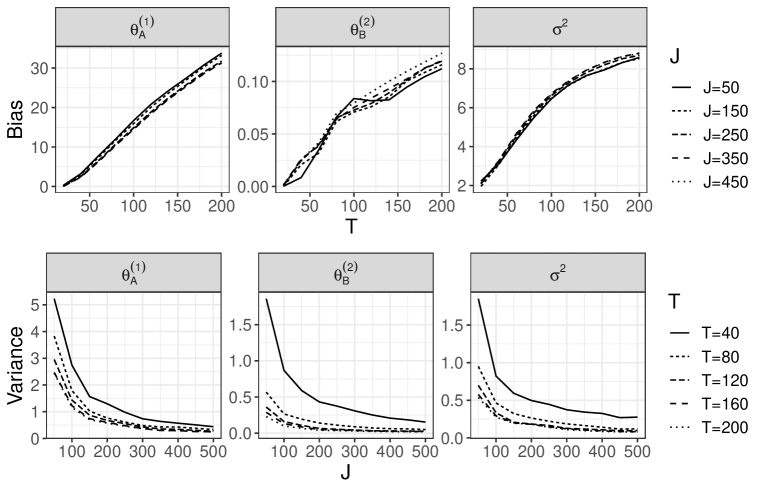

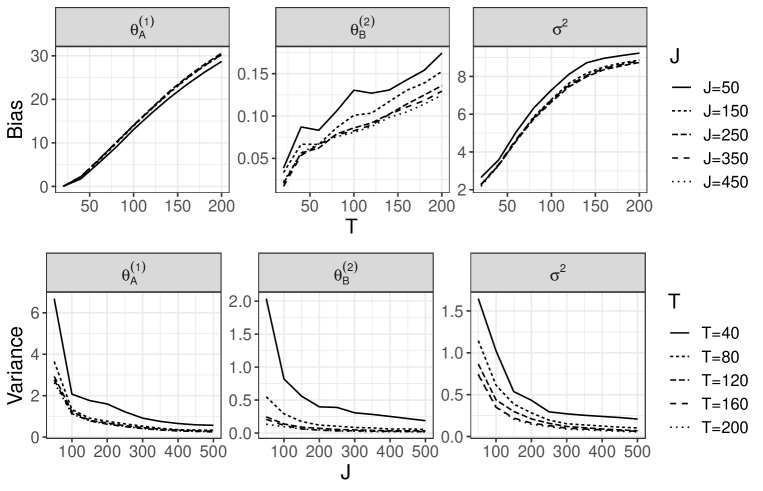

In advance, we generate the unit-level data with 500 areas and using the above procedure. Then, we use a part of the dataset according to the following rules. The number of areas is increased by a factor of 10 from 50 to 500. Furthermore, for the discrepancy measure described in Section 2.4 of our main article, we use the 10th to th largest responses in the th area as the candidates for the th threshold , where varies from 20 to 200 in increments of 20. Roughly speaking, a smaller means that higher thresholds are chosen. For each and , we obtain the estimates . Following the above rules, we iterate the estimation for 100 sets of unit-level data. Figures 5-8 show the sample squared bias and variance of the estimator for each and . The details can be found in the description of each figure. Overall, we can see that our estimator remained stable when was sufficiently large, even when was small. Based on the first row of each figure, our estimator then did not suffer from a large bias for large . Such results guarantee the considerations in Remark 2 of Section 3.2 of our main article. As described in Remark 1 of our main article, from the second row of each figure, the variance of was strongly dependent on both and when was small, while the variances of and were almost unaffected by .

1.3 Borrowing of strength for extremes

In this section, we compare our model, the fully parametric model defined in Eqs. (2) and (3) of our main article, and model proposed by Wang and Tsai (2009). The use of the model proposed by Wang and Tsai (2009) implies that areas are analyzed separately. To obtain the estimates for the latter two parametric models, we use the function glm() with family=Gamma(link="log") within R.

Unlike Sections 1.1 and 1.2, the dataset is simulated from the fully parametric model as follows. Let be divided into and set , , and . We generate from . To generate , we then combine the two distributions

| (18) |

and

| (19) |

where is described in detail in Section 4.1 of Wang and Tsai (2009). Then, the extreme value index is modeled as , where and the parameters are assigned the following two types. One is defined as the -th quantile of for and -th quantile of for , and the other is fixed as for and for . These two settings of are denoted by Normal type and Uniform type, respectively.

| Our model | Fully parametric model | Direct estimates | ||

| Normal type | ||||

| Bias | ||||

| Variance | ||||

| Uniform type | ||||

| Bias | ||||

| Variance | ||||

Without introducing thresholds, we estimate and by each of our model, the fully parametric model expressed as Eqs. (2) and (3) of our main article, and model proposed by Wang and Tsai (2009). In our model, estimates for are obtained by combining the estimator and predictor proposed in Section 2 of our main article. For the model proposed by Wang and Tsai (2009), different estimates of are obtained across the areas, but the mean of these is used as the estimate of . Under the above simulation settings, if the areas were analyzed separately, the estimates for the areas from (19) would have large bias and variance. Therefore, we are interested in whether these direct estimates can be improved by simultaneously analyzing the areas using our model. To verify this, we evaluate the sample bias and variance of the estimator for each method. Table 3 shows the results based on 500 sets of unit-level data, where the superscripts and in this table refer to the means for and for , respectively. According to Table 3, our model produced the most stable estimates of the three models. In particular, for , the variance of the estimates was dramatically improved using our model rather than the direct estimates. In addition, we can see that in extreme value analysis, the “borrowing of strength” of the mixed effects model also contributes to reducing the bias of the estimator. In the fully parametric model, the bias of the estimator was larger than that of the direct estimates. Meanwhile, even when the assumption of normality of the random effects in Eq. (4) of our main article was not satisfied, our model was still favorable.

2 Proof of theorems

We give the proof of Theorem 1 in Section 3.2 of our main article. For convenience, we introduce some new symbols:

-

•

, and , which are -dimensional vectors.

-

•

, and .

-

•

For each , we denote

where . In Eq. (10) of our main article, the approximated log-likelihood can be redefined as .

-

•

For any smooth function , we denote and . In particular, we denote and , where , and are part of . As a special case, for any smooth real-valued function of , we simply write and for .

-

•

For any column vector , we denote .

-

•

Let be the -diagonal matrix with

, where is the -dimensional vector with all elements equal to 1.

Let denote . For each , conditional on satisfies the following Lemma 1.

Lemma 1.

Suppose that (A3) and (A4) hold. Then, under given , as and .

Proof of Lemma 1.

We have

and

which converges to 0 as and from (A3). Accordingly, under given , as and ,

Thus, Lemma 1 is proved by combining this result with (A4). ∎

For each , we denote

| (20) |

where

which satisfies

and

In the following Lemmas 2 and 3, we reveal the asymptotic properties of .

Lemma 2.

Suppose that (A1)-(A4) and (A6) hold. Then, under given , as and ,

Proof of Lemma 2.

For each , can be written as

| (21) | ||||

| (22) |

In the following Steps 1 and 2, we derive the asymptotic distributions of (21) and (22) conditional on . By combining Lemma 1 and these steps, Lemma 2 holds from Slutsky’s theorem.

Step 1.

Step 2.

For (21), we show that under given ,

| (24) |

as and . Because (24) is the sum of conditionally independent and identically distributed random vectors, we can apply the central limit theorem. Obviously, the conditional expectation of (24) is . Moreover, we obtain

| (25) | |||

| (26) |

From Step 1, (26) converges to as and . Therefore, we show that (25) converges to as and . From Eq. (9) of our main article, we have

| (27) |

uniformly for all as and . In addition, from (A2), we have

| (28) |

uniformly for all as and . Now, (25) can be written as

∎

Lemma 3.

Suppose that (A1)-(A4) hold. Then, under given , as and ,

Proof of Lemma 3.

For each , can be written as

We show that under given ,

| (29) |

as and . From Eq. (9) of our main article, we have

and

uniformly for all as and . Now, (29) has the form of the sum of conditionally independent and identically distributed random vectors, and and are independent. These facts yield that for (29),

and

as and , where is defined in (28). Therefore, (29) holds. By combining Lemma 1 and (29), we then obtain Lemma 3. ∎

Lemma 4.

Suppose that (A1)-(A4) and (A6) hold. Then, as and , uniformly for all .

Proof of Lemma 4.

We show that under given , uniformly for all and . By the Taylor expansion of , we have

for any and . From Lemmas 2 and 3, we have that for any , there exists a large constant such that for any and ,

| (30) |

We assume that for all , is the positive definite matrix, which implies that is the strictly convex function. Therefore, is the unique global minimizer of . Then, we obtain Lemma 4 (see, the proof of Theorem 1 of Fan and Li 2001). ∎

To show Lemma 6 below, we use the result of the following Laplace approximation. The proof is described in (2.6) of Tierney, Kass, and Kadane (1989) and Appendix A of Miyata (2004).

Lemma 5.

For any smooth functions , and ,

where is the minimizer of , is the vector with the th entry equal to , is the matrix with the entry equal to the entry of , and denotes the array with the entry .

Lemma 6.

Suppose that (A1)-(A4) and (A6) hold. Then, as and ,

| (31) | |||

| (32) |

and

| (33) | ||||

where

for and .

Proof of Lemma 6.

For each , can be written as

and

where and . For each , and , we denote the th component of as . From Lemma 5, we have

| (34) | ||||

for and . In the following Steps 1-3, we evaluate based on (34).

Step 1.

Step 2.

Step 3.

∎

Lemma 7.

Suppose that (A1)-(A6) hold. Then, as and ,

Proof of Lemma 7.

Let denote

From Lemma 6, we then have

| (36) |

From the reproductive property of the normal distribution, the first term on the right-hand side of (36) converges to in distribution as . From the proof of Lemma 2, for the second term of the right-hand side of (36), we have

and , which implies that the sum of and the second term on the right-hand side of (36) converges to in probability as and . Thus, Lemma 7 is shown. ∎

Lemma 8.

Suppose that (A1)-(A6) hold. Then, as and ,

Proof of Lemma 8.

We denote

From Lemma 6, we have

| (37) |

Now, the right-hand side of (37) can be written as

| (38) | |||

| (39) |

Similar to the proof of Lemma 7, (39) converges to in probability as and . Similar to Lemma 2, for (38), we have that under given ,

as and . Therefore, (38) is the weighted sum of independent and asymptotically identically distributed random vectors, which can be applied the weighted central limit theorem (see, Weber 2006). As a result, (38) converges to in distribution as and . Thus, the proof of Lemma 8 is completed. ∎

Lemma 9.

Suppose that (A1)-(A6) hold. Then, as and ,

Proof of Lemma 9.

Proposition 1.

Suppose that (A1)-(A6) hold. Then, as and ,

Proof of Proposition 1.

Proposition 2.

Suppose that (A1)-(A6) hold. Then, as and ,

Proof of Proposition 2.

Proof of Theorem 1 in Section 2.3 of our main article.

For any , the Taylor expansion of around yields that

From Propositions 1 and 2, for any , there exists a large constant such that

| (43) |

as and . We assume that for all , is the positive definite matrix, which implies that is the strictly convex function. Therefore, is the unique global maximizer of . Then, (43) implies (see, the proof of Theorem 1 of Fan and Li 2001). Because is the global maximizer of , we have . From the Taylor expansion of , we have

Therefore, by applying Propositions 1 and 2, we obtain Theorem 1 of our main article. ∎

References

- [1] Almeida, S., A. Loy, and H. Hofmann. 2018. ggplot2 compatible quantile-quantile plots in R. The R Journal 10 (2):248-61. doi:10.32614/RJ-2018-051.

- [2] Bates, D., M. Mächler, B. Bolker, and S. Walker. 2015. Fitting linear mixed-effects models using lme4. Journal of Statistical Software 67 (1):1-48. doi:10.18637/jss.v067.i01.

- [3] Fan, J., and R. Li. 2001. Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association 96 (456):1348-60. doi:10.1198/016214501753382273.

- [4] Miyata, Y. 2004. Fully exponential Laplace approximations using asymptotic modes. Journal of the American Statistical Association 99 (468):1037-49. doi:10.1198/016214504000001673.

- [5] Nie, L. 2007. Convergence rate of MLE in generalized linear and nonlinear mixed-effects models: Theory and applications. Journal of Statistical Planning and Inference 137 (6):1787-804. doi:10.1016/j.jspi.2005.06.010.

- [6] R Core Team. 2021. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing.

- [7] Tierney, L., R. E. Kass, and J. B. Kadane. 1989. Fully exponential Laplace approximations to expectations and variances of nonpositive functions. Journal of the American Statistical Association 84 (407):710-6. doi:10.1080/01621459.1989.10478824.

- [8] Wang, H., and C. L. Tsai. 2009. Tail index regression. Journal of the American Statistical Association 104 (487):1233-40. doi:10.1198/jasa.2009.tm08458.

- [9] Weber, M. 2006. A weighted central limit theorem. Statistics & Probability Letters 76 (14):1482-7. doi:10.1016/j.spl.2006.03.007.