Mixed geometry information regularization for image multiplicative denoising

Abstract

This paper focuses on solving the multiplicative gamma denoising problem via a variational model. Variation-based regularization models have been extensively employed in a variety of inverse problems in image processing. However, designing sufficient geometric priors and efficient algorithms remains difficult. To overcome these issues, we propose a mixed geometry information model that incorporates an area term and a curvature term as prior knowledge. In addition to effectively removing multiplicative noise, our model preserves edges and prevents staircasing effects. Meanwhile, to address the challenges stemming from the nonlinearity and non-convexity inherent in higher-order regularization, we propose an efficient additive operator splitting (AOS) algorithm and a scalar auxiliary variable (SAV) algorithm. The unconditional stability of these algorithms enables us to use large time steps, and the SAV method shows higher computational accuracy in our model. We employ the second-order SAV algorithm to further speed up the calculation while maintaining accuracy. Extensive numerical experiments demonstrate the effectiveness and efficiency of the model and algorithms: the proposed model better preserves features and texture without generating false information.


29 April 2024

Keywords: multiplicative denoising, mixed geometry information, additive operator splitting, scalar auxiliary variable

1 Introduction

Multiplicative noise, commonly referred to as speckle noise, is prevalent in synthetic aperture radar (SAR) images [1, 2], laser images [3], ultrasound images [4], and tomographic images [5]. It destroys almost all of the original information, so multiplicative noise removal is an urgent task in real imaging systems. For a mathematical description of such noise, we consider a clean image $u:\Omega\to\mathbb{R}$, where $\Omega$ is a bounded open set in $\mathbb{R}^2$ with a Lipschitz boundary. Let $\eta$ represent the multiplicative noise; then the degradation process producing the noisy image $f$ can be expressed as

$$f = u\,\eta. \tag{1}$$

In this study, we focus on the case in which $\eta$ follows the gamma distribution [6], which is commonly encountered in SAR images. Specifically, $\eta$ has mean $1$ and variance $1/L$. Its probability density function is

$$g(\eta) = \frac{L^{L}}{\Gamma(L)}\,\eta^{L-1} e^{-L\eta}\,\mathbf{1}_{\{\eta\ge 0\}}, \tag{2}$$

where $\mathbf{1}_{\{\eta\ge 0\}}$ denotes the characteristic function of the set $\{\eta\ge 0\}$. Unlike additive noise, multiplicative noise exhibits coherence and non-Gaussian properties [7], which causes traditional additive denoising models to fail and makes the problem more challenging.
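As an illustration of the degradation process, the following minimal sketch simulates multiplicative gamma noise with unit mean and variance $1/L$. The function name `add_gamma_noise`, the constant test image, and the choice $L=4$ are assumptions made for this example only.

```python
import numpy as np

def add_gamma_noise(u, L, rng=None):
    """Return (f, eta) with f = u * eta and eta ~ Gamma(shape=L, scale=1/L)."""
    rng = np.random.default_rng() if rng is None else rng
    # shape=L and scale=1/L give mean 1 and variance 1/L
    eta = rng.gamma(shape=L, scale=1.0 / L, size=u.shape)
    return u * eta, eta

# Hypothetical test image: a constant patch of intensity 100
rng = np.random.default_rng(0)
u_clean = np.full((256, 256), 100.0)
f_noisy, eta = add_gamma_noise(u_clean, L=4, rng=rng)
print(eta.mean(), eta.var())  # close to 1 and 1/L
```

A smaller $L$ gives a larger noise variance, so $L=1$ corresponds to the heaviest corruption in this parameterization.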

In the literature, various multiplicative denoising methods have been proposed, including variational methods [8, 9, 10, 11], partial differential equation (PDE) methods [12, 13], and non-local filtering methods [14, 15, 16, 17]. Among these, variation-based models are renowned for their ability to incorporate prior information and for their rigorous theoretical guarantees, and they have attracted significant attention [18, 8, 9, 19, 20]. A classic model in this category is the Rudin, Lions, and Osher (RLO) model [18]. Notably, RLO uses total variation (TV) as a regularizer, which allows discontinuous solutions. It can effectively remove noise inside regions while preserving boundaries. However, the model considers only the statistical information of the mean and variance, which limits its denoising capability. To address this limitation, Aubert and Aujol [8] proposed the AA model, which combines the TV regularizer with a modified fidelity term derived from maximum a posteriori estimation.

While the classic TV regularizer and its variants can effectively remove noise, they often exhibit defects such as staircasing effects, loss of corners, and contrast reduction [21, 22, 23, 24]. In response to these limitations, numerous curvature-based models have been proposed. Curvature serves as a crucial tool for characterizing curves and surfaces in image processing. Models incorporating such priors can enforce continuity constraints on the solution space, allowing jumps to be preserved and the aforementioned deficiencies to be mitigated. Moreover, because they encode geometric information, curvature-based models also perform well in protecting geometric structures of the image, such as corners [25, 26, 27, 28, 29].

Besides curvature-based models, denoising can also be achieved by minimizing the area of the image surface [30, 31, 32, 33]. Sapiro et al. [30] first minimized the surface area to penalize the roughness of the solution, which avoids smoothing the edges while denoising. Yezzi [31] proposed a modified momentum model from a non-variational perspective; the corresponding model can flatten out oscillations while protecting edges.

In summary, compared with TV-norm based models, geometry-based models can effectively remove multiplicative noise while protecting image features. However, both types of geometry-based models suffer from limitations. For example, a model based only on surface area preserves edges and contours but also brings problems such as contrast reduction and staircasing effects. A model based solely on curvature avoids these problems, but it cannot protect the edges of objects and makes the boundaries too smooth [34].

Then it is natural to consider a model that mixes geometric information to compensate for their shortcomings. Here we use a multiplicative structure similar to Euler’s elastica to balance their effects. We take the minimal surface area term as the main denoising term, which has excellent edge protection ability and low computational cost. However, it is accompanied by unnatural artifacts such as the staircasing effects. We combine the curvature term with it to alleviate this phenomenon. In addition, we incorporate the gray level indicator to perform adaptive multiplicative denoising.

To sum up, by considering both curvature and surface area, the proposed model not only possesses strong denoising capabilities but also preserves the geometric information of images, such as texture, edges, and corners. However, the integration of curvature and surface area results in a high-order nonlinear functional, which poses challenges for numerical solution. Related models frequently employ fast algorithms such as the augmented Lagrangian method (ALM) and the primal-dual method to expedite computations. Nevertheless, such approaches require numerous multipliers to be selected manually, which adds a parameter tuning burden [35, 36, 37, 38, 39]. Addressing these challenges requires an efficient and accurate numerical algorithm for minimizing the energy functional. To maintain equivalence with the original problem during the iterations, we first employ the additive operator splitting (AOS) algorithm to solve the gradient flow. The AOS algorithm is a classic algorithm in image processing and is unconditionally stable. However, when applied to our model, it sometimes leads to computational inaccuracies. In recent years, Shen et al. [40] have proposed the scalar auxiliary variable (SAV) method to speed up the solution of gradient flow problems. It is also unconditionally stable and iteratively minimizes the original energy functional without modifying or relaxing the initial problem. We therefore use the first-order and second-order SAV algorithms to solve our model.

The main contributions of our work lie in the following three aspects:

• We propose an adaptive multiplicative denoising model incorporating mixed geometry information. We mix the area term and the curvature term to protect edges and mitigate staircasing effects, respectively. Furthermore, tailored to the coherence characteristics of multiplicative noise, we introduce a gray level indicator to perform stronger denoising in areas with high signal intensity.

• To overcome the numerical challenges inherent in solving nonlinear models, we utilize the AOS and SAV algorithms to expedite the conventional gradient flow method. These algorithms exhibit unconditional stability and unconditional energy stability, respectively. Compared with the AOS algorithm, the SAV algorithm maintains accuracy better in our model.

• Extensive numerical experiments demonstrate that the proposed model effectively removes noise while preserving the geometric features of the original image, including corners and edges.

The rest of this paper is organized as follows. We propose a model based on mixed geometry information regularization in Sect. 2. In Sect. 3 we derive the gradient flow corresponding to the mixed geometry information model. In Sect. 4, the unconditionally stable algorithms based on AOS and SAV are proposed. Sect. 5 is devoted to numerical experiments. In Sect. 6, we summarize the whole paper.

2 Adaptive Minimization Model Based on Mixed Geometry Information

2.1 The Comparison between Single Geometric Information and Mixed Geometric Information

Among traditional variational denoising methods, regularization models based on geometric information exhibit superior performance. Models with TV regularization or minimal surface regularization protect edges well, but they produce undesirable effects such as loss of image contrast [23]. In contrast, curvature-based models effectively maintain contrast, but their edge protection ability is reduced and their computational overhead is several times higher [27, 41]. Models based on a single type of geometric information thus have both advantages and disadvantages. A natural idea is therefore to design a mixed geometric model that combines their advantages and compensates for their respective shortcomings.

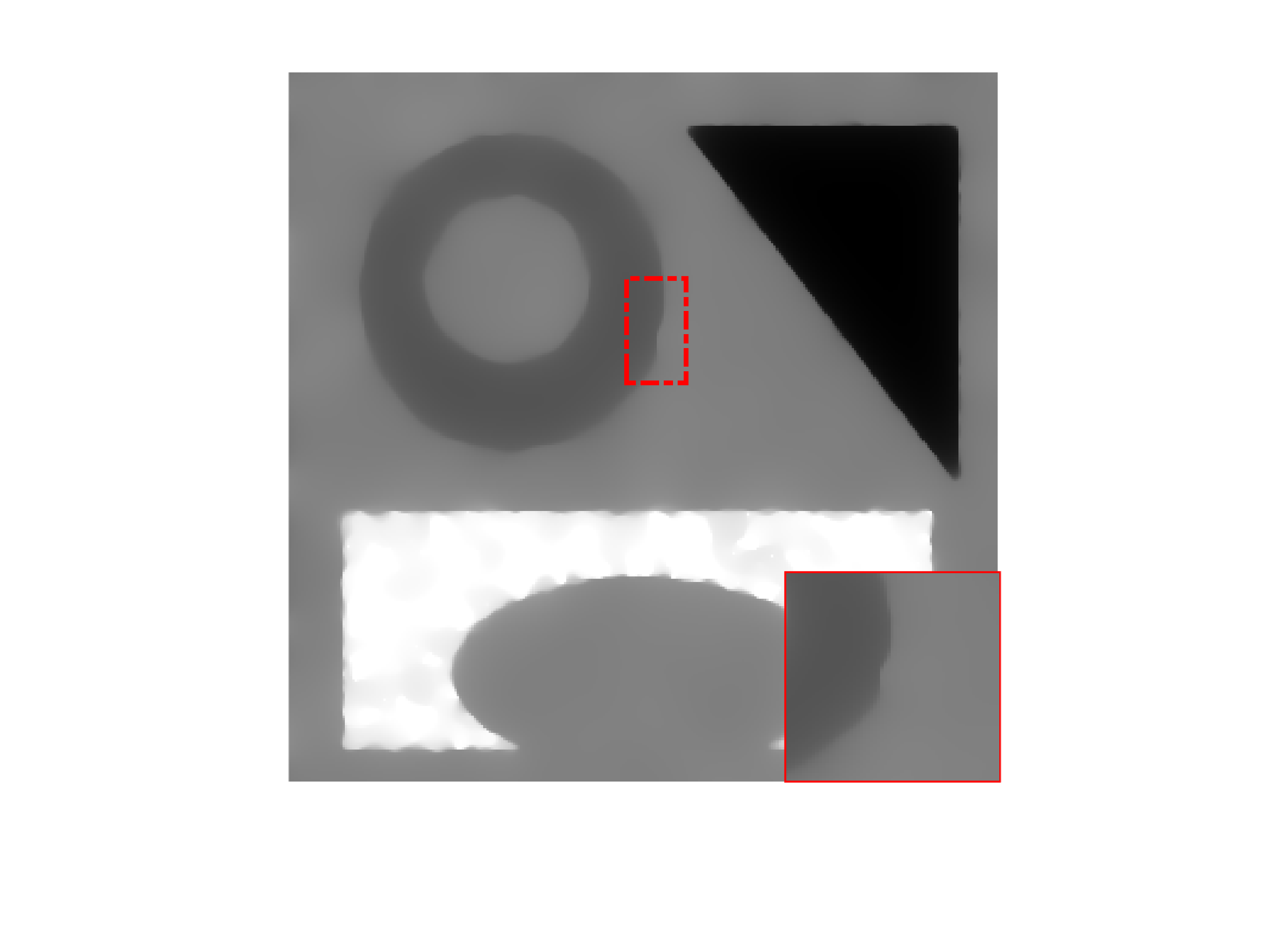

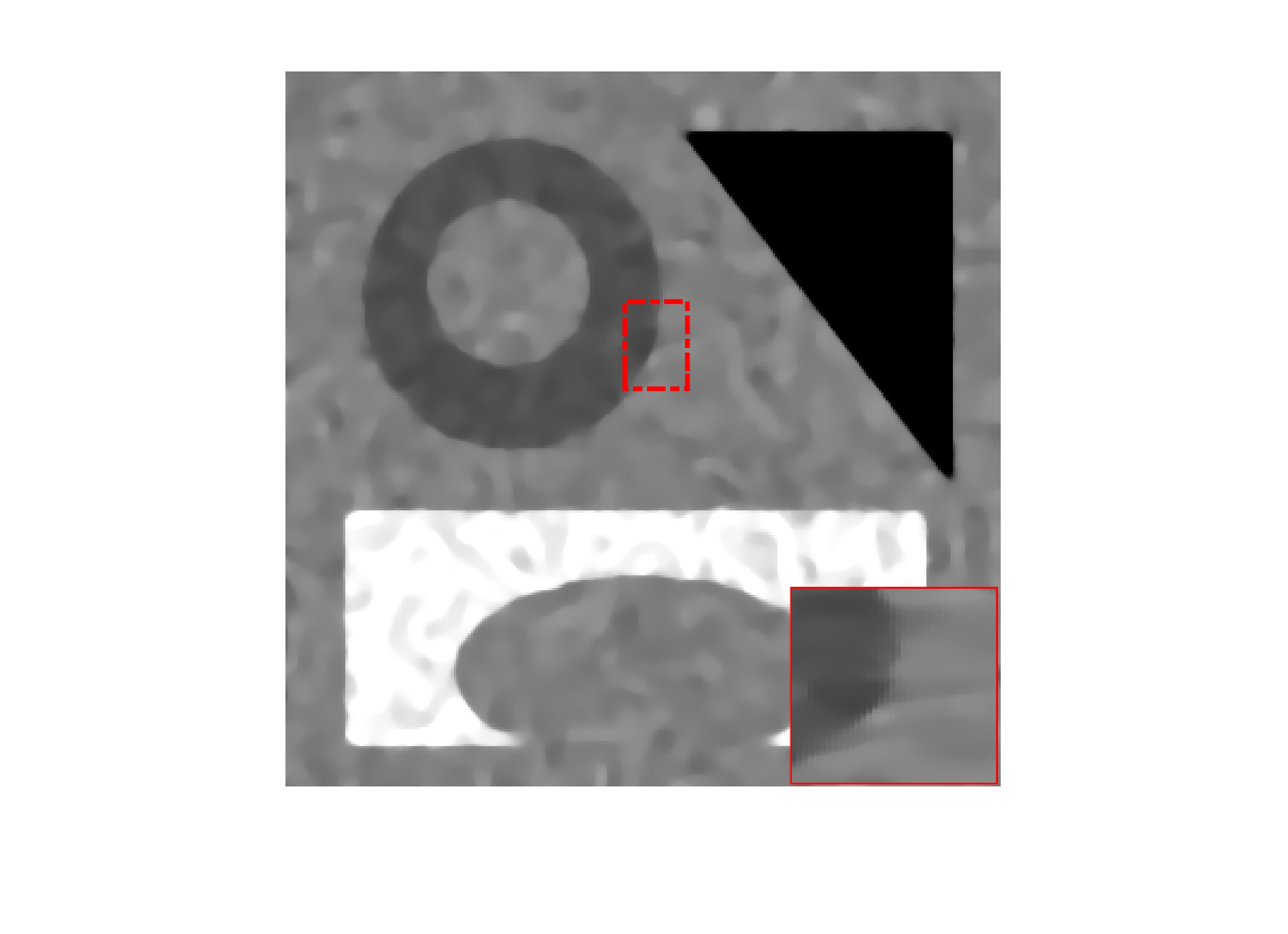

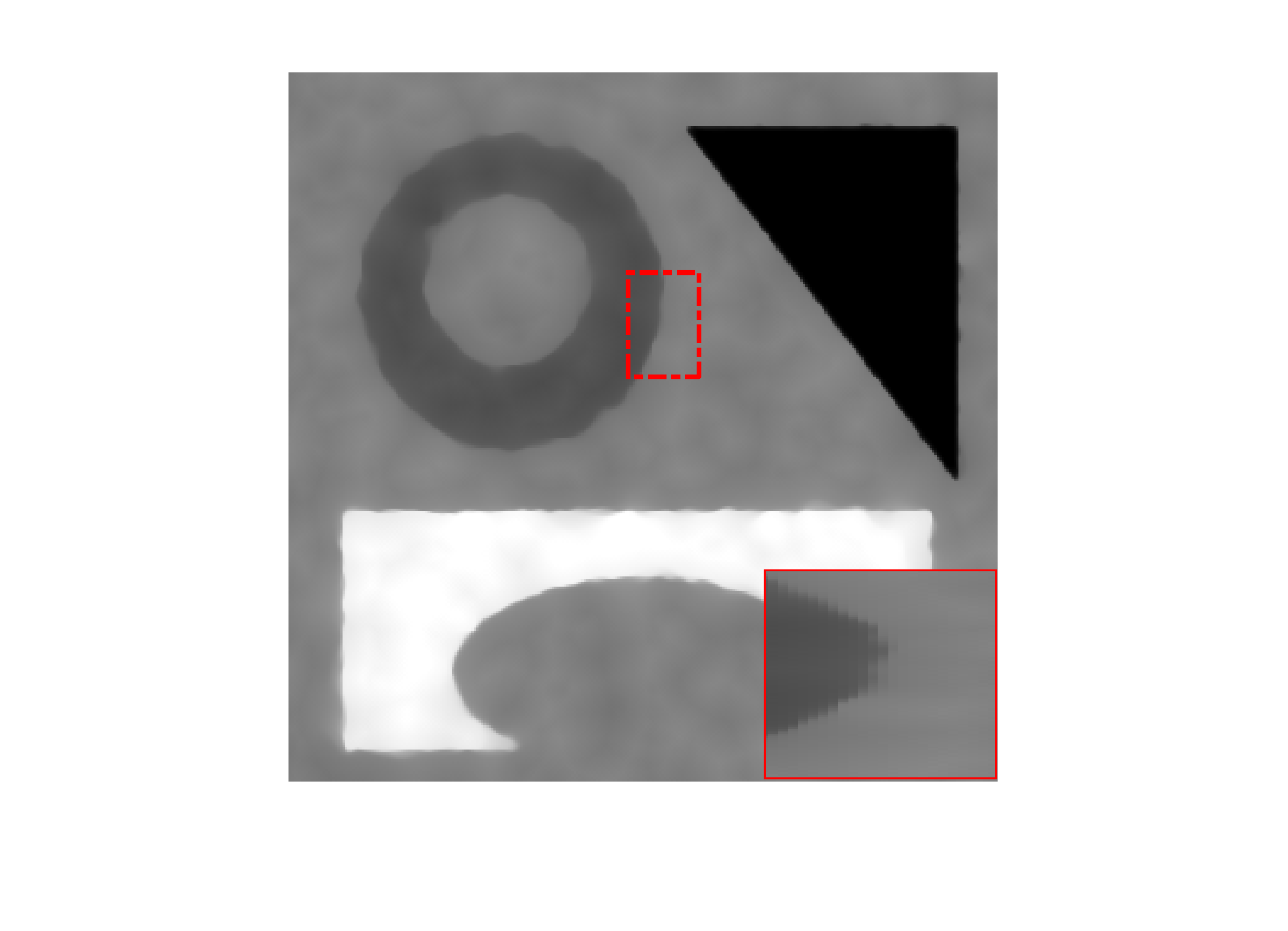

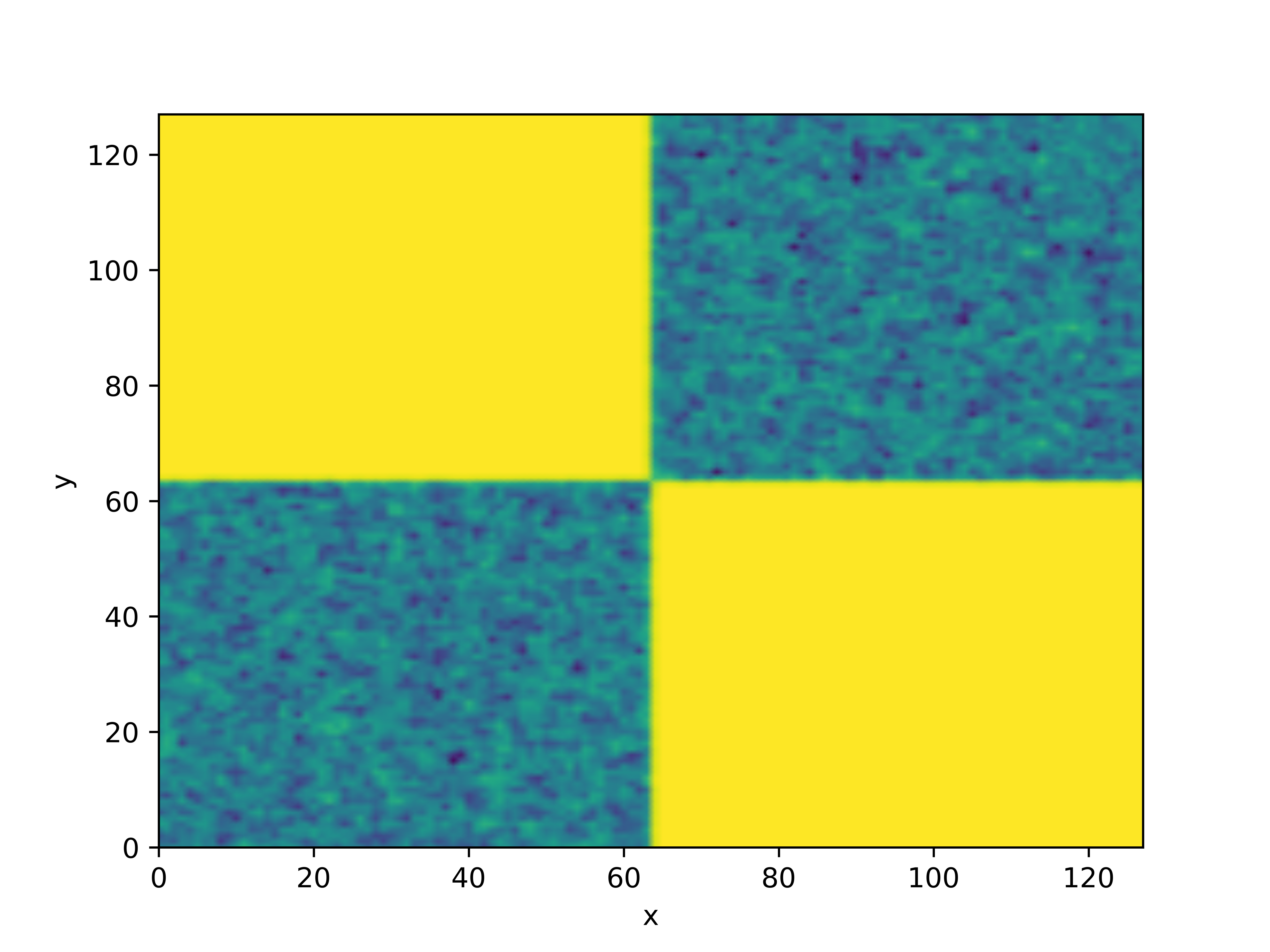

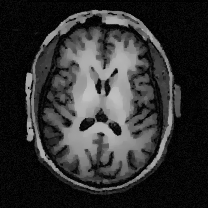

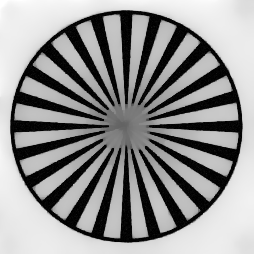

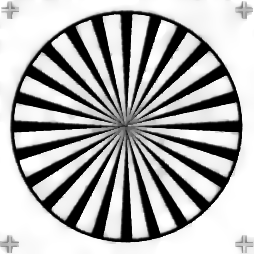

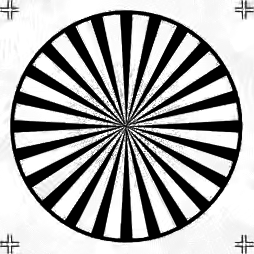

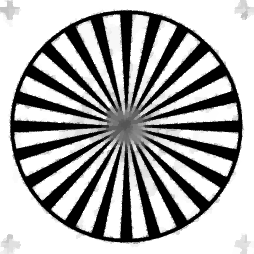

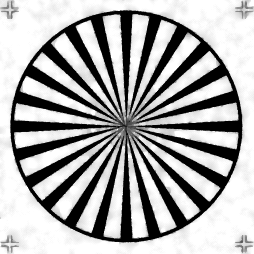

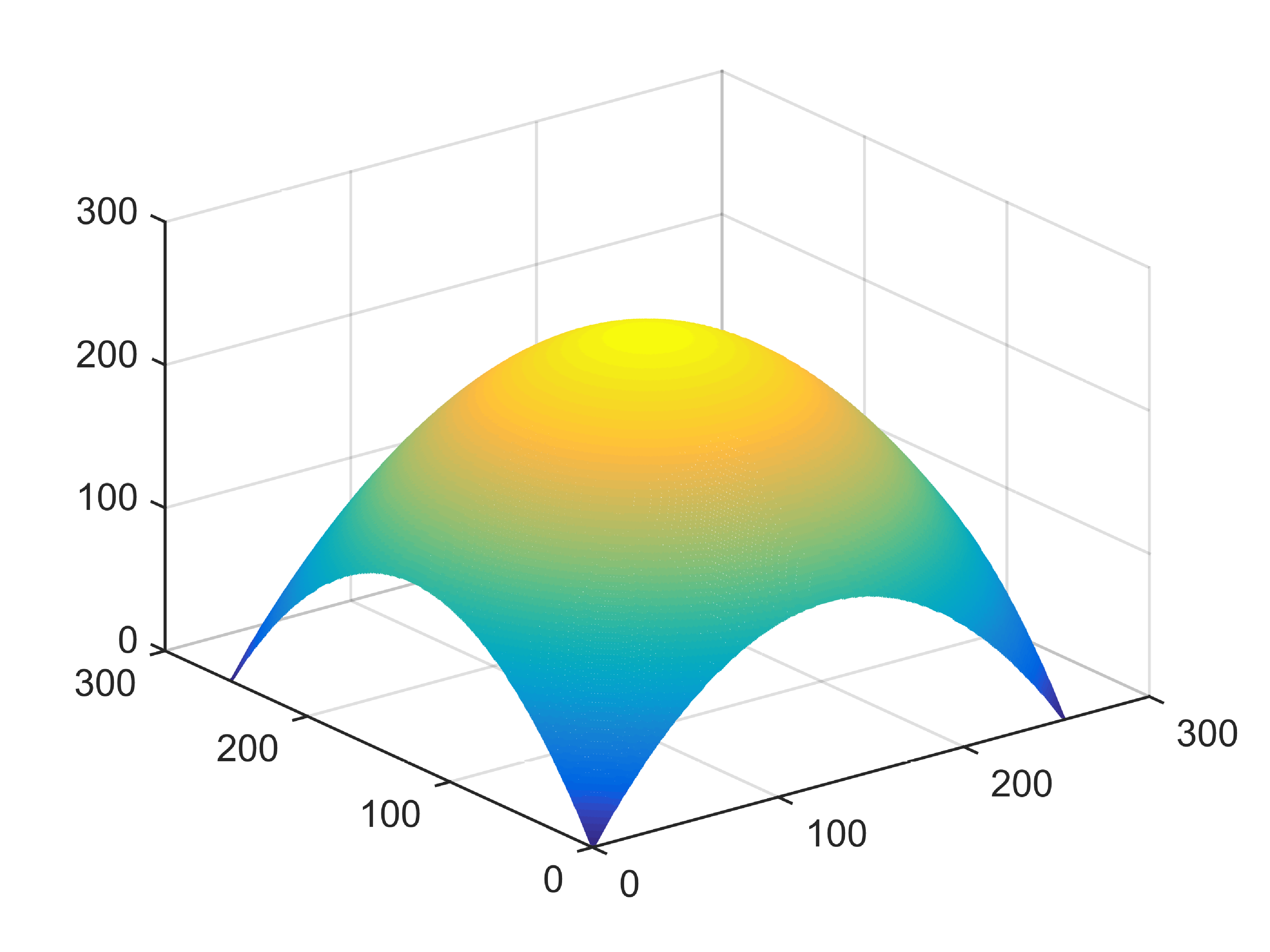

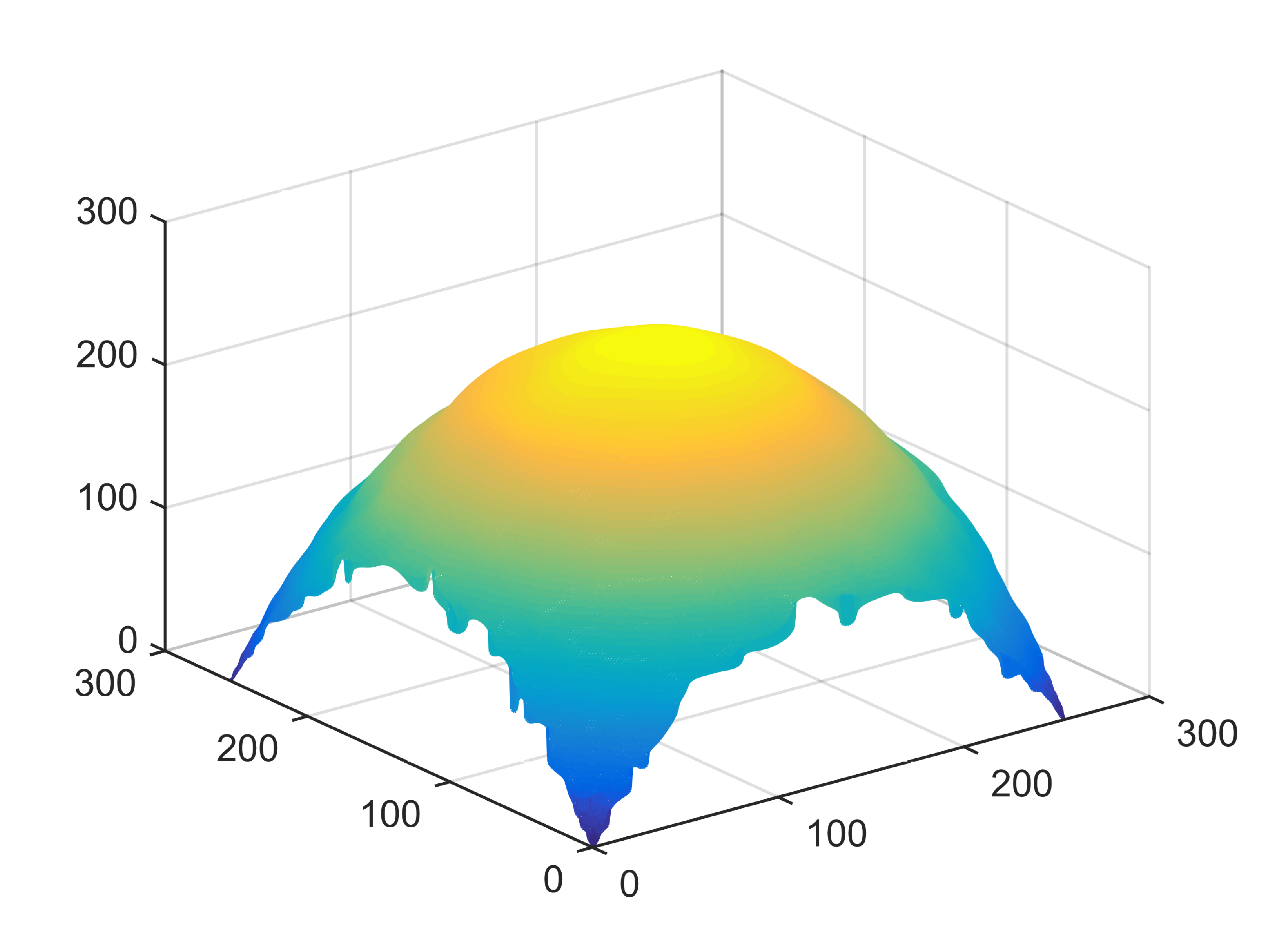

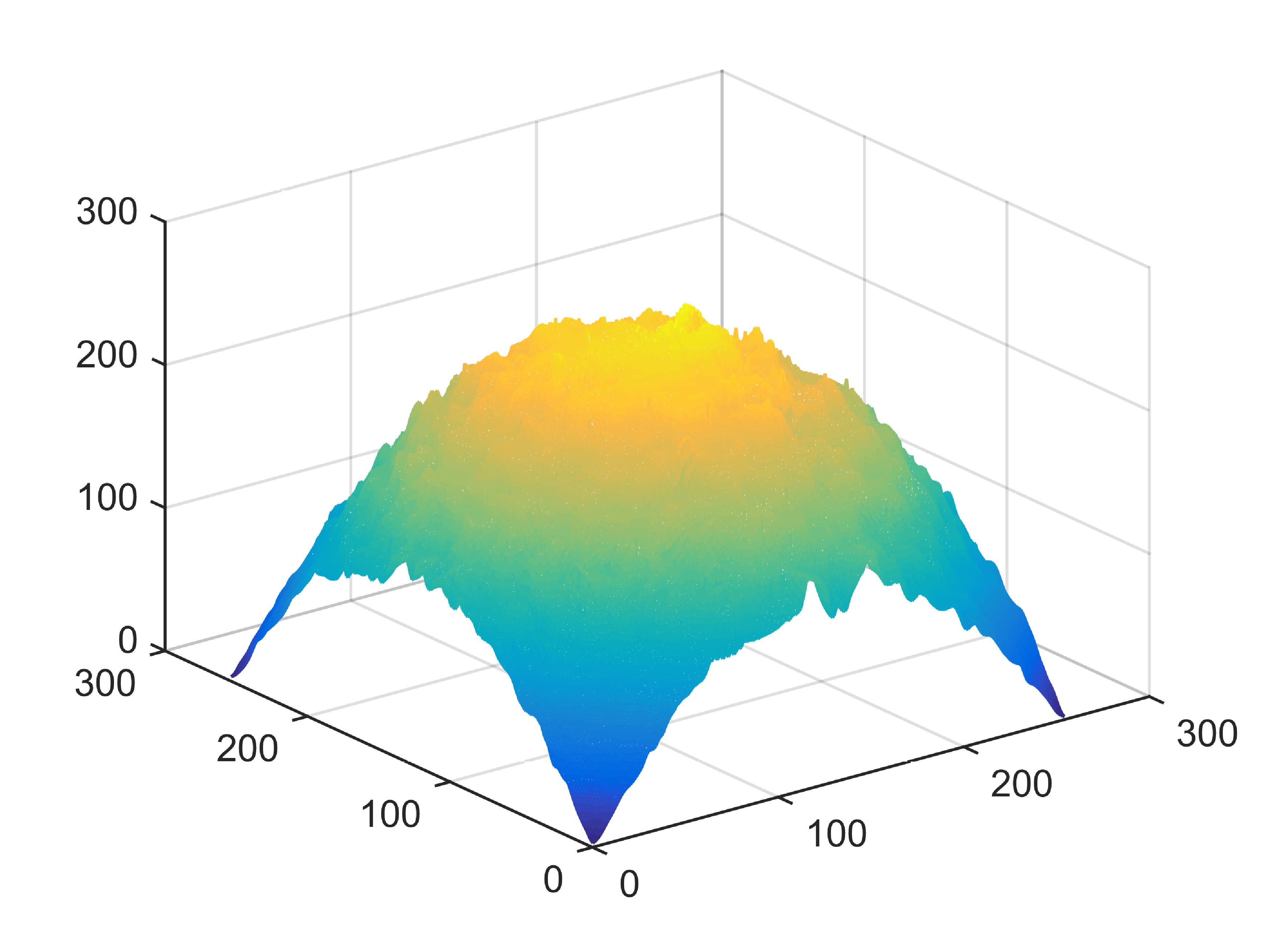

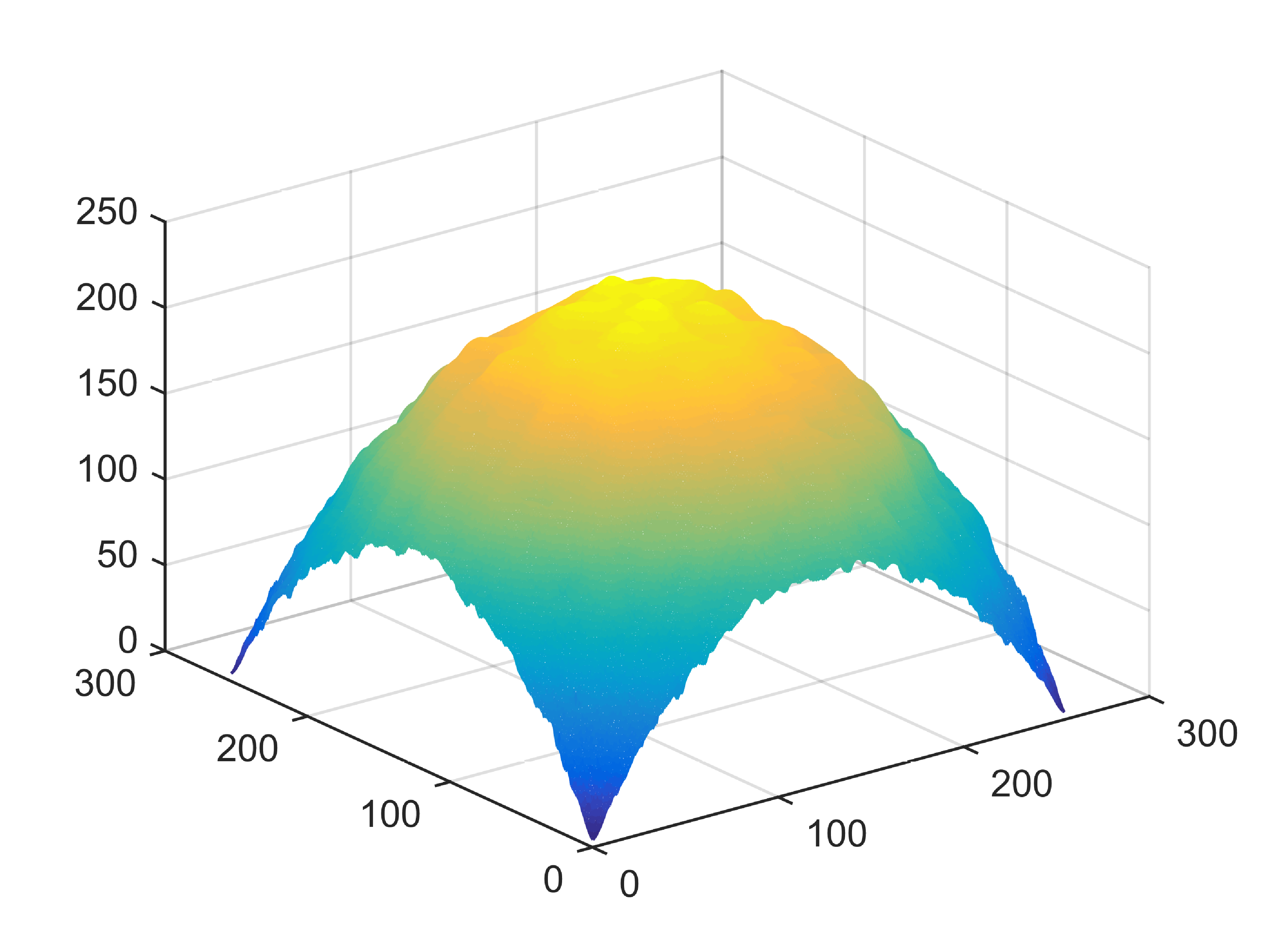

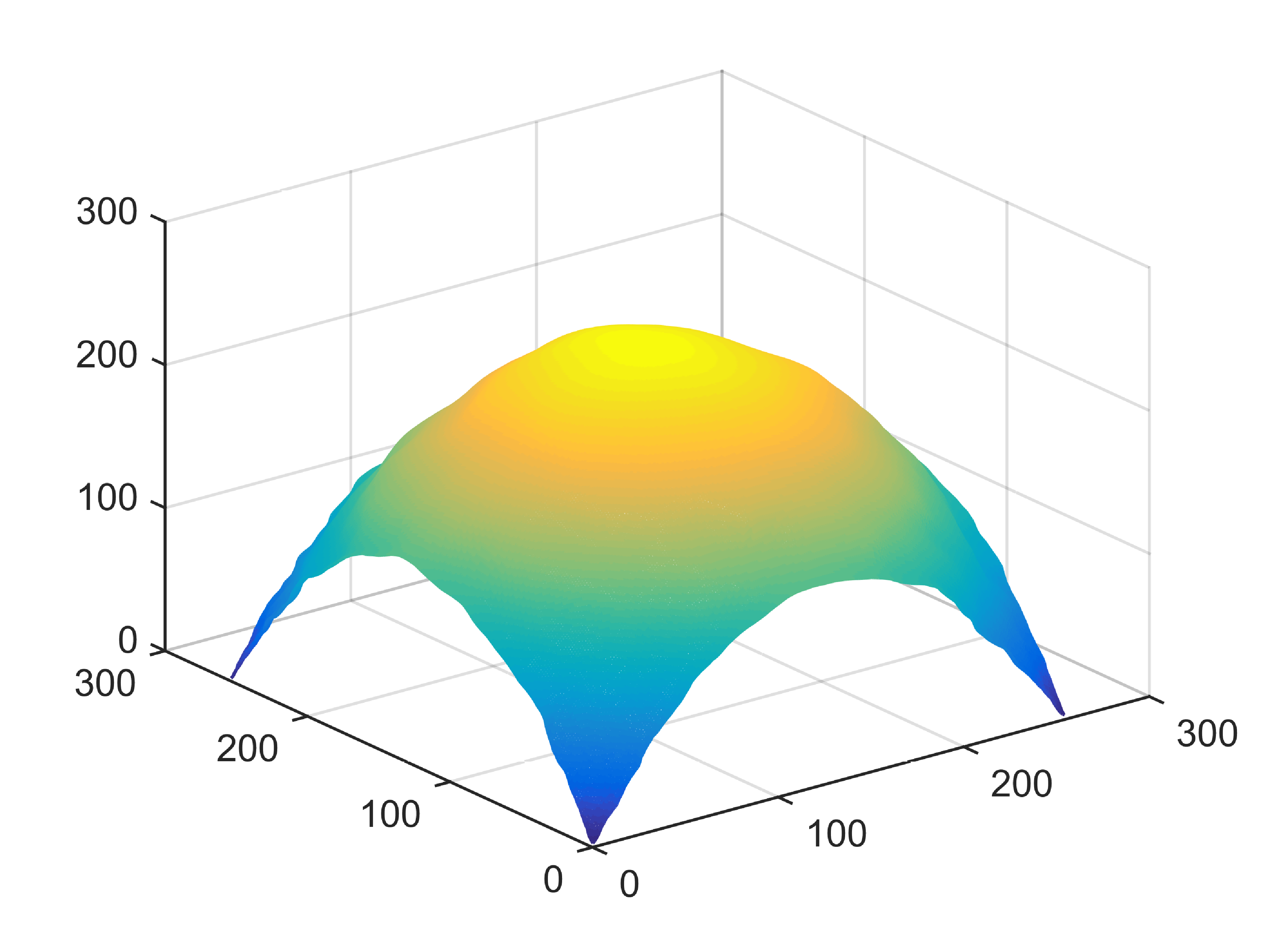

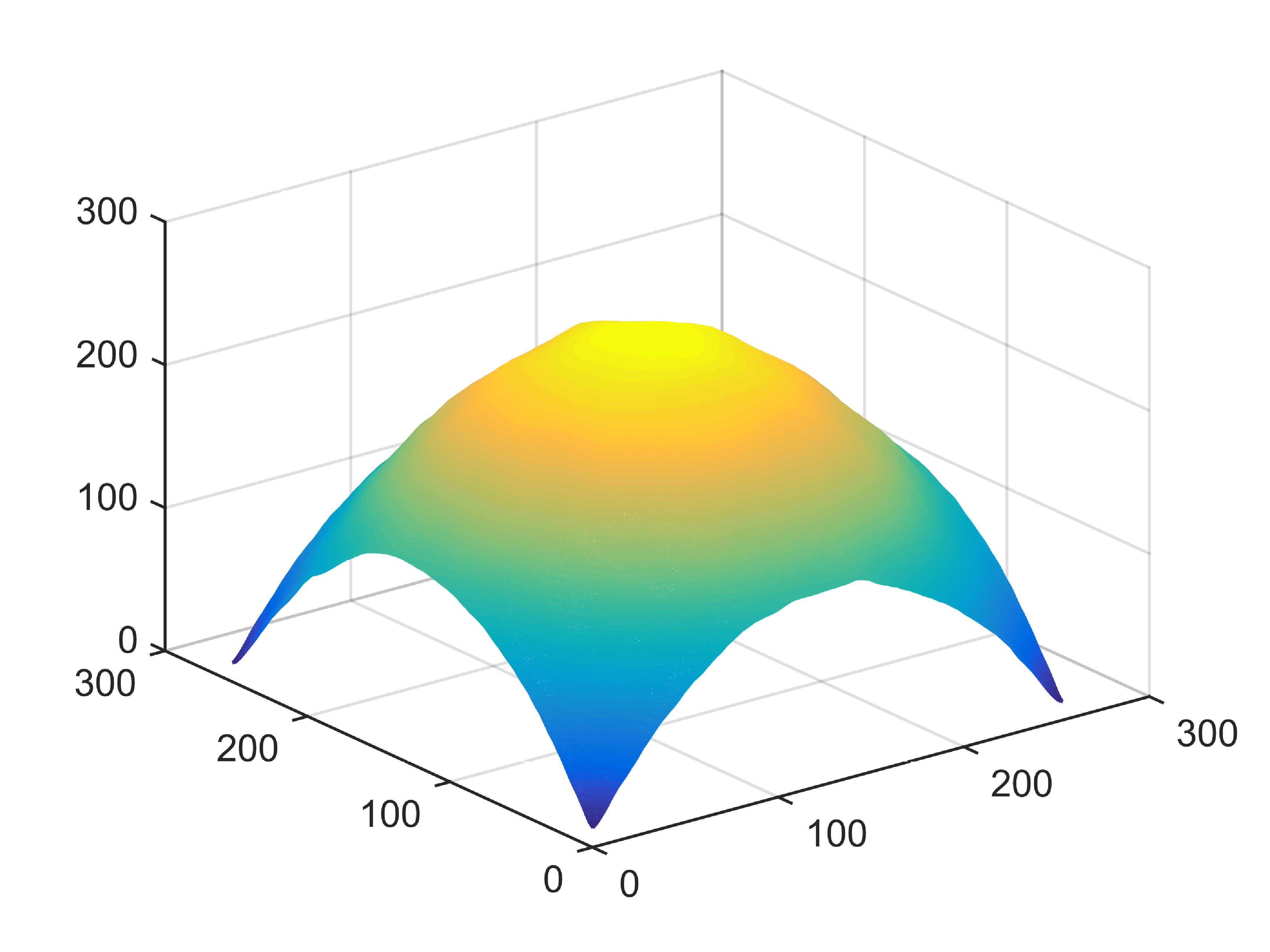

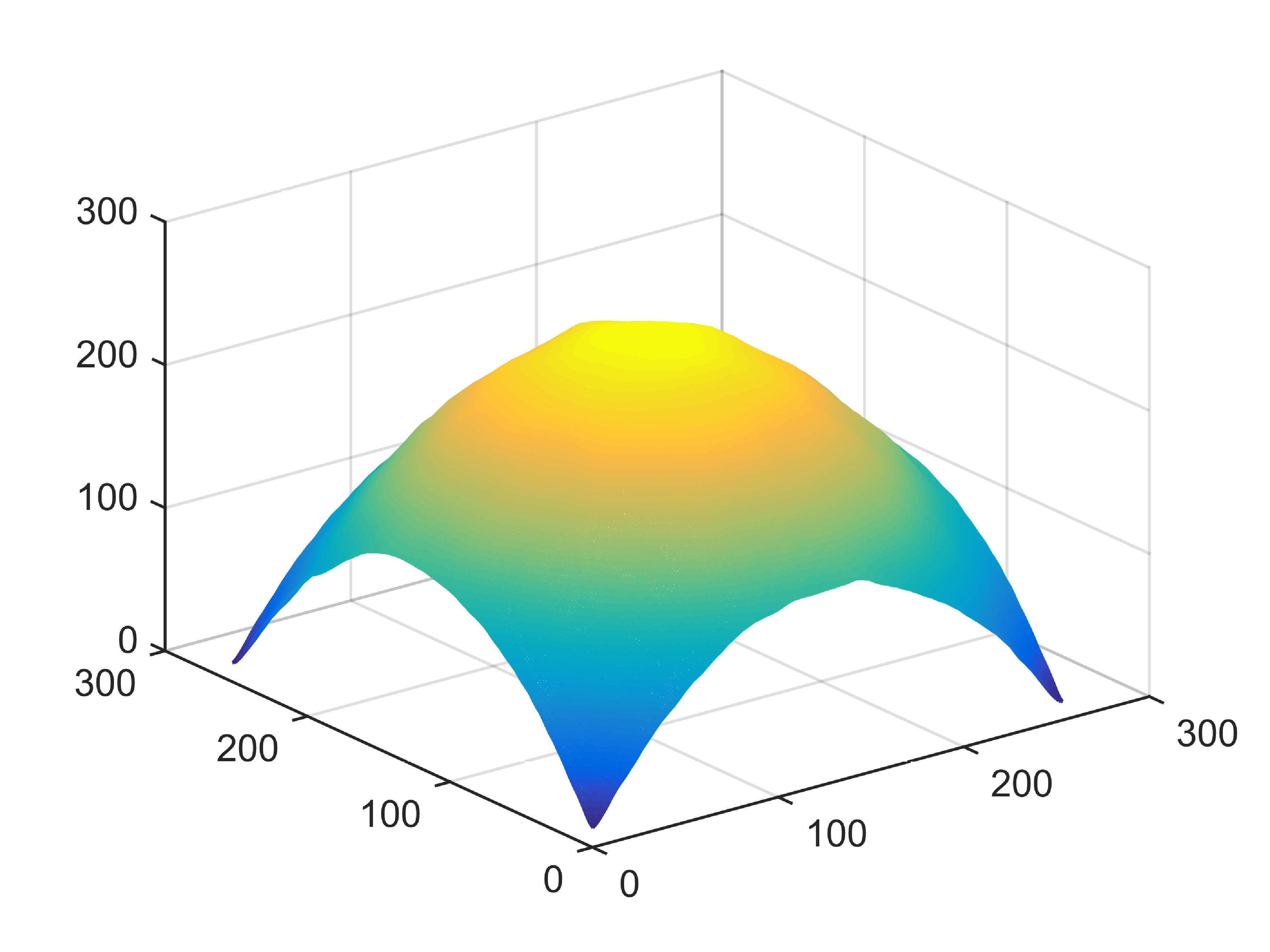

Suppose the image is degraded by multiplicative gamma noise with pollution level . As displayed in Fig. 1, during multiplicative denoising the minimal surface model yields lower image contrast than the curvature-based model. Visually, the curvature-based model protects image details better but over-smooths the edges. To further improve denoising performance, we use a mixed geometric information model. As shown in Fig. 1, the mixed geometric information model has the best edge structure protection and the best overall results.

2.2 Gray Level Indicator

Unlike additive noise, the extent of corruption caused by multiplicative noise is linked to the signal strength of the clean image. Based on this observation, we design a gray level indicator for adaptive denoising. Regions with higher original signal strength are polluted more heavily and therefore require stronger denoising. However, multiplicative noise destroys almost all information in the clean image, making it challenging to obtain the clean signal strength directly from the corrupted image. Therefore, to achieve our objective, we use a modified gray level indicator. For the image , the gray level indicator can be expressed as

| (3) |

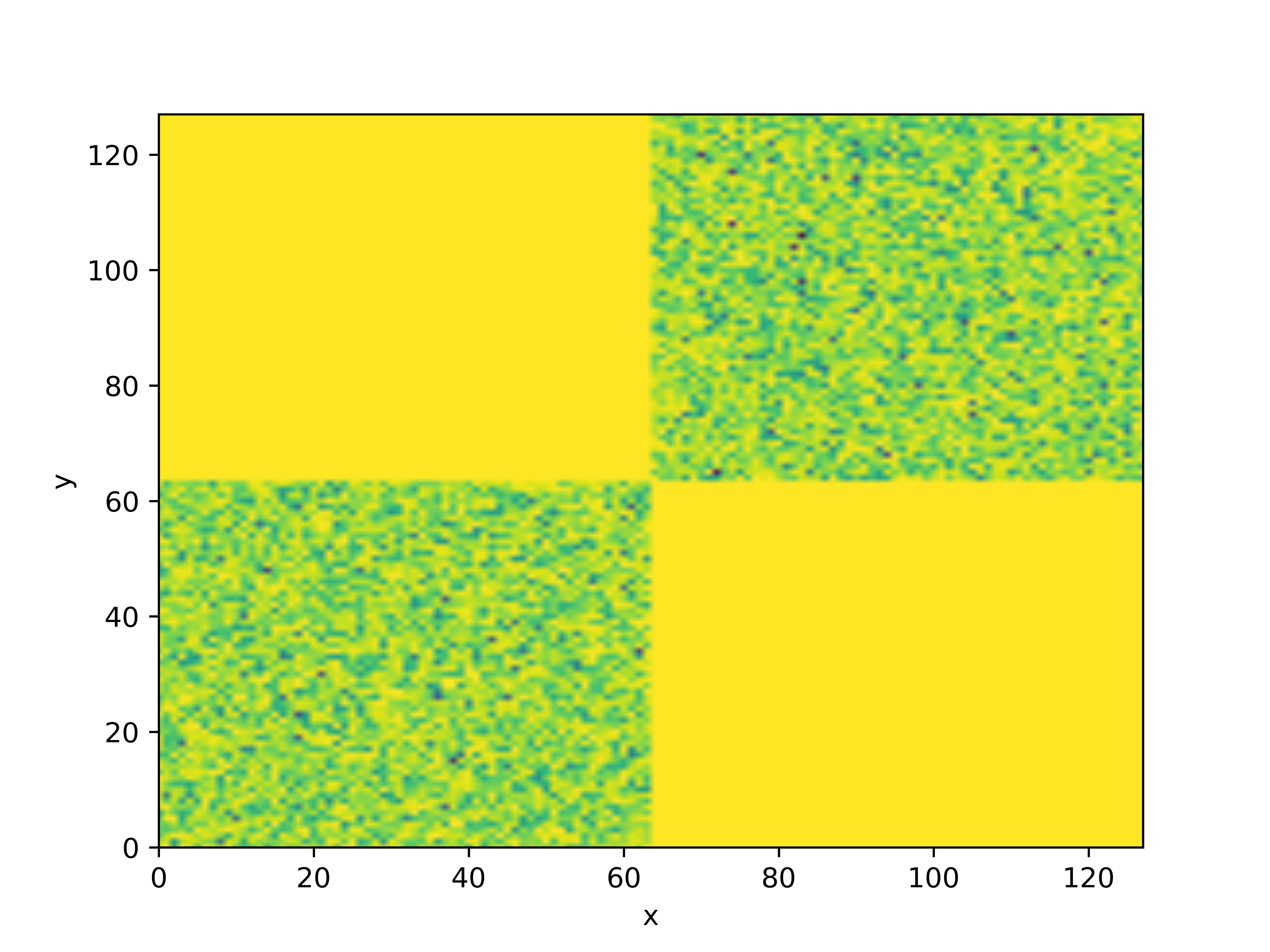

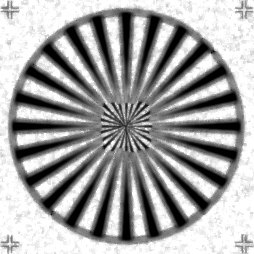

where is the two-dimensional convolution kernel with standard deviation , . utilizes a Gaussian convolution kernel to pre-smooth the noisy image and approximate the clean image. This operation makes the indicator less sensitive to noise of scale smaller than . Normalization allows the indicator to be regarded as a weight function. The higher the signal strength, the more attention it warrants, and active minimization of the surface should be prioritized. As depicted in the Fig. 2, the indicator effectively characterizes the pollution degree of the noisy image and accurately captures the spatial variation of the image.

2.3 Proposed Model

Assume that the gray image $u$ is defined on the domain $\Omega$, and that $(x, y, u(x,y))$ is considered as a regular surface in three-dimensional space. Encouraged by the success of mean curvature in handling additive noise, we incorporate the mean curvature of surfaces into our proposed model. We regard the image surface as the zero level set of the level set function [42], $\phi(x,y,z) = u(x,y) - z$. Therefore, its unit outer normal is

$$\mathbf{n} = \frac{\nabla\phi}{|\nabla\phi|} = \frac{(\nabla u, -1)}{\sqrt{1+|\nabla u|^2}}. \tag{4}$$

Further, the mean curvature of the surface is defined as the divergence of the unit normal

$$\kappa = \nabla\cdot\mathbf{n} = \nabla\cdot\left(\frac{\nabla u}{\sqrt{1+|\nabla u|^2}}\right). \tag{5}$$

Clearly, the smoothness of the image can be effectively represented by the curvature. With this level set formulation, the values of $u$ can be used to calculate the mean curvature rapidly and accurately with methods such as finite differences.
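To make the finite difference computation concrete, the sketch below evaluates the mean curvature of an image surface in the commonly used graph form $\nabla\cdot\big(\nabla u/\sqrt{1+|\nabla u|^2}\big)$, which is assumed here. It uses plain central differences from `np.gradient`, not the minmod-based stencil of Sect. 4.1; the function name and the test surfaces are illustrative assumptions.

```python
import numpy as np

def mean_curvature(u, h=1.0):
    """Approximate div( grad u / sqrt(1 + |grad u|^2) ) on a uniform grid."""
    uy, ux = np.gradient(u, h)            # axis 0 is y (rows), axis 1 is x (columns)
    norm = np.sqrt(1.0 + ux**2 + uy**2)   # never zero, so no division by zero
    px, py = ux / norm, uy / norm         # first two components of the unit normal
    return np.gradient(px, h, axis=1) + np.gradient(py, h, axis=0)

# Hypothetical test surfaces on [-1, 1]^2 with grid spacing 0.01
x = np.linspace(-1.0, 1.0, 201)
X, Y = np.meshgrid(x, x)
k_plane = mean_curvature(2.0 * X + 3.0 * Y, h=0.01)       # a plane is flat
k_bowl = mean_curvature(0.5 * (X**2 + Y**2), h=0.01)      # a paraboloid
print(np.abs(k_plane).max(), k_bowl[100, 100])
```

For the paraboloid, the value at the origin is close to 2, the Laplacian of the surface there, because the gradient vanishes at that point.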

Considering that the mean curvature regularization of the surface is used in the model, we regularize the surface area rather than directly limiting the length of the level set curve. This also has the advantage that it avoids the singularity caused by division by zero. Specifically, constraining all contours and regularizing the elastic energy of the level set function, we get the following regularization term

| (6) | ||||

Further, by combining the gray level indicator and fidelity term designed for the multiplicative noise process, we propose a model that mixes area and curvature geometric information

| (7) |

where is the gray level indicator, and and are positive constants utilized to balance the fidelity term and the regularization term. To better address the characteristics of multiplicative denoising and achieve the regional adaptive effect, we employ the gray level indicator from [12]. To further illustrate the rationality of the model, we will further introduce the degradation model of the model, each of which is a well-known classic denoising model.

2.4 Degenerate Case of the Model

In order to further illustrate the advantages of mixing area terms with curvature terms, we conducted the following experiments. In particular, the free parameters and adaptive part of the model can degenerate the model into many simpler forms despite the high complexity of the model. (1) and is a constant, , degenerate into regularization resembling Euler’s elastica; (2) and is a constant, , degenerate into minimal surface regularization; (3) is not a constant, , degenerate into adaptive minimal surface regularization; (4) is not a constant, , it is a multiplicative deduction based on the mixed geometry information of the surface. We next analyze various visual denoising effects of the model.

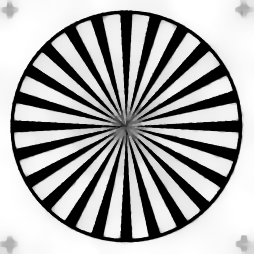

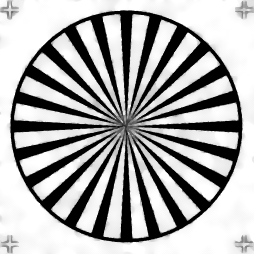

In the experiment, the noise parameter was initially selected. The traditional minimal surface model exhibits conspicuous fragmentation, which produces many false boundaries in the result and hinders subsequent analysis. With the gray level indicator, Fig. 3d shows a significant reduction in the staircasing effects of the minimal surface model, and Fig. 3e shows a smoother result. However, a “fish scale phenomenon” emerges, accompanied by noticeable jagged edges at the periphery of the shape. In contrast to the previous models, our proposed model effectively restores image details and successfully avoids staircasing effects. Combining area and curvature geometric information yields mutual benefits and surpasses the denoising capability of each individual geometric feature in multiplicative denoising.

3 Fourth-Order Euler-Lagrange Equation of the Mixed Geometry Information Model

In the gradient flow approach, we discretize the Euler-Lagrange equation to solve the functional minimization problem. This continuous approach can accurately depict the geometric characteristics of the image without causing grid bias or measurement artifacts. Additionally, unlike optimization-based methods, it does not introduce a discrepancy between a relaxed functional and the original functional.

We first derive the gradient flow corresponding to our model. To simplify the model derivation, we consider as a weighting function in the subsequent analysis, thereby yielding the Euler-Lagrange equation that corresponds to the mixed geometry information model

| (8) | ||||

where , , . The corresponding gradient descent flow is

| (9) |

with Neumann boundary condition on

| (10) |

where is the outward normal derivative at the boundary.

From formula (8) we can see that is the main term of diffusion. When is large, the diffusion coefficient is relatively small, which indicates that diffusion should be slowed down at the edges. When is quite small, the forward diffusion coefficient is relatively large, which indicates that rapid diffusion denoising is performed inside the image. Similar to [23], when , our model will have a degenerate form

| (11) |

which manifests as anisotropic diffusion and slight biharmonic diffusion. Due to the fact of , anisotropic diffusion plays a dominant role in the inner region.

In addition, for non-smooth boundaries, due to the existence of the denominator part , this will lead to a reduction in forward heat diffusion efficiency. However, the phenomenon of non-smooth boundaries still exists. In our model (8), due to the combination of the curvature term and the surface area term, it can be exactly eliminated with . This is crucial for the backward diffusion of the model. At this time, for the rough edges of the image, will dominate the diffusion process of the model, causing the boundaries sharper and the interior smoother.

4 Numerical Implementation

In this section, we first introduce the numerical discretization process. To overcome the time step limitations in the finite difference method, we employ the unconditionally stable AOS and SAV algorithms.

4.1 Operator Discretization

We proceed by taking time as the evolution parameter for advancing the fourth-order equation to facilitate the explicit update of . Initially, we assume the image size is . Let represent the time step and define the grid as follows

| (12) | ||||

Generally and . Denoting as the value of at , various approximations of the derivative in the direction and the direction are listed

| (13) | ||||

We exclusively provide the discrete scheme for the second term in (8), with a similar discretization approach applicable to the remaining terms. The second term is reformulated as

| (14) |

where the forms of and are

| (15) | ||||

Furthermore, we list an approximation of

| (16) |

Therefore, we need to calculate , , , . Taking as an example, the discretization of others is omitted for simplicity. Let , and presented below is the approximate scheme for at .

| (17) | ||||

where the minmod function is used to increase the anisotropy of the equation

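$$\operatorname{minmod}(a,b) = \frac{\operatorname{sgn}(a)+\operatorname{sgn}(b)}{2}\,\min\big(|a|,|b|\big). \tag{18}$$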
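A minimal sketch of the minmod limiter, assuming the common two-argument definition $\tfrac{1}{2}(\operatorname{sgn} a+\operatorname{sgn} b)\min(|a|,|b|)$: it returns the argument of smaller magnitude when both have the same sign and zero otherwise.

```python
import numpy as np

def minmod(a, b):
    """Elementwise minmod of two one-sided differences."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    # The sign factor is +1 or -1 when signs agree and 0 when they disagree
    return 0.5 * (np.sign(a) + np.sign(b)) * np.minimum(np.abs(a), np.abs(b))

print(minmod([1.0, -3.0, 1.0], [2.0, -2.0, -2.0]))
```

In a difference stencil, `a` and `b` would typically be the forward and backward differences at the same pixel, so the limiter suppresses the derivative across a sign change.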

The approximation of and at can be expressed as

| (19) | ||||

4.2 Numerical Discretization for Additive Operator Splitting Algorithm

Let and represents the th update result of , then the evolution process can be expressed as

| (20) | ||||

where , . Discretization of Neumann boundary conditions is given by

| (21) |

The explicit scheme can only advance with a small time step because of the constraints imposed by the CFL condition. This restriction severely reduces computational efficiency and makes iterating with this scheme impractical.

While the theoretical foundation of model (7) effectively preserves the texture features, the incorporation of high-order nonlinear terms introduces substantial challenges in devising efficient numerical algorithms. Addressing the numerical instability associated with the explicit finite difference method and aiming to retain disorder in diffusion directions, we employ the additive operator splitting (AOS) algorithm as proposed in [43] for the numerical solution of our model.

The AOS algorithm decomposes the original diffusion into horizontal and vertical directions diffusion, then obtains and from , and finally uses the average value of and as the iteratively updated . From the form of (23), we can easily calculate the diffusion matrices and in the and directions of the size image, taking the direction as an example:

| (24) |

where boundary conditions are correspondingly defined as , then one can derive each element in the matrix accordingly

| (25) |

The values and are approximated through forward and backward differences. Subsequently, we adjust the original AOS algorithm and add an additional term. Upon acquiring the diffusion matrix, one-dimensional diffusion is independently applied to the rows and columns of for iterations.

| (26) |

where and can be solved in an efficient way.

| (27) | ||||

Following the utilization of the Thomas algorithm for the alternate computation of two variables, the denoising result for iterative updates is derived by averaging the values of and

| (28) |

After the tridiagonal matrices and are obtained through (24), the problem of image denoising is transformed into the problem of solving linear system. The AOS algorithm possesses absolute stability, consistently yielding outstanding experimental results even under large time step sizes. However, in order to maintain the accuracy of the algorithm, it is common to set a smaller time step size. Consequently, in experiments, a delicate balance must be struck between solution accuracy and computational efficiency. The choice of an appropriate time step size involves a tradeoff. Algorithm 1 outlines the AOS algorithm for solving (22).
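The row/column splitting and the tridiagonal solves can be sketched as follows. This is the classic two-dimensional AOS update $\tfrac{1}{2}\sum_{l}(I-2\tau A_l)^{-1}u$ with Neumann boundaries, not the adjusted scheme with the additional term described above; the diffusivity $1/\sqrt{1+|\nabla u|^2}$ and all function names are assumptions chosen for illustration.

```python
import numpy as np

def thomas(lower, diag, upper, rhs):
    """Solve a tridiagonal system; lower[0] and upper[-1] are unused."""
    n = diag.size
    c, d = np.zeros(n), np.zeros(n)
    c[0], d[0] = upper[0] / diag[0], rhs[0] / diag[0]
    for i in range(1, n):                      # forward elimination
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = np.zeros(n)
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):             # back substitution
        x[i] = d[i] - c[i] * x[i + 1]
    return x

def implicit_line(u_line, g_line, tau):
    """Solve (I - 2*tau*A) v = u_line for one row or column (grid size 1)."""
    w = 0.5 * (g_line[:-1] + g_line[1:])       # diffusivity at cell interfaces
    n = u_line.size
    lower, upper = np.zeros(n), np.zeros(n)
    lower[1:] = -2.0 * tau * w
    upper[:-1] = -2.0 * tau * w
    diag = 1.0 - lower - upper                 # rows sum to 1 (zero-flux boundary)
    return thomas(lower, diag, upper, u_line)

def diffusivity(u):
    uy, ux = np.gradient(u)
    return 1.0 / np.sqrt(1.0 + ux**2 + uy**2)  # assumed minimal-surface diffusivity

def aos_step(u, g, tau):
    """One AOS iteration: average of the row-wise and column-wise implicit steps."""
    rows = np.array([implicit_line(u[i], g[i], tau) for i in range(u.shape[0])])
    cols = np.array([implicit_line(u[:, j], g[:, j], tau) for j in range(u.shape[1])]).T
    return 0.5 * (rows + cols)

rng = np.random.default_rng(1)
u0 = rng.random((16, 12))
u1 = aos_step(u0, diffusivity(u0), tau=50.0)   # deliberately large time step
```

Each one-dimensional system is strictly diagonally dominant with unit row sums, so even with the large step used here the update preserves the mean gray value and stays within the range of the input.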

Theorem 1.

For any time step , the scheme (27) in AOS algorithm is absolutely stable.

Proof.

From the form of in (23), we know , for , . Then the main diagonal elements in the matrix corresponding to (24) are negative values, and the subdiagonal elements are positive values. And we can easily see that is a symmetric matrix. In addition, we need to note that is not only a weak diagonally dominant matrix, but also a irreducibly matrix. Applying the Cottle theorem [44], has negative eigenvalues .

Let represents the matrix . The eigenvalue of matrix is . On the other hand, we know the fact that the sum of eigenvalues is equal to the sum of the diagonal elements. So the spectral radius of is strictly greater than 0 due to the fact that the main diagonal elements of are strictly less than 0. Then denoting as the spectral radius of . For any

| (29) | ||||

This inequality indicates is a convergent sequence. Similarly, the convergence of can be obtained. Thus the semi-implicit AOS algorithm 1 corresponding to (7) is unconditionally stable for any .

Remark.

While there is an additional term involving in the operator in (23), this term cannot be incorporated into the operator . On the one hand, the operator fails to ensure the positive definiteness of the coefficient of , leading to the inability to satisfy the prerequisite conditions for in the operator. Hence unconditional stability cannot be guaranteed, resulting in oscillatory behavior during the iterative process. On the other hand, overly complex diffusion coefficients may, to some extent, conflict with each other, introducing ambiguity in the diffusion process. This underscores that the AOS framework fundamentally operates as a second-order solution framework. Consequently, the inclusion of high-order derivatives in the diffusion coefficients for both the and directions should be approached cautiously. An excessive presence of high-order terms may potentially lead to algorithmic malfunction.

4.3 Numerical Discretization for Scalar Auxiliary Variable Scheme

While the AOS algorithm has been used successfully to solve numerous nonlinear models, it suffers from reduced accuracy and ‘streaking artifacts’ [45, 46]. To solve energy functional minimization problems, Shen et al. [40, 47] reformulated the gradient flow of the original problem by introducing a scalar auxiliary variable, and proposed the scalar auxiliary variable (SAV) method. As an emerging numerical algorithm in image processing, SAV is a general and efficient framework with a simple numerical scheme, high accuracy, and unconditional stability [48, 41, 49].

We rewrite the energy functional (7) in the following form:

| (30) |

where is a non-negative symmetric linear operator, typically independent of the function and containing a nonlinear term related to . As all terms in problem (7) are nonlinear operators, we take equal to the total energy functional subtract linear term inner product. This choice ensures that is still equal to the total energy functional, allowing them to be harmonized into a unified form. Furthermore, the gradient flow corresponding to the minimization of the functional (30) is

| (31) |

By the chain rule, the derivative of the energy with respect to time is

| (32) |

Therefore, the gradient descent method effectively maintains the reduction of energy during the evolution process. Obviously, the objective functional possesses a well-defined lower bound, so there exists a positive constant such that . To facilitate analysis, we introduce the scalar auxiliary variable and present the equivalent form of the gradient flow within the SAV framework

| (33a) | ||||

| (33b) | ||||

| (33c) | ||||

Next, we introduce the first-order scheme of SAV

| (34a) | |||

| (34b) | |||

| (34c) | |||

Although the update of in (34) may appear intricate, the computational cost primarily focuses on solving a linear system with constant coefficients, and the associated calculation cost is comparatively modest. Moreover, we can efficiently address (34) through the following process:

| (35) |

where

| (36) | ||||

Multiplying (35) by and integrating, we obtain

| (37) |

Then there holds

| (38) |

Finally, we substitute (38) back into (35) to get , which gives

| (39) |

The first-order scheme of the SAV algorithm is summarized in Algorithm 2.

Second-order and higher-order SAV schemes can also be constructed easily, and they typically remain energy stable. The selection of the time step size therefore becomes less critical, as it does not need to satisfy the CFL condition. In what follows, we introduce a second-order scheme within the SAV framework, employing the Crank-Nicolson scheme.
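The structure of one first-order SAV step (two linear solves with the same constant-coefficient matrix, followed by a scalar update) can be illustrated on a toy problem. The sketch below follows the standard first-order SAV scheme for an $L^2$ gradient flow; the one-dimensional energy, the operator `Lop`, the quadratic fidelity, and the parameters `beta`, `lam`, and `C` are assumptions for illustration and are not the functional (7).

```python
import numpy as np

def sav_first_order(f, tau=10.0, steps=50, beta=0.1, lam=1.0, C=1.0):
    """First-order SAV for the toy energy E(u) = 0.5*(u, Lop u) + E1(u)."""
    n = f.size
    D = np.diff(np.eye(n), axis=0)       # forward difference matrix, (n-1) x n
    Lop = beta * D.T @ D                 # symmetric, non-negative linear operator
    A = np.eye(n) + tau * Lop            # constant-coefficient system matrix

    def E1(u):                           # nonlinear part (assumed toy choice)
        return np.sum(np.sqrt(1.0 + (D @ u) ** 2)) + 0.5 * lam * np.sum((u - f) ** 2)

    def dE1(u):                          # gradient of E1
        du = D @ u
        return D.T @ (du / np.sqrt(1.0 + du ** 2)) + lam * (u - f)

    u = f.copy()
    r = np.sqrt(E1(u) + C)               # scalar auxiliary variable
    energies = [0.5 * u @ Lop @ u + r ** 2]
    for _ in range(steps):
        b = dE1(u) / np.sqrt(E1(u) + C)
        c = u - tau * r * b + 0.5 * tau * (b @ u) * b
        Ainv_c = np.linalg.solve(A, c)
        Ainv_b = np.linalg.solve(A, b)
        # Inner product (b, u_new) obtained in closed form before u_new itself
        bu_new = (b @ Ainv_c) / (1.0 + 0.5 * tau * (b @ Ainv_b))
        u_new = Ainv_c - 0.5 * tau * bu_new * Ainv_b
        r = r + 0.5 * (b @ (u_new - u))  # update of the auxiliary variable
        u = u_new
        energies.append(0.5 * u @ Lop @ u + r ** 2)
    return u, np.array(energies)

rng = np.random.default_rng(2)
f = np.where(np.arange(64) < 32, 1.0, 3.0) + 0.2 * rng.standard_normal(64)
u_sav, energies = sav_first_order(f)
```

The recorded quantity is the modified energy $\tfrac12(u,\mathcal{L}u)+r^2$, which is the one the scheme dissipates; it does not increase even with the large step `tau=10.0` used here.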

| (40a) | |||

| (40b) | |||

| (40c) | |||

where the value of can be approximated using an explicit difference method, as exemplified by the following formulation

| (41) |

Then the iterative update of can be derived by a process similar to that of the first-order scheme. First, substituting (40b) and (40c) into (40a), we get

| (42) |

where

| (43) | ||||

Similarly, multiplying (43) by and integrating, we obtain

| (44) |

Furthermore, it arrives at

| (45) |

Finally, combining (42) and (45), it leads to the iterative update result of

| (46) |

During the practical evolution process, the energy functional may experience rapid decay within a short time period, followed by slight changes in the remaining periods. Therefore, it becomes imperative to opt for a small step size when the energy undergoes rapid changes and a relatively larger step size during periods of energy stability. To address this, we implement an adaptive time step strategy according to [47],

| (47) |

where is a default safety coefficient, is a constant representing reference tolerance and is the relative error in the iteration, is the minimum time step size, is the maximum time step size.
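A minimal sketch of such an adaptive step rule, assuming the commonly used form in which the proposed step is the safety coefficient times the square root of the tolerance-to-error ratio times the current step, clipped to the allowed range. The parameter names and default values are hypothetical.

```python
def adaptive_time_step(tau, err, tol=1e-3, rho=0.9, tau_min=1e-4, tau_max=1.0):
    """Propose the next time step from the current step and relative error."""
    if err <= 0.0:
        return tau_max                        # no measurable change: take the largest step
    proposal = rho * (tol / err) ** 0.5 * tau  # shrink when err > tol, grow when err < tol
    return max(tau_min, min(proposal, tau_max))

print(adaptive_time_step(0.1, 1e-3))   # error equal to the tolerance
print(adaptive_time_step(0.1, 1e+3))   # large error: clipped to tau_min
print(adaptive_time_step(0.1, 1e-9))   # tiny error: clipped to tau_max
```

With this rule the step shrinks while the energy decays rapidly and grows again once the iteration settles.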

We now give the unconditional energy stability properties of the numerical scheme.

Theorem 2.

The energy functional in the scheme (33) is steadily declining

Proof.

Multiply the three equations of (34) by , , and , respectively. Then integrate (34a) and (34b), and sum up them. We readily derive the following theorem on energy dissipation. The proof follows from the proof given in [47].

Theorem 3.

The scheme (34) is first-order accurate and unconditionally energy stable in the sense that

Similarly, multiply the three equations of scheme (40) by , , and , respectively, integrate the first two equations, and finally sum up all the three equations. Using the linear and symmetric properties of , we get the following theorem.

Theorem 4.

The scheme (40) is second-order accurate and unconditionally energy stable in the sense that

5 Numerical Experiments

In this section, we evaluate the performance of the proposed model and algorithms through numerical experiments on the multiplicative denoising problem. Specifically, we use the peak signal-to-noise ratio (PSNR) and the structural similarity index (SSIM) [50] as quantitative evaluation metrics to measure the quality of the recovered images. PSNR and SSIM are defined as

| (52) | ||||

where is the original image of size , is the restored image, and are mean values of and respectively, and represent the variances of and respectively, is the covariance of and , and are constants.
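The two metrics can be sketched as follows. The peak value of 255, the constants `k1` and `k2`, and the use of a single global window for SSIM are assumptions made for this example; the SSIM of [50] is usually averaged over local windows, so the values below are a simplified illustration only.

```python
import numpy as np

def psnr(u0, u, peak=255.0):
    """Peak signal-to-noise ratio in dB between reference u0 and restored u."""
    mse = np.mean((np.asarray(u0, float) - np.asarray(u, float)) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)

def ssim_global(u0, u, peak=255.0, k1=0.01, k2=0.03):
    """SSIM computed from global means, variances, and covariance."""
    u0, u = np.asarray(u0, float), np.asarray(u, float)
    c1, c2 = (k1 * peak) ** 2, (k2 * peak) ** 2
    m0, m1 = u0.mean(), u.mean()
    v0, v1 = u0.var(), u.var()
    cov = np.mean((u0 - m0) * (u - m1))
    return ((2 * m0 * m1 + c1) * (2 * cov + c2)) / ((m0**2 + m1**2 + c1) * (v0 + v1 + c2))

rng = np.random.default_rng(3)
ref = rng.uniform(0.0, 255.0, size=(32, 32))    # hypothetical reference image
shifted = ref + 1.0                             # every pixel off by one gray level
print(psnr(ref, shifted), ssim_global(ref, ref))
```

A uniform error of one gray level gives a mean squared error of 1, so the PSNR equals $20\log_{10}255$ dB, and comparing an image with itself gives an SSIM of 1.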

5.1 Parameter Discussion for the AOS Algorithm

As mentioned in Section 4.2, the AOS algorithm is absolutely stable, so the time step can be selected arbitrarily as far as stability is concerned. However, although an excessively large time step greatly improves computational efficiency, it also makes the diffusion so fast that the best denoising result may be missed. It is therefore important to find a compromise step size that balances efficiency and accuracy. To obtain a suitable time step size, we tested the time step size at four different scales while keeping the other parameters fixed.

5.2 Parameter Discussion for the SAV Algorithm

In this section, we take the first-order SAV algorithm as an example to show the results. The second-order SAV algorithm obtains similar experimental results. We test an important parameter in the algorithm, the constant . As mentioned above, the energy functional itself has a lower bound, which needs to be added to a constant to ensure its non-negativity. The optimization process of modified functional is equivalent to the original functional, but in numerical calculation, it may be different when changes.

As observed, PSNR does not have much correlation with the value of , so we choose . But one issue that needs to be pointed out is that the denoising effect of Fig. 5d is extremely unstable. This is due to the rapid decay of the energy to a small magnitude during the evolution. Under the condition of the relative error stopping criterion, the relative error is easily less than the agreed threshold when the constant is too large, so the loop is jumped out, resulting in unsatisfactory results. In addition, unlike the arbitrary choice of in the literature [41], our energy functional itself has a lower bound but may have negative values. Therefore, when is too small, it will bring the singularity of the equation, and the choice of is related to the image itself. For example, when , it is successful for , but it will fail for . Therefore, combined with the consideration of non-negative auxiliary variables and the convergence of the model, we change the stopping criterion in to absolute error, which can effectively resolve their contradictions.

5.3 Denoising Performance

In this subsection, we compare the proposed model with several efficient multiplicative denoising models: the AA model [8], the DD model [12], the MuLoG+BM3D model [51], the NTV model [20], the AAFD model [13], and the EE model [29], which is solved by the alternating direction method of multipliers.

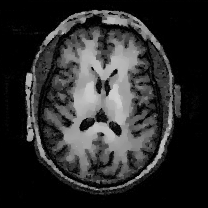

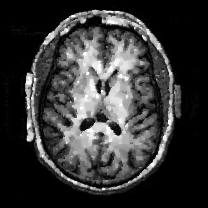

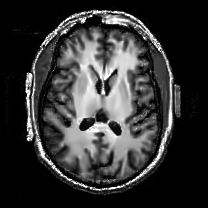

To illustrate the advantages of the model and algorithm, we use gamma noise at noise levels L=1, L=4, and L=10. In general, all models suppress the noise well. However, the NTV model cannot effectively handle the high-noise case L=1, because the expectation and variance of the reciprocal of gamma noise are infinite when L=1. To evaluate the models quantitatively, we report the PSNR and SSIM values of the results of the various methods in Table 1, where the best results are marked in bold. We usually choose a moderate weight for the high-order terms, which alleviates the staircasing effect and supports the prior of the area term. In most cases the AOS algorithm obtains results quickly, but it also incurs larger errors, as seen in the results for L=1 and L=4 on “Halo” in Table 1. Therefore, we recommend using the SAV algorithm to obtain higher-quality results.
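The following sketch shows how such test data could be generated and why L=1 is special. It assumes the common convention that the noise follows a Gamma distribution with shape L and mean 1 (so its variance is 1/L). The reciprocal of such a variable follows an inverse-gamma law, whose mean L/(L-1) is finite only for L>1 and whose variance is finite only for L>2. The function names and the constant test image are illustrative.

```python
import math
import random


def gamma_speckle(clean, looks, rng):
    """Multiply each pixel by Gamma noise with shape `looks` and mean 1 (assumed convention)."""
    return [[p * rng.gammavariate(looks, 1.0 / looks) for p in row] for row in clean]


def reciprocal_noise_mean(looks):
    """E[1/eta] for eta ~ Gamma(shape=L, scale=1/L): L/(L-1) if L > 1, otherwise infinite."""
    return looks / (looks - 1.0) if looks > 1.0 else math.inf


def reciprocal_noise_variance(looks):
    """Var[1/eta]: L^2 / ((L-1)^2 (L-2)) if L > 2, otherwise infinite."""
    if looks <= 2.0:
        return math.inf
    return looks ** 2 / ((looks - 1.0) ** 2 * (looks - 2.0))


rng = random.Random(0)
clean = [[100.0] * 64 for _ in range(64)]
for looks in (1, 4, 10):
    noisy = gamma_speckle(clean, looks, rng)
    sample_mean = sum(map(sum, noisy)) / (64 * 64)
    print(
        f"L={looks}: sample mean={sample_mean:.1f}, "
        f"E[1/eta]={reciprocal_noise_mean(looks)}, "
        f"Var[1/eta]={reciprocal_noise_variance(looks)}"
    )
```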

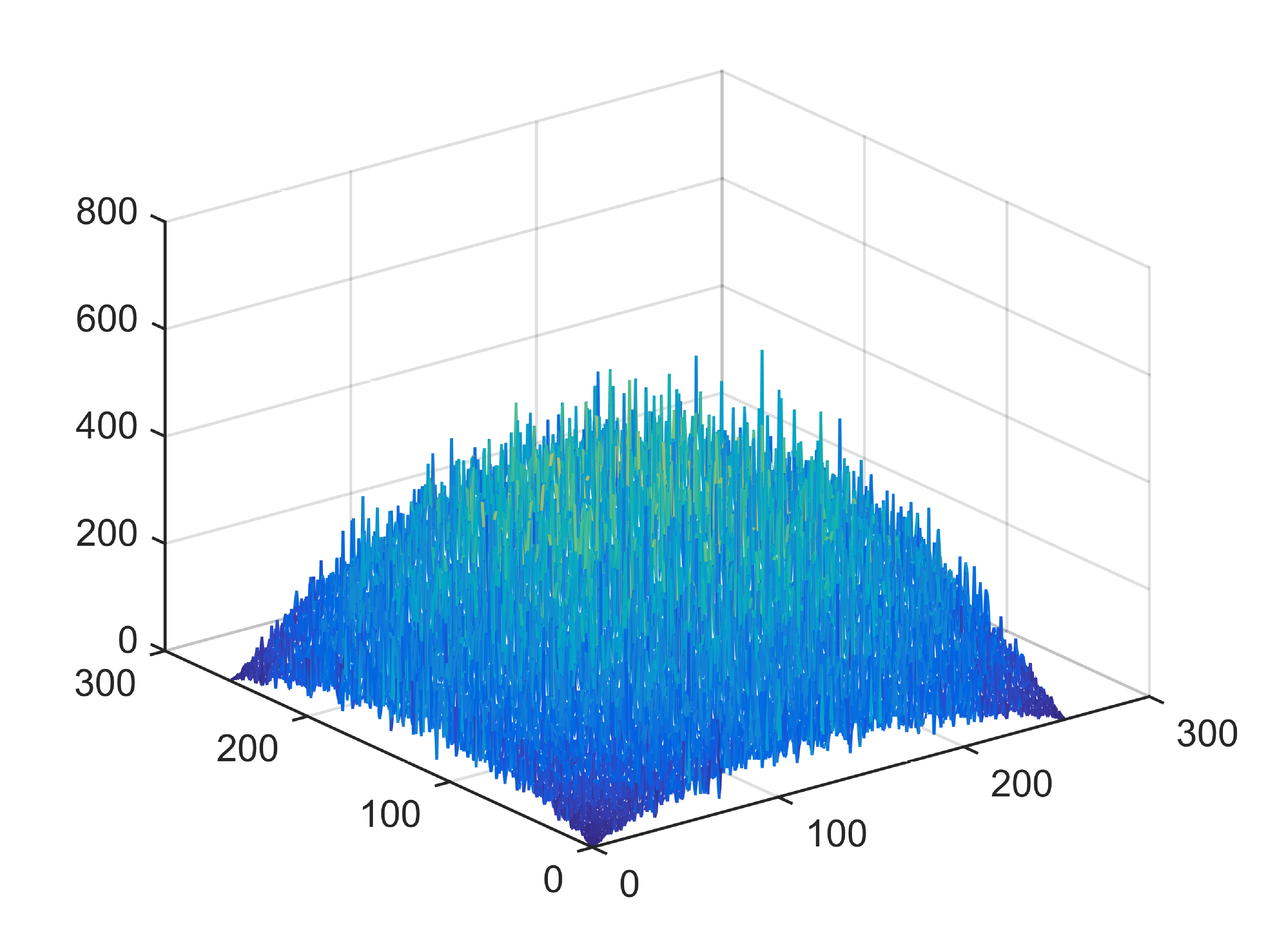

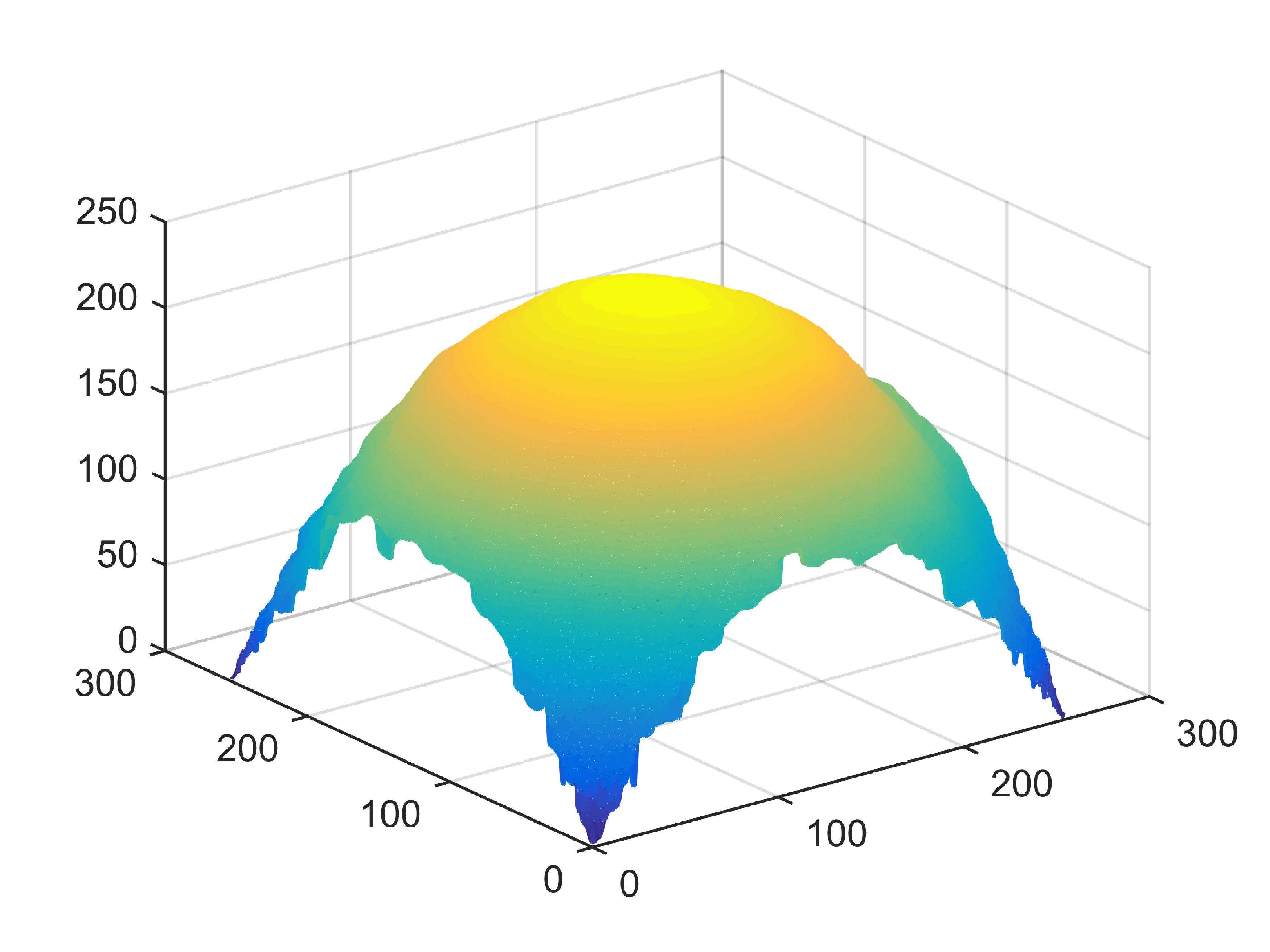

When , an obvious feature is that quite a few white outlier points appear randomly in the image. An advantage of diffusion equation-based methods is that they smooth the image at any noise level. In contrast, the random outliers that remain in MuLoG’s denoising results produce poor visual effects. These phenomena are depicted in Fig. 9. In addition, DD, EE, and our model based on adaptive area denoising can restore the various regions of the image to varying degrees and protect the real texture of the image well. Meanwhile, the MuLoG method effectively preserves the contrast and fine texture of the image. From Figs. 8, 11, and 14, we can clearly see that the AA model produces relatively large linear regions, an artifact to which low-order models are prone. Although NTV removes noise very well, the limitations of the model itself make it difficult to remove strong noise, and dirty spots remain in Fig. 11. It is worth pointing out that, because it relies on block matching, the MuLoG method often produces artificial boundaries, which can easily be seen at the center of “Dartboard” in Fig. 16. For “Halo”, this method fails badly: the false boundaries occupy almost the entire image. Compared with the DD and EE models, the proposed model protects the boundaries very well and yields a denoising result with richer details. This can be seen further in the surface plot of “Halo” in Fig. 17. From Fig. 16 we can see that our model performs best among all models in maintaining the smoothness and connectivity of image lines, which benefits from the curvature prior on image curves.

As can be seen from Table 1, although MuLoG produces more artificial boundaries, it still achieves the best PSNR and SSIM on some images. Compared with other methods, our model effectively avoids staircasing effects on natural images, SAR images, ultrasound images, and synthetic images, and it protects detailed textures while smoothing noise. Especially for “Halo”, a synthetic image with large slopes, the proposed model achieves remarkable results and almost restores the original image, far exceeding the other models. Therefore, our model is very suitable for denoising gradient images.

| Image | Model | PSNR | SSIM | ||||
|---|---|---|---|---|---|---|---|
| L | 1 | 4 | 10 | 1 | 4 | 10 | |
| AA | 21.66 | 23.76 | 19.86 | 0.45 | 0.65 | 0.57 | |
| DD | 22.39 | 24.94 | 25.97 | 0.48 | 0.68 | ||
| MuLoG+BM3D | 22.18 | 24.86 | 0.40 | 0.65 | |||
| Aerial | NTV | - | 23.43 | 24.07 | - | 0.66 | 0.64 |
| EE | 23.45 | 26.79 | 0.59 | 0.78 | |||
| AOS | 22.52 | 24.85 | 26.92 | 0.50 | 0.68 | 0.79 | |
| SAV1 | 22.54 | 27.11 | 0.50 | ||||
| SAV2 | 22.53 | 24.88 | 27.02 | 0.49 | 0.68 | ||
| AA | 16.83 | 19.75 | 20.32 | 0.68 | 0.81 | 0.57 | |
| DD | 18.93 | 22.01 | 24.39 | 0.74 | 0.85 | 0.90 | |
| MuLoG+BM3D | 22.47 | 24.50 | |||||
| Brain | NTV | - | 21.22 | 23.65 | - | 0.83 | 0.87 |
| EE | 18.93 | 21.04 | 23.24 | 0.75 | 0.84 | 0.89 | |
| AOS | 19.23 | 22.12 | 24.10 | 0.75 | 0.84 | 0.88 | |
| SAV1 | 19.36 | 24.73 | 0.74 | 0.86 | 0.90 | ||
| SAV2 | 19.39 | 22.50 | 0.75 | 0.86 | 0.90 | ||
| AA | 27.86 | 31.50 | 33.31 | 0.94 | 0.98 | 0.99 | |
| DD | 33.00 | 36.66 | 39.05 | ||||
| MuLoG+BM3D | 26.20 | 30.79 | 33.31 | 0.78 | 0.87 | 0.88 | |
| Halo | NTV | - | 27.22 | 27.88 | - | 0.98 | 0.97 |
| EE | 29.89 | 34.93 | 36.74 | 0.92 | 0.94 | 0.94 | |
| AOS | 32.13 | 37.18 | |||||
| SAV1 | 40.60 | 0.99 | |||||
| SAV2 | 37.58 | 40.63 | 0.96 | 0.99 |
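For readers who want to reproduce the first metric in Table 1, PSNR can be computed as sketched below. The peak value of 255 (8-bit images) is an assumption, since the dynamic range used in the experiments is not stated in this section. SSIM follows the definition in [50] and is not reproduced here.

```python
import math


def psnr(reference, restored, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized 2D images.

    Assumption: `peak` is the maximum possible intensity (255 for 8-bit data).
    """
    squared_error = 0.0
    count = 0
    for row_ref, row_res in zip(reference, restored):
        for a, b in zip(row_ref, row_res):
            squared_error += (a - b) ** 2
            count += 1
    mean_squared_error = squared_error / count
    if mean_squared_error == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mean_squared_error)


reference = [[10.0, 20.0], [30.0, 40.0]]
restored = [[12.0, 19.0], [29.0, 43.0]]
print(f"PSNR = {psnr(reference, restored):.2f} dB")
```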

5.4 Comparison of First-order and Second-order SAV

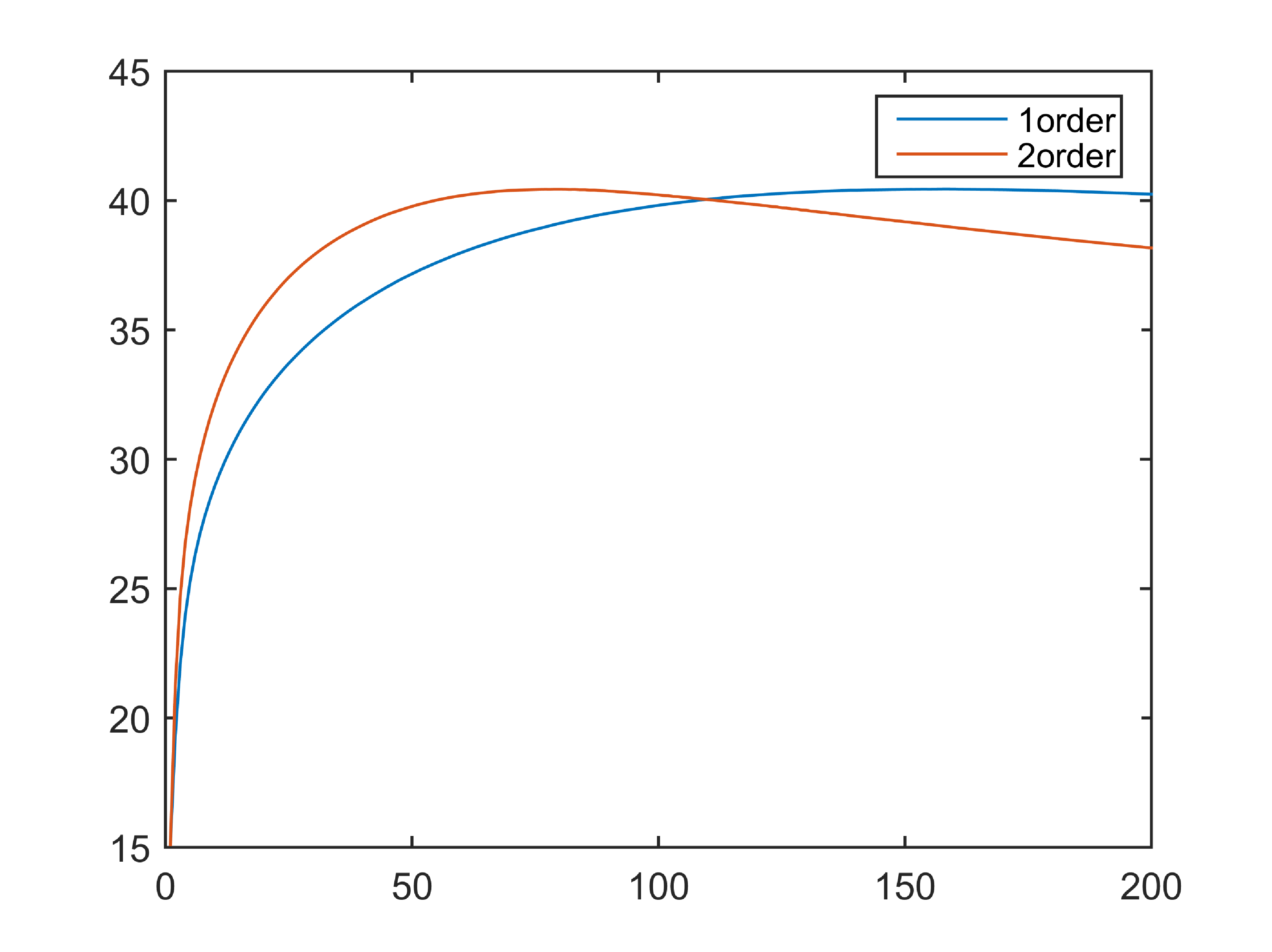

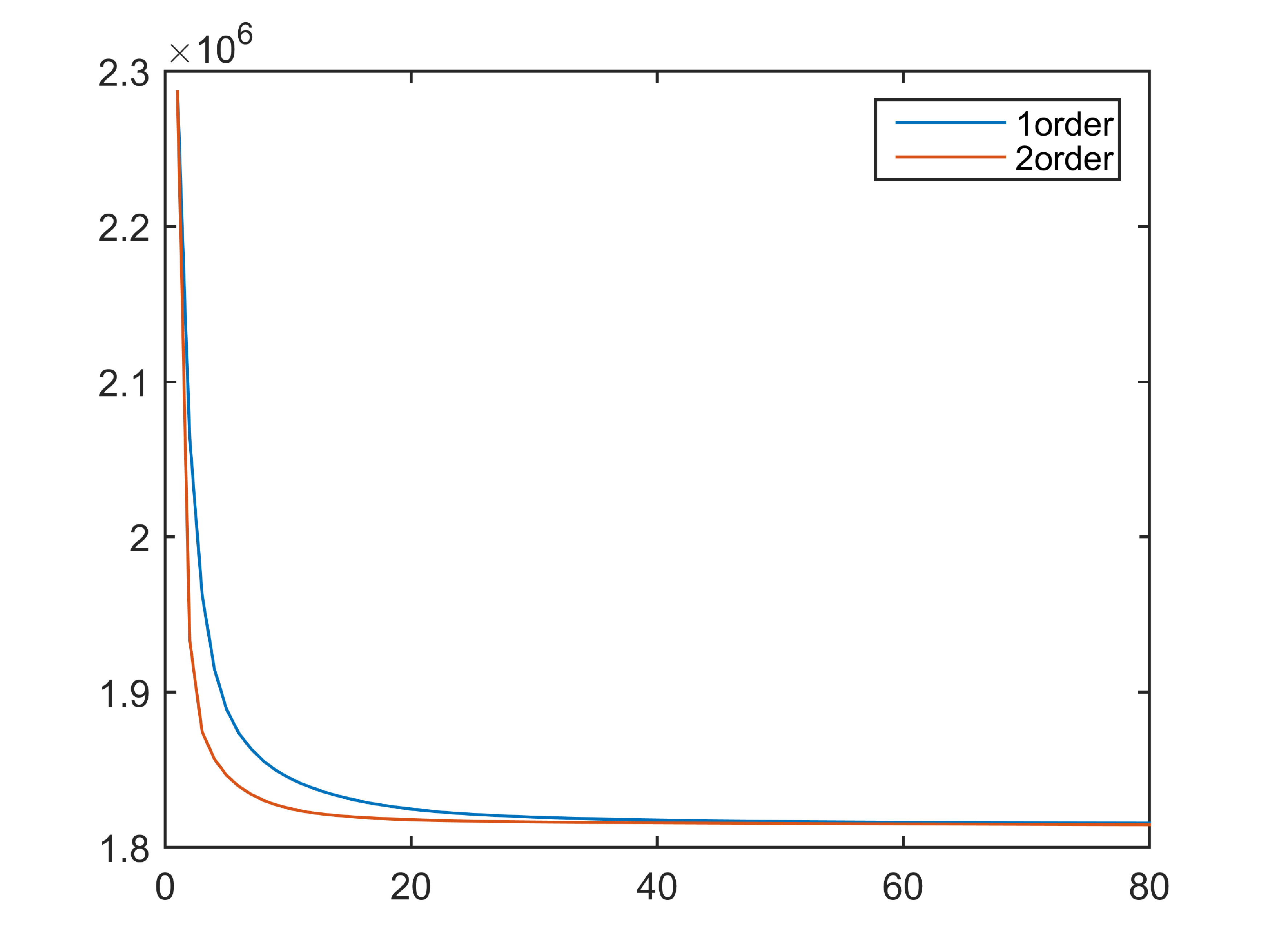

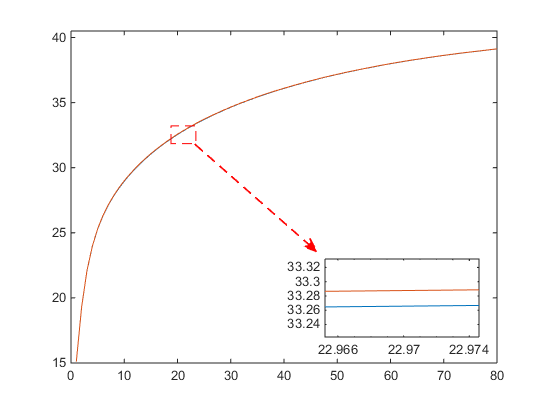

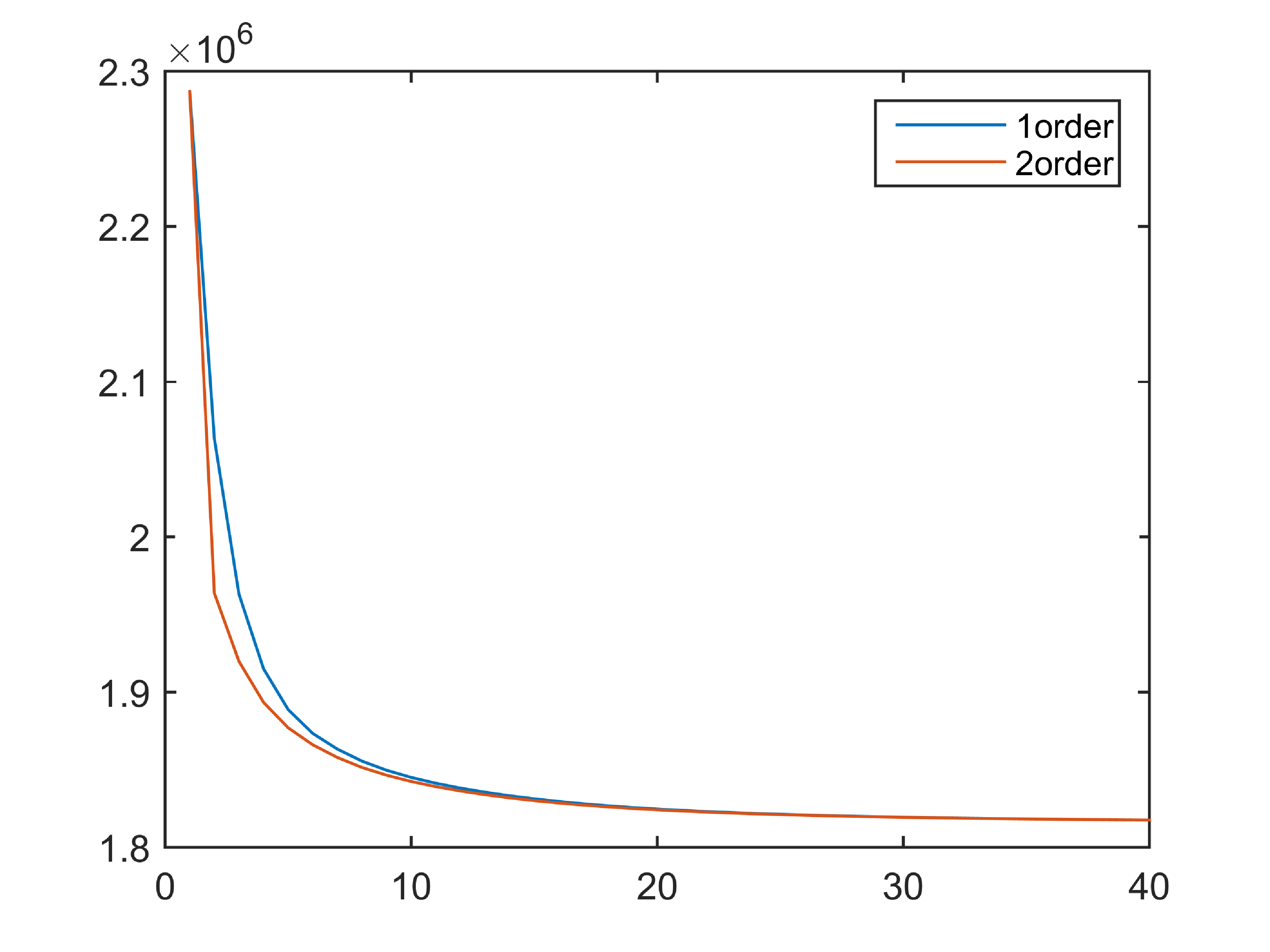

To better verify the advantages of the second-order SAV method, the PSNR and energy decay curves of the first- and second-order schemes on the image “Hole” are shown in Fig. 18. All parameters are kept equal except for the time step size. Compared with the first-order SAV, the second-order scheme clearly reaches the best denoising result after 80 iterations, whereas the first-order scheme requires 200 iterations to reach the same result. The second-order SAV algorithm reaches the best experimental results faster and decays rapidly. Fig. 18b illustrates that the higher-order scheme reduces the energy faster. This means that the second-order scheme can use a larger step size while achieving the same accuracy, which is consistent with the theoretical error analysis of the numerical scheme. To rule out acceleration caused by the step size alone, we also use the same time step for the two schemes. As shown in Fig. 19b, the second-order algorithm still performs well under the same step size and retains an advantage in computing speed over the first-order algorithm. In addition, owing to the advantages of the model itself, the PSNR curves of the two algorithms converge almost identically, but zooming in shows that the second-order curve always stays slightly higher. Under the same step size, the second-order algorithm therefore improves the denoising result to a certain extent compared with the first-order algorithm.
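The link between scheme order and admissible step size can be checked on a toy problem. The sketch below is not the SAV scheme: it compares backward Euler (first order) with BDF2 (second order, used here as a stand-in because this section does not restate which second-order discretization is used) on the scalar gradient flow u' = -u, and estimates the observed order by halving the step. All names are illustrative.

```python
import math


def backward_euler(dt, t_end=1.0):
    """First-order implicit scheme for u' = -u, u(0) = 1."""
    u = 1.0
    for _ in range(round(t_end / dt)):
        u = u / (1.0 + dt)
    return u


def bdf2(dt, t_end=1.0):
    """Second-order BDF2 scheme for u' = -u, started from the exact first step."""
    steps = round(t_end / dt)
    u_prev, u = 1.0, math.exp(-dt)
    for _ in range(steps - 1):
        # (3 u_new - 4 u + u_prev) / (2 dt) = -u_new
        u_prev, u = u, (4.0 * u - u_prev) / (3.0 + 2.0 * dt)
    return u


def observed_order(scheme, dt):
    """Estimate the convergence order from the errors at dt and dt / 2."""
    exact = math.exp(-1.0)
    err_coarse = abs(scheme(dt) - exact)
    err_fine = abs(scheme(dt / 2.0) - exact)
    return math.log2(err_coarse / err_fine)


for name, scheme in (("backward Euler", backward_euler), ("BDF2", bdf2)):
    print(f"{name}: observed order = {observed_order(scheme, 0.1):.2f}")
```

Halving the step roughly halves the error of the first-order scheme but reduces the error of the second-order scheme by about a factor of four, so a second-order scheme can reach a given accuracy with a larger step.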

6 Conclusions

In this paper, we propose a mixed geometry information model for the multiplicative denoising task. The model we propose can well match the characteristics. Based on the surface area and curvature, we design a mixed geometry information term to protect the edges and texture of the image. At the same time, to efficiently address the difficulties of solving highly nonlinear models, we propose AOS and SAV algorithms that are unconditionally stable. The SAV algorithm is recommended for solving our model because of its higher accuracy. Numerical experiments are carried out on SAR images, ultrasound images, and synthetic images, and the results demonstrate the strong performance of our model. Compared with other high-order methods, our model maintains image contrast well and protects detailed texture and edge information. In addition, the quantitative results show that the proposed model compares favorably with other state-of-the-art multiplicative denoising models.

Data availability statement

No new data were created or analysed in this study.

Acknowledgements

This work is partially supported by the National Natural Science Foundation of China (Nos. U21B2075, 12171123, 12101158 and 12301536), the Fundamental Research Funds for the Central Universities (Nos. 2022FRFK060020 and 2022FRFK060029), and the Natural Sciences Foundation of Heilongjiang Province (No. ZD2022A001).

References

- [1] J Patrick Fitch. Synthetic aperture radar. Springer Science & Business Media, 2012.

- [2] Chris Oliver and Shaun Quegan. Understanding synthetic aperture radar images. SciTech Publishing, 2004.

- [3] Joseph W Goodman. Statistical properties of laser speckle patterns. In Laser speckle and related phenomena, pages 9–75. Springer, 1975.

- [4] Robert F Wagner, Stephen W Smith, John M Sandrik, and Hector Lopez. Statistics of speckle in ultrasound B-scans. IEEE Trans. Son. Ultrason., 30(3):156–163, 1983.

- [5] John M Ollinger and Jeffrey A Fessler. Positron-emission tomography. IEEE Signal Proc. Mag., 14(1):43–55, 1997.

- [6] Richard Bamler. Principles of synthetic aperture radar. Surv. Geophys., 21(2-3):147–157, 2000.

- [7] Xiangchu Feng and Xiaolong Zhu. Models for multiplicative noise removal. Math. Model Algor. Comput. Vis. Imag., pages 1–34, 2021.

- [8] Gilles Aubert and Jean-François Aujol. A variational approach to removing multiplicative noise. SIAM J. Appl. Math., 68(4):925–946, 2008.

- [9] Jianing Shi and Stanley Osher. A nonlinear inverse scale space method for a convex multiplicative noise model. SIAM J. Imaging Sci., 1(3):294–321, 2008.

- [10] Zhengmeng Jin and Xiaoping Yang. Analysis of a new variational model for multiplicative noise removal. J. Math. Anal. Appl., 362(2):415–426, 2010.

- [11] Asmat Ullah, Wen Chen, Mushtaq Ahmad Khan, and HongGuang Sun. A new variational approach for multiplicative noise and blur removal. PLOS ONE, 12(1):1–26, 2017.

- [12] Zhenyu Zhou, Zhichang Guo, Gang Dong, Jiebao Sun, Dazhi Zhang, and Boying Wu. A doubly degenerate diffusion model based on the gray level indicator for multiplicative noise removal. IEEE Trans. Image Process., 24(1):249–260, 2015.

- [13] Wenjuan Yao, Zhichang Guo, Jiebao Sun, Boying Wu, and Huijun Gao. Multiplicative noise removal for texture images based on adaptive anisotropic fractional diffusion equations. SIAM J. Imaging Sci., 12(2):839–873, 2019.

- [14] Satyakam Baraha, Ajit Kumar Sahoo, and Sowjanya Modalavalasa. A systematic review on recent developments in nonlocal and variational methods for SAR image despeckling. Signal Process., 196:108521, 2022.

- [15] T. Teuber and A. Lang. A new similarity measure for nonlocal filtering in the presence of multiplicative noise. Comput. Statist. Data Anal., 56(12):3821–3842, 2012.

- [16] Charles-Alban Deledalle, Loïc Denis, Florence Tupin, Andreas Reigber, and Marc Jäger. NL-SAR: A unified nonlocal framework for resolution-preserving (Pol)(In)SAR denoising. IEEE Trans. Geosci. Remote Sens., 53(4):2021–2038, 2014.

- [17] Pedro AA Penna and Nelson DA Mascarenhas. SAR speckle nonlocal filtering with statistical modeling of Haar wavelet coefficients and stochastic distances. IEEE Trans. Geosci. Remote Sens., 57(9):7194–7208, 2019.

- [18] Leonid Rudin, Pierre-Louis Lions, and Stanley Osher. Multiplicative denoising and deblurring: theory and algorithms. In Stanley Osher and Nikos Paragios, editors, Geometric Level Set Methods in Imaging, Vision, and Graphics. Springer, 2003.

- [19] Yu-Mei Huang, Michael K. Ng, and You-Wei Wen. A new total variation method for multiplicative noise removal. SIAM J. Imaging Sci., 2(1):20–40, 2009.

- [20] Xi-Le Zhao, Fan Wang, and Michael K. Ng. A new convex optimization model for multiplicative noise and blur removal. SIAM J. Imaging Sci., 7(1):456–475, 2014.

- [21] Tony Chan, Selim Esedoglu, Frederick Park, A Yip, et al. Recent developments in total variation image restoration. Math. Models Comput. Vis., 17(2):17–31, 2005.

- [22] David Strong and Tony Chan. Edge-preserving and scale-dependent properties of total variation regularization. Inverse Problems, 19(6):165–187, 2003.

- [23] Wei Zhu and Tony Chan. Image denoising using mean curvature of image surface. SIAM J. Imaging Sci., 5(1):1–32, 2012.

- [24] K. Papafitsoros and C. B. Schönlieb. A combined first and second order variational approach for image reconstruction. J. Math. Imaging Vision, 48(2):308–338, 2014.

- [25] Mushtaq Ahmad Khan, Wen Chen, and Asmat Ullah. Higher order variational multiplicative noise removal model. In 2015 int conf comput comput sci ICCCS, pages 116–118. IEEE, 2015.

- [26] Li Gun, Li Cuihua, Zhu Yingpan, and Huang Feijiang. An improved speckle-reduction algorithm for SAR images based on anisotropic diffusion. Multimedia Tools Appl., 76(17):17615–17632, 2017.

- [27] Fuquan Ren and Roberta Rui Zhou. Optimization model for multiplicative noise and blur removal based on Gaussian curvature regularization. J. Opt. Soc. Am. A, 35(5):798–812, 2018.

- [28] Xinli Xu, Teng Yu, Xinmei Xu, Guojia Hou, Ryan Wen Liu, and Huizhu Pan. Variational total curvature model for multiplicative noise removal. IET Comput. Vis., 12(4):542–552, 2018.

- [29] Yu Zhang, Songsong Li, Zhichang Guo, Boying Wu, and Shan Du. Image multiplicative denoising using adaptive Euler’s elastica as the regularization. J. Sci. Comput., 90(2):1–34, 2022.

- [30] Guillermo Sapiro and Allen Tannenbaum. On invariant curve evolution and image analysis. Indiana Univ. Math. J., pages 985–1009, 1993.

- [31] Anthony Yezzi. Modified curvature motion for image smoothing and enhancement. IEEE Trans. Image Process., 7(3):345–352, 1998.

- [32] Ron Kimmel, Ravi Malladi, and Nir Sochen. Images as embedded maps and minimal surfaces: movies, color, texture, and volumetric medical images. Int. J. Comput. Vis., 39(2):111–129, 2000.

- [33] Peihua Qiu and Partha Sarathi Mukherjee. Edge structure preserving 3D image denoising by local surface approximation. IEEE Trans. Pattern Anal. Mach. Intell., 34(8):1457–1468, 2011.

- [34] Marius Lysaker, Arvid Lundervold, and Xue-Cheng Tai. Noise removal using fourth-order partial differential equation with applications to medical magnetic resonance images in space and time. IEEE Trans. Image Process., 12(12):1579–1590, 2003.

- [35] Xue-Cheng Tai, Jooyoung Hahn, and Ginmo Jason Chung. A fast algorithm for Euler’s elastica model using augmented Lagrangian method. SIAM J. Imaging Sci., 4(1):313–344, 2011.

- [36] Xue-Cheng Tai. Fast numerical schemes related to curvature minimization:a brief and elementary review. Actes des rencontres du CIRM, 3(1):17–30, 2013.

- [37] Yuping Duan, Yu Wang, and Jooyoung Hahn. A fast augmented Lagrangian method for Euler’s elastica models. Numer. Math. Theory Methods Appl., 6(1):47–71, 2013.

- [38] Wei Zhu, Xue-Cheng Tai, and Tony Chan. Augmented Lagrangian method for a mean curvature based image denoising model. Inverse Probl. Imaging, 7(4):1409–1432, 2013.

- [39] Luca Calatroni, Alessandro Lanza, Monica Pragliola, and Fiorella Sgallari. Adaptive parameter selection for weighted-TV image reconstruction problems. J. Phys. Conf. Ser., 1476(1):012003, 2020.

- [40] Jie Shen, Jie Xu, and Jiang Yang. The scalar auxiliary variable (SAV) approach for gradient flows. J. Comput. Phys., 353:407–416, 2018.

- [41] Chenxin Wang, Zhenwei Zhang, Zhichang Guo, Tieyong Zeng, and Yuping Duan. Efficient SAV algorithms for curvature minimization problems. IEEE Trans. Circuits Syst. Video Technol., 33(4):1624–1642, 2022.

- [42] Martin Burger. A level set method for inverse problems. Inverse Problems, 17(5):1327–1355, 2001.

- [43] Joachim Weickert, BM Ter Haar Romeny, and Max A Viergever. Efficient and reliable schemes for nonlinear diffusion filtering. IEEE Trans. Image Process., 7(3):398–410, 1998.

- [44] Richard W. Cottle, Jong-Shi Pang, and Richard E. Stone. The linear complementarity problem, volume 60 of Classics in Applied Mathematics. SIAM, 2009.

- [45] Dongbo Min, Sunghwan Choi, Jiangbo Lu, Bumsub Ham, Kwanghoon Sohn, and Minh N Do. Fast global image smoothing based on weighted least squares. IEEE Trans. Image Process., 23(12):5638–5653, 2014.

- [46] Pablo F Alcantarilla and T Solutions. Fast explicit diffusion for accelerated features in nonlinear scale spaces. IEEE Trans. Pattern Anal. Mach. Intell., 34(7):1281–1298, 2011.

- [47] Jie Shen, Jie Xu, and Jiang Yang. A new class of efficient and robust energy stable schemes for gradient flows. SIAM Rev., 61(3):474–506, 2019.

- [48] Wenjuan Yao, Jie Shen, Zhichang Guo, Jiebao Sun, and Boying Wu. A total fractional-order variation model for image super-resolution and its SAV algorithm. J. Sci. Comput., 82(3):1–18, 2020.

- [49] Xiangyu Bai, Jiebao Sun, Jie Shen, Wenjuan Yao, and Zhichang Guo. A Ginzburg-Landau-$H^{-1}$ model and its SAV algorithm for image inpainting. J. Sci. Comput., 96(2):1–27, 2023.

- [50] Zhou Wang, Alan C Bovik, Hamid R Sheikh, and Eero P Simoncelli. Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process., 13(4):600–612, 2004.

- [51] Charles-Alban Deledalle, Loïc Denis, Sonia Tabti, and Florence Tupin. MuLoG, or how to apply Gaussian denoisers to multi-channel SAR speckle reduction? IEEE Trans. Image Process., 26(9):4389–4403, 2017.