Mixed Supervision of Histopathology Improves Prostate Cancer Classification from MRI

Abstract

Non-invasive prostate cancer detection from MRI has the potential to revolutionize patient care by providing early detection of clinically significant disease (ISUP grade group ≥ 2), but it has thus far shown limited positive predictive value. To address this, we present an MRI-based deep learning method for predicting clinically significant prostate cancer. The method applies to a patient population whose subsequent ground-truth biopsy results range from benign pathology to ISUP grade group 5. Specifically, we demonstrate that mixed supervision via diverse histopathological ground truth improves classification performance, at the cost of reduced concordance with image-based segmentation. Prior approaches have used pathology results from targeted biopsies and whole-mount prostatectomy as ground truth to strongly supervise the localization of clinically significant cancer. Our approach also uses weak supervision signals extracted from nontargeted systematic biopsies with regional localization to improve overall performance. Our key innovation is performing regression by distribution rather than simply by value, which enables the use of additional pathology findings traditionally ignored by deep learning strategies. We evaluated our model on a dataset of 973 (testing n = 198) multi-parametric prostate MRI exams collected at UCSF from 2015-2018. Each exam was followed by MRI/ultrasound fusion (targeted) biopsy and systematic (nontargeted) biopsy of the prostate gland. We demonstrate that deep networks trained with mixed supervision of histopathology can significantly exceed the performance of the Prostate Imaging-Reporting and Data System (PI-RADS) clinical standard for prostate MRI interpretation.

MRI, prostate cancer, deep learning, histopathology, regression, weak spatial supervision

1 Introduction

Although prostate cancer has the highest incidence of any invasive cancer in American men, five-year survival from localized prostate cancer is nearly 100% [1]. Prostate-specific antigen (PSA) blood tests, historically used for prostate cancer screening, have high sensitivity but low specificity. Prostate MRI has been widely incorporated into practice in combination with the Prostate Imaging-Reporting and Data System (PI-RADS). This allows direct MR-guided biopsy of the prostate gland or, more commonly, MR/ultrasound fusion biopsy [2, 3]. However, the positive predictive value of PI-RADS for clinically significant prostate cancer (CS-PCa) varies widely and is low overall [4]. Thus, there is great interest in developing data-driven deep learning methods to augment prostate MRI interpretation and improve detection of CS-PCa.

Treatment decisions for prostate cancer are driven by biopsy of the prostate gland, an invasive procedure with possible complications. Historically, systematic biopsy has been used to sample sextants of the prostate gland (left apex, left mid gland, left base, right apex, right mid gland, and right base) as estimated by the urologist. MRI/ultrasound fusion biopsy can instead target lesions identified as suspicious on prostate MRI. However, prostate biopsies do not sample the entire gland and may miss small and subtle tumors. Whole-mount analysis of prostatectomy specimens, in which the entire prostate gland is surgically removed, provides better ground truth because the entire gland is analyzed. However, only a small proportion of patients undergo prostatectomy, and relying on this population leads to significant sampling bias. Ultimately, biopsy data are abundant and better represent the population initially evaluated for prostate cancer by prostate MRI. Thus, an artificial intelligence (AI) strategy aimed at detecting CS-PCa in the population being screened for prostate cancer must incorporate prostate biopsy data.

Most deep learning strategies focus on MRI-visible disease, which subsequently undergoes MRI/ultrasound fusion (i.e., targeted) biopsy. (However, these prior approaches often report results inconsistently, omitting comparisons to PI-RADS or the distribution of tumor grades, which ultimately leads to poor generalization of the developed models [5].) In [6], the authors used lesion biopsy data and T2-weighted MRI from 312 men with a 2D UNet model, achieving 62-62.5% balanced accuracy, but found that the model was not statistically better than PI-RADS. In [7], the authors focused on clinically significant cancer in 417 patients who underwent prostatectomy. They achieved 0.79-0.81 AUC for CS-PCa detection using multi-parametric MRI, but accuracy was 1.5-3.5% lower than that of radiologists. The authors of [8] used the Prostate-X2 challenge dataset. They found a voxel-wise kappa of 0.446 ± 0.082 using cross-validation, but a lesion-wise kappa of 0.13 ± 0.27 on the test set. Meanwhile, [9] showed with 986 exams that image-level labels can achieve 0.75 ± 0.03 AUC in grade group classification via attention-based multiple instance learning. Finally, [10] used 1043 in-house and 347 Prostate-X exams. They showed that a 3D UNet could predict CS-PCa in prostate MRI lesions with 56.1% sensitivity and 62.7% precision, compared to 30.8% classification accuracy with PI-RADS. The reported metrics differ, and balancing model sensitivity against false-positive rate remains difficult [11]. Nevertheless, the consensus is that advanced deep learning techniques can feasibly classify cancer inside suspicious MRI-visible lesions.

Most AI models for prostate cancer detection rely on MRI-visible lesions. However, not all tumors are visible on prostate MRI. For example, a study using our dataset (973 prostate MRI exams conducted at UCSF from 2015-2018 with subsequent MRI/ultrasound fusion biopsy and full systematic biopsy) showed that 14.4% of cases had a clinically significant upgrade of prostate cancer by nontargeted systematic biopsy compared to MRI-targeted biopsy (i.e., of MRI-visible lesions) [12]. Thus, automated algorithms for the detection and risk stratification of prostate cancer may improve by incorporating two sources of data. These are targeted fusion biopsy of MRI-visible lesions and nontargeted systematic biopsy of disease not necessarily perceived prospectively on MRI.

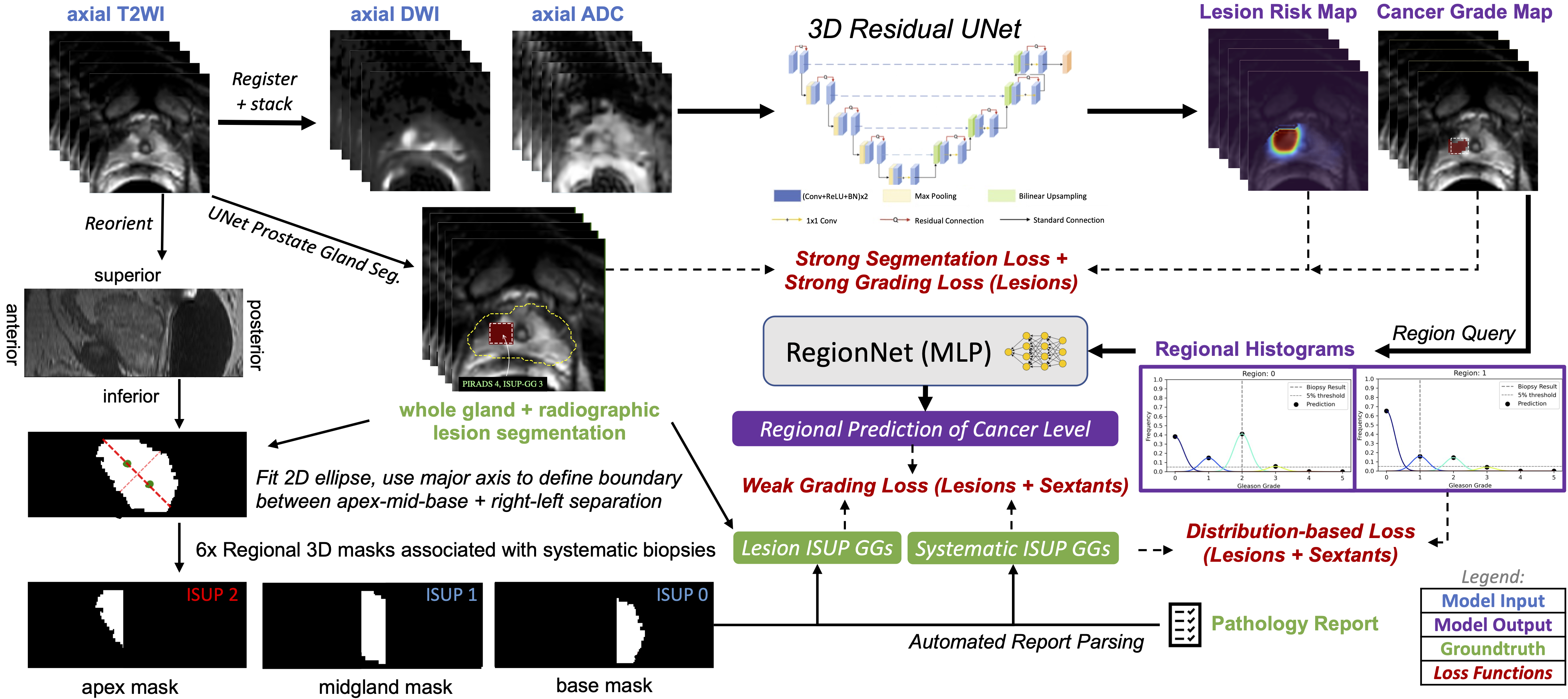

In this paper, we describe a new approach that incorporates additional supervision from all biopsy data into deep neural networks (DNNs) for identifying CS-PCa from MRI. Specifically, we introduce a unified classification model architecture, 3D UCNet. It is composed of global, regional, and spatial prediction modules that predict cancer on an exam-wise, region-wise, and voxel-wise basis, respectively (Fig. 1). Of primary importance in our approach is how we encode and exploit histopathology ground truth. Unlike the cardinal encoding used in [7], we adopt a multi-class histogram representation that supports regional classification of homogeneous and inhomogeneous tissue via a classification head. We also introduce a novel distribution-based loss function that enables visualization of cancer grade prediction in each region. Handling inhomogeneous tissue is essential for leveraging systematic biopsy data. The full extent of the cancerous region sampled by a systematic biopsy core is not salient on MRI. The Gleason pattern or ISUP grade group (GG) extracted from the pathology report must therefore be applied carefully to avoid inconsistency during model training.

1.1 Contributions

Thus, our contributions are as follows:

- We introduce 3D UCNet, which features regional and global prediction modules that enable gland-level classification of cancer from a semantic segmentation backbone.
- We introduce a histogram-based representation that enables a new type of indirect (weak) supervision consistent with how biopsy cores are annotated by pathologists.
- We demonstrate that mixed supervision techniques expand the type of histopathology ground truth used in training and improve CNN-based detection of clinically significant prostate cancer from prostate MRI.
- We open source our models, algorithms, and analysis: https://gitlab.com/abhe/prostate-mpmri

2 Dataset and Assumptions

2.1 Multi-parametric Prostate MRI

Multi-parametric prostate MRI (mp-MRI) is composed of T2-weighted imaging (T2WI), diffusion-weighted imaging (DWI) with an associated apparent diffusion coefficient (ADC) map, and dynamic contrast-enhanced (DCE) MRI. DWI typically includes both low and high b-value images. Together with the associated ADC map, it represents the restriction of water movement in tissue, which is thought to complement the anatomic T2WI series for detecting prostate cancer [13]. DCE provides data on the contrast-enhancement kinetics of the imaged soft tissues and may help to delineate prostate tumors. Altogether, mp-MRI provides a rich set of information. However, variations due to differences in MR pulse sequence, scanner hardware, and individual patients are commonplace.

Herein, we used bi-parametric MRI (bp-MRI), consisting of only the T2WI and DWI data [14, 15]. The T2WI, high b-value DWI, and ADC map were registered and normalized. They were also uniformly resampled across the dataset to a spatial resolution of [1.0, 1.0, 2.24] mm along the x, y, and z axes, respectively. For analysis, these imaging volumes were cropped around the center of the prostate gland to final dimensions of [64, 64, 32] voxels.
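The cropping step above can be sketched as follows. This is a minimal illustration, not the authors' code. It assumes the crop is centered on the centroid of a binary gland mask and that the window is zero-padded wherever it extends past the image border. The function name `crop_around_gland` is hypothetical.

```python
import numpy as np

def crop_around_gland(volume, gland_mask, out_shape=(64, 64, 32)):
    """Crop a resampled (x, y, z) volume to out_shape, centered on the gland.

    Hypothetical sketch: centers on the gland-mask centroid and zero-pads
    wherever the crop window extends past the image border.
    """
    center = np.round(np.argwhere(gland_mask).mean(axis=0)).astype(int)
    out = np.zeros(out_shape, dtype=volume.dtype)
    src, dst = [], []
    for c, size, n in zip(center, out_shape, volume.shape):
        start = c - size // 2                          # window start, may be < 0
        lo, hi = max(start, 0), min(start + size, n)   # clip window to the image
        src.append(slice(lo, hi))
        dst.append(slice(lo - start, hi - start))      # matching slots in output
    out[tuple(dst)] = volume[tuple(src)]
    return out
```

The same window would be applied to the T2WI, high b-value DWI, and ADC channels so that they stay aligned after registration.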

2.2 Histopathology Derived from Prostate Biopsy

Prostate MRI exams are typically performed after prostate-specific antigen (PSA) is found to be elevated, along with clinical assessment by a urologist. For our dataset, fellowship-trained abdominal imaging radiologists at UCSF provided routine clinical interpretation of prostate MRI exams. They identified MRI targets, each with an associated PI-RADS score. A urologist then biopsied these MRI targets using MRI/ultrasound fusion guidance. In this procedure, software fuses an axial T2WI with live ultrasound so that MRI targets can be sampled under ultrasound guidance. In addition to these targeted biopsies, urologists routinely performed systematic biopsies, sampling the prostate in 6 sextants (left apex, left mid gland, left base, right apex, right mid gland, right base). Because the exact coordinates of these systematic biopsies are not recorded, each is associated only with a sextant region of the prostate gland. Thus, for our dataset, we assumed that each systematic biopsy was located in a region obtained by geometrically dividing the prostate into sextants in the registered MR image space. For the targeted biopsies, we assumed that each biopsy location corresponded to a radiologist-annotated bounding box around the MRI-identified lesion on each applicable slice (Fig. 1). The ground-truth histopathology for each biopsy sample was determined by a genitourinary pathologist with more than 20 years of experience, who graded all specimens using the Gleason score [16]. Gleason scores were converted to ISUP grade groups (1-5), and we used 0 to represent benign pathology. ISUP grade group ≥ 2 indicates clinically significant disease.
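The label conversion and sextant assumption above can be sketched as below. `gleason_to_isup` follows the standard ISUP grade group definitions: Gleason ≤ 6 is GG1, 3+4 is GG2, 4+3 is GG3, 8 is GG4, and 9-10 is GG5. Benign pathology is encoded as 0, as in the text. `sextant_masks` is only a hypothetical illustration of a "geometric division" of the gland. It splits the gland's bounding box at the left/right midline and into three equal apex/mid/base slabs. The assumed axis orientation (axis 0 right to left, axis 2 apex to base) depends on the registered image space and is not stated in the source.

```python
from typing import Optional

import numpy as np

def gleason_to_isup(primary: Optional[int], secondary: Optional[int]) -> int:
    """Map a Gleason pattern pair to ISUP grade group (0 = benign, as in the paper)."""
    if primary is None or secondary is None:
        return 0
    score = primary + secondary
    if score <= 6:
        return 1
    if score == 7:
        return 2 if primary == 3 else 3   # 3+4 -> GG2, 4+3 -> GG3
    if score == 8:
        return 4
    return 5                              # Gleason score 9-10

def is_clinically_significant(grade_group: int) -> bool:
    return grade_group >= 2

def sextant_masks(gland_mask):
    """Hypothetical geometric split of a binary (x, y, z) gland mask into sextants.

    Assumes axis 0 runs from patient right to left and axis 2 from apex to
    base; the true orientation depends on the registered image space.
    """
    xs, _, zs = np.nonzero(gland_mask)
    x_mid = (xs.min() + xs.max() + 1) / 2.0            # left/right midline
    z_edges = np.linspace(zs.min(), zs.max() + 1, 4)    # three equal-height slabs
    X, _, Z = np.indices(gland_mask.shape)
    gland = gland_mask.astype(bool)
    masks = {}
    for side, in_side in (("right", X < x_mid), ("left", X >= x_mid)):
        for k, level in enumerate(("apex", "mid", "base")):
            in_level = (Z >= z_edges[k]) & (Z < z_edges[k + 1])
            masks[f"{side} {level}"] = gland & in_side & in_level
    return masks
```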

2.3 Gland Segmentation and Contrast Normalization

Because T2WI and DWI have arbitrary, non-quantitative image amplitudes, we applied interquartile range (IQR)-based intra-image normalization. This standardizes MR image intensity values across research sites and suppresses outlying values created by imaging artifacts. Specifically, each image was normalized by the image-level IQR computed within the 3D prostate gland, following [17]. The gland was either annotated by a radiologist or defined by a previously developed neural network segmentation model:

| (1) |

We subsequently applied Z-score normalization to each exam, transforming intensities to zero mean and unit variance to reduce the high inter-patient variability of intensity distributions.
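Equation (1) could not be recovered from the source, so the following is only a sketch of one common IQR-based scheme, not necessarily the exact form used via [17]. It subtracts the within-gland median, divides by the within-gland IQR, and then applies the exam-wide Z-score described above. The function name and the `eps` guard are hypothetical.

```python
import numpy as np

def normalize_exam_image(image, gland_mask, eps=1e-8):
    """Assumed form: robust IQR scaling inside the gland, then a Z-score."""
    gland_vals = image[gland_mask.astype(bool)]
    q25, q50, q75 = np.percentile(gland_vals, [25, 50, 75])
    robust = (image - q50) / (q75 - q25 + eps)               # IQR-based intra-image scaling
    return (robust - robust.mean()) / (robust.std() + eps)   # zero mean, unit variance
```

Because both steps are affine in the input intensities, a scanner-dependent gain and offset applied to the whole image cancels out. This property is what makes the scheme useful for arbitrary MR amplitudes.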

2.4 Summary of Datasets

Table 1 summarizes the distribution of maximum ISUP grade groups in the training, validation, and testing cohorts used in this study. Clinical exam data from 2015-2018 were collected at the University of California, San Francisco (UCSF) under IRB approval. As is evident, the distribution of ISUP grade groups in our dataset is imbalanced.

| | Training | Validation | Testing |
|---|---|---|---|
| max ISUP 0 | 92 (13.5%) | 17 (17.7%) | 24 (12.1%) |
| max ISUP 1 | 222 (32.7%) | 27 (28.1%) | 73 (36.9%) |
| max ISUP 2 | 228 (33.6%) | 30 (31.3%) | 61 (30.8%) |
| max ISUP 3-5 | 137 (20.2%) | 22 (22.9%) | 40 (20.2%) |
| Totals | 679 (69.8%) | 96 (9.9%) | 198 (20.3%) |

All 973 patient exams used in this study included systematic biopsy results from all 6 sextants and at least one targeted biopsy result. In total, 7278 biopsy results were used in training, validation, or testing. Each exam also includes a PI-RADS score for each targeted biopsy, assigned by a board-certified radiologist during clinical interpretation.
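As a quick consistency check on these counts, assume that each exam contributes exactly one result per sextant and that every remaining result comes from a targeted biopsy. Under that assumption, the totals imply about 1.5 targeted biopsy results per exam. The split sizes are taken from Table 1.

```python
n_exams = 973
split_sizes = {"training": 679, "validation": 96, "testing": 198}  # Table 1 totals
total_biopsy_results = 7278
sextants_per_exam = 6

assert sum(split_sizes.values()) == n_exams
systematic = n_exams * sextants_per_exam        # one result per sextant per exam (assumed)
targeted = total_biopsy_results - systematic    # remainder attributed to targeted biopsies
targeted_per_exam = targeted / n_exams
print(systematic, targeted, round(targeted_per_exam, 2))  # prints: 5838 1440 1.48
```

Since 1440 ≥ 973, this breakdown is compatible with every exam having at least one targeted biopsy result.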

3 UCNet for Mixed Histopathology Supervision

For this problem, we used an image-to-image CNN with an additional fully connected classification output head, a unified classification architecture we call “UCNet”. Our UCNet implementation takes the 3D T2WI, high b-value DWI, and ADC map as input. It predicts lesion segmentation maps, ISUP grade group maps, and region-wise histograms that are used to determine region-wise and exam-wise cancer severity. We believe this architecture is applicable to many deep learning tasks in medical imaging. It is particularly well suited to the variety of ground-truth histopathology data available for prostate cancer. Of particular importance here is the dynamically populated multi-task objective used to train UCNet on highly heterogeneous pathology ground truth collected across the UCSF patient population.

Specifically, we use a fully convolutional 3D residual UNet [18] as the backbone of UCNet. Here, the backbone accepts a three-channel 3D image tensor (T2WI, high b-value DWI, and ADC map) as input. It produces voxel-wise tanh-activated lesion maps and softmax-activated grade-group membership predictions, with one output channel per grade-group class chosen for regression. For example, two classes suffice for binary detection of CS-PCa (ISUP grade group ≥ 2). The number of classes is an adjustable hyperparameter, but we find that the binary setting yields slightly higher validation accuracy for detecting CS-PCa than fully multi-class grading approaches.

3.1 Voxel-wise Prediction

We used strong supervision for lesion segmentation and lesion grading wherever relatively localized information was available.

We used a combination of Dice and class-weighted binary cross-entropy (BCE) losses to supervise the radiological lesion segmentation output against the ground-truth segmentations, since this combination has been shown to achieve higher accuracy [19]:

| (2) |

| (3) |

| (4) |

Here, a small constant is added to avoid numerical overflow from division by zero, and BCE denotes the binary cross-entropy function. The class weights are computed from the number of voxels in each class and the number of classes. The ground-truth lesion mask is the voxel-wise maximum of the region masks over all regions whose supervision signal equals 1. This signal is stored in the first column of the histopathology matrix, and a value of 1 denotes an MRI-annotated lesion.
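Because Equations 2-4 were lost in extraction, the following is only a sketch of a typical soft Dice plus class-weighted BCE loss. It is not the paper's exact formulation. It assumes inverse-frequency class weights w_c = N / (C · N_c) with C = 2 classes. It also assumes that the tanh-activated lesion map has already been rescaled to probabilities in [0, 1], for example via (tanh + 1) / 2. All function names are hypothetical.

```python
import numpy as np

def soft_dice_loss(pred, target, eps=1e-6):
    """1 - soft Dice; eps is the small constant that avoids division by zero."""
    inter = np.sum(pred * target)
    return 1.0 - (2.0 * inter + eps) / (np.sum(pred) + np.sum(target) + eps)

def class_weighted_bce(pred, target, eps=1e-7):
    """BCE with assumed inverse-frequency class weights w_c = N / (C * N_c), C = 2."""
    pred = np.clip(pred, eps, 1.0 - eps)
    n = target.size
    n_pos = max(float(target.sum()), 1.0)
    n_neg = max(n - float(target.sum()), 1.0)
    w_pos, w_neg = n / (2.0 * n_pos), n / (2.0 * n_neg)
    per_voxel = -(w_pos * target * np.log(pred) + w_neg * (1.0 - target) * np.log(1.0 - pred))
    return float(per_voxel.mean())

def segmentation_loss(pred, target):
    """pred: lesion probabilities in [0, 1]; target: binary lesion mask."""
    return soft_dice_loss(pred, target) + class_weighted_bce(pred, target)
```

The class weights keep the small lesion foreground from being swamped by background voxels, and the Dice term directly rewards overlap.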

Similarly, we applied a categorical cross-entropy loss to each voxel of the predicted grade-membership maps. The targets are one-hot encoded ground-truth histopathology from regions whose supervision signal is 1 and whose regression grade group is not NaN. This subtlety is important. It allows segmentation outputs to be supervised for patient exams even when the pathological grade group of an individual lesion is unknown. This strong voxel-wise grading (classification) loss is defined as:

| (5) |

where an indicator (Kronecker delta) function selects the voxels spatially corresponding to each region.
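A minimal sketch of such a masked voxel-wise cross-entropy follows, assuming a plain per-voxel negative log-likelihood averaged over all selected voxels. The exact normalization in Equation 5 is not recoverable. Regions are skipped unless their supervision signal is 1 and their grade is known. The function and argument names are hypothetical.

```python
import numpy as np

def voxelwise_grade_ce(probs, region_masks, signals, grades, eps=1e-7):
    """Strong voxel-wise grading loss (assumed form of a masked cross-entropy).

    probs: (K, x, y, z) softmax grade-group maps; region_masks: (R, x, y, z);
    signals: per-region supervision signal; grades: per-region class index or NaN.
    """
    total, count = 0.0, 0
    for mask, signal, grade in zip(region_masks, signals, grades):
        if signal != 1 or np.isnan(grade):
            continue                  # not a targeted lesion, or grade unknown
        sel = mask.astype(bool)       # indicator selecting this region's voxels
        p_true = np.clip(probs[int(grade)][sel], eps, 1.0)
        total += float(-np.log(p_true).sum())
        count += int(sel.sum())
    return total / max(count, 1)
```

A lesion with a NaN grade still contributes to the segmentation loss. It is only excluded from this grading term.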

3.2 Regional Prediction

Unfortunately, strong voxel-wise supervision signals are not available for all types of histopathology data, such as biopsy data. This is because a biopsy core samples only a small portion of the prostate gland, either a lesion or normal-appearing tissue. For targeted lesion biopsies, we assume that the biopsy sample represents a homogeneous cancer profile within a tumor that is reasonably localized in the MRI coordinate system. This assumption enables use of the strong classification objective in Eq. 5. Systematic biopsies, however, are neither localized nor representative of the cancer profile in heterogeneous tissue throughout the gland, which precludes such strong voxel-wise objectives.

To this end, we used two weak supervision objectives in regions with biopsy-derived supervision signals (both MRI-targeted lesions and systematic-biopsy sextants):

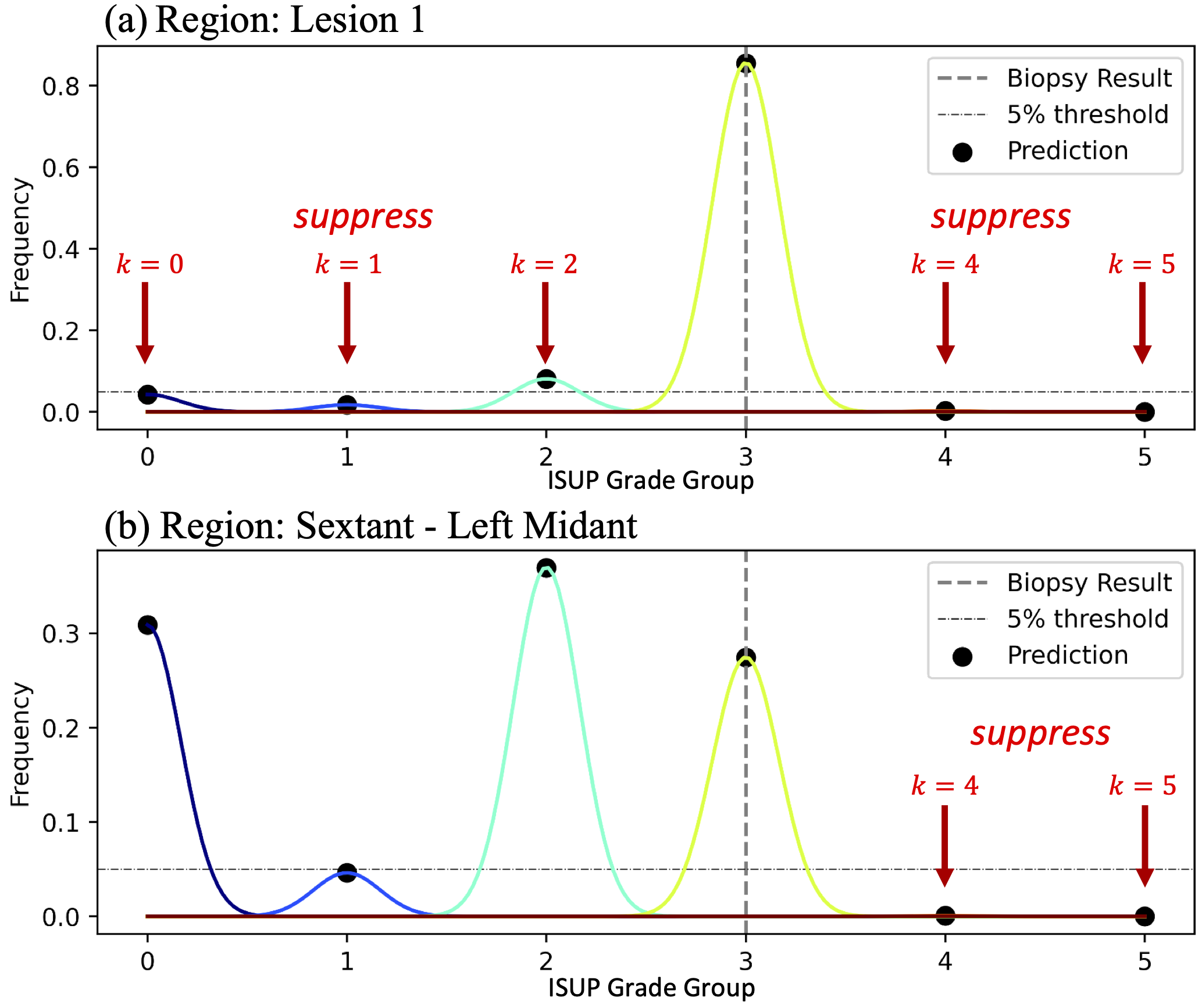

3.2.1 Histogram Suppression

Rather than regressing by value with a voxel-wise classification loss, we regress by distribution, computing and penalizing a histogram of predicted voxel-wise grade groups for each region of interest. This can be achieved naturally, while keeping the model differentiable. We select the voxel-wise grade-membership vectors within each region mask and average them over the region, which yields one approximate (soft) histogram per region.
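The soft-histogram computation described above can be sketched in a few lines. It averages the K-dimensional softmax vectors inside each region mask, so each histogram sums to 1. Only masking, summation, and division are involved, so the same operations remain differentiable in an autograd framework. The function name and array layout are assumptions.

```python
import numpy as np

def region_histograms(probs, region_masks):
    """Soft per-region histograms: mean of the K-dim softmax vectors in each region.

    probs: (K, x, y, z); region_masks: (R, x, y, z) binary. Returns (R, K).
    """
    K = probs.shape[0]
    flat_probs = probs.reshape(K, -1)                                            # (K, V)
    flat_masks = region_masks.reshape(region_masks.shape[0], -1).astype(float)   # (R, V)
    counts = flat_masks.sum(axis=1, keepdims=True)                               # voxels per region
    return (flat_masks @ flat_probs.T) / np.maximum(counts, 1.0)
```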

For MRI-targeted lesion regions (supervision signal 1), we assume that the biopsy core represents a homogeneous cancer profile. We therefore penalize non-zero histogram bins that do not correspond to the ground-truth grade group (Fig. 2a):

| (6) |

For sextant regions, where only the highest grade is known, we assume that the biopsy core represents the highest-grade cancer in heterogeneous tissue. We therefore penalize only non-zero histogram bins representing grade groups higher than the ground-truth regression grade group (Fig. 2b):

| (7) |

These losses suppress the proportion of voxels assigned to ISUP grade groups not supported by the histopathology data and its expected uncertainty. Note that for sextant regions, the histogram bin corresponding to the ground-truth grade group need not have the highest frequency in the region, consistent with the expectation for heterogeneous tissue.
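Equations 6 and 7 are not recoverable from the source, so the following sketch writes them as simple linear penalties on histogram mass. It assumes bins are indexed by grade-group class. `homogeneous_suppression` penalizes all mass outside the biopsied grade, as described for lesion regions. `heterogeneous_suppression` penalizes only mass above the reported maximum, as described for sextants. Both the names and the linear form are assumptions.

```python
import numpy as np

def homogeneous_suppression(hist, grade):
    """Lesion regions (cf. Eq. 6): penalize histogram mass outside the biopsied grade."""
    off_target = np.ones_like(hist)
    off_target[grade] = 0.0
    return float(np.sum(hist * off_target))

def heterogeneous_suppression(hist, max_grade):
    """Sextant regions (cf. Eq. 7): penalize only mass above the reported maximum grade."""
    return float(np.sum(hist[max_grade + 1:]))
```

For example, a region histogram of [0.5, 0.3, 0.2] with ground-truth grade 1 incurs a penalty of 0.7 under the homogeneous rule. Under the heterogeneous rule it incurs only 0.2, so benign-looking voxels in a sextant are not pushed toward the reported grade.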

3.2.2 Regional Grading

To provide a clear indication of ISUP grade group in such cases without relying on manual interpretation of the histograms, we fed the regional histograms into a small dense network with ReLU activation in the final layer. This yields multi-hot encoded vectors indicating the presence of the various ISUP grade groups in each region.

To keep this output as interpretable as possible, we used a single-layer ReLU-activated network with a tunable bias and a weight matrix frozen to the identity. The bias therefore represents a set of “optimal” thresholds for declaring clinically significant prostate cancer in a given region. It is optimized through backpropagation along with the other parameters in the fully convolutional portion of UCNet, via the loss:

| (8) |
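A minimal sketch of the RegionNet forward pass described above follows, with the identity weights written out explicitly. Under this construction, grade group k is flagged when its histogram bin exceeds the learned threshold −b_k. The loss in Equation 8 is not reproduced here, and the function names are hypothetical.

```python
import numpy as np

def region_net(hist, bias):
    """Single dense layer with weights frozen to the identity: ReLU(W h + b), W = I."""
    W = np.eye(hist.shape[-1])                # frozen, never updated
    return np.maximum(hist @ W.T + bias, 0.0)

def region_multi_hot(hist, bias):
    """Grade group k is flagged present when its histogram bin exceeds -bias[k]."""
    return (region_net(hist, bias) > 0).astype(int)
```

With a histogram of [0.85, 0.15], biases of [-0.5, -0.1] flag both classes, while [-0.5, -0.2] flags only the first. Only the bias vector needs to be learned.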

3.3 Multi-task Objective

The overall multi-task objective is:

| (9) | |||

The relative weighting constants are chosen empirically. The binary selector for each term is set according to the ground-truth annotations available for each exam in a minibatch and the types of supervision signals desired in the experiment. Herein, we use all described objectives whenever possible. For training, we used a batch size of 6 MRI exams and the AdamW optimizer with an initial learning rate of 0.0001, determined empirically by repeatedly sweeping the initial learning rate.
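The "dynamically populated" objective can be sketched per exam as follows. A term contributes only when it is switched on for the experiment and the exam has the ground truth that term needs. The term names, the optional `weights` dictionary, and its default of 1.0 are hypothetical placeholders for the lost constants in Equation 9.

```python
def multitask_loss(losses, selectors, available, weights=None):
    """Dynamically populated objective for one exam (hypothetical sketch).

    losses: term name -> loss value; selectors: term -> 0/1 switch for the
    experiment; available: term -> 0/1, whether this exam has the needed ground truth.
    """
    weights = weights or {}
    return sum(
        weights.get(name, 1.0) * selectors.get(name, 0) * available.get(name, 0) * value
        for name, value in losses.items()
    )
```

For an exam with lesion and sextant labels but no graded lesion, the voxel-wise grading term simply drops out while the other terms still train the network.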

3.3.1 Objective-Aware Balanced-Batching

To improve performance, we curated the composition of each batch by round-robin sampling of MRI exams. Exams were stratified by the highest ISUP grade group in any region: 0 (benign), 1 (clinically insignificant cancer), 2 (clinically significant), and 3-5. We find empirically that this makes training on the multi-task objective more efficient, since each iteration contains more representative samples for each task.
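A sketch of round-robin balanced batching over the four strata named above (0, 1, 2, and 3-5) follows. It assumes that smaller strata are simply recycled so that every stratum keeps appearing. This sampling policy and the function names are assumptions, not the authors' implementation.

```python
import random
from collections import defaultdict
from itertools import cycle

def stratum(max_isup: int) -> int:
    """Batching strata from the text: 0, 1, 2, and 3-5 (collapsed to 3)."""
    return min(max_isup, 3)

def balanced_batches(exams, batch_size=6, seed=0):
    """Endless round-robin batches over strata; exams is a list of (exam_id, max_isup)."""
    rng = random.Random(seed)
    pools = defaultdict(list)
    for exam_id, max_isup in exams:
        pools[stratum(max_isup)].append(exam_id)
    streams = {}
    for s, ids in pools.items():
        rng.shuffle(ids)
        streams[s] = cycle(ids)       # smaller strata are revisited more often
    order = cycle(sorted(streams))
    while True:
        yield [next(streams[next(order)]) for _ in range(batch_size)]
```

With the batch size of 6 used here and four strata, each batch contains at least one exam from every stratum.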

3.3.2 Global Prediction

The UCNet architecture supports global prediction modules. These can be based either on the fully encoded features produced by the encoding branch or on the spatial map rendered by the decoding branch of the fully convolutional backbone. We selected the latter to focus on improving lesion-wise segmentation and classification accuracy, and computed the global prediction as the maximum over the individual lesion predictions.

3.4 Inference

Inference is performed in a similar fashion to training, except with all augmentation turned off. Note that, due to the nature of weak histopathology ground truth, it is not feasible to train UCNet using volumetric patches. Instead, we feed full (registered and resampled) multi-contrast prostate MRI exams as input to UCNet, producing a set of predictions corresponding to all volumetric regions of the prostate gland.

4 Experiments

To determine the potential advantage of mixed-supervision deep learning methods for detection of clinically significant prostate cancer, we designed experiments that represent variations in the supervision methods used during training, as well as alternative classification strategies used during inference or evaluation. Specifically, we choose to withhold or include various types of annotation ground truth, including lesion segmentation masks, voxel-wise ISUP grade group in lesions derived from MR-targeted biopsy and expert histopathological analysis, and maximum ISUP grade group in regions derived from systematic biopsy and expert histopathological analysis. For the regions with biopsy ground truth, in particular, we additionally compare two types of supervision: weak classification-based supervision (Section 3.2.2) and a novel weak distribution-based supervision (Section 3.2.1).

Each experiment is identified by a unique 4-bit experiment ID corresponding to the binary term selectors in Equation 9. Changing the supervision methods also implies several differences at inference time, as described in the following.
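Based on the experiment descriptions that follow, the bit order appears to be as follows. Read from left to right, the bits select regional classification, histogram suppression, voxel-wise grading, and lesion segmentation. For example, 0001 is segmentation only and 1111 uses every term. The sketch below decodes an ID under that reading. The term names are hypothetical labels.

```python
TERM_ORDER = ("regional", "histogram", "voxel_grade", "segmentation")  # leftmost bit first

def experiment_selectors(experiment_id: str) -> dict:
    """Decode an ID such as "0111" into on/off switches for the four loss terms."""
    if len(experiment_id) != 4 or set(experiment_id) - {"0", "1"}:
        raise ValueError(f"expected a 4-bit string, got {experiment_id!r}")
    return {term: int(bit) for term, bit in zip(TERM_ORDER, experiment_id)}
```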

4.1 Experiment 0001 - lesion segmentation only

Experiment 0001 is a baseline lesion segmentation experiment in which the combined class-balanced BCE and Dice loss (Eq. 2) is the sole term in the objective. The network is simply a 3D residual UNet, i.e., the backbone of the UCNet architecture.

4.2 Experiment 0011 - lesion segmentation and classification

Experiment 0011 is a baseline lesion segmentation and classification experiment using the same 3D residual UNet backbone of UCNet. The training objective comprises the segmentation loss (Eq. 2) and the strong voxel-wise cross-entropy objective (Eq. 5). Here, classification decisions are based on the most frequent (mode) or mean value of the thresholded voxels of the grade-membership maps in each region. Note that for binary classification, the mean and mode inference methods are equivalent. This experiment serves as an important baseline for the subsequent methods, which use the same UNet backbone with additional supervision signals.

4.3 Experiment 0111 - lesion segmentation, lesion classification, and regional classification with histogram suppression

Experiment 0111 is a mixed-supervision objective, novel for its use of distribution-based representations of histopathology. In this experiment, the prediction is penalized in regions where the predicted ISUP grade exceeds the ground-truth maximum (Eq. 7). This applies, e.g., when only the maximum is known for biopsy cores from sextants representing heterogeneous tissue, i.e., background prostate tissue mixed with clinically significant prostate cancer if present. When the biopsy is derived from apparently homogeneous tissue, such as an MRI-identified lesion, voxels are penalized in histogram bins both higher and lower than the ground-truth grade (Eq. 6). The addition of Equations 6-7 thus encourages regression by distribution rather than regression by value. To our knowledge, this is the first deep learning method to incorporate the distribution of cancer histopathology. Note that the number of free model parameters is the same in Experiments 0001, 0011, and 0111. In this setting, classification is performed as in Experiment 0011 to maintain a clear comparison with the strongly supervised case. That is, we use the mean or mode value of the thresholded voxels of the grade-membership maps in each region.

4.4 Experiment 1111 - lesion segmentation, lesion classification, regional classification with histogram suppression, and regional classification with RegionNet

Experiment 1111 is another mixed-supervision objective that combines the histogram-based penalization of Experiment 0111 with traditional weak instance-wise or region-wise classification supervision (Eq. 8). A region-wise classification is produced by passing the histogram representation of each region to a small neural network, RegionNet. For the clearest interpretation and comparison with Experiment 0111, we choose RegionNet to be a dense ReLU-activated single-layer network. Its input and output dimensions both equal the number of grade-group classes, and its weight matrix is frozen to the identity. The free bias terms therefore represent “optimal” thresholds for declaring clinically significant cancer in each region. Classification in this setting is achieved by finding the most significant bit (MSB) of the multi-hot output for each region, which represents the highest level of cancer present.

This experiment aims to show that accounting for tissue heterogeneity can substantially improve the accuracy of deep learning cancer classification models. Specifically, we combine distribution-based penalization (Experiment 0111) with optimal thresholding and MSB-based classification. This combination is designed to detect cancer in heterogeneous tissue where the highest grade of cancer is not the most prevalent, i.e., is not captured by the mean or mode.
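The difference between mode-based and MSB-based decisions can be illustrated with a toy heterogeneous sextant in which 10% of voxels are classified as CS-PCa. The multi-hot vector is given directly here. In the model it would come from RegionNet thresholds: for instance, a region histogram of [0.9, 0.1] with biases of [-0.5, -0.05] sets both bits. Function names are hypothetical.

```python
import numpy as np

def mode_decision(voxel_classes):
    """Experiments 0011/0111 style: most frequent thresholded voxel class in a region."""
    return int(np.bincount(voxel_classes).argmax())

def msb_decision(multi_hot):
    """Experiment 1111 style: highest grade whose bit is set (0 if none)."""
    present = np.flatnonzero(multi_hot)
    return int(present[-1]) if present.size else 0

# A heterogeneous sextant: 90% of voxels not CS-PCa (0), 10% CS-PCa (1).
voxels = np.array([0] * 90 + [1] * 10)
print(mode_decision(voxels))           # prints 0: the mode misses the minority focus
print(msb_decision(np.array([1, 1])))  # prints 1: the highest set bit flags CS-PCa
```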

| Experiment ID | 0001 | 0011 | 0111 | 1111 | PI-RADS | PI-RADS |
|---|---|---|---|---|---|---|
| Lesion Segmentation IoU | 0.220 | 0.188 | 0.162 | 0.150 | – | – |
| Lesion Accuracy | – | 59.5% [94.0%, 25.0%] | 61.1% [93.0%, 29.2%] | 70.3% [76.6%, 63.9%] | 62.4% [31.8%, 93.1%] | 66.7% [82.5%, 52.4%] |
| Gland Accuracy (via Lesions) | – | 55.8% [90.7%, 20.8%] | 57.7% [90.7%, 24.8%] | 62.8% [70.1%, 55.4%] | 61.0% [30.9%, 91.1%] | 64.4% [79.4%, 49.5%] |

4.5 Training Details and Stopping Criteria

For each experiment, UCNet was trained twice: (1) by performing an initial learning rate sweep and selecting an optimal point based on decrease in the objective, and (2) by fixing the initial learning rate to 0.0001. Training was performed for 500 epochs, and the checkpoint with the maximum lesion-wise validation accuracy was chosen. In all cases, the fixed learning rate run achieved superior validation performance, and these models were thus chosen for test-set evaluation and reporting.

4.6 Performance Metrics

4.6.1 Lesion Segmentation

Lesion segmentation accuracy is measured by the standard intersection-over-union (IoU) metric. IoU is the overlap between the radiologist annotation (a bounding box) and the binarized predicted lesion segmentation map. Although IoU tends to underestimate lesion segmentation performance, we believe it provides an accurate measure of model confidence in segmentation on this challenging task. Note that the radiologist ground truth is not based on pathology, so the IoU metric only measures the overlap, or consistency, between the model and the radiologist. Furthermore, the bounding-box annotations are imprecise, which also reduces IoU, especially for small non-spherical lesions.
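A minimal IoU sketch for a binarized lesion map against a bounding-box annotation follows. The threshold of 0.0 is an assumed cut-off for tanh-activated outputs, not a value stated in the text. `box_mask` is a hypothetical helper standing in for the radiologist's box.

```python
import numpy as np

def box_mask(shape, lo, hi):
    """Binary mask of an axis-aligned box [lo, hi) (stand-in for a radiologist box)."""
    m = np.zeros(shape, dtype=bool)
    m[tuple(slice(a, b) for a, b in zip(lo, hi))] = True
    return m

def lesion_iou(pred_map, gt_mask, threshold=0.0):
    """IoU of the binarized lesion map and the annotation.

    threshold=0.0 is an assumed cut-off for tanh-activated outputs in (-1, 1).
    """
    pred = pred_map > threshold
    gt = gt_mask.astype(bool)
    union = np.logical_or(pred, gt).sum()
    return float(np.logical_and(pred, gt).sum() / union) if union else 1.0
```

Because the box contains background voxels around a rounded lesion, even a perfect segmentation of the lesion itself scores below 1. This is one reason the absolute IoU values are modest.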

4.6.2 Lesion Classification Accuracy

Unlike many prior works that measure the area under the receiver operating characteristic curve (AUC) via cross-validation [6, 9, 11], we measure overall class-balanced lesion classification accuracy on a withheld test set. Class-balanced accuracy is the arithmetic mean of the true-positive rate (TPR) and true-negative rate (TNR). In the multi-class setting, it is the arithmetic mean of the per-class recalls, i.e., the diagonal of the row-normalized confusion matrix. We use class-balanced accuracy for two reasons. First, we believe it is a more representative measure of model performance because it weights positive and negative cases equally. Second, it captures model performance without any post-training tuning of the final threshold. For clarity, TPR and TNR correspond to sensitivity and specificity, respectively.
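The class-balanced accuracy defined above can be computed as the mean per-class recall from a confusion matrix. The sketch assumes integer class labels and that every class occurs at least once in the ground truth.

```python
import numpy as np

def balanced_accuracy(y_true, y_pred, n_classes=2):
    """Mean per-class recall; in the binary case this is (TPR + TNR) / 2."""
    cm = np.zeros((n_classes, n_classes))
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1                              # rows: truth, columns: prediction
    recalls = np.diag(cm) / cm.sum(axis=1)         # assumes every class occurs in y_true
    return float(recalls.mean())
```

In the binary case, each accuracy in Table 2 is the mean of its bracketed pair, e.g., (94.0% + 25.0%) / 2 = 59.5% for Experiment 0011.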

4.6.3 Gland Classification Accuracy

Gland accuracy is measured similarly to lesion classification accuracy, using class-balanced accuracy. Specifically, in the binary CS-PCa setting, the maximum prediction over all lesions (or regions, as appropriate) in an exam is compared with the ground-truth maximum to count true positives and true negatives. The TPR and TNR are then averaged to yield the overall class-balanced gland classification accuracy.
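The gland-level procedure above can be sketched as follows for binary 0/1 lesion labels. Each exam takes the maximum over its lesions for both prediction and truth, and TPR and TNR are then averaged. The function name and input layout are hypothetical. The sketch assumes at least one positive and one negative exam.

```python
import numpy as np

def gland_balanced_accuracy(pred_by_exam, truth_by_exam):
    """Binary CS-PCa gland accuracy from per-lesion (or per-region) 0/1 labels.

    Each exam's label is the maximum over its lesions; then (TPR + TNR) / 2.
    """
    pred = np.array([max(p) for p in pred_by_exam])
    true = np.array([max(t) for t in truth_by_exam])
    tpr = np.mean(pred[true == 1] == 1)
    tnr = np.mean(pred[true == 0] == 0)
    return float((tpr + tnr) / 2.0)
```

For example, exam-level predictions [1, 1, 0, 1] against truth [1, 1, 0, 0] give TPR = 1.0 and TNR = 0.5, for a gland accuracy of 0.75.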

5 Results

5.1 Comparison of Multi-Task Objectives

Table 2 shows the withheld test-set performance of the experiments described in Section 4 across several metrics. Experiment 1111 combines all of the aforementioned strong and weak types of supervision in a single mixed-supervision objective. Among the DNN models, it performs best in both lesion classification accuracy and gland-wise classification accuracy (based on lesions), followed by Experiment 0111 and then Experiment 0011. This suggests that weak supervision methods are complementary to strong supervision methods.

Moreover, the mixed-supervision strategy in Experiment 1111 achieves 70.3% overall (class-balanced) accuracy in classifying clinically significant cancer in lesions. This exceeds the 66.7% accuracy of the best PI-RADS cutoff (PI-RADS 5) assigned by radiologists for this dataset. We believe this is achieved not only by learning from hundreds of exams, but also by incorporating non-salient cancer histopathology acquired through systematic biopsy.

Table 2 indicates that Experiment 1111 achieves the best lesion classification, while Experiment 0001 achieves the best overlap with radiologists' lesion segmentations. This indicates a trade-off between these two metrics on our dataset. However, precise lesion segmentation is not a major concern in prostate MRI interpretation, since lesions are relatively readily visualized by radiologists. The classification of CS-PCa is the more challenging task. Nonetheless, radiologist-derived lesion segmentation information is still valuable for improving classification performance. For example, Experiment 1110 includes all mixed-supervision objectives but not the lesion segmentation objective. It does not perform as well as Experiment 1111 in lesion classification accuracy (66.2% vs. 70.3%). This suggests that lesion segmentation directs extra attention to suspicious areas during training, improving convergence to desirable minima. We interpret this segmentation-versus-classification trade-off as follows. Lesion segmentation maps and the corresponding IoU metrics generally correlate with model performance, but they do not dictate lesion classification performance.

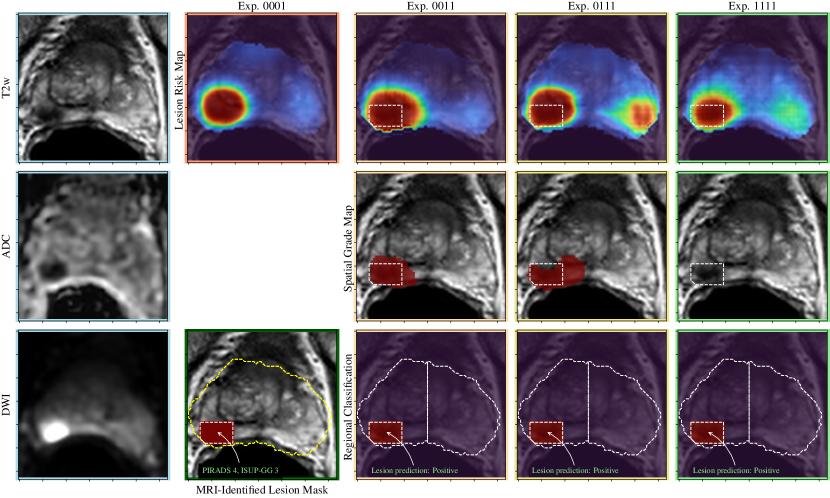

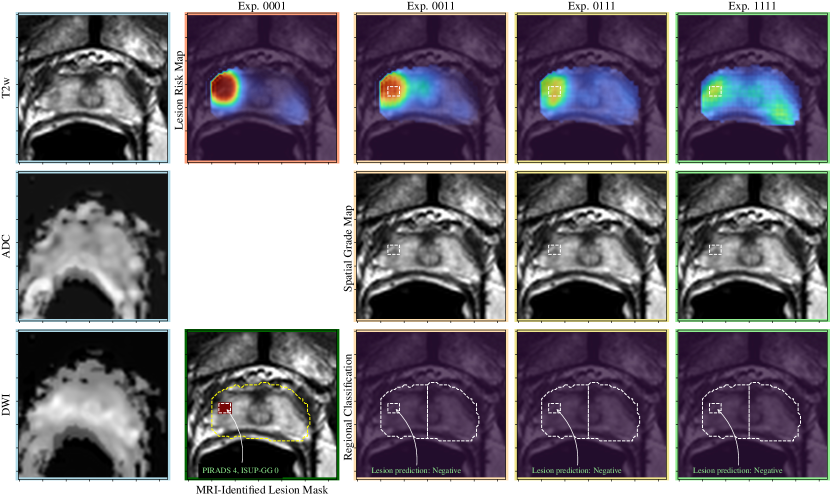

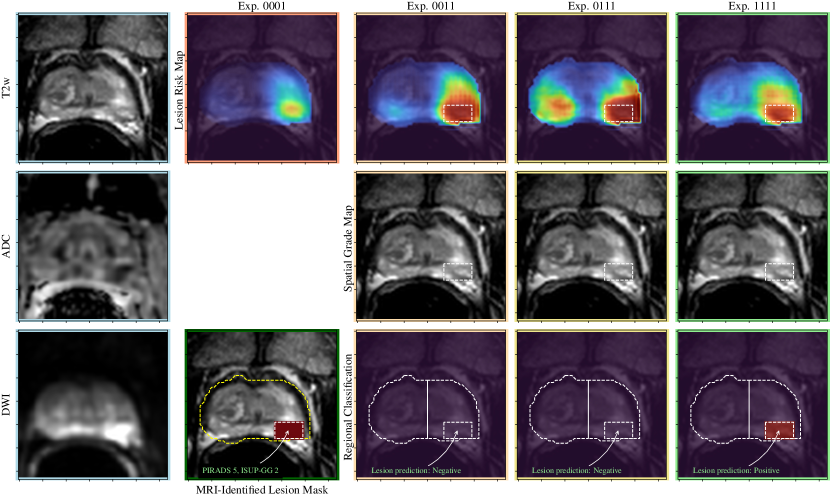

5.2 Sample Results

A visual comparison of the experiments described in Section 4 is shown in Figures 3-5, including the pre-processed input images and the various outputs which include a lesion risk map, predicted spatial grading map, and results of the regional classification.

Figure 3 shows a PI-RADS 4 lesion identified by the model in the right mid posterolateral peripheral zone. The lesion is T2 hypointense and markedly hypointense on the ADC map, and it demonstrates diffusion restriction (high signal intensity). This lesion was correctly predicted as clinically significant prostate cancer in all experiments, consistent with a biopsy result of ISUP grade group 3.

Figure 4 shows a PI-RADS 4 lesion in the right lateral peripheral zone at the apex that is T2 hypointense, hypointense on ADC, and demonstrates restricted diffusion (high signal intensity on diffusion-weighted images). This lesion is predicted as not CS-PCa in all experiments, consistent with the benign biopsy result.

Figure 5 shows a PI-RADS 5 lesion in the left posterolateral peripheral zone that is subtle on T2-weighted imaging, hypointense on the ADC map, and demonstrates restricted diffusion (high signal intensity on diffusion-weighted images). In Experiments 0011 and 0111, the lesion is accurately segmented but not predicted as clinically significant prostate cancer. With the addition of weak supervision in Experiment 1111, the lesion is predicted as CS-PCa, consistent with the biopsy result of ISUP grade group 2. This case therefore illustrates a false negative converted to a true positive by the addition of weak supervision.

6 Discussion

The results, most clearly summarized in Table 2, show the benefits of combining strong and weak supervision from pathology in the UCNet deep learning model to predict clinically significant prostate cancer. This is somewhat intuitive, as we expect improved accuracy from incorporating a much larger set of training labels. However, exploiting heterogeneous pathology ground truth, in the form of strongly supervised targeted biopsy results and weakly supervised systematic biopsy results, required novel architecture, training, and evaluation methods. Our results indicate that UCNet successfully takes advantage of these diverse sources of training data. This is important because the inclusion of systematic biopsy procedures continues to be debated clinically [20].

Our results are particularly interesting because lesion-wise classification accuracy improves when using ground truth derived from poorly localized systematic biopsies of tissue that did not appear cancerous on MRI. Although this gain appears to come at the expense of reduced concordance with radiologist-derived MRI target segmentations, lesion classification, not segmentation, is the key challenge. Furthermore, the current dataset annotates each lesion with 2D bounding boxes over the applicable axial T2WI slices, which systematically overestimate lesion size. The absolute IoU values must therefore be interpreted in context, as they are not comparable to those obtained with more finely contoured lesion segmentations, such as those in [9].
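To make the bounding-box effect concrete, the following minimal sketch (a toy 2D illustration, not the study's evaluation code) scores a prediction that matches a round lesion exactly. Against a fine contour its IoU is 1.0, but against the lesion's filled bounding box it drops to roughly π/4, the area ratio of a disk to its enclosing square. The helper names `iou`, `disk_mask`, and `bounding_box_mask` and the lesion geometry are hypothetical.

```python
import numpy as np

def iou(a: np.ndarray, b: np.ndarray) -> float:
    """Intersection over union of two boolean masks."""
    inter = np.logical_and(a, b).sum()
    union = np.logical_or(a, b).sum()
    return float(inter / union) if union > 0 else 1.0

def disk_mask(size: int, radius: float) -> np.ndarray:
    """Boolean 2D mask of a centered disk (a stand-in for a round lesion)."""
    yy, xx = np.mgrid[:size, :size]
    c = (size - 1) / 2.0
    return (yy - c) ** 2 + (xx - c) ** 2 <= radius ** 2

def bounding_box_mask(mask: np.ndarray) -> np.ndarray:
    """Filled axis-aligned bounding box of a 2D mask."""
    rows = np.any(mask, axis=1)
    cols = np.any(mask, axis=0)
    r0, r1 = np.where(rows)[0][[0, -1]]
    c0, c1 = np.where(cols)[0][[0, -1]]
    box = np.zeros_like(mask)
    box[r0:r1 + 1, c0:c1 + 1] = True
    return box

lesion = disk_mask(size=201, radius=50)  # hypothetical true lesion extent
box_label = bounding_box_mask(lesion)    # what a bounding-box annotation records
prediction = lesion.copy()               # a prediction matching the true extent exactly

print(iou(prediction, lesion))               # perfect agreement with a fine contour
print(round(iou(prediction, box_label), 3))  # well below 1.0 (roughly pi/4)
```

Because every lesion slice is enclosed in its box, even an ideal model is capped well below IoU = 1 under this annotation scheme, which is why only relative IoU comparisons between experiments are meaningful here.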

In our experiments, we also observed improved performance when classifying lesions by the highest grade of cancer present rather than by the most prevalent grade. This is shown by comparing Experiments 0111 and 1111: the latter achieved improved performance (higher sensitivity) after switching to the RegionNet classifier, which applies a bias to threshold the predicted presence of each ISUP grade, together with a most-significant-bit (MSB) classification that selects the highest grade of cancer predicted to be present. This approach also matches how pathologists interpret biopsy samples, where scoring is based on the highest cancer grades observed. We believe this classification technique allows the deep learning model to preserve the distribution of cancer grades within a region rather than collapsing it to a single value. Mechanistically, it provides flexibility in interpreting the voxel-based representation, consistent with the expected uncertainty of the histopathology ground truth. This same approach expands the number of exams and the types of ground truth that can be used during training via the mixed supervision objective.
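The difference between "most prevalent" and "highest present" grade can be illustrated with a minimal sketch. It assumes a region's prediction is summarized as a distribution over bins 0 (benign) through 5 (ISUP grade groups 1-5) and that CS-PCa means grade group 2 or higher, consistent with the Figure 5 case. The function names and the bias value of 0.1 are hypothetical; this is not the RegionNet implementation.

```python
import numpy as np

def most_prevalent_grade(hist: np.ndarray) -> int:
    """Baseline: the grade bin with the largest predicted fraction (argmax)."""
    return int(np.argmax(hist))

def msb_grade(hist: np.ndarray, bias: float = 0.1) -> int:
    """Highest grade whose predicted fraction exceeds a bias threshold.

    `bias` is a hypothetical value chosen for illustration. Returns 0
    (benign) when no cancer grade clears the threshold.
    """
    present = hist[1:] > bias  # which grade groups 1..5 are flagged as present
    if not present.any():
        return 0
    # The "most significant bit": the largest grade flagged as present.
    return int(np.nonzero(present)[0].max() + 1)

def is_cs_pca(grade: int) -> bool:
    """Clinically significant prostate cancer, assumed here as ISUP grade group >= 2."""
    return grade >= 2

# Hypothetical region: mostly benign, with a minority of grade group 3 voxels.
region_hist = np.array([0.70, 0.05, 0.05, 0.20, 0.0, 0.0])
print(most_prevalent_grade(region_hist), is_cs_pca(most_prevalent_grade(region_hist)))  # 0 False
print(msb_grade(region_hist), is_cs_pca(msb_grade(region_hist)))                        # 3 True
```

An argmax over the distribution calls this region benign, while thresholding and taking the highest present grade flags the minority grade group 3 component, mirroring how a pathologist reports the worst grade in a core.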

Furthermore, with mixed supervision, the UCNet lesion classifier significantly outperforms (70.3%) the MRI-based lesion reporting and classification performed by radiologists using PI-RADS (PI-RADS5: 66.7%, PI-RADS4: 66.7%). Note that although PI-RADS5 achieves better performance than PI-RADS4, it typically targets only the most obvious MRI-visible lesions, which further emphasizes the superior performance of UCNet in classifying less obvious disease. This suggests that prostate MRI contains additional information, beyond what radiologists consider when using the standardized PI-RADS reporting system, that can improve performance. Although UCNet’s improvement over PI-RADS4 in gland accuracy is minimal (+1.8%), UCNet with mixed supervision achieves a better balance between sensitivity and specificity than all other methods (both human and DNN-based), addressing many of the concerns raised by the community [5, 11].
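Why a small accuracy gain can still matter is easiest to see with confusion-matrix arithmetic. The sketch below compares two hypothetical classifiers with identical accuracy but very different sensitivity/specificity balance. The counts are invented for illustration and are not results from this study, and balanced accuracy is used only as one common summary of that balance, not as a metric reported in Table 2.

```python
from dataclasses import dataclass

@dataclass
class Confusion:
    """Counts of true/false positives and negatives for a binary CS-PCa call."""
    tp: int
    fn: int
    tn: int
    fp: int

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fn + self.tn + self.fp)

    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def balanced_accuracy(self) -> float:
        return (self.sensitivity() + self.specificity()) / 2

# Hypothetical counts (not from this study): 50 CS-PCa and 150 non-CS-PCa cases.
conservative = Confusion(tp=20, fn=30, tn=130, fp=20)
balanced = Confusion(tp=35, fn=15, tn=115, fp=35)

for name, c in [("conservative", conservative), ("balanced", balanced)]:
    print(name, round(c.accuracy(), 3), round(c.sensitivity(), 3),
          round(c.specificity(), 3), round(c.balanced_accuracy(), 3))
```

Both hypothetical classifiers reach 75% accuracy, yet the first misses 60% of significant cancers; accuracy alone hides that gap, which is why the sensitivity/specificity balance is worth reporting alongside it.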

This study has limitations. First, it was conducted with single-institution data, which typically raises concerns about model generalizability. However, this study uses one of the largest prostate MRI datasets (3x larger than Prostate-X [21]), with real-world clinical variability including varying acquisition protocols and endorectal coil usage. Furthermore, our dataset uses the most common approach of combined fusion and systematic biopsy, whereas Prostate-X used direct MRI-guided biopsy only. Overall, we believe that training on this real-world dataset will yield better generalizability than training on smaller, more homogeneous datasets. Mindful of this concern, we designed our approach to scale to diverse multi-institutional datasets with diverse histopathology sources, which can be explored in future work.

Second, in our dataset we had to approximate the locations of systematic biopsies by geometrically splitting the prostate gland into six sextants, and to represent lesions using retrospective MRI-based segmentation. This can introduce errors in the assignment of ISUP grade groups to each region, because the exact location of each systematic biopsy within its approximated region was not known. If ultrasound-guidance images were available, they could potentially be used to localize each biopsy core from the needle position within the gland, as suggested in [3]. Other datasets, such as Prostate-X, used direct MR-guided biopsy data that potentially provide more accurate localization, although they are more limited given the absence of systematic biopsy data.
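One way to perform such a geometric split is sketched below for a 3D binary gland mask. The sketch assumes that axis 0 runs from base to apex, that axis 2 runs from patient right to left, that the right/left boundary is the gland centroid, and that base, mid, and apex are equal thirds of the gland's extent along axis 0. These conventions and the function name `split_into_sextants` are hypothetical; the source does not specify how its split was computed.

```python
import numpy as np

def split_into_sextants(gland: np.ndarray) -> np.ndarray:
    """Label a 3D binary gland mask with sextants 1..6 (0 outside the gland).

    Hypothetical conventions: axis 0 = base -> apex, axis 2 = right -> left.
    Labels: 1/2 = base right/left, 3/4 = mid right/left, 5/6 = apex right/left.
    """
    labels = np.zeros(gland.shape, dtype=np.int8)
    zs, ys, xs = np.nonzero(gland)
    z_edges = np.linspace(zs.min(), zs.max() + 1, 4)  # base | mid | apex boundaries
    x_mid = xs.mean()                                  # right/left boundary at the centroid

    # 0 = base, 1 = mid, 2 = apex, from equal thirds of the superior-inferior extent.
    level = np.clip(np.searchsorted(z_edges, zs, side="right") - 1, 0, 2)
    side = (xs >= x_mid).astype(np.int8)               # 0 = right, 1 = left
    labels[zs, ys, xs] = 1 + level * 2 + side
    return labels

# Toy box-shaped "gland" for illustration.
gland = np.zeros((30, 20, 20), dtype=bool)
gland[3:27, 5:15, 4:16] = True
sextants = split_into_sextants(gland)
print(np.unique(sextants))                               # [0 1 2 3 4 5 6]
print([int((sextants == k).sum()) for k in range(1, 7)])  # equal-sized sextants here
```

Every voxel of a systematic-biopsy sextant then inherits that core's grade, which is exactly where the localization error described above enters: the true core may sample only a small part of its labeled region.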

Finally, we emphasize that MRI-based prostate cancer classification is a difficult task and the major motivation for this project. The current radiology standard, PI-RADS, which classifies lesions based on size and signal characteristics, has relatively poor performance [4]. Prior work based on radiomics, machine learning, and deep learning has shown promise but only relatively modest performance improvements [22]. For our dataset, a sophisticated approach using both targeted fusion biopsy and nontargeted systematic biopsy results was needed to outperform PI-RADS. We are optimistic that continued algorithmic improvements, together with the accumulation of larger, high-quality, and diverse datasets, will further improve the detection of clinically significant prostate cancer and ultimately impact patient management.

7 Conclusion

The proposed mixed supervision methods improved the lesion-wise accuracy of machine learning-based prostate cancer classification by enabling the use of more, and more diverse, histopathology data. We demonstrated this technique by augmenting a popular deep neural network architecture, the 3D residual UNet, with regional queries, soft histograms, and a simple threshold-based classification module that together enable simultaneous use of voxel- and region-level histopathology ground truth, when available. This is particularly useful for improving classification because systematic biopsy results are typically disregarded in favor of MRI-targeted lesion biopsy results. The results demonstrated a significant improvement in lesion classification accuracy using mixed supervision objectives, exceeding PI-RADS on a large and diverse dataset of 973 prostate MRI exams.
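As a rough illustration of how a regional query and a soft histogram can connect voxel-wise predictions to region-level ground truth, the sketch below pools voxel-wise grade predictions inside a region mask into a normalized histogram over bins 0 (benign) to 5 (ISUP grade groups 1-5), then compares it with a target distribution. The triangular kernel, the Wasserstein-1 comparison, and all names and values are assumptions for illustration, not the paper's formulation. The computation is shown in NumPy; in training it would be written in an autodiff framework so gradients flow through the kernel.

```python
import numpy as np

def soft_histogram(values: np.ndarray, region: np.ndarray,
                   centers: np.ndarray, width: float = 1.0) -> np.ndarray:
    """Normalized soft histogram of voxel-wise grade predictions in a region.

    Each voxel inside the queried region spreads unit mass over nearby bins
    with a triangular kernel of half-width `width`; with integer bin centers
    and width=1 this is linear interpolation between adjacent bins, so the
    histogram changes smoothly with the predictions.
    """
    v = values[region].reshape(-1, 1)                  # voxels selected by the region query
    w = np.clip(1.0 - np.abs(v - centers) / width, 0.0, None)
    w /= w.sum(axis=1, keepdims=True) + 1e-12          # each voxel contributes mass ~1
    hist = w.sum(axis=0)
    return hist / max(hist.sum(), 1e-12)               # normalize to a distribution

def ordinal_distance(p: np.ndarray, q: np.ndarray) -> float:
    """Wasserstein-1 distance between distributions over ordered, unit-spaced bins."""
    return float(np.abs(np.cumsum(p) - np.cumsum(q)).sum())

centers = np.arange(6, dtype=float)                    # 0 = benign, 1..5 = ISUP grade groups
values = np.array([[0.0, 0.1, 2.8], [3.0, 0.0, 5.0]])  # hypothetical voxel-wise predictions
region = np.array([[True, True, True], [True, True, False]])  # hypothetical region query

hist = soft_histogram(values, region, centers)
target = np.array([0.6, 0.0, 0.0, 0.4, 0.0, 0.0])      # hypothetical region-level target
print(np.round(hist, 2), round(ordinal_distance(hist, target), 3))
```

An ordinal distance such as Wasserstein-1 penalizes predicting grade group 4 for a grade group 3 region less than predicting benign, which suits ordered ISUP labels; a simpler per-bin loss would also work with the same histogram.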

Acknowledgements

This work was supported by NIH/NIBIB grant #F32EB030411, NIH/NCI grant #R01CA229354, the UCSF Benioff Initiative for Prostate Cancer Research, a Society for Abdominal Radiology Morton A. Bosniak Research Award, an RSNA Research Resident/Fellow Grant, the Cancer League, and the Helen Diller Family Comprehensive Cancer Center at UCSF.

References

- [1] National Cancer Institute, “SEER Cancer Stat Facts: Prostate Cancer,” 2016.

- [2] A. B. Rosenkrantz, S. Verma, P. Choyke, S. C. Eberhardt, S. E. Eggener, K. Gaitonde, M. A. Haider, D. J. Margolis, L. S. Marks, P. Pinto, et al., “Prostate magnetic resonance imaging and magnetic resonance imaging targeted biopsy in patients with a prior negative biopsy: a consensus statement by AUA and SAR,” The Journal of Urology, vol. 196, no. 6, pp. 1613–1618, 2016.

- [3] C. P. Filson, S. Natarajan, D. J. Margolis, J. Huang, P. Lieu, F. J. Dorey, R. E. Reiter, and L. S. Marks, “Prostate cancer detection with magnetic resonance-ultrasound fusion biopsy: the role of systematic and targeted biopsies,” Cancer, vol. 122, no. 6, pp. 884–892, 2016.

- [4] A. C. Westphalen, C. E. McCulloch, J. M. Anaokar, S. Arora, N. S. Barashi, J. O. Barentsz, T. K. Bathala, L. K. Bittencourt, M. T. Booker, V. G. Braxton, et al., “Variability of the positive predictive value of PI-RADS for prostate MRI across 26 centers: experience of the Society of Abdominal Radiology prostate cancer disease-focused panel,” Radiology, vol. 296, no. 1, pp. 76–84, 2020.

- [5] M. J. Belue, S. A. Harmon, N. S. Lay, A. Daryanani, T. E. Phelps, P. L. Choyke, and B. Turkbey, “The low rate of adherence to Checklist for Artificial Intelligence in Medical Imaging criteria among published prostate MRI artificial intelligence algorithms,” Journal of the American College of Radiology, 2022.

- [6] P. Schelb, S. Kohl, J. P. Radtke, M. Wiesenfarth, P. Kickingereder, S. Bickelhaupt, T. A. Kuder, A. Stenzinger, M. Hohenfellner, H.-P. Schlemmer, et al., “Classification of cancer at prostate MRI: deep learning versus clinical PI-RADS assessment,” Radiology, vol. 293, no. 3, pp. 607–617, 2019.

- [7] R. Cao, A. M. Bajgiran, S. A. Mirak, S. Shakeri, X. Zhong, D. Enzmann, S. Raman, and K. Sung, “Joint prostate cancer detection and Gleason score prediction in mp-MRI via FocalNet,” IEEE Transactions on Medical Imaging, vol. 38, no. 11, pp. 2496–2506, 2019.

- [8] C. De Vente, P. Vos, M. Hosseinzadeh, J. Pluim, and M. Veta, “Deep learning regression for prostate cancer detection and grading in bi-parametric MRI,” IEEE Transactions on Biomedical Engineering, vol. 68, no. 2, pp. 374–383, 2020.

- [9] E. Redekop, K. V. Sarma, A. Kinnaird, A. Sisk, S. S. Raman, L. S. Marks, W. Speier, and C. W. Arnold, “Attention-guided prostate lesion localization and grade group classification with multiple instance learning,” in Medical Imaging with Deep Learning, 2021.

- [10] S. Mehralivand, D. Yang, S. A. Harmon, D. Xu, Z. Xu, H. Roth, S. Masoudi, T. H. Sanford, D. Kesani, N. S. Lay, et al., “A cascaded deep learning–based artificial intelligence algorithm for automated lesion detection and classification on biparametric prostate magnetic resonance imaging,” Academic Radiology, vol. 29, no. 8, pp. 1159–1168, 2022.

- [11] C. Roest, S. J. Fransen, T. C. Kwee, and D. Yakar, “Comparative performance of deep learning and radiologists for the diagnosis and localization of clinically significant prostate cancer at MRI: A systematic review,” Life, vol. 12, no. 10, p. 1490, 2022.

- [12] N. Velarde, A. C. Westphalen, H. G. Nguyen, J. Neuhaus, K. Shinohara, J. P. Simko, P. E. Larson, and K. Magudia, “US lesion visibility predicts clinically significant upgrade of prostate cancer by systematic biopsy,” Abdominal Radiology, vol. 47, no. 3, pp. 1133–1141, 2022.

- [13] R. T. Gupta, K. A. Mehta, B. Turkbey, and S. Verma, “PI-RADS: Past, present, and future,” Journal of Magnetic Resonance Imaging, vol. 52, no. 1, pp. 33–53, 2020.

- [14] M. Gatti, R. Faletti, G. Calleris, J. Giglio, C. Berzovini, F. Gentile, G. Marra, F. Misischi, L. Molinaro, L. Bergamasco, et al., “Prostate cancer detection with biparametric magnetic resonance imaging (bpMRI) by readers with different experience: performance and comparison with multiparametric (mpMRI),” Abdominal Radiology, vol. 44, no. 5, pp. 1883–1893, 2019.

- [15] M. van der Leest, B. Israel, E. B. Cornel, P. Zamecnik, I. G. Schoots, H. van der Lelij, A. R. Padhani, M. Rovers, I. van Oort, M. Sedelaar, et al., “High diagnostic performance of short magnetic resonance imaging protocols for prostate cancer detection in biopsy-naive men: the next step in magnetic resonance imaging accessibility,” European Urology, vol. 76, no. 5, pp. 574–581, 2019.

- [16] J. I. Epstein, L. Egevad, M. B. Amin, B. Delahunt, J. R. Srigley, and P. A. Humphrey, “The 2014 International Society of Urological Pathology (ISUP) consensus conference on Gleason grading of prostatic carcinoma,” The American Journal of Surgical Pathology, vol. 40, no. 2, pp. 244–252, 2016.

- [17] O. J. Pellicer-Valero, J. L. Marenco Jiménez, V. Gonzalez-Perez, J. L. Casanova Ramón-Borja, I. Martín García, M. Barrios Benito, P. Pelechano Gómez, J. Rubio-Briones, M. J. Rupérez, and J. D. Martín-Guerrero, “Deep learning for fully automatic detection, segmentation, and Gleason grade estimation of prostate cancer in multiparametric magnetic resonance images,” Scientific Reports, vol. 12, no. 1, pp. 1–13, 2022.

- [18] K. Lee, J. Zung, P. Li, V. Jain, and H. S. Seung, “Superhuman accuracy on the SNEMI3D connectomics challenge,” arXiv preprint arXiv:1706.00120, 2017.

- [19] A. Rajagopal, V. C. Madala, T. A. Hope, and P. Larson, “Understanding and Visualizing Generalization in UNets,” in Medical Imaging with Deep Learning, pp. 665–681, PMLR, 2021.

- [20] J. Hugosson, M. Månsson, J. Wallström, U. Axcrona, S. V. Carlsson, L. Egevad, K. Geterud, A. Khatami, K. Kohestani, C.-G. Pihl, et al., “Prostate cancer screening with PSA and MRI followed by targeted biopsy only,” New England Journal of Medicine, vol. 387, no. 23, pp. 2126–2137, 2022.

- [21] G. Litjens, T. Kooi, B. E. Bejnordi, A. A. A. Setio, F. Ciompi, M. Ghafoorian, J. A. Van Der Laak, B. Van Ginneken, and C. I. Sánchez, “A survey on deep learning in medical image analysis,” Medical Image Analysis, vol. 42, pp. 60–88, 2017.

- [22] B. Turkbey and M. A. Haider, “Deep learning-based artificial intelligence applications in prostate MRI: brief summary,” The British Journal of Radiology, vol. 95, no. 1131, p. 20210563, 2022.