Mobile-end Tone Mapping based on Integral Image and Integral Histogram

Abstract

Wide dynamic range (WDR) image tone mapping is in high demand in many applications such as film production, security monitoring, and photography. It is especially crucial for mobile devices because most images today are taken with mobile phones; the technology is therefore highly demanded in the consumer mobile market and is essential for a good customer experience. However, high-quality, high-performance WDR image tone mapping implementations are rarely found on the mobile end. In this paper, we introduce a high-performance, mobile-end WDR image tone mapping implementation. It leverages the tone mapping results of multiple receptive fields and calculates a suitable value for each pixel. The use of the integral image and integral histogram significantly reduces the required computation. Moreover, GPU parallel computation is used to increase the processing speed. The experimental results indicate that our implementation can process a high-resolution WDR image in about a second on mobile devices and produce appealing image quality.

Index Terms:

Wide dynamic range, compression, mobile graphic processing unit (GPU), tone mapping, parallel computing.

I Introduction

Dynamic range is defined as the ratio of the intensity of the brightest point to that of the darkest point in a scene or image. Traditional display devices such as LCD, CRT, and LED are usually limited to 8 bits; thus, they can only represent a dynamic range of 255:1. However, fast-developing image sensor technology and image processing algorithms enable the capture of WDR images with a much wider dynamic range than that of display devices. It is therefore impossible to reproduce a WDR image properly on such a display directly. Tone mapping algorithms, often called tone mapping operators (TMOs), serve the goal of compressing the WDR image to match the dynamic range of the display device. Tone mapping algorithms started to emerge in the early 1990s, with the earliest attempts made by Tumblin and Rushmeier [1] and Ward [2]. Tumblin and Rushmeier aimed to match the perceived brightness of the displayed image with that of the scene. Ward used a linear scaling, focusing on preserving image contrast. These algorithms are classified as global tone mapping functions because they use the same tone curve for all pixels of the WDR image. In general, global tone mapping algorithms are computationally simple and mostly artifact-free, which gives them unique advantages in implementation. However, only a few global TMO implementations are found in the literature because they are prone to lose details and contrast in either bright or dark regions due to the global compression. Drago et al. [3] proposed a function that changes the base of the logarithmic function according to the pixel brightness. It is one of the most commonly used examples of tone mapping in various publications. The sigmoid function is similar to the response curve of the human visual system (HVS) and is thus used in many bio-inspired TMOs. Works such as [4, 5, 6, 7] try to simulate the dynamic range compression of the HVS by mimicking the response curve of our photoreceptors. Although these approaches may be effective in reducing the dynamic range, they have an inherent flaw: the tone mapped image represents the internal representation of the visual system rather than the luminance that our eyes expect.

In many research works, tone mapping is regarded as a constrained optimization problem. The objective is to achieve the tone mapping that is most preferred in terms of an objective quality measure. Mantiuk et al. tried to minimize visible distortion during tone mapping [8]. Ma et al. proposed a tone mapping method that optimizes the tone mapped image quality index [9]. Recently, tone mapping with an edge-preserving filter has become the most popular way to tone map WDR images [10, 11, 12, 13, 14]. It first applies an edge-preserving filter to the luminance channel of the WDR image in the log domain. The edge-preserving filter separates the image into two layers, namely a base layer that contains global brightness information and a detail layer that mainly consists of local texture. The base layer is processed with a compressive tone curve while the detail layer is left untouched. Thus, local details are preserved while the overall dynamic range is reduced. Finally, the base layer and the detail layer are combined and transformed back to the original linear domain for display.

Most tone mapping algorithms are implemented on the desktop end. However, several GPU implementations have been made in recent years, which demonstrate great computational efficiency with the help of the GPU’s parallel processing ability. Chen et al. achieved 50 Hz tone mapping by developing a new data structure and parallelizing their edge-preserving filter on the GPU [15]. Akyuz demonstrated a WDR imaging pipeline realized on the GPU [16]. It yields a performance improvement of 2 to 3 orders of magnitude when compared with the CPU implementation. Urena et al. evaluated both GPU and FPGA performance on a new tone mapping algorithm [17]. When compared with the CPU implementation, speed-up factors of 7.5 and 15 are achieved for the GPU and FPGA, respectively.

In this paper, we propose an algorithm inspired by the HVS that tone maps a pixel by taking into account multiple receptive fields surrounding the pixel. The use of the integral image and integral histogram makes the whole tone mapping process highly parallel. Image quality evaluation and mobile-end implementation performance experiments are carried out to demonstrate the effectiveness and efficiency of our work.

The rest of the paper is structured as follows. Section II details the proposed algorithm, and Section III describes the mobile-end implementation. Section IV provides experimental results. Section V concludes this work.

II Proposed Algorithm

When viewing WDR scenes, our HVS adopts a local adaptation mechanism to help us see the details in all parts of the scene. Local adaptation can mainly be explained as the ability to accommodate the luminance level of a certain visual field around the current fixation point. Moreover, it also reveals that different luminance intervals can result in overlapping reactions on the limited response range of the visual system, thus extending our visual response range to cope with the full dynamic range of high-contrast scenes. Inspired by the concept of local adaptation, we design our tone mapping algorithm as follows. The WDR image is first transferred to the logarithmic domain using logarithmic compression. For every pixel, one can always find several different receptive fields, each of which has that pixel as its center. To adapt to every visual field, we tone map the receptive fields separately. Since the HVS has a logarithmic response to light intensity, we choose histogram adjustment [18], one of the simplest tone mapping methods, to tone map every receptive field. The processing flow of applying histogram adjustment to a receptive field is summarized as follows. First, a histogram of image luminance in the logarithmic domain is constructed for every receptive field. Given the pixel count in each bin of the histogram, a cumulative probability function is defined as

| (1) |

The tone mapped value for the center pixel of the receptive field is calculated using the following equation:

| (2) |

where the two display-range terms are the minimum and maximum display luminance, and the input value is the center pixel of the receptive field.
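As an illustration of Eq. 1 and Eq. 2, the sketch below applies histogram adjustment to a single receptive field. It is a minimal sketch under stated assumptions, not the authors' implementation: the function name, the equal-width binning over the field's own value range, the inclusive cumulative count, and the log-domain display mapping (the usual form of histogram adjustment [18]) are all assumptions made for illustration.

```python
import math


def histogram_adjust_center(log_lum, center, n_bins, ld_min, ld_max):
    """Tone map the center pixel against the values of one receptive field.

    log_lum : log-luminance values inside the receptive field (hypothetical input)
    center  : log-luminance of the field's center pixel (must lie in the field)
    ld_min, ld_max : minimum and maximum display luminance
    Returns the display log-luminance assigned to the center pixel.
    """
    lo, hi = min(log_lum), max(log_lum)
    if hi == lo:
        p = 1.0  # flat field: every pixel falls into the same bin
    else:
        width = (hi - lo) / n_bins
        # Histogram of the receptive field (pixel count per bin).
        counts = [0] * n_bins
        for v in log_lum:
            counts[min(int((v - lo) / width), n_bins - 1)] += 1
        # Assumed: cumulative probability includes the center pixel's own bin.
        b_center = min(int((center - lo) / width), n_bins - 1)
        p = sum(counts[: b_center + 1]) / len(log_lum)
    # Spread the display range according to the cumulative probability.
    return math.log(ld_min) + (math.log(ld_max) - math.log(ld_min)) * p
```

With four evenly spread values and a display range of 1 to 100, the brightest pixel is mapped to log(100) and the darkest to a quarter of the way up the log display range, which shows how each field is stretched over the full display range regardless of its own contrast.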

For each receptive field, Eq. 2 gives one tone mapped value of the center pixel. The values of all receptive fields are fused together using a weight function:

| (3) |

The weight for each value is calculated using the following formula:

| (4) |

where the variance term is the local variance of the receptive field centered at the pixel location, and the regularization term is a user-defined parameter.
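The weighting and fusion steps can be sketched as follows. This is only an illustration under assumptions: the weight is taken to have the guided-filter form variance / (variance + regularization term), and Eq. 3 is assumed to be a normalized weighted sum; the function names and the fallback for all-zero weights are hypothetical.

```python
def variance_weight(variance, eps):
    """Assumed form of Eq. 4: near 1 for high variance, near 0 for flat fields."""
    return variance / (variance + eps)


def fuse(values, variances, eps):
    """Assumed form of Eq. 3: normalized weighted sum over receptive fields.

    values    : tone mapped value of the pixel from each receptive field
    variances : local variance of each receptive field at that pixel
    """
    weights = [variance_weight(v, eps) for v in variances]
    total = sum(weights)
    if total == 0.0:
        # Hypothetical fallback: all fields are perfectly flat, use a plain mean.
        return sum(values) / len(values)
    return sum(w * t for w, t in zip(weights, values)) / total
```

For example, a variance nine times the regularization term gives a weight of 0.9, so that receptive field dominates the fused value, while a variance far below the regularization term contributes almost nothing.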

The proposed algorithm would be computationally expensive if implemented directly because, for every pixel location, one needs to compute one histogram and one variance per receptive field. In the following section, we show how the histograms and variances are calculated in a single-pass fashion.

II-A Integral Image and Integral Histogram based Acceleration

The integral image is prominently used within the Viola-Jones object detection framework from 2001 [19]. It can significantly reduce the computational burden when an accumulative sum over an image area is required. The following equation defines an integral image.

| (5) |

where the summed term is the value of the pixel at each location. A great feature of the integral image is that the summation of any rectangular region in the original image can be computed efficiently in a single pass. For example, given the four corner points of a rectangle in the image, the accumulative summation of the rectangular region enclosed by the four points is equal to:

| (6) |

It can be seen from Eq. 6 that with the help of integral image, only one addition and two subtractions are required for calculating an accumulative sum.
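The following sketch illustrates the integral image and the four-lookup rectangle sum. It is a minimal pure-Python illustration, not the authors' code; the zero-padded first row and column and the half-open rectangle convention are assumptions chosen to avoid boundary checks, and the function names are hypothetical.

```python
def integral_image(img):
    """Return an (H+1) x (W+1) table where ii[y][x] is the sum of img[0:y][0:x]."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0  # running sum of the current row
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, top, left, bottom, right):
    """Sum of rows top..bottom-1 and columns left..right-1, using four lookups."""
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]
```

For the 3 x 3 image with values 1 to 9 in reading order, the bottom-right 2 x 2 block sums to 5 + 6 + 8 + 9 = 28, and `rect_sum` returns that value with one addition and two subtractions once the table is built.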

A natural extension of the integral image is the integral histogram. It is a fast way to extract the histogram of a specific area [20]. If the pixel values of an image are equally segmented into the bins of a histogram, then we can build a multi-channel map with one channel per bin, where each channel is defined as follows:

| (7) |

Here, the channel index indicates the bin. For each channel, we can compute an integral image

| (8) |

Then, we can build a multi-channel integral histogram in which each bin of the histogram of a rectangular region is calculated with

| (9) |

It is evident that, with the help of the integral histogram, the histogram of any local area can be calculated easily with one addition and two subtractions per bin.
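The construction of Eq. 7 to Eq. 9 can be sketched as one integral image per bin. This is an illustrative sketch only; the equal-width binning over a given value range, the zero-padded tables, and the function names are assumptions rather than details taken from the paper.

```python
def integral_histogram(img, n_bins, lo, hi):
    """Build one zero-padded integral image per bin (requires lo < hi).

    ih[b][y][x] is the number of bin-b pixels in rows 0..y-1, columns 0..x-1.
    """
    h, w = len(img), len(img[0])
    width = (hi - lo) / n_bins
    ih = [[[0] * (w + 1) for _ in range(h + 1)] for _ in range(n_bins)]
    for y in range(h):
        row_counts = [0] * n_bins  # per-bin running counts along the row
        for x in range(w):
            b = min(int((img[y][x] - lo) / width), n_bins - 1)
            row_counts[b] += 1
            for k in range(n_bins):
                ih[k][y + 1][x + 1] = ih[k][y][x + 1] + row_counts[k]
    return ih


def local_histogram(ih, top, left, bottom, right):
    """Histogram of a half-open rectangle: four lookups per bin."""
    return [c[bottom][right] - c[top][right] - c[bottom][left] + c[top][left]
            for c in ih]
```

Once the table is built, the histogram of any receptive field costs a fixed number of operations per bin, independent of the field size, which is what makes evaluating many receptive fields per pixel affordable.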

With the help of the integral histogram, the histogram calculation of Eq. 1 becomes straightforward. We first equally divide the logarithmic image into bins based on pixel values; the number of bins is a user-defined parameter. The integral histogram of the logarithmic image can be built according to Eq. 7 and Eq. 8. Then, for any receptive field, the pixel count of each bin can be obtained with Eq. 9. Hence, Eq. 1 and Eq. 2 can be evaluated easily. The local variance value has the following form:

| (10) |

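where the two summation terms are the accumulative sums of the logarithmic image and of its square over the receptive field centered at the pixel location, and the normalizing term is the number of pixels within the receptive field. The two accumulative sums in Eq. 10 can be computed with the integral images of the logarithmic image and of its square.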
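For reference, the standard variance identity that this description matches can be written as below. The notation is assumed for illustration (the original symbols are not recoverable here): L is the logarithmic image, R(x, y) the receptive field centered at (x, y), and N its pixel count.

```latex
\sigma^{2}(x,y)
  = \frac{1}{N}\sum_{(i,j)\in R(x,y)} L(i,j)^{2}
  - \left(\frac{1}{N}\sum_{(i,j)\in R(x,y)} L(i,j)\right)^{2}
```

As a small check, for a field containing the values 1, 2, 3, 4 the two sums are 30 and 10 with N = 4, so the variance is 30/4 - (10/4)^2 = 7.5 - 6.25 = 1.25. Both sums are rectangle sums, so each costs four lookups in the corresponding integral image.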

The majority of the computational burden of Eq. 1 to Eq. 4 is removed with the help of the integral image and integral histogram. The remaining computation consists of only basic operations. After the formation of the integral image and integral histogram, the entire tone mapping process becomes pixel-parallel, which means that all pixels can be computed in parallel without data dependency. This feature is significantly helpful for GPU implementation, where large numbers of single-instruction-multiple-data (SIMD) processing units are available. We will show the GPU pixel-parallel implementation in Section III.

II-B Analysis

In this part, we make a detailed analysis of how the proposed algorithm compresses the dynamic range while preserving local details.

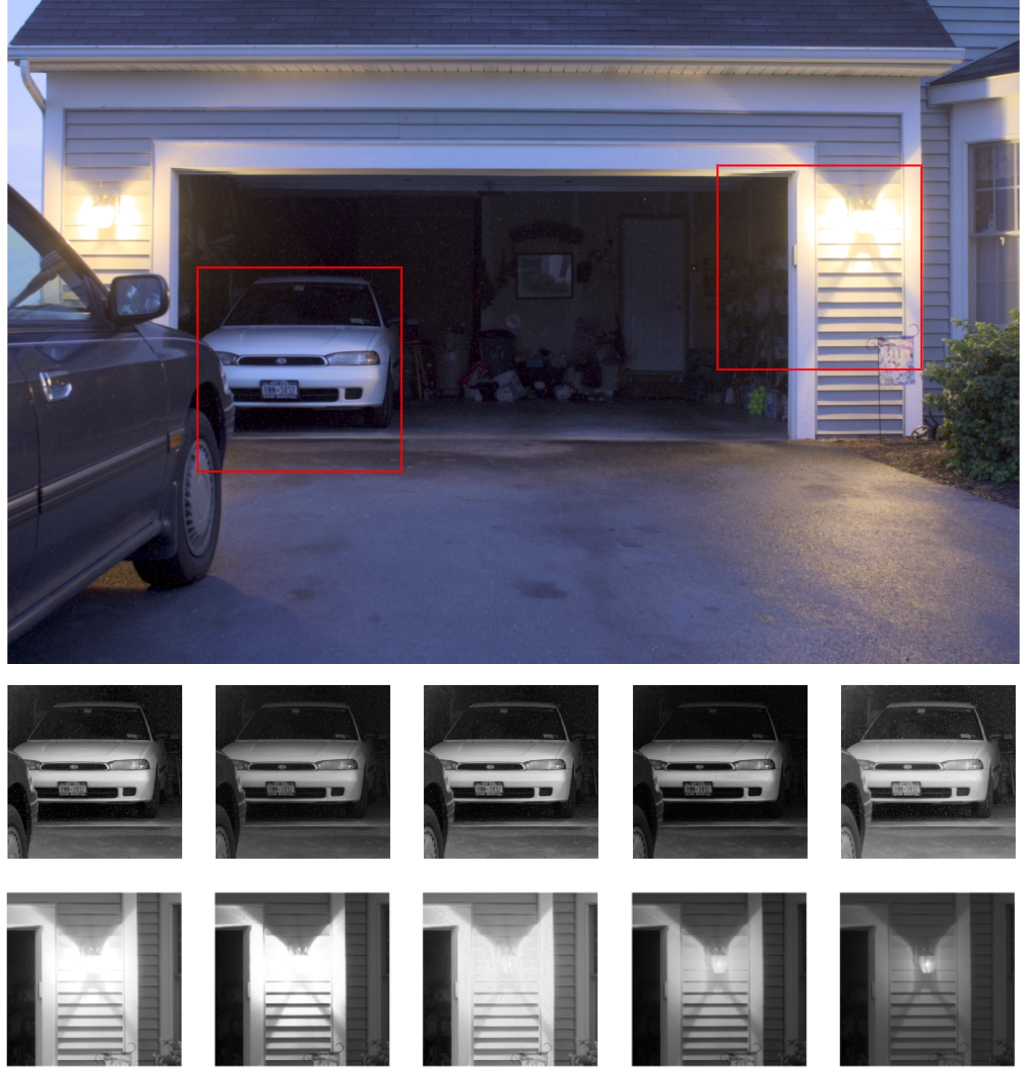

The main reason that the algorithm adopts a simple tone mapping method (Eq. 2), rather than a more complicated one, to tone map every receptive field is that the local dynamic range of a single receptive field is usually much lower than the dynamic range of the entire WDR image. Fig. 1 shows an example in which a simple tone mapping method is already sufficient to produce good results. The image on top of Fig. 1 is the original WDR image. We tone map this image with different algorithms. Two patches of the same size and at the same location are selected from the corresponding tone mapped images. From left to right on the two bottom rows of Fig. 1, the image patches are from Mantiuk et al. [8], Durand et al. [10], Reinhard et al. [21] and Drago et al. [3], respectively. The last column shows image patches that are cropped from the original WDR image and then tone mapped with a logarithmic response function. The image patches using local processing show comparable or even better results when compared with the other algorithms. In the lamp area, the image is not as saturated as the other four images, and in the car area, the image is brighter than the other four images. This example demonstrates that, with local adaptation, even a simple tone mapping method can reveal the local details of wide dynamic range scenes.

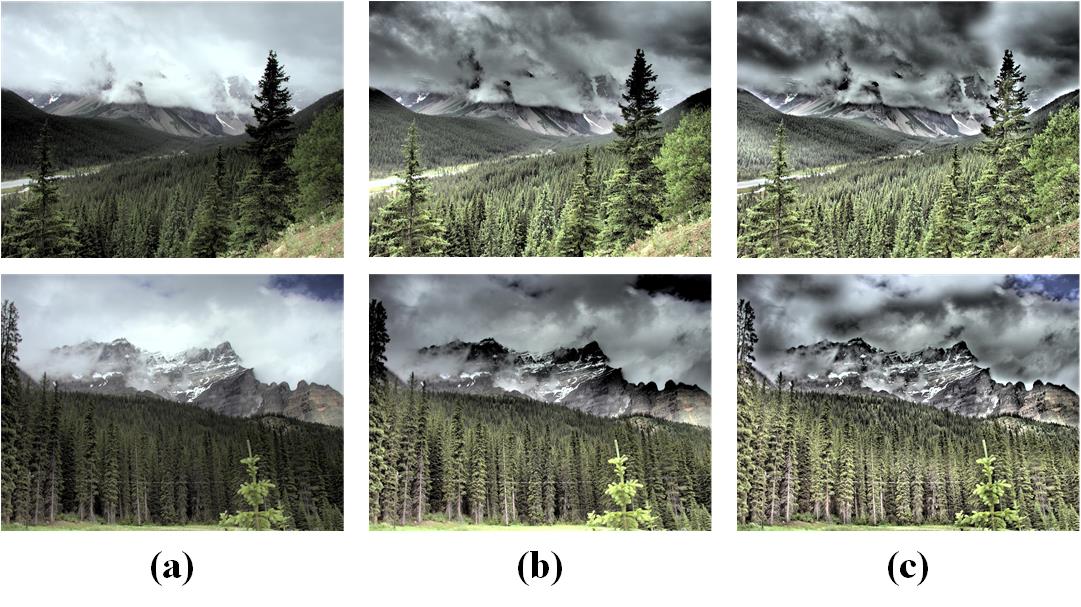

However, revealing local detail is not enough for good tone mapping, because artifacts usually emerge if global statistics are not considered. Fig. 2 shows a tone mapping example of our algorithm with only one receptive field in Eq. 3. It is apparent that the smaller the receptive field, the better the details, but there are many disturbing artifacts, especially in uniform areas. On the other hand, the larger the receptive field, the fewer the details as well as the artifacts. In effect, the size of the receptive field balances the global and local statistics during tone mapping. Consider the most extreme situation, in which the receptive field is as large as the WDR image itself; the tone mapping then becomes global. In this circumstance, there will be no artifacts, because a global, monotonic tone mapping curve preserves the brightness consistency of the image. However, the details are also concealed due to the global compression. If the receptive field is so small that it contains only several pixels, the details will be greatly exaggerated because every receptive field is given the full display range between the minimum and maximum display luminance regardless of its size. If the pixels have similar values, for instance when they are all background pixels of clear sky, some pixels will still be tone mapped to the extremes of the display range and become artifacts because of Eq. 2. The local compression can sabotage the image brightness consistency, especially in uniform areas, because the tone mapping function of the entire WDR image is no longer monotonic. Although the results are significantly different when tone mapped with large and small receptive fields, it is also evident that the displayed visual appearances are complementary: one contains local detail and the other global brightness consistency. Since every pixel is included in different receptive fields, one solution to obtain both the local and the global appearance is to fuse all receptive fields using a weighted summation, as shown in Eq. 3.

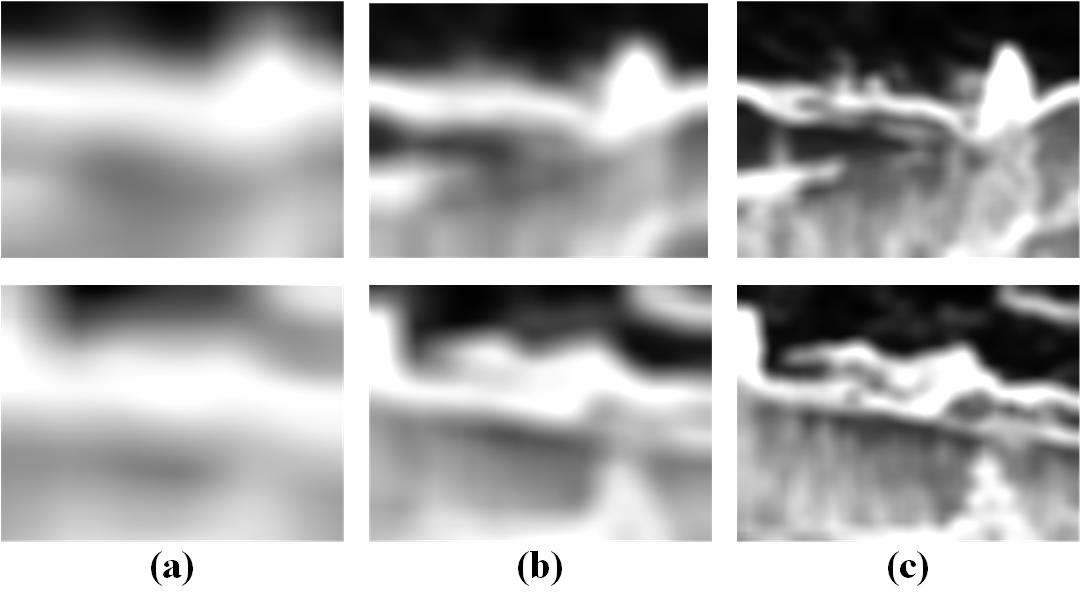

To reveal local detail and maintain brightness consistency, we should consider the two possible cases of local receptive fields. In a “flat” receptive field, where pixel values are mostly the same, we should tone map it more globally to maintain image consistency and reduce possible artifacts. In a “high variance” receptive field, where pixel values fluctuate significantly, we should tone map it more locally to reveal details. Eq. 4 is taken from the guided image filter [12], and it is a very efficient way to measure the degree of flatness or variance of a local image area. If the variance is much larger than the regularization term, the weight will be close to 1, which indicates that the center pixel of the receptive field is “high variance”. If the variance is much smaller than the regularization term, the weight will be close to 0, and the center pixel is regarded as belonging to a “flat” receptive field. Eq. 4 gives a pixel-wise and receptive-field-wise weight for each pixel, and it has an edge-preserving property that helps to reduce artifacts. Fig. 3 shows the weight maps calculated with different receptive fields. Bright pixels mean that the computed weight is close to one, and dark pixels mean that it is close to zero. It can be seen that, at smaller scales, the weight better preserves details such as edges and textures.

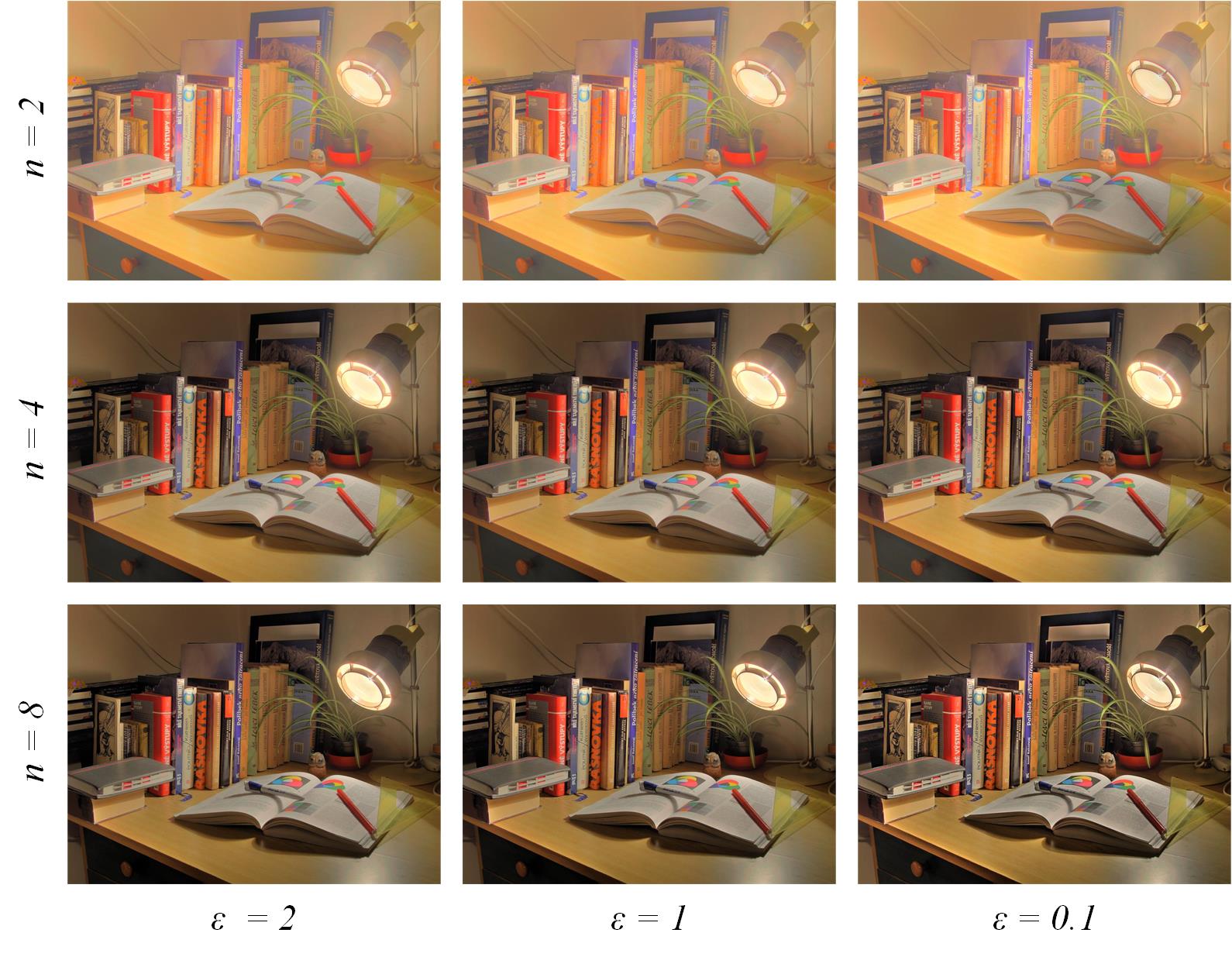

II-C Parameter Setting

There are three free parameters in our algorithm, namely the number of different receptive fields, the number of histogram bins, and the regularization term. The number of receptive fields is the most important parameter in the proposed algorithm. As discussed previously, to maintain the image brightness consistency, the largest receptive field should be the same size as the image. Yet, to obtain the details available in different receptive fields, we need smaller receptive fields as well. We adopt the popular image pyramid method to define the size relationship between any two adjacent receptive fields, so that the smallest receptive field is small enough for the tone mapping function of Eq. 2 to preserve local details. The effects of the number of bins and the regularization term on a tone mapped image are shown in Fig. 4. Nine tone mapped images are presented in a matrix, with one parameter varying vertically and the other varying horizontally. It is apparent that the image contrast increases as the number of bins increases. A decrease of the regularization term in Eq. 4 results in an image with better local details; in Fig. 4, the texture of the window glass is best preserved with the smallest regularization value. We find that one fixed setting of these two parameters usually produces satisfactory results, i.e. good brightness while preserving local contrast and details.

III Mobile-end Implementation

The proposed algorithm is implemented on the iOS platform as an application. The application first captures four different exposures, and the four images are then used to recover a WDR image using the algorithm proposed by Paul Debevec [22].

The generated WDR image is converted to a luminance channel using the following equation:

| (11) |

where the three inputs are the red, green and blue channels of the WDR image, respectively. Then, the tone mapped image is generated by applying the proposed algorithm to the luminance image. Finally, the color information is restored using the same color restoration function as in [23]:

| (12) |

where the function is applied to each of the three color channels, the two luminance terms denote the luminance before and after WDR tone mapping, respectively, and the remaining parameter controls color saturation. In the following, we first briefly introduce the programming model and then focus on the GPU implementation of the tone mapping algorithm.
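A commonly used color restoration of this kind scales each channel by its ratio to the input luminance, raises the ratio to a saturation exponent, and multiplies by the tone mapped luminance. The sketch below illustrates that form; it is an assumption that Eq. 12 follows it exactly, and the function name, the zero-luminance guard, and any saturation value passed in are hypothetical.

```python
def restore_color(rgb_in, lum_in, lum_out, saturation):
    """Assumed form: C_out = (C_in / L_in) ** s * L_out for each channel C.

    rgb_in     : (R, G, B) of the WDR pixel
    lum_in     : luminance of the pixel before tone mapping
    lum_out    : luminance of the pixel after tone mapping
    saturation : exponent s controlling color saturation (value is hypothetical)
    """
    if lum_in <= 0.0:
        return (0.0, 0.0, 0.0)  # hypothetical guard against division by zero
    return tuple(((c / lum_in) ** saturation) * lum_out for c in rgb_in)
```

With a saturation exponent of 1 the channel ratios are preserved exactly, and smaller exponents pull the three channels toward the luminance, which desaturates the result.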

III-A Mobile-end GPU Implementation

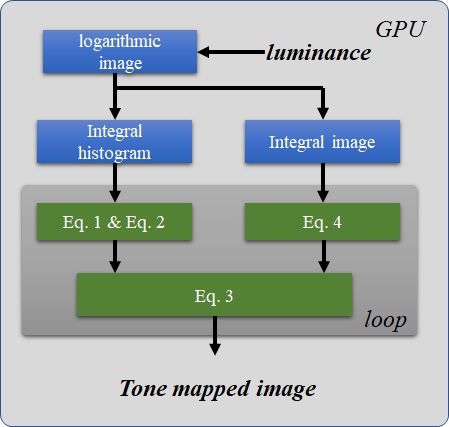

The algorithm is implemented using Metal, Apple’s GPU application programming interface. Metal allows for the parallel processing of data much like OpenGL or other shader programming languages. In Metal, a kernel function is the basic function that runs in SIMD fashion on the GPU. Each instance of a kernel function is called a thread. Threads are further organized into threadgroups that are executed together and can share a common block of memory. Since the proposed algorithm is pixel-parallel, we can assign every pixel a thread to tone map itself, and all threads share the common memory that holds the calculated integral images and integral histogram. The overall implementation flow of the algorithm is shown in Fig. 5. The input and output of the algorithm are the calculated luminance image and the tone mapped luminance image, respectively. The blue blocks indicate functions that execute only once during the computation. They are used to calculate the logarithmic image, the integral histogram and the two integral images, respectively. The MPSImageIntegral function provided by Metal Performance Shaders is used to calculate the integral images and integral histogram. The core functions are labeled in green in Fig. 5.

Our implementation calculates the different scales in sequence; the functions required in this procedure are labeled in green, and they are called once per scale in a main loop (shaded gray). The pseudocode of Algorithm 1 shows how Eq. 4 is implemented on the GPU as a kernel function. Its inputs are the precomputed integral images of the logarithmic image and of its square, which can be accessed by all threads, together with the vertical and horizontal coordinates of the current thread. Lines 2 to 5 calculate the coordinates of the four corner pixels of Eq. 6 and Eq. 9, and the number of pixels in the receptive field is then computed. Eq. 6 and Eq. 9 are executed in line 13 and line 18. Line 19 implements Eq. 10, and Eq. 4 is carried out in line 20. The implementation of Algorithm 1 employs only the basic addition, subtraction and indexing operations of the GPU.
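The per-thread work described above can be sketched in Python as a function evaluated independently at every pixel. This is not Algorithm 1 and not Metal code; it is an illustrative sketch that assumes zero-padded integral images, a square receptive field given by a half-size `radius`, clamping at the image border, and the weight form variance / (variance + regularization term). All names are hypothetical.

```python
def weight_kernel(ii_l, ii_l2, x, y, radius, eps):
    """Work of one thread: local variance and weight at pixel (x, y).

    ii_l, ii_l2 : zero-padded integral images of L and L*L, size (H+1) x (W+1)
    radius      : half-size of the square receptive field (assumed shape)
    eps         : regularization term
    """
    h, w = len(ii_l) - 1, len(ii_l[0]) - 1
    # Corner coordinates of the receptive field, clamped to the image.
    top, bottom = max(y - radius, 0), min(y + radius + 1, h)
    left, right = max(x - radius, 0), min(x + radius + 1, w)
    n = (bottom - top) * (right - left)  # number of pixels in the field

    def rect(ii):
        # Rectangle sum from four lookups.
        return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]

    mean = rect(ii_l) / n
    variance = max(rect(ii_l2) / n - mean * mean, 0.0)  # guard against rounding
    return variance / (variance + eps)
```

Because the function reads only shared, read-only tables and its own coordinates, every pixel can be evaluated by a separate thread without synchronization, which is the property the GPU implementation relies on.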

Eq. 1, Eq. 2 and Eq. 3 are also implemented as kernel functions because they depend only on the pixel value and the integral histogram. The parallel processing feature of the algorithm greatly reduces the computational cost of the implementation. Evaluation results of the GPU implementation are shown in the following section.

IV Experimental Results

In this section, we present an image quality comparison of our algorithm as well as the performance of the GPU implementation.

Our algorithm is compared with three tone mapping algorithms: the local Laplacian-based tone mapping by Paris et al. [14], the fast bilateral filtering tone mapping method by Durand et al. [10] and the edge-preserving multi-scale decomposition algorithm proposed by Gu et al. [13]. These three algorithms report state-of-the-art tone mapped image quality. We use the codes of [14] and [10] provided by the authors themselves. For Gu’s method, we implemented the algorithm based on the code of [12]. In the image quality assessment, all compared algorithms use their default parameter settings, and our algorithm uses one fixed setting of its three parameters. For the application performance evaluation, we tested our application on an iPhone 6 Plus device.

Table I: Naturalness scores of the tone mapped images.

| Image | | | | Proposed |
|---|---|---|---|---|
| BristolBridge | 0.0802 | 0.3262 | 0.2160 | **0.8558** |
| ClockBuilding | 0.8032 | **0.8829** | 0.6845 | 0.8345 |
| CrowFootGlacier | 0.0887 | 0.4725 | 0.5205 | **0.7416** |
| DomeBuilding | 0.4134 | 0.9108 | 0.4570 | **0.9799** |
| FribourgGate | 0.3923 | **0.86837** | 0.8239 | 0.8541 |
| MontrealStore | 0.3959 | **0.9725** | 0.4489 | 0.9383 |
| Moraine2 | 0.1260 | **0.7519** | 0.2984 | 0.6234 |
| Oaks | 0.2199 | 0.9281 | 0.4045 | **0.9770** |
| StreetLamp | 0.5590 | 0.7952 | 0.4141 | **0.8145** |
| Vernicular | 0.4190 | 0.6256 | **0.7458** | 0.6023 |
| Average | 0.3498 | 0.7534 | 0.5014 | **0.8221** |

Table II: Structural fidelity scores of the tone mapped images.

| Image | | | | Proposed |
|---|---|---|---|---|
| BristolBridge | 0.8368 | 0.7947 | 0.8387 | **0.9095** |
| ClockBuilding | 0.8780 | 0.8101 | 0.8795 | **0.8942** |
| CrowFootGlacier | 0.9274 | 0.8181 | 0.9422 | **0.9424** |
| DomeBuilding | 0.7364 | 0.6968 | 0.7498 | **0.7933** |
| FribourgGate | 0.9362 | 0.9045 | 0.9419 | **0.9448** |
| MontrealStore | **0.9330** | 0.8940 | 0.9321 | 0.9213 |
| Moraine2 | 0.9144 | 0.9014 | 0.9242 | **0.9576** |
| Oaks | 0.9333 | 0.8832 | 0.9578 | **0.9703** |
| StreetLamp | 0.8844 | 0.8694 | 0.8654 | **0.9436** |
| Vernicular | 0.9117 | 0.8946 | 0.9255 | **0.9360** |
| Average | 0.8892 | 0.8467 | 0.8958 | **0.9213** |

IV-A Image Quality Assessment

It can be understood from the earlier explanation that our algorithm utilizes all available display levels in each local processing step. This significantly increases image brightness, especially in dark regions. Our experiments tested different WDR images to evaluate the quality of the tone mapped images. One example is shown in Fig. 6, which shows one image tone mapped with different algorithms. In reading order, the images are tone mapped with Durand et al., Gu et al., Paris et al., and the proposed algorithm, respectively. A visual inspection shows that our algorithm gives an image with higher brightness than the other three images. In fact, the average brightness values of the four images are 93.78, 120.92, 98.01 and 122.14, respectively. Although the image generated with Gu et al. has similar overall brightness, it lacks global contrast, and many unwanted details are enhanced, which makes the image look unnatural.

For objective assessment, we use the tone-mapped image quality index (TMQI) [24] to calculate an overall quality score that combines a multi-scale structural fidelity measure and a measure of image naturalness. The structural fidelity measure is a full-reference assessment based on the structural similarity (SSIM) index. The naturalness measure is a no-reference assessment based on statistics of good-quality natural images. The naturalness score, structural fidelity score, and overall score are listed in Table I, Table II and Table III, respectively. The winning algorithm’s score is shown in bold font. In the naturalness score, our algorithm scores highest in 5 images and achieves an average value of 0.8221, which is the highest among the tested algorithms. In terms of structural fidelity, our algorithm wins 9 out of 10 images and achieves an average score of 0.9213. In terms of overall quality, our algorithm produces the best scores for 8 images and the highest average score of 0.9538.
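How the two component scores combine into the overall score can be illustrated with the power-law combination used by TMQI. The sketch below assumes the default constants of the TMQI reference [24] (a = 0.8012, alpha = 0.3046, beta = 0.7088); the function name is hypothetical, and the constants should be checked against [24] before being relied upon.

```python
def tmqi_overall(structural_fidelity, naturalness,
                 a=0.8012, alpha=0.3046, beta=0.7088):
    """Overall score Q = a * S**alpha + (1 - a) * N**beta (assumed defaults)."""
    return a * structural_fidelity ** alpha + (1.0 - a) * naturalness ** beta


# BristolBridge, proposed algorithm: S = 0.9095, N = 0.8558
print(round(tmqi_overall(0.9095, 0.8558), 4))  # 0.9564
```

The result agrees with the overall score reported for the proposed algorithm on BristolBridge, and the same holds for Oaks (S = 0.9703, N = 0.9770 gives 0.9894). Because the structural term carries most of the weight and both exponents are below 1, large differences in naturalness translate into comparatively small differences in the overall score.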

Table III: Overall TMQI scores of the tone mapped images.

| Image | | | | Proposed |
|---|---|---|---|---|
| BristolBridge | 0.7921 | 0.8369 | 0.8265 | **0.9564** |
| ClockBuilding | 0.9403 | 0.9334 | 0.9224 | **0.9493** |
| CrowFootGlacier | 0.8187 | 0.8705 | 0.9120 | **0.9477** |
| DomeBuilding | 0.8362 | 0.9038 | 0.8480 | **0.9426** |
| FribourgGate | 0.8877 | 0.9570 | 0.9600 | **0.9652** |
| MontrealStore | 0.8875 | 0.9692 | 0.8969 | **0.9715** |
| Moraine2 | 0.8254 | **0.9387** | 0.8666 | 0.9329 |
| Oaks | 0.8525 | 0.9600 | 0.8954 | **0.9894** |
| StreetLamp | 0.9034 | 0.9368 | 0.8731 | **0.9590** |
| Vernicular | 0.8863 | 0.9171 | **0.9440** | 0.9240 |
| Average | 0.8630 | 0.9223 | 0.8945 | **0.9538** |

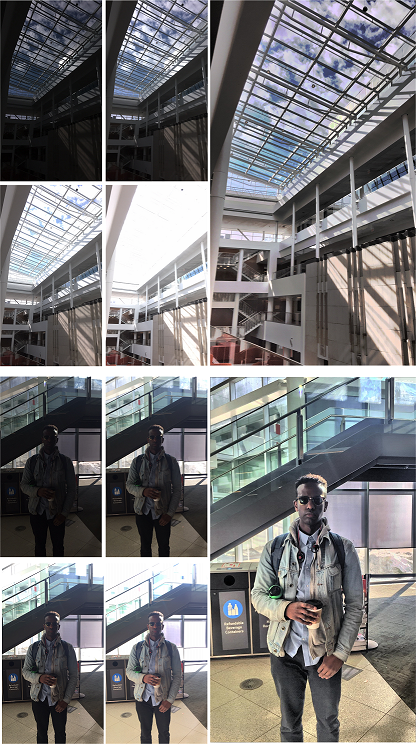

Field assessment of the image quality has also been conducted using the developed iOS application. Fig. 6 shows two sets of examples. The first scene is taken in a commonly seen extreme light condition. The four images with different exposures are shown in the two left columns, and the tone mapped image is shown in the right-most column. Our algorithm produces a bright image with clear details; for example, the outdoor clouds and the frame of the skylight window are clearly visible. The inner structure of the building, such as the stairs and railing, is also clearly shown in the tone mapped image. The other scene is a portrait, because taking portraits is the most common use of a phone camera. The bottom image of Fig. 6 shows an example portrait taken by our application. This image is taken under a backlight condition, which is a challenging situation because the strong background light can make the portrait dark or even invisible. The tone mapped image in Fig. 6 shows the portrait clearly, and the detail in the background is also well preserved. This experiment indicates that our application is well suited to imaging under extreme light conditions.

IV-B Application Performance Assessment

Table IV: Processing time in ms for the tested parameter sets at the three image resolutions.

| Number of scales | | | | |
|---|---|---|---|---|
| 122.11 | 303.79 | 637.90 | | |
| 145.82 | 351.13 | 788.27 | | |
| 166.91 | 402.36 | 865.69 | | |
| 130.33 | 284.74 | 677.69 | | |
| 174.73 | 359.80 | 793.60 | | |
| 175.14 | 422.65 | 920.95 | | |
| 139.69 | 293.37 | 869.33 | | |
| 187.64 | 355.05 | 886.96 | | |
| 212.44 | 432.90 | 1016.26 | | |

Ideally, we would compare the application performance with other works that are also implemented on the mobile end. However, most reported tone mapping algorithms are implemented on desktop platforms, and it would be unfair to compare implementations on different platforms. Hence, we focus our analysis on the mobile-end implementation.

The proposed algorithm has three parameters, namely the number of scales, the number of bins and the regularization term. A detailed performance analysis should fully consider the variation of the three parameters. The regularization term is a scalar value that affects neither the memory requirement nor the total amount of computation, so it does not affect the performance of our application. However, the number of scales affects the computational burden, and the number of bins affects the required memory usage. To evaluate the effect of these two parameters on the processing speed, we tested the parameter sets obtained by varying the number of scales from 3 to 5 and the number of bins from 3 to 5. The evaluation results for three commonly used resolutions are shown in Table IV. For identical parameter settings, the processing time increases roughly linearly as the image resolution increases. The influence of the number of bins on the processing time is limited: under the same image resolution and with a fixed number of scales, the processing time fluctuates only within a narrow range as the number of bins changes from 3 to 5. From Table IV, we can see that the number of scales has a greater influence on the application processing time than the number of bins, and the processing time increases noticeably at every resolution when the number of scales changes from 3 to 5. We conclude that the greatest factor affecting the application processing time is the image resolution. The number of scales affects the processing time secondarily, while the number of bins has very little influence.

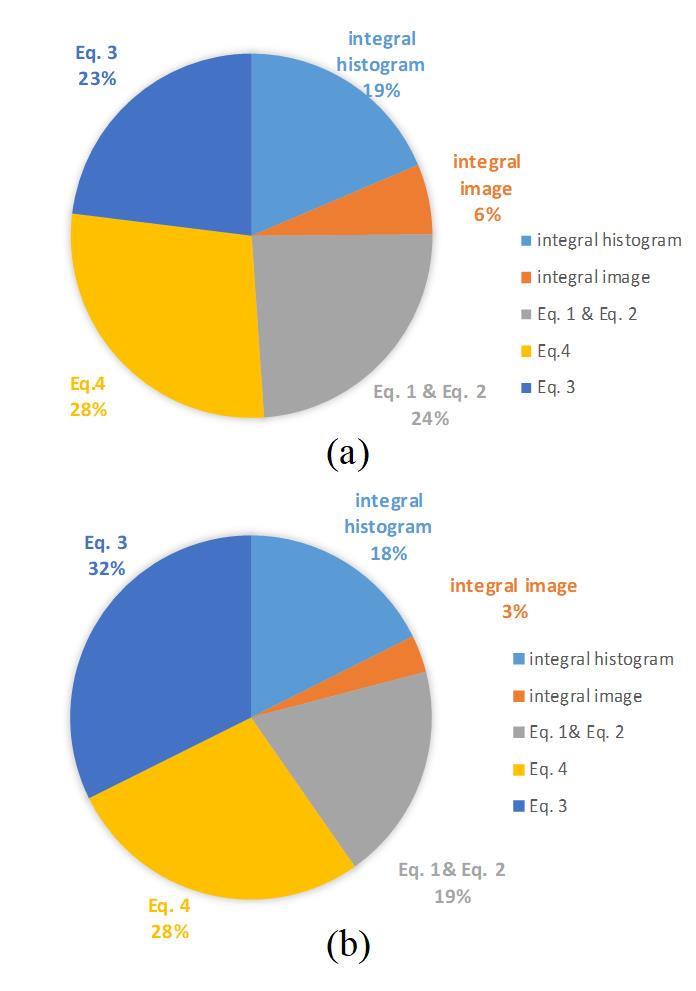

A processing time breakdown for two different parameter settings is shown in Fig. 7. Fig. 7 (a) and Fig. 7 (b) correspond to two extreme cases of parameter setting and image resolution, chosen to show the rough proportion of each function. Since Eq. 3, Eq. 4, and Eq. 1 together with Eq. 2 are executed in a loop several times, they consume the majority of the processing time. They account for 23%, 28% and 24% of the total processing time in Fig. 7 (a) and 32%, 28% and 19% in Fig. 7 (b), respectively. The integral histogram and integral images need to be computed only once in our algorithm; they consume 19% and 6% of the processing time in Fig. 7 (a), and 18% and 3% in Fig. 7 (b), respectively.

V Conclusion

A tone mapping algorithm for wide dynamic range images based on the integral image and integral histogram is presented. The algorithm is motivated by the local processing feature of the human visual system. It adopts multiple receptive fields to combine global image consistency and local image details into one final image. Quality evaluation as well as field testing were carried out and discussed in detail. In the objective assessment, the proposed algorithm achieved the best average scores in both structural similarity and naturalness; hence, it also achieved the highest average TMQI score among the compared state-of-the-art algorithms. In the application field test, our algorithm also produced appealing images that display scene details. A mobile GPU implementation of the proposed algorithm was presented, which can tone map typical 1080P WDR color images in about 1 second, making it suitable for mobile phone users.

Acknowledgments

The authors would like to thank the Alberta Innovates Technology Futures (AITF) and Natural Sciences and Engineering Research Council of Canada (NSERC) for supporting this research.

References

- [1] J. Tumblin and H. Rushmeier, “Tone reproduction for realistic images,” IEEE Computer graphics and Applications, vol. 13, no. 6, pp. 42–48, 1993.

- [2] G. Ward, “A contrast-based scalefactor for luminance display,” Graphics gems IV, pp. 415–421, 1994.

- [3] F. Drago, K. Myszkowski, T. Annen, and N. Chiba, “Adaptive logarithmic mapping for displaying high contrast scenes,” in Computer Graphics Forum, no. 3, pp. 419–426. Wiley Online Library, 2003.

- [4] C. Schlick, “Quantization techniques for visualization of high dynamic range pictures,” in Photorealistic Rendering Techniques, pp. 7–20. Springer, 1995.

- [5] J. Van Hateren, “Encoding of high dynamic range video with a model of human cones,” ACM Transactions on Graphics (TOG), vol. 25, no. 4, pp. 1380–1399, 2006.

- [6] A. Benoit, D. Alleysson, J. Herault, and P. Le Callet, “Spatio-temporal tone mapping operator based on a retina model,” Computational Color Imaging, pp. 12–22, 2009.

- [7] E. Reinhard and K. Devlin, “Dynamic range reduction inspired by photoreceptor physiology,” IEEE Transactions on Visualization and Computer Graphics, vol. 11, no. 1, pp. 13–24, 2005.

- [8] R. Mantiuk, S. Daly, and L. Kerofsky, “Display adaptive tone mapping,” in ACM Transactions on Graphics (TOG), vol. 27, no. 3, p. 68. ACM, 2008.

- [9] K. Ma, H. Yeganeh, K. Zeng, and Z. Wang, “High dynamic range image tone mapping by optimizing tone mapped image quality index,” in 2014 IEEE International Conference on Multimedia and Expo (ICME), pp. 1–6. IEEE, 2014.

- [10] F. Durand and J. Dorsey, “Fast bilateral filtering for the display of high-dynamic-range images,” in ACM Transactions on Graphics (TOG), vol. 21, no. 3, pp. 257–266. ACM, 2002.

- [11] Z. Farbman, R. Fattal, D. Lischinski, and R. Szeliski, “Edge-preserving decompositions for multi-scale tone and detail manipulation,” in ACM Transactions on Graphics (TOG), vol. 27, no. 3, p. 67. ACM, 2008.

- [12] K. He, J. Sun, and X. Tang, “Guided image filtering,” in European Conference on Computer Vision, pp. 1–14. Springer, 2010.

- [13] B. Gu, W. Li, M. Zhu, and M. Wang, “Local edge-preserving multiscale decomposition for high dynamic range image tone mapping,” IEEE Transactions on Image Processing, vol. 22, no. 1, pp. 70–79, 2013.

- [14] S. Paris, S. W. Hasinoff, and J. Kautz, “Local Laplacian filters: edge-aware image processing with a Laplacian pyramid,” Communications of the ACM, vol. 58, no. 3, pp. 81–91, 2015.

- [15] J. Chen, S. Paris, and F. Durand, “Real-time edge-aware image processing with the bilateral grid,” ACM Transactions on Graphics (TOG), vol. 26, no. 3, p. 103, 2007.

- [16] A. O. Akyüz, “High dynamic range imaging pipeline on the GPU,” Journal of Real-Time Image Processing, vol. 10, no. 2, pp. 273–287, 2015.

- [17] R. Ureña, J. M. Gómez-López, C. Morillas, F. Pelayo et al., “Real-time tone mapping on GPU and FPGA,” EURASIP Journal on Image and Video Processing, vol. 2012, no. 1, p. 1, 2012.

- [18] G. W. Larson, H. Rushmeier, and C. Piatko, “A visibility matching tone reproduction operator for high dynamic range scenes,” IEEE Transactions on Visualization and Computer Graphics, vol. 3, no. 4, pp. 291–306, 1997.

- [19] P. Viola and M. J. Jones, “Robust real-time face detection,” International Journal of Computer Vision, vol. 57, no. 2, pp. 137–154, 2004.

- [20] F. Porikli, “Integral histogram: A fast way to extract histograms in Cartesian spaces,” in Computer Vision and Pattern Recognition, 2005. CVPR 2005. IEEE Computer Society Conference on, vol. 1, pp. 829–836. IEEE, 2005.

- [21] E. Reinhard, M. Stark, P. Shirley, and J. Ferwerda, “Photographic tone reproduction for digital images,” ACM Transactions on Graphics (TOG), vol. 21, no. 3, pp. 267–276, 2002.

- [22] P. E. Debevec and J. Malik, “Recovering high dynamic range radiance maps from photographs,” in ACM SIGGRAPH 2008 classes, p. 31. ACM, 2008.

- [23] R. Fattal, D. Lischinski, and M. Werman, “Gradient domain high dynamic range compression,” in ACM Transactions on Graphics (TOG), vol. 21, no. 3, pp. 249–256. ACM, 2002.

- [24] H. Yeganeh and Z. Wang, “Objective quality assessment of tone-mapped images,” IEEE Transactions on Image Processing, vol. 22, no. 2, pp. 657–667, 2013.

- [25] E. Reinhard, W. Heidrich, P. Debevec, S. Pattanaik, G. Ward, and K. Myszkowski, High dynamic range imaging: acquisition, display, and image-based lighting. Morgan Kaufmann, 2005.