Model-based Randomness Monitor for Stealthy Sensor Attacks

Abstract

Malicious attacks on modern autonomous cyber-physical systems (CPSs) can leverage information about the system dynamics and noise characteristics to hide while hijacking the system toward undesired states. Even when an attacker tries to hide within the system noise profile, hijacking the system requires altering sensor measurements in ways that contradict what the system's model predicts. To address this problem, in this paper we present a framework to detect non-randomness in sensor measurements of CPSs under sensor attacks. Specifically, we propose a run-time monitor that leverages two statistical tests, the Wilcoxon Signed-Rank test and the Serial Independence Runs test, to detect inconsistent patterns in the measurement data. For the proposed statistical tests we provide formal guarantees and bounds for attack detection. We validate our approach through simulations and experiments on an unmanned ground vehicle (UGV) under stealthy attacks and compare our framework with other anomaly detectors.

I Introduction

Modern autonomous systems are fitted with multiple sensors, computers, and networking devices that enable many applications with little or no human supervision. Autonomous navigation, transportation, surveillance, and task-oriented jobs are becoming more common and ready for deployment in real-world applications, especially in the automotive, industrial, and military domains. These enhancements in autonomy are possible thanks to the tight interaction between computation, sensing, communication, and actuation that characterizes cyber-physical systems (CPSs). However, these systems are vulnerable to cyber-attacks such as sensor spoofing, which can compromise their integrity and the safety of their surroundings. In the context of autonomous vehicle technologies, one of the most typical threats is hijacking, in which an adversary administers malicious attacks with the intent of leading the system to an undesired state. An example of this problem was demonstrated by the authors in [1], in which GPS data were spoofed to slowly drive a yacht off its intended route.

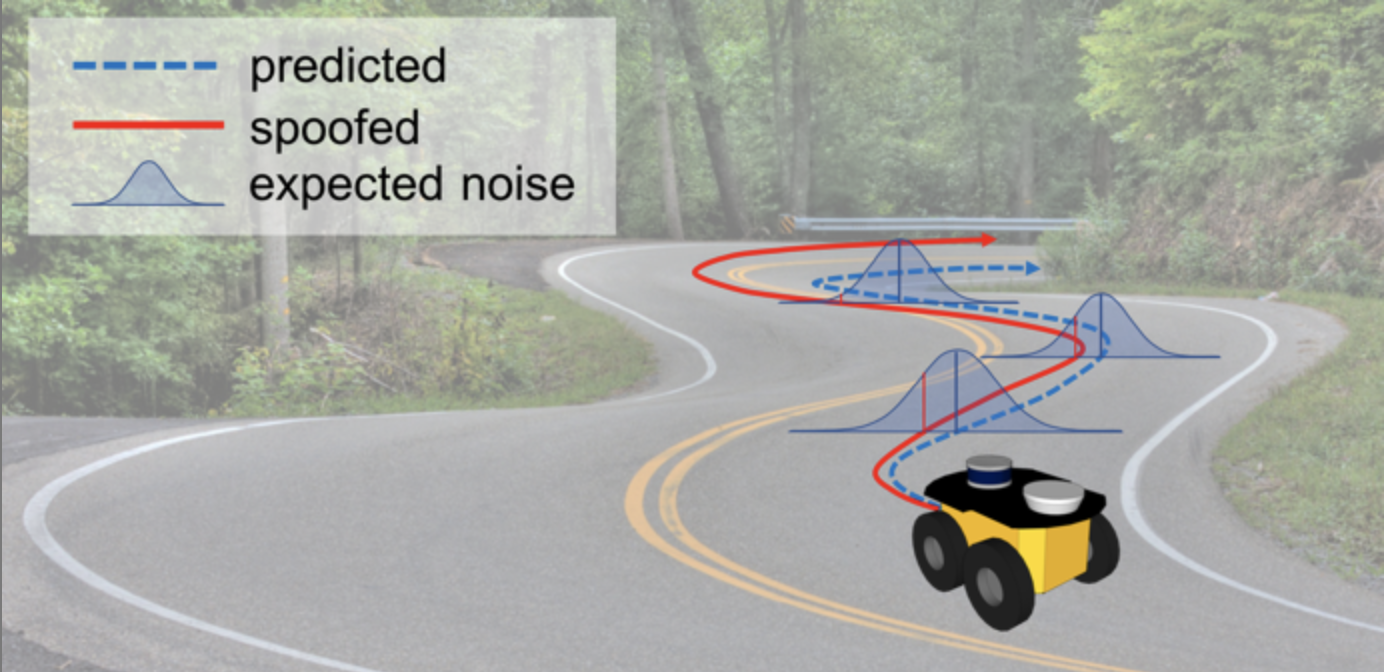

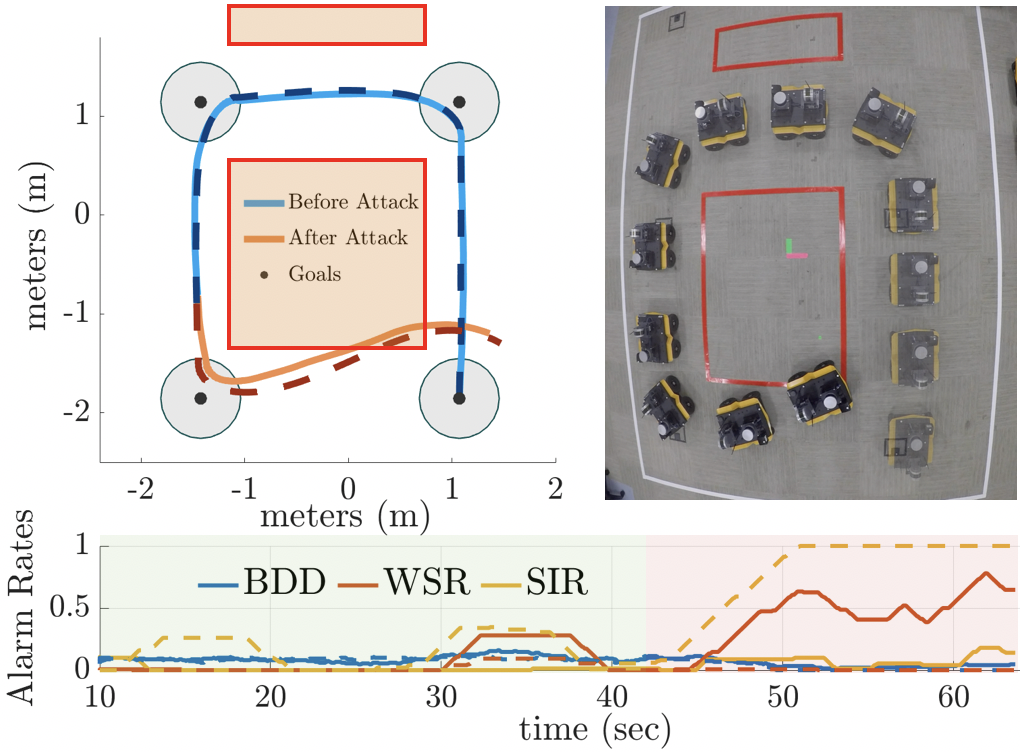

Typical autonomous robotic applications employ go-to-goal and trajectory-tracking behaviors, and if one or more on-board sensors are compromised, the system behavior can become unreliable. These vehicles typically have well-studied dynamics, and their sensors have specific expected behaviors according to their characterized noise models. An attacker who wants to perform a malicious hijacking can create non-random patterns or add small biases to the measurements to slowly push the system toward undesired states, for example creating the undesired deviations depicted in Fig. 1, all while remaining hidden within the noise profiles of the system and its sensors. Hence, for an attacker to hijack the system with stealthy attack signals, a violation of the expected random behavior of the sensor measurements must occur.

With these considerations in mind, in this work we leverage the known characteristics of the residual (the difference between sensor measurements and the state prediction) to build a run-time monitor that detects non-random behavior. To monitor randomness, the non-parametric Wilcoxon Signed-Rank [2] and Serial Independence Runs [3] statistical tests are applied to individual sensors to determine whether their measurements are received randomly. The Wilcoxon test indicates whether the residual is symmetric about its expected value, whereas the Serial Independence Runs test indicates whether the sequence of residuals arrives in a random order. Thus, the main objective of this work is to find attacks that are hidden within the noise but exhibit non-random behavior. Given the nature of the proposed non-parametric statistical tests, only the random behavior of the residual is considered here, leaving the magnitude bounds of the residual unmonitored. Several detectors providing magnitude bounds on attacks have already been studied in the literature; thus, in this work we also present a framework to combine existing approaches for magnitude-bound detection with the proposed randomness monitor. In doing so, our approach improves on state-of-the-art attack detection by adding an extra layer of checks.

I-A Related Work

This work builds on previous research considering deceptive cyber-attacks that hijack a system by injecting false data into sensor measurements while trying to remain undetected [4]. Many previous works use the residual for detection, since it indicates whether sensor measurements are healthy (uncompromised). Previous works characterizing the effects of stealthy sensor attacks on the Kalman filter can be found in [5, 6]. Similarly, the authors in [4, 7] discuss how stealthy, undetectable attacks can compromise closed-loop systems, degrading the state and the system dynamics.

Several techniques that analyze the residual for attack detection exist. Sequential Probability Ratio Testing (SPRT) [8] tests the sequence of incoming residuals one at a time using the log-likelihood function (LLF). The Cumulative Sum (CUSUM) procedure proposed in [9] and [10] leverages the known characteristics of the residual covariance and sequentially sums the residual error to detect changes in the mean of the distribution. Compound Scalar Testing (CST) [7] is a computationally light technique that uses the known residual covariance matrix to reduce the residual vector to a scalar value with a $\chi^2$ distribution. An improvement of CST in [11] adds a coding matrix, unknown to attackers, to the sensor outputs, and then uses an iterative optimization algorithm to solve for a transform matrix that detects stealthy attacks. Similar to our work, where monitors are placed on individual sensors, the authors in [12] propose a trust-based framework in which “side-channel” monitors assign each sensor a trustworthiness weight to determine whether it has been compromised. Other works have achieved attack resiliency by leveraging information from redundant sensing. In [13], the authors reconstruct the state estimate of stochastic systems by solving an optimization problem when fewer than half of the sensors are compromised. Different from these previous works, we build a framework that monitors sensor measurements for non-random behavior in order to find previously undetectable attacks.

The remainder of this work is organized as follows. In Section II we present the system and estimator models and the problem formulation, followed by the description of our Randomness Monitor framework in Section III. In Section IV we analyze worst-case stealthy attacks and characterize their effects on system performance. Finally, in Section V we demonstrate through simulations and experiments the performance of our framework augmented with boundary detectors, before drawing conclusions in Section VI.

II Preliminaries & Problem Formulation

In this work we consider autonomous systems whose dynamics can be described by a discrete-time linear time-invariant (LTI) system in the following form:

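$$ x_{k+1} = A x_k + B u_k + v_k, \qquad y_k = C x_k + \eta_k, \tag{1} $$

with $A \in \mathbb{R}^{n \times n}$ the state matrix, $B \in \mathbb{R}^{n \times l}$ the input matrix, and $C \in \mathbb{R}^{m \times n}$ the output matrix, with state vector $x_k \in \mathbb{R}^{n}$, system input $u_k \in \mathbb{R}^{l}$, output vector $y_k \in \mathbb{R}^{m}$ providing measurements from the $m$ sensors in the set $\mathcal{S} = \{1, \dots, m\}$, and sampling time instants $k \in \mathbb{N}$. The process and measurement noises $v_k \sim \mathcal{N}(0, Q)$ and $\eta_k \sim \mathcal{N}(0, R)$ are i.i.d. multivariate zero-mean Gaussian uncertainties with covariance matrices $Q$ and $R$, which are assumed static (time-invariant).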

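During operation, a standard Kalman Filter (KF) provides a state estimate in the form

$$ \hat{x}_{k+1} = A\hat{x}_k + B u_k + L_k\big(y_k - C\hat{x}_k\big), \tag{2} $$

where the Kalman gain matrix is

$$ L_k = A P_k C^\top\big(C P_k C^\top + R\big)^{-1}, \qquad P_{k+1} = \big(A - L_k C\big) P_k A^\top + Q. \tag{3} $$

We assume that the KF is at steady state, i.e., $P_k = P$ and $L_k = L$ with $P = \lim_{k\to\infty} P_k$. The estimation error of the KF is defined as $e_k = x_k - \hat{x}_k$, while its residual is given by

$$ r_k = y_k - C\hat{x}_k = C e_k + \eta_k. \tag{4} $$

The covariance of the residual (4) is defined as

$$ \Sigma = \mathbb{E}\big[r_k r_k^\top\big] = C P C^\top + R. \tag{5} $$

In the absence of sensor attacks, the residual $r_{k,i}$ of the $i$-th sensor follows a Gaussian distribution, where $\sigma_i^2$ is the $i$-th diagonal element of the residual covariance matrix $\Sigma$ in (5), such that

$$ r_{k,i} \sim \mathcal{N}\big(0, \sigma_i^2\big). \tag{6} $$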
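To make (3)-(6) concrete, the sketch below iterates the Riccati recursion in (3) until it settles, then reads off the steady-state gain $L$, the residual covariance $\Sigma$ in (5), and the per-sensor standard deviations $\sigma_i$ used in (6). It assumes NumPy and a hypothetical two-state, two-sensor model; the matrices and the helper name `steady_state_kf` are placeholders for illustration, not the UGV model of Section V.

```python
import numpy as np


def steady_state_kf(A, C, Q, R, tol=1e-12, max_iter=10_000):
    """Iterate the predictor-form Riccati recursion (3) to steady state.

    Returns the steady-state error covariance P, the Kalman gain L,
    and the residual covariance Sigma = C P C^T + R from (5).
    """
    P = np.eye(A.shape[0])  # any positive-definite initial guess
    for _ in range(max_iter):
        S = C @ P @ C.T + R                     # innovation covariance
        L = A @ P @ C.T @ np.linalg.inv(S)      # gain L_k in (3)
        P_next = (A - L @ C) @ P @ A.T + Q      # Riccati update in (3)
        converged = np.max(np.abs(P_next - P)) < tol
        P = P_next
        if converged:
            break
    Sigma = C @ P @ C.T + R
    L = A @ P @ C.T @ np.linalg.inv(Sigma)
    return P, L, Sigma


# Hypothetical double-integrator model with two sensors (illustration only).
dt = 0.1
A = np.array([[1.0, dt], [0.0, 1.0]])
C = np.eye(2)
Q = 0.01 * np.eye(2)
R = np.diag([0.04, 0.09])
P, L, Sigma = steady_state_kf(A, C, Q, R)
sigma = np.sqrt(np.diag(Sigma))  # per-sensor residual standard deviations in (6)
```

Because $C P C^\top$ is positive semidefinite, each $\sigma_i^2$ is at least the corresponding sensor noise variance in $R$.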

We describe the system output considering sensor attacks as

$$ y_k = C x_k + \eta_k + \delta_k, \tag{7} $$

where $\delta_k \in \mathbb{R}^m$ represents the sensor attack vector, so that under attack the residual (4) becomes $r_k = C e_k + \eta_k + \delta_k$. Since any sensor may be compromised, our proposed framework adds a monitor to each sensor that searches for non-random behavior in that sensor's measurement residual.

Definition 1

A sensor measurement $y_{k,i}$ is random if:

- a sequence of residuals $r_{k,i}$ over a time window occurs in an unpredictable, pattern-free manner;
- the residuals follow their expected distribution (6) over the window.

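Since we are considering sensor spoofing, an attack signal containing malicious data can disrupt randomness, causing measurements to display non-random behavior. Formally, the problem that we are interested in solving is to design a run-time monitor that detects, for each sensor $i \in \mathcal{S}$, non-random behavior of the residual $r_{k,i}$ caused by a sensor attack $\delta_k$ in (7), including attacks that remain hidden within the noise profile.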

III Randomness Monitoring Framework

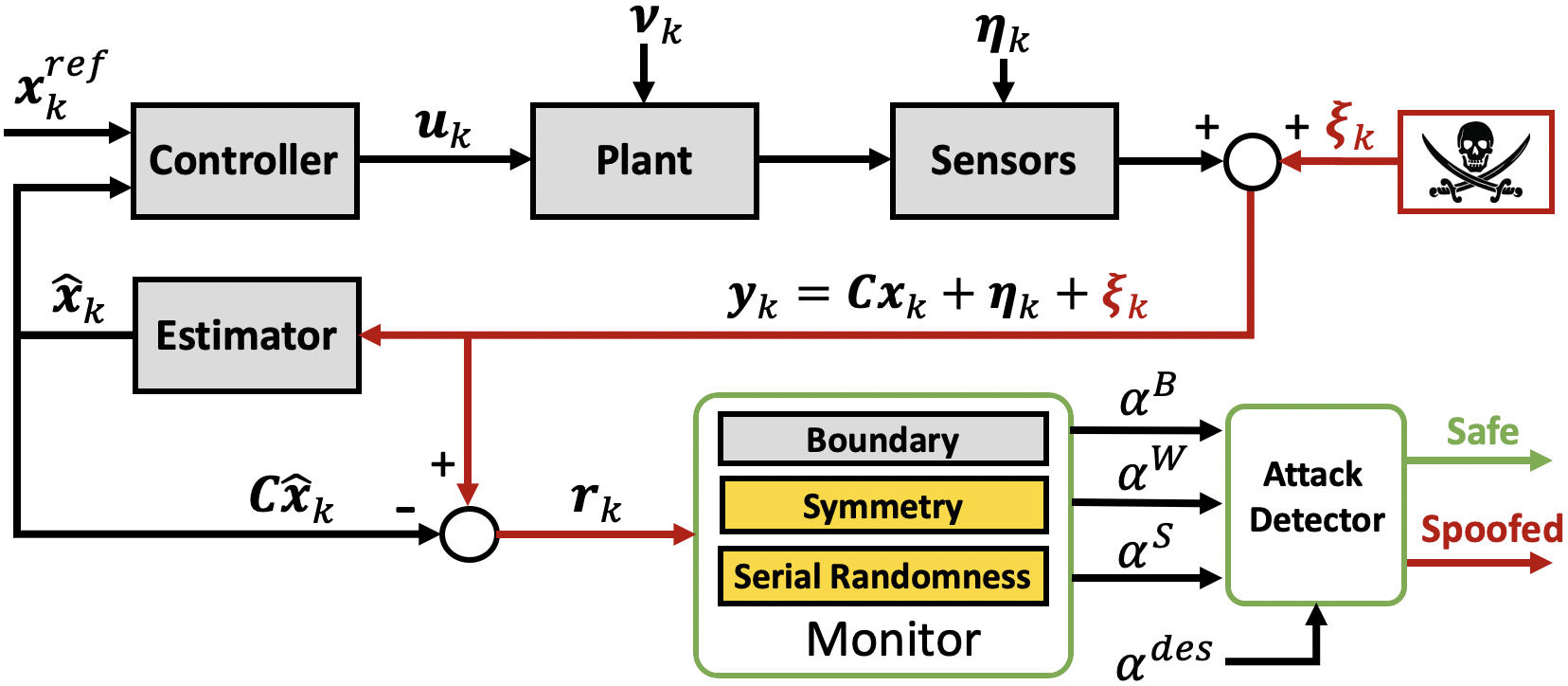

The overall cyber-physical system architecture including our Randomness Monitor framework is summarized in Fig. 2. The Randomness Monitor, augmented to any boundary detector providing magnitude bounds, is placed in the system feedback to monitor the residual sequence.

We introduce a framework that monitors the randomness of the residual sequence through two tests, and we provide tuning bounds for each test to achieve a desired false alarm rate. From (4) and (6), the residual should have a symmetric distribution centered at zero, and the sequence of residuals should arrive in a random order, with no structure or patterns. For example, a continuously alternating pattern of “negative” and “positive” values, or a pattern of only “negative” values, would clearly not be a random sequence.

Both tests operate online and raise an alarm when the residual does not satisfy the conditions of the test. A desired false alarm rate $\alpha$ is tuned for each test and each sensor, and in the absence of sensor attacks the observed alarm rate of each test should closely match this tuned value $\alpha$.

III-A Residual Symmetry Monitor

To monitor whether the residuals are symmetrically distributed around zero, we leverage the Wilcoxon Signed-Rank (WSR) test [2] as follows. A hypothesis test is formed with $\mathcal{H}_0$ for no attacks and $\mathcal{H}_1$ with attacks:

$$ \mathcal{H}_0:\ r_{k,i} \text{ is symmetrically distributed about } 0, \qquad \mathcal{H}_1:\ r_{k,i} \text{ is not symmetrically distributed about } 0. \tag{8} $$

A monitor is built to check whether the residual sequence over a sliding monitoring window of the previous $N$ steps is symmetric. We denote the residual sequences over the sliding window as $\mathbf{R}_k = [r_{k-N+1}, \dots, r_k]$, where the residual sequence of the $i$-th sensor is $\mathbf{r}_{k,i} = [r_{k-N+1,i}, \dots, r_{k,i}]$. Under $\mathcal{H}_0$, we would expect the numbers of positive and negative values of $\mathbf{r}_{k,i}$ over the monitoring window to be equal. Additionally, a symmetric distribution implies that the expected absolute magnitudes of the positive and negative residuals over a window of length $N$ are equal,

$$ \mathbb{E}\big[\,|r^{+}_{i}|\,\big] = \mathbb{E}\big[\,|r^{-}_{i}|\,\big], \tag{9} $$

where $\mathbb{E}[|r^{+}_{i}|]$ and $\mathbb{E}[|r^{-}_{i}|]$ denote the expected absolute magnitudes of the positive and negative values of the residual within the window for sensor $i$. In other words, we would expect the sum of the absolute values of the positive residuals to be approximately equal to that of the negative residuals. The WSR test takes both the sign and the magnitude of the residual into account to determine whether the conditions of $\mathcal{H}_0$ hold. Large differences in the residual signs or signed magnitudes indicate an asymmetric distribution, causing the test to reject the no-attack hypothesis and trigger an alarm.

To perform the WSR test at each time step $k$, we take the $N$ residuals of a given sensor over the monitoring window and rank their absolute values $|r_{j,i}|$, assigning rank $1$ to the smallest absolute value, rank $2$ to the second smallest, and so on up to rank $N$ for the largest. The ranks of the absolute values of the positive (i.e., $r_{j,i} > 0$) and negative (i.e., $r_{j,i} < 0$) residuals over the window are placed into the sets $\mathcal{R}^{+}_{k,i}$ and $\mathcal{R}^{-}_{k,i}$, respectively, at every time instant $k$.

Remark 1

Nonzero residuals with equal absolute values (tied for the same rank) each receive the average of the ranks that would otherwise have been assigned to them. Furthermore, residuals equal to $0$ are removed and $N$ is reduced accordingly.

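Next, we compute the sums of ranks for the positive and the negative residuals,

$$ T^{+}_{k,i} = \sum_{\rho \in \mathcal{R}^{+}_{k,i}} \rho, \qquad T^{-}_{k,i} = \sum_{\rho \in \mathcal{R}^{-}_{k,i}} \rho. \tag{10} $$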
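The ranking step and the rank sums in (10) can be sketched in plain Python for a single sensor's window. The function name `wsr_rank_sums` is hypothetical; zeros are dropped and ties receive average ranks as in Remark 1.

```python
def wsr_rank_sums(window):
    """Return (T_plus, T_minus, n) for one sensor's residual window, per (10).

    Exact zeros are dropped and tied |r| values receive the average of the
    ranks they would otherwise occupy (Remark 1).
    """
    values = [r for r in window if r != 0.0]                 # drop zeros
    order = sorted(range(len(values)), key=lambda j: abs(values[j]))
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        # extend `end` over the block of tied absolute values starting at `pos`
        end = pos
        while (end + 1 < len(order)
               and abs(values[order[end + 1]]) == abs(values[order[pos]])):
            end += 1
        avg_rank = (pos + 1 + end + 1) / 2.0                  # 1-based ranks
        for j in order[pos:end + 1]:
            ranks[j] = avg_rank
        pos = end + 1
    t_plus = sum(rk for rk, r in zip(ranks, values) if r > 0)
    t_minus = sum(rk for rk, r in zip(ranks, values) if r < 0)
    return t_plus, t_minus, len(values)
```

For the window `[0.5, -1.2, 0.0, 0.5, 2.0, -0.1]`, the zero is dropped, the two tied values of 0.5 share rank 2.5, and the function returns `(10.0, 5.0, 5)`. In every case $T^{+}_{k,i} + T^{-}_{k,i} = N(N+1)/2$, so either rank sum determines the other.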

Residuals with symmetric distributions have similar rank sums, i.e., $T^{+}_{k,i} \approx T^{-}_{k,i}$, whereas the rank sums of non-symmetric distributions differ, leading to a rejection of $\mathcal{H}_0$ in (8); we now describe how this decision is made. Assuming a sufficiently large window of size $N$ [14] (for smaller window lengths, exact tables of the probability distribution of the Wilcoxon Signed-Rank random variable must be used [14]), the Wilcoxon random variables $T^{+}_{k,i}$ and $T^{-}_{k,i}$ converge to a normal distribution (without attacks) as $N \to \infty$ and, once standardized, can be approximated by a standard normal distribution. The expected value and variance of the two rank sums $T^{+}_{k,i}$ and $T^{-}_{k,i}$, denoted $\mu_T$ and $\sigma_T^2$, are

$$ \mu_T = \frac{N(N+1)}{4}, \qquad \sigma_T^2 = \frac{N(N+1)(2N+1)}{24}. \tag{11} $$

The z-score of (10) for a given sensor is computed from $T_{k,i} = \min\big(T^{+}_{k,i}, T^{-}_{k,i}\big)$ as

$$ z^{W}_{k,i} = \frac{T_{k,i} - \mu_T}{\sigma_T}, \tag{12} $$

and the p-value used to determine whether to reject the null hypothesis (i.e., no attacks) is computed from (12) as

$$ p^{W}_{k,i} = 2\,\Phi\big(z^{W}_{k,i}\big), \tag{13} $$

where $\Phi(\cdot)$ is the cumulative distribution function of the standard normal distribution. When $p^{W}_{k,i}$ falls below the threshold $\alpha$, i.e., $p^{W}_{k,i} < \alpha$, we reject $\mathcal{H}_0$ in (8) and an alarm is triggered ($\zeta^{W}_{k,i} = 1$); otherwise $\zeta^{W}_{k,i} = 0$. In the absence of attacks, the alarm rate of the $i$-th sensor should be approximately equal to the desired false alarm rate $\alpha$. The alarm rate is computed over the sliding window of length $N$ as $\mathcal{A}^{W}_{k,i} = \frac{1}{N}\sum_{j=k-N+1}^{k} \zeta^{W}_{j,i}$. Conversely, an attack that affects the symmetry of the residual distribution triggers the alarm more frequently, elevating the alarm rate $\mathcal{A}^{W}_{k,i}$. When the alarm rate exceeds a user-defined alarm rate threshold, i.e., $\mathcal{A}^{W}_{k,i} > \bar{\mathcal{A}}_{i}$, the sensor is deemed compromised. In the following lemma we derive bounds on the WSR test variables (10) that satisfy a desired false alarm rate $\alpha$.
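The decision rule in (11)-(13) and the alarm rate can be sketched as follows, assuming the rank sums come from a routine such as the ranking step above and that $N$ is large enough for the normal approximation. The function names are hypothetical, and the variance in (11) is used without tie corrections.

```python
from math import sqrt
from statistics import NormalDist

_PHI = NormalDist()  # standard normal N(0, 1)


def wsr_pvalue(t_plus, t_minus, n):
    """Two-sided WSR p-value via the normal approximation (11)-(13)."""
    mu_t = n * (n + 1) / 4.0
    sigma_t = sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    # min(T+, T-) <= mu_t because T+ + T- = n(n+1)/2, hence z <= 0
    z = (min(t_plus, t_minus) - mu_t) / sigma_t
    return 2.0 * _PHI.cdf(z)


def wsr_alarm(t_plus, t_minus, n, alpha):
    """Alarm indicator zeta^W: 1 if p < alpha (reject H0), else 0."""
    return 1 if wsr_pvalue(t_plus, t_minus, n) < alpha else 0


def alarm_rate(alarms):
    """Fraction of alarms among the indicators in the sliding window."""
    return sum(alarms) / len(alarms)


# Balanced window (N = 20): T+ = T- = 105 gives p = 1, so no alarm.
# One-sided window: T+ = 210, T- = 0 gives p far below 0.05, so an alarm.
print(wsr_alarm(105, 105, 20, 0.05), wsr_alarm(210, 0, 20, 0.05))  # prints: 0 1
```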

Lemma 1

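Given the residual $\mathbf{r}_{k,i}$ of the $i$-th sensor over a monitoring window of $N$ residuals and a desired false alarm rate $\alpha$, an alarm is triggered by the WSR test when $T^{+}_{k,i} \in \mathcal{I}^{W}$ (equivalently, $T^{-}_{k,i} \in \mathcal{I}^{W}$) is not satisfied, where

$$ \mathcal{I}^{W} = \big[\,\mu_T - z_{\alpha/2}\,\sigma_T,\ \mu_T + z_{\alpha/2}\,\sigma_T\,\big], \qquad z_{\alpha/2} = \Phi^{-1}(1-\alpha/2). \tag{14} $$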

Proof:

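Since the Wilcoxon test statistic equals a rank sum in (10), we can rearrange (12) as $T_{k,i} = \mu_T + z^{W}_{k,i}\,\sigma_T$. Let $z_l$ be the lower critical value of $z^{W}_{k,i}$ for which the desired alarm rate $\alpha$ does not reject $\mathcal{H}_0$ in (8). The lower bound of $T_{k,i}$ must then satisfy

$$ \min\big(T^{+}_{k,i}, T^{-}_{k,i}\big) \ge \mu_T + z_l\,\sigma_T \tag{15} $$

to avoid triggering an alarm ($\zeta^{W}_{k,i} = 0$). Conversely, we want to show that if the lower bound in (15) holds, then the upper bound holds as well. Manipulating (12) again, this time for the maximum $\max(T^{+}_{k,i}, T^{-}_{k,i})$ with $z_u$ the upper critical value of $z^{W}_{k,i}$ for which the desired alarm rate does not reject $\mathcal{H}_0$ in (8), the upper bound is written as

$$ \max\big(T^{+}_{k,i}, T^{-}_{k,i}\big) \le \mu_T + z_u\,\sigma_T \tag{16} $$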

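to avoid triggering the alarm ($\zeta^{W}_{k,i} = 0$). When computing the critical z-score values from the standard normal distribution for a given desired alarm rate $\alpha$, it is easy to show that $z_l = -z_{\alpha/2}$ and $z_u = z_{\alpha/2}$, with $z_{\alpha/2} = \Phi^{-1}(1-\alpha/2)$, which gives the final bounds

$$ \mu_T - z_{\alpha/2}\,\sigma_T \le T^{\pm}_{k,i} \le \mu_T + z_{\alpha/2}\,\sigma_T, $$

satisfying the bounds in (14). Moreover, since $T^{+}_{k,i} + T^{-}_{k,i} = N(N+1)/2 = 2\mu_T$, the lower bound in (15) holds if and only if the upper bound in (16) holds. We conclude that if $T_{k,i}$ does not satisfy (15), then (14) is not satisfied and the alarm $\zeta^{W}_{k,i} = 1$ is triggered for a desired false alarm rate $\alpha$, which ends the proof. ∎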
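The bounds of Lemma 1 can be checked numerically. The sketch below (hypothetical helper `wsr_bounds`, Python standard library only) computes the interval in (14); for $N = 50$ and $\alpha = 0.05$ it gives roughly $[434.5, 840.5]$, and an integer rank sum inside this interval is exactly one whose two-sided normal-approximation p-value is at least $\alpha$.

```python
from math import sqrt
from statistics import NormalDist


def wsr_bounds(n, alpha):
    """Non-alarm interval for T+ (or T-) from Lemma 1, eq. (14)."""
    mu_t = n * (n + 1) / 4.0
    sigma_t = sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z_crit = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # z_{alpha/2}
    return mu_t - z_crit * sigma_t, mu_t + z_crit * sigma_t


# Example: N = 50 residuals in the window, alpha = 0.05.
lower, upper = wsr_bounds(50, 0.05)
print(f"no alarm while {lower:.1f} <= T+ <= {upper:.1f}")
```

Computing the interval once per window length avoids evaluating the normal CDF at every step: the monitor only compares the rank sum against two precomputed numbers.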

III-B Serial Randomness Monitor

The WSR test alone is not sufficient to test for randomness, since an attacker could manipulate measurements to create specific patterns that avoid detection by the WSR test. To test further, we determine whether the sequence of residuals is received in a random order by leveraging the Serial Independence Runs (SIR) test [3]. The SIR test examines the number of runs that occur over the sequence, where a “run” is a maximal set of consecutive residuals that are each greater than (or each less than) the previous value. A random sequence of residuals over a given window length should exhibit a specific expected number of runs: too many or too few runs would not satisfy random sequential behavior. A hypothesis test is formed with $\mathcal{H}_0$ for the absence of sensor attacks and $\mathcal{H}_1$ when attacks are present:

$$ \mathcal{H}_0:\ \mathbb{E}\big[R_{k,i}\big] = \mu_R, \qquad \mathcal{H}_1:\ \mathbb{E}\big[R_{k,i}\big] \neq \mu_R, \tag{17} $$

where $R_{k,i}$ is the number of observed runs and $\mu_R$ is the expected number of runs of a randomly behaving sequence, given in (20). First, we take the difference of the residuals at time instants $j$ and $j-1$ over the window,

$$ \Delta r_{j,i} = r_{j,i} - r_{j-1,i}, \qquad j \in \{k-N+2, \dots, k\}, \tag{18} $$

where the index set is the monitoring window shortened by one by removing the oldest time instant. This yields $M = N - 1$ residual differences.

Remark 2

A residual difference $\Delta r_{j,i} = 0$ from (18) is not considered in the test, and the number of residual differences is reduced accordingly, i.e., $M \leftarrow M - 1$.

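From the sequence of residual differences (18), we take the sign of each residual difference within the window,

$$ s_{j,i} = \operatorname{sgn}(\Delta r_{j,i}) = \begin{cases} +1, & \Delta r_{j,i} > 0,\\ -1, & \Delta r_{j,i} < 0, \end{cases} \tag{19} $$

forming a sequence of positive and negative signs. The number of runs $R_{k,i}$, i.e., the number of maximal blocks of equal consecutive signs, is observed over this sequence of residual differences. The expected mean and variance of the number of runs [3] for $M$ residual differences are computed by

$$ \mu_R = \frac{2M+1}{3}, \qquad \sigma_R^2 = \frac{16M-13}{90}. \tag{20} $$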
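The construction of (18)-(20) for one sensor can be sketched as below. It is a minimal illustration that applies the zero-difference rule of Remark 2 by exact float comparison; `count_runs` is a hypothetical name, and (20) is taken here as the standard moments of the runs-up-and-down statistic expressed in the number of differences $M$.

```python
def count_runs(window):
    """Difference-sign runs for one sensor's residual window, per (18)-(20).

    Returns (runs, m, mu_r, var_r): the observed runs R, the number of nonzero
    differences M, and the mean/variance of R under H0.
    """
    diffs = [b - a for a, b in zip(window[:-1], window[1:])]  # (18)
    # (19) with Remark 2: zero differences are dropped before taking signs
    signs = [1 if d > 0 else -1 for d in diffs if d != 0.0]
    m = len(signs)
    if m == 0:
        return 0, 0, float("nan"), float("nan")
    # a run is a maximal block of consecutive equal signs
    runs = 1 + sum(1 for s0, s1 in zip(signs[:-1], signs[1:]) if s0 != s1)
    mu_r = (2 * m + 1) / 3.0          # (20), mean
    var_r = (16 * m - 13) / 90.0      # (20), variance
    return runs, m, mu_r, var_r


# Differences: +, -, 0 (dropped), +, -  ->  4 signs forming 4 runs
print(count_runs([0.1, 0.4, 0.2, 0.2, 0.5, -0.3])[:2])  # prints: (4, 4)
```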

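Assuming large data sets (i.e., a sufficiently long window $N$) [3], the distribution of $R_{k,i}$ converges to a normal distribution as $N \to \infty$ and, once standardized, can be approximated by the zero-mean, unit-variance standard normal distribution $\mathcal{N}(0,1)$. From the number of observed runs $R_{k,i}$ and the number of residual differences $M$, we compute the z-score test statistic for serial independence

$$ z^{S}_{k,i} = \frac{R_{k,i} - \mu_R}{\sigma_R}. \tag{21} $$

Using the z-score from (21), we compute the p-value of the observed signed residual differences by

$$ p^{S}_{k,i} = 2\big(1 - \Phi\big(|z^{S}_{k,i}|\big)\big), \tag{22} $$

with $\Phi(\cdot)$ the standard normal cumulative distribution function. When $p^{S}_{k,i} < \alpha$ is satisfied, where $\alpha$ denotes the threshold, we reject the null hypothesis $\mathcal{H}_0$ in (17) and an alarm is triggered ($\zeta^{S}_{k,i} = 1$). In the absence of attacks, the alarm rate is approximately the same as the desired false alarm rate $\alpha$. The alarm rate over the sliding window is computed by $\mathcal{A}^{S}_{k,i} = \frac{1}{N}\sum_{j=k-N+1}^{k} \zeta^{S}_{j,i}$. An alarm rate exceeding a user-defined alarm rate threshold, i.e., $\mathcal{A}^{S}_{k,i} > \bar{\mathcal{A}}_{i}$, signifies that the sensor is compromised.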
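A minimal sketch of (21)-(22) and the resulting alarm, using only the Python standard library; the function names, including the helper `pattern_runs` that counts the runs of a repeated sign pattern, are hypothetical. With a window of $N = 30$ residuals ($M = 29$ differences), a repeating {+, +, +, -} sign pattern, similar to the Attack #2 pattern of Section V, produces 15 runs and is flagged at $\alpha = 0.05$, whereas 20 runs are not.

```python
from math import sqrt
from statistics import NormalDist


def sir_pvalue(runs, m):
    """Two-sided p-value for the observed number of runs, per (20)-(22)."""
    mu_r = (2 * m + 1) / 3.0
    sigma_r = sqrt((16 * m - 13) / 90.0)
    z = (runs - mu_r) / sigma_r                       # (21)
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))     # (22)


def sir_alarm(runs, m, alpha):
    """Alarm indicator zeta^S: 1 if p < alpha (reject H0), else 0."""
    return 1 if sir_pvalue(runs, m) < alpha else 0


def pattern_runs(pattern, m):
    """Runs produced by repeating a sign pattern such as "+++-" over m signs."""
    signs = [pattern[j % len(pattern)] for j in range(m)]
    return 1 + sum(1 for a, b in zip(signs[:-1], signs[1:]) if a != b)


m = 29                                  # window of N = 30 residuals
r_attack = pattern_runs("+++-", m)      # 15 runs
print(r_attack, sir_alarm(r_attack, m, 0.05), sir_alarm(20, m, 0.05))  # prints: 15 1 0
```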

Remark 3

A special case that triggers an alarm ($\zeta^{S}_{k,i} = 1$) is the one addressed in Remark 2, i.e., when two consecutive residuals are equal. Since $r_{k,i}$ follows the continuous distribution (6), the probability of two consecutive residuals having the same value is equal to $0$.

The following lemma provides bounds on the number of runs $R_{k,i}$ in the SIR test that satisfy a desired false alarm rate $\alpha$.

Lemma 2

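Given the residual differences $\Delta r_{j,i}$ of the $i$-th sensor over a window and a desired false alarm rate $\alpha$, an alarm is triggered by the SIR test when $R_{k,i} \in \mathcal{I}^{S}$ is not satisfied, where

$$ \mathcal{I}^{S} = \big[\,\mu_R - z_{\alpha/2}\,\sigma_R,\ \mu_R + z_{\alpha/2}\,\sigma_R\,\big], \qquad z_{\alpha/2} = \Phi^{-1}(1-\alpha/2). \tag{23} $$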

Proof:

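With $R_{k,i}$ observed runs within a window of $M$ residual differences, we can rearrange (21) as $R_{k,i} = \mu_R + z^{S}_{k,i}\,\sigma_R$. Since $p^{S}_{k,i} \ge \alpha$ in (22) holds if and only if $|z^{S}_{k,i}| \le z_{\alpha/2}$, the bounds that do not reject $\mathcal{H}_0$ in (17) for a desired false alarm rate $\alpha$ are

$$ -z_{\alpha/2} \le \frac{R_{k,i} - \mu_R}{\sigma_R} \le z_{\alpha/2}. \tag{24} $$

From (24) we finally obtain the bounds on $R_{k,i}$ in (23) for alarm triggering at a desired false alarm rate $\alpha$. ∎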
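Lemma 2 turns the SIR test into a simple interval check on an integer. The sketch below (hypothetical helper `sir_bounds`, standard library only) computes the interval in (23); for $M = 29$ differences and $\alpha = 0.05$, only 16 to 24 runs avoid an alarm.

```python
from math import sqrt
from statistics import NormalDist


def sir_bounds(m, alpha):
    """Non-alarm interval for the number of runs, Lemma 2, eq. (23)."""
    mu_r = (2 * m + 1) / 3.0
    sigma_r = sqrt((16 * m - 13) / 90.0)
    z_crit = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # z_{alpha/2}
    return mu_r - z_crit * sigma_r, mu_r + z_crit * sigma_r


# Example: window of N = 30 residuals, i.e. M = 29 residual differences.
lo_r, hi_r = sir_bounds(29, 0.05)
allowed = [r for r in range(1, 30) if lo_r <= r <= hi_r]  # runs with no alarm
print(allowed)  # prints: [16, 17, 18, 19, 20, 21, 22, 23, 24]
```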

IV Stealthy Attack Analysis

This section analyzes the advantages of augmenting well-known boundary (bad-data) attack detectors with the proposed randomness monitoring framework. To this end, we first introduce two well-known anomaly (boundary) detectors, the Bad-Data [4] and Cumulative Sum [9] detectors, and then analyze the effects of stealthy attacks on a system with and without our Randomness Monitor.

IV-A Boundary Detectors

To show that our framework can easily be augmented with any detector that provides magnitude boundaries, we consider two different boundary detectors found in the CPS security literature. Both boundary detectors discussed in this section use the absolute value of the residual (4) for attack detection. Consequently, in the absence of attacks (i.e., $\delta_k = 0$), $|r_{k,i}|$ follows a half-normal distribution [15] with density

$$ f_{|r_{k,i}|}(x) = \frac{\sqrt{2}}{\sigma_i\sqrt{\pi}}\exp\!\Big(-\frac{x^2}{2\sigma_i^2}\Big), \qquad x \ge 0, \tag{25} $$

where $\sigma_i^2$ is the $i$-th diagonal element of $\Sigma$ in (5).

The first detector that we consider is the Bad-Data Detector (BDD) [4], a benchmark attack detector that finds anomalies in sensor measurements by raising an alarm when the residual error exceeds a threshold. As with our detection framework in Section III, the BDD can be tuned for a desired false alarm rate $\alpha$. Considering the residual in (4), the BDD procedure for each sensor is as follows:

Bad-Data Detector Procedure

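$$ \zeta^{B}_{k,i} = \begin{cases} 1, & |r_{k,i}| > \tau_i,\\ 0, & \text{otherwise}. \end{cases} \tag{26} $$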

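Assuming the system is not under attack, the BDD threshold $\tau_i$ in (26) for a desired false alarm rate $\alpha$ is given by $\tau_i = \sqrt{2}\,\sigma_i\,\operatorname{erf}^{-1}(1-\alpha)$, where $\operatorname{erf}^{-1}(\cdot)$ is the inverse error function, resulting in $\Pr\big(|r_{k,i}| > \tau_i\big) = \alpha$.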
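A minimal sketch of the BDD tuning and procedure (26), using only the Python standard library. It relies on the identity $\sqrt{2}\,\operatorname{erf}^{-1}(1-\alpha) = \Phi^{-1}(1-\alpha/2)$, since the standard library exposes the normal quantile rather than the inverse error function; the function names and the value $\sigma_i = 0.2$ are hypothetical.

```python
from statistics import NormalDist


def bdd_threshold(sigma_i, alpha):
    """BDD threshold tau_i such that Pr(|r| > tau_i) = alpha under (6)."""
    # sqrt(2) * erfinv(1 - alpha) equals Phi^{-1}(1 - alpha/2), which the
    # standard library provides through NormalDist.inv_cdf.
    return sigma_i * NormalDist().inv_cdf(1.0 - alpha / 2.0)


def bdd_alarm(r, tau):
    """Alarm indicator of the BDD procedure (26)."""
    return 1 if abs(r) > tau else 0


tau = bdd_threshold(sigma_i=0.2, alpha=0.05)   # about 0.392
print(bdd_alarm(0.5, tau), bdd_alarm(-0.3, tau))  # prints: 1 0
```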

The second well-known boundary detector that we consider is the CUmulative SUM (CUSUM) detector, which has been shown to have tighter bounds on attack detection than the BDD [9]. The CUSUM uses the absolute value of the residual in its detection procedure, which is given by

CUSUM Detector Procedure

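$$ \begin{cases} S_{k,i} = \max\big(0,\ S_{k-1,i} + |r_{k,i}| - b_i\big), & \text{if } S_{k-1,i} \le \tau_i,\\ S_{k,i} = 0,\ \ \zeta^{C}_{k-1,i} = 1, & \text{if } S_{k-1,i} > \tau_i, \end{cases} \qquad S_{0,i} = 0. \tag{27} $$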

The working principle of this detector is to accumulate the residual sequence, offset by a bias $b_i > 0$, in the test variable $S_{k,i}$, triggering an alarm when $S_{k,i}$ surpasses the threshold $\tau_i$. A detailed explanation of how to tune the threshold $\tau_i$ given a bias $b_i$ for a desired false alarm rate can be found in [9].
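The CUSUM recursion (27) for a single sensor can be sketched as below. The function name and the numbers are hypothetical, and the alarm is recorded at the step where $S_{k,i}$ first exceeds $\tau_i$, with the reset happening at the following step as in (27).

```python
def run_cusum(residuals, b, tau):
    """CUSUM procedure (27) for one sensor.

    Returns (S, alarms): alarms[k] = 1 marks the step at which S_k exceeded
    tau; S is then reset to 0 at the following step.
    """
    s = 0.0
    history, alarms = [], []
    for r in residuals:
        if s > tau:
            s = 0.0                        # reset because S_{k-1} > tau
        else:
            s = max(0.0, s + abs(r) - b)   # accumulate |r_k| - b
        history.append(s)
        alarms.append(1 if s > tau else 0)
    return history, alarms


S, alarms = run_cusum([0.3, -1.0, 1.5, -1.2, 0.1], b=0.5, tau=2.0)
print(alarms)  # prints: [0, 0, 0, 1, 0]
```

Here $S$ grows to about 2.2 at the fourth step, which exceeds $\tau_i = 2$, and is reset to zero at the fifth step.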

IV-B State Deviation under Worst-case Stealthy Attacks

We consider the reference tracking feedback controller

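$$ u_k = -K\hat{x}_k + G\,x^{\mathrm{ref}}_k, \tag{28} $$

where $K$ is the state-feedback control gain matrix, $G$ is a reference gain for output tracking, $x^{\mathrm{ref}}_k$ is the reference state, and $\hat{x}_k$ is the KF state estimate from (2)-(3). Assuming $(A, B)$ is stabilizable and choosing a suitable $K$ such that $A - BK$ is stable (i.e., $\rho(A - BK) < 1$, where $\rho(\cdot)$ is the spectral radius), the closed-loop system can be written in terms of the KF estimation error $e_k$ as

$$ \begin{bmatrix} x_{k+1}\\ e_{k+1} \end{bmatrix} = \begin{bmatrix} A - BK & BK\\ 0 & A - LC \end{bmatrix} \begin{bmatrix} x_k\\ e_k \end{bmatrix} + \begin{bmatrix} BG\\ 0 \end{bmatrix} x^{\mathrm{ref}}_k + \begin{bmatrix} v_k\\ v_k - L\eta_k \end{bmatrix} - \begin{bmatrix} 0\\ L \end{bmatrix} \delta_k. \tag{29} $$

As an attacker injects signals into the measurements (i.e., $\delta_k \neq 0$), the system dynamics are indirectly affected via the interconnection term $BK e_k$ from the estimation error dynamics.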
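The block structure of (29) can be checked numerically. The sketch below assumes NumPy, hypothetical gains $K$ and $L$ for a toy double integrator (not the paper's UGV design), and hypothetical helper names. It builds the stacked matrix, confirms that its spectral radius equals the larger of $\rho(A-BK)$ and $\rho(A-LC)$, and computes the mean steady state under a constant attack bias with noise and reference omitted.

```python
import numpy as np


def closed_loop(A, B, C, K, L):
    """Matrices of the closed loop (29) for the stacked state [x; e]."""
    n, m = A.shape[0], C.shape[0]
    A_cl = np.block([[A - B @ K, B @ K],
                     [np.zeros((n, n)), A - L @ C]])
    B_delta = np.vstack([np.zeros((n, m)), -L])  # attack enters via e only
    return A_cl, B_delta


def spectral_radius(M):
    return float(np.max(np.abs(np.linalg.eigvals(M))))


# Hypothetical example (placeholder gains, not a tuned design).
dt = 0.1
A = np.array([[1.0, dt], [0.0, 1.0]])
B = np.array([[0.5 * dt ** 2], [dt]])
C = np.eye(2)
K = np.array([[10.0, 5.0]])
L = 0.5 * np.eye(2)
A_cl, B_delta = closed_loop(A, B, C, K, L)

# Mean steady state under a constant bias delta_bar (noise and reference
# omitted): E[xi] = (I - A_cl)^{-1} B_delta delta_bar
delta_bar = np.array([0.1, 0.0])
xi_ss = np.linalg.solve(np.eye(4) - A_cl, B_delta @ delta_bar)
```

With these placeholder values, a constant bias of 0.1 on the first sensor gives a mean estimation error of $-0.1$ on the first state and shifts the mean true first state to $-0.1$ while the estimate is regulated to zero, which illustrates the indirect effect through $BK e_k$.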

In the remainder of this section we describe the maximum damage that can occur due to worst-case stealthy sensor attacks. We assume the attacker has perfect knowledge of the system dynamics, detection procedures, and state estimation. The objective of the attacker is to cause maximum damage to the system state by injecting attack signals into the measurements while remaining undetected. With only the BDD implemented, the effect of a worst-case attack that does not trigger an alarm can be derived from (4) and (26) with the sustained attack signal

$$ \delta_{k,i} = -C_i e_k - \eta_{k,i} + \tau_i, \tag{30} $$

where $C_i$ is the $i$-th row of $C$. This attack holds the attacked residual at $r_{k,i} = C_i e_k + \eta_{k,i} + \delta_{k,i} = \tau_i$, so the BDD alarm is never triggered.

Now considering CUSUM as a stand-alone detector, an adversary wants to avoid attack vectors that drive the test variable $S_{k,i}$ above the threshold $\tau_i$, which would trigger an alarm and reset (27) through the branch $S_{k-1,i} > \tau_i$. For maximum effect on the state deviation, the attacker saturates the CUSUM test statistic such that $S_{k,i} = \tau_i$, which produces no alarms. We define saturation as follows:

Definition 2

Saturation of a boundary detector is defined as the maximum allowable attack signal to force the residual to, but without exceeding, the detector threshold.

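Beginning at a time $k^{*}$, an attacker immediately saturates $S_{k,i}$ with the attack signal

$$ \delta_{k^{*},i} = -C_i e_{k^{*}} - \eta_{k^{*},i} + \tau_i + b_i - S_{k^{*}-1,i}, \tag{31} $$

where $C_i$ is the $i$-th row of $C$, followed by

$$ \delta_{k,i} = -C_i e_k - \eta_{k,i} + b_i \tag{32} $$

for all future time instants $k > k^{*}$, which holds $S_{k,i}$ at the threshold $\tau_i$.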
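The effect of (31)-(32) on the CUSUM test variable can be sketched at the residual level: the attack makes $|r_{k^{*},i}| = \tau_i + b_i - S_{k^{*}-1,i}$ and then $|r_{k,i}| = b_i$. The values below are hypothetical and chosen as exact binary fractions so that the floating-point trace lands exactly on $\tau_i$; `cusum_trace` is a hypothetical helper.

```python
def cusum_trace(abs_residuals, b, tau):
    """CUSUM test variable S_k from (27) for a sequence of |r_k| values."""
    s, trace = 0.0, []
    for r in abs_residuals:
        s = 0.0 if s > tau else max(0.0, s + r - b)  # reset after an alarm
        trace.append(s)
    return trace


b, tau = 0.5, 2.0
pre = [0.25, 0.875, 0.5]                   # hypothetical attack-free |r|
s_before = cusum_trace(pre, b, tau)[-1]    # S_{k*-1} = 0.375
# (31): one step that brings S exactly to tau, then (32): |r| = b holds it there
attack = [tau + b - s_before] + [b] * 20
trace = cusum_trace(pre + attack, b, tau)
print(trace[len(pre):len(pre) + 3])  # prints: [2.0, 2.0, 2.0]
```

Because the alarm condition in (27) is a strict inequality, holding $S_{k,i}$ exactly at $\tau_i$ never raises an alarm, while the residual stays on one side of zero for the entire attack.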

With the Randomness Monitor augmented with either the BDD or the CUSUM, an attacker can no longer hold the attack sequence on one side as in attacks (30)-(32). Rather, to avoid triggering alarms in both the WSR and SIR tests, the attacker is forced to create an attack sequence that alternates the residual signs. The most effective attack for maximum state deviation against our augmented framework is to saturate the boundary detector as often as possible, while giving the remaining attack signals the opposite sign with respect to the saturating attacks, forcing the residual to be as close to zero as possible.
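To illustrate why holding the residual on one side no longer works, the sketch below feeds two hypothetical residual windows to compact re-implementations of both tests (Python standard library only; the function names are hypothetical, and the WSR variance omits tie corrections). A window held at $+b$, as under (32), trips the WSR test and, through Remark 3, the SIR test. A strictly alternating window of equal magnitude passes the WSR test but is still flagged by the SIR test, so in this sketch the attacker must also avoid a regular ordering.

```python
from math import sqrt
from statistics import NormalDist

_PHI = NormalDist()


def wsr_p(window):
    """WSR p-value with average ranks for ties (no tie variance correction)."""
    vals = [r for r in window if r != 0.0]
    n = len(vals)
    positions = {}
    for idx, mag in enumerate(sorted(abs(r) for r in vals), start=1):
        positions.setdefault(mag, []).append(idx)
    avg = {mag: sum(ix) / len(ix) for mag, ix in positions.items()}
    t_minus = sum(avg[abs(r)] for r in vals if r < 0)
    t_plus = n * (n + 1) / 2.0 - t_minus
    mu, sd = n * (n + 1) / 4.0, sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    return 2.0 * _PHI.cdf((min(t_plus, t_minus) - mu) / sd)


def sir_alarm(window, alpha):
    """SIR alarm; equal consecutive residuals alarm directly (Remark 3)."""
    diffs = [b - a for a, b in zip(window[:-1], window[1:])]
    if any(d == 0.0 for d in diffs):
        return 1
    m = len(diffs)
    runs = 1 + sum(1 for d0, d1 in zip(diffs[:-1], diffs[1:]) if (d0 > 0) != (d1 > 0))
    mu, sd = (2 * m + 1) / 3.0, sqrt((16 * m - 13) / 90.0)
    return 1 if 2.0 * (1.0 - _PHI.cdf(abs(runs - mu) / sd)) < alpha else 0


alpha, b = 0.05, 0.5
one_sided = [b] * 25          # residual held at +b, as under (32)
alternating = [b, -b] * 12    # same magnitude, strictly alternating sign
print(wsr_p(one_sided) < alpha, sir_alarm(one_sided, alpha))      # prints: True 1
print(wsr_p(alternating) < alpha, sir_alarm(alternating, alpha))  # prints: False 1
```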

From the WSR test, given a monitoring window , the minimum number of non-saturating attack signals to not trigger an alarm is

| (33) |

in which and is the set of all as introduced in Section III-A. From (33), we can then find the maximum number of saturating attack signals by .

Proposition 1

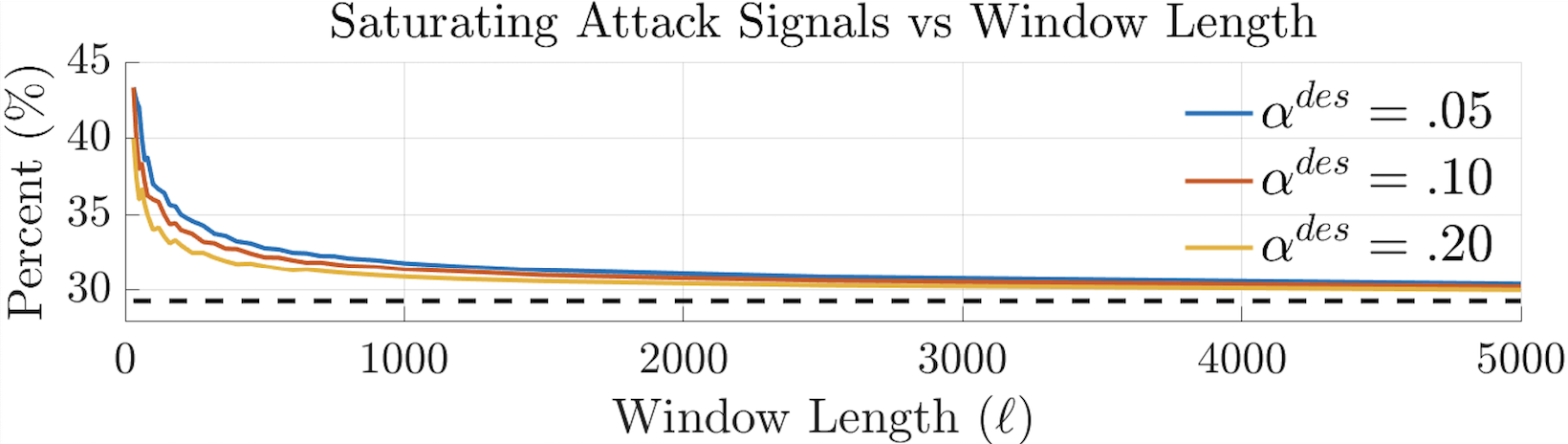

The maximum allowable saturating attack signal converges to for any as shown by the dashed black line in Fig 3.

So far, we have discussed worst-case attack sequences that saturate the test variable (in this paper, of the BDD and CUSUM) to maximize the effect of the attack. However, as noted in Remark 3 in Section III-B, a special case for the SIR test is that two consecutive residuals of the same value trigger an alarm ($\zeta^{S}_{k,i} = 1$). To work around this issue, a stealthy attacker with perfect knowledge of the SIR test can add a small, uniformly distributed random number to the attack signal, denoted by $\epsilon_{k,i}$, where $\epsilon_{k,i}$ is infinitesimally small. Thus, the worst-case scenario with the Randomness Monitor augmented to the BDD follows

| (34) |

in order to not trigger an alarm. Similarly, but with the CUSUM detector, an undetectable attack sequence follows

| (35) |

Given the alternating signed sequence of residuals over the monitoring window, the expected value of under worst-case scenario stealthy attacks is denoted as

| (36) |

With our framework augmented to the BDD, the expected value of the residual sequence is described as and the expectation of the closed-loop system (29) under attack (34) results in

| (37) |

Note, in (37), the reference signal term from (29) has been removed as we are interested in the expected state deviation under an attack. It is clear that if and then the expectation of the estimation error for destabilized states diverge to infinity as (depending on algebraic properties of ), indirectly causing these states within to also diverge unbounded.

Lemma 3

Proof:

Similarly, with the Randomness Monitor augmented to CUSUM, the expected closed-loop system evolution under attack sequence (35) is described by

| (40) |

where is the expected value of the residual sequence vector for CUSUM in (36).

Lemma 4

Proof:

The proof is omitted here due to space constraints but follows the proof for Lemma 3. ∎

V Results

The proposed Randomness Monitor framework was validated in simulations and experiments and compared with the state-of-the-art detection techniques introduced in Section IV-A. The case study presented in this paper is the autonomous waypoint navigation of a skid-steering differential-drive UGV with the following linearized model [16]

| (42) |

where $v$ is the velocity, $\theta$ is the vehicle’s heading angle, and $\omega$ is its angular velocity, forming the state vector $x = [v,\ \theta,\ \omega]^\top$. $F_L$ and $F_R$ describe the left and right input forces from the wheels, $w$ is the vehicle width, while $B_r$ and $B_l$ are resistances due to the wheels rolling and turning, respectively. The continuous-time model (42) is discretized with a fixed sampling rate to obtain a model of the form (1).

In both simulation and experiment we perform two different attack sequences: Attack #1, a stealthy attack sequence that concentrates the residual distribution around a non-zero mean with a smaller variance, and Attack #2, which creates the signed pattern sequence {+, +, +, -} of residual differences $\Delta r_{k,i}$. Both attacks are chosen so as not to increase the boundary detector alarm rate.

V-A Simulations

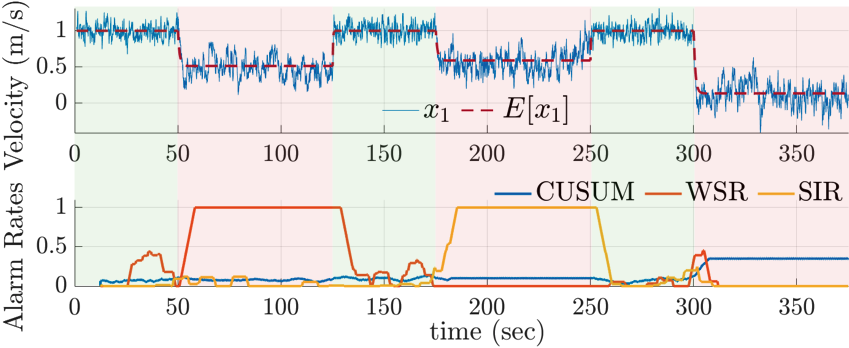

Considering the UGV system model (42) in our case study, we show the effect of stealthy attacks on the velocity sensor, which measures the state $v$, for a fixed monitoring window length $N$. Table I gives the resulting alarm rates when our framework is augmented to the boundary detectors (BDD and CUSUM), with all detectors tuned for given desired false alarm rates; separate simulations show the alarm rates for No Attack, Attack #1, and Attack #2. As expected, with no attacks present, all alarm rates converge approximately to the desired false alarm rate. Under Attack #1, only the WSR alarm rate increases; similarly, the Attack #2 pattern increases only the SIR alarm rate. We note that the window length affects the monitor's behavior: short windows respond faster, while longer windows react more slowly but can detect more hidden attacks exhibiting non-random behavior. Fig. 4 shows attacks on the velocity sensor, with our detectors tuned for a desired false alarm rate and compared with the CUSUM boundary detector. Attack #1 occurs first and triggers the WSR test, Attack #2 follows and triggers the SIR test, and finally a third attack is presented that satisfies the bounds of both randomness tests but violates the CUSUM test. The velocity is reduced as expected according to (29) while the vehicle experiences the effects of each attack.

![Table I: alarm rates in simulation](https://cdn.awesomepapers.org/papers/93904162-8c0b-474c-9ebc-82a2429240a9/Table_sim3.png)

V-B Experiments

In this section we present a case study of a UGV performing waypoint navigation under stealthy sensor attacks. The UGV travels to a series of goal positions at a desired cruise velocity while avoiding a restricted area and experiencing the same class of attacks as in Section V-A. This time, the IMU sensor that measures the heading angle $\theta$ is spoofed, and our Randomness Monitor is augmented with the BDD. Fig. 5 shows the UGV position while traveling to the four goal points. Under both attacks the vehicle enters the restricted area (marked by red tape), while the boundary detector (BDD) does not detect the attack in either case. As expected, the WSR test alarm rate increases under Attack #1 (solid line), and the SIR test alarm rate increases under Attack #2 (dashed line).

VI Conclusions & Future Work

In this paper we have proposed a monitoring framework to find cyber-attacks that exhibit non-random behavior with the intention of hijacking a system away from a desired state. Our framework leverages the Wilcoxon Signed-Rank test and the Serial Independence Runs test over a sliding monitoring window to detect stealthy attacks when augmented to state-of-the-art boundary detectors. Among the key results of this work, we provide bounds for a desired false alarm rate for each test, which are leveraged to detect attacks, and bounds on the state deviation under worst-case attack scenarios, demonstrating that the proposed framework outperforms detectors that rely solely on boundary tests. The proposed approach was validated through simulations and experiments on UGV case studies.

In future work, we plan to extend the current approach to remove its dependency on the monitoring window, and to leverage our approach in systems with redundant sensors to remove compromised sensors and build attack-resilient controllers, similar to our previous work in [6].

Acknowledgments

This work is based on research sponsored by ONR under agreement number N000141712012, and NSF under grant #1816591.

References

- [1] J. Bhatti and T. E. Humphreys, “Hostile control of ships via false GPS signals: Demonstration and detection,” Navigation, vol. 64, no. 1, pp. 51–66, 2017.

- [2] F. Wilcoxon, “Individual comparisons by ranking methods,” Biometrics Bulletin, vol. 1, no. 6, pp. 80–83, 1945.

- [3] C. Cammarota, “The difference-sign runs length distribution in testing for serial independence,” Journal of Applied Statistics, vol. 38, no. 5, pp. 1033–1043, 2011.

- [4] Y. Mo, E. Garone, A. Casavola, and B. Sinopoli, “False data injection attacks against state estimation in wireless sensor networks,” in 2010 IEEE 49th Conference on Decision and Control, pp. 5967–5972.

- [5] C. Bai and V. Gupta, “On Kalman filtering in the presence of a compromised sensor: Fundamental performance bounds,” in 2014 American Control Conference, June 2014, pp. 3029–3034.

- [6] N. Bezzo, J. Weimer, M. Pajic, O. Sokolsky, G. J. Pappas, and I. Lee, “Attack resilient state estimation for autonomous robotic systems,” in 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Sept 2014, pp. 3692–3698.

- [7] C. Kwon, W. Liu, and I. Hwang, “Security analysis for cyber-physical systems against stealthy deception attacks,” in 2013 American Control Conference, June 2013, pp. 3344–3349.

- [8] C. Kwon, S. Yantek, and I. Hwang, “Real-time safety assessment of unmanned aircraft systems against stealthy cyber attacks,” Journal of Aerospace Information Systems, vol. 13, no. 1, pp. 27–45, 2016.

- [9] C. Murguia and J. Ruths, “Characterization of a CUSUM model-based sensor attack detector,” in 2016 IEEE 55th Conference on Decision and Control (CDC), Dec 2016, pp. 1303–1309.

- [10] C. Murguia and J. Ruths, “On model-based detectors for linear time-invariant stochastic systems under sensor attacks,” IET Control Theory & Applications, vol. 13, no. 8, pp. 1051–1061, 2019.

- [11] F. Miao, Q. Zhu, M. Pajic, and G. J. Pappas, “Coding sensor outputs for injection attacks detection,” in 53rd IEEE Conference on Decision and Control, Dec 2014, pp. 5776–5781.

- [12] T. Severson, et al., “Trust-based framework for resilience to sensor-targeted attacks in cyber-physical systems,” in 2018 Annual American Control Conference (ACC), June 2018, pp. 6499–6505.

- [13] M. Pajic, J. Weimer, N. Bezzo, O. Sokolsky, G. J. Pappas, and I. Lee, “Design and implementation of attack-resilient cyberphysical systems: With a focus on attack-resilient state estimators,” IEEE Control Systems Magazine, vol. 37, no. 2, pp. 66–81, April 2017.

- [14] S. Siegel, Nonparametric statistics for the behavioral sciences. McGraw-Hill New York, 1956.

- [15] S. M. Ross, Introduction to Probability Models, Ninth Edition. Orlando, FL, USA: Academic Press, Inc., 2006.

- [16] J. J. Nutaro, Building software for simulation: theory and algorithms, with applications in C++. John Wiley & Sons, 2011.