Modeling frequency distribution above a priority in presence of IBNR

Abstract

In reinsurance, Poisson and Negative binomial distributions are employed for modeling frequency. However, the incomplete data regarding reported incurred claims above a priority level presents challenges in estimation. This paper focuses on frequency estimation using Schnieper’s framework [7] for claim numbering. We demonstrate that Schnieper’s model is consistent with a Poisson distribution for the total number of claims above a priority at each year of development, providing a robust basis for parameter estimation. Additionally, we explain how to build an alternative assumption based on a Negative binomial distribution, which yields similar results. The study includes a bootstrap procedure to manage uncertainty in parameter estimation and a case study comparing assumptions and evaluating the impact of the bootstrap approach.

1 Introduction

In his profession, a reinsurer has to quote prices for excess of loss covers. Generally, the reinsurer estimates the frequency and severity distributions. For the frequency, the most common choices are the Poisson and Negative binomial distributions. The Poisson distribution can be viewed as the natural distribution in an ideal world: when all claims are independent and occur with a non-random intensity, the distribution is Poisson. However, when there is some uncaptured randomness, the variance is greater than the mean. In the particular case where the intensity of a Poisson distribution follows a Gamma distribution, the overall distribution is known to be a Negative binomial one.

The data the reinsurer receives are often incomplete: only the reported incurred claims above a certain threshold, typically known as the priority. In the context of excess of loss, [7, Schnieper] proposed a model that separates the IBNR (Incurred But Not Reported) into what he termed true IBNR: newly reported claims, and IBNER (Incurred But Not Enough Reported): variation in estimated cost over time.

Although [4, Mack] used some of the ideas from Schnieper’s method, the method itself has not received much attention. Major contributions based on the Schnieper model include [3] and [2]. In the former, the authors derive an estimator for the mean square error of the reserves. In the latter, they propose a non-parametric bootstrap procedure to estimate the distribution of the reserve. Additionally, some valuable insights inspired by Schnieper’s model are found in [5] and [6]. In the former, the author uses Schnieper’s approach to determine the implicit part of the IBNER in the Chain Ladder reserve. In the latter, the authors adapt the methodology in order to also separate the paid from the incurred claims.

Schnieper also addressed a special case: claim numbering above a priority. The frequency of claims exceeding the priority over time is divided into: claims that newly reach the priority and claims that fall below it. In particular, he proposed assuming a Poisson distribution for claims that reach the priority and a Binomial distribution for claims that drop below it.

In this paper, we focus on frequency estimation in the presence of incomplete data, specifically the reported incurred claims above a priority, using Schnieper’s framework for claim numbers. We show that the total number of claims in his model follows a Poisson distribution at each year of development. Consequently, this framework is consistent with a Poisson distribution for the total number of claims above a priority and provides a coherent basis for parameter estimation. We also propose an alternative assumption based on a Negative binomial distribution, which yields similar results. We show that, under this assumption, the total number of claims follows a Negative binomial distribution for each year of development, and we provide an estimation procedure. Additionally, we address claim reserving by providing the distribution of ultimate claim numbers, conditioned on current incurred claims.

The paper is organized as follows. Section 2 presents Schnieper’s general model, with a review of key estimators. Section 3 covers claim numbers above a priority. The first part deals with the Schnieper assumption, from which we derive additional results. Specifically, we obtain the distribution of the total number of claims for both purposes: quotation and reserving. The second part presents an alternative assumption under which we show that the total claim number above a priority follows a Negative binomial distribution. Section 4 describes a bootstrap procedure for each case, addressing uncertainty in parameter estimation. Finally, Section 5 provides a case study comparing assumptions and evaluating the impact of the bootstrap approach and its contribution to the different assumptions.

2 The general model

The Schnieper model, designed for excess of loss covers, separates two different behaviors in the IBNR data:

- The occurrence of newly reported claims, which are assumed to arise randomly based on the level of exposure;
- The progression of previously reported claims, which is determined by the current known amounts.

Schnieper’s framework requires more summary statistics than the aggregated evolution of the incurred claims. We introduce them below, where $n$ denotes the number of years, $1 \le i \le n$ represents the occurrence year, and $1 \le j \le n$ represents the development year:

- The random variables $N_{i,j}$ represent the total amount of new excess claims, referring to claims that have not been recorded as excess claims in previous development years;
- The random variables $D_{i,j}$ represent the decrease in the total claims amount between development years $j-1$ and $j$, concerning claims that were already known in development year $j-1$.

The $D_{i,j}$ can be negative in the event of an increase and, by construction, $D_{i,1} = 0$ for all $i$.

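Given $N_{i,j}$ and $D_{i,j}$, the cumulative incurred data $X_{i,j}$ can be calculated using the following iterative process:

$$X_{i,1} = N_{i,1}, \qquad X_{i,j+1} = X_{i,j} - D_{i,j+1} + N_{i,j+1}, \quad 1 \le j \le n-1. \tag{1}$$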
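The iterative process can be illustrated on the first example of Section 5: each cumulative value is the previous one, minus the claims that dropped out, plus the new claims. The following is a minimal sketch; the function name `cumulate` and the list-of-lists layout are assumptions made for this illustration.

```python
# New claims N and decreases D of the first example (rows: accident years,
# columns: development years; only the observed upper triangle is stored).
N = [[5, 4, 5, 2, 1, 0],
     [11, 9, 4, 4, 6],
     [9, 14, 9, 3],
     [10, 7, 5],
     [17, 10],
     [14]]
D = [[0, 0, 3, 1, 0, 2],
     [0, 4, 7, 6, 0],
     [0, 6, 4, 3],
     [0, 7, 4],
     [0, 9],
     [0]]

def cumulate(new, dec):
    """Rebuild the cumulative triangle: the first column equals the new claims,
    then each value is the previous one minus the decrease plus the new claims."""
    triangle = []
    for n_row, d_row in zip(new, dec):
        row = [n_row[0]]
        for n, d in zip(n_row[1:], d_row[1:]):
            row.append(row[-1] - d + n)
        triangle.append(row)
    return triangle

X = cumulate(N, D)
for row in X:
    print(row)
```

The rebuilt rows coincide with the cumulative triangle listed in Section 5, which is a quick consistency check on the decomposition.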

We also introduce non-negative exposures $E_i$ that are assumed to be known and associated with the data mentioned above. Finally, we introduce the following filtration:

$$\mathcal{F}_k = \sigma\left(N_{i,j}, D_{i,j} : i + j \le k + 1\right), \qquad 1 \le k \le n.$$

The current available information is $\mathcal{F}_n$. In the context of Schnieper’s general model, the following assumption is made:

Assumption 2.1.

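- H1: The random variables $\{N_{i,j}, D_{i,j} : 1 \le j \le n\}$ and $\{N_{i',j}, D_{i',j} : 1 \le j \le n\}$ are independent for $i \ne i'$.
- H2: For $1 \le j \le n$, there exists $\lambda_j \ge 0$ and for $1 \le j \le n-1$, there exists $\delta_j$ such that
  $$\mathbb{E}\left[N_{i,j} \mid \mathcal{F}_{i+j-2}\right] = E_i \lambda_j, \qquad \mathbb{E}\left[D_{i,j+1} \mid \mathcal{F}_{i+j-1}\right] = X_{i,j}\, \delta_j.$$
- H3: For $1 \le j \le n$, there exist $\sigma_j^2 \ge 0$ and $\tau_j^2 \ge 0$ such that
  $$\mathrm{Var}\left[N_{i,j} \mid \mathcal{F}_{i+j-2}\right] = E_i \sigma_j^2, \qquad \mathrm{Var}\left[D_{i,j+1} \mid \mathcal{F}_{i+j-1}\right] = X_{i,j}\, \tau_j^2.$$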

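The evolution of the $D_{i,j}$ follows the same process as in Mack’s model [4], using incurred claims as the exposure combined with a development factor. However, the new claims generated by the $N_{i,j}$ represent an additional additive component that depends on the exposure.

From the above assumption, Schnieper introduced the following estimators for the $\lambda_j$’s and the $\delta_j$’s:

$$\hat\lambda_j = \frac{\sum_{i=1}^{n-j+1} N_{i,j}}{\sum_{i=1}^{n-j+1} E_i}, \quad 1 \le j \le n, \qquad \hat\delta_j = \frac{\sum_{i=1}^{n-j} D_{i,j+1}}{\sum_{i=1}^{n-j} X_{i,j}}, \quad 1 \le j \le n-1. \tag{2}$$

They are unbiased estimators of the $\lambda_j$’s and $\delta_j$’s respectively. Additionally, as a consequence of H3, they are the best linear estimators in the $N_{i,j}$ and the $D_{i,j+1}$ respectively.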
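As a numerical illustration, the estimators can be read as ratios of column totals: new claims over exposures, and dropped claims over the claims that were above the priority one development year earlier. The sketch below applies this reading to the first example of Section 5; the function name `estimate` and the zero-based indexing are assumptions of this illustration.

```python
E = [20, 25, 32, 38, 42, 45]
N = [[5, 4, 5, 2, 1, 0], [11, 9, 4, 4, 6], [9, 14, 9, 3], [10, 7, 5], [17, 10], [14]]
D = [[0, 0, 3, 1, 0, 2], [0, 4, 7, 6, 0], [0, 6, 4, 3], [0, 7, 4], [0, 9], [0]]
X = [[5, 9, 11, 12, 13, 11], [11, 16, 13, 11, 17], [9, 17, 22, 22], [10, 10, 11], [17, 18], [14]]

def estimate(E, N, D, X):
    """Column-ratio estimators; index j is zero-based (development year j + 1)."""
    n = len(E)
    lam, delta = [], []
    for j in range(n):
        rows = range(n - j)          # accident years observed in development year j + 1
        lam.append(sum(N[i][j] for i in rows) / sum(E[i] for i in rows))
    for j in range(n - 1):
        rows = range(n - j - 1)      # accident years observed in development year j + 2
        delta.append(sum(D[i][j + 1] for i in rows) / sum(X[i][j] for i in rows))
    return lam, delta

lam_hat, delta_hat = estimate(E, N, D, X)
print([round(v, 3) for v in lam_hat])    # [0.327, 0.28, 0.2, 0.117, 0.156, 0.0]
print([round(v, 3) for v in delta_hat])  # [0.5, 0.346, 0.217, 0.0, 0.154]
```

These rounded values agree with the estimates tabulated for the first example in Section 5.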

Schnieper developed a way to estimate the expected value of $X_{i,n}$ and the pure premium for the following year, as well as the total reserve along with its associated mean square error, based on estimators of the $\sigma_j^2$’s and $\tau_j^2$’s that he provides. We now present the model for claim numbers, which can be considered a specific instance of the broader model.

3 The model for claim numbers

Schnieper also dealt with a special case: the number of claims above a priority, which is the focus of this paper. From this point onward, for a given priority level, we define:

- The random variables $N_{i,j}$ represent the number of new excess claims pertaining to accident year $i$ in development year $j$ (claims that were below the priority or not reported in development year $j-1$);
- The random variables $D_{i,j}$ represent the number of claims that exceeded the priority in development year $j-1$ but have since decreased in cost to fall below the priority in development year $j$.

In this context, the $D_{i,j}$ are now non-negative and remain bounded by $X_{i,j-1}$. Additionally, all data are integers.

Schnieper proposed that the new claims, denoted as $N_{i,j}$, follow a Poisson distribution, while the claims decreasing below the priority, represented by $D_{i,j}$, follow a Binomial distribution. The reasoning is as follows: claims that rise above the priority (either new or previously below the threshold) occur independently at a non-random intensity, which is consistent with a Poisson distribution. Meanwhile, each claim above the priority has a certain probability of falling below the threshold each year, independently of the others, which leads to a Binomial distribution. The next subsection details these assumptions, as stated in [7], and provides additional results, including the finding that $X_{i,j}$ follows a Poisson distribution at each date.

3.1 The Poisson assumption

The following assumption corresponds to [7, Assumptions ]. We add H1’ to slightly strengthen H1.

Assumption 3.1.

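- H1’: The random variables $\{N_{i,j}, D_{i,j} : 1 \le j \le n\}$ and $\{N_{i',j}, D_{i',j} : 1 \le j \le n\}$ are independent for $i \ne i'$, and within each pair $(N_{i,j}, D_{i,j})$ the two components are independent for all $i, j$.
- H2’: For $1 \le j \le n$, there exists $\lambda_j \ge 0$ and for $1 \le j \le n-1$, there exists $\delta_j \in [0,1]$ such that
  $$N_{i,j} \mid \mathcal{F}_{i+j-2} \sim \mathcal{P}(E_i \lambda_j), \qquad D_{i,j+1} \mid \mathcal{F}_{i+j-1} \sim \mathcal{B}(X_{i,j}, \delta_j).$$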

Note that H1’ implies H1, and H2’ implies H2 and H3 from Section 2. H1’ adds independence between $N_{i,j}$ and $D_{i,j}$ on the same dates, while H2’ specifies the distributions. We now assume that H1’ and H2’ hold. Given these, [7] showed that the $\hat\lambda_j$’s and $\hat\delta_j$’s defined in (2) are also the maximum likelihood estimators and are efficient, with their variances given by the inverse of the Fisher information.

Building on what Schnieper stated in this framework, we now derive additional results. Specifically, under H1’ and H2’, the $X_{i,j}$ follow a Poisson distribution. Before stating the result, we first recall a classic lemma that will be essential.

Lemma 3.1.

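Let $N$ be a random variable with distribution $\mathcal{P}(\lambda)$ with $\lambda \ge 0$ and $D$ a random variable such that $D \mid N \sim \mathcal{B}(N, \delta)$ for $\delta \in [0,1]$. Then

$$N - D \sim \mathcal{P}(\lambda(1-\delta)).$$

Proof.

For completeness, we provide the proof of this elementary result. Let $k \in \mathbb{N}$.

$$\begin{aligned}
\mathbb{P}(N - D = k) &= \sum_{m \ge 0} \mathbb{P}(N = k+m)\, \mathbb{P}(D = m \mid N = k+m) \\
&= \sum_{m \ge 0} e^{-\lambda} \frac{\lambda^{k+m}}{(k+m)!} \binom{k+m}{m} \delta^m (1-\delta)^k \\
&= e^{-\lambda} \frac{(\lambda(1-\delta))^k}{k!} \sum_{m \ge 0} \frac{(\lambda\delta)^m}{m!} = e^{-\lambda(1-\delta)} \frac{(\lambda(1-\delta))^k}{k!}.
\end{aligned}$$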

∎
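The thinning property can also be checked numerically: if each of a Poisson number of claims is removed independently with probability $\delta$, the remaining count should again be Poisson with mean $\lambda(1-\delta)$. The sketch below compares the two probability mass functions by direct summation; the parameter values and the truncation point `n_max` are arbitrary choices made for this illustration.

```python
from math import comb, exp, factorial

def poisson_pmf(k, mu):
    return exp(-mu) * mu**k / factorial(k)

def thinned_pmf(k, lam, delta, n_max=150):
    """P(N - D = k) for N ~ Poisson(lam) and D | N ~ Binomial(N, delta),
    computed by summing over N = k, ..., n_max (truncated series)."""
    return sum(poisson_pmf(n, lam) * comb(n, k) * (1 - delta)**k * delta**(n - k)
               for n in range(k, n_max + 1))

lam, delta = 7.0, 0.3  # illustrative values only
max_gap = max(abs(thinned_pmf(k, lam, delta) - poisson_pmf(k, lam * (1 - delta)))
              for k in range(30))
print(max_gap)  # numerically negligible
```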

Proposition 3.2.

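For all $1 \le i, j \le n$, we have

$$X_{i,j} \sim \mathcal{P}(E_i \Lambda_j)$$

with

$$\Lambda_j = \sum_{k=1}^{j} \lambda_k \prod_{l=k}^{j-1} (1 - \delta_l).$$

Proof.

By induction on $j$. First, $X_{i,1} = N_{i,1} \sim \mathcal{P}(E_i \lambda_1)$. If $X_{i,j} \sim \mathcal{P}(E_i \Lambda_j)$, Lemma 3.1 gives $X_{i,j} - D_{i,j+1} \sim \mathcal{P}(E_i \Lambda_j (1-\delta_j))$. Adding the independent $N_{i,j+1} \sim \mathcal{P}(E_i \lambda_{j+1})$ yields $X_{i,j+1} \sim \mathcal{P}(E_i \Lambda_{j+1})$, since $\Lambda_{j+1} = \Lambda_j (1-\delta_j) + \lambda_{j+1}$. ∎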

The above result provides the distribution of given the corresponding exposure. In practice, we are also interested in the conditional distribution for . Before presenting this result, we introduce an essential lemma.

Lemma 3.3.

Let . Let , and for . Then

Proof.

We prove the lemma by induction. It is clear that . Let , and assume by induction that

Let . For ease of notation, we introduce . It follows that

∎

Proposition 3.4.

For all such that , we have

where the right-hand side should be interpreted as the sum of two independent random variables, and with

Proof.

The result indicates that, following the observation of , the distribution of an unobserved can be described as the sum of two components: current claims that exceed the priority threshold and are likely to stay above it, and new claims that may initially rise above the priority but might later fall below it.

If we are interested in estimating , we can use the estimator where are estimators for . The sequences and are estimated by and , as defined in (2).
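To make the reserving use concrete, the sketch below computes the expected ultimate number of claims for each accident year of the first example of Section 5, starting from the latest observed value. It assumes only the conditional means of the model: from one development year to the next, the current claims are multiplied by one minus the drop probability, and the exposure times the next intensity is added. The estimates are the column ratios of that example; the function name `expected_ultimate` is hypothetical.

```python
E = [20, 25, 32, 38, 42, 45]
latest = [11, 17, 22, 11, 18, 14]                   # last observed value, accident years 1..6
lam = [66/202, 44/157, 23/115, 9/77, 7/45, 0/20]    # estimated intensities, years 1..6
delta = [26/52, 18/52, 10/46, 0/23, 2/13]           # estimated drop probabilities, years 1..5

def expected_ultimate(x, exposure, j, lam, delta):
    """Expected value at the last development year, given x claims above the
    priority at development year j (1-based)."""
    mean = float(x)
    for k in range(j, len(lam)):                    # step from development year k to k + 1
        mean = mean * (1 - delta[k - 1]) + exposure * lam[k]
    return mean

n = len(E)
ultimates = [expected_ultimate(latest[i], E[i], n - i, lam, delta) for i in range(n)]
print([round(u, 3) for u in ultimates])
```

For the oldest accident year nothing remains to be developed, so the expected ultimate equals the observed value; for more recent years both the survival of current claims and the arrival of new ones contribute.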

In practice, the Poisson distribution is commonly used for modeling the number of claims due to its simplicity and the assumption of independent arrivals with non-random intensity across all claims. However, it is often observed that the empirical variance exceeds the empirical mean, suggesting that the claim data might not fully adhere to the assumptions of the Poisson distribution.

For instance, if the intensity parameter of claim arrivals for each policy follows a Gamma distribution, the total number of claims is known to follow a Negative binomial distribution. This distribution is favored because it remains straightforward to use, accommodates excess variance, and converges to the Poisson distribution when this variance diminishes.

In the following subsection, we propose using the Negative binomial distribution instead of the Poisson, demonstrating that, with an appropriate assumption, it can yield comparable results.

3.2 The Negative binomial assumption

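In this section, we aim to establish a Negative binomial framework that yields results similar to those obtained from the previous Poisson framework. Specifically, we define the Negative binomial distribution as follows: let $N \sim \mathcal{NB}(r, p)$ where $r > 0$ and $p \in (0, 1]$. The probability mass function of $N$ is given by

$$\mathbb{P}(N = k) = \frac{\Gamma(k + r)}{k!\, \Gamma(r)}\, p^r (1-p)^k, \qquad k \in \mathbb{N}. \tag{3}$$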

We then introduce a new assumption that replaces H2’. This change only affects the sequence of random variables $(N_{i,j})$.

Assumption 3.2.

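- H2”: For $1 \le j \le n$, there exists $r_j \ge 0$ and for $1 \le j \le n-1$, there exists $\delta_j \in [0,1]$ such that
  $$N_{i,j} \mid \mathcal{F}_{i+j-2} \sim \mathcal{NB}(E_i r_j, p_j), \qquad D_{i,j+1} \mid \mathcal{F}_{i+j-1} \sim \mathcal{B}(X_{i,j}, \delta_j),$$
  where $p_1 = p \in (0,1]$ and $p_{j+1} = \dfrac{p_j}{1 - (1-p_j)\delta_j}$ for $1 \le j \le n-1$.

Similarly, H2” implies H2 and H3 from Section 2. The structure of the $p_j$’s may not initially appear clear or intuitive; their representation is clarified later. The free parameters are the $r_j$ (which replace the $\lambda_j$’s from H2’), the $\delta_j$ and $p$. There is only one additional parameter compared to H2’. This extra parameter governs the additional variance through the specific configuration of the family $(p_j)_{1 \le j \le n}$.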

Remark 3.5.

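The $p_j$’s can be explicitly expressed in terms of $p$ and the $\delta_j$’s:

$$p_j = \frac{p}{p + (1-p) \prod_{k=1}^{j-1} (1-\delta_k)}, \qquad 1 \le j \le n.$$

The above remark can be verified through direct induction. The $r_j$’s, $\delta_j$’s, and $p$ do not admit explicit maximum likelihood estimates. For the $\delta_j$’s, we can use the same estimator as the one defined in the Poisson framework in (2). When $p$ is known, to estimate the $r_j$’s, we can use a moment estimator based on the expected value $\mathbb{E}[N_{i,j}] = E_i r_j (1-p_j)/p_j$ and set:

$$\hat r_j = \frac{p_j}{1 - p_j} \cdot \frac{\sum_{i=1}^{n-j+1} N_{i,j}}{\sum_{i=1}^{n-j+1} E_i}, \qquad 1 \le j \le n. \tag{4}$$

Finally, the estimation of $p$ can be computed numerically using maximum likelihood methods:

$$\hat p \in \operatorname*{arg\,max}_{p \in (0,1)} \sum_{i + j \le n+1} \ln f_{E_i \hat r_j,\, p_j}(N_{i,j}), \tag{5}$$

in which $f_{r,p}$ denotes the probability mass function of the Negative binomial distribution with parameter $(r, p)$, see (3).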
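The numerical step can be sketched as a one-dimensional search. The code below is a minimal illustration on the second example of Section 5, under an assumed reading of the procedure: the drop probabilities are fixed at their column-ratio estimates, the sequence of Negative binomial probabilities is built recursively from a candidate value of the free parameter, the size parameters are matched to the empirical means, and the log-likelihood of the new claims is maximised over a grid. The function names, the grid, and the recursion as written are assumptions of this sketch.

```python
from math import lgamma, log

E = [20, 25, 32, 38, 42, 45]
N = [[8, 3, 9, 4, 3, 0], [3, 5, 4, 3, 6], [5, 7, 3, 3], [27, 8, 13], [23, 7], [14]]
mean_rate = [80/202, 30/157, 29/115, 10/77, 9/45, 0/20]  # column totals of N over exposures
delta = [39/66, 9/39, 9/30, 2/22, 1/14]                   # column-ratio drop probabilities

def p_sequence(p, delta):
    """p_1 = p, then p_{j+1} = p_j / (1 - (1 - p_j) * delta_j)."""
    seq = [p]
    for d in delta:
        seq.append(seq[-1] / (1 - (1 - seq[-1]) * d))
    return seq

def nb_logpmf(k, r, p):
    if r == 0:                                   # degenerate case: all mass at zero
        return 0.0 if k == 0 else float("-inf")
    return lgamma(k + r) - lgamma(r) - lgamma(k + 1) + r * log(p) + k * log(1 - p)

def profile_loglik(p):
    ps = p_sequence(p, delta)
    total = 0.0
    for i, row in enumerate(N):
        for j, k in enumerate(row):
            size = E[i] * mean_rate[j] * ps[j] / (1 - ps[j])  # matches the mean E_i * rate_j
            total += nb_logpmf(k, size, ps[j])
    return total

grid = [g / 1000 for g in range(50, 996)]
p_hat = max(grid, key=profile_loglik)
print(p_hat, round(profile_loglik(p_hat), 3))
```

A grid is used here only for transparency; any scalar optimiser would serve. Under these assumptions the maximiser and the maximised log-likelihood land close to the values reported for the second example in Section 5.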

It remains to explain why we choose this form for the $p_j$’s. It is a consequence of the following result and ensures a consistent form, as in the Poisson case. To establish the distribution of the $X_{i,j}$, we begin with a classic lemma.

Lemma 3.6.

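Let $N$ be a random variable with distribution $\mathcal{NB}(r, p)$ for $r > 0$ and $p \in (0,1]$, and $D$ a random variable such that $D \mid N \sim \mathcal{B}(N, \delta)$ for $\delta \in [0,1]$. Then

$$N - D \sim \mathcal{NB}\left(r, \frac{p}{1 - (1-p)\delta}\right).$$

Proof.

Let $k \in \mathbb{N}$.

$$\begin{aligned}
\mathbb{P}(N - D = k) &= \sum_{m \ge 0} \mathbb{P}(N = k+m) \binom{k+m}{m} \delta^m (1-\delta)^k \\
&= \frac{\Gamma(k+r)}{k!\,\Gamma(r)}\, p^r \big((1-p)(1-\delta)\big)^k \sum_{m \ge 0} \frac{\Gamma(k+m+r)}{m!\,\Gamma(k+r)} \big((1-p)\delta\big)^m \\
&= \frac{\Gamma(k+r)}{k!\,\Gamma(r)}\, p^r \big((1-p)(1-\delta)\big)^k \big(1 - (1-p)\delta\big)^{-(k+r)} \\
&= \frac{\Gamma(k+r)}{k!\,\Gamma(r)} \left(\frac{p}{1-(1-p)\delta}\right)^r \left(1 - \frac{p}{1-(1-p)\delta}\right)^k.
\end{aligned}$$

The final line is obtained by noting that $1 - \dfrac{p}{1-(1-p)\delta} = \dfrac{(1-p)(1-\delta)}{1-(1-p)\delta}$. ∎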
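As with the Poisson case, the statement can be checked numerically. The sketch below removes each claim of a Negative binomial count independently with probability $\delta$ and compares the resulting probability mass function with a Negative binomial one having the same size parameter and the probability parameter $p / (1 - (1-p)\delta)$. The parameter values and the truncation point `n_max` are arbitrary choices for this illustration.

```python
from math import comb, exp, lgamma, log

def nb_pmf(k, r, p):
    """P(N = k) = Gamma(k + r) / (k! Gamma(r)) * p**r * (1 - p)**k."""
    return exp(lgamma(k + r) - lgamma(r) - lgamma(k + 1) + r * log(p) + k * log(1 - p))

def thinned_pmf(k, r, p, delta, n_max=400):
    """P(N - D = k) for N ~ NB(r, p) and D | N ~ Binomial(N, delta), truncated sum."""
    return sum(nb_pmf(n, r, p) * comb(n, k) * (1 - delta)**k * delta**(n - k)
               for n in range(k, n_max + 1))

r, p, delta = 2.5, 0.4, 0.3            # illustrative values only
p_new = p / (1 - (1 - p) * delta)      # probability parameter after thinning
max_gap = max(abs(thinned_pmf(k, r, p, delta) - nb_pmf(k, r, p_new)) for k in range(40))
print(p_new, max_gap)
```

The size parameter is unchanged by thinning while the probability parameter moves towards 1, which is why the excess of variance shrinks as claims drop below the priority.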

Proposition 3.7.

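For all $1 \le i, j \le n$, we have

$$X_{i,j} \sim \mathcal{NB}(E_i R_j, p_j)$$

with

$$R_j = \sum_{k=1}^{j} r_k.$$

Proof.

We prove the proposition by induction. Let $i$ be fixed. By construction $X_{i,1} = N_{i,1} \sim \mathcal{NB}(E_i r_1, p_1)$. Assume by induction that $X_{i,j}$ follows a Negative binomial distribution with parameter $(E_i R_j, p_j)$. Recall that

$$X_{i,j+1} = X_{i,j} - D_{i,j+1} + N_{i,j+1}.$$

Under H2”, by Lemma 3.6,

$$X_{i,j} - D_{i,j+1} \sim \mathcal{NB}(E_i R_j, p_{j+1})$$

and finally:

$$X_{i,j+1} \sim \mathcal{NB}\big(E_i (R_j + r_{j+1}), p_{j+1}\big) = \mathcal{NB}(E_i R_{j+1}, p_{j+1}).$$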

∎

The form of the $p_j$’s can now be understood. Assuming new claims above the priority threshold follow a Negative binomial distribution and that some claims may later fall below the priority, we aim to maintain consistency at any point in time with the Negative binomial distribution. Consequently, this leads to the specific form of the $p_j$’s.

The $p_j$’s are increasing, leading to a smaller excess of variance over time. Notably, the possibility that claims drop below the priority, with probabilities $\delta_j$, influences the Negative binomial distribution of all claims, including those not yet reported that arise in future development years. The more likely claims are to drop below the threshold, the faster the excess of variance decreases for the new claims.

In particular, for the extremal cases, if $\delta_j = 0$, meaning that no claim drops below the priority, the excess of variance does not reduce. Conversely, when $\delta_j = 1$, meaning all claims drop below the priority, the excess of variance vanishes.

The preceding result provides the distribution of given the related exposure. Additionally, we may be interested in the distribution of for , as described in the following proposition.

Proposition 3.8.

For all such that , we have

where the right-hand side should be interpreted as the sum of two independent random variables, and with

Proof.

4 Bootstrap methodology

In [2], the authors discuss a bootstrap methodology for the general Schnieper model to resimulate the $\hat\lambda_j$’s and $\hat\delta_j$’s, accounting for uncertainty in the parameters. They also simulate a Gaussian random variable to integrate the internal randomness of the process for each development stage. This follows the main ideas of the non-parametric bootstrap as summarized in [1].

Here, we present a distinct approach that utilizes the specific framework of claim numbers and proposes a comprehensive parametric bootstrap methodology without computing residuals or making any additional assumption.

4.1 The Poisson case

Let be the total number of bootstrap simulations to be performed. To account for the inherent randomness of the ’s and ’s, for . To do it efficiently, we shall use the following lemma.

Lemma 4.1.

Under H1 and H2’, we have:

where .

Proof.

Direct consequence of H1 and H2’. ∎

This provides a direct method to simulate the bootstrapped ’s and ’s.

where and come from and the ’s are the observed data.

Bootstrap simulation of .

Following Proposition 3.2, the bootstrap simulation is performed as follows:

Bootstrap simulation of .

For the lower triangle, the simulation is conducted using:

On the right-hand side of the last equality, the difference of the two distributions should be interpreted as the difference of two independent random variables. This procedure generates a bootstrap distribution for the random variable on the lower triangle. In this process, the uncertainty associated with the estimators of the parameters is integrated.

Remark 4.2.

Based on Proposition 3.4, when our focus is on , we can efficiently simulate , using

where the right-hand side should be interpreted as the sum of two independent random variables, and with

This provides a more efficient algorithm.
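The Poisson bootstrap for next year’s claim number can be sketched as follows, on the first example of Section 5. This is an illustration under assumptions, not the exact procedure of the paper: column totals of new claims are redrawn as Poisson variables and column totals of dropped claims as Binomial variables around the estimates, the intensity at the last development year is rebuilt from each redrawn parameter set, and one Poisson count is simulated. The exposure of 50 for the next year, the seed, the number of simulations and the helper names are choices made for this sketch.

```python
import random
from math import exp

# Column totals of the first example (upper triangle only).
sum_E = [202, 157, 115, 77, 45, 20]  # exposures observed in development years 1..6
sum_N = [66, 44, 23, 9, 7, 0]        # new claims in development years 1..6
sum_X = [52, 52, 46, 23, 13]         # claims above the priority in years 1..5
sum_D = [26, 18, 10, 0, 2]           # claims dropping below it in years 2..6
E_NEXT = 50                          # hypothetical exposure for the next year

def poisson(rng, mu):
    """Knuth's multiplication method, adequate for the moderate means used here."""
    limit, k, prod = exp(-mu), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k

def binomial(rng, m, q):
    return sum(rng.random() < q for _ in range(m))

def one_draw(rng):
    lam_b = [poisson(rng, s) / e for s, e in zip(sum_N, sum_E)]
    delta_b = [binomial(rng, x, d / x) / x for x, d in zip(sum_X, sum_D)]
    intensity = 0.0
    for j, lam in enumerate(lam_b):
        if j > 0:
            intensity *= 1 - delta_b[j - 1]   # survivors from the previous year
        intensity += lam                       # new claims of the current year
    return poisson(rng, E_NEXT * intensity)

rng = random.Random(2024)
draws = [one_draw(rng) for _ in range(5000)]
mean = sum(draws) / len(draws)
variance = sum((d - mean) ** 2 for d in draws) / (len(draws) - 1)
print(round(mean, 2), round(variance, 2))
```

Because the parameters are redrawn before each count is simulated, the variance of the simulated counts is noticeably larger than their mean, whereas a Poisson distribution with fixed parameters would have the two equal.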

4.2 The Negative binomial case

We extend the Poisson model to fit the Negative binomial framework. To account for the variability in the parameters ’s, ’s and , for . We have the following lemma, similar to Lemma 4.1.

Lemma 4.3.

Under H1 and H2", we have:

where .

Proof.

Direct consequence of H1 and H2". ∎

However, unlike the Poisson case, we cannot apply the above lemma straightforwardly since it does not provide the distribution of . Additionally, the estimator of , defined in (5), is non-trivially dependent on the .

Nonetheless, for the , we can proceed as follows:

For the ’s and , we resimulate the upper triangle .

From these upper triangles, we can estimate the ’s and . These are denoted respectively as and .

Bootstrap simulation of .

Following Proposition 3.7, the simulation is straightforward:

Bootstrap simulation of .

For the lower triangle, we simulate :

On the right-hand side of the last equality, the difference of the two distributions should be interpreted as the difference of two independent random variables. Similarly to the approach discussed in Remark 4.2 for the Poisson case, if our focus is solely on the distribution , we can bypass simulating the entire lower triangle using Proposition 3.8.

5 Example

We present two triangles of simulated data to illustrate both cases with $n = 6$ years of observations. For the first one, the exposure $E_i$ and the triangle $X_{i,j}$ are:

| $i$ | $E_i$ | $j=1$ | $j=2$ | $j=3$ | $j=4$ | $j=5$ | $j=6$ |
|---|---|---|---|---|---|---|---|
| 1 | 20 | 5 | 9 | 11 | 12 | 13 | 11 |
| 2 | 25 | 11 | 16 | 13 | 11 | 17 | |
| 3 | 32 | 9 | 17 | 22 | 22 | | |
| 4 | 38 | 10 | 10 | 11 | | | |
| 5 | 42 | 17 | 18 | | | | |
| 6 | 45 | 14 | | | | | |

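whose decomposition in $N_{i,j}$ and $D_{i,j}$ is:

New claims $N_{i,j}$:

| $i$ | $j=1$ | $j=2$ | $j=3$ | $j=4$ | $j=5$ | $j=6$ |
|---|---|---|---|---|---|---|
| 1 | 5 | 4 | 5 | 2 | 1 | 0 |
| 2 | 11 | 9 | 4 | 4 | 6 | |
| 3 | 9 | 14 | 9 | 3 | | |
| 4 | 10 | 7 | 5 | | | |
| 5 | 17 | 10 | | | | |
| 6 | 14 | | | | | |

Claims dropping below the priority $D_{i,j}$:

| $i$ | $j=1$ | $j=2$ | $j=3$ | $j=4$ | $j=5$ | $j=6$ |
|---|---|---|---|---|---|---|
| 1 | 0 | 0 | 3 | 1 | 0 | 2 |
| 2 | 0 | 4 | 7 | 6 | 0 | |
| 3 | 0 | 6 | 4 | 3 | | |
| 4 | 0 | 7 | 4 | | | |
| 5 | 0 | 9 | | | | |
| 6 | 0 | | | | | |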

We can directly derive the $\hat\lambda_j$’s and $\hat\delta_j$’s.

| $j$ | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| $\hat\lambda_j$ | 0.327 | 0.28 | 0.2 | 0.117 | 0.156 | 0 |
| $\hat\delta_j$ | 0.5 | 0.346 | 0.217 | 0 | 0.154 | – |

Let $E_7$ be the exposure for the upcoming year. Under the Poisson assumption, plugging in the estimators gives a Poisson distribution for $X_{7,6}$ whose mean is $E_7$ times the estimated intensity per unit of exposure at the last development year.

Under the Negative binomial assumption, with the same moment-based estimators, computing $\hat p$ by maximum likelihood leads to the optimal value $\hat p = 1$. In this case, the Negative binomial distribution degenerates to the Poisson distribution: the Negative binomial assumption does not appear suitable.

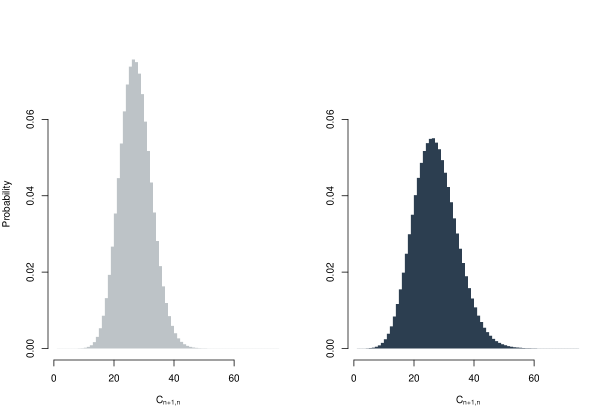

To account for the uncertainty in the unknown parameters, we can use the bootstrap procedure. The variance of $X_{7,6}$ is then around 53.361, which is notably higher than the variance obtained when using the Poisson distribution with the estimated parameter. Figure 1 shows the histogram of $X_{7,6}$ under the Poisson distribution with the estimated parameter (lighter, on the left) compared to the distribution obtained from the bootstrap procedure (darker, on the right).

We now introduce a second set of triangles. The exposure $E_i$ and the triangle $X_{i,j}$ are:

| $i$ | $E_i$ | $j=1$ | $j=2$ | $j=3$ | $j=4$ | $j=5$ | $j=6$ |
|---|---|---|---|---|---|---|---|
| 1 | 20 | 8 | 4 | 12 | 12 | 14 | 13 |
| 2 | 25 | 3 | 5 | 7 | 10 | 15 | |
| 3 | 32 | 5 | 10 | 11 | 9 | | |
| 4 | 38 | 27 | 20 | 29 | | | |
| 5 | 42 | 23 | 18 | | | | |
| 6 | 45 | 14 | | | | | |

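whose decomposition in $N_{i,j}$ and $D_{i,j}$ is:

New claims $N_{i,j}$:

| $i$ | $j=1$ | $j=2$ | $j=3$ | $j=4$ | $j=5$ | $j=6$ |
|---|---|---|---|---|---|---|
| 1 | 8 | 3 | 9 | 4 | 3 | 0 |
| 2 | 3 | 5 | 4 | 3 | 6 | |
| 3 | 5 | 7 | 3 | 3 | | |
| 4 | 27 | 8 | 13 | | | |
| 5 | 23 | 7 | | | | |
| 6 | 14 | | | | | |

Claims dropping below the priority $D_{i,j}$:

| $i$ | $j=1$ | $j=2$ | $j=3$ | $j=4$ | $j=5$ | $j=6$ |
|---|---|---|---|---|---|---|
| 1 | 0 | 7 | 1 | 4 | 1 | 1 |
| 2 | 0 | 3 | 2 | 0 | 1 | |
| 3 | 0 | 2 | 2 | 5 | | |
| 4 | 0 | 15 | 4 | | | |
| 5 | 0 | 12 | | | | |
| 6 | 0 | | | | | |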

Again, we can directly derive the $\hat\lambda_j$’s and $\hat\delta_j$’s.

| $j$ | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| $\hat\lambda_j$ | 0.396 | 0.191 | 0.252 | 0.13 | 0.2 | 0 |
| $\hat\delta_j$ | 0.591 | 0.231 | 0.3 | 0.091 | 0.071 | – |

Given the exposure $E_7$ for the next year and assuming a Poisson distribution, we get that $X_{7,6}$ follows a Poisson distribution with mean 30.243.

Computing $\hat p$ by maximum likelihood no longer leads to $\hat p = 1$. We find $\hat p = 0.397$. This implies that

| 1 | 2 | 3 | 4 | 5 | 6 | |

|---|---|---|---|---|---|---|

| 0.397 | 0.616 | 0.676 | 0.749 | 0.767 | 0.78 | |

| 0.263 | 0.402 | 0.418 | 0.448 | 0.328 | 0.177 |

In particular, under the Negative binomial assumption,

By construction, the expected value of $X_{7,6}$ remains at 30.243, but the variance increases to 38.796.
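The two figures above can be approximately reproduced from the estimates of the second example. The sketch below rests on several assumptions: the intensity at the last development year is accumulated recursively (survivors of the previous year plus new claims), the probability parameters follow the recursion $p_{j+1} = p_j / (1 - (1-p_j)\hat\delta_j)$ started at the rounded value 0.397 read from the first entry of the table above, the Negative binomial variance equals the mean divided by the last probability parameter, and the exposure for the next year is taken to be 50, a value chosen for this illustration because it is consistent with the reported mean.

```python
lam = [80/202, 30/157, 29/115, 10/77, 9/45, 0/20]  # column-ratio intensities, years 1..6
delta = [39/66, 9/39, 9/30, 2/22, 1/14]            # column-ratio drop probabilities, years 1..5
P_START = 0.397                                     # rounded estimate, so results are approximate
E_NEXT = 50                                         # assumed exposure for the next year

# Intensity per unit of exposure at the last development year.
intensity = lam[0]
for j in range(1, len(lam)):
    intensity = intensity * (1 - delta[j - 1]) + lam[j]

# Probability parameters of the Negative binomial distribution, year by year.
ps = [P_START]
for d in delta:
    ps.append(ps[-1] / (1 - (1 - ps[-1]) * d))

mean = E_NEXT * intensity
var_poisson = mean            # Poisson: variance equals the mean
var_nb = mean / ps[-1]        # Negative binomial: variance is the mean divided by p
print(round(mean, 3), round(var_nb, 2), [round(p, 2) for p in ps])
```

The small gap between the computed Negative binomial variance and the figure quoted in the text comes from starting the recursion at a value rounded to three decimals.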

To choose between the two assumptions, we can calculate the log-likelihood and AIC for both cases. Table 1 presents the results.

| Assumption | log-L. | AIC |
|---|---|---|
| Poisson | -53.937 | 119.875 |
| Negative binomial | -50.793 | 115.586 |
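The Poisson entries of Table 1 can be recomputed from the new-claims triangle of the second example. The sketch below sums the Poisson log-probabilities of the observed new claims, with means equal to exposure times the column-ratio intensity, and applies AIC = 2k - 2 log L. The parameter counts (6 intensities for the Poisson case, one more for the Negative binomial case) are inferred from the reported AIC values and should be read as an assumption.

```python
from math import lgamma, log

E = [20, 25, 32, 38, 42, 45]
N = [[8, 3, 9, 4, 3, 0], [3, 5, 4, 3, 6], [5, 7, 3, 3], [27, 8, 13], [23, 7], [14]]
n = len(E)

# Column-ratio intensity estimates: new claims over matching exposures.
lam = [sum(N[i][j] for i in range(n - j)) / sum(E[:n - j]) for j in range(n)]

def poisson_logpmf(k, mu):
    if mu == 0.0:                       # degenerate case: all mass at zero
        return 0.0 if k == 0 else float("-inf")
    return k * log(mu) - mu - lgamma(k + 1)

loglik = sum(poisson_logpmf(k, E[i] * lam[j])
             for i, row in enumerate(N) for j, k in enumerate(row))
aic_poisson = 2 * n - 2 * loglik                 # n = 6 intensity parameters
aic_nb = 2 * (n + 1) - 2 * (-50.793)             # reported log-likelihood, one extra parameter
print(round(loglik, 3), round(aic_poisson, 3), round(aic_nb, 3))
```

The lower AIC of the Negative binomial row reflects a gain in log-likelihood of about 3.1 that more than offsets the penalty for the additional parameter.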

It appears that using the Negative binomial distribution is the most suitable choice in this scenario. Given that the ’s are also part of the definition of the ’s, one might question whether it would be beneficial to estimate both simultaneously using data from both and . Let denote the new estimators. Table 2 provides a comparison, which shows that the difference is minimal.

| 1 | 2 | 3 | 4 | 5 | |

|---|---|---|---|---|---|

| 0.591 | 0.231 | 0.3 | 0.091 | 0.071 | |

| 0.601 | 0.232 | 0.305 | 0.092 | 0.071 |

| 1 | 2 | 3 | 4 | 5 | 6 | |

|---|---|---|---|---|---|---|

| 0.397 | 0.616 | 0.676 | 0.749 | 0.767 | 0.78 | |

| 0.393 | 0.619 | 0.679 | 0.753 | 0.77 | 0.783 |

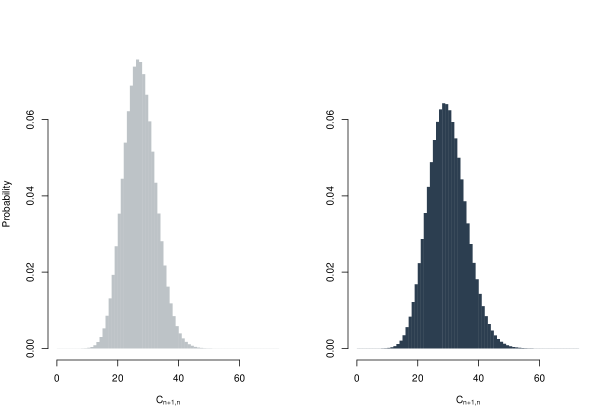

Figure 2 shows the distribution from the Poisson assumption (lighter, on the left) compared with the distribution from the Negative binomial assumption (darker, on the right).

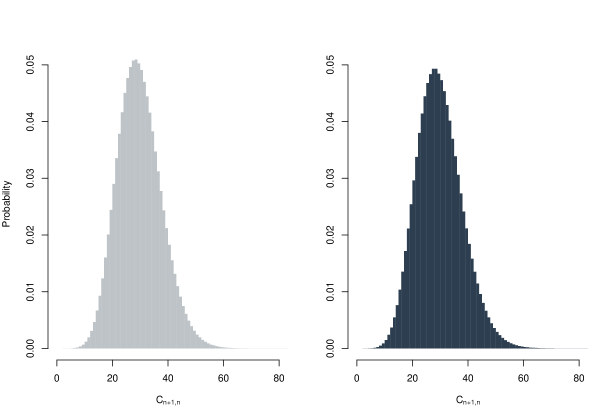

Figure 3 illustrates the bootstrap distribution from the Poisson assumption (lighter, on the left) and from the Negative binomial assumption (darker, on the right). In each simulation using the Negative binomial approach, if the estimated $\hat p$ was close to 1, suggesting that the Poisson distribution was a better fit, the simulation was conducted using the Poisson framework. The bootstrap results show that the variance of the distribution under the Poisson assumption is 62.633, while the variance under the Negative binomial assumption is 67.658.

Acknowledgments

The author acknowledges the financial support provided by the Fondation Natixis.

References

- [1] P. D. England and R. J. Verrall. Predictive distributions of outstanding liabilities in general insurance. Annals of Actuarial Science, 1(2):221–270, 2006.

- [2] Huijuan Liu and Richard Verrall. A bootstrap estimate of the predictive distribution of outstanding claims for the Schnieper model. ASTIN Bulletin, 39(2):677–689, 2009.

- [3] Huijuan Liu and Richard Verrall. Predictive distributions for reserves which separate true IBNR and IBNER claims. ASTIN Bulletin, 39(1):35–60, 2009.

- [4] Thomas Mack. Distribution-free calculation of the standard error of chain ladder reserve estimates. ASTIN Bulletin, 23(2):213–225, 1993.

- [5] Esbjörn Ohlsson. Using separate exposure for IBNYR and IBNER in the chain ladder method, 2015.

- [6] Esbjörn Ohlsson and Björn Wallberg-Beutelrock. Claims reserving using separate exposure for claims with and without a case reserve, 2022.

- [7] R. Schnieper. Separating true IBNR and IBNER claims. ASTIN Bulletin, 21(1):111–127, 1991.