
Modeling novel physics in virtual reality labs: An affective analysis of student learning

Abstract

We report on a study of the effects of laboratory activities that model fictitious laws of physics in a virtual reality environment on (1) students’ epistemology about the role of experimental physics in class and in the world; (2) students’ self-efficacy; and (3) the quality of student engagement with the lab activities. We create opportunities for students to practice physics as a means of creating and validating new knowledge by simulating real and fictitious physics in virtual reality (VR). This approach seeks to steer students away from a confirmation mindset in labs by eliminating any form of prior or outside models to confirm. We refer to the activities using this approach as Novel Observations in Mixed Reality (NOMR) labs. We examined NOMR’s effects in 100-level and 200-level undergraduate courses. Using pre-post measurements we find that after NOMR labs, students in both populations were more expertlike in their epistemology about experimental physics and held stronger self-efficacy about their abilities to do the kinds of things experimental physicists do. Through the lens of the psychological theory of flow, we found that students engage as productively with NOMR labs as with traditional hands-on labs. This engagement persisted after the novelty of VR in the classroom wore off, suggesting that these effects are due to the pedagogical design rather than the medium of the intervention. We conclude that these NOMR labs offer an approach to physics laboratory instruction that centers the development of students’ understanding of and comfort with the authentic practice of science.

I Introduction

This study seeks to characterize aspects of student learning that are both highly valued [1] and challenging to assess. In the context of experimental physics courses and using a virtual reality (VR) environment, students engage in activities with novel force laws that are designed to meet a need for introductory laboratory activities that deepen undergraduate physics students’ understanding of the process of generating new knowledge in science, and the quantitative scientific scrutiny involved. The objective of this study is to better understand the extent to which exploring novel physics, made possible through the use of immersive technologies, can render students more expert-like in their beliefs (1) about how scientific knowledge is generated and (2) in their capacity to produce scientific knowledge.

In light of the physics education research community’s current understanding that laboratory instruction is not an effective means of teaching conceptual content [2, 3], we instead seek to use labs as a place where students engage in the authentic practice of science, equipping them with the tools to understand the world through an empirical lens in alignment with the AAPT’s recommendations for undergraduate physics lab instruction [1]. We aim to foster an expertlike understanding of the role and process of experimentation [4, 5], build their confidence in their ability to design, perform, and interpret experiments [6, 7], and keep them actively engaged through the whole process [8].

Understanding how to provide engaging opportunities for students to develop mathematical models of novel phenomena in a teaching laboratory is a difficult open problem in physics pedagogy [1, 2, 9]. This kind of divergent, creative activity is fraught with challenges related to student autonomy and safety, opportunities for meaningful contexts [9], and the expertise of instructors to engage in a manner that responds to what is happening within each group. These issues are particularly challenging in large-enrollment courses where labs are commonly taught by inexperienced undergraduate and graduate teaching assistants (TAs).

Our framework for designing activities in which students learn to generate models is the Investigative Science Learning Environment (ISLE) approach [5, 10, 11, 12, 13, 14] by Etkina, Brookes, and Planinsic. In ISLE, model generation happens during what the authors have named observational experiments, where students engage in open-minded exploration with the goal of developing a model for an unknown phenomenon. This phase of the ISLE process, itself a simplified but authentic representation of the scientific process, is followed by iteratively testing, revising, refining, and applying the model. In our approach, we alter Etkina’s language slightly to make it more transparent to students what they are doing: we refer to model-generating and model-testing experiments, rather than observational and testing experiments. Note that, in the context of ISLE, the terms “model,” “explanation,” and “hypothesis” are interchangeable [15].

Model-generating experiments involving novel scenarios are difficult to create, especially in introductory courses. In many cases, experimental physics questions that reasonably could be investigated at the introductory level are well known, with easily Googled answers. In the presence of known answers, students tend to hold those answers in highest regard, seeking to confirm them above all else, even in the face of contradictory data [16] or explicit instruction to the contrary. Thus, any access to a “right answer” can derail efforts to engage students in authentic model generation. We address this expectation of getting a right answer by putting students into a different universe with new physics that builds on Newton’s laws and fundamental conservation laws – where neither they, nor their textbook, nor Google, nor their TAs have a ready-made model at hand. The problem of shifting students’ mindset toward generating new models becomes trivial when there are no existing models to confirm.

The activities described in this paper have been part of the University of Washington (UW) introductory physics curriculum for over two years. In the Novel Observations in Mixed Reality (NOMR) labs [17, 18], students explore real and fictitious physical phenomena in an immersive 3D environment. Instructors are struck by the ways that students mature as scientists through these labs, an impression that is not easily quantified. The following is an excerpt from a post-course survey that is fairly typical at the sophomore level, and representative of those most impacted at the introductory level.

“VR labs were fantastic for learning how to effectively approach a physics situation where I didn’t already know what would happen. In most experiments I have done in previous courses, I had learned what to expect before I was actually making observations and collecting data, so this course helped me learn a new way to approach experiments.”

This study is a step toward characterizing this kind of intellectual growth we observe in many students. The work is situated in efforts across the physics education community to find and adapt affective assessment tools beyond standard course evaluations. Our study seeks to establish whether students’ belief in their ability to do physics and their sense of belonging in physics grow along with their understanding of the nature and role of experimental physics to generate new knowledge. We assess the impact of the intervention on students’: epistemology about experimental physics; physics identity and self-efficacy; and engagement in the learning process. This study contributes to the ongoing research into assessment of what students take away from effective laboratory instruction [19, 20]. Specifically, we focus on the following research questions:

- RQ1: What changes are observed in students’ epistemology about experimental physics as a result of the NOMR labs?

- RQ2: What changes are observed in students’ physics self-efficacy in experimental physics as a result of the NOMR labs?

- RQ3: To what extent are students productively engaged in the NOMR activities, and how does that engagement compare with the hands-on labs in the same course?

II Background

II.1 ISLE

We begin by considering what lab activities reflecting the real-world practice of science look like. The ISLE approach to physics education [5, 10, 11, 12, 13, 14] prioritizes epistemologically authentic investigation of physics as a means to develop students’ scientific abilities [7] and habits of mind. Teaching students to think like expert physicists takes priority over covering conceptual content.

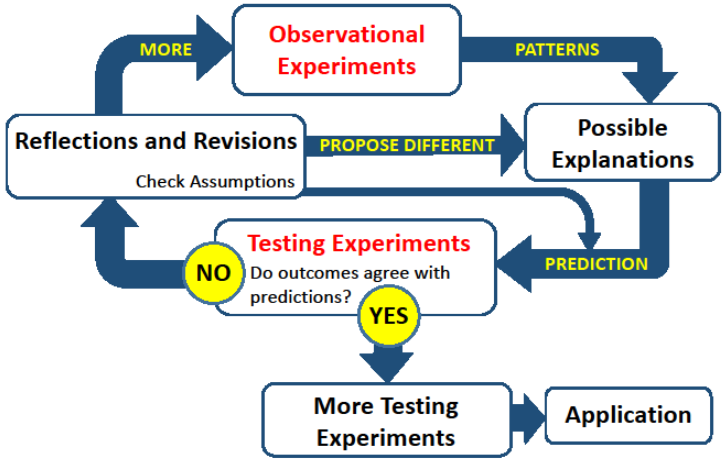

Three types of experiments form the core of ISLE instructional activities, related to each other by the ISLE process (Figure 1):

- Model-generating experiments: Labelled in the diagram as Observational Experiments. Students engage in open-minded exploration of a previously unknown physical phenomenon. They make note of patterns in the phenomenon’s behavior and devise explanations for those patterns. These patterns become mathematical models and mechanistic explanations of the phenomenon. The models created in model-generating experiments form the basis of students’ knowledge of the phenomenon.

- Testing experiments: A model from a prior model-generating experiment is tested. Students design an experiment with well-determined independent, dependent, and controlled variables to test a prediction about the outcome of an experiment that follows from the model in question. They run the experiment, collect and analyze their data, and judge whether the outcome is consistent with the prediction. If so, they have supported, or failed to reject, the model. If not, the model is rejected.

- Application experiments: Students apply tested models to determine the value of unknown physical quantities or solve practical problems.

In the ISLE approach, content and process are considered to be inextricably paired; these three types of activities are how students uncover new physics content. Students encounter physical phenomena for the first time through hands-on experimentation, and only after identifying patterns and developing their own explanations do they read about the phenomena in their text.

ISLE-approach labs consist primarily of questions to guide students’ thoughts rather than dictate them. In this way, students learn to ask and answer questions in the way that a scientist would. This process is guided and refined by scientific abilities rubrics [7] used to assess and give feedback on their work.

II.2 Affective measures

This study employs three different research-validated surveys to explore different aspects of students’ engagement and learning. Table 1 gives the name, abbreviation, target metrics, format, and administration schedule for each survey.

| Survey | Abbreviation | Target Metrics | Format | Schedule |
|---|---|---|---|---|
| Lab Epistemology Survey | LES | Student attitudes and beliefs about the nature and role of experimentation in physics | Five open-ended short-answer questions | Pre-survey at the beginning of the term; 100-level post-survey after NOMR activities concluded; 200-level post-survey after course final |
| Physics Identity Survey | PhIS | Degree of students’ self-identification as a physicist, interest in physics, and belief in their ability to practice and succeed at physics | Six 6-point Likert items (self-perception and interest); five 7-point Likert items (self-efficacy) | |
| Flow Survey | FS | Degree and nature of students’ engagement with the week’s lab activity | Seven 7-point Likert items | Weekly at the conclusion of each lab activity |

II.2.1 Epistemology about experimental physics

The Lab Epistemology Survey (LES), originally developed by Hu and Zwickl [21, 22], is used in this study as a measure of students’ epistemology about the role of experimental physics in class and in the world. The LES was developed as an instrument to characterize the beliefs held by physics students at introductory undergraduate, upper-division undergraduate, and graduate levels about experimentation, models, and their roles in the scientific process. This study uses the LES pre-post to assess changes in students’ epistemology about experimental physics before and after students complete the NOMR labs. Measuring changes in students’ epistemology gives us a window into whether they are adopting the beliefs, attitudes, and mindset about experimental physics characteristic of expert physicists.

The LES is composed of six open-ended questions, accompanied by a codebook used to identify themes in student responses in a consistent and reproducible way. We focus on the first two questions:

- LES1: In your opinion, why are experiments a common part of physics classes? Provide examples or any evidence to support your answer.

- LES2: In your opinion, why do scientists do experiments for their research? Provide examples or any evidence to support your answer.

| Item | Code | Definition | Example Student Response |
|---|---|---|---|
| LES1: Why are experiments a common part of physics classes? | Modeling | Experiments in class let students develop their own models for phenomena, discover things on their own, and/or develop their own ideas. | Because it helps show the process of developing a model, rather than just taking it as fact and using it to solve problems. By studying “mystery particles” in lab, we had to experiment and develop our own observations. |
| | Scientific abilities | Experiments help cultivate students’ scientific abilities, such as experimental design, data collection, and data analysis skills. | Experiments provide a way to provide reasoning skills as applied to physics, of which experiments [sic] reasoning is needed to have problem [solving] skills not only in the course but in other aspects of life. |
| | Model testing | The purpose of doing physics experiments is to prove, support, or test a model. | Experiments are necessary to test theories. Theories cannot be made into laws without testing. |
| | Supplemental learning | Experiments provide supplemental learning experiences for concepts and theories. | Experiments are a common part of physics courses because they help you understand the concepts we are learning. |
| LES2: Why do scientists do experiments for their research? | Discoverment | Experiments contribute to some aspect of the iterative and generative nature of the scientific process aside from testing an existing model. | Scientists in the real world are consistently working to provide new findings that deepen our understanding of the world. [There are] plenty of examples in the past, including Newton’s laws of motion and evolutionary theory. |
| | Model testing | The purpose of doing physics experiments is to prove, support, or test a model. | You cannot confirm a hypothesis without performing experiments. Without gather repeatable data you cannot decide if something is true or not |

Novice responses to these LES items exhibit an almost singular focus on the idea that experiments in instructional labs exist to supplement conceptual learning or test theories (using a layperson’s understanding of the term “theory”). The term “theory” is somewhat vague here: It has a specific definition in the context of physics, but is used in a lay sense by students, often translating to “anything that is not an experiment” or “what we know from the textbook or lab manual.” Expertlike responses more frequently acknowledge the role of in-class experimentation in the development of scientific abilities and as a means to better understand the scientific process. With regard to experiments in scientific research, novices tend to focus on the notion that experiments exist to test theories. Experts more frequently cite the creation of new models and the iterative nature of experimental model development as purposes of experiments in research.

The original LES [21] included the codes Theory testing (“The purpose of doing physics experiments is to prove a theory or test a hypothesis.”) and Theory development (“Experiments inspire the development or improvement of theories.”). Due to the vague nature of the term “theory,” it is unclear how Hu and Zwickl drew a distinction between “theory” and “hypothesis” as used by students. To use the term “theory” in our analysis, we would need to establish our own definition, at risk of misrepresenting students’ responses in a replication study.

In alignment with ISLE, we remove references to the term “theory” in favor of the term “model.” A model is a foundational concept in ISLE, used heavily throughout both populations’ lab activities. The modified codes we use in our analysis are Model testing (“The purpose of doing physics experiments is to prove, support, or test a model.”) and Model development (“Experiments inspire the development or improvement of models.”).

The distinction between Model development and Discovery (original definition: “Experiments help investigate unknowns.”) is subtle and not one we are interested in probing; rather, we are interested in understanding whether students’ responses reflect any acknowledgement at all of steps of the ISLE process aside from model testing. We therefore collapse the two codes into a single Discoverment code: “Experiments contribute to some aspect of the iterative and generative nature of the scientific process aside from testing an existing model.”

The final code list is given with definitions and examples in Table 2.

II.2.2 Physics self-efficacy

In the context of the physics classroom, self-efficacy refers to students’ belief in their ability to practice and succeed at physics. Developing students’ self-efficacy is a primary goal for our laboratory instruction, as we want students to walk away from the course with confidence in their ability to design, perform, and interpret experiments [6, 7]. Physics identity refers to the degree to which students think physics is related to who they are. The literature has shown that physics identity and science identity more broadly are connected to persistence in scientific fields [24]. We seek to understand how NOMR affects each of these constructs.

This study employs the Physics Identity Survey (PhIS), which we adapted from a science identity survey administered to middle school biology students to evaluate shifts arising from their participation in an immersive virtual lab [23]. The original survey was developed through the lens of Hazari’s science identity framework [24].

The PhIS is divided into two sets of items probing (1) self-efficacy, and (2) physics identity and interest. We focus on the self-efficacy items, listed below, each on the scale [1: Not at all Confident – 7: Completely Confident]:

- PhIS1: How confident are you that you can design an experiment to answer a scientific question in physics?

- PhIS2: How confident are you that you can look at data that you collect and characterize its patterns mathematically?

- PhIS3: How confident are you that you can understand the kinds of problems that experimental physicists would investigate?

- PhIS4: How confident are you that you could contribute to a team of physicists investigating an experimental physics problem?

- PhIS5: How confident are you that you can defend your data analysis to a team of expert physicists?

We focus on one physics identity and interest item, on the scale [1: Definitely False – 6: Definitely True]:

- PhIS6: I consider myself a physics person.

The physics identity and interest items were adapted from those of the original study by replacing each instance of “science” with “physics”. The interest items gauged students’ interest in learning about nature, environmental science, and ecosystems; we referred to students’ interest in physics and experimental physics in their place. The self-efficacy items were adapted by swapping out learning goals of the ecosystems biology course of the original study for learning goals of the lab courses of concern in this study, e.g., designing an experiment to answer a scientific question in physics and mathematically characterizing patterns observed in data.

We validated the Likert scale items comprising the PhIS in accordance with Adams and Wieman’s recommendations for the development of formative assessment instruments [25]. We conducted think-aloud interviews with eight 100-level physics students. Participants were asked to rate each Likert-scale item and explain their choice as they did so. Participants were recruited through an announcement on the course’s web page and incentivized to participate with $20 gift cards. The interviews were conducted online, audio recorded, transcribed by Otter.ai, and subsequently hand-corrected.

The interview transcripts were examined to assess the alignment of students’ reasoning for the responses they chose with the construct each item was meant to assess. Students’ understanding of each item reflected our expectations, and their reasoning for each choice revealed nothing unexpected. The validation interview results did not lead to any modification of the PhIS.

II.2.3 Flow as a measure of engagement

We use the psychological theory of flow pioneered by Csíkszentmihályi [26] as a lens through which to examine students’ engagement with class activities. Known colloquially as being “in the zone,” flow is described as a state in which one is completely absorbed in an activity for its own sake, where one action leads smoothly into the next, and one’s sense of time becomes distorted. A balance between the person’s self-perceived skillfulness at and the challenge posed by an activity is instrumental to achieving a flow state; great challenge must be met with commensurate belief in one’s own skill. It is in this state that the most effective learning happens [27].

Csíkszentmihályi identified seven conditions for a person to achieve flow:

1. They know what to do (a clear goal).

2. They know how to do it.

3. They are receiving clear and immediate feedback to know how well they are doing.

4. They know where to go (if navigation is involved).

5. They see what they are doing as challenging.

6. They are confident in their ability to complete the task.

7. Their environment is free of distractions.

As the flow model is fundamentally one of engagement with an activity, it has utility as a measurement of students’ engagement with the learning process [27, 28, 29]. Active engagement is key to learning [8]; conversely, even well-designed instructional activities with epistemologically authentic inquiry as in ISLE cannot reach students who are not engaged in the learning process.

A subset of the flow conditions forms an effective basis for maintaining task involvement: a learner needs feedback, confidence in their ability to complete the task, and an environment free of mental distractions. To stick with a task to completion, it is critical that the student’s self-efficacy is great enough that they believe they can do so [6]. To support this belief, they need clear feedback to know how well they are doing and what the next steps are.

Rebello and Zollman note [28] that the zone of proximal development [30], the optimal adaptability corridor [31], and flow are all representations of a balance between a learner’s skill and challenge. To express the optimal adaptability corridor’s dimensions in terms of flow, horizontal transfer (efficiency) maps to skill, and vertical transfer (innovation) maps to challenge. Flow comes in as a means to tie this balance to other affective elements of the student experience, unifying a number of affective constructs in educational psychology under one quantifiable umbrella.

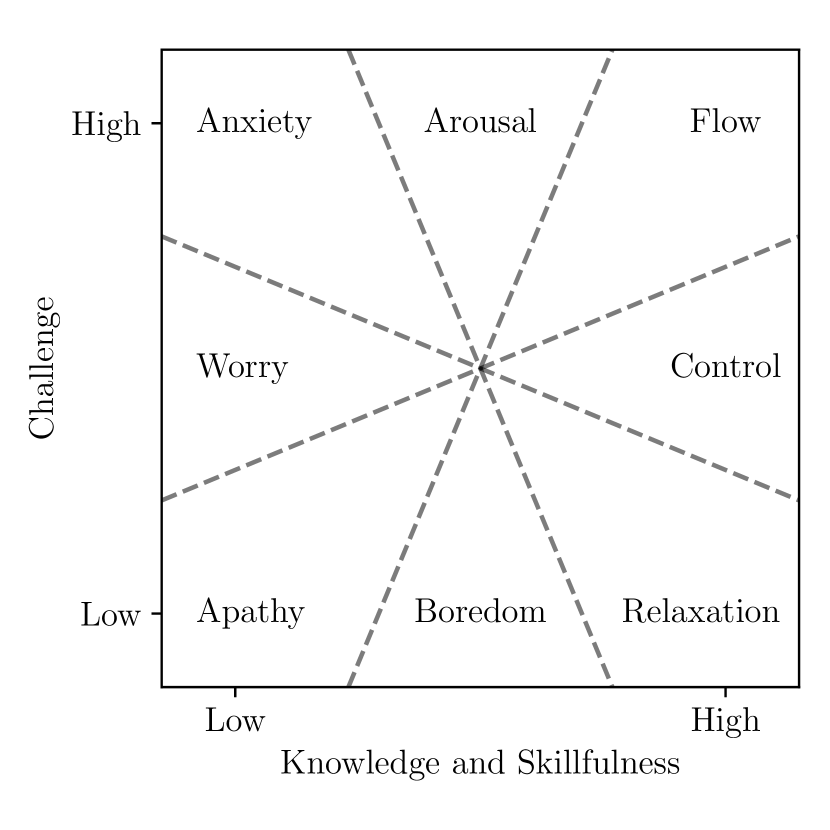

Massimini and Carli’s efforts [32, 33] to develop a quantitative instrument to measure flow led to the eight-channel flow model we use in this study. One begins by constructing a mental state diagram, hereafter referred to as a flow plot, with perceived knowledge and skillfulness on the horizontal axis, and perceived challenge on the vertical axis. Each flow plot is divided into eight channels (Figure 2), representing different relative combinations of challenge and skill. The top-right channel, Flow, is the most productive, representing a great challenge met with commensurate skill. Flow’s neighbor channels Control and Arousal represent less challenge and less skill than Flow, respectively. Flow, Control, and Arousal are considered productive channels for learning [27]. The Relaxation channel represents a surplus of skill and dearth of challenge; its mirror channel Anxiety represents an extreme challenge one feels poorly equipped to handle. The least productive states of Worry, Apathy, and Boredom fill out the lower regions of the challenge-skill space, with skill and challenge both insufficient to support productive engagement.
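As a rough illustration of how a single response pair could be assigned to a channel, the following sketch divides the plane into eight 45-degree sectors around a center point. The choice of the scale midpoint as the center, the equal sector widths, and the exact placement of Worry, Apathy, and Boredom within the lower region are assumptions based on how the eight-channel diagram is commonly drawn; the function name `flow_channel` is hypothetical and does not come from the study.

```python
import math

# Channel names in counter-clockwise order, starting from the skill axis (0 degrees).
# Flow is top-right, Control is next to it with less challenge, and Arousal is
# next to it with less skill. The remaining order is an ASSUMPTION.
CHANNELS = ["Control", "Flow", "Arousal", "Anxiety",
            "Worry", "Apathy", "Boredom", "Relaxation"]


def flow_channel(skill, challenge, center=4.0):
    """Return the channel name for one (skill, challenge) response pair.

    ASSUMPTION: the origin is the midpoint of the 7-point scale and every
    channel is a 45-degree sector centered on a multiple of 45 degrees.
    """
    dx = skill - center
    dy = challenge - center
    if dx == 0 and dy == 0:
        return None  # exactly at the center: no channel is defined
    angle = math.degrees(math.atan2(dy, dx)) % 360.0
    # Shift by half a sector so that each sector is centered on its axis.
    index = int(((angle + 22.5) % 360.0) // 45.0)
    return CHANNELS[index]
```

For example, under these assumptions a student reporting high skill and high challenge, `flow_channel(7, 7)`, lands in Flow, while high challenge with low skill, `flow_channel(1, 7)`, lands in Anxiety.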

Karelina, Etkina, Bohacek, Vonk, Kagan, Warren, and Brookes [29] used the eight-channel model to compare students’ engagement with content-equivalent ISLE-aligned hands-on and video-based labs; we follow much of their methodology in our study of students’ engagement with VR labs.

The Flow Survey (FS) is drawn from Karelina et al.’s adaptation of a subset of items from the psychometrically-validated Flow State Scale [34]. The FS uses items from their adaptation with minor wording changes for our experimental context.

It consists of seven 7-point Likert scale items:

- F1: To what extent was the instructor’s assistance needed? [1: Not at all – 7: A lot]

- F2: To what extent did you know what to do (goal of the task)? [1: Not at all – 7: A lot]

- F3: To what extent did you know how to do it? [1: No idea – 7: Completely]

- F4: To what extent did you know how well you were doing? [1: No idea – 7: Completely]

- F5: To what extent was the lab challenging? [1: Not at all – 7: Extremely]

- F6: To what extent did you feel knowledgeable and skillful during the lab? [1: Not at all – 7: Extremely]

- F7: To what extent was the lab fun and interesting? [1: Not at all – 7: Extremely]

We follow Karelina et al.’s analysis methods to create flow plots using two items: F6 as a measure of perceived knowledge and skill on the horizontal axis, and F5 as a measure of challenge on the vertical axis. Students who give a high score to both items are understood to be in a flow state.

Karelina et al.’s study compared average responses along each axis of the flow plot with two-tailed paired t-tests to determine differences in students’ perceived skill and challenge between video and hands-on treatment groups. These quantitative comparisons were backed by visual comparisons of which channels students tended to fall into in each treatment group.
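A paired comparison of this kind reduces to a t statistic computed on per-student differences. The sketch below shows that calculation using only the Python standard library; the function name `paired_t_statistic` and the response values are hypothetical and are not data from either study.

```python
import math
import statistics


def paired_t_statistic(first, second):
    """Return (t, degrees_of_freedom) for two paired samples of equal length."""
    if len(first) != len(second):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two pairs")
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# HYPOTHETICAL challenge ratings from the same five students in two conditions
challenge_hands_on = [5, 4, 6, 5, 3]
challenge_vr = [4, 4, 5, 3, 3]
t, dof = paired_t_statistic(challenge_hands_on, challenge_vr)
```

Converting `t` to a two-tailed p-value requires the t distribution with `dof` degrees of freedom; a statistics package such as SciPy (`scipy.stats.ttest_rel`) performs both steps at once.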

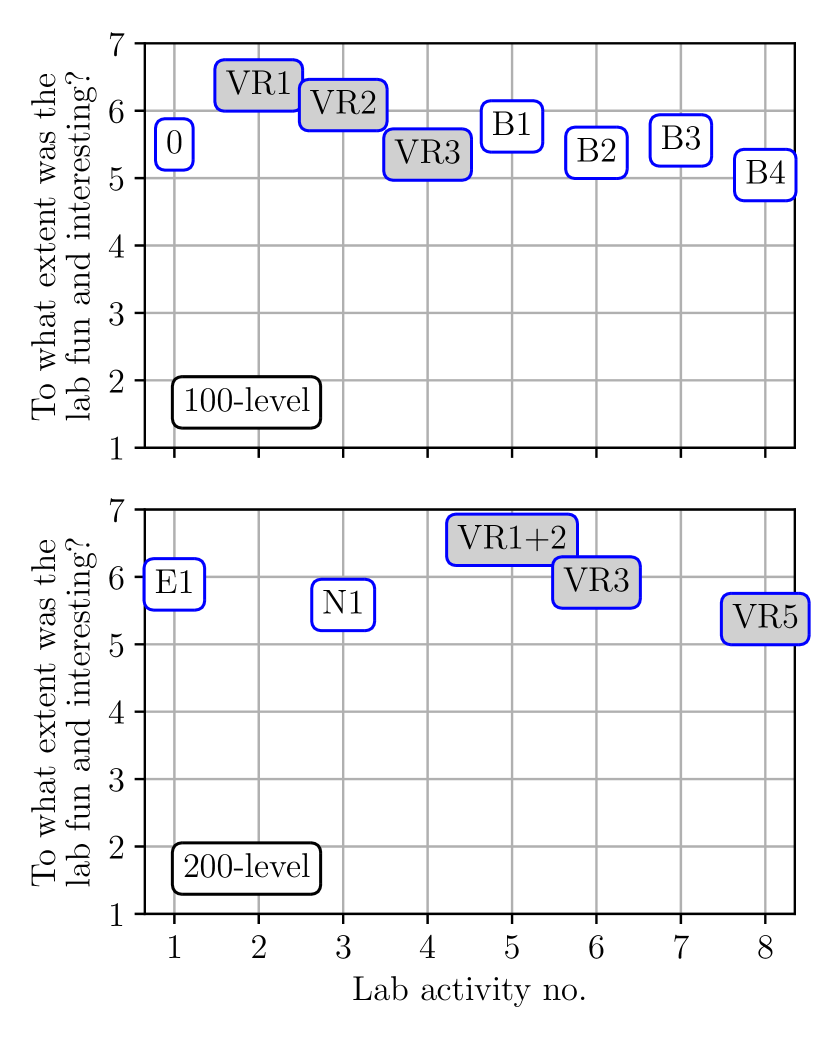

We also make use of F7 (“…fun and interesting?”) to characterize the effect of the novelty of VR on students’ experience. Weekly measures of this item allow for comparison across lab activities, e.g., comparing hands-on labs to VR labs.

III Methods

III.1 Structure of NOMR labs

The intervention examined in this study uses VR labs to allow students to experience and analyze physical laws in the context of particle interactions that do not exist in nature or on the Internet. We include the constraint that they be consistent with our universe’s physics so that students can rely on their extant physics knowledge when reasoning in the VR space. These fictitious physical laws can be construed as hypothetical mathematical variations on Coulomb’s Law. A selection of fictitious phenomena is described in more detail in [17].

The virtual apparatus is designed such that it does not give perfect answers; experimental uncertainty is still very much present even in the simulation. The “right answers” programmed into the simulation are never shared with students or their TAs, such that the only “right” answer is the one that students can make the best case for.

In the virtual lab space, students can access force and distance measurement tools and a supply of particles (modeled as hard spheres) exhibiting the behavior(s) they are investigating. These particles can be moved around the space freely, as well as be fixed in place individually. To facilitate creating static arrangements of particles, physics can be temporarily paused in the entire space. As there is no copy of the lab manual nor any means by which to record data while in the headset, the operator relies on their group’s interaction and record-keeping. Each group of 3-4 students shares one VR headset, with its display mirrored onto a lab computer.

Multiple instructional practices are in place to combat gendered task division common to inquiry-based physics labs [35, 36]. All groups complete a teamwork agreement at the beginning of the term outlining expectations and norms for their interactions in and out of class. The NOMR lab manuals prompt students to take turns using the headset at multiple junctures. Students are encouraged to have each member of the group use the headset to collect data, as a means of obtaining multiple measures from which to determine a central value and uncertainty for each of their measurements.
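One common way to turn several group members’ readings into a central value and uncertainty is the mean with its standard error. The sketch below assumes that estimator; the lab manuals may ask for a different one, and the function name and readings are hypothetical.

```python
import math
import statistics


def central_value_and_uncertainty(measurements):
    """Return (mean, standard error of the mean) for repeated measurements."""
    if len(measurements) < 2:
        raise ValueError("need at least two measurements")
    mean = statistics.mean(measurements)
    # Sample standard deviation divided by sqrt(N) gives the standard error.
    std_error = statistics.stdev(measurements) / math.sqrt(len(measurements))
    return mean, std_error


# HYPOTHETICAL force readings (arbitrary units), one per group member
readings = [2.1, 1.9, 2.0, 2.2]
value, uncertainty = central_value_and_uncertainty(readings)
```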

The NOMR labs described in this study are used in two instructional contexts: in introductory calculus-based physics, and in a sophomore-level lab for applied physics majors. The first lab encountered, called Charge and Mint, consists of two activities. Introductory students complete these components as two separate labs (VR1 and VR2; see full lab titles and schedule in Table 3), and students in the advanced course complete both components in a single lab session (VR1+2).

First, groups design and conduct an experiment to test whether virtual analogues of electrically charged particles follow some re-scaled version of Coulomb’s law. This lab serves to familiarize students with the VR environment and doubles as an opportunity to teach (or review) data linearization.
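Data linearization for an inverse-square test can be illustrated by fitting a power law $F = k r^{n}$ on logarithmic axes, where a fitted exponent near $-2$ would be consistent with a re-scaled Coulomb’s law. The sketch below is one way to do this with an ordinary least-squares line; the function name `fit_power_law` is hypothetical, and the data are synthetic values generated for illustration rather than measurements from NOMR.

```python
import math


def fit_power_law(distances, forces):
    """Least-squares fit of log F = log k + n * log r; returns (k, n).

    Expects positive distances and positive force magnitudes.
    """
    xs = [math.log(r) for r in distances]
    ys = [math.log(f) for f in forces]
    count = len(xs)
    mean_x = sum(xs) / count
    mean_y = sum(ys) / count
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    exponent = sxy / sxx  # slope of the straight line on log-log axes
    prefactor = math.exp(mean_y - exponent * mean_x)
    return prefactor, exponent


# SYNTHETIC data generated from F = 3 / r^2 (arbitrary units), not from NOMR
distances = [0.5, 1.0, 1.5, 2.0, 3.0]
forces = [3.0 / r ** 2 for r in distances]
k, n = fit_power_law(distances, forces)
```

With real measurements the fitted exponent carries an uncertainty, so the comparison with $-2$ is a judgment about consistency rather than an exact match.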

Second, they take qualitative and quantitative data to create an empirical model for the interaction between fictitious minty particles, which behave according to an unknown force law. That force law is not included here in an effort to keep it out of print; instead, we present a handful of observations students might make, and leave the specifics of the model to the reader’s imagination:

- Minty particles repel when they are near each other, and attract when they are far away. A turnaround point where the force is zero exists at a certain separation between particles.

- If two minty particles are brought as close as possible to one another and released from rest, they appear to undergo oscillatory motion. Considering the full range of distances achieved in this motion, the range of distances for which the force between the particles is repulsive seems to be shorter than the range of distances for which it is attractive.

- Beyond the repulsive region, no matter how far apart two minty particles are moved, they continue to exert upon each other a substantial attractive force which increases with distance.

Charge and Mint is used as a preparatory lab to get students familiar with the virtual learning environment and comfortable with the idea of developing a mathematical model for a completely unknown phenomenon. Once the preparatory lab is complete, students are given a new, more complex phenomenon to explore and model, without any phenomenon-specific scaffolding. This final lab takes two forms: the one-week Exotic Matter Lab for introductory students (lab VR3), and the three-week Manifold Lab (VR3-VR5) for advanced students.

The Exotic Matter Lab’s content is identical to the first week of the Manifold Lab: Every group is assigned a different set of fictitious phenomena (referred to as a scenario). This phase allows for differentiated instruction as the TA assigns scenarios at the start of class based on their impressions of each group’s strengths and weaknesses and the nature of the challenge each scenario poses. Each scenario contains up to three distinct types of particles, all visually identical on creation, with the type chosen at random whenever the user creates a new particle. The phenomena underpinning each scenario, and the subject of students’ inquiry, are the force laws governing the interactions between the particles. In most cases, a single force law dictates the interaction between each pair of particles, though students may develop different valid interpretations supported by their data. The force laws programmed into NOMR are never shared with students or TAs.

In this model-generating experiment, students are told that they have at most three distinct types of particles in their scenario and given tools to label particles and temporarily remove particles from play. Their goals are to determine how many types of particles they have, develop a procedure for identifying an unknown particle, and come up with a testable empirical model describing some subset of the behaviors they observe. Students write up their findings in a full lab report. For introductory students, this model-generating experiment report marks the end of their foray into VR.

Advanced students working through the Manifold Lab instead submit reports describing their model-generating experiments and resulting models to a class-wide repository. During class in the second week, each group selects another group’s report describing a model of a scenario they had not yet interacted with themselves. They write and submit a proposal before the third week of lab, describing an experiment to test the other group’s model. These experiments are carried out in the third week, and their results presented in an oral talk symposium in the fourth week.

The Manifold Lab is presented to students with a game-like narrative in which they function as research scientists. They explore different “pocket” universes with novel forms of matter obeying fundamental force laws unknown to our universe. The privilege to conduct the second experiment with better equipment depends on applying (non-competitively) for grant funds: Before performing the second experiment, students write a single-page “grant proposal” in which they summarize another group’s findings, propose an experiment to test their model, and request additional or improved equipment within the VR lab. The instructor serves as an entity equivalent to the NSF and its reviewers: They review students’ grant proposals for feasibility and work with each group to revise proposed experiments such that it is likely that each will produce a clear outcome that builds on the prior group’s findings. Each group receives a few credits to spend on equipment, e.g. more precise measurement tools, a larger workspace, tools that snap to more convenient configurations, or the ability to automatically pause physics after a set amount of time. Occasionally, a clever idea from a group of students inspires the development of a new tool in NOMR, which is added to the upgrade options going forward.

This design seeks to emulate the experience of working within a professional scientific collaboration: The class as a whole collaborates by sharing data and designing experiments to test and revise each other’s models. In doing so, students complete an entire cycle of the ISLE process: One group creates a model through a model-generating experiment, another tests it with a testing experiment, and those results serve to reject, revise, or further substantiate the model.

III.2 Instructional Context

The study activities took place at the University of Washington in Seattle (UW), a large R1 public research university in the Pacific Northwest. Of the population of students enrolled in the courses examined in this study, 65% identify as male, and 35% as female (non-binary gender identities are not reflected in UW records, and we did not solicit this information from students separately). White (41%) and Asian (36%) students make up the majority of the population, followed by students who identify with two or more races (8.7%), Hispanic or Latino,a,e students (7.6%), and Black students (2.8%).

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/02f5ab26-7444-43a6-85ba-4708cb9f53ed/cz_timeline.png)

Our data come from two physics courses at UW during Fall 2022. All instruction was held in person except in the event a student couldn’t attend a lab due to illness, in which case their lab partners brought them in via video call, when possible. In both courses, groups of 3-4 students worked together for the entire quarter. Each class’s lab curriculum schedule is shown in Table 3.

- 100-level population

NOMR was implemented during calculus-based electromagnetism, the second of a three-quarter introductory physics sequence. 467 students enrolled in Fall 2022, and 380 students consented to participate in the study. We refer to the consenting students as the 100-level population hereafter. This course consisted largely of engineering (74%) and science (17%) students filling prerequisites for their major. Students met weekly for three 1-hour lecture sections, a 1-hour tutorial section, and a 2-hour lab section.

- 200-level population

Introduction to Experimental Physics used NOMR as well. This course enrolled 38 students, mostly applied physics majors (55%) who typically intend to follow an industry-oriented path after graduation, alongside other physics and astronomy majors (21%), pre-science majors (13%), computer science majors with physics minors (8%), and one math major. All 38 students consented to participate in the study; we refer to them as the 200-level population hereafter. Students met weekly for a 1.5-hour lecture section and 3-hour lab section. Eight members of the 200-level population had previously seen NOMR labs in the modern 100-level labs; all other 200-level students had taken traditional or online (due to COVID) 100-level labs, without VR.

III.2.1 Lab activities

All lab activities in the 100- and 200-level courses are designed in alignment with the ISLE approach. We say “in alignment” because a full implementation of ISLE requires integration of the ISLE process across all components of a course (lecture, lab, etc.), which is not the case at UW.

Every lab activity can be categorized as a hypothesis generating, hypothesis testing, or application experiment (as in Table 3), excluding weeks in the 200-level course dedicated specifically to writing and communication. Students’ work is guided and assessed with the ISLE scientific abilities rubrics [7].

The first four weeks of the 100-level labs (labs 0, VR1-VR3) focus on particle interactions. Lab 0 is a qualitative testing experiment on Coulomb’s law. Students test the effects of charge and separation on the electric force between a copper sphere and a Teflon rod. This is the simplest lab of the quarter, and deliberately so. It is the first lab of the quarter, when students are still joining the course and switching between sections. The following three weeks (labs VR1-VR3) are NOMR labs, as described in section III.1.

The remaining four weeks of labs are traditional hands-on labs exploring circuits:

- B1

Students begin their exploration of circuits with a model-generating experiment seeking to develop a model to describe the behavior of battery-bulb circuits in series, parallel, and mixed configurations.

- B2

Models generated in the prior week are tested against a mixed-configuration circuit. Students create predictions for the current and voltage through each element of the circuit based on their model from the prior week, build the circuit, collect data, and compare the results to their predictions. Where there is disagreement, students revisit and revise their model.

- B3

Capacitors are introduced. Students perform a model-generating experiment to develop a mathematical model for the voltage across a charging capacitor.

- B4

Students are given a resistor of unknown value and a model of voltage across a discharging capacitor. Using this model, students perform an application experiment to determine the value of the resistor, with uncertainty, by manipulating the model to give the resistance in terms of the slope of a linearized plot and the capacitance of the capacitor.
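The linearization step in lab B4 can be sketched in code. This is a minimal illustration, assuming the supplied model is the standard exponential discharge $V(t) = V_0 e^{-t/RC}$, so that $\ln V$ plotted against $t$ is a line with slope $-1/(RC)$ and $R = -1/(\text{slope} \cdot C)$. The function names and the synthetic data are hypothetical, and uncertainty propagation is omitted for brevity.

```python
import math

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for y = m*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def resistance_from_discharge(times, voltages, capacitance):
    """Estimate R from discharge data, assuming V(t) = V0 * exp(-t / (R*C)).

    Taking the log linearizes the model: ln V = ln V0 - t / (R*C),
    so the fitted slope equals -1 / (R*C).
    """
    slope, _ = fit_line(times, [math.log(v) for v in voltages])
    return -1.0 / (slope * capacitance)

# Synthetic, noise-free data (hypothetical values): R = 1000 ohm, C = 1 mF.
true_r, cap, v0 = 1000.0, 1.0e-3, 5.0
times = [0.0, 1.0, 2.0, 3.0, 4.0]
voltages = [v0 * math.exp(-t / (true_r * cap)) for t in times]
estimated_r = resistance_from_discharge(times, voltages, cap)
```

With real measurements the scatter about the fitted line would feed into the uncertainty on the slope, and from there, together with the uncertainty on the capacitance, into the uncertainty on the resistance.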

The 200-level course opens with the electron beam lab (E1-E2). Each group is given an electron beam apparatus (commonly called an “e/m apparatus”) that fires electrons across a user-specified voltage into a helium-filled bulb subject to an approximately uniform magnetic field generated by Helmholtz coils outside the bulb. Students are asked to devise and answer a scientific question with the apparatus. Most often, this ends up being a testing experiment based on students’ knowledge of electrons’ motion in a magnetic field. Occasionally, it turns into a model-generating experiment if a group does not recall this model.

The subsequent nuclear decay lab (N1-N2) works in similar fashion: Students are given an apparatus, instructed in its operation, and are set loose to devise and answer a scientific question of their choosing. In this case, the apparatus is a radioactive Cs source, a Geiger-Muller tube with event counting hardware and software, a box of barriers of various material and thickness, and a stand for all of the above with slots in which to place the source and barriers.

The rest of the 200-level labs are NOMR labs documented in section III.1: Charge and Mint (VR1+2) and the Manifold Lab (VR3-VR6).

III.3 Data Collection

III.3.1 Lab Epistemology Survey and Physics Identity Survey

The LES and PhIS were administered as part of the same survey in all cases.

100-level students completed the pre-survey in Week 2, after Lab 0, which was a traditional hands-on lab, and before VR1, the first NOMR lab. The survey was included as part of a timed quiz, and we recognize the time constraint may have influenced students’ responses. The post-survey was included as part of an untimed reflection on the performance of their group. It was administered in Week 4 after the conclusion of VR3, the final NOMR lab for 100-level students. Therefore, the pre-post shifts reported here reflect changes in 100-level students’ responses before and after only the NOMR labs.

200-level students completed the pre-survey in Week 1 before the start of classes, and completed the post-survey in Week 10, after their final presentations. The pre-post shifts reported here reflect changes in students’ responses before and after all 9 weeks of labs, including non-NOMR activities.

III.3.2 Flow Survey

The FS was administered every week at the end of lab to both populations. We offered a small amount of extra credit for each week the survey was completed, and emphasized that the surveys would help improve the lab curriculum in future terms. In the 100-level population, 42 students completed all eight surveys; in the 200-level population, 14 students completed all eight surveys. The findings reflect responses only from these students who completed all eight surveys.

IV Findings

IV.1 RQ1: What changes are observed in students’ epistemology about experimental physics as a result of the NOMR labs?

Responses to LES1 and LES2 were coded independently by the first author and another researcher. The researchers met to reconcile disagreements after the first coding pass, with a disagreement rate of roughly for LES1 and for LES2. After further expanding on the existing code definitions, adding examples, and adjusting the codes as described in Section II.2.1, we reached inter-rater agreement across all codes.

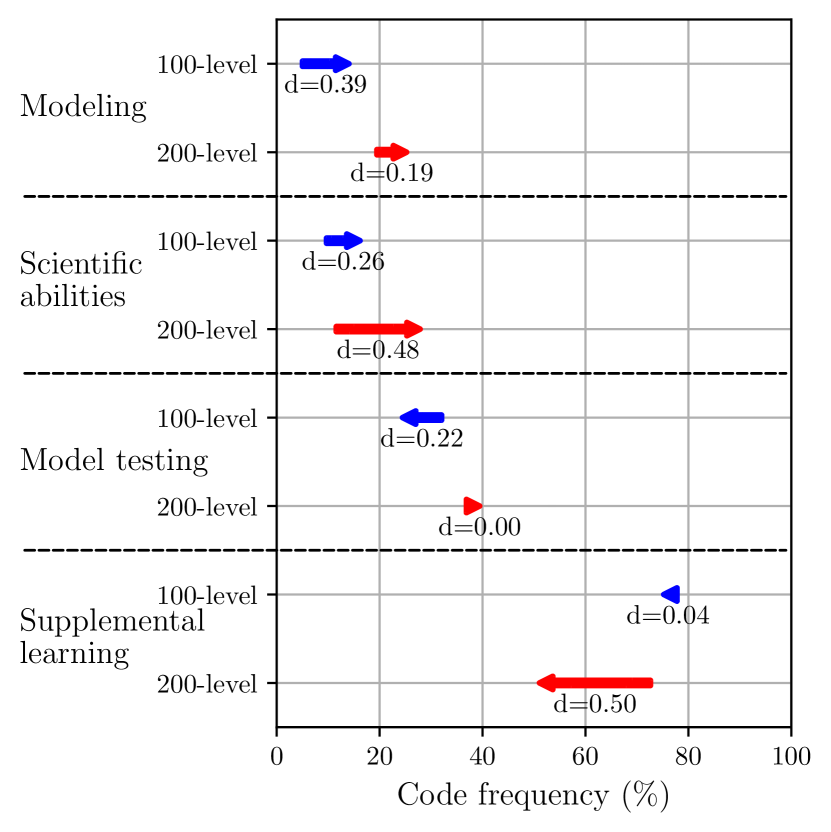

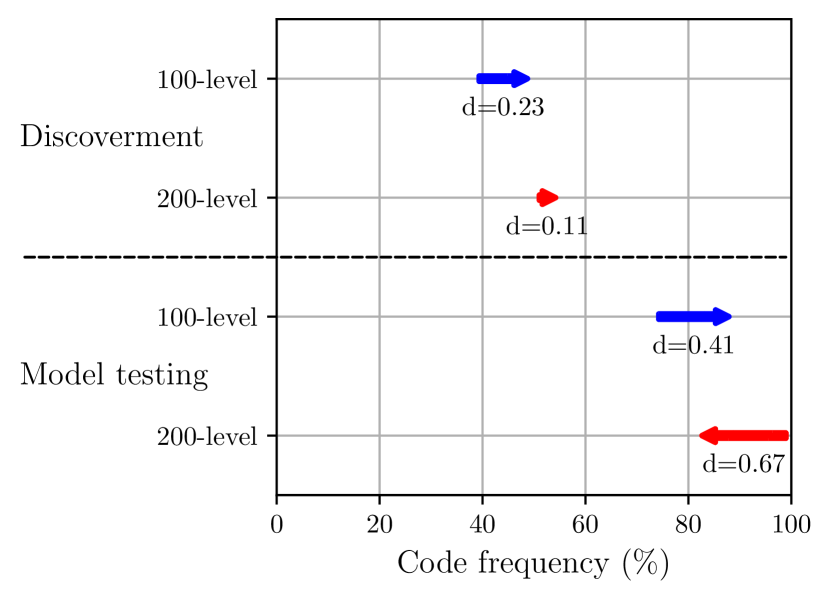

The LES findings are presented as code frequencies: the fraction of responses in a population that were assigned each code. Figures 3 and 4 depict the shift from pre to post for each population-item. Each student response could be assigned no code, one code, or more than one of the codes associated with the item. As most responses were assigned one or more codes, the total number of codes is greater than the number of responses.

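Cohen’s $d$ is presented for each shift, calculated from pre and post code frequencies $p_{\text{pre}}$ and $p_{\text{post}}$:

$$d = \frac{p_{\text{post}} - p_{\text{pre}}}{\sqrt{\tfrac{1}{2}\left[p_{\text{pre}}(1 - p_{\text{pre}}) + p_{\text{post}}(1 - p_{\text{post}})\right]}} \tag{1}$$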

Whether a code is or is not assigned to a given response is a binary variable, so the binary standard deviation is used.
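As a rough illustration of this effect-size calculation, the sketch below computes Cohen’s $d$ for a shift in a binary code frequency. It assumes the binary standard deviation is $\sqrt{p(1-p)}$ and that the pre and post values are pooled with equal weight; the pooling rule, the function names, and the example frequencies are assumptions made for illustration, not values from the study.

```python
import math

def binary_sd(p: float) -> float:
    """Standard deviation of a binary (0/1) variable whose mean is p."""
    return math.sqrt(p * (1.0 - p))

def cohens_d_binary(p_pre: float, p_post: float) -> float:
    """Cohen's d for a change in code frequency.

    Assumption: the pooled SD is the root mean square of the pre and post
    binary SDs (equal weighting of the two surveys).
    """
    pooled = math.sqrt((binary_sd(p_pre) ** 2 + binary_sd(p_post) ** 2) / 2.0)
    if pooled == 0.0:
        raise ValueError("pooled SD is zero; d is undefined")
    return (p_post - p_pre) / pooled

# Hypothetical example: a code assigned to 20% of pre responses
# and 50% of post responses.
d_example = cohens_d_binary(0.20, 0.50)
```

A positive $d$ means the code became more frequent after the intervention; a negative $d$ means it became less frequent.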

IV.1.1 LES1: Why are experiments a common part of physics classes?

Responses to LES1 were assigned up to four codes, defined with examples in Table 2 and reproduced below:

- Modeling

Experiments in class let students develop their own models for phenomena, discover things on their own, and/or develop their own ideas.

- Scientific abilities

Experiments help cultivate students’ scientific abilities, such as experimental design, data collection, and data analysis skills.

- Model testing

The purpose of doing physics experiments is to prove, support, or test a model.

- Supplemental learning

Experiments provide supplemental learning experiences for concepts and theories.

We added the Modeling code in response to the recurring presence of its ideas in our dataset and its relevance to our research questions. It captures the creation of new models that results from model-generating experiments in the ISLE process, while Model testing represents the subsequent model-testing function of a testing experiment.

Example responses that were assigned each code are given in Table 2. A sample response to LES1 that was assigned multiple codes follows:

“Experiments allow us to challenge what we know while apply what we have learned. Its a new way of learning things–a more hands-on approach. We can learn about the scientific models and how experiments are designed to either explain a new phenomena or test a pre-existing model.”

This response is assigned Modeling for the phrase “We can learn…how experiments are designed to either explain a new phenomena…” and Model Testing for the last part of that sentence: “…or test a pre-existing model.” Supplemental learning is present in a couple of places: “We can learn about the scientific models…” and “Its [sic] a new way of learning things–a more hands-on approach.” Finally, the clause “…how experiments are designed…” merits the Scientific abilities code.

Both populations’ code frequencies are plotted in Figure 3. Comparing pre-post results, we find that the students in our study became more likely to indicate a belief that labs are meant to develop their Scientific abilities and give them opportunities to develop their own models (Modeling). The 100-level population became less likely to cite Model testing as a purpose of in-class experiments, while the 200-level population became dramatically less likely to cite Supplemental learning for the same.

IV.1.2 LES2: Why do scientists do experiments in professional research?

Responses to LES2 were tagged with up to two codes, defined with examples in Table 2 and reproduced below:

- Discoverment

Experiments contribute to some aspect of the iterative and generative nature of the scientific process aside from testing an existing model.

- Model testing

The purpose of doing physics experiments is to prove, support, or test a model.

LES2’s code frequencies are plotted in Figure 4. Both populations acknowledged the iterative and generative elements of the scientific process (Discoverment) more frequently after NOMR labs than at the beginning of the course. The 100-level population’s Discoverment code frequency increased by a significant degree; the 200-level population’s frequency started higher and saw a smaller increase. The 200-level change is small enough that it is not statistically significant. Roughly 20% of the 200-level students had also experienced NOMR labs at the 100-level in a prior course.

The 100-level population was assigned Model testing codes more frequently after NOMR labs than at the beginning of the course, jumping from 73% to 89%. The 200-level population’s code frequencies saw a significant decrease in Model testing, moving from 100% to 82% of matched responses.

IV.2 RQ2: What changes are observed in students’ physics self-efficacy in experimental physics as a result of the NOMR labs?

IV.2.1 Statistical Analysis

We assess pre-post shifts in students’ responses to the Likert items comprising the PhIS by calculating $p$-values using the Wilcoxon signed-rank test [37]. We chose the Wilcoxon test because it is a nonparametric test and thus makes no assumptions about whether the dataset is normally distributed. We opted against the Mann-Whitney U test because it is intended for independent samples, whereas we are interested in testing for differences within paired samples.

We report the common-language effect size, computed as the ratio of the Wilcoxon test statistic (taken here as the sum of the ranks of the positive pre-to-post changes) to the total rank sum. In general terms, this effect size tells us what fraction of students reported a higher score in the post-survey than in the pre-survey. However, this metric does not account for ties, where a student gives the same response for an item in the pre- and post-surveys; ties are ignored in the calculation of the effect size. The common-language effect size can range from 0 to 1 for a given item, where 0 indicates that all respondents gave an equal or lower score to the item on the post-survey than on the pre-survey, 0.5 indicates that as many respondents reported a higher score as reported a lower one, and 1 indicates that every respondent’s post-survey score was equal to or higher than their pre-survey score.
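The following sketch shows one way to compute a common-language effect size of this kind from paired Likert responses. It assumes the effect size is the sum of the ranks of positive changes divided by the total rank sum, with pre-post ties dropped and tied absolute differences sharing an average rank; the function names and the example scores are hypothetical.

```python
def signed_rank_sums(pre, post):
    """Return (W_plus, W_minus) for paired ordinal scores.

    Pairs with no change are dropped. Tied absolute differences share
    the average of the ranks they span.
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    ordered = sorted(diffs, key=abs)
    ranks = [0.0] * len(ordered)
    i = 0
    while i < len(ordered):
        j = i
        while j < len(ordered) and abs(ordered[j]) == abs(ordered[i]):
            j += 1
        avg_rank = (i + 1 + j) / 2.0  # average of ranks i+1 .. j
        for k in range(i, j):
            ranks[k] = avg_rank
        i = j
    w_plus = sum(r for d, r in zip(ordered, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(ordered, ranks) if d < 0)
    return w_plus, w_minus

def common_language_effect_size(pre, post):
    """Positive rank sum divided by total rank sum (ties excluded)."""
    w_plus, w_minus = signed_rank_sums(pre, post)
    total = w_plus + w_minus
    if total == 0:
        raise ValueError("every pair is tied; effect size is undefined")
    return w_plus / total

# Hypothetical scores for five students on one item.
pre_scores = [3, 3, 4, 2, 5]
post_scores = [4, 3, 5, 4, 4]
cl_mixed = common_language_effect_size(pre_scores, post_scores)

# Every changed score increased, so the effect size is 1.
cl_all_up = common_language_effect_size([2, 3, 3], [3, 3, 5])
```

Because the ratio is weighted by rank, a large change counts for more than a small one, which is why the "fraction of students" reading is only approximate.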

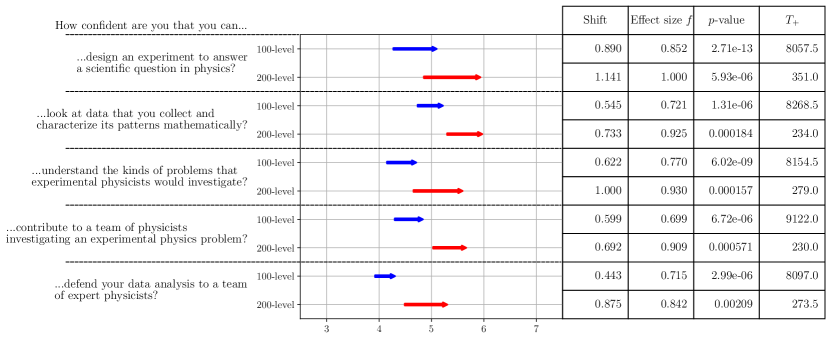

IV.2.2 Self-efficacy

As shown in Figure 5, positive shifts are observed for both populations in all self-efficacy items. The figure shows interpolated medians for each item before and after the intervention; each arrow’s tail represents the pre-intervention median, and each head the post-intervention median. Thus, the length of each arrow represents the pre-post change. The 100-level and 200-level populations’ data are shown in blue and red, respectively. The effect sizes vary, but there is a positive shift for every self-efficacy item at a 99.7% confidence level or better ($p \leq 0.003$) in both populations.

Comparing the size of the populations’ arrows for each item, we note that the shift in the 200-level students’ responses is consistently greater than that of the 100-level students, by roughly 50% on average. This magnified effect is observed despite the 200-level pre-data medians being consistently higher than those of the 100-level students, leaving less room for improvement.

Every individual 200-level response to the item “How confident are you that you can design an experiment to answer a scientific question in physics?” either did not change or became more expertlike. This is a useful example for understanding the common-language effect size. Of all the students whose scores changed, 100% of them increased. Therefore, the common-language effect size for this item is 1.

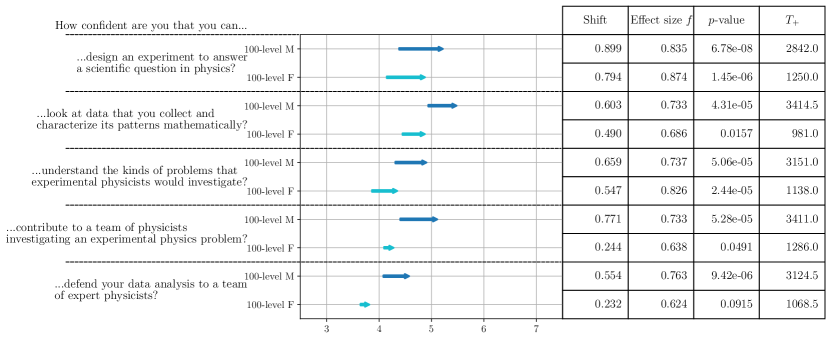

The PhIS results are unique among our dataset in that there are notable differences between responses given by male- and female-identifying students, as shown in Figure 6. While there are positive shifts for all items for both genders, female-identifying students consistently started lower on each item and saw a smaller increase. Female-identifying students’ increases on the last two items are notably small; the shift in responses to PhIS4 barely meets the traditional significance threshold ($p < 0.05$), and the shift for PhIS5 does not.

Physics identity item PhIS5 (“I see myself as a physics person.”) has featured prominently in prior research [24]. We observed very small shifts in both populations, neither of them statistically significant.

IV.3 RQ3: To what extent are students productively engaged in the NOMR activities, and how does that engagement compare with the hands-on labs in the same course?

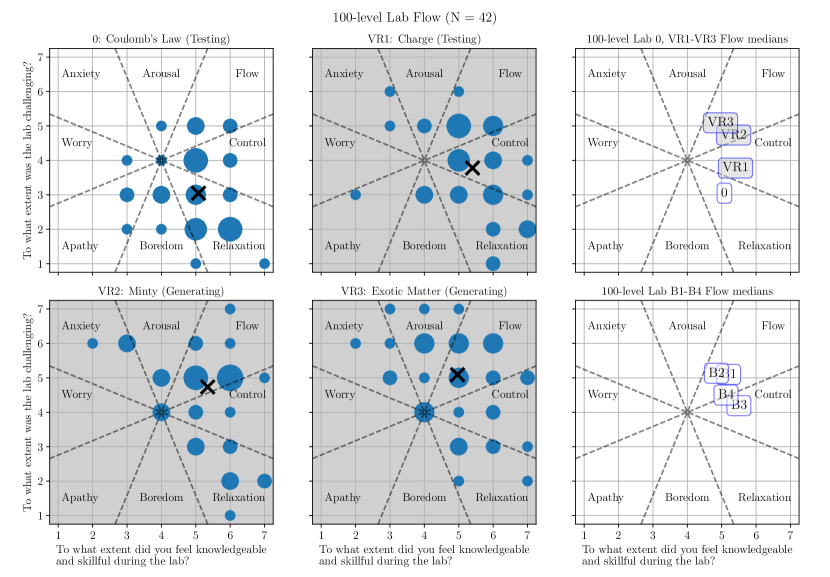

Following the analysis methods of Karelina et al. [29] described in Section II.2.3, we produced flow plots for each week of each population’s lab activities. The number of students who responded with each (skill/knowledge, challenge) pair is indicated by the size of the dot at that point; the area of each dot is proportional to the number of students it represents. For example, suppose 4 students responded that the lab was extremely challenging and that they felt only moderately skillful, and 16 students responded that the lab was a significant challenge that they felt prepared to tackle. Because area scales with the number of respondents, the dot for the second group would have four times the area, and therefore twice the radius, of the dot for the first group. The absence of a dot indicates that zero students gave the associated response.
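The dot-sizing rule can be made concrete with a short sketch. It assumes only that dot area is proportional to the number of respondents, so the radius grows as the square root of the count. The function name, the `unit_radius` parameter, and the numeric (skill, challenge) coordinates are hypothetical placeholders, since the survey scale is not restated here.

```python
import math
from collections import Counter

def dot_radii(responses, unit_radius=1.0):
    """Map each (skill, challenge) pair to a dot radius.

    Area is proportional to the number of respondents, so the radius is
    unit_radius * sqrt(count). Pairs nobody chose get no entry (no dot).
    """
    counts = Counter(responses)
    return {pair: unit_radius * math.sqrt(n) for pair, n in counts.items()}

# Hypothetical coordinates: 4 students at one point, 16 at another.
responses = [(3, 5)] * 4 + [(4, 4)] * 16
radii = dot_radii(responses)
```

Here the group of 16 gets a dot with twice the radius of the group of 4, matching a fourfold difference in area.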

For our analysis, we introduce a quantity to help characterize the quality of student engagement through the lens of flow across an entire class. We calculate the interpolated median of these data along each dimension of the flow plot (skill/knowledge on the $x$-axis, challenge on the $y$-axis) and plot the point defined by these medians. As a metric for the aggregate engagement state of the class, the closer to the top-right corner this 2-D median is, the more effective the lab was at inducing productive engagement. We use the interpolated median rather than the mean, as it better captures the distribution of these ordinal data.
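A minimal sketch of the 2-D interpolated median follows. It assumes the common textbook definition of the interpolated median for evenly spaced ordinal categories, in which the median category is treated as an interval of one category width and the median is placed within it by linear interpolation. The function names and the sample responses are hypothetical.

```python
from collections import Counter

def interpolated_median(values, width=1.0):
    """Interpolated median of ordinal responses on evenly spaced categories."""
    if not values:
        raise ValueError("no responses")
    ordered = sorted(values)
    n = len(ordered)
    # Median category; for even n the lower middle value is used so the
    # result is always an actual response category.
    m = ordered[(n - 1) // 2]
    counts = Counter(ordered)
    below = sum(c for v, c in counts.items() if v < m)
    at = counts[m]
    lower_bound = m - width / 2.0
    # Interpolate within the median category's interval.
    return lower_bound + width * (n / 2.0 - below) / at

def flow_median_point(skill, challenge):
    """2-D median: (interpolated median skill, interpolated median challenge)."""
    return interpolated_median(skill), interpolated_median(challenge)

# Hypothetical responses from six students on a 1-5 scale.
skill = [3, 3, 4, 4, 4, 5]
challenge = [1, 2, 3, 3, 3, 4]
point = flow_median_point(skill, challenge)
```

Unlike the ordinary median, this value moves smoothly as responses shift between neighbouring categories, which makes week-to-week migration of the class visible on the plot.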

One can produce error bars for the interpolated medians by taking the standard error along each dimension. We found this to consistently produce error bars the same size or smaller than the marker, so we have omitted them.

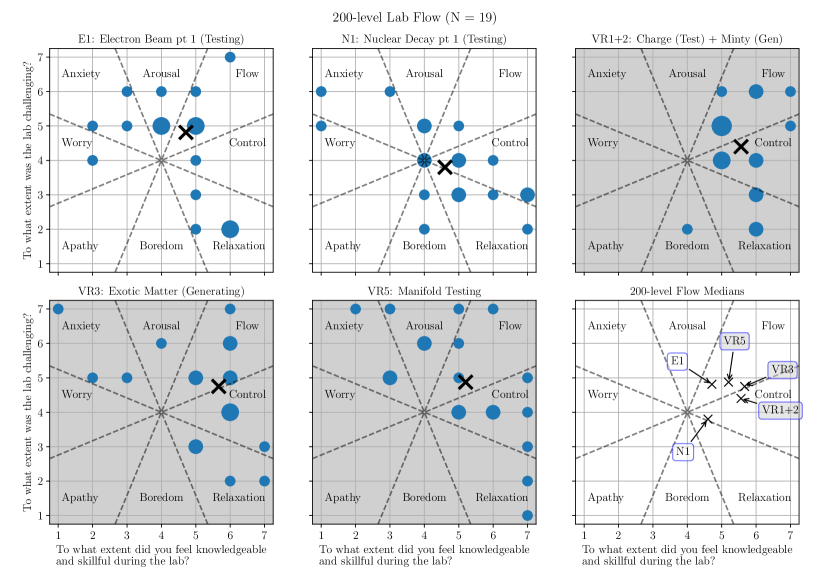

The 100-level population’s flow data from labs 0-VR3 (the first half of the course) are shown in Figure 7 alongside summaries of the 2D medians for labs 0-VR3 and B1-B4. The 200-level population’s flow data are plotted and their medians summarized in Figure 8.

We note a few key findings from the flow plots of 100-level students (Figure 7):

1. In both 100-level model-generating VR labs (VR2 and VR3), zero respondents reported Boredom, Worry, or Apathy. This is not the case in any other 100-level labs.

2. There is gradual migration out of Relaxation from labs 0-VR3.

3. The especially high-challenge, high-skill point in the Flow channel had zero respondents in the first two labs 0 and VR1, one respondent in lab VR2, and several respondents in lab VR3.

Looking at the migration in the 2D medians of the 100-level students’ responses to labs 0-VR3 (Figure 7, top right), we see a trend consistent with the design of the curriculum: it starts out easy and becomes more difficult by the week (upward movement), but students report feeling equipped to handle the increased difficulty (remain on the right side). Lab VR3, the third and final week of NOMR lab activities, had the median closest to the top-right of the plot out of all of the VR labs.

The rightmost column of Figure 7 lets us compare the 2-D medians of the NOMR labs to the ISLE-based hands-on circuit labs B1-B4, shown on the top-right and bottom-right of the figure, respectively. The students complete all A labs before embarking on the B labs, which comprise the latter half of the course. We note that lab VR3 and the first two weeks of circuit labs (B1 and B2) all achieved similar states of productive engagement, landing near the diagonal in the Flow channel.

In the 200-level population, students completed every survey over the course of the quarter; the flow plots and aggregated medians are shown in Figure 8. Lab activities focusing on communication and writing with no new experimentation (Table 3) are omitted from the flow plots. As with the 100-level population, the VR labs’ medians follow a path up and to the left over time, starting in Control and landing solidly in Flow. The median corresponding to the final VR lab (VR5) is farthest along the diagonal bisecting the Flow channel out of all the labs. We note that only 3 student responses for VR5 are actually in the Flow region, with the majority sitting in Control and Arousal and a few in Anxiety and Relaxation. The Charge and Mint lab (VR1+2) and Exotic Matter lab (VR3) had the most individual responses in the Flow region at 7 out of 19.

Figure 9 shows both populations’ responses to F7 (“To what extent was the lab fun and interesting?”) for each lab activity. At the 100-level, students’ responses are very high for VR1, remain elevated for VR2, and their responses for VR3 are indistinguishable from any other activity.

We see a similar pattern in the 200-level students’ responses to the three VR labs in their curriculum: The Charge and Mint lab (VR1+2) was seen as the most fun, followed by the subsequent Manifold lab part A (VR3) with a half-point lower median, and the final Manifold lab part C (VR5) landed between the hands-on labs.

Both populations reported the highest flow state in the last week of NOMR activities. The last NOMR lab for 100-level students was a model-generating experiment (VR3). For 200-level students, it was a test of models generated in a prior experiment (VR5).

V Discussion

V.1 RQ1: What changes are observed in students’ epistemology about experimental physics as a result of the NOMR labs?

V.1.1 LES1: Why are experiments a common part of physics classes?

The data show (Figure 3) that for both populations in our study, the frequency of each code underwent a shift consistent with growth toward an expertlike understanding of the role of experimentation in physics classes. Both populations started with the Modeling code occurring much less frequently than Model testing. After the intervention, both received the Modeling code more frequently and the 100-level population received Model testing less frequently than when they started. In particular, the 200-level population closed the gap entirely: Their post-survey frequencies for Modeling and Model testing had equal values at the end of the quarter. Movement toward equal emphasis on these two codes is considered expertlike as it is in alignment with the epistemological basis of the ISLE approach, itself grounded in the real-world practice of physics.

Supplemental learning came up much more frequently than Scientific abilities in pre- and post-surveys in both populations. However, both populations narrowed that gap: Both populations reported Scientific abilities more often and the 200-level population reported Supplemental learning less often than when they began, which are shifts to more expertlike belief.

The small 100-level Supplemental learning shift is a little surprising in the context of the model-generating NOMR labs, in which students are coming up with models for completely fictitious phenomena in a clear and deliberate departure from lecture content. Supplemental learning is not a goal of those labs. It could be that the high and unaffected Supplemental learning code frequency is due to students’ long history of traditional science labs where supplemental learning is indeed the primary goal; years of conditioning are not readily overcome by a 3-4 week intervention.

In sum, the LES code frequencies in both populations reveal that the students’ beliefs are changed by the NOMR labs in significant ways. Regarding experimentation as part of their course-taking, they are less likely to consider classroom laboratory activities to be a supplement to the theory they learn in lecture (primarily in the form of testing theories they have already learned), and more likely to see them as an opportunity to gain new knowledge in the form of developing scientific abilities and developing scientific models.

V.1.2 LES2: Why do scientists do experiments in professional research?

We compare our Discoverment code frequencies to the original study’s findings by adding together each of their populations’ Model Development and Discovery code frequencies. We expect that a subset of responses in each population received both codes, so these combined Discoverment code frequencies are likely over-estimates.

We would expect a collection of expert responses to LES2 to receive both Discoverment and Model testing codes at a fairly high frequency. The original study’s PhD student responses merited Discoverment at 65% frequency and Model testing at 90% [21]. These were the greatest and smallest frequencies among all populations in the original study, respectively. PhD students were the most experienced population in the original study, suggesting that expertlike change would manifest as an increase in the Discoverment code frequency and a decrease in the Model testing code frequency.

The increase in Discoverment frequencies in both UW populations suggests growth toward an expertlike understanding of the multi-faceted role of experimentation in scientific research. On account of the low starting point for both populations, we suggest that any increase can be interpreted as movement toward expertlike beliefs.

The Model testing shifts are more ambiguous: the increase in 100-level responses is opposite to what we would consider an expertlike change. However, that increase brings the 100-level Model testing frequency almost exactly in line with that of the PhD student population in the original study. On its own, this increase could be generously interpreted to suggest that 100-level students’ belief that experiments in scientific research serve to test, support, or prove models was unchanged given the inherent uncertainty in qualitative analysis. More conservatively, this incomplete but accurate belief was bolstered alongside beliefs (i.e. Discoverment) that lead to a more complete expertlike understanding. The 200-level population saw a decrease in Model testing codes, moving from 100% to 82% frequency. This is below that of any population in the original study. Unlike the 100-level activities, the 200-level activities–especially the Manifold Lab–are routinely connected to examples of real-world research as part of the introduction for each lab. Explicitly drawing these parallels may have contributed to the relatively large shift in the 200-level responses.

Taken as a whole, these findings represent growth toward an expertlike understanding of the role of experimentation in physics. After NOMR, students shift away from viewing experimental physics exclusively as a theory-testing endeavor, to one that includes a variety of important aspects of the role of experimentation in generating new knowledge. This shift brings students closer to the expert view of scientific knowledge as a process that involves rigorous validation in the natural world.

V.2 RQ2: What changes are observed in students’ physics self-efficacy in experimental physics as a result of the NOMR labs?

The presence of a positive change at a 99.7% confidence level or better ($p \leq 0.003$) for every PhIS self-efficacy item for both populations suggests that students’ self-efficacy around conducting physics experiments is tangibly improved after participating in NOMR labs. Students believe that they are learning in ways consistent with widely agreed-upon undergraduate physics laboratory learning goals [1]. That these shifts are observed in the 200-level population is not especially surprising, as each item represents a core learning outcome for a quarter-long course for physics majors that specifically focuses on experimental physics. It is surprising that we see similar shifts in the 100-level population after just a few weeks of NOMR laboratory exercises. Still, the 100-level shifts’ lesser magnitude is consistent with the relatively light depth and duration of the 100-level intervention compared with the 200-level version. All told, students’ responses moved closer to those of expert physicists and indicate that their confidence in their own ability to do experimental physics is strengthened significantly.

Looking at the differences by gender in the 100-level data, the lower starting point for female-identifying students across all items is consistent with research into identity and belongingness in introductory physics [24]. Put colloquially, the field of physics is commonly thought of as being an old boys’ club, and female-identifying students have on average a harder time developing science identity in physics.

The dramatically smaller shifts in female-identifying students’ responses to PhIS4 and PhIS5 are of particular interest. These items are focused more on one’s extrinsic interactions with a group of physicists than on one’s intrinsic ability to perform a category of tasks. For that reason, it is plausible that these items are inherently gendered; that is, administering these two items would elicit a similar difference by gender in any context. It may be the case that male-identifying students build more confidence in NOMR labs than female-identifying students do, but the absence of gender-distinct results in the LES and flow data suggests that the interaction with the headsets is gender neutral. Thus, we hesitate to attribute the results from these items to the instrument or the intervention, and highlight this as an area for future study.

Both populations’ self-efficacy about designing, conducting, and interpreting experiments is significantly improved after working through NOMR labs. These shifts are aligned with the AAPT laboratory learning objectives [1]. We suggest these data indicate that the NOMR labs are helping students develop confidence in their professional capacity as experimentalists, while also helping them develop more expertlike habits of mind about experimental physics.

V.3 RQ3: To what extent are students productively engaged in the NOMR activities, and how does that engagement compare with the hands-on labs in the same course?

We see the majority of students in the productive zones of Flow, Arousal, and Control during the model-generating NOMR labs. We interpret being in a flow state as optimized student engagement in the learning activities. Achieving flow requires that students know what to do, how to do it, and how well they are doing; students tuning out or becoming lost in the face of the open-endedness of the activities would be reflected in low-skill responses on the left of the flow plots. The data show that the NOMR labs are providing just enough scaffolding to keep students in the zone of proximal development and in flow [28]. Of the first series of 100-level labs (0-VR3), VR3 induced the most productive aggregate state of engagement in students; no responses indicated a state of Worry, Apathy, or Boredom. The 200-level population achieved more productive engagement with the VR labs than either of the hands-on labs E1 and N1.

We recognize that VR is an engaging environment on its own. While it may be hard to disentangle the novelty of VR from the activities themselves, we do see evidence that the novelty wears off. We consider the effect of the gaming/entertainment appeal of VR by examining student responses to item F7 (plotted in Figure 9): “To what extent was the lab fun and interesting?” The novelty effect is associated with the introduction of an exciting new technology in the classroom, which induces an initial boost in student engagement that eventually wanes [38].

We estimate that VR’s novelty lasts two weeks in our context: in both populations, the first NOMR lab received the highest F7 score and the second NOMR lab the second highest, while the third NOMR lab was rated no more or less fun than any of the hands-on labs in the course.

Despite the third week of NOMR labs not benefiting from the novelty effect, students reported the greatest aggregate flow state during that activity (VR3 and VR5 at the 100-level and 200-level, respectively). This suggests that the novelty effect does not fully explain the productive and deep engagement with VR physics labs. Students are not engaged simply because VR is fun; they are engaged because the physics is compelling. NOMR labs use VR specifically because its “secret sauce” of hands-on interaction with fictitious physical phenomena is otherwise impossible. We consider students’ strong engagement after the novelty has worn off as evidence that NOMR labs may be leveraging the unique affordances of VR in a pedagogically useful way.

Our comparison of VR and hands-on labs contrasts with Karelina et al.’s comparison [29] between students’ engagement with video labs and hands-on labs. They found that students reported video labs to be slightly more challenging and less fun, and reported feeling less skillful, when compared to hands-on labs. Our findings demonstrate that students’ engagement with VR labs can be similar to or better than their engagement with hands-on labs in the same course. It is important to note that Karelina et al. compared two distinct populations of students who went through hands-on and video versions of the same lab activity, while our study compares responses to different lab activities from the same population, so comparisons between our findings and theirs should be made with caution.

We hesitate to overinterpret our analysis of the flow data. In this study we analyze absolute rather than relative scores. The original application of the eight-channel flow model [33] collected responses from each participant at many points in time over several days. The researchers determined each participant’s average response for an item. When creating flow plots, they plotted z-scores relative to each participant’s average response to each item. This method accounts for the fact that every individual interprets Likert scale questions differently; one person’s 3/7 is another’s 6/7. Karelina et al. [29] adapted this original methodology in favor of examining absolute scores due to the limitations of a classroom setting. They had no more than 2-3 responses from any given participant, and we replicated their methodology for comparability.
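To make the distinction between absolute and relative scores concrete, the following is a minimal sketch of within-participant standardization. It is an illustration under stated assumptions, not the analysis code used in this study or in [33]: the function name, the participant labels, and the response values are hypothetical, and the choice of the population standard deviation is an assumption.

```python
from statistics import mean, pstdev


def within_person_z_scores(responses):
    """Standardize one participant's repeated responses to a single item
    against that participant's own mean and standard deviation.

    Assumption: the population standard deviation is used; the source does
    not specify which estimator the original method relied on.
    """
    mu = mean(responses)
    sigma = pstdev(responses)
    if sigma == 0:
        # A participant who always gives the same answer has no variation
        # to standardize against, so every relative score is zero.
        return [0.0 for _ in responses]
    return [(r - mu) / sigma for r in responses]


# Hypothetical 7-point Likert responses to one item at five points in time.
# Participant B answers three points higher than participant A every time.
absolute_scores = {
    "participant_a": [2, 3, 4, 3, 3],
    "participant_b": [5, 6, 7, 6, 6],
}

relative_scores = {
    name: within_person_z_scores(values)
    for name, values in absolute_scores.items()
}
```

In this hypothetical case the two participants’ absolute scores never overlap, yet their relative scores are identical, which is the sense in which one person’s 3/7 can be another’s 6/7. With only 2-3 responses per participant, the per-participant mean and standard deviation are poorly constrained, which is consistent with the choice to analyze absolute scores in a classroom setting.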

Further, we note that flow states manifest in neurodiverse learners in ways that are not fully understood [39]. The fact that the measurement of students’ flow state takes place in a group learning context adds the complexities of group social interactions to the picture. Flow is an individual measurement of an experience that occurs in a group context, which does not give us any information about group dynamics and cohesion. These shortcomings are excellent areas for further work.

V.4 Use of fictitious physics with virtual reality

Existing survey data does not fully capture the in-class and in-headset experience of NOMR labs. Students’ experimental results in NOMR labs are different from reexaminations of well-understood phenomena: Every group’s findings are new knowledge students have generated from scratch. We posit that, in this way, NOMR labs may allow students to experience the satisfaction of discovery that professional physicists find so compelling, as reflected in feedback from post-course surveys:

“I was thrilled and enlightened to be put in a position to analyze physical phenomena that were undocumented and that I had never heard of. Being able to work with a pocket universe and using experimentation to describe it was the best experience in physics courses I’ve ever experienced. I preferred this VR experience to any physical lab for the sole reason of it being entirely new and having to get every ounce of info about it through experimentation and collaboration.”

“It’s been so much fun learning physics in an exploratory way that focuses on letting us be creative with our thinking. I’ve not only learned a lot about error analysis and creating models, but also gained a much better perspective on how science and research ‘work’ in the real world.”

As NOMR lab instructors and experienced teachers, we observe students engaging in scientific creativity in ways we have not seen before. Rigorous characterization of creativity is a challenging research task, and is not captured in the surveys we administered. We postulate that the use of VR is in part responsible for helping unleash student creativity, and highlight this as an area for further study.

This study does not provide evidence one way or another about whether VR is necessary for the implementation of the fictitious physics approach to stimulate creativity in introductory labs. We predict that such an intervention would not be as effective without VR, in that the joy of discovery expressed above would likely be altered by the difficulties associated with non-immersive simulations. Manipulating objects in 3D space on a 2D screen can be challenging, not to mention that it risks widening the conceptual gap between the novel physics and the physics of our universe. Being overly disconnected from a physically interactive 3D space may preclude the suspension of disbelief that allows students to engage so readily in NOMR. It is our experience that the preceding argument is difficult to make convincingly without sharing the in-headset experience; making it rigorously will require substantial human-computer interaction research.

There remains a possibility that a non-VR version’s effectiveness could be sufficient for a headset-free version of NOMR to be a favorable trade-off, considering the expense and overhead associated with VR technology. Presently, Meta Quest 2 headsets (the same used for this study) cost each; at eight lab groups to a section, the cost of outfitting a classroom to run NOMR labs is . While not outrageous, this is beyond the reach of many physics laboratory budgets, especially in under-served communities. Phone-based virtual or augmented reality, 3D simulations experienced on a monitor and controlled via mouse and keyboard, or entirely 2D simulations could all employ fictitious physics in a similar way to NOMR labs without the expense of full VR headsets. Further developments based on the work in this paper can contribute to exploring these avenues to open up broader access to this instructional innovation.

| Research Question | Survey | Outcome |
|---|---|---|
| RQ1: What changes are observed in students’ epistemology about experimental physics as a result of the NOMR labs? | LES | Students become more expertlike in their epistemologies associated with the role of experimentation in learning, and in research. NOMR students shift away from viewing experimental physics exclusively as a theory-testing endeavor, to one that includes a variety of important aspects of the role of experimentation in generating new knowledge. |
| RQ2: What changes are observed in students’ physics self-efficacy in experimental physics as a result of the NOMR labs? | PhIS | NOMR labs help students develop belief in their professional capacity as experimentalists, while also helping them develop more expertlike habits of mind about experimental physics. |
| RQ3: To what extent are students productively engaged in the NOMR activities, and how does that engagement compare with the hands-on labs in the same course? | FS | Students become increasingly engaged with successive NOMR labs, even after the novelty wears off. They are most engaged when developing their own hypotheses with novel physics. |

V.5 Epistemological hazards of fictitious physics

The use of fictitious laws of physics raises concerns about whether interacting with them can negatively affect students’ physical intuition and conceptual understanding. These concerns have been on our minds since the first trial of NOMR in early 2020. For that reason, we take care to maintain conceptual separation between NOMR-unique physics and the physics of our universe:

- The introduction of every NOMR lab manual (except VR1: Charge) makes clear that the physics students will be investigating was created for the purpose of the lab and does not exist in our universe. The lab manuals frame activities with fictitious physics as being original investigations into unknown physics, putting students in the shoes of Coulomb and peers.
- Fictitious particles are given silly names (e.g. minty particles); where they are not named in the lab manual, students are encouraged to come up with their own names for the particles. They discovered them, after all.
- At no point do students work with simulations of both real and fictitious physics at the same time.
- Fictitious particles are only ever referenced in context of the laboratory component of a course; they are not mentioned in lecture or tutorial components.

To date, we have not seen evidence of negative impacts on students’ conceptual or procedural physics knowledge arising from their work with fictitious physics.

VI Conclusions

Steering students away from confirmation of known facts and into a different (simulated) universe sounds like a day in the life of Ms. Frizzle’s class [40]; in lieu of a magic school bus, we use VR headsets. In both cases, students are transported to the teacher’s choice of hands-on learning environment to create knowledge through collaboration with their peers in a fun, engaging, and memorable environment. In NOMR, students learn to gain knowledge in the way that experts do.

This study contributes to the physics education community’s effort to create laboratory activities that foster students’ growth along affective and epistemological dimensions. We have demonstrated that these goals can be achieved by inventing new physics for students to explore, effectively drawing a new frontier of physics at the introductory level. Our findings (summarized in Table 4) begin to paint a positive picture of the affective impacts of labs featuring fictitious physics, but our experience as instructors suggests that we have yet to capture the full effect of this approach.