Modeling the Complex Dynamics and Changing Correlations of Epileptic Events

Abstract

Patients with epilepsy can manifest short, sub-clinical epileptic “bursts” in addition to full-blown clinical seizures. We believe the relationship between these two classes of events—something not previously studied quantitatively—could yield important insights into the nature and intrinsic dynamics of seizures. A goal of our work is to parse these complex epileptic events into distinct dynamic regimes. A challenge posed by the intracranial EEG (iEEG) data we study is the fact that the number and placement of electrodes can vary between patients. We develop a Bayesian nonparametric Markov switching process that allows for (i) shared dynamic regimes between a variable number of channels, (ii) asynchronous regime-switching, and (iii) an unknown dictionary of dynamic regimes. We encode a sparse and changing set of dependencies between the channels using a Markov-switching Gaussian graphical model for the innovations process driving the channel dynamics and demonstrate the importance of this model in parsing and out-of-sample predictions of iEEG data. We show that our model produces intuitive state assignments that can help automate clinical analysis of seizures and enable the comparison of sub-clinical bursts and full clinical seizures.

keywords: Bayesian nonparametric, EEG, factorial hidden Markov model, graphical model, time series

1 Introduction

Despite over three decades of research, we still have very little idea of what defines a seizure. This ignorance stems both from the complexity of epilepsy as a disease and a paucity of quantitative tools that are flexible enough to describe epileptic events but restrictive enough to distill intelligible information from them. Much of the recent machine learning work in electroencephalogram (EEG) analysis has focused on seizure prediction, [cf., 1, 2], an important area of study but one that generally has not focused on parsing the EEG directly, as a human EEG reader would. Such parsings are central for diagnosis and relating various types of abnormal activity. Recent evidence shows that the range of epileptic events extends beyond clinical seizures to include shorter, sub-clinical “bursts” lasting fewer than 10 seconds [3]. What is the relationship between these shorter bursts and the longer seizures? In this work, we demonstrate that machine learning techniques can have substantial impact in this domain by unpacking how seizures begin, progress, and end.

In particular, we build a Bayesian nonparametric time series model to analyze intracranial EEG (iEEG) data. We take a modeling approach similar to a physician’s in analyzing EEG events: look directly at the evolution of the raw EEG voltage traces. EEG signals exhibit nonstationary behavior during a variety of neurological events, and time-varying autoregressive (AR) processes have been proposed to model single channel data [4]. Here we aim to parse the recordings into interpretable regions of activity and thus propose to use autoregressive hidden Markov models (AR-HMMs) to define locally stationary processes. In the presence of multiple channels of simultaneous recordings, as is almost always the case in EEG, we wish to share AR states between the channels while allowing for asynchronous switches. The recent beta process (BP) AR-HMM of Fox et al. [5] provides a flexible model of such dynamics: a shared library of infinitely many possible AR states is defined and each time series uses a finite subset of the states. The process encourages sharing of AR states, while allowing for time-series-specific variability.

Conditioned on the selected AR dynamics, the BP-AR-HMM assumes independence between time series. In the case of iEEG, this assumption is almost assuredly false. Figure 1 shows an example of a 4x8 intracranial electrode grid and the residual EEG traces of 16 channels after subtracting the predicted value in each channel using a conventional BP-AR-HMM. While the error term in some channels remains low throughout the recording, other channels—especially those spatially adjacent in the electrode grid—have very correlated error traces. We propose to capture correlations between channels by modeling a multivariate innovations process that drives independently evolving channel dynamics. We demonstrate the importance of accounting for this error trace in predicting heldout seizure recordings, making this a crucial modeling step before undertaking large-scale EEG analysis.

To aid in scaling to large electrode grids, we exploit a sparse dependency structure for the multivariate innovations process. In particular, we assume a graph with known vertex structure that encodes conditional independencies in the multivariate innovations process. The graph structure is based on the spatial adjacencies of the iEEG channels, with a few exceptions to make the graphical model fully decomposable. Figure 1 (bottom left) shows an example of such a graphical model over the channels. Although the relative position of channels in the electrode grid is clear, determining the precise 3D location of each channel is extremely difficult. Furthermore, unlike in scalp EEG or magnetoencephalogram (MEG), which have generally consistent channel positions from patient to patient, iEEG channels vary in number and position for each patient. These issues impede the use of alternative spatial and multivariate time series modeling techniques.

It is well-known that the correlations between EEG channels usually vary during the beginning, middle, and end of a seizure [6, 7]. Prado et al. [8] employ a mixture-of-expert vector autoregressive (VAR) model to describe the different dynamics present in seven channels of scalp EEG. We take a similar approach by allowing for a Markov evolution to an underlying innovations covariance state.

An alternative modeling approach is to treat the channel recordings as a single multivariate time series, perhaps using a switching VAR process as in Prado et al. [8]. However, such an approach (i) assumes synchronous switches in dynamics between channels, (ii) scales poorly with the number of channels, and (iii) requires an identical number of channels between patients to share dynamics between event recordings.

Other work has explored nonparametric modeling of multiple time series. The infinite factorial HMM of Van Gael et al. [9] considers an infinite collection of chains each with a binary state space. The infinite hierarchical HMM [10] also involves infinitely many chains with finite state spaces, but with constrained transitions between the chains in a top down fashion. The infinite DBN of Doshi-Velez et al. [11] considers more general connection structures and arbitrary state spaces. Alternatively, the graph-coupled HMM of Dong et al. [12] allows graph-structured dependencies in the underlying states of some Markov chains. Here, we consider a finite set of chains with infinite state spaces that evolve independently. The factorial structure combines the chain-specific AR dynamic states and the graph-structured innovations to generate the multivariate observations with sparse dependencies.

Expanding upon previous work [13], we show that our model for correlated time series has better out-of-sample predictions of iEEG data than standard AR- and BP-AR-HMMs and demonstrate the utility of our model in comparing short, sub-clinical epileptic bursts with longer, clinical seizures. Our inferred parsings of iEEG data concur with key features hand-annotated by clinicians but provide additional insight beyond what can be extracted from a visual read of the data. The importance of our methodology is multifold: (i) the output is interpretable to a practitioner and (ii) the parsings can be used to relate seizure types both within and between patients even with different electrode setups. Enabling such broad-scale automatic analysis, and identifying dynamics unique to sub-clinical seizures, can lead to new insights in epilepsy treatments.

Although we are motivated by the study of seizures from iEEG data, our work is much more broadly applicable in time series analysis. For example, perhaps one has a collection of stocks and wants to model shared dynamics between them while capturing changing correlations. The BP-AR-HMM was applied to the analysis of a collection of motion capture data assuming independence between individuals; our modeling extension could account for coordinated motion with a sparse dependency structure between individuals. Regardless, we find the impact in the neuroscience domain to be quite significant.

2 A Structured Bayesian Nonparametric Factorial AR-HMM

2.1 Dynamic Model

Consider an event with $N$ univariate time series of length $T$. This event could be a seizure, where each time series is one of the iEEG voltage-recording channels. For clarity of exposition, we refer to the individual univariate time series as channels and the resulting $N$-dimensional multivariate time series (stacking up the channel series) as the event. We denote the scalar value for each channel $i$ at each (discrete) time point $t$ as $y_t^{(i)}$ and model it using an $r$-order AR-HMM [5]. That is, each channel is modeled via Markov switches between a set of AR dynamics. Denoting the latent state at time $t$ by $z_t^{(i)}$, we have:

$$z_t^{(i)} \sim \pi^{(i)}_{z_{t-1}^{(i)}}, \qquad y_t^{(i)} = \sum_{j=1}^{r} a_{z_t^{(i)},j}\, y_{t-j}^{(i)} + \epsilon_t^{(i)} = a_{z_t^{(i)}}^{T}\, \tilde{y}_t^{(i)} + \epsilon_t^{(i)}. \tag{1}$$

Here, $a_k = (a_{k,1}, \dots, a_{k,r})^{T}$ are the AR parameters for state $k$ and $\pi_k^{(i)}$ is the transition distribution from state $k$ to any other state. We also introduce the notation $\tilde{y}_t^{(i)}$ as the vector of $r$ previous observations $(y_{t-1}^{(i)}, \dots, y_{t-r}^{(i)})^{T}$.

In contrast to a vector AR (VAR) HMM specification of the event, our modeling of channel dynamics separately as in Eq. (1) allows for (i) asynchronous switches and (ii) sharing of dynamic parameters between recordings with a potentially different number of channels. However, a key aspect of our data is the fact that the channels are correlated. Likewise, these correlations change as the patient progresses through various seizure event states (e.g., “resting”, “onset”, “offset”, …). That is, the channels may display one innovation covariance before a seizure (e.g., relatively independent and low-magnitude) but quite a different covariance during a seizure (e.g., correlated, higher magnitude). To capture this, we jointly model the innovations driving the AR-HMMs of Eq. (1) as

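$$Z_t \sim \phi_{Z_{t-1}}, \qquad \epsilon_t = \left(\epsilon_t^{(1)}, \dots, \epsilon_t^{(N)}\right)^{T} \sim \mathcal{N}\left(0, \Delta_{Z_t}\right), \tag{2}$$

where $Z_t$ denotes a Markov-evolving event state distinct from the individual channel states $z_t^{(i)}$, $\phi_l$ the transition distributions, and $\Delta_l$ the event-state-specific channel covariance. That is, each $\Delta_l$ describes a different set of channel relationships.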

For compactness, we sometimes alternately write

| (3) |

where is the concatenation of channel observations at time and is the vector of concatenated channel states. The overall dynamic model is represented graphically in Figure 2.
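The generative process described in this section can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `simulate_event` and all argument names are hypothetical, every channel shares one transition matrix (the full model gives each channel its own feature-constrained transitions), and all chains start in state 0.

```python
import numpy as np

def simulate_event(ar_coefs, chan_trans, event_trans, event_covs, n_steps, seed=0):
    """Simulate channels that switch among shared AR(r) states, driven by
    innovations whose covariance follows a separate Markov event state.

    ar_coefs:    (n_states, r) array of AR coefficients, one row per channel state
    chan_trans:  (n_states, n_states) channel-state transition matrix (shared: an assumption)
    event_trans: (n_event, n_event) event-state transition matrix
    event_covs:  list of (n_chan, n_chan) innovation covariances, one per event state
    """
    rng = np.random.default_rng(seed)
    n_chan = event_covs[0].shape[0]
    n_states, order = ar_coefs.shape
    y = np.zeros((n_steps + order, n_chan))      # first `order` rows are zero padding
    z = np.zeros((n_steps, n_chan), dtype=int)   # per-channel AR state
    Z = np.zeros(n_steps, dtype=int)             # event (covariance) state
    z_prev = np.zeros(n_chan, dtype=int)
    Z_prev = 0
    for t in range(n_steps):
        # The event state picks the covariance of the joint innovation vector.
        Z[t] = rng.choice(len(event_covs), p=event_trans[Z_prev])
        eps = rng.multivariate_normal(np.zeros(n_chan), event_covs[Z[t]])
        for i in range(n_chan):
            # Each channel switches its AR state independently (asynchronously).
            z[t, i] = rng.choice(n_states, p=chan_trans[z_prev[i]])
            lags = y[t:t + order, i][::-1]       # previous r values, most recent first
            y[t + order, i] = ar_coefs[z[t, i]] @ lags + eps[i]
        z_prev, Z_prev = z[t], Z[t]
    return y[order:], z, Z

# Two AR(1) channel states and two event states over three channels.
ar = np.array([[0.9], [-0.9]])
sticky = np.array([[0.95, 0.05], [0.05, 0.95]])
covs = [np.eye(3), np.full((3, 3), 0.8) + 0.2 * np.eye(3)]
y, z, Z = simulate_event(ar, sticky, sticky, covs, n_steps=500)
```

The channel states only select which AR coefficients apply, while the event state only selects the innovation covariance; the two kinds of chains interact solely through the generated observations.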

Scaling to large electrode grids

To scale our model to a large number of channels, we consider a Gaussian graphical model (GGM) for capturing a sparse dependency structure amongst the channels. Let be an undirected graph with the set of channel nodes and the set of edges with if and are connected by an edge in the graph. Then, for all , implying is conditionally independent of given for all channels . In our dynamic model of Eq. (1), statements of conditional independence of translate directly to statements of the observations .

In our application, we choose based on the spatial adjacencies of channels in the electrode grid, as depicted in Figure 1 (bottom left). In addition to encoding the spatial proximities of iEEG electrodes, the graphical model also yields a sparse precision matrix , allowing for more efficient scaling to the large number of channels commonly present in iEEG. These computational efficiencies are made clear in Section 3.
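To make the link between graph edges and the sparse precision matrix concrete, the sketch below builds a small grid graph and a precision matrix whose off-diagonal nonzeros follow its edges. The helper names, the edge weight of -0.2, and the unit diagonal are illustrative assumptions. The graph here uses only horizontal and vertical adjacency and does not add the extra edges that would be needed to make it decomposable.

```python
import numpy as np

def grid_edges(n_rows, n_cols):
    """Edges between horizontally and vertically adjacent grid positions."""
    edges = set()
    for r in range(n_rows):
        for c in range(n_cols):
            i = r * n_cols + c
            if c + 1 < n_cols:
                edges.add((i, i + 1))
            if r + 1 < n_rows:
                edges.add((i, i + n_cols))
    return edges

def sparse_precision(n_nodes, edges, weight=-0.2):
    """Precision matrix whose off-diagonal nonzeros follow the edge set.
    With unit diagonal and small |weight| the matrix is diagonally dominant,
    hence positive definite."""
    K = np.eye(n_nodes)
    for i, j in edges:
        K[i, j] = K[j, i] = weight
    return K

edges = grid_edges(2, 3)         # 2x3 grid of six channels
K = sparse_precision(6, edges)   # sparse precision
cov = np.linalg.inv(K)           # implied covariance is dense

# Channels 0 and 5 sit in opposite corners and share no edge: their precision
# entry is zero (conditionally independent given the rest), yet they remain
# marginally correlated through the channels in between.
print(K[0, 5], cov[0, 5])
```

The zero pattern lives in the precision matrix, not the covariance, which is why computations that work with the precision can exploit the sparsity.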

Interpretation as a sparse factorial HMM

Recall that our formulation involves independently evolving Markov chains: chains for the channel states plus one for the event state sequence . As indicated by the observation model of Eq. (3), the Markov chains jointly generate our observation vector leading to an interpretation of our formulation as a factorial HMM [14]. However, here we have a sparse dependency structure in how the Markov chains influence a given observation , as induced by the conditional independencies in encoded in the graph . That is, only depends on the set of for which is a neighbor of in .

2.2 Prior Specification

Emission parameters

As in the AR-HMM, we place a multivariate normal prior on the AR coefficients,

| (4) |

with mean and covariance . Throughout this work, we let .

For the channel covariances with sparse precisions determined by the graph , we specify a hyper-inverse Wishart (HIW) prior,

| (5) |

where denotes the degrees of freedom and the scale. The HIW prior [15] enforces hyper-Markov conditions specified by .

Feature constrained channel transition distributions

A natural question is how many AR states are the channels switching between? Likewise, which are shared between the channels and which are unique? We expect to see similar dynamics present in the channels (sharing of AR processes), but also some differences. For example, maybe only some of the channels ever get excited into a certain state. To capture this structure, we take a Bayesian nonparametric approach building on the beta process (BP) AR-HMM of Fox et al. [16]. Through the beta process prior [17], the BP-AR-HMM defines a shared library of infinitely many AR coefficients , but encourages each channel to only use a sparse subset of them.

The BP-AR-HMM specifically defines a featural model. Let be a binary feature vector associated with channel with indicating that channel uses the dynamic described by . Formally, the feature assignments and their corresponding parameters are generated by a beta process random measure and the conjugate Bernoulli process (BeP),

| (6) |

with base measure over the parameter space for our -order autoregressive parameters . As specified in Eq. (4), we take the normalized measure to be . The discrete measures and can be represented as

| (7) |

with .

The resulting feature vectors constrain the set of available states can take by constraining each transition distribution, , to be 0 when . Specifically, the BP-AR-HMM defines by introducing a set of gamma random variables, , and setting

| (8) | ||||

| (9) |

The positive elements of can also be thought of as a sample from a finite Dirichlet distribution with only dimensions, where represents the number of states channel uses. For convenience, we sometimes denote the set of transition variables as . As in the sticky HDP-HMM of Fox et al. [18], the parameter encourages self-transitions (i.e., state at time to state at time ).
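The feature-constrained construction above can be illustrated as follows: draw gamma-distributed weights with extra mass on the diagonal, zero out the columns of states a channel does not possess, and renormalize each row. This is a sketch under assumptions; the function name and the hyperparameter names `gamma` and `kappa` are placeholders, and the channel is assumed to have at least one active feature.

```python
import numpy as np

def constrained_transitions(features, gamma, kappa, rng):
    """Transition matrix over all states, zeroed outside one channel's active features.

    features: binary vector, 1 where the channel may use that AR state
    gamma:    base shape of the gamma weights (assumed name)
    kappa:    extra shape added on the diagonal to favor self-transitions (assumed name)
    """
    f = np.asarray(features, dtype=float)
    n = f.size
    # Gamma weights, with shape gamma off the diagonal and gamma + kappa on it.
    eta = rng.gamma(shape=gamma + kappa * np.eye(n), scale=1.0)
    masked = eta * f                      # zero out columns of inactive states
    return masked / masked.sum(axis=1, keepdims=True)

rng = np.random.default_rng(1)
pi = constrained_transitions([1, 0, 1, 1, 0], gamma=1.0, kappa=10.0, rng=rng)
```

Normalizing independent gamma draws over the active states is what makes each row's positive entries a draw from a finite Dirichlet distribution over just those states.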

Unconstrained event transition distributions

We again take a Bayesian nonparametric approach to define the event state HMM, building on the sticky HDP-HMM [18]. In particular, the transition distributions are hierarchically defined as

| (10) |

where refers to a stick-breaking measure, also known as , with generated by

| (11) |

Again, the sticky parameter promotes self-transitions, reducing state redundancy.

We term this model the sparse factorial BP-AR-HMM. Although the graph can be arbitrarily structured, because of our motivating seizure modeling application with a focus on a spatial-based graph structure, we often describe the sparse factorial BP-AR-HMM as capturing spatial correlations. We depict this model in the directed acyclic graphs shown in Figure 3. Note that while we formally consider a model of only a single event for notational simplicity, our formulation scales straightforwardly to multiple independent events. In this case, everything except the library of AR states becomes event-specific. If all events share the same channel setup, we can assume the channel covariances are shared as well.

3 Posterior Computations

Although the components of our model related to the individual channel dynamics are similar to those in the BP-AR-HMM, our posterior computations are significantly different due to the coupling of the Markov chains via the correlated innovations . In the BP-AR-HMM, conditioned on the feature assignments, each time series is independent. Here, however, we are faced with a factorial HMM structure and the associated challenges. Yet the underlying graph structure of the channel dependencies mitigates the scale of these challenges.

Conditioned on channel sequences , we can marginalize ; because of the graph structure, we need only condition on a sparse set of other channels (i.e., neighbors of channel in the graph). This step is important for efficiently sampling the feature assignments .

At a high level, each MCMC iteration proceeds through sampling channel states, events states, dynamic model parameters, and hyperparameters. Algorithm 1 summarizes these steps, which we briefly describe below and more fully in Appendices B-D.

Individual channel variables

We harness the fact that we can compute the marginal likelihood of given and the neighborhood set of other channels in order to block sample . That is, we first sample marginalizing and then sample given the sampled . Sampling the active features for channel follows as in Fox et al. [5], using the Indian buffet process (IBP) [19] predictive representation associated with the beta process, but using a likelihood term that conditions on neighboring channel state sequences and observations . We additionally condition on the event state sequence to define the sequence of distributions on the innovations. Generically, this yields

| (12) |

Here, denotes the set of feature assignments not including . The first term is given by the IBP prior and the second term is the marginal conditional likelihood (marginalizing ). Based on the derived marginal conditional likelihood, feature sampling follows similarly to that of Fox et al. [5].

Conditioned on , we block sample the state sequence using a backward filtering forward sampling algorithm (see Appendix A) based on a decomposition of the full conditional as

| (13) |
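For readers unfamiliar with backward filtering forward sampling, the generic HMM version can be sketched as below. It assumes the per-time, per-state log-likelihoods have already been computed; in the model above those likelihoods would additionally condition on neighboring channels and the event state, which this sketch does not attempt. The function and argument names are illustrative.

```python
import numpy as np

def sample_states(log_lik, trans, init, rng):
    """Draw one state sequence from p(z_1..T | observations) for an HMM.

    log_lik[t, k]: log-likelihood of observation t under state k
    trans[j, k]:   probability of moving from state j to state k
    init[k]:       probability of starting in state k
    """
    T, K = log_lik.shape
    # Rescale per time step for numerical stability (constants cancel on normalizing).
    lik = np.exp(log_lik - log_lik.max(axis=1, keepdims=True))
    # Backward pass: msgs[t, j] is proportional to p(y_{t+1..T} | z_t = j).
    msgs = np.ones((T, K))
    for t in range(T - 2, -1, -1):
        m = trans @ (lik[t + 1] * msgs[t + 1])
        msgs[t] = m / m.sum()
    # Forward pass: sample z_t given z_{t-1} and all observations.
    z = np.empty(T, dtype=int)
    prior = init
    for t in range(T):
        p = prior * lik[t] * msgs[t]
        z[t] = rng.choice(K, p=p / p.sum())
        prior = trans[z[t]]
    return z

rng = np.random.default_rng(0)
# Observations that strongly favor state 0 for three steps, then state 1.
log_lik = np.array([[0.0, -50.0]] * 3 + [[-50.0, 0.0]] * 3)
trans = np.array([[0.9, 0.1], [0.1, 0.9]])
z_sample = sample_states(log_lik, trans, np.array([0.5, 0.5]), rng)
```

Sampling the whole sequence in one block, rather than one time point at a time, avoids the slow mixing that strongly autocorrelated states would otherwise cause.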

For sampling the transition parameters , we follow Hughes et al. [20, Supplement] and sample from the full conditional

| (14) |

where denotes the number of times channel transitions from state to state . We sample from its posterior via two auxiliary variables,

| (15) |

where gives the transition counts from state in channel .

Event variables

Conditioned on the channel state sequences and AR coefficients , we can compute an innovations sequence as , where we recall the definition of and from Eq. (3). These innovations are the observations of the sticky HDP-HMM of Eq. (2). For simplicity and to allow block-sampling of , we consider a weak limit approximation of the sticky HDP-HMM as in [18]. The top-level Dirichlet process is approximated by an -dimensional Dirichlet distribution [21], inducing a finite Dirichlet for :

| (16) |

Here, provides an upper bound on the number of states in the HDP-HMM. The weak limit approximation still encourages using a subset of these states.
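A weak limit approximation of a sticky HDP-HMM prior can be sketched as follows: a symmetric finite Dirichlet draw stands in for the top-level stick-breaking weights, and each row of the transition matrix is a Dirichlet draw centered on those weights with extra mass on the self-transition. The hyperparameter names `gamma`, `alpha`, and `kappa` and the small numerical floor are assumptions of this sketch rather than the paper's notation.

```python
import numpy as np

def weak_limit_sticky_transitions(n_states, gamma, alpha, kappa, rng):
    """Finite approximation to sticky HDP-HMM transition distributions.

    n_states: truncation level (upper bound on the number of event states)
    gamma:    top-level concentration (assumed name)
    alpha:    concentration tying each row to the shared weights (assumed name)
    kappa:    extra mass on self-transitions (assumed name)
    """
    # Shared global weights over the truncated set of states.
    beta = rng.dirichlet(np.full(n_states, gamma / n_states))
    # Row l is Dirichlet(alpha * beta + kappa * e_l); the floor guards against
    # zero concentrations if an entry of beta underflows.
    conc = np.maximum(alpha * beta + kappa * np.eye(n_states), 1e-8)
    phi = np.vstack([rng.dirichlet(row) for row in conc])
    return beta, phi

rng = np.random.default_rng(2)
beta, phi = weak_limit_sticky_transitions(10, gamma=5.0, alpha=5.0, kappa=20.0, rng=rng)
```

Because the symmetric Dirichlet with small per-component concentration puts most of its mass on a few components, the chain tends to occupy only a subset of the available states even though the truncation level is larger.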

Based on the weak limit approximation, we first sample the parent transition distribution as in [22, 18], followed by sampling each from its Dirichlet posterior,

| (17) |

where is a vector of transition counts of from state to the different states.

Using standard conjugacy results, based on “observations” for such that , the full conditional for is given by

| (18) |

where

Details on how to efficiently sample from a HIW distribution are provided in [23].

Conditioned on the truncated HDP-HMM event transition distributions and emission parameters , we use a standard backward filtering forward sampling scheme to block sample .

AR coefficients,

Each observation is generated based on a matrix of AR parameters . Thus, sampling involves conditioning on and disentangling the contribution of on each . As derived in Appendix E, the full conditional for is a multivariate normal

| (19) |

where

The vectors and denote the indices of channels assigned and not assigned to state at time , respectively. We use these to index into the rows and columns of the vectors , , and matrix . Each column of matrix is the previous observations for one of the channels assigned to state at time .

Hyperparameters

See Appendix D for the prior and full conditionals of the hyperparameters , , , , , and .

4 Experiments

4.1 Simulation Experiments

To initially explore some characteristics of our sparse factorial BP-AR-HMM, we examined a small simulated dataset of six time series in a 2x3 spatial arrangement, with vertices connecting all adjacent nodes (i.e., two cliques of 4 nodes each). We generated an event of length 2000 time points as follows. We defined five first-order AR channel states linearly spaced between and and three event states with covariances shown in the bottom left of Figure 4. Channel and event state transition distributions were set to and , respectively, for a self-transition and uniform between the other states. Channel feature indicators were simulated from an IBP with (no channel had indicators exceeding the five specified states). The sampled were then used to modify the channel state transition distributions by setting to 0 transitions to states with and then renormalizing. Using these feature-constrained transition distributions, we simulated sequences for each channel and for . The event sequence was likewise simulated. Based on these sampled state sequences, and using the defined state-specific AR coefficients and channel covariances, we generated observations as in Eq. (3).

| channel state | true | post. mean | post. 95% interval |
|---|---|---|---|
| 1 | -0.900 | -0.906 | [-0.917, -0.896] |
| 2 | -0.450 | -0.456 | [-0.474, -0.436] |
| 3 | 0.000 | -0.009 | [-0.038, 0.020] |
| 4 | 0.450 | 0.445 | [0.425, 0.466] |
| 5 | 0.900 | 0.902 | [0.890, 0.913] |

We ran our MCMC sampler for 6000 iterations, discarding the first 1000 as burn-in and thinning the chain by 10. Figure 4 shows the generated data and its true states along with the inferred states and learned channel covariances for a representative posterior sample. The event state matching is almost perfect, and the channel state matching is quite good, though we see that the sampler added an additional (yellow) state in the middle of the first time series when it should have assigned that section to the cyan state. The scale and structure of the estimated event state covariances match the true covariances quite well. Furthermore, Table 1 shows how the posterior estimates of the channel state AR coefficients also center well around the true values.

4.2 Parsing a Seizure

We tested the sparse factorial BP-AR-HMM on two similar seizures (events) from a patient of the Children’s Hospital of Pennsylvania. These seizures were chosen because qualitatively they displayed a variety of dynamics throughout the beginning, middle, and end of the seizure and thus are ideal for exploring the extent to which our sparse factorial BP-AR-HMM can parse a set of rich neurophysiologic signals. We used the 90 seconds of data after the clinically-determined starts of each seizure from 16 channels, whose spatial layout in the electrode grid is shown in Figure 5 along with the graph encoding our conditional independence assumptions. The data were low-pass filtered and downsampled from 200 to 50 Hz, preserving the clinically important signals but reducing the computational burden of posterior inference. The data were also scaled to have 99% of values within [-10, 10] for numerical reasons. We examined a th-order sparse factorial BP-AR-HMM and ran 10 MCMC chains for 6000 iterations, discarding 1000 samples as burn-in and using 10-sample thinning.
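The amplitude scaling step can be sketched as below. This is one plausible reading of the description, assuming a single global scale factor applied to all channels so that the 99th percentile of absolute values maps to 10; the function name and the synthetic stand-in data are illustrative. The array size corresponds to 90 seconds at 50 Hz (4500 samples) over 16 channels.

```python
import numpy as np

def scale_to_range(x, coverage=0.99, bound=10.0):
    """Rescale x so that a fraction `coverage` of its values lies in [-bound, bound].
    Uses one global factor for all channels (an assumption of this sketch)."""
    cutoff = np.quantile(np.abs(x), coverage)
    return x * (bound / cutoff)

rng = np.random.default_rng(3)
raw = 37.0 * rng.standard_normal((4500, 16))   # synthetic stand-in for iEEG voltages
scaled = scale_to_range(raw)
frac_inside = np.mean(np.abs(scaled) <= 10.0)
```

Using a quantile rather than the maximum keeps a handful of large artifacts from compressing the rest of the signal toward zero.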

The sparse factorial BP-AR-HMM inferred state sequences for the sample corresponding to a minimum expected Hamming distance criterion ([18]) are shown in Figure 5. The results were analyzed by a board-certified epileptologist who agreed with the model’s judgement in identifying the subtle changes from the background dynamic (cyan) initially present in all channels. The model’s grouping of spatially-proximate channels into similar state transition patterns (e.g., channels 03, 07, 11, 15) was clinically intuitive and consistent with his own reading of the raw EEG. Using only the raw EEG, and prior to disclosing our results, he independently identified roughly six points in the duration of the seizure where the dynamics fundamentally change. The three main event state transitions shown in Figure 5 occurred almost exactly at the same time as three of his own marked transitions. The fourth coincides with a major shift in the channel dynamics with most channels transitioning to the green dynamic. The other two transitions he marked that are not displayed occurred after this onset period. From this analysis, we see that our event states provide an important global summary of the dynamics of the seizure that augments the information conveyed from the channel state sequences.

Clinical relevance

While interpreting these state sequences and covariances from the model, it is important to keep in mind that they are ultimately estimates of a system whose parsing even highly-trained physicians disagree upon. Nevertheless, we believe that the event states directly describe the activity of particular clinical interest.

In modeling the correlations between channels, the event states give insight into how different physiologic areas of the brain interact over the course of a seizure. In the clinical workup for resective brain surgery, these event states could help define and specifically quantify the full range of ways in which neurophysiologic regions initiate seizures and how others are recruited over the numerous seizures of a patient. In addition, given fixed model parameters, our model can fit the channel and event state sequences of an hour’s worth of 64-channel EEG data in a matter of minutes on a single 8-core machine, possibly facilitating epileptologist EEG annotation of long-term monitoring records.

The ultimate clinical aim of this work, however, involves understanding the relationship between epileptic bursts and seizures. Because the event state aspect of our model involves a Markov assumption, the intrinsic length of the event has little bearing on the states assigned to particular time points. Thus, these event states allow us to straightforwardly compare the neurophysiologic relationship dynamics in short bursts (often less than two seconds long) to those in much longer seizures (on the order of two minutes long), as explored in Section 4.4. Prior to this analysis, we first examine the importance of our various model components by comparing to baseline alternatives.

4.3 Model Comparison

The advantages of a spatial model

We explored the extent to which the spatial information and sparse dependencies encoded in the HIW prior improve our predictions of heldout data relative to a number of baseline models. To assess the impact of the sparse dependencies induced by the Gaussian graphical model for , we compare to a full-covariance model with an IW prior on (dense factorial). For assessing the importance of spatial correlations, we additionally compare to two alternatives where channels evolve independently: the BP-AR-HMM of Fox et al. [5] and an AR-HMM without the feature-based modeling provided by the beta process [24]. Both of these models use inverse gamma (IG) priors on the individual channel innovation variances. We learned a set of AR coefficients and event covariances on one seizure and then computed the heldout log-likelihood on a separate seizure, constraining it to use the learned model parameters from the training seizure.

For the training seizure, MCMC samples were collected over 5000 samples across 10 chains, each with a 1000-sample burn-in and 10-sample thinning. To compute the predictive log-likelihood of the heldout seizure, we analytically marginalized the heldout event state sequence but performed a Monte Carlo integration over the feature vectors and channel states using our MCMC sampler. For each original MCMC sample generated from the training seizure, a secondary chain is run fixing and and sampling , , , , and for the heldout seizure. We approximate by averaging the secondary chain’s closed-form , described in Appendix B.

Figure 6 (left) shows how conditioning on the innovations of neighboring channels in the sparse factorial model improves the prediction of an individual channel, as seen by its reduced innovation trace relative to the original BP-AR-HMM. The quantitative benefits of accounting for these correlations are seen in our predictions of heldout events, as depicted in Figure 6 (right), which compares the heldout log-likelihoods for the original and the factorial models listed above. As expected, the factorial models have significantly larger predictive power than the original models. Though hard to see due to the large factorial/original difference, the BP-based model also improves on the standard non-feature-based AR-HMM. Performance of the sparse factorial model is also at least as competitive as a full-covariance model (dense factorial). We would expect to see even larger gains for electrode grids with more channels due to the parsimonious representation presented by the graphical model. Regardless, these results demonstrate that the assumptions of sparsity in the channel dependencies do not adversely affect our performance.

The advantages of sparse factorial dependencies

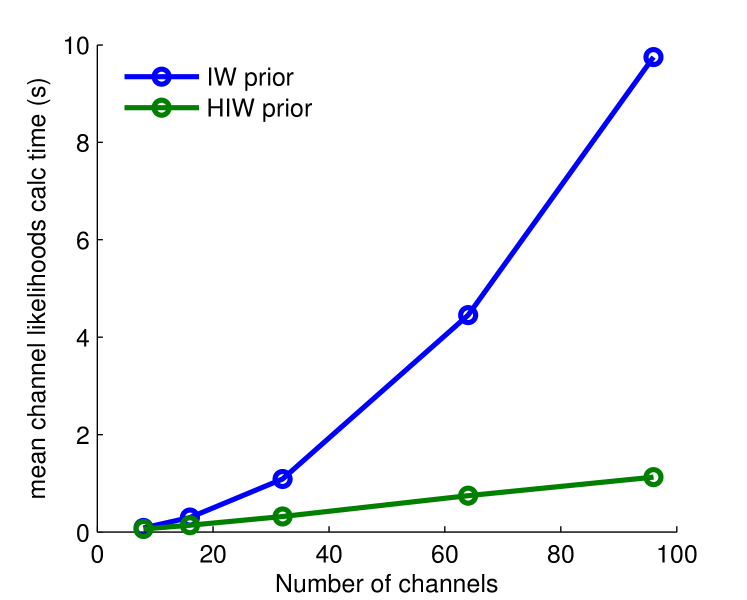

In addition to providing a parsimonious modeling tool, the sparse dependencies among channels induced by the HIW prior allow our computations to scale linearly to the large number of channels present in iEEG. We compared a dense factorial BP-AR-HMM (entailing a fully-connected spatial graph) and a sparse factorial BP-AR-HMM on five datasets of 8, 16, 32, 64, and 96 channels (from three 32-channel electrodes) from the same seizure used previously. We ran the two models on each of the five datasets for at least 1000 MCMC iterations, using a profiler to tabulate the time spent in each step of the MCMC iteration.

Figure 7 shows the average time required to calculate the channel likelihoods at each time point under each AR channel state. This computation is used both for calculating the marginal likelihood (averaging over all the state sequences ) required in active feature sampling as well as in sampling the state sequences . In our sparse factorial model, each channel has a constant set of dependencies, assuming neighboring channels. As such, the channel likelihood computation at each time point has an complexity, implying an complexity for calculating the likelihoods of all channels at each time point. In contrast, the likelihood computation at each time point under the full covariance model had complexity , implying for calculating all the channel likelihoods. For , as is typically the case, our sparse dependency model is significantly more computationally efficient.

Anecdotally, we also found that the IW prior experiments—especially those with larger number of channels—tended to occasionally have numerical underflow problems associated with the inverse term in the conditional channel likelihood calculation. This underflow in the IW prior model calculations is not surprising since the matrices inverted are of dimension (for channels), whereas in the HIW prior, the sparse spatial dependencies of the electrode grids make these matrices no larger than eight-by-eight.

4.4 Comparing Epileptic Events of Different Scales

We applied our sparse factorial BP-AR-HMM to six channels of iEEG over 15 events from a human patient with hippocampal depth electrodes. These events comprise 14 short sub-clinical epileptic bursts of roughly five to eight seconds and a final, 2-3 minute clinical seizure. Our hypothesis was that the sub-clinical bursts display initiation dynamics similar to those of a full, clinical seizure and thus contain information about the seizure-generation process.

The events were automatically extracted from the patient’s continuous iEEG record by taking sections of iEEG whose median line-length feature [25] crossed a preset threshold, and each extracted section also included the 10 seconds before and after the event. The iEEG was preprocessed in the same way as in the previous section. The six channels studied came from a depth electrode implanted in the left temporal lobe of the patient’s brain. We ran our MCMC sampler jointly on the 15 events. In particular, the AR channel state and event state parameters, and , were shared between the 15 events such that the parsings of each recording jointly informed the posteriors of these shared parameters. The hyperparameter settings, number of MCMC iterations, chains, and thinning were as in the experiment of Section 4.2.
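As a rough illustration of this extraction step, the following Python sketch computes the line-length feature (the sum of absolute differences between consecutive samples within a window) and marks runs of windows whose median line length exceeds a threshold. The window length, threshold, padding, and the choice to take the median across channels are assumptions made for the example, and the function names are hypothetical rather than the code used in our experiments.

```python
import numpy as np

def line_length(x, win):
    """Line length of each non-overlapping window: sum of |x[t] - x[t-1]|."""
    x = np.asarray(x, dtype=float)
    n_win = len(x) // win
    segs = x[: n_win * win].reshape(n_win, win)
    return np.abs(np.diff(segs, axis=1)).sum(axis=1)

def detect_events(ieeg, win, threshold, pad_windows):
    """Return (start, stop) sample indices of candidate events.

    ieeg: array of shape (channels, samples).
    Assumption: the median is taken across channels for each window.
    """
    ieeg = np.asarray(ieeg, dtype=float)
    ll = np.stack([line_length(ch, win) for ch in ieeg])  # (channels, windows)
    above = np.median(ll, axis=0) > threshold
    runs, start = [], None
    for w, flag in enumerate(above):
        if flag and start is None:
            start = w                      # a run of supra-threshold windows begins
        elif not flag and start is not None:
            runs.append((start, w))        # the run ended at the previous window
            start = None
    if start is not None:
        runs.append((start, len(above)))
    n = ieeg.shape[1]
    # Pad each run on both sides and convert window indices to sample indices
    return [(max(0, (s - pad_windows) * win), min(n, (e + pad_windows) * win))
            for s, e in runs]
```

In practice `pad_windows * win` would be chosen to correspond to the 10 seconds of context kept on either side of each event.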

Figure 8 compares two of the 14 sub-clinical bursts and the onset of the single seizure. We have aligned the three events relative to the beginnings of the red event state common to all three, which we treat as the start of the epileptic activity. The individual channel states of the four middle channels are also all green throughout most of the red event state. It is interesting to note that at this time the fifth channel’s activity in all three events is much lower in amplitude than that of the three channels above it, yet it is still assigned to the green state and continues in that state along with the other three channels as the event state switches from the red to the lime green state in all three events. While clinical opinions can vary widely in EEG reading, a physician would most likely not consider this segment of the fifth channel similar to the other three, as our model consistently does. But on a relative voltage axis, the segments actually look quite similar. In a sense, the fifth channel has the same dynamics as the other three but with smaller magnitude. This kind of relationship is difficult for human EEG readers to identify and shows how models such as ours can provide new perspectives not readily apparent to a human reader. Additionally, we note the similarities in event state transitions.

The similarities mentioned above, among others, suggest some relationship between these two different classes of epileptic events. However, all bursts make a notable departure from the seizure: a large one-second depolarization in the middle three channels, highlighted at the end by the magenta event state and followed shortly thereafter by the end of the event. Neither the states assigned by our model nor the iEEG itself indicates that this dynamic is present in the clinical seizure. This difference leads us to posit that perhaps these sub-clinical bursts are a kind of false-start seizure, with similar onset patterns but a disrupting discharge that prevents the event from escalating to a full-blown seizure.

5 Conclusion

In this work, we develop a sparse factorial BP-AR-HMM model that allows for shared dynamic regimes between a variable number of time series, asynchronous regime-switching, and an unknown dictionary of dynamic regimes. Key to our model is capturing the between-series correlations and their evolution with a Markov-switching multivariate innovations process. For scalability, we assume a sparse dependency structure between the time series using a Gaussian graphical model.

This model is inspired by challenges in modeling high-dimensional intracranial EEG time series of seizures, which contain a large variety of small- and large-scale dynamics that are of interest to clinicians. We demonstrate the value of this unsupervised model by showing its ability to parse seizures in a clinically intuitive manner and to produce state-of-the-art out-of-sample predictions on the iEEG data. Finally, we show how our model allows for flexible, large-scale analysis of different classes of epileptic events, opening new, valuable clinical research directions previously too challenging to pursue. Such analyses have direct relevance to clinical decision-making for patients with epilepsy.

References

- D’Alessandro et al. [2005] M. D’Alessandro, G. Vachtsevanos, R. Esteller, J. Echauz, S. Cranstoun, G. Worrell, L. Parish, B. Litt, A multi-feature and multi-channel univariate selection process for seizure prediction, Clinical Neurophysiology 116 (2005) 506–516.

- Mirowski et al. [2009] P. Mirowski, D. Madhavan, Y. LeCun, R. Kuzniecky, Classification of patterns of EEG synchronization for seizure prediction, Clinical Neurophysiology 120 (2009) 1927–1940.

- Litt et al. [2001] B. Litt, R. Esteller, J. Echauz, M. D’Alessandro, R. Shor, T. Henry, P. Pennell, C. Epstein, R. Bakay, M. Dichter, G. Vachtsevanos, Epileptic seizures may begin hours in advance of clinical onset: a report of five patients, Neuron 30 (2001) 51–64.

- Krystal et al. [1999] A. D. Krystal, R. Prado, M. West, New methods of time series analysis of non-stationary EEG data: eigenstructure decompositions of time varying autoregressions, Clinical Neurophysiology 110 (1999) 2197–2206.

- Fox et al. [2009] E. B. Fox, E. B. Sudderth, M. I. Jordan, A. S. Willsky, Sharing features among dynamical systems with beta processes, Advances in Neural Information Processing Systems (2009).

- Schindler et al. [2007] K. Schindler, H. Leung, C. E. Elger, K. Lehnertz, Assessing seizure dynamics by analysing the correlation structure of multichannel intracranial EEG, Brain 130 (2007) 65–77.

- Schiff et al. [2005] S. J. Schiff, T. Sauer, R. Kumar, S. L. Weinstein, Neuronal spatiotemporal pattern discrimination: the dynamical evolution of seizures, NeuroImage 28 (2005) 1043–1055.

- Prado et al. [2006] R. Prado, F. Molina, G. Huerta, Multivariate time series modeling and classification via hierarchical VAR mixtures, Computational Statistics & Data Analysis 51 (2006) 1445–1462.

- Van Gael et al. [2008] J. Van Gael, Y. Saatci, Y. W. Teh, Z. Ghahramani, Beam sampling for the infinite hidden Markov model, in: Proceedings of the 25th International Conference on Machine Learning, 2008.

- Heller et al. [2009] K. Heller, Y. W. Teh, D. Gorur, The Infinite Hierarchical Hidden Markov Model, in: Proceedings of the 12th International Conference on Artificial Intelligence and Statistics, 2009.

- Doshi-Velez et al. [2011] F. Doshi-Velez, D. Wingate, J. Tenenbaum, N. Roy, Infinite dynamic Bayesian networks, in: Proceedings of the 28th International Conference on Machine Learning, 2011.

- Dong et al. [2012] W. Dong, A. Pentland, K. A. Heller, Graph-Coupled HMMs for Modeling the Spread of Infection, in: Proceedings of the 28th Conference on Uncertainty in Artificial Intelligence, 2012.

- Wulsin et al. [2013] D. F. Wulsin, E. B. Fox, B. Litt, Parsing Epileptic Events Using a Markov Switching Process for Correlated Time Series, in: Proceedings of the 30th International Conference on Machine Learning, 2013.

- Ghahramani and Jordan [1997] Z. Ghahramani, M. I. Jordan, Factorial hidden Markov models, Machine Learning 29 (1997) 245–273.

- Dawid and Lauritzen [1993] A. P. Dawid, S. L. Lauritzen, Hyper Markov laws in the statistical analysis of decomposable graphical models, The Annals of Statistics 21 (1993) 1272–1317.

- Fox et al. [2011] E. B. Fox, E. B. Sudderth, M. I. Jordan, A. S. Willsky, Joint Modeling of Multiple Related Time Series via the Beta Process, arXiv preprint arXiv:1111.4226v1 (2011).

- Thibaux and Jordan [2007] R. Thibaux, M. I. Jordan, Hierarchical beta processes and the Indian buffet process, in: Proceedings of the 10th Conference on Artificial Intelligence and Statistics, 2007.

- Fox et al. [2011] E. B. Fox, E. B. Sudderth, M. I. Jordan, A. S. Willsky, A sticky HDP-HMM with application to speaker diarization, The Annals of Applied Statistics 5 (2011) 1020–1056.

- Griffiths and Ghahramani [2005] T. L. Griffiths, Z. Ghahramani, Infinite latent feature models and the Indian buffet process, Gatsby Computational Neuroscience Unit, Technical Report #2005-001 (2005).

- Hughes et al. [2012] M. C. Hughes, E. B. Fox, E. B. Sudderth, Effective split-merge Monte Carlo methods for nonparametric models of sequential data, in: Advances in Neural Information Processing Systems, 2012.

- Ishwaran and Zarepour [2002] H. Ishwaran, M. Zarepour, Exact and approximate sum representations for the Dirichlet process, Canadian Journal of Statistics 30 (2002) 269–283.

- Teh et al. [2006] Y. W. Teh, M. I. Jordan, M. J. Beal, D. M. Blei, Hierarchical Dirichlet processes, Journal of the American Statistical Association 101 (2006) 1566–1581.

- Carvalho et al. [2007] C. M. Carvalho, H. Massam, M. West, Simulation of hyper-inverse Wishart distributions in graphical models, Biometrika 94 (2007) 647–659.

- Fox et al. [2011] E. B. Fox, E. B. Sudderth, M. I. Jordan, A. S. Willsky, Bayesian nonparametric inference of switching dynamic linear models, IEEE Transactions on Signal Processing 59 (2011) 1569–1585.

- Esteller et al. [2001] R. Esteller, J. Echauz, T. Tcheng, B. Litt, B. Pless, Line length: an efficient feature for seizure onset detection, in: Proceedings of the 23rd Engineering in Medicine and Biology Society Conference, 2001.

- Wulsin [2013] D. F. Wulsin, Bayesian Nonparametric Modeling of Epileptic Events, Ph.D. thesis, University of Pennsylvania, 2013.

- Gelman et al. [2004] A. Gelman, J. B. Carlin, H. S. Stern, D. B. Rubin, Bayesian Data Analysis, second ed., Chapman & Hall/CRC, Boca Raton, Florida, 2004.

- Green [1995] P. J. Green, Reversible jump Markov chain Monte Carlo computation and Bayesian model determination, Biometrika 82 (1995) 711–732.

- Richardson and Green [1997] S. Richardson, P. J. Green, On Bayesian analysis of mixtures with an unknown number of components, Journal of the Royal Statistical Society, Series B 59 (1997) 731–792.

- Brooks et al. [2011] S. P. Brooks, A. Gelman, G. L. Jones, X. Meng (Eds.), Handbook of Markov Chain Monte Carlo, Chapman & Hall/CRC, Boca Raton, Florida, 2011.

- Fox [2009] E. B. Fox, Bayesian Nonparametric Learning of Complex Dynamical Phenomena, Ph.D. thesis, Massachusetts Institute of Technology, 2009.

Appendix A HMM sum-product algorithm

Consider a hidden Markov model of a sequence with corresponding discrete states , each of which takes one of values. The joint probability of and is

| (20) |

sometimes called a forward message, which depends on a recursive call for and with

| (21) |

Calculating the marginal likelihood simply involves one last marginalization over ,

| (22) |

Algorithm 2 provides a numerically stable recipe for calculating this marginal likelihood.
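To make the forward recursion concrete, the following Python sketch evaluates the log marginal likelihood of a finite-state HMM with per-step normalization for numerical stability. It is a generic illustration in the spirit of Algorithm 2 rather than a transcription of it; the argument names (`log_pi0` for the initial state distribution, `log_A` for the transition matrix with rows indexing the previous state, `log_lik` for the per-time, per-state observation log-likelihoods) are assumptions introduced here.

```python
import numpy as np

def log_marginal_likelihood(log_pi0, log_A, log_lik):
    """log p(y_{1:T}) via forward filtering.

    log_pi0: (K,)   log initial state probabilities
    log_A:   (K, K) log transition probabilities, log_A[j, k] = log p(z_t=k | z_{t-1}=j)
    log_lik: (T, K) log p(y_t | z_t=k)
    """
    T, K = log_lik.shape
    log_alpha = log_pi0 + log_lik[0]
    total = 0.0
    for t in range(T):
        if t > 0:
            # alpha_t(k) = p(y_t | k) * sum_j alpha_{t-1}(j) A[j, k]
            log_alpha = log_lik[t] + np.logaddexp.reduce(
                log_alpha[:, None] + log_A, axis=0)
        # Normalize the message and accumulate the log normalizer
        norm = np.logaddexp.reduce(log_alpha)
        total += norm
        log_alpha = log_alpha - norm
    return total
```

The sum of the per-step log normalizers equals the log marginal likelihood, so no product of small probabilities is ever formed explicitly.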

We can sample the states from their joint distribution, also known as block sampling, via a similar recursive formulation. The conditional likelihood of the last samples given the state at is

| (23) |

which depends recursively on the backward messages for each . To sample at once we use the joint posterior distribution of the entire state sequence , which factors into

| (24) |

If we first sample , we can condition on it to then sample and continue in this fashion until we finish with . The posterior for is the product of the backward message, the likelihood of given , and the probability of given ,

| (25) |

where for . A numerically stable recipe for this backward-filtering forward-sampling is given in Algorithm 3.
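The block-sampling procedure can be sketched in Python as follows. This is a minimal generic version, assuming a finite state space and rescaling each backward message to sum to one; it is meant to illustrate the idea behind Algorithm 3, not to reproduce it. The names `pi0`, `A`, and `lik` are assumptions of the example.

```python
import numpy as np

def sample_states(pi0, A, lik, rng):
    """Draw z_{1:T} from p(z_{1:T} | y_{1:T}) for a finite-state HMM.

    pi0: (K,)   initial state probabilities
    A:   (K, K) transition matrix, A[j, k] = p(z_t=k | z_{t-1}=j)
    lik: (T, K) observation likelihoods p(y_t | z_t=k)
    """
    T, K = lik.shape
    # Backward filtering: beta[t, j] is proportional to p(y_{t+1:T} | z_t = j)
    beta = np.ones((T, K))
    for t in range(T - 2, -1, -1):
        b = A @ (lik[t + 1] * beta[t + 1])
        beta[t] = b / b.sum()              # rescale to avoid underflow
    # Forward sampling: condition each state on the one just drawn
    z = np.empty(T, dtype=int)
    prev = pi0
    for t in range(T):
        p = prev * lik[t] * beta[t]
        p = p / p.sum()
        z[t] = rng.choice(K, p=p)
        prev = A[z[t]]
    return z
```

Rescaling the backward messages leaves the sampling distribution unchanged because each conditional is renormalized before a state is drawn.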

Appendix B Individual channel variables posterior

Sampling the variables associated with the individual channel involves first sampling active features (while marginalizing ), then conditioning on these feature assignments to block sample the state sequence , and finally sampling the transition distribution given the feature indicators and state sequence . Explicit algorithms for this sampling are given in Wulsin [26, Section 4.2.1].

Channel marginal likelihood

Let index the neighboring channels in the graph upon which channel is conditioned. The conditional likelihood of observation under AR model given the neighboring observations at time is

| (26) |

for

| (27) |

which follows from the conditional distribution of the multivariate normal [27, pg. 579]. Using the forward-filtering scheme (see Algorithm 2) to marginalize over the exponentially many state sequences , we can calculate the channel marginal likelihood,

| (28) |

of channel ’s observations over all given the observations and the assigned states of neighboring channels and given the event state sequence . As previously discussed, taking the non-zero elements of the infinite-dimensional transition distributions , derived from and as in Eq. (9), yields a set of -dimensional active feature transition distributions , reducing this marginalization to a series of matrix-vector products.
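The conditional Gaussian computation underlying this likelihood can be illustrated with a short Python sketch. It evaluates the log density of one channel's innovation given the innovations of its neighbors under a zero-mean multivariate normal, using the standard conditional-normal formulas. The names `eps` (innovation vector at one time point), `Delta` (innovation covariance for the current event state), and `nbrs` (neighbor indices) are assumptions for this example, and the AR mean term is taken to have been subtracted already.

```python
import numpy as np

def conditional_channel_loglik(eps, Delta, i, nbrs):
    """log p(eps[i] | eps[nbrs]) for eps ~ N(0, Delta)."""
    nbrs = list(nbrs)
    S_nn = Delta[np.ix_(nbrs, nbrs)]       # covariance among the neighbors
    S_in = Delta[i, nbrs]                  # cross-covariance channel i <-> neighbors
    w = np.linalg.solve(S_nn, S_in)        # S_nn^{-1} S_ni without forming the inverse
    mean = w @ eps[nbrs]                   # conditional mean
    var = Delta[i, i] - w @ S_in           # conditional variance
    return -0.5 * (np.log(2.0 * np.pi * var) + (eps[i] - mean) ** 2 / var)
```

The linear solve involves only a matrix whose size equals the number of neighbors, so its cost stays bounded when each channel has a small, fixed neighborhood, whereas conditioning on all other channels requires solving a system that grows with the number of channels.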

Sampling active features,

We briefly describe the active feature sampling scheme given in detail by Fox et al. [5]. Recall that for our HIW-spatial BP-AR-HMM, we need to condition on neighboring channel state sequences and event state sequences . Sampling the feature indicators for channel via the Indian buffet process (IBP) involves considering those features shared by other channels and those unique to channel . Let denote the total number of active features used by at least one of the channels. We consider the set of shared features across channels not including those specific to channel as and the set of unique features for channel as .

Shared features

The posterior for each shared feature for channel is given by

| (29) |

where the marginal likelihood of term (see Eq. 28) follows from the sum-product algorithm. Recalling the form of the IBP posterior predictive distribution, we have , where denotes the number of other channels that use feature . We use this posterior to formulate a Metropolis-Hastings proposal that flips the current indicator value to its complement with probability ,

| (30) |

where

| (31) |
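A hedged sketch of this flip proposal in Python is given below. It assumes the IBP predictive prior for a shared feature is the fraction of other channels using it, and that the two marginal log-likelihoods (feature on and feature off) have already been computed with the forward algorithm. All names are hypothetical; the exact form used in our sampler follows Fox et al. [5].

```python
import numpy as np

def flip_shared_feature(f_cur, m_other, n_channels, loglik_on, loglik_off, rng):
    """Metropolis-Hastings flip of one shared feature indicator.

    f_cur:      current indicator value (0 or 1)
    m_other:    number of other channels using the feature (1 <= m_other < n_channels)
    loglik_on:  log marginal likelihood of the channel with the feature active
    loglik_off: log marginal likelihood of the channel with the feature inactive
    """
    prior_on = m_other / n_channels        # assumed IBP predictive probability
    log_post = {1: np.log(prior_on) + loglik_on,
                0: np.log1p(-prior_on) + loglik_off}
    f_new = 1 - f_cur                      # always propose the complement
    log_ratio = log_post[f_new] - log_post[f_cur]
    # Accept with probability min(1, exp(log_ratio))
    return f_new if np.log(rng.random()) < min(0.0, log_ratio) else f_cur
```

Working with the log ratio avoids overflow when the two marginal likelihoods differ by many orders of magnitude, which is common for long recordings.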

Unique features

We either propose a new feature or remove a unique feature for channel using a birth and death reversible jump MCMC sampler [28, 29, 30] (see Fox et al. [16] for details relevant to the BP-AR-HMM). We denote the number of unique features for channel as . We define the vector of shared feature indicators as and that for unique feature indicators as , which together define the full feature indicator vector for channel . Similarly, and describe the model dynamics and transition parameters associated with these unique features. We propose a new unique feature vector and corresponding model dynamics and transition parameters (sampled from their priors in the case of feature birth) with a proposal distribution of

| (32) |

A new unique feature is proposed with probability and each existing unique feature is removed with probability . This proposal is accepted with probability

| (33) |

Channel state sequence,

We block sample the state sequence for all the time points of channel , given that channel’s feature-constrained transition distributions , the state parameters , the observations , and the neighboring observations and current states . The joint probability of the state sequence is given by

| (34) |

Again following the backward filtering forward sampling scheme (Algorithm 3), at each time point we sample state conditioned on by marginalizing . The conditional probability of is given by

| (35) |

where is the transition distribution given the assigned state at , is the vector of likelihoods under each possible state at time (as in Eq. (26)), and is the vector of backwards messages (see Eq. (23)) from time point to .

Channel transition parameters,

Following [20, Supplement], the posterior for the transition variable is given by

| (36) |

where denotes the number of times channel transitions from state to state . We can sample from this posterior via two auxiliary variables,

| (37) |

Appendix C Event state variables posterior

Since we model the event state process with a (truncated approximation to the) HDP-HMM, inference is more straightforward than with the channel states. We block sample the event state sequence and then sample the event state transition distributions .

Event marginal likelihood

Let denote the vector of channel states at time . Since the space of is exponentially large, we cannot integrate it out to compute the marginal conditional likelihood of the data given the event state sequence (and model parameters). Instead, we consider the conditional likelihood of an observation at time given channel states and event state ,

| (38) |

Recalling Eq. (3), we see that this conditional likelihood of is equivalent to a zero-mean multivariate normal model on the channel innovations ,

As with the channel marginal likelihoods, we use the forward-filtering algorithm (see Algorithm 2) to marginalize over the possible event state sequences , yielding a likelihood conditional on the channel states and autoregressive parameters , in addition to the event transition distribution and event state covariances ,

| (39) |

Event state sequence,

The mechanics of sampling the event state sequence directly parallel those of sampling the individual channel state sequences . The joint probability of the event state sequence is given by

| (40) |

We again use the backward filtering forward sampling scheme of the sum-product algorithm to block sample each event state whose conditional probability distribution over the states is given by

| (41) |

where is the transition distribution given the assigned state at , is the vector of likelihoods under each of the possible states at time (as in Eq. (38)), and is again the vector of backwards messages from time point to . denotes element-wise product.

Event transition parameters,

The Dirichlet posterior for the event state ’s transition distribution simply involves the transition counts from event state to all states,

| (42) |

for global weights , concentration parameter , and self-transition parameter .
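For illustration, the conjugate update for the event transition distributions can be sketched in Python as below, assuming the usual weak-limit sticky HDP-HMM form in which each row's Dirichlet parameter is the scaled global weights, plus the self-transition bonus on the diagonal, plus the observed transition counts. The argument names (`counts`, `beta`, `alpha`, `kappa`) are assumptions chosen for the example.

```python
import numpy as np

def sample_event_transitions(counts, beta, alpha, kappa, rng):
    """Sample each row of the event transition matrix from its Dirichlet posterior.

    counts: (L, L) counts[l, m] = number of transitions from event state l to m
    beta:   (L,)   global weights
    alpha:  concentration parameter
    kappa:  self-transition (sticky) parameter
    """
    L = counts.shape[0]
    conc = alpha * beta[None, :] + kappa * np.eye(L) + counts
    return np.vstack([rng.dirichlet(conc[l]) for l in range(L)])
```

Because the rows are conditionally independent given the counts and global weights, they can be sampled in any order or in parallel.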

Global transition parameters,

The Dirichlet posterior of the global transition distribution involves the auxiliary variables ,

| (43) |

where these auxiliary variables are defined as

| (44) |

and . See Fox [31, Appendix A] for full derivations.

Appendix D Hyperparameters posterior

Below we give brief descriptions for the MCMC sampling of the hyperparameters in our model. Full derivations are given in Fox [31, Section 5.2.3, Appendix C].

Channel dynamics model hyperparameters, ,

We use Metropolis-Hastings steps to propose a new value from gamma distributions with fixed variance and accept with probability ,

| (45) |

where , , and we have a prior on . The likelihood term follows from the Dirichlet distribution and is given by

| (46) |

where that for is similar. Recall that the transition parameters are independent over , and thus their likelihoods multiply. The proposal and acceptance ratio for is similar.

Channel active features model hyperparameter

We place a prior on , which implies a gamma posterior of the form

| (47) |

where denotes the number of channel states activated in at least one of the channels.
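A minimal Python sketch of this update follows, assuming a gamma prior in the shape-rate parameterization and the standard IBP result that the posterior shape increases by the number of active features while the rate increases by the harmonic number of the channel count. The parameter names `a` and `b` are assumptions for the example.

```python
import numpy as np

def sample_ibp_mass(k_plus, n_channels, a, b, rng):
    """Draw the IBP mass parameter from its gamma posterior.

    k_plus:     number of features active in at least one channel
    n_channels: number of channels
    a, b:       shape and rate of the gamma prior (assumed parameterization)
    """
    harmonic = np.sum(1.0 / np.arange(1, n_channels + 1))
    # NumPy parameterizes the gamma by shape and scale, so scale = 1 / rate
    return rng.gamma(shape=a + k_plus, scale=1.0 / (b + harmonic))
```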

Event dynamics model hyperparameters, , , ,

Instead of sampling and independently, we introduce an additional parameter and sample and , which is simpler than sampling and independently.

With a prior on , we use auxiliary variables and to define the posterior,

| (48) |

where is the sum over auxiliary variables defined in Eq. (44), and the auxiliary variables and are sampled as

With a prior on , we use auxiliary variables to define the posterior,

| (49) |

For over , the posterior of the auxiliary variable is

| (50) |

With a prior on , we use auxiliary variables and to define the posterior,

| (51) |

The auxiliary variables are sampled via

Appendix E Autoregressive state coefficients posterior

Recall that our prior on the autoregressive coefficients is a multivariate normal with zero mean and covariance ,

| (52) |

The conditional event likelihood given the channel states and the event states is

| (53) |

The product of these prior and likelihood terms is the joint distribution over and ,

| (54) |

We take a brief tangent to prove a useful identity,

Lemma E.1.

Let the column vector and the symmetric matrix be defined as

where , , , , , and . Then

| (55) |

Proof.

∎

Note that this identity also holds for any permutation applied to the rows of and the rows and columns of . We can now manipulate the likelihood term of Eq. (54) into a form that separates from . Suppose that denotes the indices of the channels where and denotes those for which . Furthermore, we use the superscript indexing on these two sets of indices to select the corresponding portions of the vector and the , , and matrices. We start by decomposing the likelihood term into three parts,

| (56) |

which we then insert into our previous expression for the joint distribution of and (Eq. (54)),

| (57) |

Conditioning on allows us to absorb the third term of the sum into the proportionality, and after replacing with a more explicit expression, we have

| (58) |

which we can further expand to yield

| (59) |

Absorbing more terms unrelated to into the proportionality, we have

| (60) |

which after some rearranging gives

| (61) |

Before completing the square, we will find it useful to introduce a bit more notation to simplify the expression,

yielding

| (62) |

We desire an expression in the form for unknown and so that it conforms to the multivariate normal density with mean and precision . We already have our value from the quadratic term above,

| (63) |

which allows us to solve the cross-term for ,

| (64) |

We can pull the final required term from the proportionality and complete the square. Thus, we have the form of the posterior for ,

| (65) |

where

| (66) |
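The completing-the-square step can be illustrated on a deliberately simplified case: a single series with known innovation variance and a zero-mean Gaussian prior on its AR coefficients. The sketch below ignores the cross-channel conditioning handled by Lemma E.1 and the index-set decomposition above, so it is not the posterior derived in this appendix, only the same pattern of reading the precision from the quadratic term and the mean from the cross term. The names `sigma2` and `Sigma0` are assumptions of the example.

```python
import numpy as np

def ar_coefficient_posterior(y, r, sigma2, Sigma0):
    """Gaussian posterior over order-r AR coefficients of one series.

    Model (simplified): y[t] = a^T [y[t-1], ..., y[t-r]] + e[t], e[t] ~ N(0, sigma2),
    with prior a ~ N(0, Sigma0). Returns the posterior mean and precision.
    """
    y = np.asarray(y, dtype=float)
    # Row t of X holds the r previous samples [y[t-1], ..., y[t-r]]
    X = np.column_stack([y[r - j - 1 : len(y) - j - 1] for j in range(r)])
    target = y[r:]
    # Precision: coefficient of the quadratic term in a
    Lambda = np.linalg.inv(Sigma0) + X.T @ X / sigma2
    # Mean: solve the cross term Lambda @ mu = X^T y / sigma2
    mu = np.linalg.solve(Lambda, X.T @ target / sigma2)
    return mu, Lambda
```

With a diffuse prior the posterior mean approaches the least-squares estimate, which provides a quick sanity check on an implementation.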

Appendix F Experiment Parameters

Below, we give explicit values for the various priors and parameters used in our experiments.

| parameter | description | value |
|---|---|---|
| | number of time series per event | 6 |
| | AR model order | 1 |
| | prior mean | 0 |
| | prior covariance | |
| | truncated number of event states | 20 |
| | HIW prior degrees of freedom | |
| | HIW prior scale | |
| | gamma prior | |
| | gamma prior | |
| | beta prior | |
| | gamma prior | |
| | gamma prior | |
| | Metropolis-Hastings proposal variance | |
| | Metropolis-Hastings proposal variance | |
| | gamma prior | |

| parameter | description | value |
|---|---|---|
| | number of time series per event | 16 and 6 |
| | AR model order | 5 |
| | prior mean | |
| | prior covariance | |
| | truncated number of event states | 30 |
| | (H)IW prior degrees of freedom | |
| | (H)IW prior scale | |
| | gamma prior | |
| | gamma prior | |
| | beta prior | |
| | gamma prior | |
| | gamma prior | |
| | Metropolis-Hastings proposal variance | |
| | Metropolis-Hastings proposal variance | |
| | gamma prior | |

Acknowledgements

This work is supported in part by AFOSR Grant FA9550-12-1-0453 and DARPA Grant FA9550-12-1-0406 negotiated by AFOSR, ONR Grant N00014-10-1-0746, NINDS RO1-NS041811, RO1-NS48598, and U24-NS063930-03, and the Mirowski Discovery Fund for Epilepsy Research.