More efficient approximation of smoothing splines via space-filling basis selection

Abstract

We consider the problem of approximating smoothing spline estimators in a nonparametric regression model. When applied to a sample of size , the smoothing spline estimator can be expressed as a linear combination of basis functions, requiring computational time when the number of predictors . Such a sizable computational cost hinders the broad applicability of smoothing splines. In practice, the full sample smoothing spline estimator can be approximated by an estimator based on randomly-selected basis functions, resulting in a computational cost of . It is known that these two estimators converge at the identical rate when is of the order , where depends on the true function , and depends on the type of spline. Such is called the essential number of basis functions. In this article, we develop a more efficient basis selection method. By selecting the ones corresponding to roughly equal-spaced observations, the proposed method chooses a set of basis functions with a large diversity. The asymptotic analysis shows our proposed smoothing spline estimator can decrease to roughly , when . Applications on synthetic and real-world datasets show the proposed method leads to a smaller prediction error compared with other basis selection methods.

Keywords: Space-filling design; Star discrepancy; Nonparametric regression; Penalized least squares; Subsampling.

1 Introduction

Consider the nonparametric regression model , where denotes the th observation of the response, represents an unknown function to be estimated, is the th observation of the predictor variable, and are independent and identically distributed random errors with zero mean and unknown variance . The function can be estimated by minimizing the penalized least squares criterion,

| (1) |

where denotes a quadratic roughness penalty (Wahba, 1990; Wang et al., 2011; Gu, 2013). The smoothing parameter here administrates the trade-off between the goodness-of-fit of the model and the roughness of the function . In this paper, expression (1) is minimized in a reproducing kernel Hilbert space , which leads to a smoothing spline estimate for .

Although univariate smoothing splines can be computed in time (Reinsch, 1967), it takes time to find the minimizer of (1) when . Such a computational cost hinders the use of smoothing splines for large samples. To reduce the computational cost for smoothing splines, extensive efforts have been made to approximate the minimizer of (1) by restricting the estimator to a subspace . Let the dimension of the space be and the restricted estimator be . Compared with the computational cost of calculating , the computational cost of can be reduced to . Along this line of thinking, numerous studies have been developed in recent decades. Luo and Wahba (1997) and Zhang et al. (2004) approximated the minimizer of (1) using variable selection techniques. Pseudosplines (Hastie, 1996) and penalized splines (Ruppert et al., 2009) were also developed to approximate smoothing splines.

Although these methods have already yielded impressive algorithmic benefits, they are usually ad hoc in choosing the value of . The value of regulates the trade-off between the computational time and the prediction accuracy. One fundamental question is how small can be in order to ensure the restricted estimator converge to the true function at the identical rate as the full sample estimator . To answer this question, Gu and Kim (2002); Ma et al. (2015) developed random sampling methods for selecting the basis functions and established the coherent theory for the convergence of the restricted estimator . To ensure that has the same convergence rate as , both methods in Gu and Kim (2002) and Ma et al. (2015) require be of the order , where is an arbitrary small positive number, depends on the true function , and depends on the fitted spline. In Gao (1999), it is shown that fewer basis functions are needed to warrant the aforementioned convergence rate if we select the basis functions , where are roughly equal-spaced. However, they only provide the theory in the univariate predictor case, and their method cannot be directly applied to multivariate cases.

In this paper, we develop a more efficient computational method to approximate smoothing splines. The distinguishing feature of the proposed method is that it considers the notion of diversity of the selected basis functions. We propose the space-filling basis selection method, which chooses the basis functions with a large diversity, by selecting the ones that correspond to roughly uniformly-distributed observations. When , we show that the smoothing spline estimator proposed here has the same convergence rate as the full sample estimator, and the order of the essential number of basis function is reduced to .

2 Smoothing splines and the basis selection method

Let be a reproducing kernel Hilbert space, where is a squared semi-norm. Let be the null space of and assume that is a finite-dimensional linear subspace of with basis in which is the dimension of . Let be the orthogonal complement of in such that . The space is a reproducing kernel Hilbert space with as the squared norm. The reproducing kernel of is denoted by . The well-known representer theorem (Wahba, 1990) states that there exist vectors and , such that the minimizer of (1) in is given by Let be the vector of response observations, be the matrix with the th entry , and be the matrix with the th entry . Solving the minimization problem in (1) thus is equivalent to solving

| (2) |

where the smoothing parameter can be selected based on the generalized cross-validation criterion (Wahba and Craven, 1978). In a general case where and , the computation cost for calculating in equation (2) is of the order , which is prohibitive when the sample size is considerable. To reduce this computational burden, one can restrict the full sample estimator to a subspace , where . Here, , termed as the effective model space, can be constructed by selecting a subsample from . Such an approach is thus called the basis selection method.

Denote a matrix with the th entry and with the th entry . The minimizer of (1) in the effective model space thus can be written as and the coefficients, and can be obtained through solving

| (3) |

The evaluation of the restricted estimator at sample points thus satisfies , where . In a general case where , the overall computational cost for the basis selection method is , which is a significant reduction compared with . Recall that the value of controls the trade-off between the computational time and the prediction accuracy. To ensure that converges to the true function at the same rate as , researchers showed that the essential number of basis functions needs to be of the order , where is an arbitrary small positive number (Kim and Gu, 2004; Ma et al., 2015). In the next section, we present the proposed space-filling basis selection method, which reduces such an order to .

3 Space-filling basis selection

3.1 Motivation and Notations

To motivate the development of the proposed method, we first re-examine the ensemble learning methods, which are well-known in statistics and machine learning (Dietterich, 2002; Rokach, 2010). To achieve better predictive performance than a single learner 222A learner is either a model or a learning algorithm., ensemble learning methods first build a committee which consists of a number of different learners, then aggregate the predictions of these learners in the committee. The aggregation is usually achieved by employing the majority vote or by calculating a weighted average. The diversity among the learners in the committee holds the key to the success of the ensemble learning methods. A large diversity in the committee yields a better performance of ensemble learning methods (Kuncheva and Whitaker, 2003).

The restricted smoothing spline estimator can be considered as an ensemble learning method. In particular, the prediction of is conducted by taking a weighted average of the predictions of the selected basis functions , , in addition to the basis functions in the null space . Inspired by Kuncheva and Whitaker (2003), we propose to select a subsample , such that the diversity among the basis functions is as large as possible. One crucial question is how to measure the diversity among a set of basis functions. Notice that adjacent data points, i.e., () yields similar basis functions, i.e., . On the other hand, if is far away from , the basis function tends to be different from . These observations inspire us to select a set of basis functions where are as uniformly-distributed as possible. The uniformly-distributed property, usually termed as the space-filling property in the experimental design literature (Pukelsheim, 2006), can be systematically measured by the star discrepancy.

Since the star discrepancy is defined for data in , in practice, we need to map the data with arbitrary distribution to this domain. Suppose are independent and identically distributed observations generated from the cumulative distribution function with bounded support . Suppose is a transformation, such that has the uniform distribution on . In a simple case where and is known, we can find the transformation by setting . In a more general case where and is unknown, the transformation can be calculated via the optimal transport theory (Villani, 2008). However, finding the exact solution via the optimal transport theory can be time-consuming. Instead, one may approximate the transformation using the iterative transformation approach (Pukelsheim, 2006) or the sliced optimal transport map approach (Rabin et al., 2011). The computational cost of these two approaches is of the order , where denotes the number of iterations (Kolouri et al., 2018; Cuturi and Doucet, 2014; Bonneel et al., 2015). Such a computational cost is negligible compared with the computational cost of the proposed method. In practice, the data can always be preprocessed using the transformation of . Without loss of generality, may be assumed to be independent and identically distributed observations generated from the uniform distribution on .

3.2 Star discrepancy and space-filling design

Let , be a hyper-rectangle and be the indicator function. The local discrepancy and the star discrepancy are defined as follows (Fang et al., 2005; Pukelsheim, 2006).

Given in and a hyper-rectangle , the corresponding local discrepancy is defined as The star discrepancy corresponding to is defined as

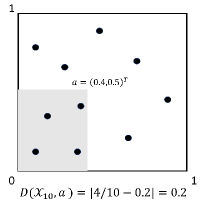

The local discrepancy measures the difference between the volume of the hyper-rectangle and the fraction of the data points located in . The local discrepancy is illustrated in the left panel of Fig. 1. The star discrepancy calculates the supreme of all the local discrepancy over . In other words, the smaller the is, the more space-filling the data points are (Fang et al., 2005).

|

|

Chung (1949) showed that when is generated from the uniform distribution in , converges to 0 with the order of convergence . Faster convergence rate can be achieved using space-filling design methods (Pukelsheim, 2006) and the low-discrepancy sequence method (Halton, 1960; Sobol, 1967; Owen, 2003). The space-filling design methods, developed in the experimental design literature, aim to generate a set of design points that can cover the space as uniformly as possible. For further details, please refer to Wu and Hamada (2011); Fang et al. (2005); Pukelsheim (2006). The low-discrepancy sequence method Such a method is frequently applied in the field of quasi-Monte Carlo and is extensively employed for numerical integration. For a Sobol sequence , one type of low-discrepancy sequence, it is known that is of the order , which is roughly the square order for fixed . For more in-depth discussions on the quasi-Monte Carlo methods, see e.g., Lemieux (2009); Leobacher and Pillichshammer (2014); Dick et al. (2013) or Chapter 5 in Glasserman (2013) and references therein.

Existing studies suggested that space-filling property can be exploited to achieve a fast convergence rate for numerical integration and response surface estimation (Fang et al., 2005; Pukelsheim, 2006). These results inspire us to select the space-filling basis functions in smoothing splines. Unfortunately, the existing techniques of space-filling design cannot be applied to our basis selection problem directly due to the following fact. The design space in space-filling design methods is usually continuous, whereas our sample space is finite and discrete. We propose an algorithm to overcome the barrier.

3.3 Main algorithm

We shall develop a space-filling basis selection method, in which we select the space-filling data points in a computationally-attractive manner. First, a set of design points are generated, either using low-discrepancy sequence or space-filling design methods. Subsequently, the nearest neighbor is selected for each , from the sample points . Thus, can approximate the design points well, if is sufficiently close to , for . The proposed method is summarized as follows.

-

•

Step 1. Generate a set of design points from .

-

•

Step 2. Select the nearest neighbor for each design point from . Let the selected data points be ,.

-

•

Step 3. Minimize the penalized least squares criterion (1) over the following effective model space

The proposed algorithm is illustrated through a toy example in the right panel of Fig. 1. One hundred data points (gray dots) are generated from the uniform distribution in , and a set of design points (black triangles) are generated through the max projection design (Joseph et al., 2015), a recently developed space-filling design method. The nearest neighbor to each design point is selected (circle). It is observed that the selected subsample is space-filling since it can effectively approximate the design points.

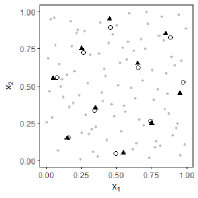

In Fig. 2, the proposed space-filling basis selection method is compared with the uniform basis selection method (Gu and Kim, 2002) and the adaptive basis selection method (Ma et al., 2015) using a two-dimensional toy example. We generate 5000 data points from the uniform distribution in . The leftmost panel in Fig. 2 presents the heat map for the true response surface . The dimension of the effective model space is set to be , for all basis selection methods. The selected basis functions are labeled as solid dots in each panel. The right three panels of Fig. 2 plot the heat maps of the spline estimates of all three basis selection methods. In the uniform basis selection method, the default random number generator in R is employed to select the basis functions. It is observed that the selected points are not uniformly distributed. This is a very common phenomenon for uniform basis selection since the randomly selected points do not necessarily look uniformly-distributed, especially when the number of selected points is small. In contrast, it is observed that the basis functions selected by the proposed method are space-filling. Using the space-filling design techniques, the proposed method overcomes the pitfall of uniform basis selection method and uniformly-distribute the selected points. The true response can be better estimated using the proposed method than using other methods.

Now we calculate the computational cost of the proposed method. In Step 1, the design points can be generated beforehand; thus, the computational cost in Step 1 can be ignored. For the nearest neighbor search in Step 2, we employ the -d tree method, which takes flops (Bentley, 1975; Wald and Havran, 2006). The computational cost of this step can be further reduced if we are willing to sacrifice the accuracy of the searching results, e.g., using those approximate nearest neighbor searching algorithms (Altman, 1992; Arya et al., 1994). For Step 3, computing the smoothing spline estimates in the restricted space is of the order , as discussed in Section 2.2. In summary, the overall computational cost for the space-filling basis selection method is of the order .

4 Convergence rates for function estimation

Recall that the data points are assumed to be generated from the uniform distribution on . Thus, for each coordinate , the corresponding marginal density . We define that . The following four regularity conditions are required for the asymptotic analysis, and the first three are the identical conditions employed by Ma et al. (2015), in which one can find more technical discussions.

Condition 1. The function is completely continuous with respect to ;

Condition 2. for some and , for sufficiently large ;

Condition 3. for all and , , where , are the eigenfunctions associated with and in , denotes a positive constant;

Condition 4. for all and , , where denotes the total variation, , and represents a positive constant. The total variation here is defined in the sense of Hardy and Krause (Owen, 2003). As a specific case when , the total variation , revealing that a smooth function displays a small total variation. Intuitively, the total variation measures how wiggly the function is.

Condition 1 indicates that there exist a sequence of eigenfunctions and the associated sequence of eigenvalues satisfying and , where is the Kronecker delta. The growth rate of the eigenvalues dictates how fast should approach to 0, and further what the convergence rate of smoothing spline estimates is (Gu, 2013). Notice that the eigenfunctions s have a close relationship with the Demmler-Reinsch basis, which are orthogonal vectors representing norm (Ruppert, 2002). The eigenfunctions s can be calculated explicitly in several specific scenarios. For instance, s are the sine and cosine functions when , where denotes a periodic function on . For more details on the construction of s can be found in Section 9.1 of Gu (2013).

We now present our main theoretical results, and all the proofs are relegated to the Supplementary Material. For a set of design points of size , we now assume the star discrepancy converges to zero at the rate of , or for an arbitrary small positive number . Such a convergence rate is warranted if is generated from a low-discrepancy sequence or space-filling design methods, as discussed in Section 3.1. Recall that the proposed method aims to select a subsample that is space-filling, and the key to success depends on whether the chosen subsample can effectively approximate the design points . The following lemma bounds the difference between and in terms of star discrepancy.

Lemma 1

Suppose is fixed and , for any arbitrary small . If , as , we have

Lemma 1 states that the selected subsample can effectively approximate the design points in the sense that the convergence rate of is similar to that of . The following theorem is the Koksma–Hlawka inequality, which will be used in proving our main theorem. See Kuipers and Niederreiter (2012) for a proof.

Theorem 1 (Koksma–Hlawka inequality)

Let be a set of data points in , and be a function defined on with bounded total variation . We have

Lemma 2

If , under Condition 4, for all and , we have

Lemma 2 shows the advantage of , the subsample selected by the proposed method, over a randomly selected subsample . To be specific, as a direct consequence of Condition 3, we have for all and . Lemma 2 therefore implies the subsample can more efficiently approximate the integration , for all and . We now present our main theoretical result.

Theorem 2

Suppose for some and is an arbitrary small positive number. Under Conditions 1 - 4, , as and , we have . In particular, if , the estimator achieves the optimal convergence rate

It is shown in Theorem 9.17 of Gu (2013) that the full sample smoothing spline estimator has the convergence rate, under some regularity conditions. Theorem 2 thus states that proposed estimator achieves the same convergence rate as the full sample estimator , under two extra conditions imposed on (a) , and (b) as . Moreover, Theorem 2 indicates that to achieve the identical convergence rate as the full sample estimator , the proposed approach requires a much smaller number of basis functions, in the case when . indicates an essential choice of for the proposed estimator should satisfy , when . For comparison, both the random basis selection (Gu and Kim, 2002) and the adaptive basis selection method (Ma et al., 2015) require the essential number of basis functions to be . As a result, the proposed estimator is more efficient since it reduces the order of the essential number of basis functions.

Given , when , it follows naturally when is satisfied. Otherwise, when , becomes sufficient but not necessary for . We thus stress that the essential number of basis functions for the proposed method, , can only be achieved when . The parameter in Theorem 2 is closely associated with the true function and will affect the convergence rate of the proposed estimator. Intuitively, the larger the is, the smoother the function will be. For , the optimal convergence rate of falls in the interval . To the best of our knowledge, the problem of selecting the optimal has rarely been studied, and one exception is Serra and Krivobokova (2017), where the author studied such a problem in one-dimensional cases. Serra and Krivobokova (2017) provided a Bayesian approach for selecting the optimal parameter, named , which is known to be proportional to . Nevertheless, since the constant is usually unknown, such an approach still cannot be used to select the optimal in practice. Furthermore, whether such an approach can be extended to the high-dimensional cases remains unclear.

For the dimension of the effective model space , a suitable choice is in the following two cases. Case 1. Univariate cubic smoothing splines with the penalty , and ; Case 2. Tensor-product splines with , where . For , the dimension roughly lies in the interval .

5 Simulation Results

To assess the performance of the proposed space-filling basis selection method, we carry out extensive analysis on simulated datasets. We report some of them in this section. We compare the proposed method with uniform basis selection and adaptive basis selection, and report both prediction error and running time.

The following four functions on (Lin and Zhang, 2006) are used as the building blocks in our simulation study, , , , and . In addition, we also use the following functions on (Wood, 2003) as the building blocks,

where and . The signal-to-noise ratio (SNR), defined as , is set to be at two levels: 5 and 2. We generate replicated samples with sample sizes and dimensions uniformly on from the following four regression settings,

(1) A 2-d function ;

(2) A 2-d function ;

(3) A 4-d function ;

(4) A 6-d function .

In the simulation, is set to be and , based on the asymptotic results. To combat the curse of dimensionality, we fit smoothing spline analysis of variance models with all main effects and two-way interactions. The prediction error is measured by the mean squared error (MSE), defined as , where denotes an independent testing dataset uniformly generated on with . The max projection design (Joseph et al., 2015) is used to generate design points in Step 1 of the proposed method. Our empirical studies suggest that the Sobol sequence and other space-filling techniques, e.g., the Latin hypercube design (Pukelsheim, 2006) and the uniform design (Fang et al., 2000), also yield similar performance.

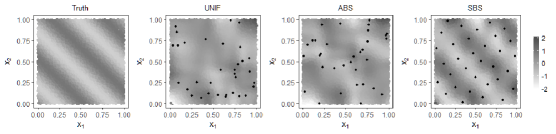

|

Figure 3 shows the MSE against the sample size on the log-log scale. Each column presents the results of a function setting as described above. We set the signal-to-noise ratio to be five and two in the upper row and the lower row, respectively. We use solid lines for the proposed method, dotted lines for adaptive basis selection method, and dashed lines for uniform basis selection method. The number of the basis functions is and for the lines with triangles and the lines with circles, respectively. The vertical bars represent standard error bars obtained from 20 replicates. The full sample estimator is omitted here due to the high computation cost. It is observed that the space-filling basis selection method provides more accurate smoothing spline predictions than the other two methods in almost all settings. Notably, the lines with circles for the space-filling basis selection method displays a linear trend similar to the lines with triangles for the other two methods. This observation reveals the proposed estimator yields a faster convergence rate than the other two methods.

More simulation results can be found in the Supplementary Material, in which we consider the regression functions that exhibit several sharp peaks. In those cases, the results suggest that both the space-filling basis selection method and the adaptive basis selection method outperform the uniform basis selection, whereas neither the space-filling basis selection method nor the adaptive basis selection method dominates each other. Moreover, the proposed space-filling basis selection method outperforms the adaptive basis selection method as the sample size gets larger.

| True function | SNR | UNIF | ABS | SBS |

|---|---|---|---|---|

| Function 1 | 5 | 0.97(0.15) | 0.90(0.05) | 0.90(0.04) |

| 2 | 0.92(0.10) | 0.87(0.04) | 0.87(0.06) | |

| Function 2 | 5 | 0.88(0.04) | 0.87(0.03) | 0.90(0.06) |

| 2 | 0.86(0.05) | 0.85(0.02) | 0.90(0.06) | |

| Function 3 | 5 | 3.92(0.24) | 3.95(0.24) | 4.04(0.19) |

| 2 | 4.08(0.30) | 4.51(0.66) | 4.27(0.39) | |

| Function 4 | 5 | 12.95(0.61) | 15.10(3.20) | 15.45(3.04) |

| 2 | 14.33(1.44) | 13.72(1.02) | 14.25(1.09) |

Table 1 summarizes the computing times of model-fitting using all methods on a synthetic dataset with and . The simulation is replicated for 20 runs using a computer with an Intel 2.6 GHz processor. In Table 1, UNIF, ABS, and SBS represent the uniform basis selection method, the adaptive basis selection method, and the proposed space-filling basis selection method, respectively. The time for calculating the smoothing parameter is not included. The result for the full basis smoothing spline estimator is omitted here due to the huge computational cost. The computational time for generating a set of design points, i.e., Step 1 in the proposed algorithm, is not included since the design points can be generated beforehand. It is observed that the computing time of the proposed method is comparable with that of the other two basis selection methods under all settings. Combining such an observation with the result in Fig. 3, it is concluded that the proposed method can achieve a more accurate prediction, without requiring much more computational time.

6 Real data example

The problem of measuring total column ozone has attracted significant attention for decades. Ozone depletion facilitates the transmission of ultraviolet radiation (290–400 nm wavelength) through the atmosphere and causes severe damage to DNA and cellular proteins that are involved in biochemical processes, affecting growth and reproduction. Statistical analysis of total column ozone data has three steps. In the first step, the raw satellite data (level 1) are retrieved by NASA. Subsequently, NASA calibrates and preprocesses the data to generate spatially and temporally irregular total column ozone measurements (level 2). Finally, the level 2 data are processed to yield the level 3 data, which are the daily and spatially regular data product released extensively to the public.

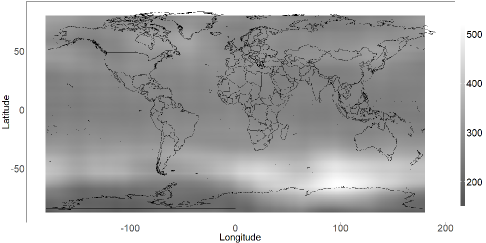

We fit the nonparametric model to a level 2 total column ozone dataset (=173,405) compiled by Cressie and Johannesson (2008). Here, is the level 2 total column ozone measurement at the th longitude, i.e., , and the th latitude, i.e., , and represent the independent and identically distributed random errors. The heat map of the raw data is presented in the Supplementary Material. The thin-plate smoothing spline is used for the model-fitting, and the proposed method is employed to facilitate the estimation. The number of basis functions is set to . The design points employed in the proposed basis selection method are yielded from a Sobol sequence (Dutang and Savicky, 2013). The heat map of the predicted image on a regular grid is presented in Fig. 4. It is seen that the total column ozone value decreases dramatically to form the ozone hole over the South Pole, around – latitudinal zone.

|

We now report computing times of the model-fitting that are performed on the identical laptop computer for the simulation studies. The computational times, in CPU seconds, are presented in parentheses, including basis selection (0.1s), model fitting (129s), and prediction (21s). Further comparison between the proposed method and other basis selection methods on this dataset can be found in the Supplementary Material.

7 Acknowledgement

This study was partially supported by the National Science Foundation and the National Institutes of Health.

References

- Altman (1992) Naomi S Altman. An introduction to kernel and nearest-neighbor nonparametric regression. The American Statistician, 46(3):175–185, 1992.

- Arya et al. (1994) Sunil Arya, David M Mount, Nathan Netanyahu, Ruth Silverman, and Angela Y Wu. An optimal algorithm for approximate nearest neighbor searching in fixed dimensions. In Proc. 5th ACM-SIAM Sympos. Discrete Algorithms, pages 573–582, 1994.

- Bentley (1975) Jon Louis Bentley. Multidimensional binary search trees used for associative searching. Communications of the ACM, 18(9):509–517, 1975.

- Bonneel et al. (2015) Nicolas Bonneel, Julien Rabin, Gabriel Peyré, and Hanspeter Pfister. Sliced and radon wasserstein barycenters of measures. Journal of Mathematical Imaging and Vision, 51(1):22–45, 2015.

- Chung (1949) Kai-Lai Chung. An estimate concerning the kolmogroff limit distribution. Transactions of the American Mathematical Society, 67(1):36–50, 1949.

- Cressie and Johannesson (2008) Noel Cressie and Gardar Johannesson. Fixed rank kriging for very large spatial data sets. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 70(1):209–226, 2008.

- Cuturi and Doucet (2014) Marco Cuturi and Arnaud Doucet. Fast computation of wasserstein barycenters. In International Conference on Machine Learning, pages 685–693, 2014.

- Dick et al. (2013) Josef Dick, Frances Y Kuo, and Ian H Sloan. High-dimensional integration: the quasi-monte carlo way. Acta Numerica, 22:133–288, 2013.

- Dietterich (2002) Thomas G Dietterich. Ensemble learning. The handbook of brain theory and neural networks, 2:110–125, 2002.

- Dutang and Savicky (2013) C Dutang and P Savicky. randtoolbox: Generating and testing random numbers. R package, page 67, 2013.

- Fang et al. (2000) Kai-Tai Fang, Dennis KJ Lin, Peter Winker, and Yong Zhang. Uniform design: Theory and application. Technometrics, 42(3):237–248, 2000.

- Fang et al. (2005) Kai-Tai Fang, Runze Li, and Agus Sudjianto. Design and Modeling for Computer Experiments. CRC Press, 2005.

- Gao (1999) Fangyu Gao. Penalized multivariate logistic regression with a large data set. Technical report, University of Wisconsin–Madison, 1999.

- Glasserman (2013) Paul Glasserman. Monte Carlo methods in financial engineering, volume 53. Springer Science & Business Media, 2013.

- Gu (2013) Chong Gu. Smoothing spline ANOVA models. Springer Science & Business Media, 2013.

- Gu and Kim (2002) Chong Gu and Young-Ju Kim. Penalized likelihood regression: general formulation and efficient approximation. Canadian Journal of Statistics, 30(4):619–628, 2002.

- Halton (1960) John H Halton. On the efficiency of certain quasi-random sequences of points in evaluating multi-dimensional integrals. Numerische Mathematik, 2(1):84–90, 1960.

- Hastie (1996) Trevor Hastie. Pseudosplines. Journal of the Royal Statistical Society. Series B (Methodological), pages 379–396, 1996.

- Joseph et al. (2015) V Roshan Joseph, Evren Gul, and Shan Ba. Maximum projection designs for computer experiments. Biometrika, 102(2):371–380, 2015.

- Kim and Gu (2004) Young-Ju Kim and Chong Gu. Smoothing spline gaussian regression: more scalable computation via efficient approximation. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 66(2):337–356, 2004.

- Kolouri et al. (2018) Soheil Kolouri, Charles E Martin, and Gustavo K Rohde. Sliced-wasserstein autoencoder: An embarrassingly simple generative model. arXiv preprint arXiv:1804.01947, 2018.

- Kuipers and Niederreiter (2012) Lauwerens Kuipers and Harald Niederreiter. Uniform distribution of sequences. Courier Corporation, 2012.

- Kuncheva and Whitaker (2003) Ludmila I Kuncheva and Christopher J Whitaker. Measures of diversity in classifier ensembles and their relationship with the ensemble accuracy. Machine learning, 51(2):181–207, 2003.

- Lemieux (2009) Christiane Lemieux. Monte Carlo and quasi-Monte Carlo sampling. Springer, New York, 2009.

- Leobacher and Pillichshammer (2014) Gunther Leobacher and Friedrich Pillichshammer. Introduction to quasi-Monte Carlo integration and applications. Springer, 2014.

- Lin and Zhang (2006) Yi Lin and Hao Helen Zhang. Component selection and smoothing in multivariate nonparametric regression. The Annals of Statistics, 34(5):2272–2297, 2006.

- Luo and Wahba (1997) Zhen Luo and Grace Wahba. Hybrid adaptive splines. Journal of the American Statistical Association, 92(437):107–116, 1997.

- Ma et al. (2015) Ping Ma, Jianhua Z Huang, and Nan Zhang. Efficient computation of smoothing splines via adaptive basis sampling. Biometrika, 102(3):631–645, 2015.

- Owen (2003) Art B Owen. Quasi-monte carlo sampling. Monte Carlo Ray Tracing: Siggraph, 1:69–88, 2003.

- Pukelsheim (2006) Friedrich Pukelsheim. Optimal design of experiments. SIAM, 2006.

- Rabin et al. (2011) Julien Rabin, Gabriel Peyré, Julie Delon, and Marc Bernot. Wasserstein barycenter and its application to texture mixing. In International Conference on Scale Space and Variational Methods in Computer Vision, pages 435–446. Springer, 2011.

- Reinsch (1967) Christian H Reinsch. Smoothing by spline functions. Numerische mathematik, 10(3):177–183, 1967.

- Rokach (2010) Lior Rokach. Ensemble-based classifiers. Artificial Intelligence Review, 33(1-2):1–39, 2010.

- Ruppert (2002) David Ruppert. Selecting the number of knots for penalized splines. Journal of computational and graphical statistics, 11(4):735–757, 2002.

- Ruppert et al. (2009) David Ruppert, Matt P Wand, and Raymond J Carroll. Semiparametric regression during 2003–2007. Electronic journal of statistics, 3:1193, 2009.

- Serra and Krivobokova (2017) Paulo Serra and Tatyana Krivobokova. Adaptive empirical bayesian smoothing splines. Bayesian Analysis, 12(1):219–238, 2017.

- Sobol (1967) I.M. Sobol. The distribution of points in a cube and the approximate evaluation of integrals. USSR Computational Mathematics and Mathematical Physics, 7(4):86–112, 1967.

- Villani (2008) Cédric Villani. Optimal transport: old and new. Springer Science & Business Media, 2008.

- Wahba (1990) Grace Wahba. Spline models for observational data. SIAM, 1990.

- Wahba and Craven (1978) Grace Wahba and Peter Craven. Smoothing noisy data with spline functions. estimating the correct degree of smoothing by the method of generalized cross-validation. Numerische Mathematik, 31:377–404, 1978.

- Wald and Havran (2006) Ingo Wald and Vlastimil Havran. On building fast kd-trees for ray tracing, and on doing that in o (n log n). In Interactive Ray Tracing 2006, IEEE Symposium on, pages 61–69. IEEE, 2006.

- Wang et al. (2011) Xiao Wang, Jinglai Shen, and David Ruppert. On the asymptotics of penalized spline smoothing. Electronic Journal of Statistics, 5:1–17, 2011.

- Wood (2003) Simon N Wood. Thin plate regression splines. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 65(1):95–114, 2003.

- Wu and Hamada (2011) CF Jeff Wu and Michael S Hamada. Experiments: planning, analysis, and optimization. John Wiley & Sons, 2011.

- Zhang et al. (2004) Hao Helen Zhang, Grace Wahba, Yi Lin, Meta Voelker, Michael Ferris, Ronald Klein, and Barbara Klein. Variable selection and model building via likelihood basis pursuit. Journal of the American Statistical Association, 99(467):659–672, 2004.