Multi-Agent Meta-Reinforcement Learning for Self-Powered and Sustainable Edge Computing Systems

Abstract

The stringent requirements of mobile edge computing (MEC) applications and functions call for high-capacity, densely deployed MEC hosts in upcoming wireless networks. However, operating such high-capacity MEC hosts can significantly increase energy consumption. Thus, a base station (BS) unit can act as a self-powered BS. In this paper, an effective energy dispatch mechanism for self-powered wireless networks with edge computing capabilities is studied. First, a two-stage linear stochastic programming problem is formulated with the goal of minimizing the total energy consumption cost of the system while fulfilling the energy demand. Second, a semi-distributed data-driven solution is proposed by developing a novel multi-agent meta-reinforcement learning (MAMRL) framework to solve the formulated problem. In particular, each BS plays the role of a local agent that explores a Markovian behavior for both energy consumption and generation while transferring time-varying features to a meta-agent. Subsequently, the meta-agent optimizes (i.e., exploits) the energy dispatch decision by accepting only the observations from each local agent together with its own state information. Meanwhile, each BS agent estimates its own energy dispatch policy by applying the learned parameters from the meta-agent. Finally, the proposed MAMRL framework is benchmarked in deterministic, asymmetric, and stochastic environments in terms of non-renewable energy usage, energy cost, and accuracy. Experimental results show that the proposed MAMRL model reduces non-renewable energy usage and energy cost compared to other baseline methods, and its prediction accuracy is evaluated as well.

Index Terms:

Mobile edge computing (MEC), stochastic optimization, meta-reinforcement learning, self-powered, demand response.

I Introduction

Next-generation wireless networks are expected to rely significantly on edge applications and functions that include edge computing and edge artificial intelligence (edge AI) [1, 2, 3, 4, 5, 6, 7]. To successfully support such edge services within a wireless network with mobile edge computing (MEC) capabilities, energy management (i.e., demand and supply) is one of the most critical design challenges. In particular, it is imperative to equip next-generation wireless networks with alternative energy sources, such as renewable energy, in order to provide extremely reliable energy dispatch at a lower energy consumption cost [8, 9, 10, 11, 12, 13, 14, 15]. An efficient energy dispatch design requires energy sustainability, which not only reduces the energy consumption cost, but also fulfills the energy demand of edge computing through the network's own renewable energy sources. Specifically, sustainable energy refers to a seamless energy flow to the MEC system that meets the energy demand without compromising future energy generation. Furthermore, to ensure sustainable MEC operation, it is essential to characterize the uncertainty of both energy consumption and generation. A summary of the challenges addressed in the literature to enable renewable energy sources for wireless networks is presented in Table I.

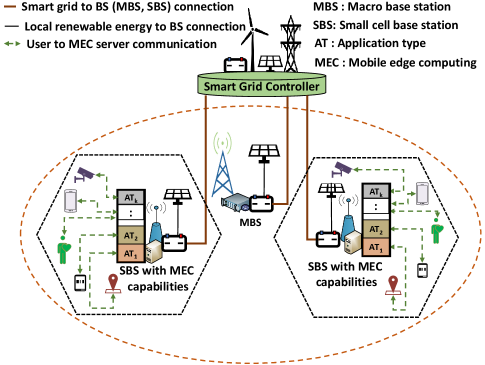

To provide sustainable edge computing for next-generation wireless systems, each base station (BS) unit with MEC capabilities can be equipped with renewable energy sources. Thus, such a BS unit relies not only on the power grid, but also on its own renewable energy sources. In particular, in a self-powered network, each wireless BS with MEC capabilities is equipped with its own renewable energy sources and can generate, consume, store, and share energy with other BS units.

Delivering seamless energy flow with a low energy consumption cost in a self-powered wireless network with MEC capabilities is complicated by uncertainty in both energy demand and generation. In particular, the randomness of the energy demand is induced by the uncertain resource (i.e., computation and communication) requests of the edge services and applications. Meanwhile, the energy generation of a renewable source (e.g., a solar panel) at each self-powered BS unit varies with the time of day. In other words, the pattern of energy demand and generation will differ from one self-powered BS unit to another. Thus, such fluctuating energy demand and generation patterns induce non-independent and identically distributed (non-i.i.d.) energy dispatch at each BS over time. To cope with this non-i.i.d. energy demand and generation, characterizing the expected amount of uncertainty is crucial to ensure a seamless energy flow to the self-powered wireless network. As such, when designing self-powered wireless networks, it is necessary to take into account this uncertainty in the energy patterns.

Table I: Summary of the challenges addressed in the literature to enable renewable energy sources for wireless networks.

| Ref. | Energy sources | MEC capabilities | Non-i.i.d. dataset | Energy dispatch | Energy cost | Remarks |
|---|---|---|---|---|---|---|
| [8] | Renewable | No | No | No | No | Activation and deactivation of BSs in a self-powered network |
| [9] | Hybrid energy | No | No | No | No | User scheduling and network resource management |
| [10] | Hybrid energy | Yes | No | No | No | Load balancing between the centralized cloud and edge server |
| [11] | Microgrid | Yes | No | Yes | No | MEC task assignment and energy demand-response (DR) management |
| [12] | Microgrid | Yes | No | Yes | No | Risk-sensitive energy profiling for microgrid-powered MEC network |
| [13] | Renewable | No | No | Yes | No | Energy load balancing among the SBSs with a microgrid |
| [14] | Smart grid enabled hybrid energy | No | No | Yes | No | Joint network resource allocation and energy sharing among the BSs |
| [15] | Hybrid energy | No | No | Yes | No | Overall system architecture for edge computing and renewable energy resources |
| This work | Smart grid enabled self-powered renewable energy | Yes | Yes | Yes | Yes | An effective energy dispatch mechanism for self-powered wireless networks with edge computing capabilities |

I-A Related Works

The problem of energy management for MEC-enabled wireless networks has been studied in [16, 17, 18, 19, 20, 21, 22] (summarized in Table II). In [16], the authors proposed a joint mechanism for radio resource management and user task offloading with the goal of minimizing the long-term power consumption of both mobile devices and the MEC server. The authors in [17] proposed a heuristic to solve the joint problem of computational resource allocation, uplink transmission power, and user task offloading. The work in [18] studied the tradeoff between communication and computation for a MEC system and proposed a MEC server CPU scaling mechanism for reducing the energy consumption. Further, the work in [19] proposed an energy-aware mobility management scheme for MEC in ultra-dense networks and addressed the problem using Lyapunov optimization and multi-armed bandits. Recently, the authors in [21] proposed a distributed power control scheme for a small cell network by using the concept of multi-agent calibrate learning. Further, the authors in [22] studied the problem of energy storage and energy harvesting (EH) for a wireless network using deviation theory and Markov processes. However, all of these existing works [16, 17, 18, 19, 20, 21, 22] assume that the consumed energy is available to the wireless network system from the energy utility source. Since these models focus mainly on energy management and user task offloading for network resource allocation, the random demand for the computational (e.g., CPU computation, memory, etc.) and communication requirements of the edge applications and services is not considered. In fact, even if enough energy supply is available, the energy cost related to network operation can be significant because of the usage of non-renewable sources (e.g., coal, petroleum, natural gas). Hence, it is necessary to include renewable energy sources in the next-generation wireless networking infrastructure.

Table II: Summary of the related works.

| Ref. | Contributions | Method | Limitation |
|---|---|---|---|
| [16] | Radio resource management and user task offloading | Optimization | Usage of non-renewable, deterministic environment |
| [17] | Computational resource allocation, uplink transmission power, and user task offloading | Heuristic | Usage of non-renewable, energy dispatch, performance guarantee |
| [18] | MEC server CPU scaling mechanism for reducing the energy consumption | Optimization | Usage of non-renewable, energy dispatch |
| [19] | Energy-aware mobility management scheme for MEC | Lyapunov and multi-armed bandits | Energy dispatch, i.i.d. energy demand-response |
| [20] | Energy efficient green-IoT network | Heuristic | Edge computing, energy dispatch, deterministic environment |
| [21] | Distributed power control scheme for a small cell network | Multi-agent calibrate learning | Usage of non-renewable, energy dispatch |
| [22] | Energy storage and energy harvesting (EH) for a wireless network | Deviation theory and Markov processes | MEC capabilities, i.i.d. energy demand-response |
| [23] | Non-coordinated energy shedding and misaligned incentives for mixed-use building | Auction theory | MEC capabilities, i.i.d. energy demand-response |
| [24] | Tradeoff between effectiveness and available amounts of training data | Deep meta-RL | Stochastic environment and a multi-agent scenario |
| [25] | Controlling the meta-parameter in both static and dynamic environments | SGD-based meta-parameter learning | Single-agent, same environment |
| [26] | Learning to learn mechanism with recurrent neural networks | Generalized transfer learning | Deterministic environment, single-agent |
| [27] | Asynchronous multi-agent RL framework | One-step Q-learning, one-step Sarsa, and n-step Q-learning | Deterministic environment |
| [28] | General-purpose multi-agent scheme | Extension of the actor-critic policy gradient | Same environment for all of the local actors |

Recently, some of the challenges of renewable energy powered wireless networks have been studied in [8, 9, 10, 11, 12, 13, 14, 23]. In [8], the authors proposed an online optimization framework to analyze the activation and deactivation of BSs in a self-powered network. In [9], a hybrid power source infrastructure was proposed to support heterogeneous networks (HetNets), together with a model-free deep reinforcement learning (RL) mechanism for user scheduling and network resource management. In [10], the authors developed an RL scheme for edge resource management while incorporating renewable energy in the edge network. In particular, the goal of [10] is to minimize a long-term system cost by load balancing between the centralized cloud and edge server. The authors in [11] introduced a microgrid-enabled edge computing system, studied a joint optimization problem for MEC task assignment and energy demand-response (DR) management, and developed a model-based deep RL framework to tackle the joint problem. In [12], the authors proposed risk-sensitive energy profiling for a microgrid-powered MEC network to ensure a sustainable energy supply for green edge computing by capturing the conditional value at risk (CVaR) tail distribution of the energy shortfall, and they proposed a multi-agent RL system to solve the energy scheduling problem. In [13], the authors proposed self-sustainable mobile networks that use a graph-based approach for intelligent energy management with a microgrid. The authors in [14] proposed a smart grid-enabled wireless network and minimized grid energy consumption by applying energy sharing among the BSs. Furthermore, in [23], the authors addressed the challenges of non-coordinated energy shedding and misaligned incentives for mixed-use buildings (i.e., buildings and data centers) using auction theory to reduce energy usage. However, these works [9, 10, 11, 12, 13, 14, 23] do not investigate the problem of energy dispatch, nor do they account for the energy cost of MEC-enabled, self-powered networks when the demand and generation of each self-powered BS are non-i.i.d. Dealing with non-i.i.d. energy demand and generation among self-powered BSs is challenging because the intrinsic energy requirements of each BS evolve under uncertainty. In order to overcome this unique energy dispatch challenge, we propose a multi-agent meta-reinforcement learning framework that can adapt to a new uncertain environment without considering the entire past experience.

Some interesting problems related to meta-RL and multi-agent deep RL are studied in [24, 25, 26, 27, 28] (summarized in Table II). In [24], the authors focused on the tradeoff between effectiveness and the available amount of training data for a deep-RL based learning system. To this end, the authors in [24] explored a deep meta-reinforcement learning architecture. This learning architecture comprises two learning systems: 1) a lower-level system that can learn each new task very quickly, and 2) a higher-level system that is responsible for improving the performance of each lower-level system task. In particular, the lower-level system learns relatively quickly compared with the higher-level system. The lower-level system adapts to a new task while the higher-level system performs fine-tuning so as to improve the performance of the lower-level system. In deep meta-reinforcement learning, the lower-level system quantifies a reward based on the desired action and feeds that reward back to the higher-level system to tune the weights of a recurrent network. However, the authors in [24] do not consider a stochastic environment, nor do they extend their work to a multi-agent scenario. The authors in [25] proposed a stochastic gradient-based meta-parameter learning scheme for tuning reinforcement learning parameters to the physical environmental dynamics. In particular, the experiment in [25] was performed in both animal and robot environments, where an animal must recognize food before it starves and a robot must recharge before its battery is empty. Thus, the proposed scheme can effectively find meta-parameter values and control the meta-parameter in both static and dynamic environments.

In [26], the authors investigated a learning to learn (i.e., meta-learning) mechanism with recurrent neural networks, where the meta-learning problem was designed as a generalized transfer learning scheme. In particular, the authors in [26] considered a parametrized optimizer that can transfer the neural network parameter updates to an optimizee. Meanwhile, the optimizee can determine the gradients without relying on the optimizer parameters. Moreover, the optimizee sends the error to the optimizer and updates its own parameters based on the transferred parameters. This mechanism allows an agent to learn new tasks with a similar structure. An asynchronous multi-agent RL framework was studied in [27], where the authors investigated how parallel actor learners of asynchronous advantage actor-critic (A3C) can achieve better stability during neural network training compared to other asynchronous RL schemes. Such schemes include asynchronous one-step Q-learning, one-step Sarsa, and n-step Q-learning. The authors in [28] proposed a general-purpose multi-agent scheme by adopting the framework of centralized training with decentralized execution. In particular, the authors in [28] proposed an extension of the actor-critic policy gradient mechanism by modifying the role of the critic. This critic is augmented with additional policy information from the other actors (agents). Subsequently, each local actor executes in a decentralized manner and sends its own policy to the centralized critic for further investigation. However, the environment (i.e., state information) of this model remains the same for all of the local actors, while in our setting the environment of each BS agent is different from the others based on its own energy demand and generation. Moreover, the works in [24, 25, 26, 27, 28] do not consider a multi-agent environment in which the policy of each agent relies on its own state information. In particular, such state information belongs to a non-i.i.d. learning environment when environmental dynamics become distinct among the agents.

I-B Contributions

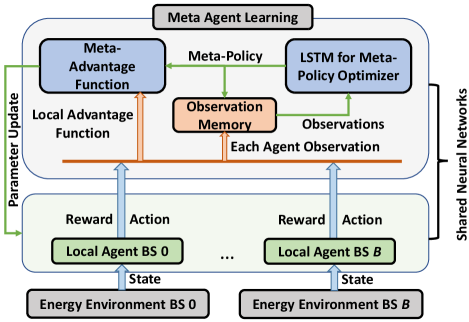

The main contribution of this paper is a novel energy management framework for next-generation MEC in self-powered wireless networks that is reliable against extremely uncertain energy demand and generation. We formulate a two-stage stochastic energy cost minimization problem that can balance renewable, non-renewable, and storage energy without knowing the actual demand. In fact, the formulated problem also investigates the realization of renewable energy generation after receiving the uncertain energy demand from the MEC applications and service requests. To solve this problem, we propose a multi-agent meta-reinforcement learning (MAMRL) framework that dynamically observes the non-i.i.d. behavior of time-varying features in both energy demand and generation at each BS, and then transfers those observations to obtain an energy dispatch decision and execute the energy dispatch policy at the self-powered BS. Fig. 1 illustrates how we propose to dispatch energy to ensure sustainable edge computing over a self-powered network using the MAMRL framework. As shown, each BS, whether a small cell base station (SBS) or the macro base station (MBS), acts as a local agent and transfers its own decision (reward and action) to the meta-agent. Then, the meta-agent accumulates all of the non-i.i.d. observations from each local agent (i.e., SBSs and MBS) and optimizes the energy dispatch policy. The proposed MAMRL framework then provides feedback to each BS agent so that it explores efficiently and reaches the right decision more quickly. Thus, the proposed MAMRL framework ensures autonomous decision making under an uncertain and unknown environment. Our key contributions include:

- We formulate a self-powered energy dispatch problem for a MEC-supported wireless network, in which the objective is to minimize the total energy consumption cost of the network while considering the uncertainty of both energy consumption and generation. The formulated problem is, thus, a two-stage linear stochastic programming problem. In particular, the first stage makes a decision when the energy demand is unknown, and the second stage discretizes the realization of renewable energy generation after the energy demand of the network becomes known.

- To solve the formulated problem, we propose a new multi-agent meta-reinforcement learning framework by considering the skill transfer mechanism [24, 25] between each local agent (i.e., self-powered BS) and the meta-agent. In this MAMRL scheme, each local agent explores its own energy dispatch decision using Markovian properties for capturing the time-varying features of both energy demand and generation. Meanwhile, the meta-agent evaluates (exploits) that decision for each local agent and optimizes the energy dispatch decision. In particular, we design a long short-term memory (LSTM) network as the meta-agent (i.e., running at the MBS) that is capable of avoiding incompetent decisions from each local agent and learns the right features more quickly by maintaining its own state information.

- We develop the proposed MAMRL energy dispatch framework in a semi-distributed manner. Each local agent (i.e., self-powered BS) estimates its own energy dispatch decision using local energy data (i.e., demand and generation), and provides observations to the meta-agent individually. Consequently, the meta-agent optimizes the decision centrally and assists the local agent toward a globally optimized decision. Thus, this approach not only reduces the computational complexity and communication overhead, but also mitigates the curse of dimensionality under uncertainty by utilizing non-i.i.d. energy demand and generation from each local agent.

- Experimental results using real datasets establish a significant performance gain of the energy dispatch under deterministic, asymmetric, and stochastic environments. In particular, the results show that the proposed MAMRL model lowers the energy consumption cost relative to a baseline approach and reduces the share of non-renewable energy in the total consumed energy, with its accuracy evaluated in a stochastic environment.

The rest of the paper is organized as follows. Section II presents the system model of self-powered edge computing. The problem formulation is described in Section III. Section IV presents the MAMRL framework for solving the energy dispatch problem. Experimental results are analyzed in Section V. Finally, conclusions are drawn in Section VI.

II System Model of Self-Powered Edge Computing

Consider a self-powered wireless network that is connected with a smart grid controller as shown in Fig. 2. Such a wireless network enables edge computing services for various MEC applications and services. The energy consumption of the network depends on the energy consumed by network operations along with the task loads of the MEC applications. Meanwhile, the energy supply of the network relies on the energy generation from renewable sources that are attached to the BSs, as well as both renewable and non-renewable sources of the smart grid. Furthermore, the smart grid controller is a representative of the main power grid (i.e., smart grid), where an additional amount of energy can be supplied via the smart grid controller to the network. Therefore, we will first discuss the energy demand model that includes MEC server energy consumption and network communication energy consumption. We will then describe the energy generation model that consists of the non-renewable energy generation cost, surplus energy storage cost, and total energy generation cost. Table III summarizes the notation.

II-A Energy Demand Model

Consider a set of ( for MBS) BSs that encompass SBSs overlaid over a single MBS. Each BS includes a set of MEC application servers. We consider a finite time horizon with each time slot being indexed by and having a duration of 15 minutes [29]. The observational period of each time slot ends at the -th minute and is capable of capturing the changes of network dynamics [11, 12, 30]. A set of heterogeneous MEC application task requests from users will arrive to BS with an average task arrival rate (bits/s) at time . The task arrival rate at BS follows a Poisson process at time slot . BS integrates heterogeneous active MEC application servers that has (bits/s) processing capacity. Thus, computational task requests will be accumulated into the service pool with an average traffic size (bits) at time slot . The average traffic arrival rate is defined as . Therefore, an M/M/K queuing model is suitable to model these user tasks using MEC servers at BS and time [31, 32]. The task size of this queuing model is exponentially distributed since the average traffic size is already known. Hence, the service rate of the BS is determined by . At any given time , we assume that all of the tasks in are uniformly distributed at each BS . Thus, for a given MEC server task association indicator if task is assigned to server at BS , and otherwise, the average MEC server utilization is defined as follows [11]:

| (1) |
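As a rough illustration of the M/M/K queuing view used above, the following sketch computes the per-server utilization and the probability that an arriving task has to wait (the Erlang C formula). It is not the paper's Eq. (1): the function names are hypothetical, rates are assumed to be expressed in tasks per second rather than bits per second, and all servers are assumed identical.

```python
from math import factorial


def mmk_utilization(arrival_rate: float, service_rate: float, servers: int) -> float:
    """Per-server utilization rho = lambda / (K * mu) of an M/M/K queue.

    arrival_rate: mean task arrivals per second (assumed unit).
    service_rate: mean tasks served per second by ONE server (assumed unit).
    servers:      number of identical active MEC servers K.
    """
    if servers < 1 or service_rate <= 0 or arrival_rate < 0:
        raise ValueError("need servers >= 1, service_rate > 0, arrival_rate >= 0")
    return arrival_rate / (servers * service_rate)


def erlang_c(arrival_rate: float, service_rate: float, servers: int) -> float:
    """Probability that an arriving task must queue (Erlang C formula)."""
    rho = mmk_utilization(arrival_rate, service_rate, servers)
    if rho >= 1.0:
        raise ValueError("queue is unstable: utilization must be below 1")
    offered_load = arrival_rate / service_rate  # a = lambda / mu
    # Weight of the states in which all K servers are busy.
    busy = offered_load ** servers / factorial(servers) / (1.0 - rho)
    # Weight of the states with fewer than K tasks in the system.
    idle = sum(offered_load ** n / factorial(n) for n in range(servers))
    return busy / (idle + busy)
```

With a single server the waiting probability reduces to the utilization itself, which is a quick sanity check on the formula.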

Table III: Summary of notations.

| Notation | Description |
|---|---|
|  | Set of BSs (SBSs and MBS) |
|  | Set of active servers under the BS |
|  | Set of user tasks at BS |
|  | Set of renewable energy sources |
|  | Server utilization in BS |
|  | No. of CPU cores |
|  | Average downlink data of BS |
|  | Fixed channel bandwidth of BS for user task |
|  | Transmission power of BS |
|  | Downlink channel gain between user task to BS |
|  | Co-channel interference for user task at BS |
|  | Energy coefficient for BS |
|  | MEC server CPU frequency for a single core |
|  | Server switching capacitance |
|  | MEC server static energy consumption |
|  | MEC server idle state power consumption |
|  | Scaling factor of heterogeneous MEC CPU core |
|  | Static energy consumption of BS |
|  | Renewable energy cost per unit |
|  | Non-renewable energy cost per unit |
|  | Storage energy cost per unit |
|  | Amount of renewable energy |
|  | Amount of non-renewable energy |
|  | Amount of surplus energy |
|  | Energy demand at time slot |
|  | Random variable for energy demand |
|  | Maximum capacity of renewable energy at BS |
|  | Set of observation at BS |
|  | Big-O notation to represent complexity |
|  | Entropy regularization coefficient |
|  | Discount factor |
|  | Learning parameters for BS |
|  | Energy dispatch policy with parameters at BS |
|  | Meta-agent learning parameters |

II-A1 MEC Server Energy Consumption

In case of MEC server energy consumption, the computational energy consumption (dynamic energy) will be dependent on the CPU activity for executing computational tasks [17, 33, 16]. Further, such dynamic energy is also accounted with the thermal design power (TDP), memory, and disk I/O operations of the MEC server [17, 33, 16] and we denote as . Meanwhile, static energy includes the idle state power of CPU activities [16, 18]. We consider, a single core CPU with a processor frequency (cycles/s), an average server utilization (using (1)) at time slot , and a switching capacitance (farad) [17]. The dynamic power consumption of such single core CPU can be calculated by applying a cubic formula [18, 34]. Thus, energy consumption of MEC servers with CPU cores at BS is defined as follows:

| (2) |

where denotes a scaling factor of heterogeneous CPU core of the MEC server. Thus, the value of is dependent on the processor architecture [35] that assures the heterogeneity of the MEC server.
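The cubic relationship between CPU frequency and dynamic power can be sketched as follows. This is a minimal illustration under stated assumptions, not a reconstruction of Eq. (2): the function name, argument names, and the way the static term and scaling factor are combined are all hypothetical. The 900-second default corresponds to the 15-minute time slot mentioned earlier.

```python
def mec_server_energy(
    utilization: float,
    cpu_freq_hz: float,
    capacitance: float,
    n_cores: int,
    static_power_w: float,
    scaling: float = 1.0,
    slot_seconds: float = 900.0,
) -> float:
    """Energy (joules) used by one MEC server during a time slot.

    Assumed model: dynamic power per core = utilization * capacitance * f^3,
    multiplied by the number of cores and a heterogeneity scaling factor,
    plus a constant static (idle) power.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must lie in [0, 1]")
    dynamic_power_w = scaling * n_cores * utilization * capacitance * cpu_freq_hz ** 3
    return (static_power_w + dynamic_power_w) * slot_seconds
```

Under this model an idle server consumes only its static energy, and doubling the clock frequency multiplies the dynamic part by eight, which is why CPU scaling is an effective energy lever.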

II-A2 Base Station Energy Consumption

The energy consumption needed for the operation of the network base stations (i.e., SBSs and MBS) includes two types of energy: dynamic and static energy consumption [36]. On one hand, the static energy consumption includes the energy for maintaining the idle state of any BS, a constant power consumption for receiving packets from users, and the energy for wired transmission among the BSs. On the other hand, the dynamic energy consumption of the BSs depends on the amount of data transferred from the BSs to users, which essentially relates to the downlink [37] transmit energy. Thus, we consider that each BS operates at a fixed channel bandwidth and constant transmission power [37]. Then the average downlink data of BS will be given by [11]:

| (3) |

where represents downlink channel gain between user task to BS , determines a variance of an Additive White Gaussian Noise (AWGN), and denotes the co-channel interference [38, 39] among the BSs. Here, the co-channel interference relates to the transmissions from other BSs that use the same subchannels of . and represent, respectively, the transmit power and the channel gain of the BS . Therefore, downlink energy consumption of the data transfer of BS is defined by [watt-seconds or joule], where [seconds] determines the duration of transmit power [watt]. Thus, the network energy consumption for BS at time is defined as follows [36, 19]:

| (4) |

where determines the energy coefficient for transferring data through the network. In fact, the value of depends on the type of the network device (e.g., for a unit transceiver remote radio head [36]).
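The quantities named above (fixed bandwidth, constant transmit power, channel gain, AWGN variance, and co-channel interference) are the usual ingredients of a Shannon-type rate expression. The sketch below shows that standard form together with the transmit-energy product of power and duration. It is an assumption that Eq. (3) takes this shape, and every function and parameter name here is hypothetical.

```python
from math import log2


def co_channel_interference(tx_powers_w, channel_gains):
    """Sum of received powers from other BSs sharing the same subchannels."""
    return sum(p * g for p, g in zip(tx_powers_w, channel_gains))


def downlink_rate_bps(bandwidth_hz, tx_power_w, channel_gain, noise_var_w, interference_w=0.0):
    """Shannon-type achievable rate W * log2(1 + SINR) in bits per second."""
    sinr = tx_power_w * channel_gain / (noise_var_w + interference_w)
    return bandwidth_hz * log2(1.0 + sinr)


def downlink_energy_j(tx_power_w, duration_s):
    """Transmit energy in joules: power (watts) times duration (seconds)."""
    return tx_power_w * duration_s
```

For example, a BS transmitting at 20 W for a full 900-second slot spends 18 kJ on downlink transmission, before any static consumption is added.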

II-A3 Total Energy Demand

The total energy consumption (demand) of the network consists of both the MEC server computational energy consumption (in (2)) and the network operational energy (i.e., BS energy consumption in (4)). Thus, the overall energy demand of the network at time slot is given as follows:

| (5) |

The demand is random over time and depends entirely on the computational task load of the MEC servers.

II-B Energy Generation Model

The energy supply of the self-powered wireless network with MEC capabilities relates to the network’s own renewable (e.g., solar, wind, biofuels, etc.) sources as well as the main grid’s non-renewable (e.g., diesel generator, coal power, and so on) energy sources [8, 9]. In this energy generation model, we consider a set of renewable energy sources of the network, with each element representing the set of renewable energy sources of BS . Each renewable energy source at BS can generate an amount of renewable energy at time . Therefore, the total amount of renewable energy generation at BS will be for time slot . Thus, the total renewable energy generation for the considered network at time is defined as . The maximum limit of this renewable energy is less than or equal to the maximum capacity of renewable energy generation at time period . Thus, we consider a maximum storage limit that is equal to the maximum capacity of the renewable energy generation [40, 41, 42]. Further, the self-powered wireless network is able to get an additional non-renewable energy amount from the main grid at time . The per unit renewable and non-renewable energy cost are defined by and , respectively. In general, the renewable energy cost only depends on the maintenance cost of the renewable energy sources [40, 41, 42]. Therefore, the per unit non-renewable energy cost is greater than the renewable energy cost . Additionally, the surplus amount of the energy at time can be stored in energy storage medium for the future usages [41, 42] and the energy storage cost of per unit energy store is denoted by .

II-B1 Non-renewable Energy Generation Cost

In order to fulfill the energy demand when it is greater than the generated renewable energy , the main grid can provide an additional amount of energy from its non-renewable sources. Thus, the non-renewable energy generation cost of the network is determined as follows:

| (6) |

where represents a unit energy cost.

II-B2 Surplus Energy Storage Cost

The surplus amount of energy is stored in a storage medium when (i.e., energy demand is smaller than the renewable energy generation) at time . We consider the per unit energy storage cost . This storage cost depends on the storage medium and amount of the energy store at time [41, 43, 23, 44]. With the per unit energy storage cost , the total storage cost at time is defined as follows:

| (7) |

II-B3 Total Energy Generation Cost

The total energy generation cost includes renewable, non-renewable, and storage energy cost. Naturally, this total energy generation cost will depend on the energy demand of the network at time . Therefore, the total energy generation cost at time is defined as follows:

| (8) |

where the energy cost of the renewable, non-renewable, and storage energy are given by , , and , respectively. In (8), energy demand and renewable energy generation are stochastic in nature. The energy cost of non-renewable energy (6) and storage energy (7) completely rely on energy demand and renewable energy generation . Hence, to address the uncertainty of both energy demand and renewable energy generation in a self-powered wireless network, we formulate a two-stage stochastic programing problem. In particular, the first stage makes a decision of the energy dispatch without knowing the actual demand of the network. Then we make further energy dispatch decisions by analyzing the uncertainty of the network demand in the second stage. A detailed discussion of the problem formulation is given in the following section.
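The cost structure described in this subsection can be sketched in a few lines: renewable energy is always paid for, non-renewable energy covers any shortfall, and any surplus incurs a storage cost. The function and argument names below are hypothetical, and the code is an illustrative reading of (6) to (8) rather than the authors' implementation.

```python
def total_generation_cost(renewable, demand, c_ren, c_non, c_sto):
    """Total energy cost for one time slot.

    renewable: amount of renewable energy dispatched
    demand:    realized energy demand
    c_ren, c_non, c_sto: per-unit renewable, non-renewable, and storage costs
    """
    shortfall = max(demand - renewable, 0.0)  # bought from the main grid
    surplus = max(renewable - demand, 0.0)    # sent to the storage medium
    return c_ren * renewable + c_non * shortfall + c_sto * surplus
```

With unit costs of 1 (renewable), 3 (non-renewable), and 0.5 (storage), dispatching 80 units against a demand of 100 costs 140, dispatching 120 costs 130, and dispatching exactly 100 costs 100.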

III Problem Formulation with a Two-Stage Stochastic Model

We now consider the case in which the non-renewable energy cost is greater than the renewable energy cost, that is often the case in a practical smart grid as discussed in [40], [41], [42], and [45]. Here, and are the continuous variables over the observational duration . The objective is to minimize the total energy consumption cost . is the decision variable and the energy demand is a parameter. When the energy demand is known, the optimization problem will be:

| (9) | ||||

In problem (9), after removing the non-negativity constraints , we can rewrite the objective function in the form of piecewise linear functions as follows:

| (10) |

where and determine the cost of non-renewable (i.e., ) and storage (i.e., ) energy, respectively. Therefore, we have to choose one out of the two cases. In fact, if the energy demand is known and the amount of renewable energy is the same as the energy demand, then problem (10) provides the optimal decision for meeting the exact amount of demand . However, the challenge here is to make a decision about the renewable energy usage before the demand becomes known. To overcome this challenge, we consider the energy demand as a random variable whose probability distribution can be estimated from the history of the energy demand. We can rewrite problem (9) using the expectation of the total cost as follows:

| (11) | ||||
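As a rough illustration of (10) and (11), the sketch below evaluates a piecewise-linear cost for one demand realization and its expectation over a finitely supported demand distribution. The names `scenario_cost`, `expected_cost`, `c_ren`, `c_non`, and `c_sto` are hypothetical, and the cost form (a linear renewable term plus the larger of the shortfall and surplus pieces) is an assumption inferred from the surrounding description, not the paper's exact expression.

```python
from typing import Sequence


def scenario_cost(x: float, demand: float,
                  c_ren: float, c_non: float, c_sto: float) -> float:
    """Assumed cost of using x units of renewable energy for one demand value."""
    shortfall_piece = c_non * (demand - x)  # positive when demand exceeds x
    surplus_piece = c_sto * (x - demand)    # positive when x exceeds demand
    # At most one piece is positive, so max() selects the active case.
    return c_ren * x + max(shortfall_piece, surplus_piece)


def expected_cost(x: float, demands: Sequence[float], probs: Sequence[float],
                  c_ren: float, c_non: float, c_sto: float) -> float:
    """Expectation of scenario_cost over a finite demand distribution."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * scenario_cost(x, d, c_ren, c_non, c_sto)
               for d, p in zip(demands, probs))
```

With illustrative unit costs `c_ren=1.0`, `c_non=5.0`, and `c_sto=0.5`, a dispatch of 10 units against a demand of 12 is charged through the shortfall piece, whereas a demand of 8 is charged through the surplus piece.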

The solution of problem (11) provides a result that is optimal on average. However, in a practical scenario, we need to solve problem (11) repeatedly over the uncertain energy demand . Thus, this solution approach does not serve our model well in terms of scalability when number of BSs generate a large variety of energy demand over the observational period of . In fact, energy demand and generation can change over time for each BS , and they can also induce large variations of demand-generation among the BSs. Hence, the solution to problem (11) cannot rely on an iterative scheme due to its lack of adaptation to uncertain changes of energy demand and generation over time.

We consider the moment of random variable that has a finitely supported distribution and takes values with respective probabilities of BSs . The cumulative distribution function (CDF) of energy demand is a step function and jumps of size at each demand . Therefore, the probability distribution of each BS energy demand belongs to the CDF of historical observation of energy demand . In this case, we can convert problem (11) into a deterministic optimization problem and the expectation of energy usage cost is determined by . Thus, we can rewrite the problem (9) as a linear programming problem using the representation in (10) as follows:

| (12) | ||||

| s.t. | (12a) | |||

| (12b) | ||||

| (12c) | ||||

For a fixed value of the renewable energy , problem (12) is equivalent to problem (10). Meanwhile, problem (12) is equal to . We have converted the piecewise linear function from problem (10) into the inequality constraints (12a) and (12b). Constraint (12c) ensures a limit on the maximum allowable renewable energy usage. We consider as the highest probability of energy demand at each BS . Therefore, for BSs, we define as the probability of energy demand with respect to BSs . Thus, we can rewrite problem (11) for BSs as follows:

| (13) | ||||

| (13a) | ||||

| (13b) | ||||

| (13c) | ||||

where represents the highest probability of the energy demand , in which is a random variable and denotes a realization of energy demand on BS at time . The value of belongs to the empirical CDF of the energy demand for BS . This CDF is calculated from the historical observation of the energy demand at BS . In fact, for a fixed value of non-renewable energy , problem (13) is separable. As a result, we can decompose this problem into the structure of a two-stage linear stochastic programming problem [46, 47].
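The scenario-based reformulation in (12) and (13) can be sketched as follows, assuming the same hypothetical cost form as a newsvendor-style problem. The sketch builds an empirical demand distribution from historical observations and, instead of calling an LP solver, uses the fact that a convex piecewise-linear function attains its minimum over an interval at a breakpoint or a bound. All names (`empirical_distribution`, `best_dispatch`, `x_max`, and the unit costs) are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple


def empirical_distribution(history: Sequence[float]) -> Dict[float, float]:
    """Relative frequency of each observed demand value."""
    counts = Counter(history)
    n = len(history)
    return {d: c / n for d, c in counts.items()}


def expected_cost(x: float, dist: Dict[float, float],
                  c_ren: float, c_non: float, c_sto: float) -> float:
    total = c_ren * x
    for d, p in dist.items():
        # Smallest auxiliary value satisfying both inequality constraints,
        # i.e. the epigraph form of the piecewise-linear term.
        theta = max(c_non * (d - x), c_sto * (x - d))
        total += p * theta
    return total


def best_dispatch(dist: Dict[float, float], x_max: float,
                  c_ren: float, c_non: float, c_sto: float) -> Tuple[float, float]:
    """Search breakpoints (demand values) and bounds for the minimizer."""
    candidates = {0.0, x_max} | {d for d in dist if 0.0 <= d <= x_max}
    best_x = min(sorted(candidates),
                 key=lambda x: expected_cost(x, dist, c_ren, c_non, c_sto))
    return best_x, expected_cost(best_x, dist, c_ren, c_non, c_sto)
```

For a realistic number of BSs and scenarios, a linear programming solver applied to the constraint form would be the more natural choice; the breakpoint search here only keeps the example dependency-free.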

To find an approximation for a random variable with a finite probability distribution, we decompose problem (13) into a two-stage linear stochastic program under uncertainty. The decision is made using historical data of energy demand, which is fully independent of future observations. As a result, the first stage of the self-powered energy dispatch problem for sustainable edge computing is formulated as follows:

| (14) | ||||

| s.t. | (14a) | |||

where determines an optimal value of the second stage problem. In problem (14), the decision variable is calculated before the realization of uncertain energy demand . Meanwhile, at the first stage of the formulated problem (14), the cost is minimized for the decision variable which then allows us to estimate the expected energy cost for the second stage decision. Constraint (14a) provides a boundary for the maximum allowable renewable energy usage. Thus, based on the decision of the first stage problem, the second stage problem can be defined as follows:

| (15) | ||||

| s.t. | (15a) | |||

| (15b) | ||||

| (15c) | ||||

In the second stage problem , the decision variables and depend on the realization of the energy demand of the first stage problem , where determines the amount of renewable energy usage at time . The first constraint is an equality constraint that requires the surplus amount of energy to equal the absolute difference between the renewable and non-renewable energy usage. The second constraint is an inequality constraint that uses the optimal demand value from the first stage realization. In particular, the value of demand comes from , which is the historical observation of energy demand. Finally, the constraint ensures the non-negativity of the non-renewable energy usage.
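A minimal sketch of the recourse step, assuming that once the first-stage renewable dispatch `x` is fixed and the demand is realized, any shortfall is covered by non-renewable energy and any surplus goes to storage. The function name `second_stage` and the unit costs `c_non` and `c_sto` are hypothetical; the paper's constraints (15a)-(15c) may encode this relationship differently.

```python
from typing import Tuple


def second_stage(x: float, demand: float,
                 c_non: float, c_sto: float) -> Tuple[float, float, float]:
    """Return (non_renewable, storage, recourse_cost) for a realized demand."""
    non_renewable = max(demand - x, 0.0)  # never negative
    storage = max(x - demand, 0.0)        # surplus renewable energy
    cost = c_non * non_renewable + c_sto * storage
    return non_renewable, storage, cost
```

Because the recourse decision is always feasible for any `x` within its bounds, this toy version also reflects the relatively complete recourse property mentioned for the integrated problem.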

The formulated problems and can characterize the uncertainty between network energy demand and renewable energy generation. Particularly, the second stage problem contains random demand , which makes the optimal cost a random variable. As a result, we can rewrite the problems and as one large linear programming problem for BSs, and the problem formulation is as follows:

| (16) | ||||

| s.t. | (16a) | |||

| (16b) | ||||

| (16c) | ||||

| (16d) | ||||

In problem , for BSs, energy demand happens with positive probabilities and . The decision variables are , and , which represent the amount of renewable, non-renewable, and storage energy, respectively. Constraint defines a relationship among all of the decision variables , and . In essence, this constraint discretizes the surplus amount of energy for storage. Constraint ensures the utilization of non-renewable energy based on the energy demand of the network. Constraint ensures that the decision variable will not take a negative value. Finally, constraint restricts the renewable energy usage to the maximum capacity at time . Problem is an integrated form of the first-stage problem in and the second-stage problem in , where the solution of and depends entirely on the realization of demand for all BSs. The decision of comes before the realization of demand and, thus, the estimation of renewable energy generation will be independent and random. Therefore, problem holds the property of relatively complete recourse. In problem , the number of variables and constraints is proportional to the number of BSs, . Additionally, the complexity of the decision problem leads to due to the combinatorial properties of the decisions and constraints [46, 47, 48].

The goal of the self-powered energy dispatch problem is to find an optimal energy dispatch policy that includes the amounts of renewable , non-renewable , and storage energy of each BS while minimizing the energy consumption cost. Meanwhile, such an energy dispatch policy relies on an empirical probability distribution of historical demand at each BS at time . In order to solve problem , we choose an approach that does not rely on the conservativeness of a theoretical probability distribution of energy demand in problem , and that also captures the uncertainty of renewable energy generation from the historical data. In contrast, we can construct a theoretical probability distribution of energy demand only when we know the exact distribution as well as its parameters (e.g., mean, variance, and standard deviation). In practice, the distribution of energy demand is unknown and, instead, a certain amount of historical energy demand data is available. As a result, we cannot rely on this distribution to measure uncertainty while the renewable energy generation and energy demand are random over time. Hence, we obtain time-variant features of both energy demand and generation by characterizing the Markovian properties of the historical observations over time. In particular, we capture the Markovian dynamics by considering a data-driven approach. This approach can overcome the conservativeness of a theoretical probability distribution as the number of historical observations grows.

To address the aforementioned challenges, we propose a multi-agent meta-reinforcement learning framework that can explore the Markovian behavior from the historical energy demand and generation of each BS . Meanwhile, the meta-agent can adapt such time-varying features into a globally optimal energy dispatch policy for each BS .

We design an MAMRL framework by converting the cost minimization problem to a reward maximization problem that we then solve with a data-driven approach. In the MAMRL setting, each agent works as a local agent for each BS and determines an observation (i.e., exploration) for the decision variables, renewable , non-renewable , and storage energy. The goal of this exploration is to find time-varying features from the local historical data so that the energy demand of the network is satisfied. Furthermore, using these observations and current state information, a meta-agent is used to determine a stochastic energy dispatch policy. Thus, to obtain such a dispatch policy, the meta-agent only requires the observations (behavior) from each local agent. Then, the meta-agent can evaluate (exploit) this behavior toward an optimal decision for dispatching energy. Further, the MAMRL approach is capable of capturing the exploration-exploitation tradeoff in a way that the meta-agent optimizes the decisions of each self-powered BS under uncertainty. A detailed discussion of the MAMRL framework is given in the following section.

IV Energy Dispatch with Multi-Agent Meta-Reinforcement Learning Framework

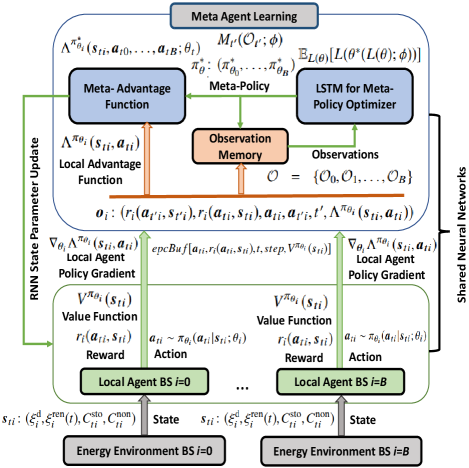

In this section, we develop our proposed multi-agent meta-reinforcement learning framework (as seen in Fig. 3) for energy dispatch in the considered network. The proposed MAMRL framework includes two types of agents: a local agent that acts as a local learner at each self-powered BS with MEC capabilities, and a meta-agent that learns the global energy dispatch policy. In particular, each local BS agent can discretize the Markovian dynamics for energy demand-generation of each BS (i.e., both SBSs and MBS) separately by applying deep reinforcement learning. Meanwhile, we train a long short-term memory (LSTM) [49, 50] as a meta-agent at the MBS that optimizes [26] the accumulated energy dispatch of the local agents. As a result, the meta-agent can handle the non-i.i.d. energy demand-generation of each local agent with the LSTM's own state information. To this end, MAMRL mitigates the curse of dimensionality for the uncertainty of energy demand and generation while providing an energy dispatch solution with less computational and communication complexity (i.e., less message passing between the local agents and meta-agent).

IV-A Preliminary Setup

In the MAMRL setting, each BS acts as a local agent and the number of local agents is equal to BSs (i.e., MBS and SBSs). We define a set of state spaces and a set of actions for the agents. The state space of a local agent is defined by , where , and represent the amount of energy demand, renewable generation, storage cost, and non-renewable energy cost, respectively, at time . We execute Algorithm 1 to generate the state space for every BS , individually. In Algorithm 1, lines to calculate the individual energy consumption of the MEC computation and network operation using (2) and (4), respectively. The overall energy demand of the BS is computed in line and the self-powered energy generation is estimated in line of Algorithm 1. Non-renewable and storage energy costs are calculated in lines and for time slot . Finally, line creates the state space tuple (i.e., ) for time in Algorithm 1.
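The four-element state tuple described above can be sketched as follows. This is not Algorithm 1 itself: the demand and generation values are taken as given inputs (the paper derives them from (2) and (4), which are not reproduced here), and the cost terms assume that storage is charged on the surplus and non-renewable energy on the deficit. The names `BSState` and `build_state` are hypothetical.

```python
from typing import NamedTuple


class BSState(NamedTuple):
    demand: float                # energy demand of the BS in this time slot
    renewable_generation: float  # renewable energy generated in this slot
    storage_cost: float          # assumed: unit storage cost times surplus
    non_renewable_cost: float    # assumed: unit non-renewable cost times deficit


def build_state(demand: float, generation: float,
                c_sto: float, c_non: float) -> BSState:
    surplus = max(generation - demand, 0.0)
    deficit = max(demand - generation, 0.0)
    return BSState(demand, generation, c_sto * surplus, c_non * deficit)
```

Each BS would call such a routine once per time slot on its own measurements, so no state information needs to leave the BS at this stage.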

IV-B Local Agent Design

Consider each local BS agent that can take two types of actions and , which are the amount of storage energy and the amount of non-renewable energy at time . We consider a discrete set of actions that consists of two actions for each BS unit . Since the state and action both contain time-varying information of the agent , we consider the Markovian dynamics and represent problem as a discounted reward maximization problem for each agent (i.e., each BS). Thus, the objective function of the discounted reward maximization problem of agent is defined as follows [28]:

| (17) |

where is a discount factor and each reward is considered as,

| (18) |

In (18), determines a ratio between renewable energy generation and energy demand (supply-demand ratio) of the BS agent at time . When the renewable energy generation-demand ratio is larger than , the BS agent achieves a reward of because the amount of renewable energy exceeds the demand and the surplus can be stored in the storage unit.
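The reward rule in (18) and the discounted objective in (17) can be sketched as below. The threshold and reward values are not recoverable from the text here, so the sketch assumes a threshold of 1.0 with rewards of 1 and 0; these numbers, and the names `reward` and `discounted_return`, are assumptions for illustration only.

```python
from typing import Sequence


def reward(generation: float, demand: float, threshold: float = 1.0) -> float:
    """Assumed binary reward based on the supply-demand ratio."""
    if demand <= 0.0:
        return 1.0  # assumption: no demand counts as fully satisfied
    ratio = generation / demand
    return 1.0 if ratio > threshold else 0.0


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Sum of gamma**t * r_t over the observed rewards."""
    total = 0.0
    for t, r in enumerate(rewards):
        total += (gamma ** t) * r
    return total
```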

Each action of BS agent determines a stochastic policy . is a parameter of and the energy dispatch policy is defined by . Policy decides a state transition function for the next state . Thus, the state transition function of BS agent is determined by a reward function , where . Further, each BS agent chooses an action from a parametrized energy dispatch policy . Therefore, for a given state , the state value function with a cumulative discounted reward will be:

| (19) |

where is a discount factor and ensures the convergence of the state value function over the infinite time horizon. Thus, for a given state , the optimal policy for the next state can be determined by an optimal state value function while a Markovian property is imposed. Therefore, the optimal value function is given as follows:

| (20) |

Here, the optimal value function (20) learns a parameterized policy by using an LSTM-based Q-network for the parameters . Thus, each BS agent determines its parametrized energy dispatch policy , where and for the parameters . The decision of each BS agent relies on . In particular, the energy dispatch policy is the probability of taking action for a given state with parameters . In this setting, each local agent is comprised of an actor and a critic [27, 51]. The energy dispatch policy is determined by choosing an action in (20), which can be seen as the actor of BS agent . Meanwhile, the value function (19) is estimated by the critic of each local BS agent . The critic evaluates the actions that are made by the actor of each BS agent . Therefore, each BS agent can determine a temporal difference (TD) error [51] based on the current energy dispatch policy of the actor and the value estimation by the critic. The TD error is considered as an advantage function, and the advantage function of agent is defined as follows:

| (21) |

Thus, the policy gradient of each BS agent is determined as,

| (22) |

where , and represent the actor and critic, respectively, for each local BS agent .
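As a hedged illustration of the TD-error advantage in (21) and the actor term that enters the policy gradient in (22), the sketch below uses the standard one-step actor-critic form. The paper's exact expressions are not reproduced in this text, so the one-step bootstrap, the `terminal` flag, and the function names are assumptions.

```python
import math


def td_advantage(reward: float, value_s: float, value_next: float,
                 gamma: float, terminal: bool = False) -> float:
    """One-step TD error: r + gamma * V(s') - V(s)."""
    bootstrap = 0.0 if terminal else gamma * value_next
    return reward + bootstrap - value_s


def actor_loss(action_prob: float, advantage: float) -> float:
    """Negative log-likelihood of the taken action, weighted by the advantage.

    Minimizing this term moves the policy toward actions with positive advantage.
    """
    return -math.log(action_prob) * advantage
```

Here the critic supplies `value_s` and `value_next`, and the actor supplies `action_prob`, which mirrors the division of roles described above.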

Using (22), we can discretize the energy dispatch decision for each self-powered BS in the network. In fact, we can achieve a centralized solution for when all of the BSs' state information (i.e., demand and generation) is known. However, the space complexity for computation increases as and the computational complexity becomes [21]. Further, the solution does not resolve the exploration-exploitation dilemma since the centralized (i.e., single agent) method ignores the interactions and energy dispatch decision strategies of other agents (i.e., BSs), which creates an imbalance between exploration and exploitation. In other words, this learning approach optimizes the action policy by exploring only its own state information. Therefore, when we change the energy environment (i.e., demand and generation), this method cannot cope with an unknown environment due to the lack of diverse state information during training. Next, we propose an approach that not only reduces the complexity but also explores alternative energy dispatch decisions to achieve the highest expected reward in (17).

IV-C Multi-Agent Meta-Reinforcement Learning Modeling

We consider a set of observations [24, 52] and for a BS agent , a single observation tuple is given by . For a given state , the observation of the next state consists of , where , , , and are next-state discounted rewards, current state discounted rewards, next action, current action, time slot, and TD error, respectively. Here, the complete information of the observation is correlated with the state space , while the observation does not require the complete state information of the previous states.

Thus, the space complexity for computation at each BS agent leads to . Meanwhile, the computational complexity for each time slot becomes , where is the learning parameter and represents the number of LSTM units. Each BS agent needs to send an amount of observational data (i.e., payload) to the meta-agent. Therefore, the communication overhead for each BS agent leads to . On the other hand, the computational complexity of the meta-agent leads to , where represents the learning parameter at the meta-agent. In particular, for a fixed number of output memory , the meta-agent’s update complexity at each time slot becomes [53]. Further, when transferring the learned parameters from the meta-agent to all local agents , the communication overhead goes to at each time slot . Here, the size of depends on the memory size of the LSTM cell at the meta-agent [see Appendix A].
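The six-element observation tuple that each local agent sends to the meta-agent can be sketched as a small record type. The field names and the helper `make_observation` are hypothetical; only the six listed quantities come from the text.

```python
from typing import NamedTuple


class Observation(NamedTuple):
    next_reward: float  # discounted reward of the next state
    reward: float       # discounted reward of the current state
    next_action: int
    action: int
    time_slot: int
    td_error: float


def make_observation(reward: float, next_reward: float,
                     action: int, next_action: int,
                     time_slot: int, td_error: float) -> Observation:
    """Pack one local-agent observation; no raw state is included."""
    return Observation(next_reward, reward, next_action, action,
                       time_slot, td_error)
```

Because the record has a fixed number of fields regardless of how the local state is built, the per-slot payload sent to the meta-agent stays constant in this sketch.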

In the MAMRL framework, the local agents work as an optimizee and the meta-agent performs the role of optimizer [26]. To model our meta-agent, we consider an LSTM architecture [49, 50] that stores its own state information (i.e., parameters) and the local agent (i.e., optimizee) only provides the observation of a current state. In the proposed MAMRL framework, a policy is determined by updating the parameters (Footnote 1: We consider the state parameters of a recurrent neural network (RNN) for the parameterization of the energy dispatch policy. In particular, we consider a long short-term memory (LSTM) network as the RNN, in which the cell state and hidden state are considered as parameters.). Therefore, we can represent the state value function (20) for time as follows: , and the advantage (temporal difference) function (21) is presented by, . As a result, the parameterized policy is defined by, . Considering all of the BS agents and , the advantage function is rewritten as,

| (23) |

where is a joint energy dispatch policy and represents state transition probability. Using (23), we can get the value loss function for agent and the objective is to minimize the temporal difference [27],

| (24) |

To improve the exploration with a low bias, we consider an entropy regularization that copes with the non-i.i.d. energy demand and generation for all of the BS agents (Footnote 2: Entropy [54, 55, 56, 57] can allow us to manage non-i.i.d. datasets when changes in the environment over time lead to uncertainty. Therefore, we use entropy regularization to handle the non-i.i.d. energy demand and generation over time by managing the uncertainty for each BS agent .). Here, is a coefficient for the magnitude of regularization and determines the entropy of the policy for the parameter . Additionally, a larger value of encourages the agents to have a more diverse exploration to estimate the energy dispatch policy. Thus, we can redefine the policy loss function as follows:

| (25) |

Therefore, the policy gradient of the loss function (25) is defined in terms of the temporal difference and the entropy, as follows:

| (26) |
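To make the role of the entropy term in (25) concrete, the sketch below computes an entropy-regularized policy loss for a softmax policy over a discrete action set. The sign convention (entropy bonus subtracted from a loss that is minimized) is a common choice and an assumption here, as are the names `softmax`, `entropy`, `policy_loss`, and `beta`.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def policy_loss(probs: Sequence[float], action: int,
                advantage: float, beta: float) -> float:
    """Advantage-weighted negative log-likelihood minus an entropy bonus."""
    return -math.log(probs[action]) * advantage - beta * entropy(probs)
```

A larger `beta` lowers the loss of high-entropy (more exploratory) policies, which is consistent with the statement above that a larger coefficient encourages more diverse exploration.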

To design our meta-agent, we consider meta-agent parameters and optimized parameters of the optimizee (i.e., local agent). The meta-agent is defined as , where is modeled by an LSTM. Consider an observational vector of a local BS agent at time and each observation is . The LSTM-based meta-agent takes the observational vector as an input. Meanwhile, the meta-agent holds long-term dependencies by generating its own state with parameters . To do this, the LSTM model creates several gates to determine an optimal policy and advantage function for the next state . As a result, the structure of the recurrent neural network for the meta-agent is the same as the LSTM model [49, 50]. In particular, each LSTM unit for the meta-agent consists of four gate layers, namely the forget gate , input gate , cell state , and output layer. The cell state gate uses a tanh activation function and the other gates use a sigmoid activation function. Thus, the outcome of the meta policy for a single unit LSTM cell is presented as follows:

| (27) | |||

| (27a) | |||

| (27b) | |||

| (27c) | |||

| (27d) | |||

| (27e) | |||

| (27f) | |||

In the meta-agent policy formulation (27), the forget gate vector (27a) determines what information should be discarded. The input gate vector (27b) decides which information should be updated, the cell state (27c) creates a vector of new candidate values using the tanh function, and (27d) updates the cell state information. The output layer (27e) determines which parts of the cell state are output, and the cell outputs are calculated using the equation . Further, passing the cell state through the tanh function restricts the values to between -1 and 1. This entire process is followed for each LSTM block and finally, determines the meta-policy for of the state . In addition, the optimized RNN state parameters are obtained from the cell state (27d) and hidden state of an LSTM unit. Thus, the loss function of the meta-agent depends on the distribution of and the expectation of the meta-agent loss function is defined as follows [26]:

| (28) |
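The gate computations described for (27a)-(27f) follow the standard LSTM cell. As a minimal sketch, the code below performs one step of a single-unit cell with a scalar input; the weight layout (`w[gate] = (input weight, recurrent weight, bias)`) and all names are illustrative assumptions, and the paper's own notation is not reproduced.

```python
import math
from typing import Dict, Tuple

Weights = Dict[str, Tuple[float, float, float]]  # gate -> (w_x, w_h, bias)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x: float, h_prev: float, c_prev: float,
              w: Weights) -> Tuple[float, float]:
    """One step of a single-unit LSTM cell; returns (hidden, cell)."""
    def pre(gate: str) -> float:
        w_x, w_h, b = w[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))             # what to discard from c_prev
    i = sigmoid(pre("input"))              # how much new information to admit
    c_tilde = math.tanh(pre("candidate"))  # candidate cell value
    c = f * c_prev + i * c_tilde           # updated cell state
    o = sigmoid(pre("output"))             # which part of the cell to expose
    h = o * math.tanh(c)                   # hidden state, bounded in (-1, 1)
    return h, c
```

The returned pair `(h, c)` corresponds to the hidden and cell states that the text treats as the RNN state parameters.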

In the proposed MAMRL framework, we transfer the learned parameters (i.e., cell state and hidden state) of the meta-agent to the local agents so that each local agent can estimate an optimal energy dispatch policy by updating its own learning parameters. Thus, the parameters of each agent (i.e., BS) are updated with while to decide the energy dispatch policy.

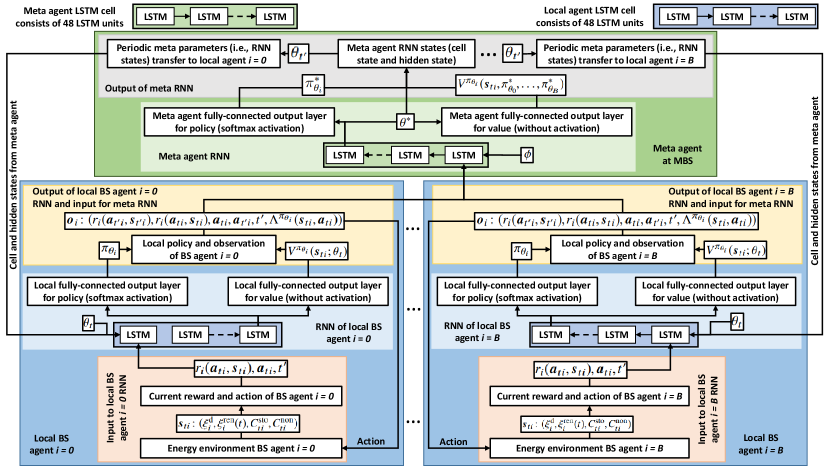

We consider an LSTM-based recurrent neural network (RNN) for both the local agents and the meta-agent. This LSTM RNN consists of LSTM units for each LSTM cell as shown in Fig. 4. In particular, the configuration of the LSTM for the meta-agent and each local agent is the same, while the objectives of the loss functions differ between the local agent and the meta-agent. Specifically, each local BS agent determines its own energy dispatch policy by exploring its own environmental state information to reduce the TD error. Meanwhile, the meta-agent deals with the observations of each local BS agent by exploiting its own RNN state information using an entropy-based loss function to capture the non-i.i.d. energy demand and generation of each local BS. Therefore, having different loss functions for the local agents and the meta-agent leads the proposed MAMRL model to learn a domain-specific generalized model so that it can cope with an unknown environment. Further, this RNN has a branch of two fully connected output layers on top of the LSTM cell. In particular, a fully connected layer with a softmax activation is used for energy dispatch policy determination, and another fully connected output layer without an activation function is deployed for value function estimation. Thus, the advantage is calculated based on the value function estimation from the second fully connected layer. Each local LSTM-based RNN receives a current reward , , current action , and next time slot as an input for each BS agent . Meanwhile, this local LSTM model estimates a policy and value for BS agent . On the other hand, the meta-agent LSTM-based RNN takes as input an observational tuple from each BS agent . This observation consists of the current and next reward, current and next action, next time slot, and TD error for each BS agent . Thus, the meta-agent estimates parameters to find a globally optimal energy dispatch policy for each BS .
The learned parameters of the meta-agent are transferred to each local BS agent asynchronously, while each local agent updates its own parameters for estimating the globally optimal energy dispatch policy via the local LSTM-based RNN. In particular, the learned parameters (i.e., RNN states) are transferred from the meta-agent to each local agent . Additionally, these RNN state parameters include the cell state and hidden state of the LSTM cell, which do not depend on any of the fully connected output layers of the proposed RNN architecture. Meanwhile, each local agent updates its own RNN states using the parameters transferred by the meta-agent. We consider a cellular network for exchanging observations and parameters between each local BS agent and the meta-agent.
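The parameter transfer described above can be pictured with a small sketch in which a local agent overwrites only its recurrent state (cell and hidden state) with the values received from the meta-agent and leaves its output-layer weights untouched. The classes `RNNState` and `LocalAgent`, the method `load_meta_state`, and the flat `head_weights` list standing in for the two fully connected layers are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RNNState:
    cell: List[float]
    hidden: List[float]


@dataclass
class LocalAgent:
    rnn_state: RNNState
    head_weights: List[float]  # placeholder for the policy and value heads

    def load_meta_state(self, meta_state: RNNState) -> None:
        """Adopt the meta-agent's cell and hidden state; heads are unchanged."""
        # Copy the lists so later meta-agent updates do not alias local state.
        self.rnn_state = RNNState(list(meta_state.cell),
                                  list(meta_state.hidden))
```

In an asynchronous deployment, each BS would call such a method whenever a new state arrives from the MBS, independently of the other BSs.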

We run the proposed Algorithm 2 at each self-powered BS with MEC capabilities as local agent . The input of Algorithm 2 is the state information of local agent , which is the output of Algorithm 1. The cumulative discounted reward (17) and state value (19) are calculated in lines and , respectively (in Algorithm 2), for each step (until the maximum step size for time step ) (Footnote 3: To capture the heterogeneity of energy demand and generation of each BS separately, we use as the step size the number of user tasks that are executed by each BS agent during one observational period.). Consequently, based on a chosen action from the estimated policy (in line ), the episode buffer is generated and appended in line . The advantage function (21) of local agent is evaluated in line and the policy gradient (22) is calculated in line using an LSTM-based local neural network. Algorithm 2 generates the observational tuple in line . Here, we transfer the knowledge of local BS agent to the meta-agent learner (deployed in the MBS) in Algorithm 3 so as to optimize the energy dispatch decision (in Algorithm 2 line ). Hence, the observation tuple of local BS agent consists of only the decision from BS , which does not need to send all of its state information to the meta-agent learner. Employing the meta-agent policy gradient, each local agent is capable of updating the energy dispatch decision policy in line of Algorithm 2. Finally, the energy dispatch policy is executed in line at the BS by local agent .

The meta-agent learner (Algorithm 3 in the MBS) receives the observations from each local BS agent asynchronously. Then the meta-agent asynchronously updates the meta policy gradient of each BS agent . Lines to of Algorithm 3 represent the LSTM block for the meta-agent. In Algorithm 3, the entropy loss (25) and the gradient of the loss (26) are estimated in lines and , respectively. In order to estimate these, Algorithm 3 deploys a fully connected output layer without an activation function, so that the advantage loss can be calculated without affecting the value that is calculated by the value function of the proposed MAMRL framework. The meta-agent energy dispatch policy is updated in line of Algorithm 3. Before that, a fully connected output layer with a softmax activation function on top of the LSTM cell helps determine the energy dispatch policy and the meta policy loss in lines and (in Algorithm 3), respectively, for the meta-agent. Additionally, the meta-agent utilizes the observations of the local agents and determines its own state information, which helps to estimate the energy dispatch policy of the meta-agent. In line , the meta-agent RNN states (i.e., cell and hidden states) are obtained from the considered LSTM cell in Algorithm 3. Finally, the meta-agent policy and RNN states are transferred to each BS agent for updating the parameters (i.e., RNN states) of each local BS agent. To this end, a meta-agent learner is deployed at the center node (i.e., MBS) of the considered network and sends the learning parameters of the optimal energy dispatch policy to each local BS (i.e., MBS and SBS) through the network.

The proposed MAMRL framework is guaranteed to converge to an optimal energy dispatch policy. In fact, the MAMRL framework can be reduced to a -player Markovian game [58, 59] as a base problem, which provides more insight into convergence and optimality. The proposed MAMRL model has at least one Nash equilibrium point that ensures an optimal energy dispatch policy. This argument is similar to that of previous studies of the -player Markovian game [58, 59]. Hence, we can conclude with the following proposition:

Proposition 1.

is an optimal energy dispatch policy that is an equilibrium point with an equilibrium value for BS [see Appendix B].

We can justify the convergence of the MAMRL framework via the following proposition:

Proposition 2.

Consider a stochastic environment with a state space of BS agents such that all BS agents are initialized with an equal probability of for binary actions, , where , and . Therefore, to estimate the gradient of loss function (24), we can establish a relationship between the gradient of approximation and the true gradient ,

| (29) |

[See Appendix C].

Propositions 1 and 2 validate the optimality and convergence, respectively, of the proposed MAMRL framework. Proposition 1 guarantees an optimal energy dispatch policy. Meanwhile, Proposition 2 ensures that the proposed MAMRL model converges for a single state . This implies that the model is also able to converge for .

The significance of the proposed MAMRL model is explained as follows:

- First, each BS (i.e., local agent) can explore its own energy dispatch policy based on individual requirements for the energy generation and consumption. Meanwhile, the meta-agent exploits each BS energy dispatch decision from its own recurrent neural network state information. As a result, each individual BS anticipates its own energy demand and generation while the meta-agent handles the non-i.i.d. energy demand and generation for all BS agents to efficiently meet the exploration-exploitation tradeoff of the proposed MAMRL.

- Second, the proposed MAMRL model can effectively handle distinct environment dynamics for non-i.i.d. energy demand and generation among the agents.

- Third, the proposed MAMRL model ensures less information exchange between the local agents and the meta-agent. In particular, each local BS agent only sends an observational vector to the meta-agent and receives neural network parameters at the end of the minutes observation period. Additionally, the proposed MAMRL model does not require sending an entire environment state from each local agent to the meta-agent.

- Finally, the meta-agent can learn a generalized model toward the energy dispatch decision and transfer its skill to each local BS agent. This, in turn, can significantly increase the learning accuracy as well as reduce the computational time for each local BS agent, thus enhancing the robustness of the energy dispatch decision.

We benchmark the proposed MAMRL framework through an extensive experimental analysis, which is presented and discussed in the following section.

V Experimental Results and Analysis

| Description | Value |
|---|---|
| No. of SBSs (no. of local agents) | |
| No. of MEC servers in each SBS | |
| No. of MBS (meta-agent) | |
| Channel bandwidth | kHz [62] |
| System bandwidth | MHz [17] |
| Transmission power | dB [16] |
| Channel gain | [17] |
| Variance of an AWGN | -114 dBm/Hz [62] |
| Energy coefficient for data transfer | [36] |
| MEC server CPU frequency | 2.5 GHz [16] |
| Server switching capacitance | (farad) [17] |
| MEC static energy | Watts [63] |
| Task sizes (uniformly distributed) | bytes [60] |
| No. of task requests at BS | [11] |
| Unit cost of renewable energy | per MW-hour [45] |
| Unit cost of non-renewable energy | per MW-hour [45] |
| Unit cost of storage energy | additional [44] |
| Initial discount factor | |
| Initial action selection probability | |
| One observation period | minutes |
| No. of episodes | |
| No. of epochs for each day | |
| No. of steps for each epoch at each agent | = [60] |
| No. of actions | (i.e., , |
| No. of LSTM units in one LSTM cell | |
| No. of LSTM cells | (i.e., B+1) |
| LSTM cell API BasicLSTMCell(.) | tf.contrib.rnn [64] |
| Entropy regularization coefficient | |
| Learning rate | |
| Optimizer | Adam [65] |
| Output layer activation function | Softmax [51] |

In our experiment, we use the CRAWDAD nyupoly/video packet delivery dataset [60] to discretize the energy consumption of the self-powered SBS network. Further, we choose the state-of-the-art UMass solar panel dataset [61] to evaluate renewable energy generation. We create deterministic, asymmetric, and stochastic environments by selecting different days of the same solar unit for the generation. Meanwhile, we use several sessions from the network packet delivery dataset. We train our proposed meta-reinforcement learning (Meta-RL)-based MAMRL framework using the deterministic environment and evaluate the testing performance for the three environments. The three environments are as follows: 1) in the deterministic environment, both network energy consumption and renewable generation are known; 2) in the asymmetric environment, network energy consumption is known but renewable generation is unknown; and 3) in the stochastic environment, both energy consumption and renewable generation are unknown. (Footnote 4: For example, we train and test the MAMRL model using the known (i.e., deterministic environment) network energy consumption and renewable generation data of day . Then, we test the trained model using day data, where network energy consumption is known and renewable generation is unknown, which represents an asymmetric environment. In a stochastic environment, let us consider day data, where both energy consumption and renewable generation are unknown to the trained model.) To benchmark the proposed MAMRL framework intuitively, we consider a centralized single-agent deep-RL, a multi-agent centralized A3C deep-RL with the same neural network configuration as the proposed MAMRL, and a pure greedy model as baselines. These are as follows:

- •

-

•

An asynchronous advantage actor-critic (A3C) based multi-agent RL framework [28] is considered as the second benchmark in a cooperative environment [27]. In particular, each local actor can find its own policy in a decentralized manner, while a centralized critic is augmented with additional policy information. Therefore, this model is learned through centralized training with decentralized execution [28]. We call this model a multi-agent centralized A3C deep-RL [28]. The environment (i.e., state information) of this model remains the same for all of the local actor agents. To ensure a meaningful comparison with the proposed MAMRL model, we employ this joint energy dispatch policy using the same advantage function (23) as the MAMRL model.

-

•

We deploy a pure greedy-based algorithm [51] to find the best action-value mapping. In particular, this algorithm never takes the risk of choosing an unknown action. Meanwhile, it explores other strategies and learns from them so as to infer more reasonable decisions. Thus, we choose this upper-confidence-bound action selection mechanism [51] as one of the baselines for benchmarking our proposed MAMRL model.
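The upper-confidence-bound action selection named above can be illustrated with a minimal sketch. It assumes the standard textbook rule of choosing the action that maximizes Q(a) + c·sqrt(ln t / N(a)); the function names `ucb_select` and `run_bandit`, the exploration constant `c`, and the toy Gaussian rewards are illustrative assumptions and are not taken from the experiment.

```python
import math
import random


def ucb_select(q_values, counts, t, c=2.0):
    """Return the index of the action with the largest upper confidence bound.

    q_values: current action-value estimates Q(a)
    counts:   number of times each action has been taken, N(a)
    t:        current time step (1-based)
    c:        exploration constant (illustrative assumption)
    """
    # An action that has never been tried is treated as maximally uncertain.
    for a, n in enumerate(counts):
        if n == 0:
            return a
    scores = [q + c * math.sqrt(math.log(t) / n) for q, n in zip(q_values, counts)]
    return max(range(len(scores)), key=scores.__getitem__)


def run_bandit(true_means, steps=500, c=2.0, seed=0):
    """Run UCB on a toy Gaussian bandit (hypothetical rewards); return (estimates, counts)."""
    rng = random.Random(seed)
    k = len(true_means)
    q_values, counts = [0.0] * k, [0] * k
    for t in range(1, steps + 1):
        a = ucb_select(q_values, counts, t, c)
        reward = rng.gauss(true_means[a], 1.0)
        counts[a] += 1
        # Incremental sample-average update of Q(a).
        q_values[a] += (reward - q_values[a]) / counts[a]
    return q_values, counts
```

In this sketch, a rarely tried action receives a large confidence bonus, so it is still selected occasionally even when its current estimate is low; the bonus shrinks as the action is sampled more often.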

We implement our MAMRL framework using multi-threaded programming in Python (footnote 5: MAMRL), along with TensorFlow APIs [68]. Table IV shows the key parameters of this experiment setup.

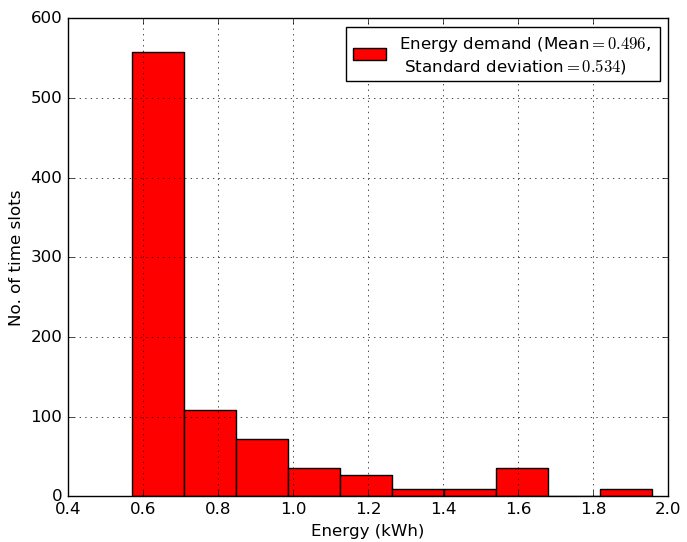

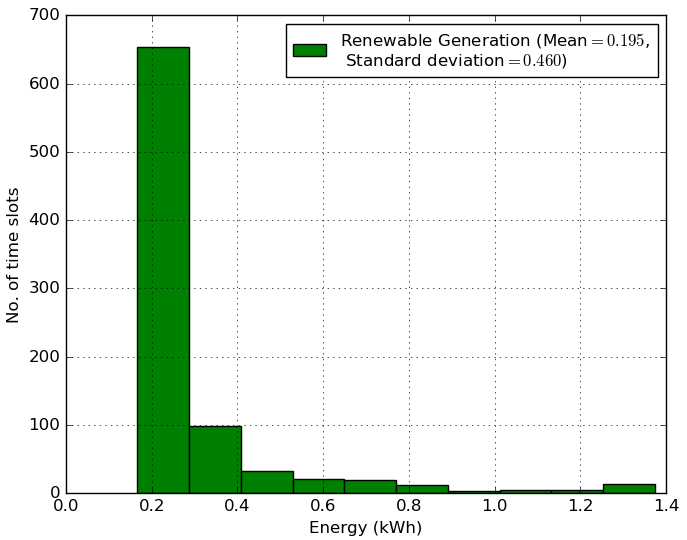

We preprocess both datasets ([60] and [61]) using Algorithm 1, which generates the state space information. The histograms of the network energy demand (Fig. 5(a)) and the renewable energy generation (Fig. 5(b)) of the deterministic environment are shown in Fig. 5. To the best of our knowledge, there are no publicly available datasets that comprise both the energy consumption and the energy generation of a self-powered network with MEC capabilities. Additionally, if we change the environment using other datasets, the proposed MAMRL framework can deal with the new, unknown environment by using the skill transfer feature from the meta-agent to each local BS agent. In particular, the MAMRL approach can readily deal with the case in which a BS agent achieves a much lower reward due to more variability in consumption and generation. As a result, the above experiment setup is reasonable for benchmarking the proposed MAMRL framework.

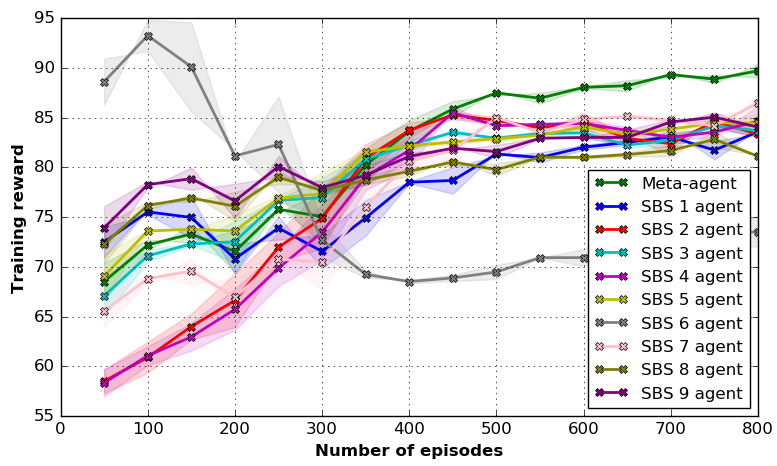

Fig. 6 illustrates the reward achieved by each local SBS along with the meta-agent, where we take the average reward for each episode. In the MAMRL setting, we design a maximum reward of ( minute slot for hours), where the meta-agent converges with a high reward value (around ). Hence, all of the local agents converge with around reward value except the SBS that achieves a reward of at convergence because its energy consumption and generation vary more than the others. In fact, this variation of reward among the BSs leads the model to anticipate the non-i.i.d. energy demand and generation of the considered network and to tighten the exploration-exploitation tradeoff for energy dispatch. The proposed approach can adapt to the uncertain energy demand and generation over time by characterizing the expected amount of uncertainty in the energy dispatch decision of each BS individually. Meanwhile, the meta-agent exploits the energy dispatch decision by employing a joint policy toward the globally optimal energy dispatch for each BS. Therefore, the challenge of distinct energy demand and generation in the state space among the BSs can be efficiently handled by applying the learned parameters from the meta-agent to each BS during training, which establishes a balance between exploration and exploitation.

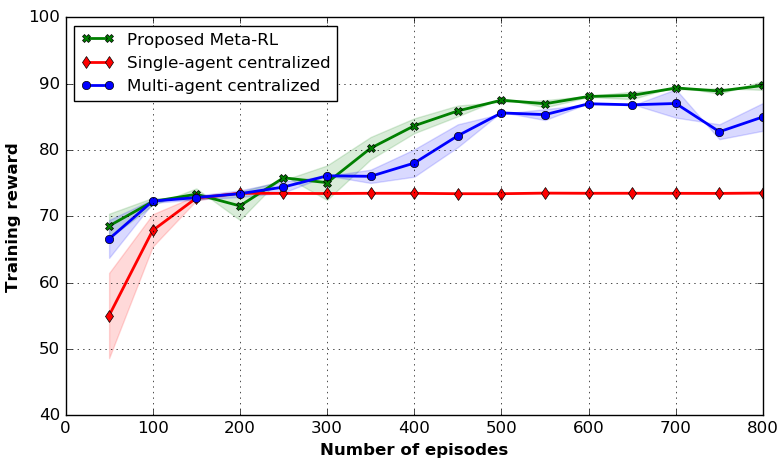

We compare the achieved reward of the proposed MAMRL model with that of the single-agent centralized and multi-agent centralized models in Fig. 7. The single-agent centralized model (diamond mark with red line) converges faster than the other two models, but it achieves the lowest reward due to the lack of exploitation, as it has only one agent. Further, the multi-agent centralized model (circle mark with blue line) converges with a higher reward than the single-agent method. The proposed MAMRL model (cross mark with green line) outperforms the other two models and converges with the highest reward value. In addition, the multi-agent centralized model needs the entire state information. In contrast, the meta-agent requires only the observations from the local agents, and it can optimize the neural network parameters by using its own state information.

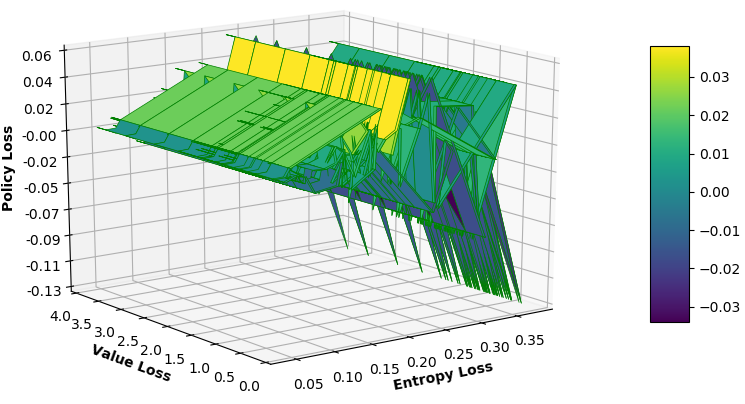

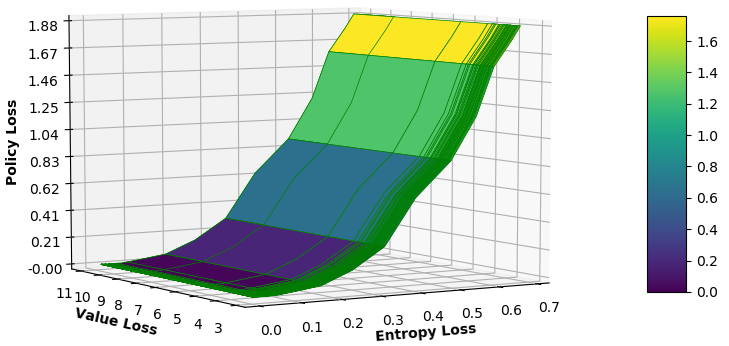

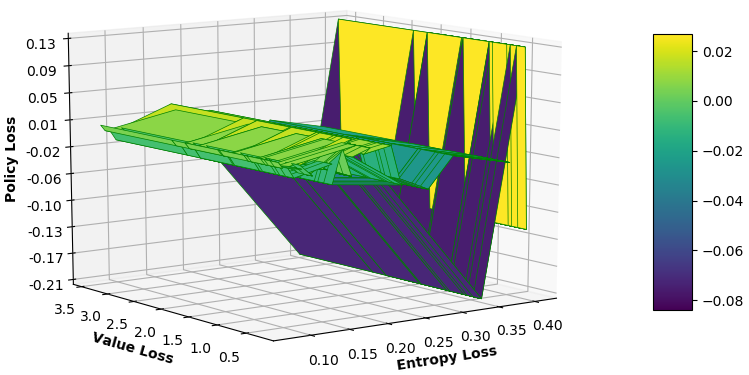

We analyze the relationship among the value loss, entropy loss, and policy loss in Fig. 8, where the maximum policy loss of the proposed MAMRL model (Fig. 8(a)) is around , whereas the single-agent centralized (Fig. 8(b)) and multi-agent centralized (Fig. 8(c)) methods reach about and , respectively. Therefore, the training accuracy increases due to more variation between exploration and exploitation. Thus, our MAMRL model is capable of incorporating the decision of each local BS agent, which solves the challenge of non-i.i.d. demand-generation for the other BSs.
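To make the three loss terms plotted in Fig. 8 concrete, the following is a minimal sketch of how a policy loss, a value loss, and an entropy term are commonly combined in advantage actor-critic training. It assumes the generic textbook form rather than the exact loss used by MAMRL; the function name `actor_critic_losses` and the coefficients `beta` and `value_coef` are illustrative assumptions.

```python
import math


def actor_critic_losses(probs, action, ret, value, beta=0.01, value_coef=0.5):
    """Compute policy, value, and entropy terms for one transition.

    probs:  action probabilities from a softmax output layer
    action: index of the action that was taken
    ret:    (discounted) return observed for this step
    value:  critic's value estimate for the state
    beta, value_coef: weighting coefficients (illustrative assumptions)
    """
    advantage = ret - value
    # Policy-gradient term: raise the log-probability of actions with positive advantage.
    policy_loss = -math.log(probs[action]) * advantage
    # Squared error between the observed return and the critic's estimate.
    value_loss = value_coef * advantage ** 2
    # Entropy of the policy; subtracting it from the loss encourages exploration.
    entropy = -sum(p * math.log(p) for p in probs if p > 0.0)
    total = policy_loss + value_loss - beta * entropy
    return {"policy": policy_loss, "value": value_loss,
            "entropy": entropy, "total": total}
```

For a uniform two-action policy, the entropy term equals ln 2, its maximum for two actions; as the policy becomes more deterministic, the entropy shrinks and the regularizer contributes less to the total loss.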

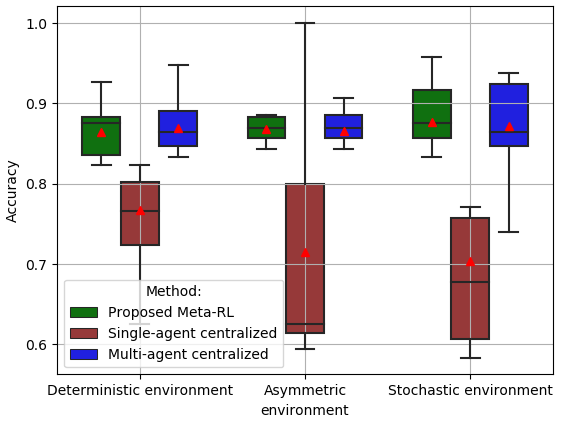

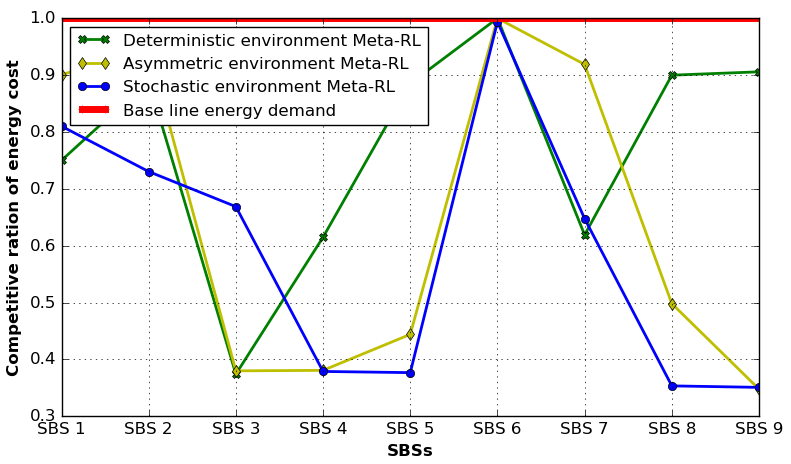

In Fig. 9, we examine the testing accuracy [69] of the storage energy and the non-renewable energy generation decision for time slots ( days) of SBSs under the deterministic, asymmetric, and stochastic environments. (Footnote 6: Each BS agent can calculate its action from a globally optimal energy dispatch policy by using (i.e., ). That is, at the end of minutes duration of each time slot , each BS agent can choose one action (i.e., storage or non-renewable) from the energy dispatch policy .) In the experiment, we use the well-known UMass solar panel dataset [61] for renewable energy generation information as well as the CRAWDAD nyupoly/video dataset [60] for estimating the energy consumption of the self-powered network. Further, we preprocess both datasets ([60] and [61]) using Algorithm 1, which generates the state space information. Thus, the ground truth comes from this state-space information of the considered datasets, where the actions depend on the renewable energy generation and consumption of a particular BS. The proposed MAMRL (green box) and multi-agent centralized (blue box) methods achieve a maximum accuracy of around and , respectively, under the stochastic environment (in Fig. 9). Further, Fig. 9 shows that the mean accuracy () of the proposed method is also higher than that of the centralized solution (). Similarly, in the deterministic and asymmetric environments, the average accuracy (around ) of the proposed low-complexity semi-distributed solution is almost the same as that of the baseline method.
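The testing accuracy described above can be sketched as the fraction of time slots in which the action derived from the policy matches the ground-truth action. The sketch assumes greedy (argmax) action selection and an action encoding of 0 for storage and 1 for non-renewable; both are illustrative assumptions, as are the function names and the toy policy values.

```python
def greedy_actions(policies):
    """Pick, for every time slot, the action with the highest policy probability."""
    return [max(range(len(p)), key=p.__getitem__) for p in policies]


def accuracy(predicted, ground_truth):
    """Fraction of time slots whose predicted action matches the ground truth."""
    if len(predicted) != len(ground_truth):
        raise ValueError("sequences must have the same length")
    matches = sum(int(p == g) for p, g in zip(predicted, ground_truth))
    return matches / len(ground_truth)


# Hypothetical per-slot policies over (storage, non-renewable); not real results.
policies = [[0.8, 0.2], [0.3, 0.7], [0.6, 0.4], [0.1, 0.9]]
truth = [0, 1, 1, 1]
print(accuracy(greedy_actions(policies), truth))  # 3 of 4 slots match -> 0.75
```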

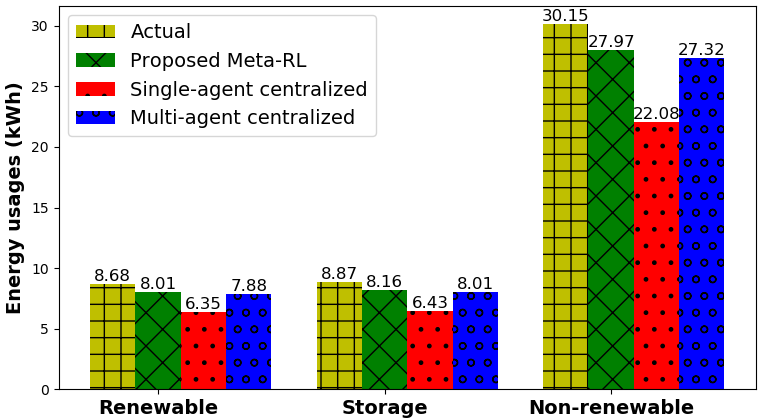

The prediction results of renewable, storage, and non-renewable energy usage for a single SBS (SBS ) for hours ( time slots) under the stochastic environment are shown in Fig. 10. The proposed MAMRL outperforms all other baselines and achieves an accuracy of around . In contrast, the accuracy of the other two methods is and for the single-agent centralized and multi-agent centralized methods, respectively.

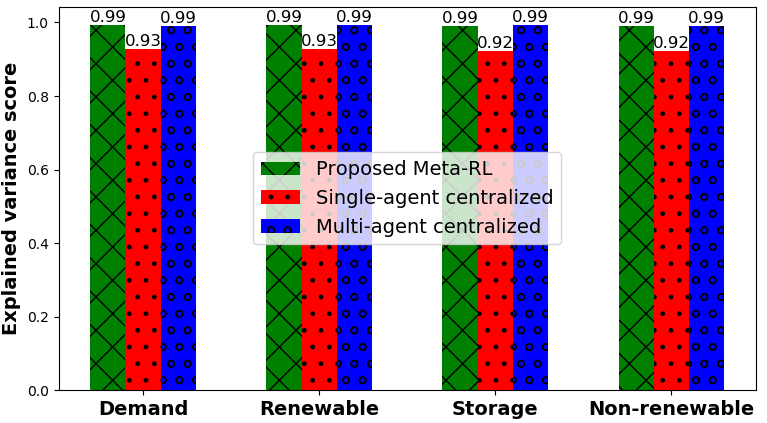

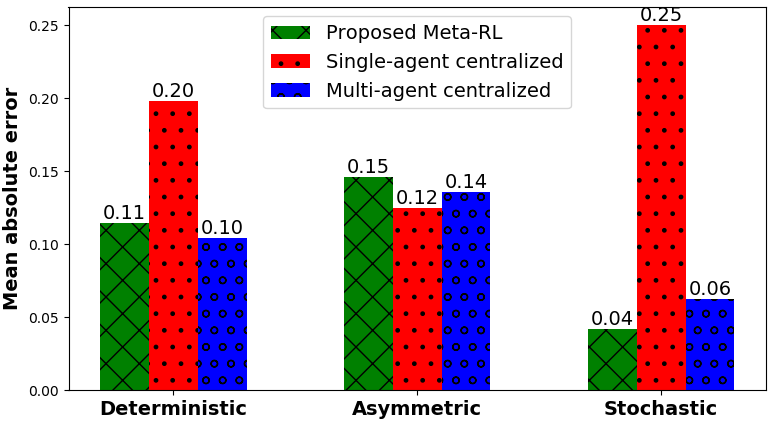

In Figs. 11 and 12, we validate our approach with two standard regression model evaluation metrics, explained variance (i.e., explained variation) and mean absolute error (MAE) [69], respectively. (Footnote 7: We measure the discrepancy for energy dispatch decisions between the proposed and baseline models on the ground truth of the datasets ([60] and [61]). We deploy the explained variance regression score function using sklearn API [70] to measure and compare this discrepancy.) Fig. 11 shows that the explained variance score of the proposed MAMRL method is almost the same as that of the multi-agent centralized method. However, in the case of renewable energy generation, the MAMRL method performs significantly better (i.e., achieves a higher score) than the multi-agent centralized solution. In particular, the proposed MAMRL model captures the uncertainty of renewable energy generation through the Markovian dynamics of each BS. Further, the meta-agent anticipates the energy dispatch from the decisions of the other BSs and its own state information. We analyze the MAE for the three environments (i.e., deterministic, asymmetric, and stochastic) among the proposed MAMRL, single-agent centralized, and multi-agent centralized methods in Fig. 12. (Footnote 8: This performance metric provides us with the average magnitude of errors for the energy dispatch decision of a single SBS (SBS 2) for hours ( time slots). Particularly, we analyze the average error over the time slots of the absolute differences between prediction and actual observation. To evaluate this metric, we have used the mean absolute error regression loss function of sklearn API [71].) The MAE of the proposed MAMRL is , , and for deterministic, asymmetric, and stochastic, respectively, since the meta-agent has the capability to adapt to the uncertain environment very fast. This adaptability is enhanced by the exploration mechanism that is taken into account at each BS and by the exploitation that capitalizes on the non-i.i.d. explored information of all BSs.
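For reference, the two metrics can be written out directly from their definitions: explained variance is 1 - Var(y - ŷ) / Var(y), and MAE is the mean of |y - ŷ|. The sketch below is a dependency-free illustration of these definitions and is not the sklearn implementation used in the experiments; the sample values are hypothetical.

```python
def mean(values):
    """Arithmetic mean of a non-empty sequence."""
    return sum(values) / len(values)


def variance(values):
    """Population variance (divides by n)."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values) / len(values)


def explained_variance(y_true, y_pred):
    """1 - Var(residuals) / Var(y_true); undefined when y_true is constant."""
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    return 1.0 - variance(residuals) / variance(y_true)


def mean_absolute_error(y_true, y_pred):
    """Average magnitude of the prediction errors."""
    return mean([abs(t - p) for t, p in zip(y_true, y_pred)])


# Hypothetical observations and predictions, for illustration only.
y_true = [3.0, -0.5, 2.0, 7.0]
y_pred = [2.5, 0.0, 2.0, 8.0]
print(round(explained_variance(y_true, y_pred), 3))  # 0.957
print(mean_absolute_error(y_true, y_pred))           # 0.5
```

An explained variance close to 1 means the residuals vary little relative to the ground truth, whereas MAE reports the typical error in the same unit as the predicted quantity, so the two metrics complement each other.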

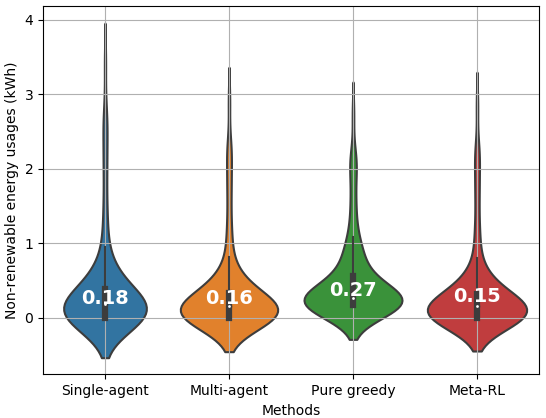

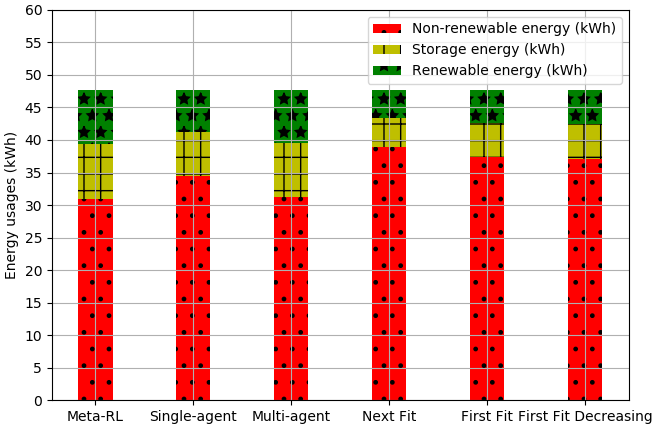

Fig. 13 illustrates the efficacy of the proposed MAMRL model in terms of non-renewable energy usage in a stochastic environment compared with other benchmarks. This figure considers a kernel density analysis for hours ( time slots) under a stochastic environment, where the median of the non-renewable energy usages is (kWh), and (kWh) for the proposed MAMRL, and pure greedy, respectively, at each minutes time slot. Further, the proposed MAMRL can significantly reduce the usage of non-renewable energy for the considered self-powered wireless network, where the MAMRL can save up to of the non-renewable energy usage. Here, the meta-agent of the MAMRL model can discretize uncertainty from each local BS agent and transfer the knowledge (i.e., learning parameters) to each local agent, which can then take a globally optimal energy dispatch decision.
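A kernel density analysis of per-slot energy usage, as referenced above, can be sketched with a Gaussian kernel. The bandwidth rule (a common rule of thumb), the function name `gaussian_kde`, and the sample usage values are assumptions made for illustration; the paper does not state which estimator settings were used.

```python
import math
import statistics


def gaussian_kde(samples, bandwidth=None):
    """Return a density function estimated from samples with a Gaussian kernel."""
    n = len(samples)
    if bandwidth is None:
        # Rule-of-thumb bandwidth (an assumption); requires non-constant samples.
        bandwidth = 1.06 * statistics.pstdev(samples) * n ** (-1 / 5)
    norm = 1.0 / (n * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x):
        # Sum of Gaussian bumps centered on each sample.
        return norm * sum(math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples)

    return density


# Hypothetical non-renewable usage per time slot (kWh); not the paper's data.
usage = [1.0, 2.0, 2.5, 3.0, 4.0]
density = gaussian_kde(usage)
print(statistics.median(usage))       # 2.5
print(density(2.5) > density(6.0))    # True: more mass near the median
```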

| Method | Non-renewable energy usage (kWh) | Storage energy usage (kWh) | Renewable energy usage (kWh) | Non-renewable energy usage cost ($) | Storage energy usage cost ($) | Renewable energy usage cost ($) | Total energy usage cost ($) | Cost difference with ground truth (%) |
|---|---|---|---|---|---|---|---|---|
| Ground truth (i.e., optimal) | NA | | | | | | | |
| MAMRL (proposed) | | | | | | | | |
| Single-agent RL | | | | | | | | |
| Multi-agent RL | | | | | | | | |
| Next Fit | | | | | | | | |
| First Fit | | | | | | | | |
| First Fit Decreasing | | | | | | | | |
| Without renewable | NA | NA | NA | NA |

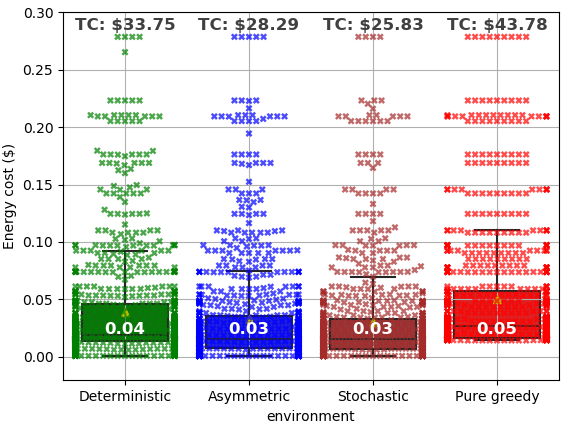

Fig. 14 presents the energy consumption cost analysis for SBSs over hours ( time slots) under the deterministic, asymmetric, and stochastic environments using the proposed Meta-RL method and compares it with the pure greedy method. The total energy cost achieved by the proposed approach for a particular day is , , and for the deterministic, asymmetric, and stochastic environments, respectively. Fig. 14 also shows that the proposed method significantly reduces the energy consumption cost (by at least ) for all three environments compared with the pure greedy method. The median of the energy cost at each time slot is , , and for the deterministic, asymmetric, and stochastic environments, respectively. In contrast, Fig. 14 shows that the median energy cost for the pure greedy baseline is at each time slot, which is due to its inability to cope with an unknown environment for energy consumption and generation. Therefore, the proposed MAMRL model can overcome the challenges of an unknown environment as well as the non-i.i.d. characteristics of energy consumption and generation in a self-powered MEC network.
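The cost comparison above can be illustrated with a small sketch that converts per-source energy usage into a total cost and expresses the saving relative to a baseline as a percentage. The unit prices, usage figures, baseline cost, and function names below are hypothetical and serve only to show the arithmetic; they are not the values of Table IV or Fig. 14.

```python
def energy_cost(usage_kwh, unit_cost_per_mwh):
    """Total cost ($) given per-source usage (kWh) and unit costs ($ per MWh)."""
    return sum(usage_kwh[src] / 1000.0 * unit_cost_per_mwh[src] for src in usage_kwh)


def cost_reduction_percent(cost_method, cost_baseline):
    """Relative saving of a method with respect to a baseline, in percent."""
    return 100.0 * (cost_baseline - cost_method) / cost_baseline


# Hypothetical numbers for illustration only.
unit_cost = {"renewable": 50.0, "storage": 55.0, "non_renewable": 100.0}
proposed = {"renewable": 500.0, "storage": 200.0, "non_renewable": 300.0}

cost_proposed = energy_cost(proposed, unit_cost)      # about 66 dollars
saving = cost_reduction_percent(cost_proposed, 100.0)  # about 34 percent
print(round(cost_proposed, 2), round(saving, 2))
```

Because non-renewable energy carries the highest unit price in this toy setting, shifting usage toward renewable and storage energy lowers the total cost, which is the effect the comparison against the pure greedy baseline quantifies.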