Multi-dimensional Speech Quality Assessment in Crowdsourcing

Abstract

Subjective speech quality assessment is the gold standard for evaluating speech enhancement processing and telecommunication systems. The commonly used standard ITU-T Rec. P.800 defines how to measure speech quality in lab environments, and ITU-T Rec. P.808 extended it for crowdsourcing. ITU-T Rec. P.835 extends P.800 to measure the quality of speech in the presence of noise. ITU-T Rec. P.804 targets the conversation test and introduces perceptual speech quality dimensions which are measured during the listening phase of the conversation. The perceptual dimensions are noisiness, coloration, discontinuity, and loudness. We create a crowdsourcing implementation of a multi-dimensional subjective test following the scales from P.804 and extend it to include reverberation, the speech signal, and overall quality. We show the tool is both accurate and reproducible. The tool has been used in the ICASSP 2023 Speech Signal Improvement challenge and we show the utility of these speech quality dimensions in this challenge. The tool will be publicly available as open-source at https://github.com/microsoft/P.808.

Index Terms: speech quality assessment, subjective test, crowdsourcing, perceptual dimensions, signal quality.

1 Introduction

Audio telecommunication systems, such as remote collaboration systems, smartphones, and telephones, are now ubiquitous and essential tools for work and personal use. Audio engineers and researchers have been working to improve the speech quality of these systems, with the goal of making them as good or better than face-to-face communication. However, there is still room for improvement, as it is still common to hear frequency response distortions, isolated and non-stationary distortions, loudness issues, reverberation, and background noise in audio calls.

Subjective speech quality assessment is the gold standard for evaluating speech enhancement processing and telecommunication systems, and the ITU-T has developed several recommendations for subjective speech quality assessment. ITU-T P.800 [1] describes lab-based methods for the subjective determination of speech quality, including the Absolute Category Rating (ACR). ITU-T P.808 [2] describes a crowdsourcing approach for conducting subjective evaluations of speech quality. It provides guidance on test material, experimental design, and a procedure for conducting listening tests in the crowd. The methods are complementary to laboratory-based evaluations described in P.800. An open-source implementation of P.808 is described in [3]. ITU-T P.835 [4] provides a subjective evaluation framework that gives standalone quality scores of speech (SIG) and background noise (BAK) in addition to the overall quality (OVRL). An open-source implementation of P.835 is described in [5]. Perceptual dimensions for speech quality are identified in [6] and extended to be noisiness, coloration, discontinuity and loudness [7]. Those are extensively studied in conversational test [8, 9, 10] and are focus of more recent multidimensional speech quality assessment standards namely ITU-T P.863.2 [11] and P.804 [12] (listening phase) (Table 1).

Intrusive objective speech quality assessment tools such as Perceptual Evaluation of Speech Quality (PESQ) [13] and Perceptual Objective Listening Quality Analysis (POLQA) [14] require a clean reference of speech. Non-intrusive objective speech quality assessment tools like ITU-T P.563 [15] do not require a reference, though it has low correlation to subjective quality [16]. Newer neural net-based methods, such as [16, 17, 18, 19] provide better correlations to subjective quality. NISQA [20] is an objective metric for P.804, but the correlation to subjective quality is not sufficient to use as a challenge metric.

Lab-based subjective testing in practice is slow due to the recruitment of test subjects and the limited number of test subjects, and expensive due to paying qualified test subjects and the cost of the test lab. The speed and cost result in the vast majority of research papers not using subjective tests but rather objective functions that are not well correlated to subjective opinion. An alternative to lab-based subjective tests is to crowdsource the testing. We introduce a crowdsourced multi-dimensional speech quality assessment tool that extends P.804 by adding SIG, OVRL, and reverberation (see Table 1). We show the tool is both accurate compared to lab results and is reproducible. The tool has been successfully used in the ICASSP 2023 Speech Signal Improvement challenge [21].

In Section 2 we describe the implementation of the tool. In Section 3 we provide accuracy and reproducibility analysis. In Section 4 we provide an example usage of the tool. In Section 5 we discuss conclusions and future work.

| Area | Description | Possible source |

|---|---|---|

| Noisiness | Background noise, circuit noise, coding noise; BAK | Coding, circuit or background noise; device |

| Coloration | Frequency response distortions | Bandwidth limitation, resonances, unbalanced freq. response |

| Discontinuity | Isolated and non-stationary distortions | Packet loss; processing; non-linearities |

| Loudness | Important for the overall quality and intelligibility | Automatic gain control; mic distance |

| Reverberation | Room reverberation of speech and noise | Rooms with high reverberation |

| Speech Signal | Overall signal quality | |

| Overall | Overall quality |

2 Implementation

We extended the P.808 Toolkit[3] to include a test template for a multi-dimensional quality assessment. The toolkit provides scripts for preparing the test, including packing the test clips in small test packages, preparing the reliability check questions, and analyzing the results. We ask participants to rate the perceptual quality dimensions of speech namely coloration, discontinuity, noisiness, and loudness, and also reverberation, Signal Quality, and Overall quality of each audio clip. In the following, each section of the test template, as seen by participants, is described. These sections are predefined and only the audio clips under the test will be changed from one study to another.

In the first section, the participant’s eligibility and their device suitability are tested and a qualification is assigned to those that pass which remains valid for the entire experiment. The participant’s hearing ability is evaluated through digit-triplet-test [22]. Moreover, we test if their listening device supports the required bandwidths (i.e., full-band, wide-band, and narrow-band); details are in Section 2.1).

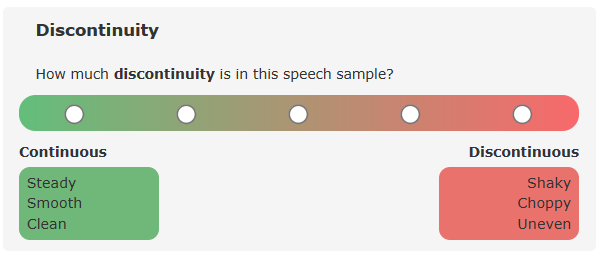

Next, the participant’s environment and device are tested using a modified-JND test [23] in which they should select which stimulus from a pair has a better quality in four questions. A temporal certificate will be issued for participants after passing this section which expires after two hours and consequently repeating this section will be required. Detailed instructions are given in the next section including introducing the rating scales and providing multiple samples for each perceptual dimension. Participants are required to listen to all samples for the first time. Figure 1 illustrates how the rating scale for quality dimensions is presented to participants. In addition, we used a Likert 5-point scale for signal quality and overall quality as specified by ITU-T Rec. P.835. In the Training section participants should first adjust the playback loudness to a comfortable level by listening to a provided sample and then rate 7 audio clips. This section is similar to the ratings section, but the platform provides live feedback based on their ratings. By completing this section a temporal certificate is assigned to the participants which is valid for one hour. Last is the Ratings section, where participants listen to ten audio clips and two gold standard and trapping questions and cast their votes on each scale. The gold standard questions are the ones that the experimenter already knows their answers (being excellent or bad) and participants are expected to vote on each scale with a minor deviation from known the answer [22]. Trapping questions are questions in which a synthetic voice is overlaid to a normal clip and asks participants to provide a specific vote to show their attention [24]. For this test, we provide scripts for creating the trapping clips, which ask participants to select answers reflecting the best or worst quality in all scales. For rating an audio clip, the participant should first listen to the end of the clip, and then they start casting their votes. During that time, the audio will be played back in a loop. After participants finish with a test set, they can continue with the next one where only the rating section will be shown until other temporal certificates are valid. By the expiration of any certificate, the corresponding section will be shown when they start the next test set.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c2893e95-4f7e-45c4-b125-ea49314be18a/scales.png)

2.1 Survey optimization

We utilized the multi-scale template in various research studies and improved it through the incorporation of experts and test participant feedback.

Descriptive adjectives: The understanding of perceptual dimensions might not be intuitive for naive test participants, therefore the P.804 recommendation includes a set of descriptive adjectives to describe the presence or absence of each quality dimension. We expanded this list through multiple preliminary studies, where participants were asked to listen to samples from each perceptual dimension and name three adjectives that best describe them. For each dimension, we selected the top three most frequently selected terms and presented them below each pole of the scale, as shown in Figure 1. The list of selected terms is reported in Table 2. We used discrete scales for dimensions to be consistent with Signal and Overall scales.

Bandwidth check: This test ensures the participant devices support the expected bandwidth. The test consists of five samples, and each has two parts separated by a beep tone. The second part is the same as the first part but in three samples superimposed by additive noise. Participants should listen to each sample and select if both parts have the same or different quality. We filtered the white noise with the following bandpass filters: 3.5-22K (all devices should play the noise), 9.5-22k (super-wide-band or fullband is supported), and 15-22K (fullband is supported).

Gold questions: Gold questions are widely used in crowdsourcing [22]. Here we observed gold questions that represent the strong presence of an impairment on one dimension and the clear absence of impairment on another dimension can best reveal an inattentive participant.

Randomization: We randomize the presentation order of scales for each participant. However, the Signal and Overall quality are always presented at the end. The randomized order is kept for each participant until a new round of training is required.

3 Validation

3.1 Reproducibility

We used a subset of the blind test set from ICASSP SIG 2023 challenge [21] for our reproducibility test. We selected 50 audio clips from the challenge which are processed by 18 models (and the degraded source clips) leading to 950 audio clips. We repeated our crowdsourcing test 5 times with a mutually exclusive group of workers, on separate days on Amazon Mechanical Turk. We calculated the Mean Opinion Scores (MOS) per clip and per model and show the correlation between different runs and scales in clip and model level in Table 3, respectively. The results show a strong correlation between different runs at the model level.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c2893e95-4f7e-45c4-b125-ea49314be18a/reprod.png)

3.2 Accuracy

In a separate experiment, a subset of data employed in Section 3.1 is assessed by expert listeners. This subset comprises 20 degraded source clips and their enhanced versions generated by 9 models from the ICASSP SIG 2023 challenge. Table 4 presents the correlation between MOS values provided by experts and crowdsourcing, indicating a robust correlation for all dimensions except coloration and reverberation. Further investigation reveals a poor agreement among experts on these dimensions (, , for reverberation and coloration, respectively).

| Scales | Per Model | Per Clip | ||

|---|---|---|---|---|

| PCC | SRCC | PCC | SRCC | |

| Coloration | 0.487 | 0.576 | 0.485 | 0.472 |

| Discontinuity | 0.919 | 0.729 | 0.687 | 0.588 |

| Loudness | 0.928 | 0.939 | 0.718 | 0.683 |

| Noisiness | 0.972 | 0.964 | 0.731 | 0.703 |

| Reverberation | 0.817 | 0.697 | 0.584 | 0.509 |

| Signal | 0.966 | 0.903 | 0.795 | 0.783 |

| Overall | 0.991 | 0.842 | 0.842 | 0.844 |

4 Usage

The ICASSP 2023 Speech Signal Improvement Challenge [21] aimed to encourage research in enhancing speech signal quality in communication systems, a persistent issue in audio communication and conferencing. Participants were provided with a test and blind sets, and winners were determined through this multi-dimensional subjective test. Both the test and blind sets have 500 samples, encompassing a diverse range of speech distortions, including frequency response distortions, bandwidth limitations, reverberation, and packet loss. Overall 9 teams participated in this challenge and the reported results are based on 9x500 processed clips used in the subjective test.

We compared the correlation between quality scores collected using this survey (P.804) and P.835-based [5] subjective tests for all entries, which are reported in Table 5. A robust correlation was observed in the shared scores between the two subjective methodologies. Regarding team rankings, the only swap occurred between two teams when utilizing scores from the P.835 test, resulting in a tied rank based on P.804 ratings. Moreover, we compute the PCC between the subjective P.804 metrics and the metrics obtained using DNSMOS P.835 [18] and NISQA [20]. The correlations vary from PCC to , highlighting the ongoing need for a subjective test to precisely assess speech quality.

In addition, we conducted Explanatory Factor Analysis (EFA) [25] to explore the underlying relationships among quality dimensions and assess if there’s shared variance among sub-dimensions. We applied the Maximum Likelihood extraction method with Varimax rotation, extracting three factors as suggested by the Scree plot. Bartlett’s test of sphericity yielded a significant result, and the KMO value of 0.65 indicated that the data was suitable for explanatory factor analysis. The factor loadings of quality scores on each factor are shown in Table 6. In total, three factors accounted for of the variance in the data. Factor 1 primarily represented signal quality, with high loadings from Signal, Coloration, and Loudness. Discontinuity formed a separate factor, with some cross-loadings from Signal, suggesting limited shared variance between Discontinuity ratings and both Coloration and Loudness. As anticipated, Noisiness constituted a distinct factor orthogonal to the others, with loading from Reverberation. Considering all mentioned factors, we highlight the importance of adding the signal and reverb dimensions to the P.804, since they contribute to orthogonal factors in a significant percentage.

| Dimension | PCC | SRCC | Kendall Tau-b | Tau-b95 |

|---|---|---|---|---|

| Background/Noisiness | 0.964 | 0.926 | 0.825 | 0.853 |

| Signal | 0.954 | 0.933 | 0.801 | 0.914 |

| Overall | 0.965 | 0.940 | 0.825 | 0.822 |

| Quality score | Factor 1 | Factor 2 | Factor 3 |

|---|---|---|---|

| Coloration | 0.787 | ||

| Discontinuity | 0.936 | ||

| Loudness | 0.476 | ||

| Noisiness | 0.742 | ||

| Reverberation | 0.413 | ||

| Signal | 0.824 | 0.481 |

| Feature | Effects | ||

|---|---|---|---|

| Total | Indirect | Direct | |

| Coloration | 0.427 | 0.352 | 0.102 |

| Discontinuity | 0.343 | 0.277 | 0.066 |

| Loudness | 0.229 | 0.083 | 0.146 |

| Noisiness | 0.146 | 0.047 | 0.099 |

| Reverberation | 0.103 | 0.065 | 0.038 |

Additionally, a majority of the sub-dimensions exert an influence on the quality of the signal and the overall quality. To investigate the indirect and total effects of the sub-dimensions on the overall quality, a mediation analysis was conducted, with signal quality serving as the mediator variable. The outcomes of this analysis are presented in Table 7, which reveals that Coloration had the highest total effect on the overall quality of this dataset.

5 Conclusions

This paper describes an open-source toolkit designed for multi-dimensional subjective speech quality assessment in crowdsourcing. We detail the various sections of the test template and present evidence that the collected ratings obtained using this toolkit are both valid and reproducible. Additionally, it was demonstrated that the toolkit can be used to rank speech enhancement models on large-scale subjective tests, and it can provide insights into the effect of each perceptual dimension on overall quality. Results also showed that coloration, discontinuity, and noisiness as three orthogonal factors that other dimensions load onto. Future work includes improving the survey training and scale descriptions to improve coloration and reverberation accuracy with respect to expert raters.

References

- [1] “ITU-T P.800: Methods for subjective determination of transmission quality,” 1996.

- [2] “ITU-T Recomendation P.808: Subjective evaluation of speech quality with a crowdsourcing approach,” 2018.

- [3] B. Naderi and R. Cutler, “An Open Source Implementation of ITU-T Recommendation P.808 with Validation,” INTERSPEECH, pp. 2862–2866, Oct. 2020.

- [4] “ITU-T Recommendation P.835: Subjective test methodology for evaluating speech communication systems that include noise suppression algorithm,” 2003.

- [5] B. Naderi and R. Cutler, “Subjective Evaluation of Noise Suppression Algorithms in Crowdsourcing,” in INTERSPEECH, 2021.

- [6] M. Wältermann, K. Scholz, A. Raake, U. Heute, and S. Möller, “Underlying quality dimensions of modern telephone connections,” in INTERSPEECH 2006, 2006.

- [7] M. Wältermann, Dimension-based quality modeling of transmitted speech, Springer Science & Business Media, 2013.

- [8] F. Köster, D. Guse, and S. Möller, “Identifying Speech Quality Dimensions in a Telephone Conversation,” Acta Acustica united with Acustica, vol. 103, no. 3, pp. 506–522, May 2017.

- [9] F. Köster, Multidimensional analysis of conversational telephone speech, Springer, 2018.

- [10] F. Köster and S. Möller, “Perceptual speech quality dimensions in a conversational situation,” in INTERSPEECH 2015, 2015.

- [11] “ITU-T Recommendation P.863.2: Extension of ITU-T P.863 for multi-dimensional assessment of degradations in telephony speech signals up to full-band,” 2022.

- [12] “ITU-T Recommendation P.804: Subjective diagnostic test method for conversational speech quality analysis,” 2017.

- [13] A. Rix, J. Beerends, M. Hollier, and A. Hekstra, “Perceptual evaluation of speech quality (PESQ) - a new method for speech quality assessment of telephone networks and codecs,” in ICASSP. 2001, vol. 2, pp. 749–752, IEEE.

- [14] J. G. Beerends, M. Obermann, R. Ullmann, J. Pomy, and M. Keyhl, “Perceptual Objective Listening Quality Assessment (POLQA), The Third Generation ITU-T Standard for End-to-End Speech Quality Measurement Part I–Temporal Alignment,” J. Audio Eng. Soc., vol. 61, no. 6, pp. 19, 2013.

- [15] “ITU-T Recommendation P.563: Single-ended method for objective speech quality assessment in narrow-band telephony applications,” 2004.

- [16] A. R. Avila, H. Gamper, C. Reddy, R. Cutler, I. Tashev, and J. Gehrke, “Non-intrusive Speech Quality Assessment Using Neural Networks,” in ICASSP, Brighton, United Kingdom, May 2019, pp. 631–635, IEEE.

- [17] C. K. A. Reddy, V. Gopal, and R. Cutler, “DNSMOS: A Non-Intrusive Perceptual Objective Speech Quality metric to evaluate Noise Suppressors,” in INTERSPEECH, 2021.

- [18] C. K. A. Reddy, V. Gopal, and R. Cutler, “DNSMOS P.835: A Non-Intrusive Perceptual Objective Speech Quality Metric to Evaluate Noise Suppressors,” in ICASSP, 2022, pp. 886–890, ISSN: 2379-190X.

- [19] G. Yi, W. Xiao, Y. Xiao, B. Naderi, S. Möller, W. Wardah, G. Mittag, R. Cutler, Z. Zhang, D. S. Williamson, F. Chen, F. Yang, and S. Shang, “ConferencingSpeech 2022 Challenge: Non-intrusive Objective Speech Quality Assessment (NISQA) Challenge for Online Conferencing Applications,” in INTERSPEECH, 2022.

- [20] G. Mittag, B. Naderi, A. Chehadi, and S. Möller, “NISQA: A deep cnn-self-attention model for multidimensional speech quality prediction with crowdsourced datasets,” in INTERSPEECH, 2021.

- [21] R. Cutler, A. Saabas, B. Naderi, N.-C. Ristea, S. Braun, and S. Branets, “ICASSP 2023 Speech Signal Improvement Challenge,” Apr. 2023, arXiv:2303.06566 [eess].

- [22] B. Naderi, R. Zequeira Jiménez, M. Hirth, S. Möller, F. Metzger, and T. Hoßfeld, “Towards speech quality assessment using a crowdsourcing approach: evaluation of standardized methods,” Quality and User Experience, vol. 6, no. 1, pp. 2, Nov. 2020.

- [23] B. Naderi and S. Möller, “Application of just-noticeable difference in quality as environment suitability test for crowdsourcing speech quality assessment task,” in QoMEX. 2020, pp. 1–6, IEEE.

- [24] B. Naderi, T. Polzehl, I. Wechsung, F. Köster, and S. Möller, “Effect of trapping questions on the reliability of speech quality judgments in a crowdsourcing paradigm,” in INTERSPEECH, 2015.

- [25] M. W. Watkins, “Exploratory factor analysis: A guide to best practice,” Journal of Black Psychology, vol. 44, no. 3, pp. 219–246, 2018.

- [26] B. Naderi and S. Möller, “Transformation of Mean Opinion Scores to Avoid Misleading of Ranked Based Statistical Techniques,” in QoMEX, May 2020, pp. 1–4, ISSN: 2472-7814.